Abstract

Eucalyptus Longhorned Borers (ELB) are some of the most destructive pests in regions with Mediterranean climate. Low rainfall and extended dry summers cause stress in eucalyptus trees and facilitate ELB infestation. Due to the difficulty of monitoring the stands by traditional methods, remote sensing arises as an invaluable tool. The main goal of this study was to demonstrate the accuracy of unmanned aerial vehicle (UAV) multispectral imagery for detection and quantification of ELB damages in eucalyptus stands. To detect spatial damage, Otsu thresholding analysis was conducted with five imagery-derived vegetation indices (VIs) and classification accuracy was assessed. Treetops were calculated using the local maxima filter of a sliding window algorithm. Subsequently, large-scale mean-shift segmentation was performed to extract the crowns, and these were classified with random forest (RF). Forest density maps were produced with data obtained from RF classification. The normalized difference vegetation index (NDVI) presented the highest overall accuracy at 98.2% and 0.96 Kappa value. Random forest classification resulted in 98.5% accuracy and 0.94 Kappa value. The Otsu thresholding and random forest classification can be used by forest managers to assess the infestation. The aggregation of data offered by forest density maps can be a simple tool for supporting pest management.

1. Introduction

Eucalyptus Longhorned Borers (ELB), Phoracantha semipunctata (Fabricius), and P. recurva Newman (Coleoptera: Cerambycidae), are among the most destructive eucalypt pests in regions with Mediterranean climate [1,2]. Eucalyptus globulus is one of the most planted eucalypt species in these regions, and it is known to have low resistance to ELB [2,3].

ELB activity generally starts in late spring when adults emerge and start laying eggs. Upon hatching, ELB larvae bore galleries along the phloem and cambium of trees, eventually preventing sap from flowing, which leads to rapid tree death during summer and fall [1,4,5,6]. The ability of larvae to successfully colonize the host plant depends on low bark moisture content, leaving water-stressed trees particularly susceptible to attack by ELB [1,5,7].

Under the Mediterranean climate, with low rainfall and extended dry summer periods, ELB attacks often result in significant tree mortality, despite long-lasting efforts to select for more resistant E. globulus genotypes [8]. With droughts expected to increase due to climate change, ELB outbreaks will likely become more frequent and more severe [9].

ELB control methods include biological control, selection of more resistant eucalypts, and various cultural practices aimed at increasing tree adaptation and resilience, but the most effective curative measure is felling all attacked trees and removing them from the stands [6,10]. Damage caused by ELB is usually not detected using traditional surveillance techniques until significant mortality has occurred [11]. Early detection is recognized as the first step to reduce the impact of ELB [1], hence new approaches to monitoring, particularly through remote sensing, can provide an invaluable tool for forest managers in terms of planning and executing control actions.

Traditional survey techniques are restricted by small area coverage and subjectivity [12] but, when combined with remote-sensing technology, they can expand spatial coverage, minimize response time, and reduce the costs of monitoring forested areas [13]. Following Wulder et al. [14], the sensor and resolution should be chosen according to the spatial scale that best suits the situation. To date, several studies have demonstrated successful pest and disease detection and monitoring in forests using different types of sensors and platforms [15,16,17]. For instance, in terms of spaceborne optical sensors for large areas, Meddens et al. [18] detected multiple levels of coniferous tree mortality using multi-date and single-date Landsat imagery. Mortality in ash trees was assessed by Waser et al. [19] using multispectral WorldView-2 imagery. In the eucalypt forest context, Oumar and Mutanga [20] tested WorldView-2 imagery to predict bronze bug damage. The airborne optical sensor Compact Air-Borne Spectrographic Imager 2 (CASI-2) was tested by Stone et al. [21], who assessed damage caused by herbivorous insects in Australian eucalypt forests and pine plantations.

In recent years the use of unmanned aerial vehicle (UAV) platforms has become widely employed for pests and disease detection and monitoring [12,22,23,24,25,26,27,28]. The main attributes are very high resolution, suitability for multitemporal analysis, lower operational costs compared with airplanes and satellites, independence of cloud cover, and the ability to operate in specific phenological phases of plants or pest/disease outbreaks [12]. In addition, a large number of passive and active sensors can be assembled, such as RGB (red, green, blue), multispectral and hyperspectral cameras, LiDAR (laser imaging detection and ranging), and RADAR (radio detection and ranging) [29,30]. On the other hand, as stressed by Pádua et al. [29], the disadvantages include small area-coverage when compared with satellites, sensitivity to bad weather, increasingly strict regulations that may restrict operations, and the high volume of data generated.

The imagery classification approaches used in recently published UAV studies to detect pests and diseases in forests are diverse. Lehmann et al. [22] used a modified normalized difference vegetation index (NDVI) to discriminate between five classes of infestation by the oak splendor beetle through OBIA (object-based image analysis) classification, with an overall Kappa index of agreement between 0.77 and 0.81. Näsi et al. [23] investigated bark beetle damage at the tree level in South Finland using a combination of RGB, near infrared (NIR), red-edge band and NDVI. Object-based K-NN (K-nearest neighbor) classification was applied, and overall accuracy was 75%. In New Zealand, Dash et al. [12] used NDVI and red-edge NDVI to study the discoloration classes of Pinus radiata through the OBIA classification approach with a random forest (RF) algorithm. In Catalonia (Spain), Otsu et al. [26] detected defoliation of pine trees affected by pine processionary and distinguished pine species at the pixel level using four spectral indices and the NIR band. The authors estimated the threshold values using histogram analysis. For comparison, they also applied OBIA with a random forest algorithm, which achieved an overall accuracy of 93%. Finally, Iordache et al. [28] studied pine wilt disease in Portugal using OBIA and a machine-learning random forest algorithm for multispectral and hyperspectral imagery. The overall accuracies of the two classifications were 95% and 91%, respectively. To date, no published work has used UAV imagery for ELB detection.

This work arises from the need to find monitoring tools that could support pest management decisions regarding ELB attacks in eucalypt stands. In the past, this has been hampered because the identification of dead trees in the field is both costly and time-consuming. Furthermore, it is critical that ELB attacks can be identified at early stages of infestation. Current surveying methods only detect problems when a large number of trees are infested.

The main objectives of this experimental work were: (1) to detect ELB attacks through UAV imagery by using selected spectral indices and Otsu thresholding; (2) to map tree crown status using large-scale mean-shift (LSMS) segmentation, as well as the machine-learning classification approach with an RF algorithm; and (3) to map tree density using the hexagonal tessellation technique to support phytosanitary interventions.

2. Materials and Methods

2.1. Study Area

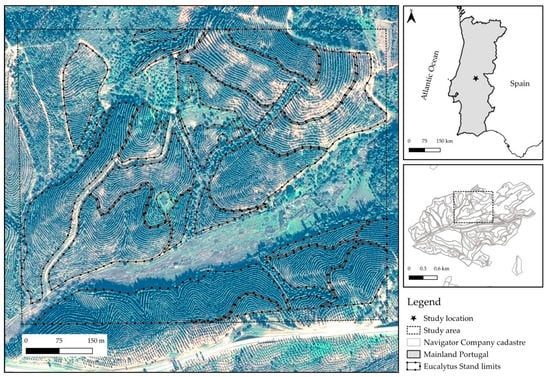

A 30.56 ha E. globulus stand located in central Portugal close to Gavião locality (39°27.909′ N, 7°56.124′ W) was selected for this study (Figure 1). The study area is managed by The Navigator Company (NVG), a Portuguese pulp and paper company, and was selected based on reports of severe pest attacks provided by forest managers of the company.

Figure 1.

Location of the study site. The main element shows the stand through Pleiades-1A imagery (image date = 11 December 2019).

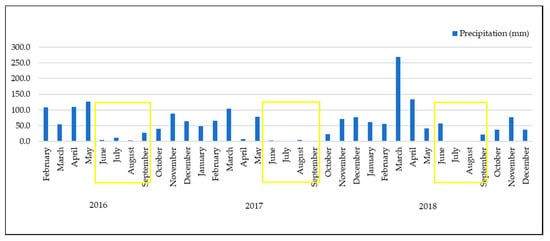

The eucalypt plantation was established in 2014 with a mean stand density of 1250 plants per hectare. Initial mortality caused by ELB was detected in early 2018. Due to the severe drought that occurred in 2017, ELB attack increased over the course of a few months. This is supported by the occurrence of low rainfall in 2017, especially during the summer months, as shown in Figure 2.

Figure 2.

Precipitation distribution (mm/month) observed between 2016 and 2018. Yellow boxes indicate the summer months. Data were collected from our own weather station located 25 km from the study area.

Annual average rainfall is low in this region (700–800 mm/year), so if there is a year drier than the average, the following year’s mortality is likely to increase due to water stress and ELB attacks.

This area is characterized by sandstones both in valleys and hillsides. The soils are generally poor and shallow. According to the Food and Agriculture Organization (FAO) classification system [31], soils are Leptosols or Plinthic Arenosols. The relief is slightly inclined, and the altitude of the site varies between 160 and 250 m a.s.l. (above sea level). Southern aspects prevail in the study site.

2.2. Data Acquisition

Multispectral images were acquired through four flights on 21 January 2019 using a Parrot SEQUOIA camera (Parrot S.A., Paris, France) on a remotely piloted fixed wing eBee SenseFly drone (Parrot S.A., Paris, France). This single propeller drone allows more stable and longer flights, which in turn reduces the cost and length of missions. Considering the frequency of cloudy days and strong winds during winter season, this aerial vehicle has greater speed in the acquisition of data and efficiency in the operation. The details of flight planning parameters are presented in Table 1.

Table 1.

The flight’s planning parameters for the study area.

The Parrot SEQUOIA is a compact bundle with multispectral and sunshine sensors. The camera collects four discrete and separated spectral bands with 1.2 megapixels (MP) resolution: green (530–570 nm), red (640–680 nm), red-edge (730–740 nm), and near-infrared (770–810 nm). Additionally, the RGB camera captures 16 MP of resolution [32,33].

To obtain a more uniform ground resolution and reduce the likelihood of gaps in coverage at higher elevations, terrain awareness was used (Figure 3a,b). The mission planning application used was SenseFly eMotion (Parrot S.A., Paris, France). To refine vertical and horizontal accuracy, nine ground control points (GCPs) were installed and measured with real-time kinematic (RTK) global navigation satellite systems (GNSS). Then, the multispectral camera was calibrated on site to adjust the sunshine sensors to the local lighting conditions at solar noon.

Figure 3.

(a) Flight paths in the study area, (b) horizontal perspective of flight paths.

Regarding the imagery processing workflow, all discrete band imagery and GCPs were imported into Pix4Dmapper Pro software (Version 4.2, Pix4D S.A., Prilly, Switzerland), a photogrammetry and drone mapping software broadly used for UAV imagery processing [34]. The agricultural (multispectral) photogrammetry workflow was chosen, which consists of alignment, geometric calibration based on GCPs, reflectance calibration, point cloud generation and classification, and raster digital surface model (DSM) and orthomosaic generation based on the digital terrain model (DTM).

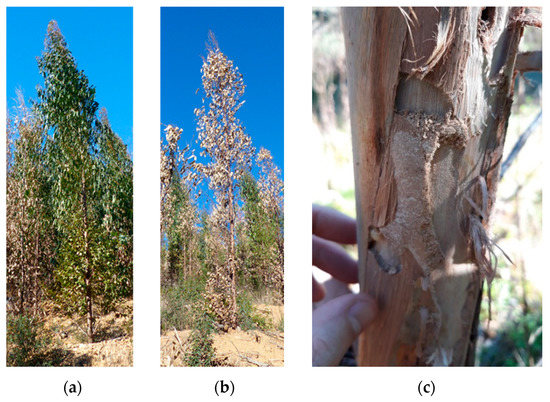

The next step was to analyze the vitality of the stand in the field. UAV multispectral imagery was used to produce color composites, in which the tree canopy pixels were compared with the actual field status of the trees. To this end, two tree vitality statuses were assigned: healthy trees (Figure 4a) and dead trees with completely dead crowns (Figure 4b). Field data collection was carried out through random sampling, using an Arrow Gold Antenna (Eos Positioning Systems, Inc., Terrebonne, QC, Canada) with an RTK sensor. The cause of death was verified by removing the bark in order to find signs of ELB attack, namely galleries caused by larvae (Figure 4c).

Figure 4.

Status of the trees according to field evaluation. (a) healthy tree, (b) dead tree, cause of death from Eucalyptus Longhorned Borers (ELB) was confirmed by the observation of galleries originated by Longhorned Borer larvae (c).

Once the status of the trees was assigned in the field, spectral values were extracted for the training and validation datasets.

2.3. Selected Vegetation Indices

Among the considerable number of vegetation indices (VIs) commonly used as indicators of vegetation status [35], there is a set of VIs that is generally applied to assess symptoms of stress [12,36,37]. Stressed eucalypt trees show reduced chlorophyll content, red discoloration produced by the accumulation of secondary metabolites such as anthocyanins and carotenoids, or loss of photosynthetic tissue due to defoliation or necrosis [38,39]. Commonly, NIR, red, red-edge, and green bands are used to assess vegetation status due to high sensitivity to changes in moisture content, pigment indices, and vegetation health, respectively [20].

Based on their ability to reveal stress in eucalypts, a total of five vegetation indices were calculated using the available spectral reflectance bands from UAV imagery (Table 2). The difference vegetation index (DVI) and NDVI were estimated based on the evidence of correlation with plant stress [40,41]. The green normalized difference vegetation index (GNDVI) and normalized difference red-edge (NDRE) were also estimated, as both have shown high sensitivity to changes in chlorophyll concentrations [20,42,43,44]. In addition, some studies have shown that GNDVI has higher sensitivity to pigment changes than NDVI [45,46]. The soil-adjusted vegetation index (SAVI) was also estimated to reduce soil brightness influence [47].

Table 2.

Selected vegetation indices derived from unmanned aerial vehicle (UAV) imagery.

The values of the spectral indices were extracted for all features collected in the field using the zonal statistics tool provided by QGIS (Version 3.10) [48].
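As an illustration of how the five indices can be derived from the four SEQUOIA bands, the following minimal sketch uses the formulations commonly given in the literature. The function name, the soil adjustment factor of 0.5 for SAVI, and the reflectance values are assumptions made for this example and are not taken from Table 2.

```python
import numpy as np

def vegetation_indices(green, red, red_edge, nir, soil_factor=0.5):
    """Return the five indices as a dict of arrays (inputs are reflectances)."""
    green, red, red_edge, nir = (
        np.asarray(band, dtype=float) for band in (green, red, red_edge, nir)
    )

    def normalized_difference(a, b):
        total = a + b
        # Pixels where both bands are zero (no data) become NaN instead of failing.
        return np.divide(a - b, total, out=np.full_like(total, np.nan), where=total != 0)

    return {
        "DVI": nir - red,
        "NDVI": normalized_difference(nir, red),
        "GNDVI": normalized_difference(nir, green),
        "NDRE": normalized_difference(nir, red_edge),
        # soil_factor = 0.5 is the commonly used default, assumed here.
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }

# Hypothetical reflectances for one healthy and one dead crown pixel.
indices = vegetation_indices(
    green=[0.08, 0.10], red=[0.04, 0.15], red_edge=[0.30, 0.22], nir=[0.50, 0.25]
)
```

In this made-up example the healthy pixel yields a markedly higher NDVI than the dead one, which is the contrast the thresholding step relies on.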

2.4. Pixel-Based Analysis

2.4.1. Otsu Thresholding Method

The Otsu thresholding method is an unsupervised method used to select a threshold automatically from a gray level histogram [52]. This approach assumes that the image contains two classes of pixels following a bimodal histogram, and estimates a threshold value which splits the foreground and the background of an image [26,52,53,54]. The threshold value is calculated aiming to minimize intra-class intensity variance [52]. Although this method is usually applied to images with bimodal histograms, it might be also used for unimodal and multimodal histograms if the precision of the objects is not a requirement [53]. As stressed by Otsu [52], when the histogram valley is flat and broad, it is difficult to detect the threshold with precision. This phenomenon frequently occurs with real pictures and remote sensing imagery.

Generally, as eucalypt stands are aligned and understory vegetation is managed, tree and bare soil reflectance can be easily discriminated. Hence, by splitting soil and vegetation, gaps of eucalypt trees would be more visible. In this study, this method was applied to the vegetation indices (VIs) to separate healthy and dead eucalypts. Using the Scikit-image library [55] in Python (Version 3.6.9) [56], the histogram threshold values were calculated.
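The principle of the method can be sketched with a histogram-based implementation in NumPy. This is an illustrative re-implementation written for this example, not the Scikit-image routine used in the study, and the input values are synthetic rather than taken from the imagery.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Return the histogram bin centre that best separates two classes."""
    values = np.asarray(values, dtype=float).ravel()
    values = values[np.isfinite(values)]  # drop no-data pixels
    counts, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2

    # Class weights and class means for every candidate split of the histogram.
    weight_low = np.cumsum(counts)
    weight_high = np.cumsum(counts[::-1])[::-1]
    mean_low = np.cumsum(counts * centers) / weight_low
    mean_high = (np.cumsum((counts * centers)[::-1]) / weight_high[::-1])[::-1]

    # Maximizing the between-class variance is equivalent to
    # minimizing the intra-class variance.
    between = weight_low[:-1] * weight_high[1:] * (mean_low[:-1] - mean_high[1:]) ** 2
    return centers[np.argmax(between)]

# Synthetic NDVI-like values: a low (dead/soil) mode and a high (healthy) mode.
ndvi = np.concatenate([np.linspace(0.2, 0.4, 200), np.linspace(0.7, 0.9, 200)])
threshold = otsu_threshold(ndvi)
healthy_mask = ndvi > threshold
```

With two well-separated modes the threshold falls in the gap between them; when the histogram valley is flat and broad, the selected value is less stable.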

2.4.2. Local Maxima of a Sliding Window

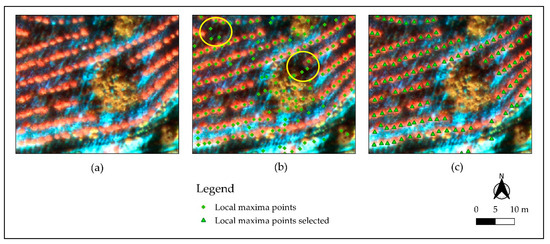

The extraction of tree locations can be performed on high-resolution imagery by applying a local maxima filter, as stressed by Wulder et al. [57,58,59] and Wang et al. [60]. Therefore, this algorithm enables the estimation of tree density, volume, basal area, and other important parameters used in forest management and planning [57]. The case of pest management is of special interest since the algorithm can be combined with other techniques in order to determine how many trees are infested or dead. To find the number of trees in the multispectral image, the QGIS Tree density plugin (Ghent University, Lieven P.C. Verbeke, Belgium) [61] was used on a brightness image calculated as the mean of the four bands. According to Crabbé et al. [61], this algorithm uses a sliding window that moves over the image. A pixel is marked as a local maximum when the central pixel is the brightest of the window. Figure 5 illustrates local maxima filtering using a sliding window.

Figure 5.

Highlighting local maxima filter performed: (a) false color composite (near infrared (NIR), red (R), green (G)), (b) local maxima points, and the yellow circle indicating false positives, (c) Local maxima points selected by visual interpretation.

In the next step, all false positives (Figure 5b) were removed through the interpretation of the false-color composite image (Figure 5c). The positions of the trees were used to estimate the number of individual dead and healthy trees. Finally, the selected local maxima points were also used to extract the crowns from the segmented image using spatial tools.
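The sliding-window rule described above can be sketched as follows. This is a simplified illustration and not the code of the QGIS Tree density plugin; the window size, the requirement that the maximum be unique, and the small brightness grid are assumptions made for the example.

```python
import numpy as np

def local_maxima(brightness, window=3):
    """Return (row, col) of pixels that are the single brightest of their window."""
    image = np.asarray(brightness, dtype=float)
    half = window // 2  # the window size is assumed to be odd
    padded = np.pad(image, half, mode="constant", constant_values=-np.inf)
    peaks = []
    for row in range(image.shape[0]):
        for col in range(image.shape[1]):
            block = padded[row:row + window, col:col + window]
            center = image[row, col]
            # Keep the pixel only if no other pixel in the window is as bright.
            if center == block.max() and np.count_nonzero(block == center) == 1:
                peaks.append((row, col))
    return peaks

# Hypothetical brightness image (e.g., the mean of the four bands) with two treetops.
brightness = np.array([
    [1, 1, 1, 1, 1],
    [1, 5, 1, 1, 1],
    [1, 1, 1, 1, 1],
    [1, 1, 1, 7, 1],
    [1, 1, 1, 1, 1],
])
treetops = local_maxima(brightness, window=3)
```

A larger window suppresses spurious peaks within a crown but may merge neighboring trees, which is one reason a visual check for false positives remains useful.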

2.5. Object-Based Analysis and Classification

Geographic object-based image analysis (GEOBIA), as an extension of object-based analysis, consists of dividing imagery into meaningful image-objects that group neighboring pixels with similar characteristics or semantic meanings such as spectral, shape (geometric), color, intensity, texture, or contextual measures [62,63,64]. As a result of the GEOBIA procedure, a vector file with several polygons or segments with similar properties is obtained instead of individual pixels. This approach is convenient for individual tree classification [65,66], reducing the intra-class spectral variability caused by crown textures, gaps, and shadows [67,68]. On the other hand, the main limitation of this method is related to over- and under-segmentation, which affect the subsequent classification process. These errors occur when the generated segments do not represent the real shape and area of the image objects [69].

Image segmentation was performed by applying the LSMS algorithm to the four stacked spectral bands. Mean shift, the algorithm underlying LSMS, was introduced by Fukunaga and Hostetler in 1975; it is a non-parametric, iterative process that groups pixels with similar meanings [70] by using the radiometric mean and variance of each band [71]. The selection of the LSMS algorithm was motivated by three main reasons: (1) LSMS is available in the ORFEO ToolBox (OTB) (CNES, Paris, France), which is an easy-to-use open-source project for remote-sensing imagery processing and analysis [72]; (2) LSMS has been especially developed for processing large very high resolution (VHR) images [70]; and (3) previous work has shown good results when using UAV imagery [68].
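The iterative idea behind mean shift can be illustrated with a toy example in which each sample is repeatedly moved to the mean of the samples lying within a given radius. This sketch uses a flat kernel on hypothetical one-dimensional values; it is not the OTB implementation, which operates on the joint spatial and spectral domain and processes large images in tiles.

```python
import numpy as np

def mean_shift_modes(points, radius, max_iter=100, tol=1e-6):
    """Shift every point to the mean of its neighbours until it stops moving."""
    points = np.asarray(points, dtype=float)
    shifted = points.copy()
    for _ in range(max_iter):
        largest_move = 0.0
        for i in range(len(shifted)):
            current = shifted[i].copy()
            # Flat kernel: neighbours are the original points within `radius`.
            near = points[np.linalg.norm(points - current, axis=1) <= radius]
            updated = near.mean(axis=0)
            largest_move = max(largest_move, np.linalg.norm(updated - current))
            shifted[i] = updated
        if largest_move < tol:
            break
    return shifted

# Hypothetical single-band values for five pixels (one feature per pixel).
pixels = np.array([[0.10], [0.12], [0.14], [0.80], [0.82]])
modes = mean_shift_modes(pixels, radius=0.1)
```

Pixels that converge to the same mode are grouped into one segment; the radius plays a role analogous to the range radius parameter of the segmentation.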

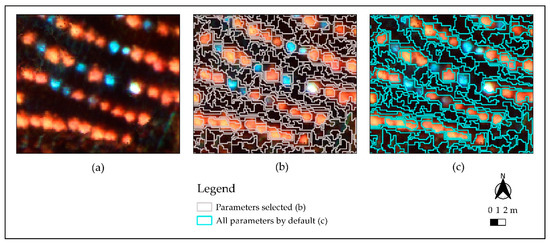

The segmentation technique is not a fully automated process, since it may not create the desired “meaningful” segments. Hence, segmentation parameters are commonly “optimized” to achieve the intended entities [63]. To this end, different segmentation parameters were tested using OTB version 7.1.0 and evaluated by visual observation. The chosen parameters were a spatial radius of 5 (default value), a range radius of 10, and a minimum segment size of 30. Subsequently, the mean band and VI values of each segment were computed. Figure 6 provides a visual comparison of the segmentation parameters tested.

Figure 6.

Highlighting some different segmentation parameters: (a) False color composite (near infrared (NIR), red (R), green (G)), (b) segmentation with selected parameters, (c) segmentation with all parameters by default.

Given the most suitable segmentation result, one more step was undertaken to improve the segments that correspond to tree canopies. This optimization consisted of obtaining tree position by applying the local maxima algorithm included in the Tree density plugin of QGIS [61]. By using this tool, bare soil and shadows were eliminated, as they might interfere with the identification of dead trees, which had mostly already lost their leaves.

In order to classify tree canopies into two different classes, healthy and dead trees, supervised machine-learning (ML) classification was used. Among the different object-oriented ML classifiers, the RF algorithm was applied, as it is one of the most accurate ML algorithms when supervised classification for GEOBIA is conducted [27,28,68,73]. Presented by Breiman [74], RF is an automatic ensemble method based on decision trees where each tree depends on a collection of random variables [75]. RF consists of a large number of independent individual decision trees working together, and the final output is estimated based on the outputs of all decision trees involved. The returned class is therefore the one predicted by the largest number of trees. Random selection of the variables used in each decision tree is key to avoiding correlation among decision trees. For this purpose, the so-called bootstrap is performed, and a significant percentage of samples of the training areas, drawn with replacement, is used to construct each decision tree. The remaining training areas, called out-of-bag (OOB) data, are used to validate the classification accuracy of RF [74,76]. Therefore, all the decision trees are always constructed using the same parameters but on different training sets.
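The bootstrap and voting mechanisms described above can be sketched in a few lines. This is a conceptual illustration only, not the OTB random forest implementation; the function names and the sample size of 1000 are hypothetical.

```python
import random
from collections import Counter

def bootstrap_split(n_samples, rng):
    """Draw n indices with replacement; indices never drawn are out-of-bag."""
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    out_of_bag = sorted(set(range(n_samples)) - set(in_bag))
    return in_bag, out_of_bag

def majority_vote(votes):
    """Return the class predicted by the largest number of trees."""
    return Counter(votes).most_common(1)[0][0]

rng = random.Random(42)
in_bag, out_of_bag = bootstrap_split(1000, rng)
# For large samples, roughly 1/e (about 37%) of the data ends up out-of-bag.
oob_share = len(out_of_bag) / 1000

# Hypothetical votes of three decision trees for one crown segment.
predicted_class = majority_vote(["healthy", "dead", "healthy"])
```

Because every tree sees a different bootstrap sample, the out-of-bag samples of each tree provide an internal accuracy estimate without a separate hold-out set.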

To supervise RF classification, 1241 out of 25,911 segments were manually selected as training areas based on field data and on-screen interpretation of different image color composites. A stratified division of the training areas was carried out according to the preset classes being divided as 80% for training and 20% for the model validation. As shown in previous studies [68,77], default values were set in OTB when RF was performed, since OTB parameters for training and classification processes worked optimally.
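A stratified 80/20 division of this kind can be sketched as below. The class balance used in the example (1000 healthy and 241 dead segments) is a hypothetical split of the 1241 training segments, chosen only to make the example concrete, and the function name and seed are likewise assumptions.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_share=0.8, seed=0):
    """Split sample indices so each class keeps the same train/validation ratio."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train, validation = [], []
    for members in by_class.values():
        rng.shuffle(members)
        cut = round(len(members) * train_share)
        train.extend(members[:cut])
        validation.extend(members[cut:])
    return sorted(train), sorted(validation)

# Hypothetical class balance for the 1241 training segments.
labels = ["healthy"] * 1000 + ["dead"] * 241
train_idx, validation_idx = stratified_split(labels)
```

Splitting within each class keeps the minority class (dead trees) represented in both subsets.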

The summary of the performance of the machine-learning RF training model can be observed in Table 3. For each canopy class, high precision and global model performance were obtained, as shown by the Kappa value of 0.96.

Table 3.

Summary of the performance of machine-learning random forest training model.

2.6. Accuracy Assessment

Because it is a very effective way to represent both global accuracy and individual class accuracy, an error matrix was computed to determine the agreement between the classified image and the ground truth data with regard to tree health status [78]. Field data were collected through stratified random sampling for validation purposes. From the error matrix, the overall accuracy, commission error (CE), omission error (OE), producer’s accuracy (PA), and user’s accuracy (UA) were derived. CE describes the error made when objects are assigned to a category they do not belong to, whereas OE represents the error made when objects are left out of the category they belong to [79]. In addition, the Kappa (κ) statistic was estimated to quantify the agreement in the error matrix beyond that expected by chance, by determining the degree of association between the remotely sensed classification and the reference data [78,80].
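The measures listed above follow directly from the error matrix, as the short sketch below shows. The matrix values are hypothetical and do not correspond to Table 5 or Table 6; rows are taken to be reference classes and columns classified classes, which is an assumed convention.

```python
def accuracy_report(matrix, classes):
    """matrix[i][j] = samples of reference class i classified as class j."""
    n = len(classes)
    total = sum(sum(row) for row in matrix)
    reference_totals = [sum(row) for row in matrix]
    classified_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    correct = sum(matrix[i][i] for i in range(n))

    overall = correct / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(reference_totals[i] * classified_totals[i] for i in range(n)) / total ** 2
    kappa = (overall - expected) / (1 - expected)

    per_class = {}
    for i, name in enumerate(classes):
        producers = matrix[i][i] / reference_totals[i]
        users = matrix[i][i] / classified_totals[i]
        per_class[name] = {"PA": producers, "OE": 1 - producers,
                           "UA": users, "CE": 1 - users}
    return overall, kappa, per_class

# Hypothetical error matrix for the two canopy classes.
overall, kappa, per_class = accuracy_report([[90, 10], [5, 95]], ["healthy", "dead"])
```

Here omission error is the complement of producer’s accuracy and commission error the complement of user’s accuracy, so only the two accuracies need to be computed from the matrix.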

The classifications obtained with Otsu’s method were validated with the data collected in the field. The supervised classification with the random forest was validated with 2% (517 samples) of the segments, selected by stratified random sampling using the research tools provided by QGIS [48].

2.7. Density Forest Maps

The results of the classification were joined to the tree position layer because of the slightly touching trees phenomenon described by Wang et al. [60]. Counting the number of trees directly from the classification results could introduce error because the tree crowns are not always clearly separated.

Density maps were created by grouping the positions of the trees with the hexagonal binning technique, using hexagons of 0.1 ha. This strategy serves to minimize the effect of the irregular shape of the stand limits and to aggregate the trees into discrete groups. More importantly, hexagons are more similar to circles than squares or diamonds are [81]. This technique was first described by Carr et al. [82]. According to Carr [83], maps based on hexagon tessellations offer the opportunity to summarize point data and show the patterns for a single variable, which in this case was done for the two canopy types.
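The binning step can be sketched as follows: the side length of a 0.1 ha (1000 m²) regular hexagon is derived from its area, and each tree position is then assigned to a hexagon index. The pointy-top orientation, the axial indexing scheme, and the tree coordinates are assumptions made for this illustration and do not describe the GIS tool used in the study.

```python
import math
from collections import Counter

def hexagon_side(area_m2):
    """Side length of a regular hexagon of given area (A = 3*sqrt(3)/2 * s**2)."""
    return math.sqrt(2 * area_m2 / (3 * math.sqrt(3)))

def hex_cell(x, y, side):
    """Axial (q, r) index of the pointy-top hexagon containing point (x, y)."""
    q = (math.sqrt(3) / 3 * x - y / 3) / side
    r = (2 / 3 * y) / side
    s = -q - r
    # Cube rounding: round all three coordinates, then correct the one
    # with the largest rounding error so that q + r + s = 0 still holds.
    rq, rr, rs = round(q), round(r), round(s)
    dq, dr, ds = abs(rq - q), abs(rr - r), abs(rs - s)
    if dq > dr and dq > ds:
        rq = -rr - rs
    elif dr > ds:
        rr = -rq - rs
    return rq, rr

side = hexagon_side(1000.0)  # about 19.6 m for a 0.1 ha hexagon

# Hypothetical tree positions in projected metres.
trees = [(0.0, 0.0), (1.0, 1.0), (2.0, -3.0), (34.0, 0.0)]
trees_per_hexagon = Counter(hex_cell(x, y, side) for x, y in trees)
```

Counting healthy and dead trees separately per hexagon index gives the two quantities mapped in the density and mortality products.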

3. Results

3.1. Spectra Details and Vegetation Indexes

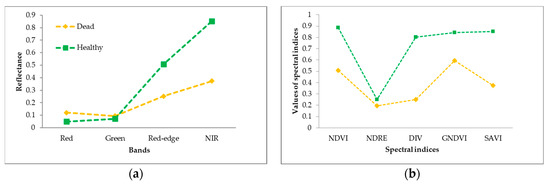

Spectra details of tree canopies are shown in Figure 7a, with the mean reflectance values for each band. NIR spectral region revealed the largest differences between the two tree classes, and therefore the highest discriminating power when compared with red-edge and other bands. As shown in Figure 7b, all indices have discriminative capability, although the NDRE index showed the smallest interval difference.

Figure 7.

Spectral signatures details of all tree canopies: (a) extracted mean values of bands, (b) extracted mean values of vegetation indices (VIs) selected.

3.2. Pixel-Based Analysis

Otsu Thresholding Analysis

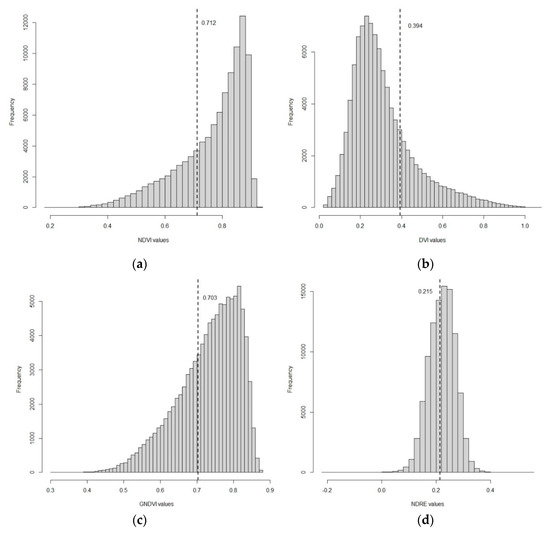

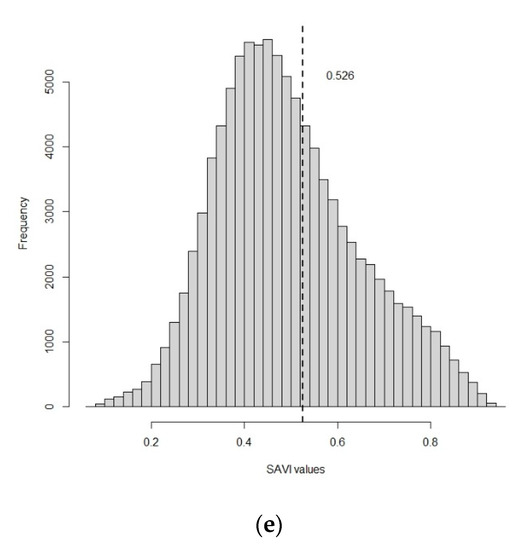

Imagery interval values and histogram threshold values obtained from the Otsu thresholding method are shown in Table 4 and Figure A1 (Appendix A). The DVI index imagery interval ranged between 0.020 and 1.150, with the threshold value set at 0.394. The GNDVI and NDVI indices showed similar histogram thresholds, with global minima of 0.703 and 0.712, respectively. Their imagery intervals ranged between 0.150 and 0.930 for the NDVI index and between 0.248 and 0.890 for the GNDVI index. The other two indices, NDRE and SAVI, had imagery intervals between −0.339 and 0.660 and between 0.057 and 0.983, respectively, whereas their threshold values were 0.215 and 0.526, respectively.

Table 4.

Summary of threshold values based on the Otsu method.

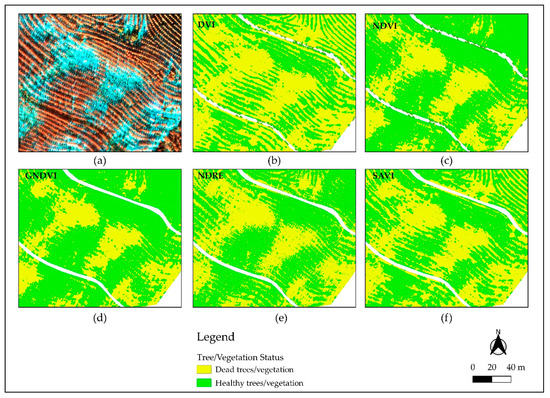

Figure 8 highlights the threshold values of all spectral indices by applying the Otsu thresholding method. If compared with the false-color composite in Figure 8a, where red corresponds to healthy trees and blue-grey to dead trees mixed with bare soil, all indices showed good performance to discriminate between healthy and dead trees. Hence, the application of Otsu methodology seemed to improve the differentiation between classes, at least in planted stands where there is active silvicultural management.

Figure 8.

Contrast between healthy and dead trees for all spectral indices by applying the Otsu thresholding method: (a) highlighting of false-color composite, (b) DVI highlighting dead and healthy trees, (c) NDVI highlighting dead and healthy trees, (d) GNDVI highlighting dead and healthy trees, (e) NDRE highlighting dead and healthy trees, (f) SAVI highlighting dead and healthy trees.

3.3. Object-Based Analysis and Classification

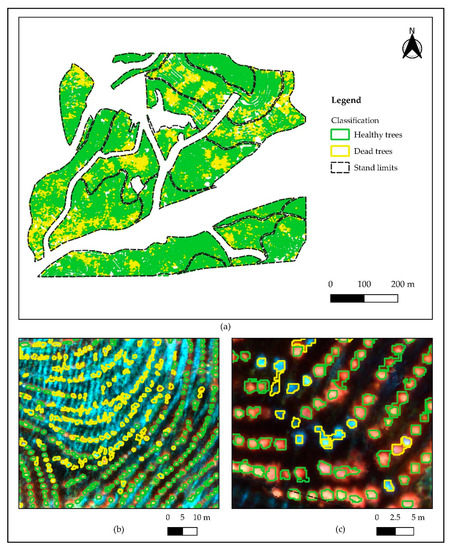

Discrimination of tree canopy status was performed through the classification of crown segments extracted from the points generated with local maxima filtering by applying a machine-learning random forest algorithm (Figure 9). The spectral indices and band information of each segment allowed the identification of tree canopy status (Figure 9b). Large-scale mean-shift segmentation algorithm performed through OTB had good performance, improving the ability to obtain meaningful segments, which in turn resulted in improvements in RF classification capability (Figure 9c).

Figure 9.

Discrimination of tree canopy status: (a) general overview of random forest (RF) classification, (b) highlighting dead and healthy trees, (c) highlighting in detail dead trees in yellow and healthy trees in green.

3.4. Accuracy Assessment

3.4.1. Otsu Thresholding

Histogram thresholding analysis and RF classifier were validated by estimating the confusion matrix and Kappa statistic (Table 5 and Table 6). The confusion matrix to classify tree status with NDVI histogram analysis had the highest overall accuracy (98.2%) and Kappa value (0.96). On the other hand, NDRE histogram analysis had the lowest performance, with 86.4% overall accuracy and a Kappa value of 0.73. The other spectral indices histogram analyses had a similar performance.

Table 5.

Summary of the confusion matrix and Kappa statistics (K) of selected vegetation indices, validated with field data collection.

Table 6.

Confusion matrix of the RF machine learning classification model, with 517 observations selected by stratified random sampling.

3.4.2. Object-Based Analysis and Classification

To perform the error matrix for machine-learning RF, 517 predicted observations were used, 431 for healthy trees and 86 for dead trees. Predicted observations were selected by stratified random sampling using QGIS tools (Table 6). Overall accuracy was 98.5%, which means that this model had the highest accuracy of all the models tested, while the Kappa value was 0.94.

3.5. Density Forest Maps

After RF classification, the tree canopy condition data (healthy and dead) were validated, and two different outputs were created by applying hexagonal binning. Both products are representations of the classified data intended to support management decisions. Because the data had already been validated before the density maps were created and no new data were generated, further validation may not be required.

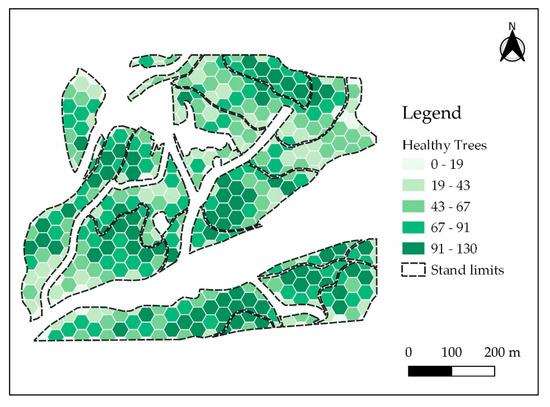

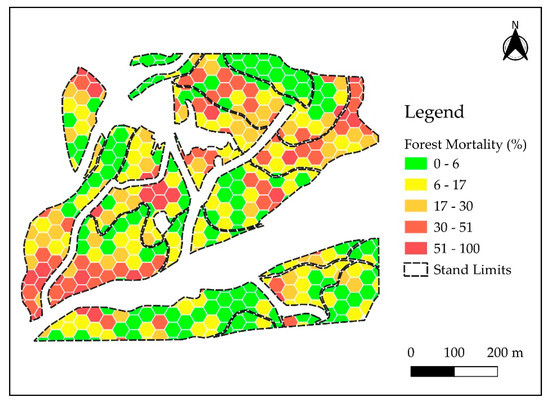

Hexagonal binning was used to aggregate trees over small areas and thereby identify hotspots and the density of healthy trees (Figure 10). A total of 422 hexagons were produced, each with an area of 0.1 ha, that is, one-tenth of a hectare, the standard unit of measure. The hexagon with the highest number of healthy trees had 130, while there was only one hexagon where all the trees were dead. Considering the total number of trees classified, 16 hexagons did not contain any dead trees, whereas 18 hexagons had more than 40 dead trees (Figure 11). On average, each hexagon was expected to have an initial density of 125 trees, since the planting density was 1250 trees per hectare.

Figure 10.

The number of healthy trees in each hexagon (natural breaks classification).

Figure 11.

Percentage of mortality in each hexagon (natural breaks classification).

The total number of trees recorded in the studied stand was 28,271, of which 4507 were dead. Since the total stand area is 30.56 ha, initial stocking would have been about 38,000 trees at plantation, representing a 25% difference. This discrepancy may be due to leafless canopies or to trees missing from early mortality events, which are common shortly after establishment.
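The stocking comparison can be reproduced from the figures given in the text, as in the short calculation below. The variable names are illustrative, and the computation uses the unrounded product of area and planting density, so the shortfall comes out at roughly one quarter, in line with the approximate value quoted above.

```python
stand_area_ha = 30.56
planting_density = 1250   # plants per hectare at establishment
trees_detected = 28_271   # trees recorded from the imagery
dead_trees = 4_507

# Expected number of trees at plantation (about 38,000).
expected_at_planting = stand_area_ha * planting_density

# Share of the initial stocking that was not detected.
shortfall = 1 - trees_detected / expected_at_planting

# Share of the detected trees classified as dead.
mortality_share = dead_trees / trees_detected

print(f"expected: {expected_at_planting:.0f}, "
      f"shortfall: {shortfall:.1%}, dead: {mortality_share:.1%}")
```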

Forest mortality maps for the plantation area (Figure 11) allow the identification of the most critical spots and are important in the planning of dedicated surveying or sanitary fellings to remove infested trees thus reducing ELB local populations and minimizing future outbreaks.

4. Discussion

This study explored the capability of multispectral images captured with a Parrot SEQUOIA camera to detect the mortality caused by ELB in E. globulus plantations. Before establishing a flight plan, the phenology of the trees and the life cycle of the insect must be known, in order to extract as much information as possible. The spatial resolution of the images obtained was 17 cm, which allowed the reduction of spatial scale to the individual tree level.

The NIR band had the greatest discriminating power of all the spectral bands analyzed and provided more information about the different tree canopies. As stressed by Otsu et al. [26], the NIR band was more sensitive to defoliation than the red-edge band, as observed in Figure 7b. Regarding the remaining bands, the reflectance of the green band was generally higher than that of the red band because of the high absorption related to chlorophyll content [84]. Spectral indices whose calculation includes the NIR band, such as NDVI, also showed highly discriminating behavior. This VI is widely used to assess defoliation and damage at high spatial resolutions using multispectral and RGB cameras mounted on UAVs [12,22,23,26,85,86].
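As an illustration of how such indices combine the bands, the sketch below computes the five indices named in this study (NDVI, DVI, GNDVI, NDRE and SAVI) for a single pixel using their commonly cited textbook definitions. The function names, the SAVI soil factor of 0.5 and the reflectance values are assumptions made for the example, not values taken from the study.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    denominator = a + b
    return (a - b) / denominator if denominator != 0 else 0.0


def vegetation_indices(green, red, red_edge, nir, soil_factor=0.5):
    """Compute five common vegetation indices from band reflectances.

    soil_factor is the L term of SAVI; 0.5 is a commonly used default
    and is an assumption here.
    """
    return {
        "NDVI": normalized_difference(nir, red),
        "DVI": nir - red,
        "GNDVI": normalized_difference(nir, green),
        "NDRE": normalized_difference(nir, red_edge),
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }


# Hypothetical reflectances for a healthy and a dead crown (illustration only).
healthy = vegetation_indices(green=0.08, red=0.04, red_edge=0.25, nir=0.45)
dead = vegetation_indices(green=0.10, red=0.12, red_edge=0.18, nir=0.22)

for name in healthy:
    print(f"{name:5s} healthy={healthy[name]:.3f} dead={dead[name]:.3f}")
```

With these made-up inputs, every index is higher for the healthy crown than for the dead one, which is the contrast that a threshold on the index is meant to exploit.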

Histogram analysis allowed discrimination between healthy and dead trees. Although the histogram valley was difficult to locate for the spectral indices, this method may become a valid alternative for separating the two canopy types. NDVI was the most accurate index, with an overall accuracy of 98.2% and a Kappa value of 0.96. The second most accurate was GNDVI, with an overall accuracy of 97.4% and a Kappa value of 0.95. NDRE was the least accurate index, with an overall accuracy of 84.4% and a Kappa value of 0.73. Similar overall accuracy values were obtained by Otsu et al. [26] when detecting defoliation of pine needles by the pine processionary moth at four different locations. However, in our study, the NIR band was not used to remove shadows, as was done by Miura and Midorikawa [87]. To minimize the shadow effect, the flight was undertaken at solar noon, so that treetops could be easily captured, with shadows present mostly along the plantation lines.
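The principle of Otsu thresholding, which picks the histogram cut that maximizes the between-class variance, can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function name, the bin count and the synthetic NDVI values are hypothetical. In practice a library routine such as `skimage.filters.threshold_otsu` would normally be used.

```python
def otsu_threshold(values, bins=256):
    """Return the cut that maximizes the between-class variance of `values`."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo
    width = (hi - lo) / bins

    # Build the histogram and the bin centers.
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]

    total = len(values)
    sum_all = sum(c * h for c, h in zip(centers, hist))

    weight_low, sum_low = 0, 0.0
    best_variance, best_threshold = -1.0, lo
    for i in range(bins - 1):
        weight_low += hist[i]
        sum_low += centers[i] * hist[i]
        weight_high = total - weight_low
        if weight_low == 0 or weight_high == 0:
            continue
        mean_low = sum_low / weight_low
        mean_high = (sum_all - sum_low) / weight_high
        # Between-class variance, up to a constant factor of 1 / total**2.
        variance = weight_low * weight_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_variance = variance
            best_threshold = lo + (i + 1) * width  # upper edge of bin i
    return best_threshold


# Synthetic, clearly bimodal NDVI sample (hypothetical values).
dead_ndvi = [0.20, 0.22, 0.25, 0.28, 0.30] * 20
healthy_ndvi = [0.80, 0.82, 0.85, 0.88, 0.90] * 80
threshold = otsu_threshold(dead_ndvi + healthy_ndvi)

# Pixels above the threshold are labeled healthy, the rest dead.
labels = ["healthy" if v > threshold else "dead" for v in dead_ndvi + healthy_ndvi]
print(f"threshold={threshold:.3f}, dead={labels.count('dead')}, healthy={labels.count('healthy')}")
```

When the two modes overlap, as was reported for NDRE, the valley is less distinct and the resulting cut separates the classes less cleanly.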

The combination of segmentation and local maximum filtering allowed the treetops to be extracted and classified. Applying this method removed shadows, other vegetation, and bare soil, the last of which has a reflectance very close to that of dead trees. The RF machine-learning classifier obtained an overall accuracy of 98.5% and a Kappa value of 0.94. This accuracy is explained by the large separation between the reflectance values of the two classes. Iordache et al. [28] applied an RF classifier to Pinus pinaster canopy types (infected, suspicious, and healthy) affected by pine wilt disease and obtained an overall accuracy of 95%. Pourazar et al. [27] obtained an overall accuracy of 95.58% using five spectral bands and five indices to detect dead and diseased trees.
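Overall accuracy and the Kappa value are both derived from a confusion matrix of reference versus predicted classes. The sketch below shows the calculation; the function name and the example matrix are hypothetical and do not reproduce the validation data of this study.

```python
def accuracy_and_kappa(matrix):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    Rows are reference classes and columns are predicted classes.
    """
    total = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(len(matrix))) / total

    # Agreement expected by chance, from the row and column totals.
    row_totals = [sum(row) for row in matrix]
    column_totals = [sum(column) for column in zip(*matrix)]
    expected = sum(r * c for r, c in zip(row_totals, column_totals)) / total ** 2

    if expected == 1:
        return observed, 0.0  # degenerate case: only one class present
    return observed, (observed - expected) / (1 - expected)


# Hypothetical validation sample: rows/columns are (healthy, dead).
example = [
    [95, 5],
    [3, 97],
]
overall_accuracy, kappa = accuracy_and_kappa(example)
print(f"overall accuracy={overall_accuracy:.3f}, kappa={kappa:.3f}")
```

Because Kappa discounts chance agreement, it depends on the class proportions in the validation sample, so a higher overall accuracy does not necessarily yield a higher Kappa value.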

The forest density maps produced through hexagonal tessellation aimed to group the positions of the classified trees. Their ease of reading allows the identification of the most critical areas of tree mortality and the extraction of important metrics for forest management, such as the total number of trees, the standard deviation, and other landscape metrics. Barreto et al. [88] set up a hexagonal grid to represent classes of natural Cerrado vegetation in the Northeast of Brazil, in an area of about 25,590 km², to study the remaining habitats through quantitative indices. More recently, Amaral et al. [89] used a hexagonal grid subdivided into 1000 ha units to study the restinga (a type of coastal vegetation) in Northern Brazil.
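The aggregation of classified treetops into hexagons can be sketched as follows. This is a minimal illustration, assuming projected coordinates in meters, pointy-top hexagons and a grid origin at (0, 0); the function names and the three example trees are hypothetical, and the study's own grid was produced with GIS software rather than with this code.

```python
import math
from collections import defaultdict


def hexagon_side(area_m2):
    """Side length of a regular hexagon with the given area."""
    return math.sqrt(2 * area_m2 / (3 * math.sqrt(3)))


def _round_axial(q, r):
    """Round fractional axial coordinates to the nearest hexagon."""
    s = -q - r
    rq, rr, rs = round(q), round(r), round(s)
    dq, dr, ds = abs(rq - q), abs(rr - r), abs(rs - s)
    # Recompute the coordinate with the largest rounding error.
    if dq > dr and dq > ds:
        rq = -rr - rs
    elif dr > ds:
        rr = -rq - rs
    return rq, rr


def point_to_hex(x, y, side):
    """Axial (q, r) index of the pointy-top hexagon containing (x, y)."""
    q = (math.sqrt(3) / 3 * x - y / 3) / side
    r = (2 / 3 * y) / side
    return _round_axial(q, r)


def mortality_by_hexagon(trees, side):
    """Count healthy and dead trees per hexagon and derive mortality (%)."""
    counts = defaultdict(lambda: {"healthy": 0, "dead": 0})
    for x, y, status in trees:
        counts[point_to_hex(x, y, side)][status] += 1
    return {
        cell: {**c, "mortality_pct": 100 * c["dead"] / (c["healthy"] + c["dead"])}
        for cell, c in counts.items()
    }


side = hexagon_side(1000.0)  # 0.1 ha = 1000 square meters
trees = [
    (0.0, 0.0, "healthy"),
    (1.0, 1.0, "dead"),
    (math.sqrt(3) * side, 0.0, "dead"),  # center of the neighboring hexagon
]
summary = mortality_by_hexagon(trees, side)
for cell, stats in sorted(summary.items()):
    print(cell, stats)
```

A hexagon of 0.1 ha has a side of roughly 19.6 m, so each cell spans several planting rows, which is what makes the per-cell counts comparable with the expected planting density.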

The current strategy to control ELB relies mostly on the identification of dead trees and their removal from plantations before a new generation of adults emerges in late spring. Traditional field surveys are extremely labor-intensive, as they require visual assessment of large eucalypt plantations. As a result, outbreaks are often only detected when large numbers of trees are already dead and ELB populations are high. By allowing the identification of individual infested trees in a much more efficient way, widespread application of the methods described in this study will allow forest managers to detect ELB attacks at an early stage, thus reducing the cost and increasing the efficiency of sanitary fellings.

Future work will focus on adding classes to the survey in order to improve the discrimination of tree health status. A UAS-derived canopy model could be integrated into a 3D tree model to automatically outline individual tree crowns. Another planned improvement is to implement periodic flights at different attack stages to provide a multitemporal analysis. Further research might focus on other remote-sensing platforms at different scales and consider different bioclimatic and geographical settings.

5. Conclusions

In this experimental research, ELB attack detection and assessment were proposed at the pixel and object-based levels using UAV multispectral imagery. The NIR band showed discriminative power for the two canopy classes. In general, all selected spectral indices were effective in discriminating healthy from dead trees. Otsu thresholding analysis resulted in high overall accuracy and Kappa values for most of the vegetation indices used, with the exception of NDRE. The RF machine-learning algorithm applied also resulted in high overall accuracy (98.5%) and Kappa value (0.94). From an operational point of view, these results can have important implications for forest managers and practitioners. In particular, forest mortality and density maps using hexagonal tessellations allow a complete survey and an accurate measure of the scale of the problem and of where the most critical hotspots of mortality are located. With careful planning of the time of year when these surveys are carried out, the timely quantification of mortality rates becomes an important input for ensuring that phytosanitary fellings are conducted as early and as thoroughly as possible.

Author Contributions

Conceptualization, A.D. and C.V.; methodology, A.D., L.A.-M. and C.I.G.; software, A.D. and A.S.; validation, A.D.; formal analysis, A.D. and L.A.-M.; data curation, A.D.; writing—original draft preparation, A.D.; writing—review and editing, A.D., L.A.-M., C.I.G., L.M., A.S., M.S., S.F., N.B. and C.V.; supervision, C.V.; project administration, M.S., S.F., N.B.; funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This study has been developed within the context of the MySustainableForest project, which has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 776045.

Acknowledgments

The authors would like to thank Fernando Luis Arede for his support in field data collection, João Rocha for the English language check, João Gaspar for his insights, and José Vasques for providing operational data and access to the study area.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Histograms of Otsu Thresholding Analysis

Figure A1.

Histogram and Otsu thresholding values of (a) NDVI, (b) DVI, (c) GNDVI, (d) NDRE and (e) SAVI.

References

- Rassati, D.; Lieutier, F.; Faccoli, M. Alien Wood-Boring Beetles in Mediterranean Regions. In Insects and Diseases of Mediterranean Forest Systems; Paine, T.D., Lieutier, F., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 293–327. [Google Scholar]

- Estay, S. Invasive insects in the Mediterranean forests of Chile. In Insects and Diseases of Mediterranean Forest Systems; Paine, T.D., Lieutier, F., Eds.; Springer: Cham, Switzerland, 2016; pp. 379–396. [Google Scholar]

- Hanks, L.M.; Paine, T.D.; Millar, J.C.; Hom, J.L. Variation among Eucalyptus species in resistance to eucalyptus longhorned borer in Southern California. Entomol. Exp. Appl. 1995, 74, 185–194. [Google Scholar] [CrossRef]

- Mendel, Z. Seasonal development of the eucalypt borer Phoracantha semipunctata, in Israel. Phytoparasitica 1985, 13, 85–93. [Google Scholar] [CrossRef]

- Hanks, L.M.; Paine, T.D.; Millar, J.C. Mechanisms of resistance in Eucalyptus against larvae of the Eucalyptus Longhorned Borer (Coleoptera: Cerambycidae). Environ. Entomol. 1991, 20, 1583–1588. [Google Scholar] [CrossRef]

- Paine, T.D.; Millar, J.C. Insect pests of eucalypts in California: Implications of managing invasive species. Bull. Entomol. Res. 2002, 92, 147–151. [Google Scholar] [CrossRef] [PubMed]

- Caldeira, M.C.; Fernández, V.; Tomé, J.; Pereira, J.S. Positive effect of drought on longicorn borer larval survival and growth on eucalyptus trunks. Ann. For. Sci. 2002, 59, 99–106. [Google Scholar] [CrossRef]

- Soria, F.; Borralho, N.M.G. The genetics of resistance to Phoracantha semipunctata attack in Eucalyptus globulus in Spain. Silvae Genet. 1997, 46, 365–369. [Google Scholar]

- Seaton, S.; Matusick, G.; Ruthrof, K.X.; Hardy, G.E.J. Outbreaks of Phoracantha semipunctata in response to severe drought in a Mediterranean Eucalyptus forest. Forests 2015, 6, 3868–3881. [Google Scholar] [CrossRef]

- Tirado, L.G. Phoracantha semipunctata dans le Sud-Ouest espagnol: Lutte et dégâts. Bull. EPPO 1986, 16, 289–292. [Google Scholar] [CrossRef]

- Wotherspoon, K.; Wardlaw, T.; Bashford, R.; Lawson, S. Relationships between annual rainfall, damage symptoms and insect borer populations in midrotation Eucalyptus nitens and Eucalyptus globulus plantations in Tasmania: Can static traps be used as an early warning device? Aust. For. 2014, 77, 15–24. [Google Scholar] [CrossRef]

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14. [Google Scholar] [CrossRef]

- Ortiz, S.M.; Breidenbach, J.; Kändler, G. Early detection of bark beetle green attack using Terrasar-X and RapidEye data. Remote Sens. 2013, 5, 1912–1931. [Google Scholar] [CrossRef]

- Wulder, M.A.; Dymond, C.C.; White, J.C.; Leckie, D.G.; Carroll, A.L. Surveying mountain pine beetle damage of forests: A review of remote sensing opportunities. For. Ecol. Manag. 2006, 221, 27–41. [Google Scholar] [CrossRef]

- Hall, R.; Castilla, G.; White, J.C.; Cooke, B.; Skakun, R. Remote sensing of forest pest damage: A review and lessons learned from a Canadian perspective. Can. Entomol. 2016, 148, 296–356. [Google Scholar] [CrossRef]

- Senf, C.; Seidl, R.; Hostert, P. Remote sensing of forest insect disturbances: Current state and future directions. Int. J. Appl. Earth Obs. Geoinf. 2017, 60, 49–60. [Google Scholar] [CrossRef]

- Gómez, C.; Alejandro, P.; Hermosilla, T.; Montes, F.; Pascual, C.; Ruiz, L.A.; Álvarez-Taboada, F.; Tanase, M.; Valbuena, R. Remote sensing for the Spanish forests in the 21st century: A review of advances, needs, and opportunities. For. Syst. 2019, 28, 1. [Google Scholar] [CrossRef]

- Meddens, A.J.H.; Hicke, J.A.; Vierling, L.A.; Hudak, A.T. Evaluating methods to detect bark beetle-caused tree mortality using single-date and multi-date Landsat imagery. Remote Sens. Environ. 2013, 132, 49–58. [Google Scholar] [CrossRef]

- Waser, L.T.; Küchler, M.; Jütte, K.; Stampfer, T. Evaluating the Potential of WorldView-2 Data to Classify Tree Species and Different Levels of Ash Mortality. Remote Sens. 2014, 6, 4515–4545. [Google Scholar] [CrossRef]

- Oumar, Z.; Mutanga, O. Using WorldView-2 bands and indices to predict bronze bug (Thaumastocoris peregrinus) damage in plantation forests. Int. J. Remote Sens. 2013, 34, 2236–2249. [Google Scholar] [CrossRef]

- Stone, C.; Coops, N.C. Assessment and monitoring of damage from insects in Australian eucalypt forests and commercial plantations. Aust. J. Entomol. 2004, 43, 283–292. [Google Scholar] [CrossRef]

- Lehmann, J.R.K.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of Unmanned Aerial System–Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels. Forests 2015, 6, 594–612. [Google Scholar] [CrossRef]

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV–Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree–Level. Remote Sens. 2015, 7, 15467–15493. [Google Scholar] [CrossRef]

- Smigaj, M.; Gaulton, R.; Barr, S.L.; Suárez, J.C. UAV–Borne Thermal Imaging for Forest Health Monitoring: Detection of Disease–Induced Canopy Temperature Increase. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 349–354. [Google Scholar] [CrossRef]

- Otsu, K.; Pla, M.; Vayreda, J.; Brotons, L. Calibrating the Severity of Forest Defoliation by Pine Processionary Moth with Landsat and UAV Imagery. Sensors 2018, 18, 3278. [Google Scholar] [CrossRef] [PubMed]

- Otsu, K.; Pla, M.; Duane, A.; Cardil, A.; Brotons, L. Estimating the threshold of detection on tree crown defoliation using vegetation indices from UAS multispectral imagery. Drones 2019, 3, 80. [Google Scholar] [CrossRef]

- Pourazar, H.; Samadzadegan, F.; Javan, F. Aerial multispectral imagery for plant disease detection: Radiometric calibration necessity assessment. Eur. J. Remote Sens. 2019, 52 (Suppl. S3), 17–31. [Google Scholar] [CrossRef]

- Iordache, M.D.; Mantas, V.; Baltazar, E.; Pauly, K.; Lewyckyj, N. A Machine Learning Approach to Detecting Pine Wilt Disease Using Airborne Spectral Imagery. Remote Sens. 2020, 12, 2280. [Google Scholar] [CrossRef]

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391. [Google Scholar] [CrossRef]

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797. [Google Scholar] [CrossRef]

- FAO. FAO–UNESCO Soil Map of the World. Revised Legend. In World Soil Resources Report; FAO: Rome, Italy, 1998; Volume 60. [Google Scholar]

- SenseFly Parrot Group. Parrot Sequoia Multispectral Camera. Available online: https://www.sensefly.com/camera/parrot-sequoia/ (accessed on 31 August 2020).

- Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation monitoring using multispectral sensors—Best practices and lessons learned from high latitudes. J. Unmanned Veh. Syst. 2019, 7, 54–75. [Google Scholar] [CrossRef]

- Pix4D. Pix4D—Drone Mapping Software. Version 4.2. Available online: https://pix4d.com/ (accessed on 31 August 2020).

- Nobuyuki, K.; Hiroshi, T.; Xiufeng, W.; Rei, S. Crop classification using spectral indices derived from Sentinel-2A imagery. J. Inf. Telecommun. 2020, 4, 67–90. [Google Scholar]

- Cogato, A.; Pagay, V.; Marinello, F.; Meggio, F.; Grace, P.; De Antoni Migliorati, M. Assessing the Feasibility of Using Sentinel-2 Imagery to Quantify the Impact of Heatwaves on Irrigated Vineyards. Remote Sens. 2019, 11, 2869. [Google Scholar] [CrossRef]

- Hawryło, P.; Bednarz, B.; Wezyk, P.; Szostak, M. Estimating defoliation of scots pine stands using machine learning methods and vegetation indices of Sentinel-2. Eur. J. Remote Sens. 2018, 51, 194–204. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Miller, J.R.; Mohammed, G.H.; Noland, T.L.; Sampson, P.H. Vegetation stress detection through Chlorophyll a + b estimation and fluorescence effects on hyperspectral imagery. J. Environ. Qual. 2002, 31, 1433–1441. [Google Scholar] [CrossRef] [PubMed]

- Barry, K.M.; Stone, C.; Mohammed, C. Crown-scale evaluation of spectral indices for defoliated and discoloured eucalypts. Int. J. Remote Sens. 2008, 29, 47–69. [Google Scholar] [CrossRef]

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115. [Google Scholar] [CrossRef]

- Verbesselt, J.; Robinson, A.; Stone, C.; Culvenor, D. Forecasting tree mortality using change metrics from MODIS satellite data. For. Ecol. Manag. 2009, 258, 1166–1173. [Google Scholar] [CrossRef]

- Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a+ b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121. [Google Scholar] [CrossRef]

- Datt, B. Visible/near infrared reflectance and chlorophyll content in Eucalyptus leaves. Int. J. Remote Sens. 1999, 20, 2741–2759. [Google Scholar] [CrossRef]

- Deng, X.; Guo, S.; Sun, L.; Chen, J. Identification of Short-Rotation Eucalyptus Plantation at Large Scale Using Multi-Satellite Imageries and Cloud Computing Platform. Remote Sens. 2020, 12, 2153. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N. Signature analysis of leaf reflectance spectra: Algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 1996, 148, 494–500. [Google Scholar] [CrossRef]

- Olsson, P.O.; Jönsson, A.M.; Eklundh, L. A new invasive insect in Sweden—Physokermes inopinatus: Tracing forest damage with satellite based remote sensing. For. Ecol. Manag. 2012, 285, 29–37. [Google Scholar] [CrossRef]

- Huete, A.R. A soil adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- QGIS Community. QGIS Geographic Information System. Open Source Geospatial Foundation Project. 2020. Available online: https://qgis.org (accessed on 31 August 2020).

- Richardson, A.J.; Weigand, C.L. Distinguishing vegetation from background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552. [Google Scholar]

- Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of Crop Water Stress, Nitrogen Status and Canopy Density Using Ground-Based Multispectral Data [CD Rom]. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000. [Google Scholar]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef]

- Otsu, N. A Threshold Selection Method from Gray–Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Goncalves, H.; Corte-Real, L.; Goncalves, J.A. Automatic image registration through image segmentation and SIFT. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2589–2600. [Google Scholar] [CrossRef]

- Goh, T.Y.; Basah, S.N.; Yazid, H.; Safar, M.J.A.; Saad, F.S.A. Performance analysis of image thresholding: Otsu technique. Measurement 2018, 114, 298–307. [Google Scholar] [CrossRef]

- Van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T.; Scikit-image Contributors. Scikit-Image: Image processing in Python. PeerJ 2014, 2, e453. [Google Scholar] [CrossRef]

- Van Rossum, G.; De Boer, J. Interactively testing remote servers using the Python programming language. CWI Q. 1991, 4, 283–303. [Google Scholar]

- Wulder, M.; Niemann, K.; Goodenough, D.G. Local maximum filtering for the extraction of tree locations and basal area from high spatial resolution imagery. Remote Sens. Environ. 2000, 73, 103–114. [Google Scholar] [CrossRef]

- Wulder, M.A.; Niemann, K.O.; Goodenough, D.G. Error reduction methods for local maximum filtering of high spatial resolution imagery for locating trees. Can. J. Remote Sens. 2002, 28, 621–628. [Google Scholar] [CrossRef]

- Wulder, M.A.; White, J.C.; Niemann, K.O.; Nelson, T. Comparison of airborne and satellite high spatial resolution data for the identification of individual trees with local maxima filtering. Int. J. Remote Sens. 2004, 25, 2225–2232. [Google Scholar] [CrossRef]

- Wang, L.; Gong, P.; Biging, G.S. Individual Tree-Crown Delineation and Treetop Detection in High-Spatial-Resolution Aerial Imagery. Photogramm. Eng. Remote Sens. 2004, 70, 351–357. [Google Scholar] [CrossRef]

- Crabbé, A.H.; Cahy, T.; Somers, B.; Verbeke, L.P.; Van Coillie, F. Tree Density Calculator Software Version 1.5.3, QGIS. 2020. Available online: https://bitbucket.org/kul-reseco/localmaxfilter (accessed on 31 August 2020).

- Chen, G.; Weng, Q.; Hay, G.J.; He, Y. Geographic object-based image analysis (GEOBIA): Emerging trends and future opportunities. GIScience Remote Sens. 2018, 55, 159–182. [Google Scholar] [CrossRef]

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; Van der Meer, F.; Van der Werff, H.; Van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191. [Google Scholar] [CrossRef]

- Holloway, J.; Mengersen, K. Statistical machine learning methods and remote sensing for sustainable development goals: A review. Remote Sens. 2018, 10, 21. [Google Scholar] [CrossRef]

- Murfitt, J.; He, Y.; Yang, J.; Mui, A.; De Mille, K. Ash decline assessment in emerald ash borer infested natural forests using high spatial resolution images. Remote Sens. 2016, 8, 256. [Google Scholar] [CrossRef]

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161. [Google Scholar] [CrossRef]

- Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52. [Google Scholar] [CrossRef]

- De Luca, G.; Silva, J.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-Based Land Cover Classification of Cork Oak Woodlands using UAV Imagery and Orfeo ToolBox. Remote Sens. 2019, 11, 1238. [Google Scholar] [CrossRef]

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194. [Google Scholar] [CrossRef]

- Michel, J.; Youssefi, D.; Grizonnet, M. Stable Mean-Shift Algorithm and Its Application to the Segmentation of Arbitrarily Large Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 952–964. [Google Scholar] [CrossRef]

- OTB Development Team. OTB CookBook Documentation; CNES: Paris, France, 2018; p. 305. [Google Scholar]

- Grizonnet, M.; Michel, J.; Poughon, V.; Inglada, J.; Savinaud, M.; Cresson, R. Orfeo ToolBox: Open source processing of remote sensing images. Open Geospat. Data Softw. Stand. 2017, 2, 15. [Google Scholar] [CrossRef]

- Li, M.; Ma, L.; Blaschke, T.; Cheng, L.; Tiede, D. A systematic comparison of different object-based classification techniques using high spatial resolution imagery in agricultural environments. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 87–98. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random Forests for Classification in Ecology. Ecology 2007, 88, 2783–2792. [Google Scholar] [CrossRef]

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094. [Google Scholar] [CrossRef]

- Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in central Europe. Remote Sens. 2016, 8, 166. [Google Scholar] [CrossRef]

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press; Taylor & Francis Group: Boca Raton, FL, USA, 2019; ISBN 1498776663. [Google Scholar]

- Coleman, T.W.; Graves, A.D.; Heath, Z.; Flowers, R.W.; Hanavan, R.P.; Cluck, D.R.; Ryerson, D. Accuracy of aerial detection surveys for mapping insect and disease disturbances in the United States. For. Ecol. Manag. 2018, 430, 321–336. [Google Scholar] [CrossRef]

- Landis, J.; Koch, G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174. [Google Scholar] [CrossRef]

- Birch, C.; Oom, S.P.; Beecham, J.A. Rectangular and hexagonal grids used for observation, experiment and simulation in ecology. Ecol. Model. 2007, 206, 347–359. [Google Scholar] [CrossRef]

- Carr, D.B.; Littlefield, R.J.; Nicholson, W.L.; Littlefield, J.S. Scatterplot Matrix Techniques for Large N. J. Am. Stat. Assoc. 1987, 82, 398. [Google Scholar] [CrossRef]

- Carr, D.B.; Olsen, A.R.; White, D. Hexagon Mosaic Maps for Display of Univariate and Bivariate Geographical Data. Cartogr. Geogr. Inf. Sci. 1992, 19, 228–236. [Google Scholar] [CrossRef]

- Rullan-Silva, C.D.; Olthoff, A.E.; Delgado de la Mata, J.A.; Pajares-Alonso, J.A. Remote Monitoring of Forest Insect Defoliation—A Review. For. Syst. 2013, 22, 377. [Google Scholar] [CrossRef]

- Cardil, A.; Vepakomma, U.; Brotons, L. Assessing Pine Processionary Moth Defoliation Using Unmanned Aerial Systems. Forests 2017, 8, 402. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Miura, H.; Midorikawa, S. Detection of Slope Failure Areas due to the 2004 Niigata–Ken Chuetsu Earthquake Using High–Resolution Satellite Images and Digital Elevation Model. J. Jpn. Assoc. Earthq. Eng. 2013, 7, 1–14. [Google Scholar]

- Barreto, L.; Ribeiro, M.; Veldkamp, A.; Van Eupen, M.; Kok, K.; Pontes, E. Exploring effective conservation networks based on multi-scale planning unit analysis: A case study of the Balsas sub-basin, Maranhão State, Brazil. Ecol. Indic. 2010, 10, 1055–1063. [Google Scholar] [CrossRef]

- Amaral, Y.T.; Santos, E.M.D.; Ribeiro, M.C.; Barreto, L. Landscape structural analysis of the Lençóis Maranhenses national park: Implications for conservation. J. Nat. Conserv. 2019, 51, 125725. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).