Quality Assessment of Photogrammetric Models for Façade and Building Reconstruction Using DJI Phantom 4 RTK

Abstract

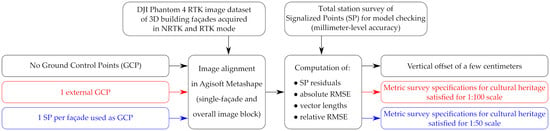

1. Introduction

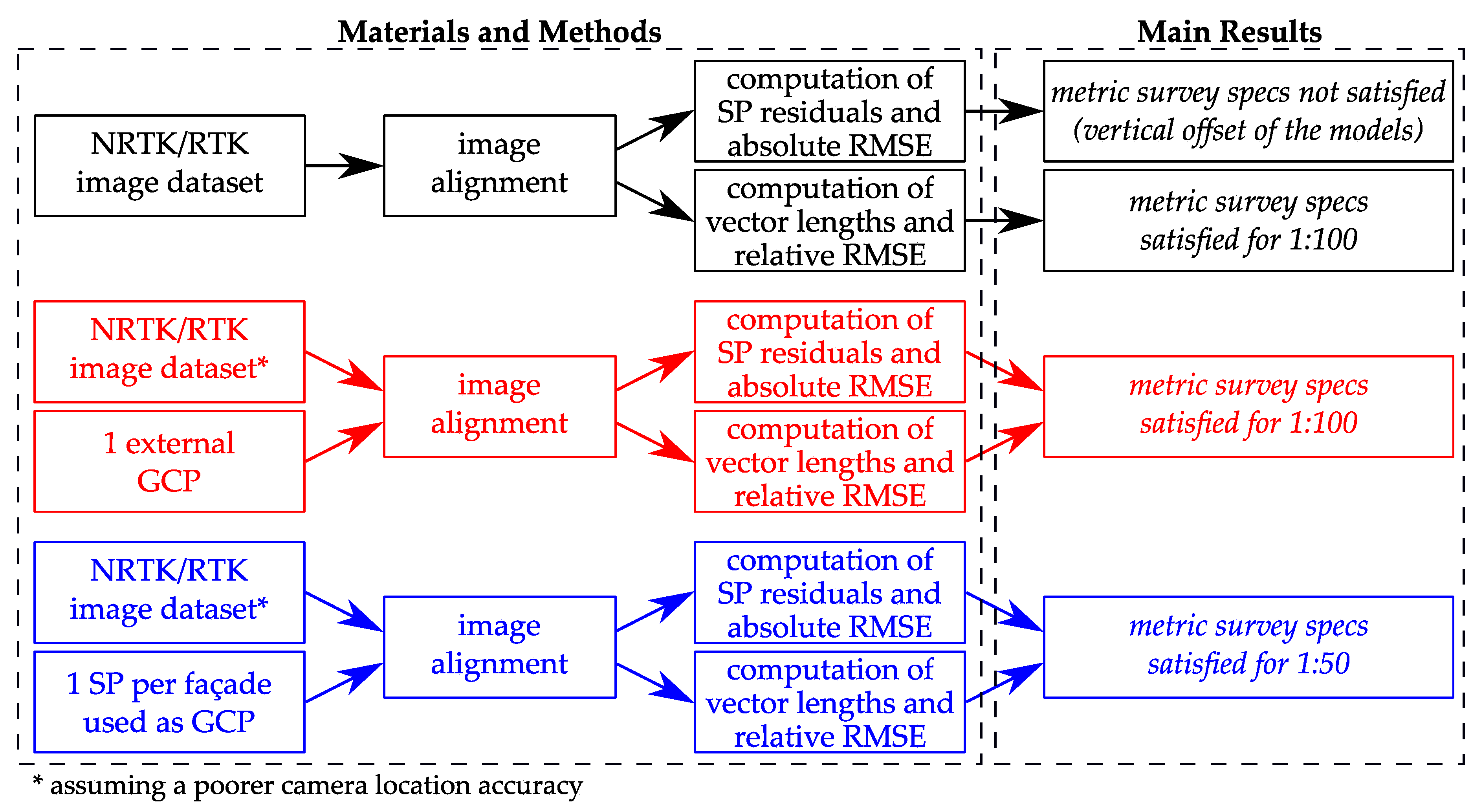

2. Materials and Methods

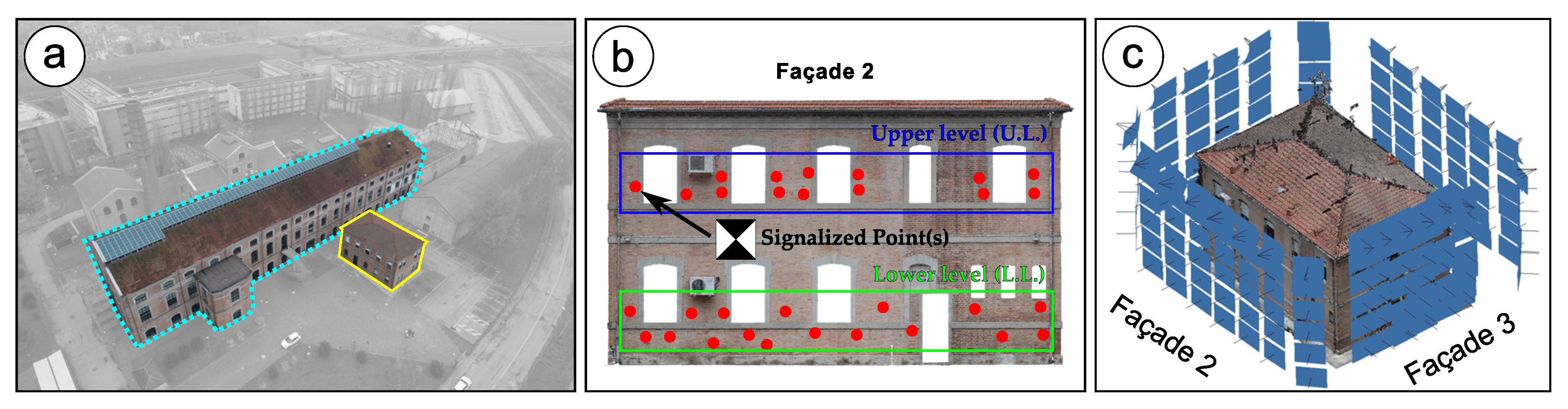

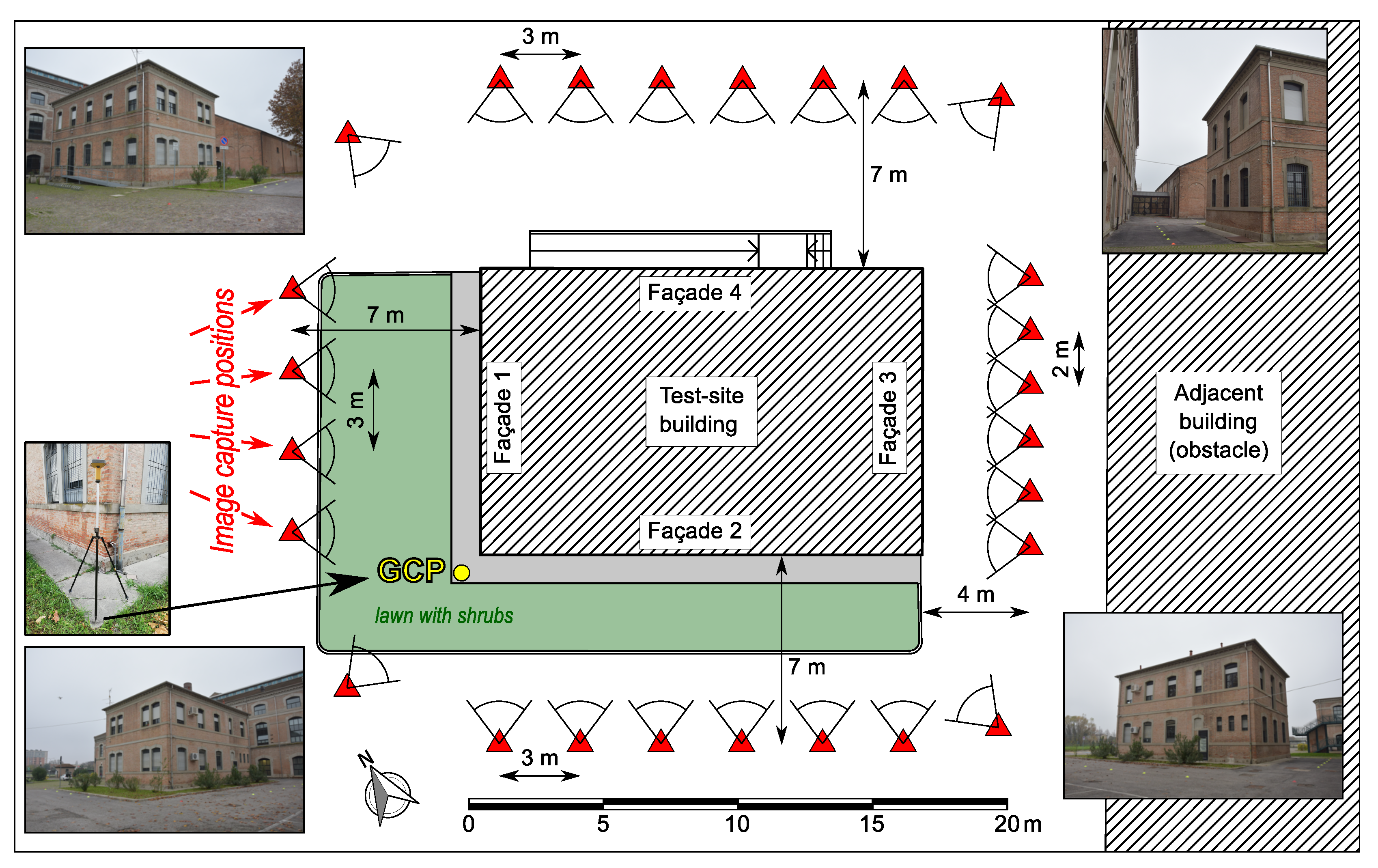

2.1. Test-Site Building

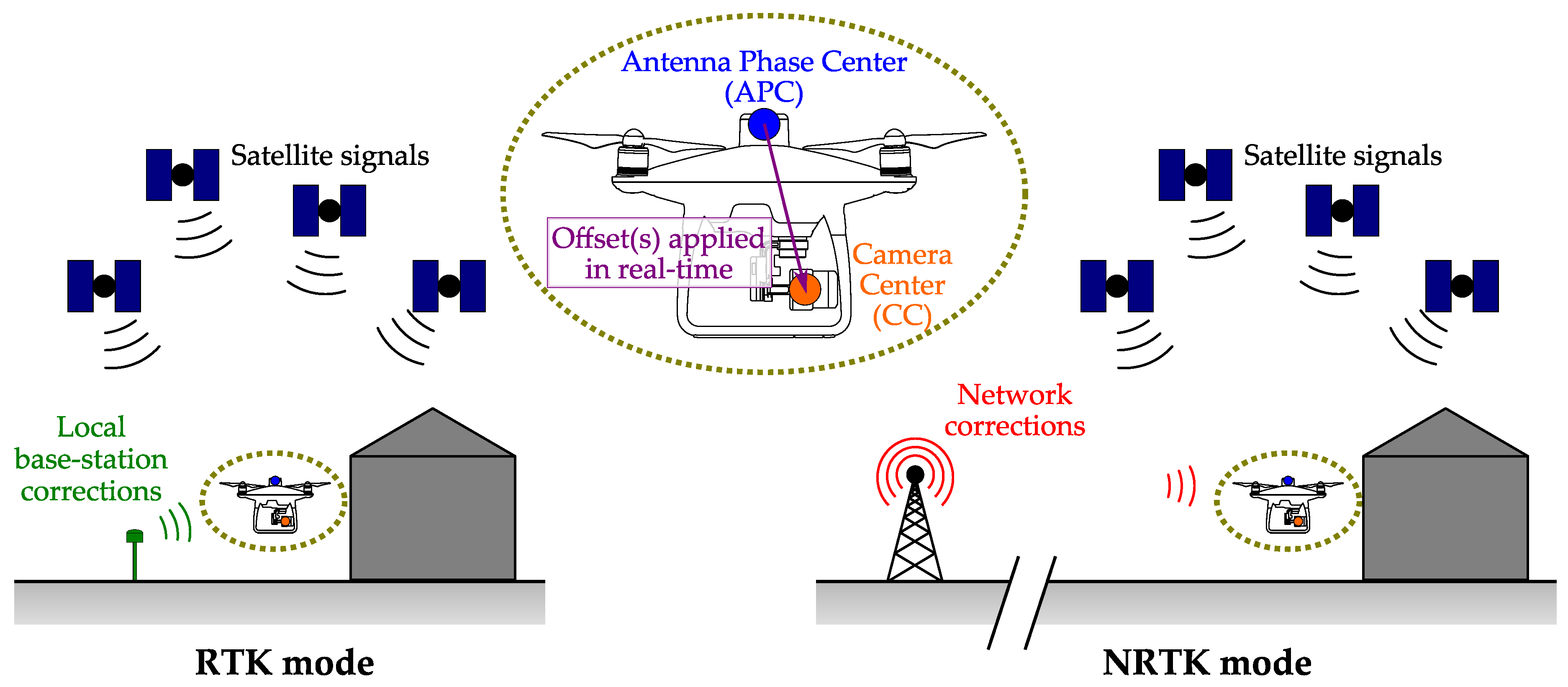

2.2. Unmanned Aircraft

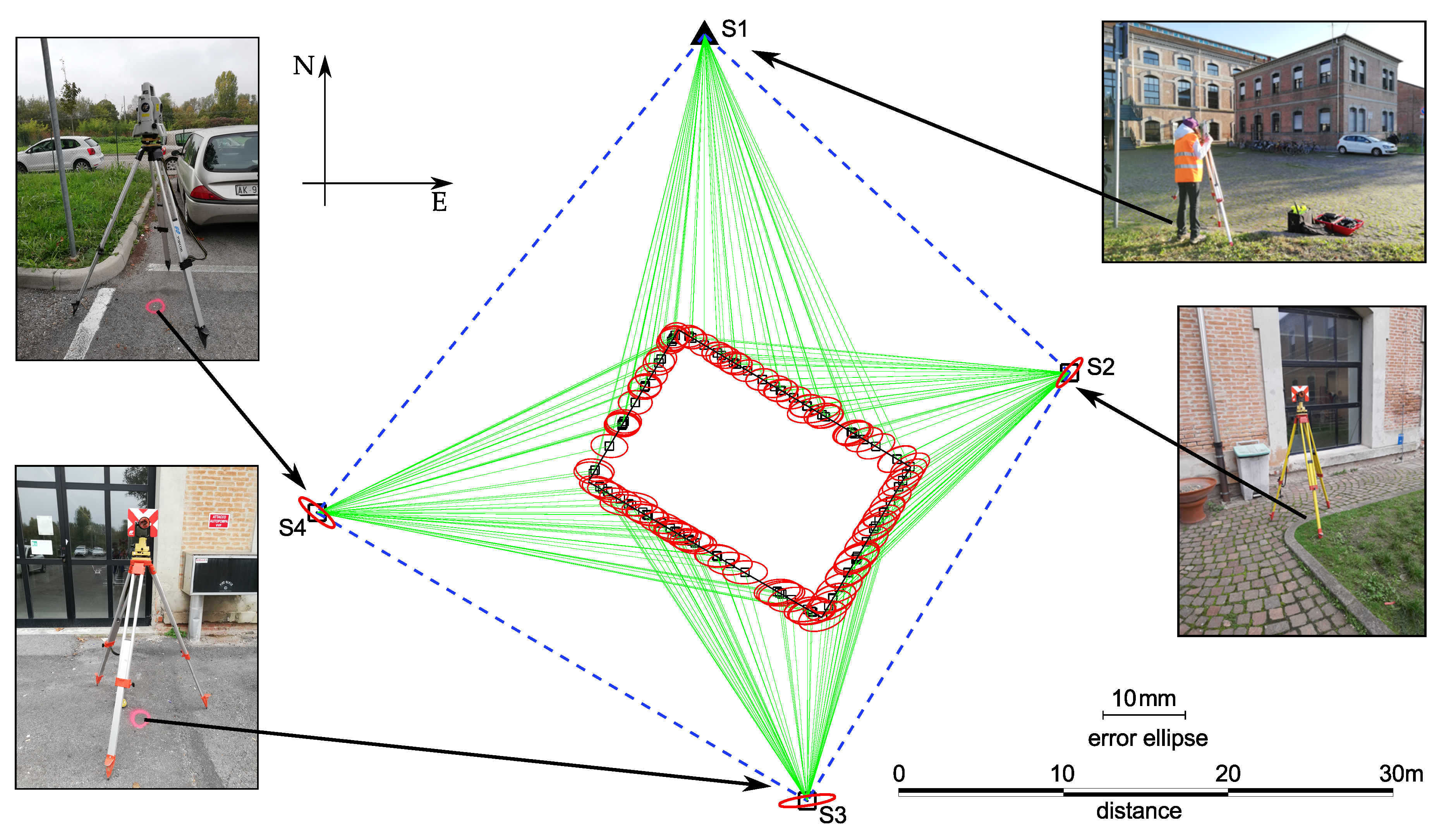

2.3. Survey of SPs

2.3.1. In-Situ Operations

2.3.2. Post-Processing of GNSS Data

2.3.3. Network Adjustment

2.4. Image Dataset Acquisition

2.5. Photogrammetric Model Reconstruction and Assessment of Alignment Quality

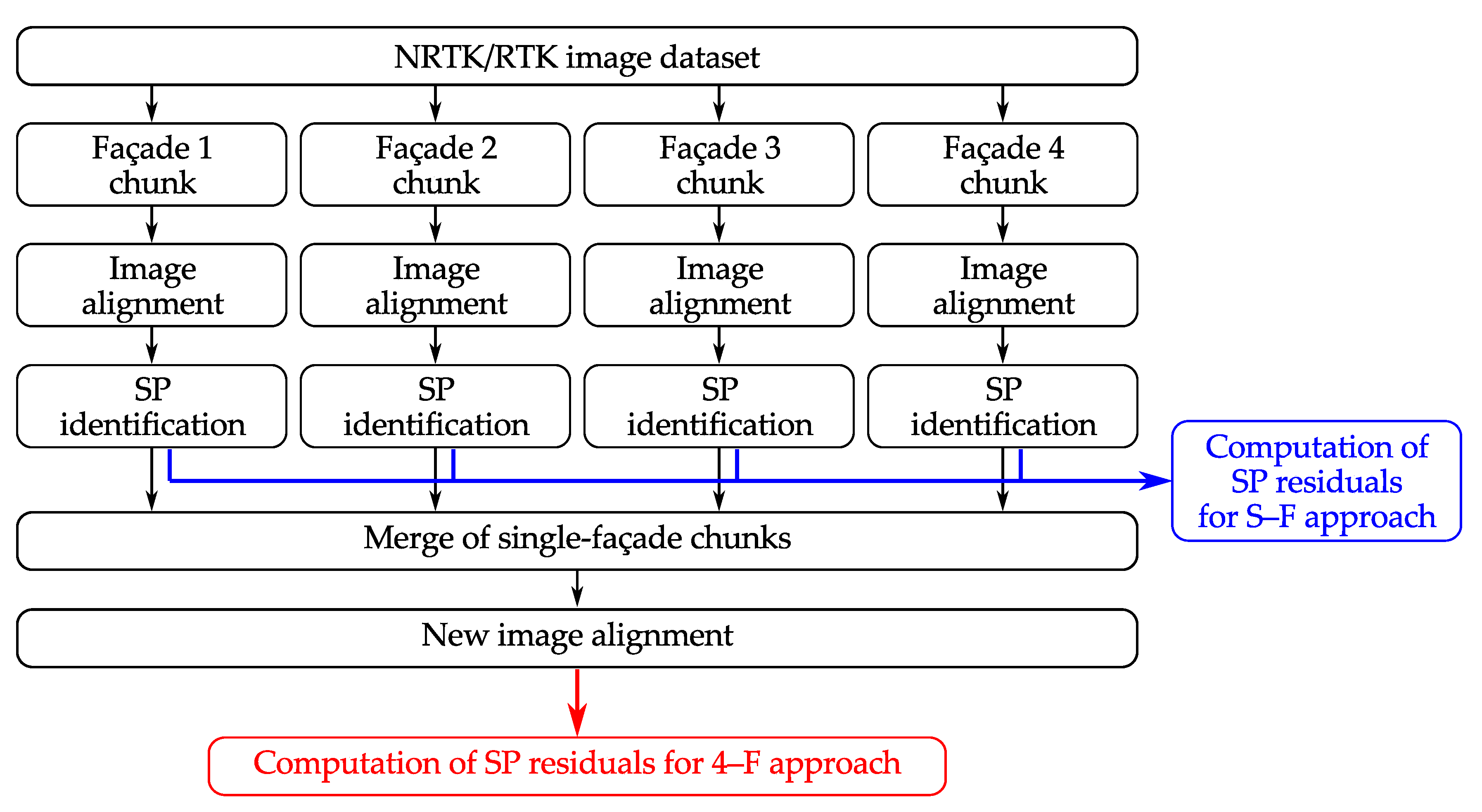

2.5.1. Generation of Photogrammetric Models

2.5.2. Identification of SPs on Images

2.5.3. Computation of Residuals

2.5.4. Computation of Absolute and Relative RMSE

3. Results

3.1. SP Identification Uncertainty Due to Operator

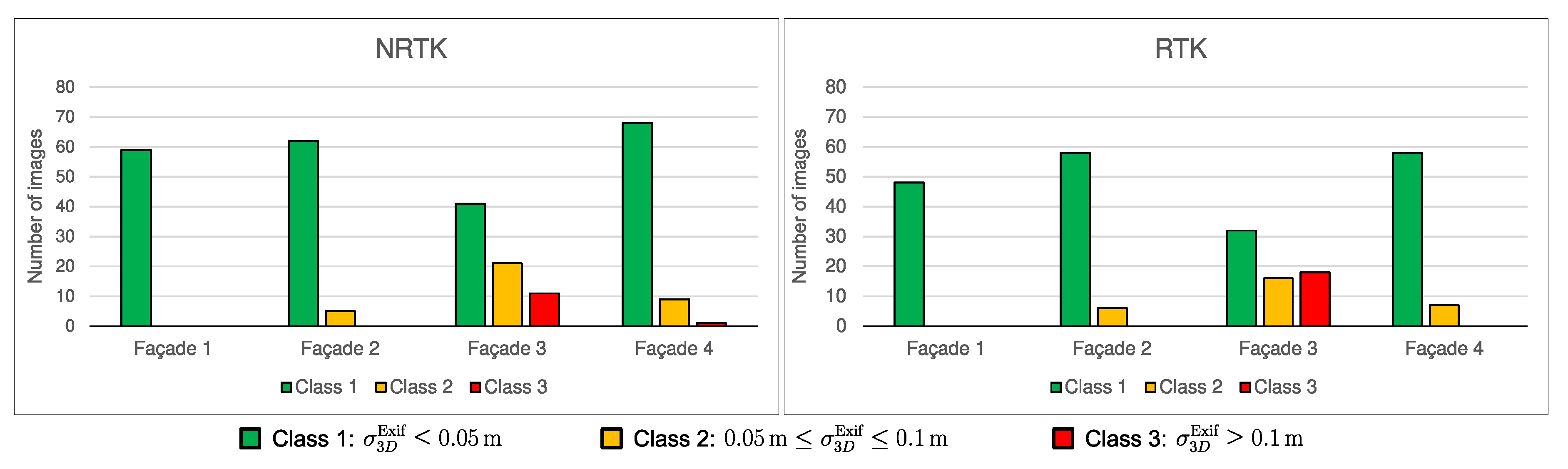

3.2. Analysis of Input Camera Locations in Agisoft Metashape

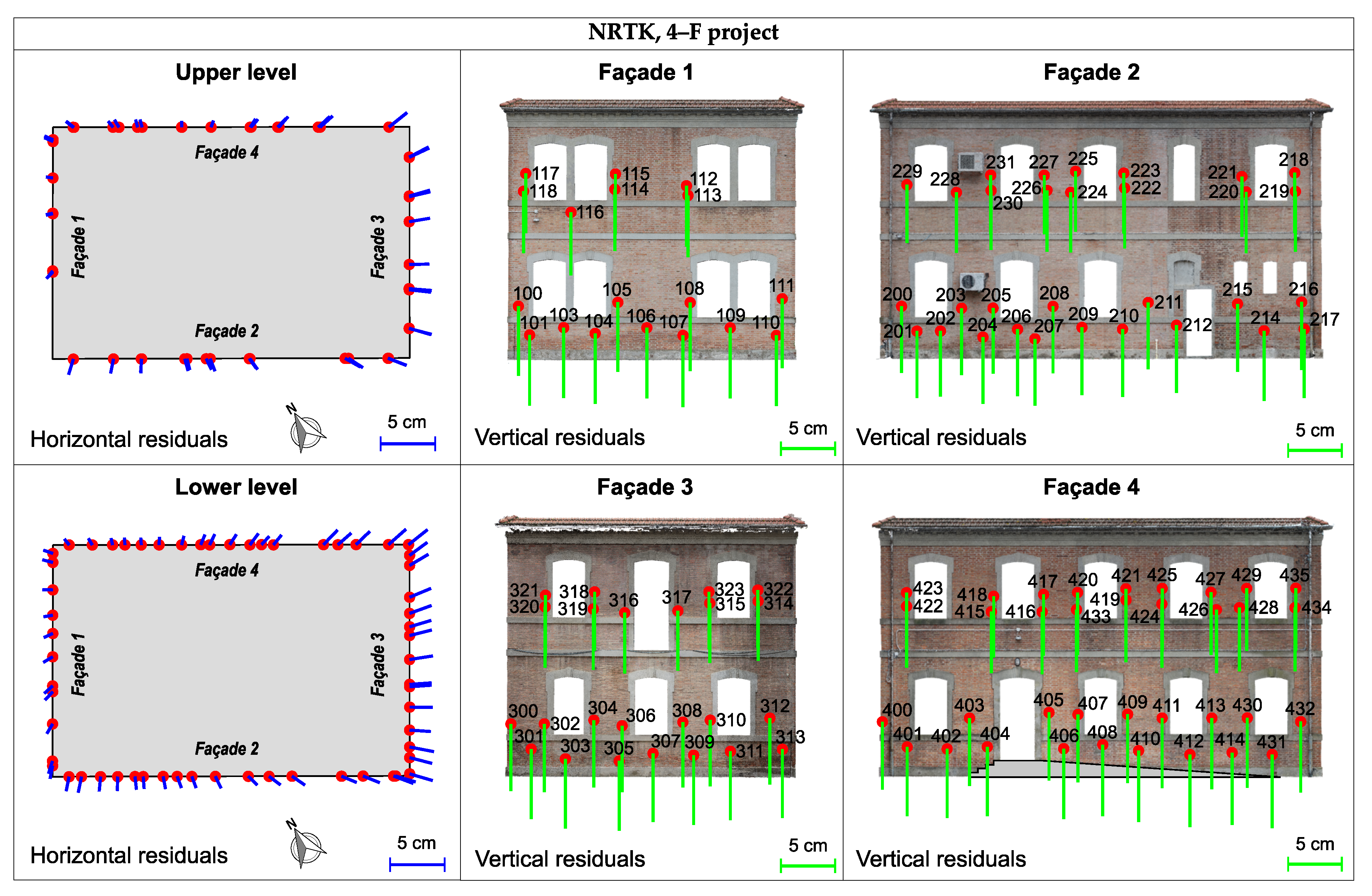

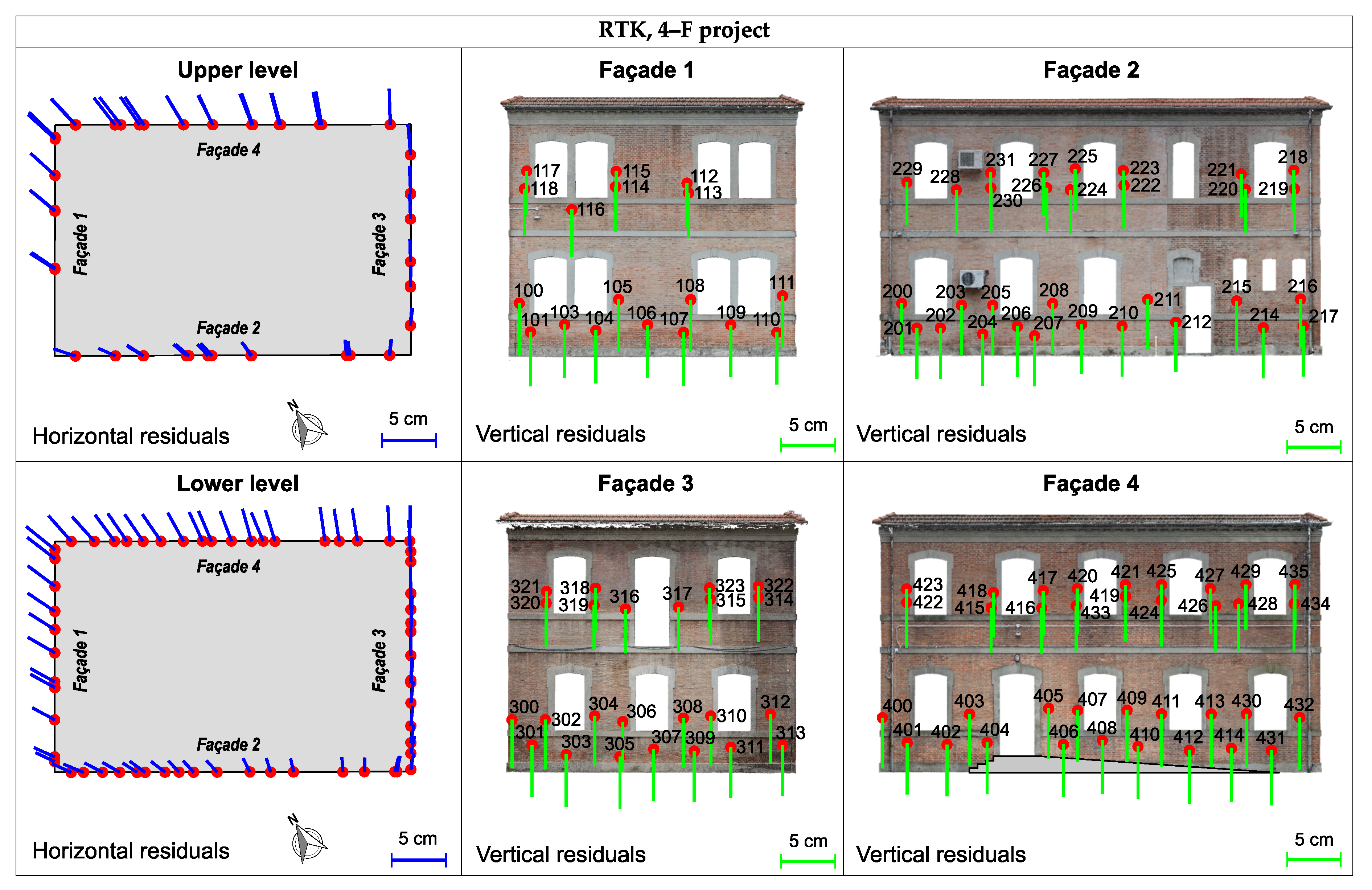

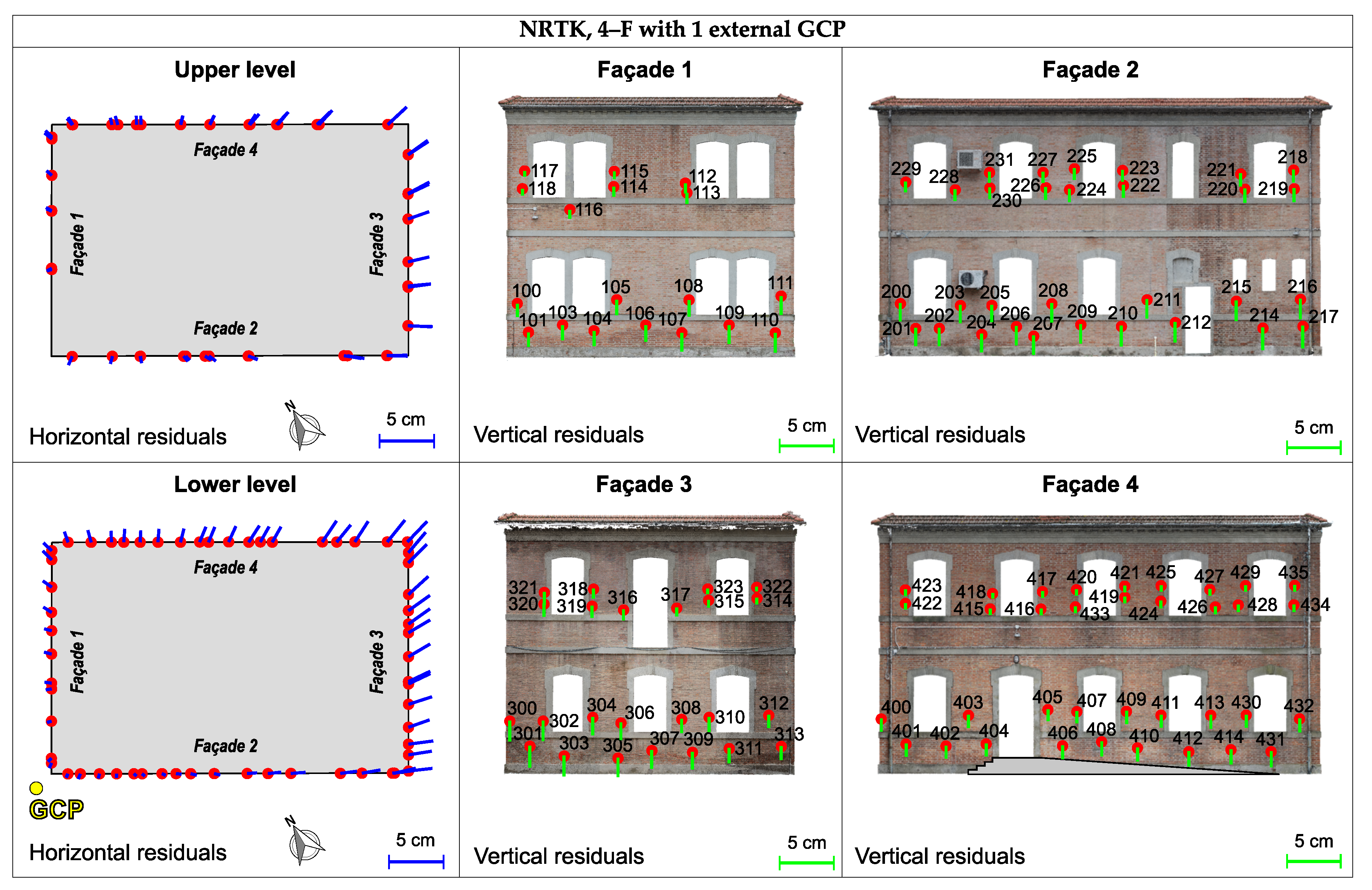

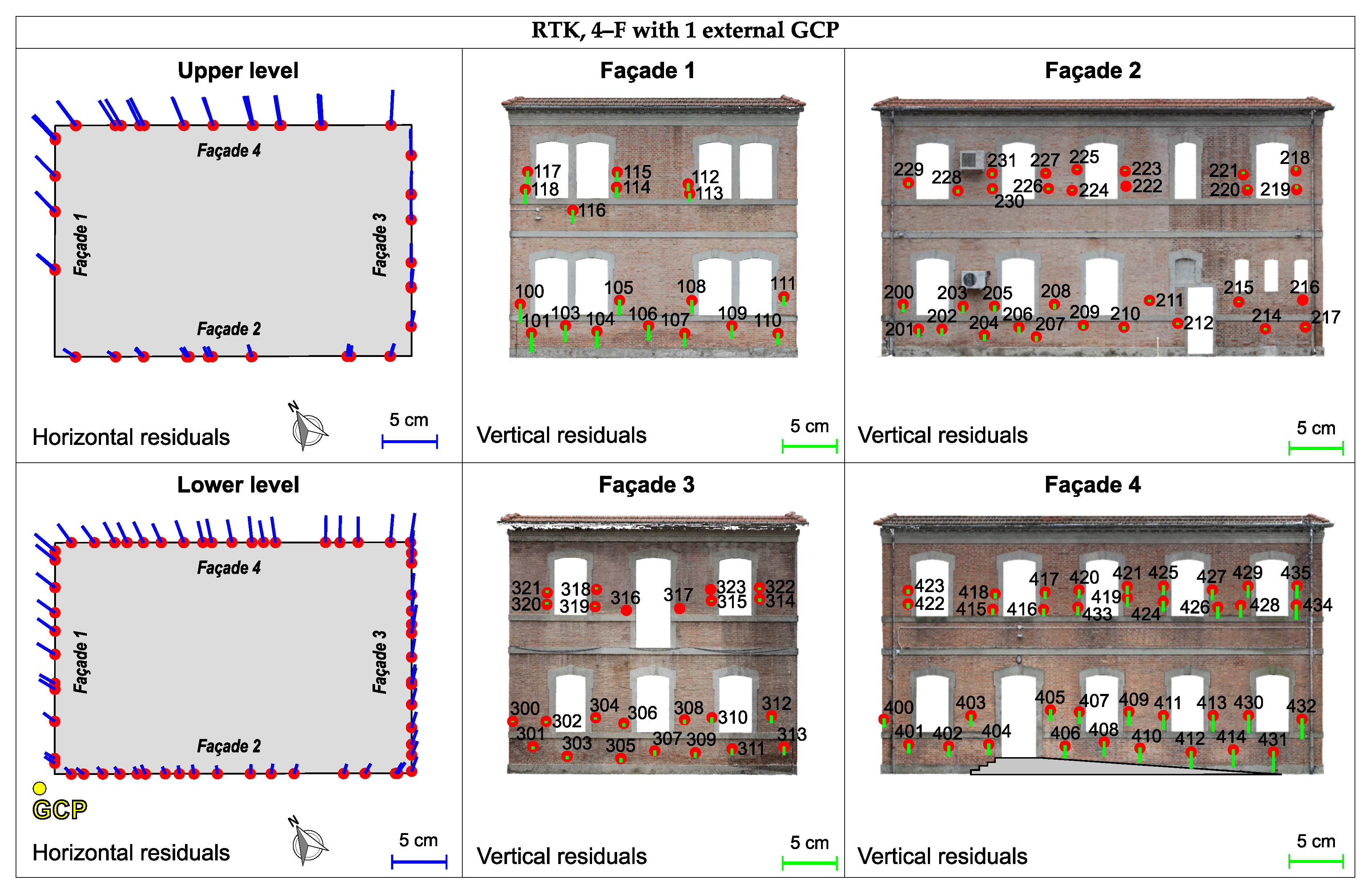

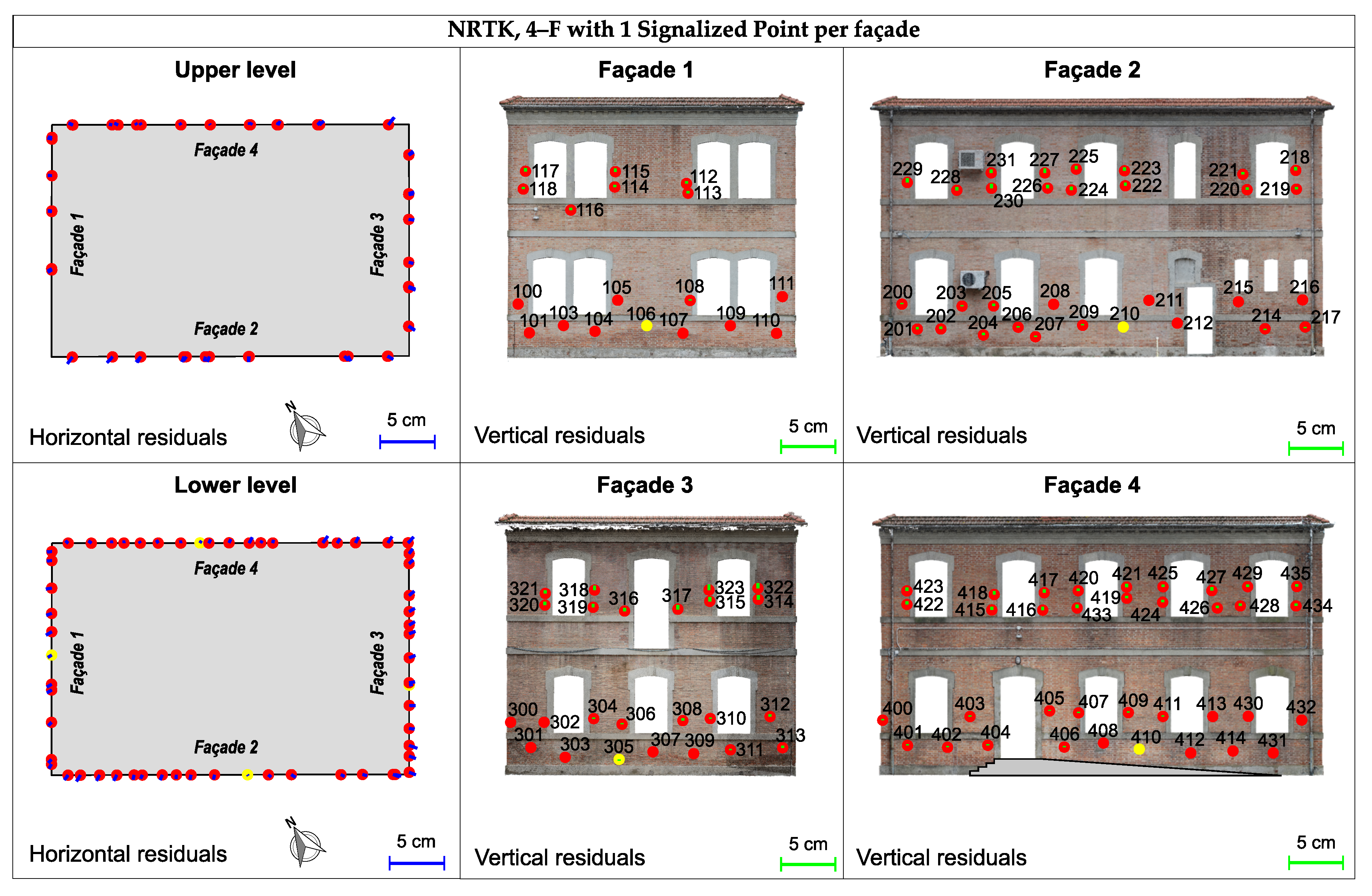

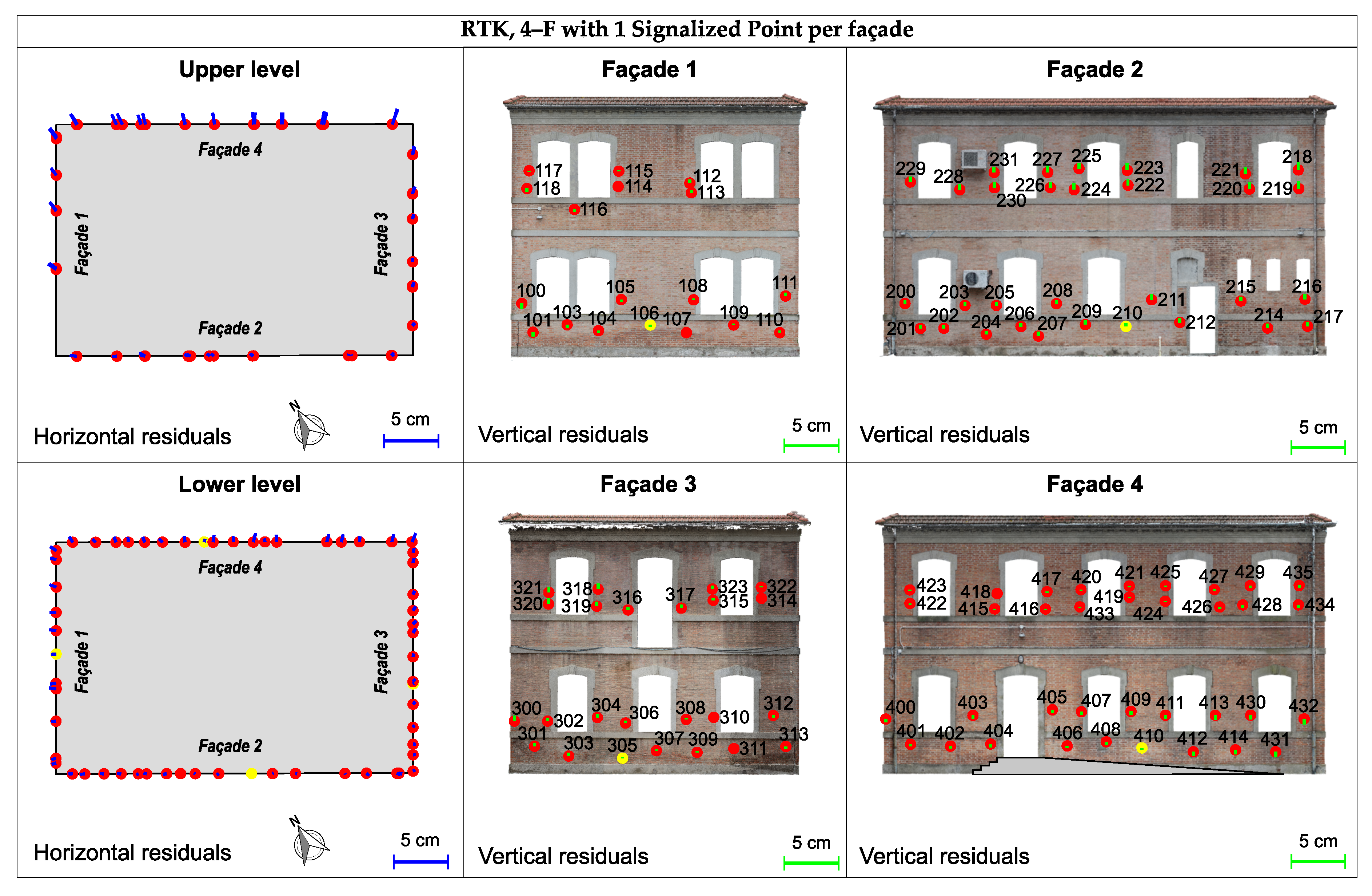

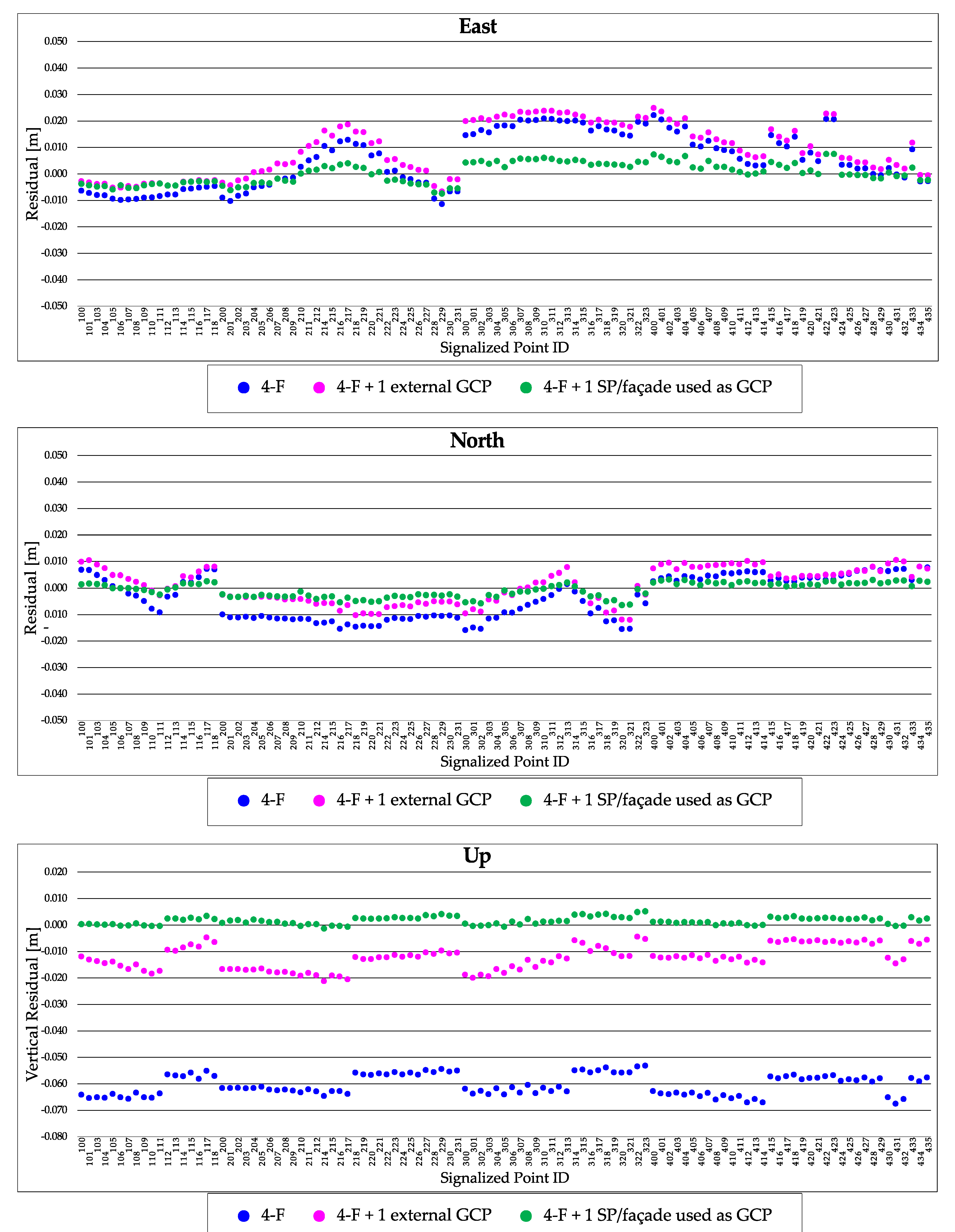

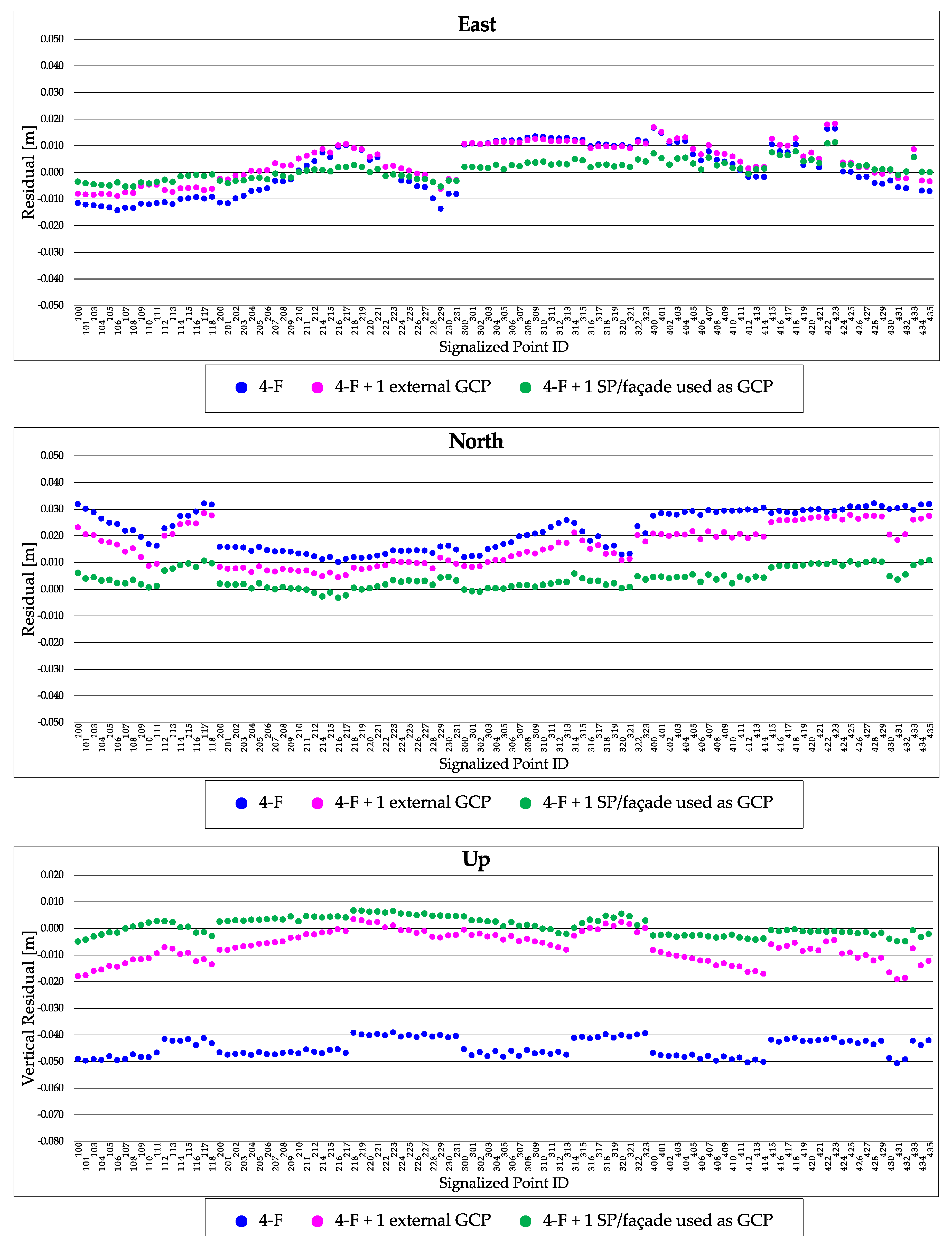

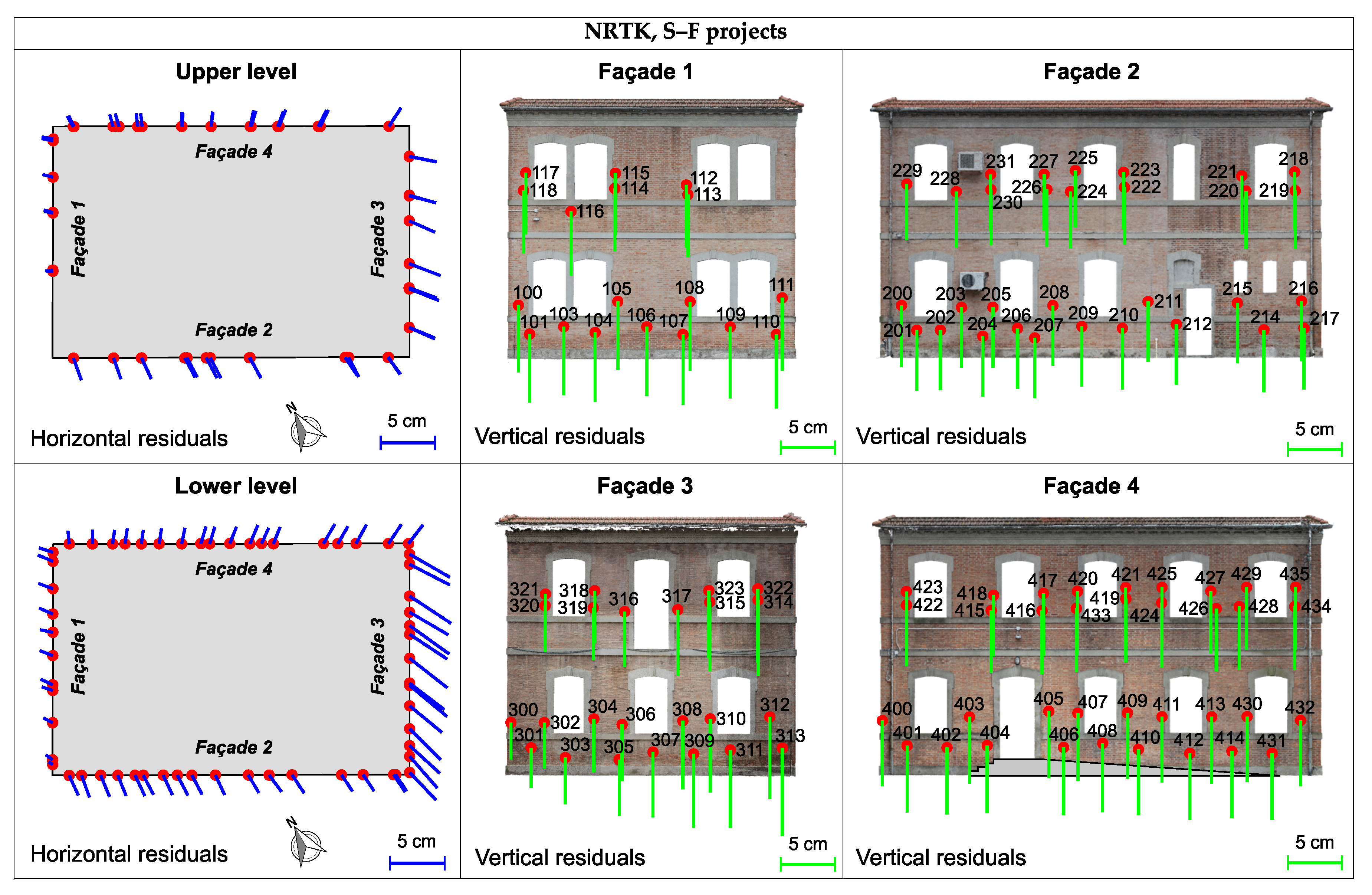

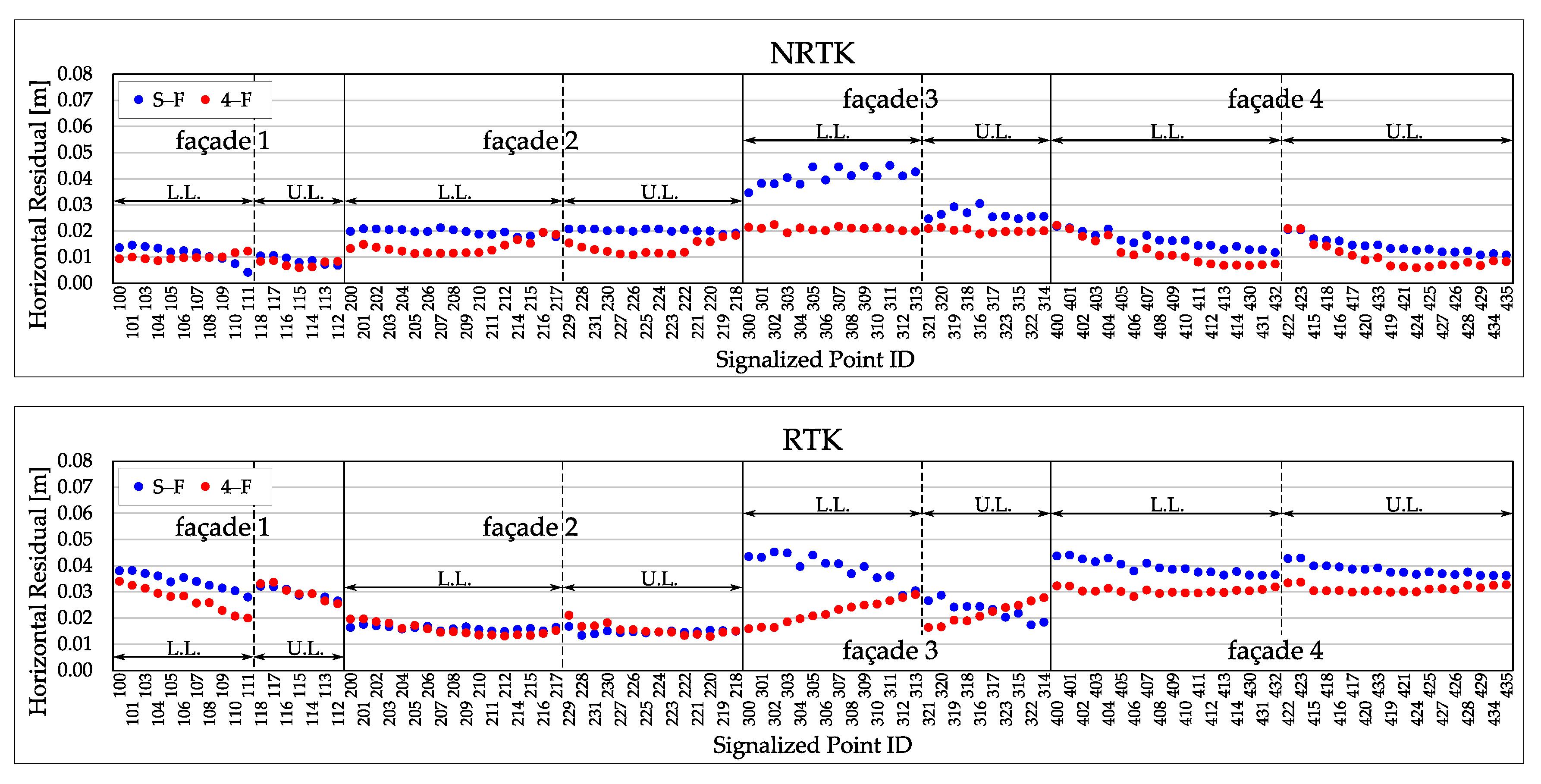

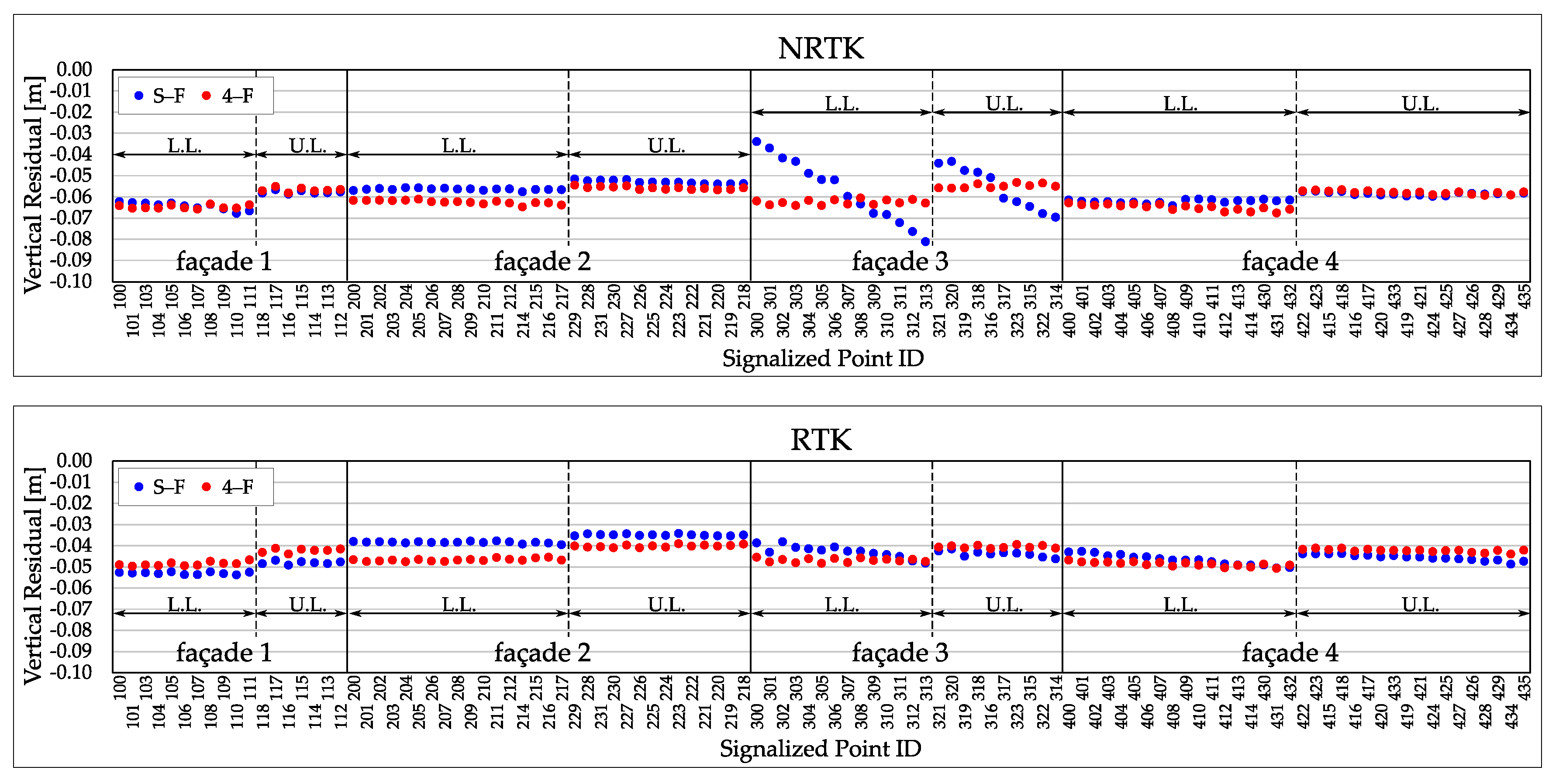

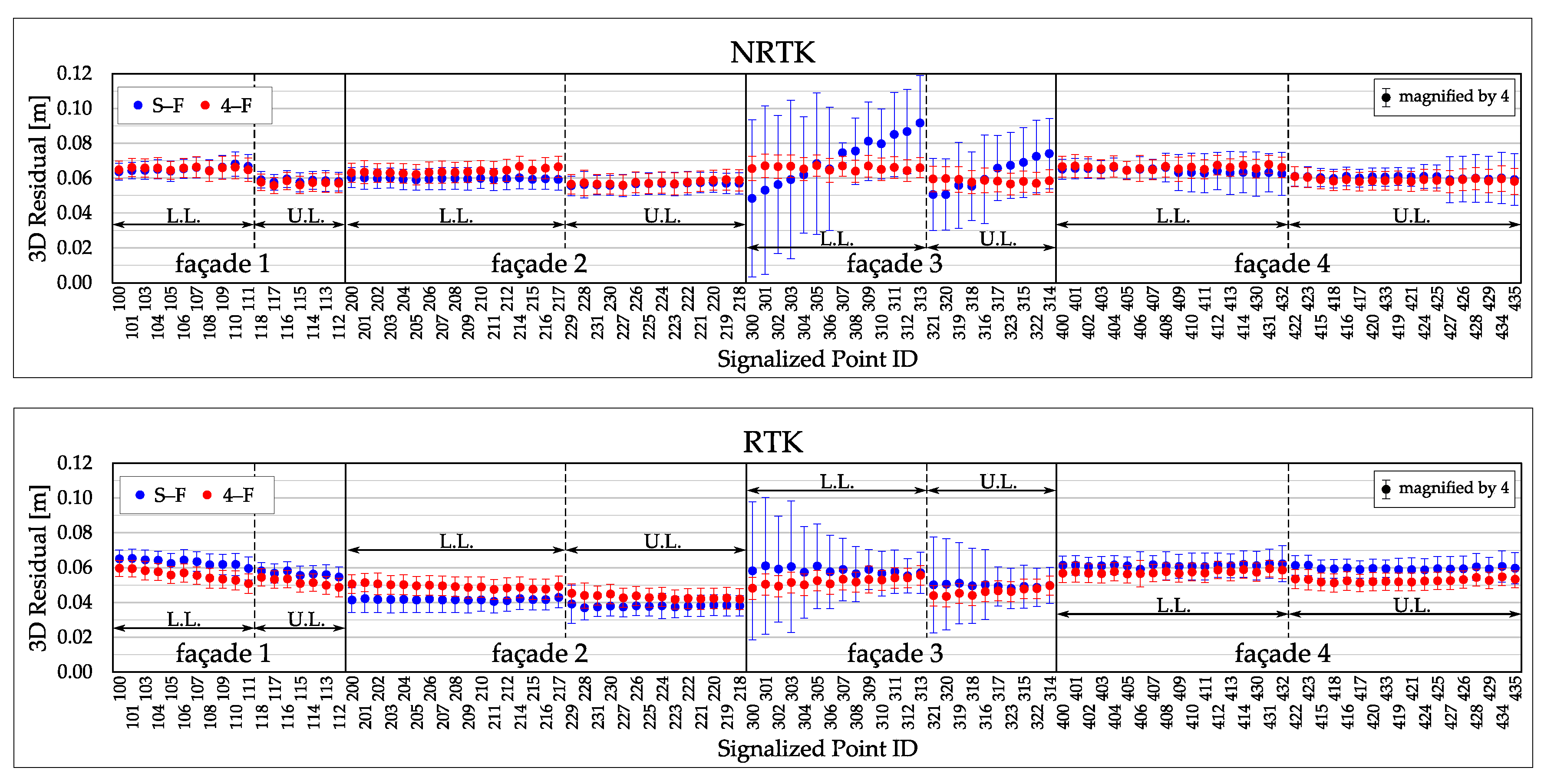

3.3. Horizontal and Vertical Residuals

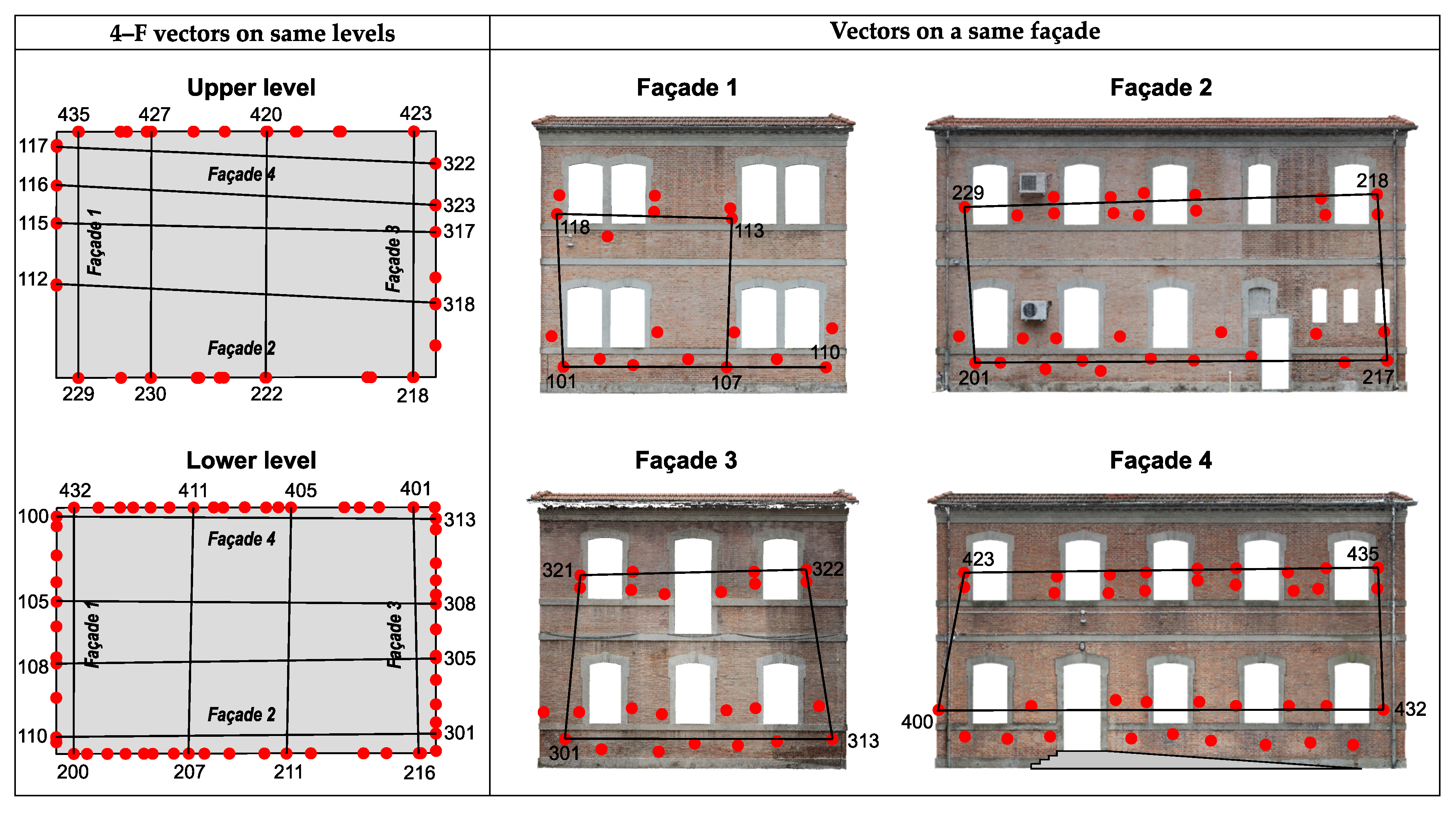

3.4. Computation of Absolute and Relative RMSE

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 4–F | Four-Façade (overall block) |
| APC | Antenna Phase Center |
| BBA | Block Bundle Adjustment |
| CC | Camera Center |
| CORS | Continuously Operating Reference Station |
| DJI-P4RTK | DJI Phantom 4 RTK |
| DOP | Dilution of Precision |
| E, N, U | East, North, Up |
| ETRS | European Terrestrial Reference System |
| ETRF | European Terrestrial Reference Frame |
| Exif | Exchangeable image file format |
| F. 1 | Façade 1 |
| F. 2 | Façade 2 |
| F. 3 | Façade 3 |
| F. 4 | Façade 4 |
| GCP | Ground Control Point |
| GNSS | Global Navigation Satellite System |
| GSD | Ground Sample Distance |
| IMU | Inertial Measurement Unit |
| L.L. | Lower Level |
| NRTK | Network Real-Time Kinematic |
| PPK | Post-Processing Kinematic |
| RINEX | Receiver Independent Exchange Format |
| RMS | Root Mean Square |
| RMSE | Root Mean Square Error |
| RTK | Real-Time Kinematic |
| S–F | Single-Façade |
| SP | Signalized Point |
| TLS | Terrestrial Laser Scanning |
| UAV | Unmanned Aerial Vehicle |
| U.L. | Upper Level |
| UTM | Universal Transverse Mercator |

Appendix A. Photogrammetric Residuals

Appendix B. Approaches for Removing the Vertical Offset


| Specification | Value |
|---|---|
| Horizontal positioning accuracy | 1.0 cm + 1 ppm (RMS) |
| Vertical positioning accuracy | 1.5 cm + 1 ppm (RMS) |
| Resolution | 20 Mpix |
| Image Size | |
| Field of View | 84° |
| Focal Length | 8.8 mm |
| Pixel Size | |
| GSD @ 7.00 m | |
| GSD @ 4.00 m | |
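The GSD rows follow from the pinhole relation GSD = D · p / f, where D is the camera-to-façade distance, p the pixel size and f the focal length (8.8 mm above). The pixel size is missing from the table, so the sketch below assumes about 2.41 µm (a nominal 13.2 mm sensor width divided by 5472 pixels); this is an assumption, not the value used by the authors. It also reads the "+ 1 ppm" term of the RTK accuracy as 1 mm per km of baseline, with a hypothetical 10 km baseline.

```python
def gsd_m(distance_m: float, focal_length_m: float = 8.8e-3,
          pixel_size_m: float = 2.41e-6) -> float:
    """Ground sample distance [m] at a given camera-to-object distance.

    pixel_size_m = 2.41e-6 is an ASSUMPTION (13.2 mm sensor width / 5472 px),
    not a value taken from the specification table.
    """
    return distance_m * pixel_size_m / focal_length_m


def rtk_sigma_m(base_m: float, baseline_km: float, ppm: float = 1.0) -> float:
    """Nominal RTK accuracy [m]: constant term plus a distance-dependent term.

    1 ppm of a 1 km baseline is 1 mm, so the second term is ppm * baseline_km mm.
    """
    return base_m + ppm * baseline_km * 1e-3


for d in (7.0, 4.0):
    print(f"GSD @ {d:.2f} m: {gsd_m(d) * 1000:.2f} mm")

# Horizontal (1.0 cm) and vertical (1.5 cm) terms with a hypothetical 10 km baseline.
print(f"{rtk_sigma_m(0.010, 10.0):.3f} m, {rtk_sigma_m(0.015, 10.0):.3f} m")
```

Under this pixel-size assumption the GSD is roughly 1.9 mm at 7 m and 1.1 mm at 4 m; the published values may differ if a different pixel size or image format was used.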

| Scale | GSD Threshold [m] | Absolute Tolerance [m] | Relative Tolerance [m] |
|---|---|---|---|
| 1:20 | 0.002 | 0.006 | 0.004 |
| 1:50 | 0.005 | 0.015 | 0.010 |
| 1:100 | 0.010 | 0.030 | 0.020 |
| 1:200 | 0.020 | 0.060 | 0.040 |
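Reading the tolerance table as "the largest scale whose tolerance is not exceeded", an RMSE value can be mapped to a nominal scale as sketched below. This rule is an assumption inferred from the scale labels in the results tables; it checks one tolerance column at a time and ignores the GSD threshold, so it may not reproduce every label the authors assigned.

```python
# Tolerances from the table: scale denominator -> (GSD threshold, absolute, relative) [m].
TOLERANCES = {
    20: (0.002, 0.006, 0.004),
    50: (0.005, 0.015, 0.010),
    100: (0.010, 0.030, 0.020),
    200: (0.020, 0.060, 0.040),
}


def nominal_scale(rmse_m: float, kind: str = "absolute") -> str:
    """Return the largest nominal scale whose tolerance is >= rmse_m.

    kind selects the 'absolute' or 'relative' tolerance column.
    Values exceeding the 1:200 tolerance are reported as '<1:200'.
    """
    column = {"absolute": 1, "relative": 2}[kind]
    for denominator in sorted(TOLERANCES):  # 20, 50, 100, 200: largest scale first
        if rmse_m <= TOLERANCES[denominator][column]:
            return f"1:{denominator}"
    return "<1:200"


print(nominal_scale(0.011))              # -> 1:50
print(nominal_scale(0.063))              # -> <1:200
print(nominal_scale(0.023, "relative"))  # -> 1:200
```

Checking from the largest scale (1:20) downwards returns the most demanding scale that the RMSE still satisfies.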

| Processing Strategy | NRTK Dataset | RTK Dataset |
|---|---|---|
| S–F, F. 1 | 59 | 48 |
| S–F, F. 2 | 67 | 64 |
| S–F, F. 3 | 73 | 66 |
| S–F, F. 4 | 78 | 65 |
| 4–F | 233 | 211 |

| Mode | Statistic | S–F projects, E [m] | S–F projects, N [m] | S–F projects, U [m] | 4–F project, E [m] | 4–F project, N [m] | 4–F project, U [m] |
|---|---|---|---|---|---|---|---|
| NRTK | Min | −0.002 | −0.001 | −0.003 | −0.004 | −0.002 | −0.002 |
| NRTK | Max | 0.002 | 0.001 | 0.001 | 0.003 | 0.001 | 0.002 |
| RTK | Min | −0.001 | −0.002 | −0.001 | −0.004 | −0.002 | 0.000 |
| RTK | Max | 0.002 | 0.002 | 0.001 | 0.003 | 0.002 | 0.002 |

| Mode | Level | Statistic | S–F, F. 1 [m] | S–F, F. 2 [m] | S–F, F. 3 [m] | S–F, F. 4 [m] | 4–F, All [m] |
|---|---|---|---|---|---|---|---|
| NRTK | L.L. | Min | 0.004 | 0.018 | 0.035 | 0.012 | 0.007 |
| NRTK | L.L. | Max | 0.015 | 0.021 | 0.045 | 0.022 | 0.022 |
| NRTK | L.L. | Average | 0.011 | 0.020 | 0.041 | 0.016 | 0.014 |
| NRTK | U.L. | Min | 0.007 | 0.019 | 0.025 | 0.011 | 0.006 |
| NRTK | U.L. | Max | 0.011 | 0.021 | 0.030 | 0.021 | 0.021 |
| NRTK | U.L. | Average | 0.009 | 0.020 | 0.027 | 0.014 | 0.013 |
| RTK | L.L. | Min | 0.028 | 0.015 | 0.029 | 0.036 | 0.013 |
| RTK | L.L. | Max | 0.038 | 0.018 | 0.045 | 0.044 | 0.034 |
| RTK | L.L. | Average | 0.034 | 0.016 | 0.039 | 0.039 | 0.024 |
| RTK | U.L. | Min | 0.027 | 0.013 | 0.017 | 0.036 | 0.013 |
| RTK | U.L. | Max | 0.032 | 0.017 | 0.029 | 0.043 | 0.034 |
| RTK | U.L. | Average | 0.030 | 0.015 | 0.023 | 0.038 | 0.025 |
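Since every horizontal residual in the table is non-negative, each is presumably the planimetric magnitude sqrt(dE² + dN²) of an SP's residual vector, summarised per level. A minimal sketch of that bookkeeping, assuming residuals are taken as model minus survey; the SP identifiers and residual values below are hypothetical and do not reproduce the table.

```python
import math
from statistics import mean

# HYPOTHETICAL residuals (model - survey) [m] per SP: (level, dE, dN, dU).
residuals = {
    "101": ("L.L.", 0.006, -0.008, -0.063),
    "102": ("L.L.", -0.004, 0.009, -0.065),
    "112": ("U.L.", 0.005, 0.006, -0.058),
    "115": ("U.L.", -0.007, 0.004, -0.057),
}


def horizontal_stats(res: dict) -> dict:
    """Min, max and average of the horizontal residual sqrt(dE^2 + dN^2) per level."""
    by_level: dict = {}
    for level, d_e, d_n, _d_u in res.values():
        by_level.setdefault(level, []).append(math.hypot(d_e, d_n))
    return {
        level: (min(values), max(values), mean(values))
        for level, values in by_level.items()
    }


for level, (lo, hi, avg) in horizontal_stats(residuals).items():
    print(f"{level}: min {lo:.3f} m, max {hi:.3f} m, average {avg:.3f} m")
```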

| Mode | Level | Statistic | S–F, F. 1 [m] | S–F, F. 2 [m] | S–F, F. 3 [m] | S–F, F. 4 [m] | 4–F, All [m] |
|---|---|---|---|---|---|---|---|
| NRTK | L.L. | Min | −0.068 | −0.058 | −0.081 | −0.064 | −0.068 |
| NRTK | L.L. | Max | −0.062 | −0.056 | −0.034 | −0.061 | −0.060 |
| NRTK | L.L. | Average | −0.064 | −0.056 | −0.057 | −0.062 | −0.064 |
| NRTK | U.L. | Min | −0.059 | −0.054 | −0.070 | −0.060 | −0.059 |
| NRTK | U.L. | Max | −0.057 | −0.052 | −0.043 | −0.057 | −0.053 |
| NRTK | U.L. | Average | −0.058 | −0.053 | −0.056 | −0.059 | −0.056 |
| RTK | L.L. | Min | −0.054 | −0.040 | −0.048 | −0.050 | −0.051 |
| RTK | L.L. | Max | −0.052 | −0.038 | −0.038 | −0.043 | −0.045 |
| RTK | L.L. | Average | −0.053 | −0.038 | −0.043 | −0.047 | −0.048 |
| RTK | U.L. | Min | −0.049 | −0.035 | −0.046 | −0.049 | −0.044 |
| RTK | U.L. | Max | −0.047 | −0.034 | −0.042 | −0.044 | −0.039 |
| RTK | U.L. | Average | −0.048 | −0.035 | −0.044 | −0.046 | −0.041 |
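All vertical residuals in the table are negative, ranging from −0.034 m to −0.081 m, which points to a systematic offset rather than random noise. The identity RMSE² = bias² + σ² (σ being the population standard deviation) makes this explicit. The sketch below applies it to hypothetical U residuals and then subtracts the mean offset. Shifting by the mean only illustrates why the offset dominates the RMSE; it is not necessarily how the authors removed it (their results compare configurations with one external GCP or one SP per façade).

```python
import math
from statistics import mean, pstdev

# HYPOTHETICAL vertical residuals (model - survey) [m] for one façade.
d_u = [-0.064, -0.061, -0.058, -0.066, -0.059, -0.062]


def rmse(values: list[float]) -> float:
    """Root mean square of a list of residuals."""
    return math.sqrt(sum(v * v for v in values) / len(values))


bias = mean(d_u)
sigma = pstdev(d_u)  # population standard deviation, consistent with the RMSE definition
print(f"RMSE {rmse(d_u):.4f} m = sqrt(bias^2 + sigma^2) = {math.hypot(bias, sigma):.4f} m")

# Removing the common offset leaves only the scatter around the mean.
centred = [v - bias for v in d_u]
print(f"bias {bias:.4f} m, RMSE after removing it {rmse(centred):.4f} m")
```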

| Project | Façade | Absolute RMSE E [m] | Absolute RMSE N [m] | Absolute RMSE U [m] | Absolute RMSE Horizontal [m] (Scale) | Absolute RMSE 3D [m] (Scale) |
|---|---|---|---|---|---|---|
| S–F | F. 1 | 0.007 | 0.008 | 0.062 | 0.011 (1:50) | 0.063 (<1:200) |
| S–F | F. 2 | 0.002 | 0.020 | 0.055 | 0.020 (1:100) | 0.058 (1:200) |
| S–F | F. 3 | 0.017 | 0.032 | 0.058 | 0.036 (1:200) | 0.068 (<1:200) |
| S–F | F. 4 | 0.012 | 0.010 | 0.060 | 0.016 (1:100) | 0.062 (<1:200) |
| 4–F | All | 0.012 | 0.009 | 0.061 | 0.015 (1:50) | 0.062 (<1:200) |
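The horizontal and 3D columns appear to combine the component RMSEs in quadrature, RMSE_H = sqrt(RMSE_E² + RMSE_N²) and RMSE_3D = sqrt(RMSE_E² + RMSE_N² + RMSE_U²). This definition is inferred from the tabulated values rather than stated in this section; the sketch checks it against two S–F rows of the table.

```python
import math


def combined_rmse(e: float, n: float, u: float) -> tuple[float, float]:
    """Horizontal and 3D RMSE [m] from per-component RMSE values (quadrature sum)."""
    horizontal = math.hypot(e, n)
    return horizontal, math.hypot(horizontal, u)


# (E, N, U) component RMSEs [m] copied from the S–F rows of the table.
rows = {"F. 1": (0.007, 0.008, 0.062), "F. 3": (0.017, 0.032, 0.058)}
for facade, (e, n, u) in rows.items():
    h, d3 = combined_rmse(e, n, u)
    print(f"{facade}: horizontal {h:.3f} m, 3D {d3:.3f} m")
```

The printed values (0.011 m and 0.063 m for F. 1, 0.036 m and 0.068 m for F. 3) match the table, and show that the 3D figure is driven almost entirely by the U component.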

| Project | Façade | Absolute RMSE E [m] | Absolute RMSE N [m] | Absolute RMSE U [m] | Absolute RMSE Horizontal [m] (Scale) | Absolute RMSE 3D [m] (Scale) |
|---|---|---|---|---|---|---|
| S–F | F. 1 | 0.016 | 0.028 | 0.028 | 0.033 (1:200) | 0.061 (<1:200) |
| S–F | F. 2 | 0.010 | 0.012 | 0.037 | 0.016 (1:100) | 0.040 (1:200) |
| S–F | F. 3 | 0.030 | 0.016 | 0.043 | 0.034 (1:200) | 0.055 (1:200) |
| S–F | F. 4 | 0.010 | 0.038 | 0.046 | 0.039 (1:200) | 0.060 (<1:200) |
| 4–F | All | 0.009 | 0.023 | 0.045 | 0.025 (1:100) | 0.051 (1:200) |

| Mode | Absolute RMSE | 4–F [m] (Scale) | 4–F + 1 ext GCP [m] (Scale) | 4–F + 1 SP/façade [m] (Scale) |
|---|---|---|---|---|
| NRTK | Horizontal | 0.015 (*) (1:50) | 0.015 (1:50) | 0.005 (1:50) |
| NRTK | 3D | 0.062 (*) (<1:200) | 0.020 (1:100) | 0.005 (1:50) |
| RTK | Horizontal | 0.025 (**) (1:100) | 0.020 (1:100) | 0.006 (1:50) |
| RTK | 3D | 0.051 (**) (1:200) | 0.022 (1:100) | 0.007 (1:50) |

| Level | Vector | Reference distance [m] | Model distance, NRTK [m] | Model distance, RTK [m] | Difference, NRTK [m] | Difference, RTK [m] |
|---|---|---|---|---|---|---|
| L.L. | 100–313 | 16.395 | 16.420 | 16.419 | 0.025 | 0.024 |
| L.L. | 105–308 | 16.373 | 16.402 | 16.398 | 0.029 | 0.025 |
| L.L. | 108–305 | 16.448 | 16.475 | 16.473 | 0.027 | 0.025 |
| L.L. | 110–301 | 16.391 | 16.415 | 16.413 | 0.024 | 0.022 |
| L.L. | 200–432 | 10.628 | 10.647 | 10.644 | 0.019 | 0.016 |
| L.L. | 207–411 | 10.717 | 10.735 | 10.732 | 0.018 | 0.015 |
| L.L. | 211–405 | 10.624 | 10.641 | 10.640 | 0.017 | 0.016 |
| L.L. | 216–401 | 10.661 | 10.682 | 10.680 | 0.021 | 0.019 |
| U.L. | 112–318 | 16.378 | 16.404 | 16.400 | 0.026 | 0.022 |
| U.L. | 115–317 | 16.373 | 16.398 | 16.394 | 0.025 | 0.021 |
| U.L. | 116–323 | 16.432 | 16.457 | 16.454 | 0.026 | 0.022 |
| U.L. | 117–322 | 16.364 | 16.389 | 16.387 | 0.026 | 0.023 |
| U.L. | 218–423 | 10.603 | 10.623 | 10.621 | 0.020 | 0.019 |
| U.L. | 222–420 | 10.627 | 10.645 | 10.645 | 0.017 | 0.017 |
| U.L. | 230–427 | 10.639 | 10.658 | 10.655 | 0.019 | 0.016 |
| U.L. | 229–435 | 10.644 | 10.664 | 10.661 | 0.020 | 0.017 |
| Relative RMSE [m] | | | | | 0.023 | 0.020 |
| Scale | | | | | 1:200 | 1:100 |
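The relative RMSE row can be recomputed from the distances in the table: each difference is the model distance minus the reference distance, and the relative RMSE is the root mean square of those differences. The sketch uses the tabulated distances directly. Because they are rounded to the millimetre, a few recomputed differences deviate by 1 mm from the printed ones, but the RMSE still rounds to the published 0.023 m (NRTK) and 0.020 m (RTK).

```python
import math

# (vector, reference, NRTK model, RTK model) distances [m] copied from the table.
DISTANCES = [
    ("100–313", 16.395, 16.420, 16.419),
    ("105–308", 16.373, 16.402, 16.398),
    ("108–305", 16.448, 16.475, 16.473),
    ("110–301", 16.391, 16.415, 16.413),
    ("200–432", 10.628, 10.647, 10.644),
    ("207–411", 10.717, 10.735, 10.732),
    ("211–405", 10.624, 10.641, 10.640),
    ("216–401", 10.661, 10.682, 10.680),
    ("112–318", 16.378, 16.404, 16.400),
    ("115–317", 16.373, 16.398, 16.394),
    ("116–323", 16.432, 16.457, 16.454),
    ("117–322", 16.364, 16.389, 16.387),
    ("218–423", 10.603, 10.623, 10.621),
    ("222–420", 10.627, 10.645, 10.645),
    ("230–427", 10.639, 10.658, 10.655),
    ("229–435", 10.644, 10.664, 10.661),
]


def relative_rmse(pairs: list[tuple[float, float]]) -> float:
    """RMS of (model distance - reference distance) over all vectors [m]."""
    return math.sqrt(sum((model - ref) ** 2 for ref, model in pairs) / len(pairs))


nrtk = relative_rmse([(ref, nrtk_d) for _, ref, nrtk_d, _ in DISTANCES])
rtk = relative_rmse([(ref, rtk_d) for _, ref, _, rtk_d in DISTANCES])
print(f"Relative RMSE: NRTK {nrtk:.3f} m, RTK {rtk:.3f} m")
```

Against the relative tolerances, 0.023 m exceeds the 0.020 m limit for 1:100 and falls to 1:200, while 0.020 m just meets 1:100, consistent with the Scale row.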

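| Façade | Vector | Reference distance [m] | Model distance, NRTK S–F [m] | Model distance, NRTK 4–F [m] | Model distance, RTK S–F [m] | Model distance, RTK 4–F [m] | Difference, NRTK S–F [m] | Difference, NRTK 4–F [m] | Difference, RTK S–F [m] | Difference, RTK 4–F [m] |
|---|---|---|---|---|---|---|---|---|---|---|
| F. 1 | 101–110 | | | | | | | | | |
| F. 1 | 118–113 | | | | | | | | | |
| F. 1 | 101–118 | | | | | | | | | |
| F. 1 | 107–113 | | | | | | | | | |
| F. 2 | 201–217 | | | | | | | | | |
| F. 2 | 218–229 | | | | | | | | | |
| F. 2 | 201–229 | | | | | | | | | |
| F. 2 | 217–218 | | | | | | | | | |
| F. 3 | 301–313 | | | | | | | | | |
| F. 3 | 321–322 | | | | | | | | | |
| F. 3 | 301–321 | | | | | | | | | |
| F. 3 | 313–322 | | | | | | | | | |
| F. 4 | 400–432 | | | | | | | | | |
| F. 4 | 423–435 | | | | | | | | | |
| F. 4 | 400–423 | | | | | | | | | |
| F. 4 | 432–435 | | | | | | | | | |
| Relative RMSE [m] | | | | | | | 0.007 | 0.014 | 0.005 | 0.013 |
| Scale | | | | | | | 1:50 | 1:100 | 1:50 | 1:100 |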

| Mode | Relative RMSE | S–F [m] (Scale) | 4–F [m] (Scale) | 4–F + 1 ext GCP [m] (Scale) | 4–F + 1 SP/façade [m] (Scale) |
|---|---|---|---|---|---|
| NRTK | Same level | – | 0.023 (*) (1:200) | 0.020 (1:100) | 0.008 (1:50) |
| NRTK | Same façade | 0.007 (**) (1:50) | 0.014 (**) (1:100) | 0.013 (1:100) | 0.005 (1:50) |
| RTK | Same level | – | 0.020 (*) (1:100) | 0.017 (1:100) | 0.008 (1:50) |
| RTK | Same façade | 0.005 (**) (1:50) | 0.013 (**) (1:100) | 0.010 (1:100) | 0.005 (1:50) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Taddia, Y.; González-García, L.; Zambello, E.; Pellegrinelli, A. Quality Assessment of Photogrammetric Models for Façade and Building Reconstruction Using DJI Phantom 4 RTK. Remote Sens. 2020, 12, 3144. https://doi.org/10.3390/rs12193144
