Automatic Detection of Maize Tassels from UAV Images by Combining Random Forest Classifier and VGG16

Abstract

1. Introduction

2. Materials and Methods

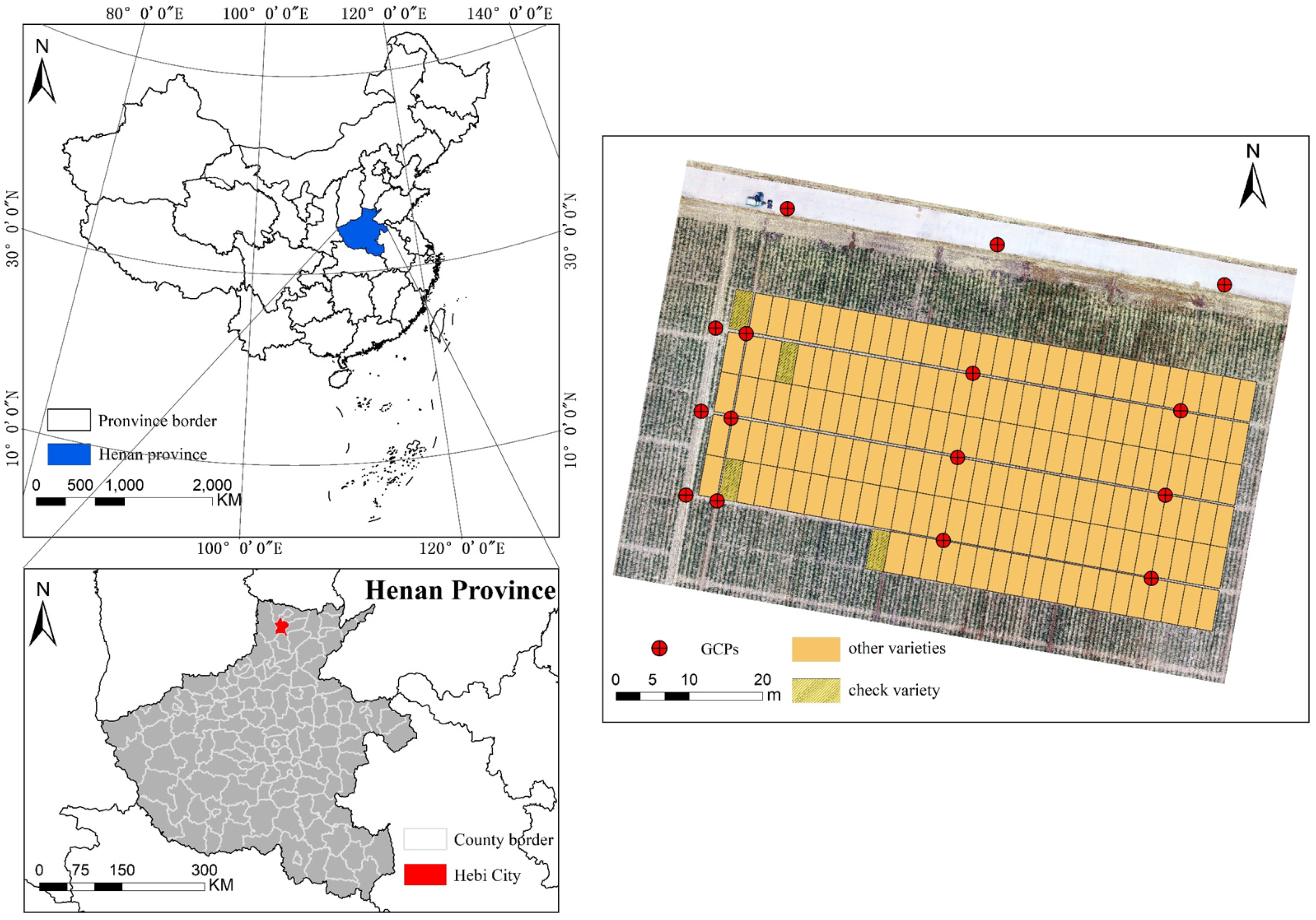

2.1. Data Acquisition

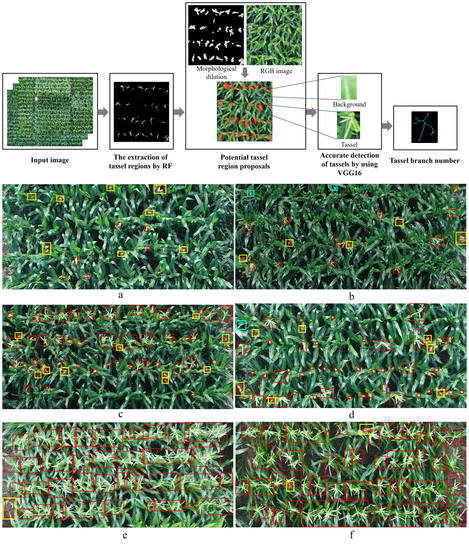

2.2. Schematic Diagram of Method

2.3. Potential Tassel Region Proposals by RF and Morphological Method

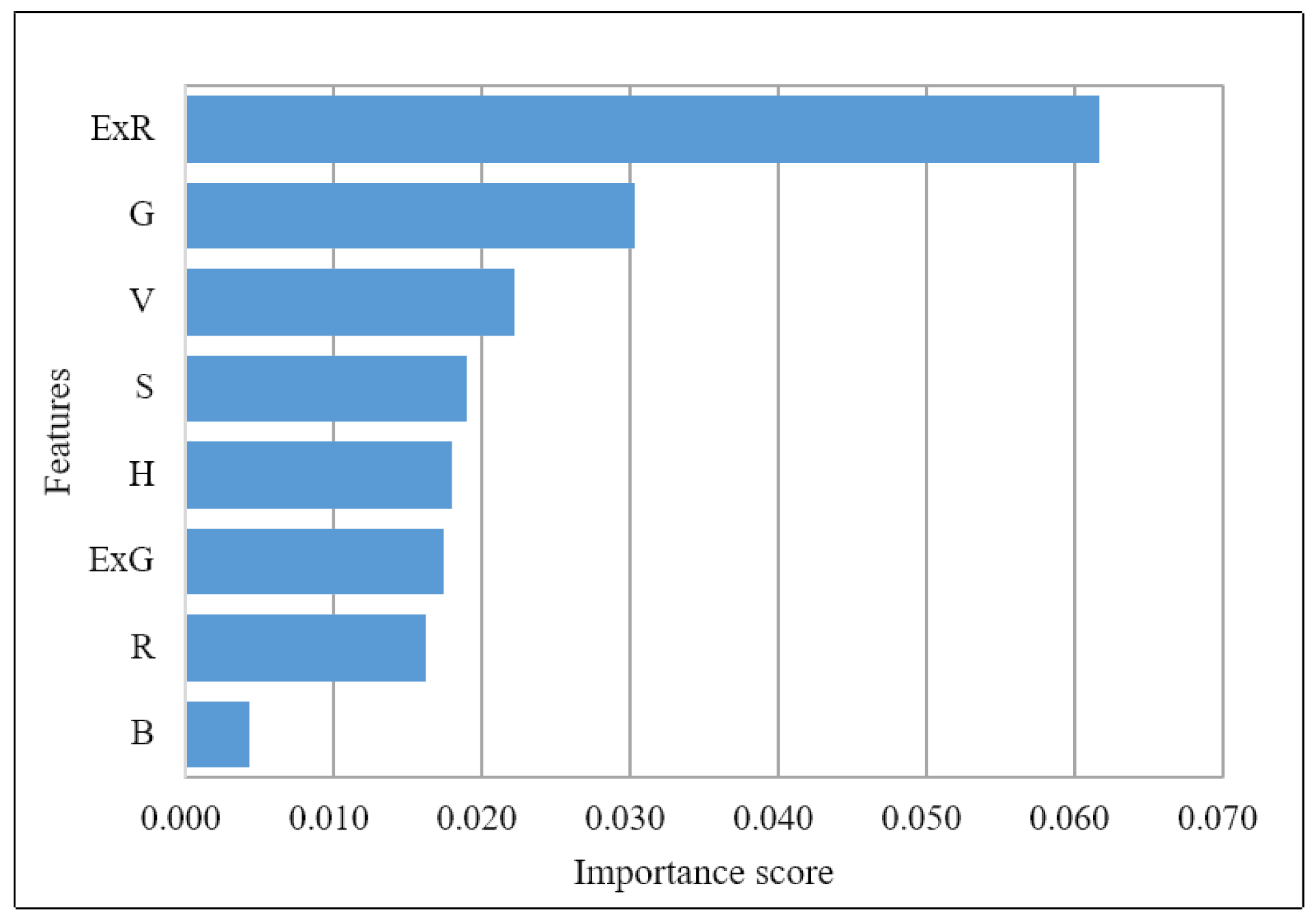

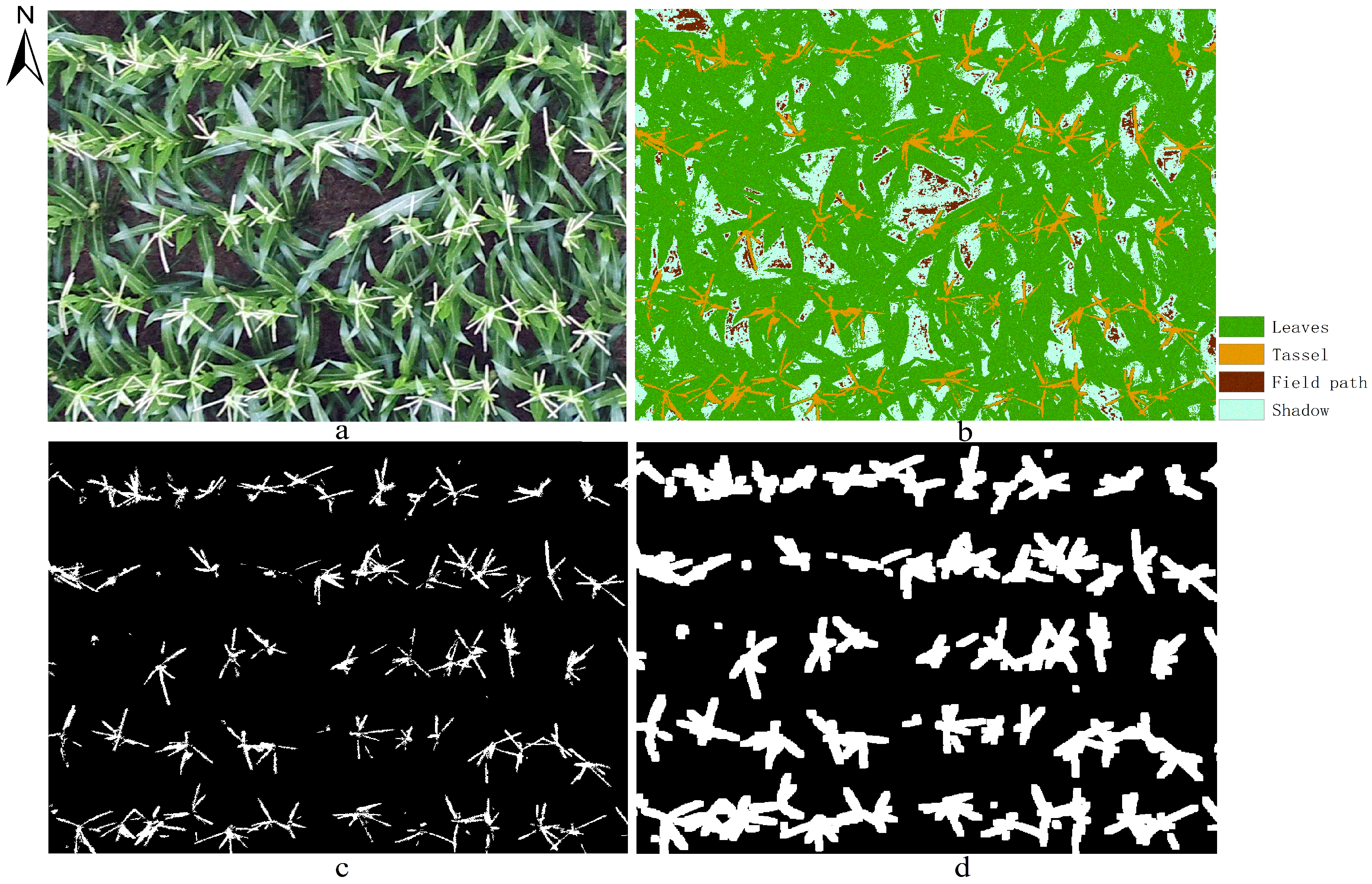

2.3.1. The Extraction of Tassel Regions by RF

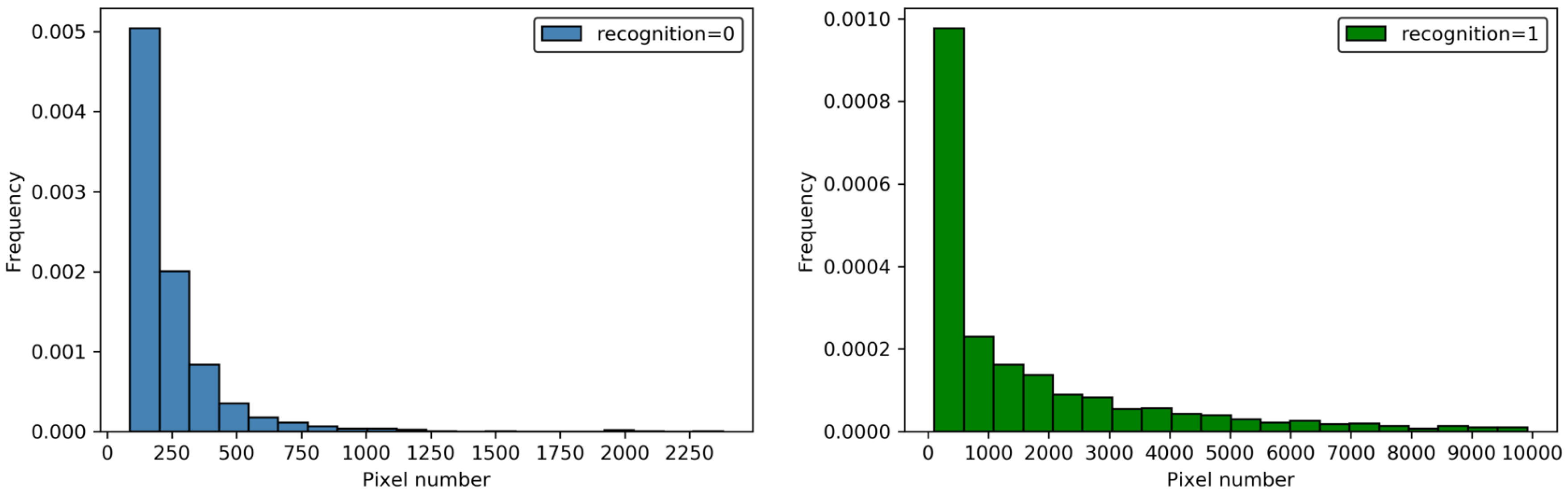

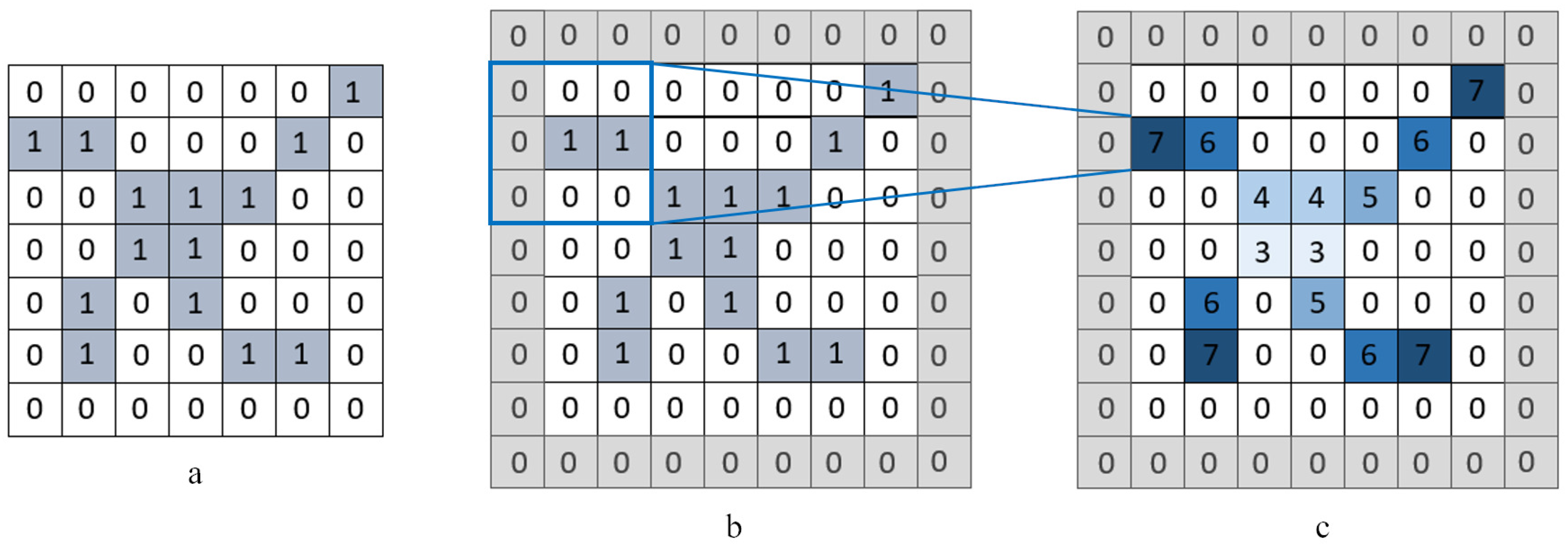

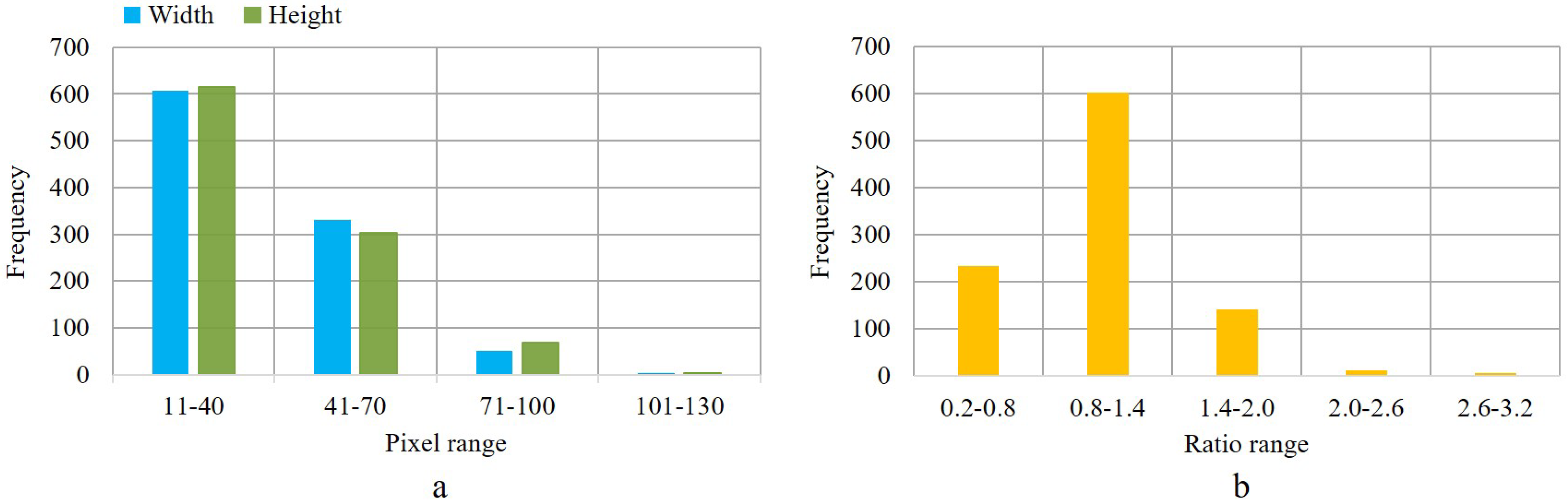

2.3.2. Potential Tassel Region Proposals Based on Morphological Processing

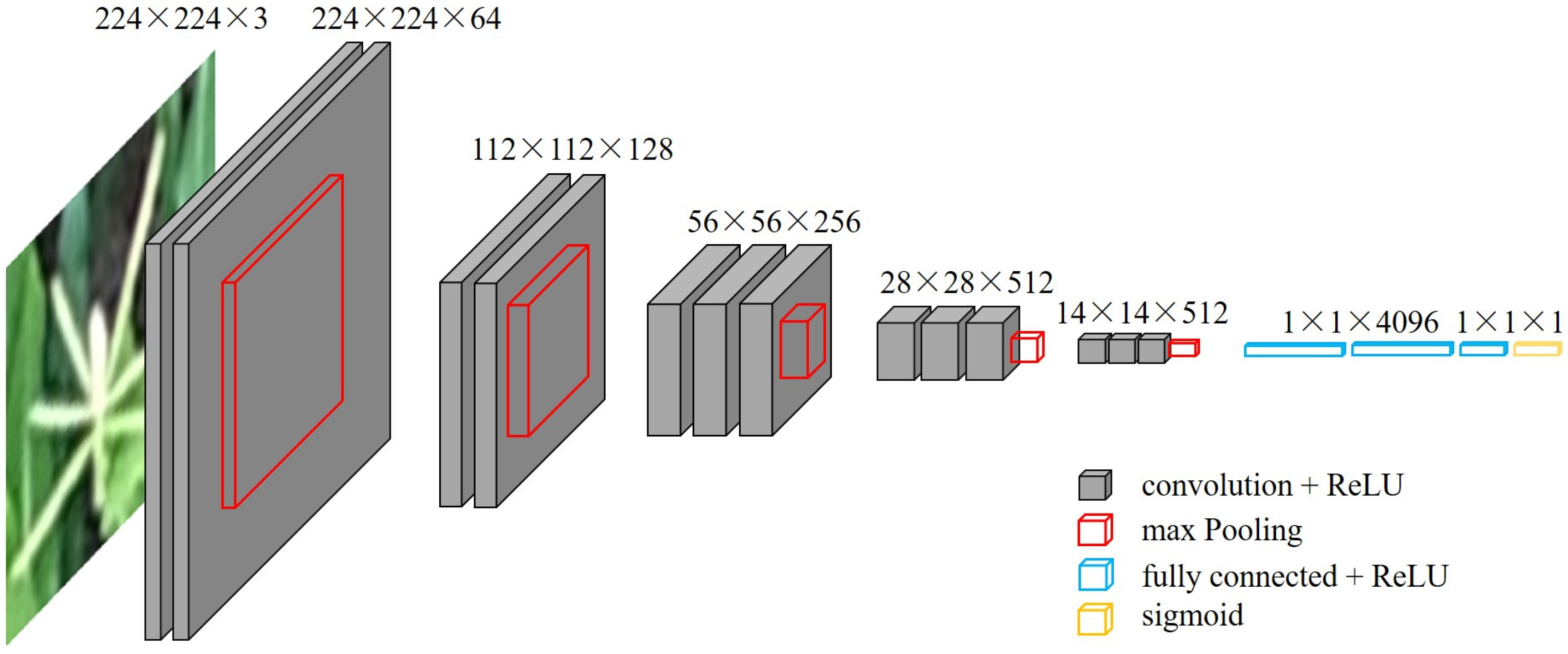

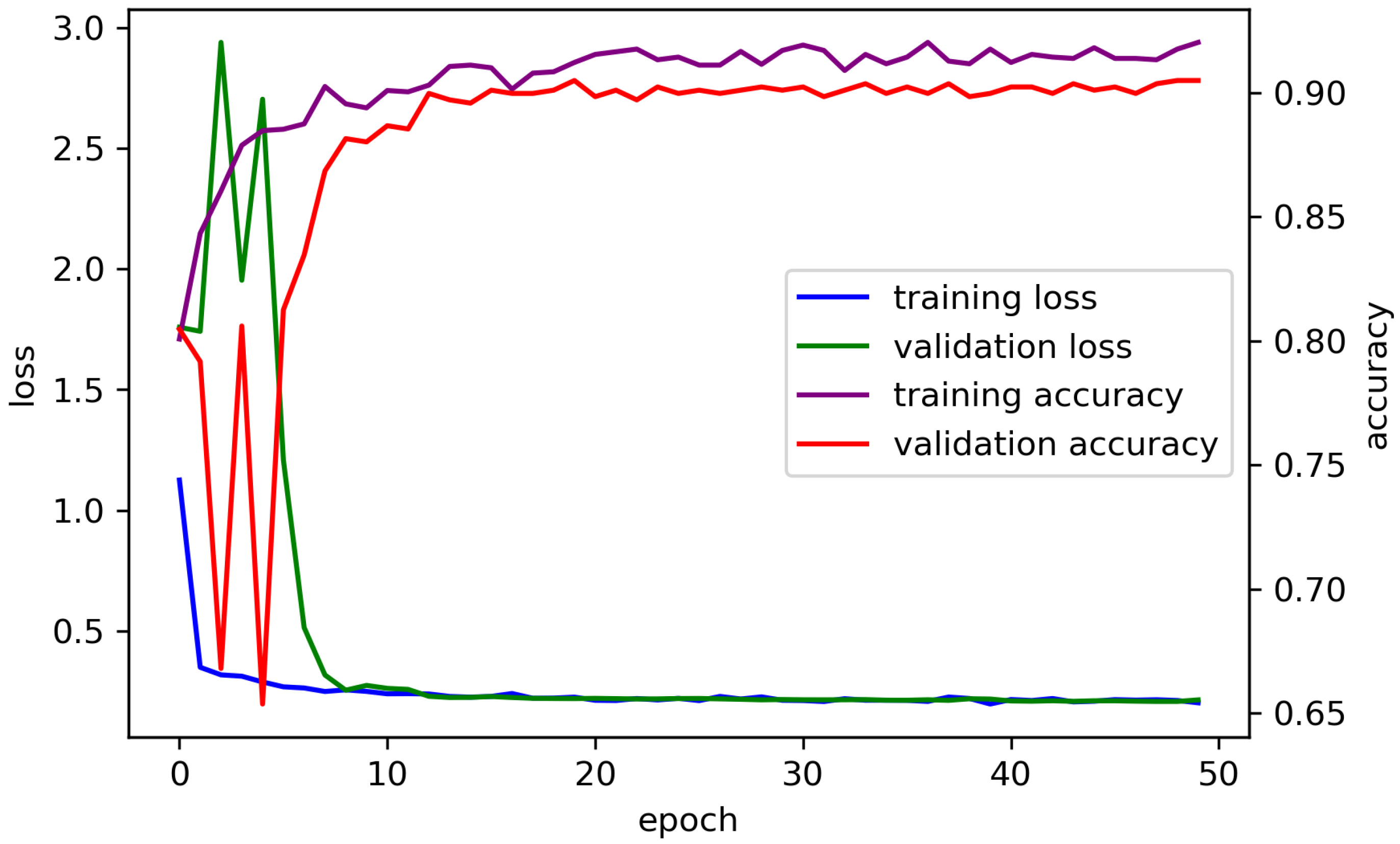

2.4. Fine Detection of Tassels by Using VGG16

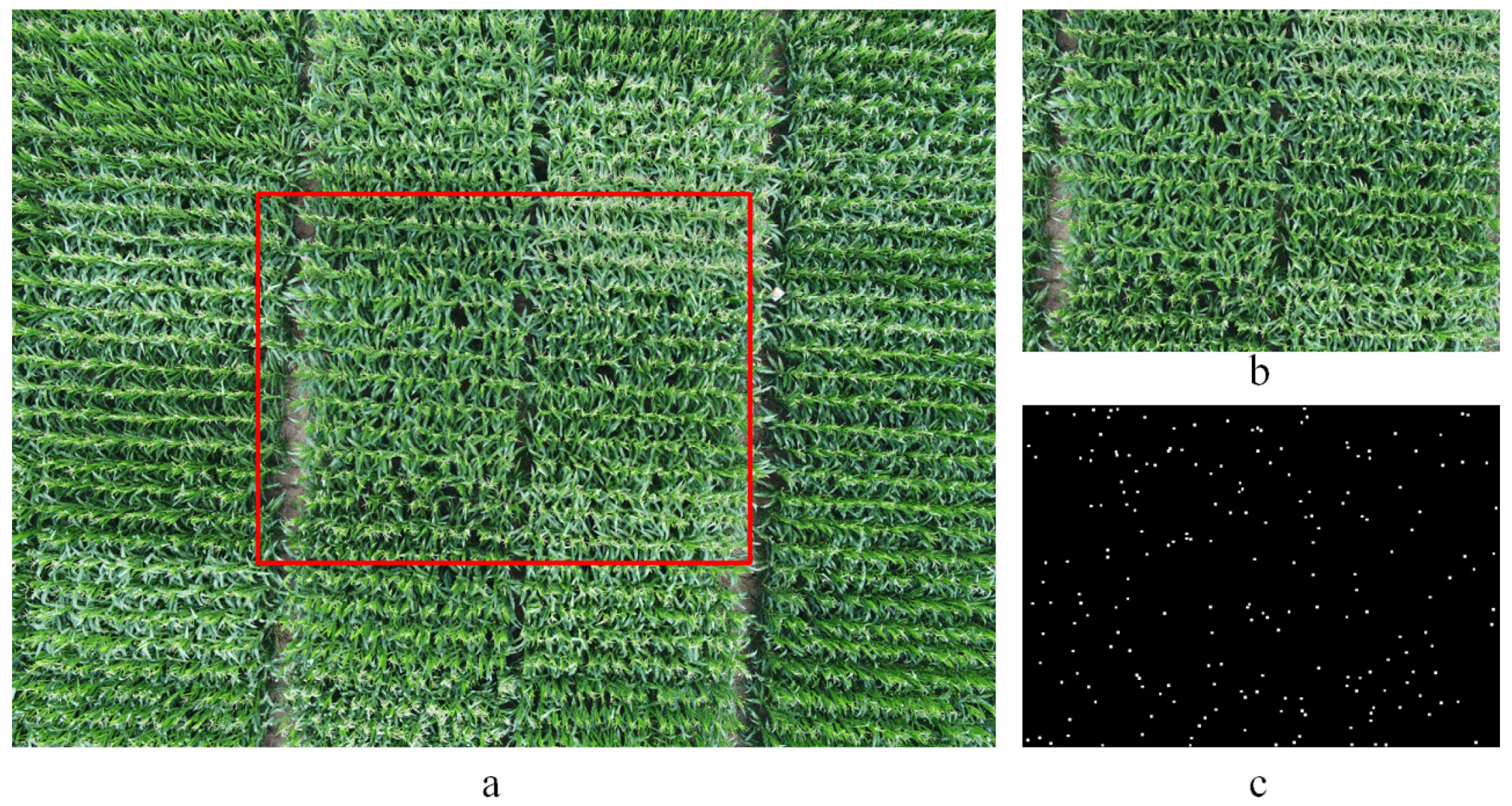

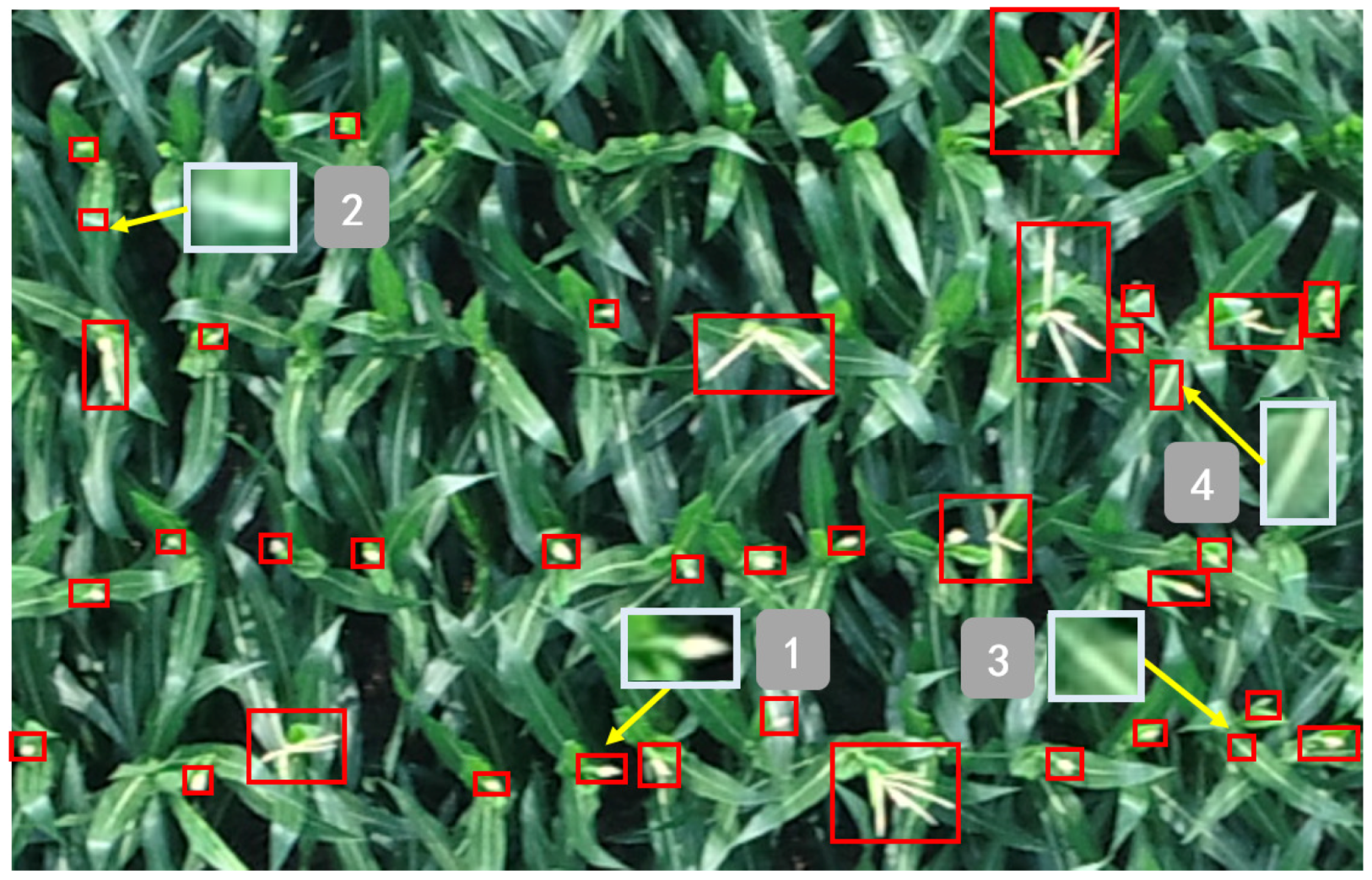

2.5. Extraction of Tassel Branch Number

2.6. Model Evaluation

3. Results

3.1. Influence of the Envelope Rectangles’ Size on Model Accuracy

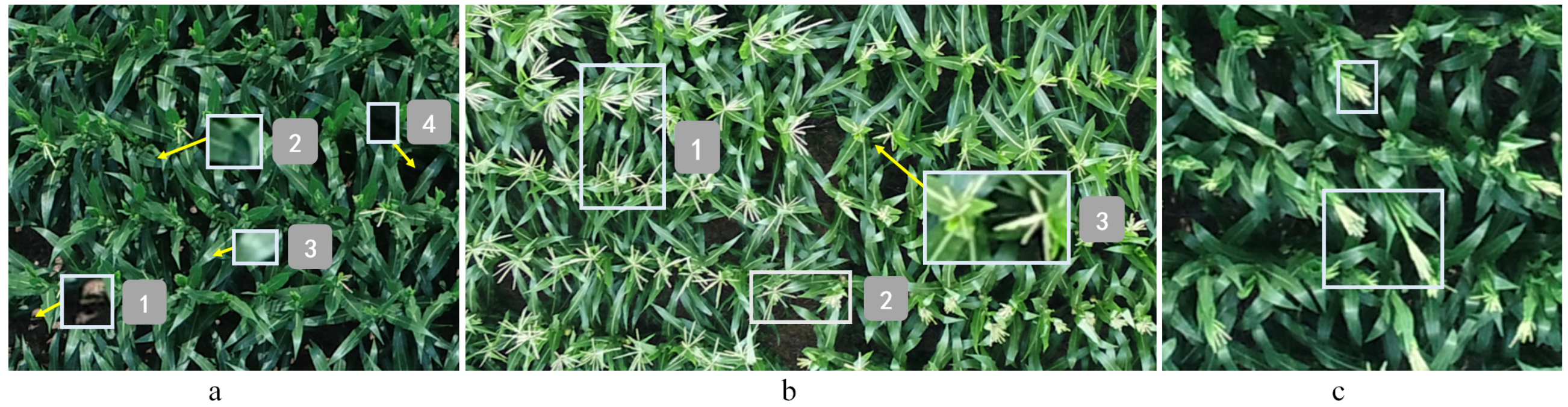

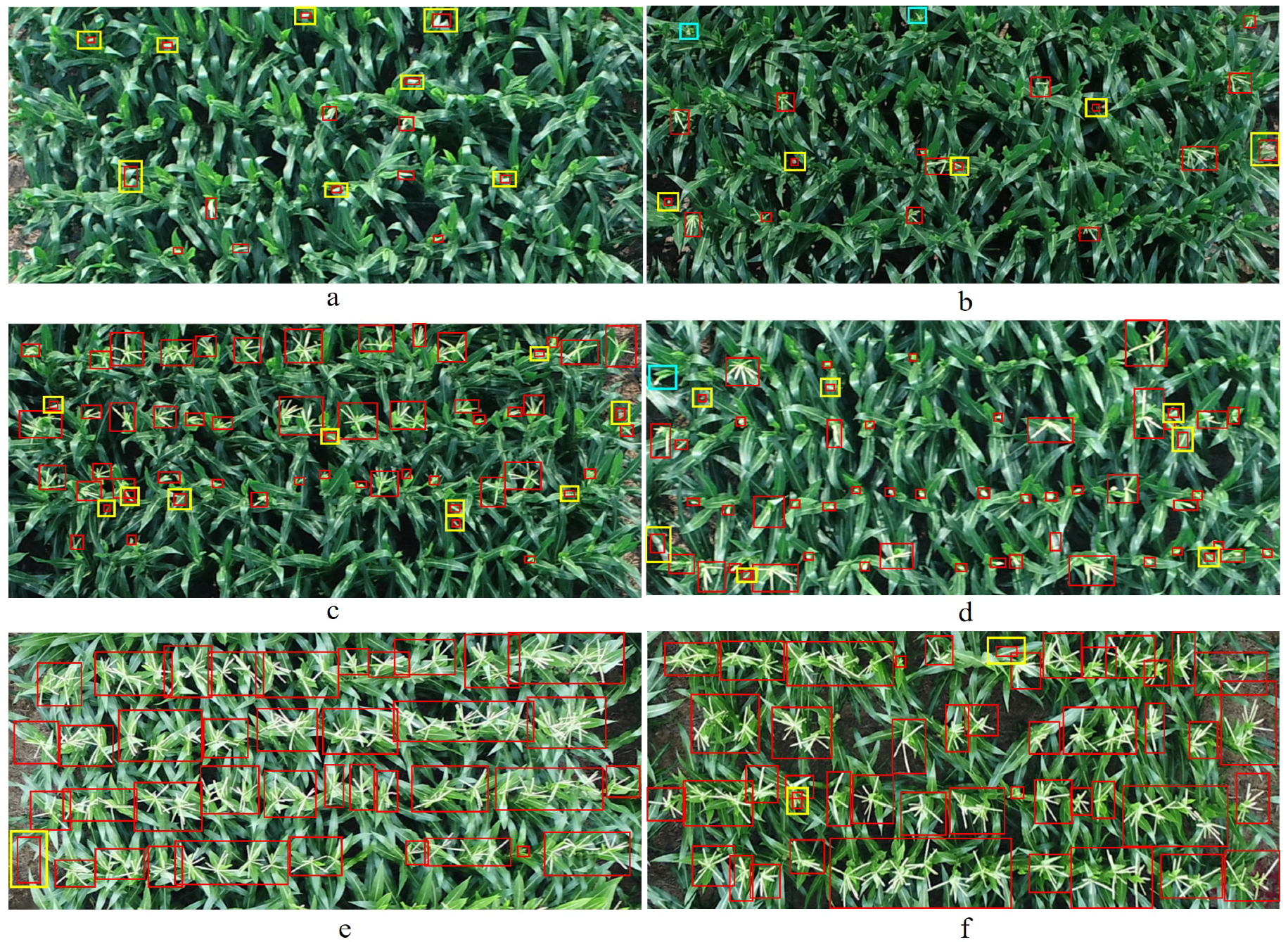

3.2. Influence of Different Tasseling Stages on Detection Accuracy

3.3. The Calculation of Tassel Branch Number

4. Discussion

4.1. Comparison of the Generation Method of Detection Boxes

4.2. Comparison of VGG16 and RF in Fine Detection

5. Conclusions

- (1) Potential tassel region proposals of different sizes and shapes can be found by combining RF with morphological methods, which is the key to detecting tassels in complex scenes. In addition, the fine-tuned VGG16 network reliably distinguishes the false positives among the potential tassel region proposals.

- (2) We divided the breeding plots into early, middle and late tasseling stages according to the proportion of tasseling plants and whether the tassels had complete morphological characteristics. Detection performance was closely related to the tasseling stage: detection in the late tasseling stage was better than in the middle and early stages (F1-scores of 0.962, 0.914 and 0.863, respectively).

- (3) Based on the distinctive morphological characteristics of maize tassels, the endpoint detection method applied to the tassel skeleton can extract the tassel branch number effectively.

- (4) Moreover, enlarging the envelope rectangles of the potential tassel region proposals effectively improves model performance (validation accuracy rose in all three reported settings, e.g., from 0.935 to 0.954), although the enlargement threshold needs to be chosen according to the actual characteristics of the samples.
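Conclusion (3) rests on counting endpoints of the tassel skeleton. The Python sketch below illustrates that idea on a binary skeleton stored as a list of lists. It assumes the skeleton has already been computed from a tassel mask (for example with `skimage.morphology.skeletonize`) and treats an endpoint as a skeleton pixel with exactly one 8-connected neighbour. The function name `count_skeleton_endpoints` and the toy skeleton are hypothetical, and how the paper converts an endpoint count into a branch number (for instance, whether the main-axis tip or the stalk end is excluded) is not specified here.

```python
from typing import List

# Offsets of the 8 neighbours of a pixel (8-connectivity).
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def count_skeleton_endpoints(skeleton: List[List[int]]) -> int:
    """Count skeleton pixels that have exactly one 8-connected skeleton neighbour."""
    rows = len(skeleton)
    cols = len(skeleton[0]) if rows else 0
    endpoints = 0
    for r in range(rows):
        for c in range(cols):
            if not skeleton[r][c]:
                continue
            # Number of foreground pixels around (r, c), ignoring pixels outside the image.
            degree = sum(
                1
                for dr, dc in NEIGHBOURS
                if 0 <= r + dr < rows and 0 <= c + dc < cols and skeleton[r + dr][c + dc]
            )
            if degree == 1:  # a branch tip (or the stalk end) of the skeleton
                endpoints += 1
    return endpoints


# Hypothetical toy skeleton: a vertical main axis with one side branch on each side.
TOY_SKELETON = [
    [0, 0, 0, 1, 0, 0, 0],
    [1, 0, 0, 1, 0, 0, 1],
    [0, 1, 0, 1, 0, 1, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
]

if __name__ == "__main__":
    # Endpoints: main-axis tip, two side-branch tips and the lower stalk end.
    print(count_skeleton_endpoints(TOY_SKELETON))  # 4
```

Pixels at branch junctions have three or more neighbours and pixels along a branch have two, so only tips are counted; a real skeleton may also need short spurs pruned before counting.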

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | unmanned aerial vehicle |
| RF | random forest |
| GCPs | ground control points |


| Proportion of Tassel Samples | Precision | Recall | F1-Score | Overall Accuracy (OA) |
|---|---|---|---|---|
| 4% | 0.94 | 0.70 | 0.80 | 0.877 |
| 6% | 0.93 | 0.72 | 0.81 | 0.881 |
| 10% | 0.89 | 0.80 | 0.84 | 0.886 |
| 15% | 0.89 | 0.80 | 0.85 | 0.891 |
| 20% | 0.86 | 0.84 | 0.85 | 0.888 |
| 25% | 0.85 | 0.84 | 0.84 | 0.880 |

| Envelope Rectangles’ Size | Validation Accuracy_6 | Validation Accuracy_8 | Validation Accuracy_10 |
|---|---|---|---|
| original envelope rectangles | 0.944 | 0.935 | 0.935 |
| 600 pixels | 0.952 | 0.954 | 0.940 |
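The table above compares the original envelope rectangles of the potential tassel region proposals with rectangles enlarged to 600 pixels. As a rough illustration of that step, the Python sketch below labels 8-connected regions in a binary tassel mask (assumed to be the pixel-level output of the RF classifier), returns each region's envelope (bounding) rectangle, and can enlarge small rectangles to a minimum side length. Connected-component labelling here only stands in for the paper's morphological processing, which may involve further operations. The names `envelope_rectangles`, `enlarge_box`, `min_pixels` and `min_side` are hypothetical, and because the table does not say whether "600 pixels" is a side length or an area, `min_side` is an illustrative parameter only.

```python
from collections import deque
from typing import List, Tuple

# (row_min, col_min, row_max, col_max), all bounds inclusive
Box = Tuple[int, int, int, int]


def envelope_rectangles(mask: List[List[int]], min_pixels: int = 1) -> List[Box]:
    """Return the envelope rectangle of every 8-connected foreground region."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes: List[Box] = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill over one connected component.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            r_min, c_min, r_max, c_max = r0, c0, r0, c0
            size = 0
            while queue:
                r, c = queue.popleft()
                size += 1
                r_min, r_max = min(r_min, r), max(r_max, r)
                c_min, c_max = min(c_min, c), max(c_max, c)
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and mask[rr][cc] and not seen[rr][cc]):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            if size >= min_pixels:  # drop tiny components as noise
                boxes.append((r_min, c_min, r_max, c_max))
    return boxes


def _grow(lo: int, hi: int, min_side: int, limit: int) -> Tuple[int, int]:
    """Widen [lo, hi] to at least min_side pixels, keeping it inside [0, limit)."""
    extra = min_side - (hi - lo + 1)
    if extra <= 0:
        return lo, hi
    lo -= extra // 2
    hi += extra - extra // 2
    if lo < 0:  # shift right if the interval crosses the first border
        lo, hi = 0, hi - lo
    if hi > limit - 1:  # shift left if it crosses the last border
        lo, hi = lo - (hi - (limit - 1)), limit - 1
    return max(lo, 0), hi


def enlarge_box(box: Box, min_side: int, height: int, width: int) -> Box:
    """Grow a box roughly symmetrically so each side is at least min_side pixels."""
    r_min, c_min, r_max, c_max = box
    r_min, r_max = _grow(r_min, r_max, min_side, height)
    c_min, c_max = _grow(c_min, c_max, min_side, width)
    return r_min, c_min, r_max, c_max
```

For example, in a 10 × 10 image with `min_side=5`, a one-pixel region at (7, 8) becomes the box (5, 5, 9, 9): it is shifted back inside the image rather than clipped, so every enlarged proposal keeps the same size, which is convenient when crops are later resized for a CNN such as VGG16.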

| Tasseling Stage | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| Early tasseling stage (11 breeding plots) | 158 | 45 | 5 | 0.778 | 0.969 | 0.863 |
| Middle tasseling stage (13 breeding plots) | 585 | 86 | 24 | 0.872 | 0.961 | 0.914 |
| Late tasseling stage (26 breeding plots) | 1347 | 90 | 15 | 0.937 | 0.989 | 0.962 |
| Overall (50 breeding plots) | 2090 | 221 | 44 | 0.904 | 0.979 | 0.940 |
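Each row's precision, recall and F1-score follow from its TP, FP and FN counts (precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = 2PR/(P+R)), and the overall row corresponds to pooling the counts over all 50 plots. The short Python check below recomputes the table from the counts. It uses 158 true positives for the early tasseling stage, the value consistent with that row's reported precision (0.778) and recall (0.969) and with the overall TP total of 2090; `detection_metrics` and `STAGE_COUNTS` are illustrative names, not taken from the paper.

```python
from typing import Dict, Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return precision, recall and F1-score from detection counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# (TP, FP, FN) per tasseling stage, taken from the table above.
STAGE_COUNTS: Dict[str, Tuple[int, int, int]] = {
    "early": (158, 45, 5),
    "middle": (585, 86, 24),
    "late": (1347, 90, 15),
}

if __name__ == "__main__":
    for stage, (tp, fp, fn) in STAGE_COUNTS.items():
        p, r, f1 = detection_metrics(tp, fp, fn)
        print(f"{stage:>7}: P={p:.3f} R={r:.3f} F1={f1:.3f}")
    # Overall row: pool the counts across stages before computing the metrics.
    pooled = tuple(sum(counts[i] for counts in STAGE_COUNTS.values()) for i in range(3))
    p, r, f1 = detection_metrics(*pooled)
    print(f"overall: P={p:.3f} R={r:.3f} F1={f1:.3f} counts={pooled}")
```

Computing the overall row from pooled counts (a micro-average) rather than averaging the three stage scores is what reproduces 0.904, 0.979 and 0.940; averaging the stage precisions instead would give about 0.862.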

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| tassel | 0.823 | 0.694 | 0.753 |
| non-tassel | 0.780 | 0.879 | 0.826 |

Overall accuracy (OA) across both categories: 0.796.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zan, X.; Zhang, X.; Xing, Z.; Liu, W.; Zhang, X.; Su, W.; Liu, Z.; Zhao, Y.; Li, S. Automatic Detection of Maize Tassels from UAV Images by Combining Random Forest Classifier and VGG16. Remote Sens. 2020, 12, 3049. https://doi.org/10.3390/rs12183049
