1. Introduction

Detection and segmentation of small regions of interest (RoIs) from images (e.g., natural vegetation areas, crops, floods, forests, roads, buildings, waters, etc.) is a difficult task in many remote image processing applications. Recently, considerable efforts have been made in this direction with applications in different domains like agriculture [

1,

2], environment [

3,

4], and transport [

5,

6]. On the other hand, the utility of the surveillance/monitoring systems on various areas has been proven by the management of natural disasters [

7] and rescue activities. Different solutions based on image analysis are proposed for detection and analysis of RoIs in areas affected by different types of natural disasters (floods, hurricanes, tornadoes, volcanic eruptions, earthquakes, tsunamis, etc.). Among these, floods are the most expensive types of disasters in the world and represented 31% of the economic losses generated by natural disasters during 2010–2018 [

8]. Determining and evaluating flooded areas during or immediately after flooding in agricultural zones are important for timely assessment of economic damage and taking measures to remedy the situation.

For large areas and low resolution, satellite images are often considered. Thus, in [

9] the Sentinel-2 satellite constellation was used to provide multispectral images to segment multiple ground RoIs by deep convolutional neural networks. In terms of technological advancement, aerial imagery is an important form of documentation about RoIs from the ground. Unlike satellite imagery, these types of images are characterized by a good spatial resolution which can increase the accuracy of the classification and segmentation.

In a post-flood scenario on small areas, there are three ways to determine the ground RoIs: satellites, aircrafts, and unmanned aerial vehicles (UAVs). From these, the UAV solution is the cheapest and most accurate for real time RoI assessment. In addition, the UAV solution is independent of cloudy weather and has better ground resolution.

The difficulty of many algorithms developed and implemented within the solutions of image segmentation is that they have a certain purpose, which turns them into specific algorithms, depending on the application. For example, until recently, most of these solutions were based on extracting features chosen as representative and effective for the classes considered. This approach requires the choice of representative features for the application and suffers from a lack of flexibility. The introduction or elimination of certain features or classes requires modification of the whole architecture.

The alternative solution, most commonly used today, is based on the ability of neural networks to learn to extract the relevant features alone based on a goal/cost, also called loss function, and a set of desired outcomes. However, the compromise is the size of the training set and time. In contrast, the execution/operating time is much shorter. This new approach has as a defining characteristic the ability to learn and, therefore, the ability to adapt to new applications by transfer learning. The results of image segmentation differ from one network to another, not only through statistical indicators (true positive, true negative, false positive, false negative, and accuracy), but also in location of false positive and false negative pixels. Therefore, by combining the segmentation information of more neural networks it is possible to compensate the false positive or false negative cases.

We considered that a solution to improve the accuracy and the time performances is to combine several classifiers (in this case neural networks) that act in a parallel way and to aggregate individual decisions to largely eliminate classification errors. In this case, a multi-network system based on global decision results is more efficient than the individual decisions of the component networks. The number of neural networks and their nature may differ. Thus, a subjective behavior (individual interpretation of single neural networks) can be transformed into a more objective behavior (interpretation based on fusion of information).

The problem addressed in this paper is the detection and evaluation of flooding and vegetation areas from aerial images acquired by UAVs. As mentioned above, the monitoring of flooded areas in rural zones is important to assess the damage and make appropriate post-disaster decisions. Three important classes were chosen to address this problem: the flood class (F), the vegetation class (V), and the rest (R). As the main contribution, the authors proposed a system of detection and evaluation of these RoIs based on the information fusion from a set of primary classifiers (neural networks). Thus, the system contains a multi neural network structure and a final convolutional layer that combines the decision probabilities of these primary classifiers to obtain a better accuracy.

The neural networks were chosen based on our experiments [

3] in the field of aerial image processing and on consulting more research works [

4,

5,

6] highlighting the network performances in various applications. We considered the network diversity and the classification results in such a way that the false positive and false negative cases were corrected and the global accuracy was better than the individual accuracies. We considered that the choice of these five neural networks would ensure a compromise between accuracy and operating time. A larger number of networks would have increased the operating time and a smaller number would have decreased the accuracy.

The system was learned and tested on our dataset (own) containing real images, but which were difficult to segment, acquired in a UAV mission after moderate flooding in Romania.

2. Related Works

Due to rapid development of satellites, aerial vehicles, and information technology, the segmentation of remote images has been extensively studied in recent years. The analysis of the related work was limited to the main aspects involved in our approach: image acquisition, image processing, and, especially, using deep convolutional neural networks (CNNs) for the classification and segmentation of remote images.

2.1. Image Acquisition

To reduce the impact of floods on communities and the environment, it is necessary to manage these situations effectively by high-performance systems that involve detecting and evaluating flood-affected areas in the shortest time possible [

10]. These systems represent a global demand for the management of humanitarian crises and natural disasters and are based on geo-referential/geospatial information obtained in real time using a drone-mounted camera (UAV) [

11], satellite information [

12], or information obtained from ground mounted surveillance systems. In [

13], dynamic images were acquired by a mini-UAV to monitor an urban area of Yuyao, China. In order to differentiate the objects from the ground, the parameters of the co-occurrence matrix from the UAV images were determined. Subsequently, based on the information obtained, the authors defined a classifier consisting of 200 decision trees to extract the flood affected areas. On the other hand, an approach regarding the use of RGB images acquired using a UAV to study the habitat of a river was presented in [

14], where different regions were classified using real mapping of the dominant substrate of the riverbed. The classification methodology consisted of two stages: the classification of the portions of the land and then the segmentation of the images using the classified regions. To make a difference between different types of regions (water, land, and those covered by vegetation) the information obtained at the pixel level was used. Additionally, the authors in [

15] presented another method that had the role to classify and eliminate the shadows from the dynamic images obtained with the help of a UAV. Methods like machine learning and support vector machine were used for classification. The shadows were detected and extracted using a separate class generated based on the region of interest observed in the image and by applying a segmentation threshold. The obtained results indicated that the presence of shadows negatively influences the results of the classification method. In [

16], using a surveillance system, the authors proposed a region-based image segmentation method and a flood risk classifier to identify the local variation of the river discharge surface and to determine the appropriate level of risk. This method has a relatively high robustness in flood warning and detection applications. A solution for flood monitoring was also proposed in [

17]. This technique takes dynamic images and, by applying different processing methods, generates maps of the areas in danger of being flooded. The resulting maps can then be used to monitor and detect areas with high risk of flooding. In [

18], two methods of segmentation of images based on regions, Grow Cut and Growing Region, were applied to images that capture certain areas during severe weather. The authors demonstrated that the segmentation accuracy of the two methods varied quite widely in fog or rain conditions, the Growing Region method giving better results. More recently, the authors in [

19] used the fusion of multiresolution, multisensor, and multitemporal satellite imagery to improve the detection and segmentation of flooded areas in urban zones.

Multispectral analysis can also determine the water leakage in vegetation [

20]. Thus, there is a danger that both water and vegetation rich in chlorophyll will be confused. In general, multispectral analysis is used to analyze the degree of humidity of plants. Likewise, thermal cameras are used more to detect water loss and plant stress [

21]. In the case of RGB analysis, the advantages are the followings: water and vegetation can be easily distinguished by color and texture, the cost of equipment is lower, and, sometimes, even the computational effort is reduced. Regardless of approaches, one of the very difficult points is that water cannot be detected from UAVs if it is covered by high vegetation (trees) or buildings.

The rest of the paper is organized as follows. In

Section 2, a comprehensive study of the related works is done. The materials and methods are described in

Section 3. The system implementation and the associated parameters, obtained in the learning and validation phases, are presented in

Section 4. The experimental results and the performances obtained for flood and vegetation segmentation are presented in

Section 5. Finally, the discussions and conclusions are reported in

Section 6 and

Section 7, respectively.

2.2. Image Processing and Segmentation

The image processing for the purpose of segmentation involves the most appropriate choice of representation (choosing the color or spectral channel, improving the representation, extracting relevant features, and classification). Another problem encountered in remote image processing is the radiometric calibration, but we considered that UAV data is usually not calibrated in the same way as images taken from space platforms. UAV data is far less influenced by atmospheric effects and so a full atmospheric correction is unnecessary.

It can also use patching the image and re-composing the mask patches in the original image format [

3]. For example, in [

22] relevant information on the methods of segmentation of RoIs were provided. Basically, different segmentation methods were presented and analyzed: outline determination, region determination, Markov arbitrary field, or various clustering methods. All these methods provide relatively good accuracy in detecting areas of interest.

One of the most important RoI in the agriculture applications is the vegetation region. Authors in [

1] used RGB images to obtain first the hue color component and then to obtain a binary image for vegetation segmentation. After flooding, one of the most important RoI is the flood extent. To this end, good results were obtained using textural features extracted from the co-occurrence matrix and fractal dimension [

3].

Correlated color information, such as the chromatic cooccurrence matrix, is used for road segmentation [

23] or flood segmentation [

24] from UAV images. First the image is decomposed in patches, and then the most relevant features like contrast, energy, and homogeneity are extracted from the co-occurrence matrix between H (hue) and S (saturation) components. Although good results can be obtained with these methods regarding the accuracy, an important disadvantage is the segmentation time (a few seconds for an image). For flood and vegetation segmentation, other discriminant features, like the histograms of oriented gradients on H color channel and mean intensity on grey level, associated with the minimum distance-based classifier were used in [

25]. Then, a logical scheme between partial decisions increased the final segmentation.

A method for accurate extraction of regions of interest from aerial images was presented in [

26] and was based on the object-based image analysis, integrated with the fuzzy unordered rule induction algorithm, and the random forest algorithm for efficient feature selection. The segmentation process used the region growing-based method.

The authors in [

27] presented a supervised classification solution for a remote hyperspectral image, which integrates spectral and spatial information into a unified Bayesian framework. Compared to other solutions presented, the classification method using a convolutional neural network has been shown to perform well. Another solution with significant results was presented in [

28]. In this paper, the authors adopted a recent method for classifying hyperspectral images using the super pixel algorithm to train the neural network. After obtaining the spatial characteristics, a recurrent convolutional neural network was used to determine the portions that were classified incorrectly. The experimental results indicated that the classification accuracy increased after this method was used.

CNN can be combined with classical extraction of complex, statistical features like textural, fractal types, etc. In this case, the CNN input is not the image, but a feature vector extracted from it. To this end, the authors in [

29] combined texture features like local binary pattern (LBP) histograms with single perceptron type CNN to classify a small region of interest from UAV images in flood monitoring.

2.3. Using Deep CNN

To increase the efficiency of the image segmentation, recent research in the field showed that high accuracy can be achieved in a short period of time using deep neural networks. This means that a system is trained based on features to classify different types of images or regions of those images. The new approach has as a defining characteristic the ability to learn and, therefore, the ability to adapt to new applications by transfer learning.

In [

30], the artificial neural networks used provided good results in image classification. For this purpose, different learning tasks were developed, such as the optimal Bayes classifier or the SuperLearner algorithm that uses cross-validation to estimate the performance of several learning models. The authors of this study sought several methods of building neural networks to develop a more efficient solution. The experimental results obtained underlined the correlation between the architecture of the neural networks and the tasks for which they were developed.

Currently, the systems based on artificial intelligence (especially artificial neural networks) can better perform some tasks of visual classification of RoIs in UAV applications than humans due to the availability of large training data sets and the improvement of neural network algorithms. In the deep learning approach, CNNs (convolutional neural networks) are the most used for implementation. Some of the most popular networks are the following [

31]: AlexNet, GoogLeNet, VGGNet, ResNet, MobileNet, and DenseNet.

Authors in [

32] introduced the first network consisting of two convolutional layers followed by three fully connected layers. The network was called LeNet and was the basis of all classical convolutional networks. By increasing the number of convolutional and fully connected layers of LeNet, another performing neural network, AlexNet [

33], was obtained. Starting from AlexNet and adding connections from the lower layers to the most advanced ones (skip connections), a new neural network, ResNet (residual neural network), was obtained [

34,

35]. ResNet consists of a chain of residual units as a deep CNN, successfully used for satellite image classification [

9]. Depending on layer number, ResNet had different implementation (ResNet-50, ResNet-101, etc.). The authors in [

4] used a CNN-based method for image segmentation, namely FCN (fully convolutional network) [

36] for flooded area mapping. Thus, they used the transfer learning for FCN16s to reuse it for extracting flooding from UAV images.

YOLO (you only look once) is a CNN-based architecture containing anchor boxes and, in recent years, YOLO has proven to be a real-time object detection technique for widely used applications. Initially, YOLO was a convolutional network designed to detect and frame objects in an image. Subsequently, it was used to classify these objects and, then, segment the framing areas [

37,

38,

39]. YOLO v3 [

40] is extremely fast and treats the detection of the regions of interest as a regression problem by dividing the input image into a grid of size m × m, and for each cell in that grid it determines the probability that it belongs to a class of interest. A comprehensive comparison between deep CNNs is presented in [

41].

The generative adversarial nets (GAN) were introduced by Goodfellow et al. [

42] as an adversarial procedure between two main entities: the generator and the discriminator that are both simultaneously learned. Starting from an image, the GAN can synthesize a new image. The generator creates random images, and the discriminator network analyzes these images and then transmits to the generator how real the generated images are. Although the GAN network was not originally intended as a classifier, in recent applications it was used to classify various objects with the aid of an associated probability [

43,

44,

45]. Thus, the authors in [

46] proposed a new method for detection of regions of interest, like flooding in rural areas, using conditional generative adversarial networks (cGAN) and graphics processing units (GPU). The results demonstrate that the proposed method provides high accuracy and robustness compared with other methods for flooding evaluation. Other types of deep CNN like LeNet [

32] (full LeNet, half LeNet) and YOLO v3 (pixel YOLO, decision YOLO) were used separately in [

39] for detection and evaluation of RoIs in flooded zones. These CNNs, partially adapted from the existing literature, used a transfer learning procedure. Additionally, the deep CNNs such as ResNet and GoogLeNet with transfer learning provided good results to classify different ground RoIs from satellite images [

9].

3. Materials and Methods

As mentioned above, the proposed system for flood and vegetation assessment was based on information fusion from a set of efficient neural networks, considered as individual classifiers, grouped through a new convolutional layer into a global system. Fusing the individual decisions of neural networks, considered as subjective factors (due to specific learning), an increase in the degree of objectivity of the global classification was obtained.

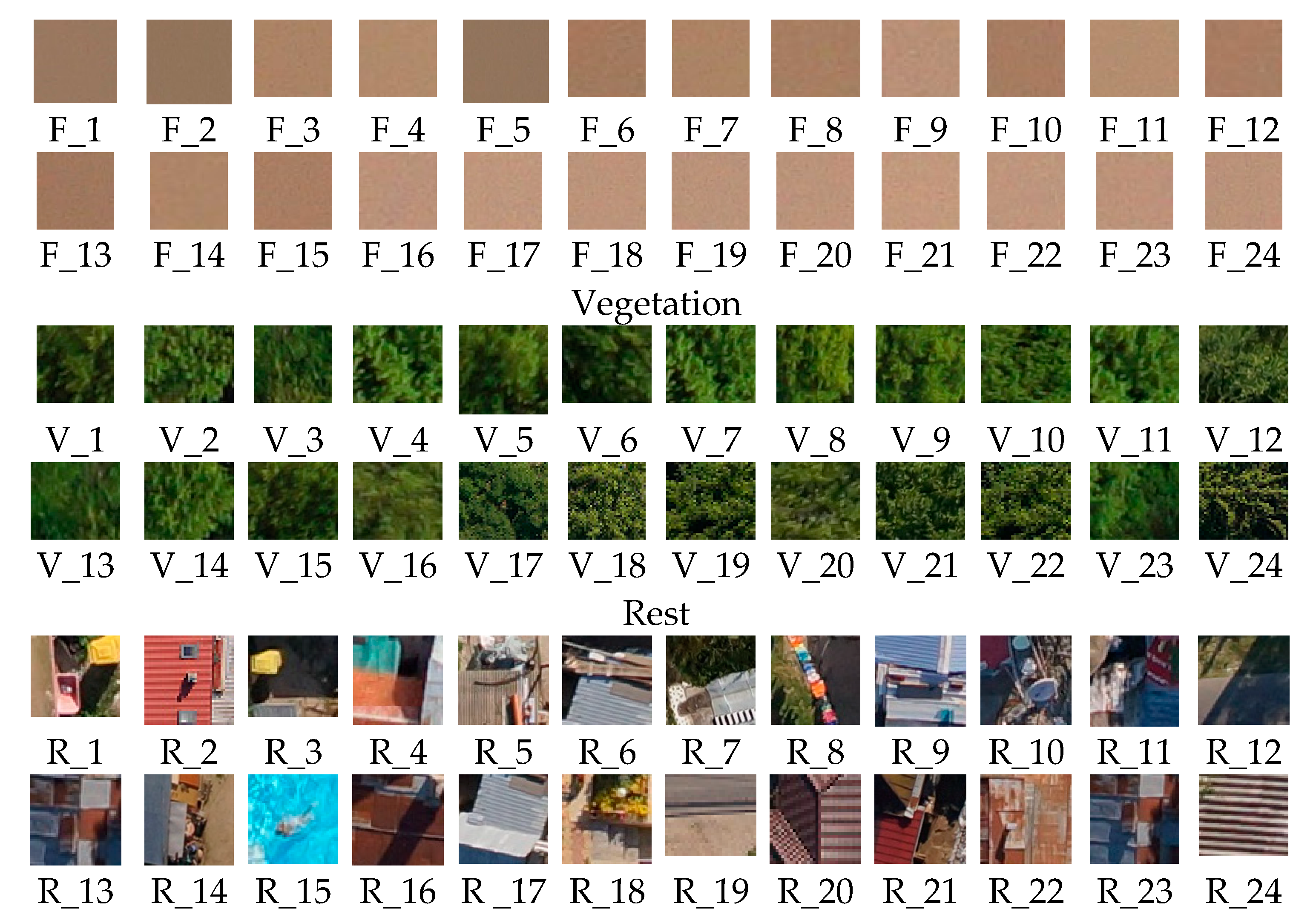

The images were taken from an orthophotoplan created from the images acquired by a UAV in the real case of a flood in a rural region from Romania. Then, each image, extracted from the orthophotoplan, was decomposed into non-overlapped patches that were labeled as one of the mentioned classes: F (flood), V (vegetation), and R (rest).

The proposed neural networks were training (the first phase—the learning phase) with a set of patches (the training set). A weight (corresponding to the confidence level) was established in the validation phase for each neural network. Based on the results of the previous works [

46] and [

39], a fusion system with increasing performance was proposed and implemented for flood and vegetation assessment. The following types of neural networks are considered as primary classifiers (PCs): YOLO, cGAN, LeNet, AlexNet, and ResNet. The fusion algorithm considers two elements: the confidence level (associated with a weight) given to each PC obtained after a validation phase, and the detection probabilities provided by these networks at the time of the operation itself. Each PC receives an input patch and provides an output patch of the same dimension, indexed with the class label (F—blue, V—green, and R—unchanged) and the associated probability, calculated using the cost (loss) function.

The selection of CNN was based on our previous studies [

24,

29,

39], and also on the consultation of other relevant works. We considered individual networks as subjective classifiers based on their structure and learning. Combining more subjective information with an associated confidence (weight), we sought to create a more objective classifier (global classifier). The most important aspect was that an error committed by a classifier can be corrected by the information (probability of belonging to a class) provided by other classifiers.

3.1. UAV System for Image Acquisition

To increase the flood assessment area, we used a fixed-wing UAV with greater autonomy, higher speed, and an extended operating area than a multicopter. The fixed-wing UAV MUROS was implemented by the authors in [

47]. The main characteristics, flight requirements, and performances are given in

Figure 1 and

Table 1.

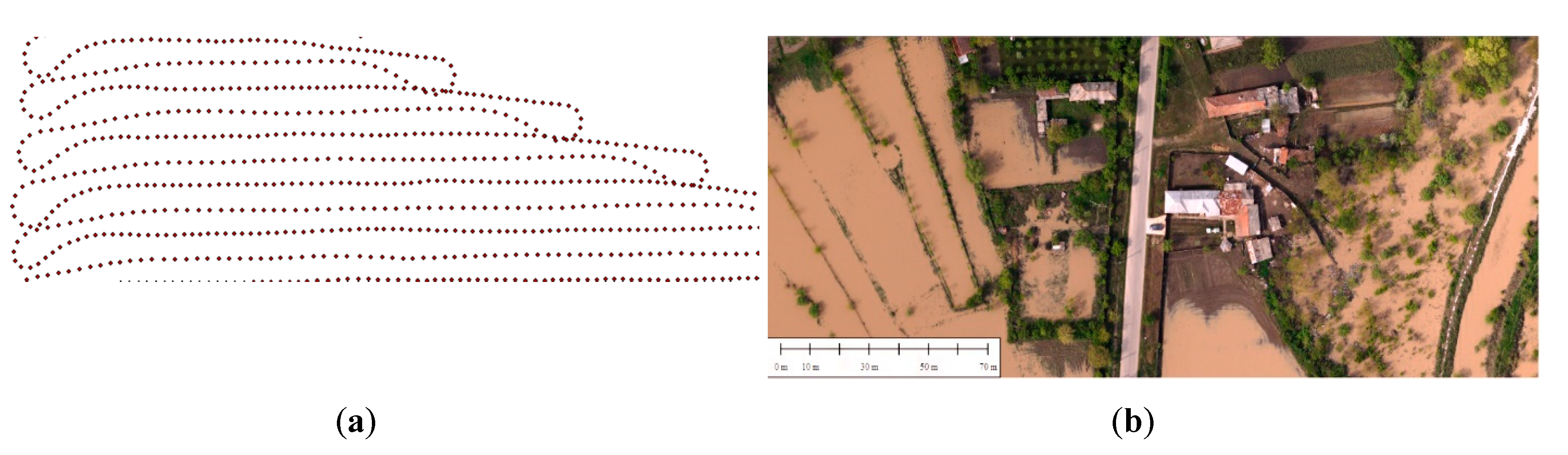

A portion of the UAV, namely flight to image acquisition (GPS points marked), is presented in

Figure 2a. From the successive images, acquired as the result of area surveillance, an orthophotoplan was created with special software (

Figure 2b). To this end, the successive images overlapped in both length and width up to 60%. Then, images of 6000 × 4000 pixels were cropped and regions like flood and vegetation were segmented based on the following operations described in the above section: image decomposition in non-overlapped patches of dimension 64 × 64 pixels; patch classification and marking; and, finally, patch recombination. Some patches were difficult to be analyzed because of mixed zones. We created a database of 2000 images from flooded rural areas.

3.2. YOLO Network

The CNN named decision YOLO, proposed in [

39], operates at a global level, and the architecture is presented in

Figure 3. The network has only convolutional layers grouped in two parts: down sampling and up sampling. The number of parameters in each dimension ascending layer is equal to the number of parameters in the correspondent layer on the descending side, establishing a connection between them.

The proposed network was created starting from YOLO by applying five combinations of convolutional layers followed by max pooling (the down sampling stream) and then five combinations of convolutional layers followed by the up sampling. The architecture contains concatenations between the obtained ascending layer and the descending layer of the same dimensions (a U-net structure). For every two layers, the number of parameters doubles. Finally, the classification probability is provided, and this is used in the convolutional layer of the global classifier. In the integrated scheme of the proposed system, YOLO CNN is referred to as the primary classifier PC1. In the case of the YOLO network, it was observed that the size of the patch sometimes influences the decision of the network. In the case of the presented application, the chosen size was 64 × 64 pixels, taking into account the size of the UAV images. An essential quality of these networks is the short learning time.

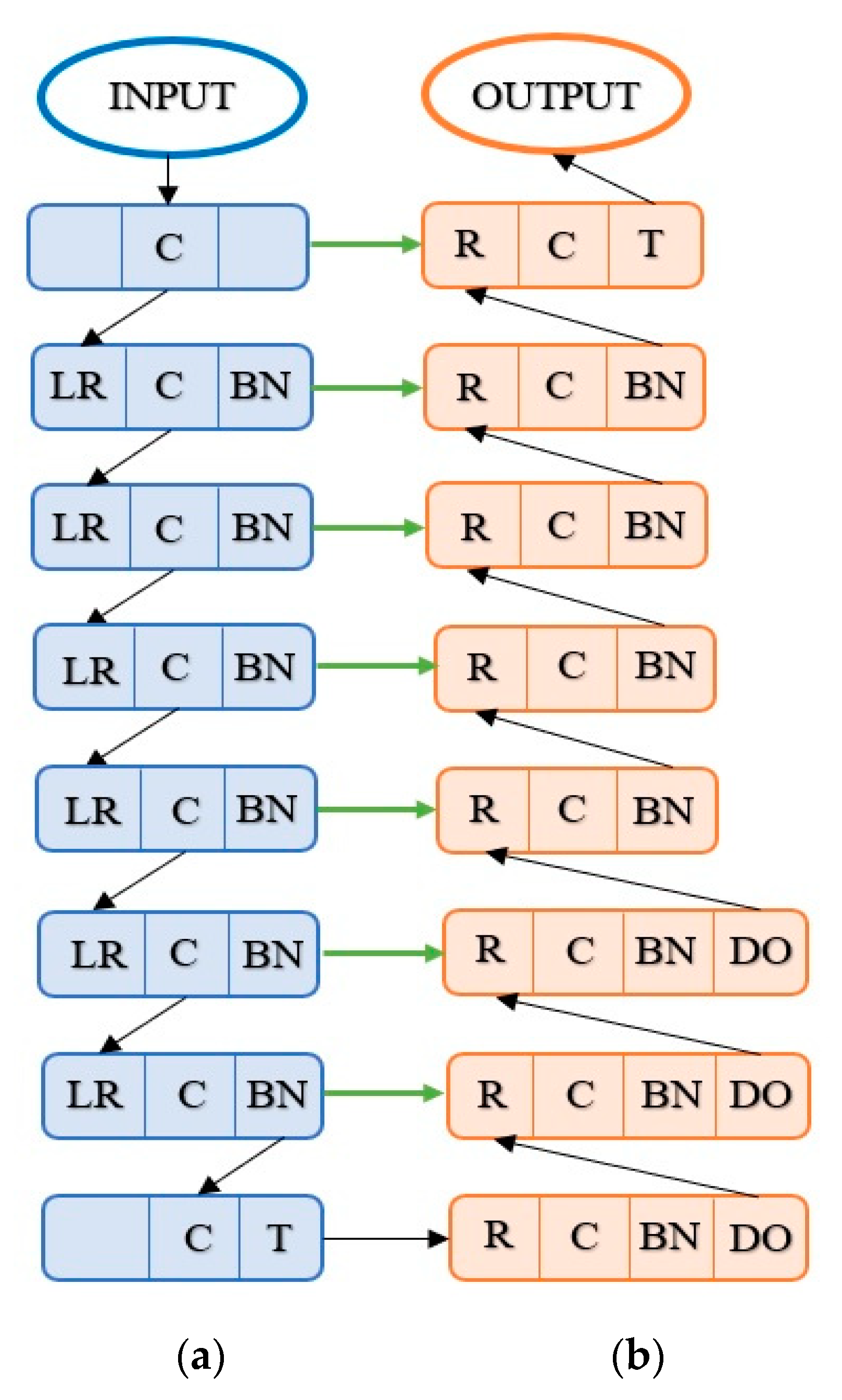

3.3. GAN Network

In this work, a modified variant of the original GAN was used, namely conditional GAN (cGAN) [

48], by considering, as starting images, a pair of an original image and an original mask of the segmented image [

46]. The generator component (G) of cGAN is of the encoder–decoder, U-net type (

Figure 4). This typical architecture successively down samples the data to a point and then applies the inverse procedure. Multiple connections between the encoder and decoder at corresponding levels can be observed at the G structure. The discriminator (D) role is to provide the information with an associated probability that a generated mask from G is a real one. Both G and D are based on typical layers, as presented in

Table 2.

ReLU (R) is a function of activation of a neuron that implements the mathematical function (1):

In certain situations (

Figure 4), it is preferable to consider the negative values and then use the LeakyReLU (LR) variant that lets the negative fraction of the input pass (2).

Like in the case of YOLO net, it should be noted that the use of the direct links in U-net [

49] of the generator does not stop the normal flow of data. As seen in

Figure 4, there are three dropouts (DOs).

The dropout level is the simplest way to combat the overfitting and involves the temporary elimination of some network units. DO is active only in the learning phase. The T unit that appears at the last level of G is the

tanh function (3).

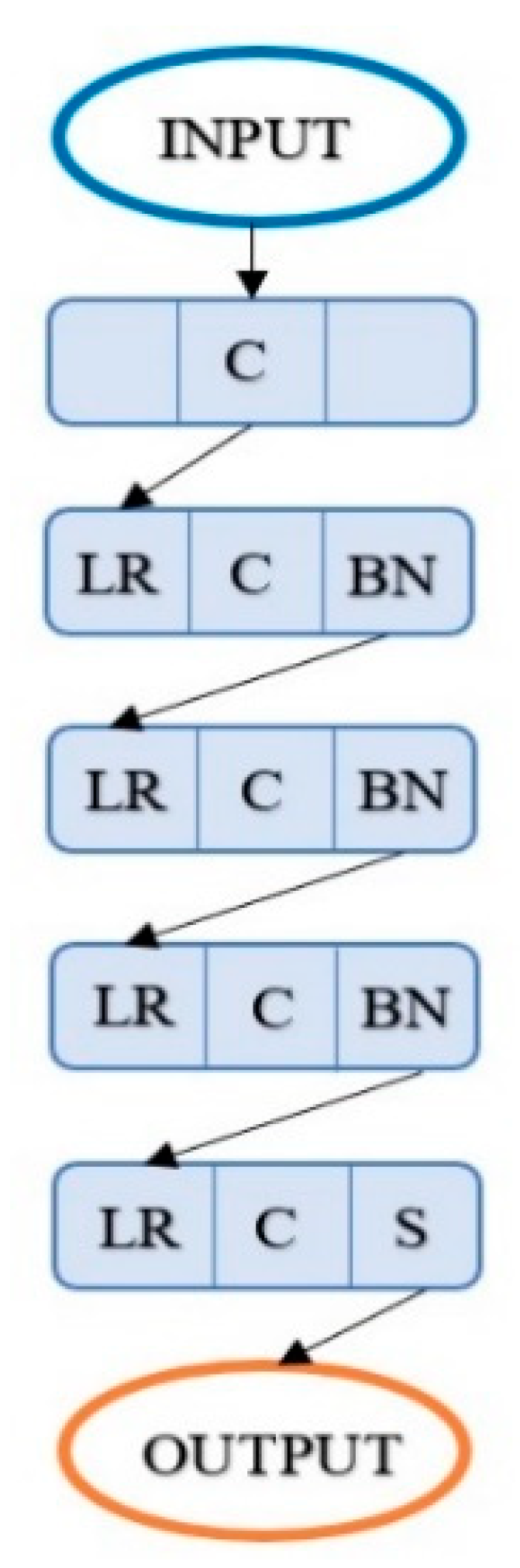

Similarly, the S unit that appears at the last level of D (

Figure 5) is the

sigmoid function (4).

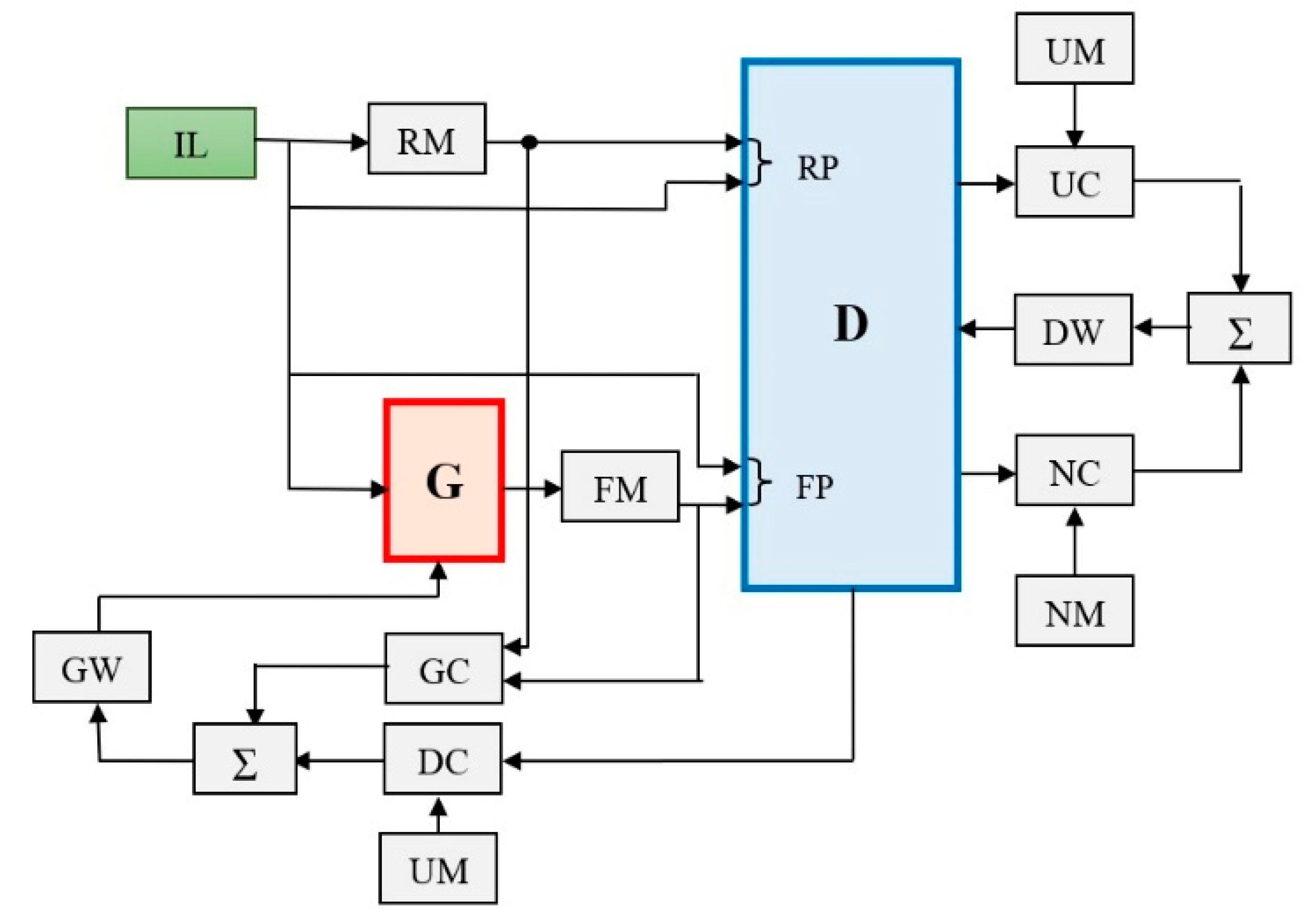

These two main components are connected in a complex architecture (

Figure 6), inspired from [

46], especially in the learning phase, where both G and D internal weights are established. As stated above, the architecture is a conditional generative adversarial network with the objective function

V(

D,

G) (5).

In the learning phase, a set of patches are used to create the corresponding real masks (RM) of flood or vegetation. The same set is introduced in G to obtain fake masks (FM). Two image pairs, RP and FP (real and fake), are considered as D inputs [

46]. There are four comparators (two for D and two for G) that are based on the binary cross entropy criterion for comparisons.

One goal is to minimize the error and gradient between the real segmented image and a unit matrix of 1 (UM). Another goal is to minimize the error and gradient between the fake segmented image and a null matrix of 0 (NM). The results are then used, via a weight optimizer (GW for G and DW for D), to update the weights. The procedure is repeated until the desired number of epochs (iterations) is reached.

G is effectively used only in the learning phase to establish the weights of D. Further, the role of D is to decide whether there is a real image or a fake image and, especially, to provide the decision probability. Due to sigmoid function (4), D provides a value between [0,1] that is the probability that a mask is a real one and this is the probability that the tested patch belongs to a class. cGAN is referred as primary classifier PC2 in the global classifier structure. The learning images (IL) come from our dataset with three classes: flood, vegetation, and rest.

The following technologies were used to test the GAN: Torch, a machine learning framework, to implement the neural network and Python (namely the NumPy, and PIL—Python Image Library—libraries), to evaluate the accuracy of cGAN results.

3.4. LENET

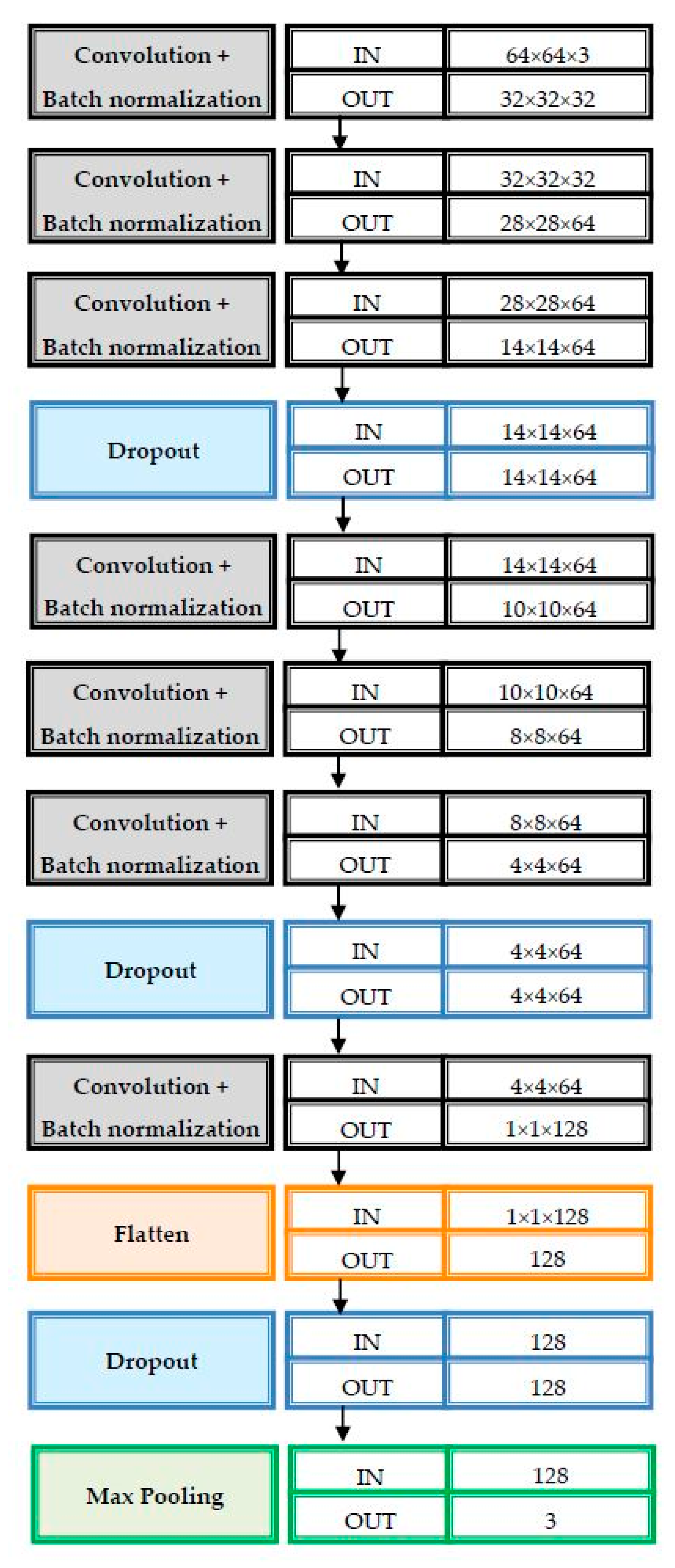

The LeNet-inspired network, containing five pairs of one convolutional layer followed by one max pooling layer, was created as in

Figure 7 [

39]. For simplicity, the number of parameters from one convolution to another was always doubled. In addition, the network contained one flattening layer and seven fully connected layers (dense). Although LeNet is considered effective in recognizing handwritten characters, and the modified alternative has been used successfully in segmenting regions of interest in aerial imagery [

39]. In the experiments of the proposed application it was proved that it could intervene in a complementary way as a consensus agent of the global system.

The cost function used is categorical cross-entropy (6), where N is the number of patches, C is the number of classes,

is the prediction, and

is the correct element that is considered as the probability that the patch

i belongs to the class

j. In the case of a decision, a patch is considered as belonging to the class with the highest probability. LeNet is considered as primary classifier PC

3 in the proposed global system.

3.5. ALEXNET

AlexNet is considered as primary classifier PC

4 in the proposed global system. It was chosen because it sometimes reacted complementarily to the other networks in the field of false positive or false negative areas, thereby contributing to the improvement of the overall classifier performance. The proposed AlexNet classifier, inspired from [

33] is presented in

Figure 8. This deep CNN has the ability of fast network training and the capability of reducing overfitting due to dropout layers.

The activation function, used at the output, was Softmax, which ensured the probability of the image (patch) being part of one of the three classes: F, V, and R. In order to increase the image number for the training phase we used the data augmentation by rotation (90°, 180°, 270°).

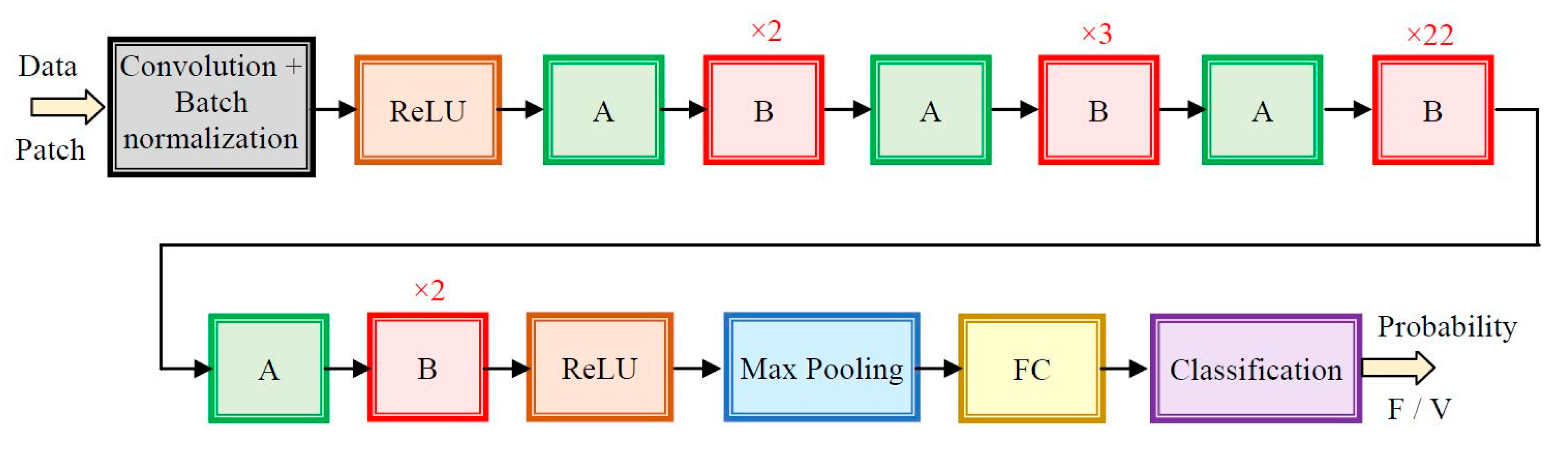

3.6. RESNET

ResNet is an ultra-deep feedforward network with residual connections, designed for large scale image processing. It can have different numbers of layers: 34, 50 (the most popular), 101 (our choice), etc. ResNet resolved the gradient vanishing problem and had a good position in image classification top-5 error.

The ResNet (residual net) architecture, used as primary NN classifier PC

5, is presented in

Figure 9. This deeper network has one of the best performances on object recognition accuracy. ResNet is composed from building blocks (modules), marked by A and B in

Figure 9, with the same scheme of short (skip) connections. The shortcuts are used to keep the previous module outputs from possible inappropriate transformations. The blocks are named residual units [

34] and are based on the residual function

F (7), (8):

where

is the block input,

is the block output,

is the set of weights associated with the

n block, and f is ReLU.

A pipeline of repetitive modules A and B is described in detail in

Figure 10a and

Figure 10b respectively.

4. System Implementation

4.1. System Architecture

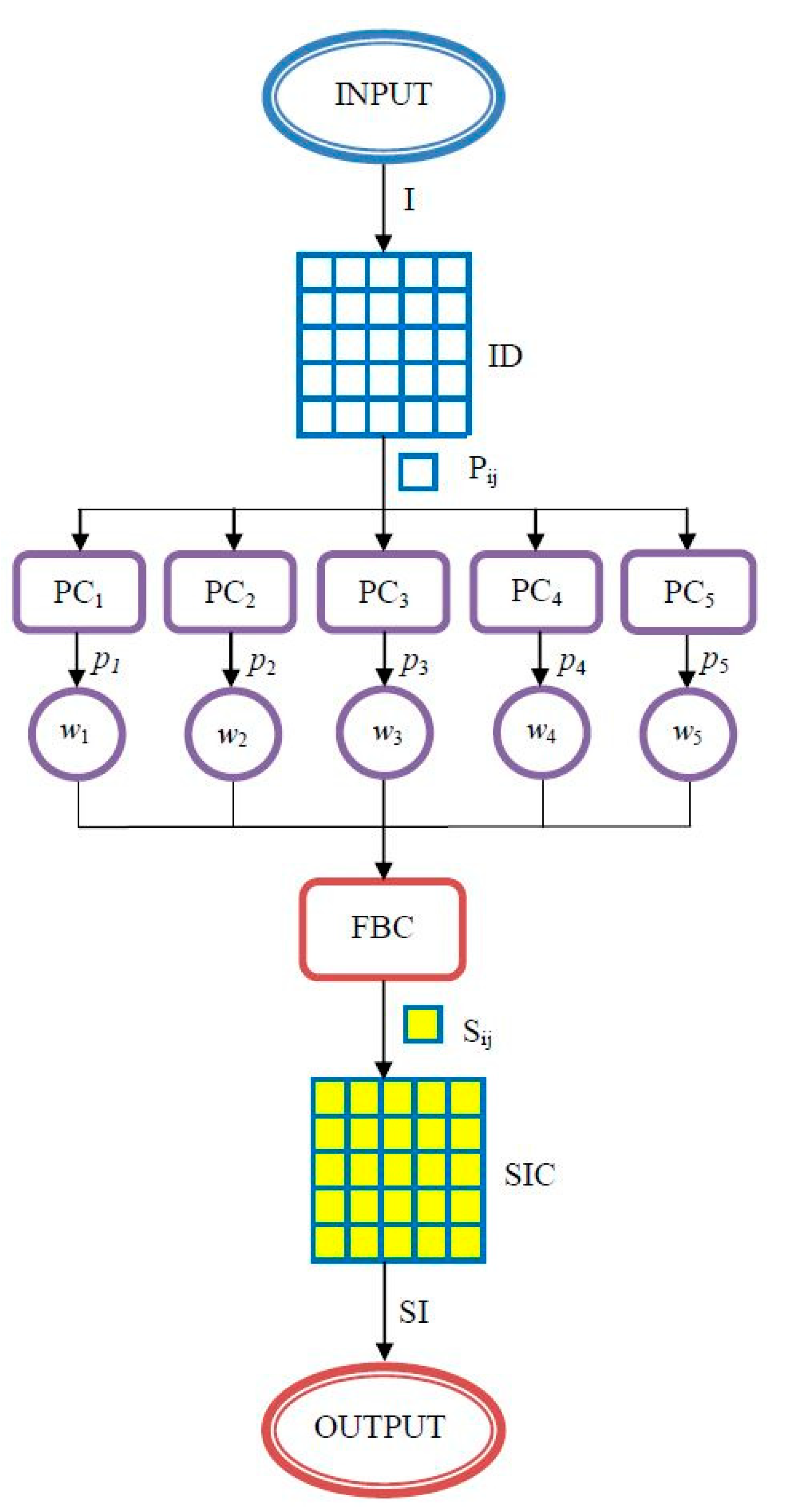

As previously mentioned, the proposed system contains five primary classifiers of the deep neural network type (PCi, i = 1,2,…,5), that have two contributions each: the weights (wi, i = 1, 2,…, 5), established in the validation phase, and the probabilities (pi, i = 1, 2,…, 5), provided at each classification/segmentation operation (operating phase).

A PC weight is expressed as its accuracy

ACC (9) computed from parameters of confusion matrix (

TP,

TN,

FP, and

FN are true positive, true negative, false positive, and false negative cases, respectively):

A score

Sj (

j =

F, V, R) is calculated for each class (

F,

V, and

R) as can be seen in Equations (10), (11), and (12), respectively. These Equations are convolutional laws. The decision is made by the aid of a decision score (

DS), and the class corresponds to the index obtained by maximum

DS selection (13).

The main operating steps of the system are as follows and the flow chart is presented in

Figure 11:

1. The image is decomposed into patches of fixed size (64 × 64 pixels).

2. A patch is passed in parallel through neural networks to obtain individual classification probabilities.

3. The probabilities are merged by the convolutional law that characterizes the system, and the final decision of belonging to one of the classes F, V, R is taken.

4. The patch is marked according to the respective class.

5. The patch is reassembled into an image of the same size as the original image.

6. Return to step 2 until the patches in the original image are finished.

7. The segmented image results.

8. Additionally, the counting of patches from each class is done in order to evaluate the extent of the specific flood and vegetation areas.

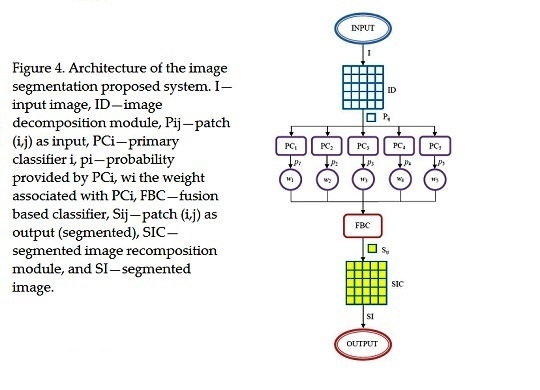

The architecture of the proposed system, based on a decision fusion, expressed by the previous Equations (10)–(13) is presented in

Figure 12. The system contains five classifiers, experimentally chosen, based on the individual accuracy evaluated for each classification task and detailed in the next section.

The meanings of the notations in

Figure 12 are the following: I—image to be segmented (input), ID—image decomposition in patches, P

i,j—patch of I on (

i,j) position, PC

k—primary classifier (PC

1—YOLO, PC

2—GAN, PC

3—LeNet, PC

4—AlexNet, and PC

5—ResNet),

pk—probability of P

i,j classification by PC

k, w

k—weight according to primary classifier PC

k, FBC—fusion based classifier, which indicates the patch class and provides the marked patch, S

i,j—patch classified, SIC—segmented image re-composition from classified patches, and SI—segmented image (output).

As can be seen from the previous Equations and

Figure 12, a new convolutional layer is made by the FBC module with the weights

wi,

(fixed after validation phase). The inputs are the probabilities

pi,

, provided by the primary classifiers, and the output is the decision based on Equations (10)–(13).

The execution time differs from a network to network and of the system implementation. A reduced time was obtained on GPU (graphics processing unit) implementation. The execution time for learning was about 15 h, and the time for segmentation was about 0.2 s for an image. The system architecture used for experimental results consisted of the following: Intel Core I7 CPU, 4th generation, 16 GB RAM, NVIDIA GeForce 770 M (Kepler architecture), 2 GB VRAM, Windows 10 operating system, Microsoft Visual Studio 2013.

4.2. System Tuning: Learning, Validation, and Weight Detection

For the application envisaged in the paper, the system used three phases: learning, validation, and testing (actual operation). First, the learning phase was separately performed for each primary classifier, directly or by transfer learning, to obtain the best performances in terms of time and accuracy. Next, in a similar manner, in the validation phase the attached weight was obtained for each classifier. Finally, the images were processed by the global system presented in

Figure 12.

From the images cropped from orthophotoplan, 4500 patches were selected for learning (1500 flood patches, 1500 vegetation, and 1500 from the rest). Similarly, 1500 patches were selected for validation (500 from each class). As mentioned above, the weight associated with the primary classifiers were established in the validation stage. Examples of such patches are presented in

Figure 13.

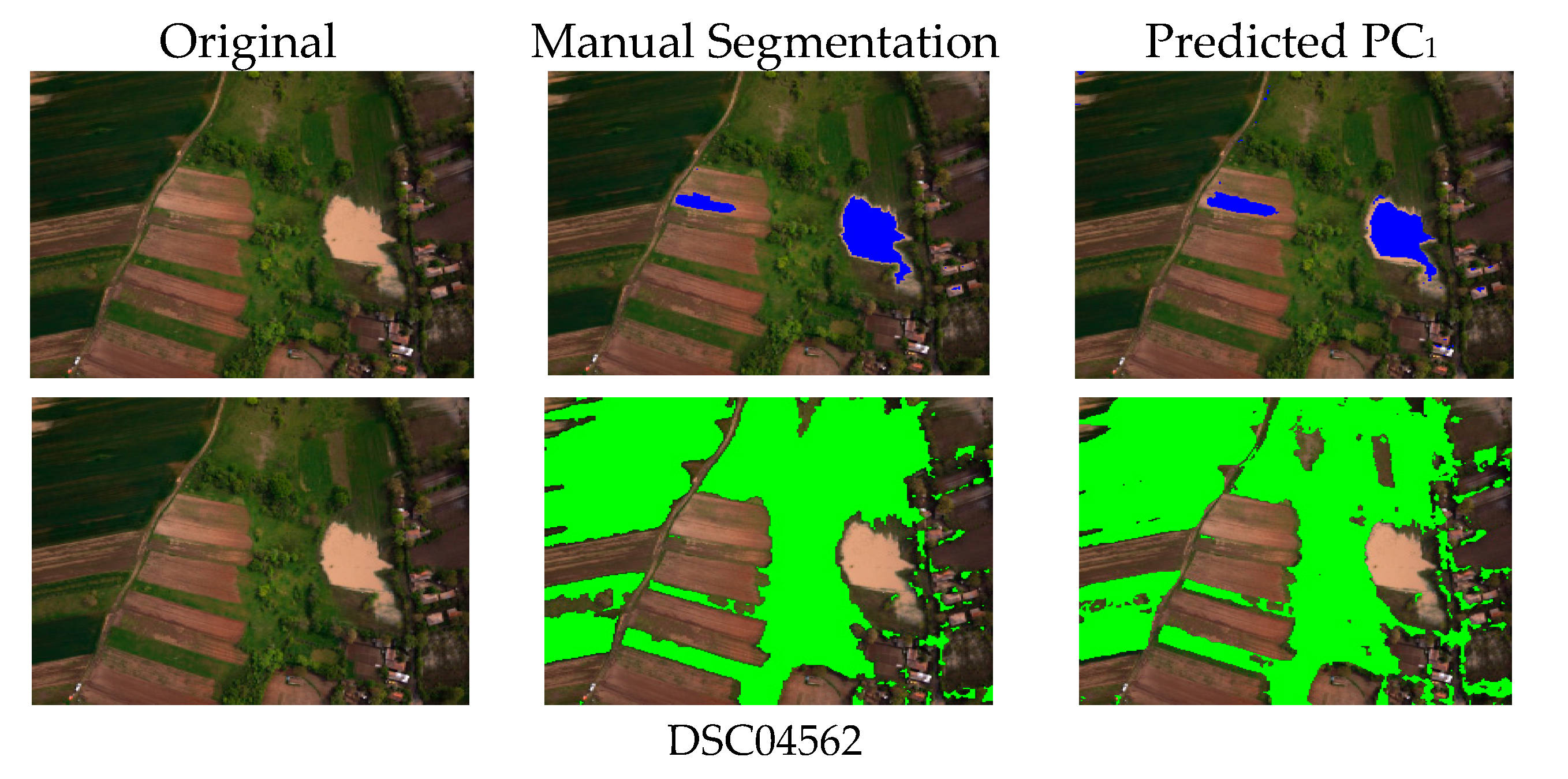

For simplicity, we considered that the patches selected for training and validation contained pixels from a single type of region (F, V, or R). Since the convolution operation is not independent of changes of rotation and mirroring, they could be applied to simulate new cases for the network. Thus, on the patches obtained previously, a 90° rotation was applied, and then a mirror was used to increase the image number four times (18,000 for learning and 6000 for validation). Both learning and validation were performed separately for the three types of regions (F, V, or R). Examples of flood and vegetation segmentation based on YOLO (PC

1), GAN (PC

2), LeNet (PC

3), AlexNet (PC

4), and ResNet (PC

5) are given in

Figure 14 (our dataset). For comparison, the original image and manual segmentation versus predicted segmentation images are presented. As can be seen, errors of segmentation were presented at the edges because of the mixed regions.

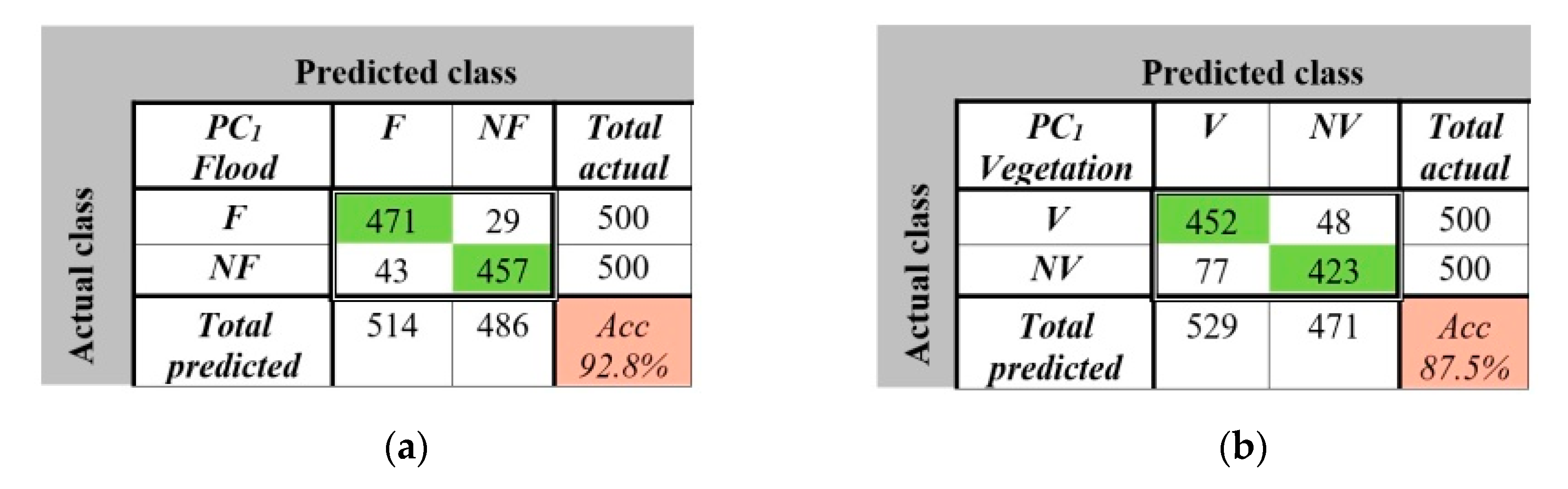

For each of the five neural networks and of the three classes, the confusion matrices were calculated (examples in

Figure 15 are given for YOLO, F, and V classes). Based on the confusion matrices, the performance parameters

TP, TN, FP, FN, and

ACC from (9) were evaluated.

ACC for flood (92.8%) was better than the

ACC for vegetation (87.5%), because, generally, a flood patch is more uniform than a vegetation patch. As a result, the weights (

Table 3) were evaluated (by two digit approximation) for the primary classifiers PC

1, PC

2, PC

3, PC

4, and PC

5, and, also, for each RoI (F, V, and R). To this end the table presents the intermediate parameters (

TP,

TN,

FP, and

FN) to calculate

ACC and, finally, the weights. All the parameters were indexed by the class label (F, V, and R). It can be seen the accuracy was dependent on PCs and classes. Thus, the flood accuracy

ACC-F was greater than other accuracies (

ACC-V, vegetation, and

ACC-R, rest) for all classifiers due to the reason mentioned above.

The meaning of the notations in

Table 3 is as follows: NN-the neural network used, TP, TN, FP and FN are the true positive cases, true negative, false positive and false negative, respectively, ACC-accuracy, w-associated weight. They are associated with classes: F-flood, V-vegetation and R-rest. Thus, TP-F means the true positive in terms of flood detection, ACC-accuracy in flood detection, w

F-weight for flood detection, etc.

5. Experimental Results

After learning and validation of the individual classifiers, the proposed system was tested in a real environment. Like in the previous phases, the images were obtained from a photogrammetry flight over the same rural area in Romania after a moderate flood in order to accurately evaluate the damages in agriculture. Thus, our own dataset was obtained.

In the operational phase, the RoI segmentation was performed by the global system proposed in

Figure 12. First, the image extracted from the orthophotoplan was decomposed in patches according to the methodology described in

Section 3. The patch classification and segmentation were performed based on Equations (10–13), with the weights obtained in the validation phase (

Table 3). For each patch, a primary classifier (

Figure 12) gives the probability to belong to a predicted class (see

pij from

Table 4). Some examples of patch classification and segmentation are given in

Table 4. The decision score S is calculated as in Equation (13). The resulted patches are colored with blue (F), green (V), or are maintained as the initial (R). The real patches and the segmented patches are labeled by the class name. For correct segmentation, in

Table 4 on the same raw, the original and segmented images pair has the same label (F-F; V-V, or R-R). As can be seen, in the last row of

Table 4, there is a false segmentation decision (R-F).

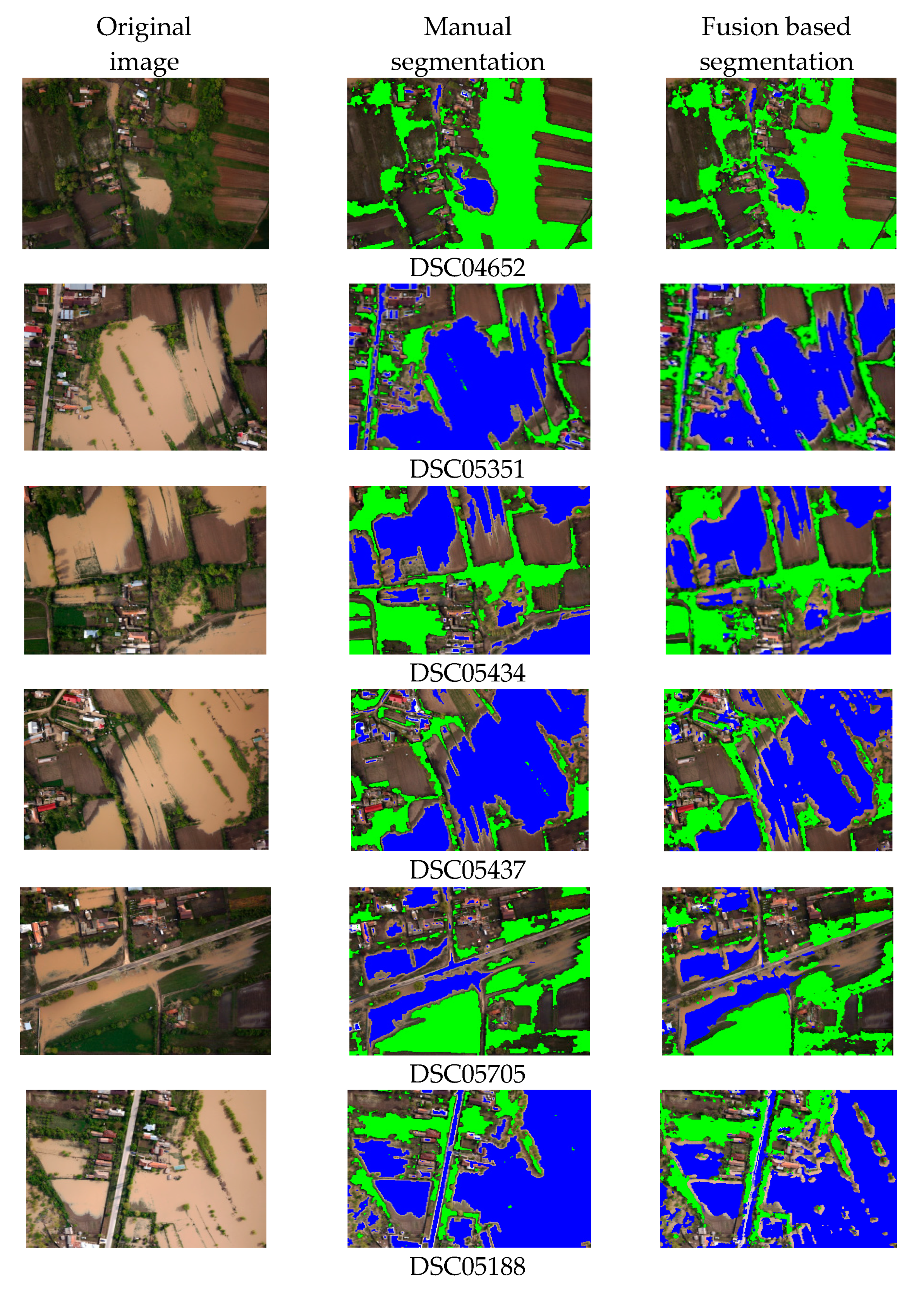

The decision score is also approximated by three digits. In this operational phase, the primary classifiers provide only the probabilities as the evaluated patch belongs to a predicted class and the fusion based classifier evaluates the decision score for the final classification. After image re-composition, the segmented images are shown as in

Figure 16. The examples in

Table 4 show that most patches were correctly classified by all PCs except the last two. The penultimate patch was misclassified by four PCs and the overall result was a misclassification. The last patch was misclassified by two classifiers (PC1 and PC4), but the global classifier result was correct.

In order to evaluate the flood extension and the remaining vegetation, from each analyzed image, the percentage of flood area (FA) and vegetation area (VA) were calculated in manual (MS) and automatic (AS) segmentation cases (

Table 5). Finally, the percentage occupancy of flood and vegetation, from the total investigated area, was evaluated as the average of image occupancy (the last row—Total—in

Table 5). It can see that, generally, the flood segmentation was more accurate than the vegetation segmentation. Compared with the manual segmentation, the flood evaluation differed by 0.53%, and the vegetation evaluation differed by 0.84%.

6. Discussion

The proposed system based on decision fusion combines two sets of information: the PC weights obtained in a distinct validation phase, and the probabilities obtained in the testing (operational) phase. The primary neural networks were first experimentally tested. Accuracy differs from one network to another and from one type of class to another. Thus, in

Table 6 are presented the accuracies of individual networks (YOLO, GAN, LeNet, AlexNet, and ResNet) and of the global system for each class (F, V, and R) and the mean. It can be observed that for individual networks, the best results were obtained for ResNet and flood.

In case of the experiments described in this paper, the accuracy was lower for the YOLO network, for the patch size of 64 × 64 pixels (86.7%). The authors analyzed the performance of the YOLO network on different patch sizes for the same images and found the following: for the 128 × 128 pixel size of the patch, the accuracy was 89% [

39], and for 256 × 256 pixel size the accuracy was 91.2%. This is explained by the mechanism of network operation within the convolution to preserve the size of the patch. The proposed system is adaptable for different patch sizes. In cases of more extensive floods, where no small portions of water mixed with vegetation appear, the system operates with larger patch sizes, and the YOLO network will have a better accuracy (respectively, a higher weight in the global decision).

On the other hand, the purpose of combining several networks was to reduce the individual number of false positive and false negative decisions. It was found that in some cases the decision of the YOLO network leads to a global correct decision. This aspect is also a motivation for choosing several neural networks for the global classification system.

Although the fusion based on a majority vote would have been simpler, we chose a fusion decision that takes into account a more complex criterion based on two elements: a) the "subjectivism" of each network, expressed by the probability of classification, and b) the rigid weights of these networks, previously established (in the validation phase). This is the new convolutional layer of the proposed system that leads to a more objective classification criterion than a simple vote criterion.

Why five networks and not more or less remains an open question. It is a matter of compromise, assumed by our experimental choice. A large number would lead to greater complexity, so a greater computational effort and time, while a small number would lead to lower accuracy.

The classification results were good (

Table 6); however, due to images that were difficult to interpret, erroneous results were also obtained. The main difficulties consist of factors such as (a) the parts of the ground that were wet or recently dried were possibly confused with flood (error R-F), (b) the vegetation that was uneven was possibly confused with class R (V-R error), and c) possible green areas (trees) covered the flooded area (V-F error), etc. One such example is presented in

Table 4, the last row; this is because it contains a surface of land, recently dried, similar with the flood (error R-F).

The obtained results were better than the individual neural networks (

Table 6) and better or similar than other works (

Table 7).

The papers in

Table 7 were mentioned in the Introduction or Related Work sections and refer to similar works as ours. Only paper [

39] addressed both flood and vegetation classification. The results obtained with different deep CNNs have less accuracy than our global system. The authors in [

1] used UAV images for vegetation segmentation, particularly different crops, based on hue color channel and corresponding histogram with different thresholds. The methods in

Table 7 were tested on our database for papers [

29] and [

39]. In [

1] the images are very similar to ours. The images in [

4] and [

13] are very different from our application (urban).

The authors in [

4] used a VGG-based fully convolutional network (FCN-16s) for flooded area extraction from UAV images on a new dataset. However, our data set is more complex and difficult to segmentation. Compared with traditional classifiers such as SVMs, the obtained results are more accurate. As in our study, the problem of floods hidden under trees remains unresolved.

Our previous work [

25] combines an LBP histogram with a CNN for flood segmentation, but the results are less accurate and the operating time is longer because for each patch the LBP histogram must be calculated.

The images that characterize the two main classes, flood and vegetation, may differ inside an orthophotoplan due to the characteristics of the soil (color and composition) that influence the flood color or the texture and color of the vegetation. There are also different features for different orthophotoplans depending on the season and location. Obviously, for larger areas there is the possibility of decreasing accuracy. We recommend a learning action at each application from as many different representative patches as possible.

The network learning was done from patches considered approximately uniform (containing only flood or vegetation). Due to this reason, a smaller segmented area was usually obtained (mixed areas being uncertain). Other characteristic areas, such as buildings and roads, are less common in agricultural regions. They were introduced to the "rest" class. Another study [

46] found that the roads (especially asphalted) cannot be confused with floods.

Compared to our previous works, [

29] and [

39], this paper introduces several neural networks, selected after performance analysis, in an integrative system (the global system) based on fusion of decisions (probabilities). This system can be considered as a network of convolutional networks, the unification layer being also based on a convolution law (10–12).

One of the weak points of our approach is the empirical choice of the neural networks that make up the global system (only based on experimental results). We have relied on our experience in recent years and on the literature. On the other hand, we have modified the well-known networks in order to obtain the best possible performances for our own database. The images used were very difficult to interpret because they contain areas at the boundary between flood and non-flood (for example, wetland and flood), and areas of vegetation are not uniform. On clearly differentiable regions of interest, the results are much better, but we wanted to demonstrate the effectiveness on real, difficult cases.

Neural image processing networks are constantly evolving, modernizing the old ones and appearing new ones. In this case, the question arises: how many and why are the networks involved in such a fusion based system? It is desired to develop a mathematical criterion, an objective, based on optimizing parameters such as time, processing cost, accuracy, etc., to take into account the specific application.

7. Conclusions

In this paper, we proposed an efficient solution for segmentation and evaluation of the flood and vegetation RoIs from aerial images. The system proposed combines in a new convolutional layer the outputs of five classifiers based on neural networks. The convolution is based on weights and probabilities and improves the accuracy of classification. This fusion of neural networks into a global classifier has the advantage of increasing the efficiency of segmentation demonstrated by the examples presented. Images tested and compared were from own database acquired with a UAV in a rural zone. Compared to other methods presented in the references, the accuracy of the method proposed increased for both flood and vegetation zones.

As feature work, we proposed the segmentation of more RoIs from UAV images using multispectral cameras. For more flexibility and adaptability to illumination and weather conditions we will also consider the radiometric calibration of images. We also want to create a bank of pre-trained neural networks that can be accessed and interconnected, depending on the application, to obtain the most efficient fusion classification system.

We want to expand the application for monitoring the evolution of vegetation, which means both the creation of vegetation patterns and the permanent adaptation to color and texture changes that take place during the year.