1. Introduction

Unmanned aerial vehicle (UAV) systems have gained the approval of the scientific community as tools for information acquisition and have become common in geospatial research and a wide range of applications [1]. The cost- and time-effectiveness of UAV systems, compared to traditional field surveys, is partially responsible for their increasing favor. An additional factor contributing to their popularity is that they can be equipped with several sensors, such as optical and hyperspectral cameras, light detection and ranging systems (LiDAR), synthetic aperture radars (SAR), inertial measurement units (IMU), and global positioning systems (GPS) [1,2,3,4].

Many disciplines benefit from these technologies, including forestry [5]. The application of UAVs in forest inventory and, more generally, in the extraction of the main forest parameters (e.g., forest stand density, crown widths, basal area, average diameter at breast height, height) is well established. The structural information of forest stands is vital for silviculture and forestry inventories. The accurate detection of tree crowns is necessary to estimate the dendrometric attributes of forest stands, such as the tree position, the stem diameter, the height, the crown extension, and the volume [6,7,8]. In addition, these forest parameters can be valuable ecological indicators, which determine, among others, carbon sequestration, shading, the risk of wind-breakage, and tree growth [9]. The determination of these parameters is performed at the individual tree level and requires information about single trees.

Thus far, many approaches have been proposed for individual tree detection (ITD) via remote sensing. Generally, they are based on digital elevation models (DEM) that can be generated from LiDAR acquisitions [7,10,11,12,13,14,15] or structure from motion (SfM) [5,9,11,16,17]. SfM uses optical images acquired from multiple points of view to recreate the three-dimensional geometry of an object [18,19]. The 3D model generation is carried out in incremental steps. First, the key-points are extracted from the images based on contrast and texture-related rules. The key-points are identified in all input images and then matched between different images [19,20]. Then, the bundle adjustment is performed and the sparse point cloud is usually scaled and georeferenced [21,22]. The final step consists of the densification of the point cloud through specific algorithms [23].

Regardless of the data source, some 2D ITD methodologies include the computation of the canopy height model (CHM) for the detection and delineation of tree crowns [5,24]. First, the local maxima of the CHM are computed to detect treetops [5,24], and then the crowns are delineated using image-processing and segmentation algorithms [10,13,15,25]. The most common technique for the delineation of crowns is watershed segmentation, using the local maxima as input seeds. Segmentation works on contiguous pixels that are grouped based on similar digital number (DN) values [4,13,15,26]; when the local maxima are identified, they are used as input seeds, or starting points, for the generation of the segments. Many other 2D ITD methodologies based on spectral information have been explored; unlike the CHM-based ones, these procedures mainly perform segmentation based on brightness levels [7,9,10,24,27,28]. They consider the brightest pixel in a neighborhood as the tree crown apex and identify the tree crown perimeters using dark-pixel and valley-following approaches. Most ITD techniques depend on CHM generation methods that may affect the accuracy of tree crown delineation [13,29]. The CHM is calculated as the difference between the digital surface model (DSM) and the digital terrain model (DTM). Thus, a good DTM is a fundamental prerequisite for the accurate characterization of the CHM [11].
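The two CHM-based steps described above (CHM = DSM − DTM, followed by a local maxima search for treetops) can be illustrated with a minimal Python sketch. It is not the implementation of any of the cited works: the function names, the 3 × 3 neighborhood, the clipping of negative heights to zero, and the `min_height` threshold are all assumptions chosen for illustration, and the rasters are toy values.

```python
def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM, cell by cell (assumption: negative heights clipped to 0)."""
    return [[max(s - t, 0.0) for s, t in zip(s_row, t_row)]
            for s_row, t_row in zip(dsm, dtm)]


def local_maxima(chm, min_height=2.0):
    """Return (row, col) of cells strictly higher than all their 3x3 neighbours.

    min_height is a hypothetical threshold that discards low vegetation.
    """
    rows, cols = len(chm), len(chm[0])
    tops = []
    for r in range(rows):
        for c in range(cols):
            h = chm[r][c]
            if h < min_height:
                continue
            neighbours = [chm[i][j]
                          for i in range(max(r - 1, 0), min(r + 2, rows))
                          for j in range(max(c - 1, 0), min(c + 2, cols))
                          if (i, j) != (r, c)]
            if all(h > n for n in neighbours):
                tops.append((r, c))
    return tops


# Toy elevation rasters in metres (flat terrain at 100 m, two trees).
dsm = [[101.0, 102.0, 101.0, 100.5],
       [102.0, 110.0, 102.0, 101.0],
       [101.0, 102.0, 103.0, 108.0],
       [100.0, 101.0, 102.0, 103.0]]
dtm = [[100.0] * 4 for _ in range(4)]

chm = canopy_height_model(dsm, dtm)
treetops = local_maxima(chm)
print(treetops)  # candidate seeds for a subsequent watershed segmentation
```

In this sketch any error in the DTM propagates directly into the CHM and therefore into the detected maxima, which is why the quality of the DTM matters so much for CHM-based ITD.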

When the DTM of a forest stand is interpolated from LiDAR or photogrammetric point clouds, its accuracy is strongly influenced by the density of the forest stand, that is, by the number of ground points identified by the sensor [11]. Indeed, CHM-based methods for ITD assume that local maxima analysis detects treetops. However, in structurally complex forest stands and steep slope areas, the results should be carefully interpreted [9]. In this framework, LiDAR data are much more accurate [5] than SfM-based approaches, since LiDAR can penetrate tree crowns and obtain terrain information by reaching the ground [30]. As a result of this, and of the commercialization of lightweight sensors that can be mounted on UAVs, the most recent applications of ITD methodologies work on 3D datasets acquired with aerial laser scanners (ALS) [5,12,15]. Although they generate more accurate point clouds, LiDAR technologies are more expensive than optical ones [24,30]. Even if some countries, such as Norway, Sweden, and Canada, use LiDAR technology for national forest inventories, several annual acquisitions at local and regional scales are generally cost-prohibitive [30]. Therefore, many countries are not in an economic position to rely on LiDAR technologies. According to White et al. [29], SfM-derived data for forestry inventories are generally more cost-effective than LiDAR data and can cost about one-half to one-third as much [29]. Moreover, LiDAR sensors are heavier than multispectral cameras and need to be mounted on UAVs with higher payload capacities. Besides being more expensive, larger UAVs with heavy payloads may require additional training and licensing (most national UAV licensing systems are based on maximum take-off weight, MTOW, categories). In addition, LiDAR requires large data storage capacity [24] and powerful computational technology to obtain accurate results [5]. LiDAR data do not provide users with spectral information, although some models have a camera integrated into the acquisition system.

Table 1 provides an analysis of the advantages and disadvantages of the optical and LiDAR systems focused on UAV data acquisition for ITD.

The ITD approaches based on UAV aerial images promise to be a cost-effective and valid alternative to LiDAR. They provide users with data of good accuracy while using few resources. Several studies have been carried out on the accuracy of ITD from UAV-derived information. Some methods identify the tree crowns from the brightness values of visible and infrared images [27,28], while some more recent ones work on multiscale filtering, segmentation of imagery, and mathematical morphology algorithms [8] to define tree crowns [16,25,32]. These methods usually have complex segmentation workflows and require the application of image filters, such as Laplacian filters, Gaussian filters, and mathematical morphology algorithms. Complex segmentation processes are necessary because UAV optical imagery of forested areas is frequently affected by shadows, slope-derived distortions, and low contrast [33,34]. These aspects, which are enhanced by the high spectral variability of very high resolution (VHR) imagery, make segmentation difficult. VHR images represent a challenge for segmentation and classification because, unlike in lower resolution images, single pixels no longer capture the characteristics of the classification targets [26]. Image-based methodologies for ITD, even if efficient, usually require several steps; therefore, high computational time is needed. This is one of the reasons why the image-based processes for ITD have been partially superseded by CHM-based methods. Nevertheless, when the CHM is not accurate enough or is too expensive to obtain, such as in structurally complex stands, image processing methods that do not require a CHM can be a valuable alternative to CHM-based methods. Indeed, image-based segmentation techniques can provide good accuracy results, especially when a textural analysis is applied [35,36]. A common methodology of texture analysis for segmentation (and classification) is based on the gray level co-occurrence matrix (GLCM) according to the Haralick measures [37]. For the images of complex structures, some researchers proposed the use of segmentation algorithms based on fractal and multifractal analyses [38,39,40]. It is worthwhile to remember here that a fractal is a rough or fragmented geometrical object that can be subdivided into parts, each of which is (at least approximately) a reduced-size copy of the whole object [41]. Fractals are described by a single quantitative number, the fractal dimension, for the computation of which various methods have been proposed (see, e.g., [42]); generally, it can be treated as a measure of the complexity and self-similarity of the considered object.
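To make the notion of fractal dimension more concrete, the following Python sketch estimates it with box counting, which is only one of the many available methods and is not necessarily the one discussed in [42]. The function names, the box sizes, and the representation of a binary image as a set of (row, column) pixels are assumptions made for illustration.

```python
import math


def least_squares_slope(xs, ys):
    """Slope of the ordinary least-squares line through the points (xs, ys)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def box_counting_dimension(pixels, sizes=(1, 2, 4, 8)):
    """Estimate the fractal dimension of a set of (row, col) pixels.

    For each box side s, count the boxes N(s) that contain at least one pixel;
    the dimension is the slope of log N(s) against log(1/s).
    """
    log_inv_size, log_count = [], []
    for s in sizes:
        occupied = {(r // s, c // s) for r, c in pixels}
        log_inv_size.append(math.log(1.0 / s))
        log_count.append(math.log(len(occupied)))
    return least_squares_slope(log_inv_size, log_count)


# Two non-fractal sanity checks on a 16 x 16 grid.
square = {(r, c) for r in range(16) for c in range(16)}  # filled area
line = {(r, 0) for r in range(16)}                       # straight segment

print(box_counting_dimension(square))
print(box_counting_dimension(line))
```

A filled square and a straight segment yield estimates close to 2 and 1, respectively; rough, self-similar shapes fall between such integer values, which is what makes the fractal dimension usable as a complexity descriptor.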

Fractal dimension has been used together with other features for image texture description and segmentation, e.g., by Keller et al. [43]. The fractal dimension has also been utilized in the forestry field. Zeide and Pfeifer showed that the fractal dimension of tree crowns can be useful in crown classification and in the analysis of foliage distribution within a single tree crown [45]. Similarly, Mandelbrot suggested applying fractals to modeling trees and analyzing their structure [41]. A comprehensive review of the application of fractal description in forest science can be found in Lorimer et al. [44]. Multifractal analysis is an extension of fractal theory and is based on the assumption that a multifractal is a set of nontrivially intertwined fractals. Hence, the description of the inner structure of a multifractal demands a set of parameters that permit a more detailed characterization both locally and globally.

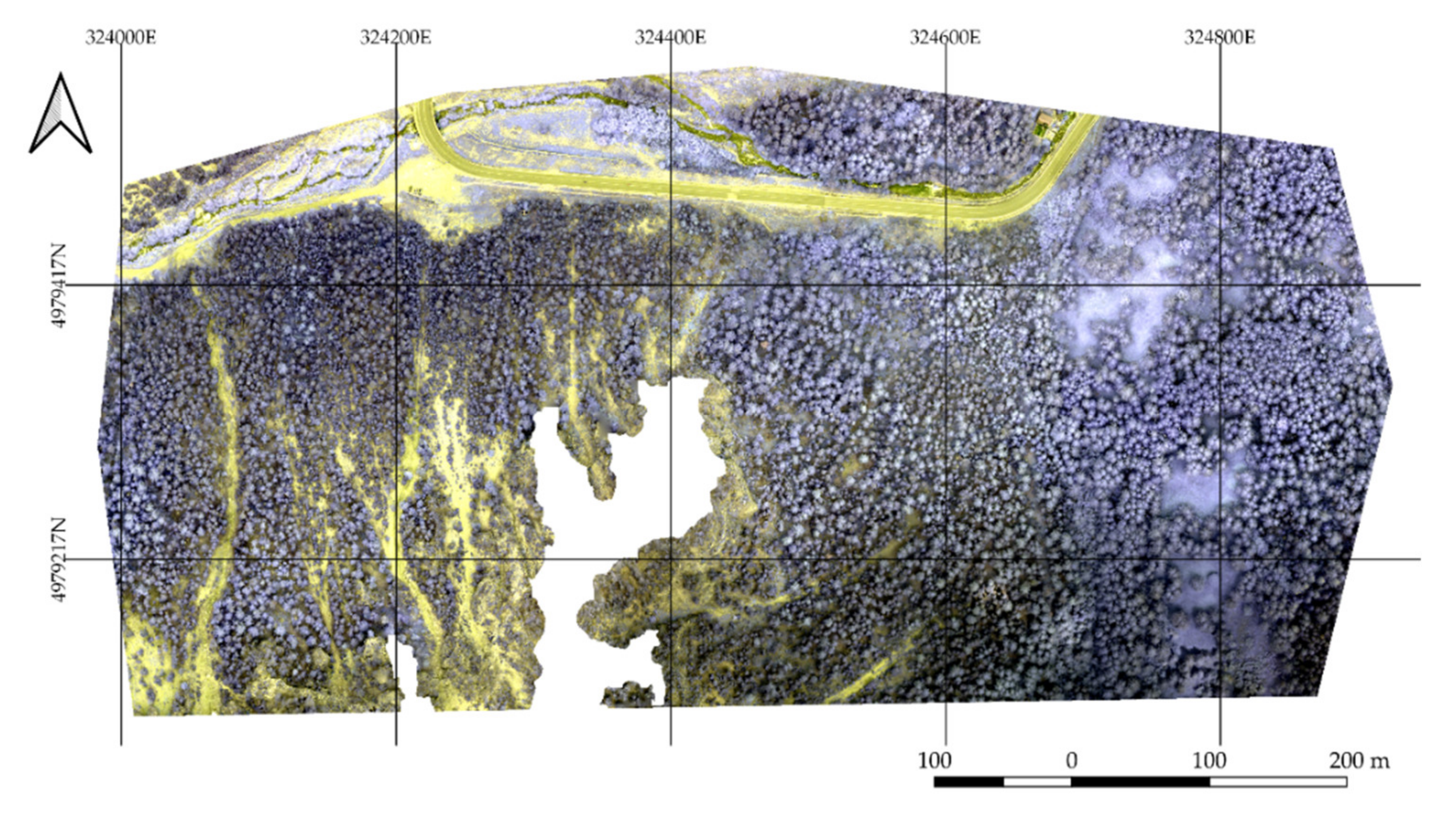

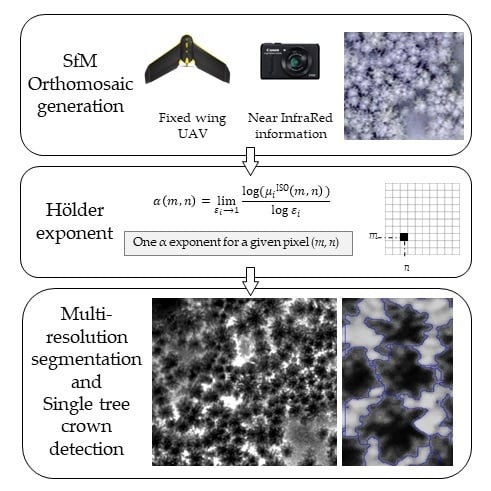

At the beginning of the multifractal image analysis, a measure is assigned to the image and, in the next steps, the regularity of this measure is analyzed as the information on the image’s complexity/inhomogeneity. It is worthwhile to underline the fact that various measures defined based on pixel intensities can be applied [38,40,46]. The local (pointwise) degree of regularity of a given measure is described by the so-called Hölder exponent values, which strongly depend on the actual position on the image and allow researchers to identify points that differ from the background [40]. On the other hand, the distribution of Hölder exponents on the image is summarized in the form of the so-called multifractal spectrum, treated as the global characteristic of a measure’s regularity (image complexity/inhomogeneity) [38,40]. Global multifractal characteristics have already been applied to VHR optical data [47,48], mostly to distinguish between different land cover types. They have also been applied to the study of forest cover, such as in Danila et al.’s work [49], and to the segmentation of images of plant diseases [50]. In contrast, the local multifractal description using Hölder exponents has rarely been used, mainly to perform segmentation of medical data [38,40] or for change detection in satellite images [38,51]. Nevertheless, the results obtained in papers [38,40,51] suggest the usefulness of the Hölder exponent in the context of image content description. In particular, the authors of these studies underlined the fuller description of complex shapes, heterogeneous measures, and structures typical for satellite remote sensing. It is worth mentioning that, to the best of our knowledge, the Hölder exponent has not yet been determined for VHR UAV-derived imagery or in the context of forest analysis. Therefore, in this study, we focus on the determination of the local Hölder exponent connected with multifractal theory and use it for the segmentation of single tree crowns from VHR UAV-derived imagery. More precisely, we propose to apply this quantitative descriptor as the unique input for the efficient identification of single tree crowns using only a cycle of multiresolution segmentation algorithms.
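A minimal Python sketch of a pointwise Hölder exponent estimate may help to clarify the idea. It is an illustration under stated assumptions and not the implementation used in this study: the measure is assumed to be the sum of pixel intensities in a square window (one of the several possible measures mentioned above), the window sides 1, 3, and 5 pixels and the function name `holder_exponent` are hypothetical, and the exponent is taken as the least-squares slope of the log measure against the log window side.

```python
import math


def holder_exponent(image, row, col, radii=(0, 1, 2)):
    """Estimate the local Hoelder exponent at (row, col).

    Assumption: the measure of a window is the sum of its (non-negative)
    intensities. Windows are clipped at the image border, so estimates near
    the border are less reliable.
    """
    rows, cols = len(image), len(image[0])
    log_side, log_measure = [], []
    for k in radii:
        total = sum(image[i][j]
                    for i in range(max(row - k, 0), min(row + k + 1, rows))
                    for j in range(max(col - k, 0), min(col + k + 1, cols)))
        log_side.append(math.log(2 * k + 1))
        # Guard against log(0) on completely dark windows.
        log_measure.append(math.log(max(total, 1e-12)))
    n = len(log_side)
    mean_x, mean_y = sum(log_side) / n, sum(log_measure) / n
    num = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(log_side, log_measure))
    den = sum((x - mean_x) ** 2 for x in log_side)
    return num / den


# Homogeneous patch: the measure grows with the window area.
flat = [[1.0] * 5 for _ in range(5)]
# Isolated bright pixel on a dark background: the measure does not grow.
spike = [[0.0] * 5 for _ in range(5)]
spike[2][2] = 1.0

print(holder_exponent(flat, 2, 2))
print(holder_exponent(spike, 2, 2))
```

With this measure, a homogeneous neighborhood gives an exponent close to 2, while an isolated bright point gives a value close to 0; intermediate and higher values mark pixels whose surroundings differ from them in other ways, which is the property that makes the exponent a candidate input layer for segmentation.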

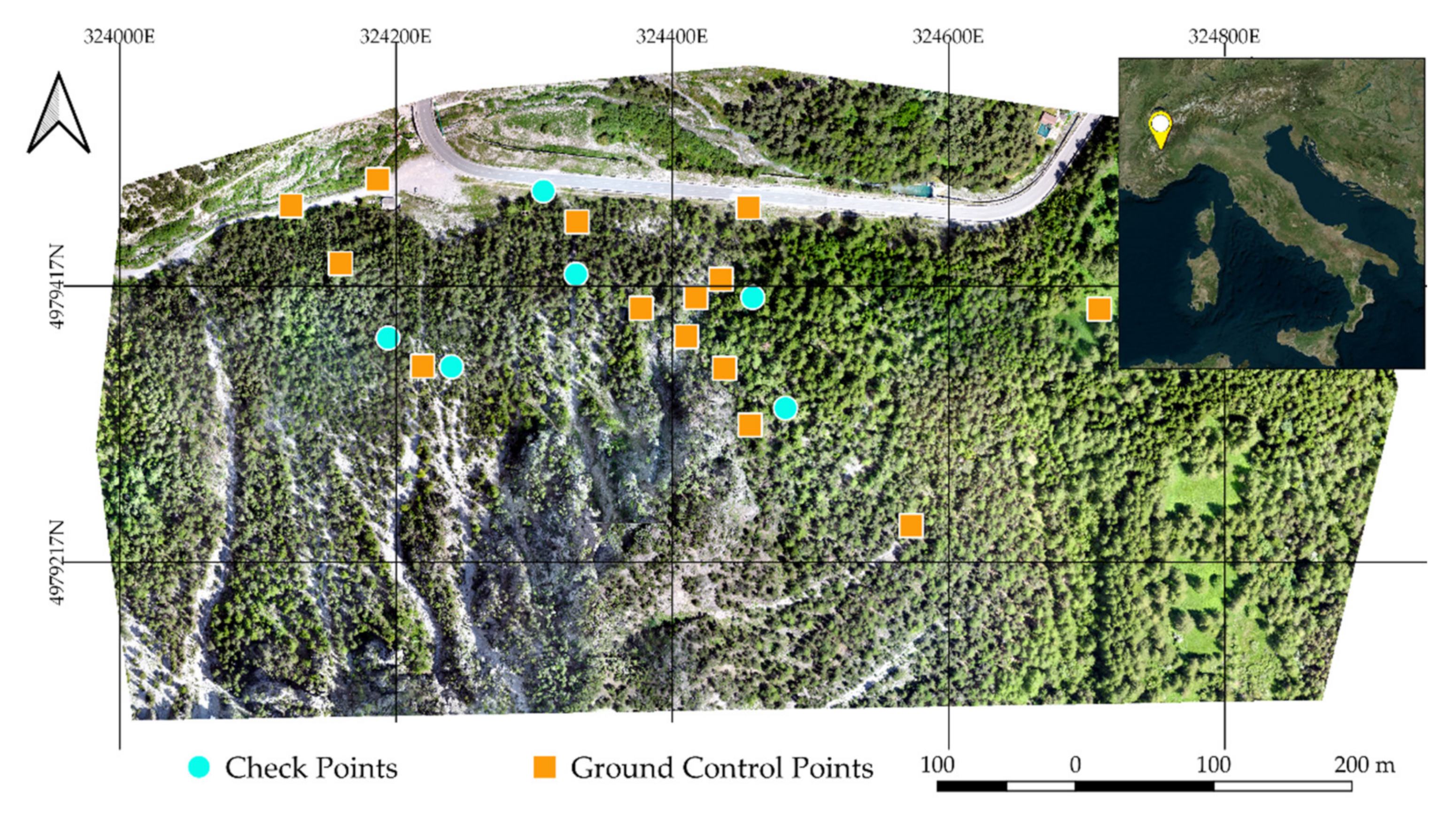

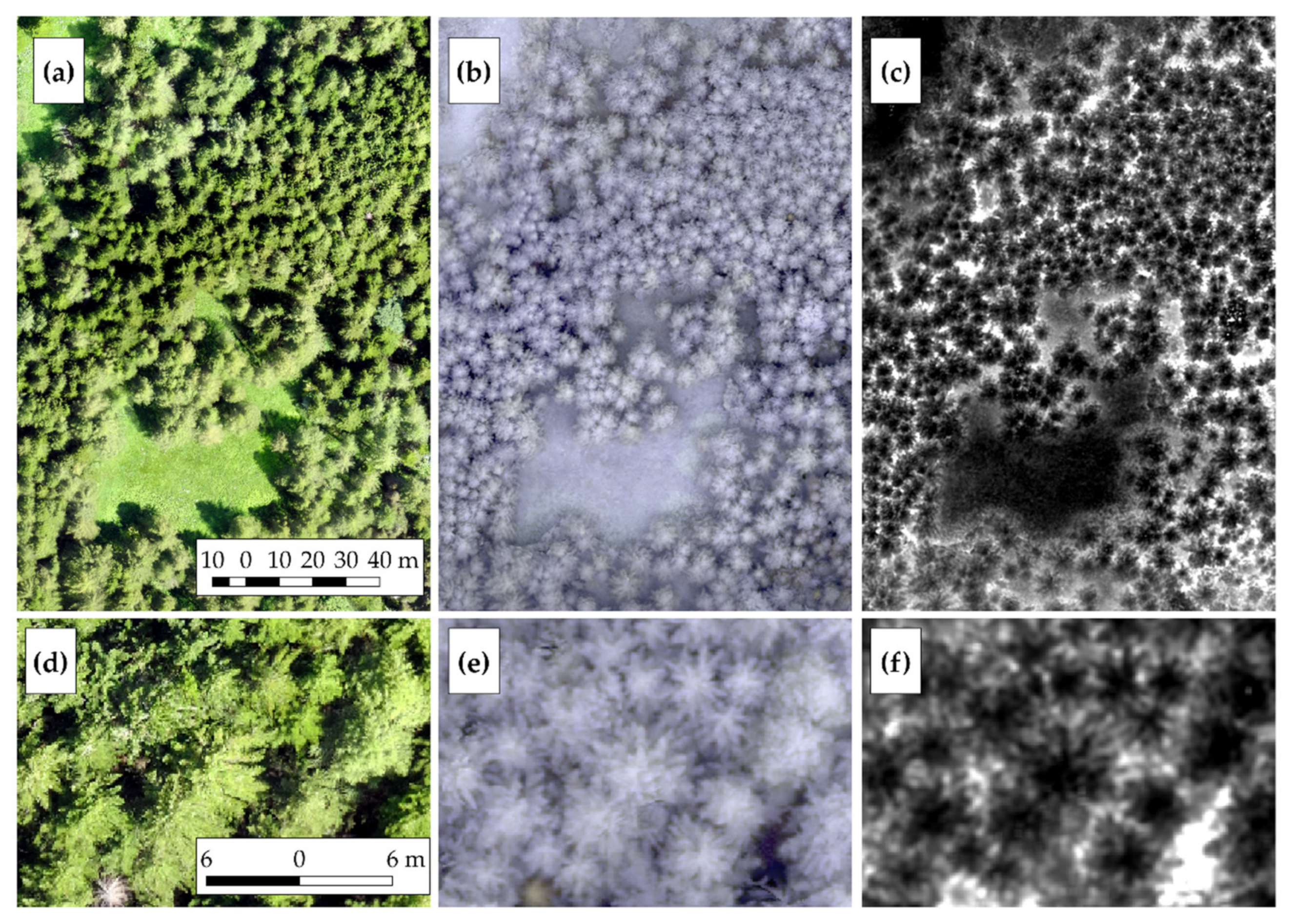

Study Site

This study was conducted in the North-Western Alps in a forest stand located in Cesana Torinese (TO) (44°56′46.1″ N 6°46′29.5″ E). The test site is a coniferous forest (Figure 1) dominated by silver fir (Abies alba Mill.), Norway spruce (Picea abies (L.) H. Karsten), and European larch (Larix decidua Mill.). Scots pines (Pinus sylvestris L.) and Swiss pines (Pinus cembra L.) are sporadically present. The study area extends over approximately 38 hectares. The forest stand is in a steeply sloped mountainous area with north-facing exposure. The steep mountainsides make the area particularly prone to rockfall and avalanches.

4. Discussion

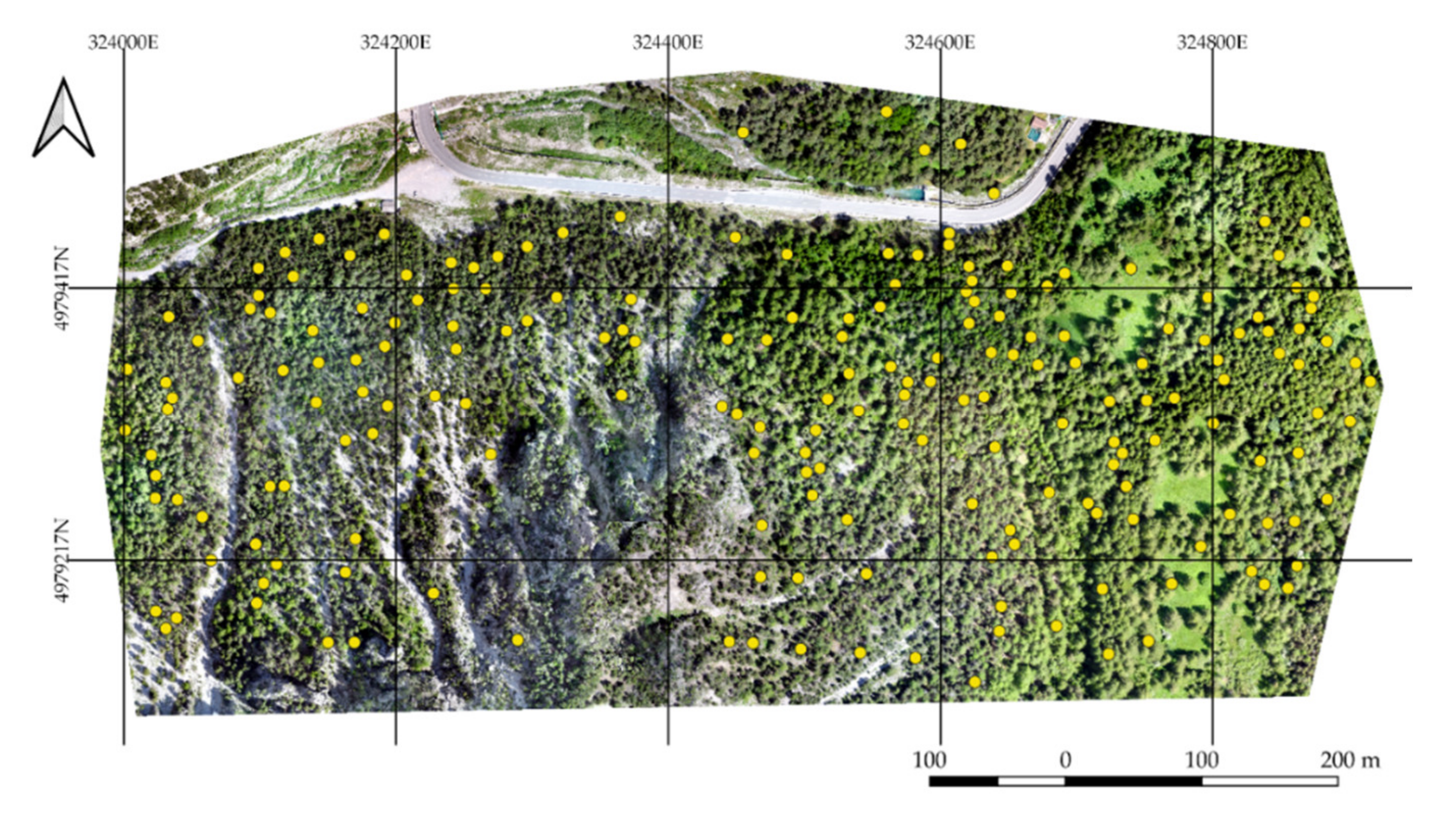

The results of this very first application of multifractal analysis to UAV imagery for the identification of single tree crowns are promising. In a relatively short time (around 13 min), it was possible to analyze 38 hectares of forest using one input layer only. The Hölder exponent analysis results in a clear image of the single tree crowns.

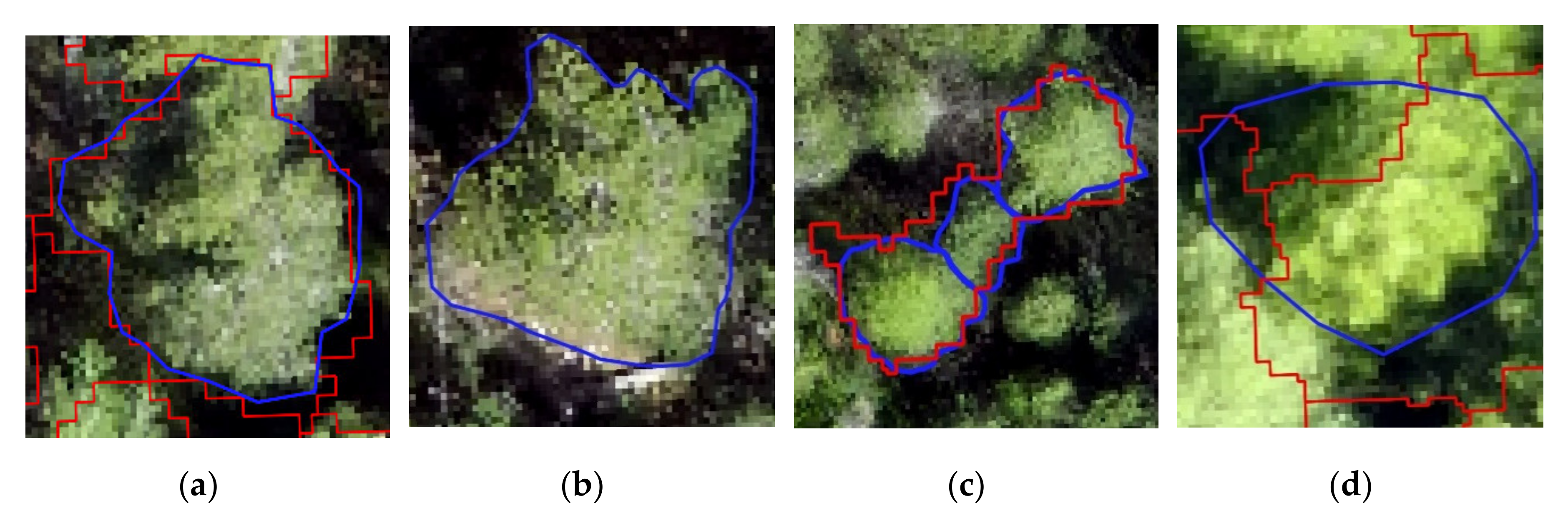

The pixels corresponding to the borders of crowns present higher values of the Hölder exponent. This most probably led to the underestimation of the size of the crowns after the contrast split segmentation. Nevertheless, growing the segmented objects by three pixels and smoothing them allowed us to limit such errors on most of the crowns.

The assessment of the classification reveals promising results. The visual evaluation suggests an F1 score of more than 73%, which is in accordance with similar studies. Indeed, the very recent application of Qiu et al. [8] reaches an accuracy level of 76% in VHR imagery segmentation, but this is also higher than the producer’s and user’s accuracy values obtained by Ke and Quackenbush in 2011 [25]. The works of Mohan and Vieira [5,56] reached F1 scores of 86% and 70%, respectively. It is worth mentioning that these comparisons should be interpreted with caution since many aspects can influence the quality of the ITD. Firstly, the high level of subjectivity affects visual evaluations. Secondly, the characteristics of the study areas have a dominant role in the results of the ITD. Indeed, the illumination distortions due to the topography, the density, and the structure of the stand, along with the dominant species, can influence the results (and the quality) of the segmentation. To fairly compare the results, we should have at least similar case studies; indeed, the works mentioned above were carried out in flat or low-sloped areas over different types of forest stands. The selected ruleset is an additional influencing factor: it must be underlined that the segmentation applied in this study is intentionally plain and can be further improved, especially in the refining phase.

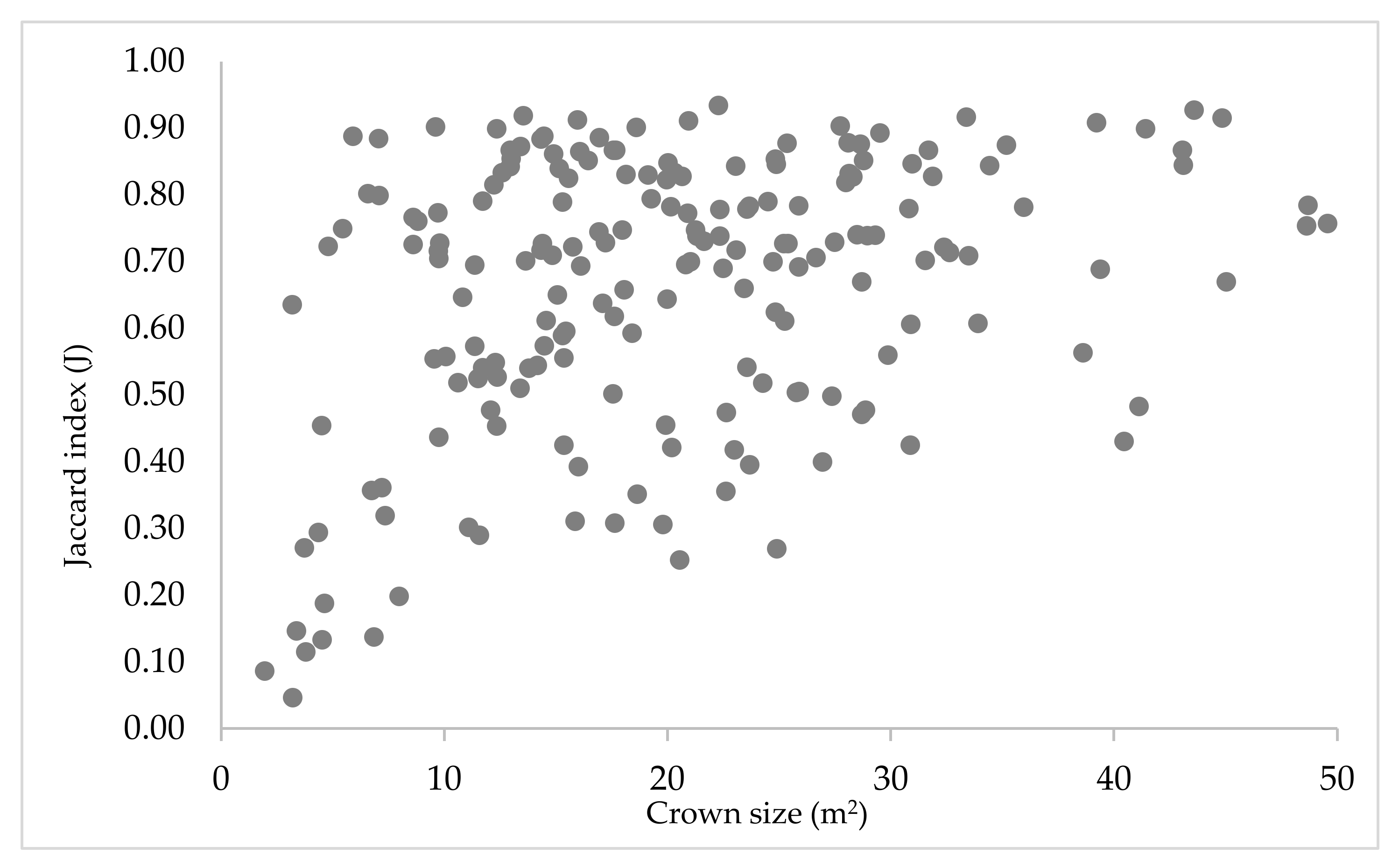

As already mentioned, the visual evaluation is limited in the assessment of the quality of the segmentation. Several other aspects regarding the shape and the size of the individual tree crowns can be taken into account. The results of the quantitative assessment are clear: the positions of the crowns, as well as their extent, are very well identified. As evidence, the median value of the centroid distance is 45 cm. Additionally, the area difference is not particularly relevant, since the RMSE represents only 14% of the average crown area. Thanks to the smoothing process, there is an evident match between the borders of the segmented and reference objects (the RMSE on the perimeter is almost 3 m). Although the validation indicates a good segmentation, it is important to underline the difficulty of the manual segmentation of references: even for the human eye, the identification of single trees is not immediate. This is a quite common weakness of ITD (and, more generally, segmentation) research. The RMSE of the perimeter has been calculated by Yurtseven et al. in their ITD research [55]. They obtained a 6-m RMSE on the perimeter metric, even though they had the chance to identify the crowns on a 1.2 cm/pixel RGB orthomosaic, as an additional demonstration of the subjectivity and complexity of the reference dataset identification. Compared to the existing works on ITD and segmentation, the Hölder exponent provides results that are in line with the literature.

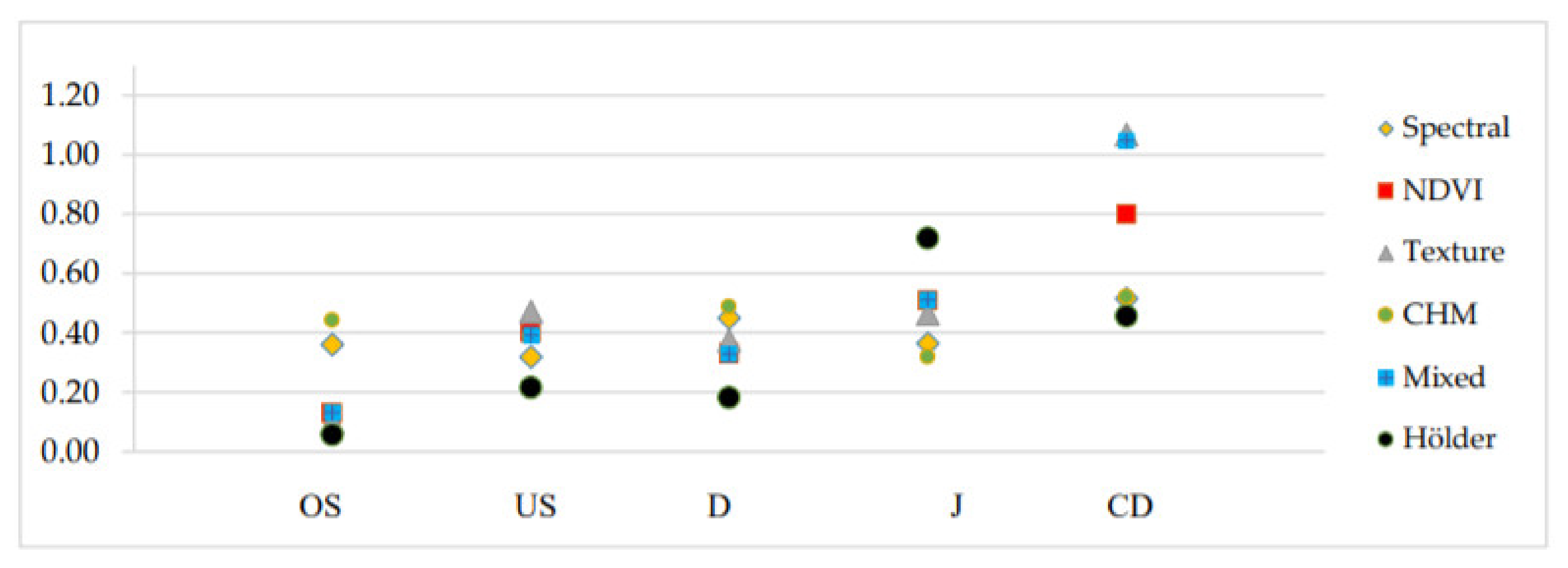

The tendency of the proposed method to under-segment more than over-segment is also evident from the comparison of the US (0.284) and OS (0.084) indices. The Jaccard indicator is 72%, a result which is in line with other studies, despite the high variability of the delineation of the reference dataset. Hussin et al. [57] applied the OS and US indicators to the assessment of tree segmentation, using satellite imagery of 2-m resolution, and they obtained comparable values for both under-segmentation and over-segmentation. However, in their work, they faced the opposite situation: over-segmentation errors were more dominant than under-segmentation ones. Persello et al. and Clinton et al. [53,54] obtained very similar OS and US results too, even though both studies focused on the segmentation (and classification) of satellite imagery in urban areas. The 0.18 median value of the D index compares well with the values in the literature, which range between 0.31 and 0.42, and is a relatively good result. Again, these metrics and comparisons should be interpreted with caution, since the literature values result from the segmentation of satellite imagery, which does not include the extraction of single tree crowns. Finally, the values of the Jaccard index, or intersection over union index, vary between 0.05 and 0.95, with 0.72 as the median value.
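For readers unfamiliar with these indicators, the following Python sketch computes them for one segmented object and its reference. The formulas are stated here as assumptions, following the definitions commonly attributed to Clinton et al. (OS = 1 − |S ∩ R|/|R|, US = 1 − |S ∩ R|/|S|, D = sqrt((OS² + US²)/2)) together with the standard Jaccard index |S ∩ R|/|S ∪ R|; they are not quoted from this section, and the function name and the pixel-set representation are hypothetical.

```python
import math


def segmentation_metrics(segment, reference):
    """Return (OS, US, D, Jaccard) for one segment and its reference object.

    Both inputs are sets of (row, col) pixels. Formulas are assumptions:
    OS = 1 - |S & R| / |R|, US = 1 - |S & R| / |S|,
    D = sqrt((OS^2 + US^2) / 2), Jaccard = |S & R| / |S | R|.
    """
    overlap = len(segment & reference)
    over_seg = 1.0 - overlap / len(reference)
    under_seg = 1.0 - overlap / len(segment)
    d_index = math.sqrt((over_seg ** 2 + under_seg ** 2) / 2.0)
    jaccard = overlap / len(segment | reference)
    return over_seg, under_seg, d_index, jaccard


# Toy example: the segment covers only half of the reference crown.
reference = {(r, c) for r in range(4) for c in range(4)}  # 16 pixels
segment = {(r, c) for r in range(4) for c in range(2)}    # 8 pixels, inside it

os_index, us_index, d_index, jaccard = segmentation_metrics(segment, reference)
print(os_index, us_index, round(d_index, 4), jaccard)
```

Under these definitions, all of OS, US, and D equal 0 for a perfect match and grow toward 1 as the match worsens, while the Jaccard index behaves in the opposite way. In a study such as this one, the indices would be computed for every matched pair of objects and then summarized, for example by the median.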

With the same segmentation process, the results of the Hölder exponent segmentation clearly outperform those obtained from spectral, textural, and CHM information. From this first application, it emerged that the Hölder exponent can facilitate ITD from UAV VHR imagery. Indeed, by applying a basic segmentation process, we obtained satisfying results in line with the literature, but in a relatively short time and with only one elevation-independent input layer. With this approach, ITD from optical imagery of densely forested areas might be more accurate than simple spectral and elevation-based analysis. Naturally, this work should not be interpreted as an attempt to discredit ITD from spectral and CHM datasets but as an alternative and computationally undemanding solution to ITD.