Estimation of the Maturity Date of Soybean Breeding Lines Using UAV-Based Multispectral Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Field Experiment

2.2. UAV Data Collection

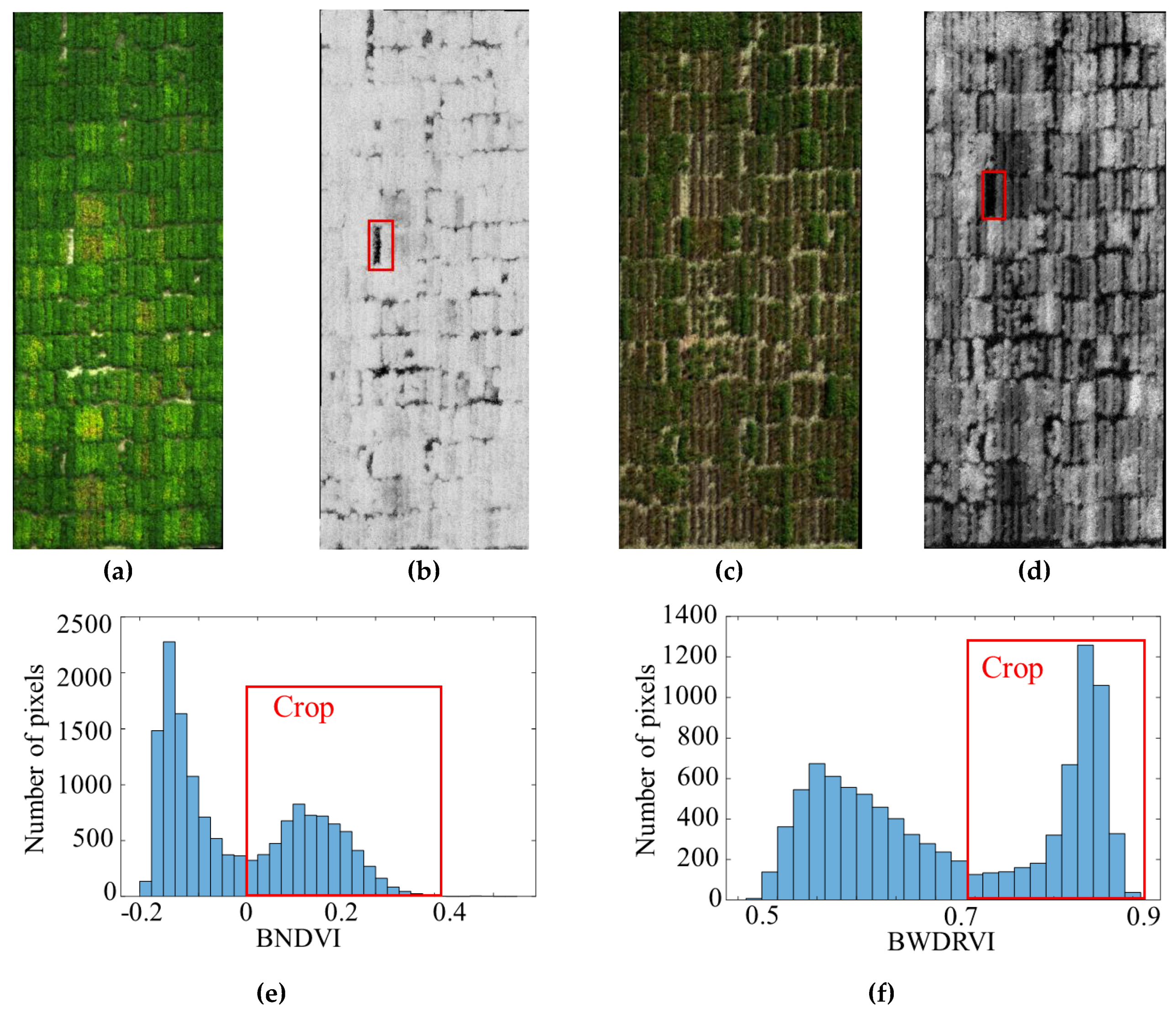

2.3. Image Processing

2.4. Maturity Date and Adjusted Maturity Date

2.5. Data Analysis

3. Results

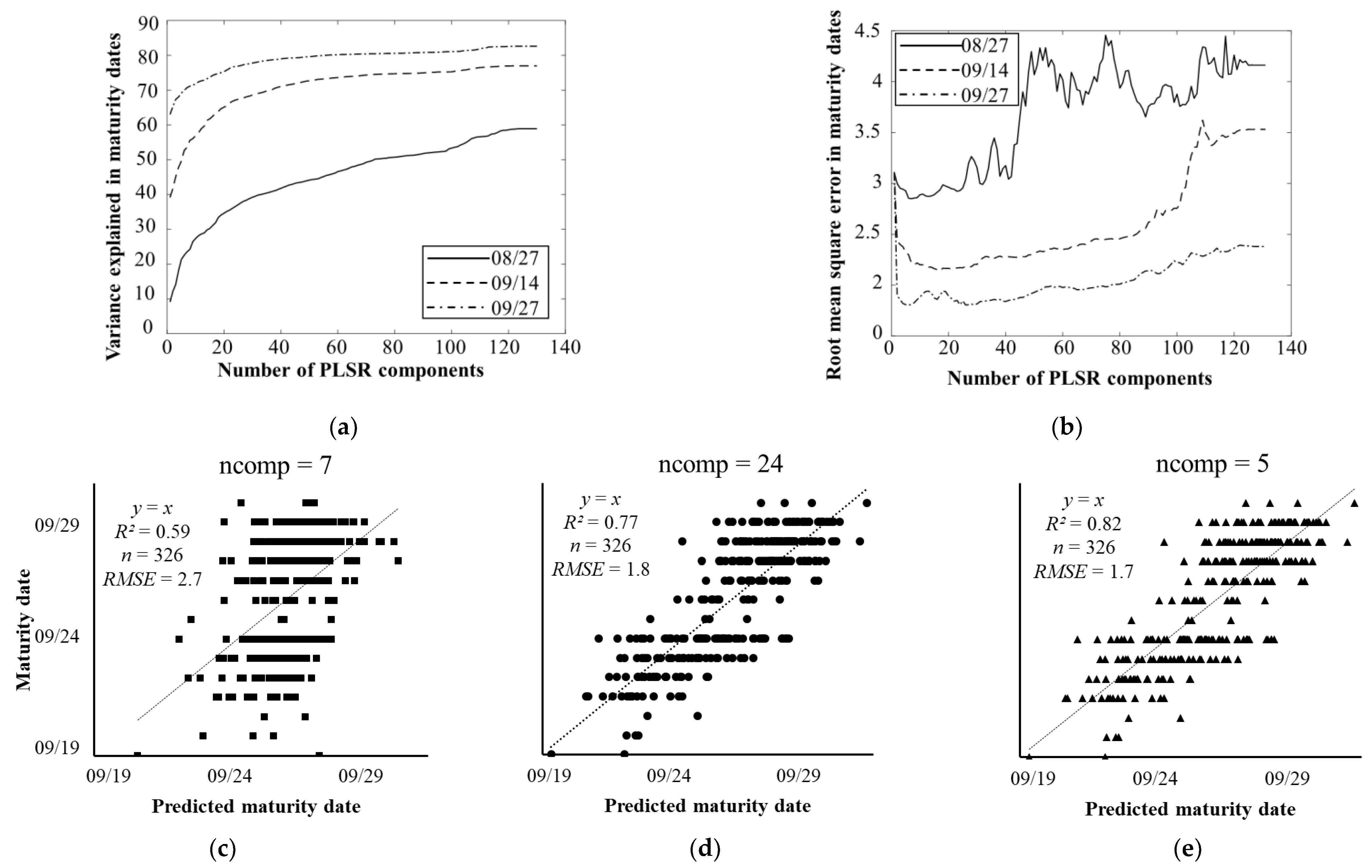

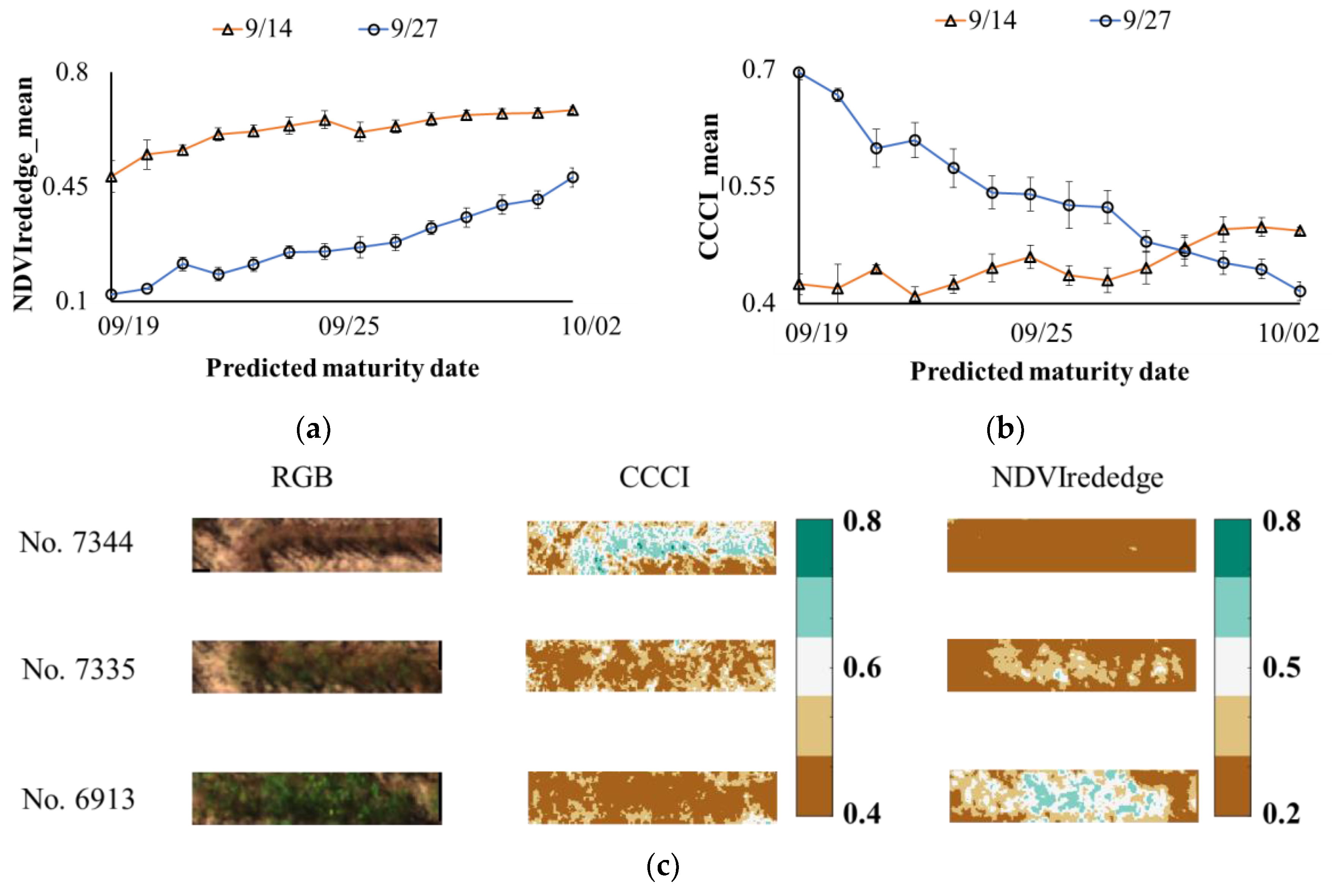

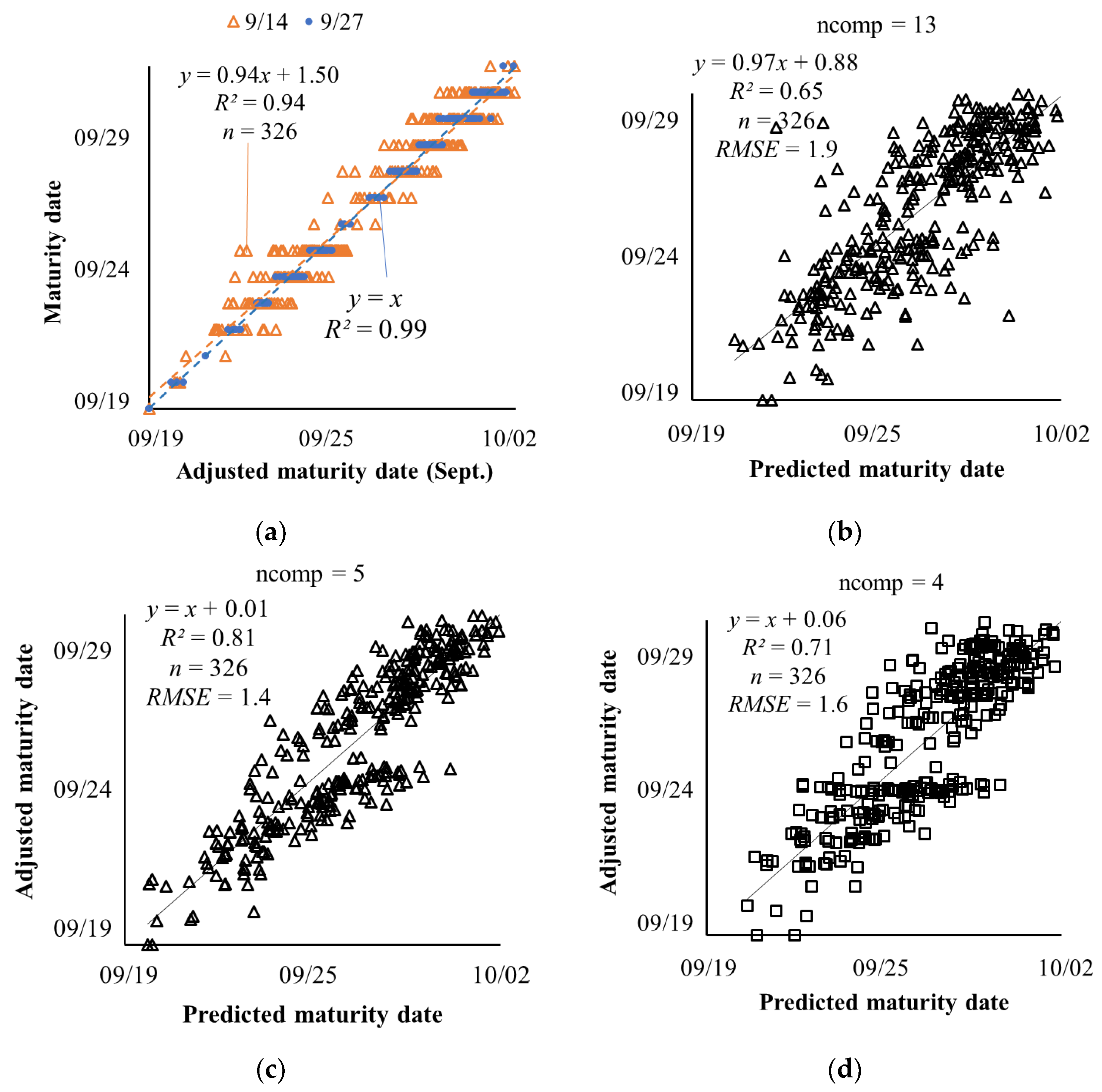

3.1. Estimation of Soybean Maturity Dates Using PLSR

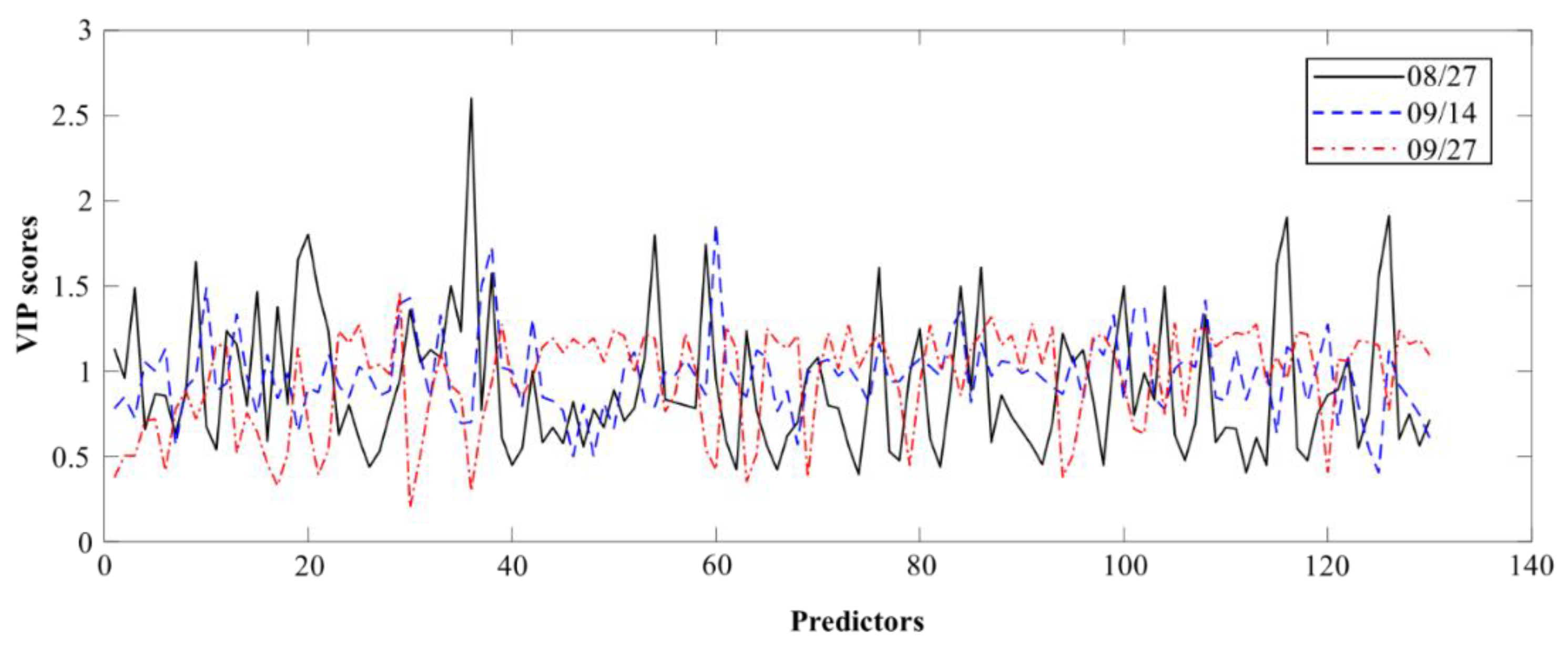

3.2. Model Parsimony

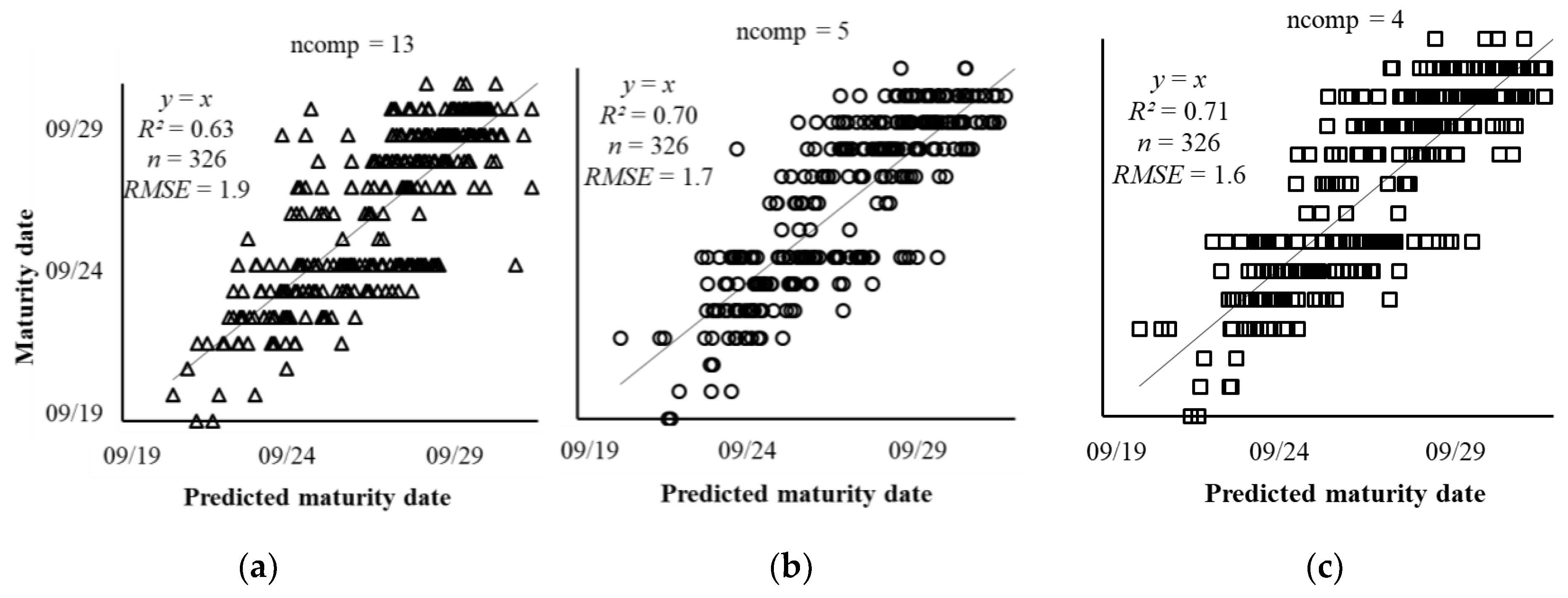

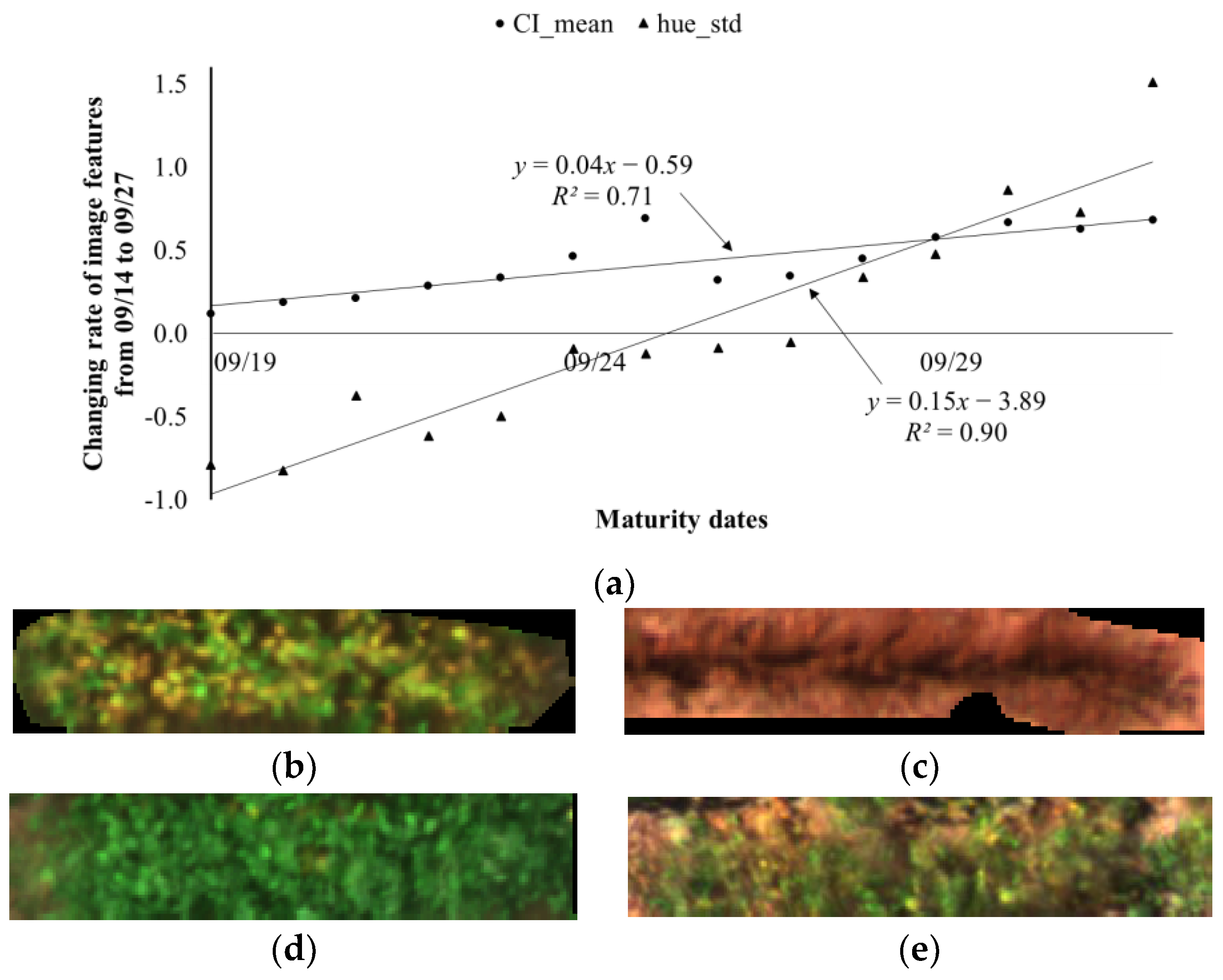

3.3. Adjusted Maturity Dates Based on the Variances in Image Features

4. Discussion and Future Work

4.1. Estimation of Soybean Maturity Dates at Different Growth Stages

4.2. Selected Features for Parsimonious Models

4.3. Adjusted Maturity Dates

4.4. Future Work

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Index Name | Descriptions | Formula |
|---|---|---|---|
| 1 | NDVI | Normalized difference VI* | |
| 2 | ATSAVI | Adjusted transformed soil-adjusted VI | |
| 3 | ARVI2 | Atmospherically Resistant VI 2 | |
| 4 | BWDRVI | Blue-wide dynamic range VI | |
| 5 | CCCI | Canopy Chlorophyll Content Index | |
| 6 | CIgreen | Chlorophyll Index Green | |
| 7 | CIrededge | Chlorophyll Index RedEdge | |
| 8 | CVI | Chlorophyll VI | |
| 9 | CI | Coloration Index | |
| 10 | CTVI | Corrected Transformed VI | |
| 11 | GDVI | Green Difference VI | |
| 12 | EVI | Enhanced VI | |
| 13 | EVI2 | Enhanced VI 2 | |
| 14 | EVI22 | Enhanced VI 2-2 | |
| 15 | GEMI | Global Environment Monitoring Index | |
| 16 | GARI | Green atmospherically resistant VI | |
| 17 | GLI | Green leaf index | |
| 18 | GBNDVI | Green-Blue NDVI | |
| 19 | GRNDVI | Green-Red NDVI | |
| 20 | H | Hue | |
| 21 | IPVI | Infrared percentage VI | |
| 22 | I | Intensity | |
| 23 | LogR | Log Ratio | |
| 24 | MSAVI | Modified Soil Adjusted VI | |
| 25 | NormG | Norm Green | |
| 26 | NormNIR | Norm NIR | |
| 27 | NormR | Norm Red | |
| 28 | NGRDI | Normalized green red difference index | |
| 29 | BNDVI | Blue-normalized difference VI | |
| 30 | GNDVI | Green NDVI | |
| 31 | NDRE | Normalized Difference Red-Edge | |
| 32 | RI | Redness Index | |
| 33 | NDVIrededge | Normalized Difference Rededge/Red | |
| 34 | PNDVI | Pan NDVI | |
| 35 | RBNDVI | Red-Blue NDVI | |
| 36 | IF | Shape Index | |
| 37 | GRVI | Green Ratio VI | |
| 38 | DVI | Difference VI | |
| 39 | RRI1 | RedEdge Ratio Index 1 | |
| 40 | IO | Iron Oxide | |
| 41 | RGR | Red–Green Ratio | |
| 42 | SRRedNIR | Red/NIR Ratio VI | |
| 43 | RRI2 | Rededge/Red RedEdge Ratio Index 2 | |
| 44 | SQRTIRR | SQRT(IR/R) | |
| 45 | TNDVI | Transformed NDVI | |
| 46 | TGI | Triangular greenness index | |
| 47 | WDRVI | Wide Dynamic Range VI | |
| 48 | MSR | Modified Simple Ratio | |
| 49 | MTVI2 | Modified Triangular VI | |
| 50 | RDVI | Renormalized Difference VI | |
| 51 | IRG | Red Green Ratio Index | |
| 52 | OSAVI | Optimized Soil Adjusted VI | |
| 53 | SRNDVI | Simple Ratio × Normalized Difference Vegetation Index | |
| 54 | SARVI2 | Soil and Atmospherically Resistant Vegetation Index 2 | |
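As a rough illustration of how per-plot features named in the style of `NDVI_mean` or `NDRE_std` could be derived from band reflectance, the sketch below computes four normalized-difference indices from the table above and summarizes them over the pixels of one plot. The index formulas are not reproduced in the table, so the definitions used here are the widely used forms (for example, NDVI as (nir − r)/(nir + r)) and may differ from the ones applied in the study. The function names, the per-pixel dictionary layout, the sample reflectance values, and the choice of population standard deviation are all assumptions made for illustration.

```python
from statistics import fmean, pstdev


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); fall back to 0.0 when the denominator is zero."""
    denom = a + b
    return (a - b) / denom if denom else 0.0


# Commonly used definitions (assumed, not taken from the article).
# Band keys: b = blue, g = green, r = red, re = red edge, nir = near-infrared.
INDEX_FUNCS = {
    "NDVI": lambda px: normalized_difference(px["nir"], px["r"]),
    "GNDVI": lambda px: normalized_difference(px["nir"], px["g"]),
    "BNDVI": lambda px: normalized_difference(px["nir"], px["b"]),
    "NDRE": lambda px: normalized_difference(px["nir"], px["re"]),
}


def plot_features(pixels: list[dict[str, float]]) -> dict[str, float]:
    """Summarize each index over one plot as <index>_mean and <index>_std."""
    features = {}
    for name, func in INDEX_FUNCS.items():
        values = [func(px) for px in pixels]
        features[f"{name}_mean"] = fmean(values)
        # Population standard deviation is an assumption; the sample
        # standard deviation (statistics.stdev) would be equally plausible.
        features[f"{name}_std"] = pstdev(values)
    return features


if __name__ == "__main__":
    # Hypothetical reflectance values (0-1 scale) for two pixels of one plot.
    plot = [
        {"b": 0.04, "g": 0.08, "r": 0.05, "re": 0.20, "nir": 0.45},
        {"b": 0.05, "g": 0.09, "r": 0.07, "re": 0.22, "nir": 0.41},
    ]
    for key, value in plot_features(plot).items():
        print(f"{key}: {value:.4f}")
```

Keeping the index definitions in a dictionary makes it straightforward to add further indices from the table once their formulas are confirmed against the original article.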


| Band Name | Center Wavelength (nm) | Bandwidth * (nm) | Reflectance (%) |
|---|---|---|---|
| Blue (b) | 475 | 20 | 49.2 |
| Green (g) | 560 | 20 | 49.3 |
| Red (r) | 668 | 10 | 49.1 |
| Red edge (re) | 717 | 10 | 48.7 |
| Near-infrared (nir) | 840 | 40 | 49.0 |

| Image Features | August 27 | September 14 | September 27 |
|---|---|---|---|
| re_mean | –0.596 * | – | – |
| S_mean | –0.501 | –0.697 ** | – |
| CCCI_std | –0.487 | –0.930 *** | – |
| Cirededge_mean | 0.504 | – | 0.368 |
| CVI_std | –0.389 | – | – |
| CI_std | 0.184 | –0.662 ** | – |
| GDVI_std | 0.177 | 0.861 *** | – |
| H_mean † | 0.102 | – | – |
| NormG_std | 0.413 | –0.831 *** | – |
| IF_std | –0.170 | – | – |
| RRI2_std | –0.239 | –0.227 | – |
| CI_mean | – | –0.935 *** | – |
| H_std | – | –0.915 *** | – |
| GRVI_mean | – | 0.922 *** | – |
| MTVI2_std | – | 0.795 *** | – |
| hue_std ‡ | – | – | 0.959 *** |
| GEMI_mean | – | – | 0.977 *** |
| GRNDVI_std | – | – | 0.959 *** |
| BNDVI_mean | – | – | 0.934 *** |
| IF_mean | – | – | –0.991 *** |

| Image Features | August 27 | September 14 | September 27 |
|---|---|---|---|
| re_mean | –0.596 * | –0.414 | 0.890 *** |
| S_mean | –0.501 | –0.697 ** | –0.830 *** |
| CCCI_std | –0.487 | –0.930 *** | 0.605 * |
| Cirededge_mean | 0.504 | 0.928 *** | 0.368 |
| CVI_std | –0.389 | –0.718 ** | –0.552 * |
| CI_std | 0.184 | –0.662 ** | 0.931 *** |
| GDVI_std | 0.177 | 0.861 *** | 0.845 *** |
| H_mean | 0.102 | –0.830 *** | –0.409 |
| NormG_std | 0.413 | –0.831 *** | 0.916 *** |
| IF_std | –0.170 | –0.921 *** | –0.585 * |
| RRI2_std | –0.239 | –0.227 | 0.981 *** |
| CI_mean | –0.595 * | –0.935 *** | –0.886 *** |
| H_std | –0.490 | –0.915 *** | 0.268 |
| GRVI_mean | 0.497 | 0.922 *** | 0.499 |
| MTVI2_std | –0.035 | 0.795 *** | 0.989 *** |
| hue_std | –0.413 | –0.931 *** | 0.959 *** |
| GEMI_mean | –0.004 | 0.857 *** | 0.977 *** |
| GRNDVI_std | 0.242 | –0.889 *** | 0.959 *** |
| BNDVI_mean | 0.356 | 0.879 *** | 0.934 *** |
| IF_mean | –0.544 * | –0.879 *** | –0.991 *** |
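The values in the table above lie between −1 and 1 and carry star markers, which is consistent with correlation coefficients, although the caption naming the statistic and the second variable is not preserved here. Assuming they are Pearson correlation coefficients between one image feature and one other per-plot variable, a minimal sketch of the calculation is given below. The function name and the sample data are hypothetical, and the sketch does not compute the significance levels that the star markers presumably denote.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical per-plot values: one image feature and one paired variable.
    feature = [0.61, 0.58, 0.52, 0.47, 0.40]
    paired = [3.0, 5.0, 6.0, 9.0, 12.0]
    print(f"r = {pearson_r(feature, paired):.3f}")
```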

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Yungbluth, D.; Vong, C.N.; Scaboo, A.; Zhou, J. Estimation of the Maturity Date of Soybean Breeding Lines Using UAV-Based Multispectral Imagery. Remote Sens. 2019, 11, 2075. https://doi.org/10.3390/rs11182075