Sustainable Decision-Making in Higher Education: An AHP-NWA Framework for Evaluating Learning Management Systems

Abstract

1. Introduction

2. Theoretical Framework and Problem Conceptualization

3. Methodology and Materials

3.1. Simplified Method for Assessing the Relative Importance of Criteria (AHP-NWA Hybrid Model)

3.1.1. Analytic Hierarchy Process (AHP) Method

- Step 1. Defining the Problem and Forming the Hierarchical Structure

- The decision-making problem is decomposed into a hierarchy consisting of:

- Goal: The overall objective of the decision-making process.

- Criteria: Key factors influencing the decision; in this study, the seven criteria described in Section 3.4.

- Alternatives: The possible options being evaluated.

- Step 2. Pairwise Comparison of Criteria

- Step 3. Normalization of the Pairwise Comparison Matrix

- Step 4. Calculation of Priority Weights

- Step 5. Consistency Check

- Step 6. Evaluation of Alternatives
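Steps 2 to 5 can be sketched in Python as follows. This is a minimal sketch under three assumptions:

- It uses the usual column-normalization and row-averaging approximation. The normalized rows of the reported pairwise comparison table follow this approach.
- It uses Saaty's published random index values.
- The helper names `ahp_weights` and `consistency_ratio` are hypothetical.

The pairwise judgements themselves are copied from the reported matrix.

```python
"""Sketch of AHP Steps 2-5: priority weights and consistency ratio."""

CRITERIA = ["Performance", "Security", "Usability", "Support",
            "Cost", "Implementation", "Documentation"]

# Pairwise judgements copied from the reported matrix
# (row criterion compared with column criterion, Saaty's 1-9 scale).
A = [
    [1,   1/3, 1/3, 3,   1/3, 2,   5],
    [3,   1,   3,   2,   1/3, 3,   5],
    [3,   1/3, 1,   3,   1,   3,   5],
    [1/3, 1/2, 1/3, 1,   1/5, 1,   3],
    [3,   3,   1,   5,   1,   3,   5],
    [1/2, 1/3, 1/3, 1,   1/3, 1,   3],
    [1/5, 1/5, 1/5, 1/3, 1/5, 1/3, 1],
]

# Saaty's random consistency index (RI), keyed by matrix size n (assumed values).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(matrix):
    """Steps 3-4: normalize each column to sum to 1, then average each row."""
    n = len(matrix)
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    return [sum(matrix[i][j] / col_sums[j] for j in range(n)) / n
            for i in range(n)]


def consistency_ratio(matrix, weights):
    """Step 5: estimate lambda_max from A*w, then CI = (lambda_max - n)/(n - 1), CR = CI/RI."""
    n = len(matrix)
    weighted_sums = [sum(matrix[i][j] * weights[j] for j in range(n))
                     for i in range(n)]
    lambda_max = sum(ws / w for ws, w in zip(weighted_sums, weights)) / n
    ci = (lambda_max - n) / (n - 1)
    return lambda_max, ci, ci / RANDOM_INDEX[n]


weights = ahp_weights(A)
lambda_max, ci, cr = consistency_ratio(A, weights)
for name, w in zip(CRITERIA, weights):
    print(f"{name:<15}{w:.3f}")
print(f"lambda_max = {lambda_max:.2f}, CI = {ci:.3f}, CR = {cr:.3f}")
```

For six criteria, the printed weights match the reported significance factors to two decimals; for example, cost is about 0.284 and security about 0.224. The consistency ratio comes out at about 0.07, below the usual 0.10 threshold.

Usability is the exception. Recomputing every normalized entry gives a usability weight of about 0.199, because the Documentation-column entry for that row is 5/27 ≈ 0.185.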

3.1.2. NWA (Net Worth Analysis)

- Step 7. Final Evaluation of Software
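Step 7 can be sketched as follows, assuming the aggregation visible in the expert evaluation table:

- Each criterion's points equal its weight multiplied by the expert score.
- The Total row equals the sum of points divided by the number of criteria. For Moodle, this is about 4.099 / 7 ≈ 0.586.

The unrounded AHP weights are not printed, so the weights below are back-calculated from the Points column (points divided by score). This back-calculation is an assumption, and the helper name `nwa_score` is hypothetical.

```python
"""Sketch of Step 7 (NWA): weighted points per criterion, averaged per platform."""

# Weights back-calculated from the evaluation table's Points column
# (points / expert score); an assumption, since unrounded weights are not printed.
WEIGHTS = {"Performance": 0.1188, "Security": 0.2243, "Usability": 0.1733,
           "Support": 0.0689, "Cost": 0.2843, "Implementation": 0.0724,
           "Documentation": 0.0326}

# Team B's expert evaluations as listed in the evaluation table.
SCORES = {
    "Moodle": {"Performance": 4, "Security": 3, "Usability": 5, "Support": 2,
               "Cost": 5, "Implementation": 5, "Documentation": 5},
    "ATutor": {"Performance": 3, "Security": 4, "Usability": 3, "Support": 2,
               "Cost": 5, "Implementation": 4, "Documentation": 5},
    "Blackboard": {"Performance": 5, "Security": 5, "Usability": 3, "Support": 3,
                   "Cost": 2, "Implementation": 4, "Documentation": 4},
}


def nwa_score(weights, scores):
    """Points = weight * expert score; total = mean of the points over all criteria."""
    points = [weights[c] * scores[c] for c in weights]
    return sum(points) / len(points)


totals = {alt: nwa_score(WEIGHTS, s) for alt, s in SCORES.items()}
ranking = sorted(totals, key=totals.get, reverse=True)
for position, alt in enumerate(ranking, start=1):
    print(f"{position}. {alt}: {totals[alt]:.3f}")
```

The sketch prints totals of 0.586, 0.541 and 0.490. This reproduces the reported ranking of Moodle first, ATutor second and Blackboard third.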

3.2. Decision Structure and Expert Selection

- Team A (Decision-Makers)—Consisted of three university professors and members of the higher education institution’s management. Their task was to define the relevant evaluation criteria in accordance with the institution’s strategic and pedagogical objectives and to determine their weighting factors using the AHP method. The Delphi method, a structured communication technique aimed at reaching expert consensus, was applied for criteria definition [40]. This iterative process enabled team members, through multiple rounds of anonymous scoring and opinion exchange, to reach consensus on which criteria best reflected the institution’s needs and priorities. The use of the Delphi method helped avoid the dominance of individual opinions and ensured a balanced set of criteria resulting from collective reasoning. The selection of three experts for Panel A was guided by their strategic roles within the institution; all three are senior academic managers directly involved in technology procurement and quality assurance processes. Their inclusion ensures that the weighting of criteria reflects key institutional decision-making perspectives, while maintaining a focused and experienced expert group consistent with similar MCDM studies in higher education contexts.

- Team B (Technical Experts)—Comprised five experienced IT specialists with many years of practice in implementing, administering, and maintaining LMS solutions. Their role was to evaluate the specific platforms based on the criteria defined by Team A. For score aggregation, the NWA method was employed, combining the experts’ ratings across all criteria in proportion to the AHP weighting factors.

3.3. Methodological Contribution to Sustainability

3.4. Evaluation Criteria

- Performance—This criterion encompasses the system’s functional capabilities, including its capacity to manage and distribute learning content, the availability of interaction tools, system scalability with respect to the number of users, and operational stability and reliability under real-world conditions. High performance levels are essential for maintaining continuity of teaching and ensuring a high-quality user experience.

- Cost—Includes all expenses incurred during the lifecycle of the LMS platform, such as licensing fees, initial implementation costs, periodic maintenance, upgrades and potential additional modules. Budget constraints in educational institutions often make this criterion a decisive factor in the selection process.

- Implementation—Refers to the complexity of the installation process and the time required for full integration of the platform into the institution’s existing information ecosystem. It also covers compatibility with current hardware and software resources, as well as the need for adaptation or migration of existing learning materials.

- Security—The assessment of security features includes data protection, integration of security protocols, authentication and authorization mechanisms, communication encryption and system resilience to cyber threats. In the context of handling sensitive student and faculty data, this criterion holds particular importance.

- Usability—Covers the intuitiveness of the user interface, ease of navigation, speed of access to features and system accessibility for users with varying levels of technical expertise. This criterion also includes accessibility for users with disabilities, in accordance with universal design standards.

- Support—Involves the availability of technical assistance, including help desk services, contact with the vendor or supplier, availability of online user communities and forums, as well as the speed and quality of responses to reported issues. Effective technical support can significantly reduce downtime and enhance system reliability.

- Documentation—Pertains to the quality, scope and timeliness of user and system documentation, including manuals, guides and tutorials. Good documentation facilitates the training of teaching and administrative staff, speeds up the implementation process and reduces the need for external support.

4. Research Results

- Determination of criterion weighting factors,

- Consistency check of the pairwise comparison matrix,

- Evaluation of alternatives and aggregation of ratings.

4.1. Criterion Weighting Factors (AHP)

4.2. Consistency Check

4.3. Evaluation of Software Alternatives (Expert Assessment)

5. Discussion

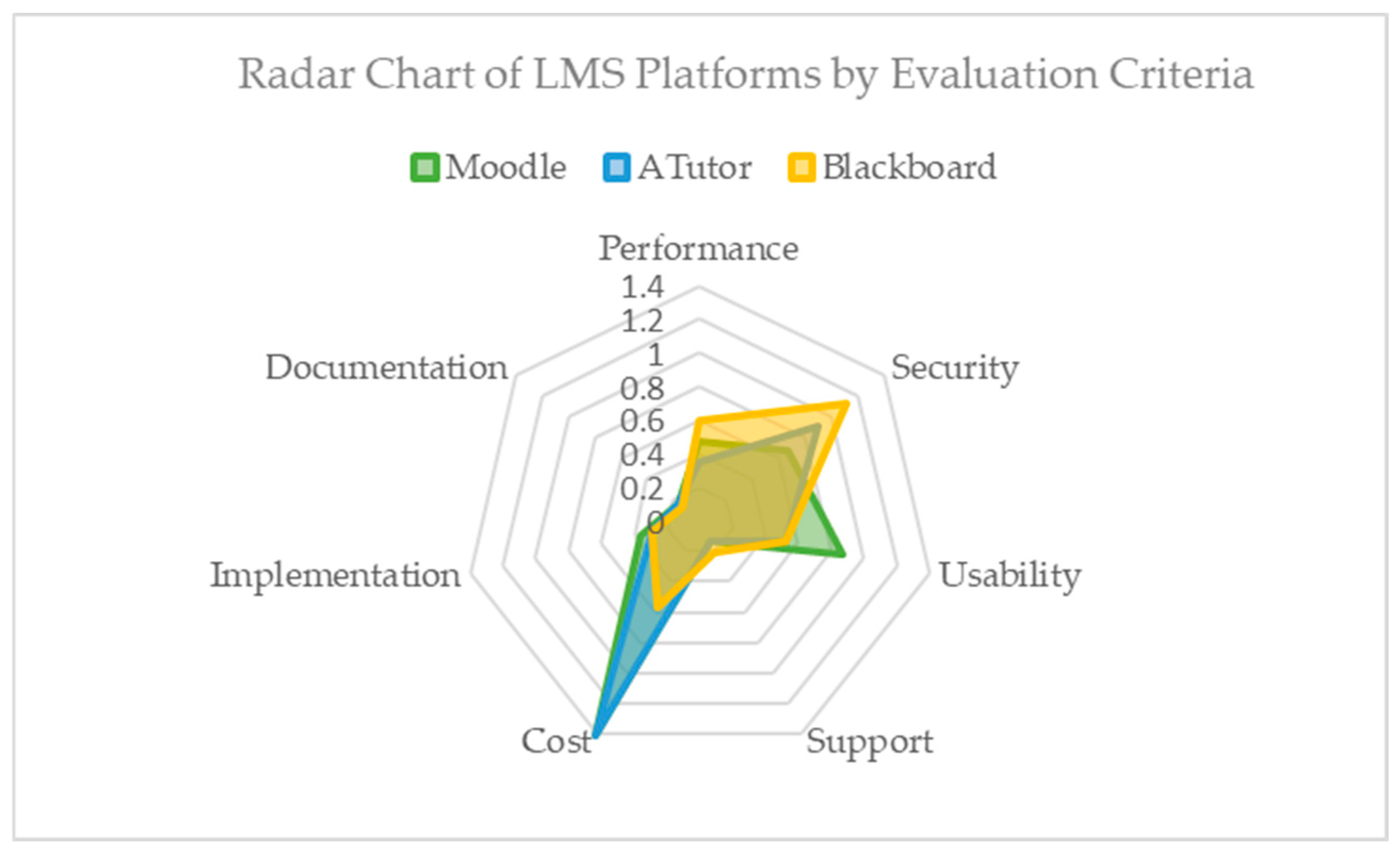

- Moodle—0.586

- ATutor—0.541

- Blackboard—0.490

5.1. Limitations of the Study and Recommendations for Future Research

- Limited number of alternatives—The analysis covered only three LMS platforms (Moodle, ATutor and Blackboard), which restricts the breadth of comparison. Future studies should include a larger number of LMS solutions, such as Canvas, Google Classroom, Sakai, or other specialized platforms, to provide a more comprehensive market analysis.

- Small sample size of experts in Panel A—The first expert panel consisted of only three members, which may affect the representativeness of the findings. Expanding the expert group to include more participants from different types of educational institutions and geographic regions would increase the reliability of results and reduce the risk of individual biases.

- Reliance on subjective expert assessments—The evaluation of criteria and alternatives was based on subjective expert judgments without verification through objective performance metrics in real-world LMS operations. Future research should combine expert assessments with empirical data, such as system response time, operational stability, number of reported security incidents, and end-user satisfaction.

- Static nature of the model—The model reflects the state of LMS platforms at the time of the study. Because LMS solutions evolve continually, their prices change and new security standards emerge, periodic re-evaluation is recommended to keep the results relevant and up to date.

5.2. Practical Implications

- Identification of key priorities—Recognizing cost, security and usability as the most influential criteria provides institutions with a clear indication of the priorities to consider when selecting a platform. In practice, this means that an LMS solution combining low costs with high levels of security and ease of use can ensure an optimal balance between value and performance, especially in budget-constrained environments.

- Guidance through alternative ranking—The obtained ranking, with Moodle in first place, ATutor in second and Blackboard in third, can serve as a guideline for institutions in similar circumstances, particularly for those considering a transition to open-source solutions. These results suggest that Moodle, due to its combination of low cost, good usability and ease of implementation, may be an attractive option for most institutions.

- Adaptability of the methodological framework—The decision-making framework used in this study can be easily adapted to other decision contexts in education. The transparency of the decision-making process and the ability to present results through clear tables and charts facilitate communication of findings to various target groups, including academic staff, IT departments and institutional leadership. This not only improves the quality of decisions but also increases their acceptance among key stakeholders in the implementation process.

5.3. Potential for Adapting the Model to Other Contexts

- Application in other areas of educational technology—The model can be applied to other segments of educational technology, such as the selection of online examination systems, content management systems (CMS), video conferencing tools, learning analytics software or virtual and augmented reality systems in teaching. In these cases, the criteria could include parameters such as compatibility with existing infrastructure, integration capabilities with other tools, scalability and user experience.

- Use beyond the education sector—The AHP-NWA model can also be employed in decision-making across various fields, including public administration, healthcare, industry and the IT sector.

- Integration with other MCDM techniques—The model is compatible with other multi-criteria decision-making methods and can be extended into hybrid approaches by incorporating techniques such as TOPSIS, VIKOR or PIPRECIA, resulting in more robust and detailed evaluations. Furthermore, integrating fuzzy logic could help reduce uncertainty and increase result accuracy, especially when the criteria are subjective or difficult to measure.

- Utility for both internal and public analyses—Due to its transparency and the ability to present results in tables and charts, the model can serve not only as a tool for internal evaluations but also for the development of publicly available analyses that support strategic decision-making at the sectoral or community level.

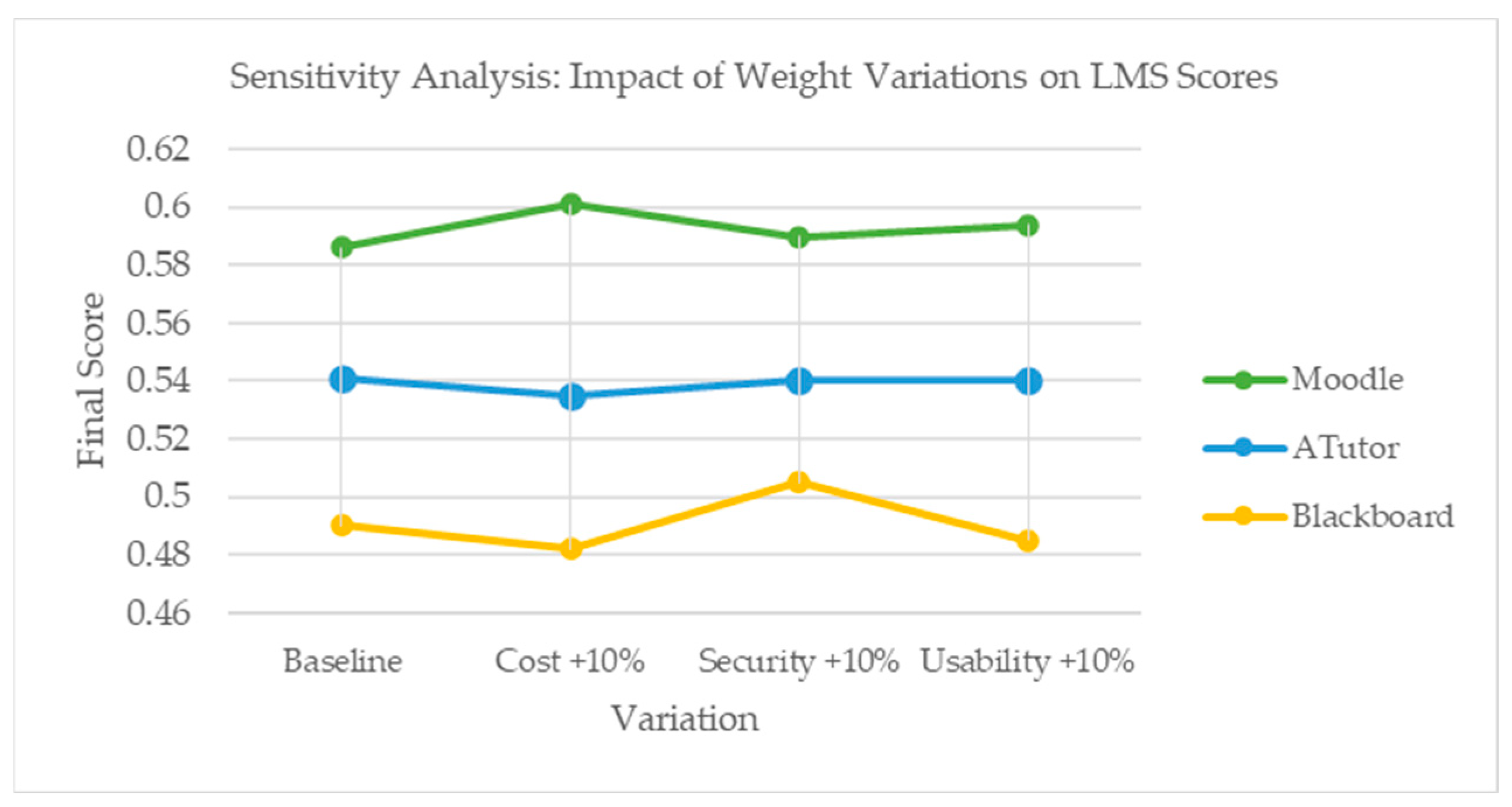

5.4. Sensitivity Analysis

- When the weight of cost was increased to 31%, Moodle’s total score increased from 0.586 to 0.601, while ATutor and Blackboard showed marginal decreases, preserving the ranking order (Moodle > ATutor > Blackboard).

- Increasing the weight of security to 24% favored Blackboard (from 0.490 to 0.505) due to its high security rating, but not sufficiently to surpass ATutor.

- Increasing the weight of usability to 19% further strengthened Moodle’s leading position (0.594), as it had the highest usability score among the alternatives.
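The text does not say how the remaining weights were adjusted when one weight was raised. The sketch below is a one-at-a-time sensitivity check built on these assumptions:

- When one weight changes, the other weights are rescaled proportionally so that the total weight stays the same.
- The weights are back-calculated from the Points column of the evaluation table.
- The helper names `reweight` and `rank` are hypothetical.

Because of the rescaling assumption, its scores need not match the values reported above. Under this rule, however, it also keeps the order Moodle > ATutor > Blackboard for all three changes, and Blackboard's score rises when security gains weight.

```python
"""Sketch of one-at-a-time sensitivity analysis on the NWA totals."""

# Weights back-calculated from the Points column (assumption).
WEIGHTS = {"Performance": 0.1188, "Security": 0.2243, "Usability": 0.1733,
           "Support": 0.0689, "Cost": 0.2843, "Implementation": 0.0724,
           "Documentation": 0.0326}

SCORES = {
    "Moodle": {"Performance": 4, "Security": 3, "Usability": 5, "Support": 2,
               "Cost": 5, "Implementation": 5, "Documentation": 5},
    "ATutor": {"Performance": 3, "Security": 4, "Usability": 3, "Support": 2,
               "Cost": 5, "Implementation": 4, "Documentation": 5},
    "Blackboard": {"Performance": 5, "Security": 5, "Usability": 3, "Support": 3,
                   "Cost": 2, "Implementation": 4, "Documentation": 4},
}


def nwa_score(weights, scores):
    """Mean of weight * expert score over all criteria."""
    points = [weights[c] * scores[c] for c in weights]
    return sum(points) / len(points)


def reweight(weights, criterion, new_weight):
    """Set one weight and rescale the others proportionally so the total
    weight is unchanged (a hypothetical rebalancing rule)."""
    total = sum(weights.values())
    factor = (total - new_weight) / (total - weights[criterion])
    return {c: new_weight if c == criterion else w * factor
            for c, w in weights.items()}


def rank(weights, scores):
    """Return the platforms ordered by total score, plus the totals."""
    totals = {alt: nwa_score(weights, s) for alt, s in scores.items()}
    return sorted(totals, key=totals.get, reverse=True), totals


base_order, base_totals = rank(WEIGHTS, SCORES)
for criterion, new_weight in [("Cost", 0.31), ("Security", 0.24), ("Usability", 0.19)]:
    order, totals = rank(reweight(WEIGHTS, criterion, new_weight), SCORES)
    summary = ", ".join(f"{alt} {totals[alt]:.3f}" for alt in order)
    print(f"{criterion} -> {new_weight:.0%}: {summary}")
```

Other rebalancing rules are possible. For example, the extra weight could be taken only from selected criteria, as in the scenario analysis. Such rules can be substituted in `reweight` without changing the rest of the sketch.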

5.5. Scenario Analysis

- Scenario 1: Budget-Constrained Environment. In institutions with limited financial resources, the cost criterion was given dominant importance (40%), with reduced weights for performance and support. Under this scenario, Moodle’s advantage became even more pronounced, with a total score of 0.622, while Blackboard dropped further due to its high costs.

- Scenario 2: Security-Critical Environment. For institutions dealing with sensitive data, the weight of security was increased to 35%, with usability and implementation reduced. In this case, Blackboard’s score rose to 0.528, narrowing the gap with ATutor (0.547), but Moodle remained the top-ranked option (0.593).

- Scenario 3: User-Centric Environment. In contexts prioritizing user experience and accessibility, usability was increased to 30%, while cost and documentation were reduced. Moodle consolidated its leading position (0.612) due to its intuitive interface, while ATutor remained second (0.539).

- Small Private Colleges,

- Large Research Universities,

- Low-Bandwidth or Resource-Constrained Regions.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hashim, M.A.M.; Tlemsani, I.; Matthews, R. Correction: Higher education strategy in digital transformation. Educ. Inf. Technol. 2022, 27, 7379.

2. Oliveira, K.K.S.; De Souza, R.A.C. Digital transformation towards education 4.0. Inform. Educ. 2022, 21, 283–309.

3. Demartini, C.G.; Benussi, L.; Gatteschi, V.; Renga, F. Education and digital transformation: The “riconnessioni” project. IEEE Access 2020, 8, 186233–186256.

4. Sharifov, M.; Safikhanova, S.; Mustafa, A. Review of prevailing trends, barriers and future perspectives of learning managment systems (LMSs) in higher education institutions. Int. J. Educ. Dev. Using Inf. Commun. Technol. 2021, 17, 207–216.

5. Munna, M.S.H.; Hossain, M.R.; Saylo, K.R. Digital education revolution: Evaluating LMS-based learning and traditional approaches. J. Innov. Technol. Converg. 2024, 6, 2.

6. Castro, R. Blended learning in higher education: Trends and capabilities. Educ. Inf. Technol. 2019, 24, 2523–2546.

7. Babbar, M.; Gupta, T. Response of educational institutions to COVID-19 pandemic: An inter-country comparison. Policy Futures Educ. 2022, 20, 469–491.

8. Adedoyin, O.B.; Soykan, E. COVID-19 pandemic and online learning: The challenges and opportunities. Interact. Learn. Environ. 2023, 31, 863–875.

9. Turnbull, D.; Chugh, R.; Luck, J. Transitioning to e-learning during the COVID-19 pandemic: How have Higher Education Institutions responded to the challenge? Educ. Inf. Technol. 2021, 26, 6401–6419.

10. Cone, L.; Brøgger, K.; Berghmans, M.; Decuypere, M.; Förschler, A.; Grimaldi, E.; Hartong, S.; Hillman, T.; Ideland, M.; Landri, P.; et al. Pandemic acceleration: COVID-19 and the emergency digitalization of European education. Eur. Educ. Res. J. 2022, 21, 845–868.

11. Berking, P.; Gallagher, S. Choosing a learning management system. Adv. Distrib. Learn. (ADL) Co-Lab. 2013, 14, 40–62.

12. Ali, M.; Wood-Harper, T.; Wood, B. Understanding the technical and social paradoxes of learning management systems usage in higher education: A sociotechnical perspective. Syst. Res. Behav. Sci. 2024, 41, 134–152.

13. Wright, C.R.; Lopes, V.; Montgomerie, C.; Reju, S.; Schmoller, S. Selecting a learning management system: Advice from an academic perspective. Educ. Rev. 2014.

14. Alshomrani, S. Evaluation of technical factors in distance learning with respect to open source LMS. Asian Trans. Comput. 2012, 2, 1117.

15. Galvis, Á.H. Supporting decision-making processes on blended learning in higher education: Literature and good practices review. Int. J. Educ. Technol. High. Educ. 2018, 15, 25.

16. Sahoo, S.K.; Goswami, S.S. A comprehensive review of multiple criteria decision-making (MCDM) methods: Advancements, applications, and future directions. Decis. Mak. Adv. 2023, 1, 25–48.

17. Asadabadi, M.R.; Chang, E.; Saberi, M. Are MCDM methods useful? A critical review of analytic hierarchy process (AHP) and analytic network process (ANP). Cogent Eng. 2019, 6, 1623153.

18. Wen, Z.; Liao, H.; Zavadskas, E.K. MACONT: Mixed aggregation by comprehensive normalization technique for multi-criteria analysis. Informatica 2020, 31, 857–880.

19. Oussous, A.; Menyani, I.; Srifi, M.; Lahcen, A.A.; Kheraz, S.; Benjelloun, F.-Z. An evaluation of open source adaptive learning solutions. Information 2023, 14, 57.

20. Altınay-Gazi, Z.; Altınay-Aksal, F. Technology as mediation tool for improving teaching profession in higher education practices. Eurasia J. Math. Sci. Technol. Educ. 2017, 13, 803–813.

21. Pettersson, F. Understanding digitalization and educational change in school by means of activity theory and the levels of learning concept. Educ. Inf. Technol. 2021, 26, 187–204.

22. Ricard, M.; Zachariou, A.; Burgos, D. Digital education, information and communication technology, and education for sustainable development. In Radical Solutions and eLearning: Practical Innovations and Online Educational Technology; Springer: Singapore, 2020; pp. 27–39.

23. Guri-Rosenblit, S. ‘Distance education’ and ‘e-learning’: Not the same thing. High. Educ. 2005, 49, 467–493.

24. Yucel, A.S. E-learning approach in teacher training. Turk. Online J. Distance Educ. 2006, 7, 123–131.

25. Oliveira, P.C.; Cunha, C.J.C.A.; Nakayama, M.K. Learning management systems (LMS) and e-learning management: An integrative review and research agenda. JISTEM-J. Inf. Syst. Technol. Manag. 2016, 13, 157–180.

26. Dias, B.D.; Diniz, J.A.; Hadjileontiadis, L.J. Towards an intelligent learning management system under blended learning. In Trends, Profiles and Modeling Perspectives; Intelligent Systems Reference Library; Springer: Berlin/Heidelberg, Germany, 2014; Volume 59.

27. Bradley, V.M. Learning management system (LMS) use with online instruction. Int. J. Technol. Educ. 2021, 4, 68–92.

28. Rößling, G.; Joy, M.; Moreno, A.; Radenski, A.; Malmi, L.; Kerren, A.; Naps, T.; Ross, R.J.; Clancy, M.; Korhonen, A.; et al. Enhancing learning management systems to better support computer science education. ACM SIGCSE Bull. 2008, 40, 142–166.

29. Veluvali, P.; Surisetti, J. Learning management system for greater learner engagement in higher education—A review. High. Educ. Future 2022, 9, 107–121.

30. Massam, B.H. Multi-criteria decision making (MCDM) techniques in planning. Prog. Plan. 1988, 30, 1–84.

31. Saaty, T.L. The Analytic Hierarchy Process; McGraw-Hill: New York, NY, USA, 1980.

32. Saaty, T.L. Analytic hierarchy process. In Encyclopedia of Operations Research and Management Science; Springer: Boston, MA, USA, 2013; pp. 52–64.

33. Colace, F.; De Santo, M. Evaluation models for e-learning platforms and the AHP approach: A case study. IPSI BGD Trans. Internet Res. 2011, 7, 31–43.

34. Yang, J. Fuzzy comprehensive evaluation system and decision support system for learning management of higher education online courses. Sci. Rep. 2025, 15, 18113.

35. Hafizan, C.; Noor, Z.Z.; Abba, A.H.; Hussein, N. An alternative aggregation method for a life cycle impact assessment using an analytical hierarchy process. J. Clean. Prod. 2016, 112, 3244–3255.

36. Albayrak, E.; Erensal, Y.C. Using analytic hierarchy process (AHP) to improve human performance: An application of multiple criteria decision-making problem. J. Intell. Manuf. 2004, 15, 491–503.

37. Martinez, M.; de Andres, D.; Ruiz, J.-C.; Friginal, J. From measures to conclusions using analytic hierarchy process in dependability benchmarking. IEEE Trans. Instrum. Meas. 2014, 63, 2548–2556.

38. Beynon, M. An analysis of distributions of priority values from alternative comparison scales within AHP. Eur. J. Oper. Res. 2002, 140, 104–117.

39. Marinović, M.; Viduka, D.; Lavrnić, I.; Stojčetović, B.; Skulić, A.; Bašić, A.; Balaban, P.; Rastovac, D. An intelligent multi-criteria decision approach for selecting the optimal operating system for educational environments. Electronics 2025, 14, 514.

40. Linstone, H.A.; Turoff, M. (Eds.) The Delphi Method: Techniques and Applications; Addison-Wesley: Reading, MA, USA, 2002; Available online: https://is.njit.edu/pubs/delphibook/ (accessed on 8 November 2025).

41. Basar, G.; Der, O. Multi-objective optimization of process parameters for laser cutting polyethylene using fuzzy AHP-based MCDM methods. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2025, 239, 1225–1241.

42. Pant, S.; Garg, P.; Kumar, A.; Ram, M.; Kumar, A.; Sharma, H.K.; Klochkov, Y. AHP-based multi-criteria decision-making approach for monitoring health management practices in smart healthcare system. Int. J. Syst. Assur. Eng. Manag. 2024, 15, 1444–1455.

43. Turker, Y.A.; Baynal, K.; Turker, T. The evaluation of learning management systems by using fuzzy AHP, fuzzy TOPSIS and an integrated method: A case study. Turk. Online J. Distance Educ. 2019, 20, 195–218.

44. Pucar, Đ.; Popović, G.; Milovanović, G. MCDM methods-based assessment of learning management systems. Teme 2023, 47, 939–956.

45. Wang, H.; Zhang, F.; Mu, C. One for all: A general framework of LLMs-based multi-criteria decision making on human expert level. arXiv 2025, arXiv:2502.15778.

| Criterion | Performance | Security | Usability | Support | Cost | Implementation | Documentation | Significance Factor (%) |
|---|---|---|---|---|---|---|---|---|
| Performance | 1 | 1/3 | 1/3 | 3 | 1/3 | 2 | 5 | 12% |
| Performance (normalized) | 0.091 | 0.058 | 0.054 | 0.196 | 0.098 | 0.150 | 0.185 | |
| Security | 3 | 1 | 3 | 2 | 1/3 | 3 | 5 | 22% |
| Security (normalized) | 0.272 | 0.175 | 0.484 | 0.130 | 0.098 | 0.225 | 0.185 | |
| Usability | 3 | 1/3 | 1 | 3 | 1 | 3 | 5 | 17% |
| Usability (normalized) | 0.272 | 0.058 | 0.161 | 0.196 | 0.294 | 0.225 | 0.007 | |
| Support | 1/3 | 1/2 | 1/3 | 1 | 1/5 | 1 | 3 | 7% |
| Support (normalized) | 0.030 | 0.088 | 0.054 | 0.065 | 0.059 | 0.075 | 0.111 | |
| Cost | 3 | 3 | 1 | 5 | 1 | 3 | 5 | 28% |
| Cost (normalized) | 0.272 | 0.526 | 0.161 | 0.326 | 0.294 | 0.225 | 0.185 | |
| Implementation | 1/2 | 1/3 | 1/3 | 1 | 1/3 | 1 | 3 | 7% |
| Implementation (normalized) | 0.045 | 0.058 | 0.054 | 0.065 | 0.098 | 0.075 | 0.111 | |
| Documentation | 1/5 | 1/5 | 1/5 | 1/3 | 1/5 | 1/3 | 1 | 3% |
| Documentation (normalized) | 0.018 | 0.035 | 0.032 | 0.022 | 0.059 | 0.025 | 0.037 | |
| TOTAL | 11.033 | 5.700 | 6.200 | 15.333 | 3.400 | 13.333 | 27.000 | 100% |
| TOTAL (normalized) | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | |

| Criterion | Performance | Security | Usability | Support | Cost | Implementation | Documentation | Weighted Sum | Consistency Vector | Consistency Vector / n |
|---|---|---|---|---|---|---|---|---|---|---|
| Performance | 1 | 1/3 | 1/3 | 3 | 1/3 | 2 | 5 | 0.86 | 7.24 | 1.03 |
| Performance (weighted) | 0.119 | 0.075 | 0.058 | 0.207 | 0.095 | 0.145 | 0.163 | | | |
| Security | 3 | 1 | 3 | 2 | 1/3 | 3 | 5 | 1.71 | 7.64 | 1.09 |
| Security (weighted) | 0.356 | 0.224 | 0.520 | 0.138 | 0.095 | 0.217 | 0.163 | | | |
| Usability | 3 | 1/3 | 1 | 3 | 1 | 3 | 5 | 1.48 | 8.51 | 1.22 |
| Usability (weighted) | 0.356 | 0.075 | 0.173 | 0.207 | 0.284 | 0.217 | 0.163 | | | |
| Support | 1/3 | 1/2 | 1/3 | 1 | 1/5 | 1 | 3 | 0.51 | 7.34 | 1.05 |
| Support (weighted) | 0.040 | 0.112 | 0.058 | 0.069 | 0.057 | 0.072 | 0.098 | | | |
| Cost | 3 | 3 | 1 | 5 | 1 | 3 | 5 | 2.21 | 7.78 | 1.11 |
| Cost (weighted) | 0.356 | 0.673 | 0.173 | 0.344 | 0.284 | 0.217 | 0.163 | | | |
| Implementation | 1/2 | 1/3 | 1/3 | 1 | 1/3 | 1 | 3 | 0.50 | 6.88 | 0.98 |
| Implementation (weighted) | 0.059 | 0.075 | 0.030 | 0.069 | 0.095 | 0.072 | 0.098 | | | |
| Documentation | 1/5 | 1/5 | 1/5 | 1/3 | 1/5 | 1/3 | 1 | 0.24 | 7.36 | 1.05 |
| Documentation (weighted) | 0.024 | 0.045 | 0.035 | 0.023 | 0.057 | 0.024 | 0.033 | | | |
| TOTAL | | | | | | | | | 52.75 | 7.54 |
| CR | | | | | | | | | | 0.07 |
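Saaty's standard formulas link the λmax and CR values in the consistency table; they are worked through below as a check. The random index RI₇ = 1.32 is Saaty's published value for a 7 × 7 matrix. It is assumed here because the table does not state which RI was used.

```latex
\begin{aligned}
\lambda_{\max} &= \frac{1}{n}\sum_{i=1}^{n}\frac{(A\mathbf{w})_i}{w_i} = \frac{52.75}{7} \approx 7.54,\\
CI &= \frac{\lambda_{\max}-n}{n-1} = \frac{7.54-7}{7-1} = 0.09,\\
CR &= \frac{CI}{RI_{7}} = \frac{0.09}{1.32} \approx 0.07 < 0.10.
\end{aligned}
```

Because CR falls below the conventional 0.10 threshold, the pairwise judgements are considered acceptably consistent.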

| Criterion | Significance Factor (%) | Moodle: Expert Evaluation | Moodle: Points | ATutor: Expert Evaluation | ATutor: Points | Blackboard: Expert Evaluation | Blackboard: Points |
|---|---|---|---|---|---|---|---|
| Performance | 12% | 4 | 0.475 | 3 | 0.356 | 5 | 0.594 |
| Security | 22% | 3 | 0.673 | 4 | 0.897 | 5 | 1.121 |
| Usability | 17% | 5 | 0.867 | 3 | 0.520 | 3 | 0.520 |
| Support | 7% | 2 | 0.138 | 2 | 0.138 | 3 | 0.207 |
| Cost | 28% | 5 | 1.421 | 5 | 1.421 | 2 | 0.569 |
| Implementation | 7% | 5 | 0.362 | 4 | 0.290 | 4 | 0.290 |
| Documentation | 3% | 5 | 0.163 | 5 | 0.163 | 4 | 0.130 |
| Total | 100% | 4 | 0.586 | 4 | 0.541 | 4 | 0.490 |
| Rank | | | 1 | | 2 | | 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Veljić, A.; Viduka, D.; Ilić, L.; Karabasevic, D.; Šijan, A.; Papić, M. Sustainable Decision-Making in Higher Education: An AHP-NWA Framework for Evaluating Learning Management Systems. Sustainability 2025, 17, 10130. https://doi.org/10.3390/su172210130
