Understanding Continuance Intention of Generative AI in Education: An ECM-Based Study for Sustainable Learning Engagement

Abstract

1. Introduction

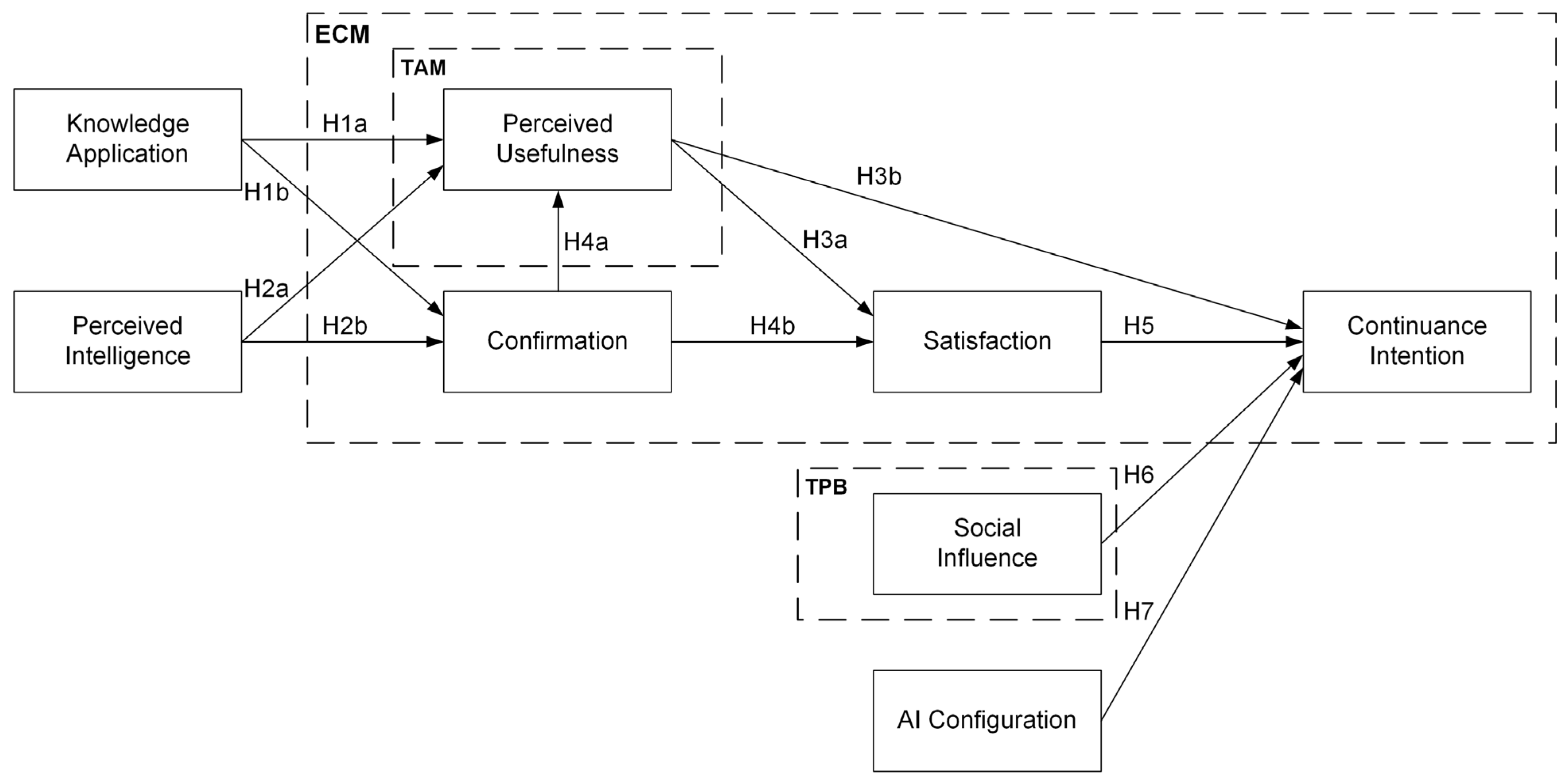

- Determine whether the ECM can effectively explain continuance intention in the context of generative AI;

- Examine the effects of knowledge application and perceived intelligence on perceived usefulness and confirmation;

- Investigate the influence of social influence and AI configuration on continuance intention.

2. Theoretical Foundation and Research Hypotheses

2.1. Knowledge Application

2.2. Perceived Intelligence

2.3. Perceived Usefulness

2.4. Confirmation

2.5. Satisfaction

2.6. Social Influence

2.7. AI Configuration

3. Research Methodology

3.1. Measures

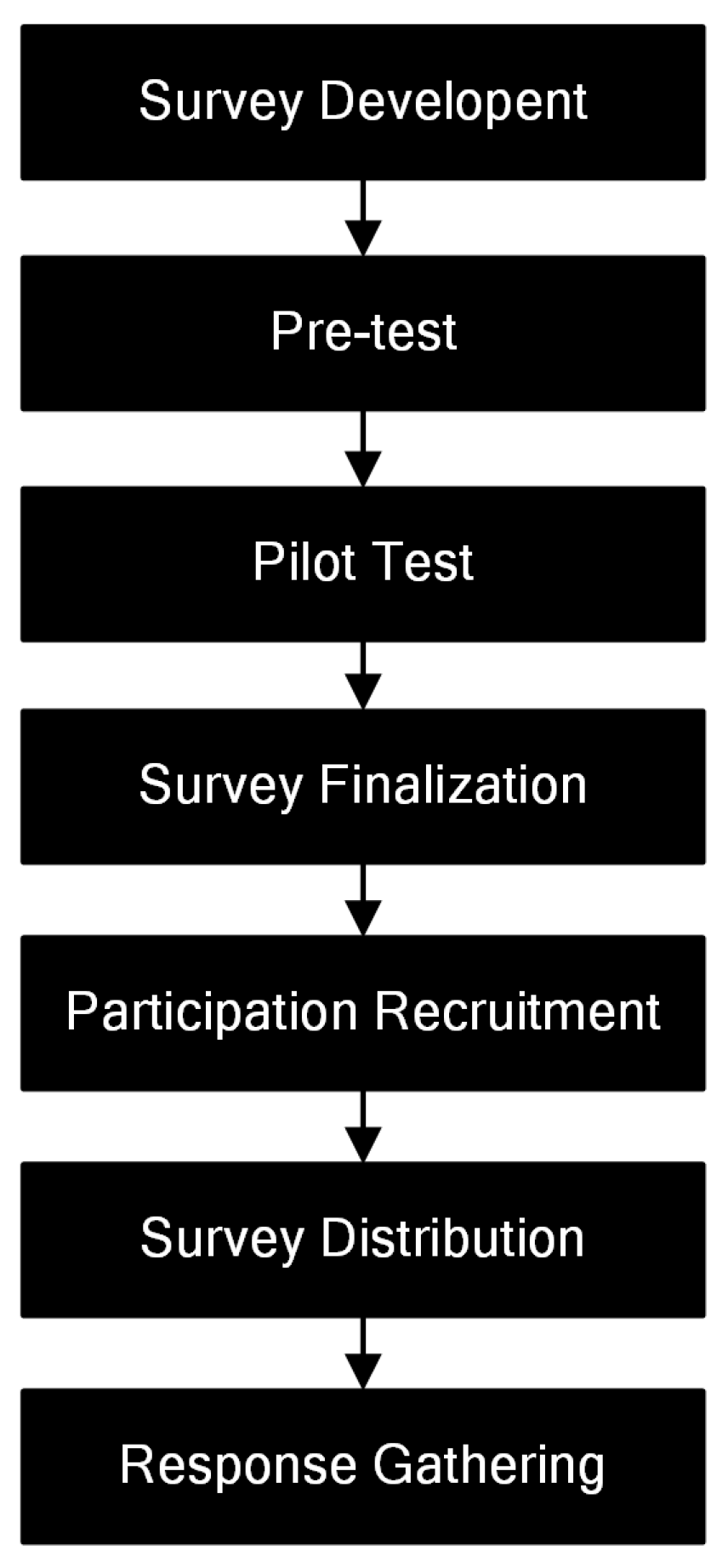

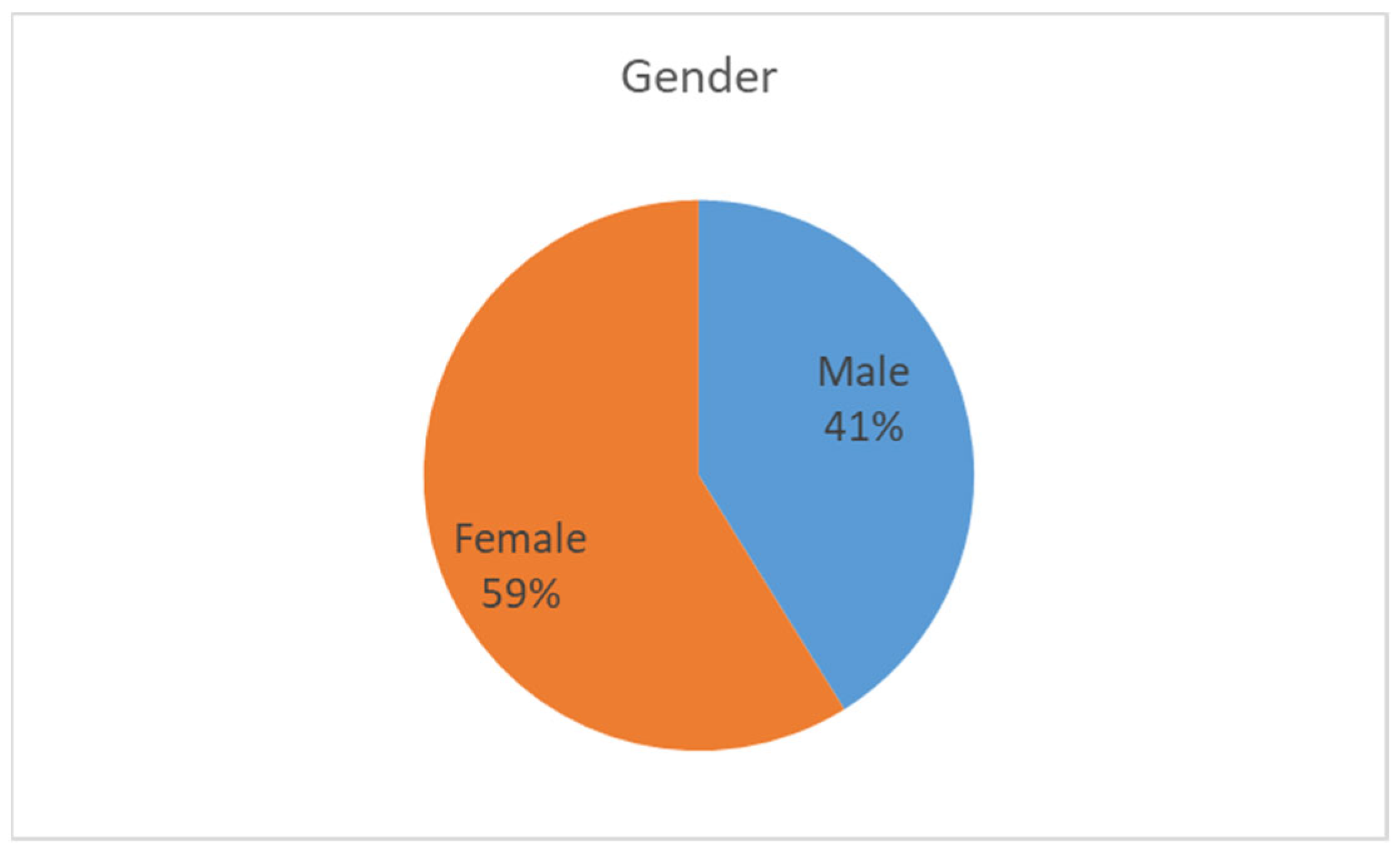

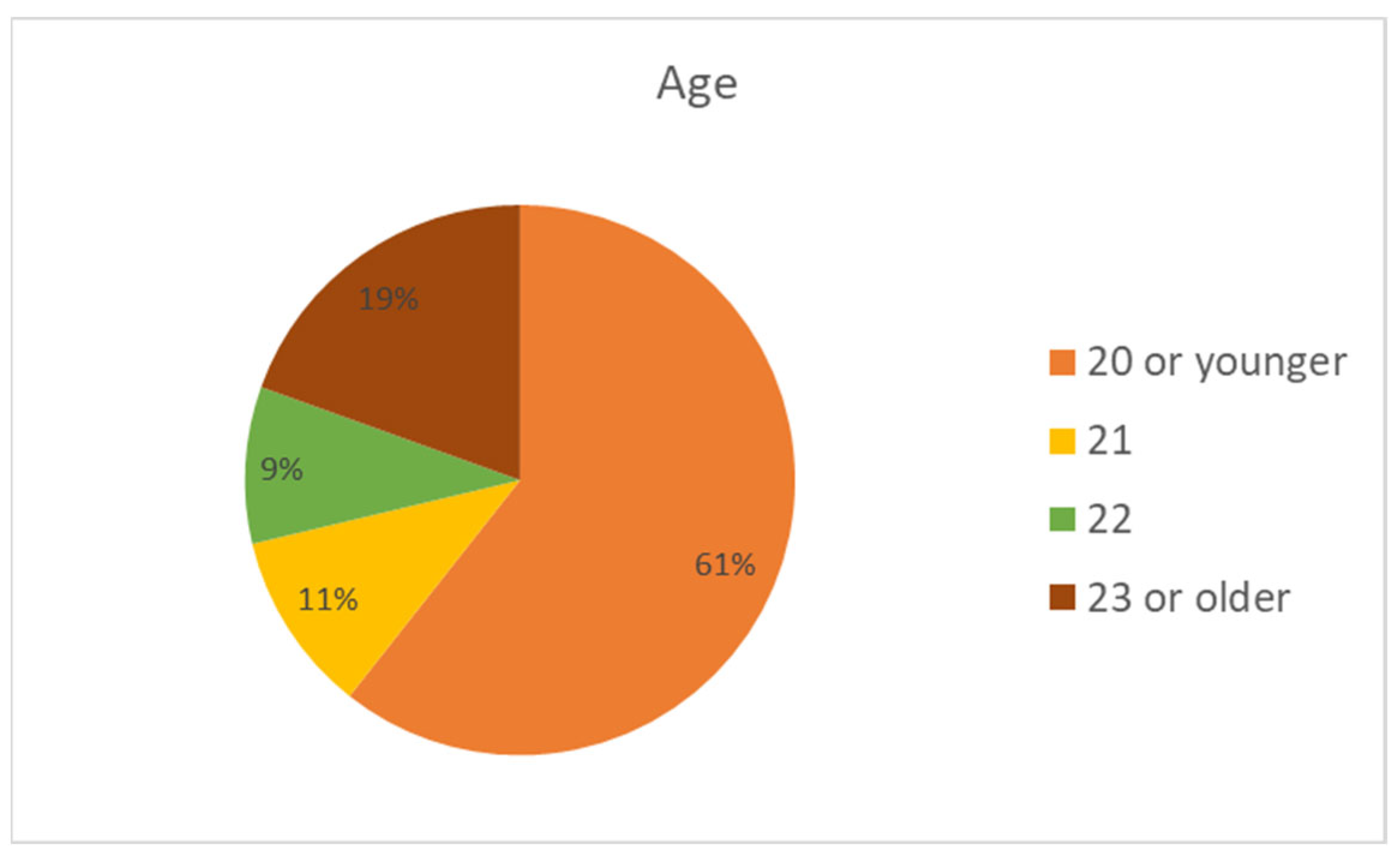

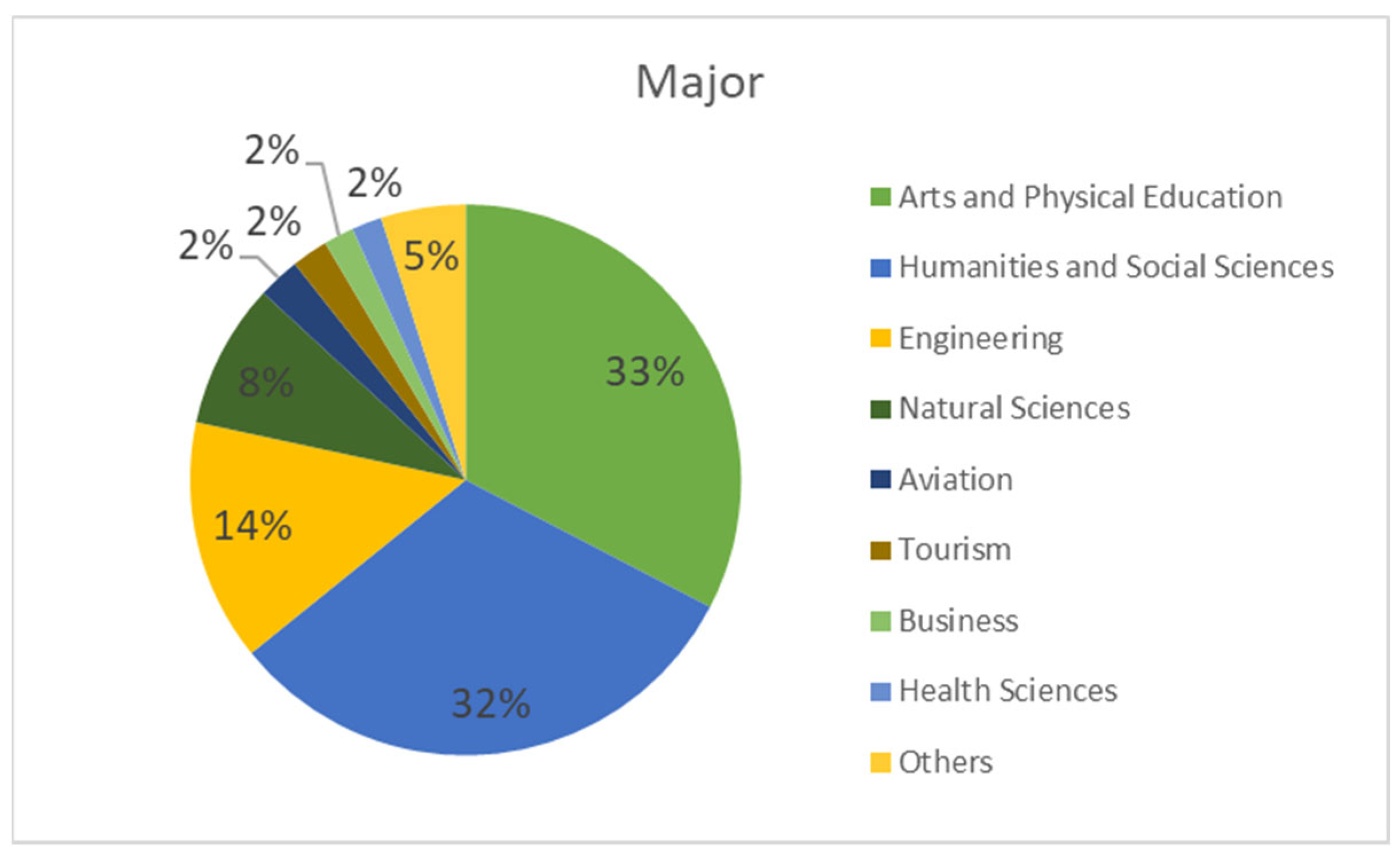

3.2. Data Collection

4. Results

4.1. Common Method Bias

4.2. Measurement Model

4.3. Structural Model

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training; OpenAI: San Francisco, CA, USA, 2018.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 140.

- Su, H.; Mokmin, N.A.M. Unveiling the Canvas: Sustainable Integration of AI in Visual Art Education. Sustainability 2024, 16, 7849.

- Holgado-Apaza, L.A.; Ulloa-Gallardo, N.J.; Aragon-Navarrete, R.N.; Riva-Ruiz, R.; Odagawa-Aragon, N.K.; Castellon-Apaza, D.D.; Carpio-Vargas, E.E.; Villasante-Saravia, F.H.; Alvarez-Rozas, T.P.; Quispe-Layme, M. The Exploration of Predictors for Peruvian Teachers’ Life Satisfaction through an Ensemble of Feature Selection Methods and Machine Learning. Sustainability 2024, 16, 7532.

- Huangfu, J.; Li, R.; Xu, J.; Pan, Y. Fostering Continuous Innovation in Creative Education: A Multi-Path Configurational Analysis of Continuous Collaboration with AIGC in Chinese ACG Educational Contexts. Sustainability 2025, 17, 144.

- Badini, S.; Regondi, S.; Frontoni, E.; Pugliese, R. Assessing the capabilities of ChatGPT to improve additive manufacturing troubleshooting. Adv. Ind. Eng. Polym. Res. 2023, 6, 278–287.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Nerdynav. 91 Important ChatGPT Statistics & User Numbers in April 2023 (GPT-4, Plugins Update). Available online: https://nerdynav.com/chatgpt-statistics/ (accessed on 23 April 2025).

- Krishnan, R.; Kandasamy, L. How ChatGPT Shapes Knowledge Acquisition and Career Trajectories in Higher Education: Decoding Students’ Perceptions to Achieve Quality Education. In Indigenous Empowerment Through Human-Machine Interactions; Emerald Publishing Limited: Leeds, UK, 2025; pp. 73–92.

- Naznin, K.; Al Mahmud, A.; Nguyen, M.T.; Chua, C. ChatGPT Integration in Higher Education for Personalized Learning, Academic Writing, and Coding Tasks: A Systematic Review. Computers 2025, 14, 53.

- Bhattacherjee, A. Understanding information systems continuance: An expectation-confirmation model. MIS Q. 2001, 25, 351–370.

- Jo, H. Determinants of continuance intention towards e-learning during COVID-19: An extended expectation-confirmation model. Asia Pac. J. Educ. 2022, 45, 479–499.

- Tam, C.; Santos, D.; Oliveira, T. Exploring the influential factors of continuance intention to use mobile Apps: Extending the expectation confirmation model. Inf. Syst. Front. 2020, 22, 243–257.

- Cheng, Y.-M. Extending the expectation-confirmation model with quality and flow to explore nurses’ continued blended e-learning intention. Inf. Technol. People 2014, 27, 230–258.

- Al-Sharafi, M.A.; Al-Emran, M.; Iranmanesh, M.; Al-Qaysi, N.; Iahad, N.A.; Arpaci, I. Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interact. Learn. Environ. 2022, 31, 7491–7510.

- Nguyen, D.M.; Chiu, Y.-T.H.; Le, H.D. Determinants of Continuance Intention towards Banks’ Chatbot Services in Vietnam: A Necessity for Sustainable Development. Sustainability 2021, 13, 7625.

- Brill, T.M.; Munoz, L.; Miller, R.J. Siri, Alexa, and other digital assistants: A study of customer satisfaction with artificial intelligence applications. J. Mark. Manag. 2019, 35, 1401–1436.

- Eren, B.A. Determinants of customer satisfaction in chatbot use: Evidence from a banking application in Turkey. Int. J. Bank Mark. 2021, 39, 294–311.

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- van Dis, E.A.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five priorities for research. Nature 2023, 614, 224–226.

- Fauzi, F.; Tuhuteru, L.; Sampe, F.; Ausat, A.M.A.; Hatta, H.R. Analysing the Role of ChatGPT in Improving Student Productivity in Higher Education. J. Educ. 2023, 5, 14886–14891.

- Jimenez, K. ChatGPT in the Classroom: Here’s What Teachers and Students Are Saying. Available online: https://www.usatoday.com/story/news/education/2023/03/01/what-teachers-students-saying-ai-chatgpt-use-classrooms/11340040002/ (accessed on 25 April 2025).

- Wang, Y.-Y.; Wang, Y.-S. Development and validation of an artificial intelligence anxiety scale: An initial application in predicting motivated learning behavior. Interact. Learn. Environ. 2022, 30, 619–634.

- Nass, C.I.; Brave, S. Wired for Speech: How Voice Activates and Advances the Human-Computer Relationship; MIT Press: Cambridge, MA, USA, 2005.

- Shum, H.-y.; He, X.-d.; Li, D. From Eliza to XiaoIce: Challenges and opportunities with social chatbots. Front. Inf. Technol. Electron. Eng. 2018, 19, 10–26.

- Balakrishnan, J.; Dwivedi, Y.K. Conversational commerce: Entering the next stage of AI-powered digital assistants. Ann. Oper. Res. 2021, 333, 653–687.

- Chen, J.-S.; Le, T.-T.-Y.; Florence, D. Usability and responsiveness of artificial intelligence chatbot on online customer experience in e-retailing. Int. J. Retail Distrib. Manag. 2021, 49, 1512–1531.

- Chopra, K. Indian shopper motivation to use artificial intelligence. Int. J. Retail Distrib. Manag. 2019, 47, 331–347.

- Lee, C.T.; Pan, L.-Y.; Hsieh, S.H. Artificial intelligent chatbots as brand promoters: A two-stage structural equation modeling-artificial neural network approach. Internet Res. 2022, 32, 1329–1356.

- Lin, T.; Zhang, J.; Xiong, B. Effects of Technology Perceptions, Teacher Beliefs, and AI Literacy on AI Technology Adoption in Sustainable Mathematics Education. Sustainability 2025, 17, 3698.

- Wu, R.; Gao, L.; Li, J.; Huang, Q.; Pan, Y. Key Factors Influencing Design Learners’ Behavioral Intention in Human-AI Collaboration Within the Educational Metaverse. Sustainability 2024, 16, 9942.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Tiwari, C.K.; Bhat, M.A.; Khan, S.T.; Subramaniam, R.; Khan, M.A.I. What drives students toward ChatGPT? An investigation of the factors influencing adoption and usage of ChatGPT. Interact. Technol. Smart Educ. 2024, 21, 333–355.

- Shahsavar, Y.; Choudhury, A. User Intentions to Use ChatGPT for Self-Diagnosis and Health-Related Purposes: Cross-sectional Survey Study. JMIR Hum. Factors 2023, 10, e47564.

- Choudhury, A.; Shamszare, H. Investigating the Impact of User Trust on the Adoption and Use of ChatGPT: Survey Analysis. J. Med. Internet Res. 2023, 25, e47184.

- Ngo, T.T.A.; An, G.K.; Nguyen, P.T.; Tran, T.T. Unlocking educational potential: Exploring students’ satisfaction and sustainable engagement with ChatGPT using the ECM model. J. Inf. Technol. Educ. Res. 2024, 23, 21.

- Chen, H.-J. Verifying the link of innovativeness to the confirmation-expectation model of ChatGPT of students in learning. J. Inf. Commun. Ethics Soc. 2025, 23, 433–447.

- Liu, G.; Ma, C. Measuring EFL learners’ use of ChatGPT in informal digital learning of English based on the technology acceptance model. Innov. Lang. Learn. Teach. 2024, 18, 125–138.

- Lai, C.Y.; Cheung, K.Y.; Chan, C.S. Exploring the role of intrinsic motivation in ChatGPT adoption to support active learning: An extension of the technology acceptance model. Comput. Educ. Artif. Intell. 2023, 5, 100178.

- Saif, N.; Khan, S.U.; Shaheen, I.; Alotaibi, F.A.; Alnfiai, M.M.; Arif, M. Chat-GPT; validating Technology Acceptance Model (TAM) in education sector via ubiquitous learning mechanism. Comput. Hum. Behav. 2024, 154, 108097.

- Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Rafiq, F.; Dogra, N.; Adil, M.; Wu, J.-Z. Examining consumer’s intention to adopt AI-chatbots in tourism using partial least squares structural equation modeling method. Mathematics 2022, 10, 2190.

- Nonaka, I.; Takeuchi, H. The Knowledge-Creating Company; Oxford University Press: New York, NY, USA, 1995; Volume 304.

- Wang, S.; Noe, R.A. Knowledge sharing: A review and directions for future research. Hum. Resour. Manag. Rev. 2010, 20, 115–131.

- Alavi, M.; Leidner, D.E. Knowledge management and knowledge management systems: Conceptual foundations and research issues. MIS Q. 2001, 25, 107–136.

- Yi, M.Y.; Hwang, Y. Predicting the use of web-based information systems: Self-efficacy, enjoyment, learning goal orientation, and the technology acceptance model. Int. J. Hum.-Comput. Stud. 2003, 59, 431–449.

- Wang, Y.S. Assessing e-commerce systems success: A respecification and validation of the DeLone and McLean model of IS success. Inf. Syst. J. 2008, 18, 529–557.

- Gourlay, S. Conceptualizing knowledge creation: A critique of Nonaka’s theory. J. Manag. Stud. 2006, 43, 1415–1436.

- Moussawi, S.; Koufaris, M.; Benbunan-Fich, R. How perceptions of intelligence and anthropomorphism affect adoption of personal intelligent agents. Electron. Mark. 2021, 31, 343–364.

- Liu, Y.; Li, H.; Carlsson, C. Factors driving the adoption of m-learning: An empirical study. Comput. Educ. 2010, 55, 1211–1219.

- Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Ashfaq, M.; Yun, J.; Yu, S.; Loureiro, S.M.C. I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telemat. Inform. 2020, 54, 101473.

- Yang, H.; Lee, H. Understanding user behavior of virtual personal assistant devices. Inf. Syst. E-Bus. Manag. 2019, 17, 65–87.

- Oliver, R.L. A cognitive model of the antecedents and consequences of satisfaction decisions. J. Mark. Res. 1980, 17, 460–469.

- Tarhini, A.; Masa’deh, R.e.; Al-Busaidi, K.A.; Mohammed, A.B.; Maqableh, M. Factors influencing students’ adoption of e-learning: A structural equation modeling approach. J. Int. Educ. Bus. 2017, 10, 164–182.

- McArthur, D.; Lewis, M.; Bishary, M. The roles of artificial intelligence in education: Current progress and future prospects. J. Educ. Technol. 2005, 1, 42–80.

- Alalwan, A.A.; Dwivedi, Y.K.; Rana, N.P.; Algharabat, R. Examining factors influencing Jordanian customers’ intentions and adoption of internet banking: Extending UTAUT2 with risk. J. Retail. Consum. Serv. 2018, 40, 125–138.

- Tarhini, A.; Hone, K.; Liu, X. A cross-cultural examination of the impact of social, organisational and individual factors on educational technology acceptance between British and Lebanese university students. Br. J. Educ. Technol. 2015, 46, 739–755.

- Han, S.; Yang, H. Understanding adoption of intelligent personal assistants: A parasocial relationship perspective. Ind. Manag. Data Syst. 2018, 118, 618–636.

- Cheng, Y.; Jiang, H. How do AI-driven chatbots impact user experience? Examining gratifications, perceived privacy risk, satisfaction, loyalty, and continued use. J. Broadcast. Electron. Media 2020, 64, 592–614.

- Duong, C.D.; Nguyen, T.H.; Ngo, T.V.N.; Pham, T.T.P.; Vu, A.T.; Dang, N.S. Using generative artificial intelligence (ChatGPT) for travel purposes: Parasocial interaction and tourists’ continuance intention. Tour. Rev. 2025, 80, 813–827.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Elshaer, I.A.; AlNajdi, S.M.; Salem, M.A. Sustainable AI Solutions for Empowering Visually Impaired Students: The Role of Assistive Technologies in Academic Success. Sustainability 2025, 17, 5609.

- Chan, G.; Cheung, C.; Kwong, T.; Limayem, M.; Zhu, L. Online consumer behavior: A review and agenda for future research. BLED 2003 Proc. 2003, 43.

- Nikou, S.A.; Economides, A.A. Mobile-based assessment: Investigating the factors that influence behavioral intention to use. Comput. Educ. 2017, 109, 56–73.

- Tanribilir, R.N. Analysing antecedence of an intelligent voice assistant use intention and behaviour. F1000Research 2021, 10, 496.

- Bali, S.; Edi, S.; Tsai-Ching, C.; Cheng-Yi, L.; Liu, M.-C. Social Influence, Personal Views, and Behavioral Intention in ChatGPT Adoption. J. Comput. Inf. Syst. 2024, 1–12.

- Gnewuch, U.; Morana, S.; Maedche, A. Towards Designing Cooperative and Social Conversational Agents for Customer Service. In Proceedings of the 38th International Conference on Information Systems (ICIS) 2017, Seoul, Republic of Korea, 10–13 December 2017.

- Treiblmaier, H.; Putz, L.-M.; Lowry, P.B. Setting a definition, context, and theory-based research agenda for the gamification of non-gaming applications. Assoc. Inf. Syst. Trans. Hum.-Comput. Interact. (THCI) 2018, 10, 129–163.

- Li, X.; Hess, T.J.; Valacich, J.S. Why do we trust new technology? A study of initial trust formation with organizational information systems. J. Strateg. Inf. Syst. 2008, 17, 39–71.

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

- Chin, W.W.; Marcolin, B.L.; Newsted, P.R. A partial least squares latent variable modeling approach for measuring interaction effects: Results from a Monte Carlo simulation study and an electronic-mail emotion/adoption study. Inf. Syst. Res. 2003, 14, 189–217. Available online: https://www.jstor.org/stable/23011467 (accessed on 25 April 2025).

- Hair, J.F.; Sarstedt, M.; Ringle, C.M.; Mena, J.A. An assessment of the use of partial least squares structural equation modeling in marketing research. J. Acad. Mark. Sci. 2012, 40, 414–433.

- Falk, R.F.; Miller, N.B. A Primer for Soft Modeling; University of Akron Press: Akron, OH, USA, 1992.

- Kock, N. WarpPLS 5.0 User Manual; ScriptWarp Systems: Laredo, TX, USA, 2015.

- Gefen, D.; Straub, D.W.; Boudreau, M.C. Structural equation modeling and regression: Guidelines for research practice. Commun. AIS 2000, 4, 1–79.

- Nunnally, J.C. Psychometric Theory, 2nd ed.; McGraw-Hill Book Company: New York, NY, USA, 1978.

- Hair, J.; Anderson, R.; Tatham, B.R. Multivariate Data Analysis, 6th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2006.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Henseler, J.; Hubona, G.; Ray, P.A. Using PLS path modeling in new technology research: Updated guidelines. Ind. Manag. Data Syst. 2016, 116, 2–20.

- Thong, J.Y.; Hong, S.-J.; Tam, K.Y. The effects of post-adoption beliefs on the expectation-confirmation model for information technology continuance. Int. J. Hum.-Comput. Stud. 2006, 64, 799–810.

- Limayem, M.; Hirt, S.G.; Cheung, C.M. How habit limits the predictive power of intention: The case of information systems continuance. MIS Q. 2007, 31, 705–737.

- Romero-Rodríguez, J.-M.; Ramírez-Montoya, M.-S.; Buenestado-Fernández, M.; Lara-Lara, F. Use of ChatGPT at university as a tool for complex thinking: Students’ perceived usefulness. J. New Approaches Educ. Res. 2023, 12, 323–339.

- Alshammari, S.H.; Babu, E. The mediating role of satisfaction in the relationship between perceived usefulness, perceived ease of use and students’ behavioural intention to use ChatGPT. Sci. Rep. 2025, 15, 7169.

- Rahaman, M.S.; Ahsan, M.T.; Anjum, N.; Dana, L.P.; Salamzadeh, A.; Sarker, D.; Rahman, M.M. ChatGPT in Sustainable Business, Economics, and Entrepreneurial World: Perceived Usefulness, Drawbacks, and Future Research Agenda. J. Entrep. Bus. Econ. 2024, 12, 88–123.

- Ma, J.; Wang, P.; Li, B.; Wang, T.; Pang, X.S.; Wang, D. Exploring user adoption of ChatGPT: A technology acceptance model perspective. Int. J. Hum.-Comput. Interact. 2025, 41, 1431–1445.

- Sallam, M.; Elsayed, W.; Al-Shorbagy, M.; Barakat, M.; El Khatib, S.; Ghach, W.; Alwan, N.; Hallit, S.; Malaeb, D. ChatGPT usage and attitudes are driven by perceptions of usefulness, ease of use, risks, and psycho-social impact: A study among university students in the UAE. Front. Educ. 2024, 9, 1414758.

- Kim, M.K.; Jhee, S.Y.; Han, S.-L. The Impact of Chat GPT’s Quality Factors on Perceived Usefulness, Perceived Enjoyment, and Continuous Usage Intention Using the IS Success Model. Asia Mark. J. 2025, 26, 243–254.

- Schillaci, C.E.; de Cosmo, L.M.; Piper, L.; Nicotra, M.; Guido, G. Anthropomorphic chatbots’ for future healthcare services: Effects of personality, gender, and roles on source credibility, user satisfaction, and intention to use. Technol. Forecast. Soc. Chang. 2024, 199, 123025.

- Liu, W.; Jiang, M.; Li, W.; Mou, J. How does the anthropomorphism of AI chatbots facilitate users’ reuse intention in online health consultation services? The moderating role of disease severity. Technol. Forecast. Soc. Chang. 2024, 203, 123407.

- Casheekar, A.; Lahiri, A.; Rath, K.; Prabhakar, K.S.; Srinivasan, K. A contemporary review on chatbots, AI-powered virtual conversational agents, ChatGPT: Applications, open challenges and future research directions. Comput. Sci. Rev. 2024, 52, 100632.

- Zhu, Y.; Zhang, R.; Zou, Y.; Jin, D. Investigating customers’ responses to artificial intelligence chatbots in online travel agencies: The moderating role of product familiarity. J. Hosp. Tour. Technol. 2023, 14, 208–224.

| Construct | Item | Description | Source |
|---|---|---|---|
| Knowledge Application | KAP1 | Generative AI provides me with instant access to various types of knowledge. | Al-Sharafi, et al. [15] |
| | KAP2 | Generative AI allows me to integrate different types of knowledge. | |
| | KAP3 | Generative AI can help us better manage the course materials within the university. | |
| Perceived Intelligence | PIE1 | I feel that generative AI for learning is competent. | Rafiq, et al. [42] |
| | PIE2 | I feel that generative AI for learning is knowledgeable. | |
| | PIE3 | I feel that generative AI for learning is intelligent. | |
| Perceived Usefulness | PUS1 | I find generative AI useful in my daily life. | Davis [32] |
| | PUS2 | Using generative AI helps me to accomplish things more quickly. | |
| | PUS3 | Using generative AI increases my productivity. | |
| Confirmation | CON1 | My experience with using generative AI is better than what I expected. | Bhattacherjee [11] |
| | CON2 | The service level provided by generative AI is better than I expected. | |
| | CON3 | Overall, most of my expectations from using generative AI are confirmed. | |
| Satisfaction | SAT1 | I am very satisfied with generative AI. | Bhattacherjee [11]; Nguyen, et al. [16] |
| | SAT2 | Generative AI meets my expectations. | |
| | SAT3 | Generative AI meets my needs and requirements. | |
| Social Influence | SOI1 | People who influence me think that I should use generative AI. | Venkatesh, et al. [72] |
| | SOI2 | People who are important to me think I should use generative AI. | |
| | SOI3 | Most people who are important to me understand that I use generative AI. | |
| AI Configuration | CFG1 | I find humanoid generative AI scary. | Wang and Wang [23] |
| | CFG2 | I find humanoid generative AI intimidating. | |
| | CFG3 | I don’t know why, but humanoid generative AI scares me. | |
| Continuance Intention | COI1 | I intend to continue using generative AI in the future. | Bhattacherjee [11] |
| | COI2 | I will always try to use generative AI in my daily life. | |
| | COI3 | I will strongly recommend others to use generative AI. | |

| Construct | Item | Mean | St. Dev. | Factor Loading | Cronbach’s Alpha | CR (rho_c) | AVE |
|---|---|---|---|---|---|---|---|
| Knowledge Application | KAP1 | 5.688 | 1.250 | 0.888 | 0.875 | 0.923 | 0.800 |
| | KAP2 | 5.645 | 1.294 | 0.923 | | | |
| | KAP3 | 5.450 | 1.441 | 0.871 | | | |
| Perceived Intelligence | PIE1 | 5.851 | 1.232 | 0.868 | 0.829 | 0.897 | 0.745 |
| | PIE2 | 5.234 | 1.486 | 0.827 | | | |
| | PIE3 | 5.496 | 1.300 | 0.892 | | | |
| Perceived Usefulness | PUS1 | 5.819 | 1.232 | 0.827 | 0.835 | 0.901 | 0.753 |
| | PUS2 | 6.046 | 1.171 | 0.901 | | | |
| | PUS3 | 5.720 | 1.300 | 0.873 | | | |
| Confirmation | CON1 | 5.582 | 1.322 | 0.931 | 0.915 | 0.947 | 0.855 |
| | CON2 | 5.546 | 1.339 | 0.938 | | | |
| | CON3 | 5.489 | 1.255 | 0.905 | | | |
| Satisfaction | CSA1 | 5.571 | 1.256 | 0.933 | 0.925 | 0.952 | 0.869 |
| | CSA2 | 5.404 | 1.310 | 0.947 | | | |
| | CSA3 | 5.383 | 1.322 | 0.916 | | | |
| Social Influence | SOI1 | 4.582 | 1.737 | 0.920 | 0.863 | 0.917 | 0.787 |
| | SOI2 | 4.511 | 1.791 | 0.921 | | | |
| | SOI3 | 5.376 | 1.313 | 0.816 | | | |
| AI Configuration | CFG1 | 4.862 | 1.818 | 0.941 | 0.839 | 0.862 | 0.678 |
| | CFG2 | 4.259 | 1.818 | 0.745 | | | |
| | CFG3 | 3.238 | 1.927 | 0.771 | | | |
| Continuance Intention | COI1 | 5.755 | 1.275 | 0.885 | 0.871 | 0.921 | 0.795 |
| | COI2 | 5.074 | 1.652 | 0.879 | | | |
| | COI3 | 5.280 | 1.448 | 0.912 | | | |
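
The CR (rho_c) and AVE columns above can be recomputed from the factor loadings alone. The sketch below is illustrative rather than the authors' procedure: it assumes the loadings are standardized and uses the conventional formulas, AVE as the mean squared loading and CR as the squared sum of loadings divided by that quantity plus the summed error variances. The function names `ave` and `composite_reliability` are hypothetical.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized loadings."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Factor loadings copied from the table above (two constructs shown).
loadings = {
    "Knowledge Application": [0.888, 0.923, 0.871],
    "AI Configuration": [0.941, 0.745, 0.771],
}

for construct, lam in loadings.items():
    cr = composite_reliability(lam)
    print(f"{construct}: CR = {cr:.3f}, AVE = {ave(lam):.3f}")
```

For these two constructs the recomputed values round to the CR and AVE reported in the table (0.923 and 0.800; 0.862 and 0.678), which suggests the reported figures follow these standard definitions.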

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1. Knowledge Application | 0.894 | | | | | | | |
| 2. Perceived Intelligence | 0.681 | 0.863 | | | | | | |
| 3. Perceived Usefulness | 0.715 | 0.748 | 0.868 | | | | | |
| 4. Confirmation | 0.647 | 0.734 | 0.771 | 0.925 | | | | |
| 5. Satisfaction | 0.657 | 0.746 | 0.722 | 0.862 | 0.932 | | | |
| 6. Social Influence | 0.513 | 0.558 | 0.564 | 0.637 | 0.705 | 0.887 | | |
| 7. AI Configuration | 0.142 | 0.107 | 0.171 | 0.125 | 0.142 | 0.216 | 0.824 | |
| 8. Continuance Intention | 0.666 | 0.661 | 0.694 | 0.717 | 0.746 | 0.670 | 0.108 | 0.892 |
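
The matrix above has the layout used for the Fornell-Larcker criterion [80]: each diagonal entry should exceed every correlation in its row and column. A minimal sketch of that check follows, assuming (as the values suggest) that the diagonal holds the square root of each construct's AVE. The construct abbreviations and the function name `fornell_larcker_violations` are illustrative.

```python
names = ["KAP", "PIE", "PUS", "CON", "SAT", "SOI", "CFG", "COI"]

# Lower triangle copied from the table above. The last value in each row is
# the diagonal entry (assumed sqrt(AVE)); the others are correlations.
rows = [
    [0.894],
    [0.681, 0.863],
    [0.715, 0.748, 0.868],
    [0.647, 0.734, 0.771, 0.925],
    [0.657, 0.746, 0.722, 0.862, 0.932],
    [0.513, 0.558, 0.564, 0.637, 0.705, 0.887],
    [0.142, 0.107, 0.171, 0.125, 0.142, 0.216, 0.824],
    [0.666, 0.661, 0.694, 0.717, 0.746, 0.670, 0.108, 0.892],
]


def fornell_larcker_violations(rows, names):
    """Return pairs whose correlation is not below both diagonal entries."""
    violations = []
    for i, row in enumerate(rows):
        for j in range(i):
            r = abs(row[j])
            if r >= rows[i][i] or r >= rows[j][j]:
                violations.append((names[i], names[j], row[j]))
    return violations


print(fornell_larcker_violations(rows, names))
```

An empty list means every construct pair satisfies the criterion. The closest pair in this table is Confirmation and Satisfaction (0.862 against diagonals of 0.925 and 0.932).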

| H | Predictor | Outcome | β | t | p | Hypothesis |
|---|---|---|---|---|---|---|
| H1a | Knowledge Application | Perceived Usefulness | 0.275 | 4.854 | 0.000 | Supported |
| H1b | Knowledge Application | Confirmation | 0.274 | 4.380 | 0.000 | Supported |
| H2a | Perceived Intelligence | Perceived Usefulness | 0.271 | 4.729 | 0.000 | Supported |
| H2b | Perceived Intelligence | Confirmation | 0.547 | 10.955 | 0.000 | Supported |
| H3a | Perceived Usefulness | Satisfaction | 0.142 | 3.039 | 0.002 | Supported |
| H3b | Perceived Usefulness | Continuance Intention | 0.303 | 4.998 | 0.000 | Supported |
| H4a | Confirmation | Perceived Usefulness | 0.394 | 5.829 | 0.000 | Supported |
| H4b | Confirmation | Satisfaction | 0.752 | 17.689 | 0.000 | Supported |
| H5a | Satisfaction | Continuance Intention | 0.347 | 4.277 | 0.000 | Supported |
| H6 | Social Influence | Continuance Intention | 0.264 | 3.878 | 0.000 | Supported |
| H7 | AI Configuration | Continuance Intention | −0.050 | 0.989 | 0.323 | Not Supported |
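
The p column above can be related to the t column with a short calculation. The sketch below is an assumption-laden illustration, not the authors' software output: it treats each t-statistic as approximately standard normal (reasonable for large samples) and applies a two-tailed test at an assumed 5% significance level. The function name `two_tailed_p` is hypothetical.

```python
import math


def two_tailed_p(t):
    """Two-tailed p-value for a t-statistic under a standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2))


ALPHA = 0.05  # assumed significance level

# t-statistics copied from the table above (three paths shown).
t_values = {"H3a": 3.039, "H6": 3.878, "H7": 0.989}

for hypothesis, t in t_values.items():
    p = two_tailed_p(t)
    verdict = "significant" if p < ALPHA else "not significant"
    print(f"{hypothesis}: t = {t:.3f}, p = {p:.3f} ({verdict})")
```

Under this approximation the H3a and H7 paths give p-values that round to the reported 0.002 and 0.323, so only the AI Configuration path fails to reach significance at the assumed level.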

| Study | Research Focus | Theoretical Framework | Methodology | Sample Characteristics | Key Findings (PU) | Implications |
|---|---|---|---|---|---|---|
| Current Study | Post-adoption of Generative AI in education | TAM + ECM + TPB | PLS-SEM, online survey | 282 Korean university students | PU significantly affects satisfaction and continuance intention | Suggests design and training to improve PU |
| [84] | Acceptance of ChatGPT | UTAUT2 | Online survey | 400 Spanish students | Experience strongly influences PU | Promote experience-based learning with ChatGPT |
| [85] | Role of satisfaction in ChatGPT use | TAM | AMOS SEM | 297 students | PU affects satisfaction and behavioral intention | Reinforce usefulness to sustain use |
| [86] | ChatGPT in business/entrepreneurship | Scoping Review | Thematic synthesis | 40 studies (mixed domains) | PU helps decision-making | Need reliability improvement |
| [87] | Adoption of ChatGPT | TAM | Survey, SEM | 784 Chinese users | PU directly influences intention | Improve PU and PEU for adoption |
| [88] | Usage and attitudes in UAE | TAME-ChatGPT (based on TAM) | Cross-sectional, e-survey | 608 UAE students | PU and PEU drive usage and attitudes | Address risks to maximize PU |
| [89] | IS Success Model and ChatGPT | IS Success Model | Survey | 225 experienced users | Quality factors → PU → usage intention | Improve design and functionality |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jung, Y.M.; Jo, H. Understanding Continuance Intention of Generative AI in Education: An ECM-Based Study for Sustainable Learning Engagement. Sustainability 2025, 17, 6082. https://doi.org/10.3390/su17136082
