A Hybrid Framework of Deep Learning Techniques to Predict Online Performance of Learners during COVID-19 Pandemic

Abstract

1. Introduction

- Previous studies built learner-prediction models for a single course only, which made those models too specific to generalize.

- Maintaining a separate learner-prediction model for each course is inefficient because resources must be allocated to every model individually, which is burdensome. A more generic model is therefore needed.

- Recent studies have been limited by the small number of responses available as training data, which raises concerns about the scalability of the resulting models.

- The efficiency of current approaches is hindered by several challenges, such as data imbalance, misclassification, and an insufficient set of features considered when assessing student performance.

- The current study proposes a framework that predicts learner performance across multiple courses, which removes the need to build a separate model for each course. In short, the framework is generic enough to make sound and valid predictions while taking multiple factors into account.

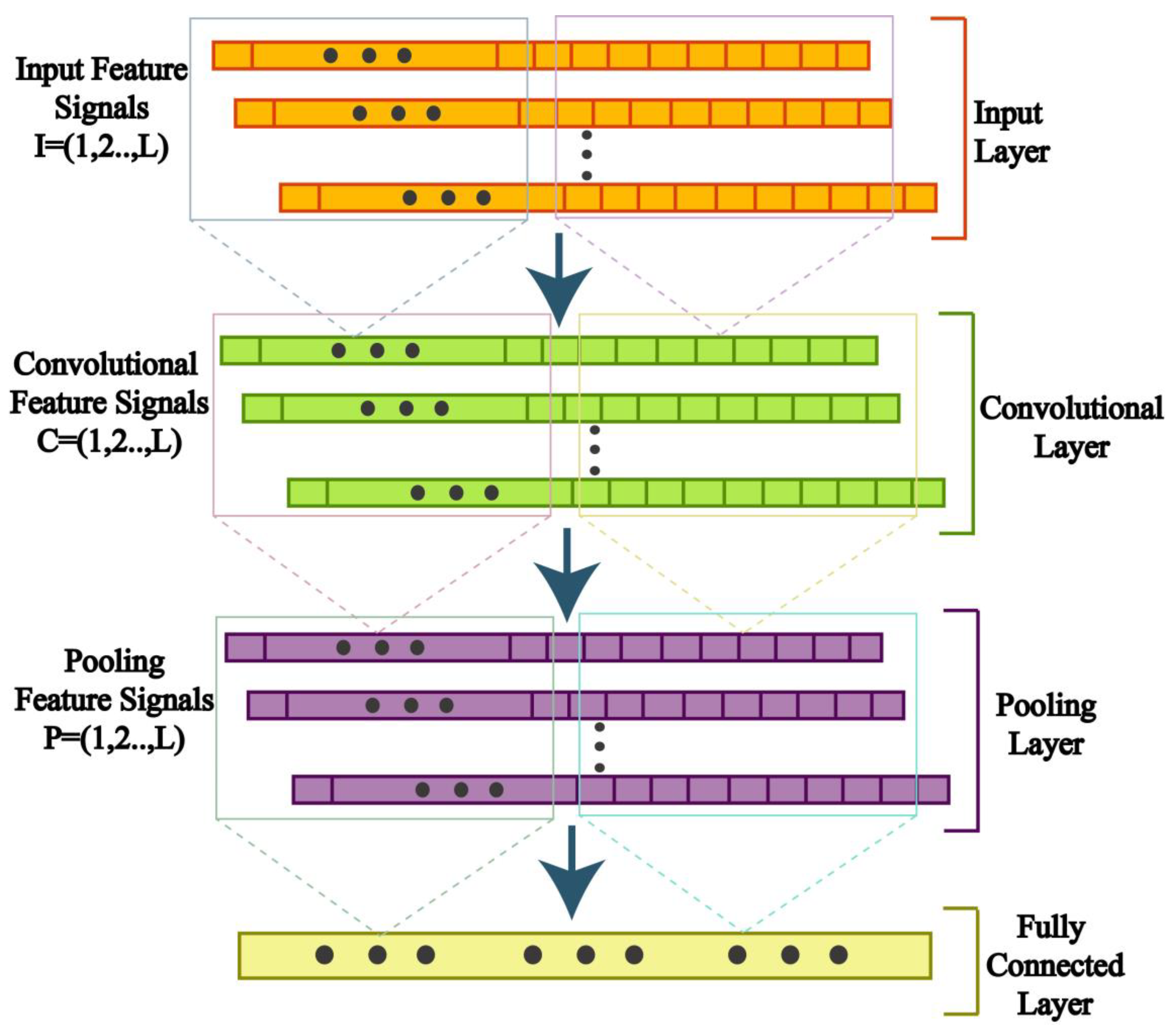

- To develop a reliable and effective learner outcome prediction model, a hybrid deep learning model is presented. Combining deep learning classifiers (1D-CNN and LSTM) produces a robust model that can more accurately predict learner outcomes from student performance in online learning during the COVID-19 period.

- Online survey responses were collected from higher education students and their performance was analyzed. This information was used to develop an adaptable model that considers a sufficient number of data points that could affect student performance.

- This research used the SMOTE method for data resampling and the median filtering approach for data imputation. The CNN layers automatically performed feature extraction and attribute selection to determine which features most significantly affect the predictions made about learners.

- The hybrid deep learning model utilized in this research has improved accuracy in visualizing the presence of experts in the field of advanced study and has helped to attain precision education by assisting weak students.

2. Literature Review

3. Proposed Framework for Performance Assessment

3.1. Materials & Methods

3.1.1. Data Acquisition

3.1.2. Resampling Data
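
The introduction states that SMOTE was used for data resampling. As a rough illustration of the idea only, the sketch below creates synthetic minority-class rows by interpolating between a minority sample and one of its nearest minority neighbours. The function name `smote`, the toy feature values, and the choice of `k` are assumptions made for this example and are not taken from the study.

```python
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic rows by interpolating between a minority sample
    and one of its k nearest minority-class neighbours."""
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    if k < 1:
        raise ValueError("SMOTE needs at least two minority samples")

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest neighbours of `base` within the minority class.
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: sq_dist(base, minority[j]),
        )[:k]
        other = minority[rng.choice(neighbours)]
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic


# Hypothetical toy data: 3 "At Risk" rows against 8 "Safe" rows, two features each.
at_risk = [[1.0, 2.0], [1.5, 1.8], [1.2, 2.4]]
safe_count = 8
new_rows = smote(at_risk, n_synthetic=safe_count - len(at_risk), k=2)
balanced_at_risk = at_risk + new_rows
print(len(balanced_at_risk))
```

Because every synthetic row lies on a line segment between two real minority rows, the new points stay inside the region already covered by the minority class. Resampling of this kind is normally applied to the training split only, so that synthetic rows do not leak into evaluation data.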

3.1.3. Data Pre-Processing Stage
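
The introduction mentions a median filtering approach for data imputation. One plausible reading, sketched below, is to fill each missing entry with the median of the observed values in a small window around it. The helper name `median_impute`, the window width, and the sample responses are hypothetical; the study's exact procedure may differ.

```python
from statistics import median


def median_impute(values, window=3):
    """Replace each missing entry (None) with the median of the observed
    values inside a centred window of the given odd width."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        # Only originally observed values are used, never earlier imputations.
        observed = [x for x in values[lo:hi] if x is not None]
        if observed:  # leave the gap if the whole window is missing
            filled[i] = median(observed)
    return filled


# Hypothetical Likert responses (1 to 5) for one survey item, with two gaps.
responses = [4, 5, None, 4, 2, None, 3]
imputed = median_impute(responses, window=3)
print(imputed)
```

The median is less sensitive to a single extreme response than the mean, which is the usual motivation for median-based filling. For strictly ordinal data, `statistics.median_low` could be swapped in so that imputed values stay on the original scale.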

3.1.4. Feature Selection and Extraction

3.1.5. Machine Learning Classifiers

Convolutional Neural Networks (CNN)
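
In the framework, the CNN layers perform feature extraction. To make that operation concrete, the following sketch computes what a single 1D convolution filter followed by ReLU and max pooling produces for one input vector. The kernel weights and the input values are hypothetical; in a trained network the weights are learned, and many filters run in parallel.

```python
def conv1d(signal, kernel, bias=0.0):
    """Valid (no padding), stride-1 cross-correlation followed by ReLU."""
    k = len(kernel)
    out = []
    for start in range(len(signal) - k + 1):
        window = signal[start:start + k]
        activation = sum(w * x for w, x in zip(kernel, window)) + bias
        out.append(max(0.0, activation))  # ReLU
    return out


def max_pool1d(signal, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


# Hypothetical encoded survey answers for one learner (8 features).
features = [4, 5, 3, 4, 2, 1, 3, 5]
kernel = [1.0, 0.0, -1.0]  # hypothetical filter weights

feature_map = conv1d(features, kernel)
pooled = max_pool1d(feature_map, size=2)
print(feature_map)
print(pooled)
```

Each output position summarizes a local group of adjacent inputs, and pooling then keeps the strongest response in each pair, which shortens the sequence passed to later layers.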

Long Short-Term Memory (LSTM)
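
The hybrid model pairs the 1D-CNN with an LSTM. As a reminder of what an LSTM computes, the sketch below implements one time step of the standard LSTM cell for a single input and a single hidden unit, then runs it over a short sequence. All parameter values and the input sequence are hypothetical placeholders; this is not the configuration used in the study.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell (one input, one hidden unit)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # new cell state
    h = o * math.tanh(c)     # new hidden state
    return h, c


# Hypothetical parameters: every weight 0.5, every bias 0.0.
params = {name: 0.5 for name in ("wf", "uf", "wi", "ui", "wo", "uo", "wg", "ug")}
params.update({"bf": 0.0, "bi": 0.0, "bo": 0.0, "bg": 0.0})

# Hypothetical input sequence, e.g. pooled outputs from a convolution layer.
sequence = [1.0, 3.0, 0.0]
h, c = 0.0, 0.0
for x in sequence:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The forget and input gates decide how much of the previous cell state to keep and how much new information to write, which is what lets the cell carry information across time steps. In a CNN-LSTM hybrid, the final hidden state would typically feed a dense classification layer.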

3.1.6. Performance Authentication of Model

4. Experiment and Results

Dataset Description

5. Conclusions and Future Recommendations

- Applying the deep learning architecture to the period after COVID-19.

- To mitigate computational challenges, researchers could explore feature reduction techniques or alternative models, such as simpler RNN variants or attention-based models, that balance predictive performance and computational efficiency.

- Increasing the size of the dataset and looking into data augmentation techniques can help prevent overfitting and lead to a more generalized model.

- The proposed framework could serve as an example for other developing nations facing similar difficulties due to the COVID-19 pandemic.

- Using pre-trained models through transfer learning to improve model performance even further.

- Exploring alternative deep learning architectures, such as recurrent neural networks (RNNs), GRUs, or transformers, to enhance efficiency and capture multifaceted student performance data.

- A combination of synchronous and asynchronous learning opportunities across various subject areas should be a focus of future development.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gomede, E.; Gaffo, F.H.; Briganó, G.U.; De Barros, R.M.; Mendes, L.D.S. Application of computational intelligence to improve education in smart cities. Sensors 2018, 267, 267. [Google Scholar] [CrossRef] [Green Version]

- Al Absi, S.M.; Jabbar, A.H.; Mezan, S.O.; Al-Rawi, B.A.; Al_Attabi, S.T. An experimental test of the performance enhancement of a Savonius turbine by modifying the inner surface of a blade. Mater. Today Proc. 2021, 42, 2233–2240. [Google Scholar] [CrossRef]

- Loganathan, M.K.; Tan, C.M.; Mishra, B.; Msagati, T.A.; Snyman, L.W. Review and selection of advanced battery technologies for post-2020 era electric vehicles. In Proceedings of the 2019 IEEE Transportation Electrification Conference, Bengaluru, India, 17–19 December 2019; pp. 1–5. [Google Scholar]

- Yang, S.J.H. Precision education: New challenges for AI in education [conference keynote]. In Proceedings of the 27th International Conference on Computers in Education (ICCE), Kenting, Taiwan, 2–6 December 2019; Asia-Pacific Society for Computers in Education APSCE: Kenting, Taiwan, 2019. [Google Scholar]

- Cook, C.R.; Kilgus, S.P.; Burns, M.K. Advancing the science and practice of precision education to enhance student outcomes. J. Sch. Psychol. 2018, 66, 4–10. [Google Scholar] [CrossRef] [PubMed]

- Maldonado-Mahauad, J.; Pérez-Sanagustín, M.; Kizilcec, R.F.; Morales, N.; Munoz-Gama, J. Mining theory-based patterns from Big data: Identifying self-regulated learning strategies in Massive Open Online Courses. Comput. Hum. Behav. 2018, 80, 179–196. [Google Scholar] [CrossRef]

- Baker, E. (Ed.) International Encyclopedia of Education, 3rd ed.; Elsevier: Oxford, UK, 2010. [Google Scholar]

- Siemens, G.; Long, P. Penetrating the fog: Analytics in learning and education. Educ. Rev. 2011, 46, 30. [Google Scholar]

- Alsuwaiket, M.; Blasi, A.H.; Al-Msie’deen, R.F. Formulating module assessment for Improved academic performance predictability in higher education. Eng. Technol. Appl. Sci. Res. 2019, 9, 4287–4291. [Google Scholar] [CrossRef]

- Garg, R.K.; Bhola, J.; Soni, S.K. Healthcare monitoring of mountaineers by low power wireless sensor networks. Inform. Med. Unlocked 2021, 27, 100775. [Google Scholar] [CrossRef]

- Yan, Z.; Yu, Y.; Shabaz, M. Optimization research on deep learning and temporal segmentation algorithm of video shot in basketball games. Comput. Intell. Neurosci. 2021, 2021, 4674140. [Google Scholar] [CrossRef] [PubMed]

- Alshareef, F.; Alhakami, H.; Alsubait, T.; Baz, A. Educational Data Mining Applications and Techniques. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 729–734. [Google Scholar] [CrossRef]

- Du, X.; Yang, J.; Hung, J.L.; Shelton, B. Educational data mining: A systematic review of research and emerging trends. Inf. Discov. Deliv. 2020, 48, 236–255. [Google Scholar] [CrossRef]

- Mahajan, G.; Saini, B. Educational Data Mining: A state-of-the-art survey on tools and techniques used in EDM. Int. J. Comput. Appl. Inf. Technol. 2020, 12, 310–316. [Google Scholar]

- Salloum, S.A.; Alshurideh, M.; Elnagar, A.; Shaalan, K. Mining in educational data: Review and future directions. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 92–102. [Google Scholar]

- Paulsen, M.F.; Nipper, S.; Holmberg, C. Online Education: Learning Management Systems: Global E-Learning in a Scandinavian Perspective; NKI Gorlaget: Oslo, Norway, 2003. [Google Scholar]

- Palvia, S.; Aeron, P.; Gupta, P.; Mahapatra, D.; Parida, R.; Rosner, R.; Sindhi, S. Online education: Worldwide status, challenges, trends, and implications. J. Glob. Inf. Technol. Manag. 2018, 21, 233–241. [Google Scholar]

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260. [Google Scholar] [CrossRef]

- Bates, R.; Khasawneh, S. Self-efficacy and college students’ perceptions and use of online learning systems. Comput. Hum. Behav. 2007, 23, 175–191. [Google Scholar]

- Means, B.; Toyama, Y.; Murphy, R.; Bakia, M.; Jones, K. Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies. In Learning Unbound: Select Research and Analyses of Distance Education and Online Learning; Department of Education, US: Washington, DC, USA, 2012. Available online: https://www2.ed.gov/rschstat/eval/tech/evidence-based-practices/finalreport.pdf (accessed on 5 July 2023).

- Dias, S.B.; Hadjileontiadou, S.J.; Diniz, J.; Hadjileontiadis, L.J. DeepLMS: A deep learning predictive model for supporting online learning in the COVID-19 era. Sci. Rep. 2020, 10, 19888. [Google Scholar] [CrossRef] [PubMed]

- Dascalu, M.D.; Ruseti, S.; Dascalu, M.; McNamara, D.S.; Carabas, M.; Rebedea, T.; Trausan-Matu, S. Before and during COVID-19: A Cohesion Network Analysis of students’ online participation in moodle courses. Comput. Hum. Behav. 2021, 121, 106780. [Google Scholar]

- Bello, G.; Pennisi, M.A.; Maviglia, R.; Maggiore, S.M.; Bocci, M.G.; Montini, L.; Antonelli, M. Online vs live methods for teaching difficult airway management to anesthesiology residents. Intensive Care Med. 2005, 31, 547–552. [Google Scholar] [CrossRef]

- Al-Azzam, N.; Elsalem, L.; Gombedza, F. A cross-sectional study to determine factors affecting dental and medical students’ preference for virtual learning during the COVID-19 outbreak. Heliyon 2020, 6, e05704. [Google Scholar]

- Chen, E.; Kaczmarek, K.; Ohyama, H. Student perceptions of distance learning strategies during COVID-19. J. Dent. Educ. 2020, 85, 1190. [Google Scholar] [CrossRef]

- Abbasi, S.; Ayoob, T.; Malik, A.; Memon, S.I. Perceptions of students regarding E-learning during COVID-19 at a private medical college. Pak. J. Med. Sci. 2020, 36, S57. [Google Scholar] [CrossRef]

- Means, B.; Bakia, M.; Murphy, R. Learning Online: What Research Tells Us about Whether, When and How; Routledge: Oxfordshire, UK, 2014. [Google Scholar]

- Hooda, M.; Rana, C.; Dahiya, O.; Shet, J.P.; Singh, B.K. Integrating LA and EDM for Improving Students Success in Higher Education Using FCN Algorithm. Math. Probl. Eng. 2022, 2022, 7690103. [Google Scholar] [CrossRef]

- Göppert, A.; Mohring, L.; Schmitt, R.H. Predicting performance indicators with ANNs for AI-based online scheduling in dynamically interconnected assembly systems. Prod. Eng. 2021, 15, 619–633. [Google Scholar]

- Chango, W.; Cerezo, R.; Romero, C. Multi-source and multimodal data fusion for predicting academic performance in blended learning university courses. Comput. Electr. Eng. 2021, 89, 106908. [Google Scholar] [CrossRef]

- Mubarak, A.A.; Cao, H.; Hezam, I. Deep analytic model for student dropout prediction in massive open online courses. Comput. Electr. Eng. 2021, 93, 107271. [Google Scholar] [CrossRef]

- Fotso, J.E.M.; Batchakui, B.; Nkambou, R.; Okereke, G. Algorithms for the development of deep learning models for classification and prediction of behaviour in MOOCS. In Proceedings of the 2020 IEEE Learning with MOOCS (LWMOOCS), Antigua Guatemala, Guatemala, 29 September–2 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 180–184. [Google Scholar]

- Brahim, G.B. Predicting student performance from online engagement activities using novel statistical features. Arab. J. Sci. Eng. 2022, 47, 10225–10243. [Google Scholar] [CrossRef]

- Atlam, E.S.; Ewis, A.; Abd El-Raouf, M.M.; Ghoneim, O.; Gad, I. A new approach in identifying the psychological impact of COVID-19 on university student’s academic performance. Alex. Eng. J. 2022, 61, 5223–5233. [Google Scholar] [CrossRef]

- Abdelkader, H.E.; Gad, A.G.; Abohany, A.A.; Sorour, S.E. An efficient data mining technique for assessing satisfaction level with online learning for higher education students during the COVID-19. IEEE Access 2022, 10, 6286–6303. [Google Scholar] [CrossRef]

- Stadlman, M.; Salili, S.M.; Borgaonkar, A.D.; Miri, A.K. Artificial Intelligence Based Model for Prediction of Students’ Performance: A Case Study of Synchronous Online Courses During the COVID-19 Pandemic. J. STEM Educ. Innov. Res. 2022, 23, 39–46. [Google Scholar]

- Bansal, V.; Buckchash, H.; Raman, B. Computational intelligence enabled student performance estimation in the age of COVID-19. SN Comput. Sci. 2022, 3, 41. [Google Scholar] [CrossRef]

- Justusson, B.I. Median filtering: Statistical properties. In Two-Dimensional Digital Signal Processing II: Transforms and Median Filters; Springer: Berlin/Heidelberg, Germany, 2006; pp. 161–196. [Google Scholar]

- Shi, L.; Jianping, C.; Jie, X. Prospecting information extraction by text mining based on convolutional neural networks—A case study of the Lala copper deposit, China. IEEE Access 2018, 6, 52286–52297. [Google Scholar]

- Qiu, F.; Zhang, G.; Sheng, X.; Jiang, L.; Zhu, L.; Xiang, Q.; Jiang, B.; Chen, P.K. Predicting students’ performance in e-learning using learning process and behaviour data. Sci. Rep. 2022, 12, 453. [Google Scholar] [PubMed]

- Asad, R.; Rehman, S.U.; Imran, A.; Li, J.; Almuhaimeed, A.; Alzahrani, A. Computer-Aided Early Melanoma Brain-Tumor Detection Using Deep-Learning Approach. Biomedicines 2023, 11, 184. [Google Scholar] [PubMed]

- Punlumjeak, W.; Rachburee, N. A comparative study of feature selection techniques for classify student performance. In Proceedings of the 2015 7th International Conference on Information Technology and Electrical Engineering (ICITEE), Chiang Mai, Thailand, 29–30 October 2015; pp. 425–429. [Google Scholar]

- Manna, T.; Anitha, A. Precipitation prediction by integrating Rough Set on Fuzzy Approximation Space with Deep Learning techniques. Appl. Soft Comput. 2023, 139, 110253. [Google Scholar]

- Ajibade, S.S.M.; Ahmad, N.B.; Shamsuddin, S.M. An heuristic feature selection algorithm to evaluate academic performance of students. In Proceedings of the 2019 IEEE 10th Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 2–3 August 2019; pp. 110–114. [Google Scholar]

- Jalota, C.; Agrawal, R. Feature selection algorithms and student academic performance: A study. In Proceedings of the International Conference on Innovative Computing and Communications: Proceedings of ICICC, 2021, Delhi, India, 20–21 February 2021; Springer: Delhi, India; Volume 1, pp. 317–328. [Google Scholar]

- Asad, R.; Altaf, S.; Ahmad, S.; Shah Noor Mohamed, A.; Huda, S.; Iqbal, S. Achieving Personalized Precision Education Using the Catboost Model during the COVID-19 Lockdown Period in Pakistan. Sustainability 2023, 15, 2714. [Google Scholar]

- Asad, R.; Altaf, S.; Ahmad, S.; Mahmoud, H.; Huda, S.; Iqbal, S. Machine Learning-Based Hybrid Ensemble Model Achieving Precision Education for Online Education Amid the Lockdown Period of COVID-19 Pandemic in Pakistan. Sustainability 2023, 15, 5431. [Google Scholar]

- Nayani, S.; P, S.R. Combination of Deep Learning Models for Student’s Performance Prediction with a Development of Entropy Weighted Rough Set Feature Mining. Cybern. Syst. 2023, 1–43. [Google Scholar] [CrossRef]

- Zuhri, B.; Harani, N.H.; Prianto, C. Probability Prediction for Graduate Admission Using CNN-LSTM Hybrid Algorithm. Indones. J. Comput. Sci. 2023, 12, 1105–1119. [Google Scholar] [CrossRef]

- Kukkar, A.; Mohana, R.; Sharma, A.; Nayyar, A. Prediction of student academic performance based on their emotional wellbeing and interaction on various e-learning platforms. Educ. Inf. Technol. 2023, 1–30. [Google Scholar] [CrossRef]

- Poudyal, S.; Mohammadi-Aragh, M.J.; Ball, J.E. Prediction of student academic performance using a hybrid 2D CNN model. Electronics 2022, 11, 1005. [Google Scholar] [CrossRef]

| Paper | Contribution | Technique | Results | Limitations |
|---|---|---|---|---|
| [28] | Proposed model for student assessment and feedback | Improved FCN | 84% accuracy | Low model accuracy. |
| [29] | Proposed an efficient performance assessment model | ANN | 95% accuracy | A greedy approach needs to be considered for better outcomes. |
| [30] | Multimodal model for student assessment | Data fusion | Successfully predicted the performance of learners | Lack of semantic feature extraction. |
| [31] | Proposed hyper model for assessment of students | LSTM and CNN | Successfully predicted student drop-out | Misclassification in data. |
| [32] | Predicted learner behavior using deep learning | RNN, GRU, LSTM | Successfully predicted student behavior | Few behavioral features are considered. |
| [33] | ML model for student performance prediction | RF, NB, SVM, MLP and LR | Achieved 97% accuracy | Feature extraction is done poorly. |
| [34] | Model for predicting psychological health of students | LR, SVC, DT, AdaBoost and XGB | Models performed efficiently except for AdaBoost | Model overfits and takes more time to compute. |
| [35] | Proposed model for assessment of student satisfaction | KNN and SVM | Both classifiers performed well | KNN classifier takes more time to learn. |
| [36] | Identification of student learning behavior | Ensemble Learning, SVM, RF, DT, LR and KNN | Ensemble Learning achieved 84% accuracy | Small dataset. |
| [37] | Automated system for learner assessment | LR, RF, XGBoost, Extra Tree, KNN and MLP | Extra Tree showed the highest performance | Model overfitting. |

| Characteristics (Learners’ Feedback) | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| Mentors were guiding properly. | 1540 | 3990 | 2055 | 1800 | 1615 |
| Lectures were taken timely. | 2365 | 6950 | 845 | 380 | 460 |
| Free time was available. | 2820 | 4750 | 1360 | 1095 | 975 |
| Avail of the feedback option after the lecture. | 2190 | 7095 | 975 | 325 | 415 |
| Book reading habit. | 4120 | 5770 | 145 | 555 | 310 |
| Made proper notes during the lecture. | 2845 | 3910 | 1280 | 1500 | 1465 |
| Stress while revising lectures. | 2880 | 4610 | 1350 | 870 | 1290 |
| Knowledge retaining ability. | 2040 | 4190 | 2155 | 1850 | 765 |
| Having healthy relationships with family members. | 2600 | 6220 | 277 | 1018 | 885 |
| Enough income. | 3160 | 5345 | 720 | 660 | 1115 |
| Practicals are conducted weekly. | 1930 | 7434 | 525 | 772 | 339 |
| Strong internet connection. | 2372 | 7550 | 248 | 450 | 380 |
| Healthy diet pattern. | 1445 | 7660 | 540 | 1185 | 170 |
| Exercise daily. | 1372 | 8429 | 135 | 623 | 441 |
| Last semester’s GPA was fine. | 1888 | 6656 | 576 | 1360 | 520 |
| The quiz was taken weekly. | 4189 | 5170 | 622 | 576 | 443 |
| Assignments are uploaded regularly. | 2814 | 5889 | 544 | 1432 | 421 |
| Used social media applications. | 3859 | 5590 | 966 | 240 | 345 |
| Involved in social gatherings. | 1540 | 3990 | 2055 | 1800 | 1615 |
| Attended lecture attentively. | 2365 | 6950 | 845 | 380 | 460 |
| Did a part-time job during studies. | 1445 | 7660 | 540 | 1185 | 170 |
| The nature of the job was online. | 1372 | 8429 | 135 | 623 | 441 |
| The presentation was given online. | 1888 | 6656 | 576 | 1360 | 520 |
| Decision Label | SA | A | N | D | SD |

| Classification | Accuracy | Precision | F1-Score | Recall |
|---|---|---|---|---|
| Safe | 98.8% | 99.4% | 98.1% | 97.6% |
| At Risk | 98.2% | 97.9% | 97.4% | 97.5% |
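
For readers who want the definitions behind the columns reported above, the sketch below computes accuracy, precision, recall, and F1-score from the four cells of a binary confusion matrix. The counts are hypothetical and chosen only to make the arithmetic easy to follow; they are not the study's data and are not intended to reproduce its figures.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard classification metrics for the positive class."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, treating "At Risk" as the positive class.
scores = binary_metrics(tp=90, fp=10, fn=30, tn=70)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

The F1-score is the harmonic mean of precision and recall, so it always falls between the two and is pulled toward the lower one.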

| Paper | Technique | FS Algorithm | Chosen Attributes | Accuracy |
|---|---|---|---|---|
| [42] | KNN, DT, NB, ANN & SVM | GA | 10 | 91.12% |
| [44] | NB, KNN, DT & DISC | SBS, SFS & DE | 6 | 83.09% |
| [45] | ANN, AdaBoost & SVM | WFS & CFS | 9 | 91% |
| [46] | CatBoost | Pearson Correlation Coefficient | 15 | 96.8% |
| [47] | KNN, DT, SVM, NB & LR | HHO, PSO & HGSO | 25 | 98.6% |
| [Our work] | LSTM, CNN | 1D-CNN | 28 | 98.8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Altaf, S.; Asad, R.; Ahmad, S.; Ahmed, I.; Abdollahian, M.; Zaindin, M. A Hybrid Framework of Deep Learning Techniques to Predict Online Performance of Learners during COVID-19 Pandemic. Sustainability 2023, 15, 11731. https://doi.org/10.3390/su151511731
