Attractiveness of Collaborative Platforms for Sustainable E-Learning in Business Studies

Abstract

1. Introduction

2. Theoretical Background

2.1. E-Learning Collaboration Platforms

- “Together mode”, which simulates meeting participants sitting together in the same room;

- Customisable meetings that support breakout rooms for work in smaller groups;

- The ability to record meetings on the go, accompanied by meeting notes and transcripts.

2.2. Attractiveness of E-Learning Collaboration Platforms for Students

- Saved time: completing a process or task faster saves time and, in turn, reduces costs;

- Strengthened team relationships: students enrolled in a course can be seen as a closely connected group, so it is valuable to maintain sound and effective relationships among participants. Modern collaboration tools can support and strengthen these relationships, giving every student a better understanding of teamwork and of the shared goals of the course they attend;

- Better organisation of teaching work: collaboration tools help improve teaching, especially active learning.

2.3. User Experience

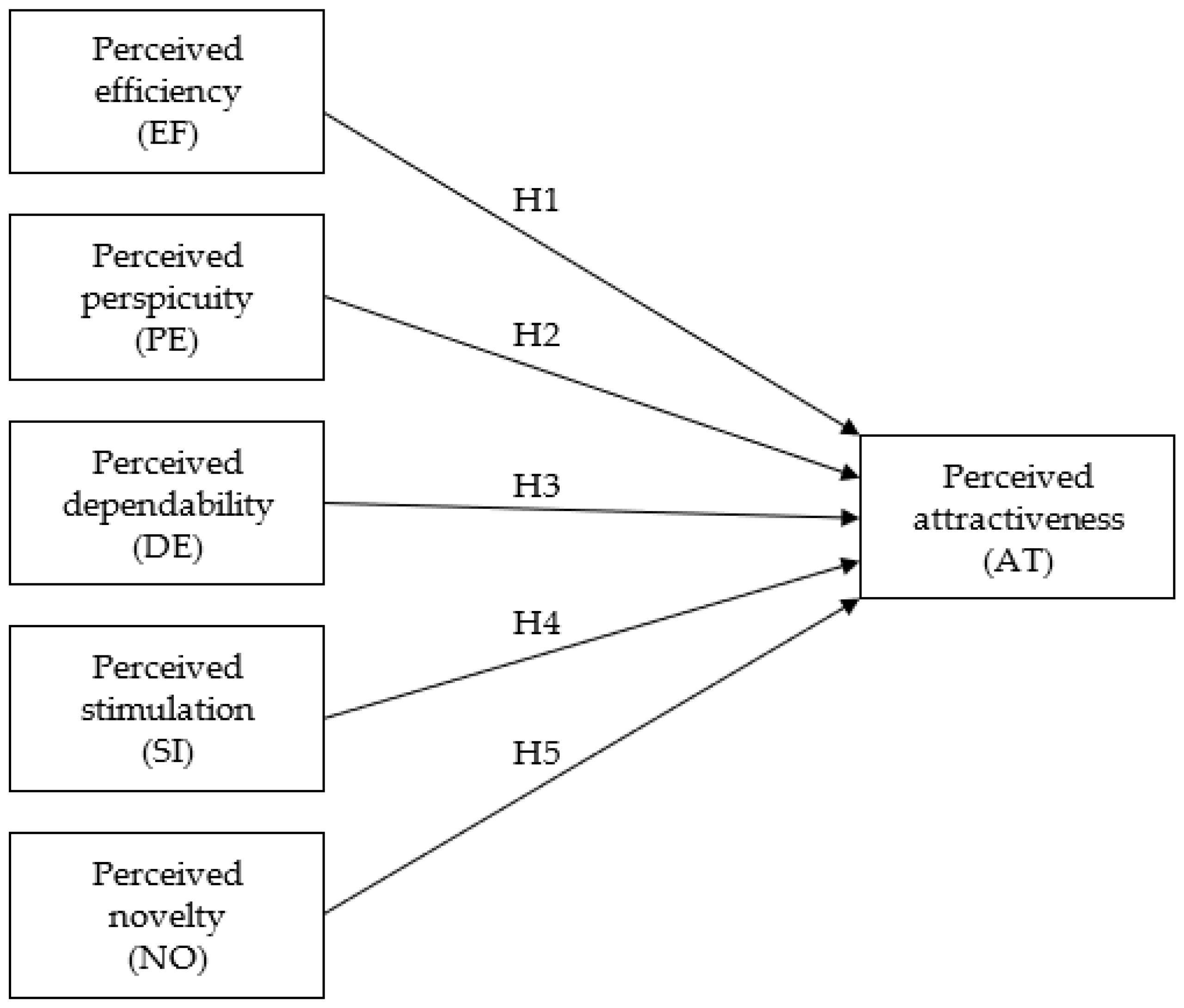

2.4. Research Model

3. Methodology

3.1. Participants and Procedure

3.2. Measurement Instrument

3.3. Data

4. Results

4.1. Reflective Measurement Model Assessment
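
Reflective measurement models in PLS-SEM are commonly assessed through indicator loadings, internal consistency reliability (composite reliability), convergent validity (average variance extracted, AVE) and discriminant validity (for example, the Fornell-Larcker criterion). The sketch below is a minimal illustration of these standard formulas, not the computation performed in this study; it assumes standardised outer loadings as reported by tools such as SmartPLS, and the function names, construct names and loading values are hypothetical. The thresholds (loadings of at least 0.708, composite reliability between 0.70 and 0.95, AVE of at least 0.50) are the ones commonly cited in PLS-SEM guidelines.

```python
import math


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardised outer loadings of one construct."""
    total = sum(loadings)
    # Error variance of each standardised indicator is 1 - loading^2.
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardised loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def fornell_larcker_ok(ave, correlations):
    """Fornell-Larcker criterion: sqrt(AVE) of each construct must exceed
    its correlation with every other construct.

    ave: {construct: AVE}; correlations: {(construct_a, construct_b): r}
    """
    for (a, b), r in correlations.items():
        if math.sqrt(ave[a]) <= abs(r) or math.sqrt(ave[b]) <= abs(r):
            return False
    return True


def reliability_report(loadings):
    """Compare one construct against commonly cited PLS-SEM thresholds."""
    cr = composite_reliability(loadings)
    ave = average_variance_extracted(loadings)
    return {
        "CR": cr,
        "AVE": ave,
        "loadings_ok": all(l >= 0.708 for l in loadings),
        "CR_ok": 0.70 <= cr <= 0.95,
        "AVE_ok": ave >= 0.50,
    }


# Hypothetical loadings for a three-indicator construct.
print(reliability_report([0.80, 0.75, 0.85]))
```

In practice the correlations passed to `fornell_larcker_ok` would be the latent variable correlations from the estimated model; many recent guidelines also recommend the HTMT ratio as a complementary discriminant validity check.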

4.2. Structural Model Assessment

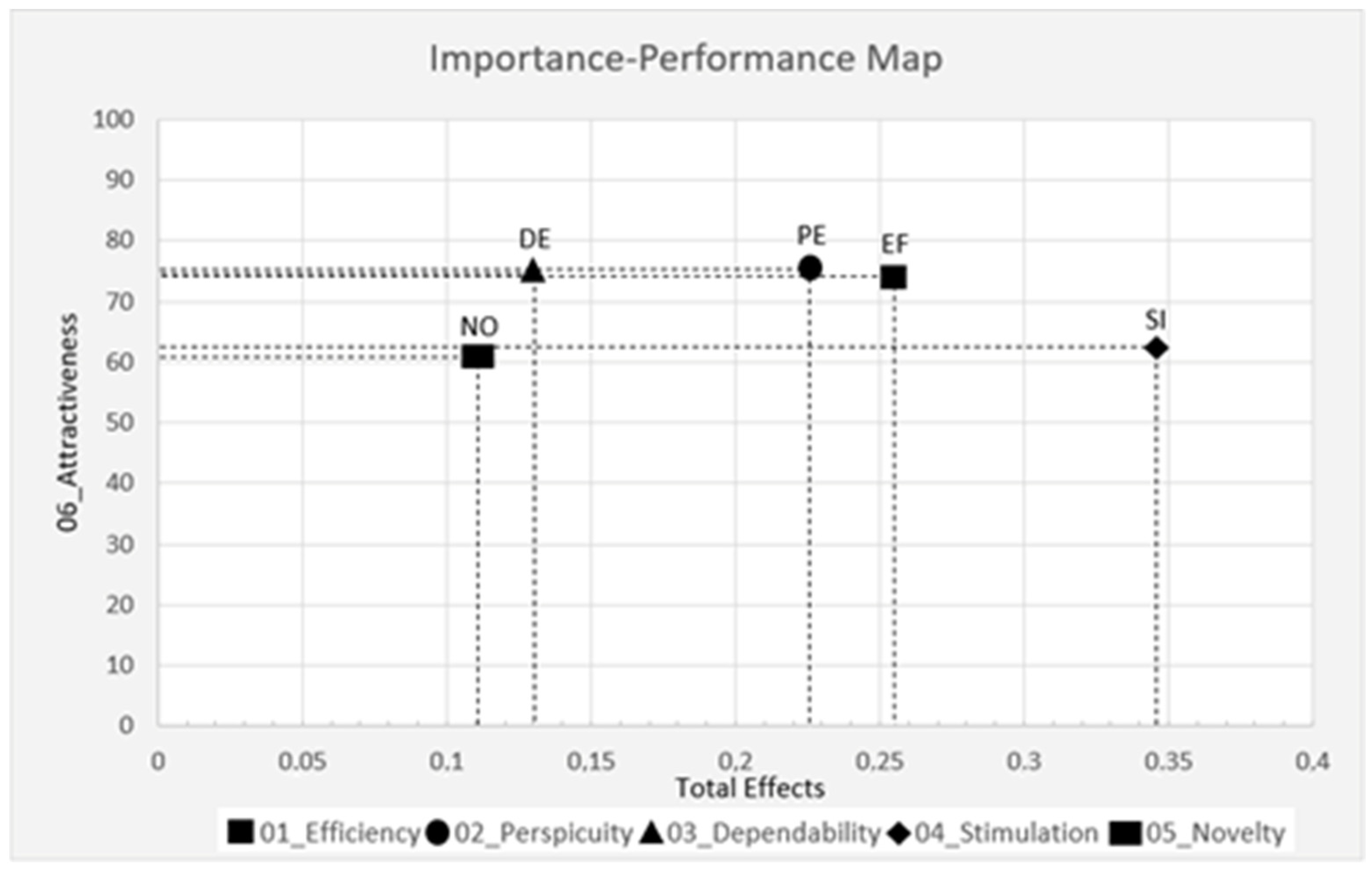
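
Structural model assessment in PLS-SEM typically reports the coefficient of determination (R²) of each endogenous construct together with the f² effect size of each predictor, f² = (R²included − R²excluded) / (1 − R²included). The following is a minimal sketch of these standard formulas under that assumption; it is not the authors' procedure, and the function names and R² values are hypothetical. The cut-offs 0.02, 0.15 and 0.35 are Cohen's conventional small, medium and large effects.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 for one predictor: the drop in the endogenous construct's R^2
    when that predictor is omitted, relative to the unexplained variance."""
    if not 0 <= r2_included < 1:
        raise ValueError("R^2 with the predictor must lie in [0, 1)")
    return (r2_included - r2_excluded) / (1 - r2_included)


def effect_label(f2):
    """Conventional cut-offs: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors of the endogenous construct."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Hypothetical values: R^2 = 0.60 with the predictor, 0.50 without it.
f2 = f_squared(0.60, 0.50)
print(round(f2, 2), effect_label(f2))
```

The significance of path coefficients is usually assessed separately by bootstrapping, which this sketch does not cover.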

4.3. The Importance-Performance Map Analysis (IPMA)
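
IPMA, as described in the PLS-SEM literature, contrasts the importance of each predecessor construct (its unstandardised total effect on a target construct) with its performance (its mean latent variable score rescaled to a 0 to 100 range). The sketch below illustrates that logic under stated assumptions: indicators are rescaled with the scale's minimum and maximum, construct performance is the weighted mean of the rescaled indicator means using outer weights normalised to sum to one, and "priority" constructs are read off the map as those with above-average importance but below-average performance. This is a hypothetical illustration, not the study's computation; all names and numbers are invented.

```python
def rescale(score, scale_min, scale_max):
    """Map a raw indicator score onto the 0-100 performance range used in IPMA."""
    return (score - scale_min) / (scale_max - scale_min) * 100


def construct_performance(indicator_means, outer_weights, scale_min, scale_max):
    """Construct performance as the weighted mean of rescaled indicator means,
    with the outer weights normalised so that they sum to one."""
    total = sum(outer_weights)
    return sum(
        (w / total) * rescale(x, scale_min, scale_max)
        for x, w in zip(indicator_means, outer_weights)
    )


def priority_constructs(ipma):
    """ipma: {construct: (importance, performance)}, where importance is the
    unstandardised total effect on the target construct. Returns the constructs
    with above-average importance but below-average performance."""
    mean_imp = sum(i for i, _ in ipma.values()) / len(ipma)
    mean_perf = sum(p for _, p in ipma.values()) / len(ipma)
    return sorted(n for n, (i, p) in ipma.items() if i > mean_imp and p < mean_perf)


# Hypothetical 7-point-scale indicator means and unstandardised outer weights.
print(construct_performance([5.2, 4.8, 5.5], [0.35, 0.40, 0.30], 1, 7))
print(priority_constructs({"A": (0.40, 55.0), "B": (0.10, 70.0), "C": (0.25, 60.0)}))
```

Because rescaling and weighting are both linear, averaging the weighted indicator means gives the same result as averaging per-respondent construct scores, which keeps the sketch short.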

5. Discussion

5.1. Implication of the Study

5.2. Limitations and Suggestions for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Roselli, T.; Pragnelli, M.V.; Rossano, V. Studying usability of an educational software. In Proceedings of the IADIS International Conference WWW/Internet 2002, ICWI 2002, Lisbon, Portugal, 13–15 November 2002; pp. 810–814. Available online: https://www.researchgate.net/publication/220970004_Studying_Usability_of_an_Educational_Software (accessed on 30 June 2022).

- Cole, I.J. Usability of Online Virtual Learning Environments: Key Issues for Instructors and Learners. In Student Usability in Educational Software and Games: Improving Experiences; Gonzalez, C., Ed.; IGI Global: Hershey, PA, USA, 2013; pp. 41–58. [Google Scholar] [CrossRef]

- Gunesekera, A.I.; Bao, Y.; Kibelloh, M. The role of usability on e-learning user interactions and satisfaction: A literature review. J. Syst. Inf. Technol. 2019, 21, 368–394. [Google Scholar] [CrossRef]

- Studiyanti, A.; Saraswati, A. Usability Evaluation and Design of Student Information System Prototype to Increase Student’s Satisfaction (Case Study: X University). Ind. Eng. Manag. Syst. 2019, 18, 676–684. [Google Scholar] [CrossRef]

- Squires, D.; Preece, J. Usability and learning: Evaluating the potential of educational software. Comput. Educ. 1996, 27, 15–22. [Google Scholar] [CrossRef]

- Midwest Comprehensive Center. Student Goal Setting: An Evidence-Based Practice. 2018. Available online: http://files.eric.ed.gov/fulltext/ED589978.pdf (accessed on 30 June 2022).

- Ganiyu, A.A.; Mishra, A.; Elijah, J.; Gana, U.M. The Importance of Usability of a Website. IUP J. Inf. Technol. 2017, 8, 27–35. Available online: https://www.researchgate.net/publication/331993738_The_Importance_of_Usability_of_a_Website (accessed on 30 June 2022).

- Nur Sukinah, A.; Noor Suhana, S.; Wan Nur Idayu Tun Mohd, H.; Nur Liyana, Z.; Azliza, Y. A Review of Website Measurement for Website Usability Evaluation. J. Phys. Conf. Ser. 2021, 1874, 2045. [Google Scholar] [CrossRef]

- Chauhan, S.; Akhtar, A.; Gupta, A. Customer experience in digital banking: A review and future research directions. Int. J. Qual. Serv. Sci. 2022, 14, 311–348. [Google Scholar] [CrossRef]

- Artyom, C.; Wunarsa, D.; Shanlong, H.; Zhupeng, L.; Balakrishnan, S. A Review of a E-Commerce Website Based on Its Usability Element. EasyChair Preprint No. 6665. 2021. Available online: https://easychair.org/publications/preprint/vNPc (accessed on 30 June 2022).

- Durdu, P.O.; Soydemir, Ö.N. A systematic review of web accessibility metrics. In App and Website Accessibility Developments and Compliance Strategies; Akgül, Y., Ed.; IGI Global: Hershey, PA, USA, 2022; pp. 77–108. [Google Scholar] [CrossRef]

- Costabile, M.F.; De Marsico, M.; Lanzilotti, R.; Plantamura, V.L.; Roselli, T. On the Usability Evaluation of E-Learning Applications. In Proceedings of the 38th Annual Hawaii International Conference on System Sciences, Hawaii, HL, USA, 3–6 January 2005; p. 6b. [Google Scholar] [CrossRef]

- Duran, D. Learning-by-teaching. Evidence and implications as a pedagogical mechanism. Innov. Educ. Teach. Int. 2017, 54, 476–484. [Google Scholar] [CrossRef]

- Albert, B.; Tullis, T.; Tedesco, D. Beyond the Usability Lab: Conducting Large-Scale Online User Experience Study; Morgan Kaufmann: Burlington, MA, USA, 2010. [Google Scholar] [CrossRef]

- Litto, F.M.; Formiga, M. Educação a Distância: O Estado da Arte; Pearson Education: São Paulo, Brasil, 2009. [Google Scholar]

- Freire, L.; Arezes, P.; Campos, J. A literature review about usability evaluation methods for e-learning platforms. Work 2012, 41, 1038–1044. [Google Scholar] [CrossRef] [Green Version]

- Borrás Gené, O.; Martínez Núñez, M.; Fidalgo Blanco, Á. Gamification in MOOC: Challenges, opportunities and proposals for advancing MOOC model. In Proceedings of the Second International Conference on Technological Ecosystems for Enhancing Multiculturality (TEEM ’14), Salamanca, Spain, 1–3 October 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 215–220. [Google Scholar] [CrossRef]

- Mueller, D.; Strohmeier, S. Design characteristics of virtual learning environments: State of research. Comput. Educ. 2011, 57, 2505–2516. [Google Scholar] [CrossRef]

- Papanastasiou, G.; Drigas, A.; Skianis, C.; Lytras, M.; Papanastasiou, E. Virtual and augmented reality effects on K-12, higher and tertiary education students’ twenty-first century skills. Virtual Real. 2019, 23, 425–436. [Google Scholar] [CrossRef]

- Potkonjak, V.; Gardner, M.; Callaghan, V.; Mattila, P.; Guetl, C.; Petrović, V.M.; Jovanović, K. Virtual laboratories for education in science, technology, and engineering: A review. Comput. Educ. 2016, 95, 309–327. [Google Scholar] [CrossRef] [Green Version]

- Abuhlfaia, K.; de Quincey, E. Evaluating the Usability of an E-Learning Platform within Higher Education from a Student Perspective. In Proceedings of the 2019 3rd International Conference on Education and E-Learning (ICEEL 2019), Barcelona, Spain, 5–7 November 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–7. [Google Scholar] [CrossRef]

- Cengizhan Dirican, A.; Göktürk, M. Psychophysiological measures of human cognitive states applied in human computer interaction. Procedia Comput. Sci. 2011, 3, 1361–1367. [Google Scholar] [CrossRef] [Green Version]

- MacKenzie, I.S. Human-Computer Interaction: An Empirical Research Perspective; Morgan Kaufmann: Waltham, MA, USA, 2013. [Google Scholar]

- Ardito, C.; Costabile, F.; De Marsico, M.; Lanzilotti, R.; Levialdi, S.; Roselli, T.; Rossano, V. An approach to usability evaluation of e-learning applications. Univers. Access Inf. Soc. 2006, 4, 270–283. [Google Scholar] [CrossRef]

- Salas, J.; Chang, A.; Montalvo, L.; Núñez, A.; Vilcapoma, M.; Moquillaza, A.; Murillo, B.; Paz, F. Guidelines to evaluate the usability and user experience of learning support platforms: A systematic review. In Human-Computer Interaction. HCI-COLLAB 2019. Communications in Computer and Information Science; Ruiz, P., Agredo-Delgado, V., Eds.; Springer: Cham, Switzerland, 2019. [Google Scholar] [CrossRef]

- Abuhlfaia, K.; de Quincey, E. The Usability of E-learning Platforms in Higher Education: A Systematic Mapping Study. In Proceedings of the 32nd International BCS Human Computer Interaction Conference (HCI), Belfast, UK, 4–6 July 2018; BCS Learning and Development Ltd.: Belfast, UK, 2018. [Google Scholar] [CrossRef] [Green Version]

- Handayani, K.; Juningsih, E.; Riana, D.; Hadianti, S.; Rifai, A.; Serli, R. Measuring the Quality of Website Services covid19.kalbarprov.go.id Using the Webqual 4.0 Method. J. Phys. Conf. Ser. 2020, 1641, 2049. [Google Scholar] [CrossRef]

- Gordillo, A.; Barra, E.; Aguirre, S.; Quemada, J. The usefulness of usability and user experience evaluation methods on an e-Learning platform development from a developer’s perspective: A case study. In Proceedings of the 2014 IEEE Frontiers in Education Conference (FIE) Proceedings, Madrid, Spain, 22–25 October 2014; pp. 1–8. [Google Scholar] [CrossRef] [Green Version]

- ISO 9241-11; Ergonomic Requirements for Office Work with Visual Display Terminals (Vdts): Part 11: Guidance On Usability. International Organization for Standardization (ISO): Geneva, Switzerland, 1998. Available online: https://www.iso.org/standard/16883.html (accessed on 7 May 2022).

- Hartson, H.R.; Andre, T.S.; Williges, R.C.; Tech, V. Criteria for evaluating usability evaluation methods. Int. J. Hum.-Comput. Interact. 2001, 13, 373–410. [Google Scholar] [CrossRef]

- Quintana, C.; Carra, A.; Krajcik, J.; Soloway, E. Learner-centered design: Reflections and new directions. In Human-Computer Interaction in the New Millennium, 1st ed.; Carrol, J.M., Ed.; Addison Wesley: New York, NY, USA, 2001; pp. 605–626. [Google Scholar]

- Giannakos, M.N. The evaluation of an e-Learning web-based platform. In Proceedings of the 2nd International Conference on Computer Supported Education, Valencia, Spain, 7–10 April 2010; pp. 433–438. [Google Scholar] [CrossRef] [Green Version]

- ISO 9241-210; Ergonomics of Human-System Interaction Part 210: Human-Centered Design For Interactive Systems. International Organization for Standardization (ISO): Geneva, Switzerland, 2010. Available online: https://www.iso.org/standard/52075.html (accessed on 7 May 2022).

- Roto, V.; Law, E.; Vermeeren, A.; Hoonhout, J. (Eds.) User Experience White Paper. Outcome of the Dagstuhl Seminar on Demarcating User Experience, Germany, 2011. Available online: http://www.allaboutux.org/files/UX-WhitePaper.pdf (accessed on 1 June 2022).

- Liu, Y. A Scientometric Analysis of User Experience Research Related to Green and Digital Transformation. In Proceedings of the 2020 Management Science Informatization and Economic Innovation Development Conference (MSIEID), Guangzhou, China, 18–20 December 2020; pp. 377–380. [Google Scholar] [CrossRef]

- Graham, L.; Berman, J.; Bellert, A. Sustainable Learning: Inclusive Practices for 21st Century Classrooms; Cambridge University Press: Melbourne, Australia, 2015. [Google Scholar] [CrossRef]

- Medina-Molina, C.; Rey-Tienda, M.D.L.S. The transition towards the implementation of sustainable mobility. Looking for generalization of sustainable mobility in different territories by the application of QCA. Sustain. Technol. Entrep. 2022, 1, 100015. [Google Scholar] [CrossRef]

- Trapp, C.T.C.; Kanbach, D.K.; Kraus, S. Sector coupling and business models towards sustainability: The case of the hydrogen vehicle industry. Sustain. Technol. Entrep. 2022, 1, 100014. [Google Scholar] [CrossRef]

- Nedjah, N.; de Macedo Mourelle, L.; dos Santos, R.A.; dos Santos, L.T.B. Sustainable maintenance of power transformers using computational intelligence. Sustain. Technol. Entrep. 2022, 1, 100001. [Google Scholar] [CrossRef]

- Marinakis, Y.D.; White, R. Hyperinflation potential in commodity-currency trading systems: Implications for sustainable development. Sustain. Technol. Entrep. 2022, 1, 100003. [Google Scholar] [CrossRef]

- Franco, M.; Esteves, L. Inter-clustering as a network of knowledge and learning: Multiple case studies. J. Innov. Knowl. 2020, 5, 39–49. [Google Scholar] [CrossRef]

- Bouncken, R.B.; Lapidus, A.; Qui, Y. Organizational sustainability identity: ‘New Work’ of home offices and coworking spaces as facilitators. Sustain. Technol. Entrep. 2022, 1, 100011. [Google Scholar] [CrossRef]

- Geitz, G.; de Geus, J. Design-based education, sustainable teaching, and learning. Cogent Educ. 2019, 6, 7919. [Google Scholar] [CrossRef]

- Sinakou, E.; Donche, V.; Boeve-de Pauw, J.; Van Petegem, P. Designing Powerful Learning Environments in Education for Sustainable Development: A Conceptual Framework. Sustainability 2019, 11, 5994. [Google Scholar] [CrossRef] [Green Version]

- Sofiadin, A.B.M. Sustainable development, e-learning and Web 3.0: A descriptive literature review. J. Inf. Commun. Ethics Soc. 2014, 12, 157–176. [Google Scholar] [CrossRef]

- Ben-Eliyahu, A. Sustainable Learning in Education. Sustainability 2021, 13, 4250. [Google Scholar] [CrossRef]

- Hassenzahl, M. The Effect of Perceived Hedonic Quality on Product Appealingness. Int. J. Hum.-Comput. Interact. 2001, 13, 481–499. [Google Scholar] [CrossRef]

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76. [Google Scholar] [CrossRef]

- Schrepp, M. User Experience Questionnaire Handbook. 2019. Available online: https://www.ueq-online.org/Material/Handbook.pdf (accessed on 10 March 2022).

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the User Experience Questionnaire (UEQ) in Different Evaluation Scenarios. In Design, User Experience, and Usability. Theories, Methods, and Tools for Designing the User Experience; Marcus, A., Ed.; Springer International Publishing: Geneva, Switzerland, 2014; pp. 383–392. [Google Scholar] [CrossRef]

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 40–44. [Google Scholar] [CrossRef] [Green Version]

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and Evaluation of a Short Version of the User Experience Questionnaire (UEQ-S). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 103–108. [Google Scholar] [CrossRef] [Green Version]

- Remali, A.M.; Ghazali, M.A.; Kamaruddin, M.K.; Kee, T.Y. Understanding Academic Performance Based on Demographic Factors, Motivation Factors and Learning Styles. Int. J. Asian Soc. Sci. 2013, 3, 1938–1951. [Google Scholar]

- Indreica, S.E. eLearning platform: Advantages and disadvantages on time management. In Proceedings of the 10th International Scientific Conference eLearning and Software for Education, Bucharest, Romania, 24–25 April 2014; pp. 236–243. [Google Scholar] [CrossRef]

- Virtič, M.P. The role of internet in education. In Proceedings of the DIVAI 2012, 9th International Scientific Conference on Distance Learning in Applied Informatics, Štúrovo, Slovakia, 2–4 May 2012; pp. 243–249. [Google Scholar]

- Alexe, C.M.; Alexe, C.G.; Dumitriu, D.; Mustață, I.C. The Analysis of the Users’ Perceptions Regarding the Learning Management Systems for Higher Education: Case Study Moodle. In Proceedings of the 17th International Scientific Conference on eLearning and Software for Education, eLSE 2021, Bucharest, Romania, 22–23 April 2021; pp. 284–293. [Google Scholar] [CrossRef]

- Abbas, A.; Hosseini, S.; Núñez, J.L.M.; Sastre-Merino, S. Emerging technologies in education for innovative pedagogies and competency development. Australas. J. Educ. Technol. 2021, 37, 1–5. [Google Scholar] [CrossRef]

- Alturki, U.; Aldraiweesh, A. Application of Learning Management System (LMS) during the COVID-19 Pandemic: A Sustainable Acceptance Model of the Expansion Technology Approach. Sustainability 2021, 13, 10991. [Google Scholar] [CrossRef]

- Sarnou, H.; Sarnou, D. Investigating the EFL Courses Shift into Moodle during the Pandemic of COVID-19: The Case of MA Language and Communication at Mostaganem University. Arab World Engl. J. 2021, 24, 354–363. [Google Scholar] [CrossRef]

- Bakhmat, L.; Babakina, O.; Belmaz, Y. Assessing online education during the COVID-19 pandemic: A survey of lecturers in Ukraine. J. Phys. Conf. Ser. 2021, 1840, 012050. [Google Scholar] [CrossRef]

- Polhun, K.; Kramarenko, T.; Maloivan, M.; Tomilina, A. Shift from blended learning to distance one during the lockdown period using Moodle: Test control of students’ academic achievement and analysis of its results. J. Phys. Conf. Ser. 2021, 1840, 012053. [Google Scholar] [CrossRef]

- Hill, P. New Release of European LMS Market Report. eLiterate 2016. Available online: https://eliterate.us/new-release-european-lms-market-report/ (accessed on 7 June 2022).

- Somova, E.; Gachkova, M. Strategy to Implement Gamification in LMS. In Next-Generation Applications and Implementations of Gamification Systems, 1st ed.; Portela, F., Queirós, R., Eds.; IGI Global: Hershey, PA, USA, 2022; pp. 51–72. [Google Scholar] [CrossRef]

- Al-Ajlan, A.; Zedan, H. Why Moodle. In Proceedings of the 12th IEEE International Workshop on Future Trends of Distributed Computing Systems, Kunming, China, 21–23 October 2008; pp. 58–64. [Google Scholar] [CrossRef]

- Kintu, M.J.; Zhu, C.; Kagambe, E. Blended learning effectiveness: The relationship between student characteristics, design features and outcomes. Int. J. Educ. Technol. High. Educ. 2017, 14, 7. [Google Scholar] [CrossRef] [Green Version]

- Evgenievich, E.; Petrovna, M.; Evgenievna, T.; Aleksandrovna, O.; Yevgenyevna, S. Moodle LMS: Positive and Negative Aspects of Using Distance Education in Higher Education Institutions. Propós. Represent. 2021, 9, e1104. [Google Scholar] [CrossRef]

- Oproiu, G.C. Study about Using E-learning Platform (Moodle) in University Teaching Process. Procedia Soc. Behav. Sci. 2015, 180, 426–432. [Google Scholar] [CrossRef] [Green Version]

- Parusheva, S.; Aleksandrova, Y.; Hadzhikolev, A. Use of Social Media in Higher Education Institutions—An Empirical Study Based on Bulgarian Learning Experience. TEM J. Technol. Educ. Manag. Inform. 2018, 7, 171–181. [Google Scholar] [CrossRef]

- Ironsi, C.S. Google Meet as a synchronous language learning tool for emergency online distant learning during the COVID-19 pandemic: Perceptions of language instructors and preservice teachers. J. Appl. Res. High. Educ. 2022, 14, 640–659. [Google Scholar] [CrossRef]

- Lewandowski, M. Creating virtual classrooms (using Google Hangouts) for improving language competency. Lang. Issues: ESOL J. 2015, 26, 37–42. [Google Scholar]

- Herskowitz, N. Gartner Recognises Microsoft as Leader in Unified Communications as a Service and Meetings Solutions, Microsoft 365. 2021. Available online: https://www.microsoft.com/en-us/microsoft-365/blog/2021/10/25/gartner-recognizes-microsoft-as-leader-in-unified-communications-as-a-service-and-meetings-solutions/ (accessed on 7 June 2022).

- Microsoft. Welcome to Microsoft Teams. 2021. Available online: https://docs.microsoft.com/en-us/microsoftteams/teams-overview (accessed on 30 April 2021).

- Florjancic, V.; Wiechetek, L. Using Moodle and MS Teams in higher education—A comparative study. Int. J. Innov. Learn. 2022, 31, 264–286. [Google Scholar] [CrossRef]

- Friedman, T. Come the Revolution. New York Times. 15 May 2012. Available online: http://www.nytimes.com/2012/05/16/opinion/friedman-come-the-revolution.html/ (accessed on 7 May 2022).

- Czerniewicz, L.; Deacon, A.; Small, J.; Walji, S. Developing world MOOCs: A curriculum view of the MOOC landscape. J. Glob. Lit. Technol. Emerg. Pedagog. 2014, 2, 122–139. [Google Scholar]

- Mulder, F.; Jansen, D. MOOCs for opening up education and the openupEd initiative. In MOOCs and Open Education around the World; Bonk, C.J., Lee, M.M., Reeves, T.C., Reynolds, T.H., Eds.; Routledge: New York, NY, USA, 2015. [Google Scholar] [CrossRef]

- Walther, J.B. Computer-mediated communication. Commun. Res. 1996, 23, 3–43. [Google Scholar] [CrossRef]

- Walther, J.B.; Anderson, J.F.; Park, D.W. Interpersonal Effects in Computer-Mediated Interaction: A meta-analysis of social and antisocial communication. Commun. Res. 1994, 21, 460–487. Available online: www.matt-koehler.com/OtherPages/Courses/CEP_909_FA01/Readings/CmC/Walther_1994b.pdf (accessed on 7 June 2022). [CrossRef] [Green Version]

- Wegerif, R. The social dimension of asynchronous learning networks. J. Asynchronous Learn. Netw. 1998, 2, 34–49. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.103.7298&rep=rep1&type=pdf (accessed on 7 May 2021). [CrossRef]

- Penstein Rosé, C.; Carlson, R.; Yang, D.; Wen, M.; Resnick, L.; Goldman, P.; Sherer, J. Social factors that contribute to attrition in MOOCs. In Proceedings of the First ACM Conference on Learning @ Scale Conference (L@S’ 14), Atlanta, GA, USA, 4–5 March 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 197–198. [Google Scholar] [CrossRef]

- Paechter, M.; Maier, B. Online or face-to-face? Students’ experiences and preferences in e-learning. Internet High. Educ. 2010, 13, 292–297. [Google Scholar] [CrossRef]

- Garrison, D.R.; Anderson, T.; Archer, W. Critical thinking, cognitive presence, and computer conferencing in distance education. Am. J. Distance Educ. 2001, 15, 7–23. [Google Scholar] [CrossRef] [Green Version]

- Chen, K.C.; Jang, S.J. Motivation in online learning: Testing a model of self-determination theory. Comput. Hum. Behav. 2010, 26, 741–752. [Google Scholar] [CrossRef]

- Barnett, E.A. Validation experiences and persistence among community college students. Rev. High. Educ. 2011, 34, 193–230. [Google Scholar] [CrossRef]

- Graham, C.R. Emerging practice and research in blended learning. In Handbook of Distance Education, 3rd ed.; Moore, M.G., Ed.; Routledge: New York, NY, USA, 2013; pp. 333–350. [Google Scholar]

- Ross, B.; Gage, K. Global perspectives on blending learning: Insight from WebCT and our customers in higher education. In Handbook of Blended Learning: Global Perspectives, Local Designs; Bonk, C.J., Graham, C.R., Eds.; Pfeiffer Publishing: San Francisco, CA, USA, 2006; pp. 155–168. [Google Scholar]

- Drossos, L.; Vassiliadis, B.; Stefani, A.; Xenos, M.; Sakkopoulos, E.; Tsakalidis, A. Introducing ICT in traditional higher education environment: Background, design and evaluation of a blended approach. Int. J. Inf. Commun. Technol. Educ. 2006, 2, 65–78. [Google Scholar] [CrossRef]

- Dziuban, C.; Moskal, P. A course is a course is a course: Factor invariance in student evaluation of online, blended and face-to-face learning environments. Internet High. Educ. 2011, 14, 236–241. [Google Scholar] [CrossRef]

- Means, B.; Toyama, Y.; Murphy, R.; Baki, M. The effectiveness of online and blended learning: A meta-analysis of the empirical literature. Teach. Coll. Rec. 2013, 115, 1–47. [Google Scholar] [CrossRef]

- Griffiths, R.; Chingos, M.; Mulhern, C.; Spies, R. Interactive Online Learning on Campus: Testing MOOCs and Other Platforms in Hybrid Formats in the University System of Maryland; Ithaka S+R: New York, NY, USA, 2014; Volume 10, pp. 1–81. [Google Scholar]

- Joseph, A.M.; Nath, B.A. Integration of Massive Open Online Education (MOOC) System with in-Classroom Interaction and Assessment and Accreditation: An Extensive Report from a Pilot Study. WORLDCOMP ‘13. Available online: http://worldcomp-proceedings.com/proc/p2013/EEE3547.pdf (accessed on 11 April 2022).

- Rossiou, E.; Sifalaras, A. Blended Methods to Enhance Learning: An Empirical Study of Factors Affecting Student Participation in the Use of E-Tools to Complement F2F Teaching of Algorithms. In Proceedings of the 6th European Conference on e-Learning (ECEL 2007), Dublin, Ireland, 4–5 October 2007; pp. 519–528. Available online: https://www.researchgate.net/publication/248392307_Blended_Methods_to_Enhance_Learning_An_Empirical_Study_of_Factors_Affecting_Student_Participation_in_the_use_of_e-Tools_to_Complement_F2F_Teaching_of_Algorithms (accessed on 10 April 2022).

- Anderson, A.; Huttenlocher, D.; Kleinberg, J.; Leskovec, J. Engaging with massive online courses. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Korea, 7–11 April 2014; pp. 687–698. [Google Scholar] [CrossRef] [Green Version]

- Abbas, A.; Arrona-Palacios, A.; Haruna, H.; Alvarez-Sosa, D. Elements of students’ expectation towards teacher-student research collaboration in higher education. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Eisenhauer, T. Grow Your Business with Collaboration Tools. Axero Solutions, 2021. Available online: https://info.axerosolutions.com/grow-your-business-with-collaboration-tools (accessed on 5 May 2021).

- Deloitte. Remote Collaboration Facing the Challenges of COVID-19. 2021. Available online: https://www2.deloitte.com/content/dam/Deloitte/de/Documents/human-capital/Remote-Collaboration-COVID-19.pdf (accessed on 30 April 2021).

- Madlberger, M.; Raztocki, N. Digital Cross-Organizational Collaboration: Towards a Preliminary Framework. In Proceedings of the Fifteenth Americas Conference on Information Systems, San Francisco, CA, USA, 6–9 August 2009; Available online: https://ssrn.com/abstract=1477527 (accessed on 30 April 2021).

- Van de Sand, F.; Frison, A.K.; Zotz, P.; Riener, A.; Holl, K. The intersection of User Experience (UX), Customer Experience (CX), and Brand Experience (BX). In User Experience Is Brand Experience. Management for Professionals; Springer Nature: Cham, Switzerland, 2020. [Google Scholar] [CrossRef]

- Hassenzahl, M. Experience Design: Technology for All the Right Reasons. Synth. Lect. Hum.-Cent. Inform. 2010, 3, 1–95. [Google Scholar] [CrossRef]

- Nass, C.; Adam, S.; Doerr, J.; Trapp, M. Balancing user and business goals in software development to generate positive user experience. In Human-Computer Interaction: The Agency Perspective; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar] [CrossRef]

- Lallemand, C.; Gronier, G.; Koenig, V. User experience: A concept without consensus? Exploring practitioners’ perspectives through an international survey. Comput. Hum. Behav. 2015, 43, 35–48. [Google Scholar] [CrossRef]

- Zaki, T.; Nazrul Islam, M. Neurological and physiological measures to evaluate the usability and user-experience (UX) of information systems: A systematic literature review. Comput. Sci. Rev. 2021, 40, 375. [Google Scholar] [CrossRef]

- Kashfi, P.; Feldt, R.; Nilsson, A. Integrating UX principles and practices into software development organisations: A case study of influencing events. J. Syst. Softw. 2019, 154, 37–58. [Google Scholar] [CrossRef]

- Sarstedt, M.; Henseler, J.; Ringle, C.M. Multi-Group Analysis in Partial Least Squares (PLS) Path Modeling: Alternative Methods and Empirical Results. Adv. Int. Mark. 2011, 22, 195–218. [Google Scholar] [CrossRef]

- Henseler, J.; Ringle, C.M.; Sinkovics, R.R. The Use of Partial Least Squares Path Modeling in International Marketing. Adv. Int. Mark. 2009, 20, 277–320. [Google Scholar] [CrossRef] [Green Version]

- Ringle, C.M.; Wende, S.; Becker, J.-M. SmartPLS 3. Boenningstedt: SmartPLS. 2015. Available online: https://www.smartpls.com (accessed on 10 June 2021).

- Chin, W.W.; Newsted, P.R. Structural equation modeling analysis with small samples using partial least squares. In Statistical Strategies for Small Sample Research; Hoyle, R.H., Ed.; Sage Publications: Thousand Oaks, CA, USA, 1999; pp. 307–341. [Google Scholar]

- Chin, W.W. Commentary: Issues and Opinion on Structural Equation Modeling. MIS Q. 1998, 22, vii–xvi. Available online: http://www.jstor.org/stable/249674 (accessed on 30 June 2022).

- Hair, F.J.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; Sage: Los Angeles, CA, USA, 2017. [Google Scholar]

- Garson, G.D. Partial Least Squares: Regression and Structural Equation Models; Statistical Associates Publishers: Asheboro, NC, USA, 2016. [Google Scholar]

- Jöreskog, K.G.; Wold, H.O.A. The ML and PLS Techniques for modeling with latent varibles: Historical and comparative aspects. In Systems under Indirect Obervation, Part I, 1st ed.; Wold, H., Jöreskog, K.G., Eds.; Elsevier: Amsterdam, The Netherlands, 1982; pp. 263–270. [Google Scholar]

- Cassel, C.; Hackl, P.; Westlund, A.H. Robustness of partial least squares method for estimating latent variable quality structures. J. Appl. Stat. 1999, 26, 435–446. [Google Scholar] [CrossRef]

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24. [Google Scholar] [CrossRef]

- Chin, W.W. The partial least squares approach for structural equation modeling. In Modern Methods for Business Research; Macoulides, G.A., Ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1998; pp. 295–336. [Google Scholar]

- Daskalakis, S.; Mantas, J. Evaluatiing the impact of a service-oriented framework for healthcare interoperability. In eHealth beyond the Horizont—Get IT There: Proceedings of MIE2008; Anderson, S.K., Klein, G.O., Schulz, S., Aarts, J., Mazzoleni, M.C., Eds.; IOS Press: Amsterdam, The Netherlands, 2008; pp. 285–290. [Google Scholar]

- Fornell, C.G.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50. [Google Scholar] [CrossRef]

- Shmueli, G.; Koppius, O.R. Predictive analytics in information systems research. MIS Q. 2011, 35, 553–572. [Google Scholar] [CrossRef] [Green Version]

- Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial least squares structural equation modeling. In Handbook of Market Research; Homburg, C., Klarmann, M., Vomberg, A., Eds.; Springer: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 7th ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009. [Google Scholar]

- Höck, C.; Ringle, C.M.; Sarstedt, M. Management of multi-purpose stadiums: Importance and performance mesurement of service interfaces. Int. J. Serv. Technol. Manag. 2010, 14, 188–207. [Google Scholar] [CrossRef]

- UN. Resolutions Adopted by the Conference. A/CONF.151/26/Rev.l. In Report of the United Nations Conference on Environment and Development, Rio de Janeiro, Brazil, 3–14 June 1992; United Nations: New York, NY, USA, 1993; Volume I, Available online: https://www.un.org/esa/dsd/agenda21/Agenda%2021.pdf (accessed on 25 March 2021).

- Gibson, R.B. Sustainability assessment: Basic components of a practical approach. Impact Assess. Proj. Apprais. 2006, 24, 170–182. [Google Scholar] [CrossRef]

- Hannay, M.; Newvine, T. Perceptions of distance learning: A comparison of online and traditional learning. Merlot J. Online Learn. Teach. 2006, 2, 1–11. [Google Scholar]

- Nazarenko, A.L. Blended Learning vs. Traditional Learning: What Works? (A Case Study Research). Procedia-Soc. Behav. Sci. 2015, 200, 77–82. [Google Scholar] [CrossRef] [Green Version]

- Engum, S.A.; Jeffries, P.; Fisher, L. Intravenous catheter training system: Computer-based education versus traditional learning methods. Am. J. Surg. 2003, 186, 67–74. [Google Scholar] [CrossRef]

- Ar, A.Y.; Abbas, A. Role of gamification in Engineering Education: A systematic literature review. In Proceedings of the 2021 IEEE Global Engineering Education Conference (EDUCON), Vienna, Austria, 21–23 April 2021; pp. 210–213. [Google Scholar] [CrossRef]

- Thomas, I. Critical Thinking, Transformative Learning, Sustainable Education, and Problem-Based Learning in Universities. J. Transform. Educ. 2009, 7, 245–264. [Google Scholar] [CrossRef]

- UEQ. User Experience Questionnaire. Available online: https://www.ueq-online.org/ (accessed on 10 March 2022).

- Ardito, C.; De Marsico, M.; Lanzilotti, R.; Levialdi, S.; Roselli, T.; Rossano, V.; Tersigni, M. Usability of E-learning tools. In Proceedings of the Working Conference on Advanced Visual Interfaces (AVI ‘04), Gallipoli, Italy, 25–28 May 2004; Association for Computing Machinery: New York, NY, USA, 2004; pp. 80–84. [Google Scholar] [CrossRef] [Green Version]

- Beranič, T.; Heričko, M. The Impact of Serious Games in Economic and Business Education: A Case of ERP Business Simulation. Sustainability 2022, 14, 683.

- Beranič, T.; Heričko, M. Introducing ERP Concepts to IT Students Using an Experiential Learning Approach with an Emphasis on Reflection. Sustainability 2019, 11, 4992.

- Sternad Zabukovšek, S.; Shah Bharadwaj, S.; Bobek, S.; Štrukelj, T. Technology acceptance model-based research on differences of enterprise resources planning systems use in India and the European Union. Inž. Ekon. 2019, 30, 326–338.

- Sternad Zabukovšek, S.; Bobek, S.; Štrukelj, T. Employees’ attitude toward ERP system’s use in Europe and India: Comparing two TAM-based studies. In Cross-Cultural Exposure and Connections: Intercultural Learning for Global Citizenship; Birdie, A.K., Ed.; Apple Academic Press: New York, NY, USA, 2020; pp. 29–69.

- Rožman, M.; Sternad Zabukovšek, S.; Bobek, S.; Tominc, P. Gender differences in work satisfaction, work engagement and work efficiency of employees during the COVID-19 pandemic: The case in Slovenia. Sustainability 2021, 13, 8791.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

| Term | Description with Examples |
|---|---|
| UX principles | Critical factors and basic concepts that shape the understanding of user experience and that professionals must consider in their work. Example: the hedonic and pragmatic aspects of a software application play an essential role in user experience design, because user experience changes over time. |
| UX practices | Actions that practitioners perform to comply with user experience principles. Examples: recognizing users’ personal goals and desires, preparing prototypes, involving users in the design process, and assessing software from hedonic and pragmatic perspectives. |
| UX software | Computer-aided tools that developers and designers use to carry out UX practices; they are usually designed to support specific methods and thus make software development more systematic. Examples: eye-tracking software and tools for persona preparation, visual design, and prototyping. |
| UX techniques | Structured methods, based on best practices, that practitioners select and apply so that they can work more systematically and are therefore more likely to succeed. Examples: questionnaires and surveys, mind maps, cognitive mapping, field research, and design studios. |

| When | What | How |
|---|---|---|
| Prior to use | Anticipated user experience | Expecting the experience. |
| During use | Momentary user experience | Experiencing. |
| After use | Episodic user experience | Reflecting on the experience. |
| Across past uses | Cumulative user experience | Recalling several periods of use. |

| Factor | Description | Items (Measured Variable Codes) |
|---|---|---|
| Perceived efficiency (EF) | The degree to which users can solve tasks without unnecessary effort. | EF1: The e-learning platform works fast. |
| | | EF2: The e-learning platform is efficient to use. |
| | | EF3: The e-learning platform is practical to use. |
| | | EF4: The e-learning platform is organised. |
| Perceived perspicuity (PE) | How easy it is to become familiar with the e-learning platform and learn to use it. | PE1: The e-learning platform is understandable to use. |
| | | PE2: The e-learning platform is easy to learn. |
| | | PE3: The e-learning platform is easy to use. |
| | | PE4: The e-learning platform is transparent. |
| Perceived dependability (DE) | The degree to which the user feels in control of the interaction with the e-learning platform. | DE1: The e-learning platform is predictable. |
| | | DE2: The e-learning platform is supportive. |
| | | DE3: The e-learning platform is secure. |
| | | DE4: The e-learning platform meets expectations. |
| Perceived stimulation (SI) | The degree of excitement and motivation the user perceives when using the e-learning platform. | SI1: The e-learning platform is valuable. |
| | | SI2: The e-learning platform is exciting. |
| | | SI3: The e-learning platform is interesting. |
| | | SI4: The e-learning platform is motivating. |
| Perceived novelty (NO) | The degree to which the e-learning platform is perceived as innovative and creative and thereby attracts users’ interest. | NO1: The e-learning platform encourages creativity. |
| | | NO2: The e-learning platform is inventive. |
| | | NO3: The e-learning platform is leading-edge. |
| | | NO4: The e-learning platform is innovative. |
| Perceived attractiveness (AT) | The user’s overall impression of the e-learning platform. | AT1: The e-learning platform is enjoyable to use. |
| | | AT2: The e-learning platform is good to use. |
| | | AT3: The e-learning platform is pleasing to use. |
| | | AT4: The e-learning platform is pleasant to use. |
| | | AT5: The e-learning platform is attractive to use. |
| | | AT6: The e-learning platform is friendly to use. |

| Construct | Item | Mean Value | Standard Deviation | Indicator Loading | Indicator Reliability | HTMT Confidence Interval (2.5%) | HTMT Confidence Interval (97.5%) |
|---|---|---|---|---|---|---|---|
| Perceived efficiency (EF) | EF1 | 5.258 | 1.655 | 0.723 | 0.523 | 0.264 | 0.315 |
| | EF2 | 5.534 | 1.489 | 0.793 | 0.629 | 0.327 | 0.380 |
| | EF3 | 5.355 | 1.595 | 0.763 | 0.582 | 0.289 | 0.338 |
| | EF4 | 5.524 | 1.604 | 0.766 | 0.587 | 0.325 | 0.388 |
| Perceived perspicuity (PE) | PE1 | 5.817 | 1.488 | 0.817 | 0.667 | 0.315 | 0.363 |
| | PE2 | 5.399 | 1.787 | 0.753 | 0.567 | 0.260 | 0.319 |
| | PE3 | 5.412 | 1.655 | 0.764 | 0.584 | 0.255 | 0.306 |
| | PE4 | 5.386 | 1.744 | 0.795 | 0.632 | 0.337 | 0.396 |
| Perceived dependability (DE) | DE2 | 5.483 | 1.613 | 0.751 | 0.564 | 0.435 | 0.534 |
| | DE3 | 5.551 | 1.628 | 0.747 | 0.558 | 0.325 | 0.400 |
| | DE4 | 5.395 | 1.573 | 0.82 | 0.672 | 0.413 | 0.483 |
| Perceived stimulation (SI) | SI1 | 4.851 | 1.592 | 0.778 | 0.605 | 0.296 | 0.342 |
| | SI2 | 4.448 | 1.553 | 0.818 | 0.669 | 0.268 | 0.304 |
| | SI3 | 4.959 | 1.621 | 0.874 | 0.764 | 0.333 | 0.370 |
| | SI4 | 4.596 | 1.738 | 0.752 | 0.566 | 0.261 | 0.306 |
| Perceived novelty (NO) | NO1 | 4.642 | 1.786 | 0.857 | 0.734 | 0.579 | 0.676 |
| | NO2 | 4.647 | 1.794 | 0.82 | 0.672 | 0.517 | 0.614 |
| Perceived attractiveness (AT) | AT1 | 5.546 | 1.516 | 0.758 | 0.575 | 0.185 | 0.208 |
| | AT2 | 5.615 | 1.583 | 0.789 | 0.623 | 0.199 | 0.226 |
| | AT3 | 5.143 | 1.522 | 0.799 | 0.638 | 0.184 | 0.210 |
| | AT4 | 5.452 | 1.448 | 0.821 | 0.674 | 0.200 | 0.221 |
| | AT5 | 5.006 | 1.594 | 0.825 | 0.681 | 0.203 | 0.227 |
| | AT6 | 5.223 | 1.546 | 0.83 | 0.689 | 0.199 | 0.226 |
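
In this table, the indicator reliability of each item is the square of its loading (for example, 0.723² ≈ 0.523 for EF1). The short Python sketch below recomputes that column from the loadings and flags any item below 0.50. That cut-off is a rule of thumb commonly used in PLS-SEM and is an assumption here, since the table does not state one; the dictionary and function names are illustrative only.

```python
"""Recompute indicator reliability (squared loading) for the reported items."""

# Standardized outer loadings copied from the table. DE1, NO3 and NO4 are not
# listed there, so they are not included here either.
LOADINGS = {
    "EF": {"EF1": 0.723, "EF2": 0.793, "EF3": 0.763, "EF4": 0.766},
    "PE": {"PE1": 0.817, "PE2": 0.753, "PE3": 0.764, "PE4": 0.795},
    "DE": {"DE2": 0.751, "DE3": 0.747, "DE4": 0.820},
    "SI": {"SI1": 0.778, "SI2": 0.818, "SI3": 0.874, "SI4": 0.752},
    "NO": {"NO1": 0.857, "NO2": 0.820},
    "AT": {"AT1": 0.758, "AT2": 0.789, "AT3": 0.799,
           "AT4": 0.821, "AT5": 0.825, "AT6": 0.830},
}

# Assumed rule of thumb (not stated in the source): the construct should
# explain at least half of an indicator's variance.
MIN_RELIABILITY = 0.50


def indicator_reliability(loading: float) -> float:
    """Share of an indicator's variance explained by its construct."""
    return loading ** 2


def weak_items(loadings: dict, threshold: float = MIN_RELIABILITY) -> list:
    """List (item, reliability) pairs whose reliability is below the threshold."""
    return [
        (item, round(indicator_reliability(lam), 3))
        for items in loadings.values()
        for item, lam in items.items()
        if indicator_reliability(lam) < threshold
    ]


if __name__ == "__main__":
    for construct, items in LOADINGS.items():
        for item, lam in items.items():
            print(f"{construct}/{item}: {indicator_reliability(lam):.3f}")
    print("Items below threshold:", weak_items(LOADINGS))
```

With the reported loadings no item falls below the assumed cut-off; the lowest value is EF1 at about 0.523.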

| Construct | Cronbach’s Alpha (Internal Consistency Reliability) | Composite Reliability (Internal Consistency Reliability) | AVE (Convergent Validity) |
|---|---|---|---|
| Threshold | 0.60–0.95 | 0.60–0.95 | >0.50 |
| Perceived efficiency (EF) | 0.759 | 0.847 | 0.58 |
| Perceived perspicuity (PE) | 0.790 | 0.863 | 0.613 |
| Perceived dependability (DE) | 0.667 | 0.817 | 0.598 |
| Perceived stimulation (SI) | 0.820 | 0.881 | 0.651 |
| Perceived novelty (NO) | 0.579 | 0.826 | 0.703 |
| Perceived attractiveness (AT) | 0.891 | 0.916 | 0.647 |
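
Composite reliability and AVE can be cross-checked against the standardized loadings in the item table with the usual PLS-SEM formulas: composite reliability ρc = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = mean(λ²). Cronbach’s alpha needs the item correlations, which are not reported, so it is not recomputed. The Python sketch below is a consistency check under these assumptions, not the authors’ own procedure; the names are illustrative.

```python
"""Cross-check composite reliability (rho_c) and AVE from standardized loadings."""

# Retained items' standardized loadings per construct, from the item table.
LOADINGS = {
    "EF": [0.723, 0.793, 0.763, 0.766],
    "PE": [0.817, 0.753, 0.764, 0.795],
    "DE": [0.751, 0.747, 0.820],
    "SI": [0.778, 0.818, 0.874, 0.752],
    "NO": [0.857, 0.820],
    "AT": [0.758, 0.789, 0.799, 0.821, 0.825, 0.830],
}

# Reported (composite reliability, AVE) from the reliability table.
REPORTED = {
    "EF": (0.847, 0.580),
    "PE": (0.863, 0.613),
    "DE": (0.817, 0.598),
    "SI": (0.881, 0.651),
    "NO": (0.826, 0.703),
    "AT": (0.916, 0.647),
}


def composite_reliability(loadings: list) -> float:
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    explained = sum(loadings) ** 2
    error = sum(1.0 - lam ** 2 for lam in loadings)  # error variance per item
    return explained / (explained + error)


def average_variance_extracted(loadings: list) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


if __name__ == "__main__":
    for construct, lams in LOADINGS.items():
        rep_cr, rep_ave = REPORTED[construct]
        print(f"{construct}: CR={composite_reliability(lams):.3f} (reported {rep_cr}), "
              f"AVE={average_variance_extracted(lams):.3f} (reported {rep_ave})")
```

For every construct the recomputed values agree with the reported ones to three decimals, which suggests the two tables are internally consistent.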

| | EF | PE | DE | SI | NO | AT |
|---|---|---|---|---|---|---|
| Perceived efficiency (EF) | 0.762 | | | | | |
| Perceived perspicuity (PE) | 0.752 | 0.783 | | | | |
| Perceived dependability (DE) | 0.739 | 0.676 | 0.774 | | | |
| Perceived stimulation (SI) | 0.633 | 0.579 | 0.584 | 0.807 | | |
| Perceived novelty (NO) | 0.491 | 0.431 | 0.447 | 0.659 | 0.839 | |
| Perceived attractiveness (AT) | 0.744 | 0.753 | 0.722 | 0.786 | 0.619 | 0.804 |
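
The diagonal of this matrix equals the square root of each construct’s AVE (for example, √0.58 ≈ 0.762 for EF), and the off-diagonal entries are correlations between constructs. This is the layout of the Fornell–Larcker discriminant-validity criterion, under which each correlation should be smaller than the diagonal values of both constructs involved. A small Python sketch that applies that rule to the reported values and also reports the pair with the narrowest margin; the helper names are illustrative.

```python
"""Fornell-Larcker check on the reported latent-variable correlation matrix."""

from itertools import combinations

CONSTRUCTS = ["EF", "PE", "DE", "SI", "NO", "AT"]

# Lower triangle of the table; the diagonal holds sqrt(AVE).
MATRIX = [
    [0.762],
    [0.752, 0.783],
    [0.739, 0.676, 0.774],
    [0.633, 0.579, 0.584, 0.807],
    [0.491, 0.431, 0.447, 0.659, 0.839],
    [0.744, 0.753, 0.722, 0.786, 0.619, 0.804],
]


def value(i: int, j: int) -> float:
    """Read a symmetric entry from the lower-triangular matrix."""
    return MATRIX[max(i, j)][min(i, j)]


def fornell_larcker_violations() -> list:
    """Pairs whose correlation is not below both constructs' sqrt(AVE)."""
    violations = []
    for i, j in combinations(range(len(CONSTRUCTS)), 2):
        corr = value(i, j)
        if corr >= min(value(i, i), value(j, j)):
            violations.append((CONSTRUCTS[i], CONSTRUCTS[j], corr))
    return violations


def smallest_margin() -> tuple:
    """Pair with the smallest gap between min(sqrt(AVE)) and their correlation."""
    margins = (
        (CONSTRUCTS[i], CONSTRUCTS[j],
         round(min(value(i, i), value(j, j)) - value(i, j), 3))
        for i, j in combinations(range(len(CONSTRUCTS)), 2)
    )
    return min(margins, key=lambda m: m[2])


if __name__ == "__main__":
    print("Violations:", fornell_larcker_violations())
    print("Narrowest margin:", smallest_margin())
```

On the reported values no pair violates the criterion; the narrowest margin is between EF and PE (0.762 versus a correlation of 0.752).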

| Construct | Explained Variance (R²) | Effect Size (f²) | Predictive Relevance (Q²) |
|---|---|---|---|
| Threshold | Weak (≥0.25), moderate (≥0.50), substantial (≥0.75) | Small (≥0.02), medium (≥0.15), high (≥0.35) | Small (>0.00), medium (≥0.25), large (≥0.50) |
| Perceived efficiency (EF) | | 0.104 | |
| Perceived perspicuity (PE) | | 0.104 | |
| Perceived dependability (DE) | | 0.036 | |
| Perceived stimulation (SI) | | 0.259 | |
| Perceived novelty (NO) | | 0.035 | |
| Perceived attractiveness (AT) | 0.805 | | 0.516 |
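
The f² values express how much each exogenous construct contributes to the R² of perceived attractiveness. Under the usual definition, f² = (R²_included − R²_excluded) / (1 − R²_included), so with R² = 0.805 one can back out the approximate R² that would remain if a single construct were dropped. The Python sketch below does this and labels each f² with the thresholds listed in the table. Because the inputs are rounded, the implied R² values are only approximations, and the function names are illustrative.

```python
"""Classify f-squared effect sizes and back out the implied R-squared without a construct."""

R2_AT = 0.805  # R^2 of perceived attractiveness (AT), from the table
F2 = {"EF": 0.104, "PE": 0.104, "DE": 0.036, "SI": 0.259, "NO": 0.035}


def effect_label(f2: float) -> str:
    """Map f^2 to the table's bands: >=0.02 small, >=0.15 medium, >=0.35 high."""
    if f2 >= 0.35:
        return "high"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # label for values below the table's lowest band (our choice)


def implied_r2_without(f2: float, r2_included: float = R2_AT) -> float:
    """Solve f^2 = (R2_incl - R2_excl) / (1 - R2_incl) for R2_excl."""
    return r2_included - f2 * (1.0 - r2_included)


if __name__ == "__main__":
    for construct, f2 in F2.items():
        print(f"{construct}: f2={f2:.3f} ({effect_label(f2)}), "
              f"R2 without it ~ {implied_r2_without(f2):.3f}")
```

Read this way, dropping SI (the only medium effect) would lower R² from 0.805 to roughly 0.754, while each of the other constructs accounts for a smaller share.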

| Construct Impact on Perceived Attractiveness (AT) | Path Coefficient (β) | t-Statistic | p-Value | 95% Confidence Interval [2.5%, 97.5%] | Significant (p < 0.05) |
|---|---|---|---|---|---|
| Threshold | ≥0.10 | ≥1.96 | <0.001 strong; <0.01 moderate; <0.05 weak; ≥0.05 no effect | | Yes/No |
| Perceived efficiency (EF) | 0.254 | 7.533 | 0.000 | [0.187, 0.321] | Yes |
| Perceived perspicuity (PE) | 0.226 | 6.279 | 0.000 | [0.156, 0.299] | Yes |
| Perceived dependability (DE) | 0.130 | 4.375 | 0.000 | [0.071, 0.191] | Yes |
| Perceived stimulation (SI) | 0.346 | 11.358 | 0.000 | [0.285, 0.405] | Yes |
| Perceived novelty (NO) | 0.110 | 4.557 | 0.000 | [0.065, 0.158] | Yes |
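
The threshold row combines three checks: a path coefficient of at least 0.10, a t-statistic of at least 1.96, and a p-value band that grades the strength of evidence. A minimal Python sketch applying those rules to the reported paths. It also checks that the 95% confidence interval excludes zero, a standard complementary criterion that is our addition rather than a rule stated in the table, and it treats the reported p = 0.000 as p < 0.001. Names are illustrative.

```python
"""Apply the table's threshold rules to the structural paths into AT."""

# construct: (beta, t-statistic, p-value, (CI 2.5%, CI 97.5%)), from the table.
# p = 0.000 is the rounded value reported, i.e. p < 0.0005.
PATHS = {
    "EF": (0.254, 7.533, 0.000, (0.187, 0.321)),
    "PE": (0.226, 6.279, 0.000, (0.156, 0.299)),
    "DE": (0.130, 4.375, 0.000, (0.071, 0.191)),
    "SI": (0.346, 11.358, 0.000, (0.285, 0.405)),
    "NO": (0.110, 4.557, 0.000, (0.065, 0.158)),
}


def evidence(p: float) -> str:
    """Grade a p-value with the bands in the table's threshold row."""
    if p < 0.001:
        return "strong"
    if p < 0.01:
        return "moderate"
    if p < 0.05:
        return "weak"
    return "no effect"


def meets_thresholds(beta: float, t: float, ci: tuple) -> bool:
    """beta >= 0.10 and t >= 1.96 (table); CI excluding zero is an added check."""
    lower, upper = ci
    return beta >= 0.10 and t >= 1.96 and not (lower <= 0.0 <= upper)


if __name__ == "__main__":
    # Rank paths by the size of their effect on AT (largest first).
    for name, (beta, t, p, ci) in sorted(PATHS.items(), key=lambda kv: -kv[1][0]):
        print(f"{name}: beta={beta:.3f}, evidence={evidence(p)}, "
              f"meets thresholds={meets_thresholds(beta, t, ci)}")
```

All five paths pass every check, and ranking by β gives SI, EF, PE, DE, NO, with NO only just above the 0.10 cut-off.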

| Construct | Importance | Performance |
|---|---|---|
| Perceived efficiency (EF) | 0.254 | 73.847 |
| Perceived perspicuity (PE) | 0.226 | 75.372 |
| Perceived dependability (DE) | 0.13 | 74.509 |
| Perceived stimulation (SI) | 0.346 | 62.164 |
| Perceived novelty (NO) | 0.11 | 60.742 |
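
In an importance-performance map, importance is the total effect of a construct on the target construct (here it equals the path coefficient on AT, because every construct affects AT directly), and performance is the construct’s score rescaled to a 0 to 100 range. The Python sketch below assumes a 1 to 7 response scale for the rescaling and uses the mean importance and mean performance as quadrant cut-offs, which is one common convention among several. PLS software typically rescales weighted latent-variable scores rather than raw item means, so `rescale` only illustrates the linear transformation; all names are illustrative.

```python
"""Importance-performance map analysis (IPMA) quadrants for the AT model."""

from statistics import mean

# construct: (importance = total effect on AT, performance on a 0-100 scale)
IPMA = {
    "EF": (0.254, 73.847),
    "PE": (0.226, 75.372),
    "DE": (0.130, 74.509),
    "SI": (0.346, 62.164),
    "NO": (0.110, 60.742),
}


def rescale(score: float, scale_min: float = 1.0, scale_max: float = 7.0) -> float:
    """Linear 0-100 rescaling; the 1-7 response scale is an assumption."""
    return (score - scale_min) / (scale_max - scale_min) * 100.0


def quadrants(data: dict) -> dict:
    """Classify constructs relative to mean importance and mean performance."""
    imp_cut = mean(imp for imp, _ in data.values())
    perf_cut = mean(perf for _, perf in data.values())
    result = {}
    for name, (imp, perf) in data.items():
        imp_label = "high importance" if imp >= imp_cut else "low importance"
        perf_label = "high performance" if perf >= perf_cut else "low performance"
        result[name] = f"{imp_label} / {perf_label}"
    return result


if __name__ == "__main__":
    for name, label in quadrants(IPMA).items():
        print(f"{name}: {label}")
```

Under these assumed cut-offs, perceived stimulation (SI) combines the largest importance with below-average performance, the quadrant an importance-performance map usually flags first for improvement.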

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sternad Zabukovšek, S.; Deželak, Z.; Parusheva, S.; Bobek, S. Attractiveness of Collaborative Platforms for Sustainable E-Learning in Business Studies. Sustainability 2022, 14, 8257. https://doi.org/10.3390/su14148257