A TLBO-Tuned Neural Processor for Predicting Heating Load in Residential Buildings

Abstract

1. Introduction

2. Materials and Methods

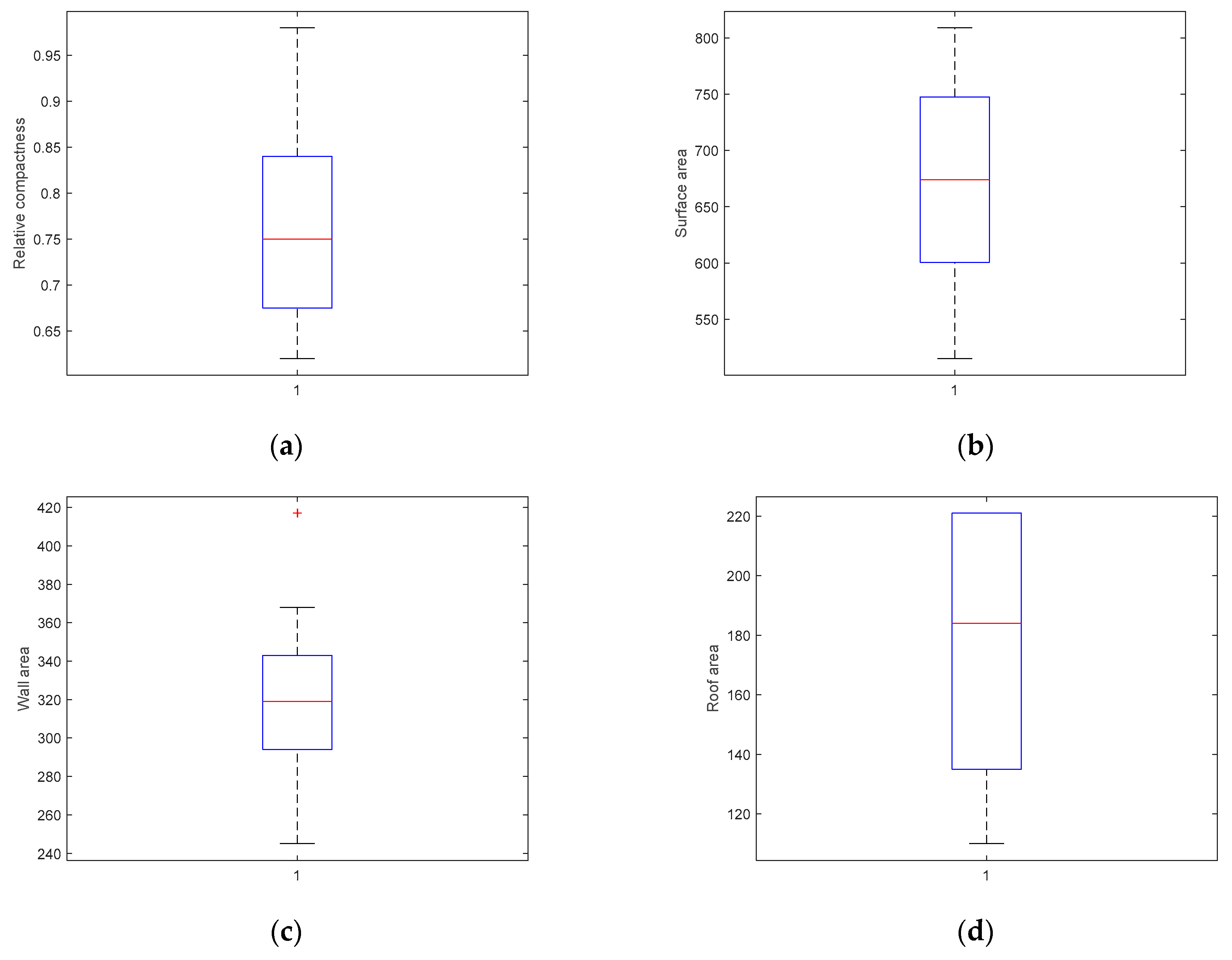

2.1. Data Provision

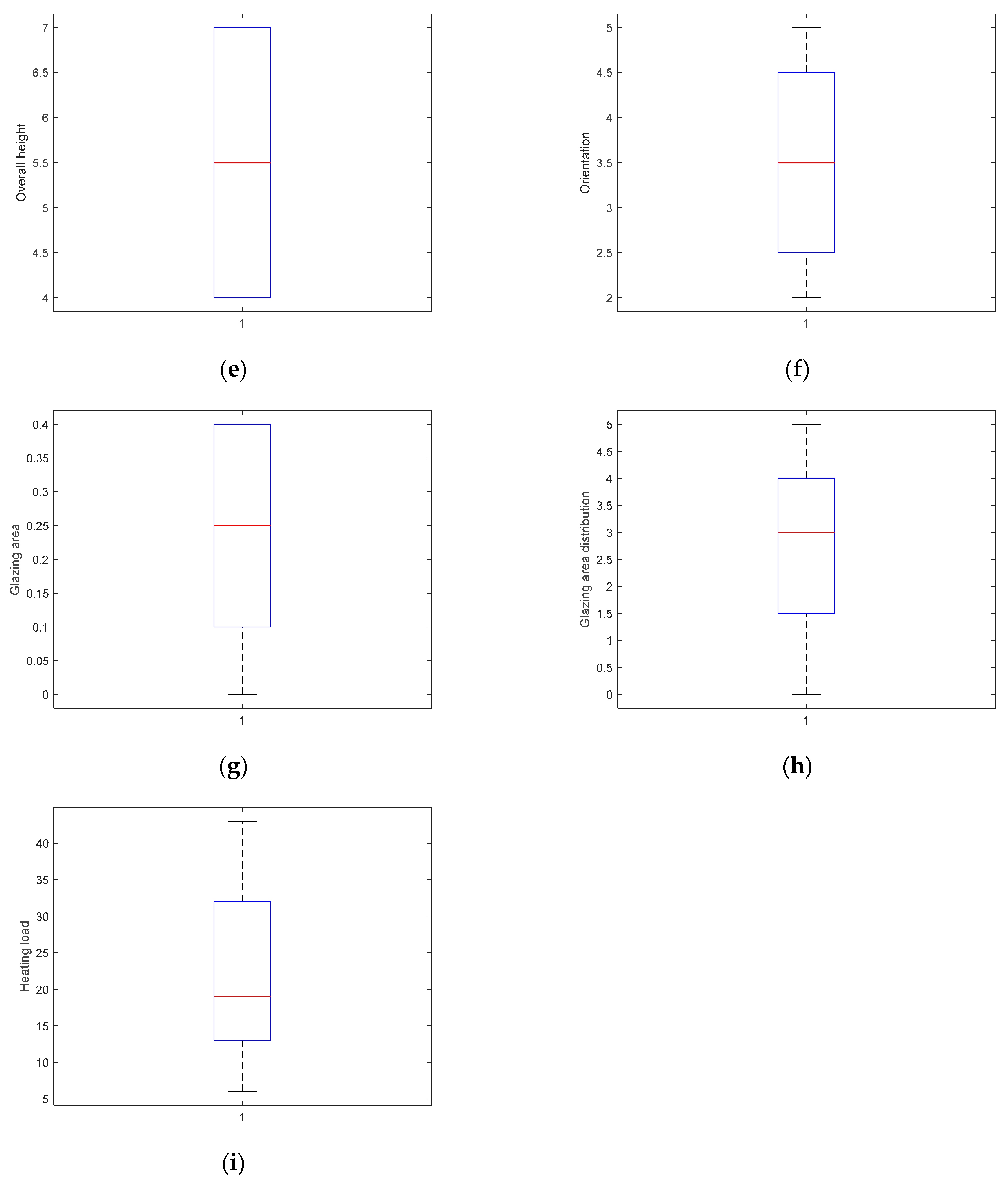

2.2. Methodology

3. Results and Discussion

3.1. Accuracy Indicators

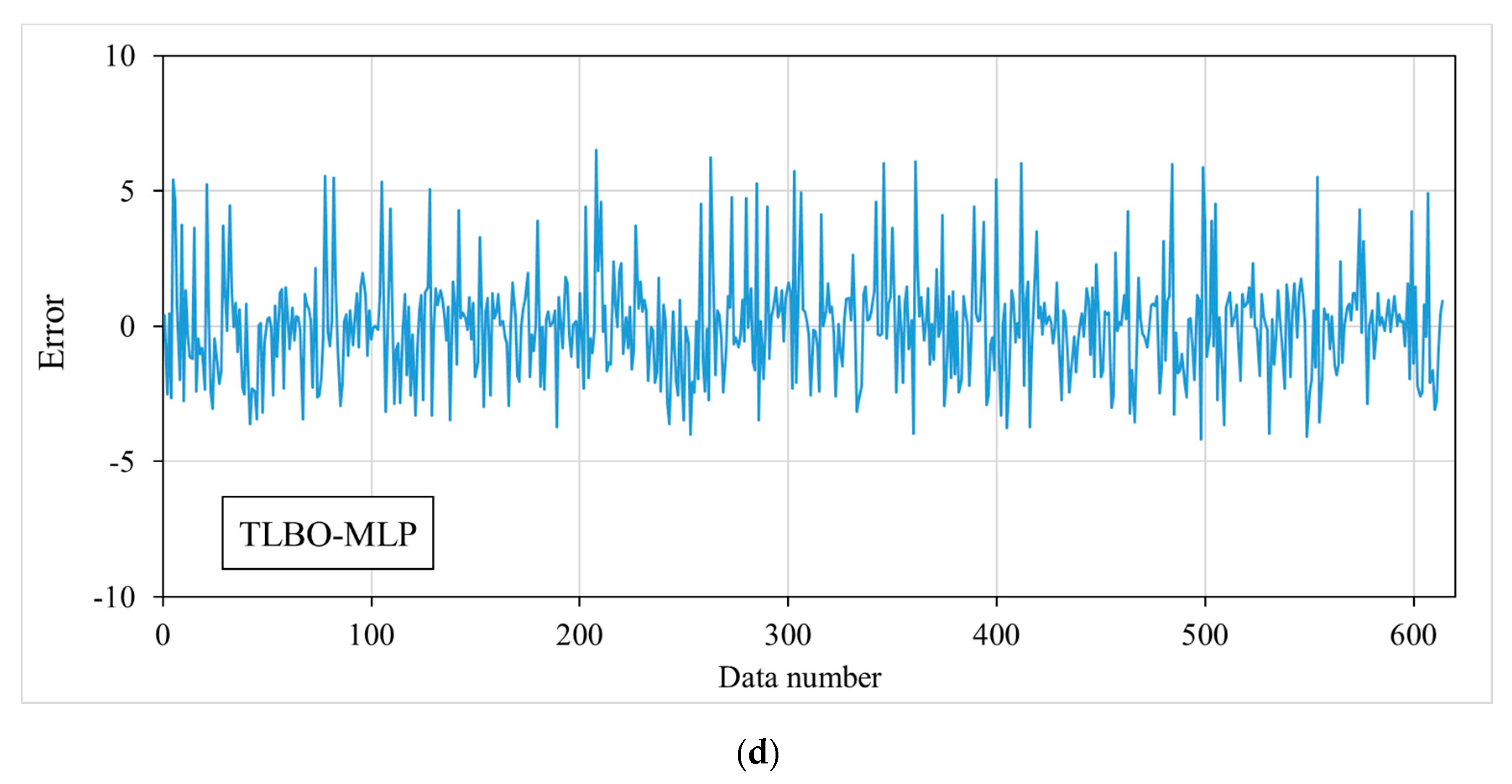

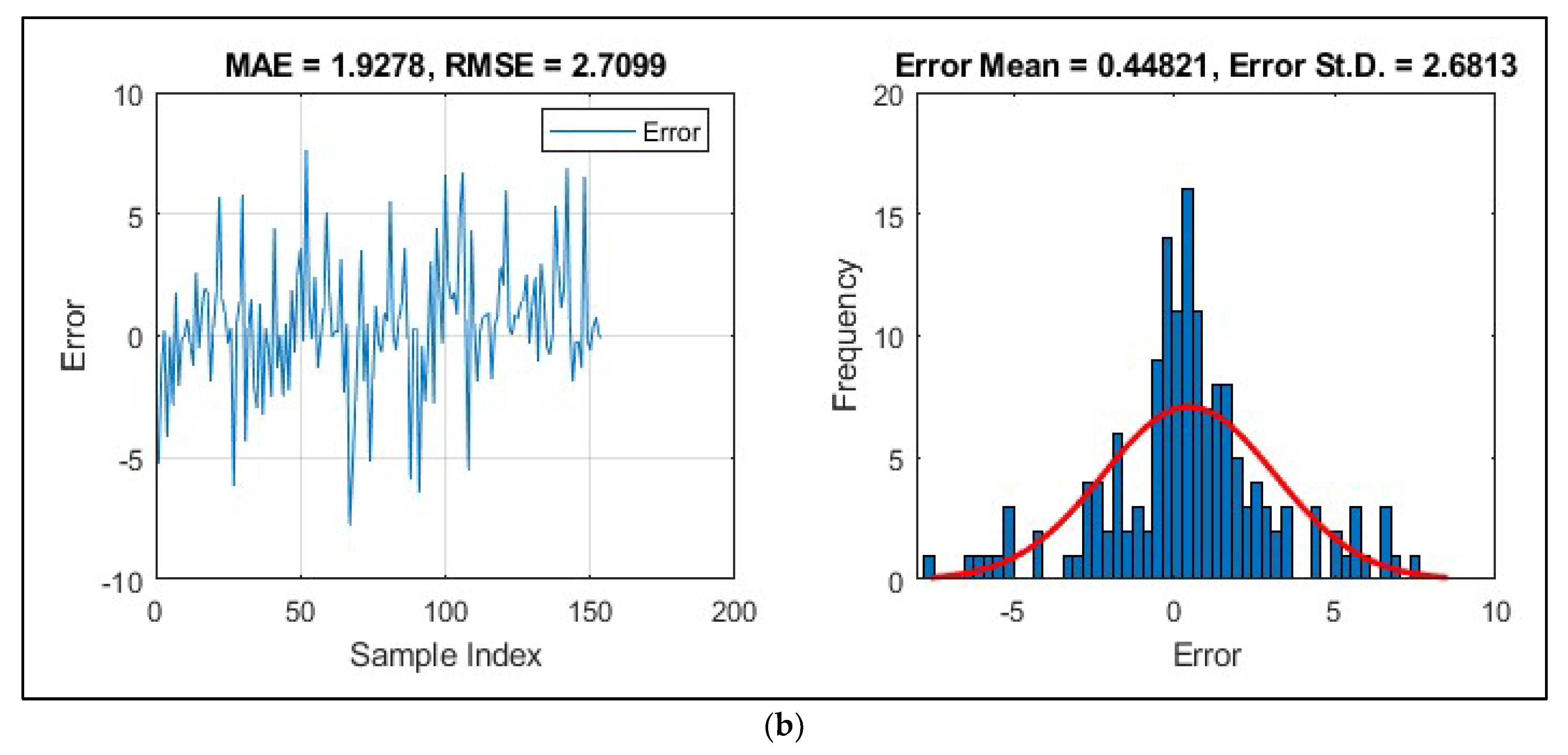

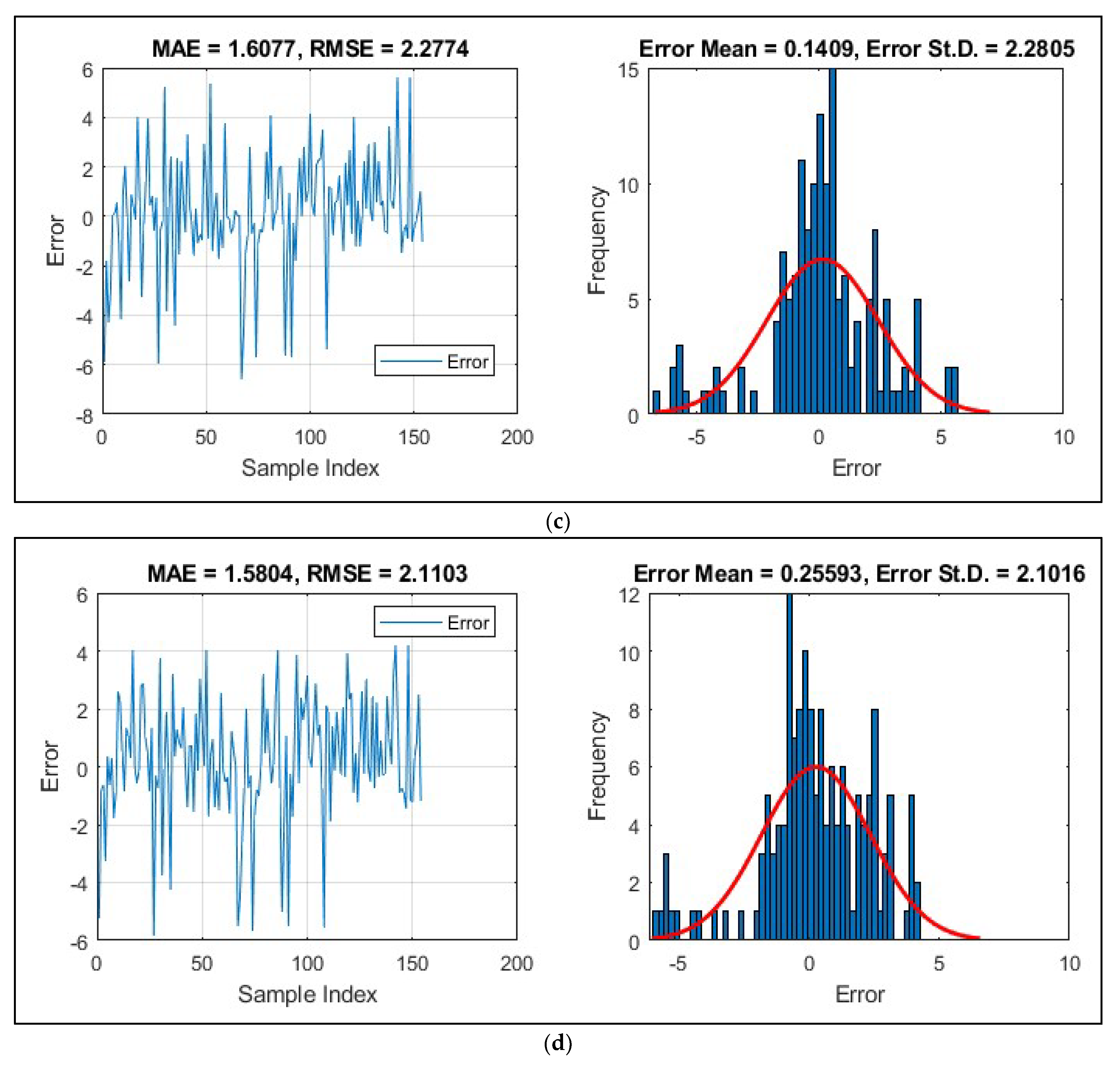

3.2. Incorporated MLP with Optimizers

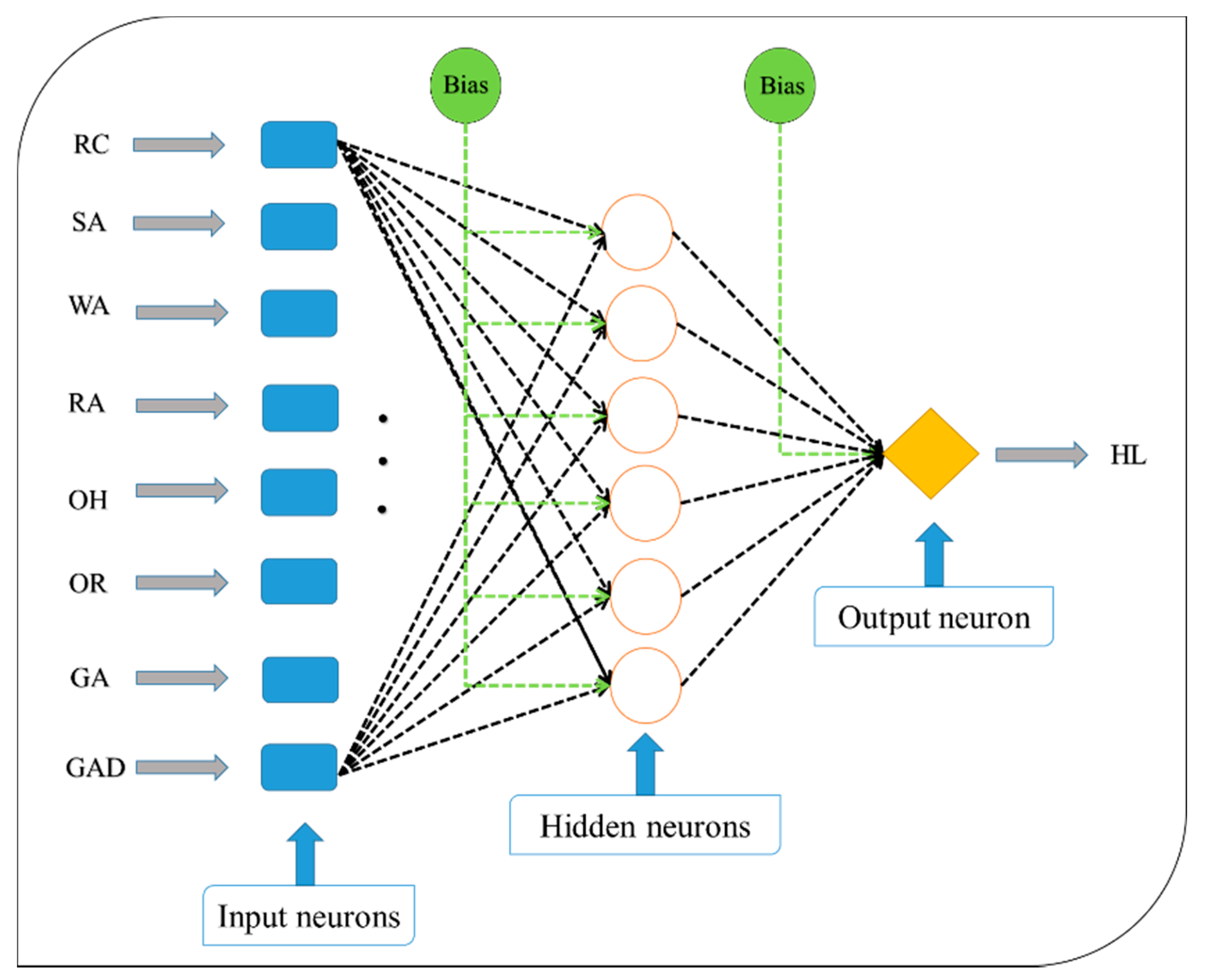

3.3. Prediction Results

3.4. Efficiency Comparison

3.5. Discussion

- (a) With an upcoming construction project, the suggested models can provide an accurate early estimate of the required thermal load from the building's dimensions and characteristics. The models would effectively help engineers and owners select suitable HVAC systems.

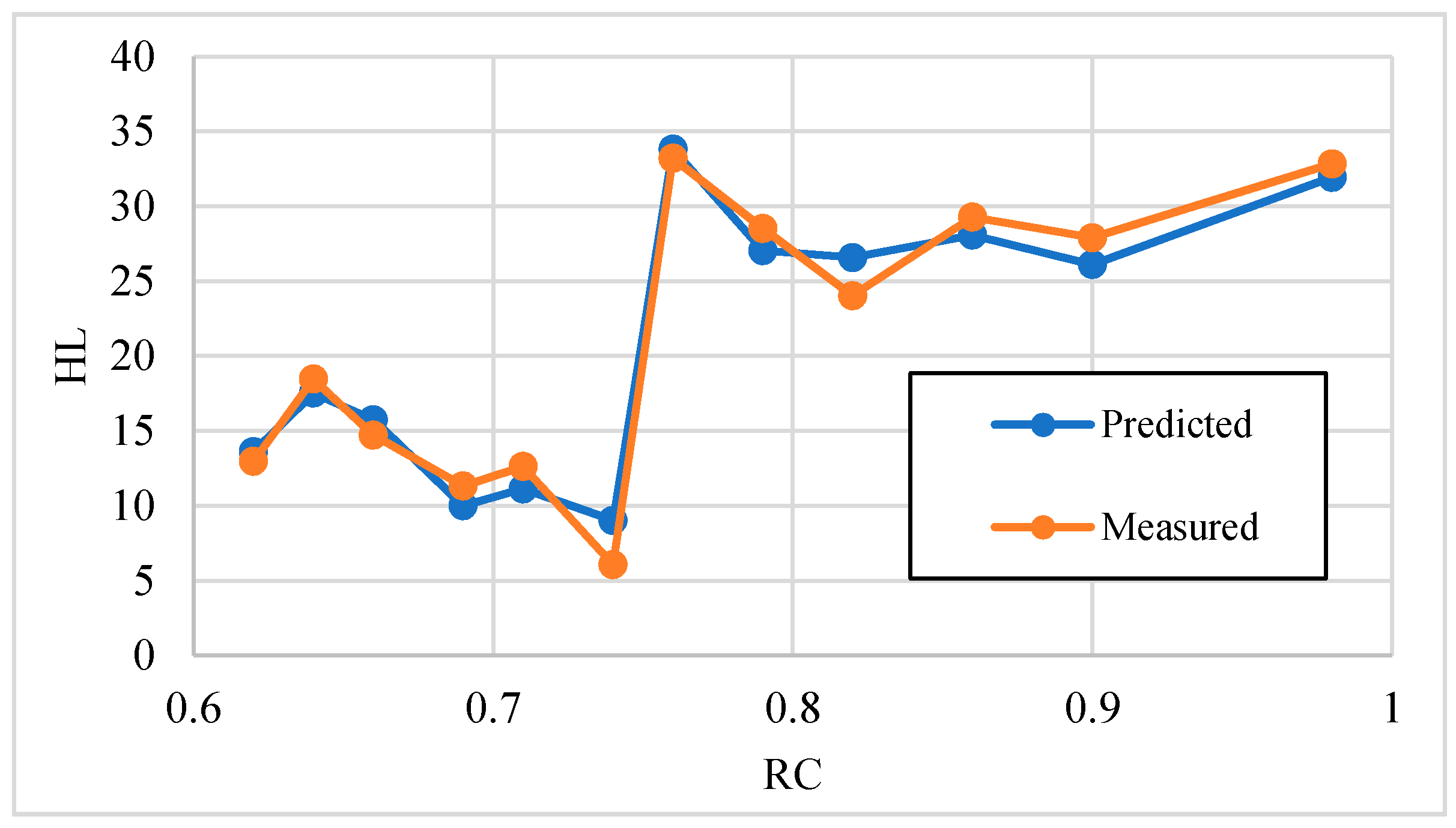

- (b) Another form of early-stage assistance is the proper design of the building itself, i.e., tuning the architecture through the input parameters (RA, RC, GA, WA, OH, SA, OR, and GAD) in reconstruction projects. In this sense, the effect of each input parameter can also be investigated separately to understand the behavior of the thermal load. Figure 9 shows how the HL varies as RC increases. As shown, the trend is irregular and hard to predict; nevertheless, the TLBO-MLP captures it well. Hence, this model can also provide reliable approximations for real-world buildings.
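Item (b) suggests examining each input parameter separately. A minimal sketch of a one-at-a-time (OAT) sweep is given below, assuming the trained network is exposed as a Python callable that maps the eight inputs to an HL value. The function name `one_at_a_time_sweep`, the toy surrogate model, and the baseline values are hypothetical and for illustration only; this is not necessarily how Figure 9 was produced.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: a fitted predictor (e.g., a TLBO-MLP) that maps the
# eight named inputs to a heating load value.
Model = Callable[[Dict[str, float]], float]


def one_at_a_time_sweep(model: Model, baseline: Dict[str, float], name: str,
                        values: List[float]) -> List[Tuple[float, float]]:
    """Vary one input over `values`, holding all others at their baseline values."""
    if name not in baseline:
        raise KeyError(f"unknown input: {name}")
    curve = []
    for v in values:
        x = dict(baseline)  # copy so the caller's baseline is never mutated
        x[name] = v
        curve.append((v, model(x)))
    return curve


if __name__ == "__main__":
    # Toy surrogate for illustration only; NOT the paper's trained model.
    def toy_model(x: Dict[str, float]) -> float:
        return 10.0 + 20.0 * x["RC"] ** 2 + 5.0 * x["GA"]

    # Arbitrary illustrative baseline, not a record from the data set.
    baseline = {"RA": 150.0, "RC": 0.75, "GA": 0.25, "WA": 300.0,
                "OH": 5.0, "SA": 650.0, "OR": 3.0, "GAD": 2.0}
    for rc, hl in one_at_a_time_sweep(toy_model, baseline, "RC", [0.62, 0.74, 0.86, 0.98]):
        print(f"RC={rc:.2f}  HL={hl:.3f}")
```

Because several geometric inputs (e.g., RC, SA, WA, RA, and OH) are linked through the building shape in the underlying data, varying RC alone may create input combinations that no real building has; such a sweep therefore describes the fitted model's response rather than a physically consistent design change.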

4. Conclusions

- According to the sensitivity analysis, the best-performing configurations of the FA-MLP, OIO-MLP, SCE-MLP, and TLBO-MLP ensembles were obtained with swarm sizes of 50, 200, 50, and 300, respectively.

- Compared with the other algorithms, the optimum configuration of the TLBO required considerably more computation time to optimize the MLP.

- Considering the training-phase accuracy (MAEs of 1.6821, 1.9568, 1.5466, and 1.4626, respectively), all four ensembles captured the relationship between the HL and the influential parameters well.

- In the testing phase, MAEs of 1.7979, 1.9278, 1.6077, and 1.5804 indicated a low prediction error and confirmed the success of the implemented models.

- By comparison, the TLBO-MLP emerged as the strongest model, followed by the SCE-MLP, FA-MLP, and OIO-MLP.

- The TLBO and SCE outperformed several other optimizers, including those used in earlier studies.
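The conclusions refer to the swarm (population) size and the computation time of the TLBO when it optimizes the MLP. The sketch below shows one way such tuning can work, assuming a one-hidden-layer MLP with tanh hidden units whose weights and biases are flattened into a single vector, and the training RMSE as the objective. It follows the basic teacher-phase/learner-phase scheme of Rao et al.; the names `tlbo`, `mlp_forward`, `rmse_cost`, and `n_weights`, as well as the layer size, search bounds, iteration count, and toy data, are illustrative assumptions and not the authors' configuration.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def n_weights(n_in: int, n_hidden: int) -> int:
    """Per hidden neuron: n_in input weights, 1 bias, 1 output weight; plus 1 output bias."""
    return n_hidden * (n_in + 2) + 1


def mlp_forward(w: Sequence[float], x: Sequence[float], n_hidden: int) -> float:
    """One-hidden-layer MLP with tanh hidden units and a linear output neuron."""
    n_in = len(x)
    out = w[-1]  # output bias
    for h in range(n_hidden):
        base = h * (n_in + 2)
        s = w[base + n_in]  # hidden-neuron bias
        for i in range(n_in):
            s += w[base + i] * x[i]
        out += w[base + n_in + 1] * math.tanh(s)
    return out


def rmse_cost(w, X, y, n_hidden) -> float:
    """Training RMSE, used here as the objective the optimizer minimizes."""
    se = sum((mlp_forward(w, xi, n_hidden) - yi) ** 2 for xi, yi in zip(X, y))
    return math.sqrt(se / len(y))


def tlbo(cost: Callable[[List[float]], float], dim: int, pop_size: int = 50,
         iters: int = 100, lo: float = -1.0, hi: float = 1.0,
         seed: int = 0) -> Tuple[List[float], float]:
    """Basic TLBO with greedy selection; returns (best vector, best cost)."""
    if pop_size < 2:
        raise ValueError("the learner phase needs at least two learners")
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    fit = [cost(p) for p in pop]
    for _ in range(iters):
        # Teacher phase: move learners toward the best solution, away from the class mean.
        mean = [sum(p[d] for p in pop) / pop_size for d in range(dim)]
        teacher = pop[min(range(pop_size), key=fit.__getitem__)]
        for i in range(pop_size):
            tf = rng.randint(1, 2)  # teaching factor TF in {1, 2}
            cand = [pop[i][d] + rng.random() * (teacher[d] - tf * mean[d]) for d in range(dim)]
            fc = cost(cand)
            if fc < fit[i]:  # keep the candidate only if it improves
                pop[i], fit[i] = cand, fc
        # Learner phase: move toward a better random peer, or away from a worse one.
        for i in range(pop_size):
            j = rng.randrange(pop_size - 1)
            if j >= i:
                j += 1  # guarantees j != i
            step = 1.0 if fit[i] < fit[j] else -1.0
            cand = [pop[i][d] + step * rng.random() * (pop[i][d] - pop[j][d]) for d in range(dim)]
            fc = cost(cand)
            if fc < fit[i]:
                pop[i], fit[i] = cand, fc
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


if __name__ == "__main__":
    # Synthetic toy data (NOT the building data set): y = x0 + x1**2 on a small grid.
    X = [[a / 4, b / 4] for a in range(5) for b in range(5)]
    y = [x0 + x1 ** 2 for x0, x1 in X]
    H = 4
    w, err = tlbo(lambda v: rmse_cost(v, X, y, H), n_weights(2, H), pop_size=30, iters=60)
    print(f"training RMSE: {err:.4f}")
```

In this sketch each iteration evaluates the objective twice per learner (once in each phase), so run time grows roughly linearly with both population size and iteration count. Together with the larger swarm size selected for the TLBO-MLP (300), this is one plausible contributor to its longer computation time, although the paper's own timing setup is not reproduced here.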

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| HVAC | heating, ventilating, and air conditioning |
| ANN | artificial neural network |
| MLP | multi-layer perceptron |
| CL | cooling load |
| HL | heating load |
| PSO | particle swarm optimization |
| ABC | artificial bee colony |
| GWO | gray wolf optimization |
| GOA | grasshopper optimization algorithm |
| FA | firefly algorithm |
| OIO | optics inspired optimization |
| SCE | shuffled complex evolution |
| TLBO | teaching–learning-based optimization |
| RA | roof area |
| RC | relative compactness |
| GA | glazing area; in the model name GA-MLP, genetic algorithm |
| WA | wall area |
| OH | overall height |
| SA | surface area |
| OR | orientation |
| GAD | glazing area distribution |
| RMSE | root mean square error |
| MAE | mean absolute error |
| R2 | coefficient of determination |
| ICA | imperialist competitive algorithm |
| WDO | wind-driven optimization |
| WOA | whale optimization algorithm |
| SHO | spotted hyena optimization |
| SSA | salp swarm algorithm |

References

- McQuiston, F.C.; Parker, J.D. Heating, Ventilating, and Air Conditioning: Analysis and Design; John Wiley & Sons: Hoboken, NJ, USA, 1982.

- Ihara, T.; Gustavsen, A.; Jelle, B.P. Effect of facade components on energy efficiency in office buildings. Appl. Energy 2015, 158, 422–432.

- Rosen, S.L. Using BIM in HVAC design. Ashrae J. 2010, 52, 24.

- Ikeda, S.; Ooka, R. Metaheuristic optimization methods for a comprehensive operating schedule of battery, thermal energy storage, and heat source in a building energy system. Appl. Energy 2015, 151, 192–205.

- Sonmez, Y.; Guvenc, U.; Kahraman, H.T.; Yilmaz, C. A Comperative Study on Novel Machine Learning Algorithms for Estimation of Energy Performance of Residential Buildings. In Proceedings of the 2015 3rd International Istanbul Smart Grid Congress and Fair (ICSG), Istanbul, Turkey, 29–30 April 2015; pp. 1–7.

- Lu, N.; Wang, H.; Wang, K.; Liu, Y. Maximum probabilistic and dynamic traffic load effects on short-to-medium span bridges. Comput. Model. Eng. Sci. 2021, 127, 345–360.

- Chen, Y.; Lin, H.; Cao, R.; Zhang, C. Slope stability analysis considering different contributions of shear strength parameters. Int. J. Geomech. 2021, 21, 04020265.

- Zhang, S.-W.; Shang, L.-Y.; Zhou, L.; Lv, Z.-B. Hydrate Deposition Model and Flow Assurance Technology in Gas-Dominant Pipeline Transportation Systems: A Review. Energy Fuels 2022, 36, 1747–1775.

- Liu, E.; Li, D.; Li, W.; Liao, Y.; Qiao, W.; Liu, W.; Azimi, M. Erosion simulation and improvement scheme of separator blowdown system—A case study of Changning national shale gas demonstration area. J. Nat. Gas Sci. Eng. 2021, 88, 103856.

- Peng, S.; Zhang, Y.; Zhao, W.; Liu, E. Analysis of the influence of rectifier blockage on the metering performance during shale gas extraction. Energy Fuels 2021, 35, 2134–2143.

- Zhang, W.; Tang, Z. Numerical modeling of response of CFRP–Concrete interfaces subjected to fatigue loading. J. Compos. Constr. 2021, 25, 04021043.

- Peng, S.; Chen, Q.; Liu, E. The role of computational fluid dynamics tools on investigation of pathogen transmission: Prevention and control. Sci. Total Environ. 2020, 746, 142090.

- Wei, J.; Xie, Z.; Zhang, W.; Luo, X.; Yang, Y.; Chen, B. Experimental study on circular steel tube-confined reinforced UHPC columns under axial loading. Eng. Struct. 2021, 230, 111599.

- Mou, B.; Bai, Y. Experimental investigation on shear behavior of steel beam-to-CFST column connections with irregular panel zone. Eng. Struct. 2018, 168, 487–504.

- Xie, S.-J.; Lin, H.; Chen, Y.-F.; Wang, Y.-X. A new nonlinear empirical strength criterion for rocks under conventional triaxial compression. J. Cent. South Univ. 2021, 28, 1448–1458.

- Ju, B.-K.; Yoo, S.-H.; Baek, C. Economies of Scale in City Gas Sector in Seoul, South Korea: Evidence from an Empirical Investigation. Sustainability 2022, 14, 5371.

- Liu, Z.; Fang, L.; Jiang, D.; Qu, R. A machine-learning based fault diagnosis method with adaptive secondary sampling for multiphase drive systems. IEEE Trans. Power Electron. 2022, 37, 8767–8772.

- Yahya, S.I.; Aghel, B. Estimation of kinematic viscosity of biodiesel-diesel blends: Comparison among accuracy of intelligent and empirical paradigms. Renew. Energy 2021, 177, 318–326.

- Moayedi, H.; Mehrabi, M.; Kalantar, B.; Abdullahi Mu’azu, M.; Rashid, A.S.A.; Foong, L.K.; Nguyen, H. Novel hybrids of adaptive neuro-fuzzy inference system (ANFIS) with several metaheuristic algorithms for spatial susceptibility assessment of seismic-induced landslide. Geomat. Nat. Hazards Risk 2019, 10, 1879–1911.

- Braspenning, P.J.; Thuijsman, F.; Weijters, A.J.M.M. Artificial Neural Networks: An Introduction to ANN Theory and Practice; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1995; Volume 931.

- Yahya, S.I.; Rezaei, A.; Aghel, B. Forecasting of water thermal conductivity enhancement by adding nano-sized alumina particles. J. Therm. Anal. Calorim. 2021, 145, 1791–1800.

- Peng, S.; Chen, R.; Yu, B.; Xiang, M.; Lin, X.; Liu, E. Daily natural gas load forecasting based on the combination of long short term memory, local mean decomposition, and wavelet threshold denoising algorithm. J. Nat. Gas Sci. Eng. 2021, 95, 104175.

- Seyedashraf, O.; Mehrabi, M.; Akhtari, A.A. Novel approach for dam break flow modeling using computational intelligence. J. Hydrol. 2018, 559, 1028–1038.

- Pinkus, A. Approximation theory of the MLP model in neural networks. Acta Numer. 1999, 8, 143–195.

- Gao, W.; Alsarraf, J.; Moayedi, H.; Shahsavar, A.; Nguyen, H. Comprehensive preference learning and feature validity for designing energy-efficient residential buildings using machine learning paradigms. Appl. Soft Comput. 2019, 84, 105748.

- Ahmad, A.; Ghritlahre, H.K.; Chandrakar, P. Implementation of ANN technique for performance prediction of solar thermal systems: A Comprehensive Review. Trends Renew. Energy 2020, 6, 12–36.

- Liu, T.; Tan, Z.; Xu, C.; Chen, H.; Li, Z. Study on deep reinforcement learning techniques for building energy consumption forecasting. Energy Build. 2020, 208, 109675.

- Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

- Ren, Z.; Motlagh, O.; Chen, D. A correlation-based model for building ground-coupled heat loss calculation using Artificial Neural Network techniques. J. Build. Perform. Simul. 2020, 13, 48–58.

- Mohammadhassani, M.; Nezamabadi-Pour, H.; Suhatril, M.; Shariati, M. Identification of a suitable ANN architecture in predicting strain in tie section of concrete deep beams. Struct. Eng. Mech. 2013, 46, 853–868.

- Sadeghi, A.; Younes Sinaki, R.; Young, W.A.; Weckman, G.R. An Intelligent Model to Predict Energy Performances of Residential Buildings Based on Deep Neural Networks. Energies 2020, 13, 571.

- Sholahudin, S.; Han, H. Simplified dynamic neural network model to predict heating load of a building using Taguchi method. Energy 2016, 115, 1672–1678.

- Khalil, A.J.; Barhoom, A.M.; Abu-Nasser, B.S.; Musleh, M.M.; Abu-Naser, S.S. Energy Efficiency Predicting using Artificial Neural Network. Int. J. Acad. Pedagog. Res. 2019, 3, 1–7.

- Ryu, J.-A.; Chang, S. Data Driven Heating Energy Load Forecast Modeling Enhanced by Nonlinear Autoregressive Exogenous Neural Networks. Int. J. Struct. Civ. Eng. Res. 2019.

- Zhao, D.; Ruan, H.; Zhang, Z. Application of artificial intelligence algorithms in the prediction of heating load. In AIP Conference Proceedings; AIP Publishing LLC: Melville, NY, USA, 2019; p. 020040.

- Adedeji, P.A.; Akinlabi, S.; Madushele, N.; Olatunji, O.O. Hybrid adaptive neuro-fuzzy inference system (ANFIS) for a multi-campus university energy consumption forecast. Int. J. Ambient Energy 2022, 43, 1685–1694.

- Ahmad, T.; Chen, H. Nonlinear autoregressive and random forest approaches to forecasting electricity load for utility energy management systems. Sustain. Cities Soc. 2019, 45, 460–473.

- Namlı, E.; Erdal, H.; Erdal, H.I. Artificial Intelligence-Based Prediction Models for Energy Performance of Residential Buildings. In Recycling and Reuse Approaches for Better Sustainability; Springer: Berlin/Heidelberg, Germany, 2019; pp. 141–149.

- Yepes, V.; Martí, J.V.; García, J. Black hole algorithm for sustainable design of counterfort retaining walls. Sustainability 2020, 12, 2767.

- Jamal, A.; Tauhidur Rahman, M.; Al-Ahmadi, H.M.; Ullah, I.; Zahid, M. Intelligent intersection control for delay optimization: Using meta-heuristic search algorithms. Sustainability 2020, 12, 1896.

- Jitkongchuen, D.; Pacharawongsakda, E. Prediction Heating and Cooling Loads of Building Using Evolutionary Grey Wolf Algorithms. In Proceedings of the 2019 Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunications Engineering (ECTI DAMT-NCON), Nan, Thailand, 30 January–2 February 2019; pp. 93–97.

- Ghahramani, A.; Karvigh, S.A.; Becerik-Gerber, B. HVAC system energy optimization using an adaptive hybrid metaheuristic. Energy Build. 2017, 152, 149–161.

- Katebi, J.; Shoaei-parchin, M.; Shariati, M.; Trung, N.T.; Khorami, M. Developed comparative analysis of metaheuristic optimization algorithms for optimal active control of structures. Eng. Comput. 2020, 36, 1539–1558.

- Martin, G.L.; Monfet, D.; Nouanegue, H.F.; Lavigne, K.; Sansregret, S. Energy calibration of HVAC sub-system model using sensitivity analysis and meta-heuristic optimization. Energy Build. 2019, 202, 109382.

- Bamdad Masouleh, K. Building Energy Optimisation Using Machine Learning and Metaheuristic Algorithms. Ph.D. Thesis, Queensland University of Technology, Brisbane, Australia, 2018.

- Moayedi, H.; Mu’azu, M.A.; Foong, L.K. Novel Swarm-based Approach for Predicting the Cooling Load of Residential Buildings Based on Social Behavior of Elephant Herds. Energy Build. 2020, 206, 109579.

- Moayedi, H.; Mosavi, A. Electrical Power Prediction through a Combination of Multilayer Perceptron with Water Cycle Ant Lion and Satin Bowerbird Searching Optimizers. Sustainability 2021, 13, 2336.

- Yang, F.; Moayedi, H.; Mosavi, A. Predicting the Degree of Dissolved Oxygen Using Three Types of Multi-Layer Perceptron-Based Artificial Neural Networks. Sustainability 2021, 13, 9898.

- Zhou, G.; Moayedi, H.; Bahiraei, M.; Lyu, Z. Employing artificial bee colony and particle swarm techniques for optimizing a neural network in prediction of heating and cooling loads of residential buildings. J. Clean. Prod. 2020, 254, 120082.

- Bui, D.-K.; Nguyen, T.N.; Ngo, T.D.; Nguyen-Xuan, H. An artificial neural network (ANN) expert system enhanced with the electromagnetism-based firefly algorithm (EFA) for predicting the energy consumption in buildings. Energy 2020, 190, 116370.

- Moayedi, H.; Nguyen, H.; Foong, L. Nonlinear evolutionary swarm intelligence of grasshopper optimization algorithm and gray wolf optimization for weight adjustment of neural network. Eng. Comput. 2021, 37, 1265–1275.

- Moayedi, H.; Mehrabi, M.; Mosallanezhad, M.; Rashid, A.S.A.; Pradhan, B. Modification of landslide susceptibility mapping using optimized PSO-ANN technique. Eng. Comput. 2019, 35, 967–984.

- Moayedi, H.; Mehrabi, M.; Bui, D.T.; Pradhan, B.; Foong, L.K. Fuzzy-metaheuristic ensembles for spatial assessment of forest fire susceptibility. J. Environ. Manag. 2020, 260, 109867.

- Mehrabi, M.; Moayedi, H. Landslide susceptibility mapping using artificial neural network tuned by metaheuristic algorithms. Environ. Earth Sci. 2021, 80, 804.

- Mehrabi, M.; Pradhan, B.; Moayedi, H.; Alamri, A. Optimizing an adaptive neuro-fuzzy inference system for spatial prediction of landslide susceptibility using four state-of-the-art metaheuristic techniques. Sensors 2020, 20, 1723.

- Zhou, G.; Moayedi, H.; Foong, L.K. Teaching–learning-based metaheuristic scheme for modifying neural computing in appraising energy performance of building. Eng. Comput. 2021, 37, 3037–3048.

- Tsanas, A.; Xifara, A. Accurate quantitative estimation of energy performance of residential buildings using statistical machine learning tools. Energy Build. 2012, 49, 560–567.

- Moré, J.J. The Levenberg-Marquardt algorithm: Implementation and theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116.

- Hecht-Nielsen, R. Theory of the backpropagation neural network. In Neural Networks for Perception; Elsevier: Amsterdam, The Netherlands, 1992; pp. 65–93.

- Yang, X.-S. Firefly algorithm. Nat. Inspired Metaheuristic Algorithms 2008, 20, 79–90.

- Yang, X.-S. Firefly algorithm, stochastic test functions and design optimisation. arXiv 2010, arXiv:1003.1409.

- Yang, X.-S. Multiobjective firefly algorithm for continuous optimization. Eng. Comput. 2013, 29, 175–184.

- Zeng, Y.; Zhang, Z.; Kusiak, A. Predictive modeling and optimization of a multi-zone HVAC system with data mining and firefly algorithms. Energy 2015, 86, 393–402.

- Kashan, A.H. A new metaheuristic for optimization: Optics inspired optimization (OIO). Comput. Oper. Res. 2015, 55, 99–125.

- Kashan, A.H. An effective algorithm for constrained optimization based on optics inspired optimization (OIO). Comput. Aided Des. 2015, 63, 52–71.

- Jalili, S.; Husseinzadeh Kashan, A. Optimum discrete design of steel tower structures using optics inspired optimization method. Struct. Des. Tall Spec. Build. 2018, 27, e1466.

- Özdemir, M.T.; Öztürk, D. Optimal PID Tuning for Load Frequency Control using Optics Inspired Optimization Algorithm. IJNES 2016, 10, 1–6.

- Duan, Q.; Gupta, V.K.; Sorooshian, S. Shuffled complex evolution approach for effective and efficient global minimization. J. Optim. Theory Appl. 1993, 76, 501–521.

- Ira, J.; Hasalová, L.; Jahoda, M. The use of optimization in fire development modeling, The use of optimization techniques for estimation of pyrolysis model input parameters. In Proceedings of the International Conference, Prague, Czechia, 19–20 April 2013.

- Meshkat Razavi, H.; Shariatmadar, H. Optimum parameters for tuned mass damper using Shuffled Complex Evolution (SCE) Algorithm. Civ. Eng. Infrastruct. J. 2015, 48, 83–100.

- Stewart, I.; Aye, L.; Peterson, T. Global optimisation of chiller sequencing and load balancing using Shuffled Complex Evolution. In Proceedings of the AIRAH and IBPSA’s Australasian Building Simulation 2017 Conference, Melbourne, Australia, 15–16 November 2017.

- Yang, T.; Asanjan, A.A.; Faridzad, M.; Hayatbini, N.; Gao, X.; Sorooshian, S. An enhanced artificial neural network with a shuffled complex evolutionary global optimization with principal component analysis. Inf. Sci. 2017, 418–419, 302–316.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011, 43, 303–315.

- Talatahari, S.; Taghizadieh, N.; Goodarzimehr, V. Hybrid Teaching-Learning-Based Optimization and Harmony Search for Optimum Design of Space Trusses. J. Optim. Ind. Eng. 2020, 13, 177–194.

- Shukla, A.K.; Singh, P.; Vardhan, M. An adaptive inertia weight teaching-learning-based optimization algorithm and its applications. Appl. Math. Model. 2020, 77, 309–326.

- Nguyen, H.; Mehrabi, M.; Kalantar, B.; Moayedi, H.; Abdullahi, M.A.M. Potential of hybrid evolutionary approaches for assessment of geo-hazard landslide susceptibility mapping. Geomat. Nat. Hazards Risk 2019, 10, 1667–1693.

- Mehrabi, M. Landslide susceptibility zonation using statistical and machine learning approaches in Northern Lecco, Italy. Nat. Hazards 2022, 111, 901–937.

- Tien Bui, D.; Moayedi, H.; Anastasios, D.; Kok Foong, L. Predicting heating and cooling loads in energy-efficient buildings using two hybrid intelligent models. Appl. Sci. 2019, 9, 3543.

- Guo, Z.; Moayedi, H.; Foong, L.K.; Bahiraei, M. Optimal modification of heating, ventilation, and air conditioning system performances in residential buildings using the integration of metaheuristic optimization and neural computing. Energy Build. 2020, 214, 109866.

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Engineering Faculty, Computer Engineering Department, Erciyes University: Kayseri, Turkey, 2005.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Atashpaz-Gargari, E.; Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 4661–4667.

- Bayraktar, Z.; Komurcu, M.; Werner, D.H. Wind Driven Optimization (WDO): A novel nature-inspired optimization algorithm and its application to electromagnetics. In Proceedings of the 2010 IEEE Antennas and Propagation Society International Symposium, Toronto, ON, Canada, 11–17 July 2010; pp. 1–4.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Dhiman, G.; Kumar, V. Multi-objective spotted hyena optimizer: A Multi-objective optimization algorithm for engineering problems. Knowl. Based Syst. 2018, 150, 175–197.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Park, J.; Lee, S.J.; Kim, K.H.; Kwon, K.W.; Jeong, J.-W. Estimating thermal performance and energy saving potential of residential buildings using utility bills. Energy Build. 2016, 110, 23–30.

- Yezioro, A.; Dong, B.; Leite, F. An applied artificial intelligence approach towards assessing building performance simulation tools. Energy Build. 2008, 40, 612–620.

- Wu, D.; Foong, L.K.; Lyu, Z. Two neural-metaheuristic techniques based on vortex search and backtracking search algorithms for predicting the heating load of residential buildings. Eng. Comput. 2020, 38, 347–660.

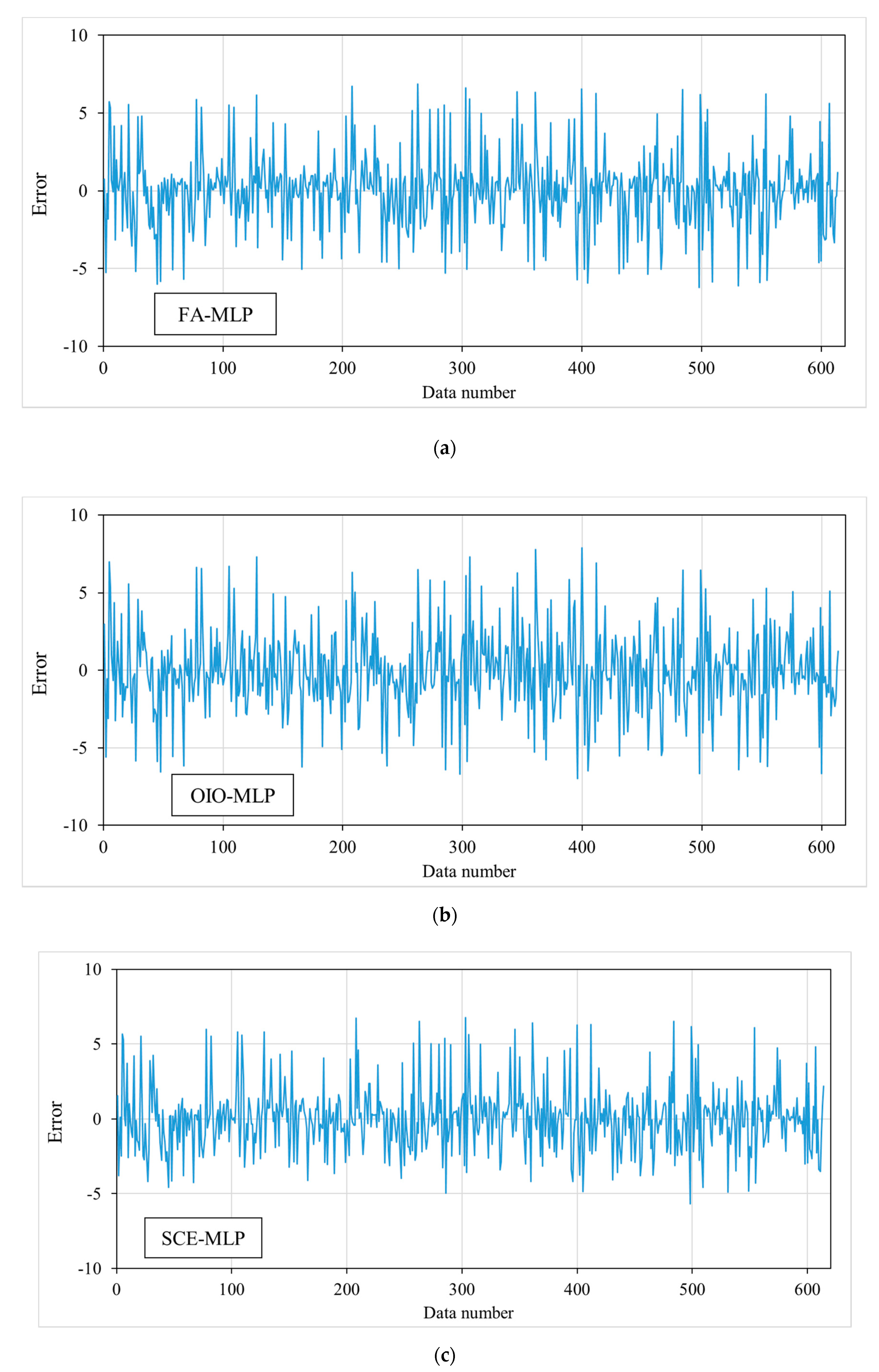

| Study | Model | Training RMSE | Training MAE | Training R2 | Testing RMSE | Testing MAE | Testing R2 |
|---|---|---|---|---|---|---|---|
| This study | FA-MLP | 2.3838 | 1.6821 | 0.9426 | 2.5456 | 1.7979 | 0.9438 |
|  | OIO-MLP | 2.6256 | 1.9568 | 0.9304 | 2.7099 | 1.9278 | 0.9373 |
|  | SCE-MLP | 2.1448 | 1.5466 | 0.9536 | 2.2774 | 1.6077 | 0.9556 |
|  | TLBO-MLP | 1.9817 | 1.4626 | 0.9604 | 2.1103 | 1.5804 | 0.9610 |
| [49] | ABC-MLP | 2.9855 | 2.1197 | 0.9120 | 2.6159 | 1.9111 | 0.9349 |
|  | PSO-MLP | 2.9736 | 2.1479 | 0.9126 | 2.5693 | 1.8630 | 0.9370 |
| [78] | GA-MLP | 2.9986 | 2.1797 | 0.8711 | 2.8878 | 2.0622 | 0.9076 |
|  | ICA-MLP | 2.8050 | 2.0068 | 0.8816 | 2.7819 | 2.0089 | 0.9115 |
| [79] | WDO-MLP | 2.5896 | 1.7944 | 0.9344 | 2.8312 | 1.9863 | 0.9213 |
|  | WOA-MLP | 2.6998 | 1.9702 | 0.9287 | 2.9213 | 2.1921 | 0.9154 |
|  | SHO-MLP | 4.2283 | 3.2232 | 0.8337 | 4.1501 | 3.1092 | 0.8385 |
|  | SSA-MLP | 2.4321 | 1.6737 | 0.9421 | 2.7527 | 1.9178 | 0.9248 |
| [51] | GOA-MLP | 2.3715 | 1.6934 | 0.9432 | 2.4459 | 1.7373 | 0.9486 |
|  | GWO-MLP | 2.2959 | 1.6475 | 0.9468 | 2.2899 | 1.6514 | 0.9551 |
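The table compares models through RMSE, MAE, and R2 in the training and testing phases. The sketch below computes these indicators under their common textbook definitions; treating R2 as 1 − SS_res/SS_tot is an assumption, since some studies instead report the squared Pearson correlation. The second part reproduces the ranking stated in the Conclusions from the testing-phase values reported for this study's four models. The names `accuracy_indicators`, `TEST_METRICS`, and `rank_models` are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def accuracy_indicators(y_true: Sequence[float],
                        y_pred: Sequence[float]) -> Tuple[float, float, float]:
    """Return (RMSE, MAE, R2) for paired observed and predicted heating loads."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot                       # assumes y_true is not constant
    return rmse, mae, r2


# Testing-phase (RMSE, MAE, R2) of this study's four models, copied from the table.
TEST_METRICS: Dict[str, Tuple[float, float, float]] = {
    "FA-MLP": (2.5456, 1.7979, 0.9438),
    "OIO-MLP": (2.7099, 1.9278, 0.9373),
    "SCE-MLP": (2.2774, 1.6077, 0.9556),
    "TLBO-MLP": (2.1103, 1.5804, 0.9610),
}


def rank_models(metrics: Dict[str, Tuple[float, float, float]]) -> List[str]:
    """Order models from best to worst by RMSE (lower is better)."""
    return sorted(metrics, key=lambda m: metrics[m][0])


if __name__ == "__main__":
    print(rank_models(TEST_METRICS))  # -> ['TLBO-MLP', 'SCE-MLP', 'FA-MLP', 'OIO-MLP']
```

Ranking by testing MAE (ascending) or testing R2 (descending) gives the same order for these four models, which is consistent with the ranking given in the Conclusions.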

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Almutairi, K.; Algarni, S.; Alqahtani, T.; Moayedi, H.; Mosavi, A. A TLBO-Tuned Neural Processor for Predicting Heating Load in Residential Buildings. Sustainability 2022, 14, 5924. https://doi.org/10.3390/su14105924
