Abstract

The dramatic growth in the number of buildings worldwide has led to an increased interest in predicting energy consumption, especially for residential buildings. As heating and cooling systems strongly affect the operating cost of buildings, it is worth investigating the development of models to predict the heating and cooling loads of buildings. In contrast to the majority of the existing related studies, which are based on historical energy consumption data, this study considers building characteristics, such as area and floor height, to develop prediction models of heating and cooling loads. In particular, this study proposes deep neural network models tuned over several hyper-parameters: the number of hidden layers, the number of neurons in each layer, and the learning algorithm. The tuned models are constructed using a dataset generated with the Integrated Environmental Solutions Virtual Environment (IESVE) simulation software for the city of Buraydah, the capital of the Qassim region in Saudi Arabia. The Qassim region was selected because of its harsh arid climate of extremely cold winters and hot summers, which means that a lot of energy is used for cooling and heating of residential buildings. Through model tuning, optimal parameters of deep learning models are determined using the following performance measures: Mean Square Error (MSE), Root Mean Square Error (RMSE), Regression (R) values, and coefficient of determination (R²). The five-layer deep neural network model, with 20 neurons in each layer and the Levenberg–Marquardt algorithm, outperformed the other models with fewer layers. This model achieved an MSE of 0.0075, an RMSE of 0.087, and R and R² both as high as 0.99 in predicting the heating load, and an MSE of 0.245, an RMSE of 0.495, and R and R² both as high as 0.99 in predicting the cooling load.
As the developed prediction models are based on building characteristics, the outcomes of the research may be relevant to architects at the pre-design stage of heating and cooling energy-efficient buildings.

1. Introduction

Over the past decade, there has been a rapid increase in the number of residential buildings, which has raised worldwide interest in climate change, global carbon emissions [1,2], global warming, urban growth, and fast construction development. In Saudi Arabia, all building sectors together account for around 70% of the total energy consumption, and about 50% of that is used by residential buildings [3,4]. Many residential buildings in Saudi Arabia are detached or semidetached types, which demand more cooling and heating loads than typical flat apartments.

The Qassim region has a very harsh, arid climate characterized by hot summers and cold winters. Thus, energy in buildings is used primarily for heating during winter and cooling during summer. In fact, almost 76% of the total energy consumption in residential buildings goes to heating and cooling loads [4].

Surprisingly, the effectiveness of energy consumption is not well documented in this region. Following the Saudi vision 2030 [5] to enhance energy efficiency, this study proposes a predictive model for energy consumption of residential buildings in the Qassim region based on a typical condition of building characteristics.

Building characteristics, such as form orientation, roof type and wall insulation, among others, are essential elements that determine the energy consumption of residential buildings. Different terms have been used to identify building characteristics. The building characteristics, or building envelope, are key design parameters that significantly affect the energy consumption of buildings [6]. Therefore, architects and designers must adopt proactive energy prediction strategies to support the development of the built environment and to improve building energy efficiency.

Many studies have addressed building prediction modeling for energy consumption [7,8,9,10,11,12,13]. However, their prediction models were based mainly on historical or recorded data of building energy consumption [8], while building characteristics were ignored. Most of the current studies that do consider building characteristics apply conventional machine learning methods, whereas deep learning is rarely used. Deep neural networks, which are considered an extension of conventional artificial neural networks, allow multi-task learning [14] and have been shown to extract features automatically from the related datasets. The majority of the existing studies are based on the benchmark dataset (of 768 records) created by Tsanas and Xifara [15], whose relatively small size is not appropriate for deep learning models.

To fill the identified gap in energy consumption prediction, for this study, a larger dataset of 3840 instances was first generated and deep neural networks models were then developed. The dataset was generated for the capital of the Qassim region in Saudi Arabia, the city of Buraydah. To get the optimum prediction model, deep neural network models are tuned using different combinations of the hyper-parameters: number of hidden layers, number of neurons per layer and training algorithm.

The hyper-parameter tuning is fully described in Section 3.2. As such, the main goal of this study is to identify the optimal parameters of deep learning models for energy consumption prediction based on buildings’ characteristics. Such models are very useful to guide the predesign stage of heating and cooling energy efficient buildings.

The main contributions of this study are:

- A generated dataset for predicting energy loads based on building characteristics in an arid climate, i.e., the Qassim region, Saudi Arabia. Unlike the datasets available in the literature, the generated dataset is larger in size and dedicated to an arid climate;

- Deep neural network models with different parameter tunings to predict building energy consumption. In fact, previous studies of buildings’ energy consumption mostly depended on typical machine learning methods rather than deep learning methods. This point is further elaborated in Section 2;

- Development of energy consumption models to predict heating and cooling loads of buildings in arid climate. In contrast to the majority of the state of the art studies, the prediction models in this study are based on the buildings characteristics rather than the historical data of energy consumption.

The rest of the paper is organized as follows. Section 2 briefly reviews the related literature and identifies research gaps. Section 3 describes the methods, the dataset generation and the hyper-parameter tuning of the proposed deep neural network models. Section 4 reports the experimental results and compares the performance of the tuned models with each other and with state-of-the-art models. Section 5 discusses the obtained results and Section 6 concludes the paper.

2. Related Work

Numerous studies in the existing literature have addressed building prediction modeling for energy consumption. However, the majority of them do not consider building characteristics to be the most important predictors but historical data of energy consumption or, in some cases, historical data with climate data. Despite the significance of these studies’ outcomes, there is still a lack of studies on prediction models based on the building characteristics, known as building envelope, data. Building envelope refers to wall materials, roof materials, the window to wall ratio, and facade orientation [16]. Energy prediction based on buildings characteristics allows architects and designers to optimize the building’s design at an early stage. Table 1 summarizes the related studies that have used building’s characteristics as predictors for energy consumption in terms of the prediction method used, the classification method used, either machine learning (ML) or deep learning (DL), the dataset used, and the location of the study.

Table 1.

Summary of the related studies.

In the following, a summary of the related works is provided.

Al Tarhuni et al. [17] predicted each month’s natural gas energy consumption based on integrating the physics-based approach and data energy system characteristics. Adding to that, meteorological data and historical energy consumption for all residences were used. Results of the study indicate that 36% of energy consumption reduction can be achieved with efficient energy prediction.

Al-Rakhami et al. [18] introduced an ensemble learning approach, using the XGBoost method to address overfitting, to design an efficient prediction model. With a dataset containing 768 samples of building attributes, they obtained a lower mean square error and better accuracy for cooling and heating energy prediction than previous studies. The study by Navarro-Gonzalez and Villacampa [22] also addressed the overfitting issue, using the Octahedric regression method with lower computational complexity. Because of the high demand of industrial and residential buildings for efficient smart energy techniques, Reference [29] proposed a system that utilized an ensemble deep learning-based approach to predict energy consumption via chronological dependencies. The study used benchmark, residential UCI, and local Korean commercial building datasets. The data is passed to the proposed ensemble model, which extracts hybrid discriminative features via convolutional neural network (CNN), stacked, and bi-directional long short-term memory (LSTM) architectures. The study concluded by reporting a lower error rate.

A component-based approach with two construction and zone levels was proposed by Geyer and Singaravel [19] to improve the machine learning models in the whole building design. They showed that high quality of estimation could be achieved with lower error rate of 3.7% and 3.9% for cooling and heating respectively.

Different machine learning approaches for energy load estimation, including Extreme Learning Machine (ELM), Online Sequential ELM (OSELM), and Bidirectional ELM (B-ELM), were proposed by Kumar et al. [20]. Substantial improvement in energy prediction was achieved with ELM and B-ELM. In addition, a faster learning rate was observed for the newly proposed model compared to SVR, RF and ANNs. ELM was also used by Naji et al. [21] for building energy consumption estimation based on building material thickness and thermal insulation capability. The results showed an improvement in prediction accuracy with ELM compared to Genetic Programming (GP) and ANNs.

Sadeghi et al. [23] applied DNNs, with Multi-Layer Perceptron (MLP) network, to predict heating load and cooling load for a variety of structures. With various extensive testing of data pre-processing techniques, DNNs enhanced previous ANN models.

Seyedzadeh et al. [24] generated two datasets with two different simulation software packages to investigate ML accuracy in predicting building heating and cooling loads. A grid search coupled with a cross-validation method was used to study combinations of the model parameters. Among the five models studied, the outcomes indicated that Gradient Boosted Regression Trees (GBRT) provided the most accurate predictions based on the RMSE. However, NNs were found to be the best for complex datasets.

Sharif and Hammad [25] focused on developing an ANN model to predict energy consumption with a large and complicated dataset generated by the SBMO model. The findings of this study revealed that the suggested ANN models were capable of accurately predicting Total Energy Consumption (TEC), Life Cycle Assessment (LCA), and Life Cycle Cost (LCC) for entire building re-modeling scenarios that included the building envelope, HVAC, and lighting systems.

Singaravel et al. [26] compared the deep learning model with building performance simulation using 201 design cases. The deep learning model showed high accuracy for cooling prediction, with an R² of 0.983. The model was less accurate for heating prediction, with an R² of 0.848, which can be resolved using more heating data from a better sampling model. The study confirmed that the simulation results using the deep learning model could be acquired in 0.9 s, which is considered a high computation speed for building performance simulation.

Tran et al. [27] used real data from residential buildings to develop an evolutionary Neural Machine Inference Model (ENMIM) for energy consumption prediction. Their new ensemble model integrates two single supervised learning machines: the Least Squares Support Vector regression (LSSVR) and the Radial Basis Function Neural Network (RBFNN). The developed model has better accuracy than other compared artificial intelligence techniques for predicting energy consumption.

The study of Zhou et al. [28] uses the Artificial Bee Colony (ABC) and Particle Swarm Optimization (PSO) metaheuristic algorithms to optimize the MLP NN in order to predict the heating and cooling loads of energy-efficient buildings for residential usage. To do so, they used a dataset with eight independent variables: relative compactness, surface area, wall area, roof area, overall height, orientation, glazing area, and glazing area distribution. Their results indicate that using ABC and PSO algorithms improves the MLP performance. Furthermore, they determined that PSO was better than the ABC in terms of MLP performance enhancement.

The above literature review evidences the successful use of machine learning models to address problems related to buildings energy prediction. However, the following research gaps were observed:

- There is little information on how to use deep learning models and how to tune them to the task at hand for the best predictive accuracy and consistency. While machine learning methods are used by most studies, deep learning is rarely used.

- Many of the studies that use building characteristics as inputs to the prediction model are based on the benchmark dataset of 768 records by Tsanas and Xifara [15]. Other self-generated datasets are kept private and are not available for experimental replication.

- Most of the studies are conducted in non-arid climates such as Spain, Greece, the USA, Canada and China.

This paper, therefore, lays out an applicable approach to tuning deep learning models to building energy data using a larger open-access self-generated dataset in Qassim, a typical arid climate region.

3. Method

This section presents the dataset generated to train the DNN models and the tuning of the models using several hyper-parameters.

3.1. Dataset Description

Figure 1 shows a snapshot of the base model: a typical detached family house found in many cities in the Qassim region. The total area of the house is 145.87 m², while the total volume is 408.42 m³. Table 2 shows details of the building envelope. Typical heavy construction was applied to build the house. Concrete is the primary material used in the walls and roof. A thermal layer (10 mm) was used in the top roof; 4 mm of double clear glass was installed in the windows. Plasterboard was applied as the exterior finishing of the building.

Figure 1.

A snapshot of the case study in IESVE.

Table 2.

Envelope construction features of the selected case study.

The thermal dataset was generated in the Integrated Environmental Solutions Virtual Environment (IESVE) simulation software [30]. IESVE has been recognized as a building performance simulation tool by LEED (Leadership in Energy and Environmental Design), BREEAM (Building Research Establishment Environmental Assessment Method) and Green Mark. The dataset contains eight attributes as inputs and two response variables as outputs. For the input variables, the dataset includes two building areas with two different floor heights, five glazing areas, six glazing U-values, four roof U-values and eight wall U-values, which yields 2 × 2 × 5 × 6 × 4 × 8 = 3840 data records. For the output variables, the dataset includes heating and cooling, which refer to the sensible cooling load through the space envelope (wall, window and roof), calculated in kWh per year. Five Window to Wall Ratios (WWR), obtained by dividing the total glazing area in all the walls by the total wall area, were used with the two floor heights. Table 3 and Table 4 summarize the physical and thermal characteristics of the input and output variables in the model simulation, respectively. The generated dataset is available at [31].
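The record count quoted above follows from taking every combination of the listed factor levels. The following minimal sketch checks that arithmetic. The factor names are illustrative labels chosen here, not identifiers from the published dataset, and the mapping from these six factors to the eight input attributes is left to Table 3.

```python
from itertools import product

# Number of levels per design factor, as stated in the text.
# The keys are hypothetical labels introduced for this illustration.
levels = {
    "building_area": 2,
    "floor_height": 2,
    "glazing_area": 5,
    "glazing_u_value": 6,
    "roof_u_value": 4,
    "wall_u_value": 8,
}

# Enumerate every combination of level indices (a full-factorial design).
design_points = list(product(*(range(n) for n in levels.values())))

print(len(design_points))  # 2 * 2 * 5 * 6 * 4 * 8 = 3840
```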

Table 3.

Descriptions of input variables in the model simulation.

Table 4.

Descriptions of output variables in the model simulation.

The selection of wall and roof materials should follow a sustainable approach based on energy and environmental aspects [32]. Thickness also plays a key role in the thermal performance inside buildings [33]. Motivated by [34,35,36,37,38], the wall and roof U-values have been obtained according to the material and insulation types and thicknesses. For instance, a wall U-value of 3.34 W/m²K, as shown in Table 3, is obtained with reinforced concrete (125 mm) covered by inside plaster (10 mm) and exterior render (10 mm). By increasing the reinforced concrete to 250 mm, the U-value improves to 2.82 W/m²K. Adding 10 mm of cavity improves the wall U-value to 2.11 W/m²K, while adding 100 mm of expanded polystyrene as insulation and another layer of reinforced concrete (125 mm) achieves a wall U-value of 0.26 W/m²K. The roof U-value of 0.47 W/m²K is obtained with a concrete deck (150 mm), polystyrene insulation (10 mm), and tiles and cement finishing (8 mm). The U-value is improved to 0.35 W/m²K by increasing the thickness of the insulation from 10 to 60 mm. The U-value of 0.13 W/m²K is achieved using white roof coatings as a final top finishing layer. All construction details mentioned above are examples of how the required U-value for each simulation can be achieved; however, it is worth highlighting that location, climate, and local resources could affect the availability of construction materials. Therefore, materials should be selected in accordance with local authorities, codes and regulations.
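To illustrate why thicker or better-insulating layers lower the U-value, the sketch below uses the usual series-resistance relation, U = 1 / (R_si + Σ d/λ + R_se). The surface resistances and the thermal conductivities are assumptions introduced here for illustration; the paper does not list them, so the printed numbers are not expected to reproduce the U-values in Table 3.

```python
# Surface resistances in m²K/W for a wall; conventional values,
# assumed here rather than taken from the paper.
R_SI = 0.13  # internal surface
R_SE = 0.04  # external surface

def u_value(layers, r_si=R_SI, r_se=R_SE):
    """Return the U-value in W/m²K for (thickness_m, conductivity_W_per_mK) layers."""
    r_total = r_si + r_se + sum(d / k for d, k in layers)
    return 1.0 / r_total

# Hypothetical conductivities in W/mK; not values from the study.
K_CONCRETE, K_PLASTER, K_RENDER = 2.3, 0.5, 1.0

# Plaster, reinforced concrete and render, with the two concrete thicknesses
# mentioned in the text (125 mm and 250 mm).
thin_wall = [(0.010, K_PLASTER), (0.125, K_CONCRETE), (0.010, K_RENDER)]
thick_wall = [(0.010, K_PLASTER), (0.250, K_CONCRETE), (0.010, K_RENDER)]

print(round(u_value(thin_wall), 2), round(u_value(thick_wall), 2))
```

Under these assumed properties the thicker wall has the lower U-value, which is the same direction of change as the 3.34 to 2.82 W/m²K improvement reported above.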

3.2. Hyper-Parameter Tuning of Deep Neural Networks Models

This study is based on a self-generated dataset for predicting heating and cooling loads based on building characteristics. The dataset contains 3840 observations and eight building characteristics. To achieve the aim of developing an accurate prediction model, sixteen experiments with different configurations were designed (see Table 5). Three parameters were tuned: the number of hidden layers (2, 3, 4, 5), the number of neurons in each hidden layer (10, 20), and the backpropagation algorithm (the Levenberg–Marquardt (LM) algorithm and the Scaled Conjugate Gradient (SCG) algorithm). The single-layer model was excluded, as the scope of this study is to tune deep neural networks with two or more hidden layers.
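The sixteen configurations are the product of the three tuned parameters (4 × 2 × 2). A minimal sketch of that grid follows. The ordering inside each group of four is an assumption, chosen so that the 20-neuron LM configurations fall on experiments 3, 7, 11 and 15, as quoted elsewhere in the text; the dictionary keys are illustrative names.

```python
from itertools import product

hidden_layers = [2, 3, 4, 5]
neurons_per_layer = [10, 20]
training_algorithms = ["LM", "SCG"]

# One dictionary per experiment, numbered from 1.
# The numbering within each layer group is assumed, not taken from Table 5.
configs = [
    {"experiment": i, "hidden_layers": h, "neurons": n, "algorithm": a}
    for i, (h, n, a) in enumerate(
        product(hidden_layers, neurons_per_layer, training_algorithms), start=1
    )
]

print(len(configs))  # 16
for cfg in configs:
    if cfg["neurons"] == 20 and cfg["algorithm"] == "LM":
        print(cfg["experiment"], cfg["hidden_layers"])
```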

Table 5.

The different configurations of the models’ parameters.

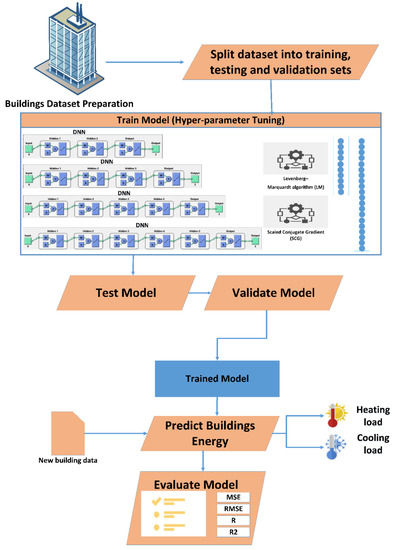

Figure 2 shows the implemented methodology. First, the building dataset is generated using the IESVE software. Second, the dataset is randomly split (see Table 6) into 70% for training, 15% for testing, and 15% for validation. Third, the training phase adjusts the network according to its error. Fourth, the testing phase measures the network performance during and after training. Fifth, in the validation phase, samples are used to measure the generalizability of the proposed network; validation is also used to stop training when the network generalization no longer improves.
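A random 70/15/15 split of the 3840 records can be sketched as below. The function name, the fixed seed and the rounding rule are assumptions made for illustration; the subset sizes printed here follow arithmetically from the stated percentages.

```python
import random

def split_indices(n_samples, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle sample indices and split them into training, validation and test subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_frac)
    n_val = round(n_samples * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the remainder goes to the test subset
    return train, val, test

train_idx, val_idx, test_idx = split_indices(3840)
print(len(train_idx), len(val_idx), len(test_idx))  # 2688 576 576
```

Keeping the three subsets disjoint is what allows the validation samples to act as a stopping criterion and the test samples to give an independent performance estimate.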

Figure 2.

The overall methodology followed in this study.

Table 6.

Number of samples for training, testing and validation.

For model evaluation, four metrics were used to measure the performance of the proposed network: Mean Square Error (MSE), Root Mean Square Error (RMSE), Regression (R) values and coefficient of determination (R²).

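The MSE is the average squared difference between the predicted and actual variables, i.e., heating and cooling loads. The lower the value, the better:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

RMSE is the square root of the MSE and is useful for capturing large differences between predicted and actual outputs. Like MSE, low values of RMSE indicate better model performance:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

The R value measures the correlation between the predicted and actual variables. The closer the R value is to 1, the stronger the relationship and the better the model:

$$R = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2 \sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}}$$

R² measures the proportion of variance in the dependent variable that is predictable from the independent variables. The closer the R² value is to 1, the stronger the relationship and the better the model:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $\hat{y}_i$ identifies the predicted value for sample $i$, $y_i$ identifies the actual value for sample $i$, $n$ is the sample size, and $\bar{y}$ and $\bar{\hat{y}}$ indicate the means of the actual and predicted values, respectively.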
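The four measures can be computed directly from paired actual and predicted values. The sketch below uses their standard textbook definitions, which are assumed to match the ones used in the study; the function name and the four sample values are illustrative only.

```python
import math

def regression_metrics(actual, predicted):
    """Return MSE, RMSE, Pearson R and R² for paired actual/predicted values."""
    n = len(actual)
    errors = [y - y_hat for y, y_hat in zip(actual, predicted)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    rmse = math.sqrt(mse)
    mean_actual = sum(actual) / n
    mean_pred = sum(predicted) / n
    ss_actual = sum((y - mean_actual) ** 2 for y in actual)
    ss_pred = sum((p - mean_pred) ** 2 for p in predicted)
    cov = sum((y - mean_actual) * (p - mean_pred) for y, p in zip(actual, predicted))
    r = cov / math.sqrt(ss_actual * ss_pred)
    r2 = 1.0 - ss_res / ss_actual
    return {"MSE": mse, "RMSE": rmse, "R": r, "R2": r2}

# Made-up values for illustration, not data from the study.
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [10.5, 11.5, 14.5, 15.5]
metrics = regression_metrics(actual, predicted)
print(metrics)
```

Because RMSE is the square root of MSE, the reported pairs can be cross-checked: √0.0075 ≈ 0.087 for the heating load and √0.245 ≈ 0.495 for the cooling load.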

4. Experimental Results

The experiments were carried out on a computer with an Intel Core i7-9700K 3.6 GHz CPU, 16 GB of RAM, and an NVIDIA GeForce RTX 2060 8 GB GPU.

4.1. Prediction Results of Energy Consumption

Table 7 shows the MSE, RMSE, R, and R² values obtained for the different models when predicting heating and cooling loads collectively. It is worth mentioning that almost all tuned models performed well in terms of R and R². However, they performed very differently in terms of MSE and RMSE. This disparity indicates the importance of choosing the corresponding weights.

Table 7.

Results of tuning the DNN models of the generated dataset.

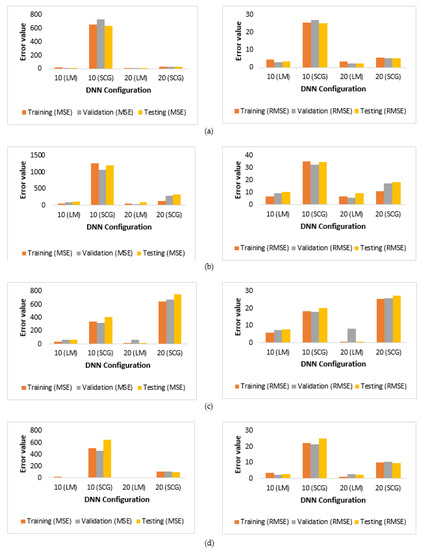

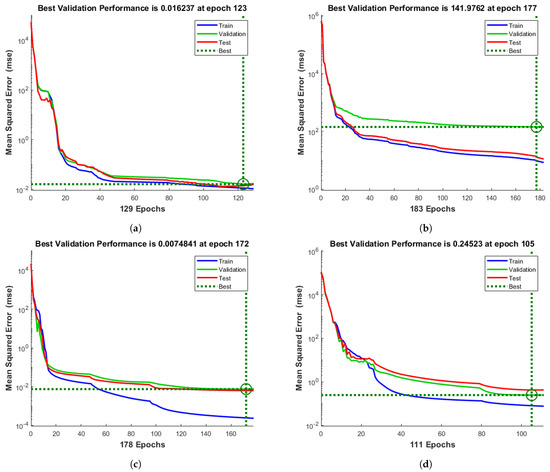

By grouping the experiments based on the number of hidden layers (Figure 3), it is obvious that the best configuration in all groups was: 20 neurons in the hidden layers and LM as the learning algorithm (shaded in Table 7).

Figure 3.

Prediction results, in terms of MSE and RMSE, for the DNN models with different combinations of hyper parameters: (a) two-layer DNN (experiment 3), (b) three-layer DNN (experiment 7), (c) four-layer DNN (experiment 11), and (d) five-layer DNN (experiment 15).

Based on the results, the best two performing models are those used in experiment 3 (two hidden layers of 20 neurons with the LM training algorithm) and experiment 15 (five hidden layers of 20 neurons with the LM algorithm). These two models are comparatively better than all other models and thus are further investigated and compared in the following section. The comparison is made in terms of performance and computation time predicting the heating load and the cooling load independently.
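To make the size difference between the two selected architectures concrete, the following sketch builds fully connected networks with eight inputs and two outputs and counts their trainable parameters. The tanh hidden activation, the linear output layer and the random initialization are assumptions introduced here; the text does not specify them, and no training is performed.

```python
import math
import random

def layer_sizes(n_inputs, n_hidden_layers, n_neurons, n_outputs):
    return [n_inputs] + [n_neurons] * n_hidden_layers + [n_outputs]

def count_parameters(sizes):
    """Weights plus biases of a fully connected network."""
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))

def init_network(sizes, seed=0):
    """Random weights and zero biases; a placeholder initialization."""
    rng = random.Random(seed)
    return [
        ([[rng.uniform(-0.5, 0.5) for _ in range(fan_in)] for _ in range(fan_out)],
         [0.0] * fan_out)
        for fan_in, fan_out in zip(sizes, sizes[1:])
    ]

def forward(network, x):
    """Forward pass: tanh on hidden layers (assumed), linear output layer."""
    for i, (weights, biases) in enumerate(network):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        is_output = i == len(network) - 1
        x = z if is_output else [math.tanh(v) for v in z]
    return x

two_layer = layer_sizes(8, 2, 20, 2)   # configuration of experiment 3
five_layer = layer_sizes(8, 5, 20, 2)  # configuration of experiment 15
print(count_parameters(two_layer), count_parameters(five_layer))  # 642 1902

outputs = forward(init_network(five_layer), [0.0] * 8)
print(len(outputs))  # 2 (heating and cooling load)
```

Under these assumptions the five-layer network has roughly three times as many trainable parameters as the two-layer one.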

4.2. The Best-Performed Model for Predicting Building Heating and Cooling Loads Independently

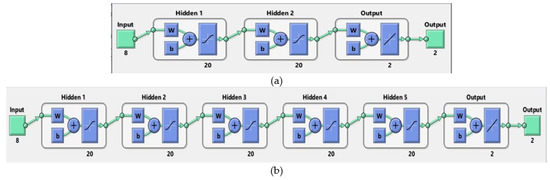

The models utilized in experiment 3 and experiment 15 are represented in Figure 4. To examine the difference between the two models, the same tuned hyper-parameters were employed in further experiments predicting the heating and cooling loads independently rather than collectively. The prediction results as well as the computation times are shown in Table 8. Remarkably, the training times of the two models differed: the time required to train the five-layer model (experiment 15) was lower than the time required to train the two-layer model (experiment 3).

Figure 4.

Graphical representation of the best performed models: (a) two-layer model (experiment 3) and (b) five-layer model (experiment 15).

Table 8.

Results of the best performed DNN models for predicting heating load and cooling load separately.

Based on the MSE and RMSE measures, the two models performed well in predicting both the heating load and the cooling load independently. However, both models achieved better performance in predicting the heating load than the cooling load. The five-layer DNN performed better than the two-layer DNN on the training, validation and testing datasets. The heating and cooling prediction results of the two models are further illustrated using four kinds of plots: how the models’ performance improved during training (Figure 5), the corresponding error histograms for the two models (Figure 6), the regression plots that show the values predicted by the models against the simulated heating and cooling loads (Figure 7), and a sample of the actual and predicted values for both models (Figure 8).

Figure 5.

Evaluation of the best-performed model based on MSE measure in predicting (a) heating load of two-layer DNN, and (b) cooling load of two-layer DNN, (c) heating load of five-layer DNN, and (d) cooling load of five-layer DNN.

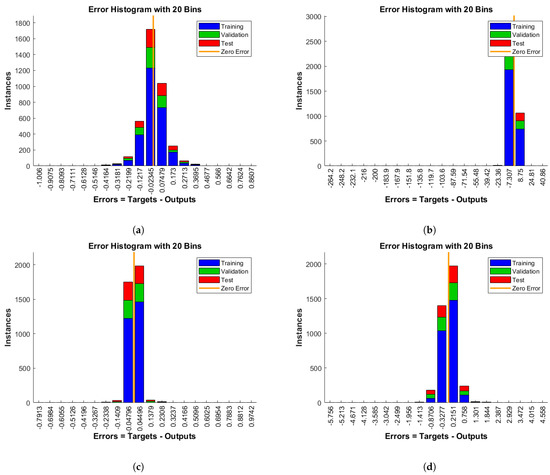

Figure 6.

Error histogram of the best-performed model in predicting (a) heating load of two-layer DNN, and (b) cooling load of two-layer DNN, (c) heating load of five-layer DNN, and (d) cooling load of five-layer DNN.

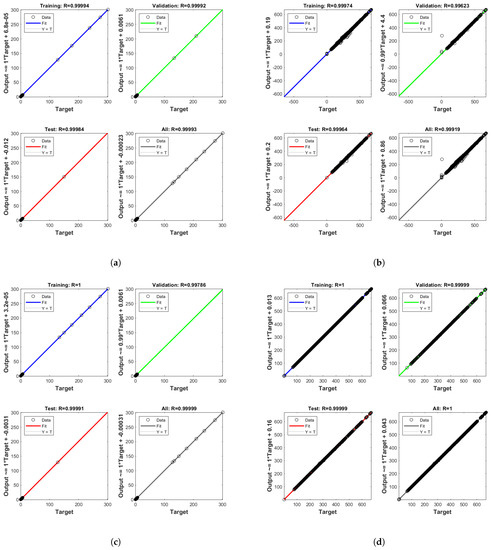

Figure 7.

Regression plot of the best-performed model (a) heating load of two-layer DNN, and (b) cooling load of two-layer DNN, (c) heating load of five-layer DNN, and (d) cooling load of five-layer DNN.

Figure 8.

The actual and predicted values of energy consumption (a) heating load using the two-layer DNN model, (b) cooling load using the two-layer DNN model, (c) heating load using the five-layer DNN model, and (d) cooling load using the five-layer DNN model.

Figure 5 depicts the MSE performance for the training, validation, and test datasets for the two models in log scale. The final network is the network that performed best on the validation set. The five-layer model MSE decreased rapidly as the network was trained to predict both heating and cooling loads and achieved smaller error values than the two-layer model. Based on the validation dataset, the five-layer model achieved an MSE as low as 0.007 at epoch 172 when predicting the heating load (Figure 5c) and 0.245 at epoch 105 when predicting the cooling load (Figure 5d).

Figure 6 shows the error histograms of the two models on the training, testing and validation datasets. Each histogram shows the errors between the target values and the predicted values after training the DNN models. As these error values indicate how far the predicted values lie above or below the target values, they can be positive or negative. The number of bins is the number of vertical bars shown in the graph; here, the total error range is divided into 20 smaller bins. The heating prediction errors obtained with the two-layer model were higher than those obtained with the five-layer model. In particular, the highest bin corresponded to an error of −0.02345, and the height of that bin for the training dataset lies above but close to 1200. For the validation and test datasets, it lies between 1300 and 1600. This means that many samples from the different datasets have an error that lies in that small range. In addition, the zero error falls in the bin just before −0.02345. As most errors are near zero, this indicates that the model performed very well in predicting the heating load and the cooling load.
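The binning described above can be sketched as follows: the errors are computed per sample, the range between the smallest and largest error is divided into 20 equal-width bins, and the samples in each bin are counted. The sign convention (target minus output) and the synthetic data are assumptions for illustration only and do not reproduce Figure 6.

```python
import random

def error_histogram(targets, outputs, n_bins=20):
    """Bin the errors (target minus output) into n_bins equal-width bins."""
    errors = [t - o for t, o in zip(targets, outputs)]
    low, high = min(errors), max(errors)
    width = (high - low) / n_bins or 1.0  # guard against identical errors
    counts = [0] * n_bins
    for e in errors:
        k = min(int((e - low) / width), n_bins - 1)  # the maximum falls in the last bin
        counts[k] += 1
    centers = [low + (k + 0.5) * width for k in range(n_bins)]
    return centers, counts

# Synthetic targets and outputs, not the paper's data.
rng = random.Random(0)
targets = [rng.uniform(20.0, 80.0) for _ in range(1000)]
outputs = [t + rng.gauss(0.0, 0.1) for t in targets]

centers, counts = error_histogram(targets, outputs)
peak = max(range(len(counts)), key=counts.__getitem__)
print(f"tallest bin is centred at {centers[peak]:+.4f} and holds {counts[peak]} samples")
```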

Another measure of how well the model has fit the data is the regression plot. The regression was plotted in Figure 7 across all samples: training, validation, and testing. The regression plot shows the model outputs plotted against the associated target values. As the figure depicts, the linear fit closely follows the output-target relationship. This indicates that the model has learned to fit the data well.
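A regression plot of this kind is usually summarized by a least-squares line through the (target, output) pairs; a slope near 1 and an intercept near 0 mean the outputs track the targets closely. The sketch below fits such a line on made-up values. The function name and the data are illustrative assumptions, not results from the study.

```python
def fit_output_vs_target(targets, outputs):
    """Least-squares line: output = slope * target + intercept."""
    n = len(targets)
    mean_t = sum(targets) / n
    mean_o = sum(outputs) / n
    s_tt = sum((t - mean_t) ** 2 for t in targets)
    s_to = sum((t - mean_t) * (o - mean_o) for t, o in zip(targets, outputs))
    slope = s_to / s_tt
    intercept = mean_o - slope * mean_t
    return slope, intercept

# Outputs constructed to lie exactly on a known line, for illustration.
targets = [10.0, 20.0, 30.0, 40.0]
outputs = [0.98 * t + 0.3 for t in targets]

slope, intercept = fit_output_vs_target(targets, outputs)
print(round(slope, 3), round(intercept, 3))  # 0.98 0.3
```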

As an example of the prediction results using the two best-performing models, Figure 8 shows the actual and predicted values of the heating and cooling loads for both models. The actual and predicted cooling loads follow similar trends with the two models. This means that a specific high configuration of inputs will result in an increased cooling load output. However, it is clear that the heating and cooling prediction results of the five-layer DNN were more accurate than those of the two-layer DNN.

5. Discussion

Based on the results presented in the previous section, we draw the following conclusions:

- Results of energy performance prediction depend on the parameters’ tuning of the DNNs.

- The prediction model performs better with the higher number of neurons in each hidden layer.

- The LM algorithm results in a more accurate prediction model than the SCG algorithm. A possible explanation is that the dataset used may be considered small (fewer than 10,000 samples), a setting in which LM is known to perform well compared to other training algorithms.

- The two-layer and the five-layer models with 20 neurons and the LM training algorithm achieved comparable results for predicting the heating and cooling loads collectively. However, for predicting the heating and cooling loads independently, the five-layer model was more accurate and also faster than the two-layer model.
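
As background for the LM observation in the list above, the standard textbook form of the Levenberg–Marquardt weight update is given below. The symbols are introduced only for this illustration and are not taken from the study: w is the vector of p network weights, e is the vector of prediction errors (output minus target), J is the Jacobian of e with respect to w, and μ is a damping factor.

```latex
\mathbf{w}_{k+1} = \mathbf{w}_k - \left(\mathbf{J}^{\top}\mathbf{J} + \mu \mathbf{I}\right)^{-1}\mathbf{J}^{\top}\mathbf{e}
```

For small μ the step approaches a Gauss–Newton step, and for large μ it approaches a short gradient-descent step. Each update solves a p × p linear system, so the cost grows quickly with the number of weights, which is one commonly cited reason why LM is favored for small and medium-sized problems.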

Table 9 presents the performance measures reported by previous studies for predicting the heating and cooling loads of buildings. The results are compared with the outcomes of the best model of our study: the five-layer model with 20 neurons and the LM training algorithm. To keep the comparison fair, the building characteristics utilized in the studies are also presented. Our best-performing model outperformed the state-of-the-art models in terms of MSE and RMSE for both heating and cooling loads. It also achieved results comparable with previous studies in terms of R and R² for predicting both the heating load and the cooling load. This evidences the merit of our model for predicting the energy consumption of residential buildings in an arid climate.

Table 9.

A comparison of the proposed model and the previous DNN-based studies in terms of heating and cooling loads.

6. Conclusions

Buildings’ energy consumption has a strong influence on the environmental and economic aspects of many developed and developing countries. This study highlighted the importance of tuning DNNs to achieve the best predictive performance of heating and cooling loads for a given use case. The predictive models were trained on a dataset generated by the authors for the extremely arid climate region of Qassim, in Saudi Arabia.

Experimental results of different DNNs showed that the model with the higher number of hidden layers and neurons, trained with the LM algorithm, outperformed the other models in predicting heating and cooling loads independently. Specifically, this model, which contained five layers with 20 neurons in each layer and used the Levenberg–Marquardt algorithm, achieved an MSE of 0.0075 and an RMSE of 0.087 in predicting the heating load, and an MSE of 0.245 and an RMSE of 0.495 in predicting the cooling load.

These results support the effectiveness of deep learning with appropriately chosen weights in the area of building energy consumption. The outcomes of the research can help architects of new energy-efficient buildings in the synthesis and predesign stages. Building regulation authorities could refer to this model to further improve local codes.

Author Contributions

Conceptualization, A.A.A.-S. and A.A.; methodology, A.A.A.-S. and A.A.; software, A.A.A.-S.; validation, F.C.; formal analysis, A.A.A.-S. and A.A. and D.M.I.; data curation, A.A.A.-S., A.A., D.M.I. and M.A.; writing—original draft preparation, A.A.A.-S., A.A., D.M.I. and M.A.; writing—review and editing, F.C.; visualization, A.A.A.-S., A.A. and D.M.I.; supervision, F.C.; project administration, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

The author(s) gratefully acknowledge Qassim University, represented by the Deanship of Scientific Research, for the financial support for this research under the number (coc-2019-2-2-I-5422) during the academic year 1440 AH/2019 AD.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ANN | Artificial neural network |
| ABC | Artificial bee colony |
| B-ELM | Bidirectional ELM |
| DL | Deep learning |
| DNN | Deep neural network |
| ELM | Extreme learning machine |
| ENMIM | Evolutionary neural machine inference model |
| GBRT | Gradient boosted regression trees |
| GP | Gaussian process |
| IRLS | Iteratively reweighted least squares |
| IESVE | Integrated Environmental Solutions Virtual Environment |
| LM | Levenberg–Marquardt algorithm |
| LSSVR | Least squares support vector regression |
| LSTM | Long short-term memory |
| MAE | Mean absolute error |
| ML | Machine learning |
| MLP | Multi-layer perceptron |
| MSE | Mean square error |
| XGBoost | Extreme gradient boosting |
| OSELM | Online sequential ELM |
| PSO | Particle swarm optimization |
| R² | R-squared (coefficient of determination) |
| RBFNN | Radial basis function neural network |
| RF | Random forest |
| RMSE | Root mean square error |
| SCG | Scaled conjugate gradient algorithm |
| SVM | Support vector machine |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).