Mixed Analysis of the Flipped Classroom in the Concrete and Steel Structures Subject in the Context of COVID-19 Crisis Outbreak. A Pilot Study

Abstract

1. Introduction

- Has the flipped learning (FL) model used in the Concrete and Steel Structures subject adapted properly from the face-to-face format to the online format?

- Do the students of the Concrete and Steel Structures subject negatively perceive the change to the online format?

2. Theoretical Framework

2.1. Architecture: Art and Science

2.2. Active Learning and Flipped Learning

2.3. Learning Assessment

3. Methodology

3.1. Goals, Research Design and Methods of the Study

3.2. Participants

3.3. Course Design

- A brief introduction to the topic is given in class;

- Learning materials (PDF documents) are uploaded to the university’s Moodle platform for parts 1, 2 and 3; for part 4, a video on the topic is uploaded;

- Students read and study the notes at home and watch the video when available;

- The next class starts by answering the students’ questions. This can take up to 50% of class time, depending on the students’ demand. After this question time, exercises on the topic are solved in class;

- The following class also starts with questions, limited to 15 min. Then, a short exam of at most 30 min is held. A brief introduction to the next topic is given during the last hour of class, and the cycle repeats from the second step.

- Videos were uploaded to the university’s Moodle platform, together with the course notes and exercises on the topic;

- Students watched the video and read and studied the notes at home;

- The next class started by answering the students’ questions. After this question time, exercises on the topic were solved in class;

- The following class also started with questions, limited to 15 min. More exercises on the topic were solved in class. A brief introduction to the next topic was given during the last half hour of class, and the cycle repeated from the first step.

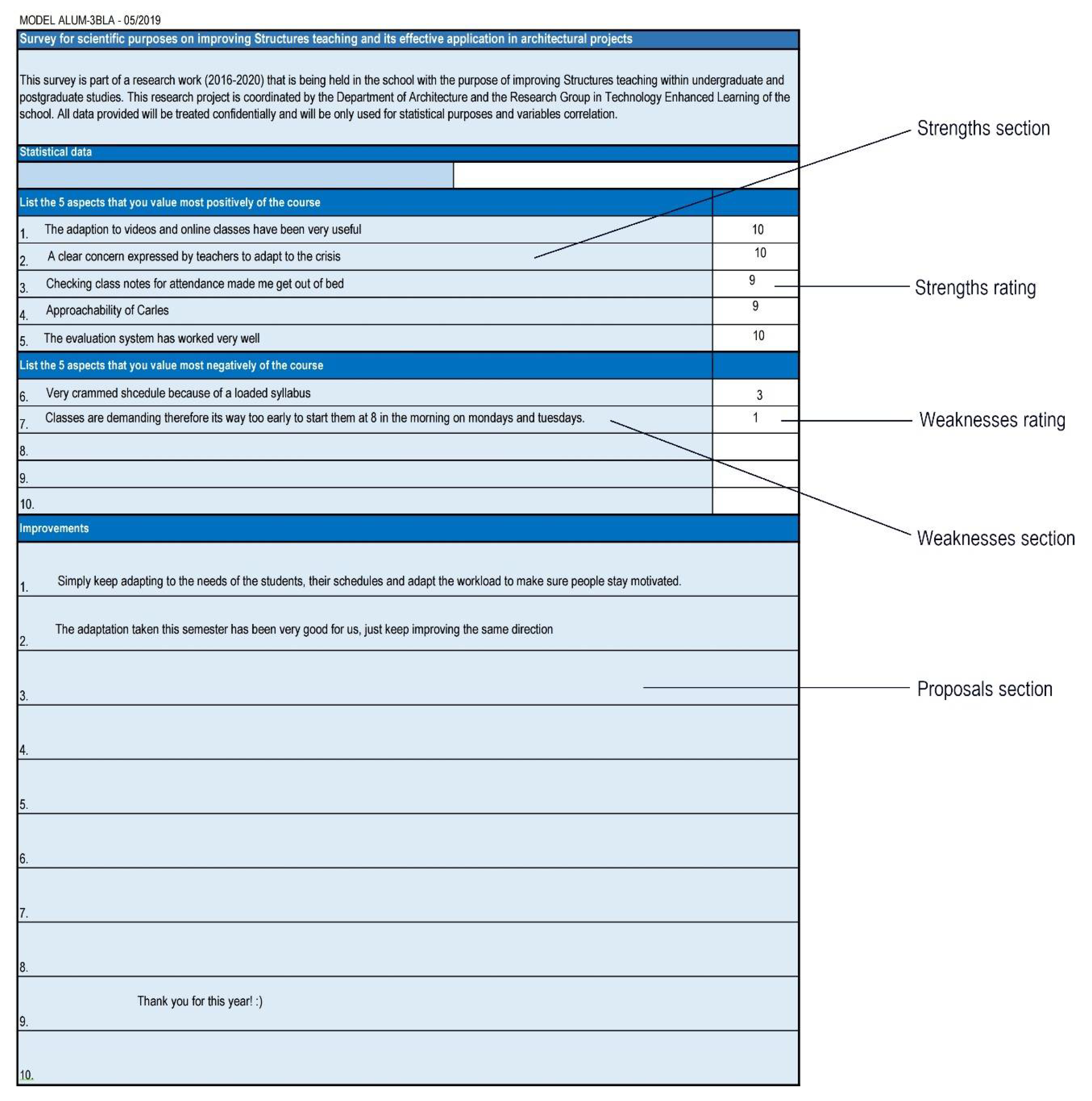

3.4. Instruments

- Firstly, students are asked to identify strengths and weaknesses, at most five of each;

- Secondly, they are asked to rate each of these strengths and weaknesses from 1 to 10;

- Finally, they are invited to propose how to improve each of the strengths and weaknesses they have identified.

- Write in the first 5 boxes the 5 aspects of this structures course that you liked most.

- Write in the next 5 boxes the 5 aspects of this structures course that you disliked most.

- Give each listed aspect a grade from 1 to 10 in the box to its right. For both strengths and weaknesses, 1 means you completely disliked the aspect and 10 means you absolutely liked it.

- In the next 10 boxes, write what you think could be changed to improve each listed aspect. There is one box for each of the positive and negative aspects previously stated.
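The BLA form described above has a simple structure that can be checked automatically: at most five strengths and five weaknesses, each rated from 1 to 10, each with an improvement proposal. The following is a minimal sketch of such a record; the class names `Aspect` and `BLAResponse`, their fields and the example entries are hypothetical illustrations, not part of the study's instrument.

```python
from dataclasses import dataclass, field

MAX_ITEMS = 5               # at most five strengths and five weaknesses
MIN_MARK, MAX_MARK = 1, 10  # BLA rating scale


@dataclass
class Aspect:
    text: str         # aspect written by the student
    mark: int         # 1 = completely disliked ... 10 = absolutely liked
    improvement: str  # student's proposal to improve this aspect


@dataclass
class BLAResponse:
    """Hypothetical record of one student's BLA form."""
    strengths: list[Aspect] = field(default_factory=list)
    weaknesses: list[Aspect] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return the problems found; an empty list means the form is valid."""
        problems = []
        for label, items in (("strengths", self.strengths),
                             ("weaknesses", self.weaknesses)):
            if len(items) > MAX_ITEMS:
                problems.append(f"too many {label}: {len(items)} > {MAX_ITEMS}")
            for aspect in items:
                if not MIN_MARK <= aspect.mark <= MAX_MARK:
                    problems.append(f"{label}: mark {aspect.mark} for "
                                    f"'{aspect.text}' is outside {MIN_MARK}-{MAX_MARK}")
        return problems


# Illustrative form (entries are placeholders, not study data)
form = BLAResponse(
    strengths=[Aspect("Videos", 10, "example proposal")],
    weaknesses=[Aspect("Classes schedule at 8:00 am", 2, "example proposal")],
)
print(form.validate())  # []
```

Returning a list of problems, rather than raising on the first one, makes it easy to report every issue in a collected form at once.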

3.5. Mixed Data Analysis

4. Results

- Index of mention of the proper adaptation of the course to the online format in the strengths section;

- Proportion of responses related to FL in the strengths section;

- Proportion of responses related to FL in the weaknesses section;

- Positive and negative extreme marks of the responses related to FL;

- Lower endpoint of the 95% confidence interval of the BLA mark for the FL-related categories;

- Lower endpoint of the 95% confidence interval of the quantitative survey responses for the FL-related categories.
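The first indicators above can be computed from coded BLA responses. A minimal sketch, assuming that the index of mention is the share of participants who mention a subcategory at least once and that the category weight is the share of all mentions in a section that fall into that subcategory. These definitions agree with several rows of the results tables (e.g. 39/48 = 81.25% and 39/186 = 20.97% for SDB), but the participant count of 48 is inferred from those ratios rather than stated, and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def index_of_mention(mentions, n_participants):
    """Share of participants who mention each subcategory at least once.

    mentions: iterable of (participant_id, subcategory) pairs.
    """
    who = defaultdict(set)
    for participant, subcategory in mentions:
        who[subcategory].add(participant)
    return {sub: len(ids) / n_participants for sub, ids in who.items()}


def category_weight(mentions):
    """Share of all mentions in a section that fall into each subcategory."""
    mentions = list(mentions)
    counts = Counter(sub for _, sub in mentions)
    return {sub: c / len(mentions) for sub, c in counts.items()}


def proportion(mentions, selected):
    """Share of mentions whose subcategory is in `selected` (e.g. FL-related)."""
    mentions = list(mentions)
    hits = sum(1 for _, sub in mentions if sub in selected)
    return hits / len(mentions)


# Toy data: participant 1 mentions videos twice, so SDB counts once for the IM
toy = [(1, "SDB"), (1, "SDB"), (2, "SDB"), (2, "SAA"), (3, "SPA")]
print(index_of_mention(toy, n_participants=4))  # {'SDB': 0.5, 'SAA': 0.25, 'SPA': 0.25}
print(category_weight(toy))                     # {'SDB': 0.6, 'SAA': 0.2, 'SPA': 0.2}
print(proportion(toy, {"SDB", "SPA"}))          # 0.8
```

Counting distinct participants for the index of mention keeps a student who repeats an idea from inflating it, while the category weight still reflects every mention.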

- Index of mention of the online classes in the weaknesses section;

- Motivation for class activities and home activities expressed in qualitative surveys;

- Motivation for online classes expressed in qualitative surveys;

- Relationship between the positive and negative extreme marks of the responses related to classes in online format.
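The extreme-mark indicators can be tallied in the same way. Following the column headers of the extreme-mark tables, a mark of 9 or more is treated as a positive extreme and a mark of 2 or less as a negative extreme. The sketch below counts both, overall and within a chosen group of elements (for instance, the FL-related or online-class elements); the function name and the (element, mark) input format are assumptions. The two extreme-mark tables list 43 marks ≥ 9 and 9 marks ≤ 2 in total, which reproduce the percentages given in the Discussion.

```python
def extreme_counts(marks, group, high=9, low=2):
    """Count extreme BLA marks overall and within a group of elements.

    marks: iterable of (element, mark) pairs on the 1-10 scale.
    Returns (group_high, total_high, group_low, total_low), where a mark
    >= high is a positive extreme and a mark <= low a negative extreme.
    """
    group_high = total_high = group_low = total_low = 0
    for element, mark in marks:
        if mark >= high:
            total_high += 1
            if element in group:
                group_high += 1
        elif mark <= low:
            total_low += 1
            if element in group:
                group_low += 1
    return group_high, total_high, group_low, total_low


# Shares quoted in the Discussion, using the table totals (43 and 9)
print(f"{25 / 43:.2%} {2 / 9:.2%}")  # prints 58.14% 22.22% (FL-related elements)
print(f"{2 / 43:.2%} {1 / 9:.2%}")   # prints 4.65% 11.11% (online classes)
```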

4.1. Qualitative Assessment

4.2. Quantitative Assessment

5. Discussion

- RQ1: Has the FL model used in the Concrete and Steel Structures subject adapted properly from a face-to-face format to the online format?

- RQ2: Do the students of the Concrete and Steel Structures subject negatively perceive the change to the online format?

- Index of mention (IM) of the proper adaptation of the course to the online format in the strengths section: 41.67%. It is the third most cited element, after videos (IM = 81.25%) and continuous assessment (IM = 70.83%);

- Proportion of responses related to FL in the strengths section: 65.01% (121 out of 186 responses);

- Proportion of responses related to FL in the weaknesses section: 27.27% (27 out of 99 responses);

- Positive and negative extreme marks of the responses related to FL: 25 extreme positive marks (58.14% of the 43 extreme positive marks in total) and 2 extreme negative marks (22.22% of the 9 extreme negative marks in total);

- Lower endpoint of the 95% confidence interval of the BLA mark for the FL-related categories: on a scale from 1 to 10, this value is 8.70 for category DB (videos), 6.01 for category PA (class development) and 5.08 for category PB (system and methodology);

- Lower endpoint of the 95% confidence interval of the quantitative survey responses for the FL-related categories: on a scale from 1 to 5, this value is 3.98 for category DB (videos), 3.70 for category PA (class development) and 3.53 for category PB (system and methodology).
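The interval columns of the last results table (n, M, SD, μ, X1, X2) are consistent with a normal-approximation 95% confidence interval: μ = 1.96 · SD / √n, X1 = M − μ and X2 = M + μ. For row AA (n = 14, M = 7.964, SD = 2.460) this gives μ ≈ 1.289, X1 ≈ 6.68 and X2 ≈ 9.25, as tabulated. The sketch below reproduces that calculation; that the authors used exactly this formula is inferred from the tabulated values rather than stated in the text, and the function name is hypothetical.

```python
import math


def normal_ci95(mean, sd, n, z=1.96):
    """Normal-approximation 95% confidence interval for a mean.

    Returns (margin, lower, upper), where margin = z * sd / sqrt(n).
    """
    margin = z * sd / math.sqrt(n)
    return margin, mean - margin, mean + margin


# Row AA of the interval table: n = 14, M = 7.964, SD = 2.460
margin, lower, upper = normal_ci95(7.964, 2.460, 14)
print(f"{margin:.3f} {lower:.2f} {upper:.2f}")  # 1.289 6.68 9.25
```

With samples this small, a Student t quantile (about 2.16 for n = 14) in place of 1.96 would give somewhat wider intervals; the `z` parameter allows either choice.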

- Index of mention of the online classes in the weaknesses section: 16.67%. It is the third most cited element, after the content/time ratio (IM = 29.17%) and the time given for exams (IM = 25.00%);

- Motivation for class and home activities expressed in qualitative surveys: on a scale from 1 to 5, the average motivation rating for class activities is 4.00 (listening to the teachers’ explanations), 3.93 (doing exercises in class) and 3.92 (solving questions in class). For home activities, the rating is 3.478 (studying notes at home) and 3.614 (watching videos at home);

- Motivation for face-to-face and online classes expressed in qualitative surveys: on a scale from 1 to 5, the average motivation rating for attending face-to-face classes is 3.85, while the value for attending online classes is 2.935;

- Positive and negative extreme marks of the responses related to classes in online format: two extreme positive marks (4.65% of the 43 extreme positive marks in total) and one extreme negative mark (11.11% of the 9 extreme negative marks in total).

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Element | Category | Mentions |
|---|---|---|
| Videos¹ | SDB | 39 |
| Continuous assessment | SAA | 34 |
| Online classes development and adaptation¹ | SPA | 20 |
| Guidance/proximity/accessibility/care of the teacher¹ | STA | 17 |
| Documentation uploaded in Moodle¹ | SDA | 12 |
| Division of topics¹ | SPB | 9 |
| Explanations of the teacher | STA | 7 |
| Exercises solved in class¹ | SPA | 5 |
| Subject organization¹ | SPB | 5 |
| No coursework | SPB | 3 |
| Class activities/dynamics¹ | SPA | 3 |
| Formulae given in exams | SAB | 2 |
| Students’ participation in online classes¹ | SSA | 2 |
| Adequate workload¹ | SPB | 2 |
| Combination of theory and practice¹ | SPB | 2 |
| Real cases used as example | SPB | 2 |
| Good schedule (requires to be active) | SPC | 2 |
| Applicability of the subject to real cases | SCA | 2 |
| Flipped class¹ | SPB | 2 |
| Quality of teachers | STA | 2 |

| Element | Category | Mentions |
|---|---|---|
| Too much content to be assimilated in such a short time | WCC | 14 |
| Exams are too long/short of time/stressful | WAB | 12 |
| Online classes | WPA | 8 |
| Classes schedule at 8:00 am | WPC | 7 |
| Lack of time for questions in class¹ | WPA | 6 |
| Too much theory/too few practice¹ | WPB | 5 |
| Exams too close together in time | WAB | 5 |
| More topics should be included in the syllabus | WCA | 5 |
| Pace is too fast¹ | WPA | 3 |
| Lack of coordination between groups | WPA | 2 |
| Online classes software | WPD | 2 |
| Lack of continuous assessment/Grade depends too much on the final exam | WAA | 2 |
| Some material is only posted in English and Catalan¹ | WDA | 2 |
| No questions allowed in exams | WAB | 2 |
| Student finds it difficult to ask questions in class¹ | WTA | 2 |
| Incomplete notes¹ | WDA | 2 |
| Organization and continuity of the topics¹ | WPB | 2 |

| Element | Category | Marks ≥ 9 |
|---|---|---|
| Videos¹ | SDB | 11 |
| Continuous assessment | SAA | 6 |
| Guidance/proximity/accessibility/care of the teacher¹ | STA | 6 |
| Explanations of the teacher | STA | 4 |
| Class activities/dynamics¹ | SPA | 2 |
| Online classes development and adaptation¹,² | SPA | 2 |
| Real cases used as example | SPB | 2 |
| Attendance counts | SAA | 1 |
| Formulae given in exams | SAB | 1 |
| Applicability of the subject to real cases | SCA | 1 |
| Documentation uploaded in Moodle¹ | SDA | 1 |
| Good schedule (requires to be active) | SPC | 1 |
| Division of topics¹ | SPB | 1 |
| Exercises solved in class¹ | SPA | 1 |
| Subject organization¹ | SPB | 1 |
| Use of 3 languages | SPA | 1 |
| Teacher concern to adapt to COVID crisis | STA | 1 |

| Element | Category | Marks ≤ 2 |
|---|---|---|
| Classes schedule at 8:00 am | WPC | 2 |
| Exams | WAA | 1 |
| Lack of continuous assessment/Grade depends too much on the final exam | WAA | 1 |
| Exams are too long/short of time/stressful | WAB | 1 |
| No questions allowed in exams | WAB | 1 |
| Too much theory/too few practice¹ | WPB | 1 |
| Organization and continuity of the topics¹ | WPB | 1 |
| Online classes² | WPA | 1 |

| Subcategory | Description | Mentions | Index of Mention | Category Weight |
|---|---|---|---|---|
| SAA | Assessment method | 35 | 72.92% | 19.35% |
| SAB | Assessment development/organization | 3 | 6.25% | 1.61% |
| SCA | Content of the course | 4 | 8.33% | 2.15% |
| SCB | Course approach | 0 | 0.00% | 0.00% |
| SCC | Time/content rate | 0 | 0.00% | 0.00% |
| SDA | Documents | 13 | 27.08% | 6.99% |
| SDB | Videos | 39 | 81.25% | 20.97% |
| SPA | Class development | 27 | 56.25% | 17.20% |
| SPB | System and methodology | 21 | 43.75% | 13.44% |
| SPC | Schedule | 2 | 4.17% | 1.08% |
| SPD | Technology use | 1 | 2.08% | 0.54% |
| SSA | Students’ motivation | 2 | 4.17% | 1.08% |
| SSB | Students’ profit | 1 | 2.08% | 0.54% |
| STA | Teachers | 23 | 47.92% | 15.05% |

| Subcategory | Description | Mentions | Index of Mention | Category Weight |
|---|---|---|---|---|
| WAA | Assessment method | 3 | 4.17% | 3.03% |
| WAB | Assessment development/organization | 21 | 37.50% | 21.21% |
| WCA | Content of the course | 6 | 12.50% | 6.06% |
| WCB | Course approach | 0 | 0.00% | 0.00% |
| WCC | Time/content rate | 14 | 29.17% | 14.14% |
| WDA | Documents | 5 | 10.42% | 5.05% |
| WDB | Videos | 2 | 4.17% | 2.02% |
| WPA | Class development | 23 | 41.67% | 23.23% |
| WPB | System and methodology | 10 | 20.83% | 10.10% |
| WPC | Schedule | 8 | 16.67% | 8.08% |
| WPD | Technology use | 3 | 6.25% | 3.03% |
| WSA | Students’ motivation | 1 | 2.08% | 1.01% |
| WSB | Students’ profit | 0 | 0.00% | 0.00% |
| WTA | Teachers | 3 | 4.17% | 3.03% |
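The Category Weight column of the weaknesses table is consistent with each subcategory's mentions divided by the total number of weakness mentions (99). The following is a minimal Python sketch under that assumption; the definition is inferred from the table values rather than stated in this section, and the names `weakness_mentions` and `category_weights` are hypothetical. The Index of Mention column is not recomputed, because its denominator is not given in this part of the paper.

```python
# Weakness mentions per subcategory, copied from the table above.
weakness_mentions = {
    "WAA": 3, "WAB": 21, "WCA": 6, "WCB": 0, "WCC": 14,
    "WDA": 5, "WDB": 2, "WPA": 23, "WPB": 10, "WPC": 8,
    "WPD": 3, "WSA": 1, "WSB": 0, "WTA": 3,
}


def category_weights(mentions):
    """Return each subcategory's share of all mentions, in percent (2 d.p.).

    Assumption: Category Weight = mentions / total mentions * 100.
    """
    total = sum(mentions.values())
    return {code: round(100 * count / total, 2) for code, count in mentions.items()}


weights = category_weights(weakness_mentions)
print(sum(weakness_mentions.values()))  # 99
print(weights["WPA"], weights["WAB"])   # 23.23 21.21
```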

| Subcategory | Description | Strengths M | Strengths SD | Weaknesses M | Weaknesses SD |
|---|---|---|---|---|---|
| AA | Assessment method | 8.875 | 1.102 | 2.500 | 0.500 |
| AB | Assessment development/organization | 8.500 | 0.500 | 3.917 | 2.050 |
| CA | Content of the course | 9.000 | 0.000 | 5.500 | 0.500 |
| CB | Course approach | - | - | - | - |
| CC | Time/content rate | - | - | 4.500 | 0.957 |
| DA | Documents | 8.200 | 0.980 | 3.000 | 0.000 |
| DB | Videos | 9.267 | 1.123 | - | - |
| PA | Class development | 9.125 | 0.781 | 4.250 | 1.920 |
| PB | System and methodology | 9.333 | 0.943 | 4.000 | 2.098 |
| PC | Schedule | 9.000 | 0.000 | 2.667 | 1.700 |
| PD | Technology use | - | - | 4.000 | 1.000 |
| SA | Students’ motivation | 6.000 | 0.000 | - | - |
| SB | Students’ profit | - | - | - | - |
| TA | Teachers | 9.556 | 0.643 | 5.000 | 0.000 |

| Subcategory | Description | n | M | SD | μ (95% margin) | X1 (95% lower limit) | X2 (95% upper limit) |
|---|---|---|---|---|---|---|---|
| AA | Assessment method | 14 | 7.964 | 2.460 | 1.289 | 6.68 | 9.25 |
| AB | Assessment development/organization | 8 | 5.063 | 2.674 | 1.853 | 3.21 | 6.92 |
| CA | Content of the course | 3 | 6.667 | 1.700 | 1.923 | 4.74 | 8.59 |
| CB | Course approach | - | - | - | - | - | - |
| CC | Time/content rate | 6 | 4.500 | 0.957 | 0.766 | 3.73 | 5.27 |
| DA | Documents | 6 | 7.333 | 2.134 | 1.708 | 5.63 | 9.04 |
| DB | Videos | 15 | 9.267 | 1.123 | 0.569 | 8.70 | 9.84 |
| PA | Class development | 12 | 7.500 | 2.630 | 1.488 | 6.01 | 8.99 |
| PB | System and methodology | 11 | 6.909 | 3.088 | 1.825 | 5.08 | 8.73 |
| PC | Schedule | 4 | 4.250 | 3.112 | 3.050 | 1.20 | 7.30 |
| PD | Technology use | 2 | 4.000 | 1.000 | 1.386 | 2.61 | 5.39 |
| SA | Students’ motivation | 1 | 6.000 | 0.000 | 0.000 | 6.00 | 6.00 |
| SB | Students’ profit | - | - | - | - | - | - |
| TA | Teachers | 10 | 9.100 | 1.497 | 0.928 | 8.17 | 10.03 |
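The combined n, M and SD values above are consistent with pooling the Strengths and Weaknesses marks of each subcategory into a single group. The sketch below shows that pooling under two assumptions that are inferred, not reported: the standard deviations are population (divide-by-n) values, and the group sizes can be back-solved from the combined n and mean (for Assessment method, 12 strength marks and 2 weakness marks). The helper name `pool` is hypothetical.

```python
import math


def pool(groups):
    """Pool (n, mean, population SD) triples into one (n, mean, SD) triple.

    Uses the law of total variance: the pooled variance is the size-weighted
    average of each group's variance plus its squared offset from the pooled mean.
    """
    n_total = sum(n for n, _, _ in groups)
    mean = sum(n * m for n, m, _ in groups) / n_total
    var = sum(n * (sd ** 2 + (m - mean) ** 2) for n, m, sd in groups) / n_total
    return n_total, mean, math.sqrt(var)


# Assessment method (AA): strengths M = 8.875, SD = 1.102; weaknesses M = 2.500, SD = 0.500.
# The 12 + 2 split is an assumption back-solved from the combined n = 14 and M = 7.964.
n, m, sd = pool([(12, 8.875, 1.102), (2, 2.500, 0.500)])
print(n, round(m, 3), round(sd, 3))  # 14 7.964 2.46
```

Under the same assumptions, a 9 + 1 split of the Teachers (TA) marks, `pool([(9, 9.556, 0.643), (1, 5.000, 0.0)])`, gives n = 10, M ≈ 9.100 and SD ≈ 1.497, matching that row.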

| Aspect | Subcategory | Likert 1, n (%) ¹ | Likert 2, n (%) | Likert 3, n (%) | Likert 4, n (%) | Likert 5, n (%) | M | SD | Skw | Kme |
|---|---|---|---|---|---|---|---|---|---|---|
| Continuous assessment | AA | 0 (0.0) | 2 (4.3) | 4 (8.5) | 16 (34.0) | 25 (53.2) | 4.362 | 0.810 | −1.228 | 1.221 |
| Exams | AB | 2 (4.3) | 6 (12.8) | 9 (19.1) | 24 (51.1) | 6 (12.8) | 3.553 | 1.007 | −0.772 | 0.215 |
| Course approach | CB | 0 (0.0) | 1 (2.1) | 8 (17.0) | 28 (59.6) | 10 (21.3) | 4.000 | 0.684 | −0.399 | 0.476 |
| Time/content rate | CC | 4 (8.5) | 11 (23.4) | 17 (36.2) | 11 (23.4) | 4 (8.5) | 3.000 | 1.072 | 0.000 | −0.510 |
| Course documents | DA | 0 (0.0) | 0 (0.0) | 5 (10.6) | 20 (42.6) | 22 (46.8) | 4.362 | 0.666 | −0.565 | −0.652 |
| Content of the videos | DB | 1 (2.1) | 0 (0.0) | 8 (17.0) | 18 (38.3) | 20 (42.6) | 4.191 | 0.866 | −1.165 | 2.203 |
| Organization of the videos | DB | 1 (2.2) | 1 (2.2) | 6 (13.0) | 18 (39.1) | 20 (43.5) | 4.196 | 0.900 | −1.289 | 2.284 |
| Quality of the videos | DB | 1 (2.1) | 3 (6.4) | 4 (8.5) | 15 (31.9) | 24 (51.1) | 4.234 | 0.994 | −1.391 | 1.699 |
| Usefulness of the videos | DB | 1 (2.1) | 1 (2.1) | 8 (17.0) | 10 (21.3) | 27 (57.4) | 4.298 | 0.966 | −1.333 | 1.582 |
| Online classes | PA | 4 (8.9) | 3 (6.7) | 9 (20.0) | 14 (31.1) | 15 (33.3) | 3.733 | 1.236 | −0.824 | −0.109 |
| Exercises done in class | PA | 0 (0.0) | 3 (6.4) | 15 (31.9) | 14 (29.8) | 15 (31.9) | 3.872 | 0.937 | −0.210 | −1.054 |
| Doubt solving | PA | 0 (0.0) | 3 (6.4) | 4 (8.5) | 24 (51.1) | 16 (34.0) | 4.128 | 0.815 | −0.945 | 0.943 |
| Theory and practice integration | PB/CA | 0 (0.0) | 3 (6.4) | 14 (29.8) | 18 (38.3) | 12 (25.5) | 3.830 | 0.883 | −0.218 | −0.755 |
| Flipped class | PB | 1 (2.4) | 1 (2.4) | 18 (42.9) | 14 (33.3) | 8 (19.0) | 3.643 | 0.895 | −0.234 | 0.395 |
| Teachers’ explanations | TA | 1 (2.1) | 0 (0.0) | 6 (12.8) | 20 (42.6) | 20 (42.6) | 4.234 | 0.831 | −1.350 | 3.287 |
| Teacher-student interaction | TA | 0 (0.0) | 0 (0.0) | 7 (14.9) | 14 (29.8) | 26 (55.3) | 4.404 | 0.734 | −0.797 | −0.682 |
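Each row's M, SD, Skw and Kme can be recomputed from its response counts. The sketch below reproduces the Continuous assessment and Time/content rate rows using the population mean and standard deviation, the population (moment) skewness g1, and the bias-corrected sample excess kurtosis G2. These estimator choices are an assumption inferred by matching the published figures; they are not stated in this section. The function name `likert_stats` is hypothetical.

```python
import math


def likert_stats(counts):
    """Summary statistics for a 1-5 Likert item given the counts per level.

    counts[i] is the number of answers at level i + 1. Returns
    (mean, population SD, population skewness g1, sample excess kurtosis G2).
    """
    n = sum(counts)
    levels = range(1, len(counts) + 1)
    mean = sum(x * c for x, c in zip(levels, counts)) / n
    # Central moments about the mean, divided by n.
    m2 = sum(c * (x - mean) ** 2 for x, c in zip(levels, counts)) / n
    m3 = sum(c * (x - mean) ** 3 for x, c in zip(levels, counts)) / n
    m4 = sum(c * (x - mean) ** 4 for x, c in zip(levels, counts)) / n
    sd = math.sqrt(m2)
    skew = m3 / m2 ** 1.5
    g2 = m4 / m2 ** 2 - 3
    # Small-sample correction of the excess kurtosis (the G2 estimator).
    kurt = ((n + 1) * g2 + 6) * (n - 1) / ((n - 2) * (n - 3))
    return mean, sd, skew, kurt


# Continuous assessment (AA): 0, 2, 4, 16, 25 answers at levels 1 to 5.
print([round(v, 3) for v in likert_stats([0, 2, 4, 16, 25])])  # [4.362, 0.81, -1.228, 1.221]
```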

| Activity | Subcategory | Likert 1, n (%) ¹ | Likert 2, n (%) | Likert 3, n (%) | Likert 4, n (%) | Likert 5, n (%) | M | SD | Skw | Kme |
|---|---|---|---|---|---|---|---|---|---|---|
| Attend face-to-face classes | SA | 3 (6.52) | 4 (8.70) | 10 (21.74) | 9 (19.57) | 20 (43.48) | 3.848 | 1.251 | −0.778 | −0.385 |
| Listen to teachers’ explanations | SA | 3 (6.52) | 0 (0.00) | 10 (21.74) | 14 (30.43) | 19 (41.30) | 4.000 | 1.103 | −1.165 | 1.249 |
| Do exercises in class | SA | 1 (2.22) | 1 (2.22) | 12 (26.67) | 17 (37.78) | 14 (31.11) | 3.933 | 0.929 | −0.700 | 0.644 |
| Solve doubts in class | SA | 2 (4.26) | 1 (2.13) | 14 (29.79) | 12 (25.53) | 18 (38.30) | 3.915 | 1.069 | −0.772 | 0.300 |
| Study notes at home | SA | 4 (8.70) | 2 (4.35) | 18 (39.13) | 12 (26.09) | 10 (21.74) | 3.478 | 1.137 | −0.479 | −0.073 |
| Watch videos at home | SA | 4 (9.09) | 3 (6.82) | 12 (27.27) | 12 (27.27) | 13 (29.55) | 3.614 | 1.229 | −0.630 | −0.342 |
| Continuous assessment | SA | 2 (4.35) | 0 (0.00) | 12 (26.09) | 12 (26.09) | 20 (43.48) | 4.043 | 1.042 | −1.010 | 0.970 |
| Attend online classes | SA | 9 (19.57) | 9 (19.57) | 10 (21.74) | 12 (26.09) | 6 (13.04) | 2.935 | 1.325 | −0.048 | −1.182 |

| Question | Subcategory | Likert 1, n (%) ¹ | Likert 2, n (%) | Likert 3, n (%) | Likert 4, n (%) | Likert 5, n (%) | M | SD | Skw | Kme |
|---|---|---|---|---|---|---|---|---|---|---|
| Structural knowledge is useful for architects | CA | 0 (0.0) | 1 (2.2) | 4 (8.7) | 20 (43.5) | 21 (45.7) | 4.326 | 0.724 | −0.927 | 0.877 |
| I will use technical knowledge in my professional life | CA/SA | 4 (8.5) | 5 (10.6) | 11 (23.4) | 14 (29.8) | 13 (27.7) | 3.574 | 1.233 | −0.580 | −0.521 |
| The course has met my expectations | SB | 0 (0.0) | 2 (4.3) | 9 (19.1) | 23 (48.9) | 13 (27.7) | 4.000 | 0.799 | −0.501 | −0.061 |
| The course has met my needs | SB | 1 (2.2) | 4 (8.7) | 13 (28.3) | 16 (34.8) | 12 (26.1) | 3.739 | 1.009 | −0.476 | −0.234 |
| I learned a lot with this structural course | SB | 2 (4.3) | 0 (0.0) | 8 (17.0) | 20 (42.6) | 17 (36.2) | 4.064 | 0.954 | −1.302 | 2.501 |

| Subcategory | Description | M | SD | Skw | Kme | μ (95% margin) | X1 (95% lower limit) | X2 (95% upper limit) |
|---|---|---|---|---|---|---|---|---|
| AA | Assessment method | 4.362 | 0.810 | −1.228 | 1.221 | 0.232 | 4.130 | 4.593 |
| AB | Assessment development/organization | 3.553 | 1.007 | −0.772 | 0.215 | 0.288 | 3.265 | 3.841 |
| CA | Content of the course | 3.904 | 0.698 | −0.371 | −0.432 | 0.200 | 3.705 | 4.104 |
| CB | Course approach | 4.000 | 0.684 | −0.399 | 0.476 | 0.196 | 3.804 | 4.196 |
| CC | Time/content rate | 3.000 | 1.072 | 0.000 | −0.510 | 0.306 | 2.694 | 3.306 |
| DA | Documents | 4.362 | 0.666 | −0.565 | −0.652 | 0.190 | 4.171 | 4.552 |
| DB | Videos | 4.230 | 0.872 | −1.424 | 2.709 | 0.249 | 3.981 | 4.480 |
| PA | Class development | 3.915 | 0.745 | −0.467 | −0.149 | 0.213 | 3.702 | 4.128 |
| PB | System and methodology | 3.755 | 0.798 | −0.145 | −0.538 | 0.228 | 3.527 | 3.983 |
| PC | Schedule | - | - | - | - | - | - | - |
| PD | Technology use | - | - | - | - | - | - | - |
| SA | Students’ motivation | 3.712 | 0.793 | −1.342 | 2.572 | 0.227 | 3.485 | 3.938 |
| SB | Students’ profit | 3.940 | 0.823 | −0.654 | 0.204 | 0.235 | 3.704 | 4.175 |
| TA | Teachers | 4.319 | 0.631 | −0.630 | −0.556 | 0.180 | 4.139 | 4.500 |
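The μ, X1 and X2 columns, here and in the earlier combined-marks table, match a normal-approximation 95% interval: μ = 1.96·SD/√n, X1 = M − μ and X2 = M + μ. A minimal sketch, assuming z = 1.96 and, for the questionnaire rows, n = 47 respondents (the total of the Continuous assessment counts); the helper name `ci95` is hypothetical.

```python
import math

Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def ci95(mean, sd, n):
    """Return (margin, lower, upper) of a normal-approximation 95% interval."""
    margin = Z_95 * sd / math.sqrt(n)
    return margin, mean - margin, mean + margin


# Course approach (CB) in this table: M = 4.000, SD = 0.684, n = 47 (assumed).
margin, lower, upper = ci95(4.000, 0.684, 47)
print(round(margin, 3), round(lower, 3), round(upper, 3))  # 0.196 3.804 4.196
```

Recomputing from the rounded M and SD can shift the last digit in some rows. The interval is also not truncated to the rating scale, which is presumably why the Teachers (TA) row of the combined-marks table reaches X2 = 10.03.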

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Campanyà, C.; Fonseca, D.; Amo, D.; Martí, N.; Peña, E. Mixed Analysis of the Flipped Classroom in the Concrete and Steel Structures Subject in the Context of COVID-19 Crisis Outbreak. A Pilot Study. Sustainability 2021, 13, 5826. https://doi.org/10.3390/su13115826
