1. Introduction

The growing demand for service robots has prompted researchers to improve the technologies for automating these platforms, and one of the most fundamental of these technologies is the navigation system [1]. Autonomous mobile robot navigation is an active area of research that has attracted considerable attention in recent years [2,3]. Autonomous Mobile Robots (AMRs) are designed and programmed to perform tasks in place of humans. Humans are often unable to perform such tasks for multiple reasons, such as harsh environmental conditions, unhealthy or dangerous environments, or tasks so repetitive that they become tedious. Because of these conditions and the need for robots in strenuous circumstances, mobile robots have been the subject of extensive research in recent years. The goal is to build devices that can perform most tasks without human intervention [4]. The advantages of AMRs, however, come with numerous challenges; localization and navigation are the main challenges in the self-control system of these robots.

Recent studies have used the Global Positioning System (GPS) to address the localization and navigation problems, and a number of GPS-based approaches have been very successful in some applications [5]. However, although GPS can provide high accuracy and precision, its use is mainly limited to outdoor environments. Indoors, the accuracy and precision of GPS degrade rapidly because of obstacles such as walls and other objects [6]. Many solutions have been proposed for this problem, such as the use of ultrasound, Bluetooth, and WiFi in indoor environments [7]. In ultrasound-based localization models, ultrasonic waves are used to estimate the distance between a receiver, usually located at a fixed point, and a transmitter, which is either the moving target itself or mounted on it. The distance is computed from the time interval between sending the signal at the transmitter and receiving it at the receiver [8]. Bluetooth is a short-range wireless technology that communicates with radio waves. Most Bluetooth-based localization methods rely on the Received Signal Strength Indicator (RSSI), which indicates how strongly a signal is received [9]. Like Bluetooth-based models, WiFi-based localization models are also based on signal strength. The advantages of WiFi over the two previous technologies are its greater availability in urban environments and its wider coverage area. As a result, WiFi-based localization models achieve better accuracy at a lower cost than models based on other signal sources [8]. However, all these solutions depend on signal propagation, which can be unpredictable and unstable and is subject to interference and noise from surrounding objects and components [10,11].

Deep learning is a subfield of machine learning that has proved very successful in applications across many domains, including robotics. This success has led researchers to apply deep learning approaches to AMR localization in indoor environments. Most of these approaches are vision-based and are usually implemented as image retrieval, which finds the database image most similar to the query image by matching features [12,13]. Although many deep learning-based methods have been successfully applied to the localization problem, they are limited by the small size of private datasets. Deep learning-based models have millions of parameters, and training them on small datasets can lead to overfitting. Transfer learning, which reuses weights learned by already-trained models, can largely mitigate this problem, but the resulting models still have limited accuracy because of inadequate generalization.
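As a minimal sketch of the image-retrieval formulation described above, the following Python code matches a query feature vector against a small database of labelled feature vectors using cosine similarity. The feature vectors, the location labels, and the function name `retrieve_location` are hypothetical; in a real system the features would come from a CNN or another descriptor.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_location(query, database):
    """Return the label of the database entry most similar to the query.

    database: list of (label, feature_vector) pairs (hypothetical format).
    """
    best_label, _ = max(database, key=lambda item: cosine_similarity(query, item[1]))
    return best_label

# Hypothetical 3-D features for three places in a building.
database = [
    ("kitchen", [0.9, 0.1, 0.0]),
    ("hallway", [0.1, 0.9, 0.1]),
    ("bedroom", [0.0, 0.2, 0.9]),
]
print(retrieve_location([0.8, 0.2, 0.1], database))  # kitchen
```

Retrieval of this kind can only return locations that are already in the database, which is one reason the approach depends heavily on dataset coverage.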

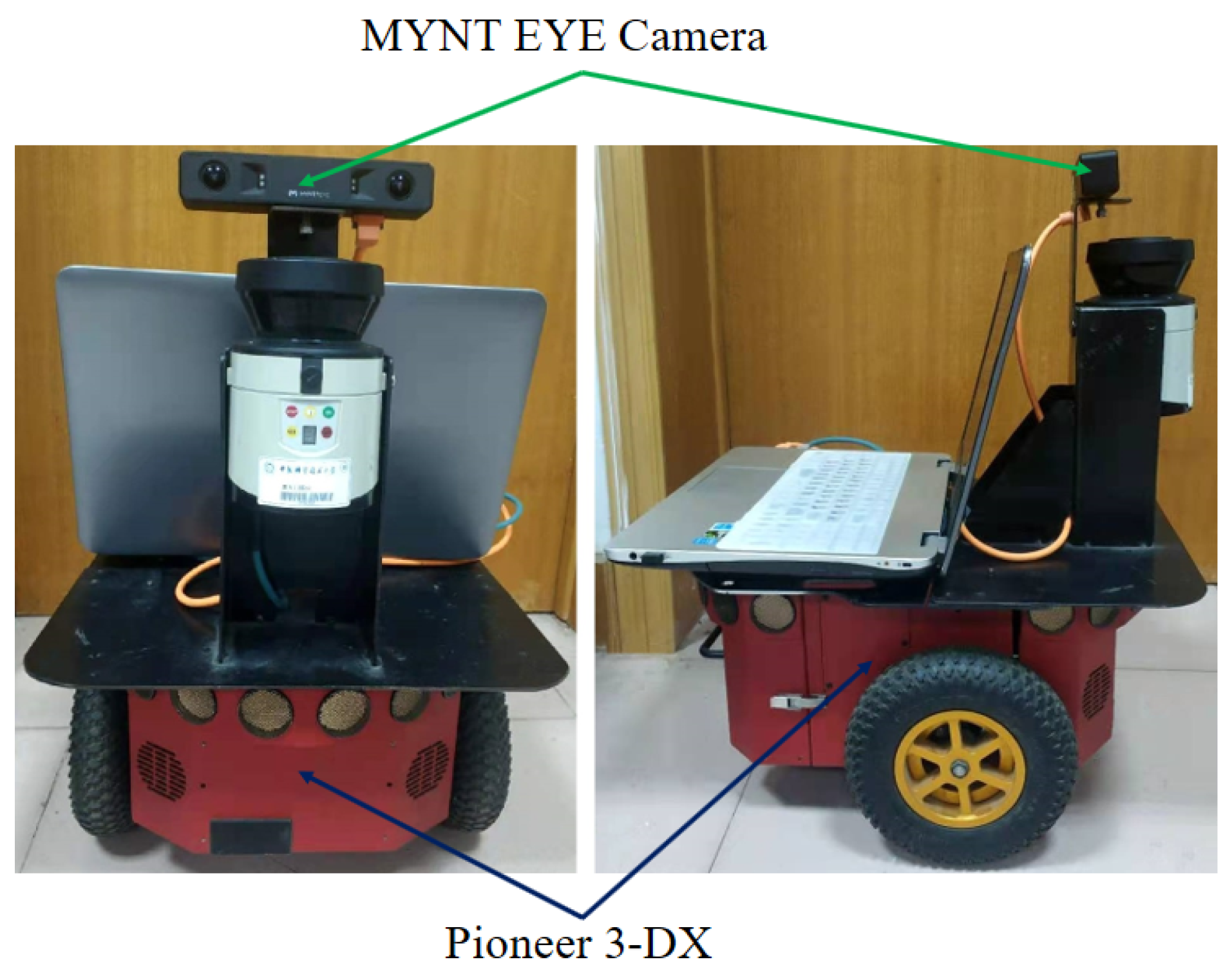

To address the challenges mentioned above, this paper proposes an end-to-end deep learning-based approach for indoor mobile robot localization via image classification with a small dataset. Topological map information is derived from the floor plan of the robot's workspace. The navigable areas of the topological map are then extracted, and each of them is classified as a robot workstation. In this manner, the mobile robot can localize itself and navigate throughout the topological map autonomously. To design the navigation and localization system, a novel dataset was collected in a real apartment environment using the MYNT EYE camera. The proposed CNN-based classifier model is trained on these images and then used for the mobile robot's localization and navigation. The system also uses a CNN-based controller model to detect possible obstacles in the robot's pathway. In addition, we propose a cost function that tackles the limited generalization capability of the CNN classifier caused by the small size of the dataset. Unlike most well-known loss functions, the proposed loss considers not only the probability of assigning the input data to its true class but also the probability of assigning it to other classes. The results demonstrate the efficiency, effectiveness, and generalization capability of the proposed system.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 describes the problem statement, along with the strategy and data acquisition. Section 4 explains the proposed strategy and details the method implementation. In Section 5, the results of the proposed models are discussed and compared with those of other models. Finally, Sections 6 and 7 present the discussion and the conclusions of this study, respectively.

2. Related Work

In recent years, many CNN-based models have been proposed for the classification task. One of the pioneering models is AlexNet [14], which used the Rectified Linear Unit (ReLU) [15] activation to improve accuracy and performance. The advantage of ReLU is that it greatly speeds up convergence during network training. Later, the VGG model [16] used stacks of small convolution kernels, effectively factorizing larger convolutions, which led to improved CNN-based models. Using small receptive windows (kernels or filters) in the convolutional layers reduces the number of parameters needed to cover a given receptive field and enhances performance. However, VGG's total parameter count is large, which makes it poorly suited to embedded systems. Google researchers later released an architecture called MobileNet [17], designed especially for mobile and embedded systems, which have tighter hardware constraints. This model reduced the training time and improved performance. MobileNet comprises 28 layers in total and achieved an accuracy of 87 percent on the ImageNet dataset.
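To make the parameter argument concrete, the sketch below counts convolution weights (biases ignored) for C input and C output channels. It compares one 7 × 7 convolution with the three stacked 3 × 3 convolutions that VGG uses to cover the same 7 × 7 receptive field, and a standard 3 × 3 convolution with a depthwise separable convolution (one 3 × 3 filter per input channel followed by a 1 × 1 pointwise convolution), the building block of MobileNet. The channel count of 256 is an arbitrary example value.

```python
def conv_weights(kernel, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out

def depthwise_separable_weights(kernel, c_in, c_out):
    """Depthwise k x k filter per input channel plus a 1 x 1 pointwise convolution."""
    return kernel * kernel * c_in + c_in * c_out

C = 256  # example channel count (arbitrary)

# VGG-style factorization: three stacked 3x3 layers see a 7x7 region.
single_7x7 = conv_weights(7, C, C)
stacked_3x3 = 3 * conv_weights(3, C, C)
print(single_7x7, stacked_3x3)        # 3211264 1769472

# MobileNet-style depthwise separable convolution vs a standard 3x3 convolution.
standard_3x3 = conv_weights(3, C, C)
separable_3x3 = depthwise_separable_weights(3, C, C)
print(standard_3x3, separable_3x3)    # 589824 67840
```

The stacked 3 × 3 layers need roughly 55% of the weights of a single 7 × 7 layer, and the separable convolution needs under 12% of the weights of the standard one, which illustrates why both designs are lighter than their naive counterparts.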

Junior et al. [18] proposed an online IoT system for mobile robot localization based on machine learning methods. They first collected a dataset with GoPro and omnidirectional cameras and then performed localization as image classification. They used convolutional neural networks (CNNs) to extract features from the images and then classified these features with a machine learning classifier. Although their model achieved good accuracy, it does not operate in an end-to-end fashion. Foroughi et al. [19] introduced a model for robot localization in hand-drawn maps using a CNN and Monte Carlo Localization (MCL). Their localization model works in two phases. In the first phase, they extracted data from different locations of the given map and trained the designed CNN on these data to predict the robot's position. In the second phase, the robot's actual position on the map was estimated more accurately using MCL. The performance of their model is highly influenced by the quality of the hand-drawn map. Zhang et al. [20] proposed a model named Posenet++ for the robot re-localization problem. Their model extends the VGG16 network to regress the camera's position from the input image. Although they used weights from pre-trained models, their model's accuracy is still bounded by the limited size of the dataset. Radwan et al. [21] introduced VLocNet++, which estimates the camera's position with a CNN-based multitask learning method. Their model integrates semantic and geometric information about the surroundings into the pose regression network. Nevertheless, their model may fail in environments with similar features due to its limited generalizability. Hou et al. [22] proposed a point cloud map based on the ORB-SLAM algorithm for robot localization and dense 3D mapping in indoor environments. Although their model performed well for 3D mapping, even in large-scale scenes, its performance for robot positioning is poor. Lin et al. [23] introduced a robust loop closure approach to eliminate the cumulative error caused by long-term localization of mobile robots. First, they use an instance segmentation network to detect objects in the environment and construct a semantic map. Then, the environment is represented as a semantic graph with topological information, and place recognition is performed by efficient graph matching. Finally, the drift error is corrected by object alignment. Although the method is highly robust, it is not suitable for scenes with few objects. Saravanan et al. [24] built an IoT-enabled autonomous mobile robot that uses a CNN and Q-learning (a reinforcement learning method) to autonomously search for and reach plants in an indoor environment in order to monitor their health. Ran et al. [25] designed a shallow CNN with higher scene classification accuracy and efficiency to process images captured by a monocular camera. In addition, they used an adaptive weighted control algorithm combined with regular control to improve the robot's motion performance. However, its capability for self-learning and continuous decision-making is still not satisfactory.

4. Methodology

In this section, the proposed approach for mobile robot localization and navigation is described in detail. The proposed system consists of computer vision processing, which incorporates a topological mapping method and CNN models.

In the designed system, the user submits a request to send the robot to a desired destination. The system first creates a route from the robot's current position to the destination. The route is then broken into commands, which are executed one at a time. To execute each command, an image request is first sent to the sensor (camera) installed on the robot. The sensor captures an image of its surroundings and sends it to the CNN unit, which contains two CNN-based models. The first model estimates the location at which the received image was taken; that is, the model, trained on the dataset beforehand, assigns the appropriate class to the image. If the location predicted by the first model is the destination requested by the user, the system sends the user a message stating that the mission has been completed and waits for the next command. Otherwise, the second model is executed, and, if there is a doorway in the same image, it detects whether the door is closed or open. A closed door means that the robot cannot reach the next destination, in which case an appropriate message is delivered to the user. If there are no closed doors or other obstacles in the pathway, the command is executed, and the robot takes an action, such as turning by a certain angle or moving forward. The flowchart of the proposed approach is presented in Figure 4.
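The control loop described above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the camera, the two CNN models, the actuator, and the user notification are passed in as hypothetical callables (`capture_image`, `classify_location`, `is_path_blocked`, `execute`, `notify`), and the route is assumed to be already broken into a list of commands.

```python
def run_mission(destination, commands, capture_image, classify_location,
                is_path_blocked, execute, notify):
    """Execute route commands one at a time until the destination is reached.

    All callables are hypothetical stand-ins for the robot's components.
    Returns "completed", "blocked", or "route_exhausted".
    """
    for command in commands:
        image = capture_image()                # request an image from the camera
        location = classify_location(image)    # first CNN: predict the workstation class
        if location == destination:
            notify("Mission completed.")
            return "completed"
        if is_path_blocked(image):             # second CNN: closed or semi-open door?
            notify("The path is blocked by a closed door.")
            return "blocked"
        execute(command)                       # e.g. turn by an angle or move forward
    # Assumption: the text does not say what happens if the commands run out.
    return "route_exhausted"

# Hypothetical stubs: the robot sees locations A, B, then C.
frames = iter(["img_A", "img_B", "img_C"])
executed, messages = [], []
status = run_mission(
    destination="C",
    commands=["forward", "turn_left", "forward"],
    capture_image=lambda: next(frames),
    classify_location=lambda img: img[-1],     # stub "classifier": last character
    is_path_blocked=lambda img: False,         # stub "controller": always free
    execute=executed.append,
    notify=messages.append,
)
print(status, executed)  # completed ['forward', 'turn_left']
```

Injecting the components as callables keeps the decision logic testable without a robot; in the real system each callable would wrap the camera driver, a trained model, or the motion controller.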

4.1. CNN Models’ Architecture

4.1.1. Classifier Model

The dataset prepared for this study has 32 classes and 534 images, an average of fewer than 17 images per class. Classifying such a small dataset into this many classes requires specific consideration. Deep learning-based models require large amounts of data to show their effectiveness; therefore, most well-known classification models are designed for large datasets such as ImageNet [26], which contains millions of images and thousands of classes. Despite recent advances in deep learning, there are few standard methods for designing a network that fits small private datasets, and only a few basic guidelines exist for doing so.

Overfitting is a common problem when a model suffers from a lack of data. Overfitting occurs when a network performs extremely well on the training data but poorly on unseen data. To prevent overfitting on small datasets, overly complex models should be avoided: very deep convolutional networks with a huge number of parameters may work well on large datasets, but they tend to overfit small ones. Lack of generalization is another major problem caused by insufficient data. Data augmentation is one of the most effective techniques for addressing this problem; it increases the amount of data by applying different transformations, such as flipping, rotation, and cropping, to the training set.

Considering the above, we propose a simple CNN-based model to classify the images of the prepared dataset into one of 32 categories. The classifier model is trained on the prepared dataset using the architecture presented in Figure 5. The input of the proposed classifier model is an RGB map image with a fixed size of 256 × 256, and the model consists of four convolution layers, four max-pooling layers, and two fully-connected layers. The convolution layers use the ReLU activation function. The numbers of output filters in the first, second, third, and fourth convolution layers are 8, 16, 32, and 64, respectively. The first two convolution layers have 5 × 5 kernels with a stride of 5 × 5, and the other two have 3 × 3 kernels with a stride of 3 × 3. Each convolution layer is followed by a max-pooling layer with a pool size of 2 × 2 and a stride of 2. These are followed by two fully-connected layers: the first has 512 channels, and the second has 128 channels and uses the ReLU activation function. Finally, the network ends with a softmax [27] layer. The configuration of the proposed classifier model is outlined in Table 4.
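The following stdlib-only Python sketch traces the feature-map shapes and counts the trainable parameters of the layer configuration described above. The text does not specify padding, so the sketch assumes 'same' padding for both the convolution and pooling layers; with 'valid' padding, this configuration would leave a 2 × 2 map before the third convolution, which is smaller than its 3 × 3 kernel. Normalization layers are omitted, and the layer list is our reading of the prose rather than the contents of Table 4.

```python
import math

def out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    if padding == "same":
        return math.ceil(size / stride)
    if size < kernel:
        raise ValueError(f"input {size} is smaller than kernel {kernel}")
    return (size - kernel) // stride + 1  # 'valid' padding

def trace_classifier(input_hw=256, channels=3, num_classes=32, padding="same"):
    """Return (final feature-map shape, total trainable parameters)."""
    # (filters, kernel, stride) for the four convolution layers described in the text
    conv_layers = [(8, 5, 5), (16, 5, 5), (32, 3, 3), (64, 3, 3)]
    h = w = input_hw
    params = 0
    for filters, k, s in conv_layers:
        h, w = out_size(h, k, s, padding), out_size(w, k, s, padding)
        params += k * k * channels * filters + filters  # weights + biases
        channels = filters
        # 2x2 max pooling with stride 2 (no parameters)
        h, w = out_size(h, 2, 2, padding), out_size(w, 2, 2, padding)
    features = h * w * channels
    for units in (512, 128, num_classes):  # two dense layers + softmax output
        params += features * units + units
        features = units
    return (h, w, channels), params

print(trace_classifier())                # ((1, 1, 64), 130032)
print(trace_classifier(num_classes=2))   # controller variant: ((1, 1, 64), 126162)
```

Under the 'same'-padding assumption, the large strides reduce the 256 × 256 input to a 1 × 1 × 64 map, so most of the roughly 130,000 parameters sit in the fully-connected layers.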

4.1.2. Controller Model

The proposed controller model is a CNN-based architecture that detects possible obstacles in the robot's pathway by analyzing the image taken by the sensor. These potential obstacles include closed or semi-open doors that prevent the robot from reaching the desired location. The robot can move from one workstation to another only if there is no door between them or the door is fully open. Each image in the prepared dataset falls into one of four modes: no door, a fully open door, a semi-open door, or a fully closed door (Figure 6). We did not create a new dataset to detect the door's state; instead, we relabeled the prepared dataset according to the needs of the controller model. The prepared dataset is therefore divided into two groups: free space and occupied. The free space group contains images with no door or a fully open door, while the occupied group contains images with semi-open or fully closed doors. The adjusted dataset for training the doorway controller model has 534 images, of which 282 belong to the free space class and the remaining 252 belong to the occupied class. Accordingly, we propose a CNN-based network as the controller model, which classifies the images into one of these two categories. The architecture of the proposed controller model is exactly the same as that of the proposed classifier model (Figure 5), except that the softmax layer has two outputs.
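The relabeling step amounts to a fixed mapping from the four door modes to the two controller classes. The mode names and the list-of-labels input format below are hypothetical; only the grouping itself follows the text.

```python
from collections import Counter

# Grouping of the four door modes into the two controller classes.
DOOR_MODE_TO_CONTROLLER = {
    "no_door": "free_space",
    "fully_open": "free_space",
    "semi_open": "occupied",
    "fully_closed": "occupied",
}

def relabel(door_modes):
    """Map per-image door-mode labels to free_space/occupied labels."""
    return [DOOR_MODE_TO_CONTROLLER[mode] for mode in door_modes]

# Toy example with five images (hypothetical labels).
labels = relabel(["no_door", "semi_open", "fully_open", "fully_closed", "no_door"])
print(Counter(labels))  # Counter({'free_space': 3, 'occupied': 2})
```

Because the mapping is a plain dictionary lookup, an unknown mode raises a `KeyError` instead of being silently assigned to either class.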

4.2. Data Normalization

In deep learning, the quality of a model is determined largely by its training data [28], but some techniques can further optimize the model's performance. One effective and very popular technique is normalization. In deep learning-based models, changes in the distribution of earlier layers' outputs are amplified in later layers, so each layer must keep adapting to a new input distribution. This problem, called Internal Covariate Shift, slows down training and makes the network take longer to converge. Normalization techniques are the standard way to overcome this problem. Besides reducing Internal Covariate Shift, normalization standardizes the inputs, smooths the objective function, stabilizes the learning process, and decreases the training time. Data normalization is performed to improve the training of deep neural networks and is usually applied as a preprocessing step before training. In data normalization, the value of each feature is mapped to the [0, 1] range, where 1 represents the maximum value and 0 the minimum value of that feature. In many systems, however, the values of a feature span a very wide range, so logarithmic scaling is applied first to bring the values into a suitable range. A logarithmic scale displays numerical data spanning a very wide range of values in a compact way.
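A minimal sketch of the two scaling steps described above: min-max normalization maps each feature linearly to [0, 1], and logarithmic scaling compresses values that span several orders of magnitude. Using `math.log1p` (log(1 + x), which keeps 0 at 0) is our assumption; the text does not specify the exact logarithmic transform.

```python
import math

def min_max_normalize(values):
    """Map values linearly to [0, 1]: the minimum goes to 0, the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant feature: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def log_scale(values):
    """Compress non-negative values spanning a wide range with log(1 + x)."""
    return [math.log1p(v) for v in values]

raw = [0, 9, 99, 999]                      # spans three orders of magnitude
print(min_max_normalize(raw))              # linear: small values are squeezed near 0
print(min_max_normalize(log_scale(raw)))   # approx. [0.0, 0.333, 0.667, 1.0]
```

Applied directly, min-max normalization maps 9 and 99 to values below 0.1; after logarithmic scaling, the four values become evenly spaced in [0, 1].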

Batch normalization [29] and layer normalization [30] are the two best-known normalization techniques. To investigate their effectiveness, we modified and trained both proposed models twice: once with a batch normalization layer added after each convolution layer and once with layer normalization added after each convolution layer. The results are illustrated in Figure 7.

As can be seen in Figure 7, batch normalization did not improve the performance of the proposed classifier and controller models, which may be due to the size of the dataset. Batch normalization depends on the mini-batch size [31], and small mini-batches make the batch statistics, and hence the training process, noisy. Because the prepared dataset is small, the mini-batches are small as well; as a result, batch normalization could not play an effective role.

Figure 7 also shows that layer normalization effectively increased the accuracy on both the training and validation sets. In addition, compared with the other two variants, the models with layer normalization produced less noisy training and validation curves, which makes them more reliable.
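The difference between the two techniques lies in which values the mean and variance are computed over. The simplified sketch below treats each sample as a flat feature vector and omits the learnable scale and shift parameters (in a CNN, batch normalization also pools statistics over spatial positions). Batch normalization normalizes each feature across the samples of a mini-batch, whereas layer normalization normalizes each sample across its own features. A layer-normalized sample therefore does not depend on the other samples in the mini-batch, while a batch-normalized one does, which is why small mini-batches make batch statistics noisy.

```python
import math
import statistics

def batch_norm(batch, eps=1e-5):
    """Normalize each feature using statistics over the samples in the mini-batch."""
    columns = list(zip(*batch))
    stats = [(statistics.mean(c), statistics.pvariance(c)) for c in columns]
    return [
        [(x - mu) / math.sqrt(var + eps) for x, (mu, var) in zip(sample, stats)]
        for sample in batch
    ]

def layer_norm(batch, eps=1e-5):
    """Normalize each sample using statistics over its own features."""
    out = []
    for sample in batch:
        mu, var = statistics.mean(sample), statistics.pvariance(sample)
        out.append([(x - mu) / math.sqrt(var + eps) for x in sample])
    return out

sample = [1.0, 2.0, 3.0]
# Layer norm: the result for `sample` is the same regardless of its batch mates.
print(layer_norm([sample])[0] == layer_norm([sample, [10.0, 20.0, 30.0]])[0])   # True
# Batch norm: the result for `sample` changes with the batch composition.
print(batch_norm([sample, [10.0, 20.0, 30.0]])[0] == batch_norm([sample, [0.0, 0.0, 0.0]])[0])  # False
```

In the extreme case of a mini-batch of one, batch normalization maps every feature to zero, while layer normalization still produces an informative output.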

4.3. Cost Function

The goal of the neural network during training is to minimize the loss function, that is, to reduce the difference between the computed and target values. Cross-entropy (CE) loss is one of the best-known and most popular loss functions for classification. In a $K$-class classification task, a training mini-batch of size $m$ can be defined as $D = \{(x_i, y_i)\}_{i=1}^{m}$, where $x_i$ is a sample of the input data $X$, and $y_i \in \{1, \dots, K\}$ is the true label of $x_i$. The network's probability that the label of $x_i$ is $k$ can be defined as Equation (1):

$$p(k \mid x_i) = \frac{\exp(z_k)}{\sum_{j=1}^{K} \exp(z_j)} \qquad (1)$$

where $z = (z_1, \dots, z_K)$ is the network's prediction (its output logits). The CE loss for $x_i$ is formally defined as follows:

$$\mathcal{L}_{CE}(x_i) = -\sum_{k=1}^{K} q(k \mid x_i) \log p(k \mid x_i) \qquad (2)$$

When $x_i$ is labeled as $k$ (i.e., $k = y_i$), then $q(k \mid x_i) = 1$; otherwise, for all $k \neq y_i$, $q(k \mid x_i) = 0$. Based on Equation (2), the CE loss only considers the probability of the input data being allocated to its true class. As mentioned earlier, the prepared dataset contains quite few images, and each class contains only a few images. Therefore, to increase the performance of the proposed network, we modified the CE loss so that it also considers the probability of allocating the input data to classes other than its actual class. Equation (3) represents this loss, $\mathcal{L}_{\overline{CE}}(x_i)$, incurred when the data are allocated to classes other than their true class. In Equation (3), when $x_i$ is not labeled as $k$, then $\bar{q}(k \mid x_i) = 1$; otherwise, for $k = y_i$, $\bar{q}(k \mid x_i) = 0$. In addition, $\alpha$ is a variable that controls the scale of the loss incurred when the data are allocated to classes other than their actual class. By combining Equations (2) and (3), the proposed loss function $\mathcal{L}$ is obtained, which considers not only the probability that the data are labeled correctly but also the probability that they are labeled wrongly:

$$\mathcal{L}(x_i) = \mathcal{L}_{CE}(x_i) + \mathcal{L}_{\overline{CE}}(x_i) \qquad (4)$$

Besides increasing the probability assigned to the true class, the proposed loss simultaneously decreases the probability of allocating the data to other classes.
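The sketch below computes, for a single sample in plain Python, the softmax probabilities of Equation (1), the CE loss of Equation (2), and their combination with a wrong-class term as in Equation (4). The explicit form of the wrong-class term (Equation (3)) is not reproduced in the text, so the sketch assumes it is $-\alpha \sum_{k \neq y_i} \log\big(1 - p(k \mid x_i)\big)$, where $\alpha$ is the scale variable for that term. This is a common complement-style penalty that is minimized by pushing the probabilities of the wrong classes toward zero; the authors' exact formulation may differ. The default `alpha=2.0` mirrors the best value reported in the parameter study, and with `alpha=0` the function reduces to plain CE.

```python
import math

def softmax(logits):
    """Equation (1): convert logits z into class probabilities p(k | x)."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def proposed_loss(logits, true_class, alpha=2.0, eps=1e-12):
    """CE term (Eq. 2) plus an ASSUMED wrong-class term standing in for Eq. (3)."""
    p = softmax(logits)
    ce = -math.log(max(p[true_class], eps))
    # Assumed form of Eq. (3): penalize probability mass on every wrong class.
    wrong = -alpha * sum(
        math.log(max(1.0 - p_k, eps))
        for k, p_k in enumerate(p)
        if k != true_class
    )
    return ce + wrong                        # Eq. (4): sum of both terms

# Two equally likely classes: CE = log 2, wrong-class term = alpha * log 2.
print(proposed_loss([0.0, 0.0], true_class=0, alpha=0.0))  # log 2, about 0.693
print(proposed_loss([0.0, 0.0], true_class=0, alpha=2.0))  # 3 * log 2, about 2.079
```

The `eps` clamp only guards against `log(0)` when a probability saturates at exactly 0 or 1.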

4.4. Weight Loss

As presented in Table 3, the dataset prepared for the classifier model contains multiple labels for various locations in the apartment (the same holds for the dataset prepared for the controller model). Because the number of images varies across classes, and the imbalance is larger for some classes than for others, the dataset needs to be balanced across classes. We therefore designed a weighted loss scheme to correct the data imbalance. The weight $w_k$ of class $k$ is defined in Equation (5), where $N_{\max}$ is the number of images in the largest class of the dataset, $N_k$ is the number of images in the current class $k$, $N$ is the total number of images in the dataset, and $\beta$ is a variable that controls the scale of the weight loss. With this weight, classes with fewer images receive larger weights, and classes with more images receive smaller weights. By applying Equation (5) to Equation (4), the weighted loss function, Equation (6), is obtained.

To evaluate the effectiveness of the proposed loss, we trained both the proposed classifier and controller models twice: once with the CE loss and once with the proposed loss. The results are presented in Figure 8.

According to Figure 8, the proposed loss slightly improves the accuracy of both the proposed classifier and controller models. In addition, compared with CE, the proposed loss reduced the noise of the training and validation curves, made them smoother, and converged faster to maximum accuracy.
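Because the exact form of the class weight (Equation (5)) is not reproduced in the text, the sketch below uses a common inverse-frequency stand-in: the size of the largest class divided by the size of class k. It shares the stated property that smaller classes receive larger weights, but it omits the total image count and the scale variable, so it is not the authors' formula. Scaling each sample's loss by the weight of its true class is likewise an assumption about how the weights enter the weighted loss. The example counts are the controller dataset's 282 free-space and 252 occupied images.

```python
def inverse_frequency_weights(class_counts):
    """Stand-in class weights N_max / N_k (NOT the paper's Eq. 5).

    The largest class gets weight 1; smaller classes get larger weights.
    """
    n_max = max(class_counts)
    return [n_max / n_k for n_k in class_counts]

def weighted_loss(per_sample_losses, labels, weights):
    """Mean of per-sample losses, each scaled by the weight of its true class."""
    total = sum(weights[y] * loss for loss, y in zip(per_sample_losses, labels))
    return total / len(per_sample_losses)

# Controller dataset: 282 free-space images (class 0) and 252 occupied images (class 1).
weights = inverse_frequency_weights([282, 252])
print(weights)  # [1.0, about 1.119]
print(weighted_loss([1.0, 1.0], labels=[0, 1], weights=weights))
```

For the nearly balanced controller dataset, the correction is mild; it grows with the ratio between the largest and smallest classes.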

5. Empirical Results

In this section, the results of the proposed classifier and controller models, along with the proposed loss, are presented. First, evaluation parameters are described, and then the experimental setup, which is used for the execution and implementation of the proposed system, is detailed. Finally, the performance of the proposed classifier and controller models is compared with state-of-the-art models.

5.1. Evaluation Parameters

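To evaluate the performance of the proposed classifier and controller models, the standard metrics of accuracy and F1-Score are used. Let TP denote True Positives, TN True Negatives, FP False Positives, and FN False Negatives. These evaluation metrics are defined mathematically in Equations (7) and (8):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (7)$$

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \quad \text{Precision} = \frac{TP}{TP + FP}, \quad \text{Recall} = \frac{TP}{TP + FN} \qquad (8)$$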

Accuracy is one of the most standard metrics for evaluating CNN-based classification models. In classification, accuracy is the proportion of all predictions that are correct, that is, how closely the model's predictions match the reference (ground-truth) labels. The F1-Score, another standard metric for classification problems, is the harmonic mean of precision and recall. Precision is the proportion of samples predicted as positive that are actually positive. Recall, also known as sensitivity, is the proportion of actually positive samples that the model predicts correctly. When both precision and recall are important, the F1-Score can be used.
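The sketch below computes accuracy and the F1-Score from lists of true and predicted labels. For the multi-class case, each class is treated in turn as the positive class (one-vs-rest), and a macro average is taken; this averaging choice is an assumption, since the text does not state how per-class scores are combined. The labels in the example are toy values.

```python
def confusion_counts(y_true, y_pred, positive):
    """TP, TN, FP, FN for one class treated as 'positive' (one-vs-rest)."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    tn = sum(t != positive and p != positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp, tn, fp, fn

def accuracy(y_true, y_pred):
    """Fraction of correct predictions (Eq. 7 generalized to several classes)."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive):
    """Eq. (8) for one class; returns 0.0 when precision and recall are both 0."""
    tp, _, fp, fn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (averaging choice is an assumption)."""
    classes = sorted(set(y_true) | set(y_pred))
    return sum(f1_score(y_true, y_pred, c) for c in classes) / len(classes)

y_true = ["free", "free", "occupied", "occupied", "free"]
y_pred = ["free", "occupied", "occupied", "occupied", "free"]
print(accuracy(y_true, y_pred))          # 0.8
print(f1_score(y_true, y_pred, "free"))  # about 0.8 (precision 1.0, recall 2/3)
```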

5.2. Training Strategy and Parameter Tuning

In this step, the effects of different parameters on the proposed classifier and controller models are investigated. These parameters include the input image size, the training/testing split ratio, and the elements of the proposed loss. In each experiment, one parameter is varied while the others are kept unchanged. In addition, each experiment uses 10-fold cross-validation (run ten times) on both prepared datasets.
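A minimal sketch of 10-fold cross-validation index generation for the 534-image datasets: the shuffled indices are split into ten nearly equal folds, and each fold serves once as the held-out set while the other nine are used for training. Shuffling with a fixed seed and the absence of class stratification are assumptions; the text does not specify them.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)        # reproducible shuffle (assumption)
    base, extra = divmod(n_samples, k)          # first `extra` folds get one more sample
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(534, k=10))
print([len(test) for _, test in folds])  # [54, 54, 54, 54, 53, 53, 53, 53, 53, 53]
```

With 534 images, four folds hold 54 images and six hold 53, so every image is tested exactly once across the ten runs.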

The proposed system is implemented primarily in Python 3.5. The deep learning models are built with the Keras framework on top of the TensorFlow 2.1 library. All models are trained on an NVIDIA GeForce GTX 1080 Ti with 8 GB of memory. Stochastic Gradient Descent (SGD) [32] with a momentum of 0.9 is adopted as the optimizer. SGD iteratively updates the network weights in the direction that reduces the loss, using gradients computed on mini-batches of the training data. A learning-rate reduction mechanism lowers the initial learning rate when the validation loss stops improving. During training, the dataset is divided into mini-batches. The batch size is set to 16, which means that each mini-batch contains 16 images, which are processed in parallel. To prevent possible overfitting, an early-stopping strategy monitors the improvement of the validation loss.
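The sketch below shows the SGD-with-momentum update in its Keras-style formulation (velocity = momentum × velocity − learning_rate × gradient; weight = weight + velocity) on the one-dimensional toy loss $f(w) = (w - 3)^2$. The toy loss, the learning rate of 0.1, and the number of steps are illustrative assumptions; the paper's initial learning rate is not reproduced here.

```python
def sgd_momentum(grad_fn, w0, learning_rate=0.1, momentum=0.9, steps=500):
    """Minimize a 1-D function with SGD + momentum, given its gradient."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        g = grad_fn(w)
        velocity = momentum * velocity - learning_rate * g   # accumulate past gradients
        w = w + velocity                                      # move along the velocity
    return w

# Toy loss f(w) = (w - 3)^2 with gradient 2 * (w - 3); its minimum is at w = 3.
w_star = sgd_momentum(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(round(w_star, 4))  # 3.0
```

The momentum term lets updates keep moving in a consistent direction across steps, which typically speeds up progress along shallow directions of the loss surface compared with plain SGD.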

In addition, to make the model more robust, an image data generator (data augmentation) is used. This feature augments the data in real time while the deep learning model is training. The Keras library offers a number of options, such as normalization options and random geometric transformations, which can be selected to apply different transformations to the data. Transformations are applied to the images on the fly as they are fed to the model during training. This offers two major benefits: it saves time and memory during the training phase, and it makes the model more robust.

Table 5 presents the parameters and their values selected for the training data generator.
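For illustration only, the following pure-Python sketch applies random horizontal flips, 90-degree rotations, and crops to a tiny grayscale image stored as a list of rows. It is not the Keras data generator, and the chosen transformations and their probabilities are assumptions rather than the settings in Table 5.

```python
import random

def hflip(image):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in image]

def rot90(image):
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def crop(image, top, left, height, width):
    """Extract a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]

def augment(image, rng, crop_size=2):
    """Randomly flip, rotate, and crop an image (probabilities are assumptions)."""
    if rng.random() < 0.5:
        image = hflip(image)
    if rng.random() < 0.5:
        image = rot90(image)
    top = rng.randint(0, len(image) - crop_size)
    left = rng.randint(0, len(image[0]) - crop_size)
    return crop(image, top, left, crop_size, crop_size)

img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
print(hflip(img))                      # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
print(rot90(img))                      # [[7, 4, 1], [8, 5, 2], [9, 6, 3]]
print(augment(img, random.Random(0)))  # a random 2 x 2 crop
```

Because a fresh random transformation is drawn each time an image is requested, the model rarely sees exactly the same input twice, which is the source of the robustness gain described above.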

5.2.1. Image Size

The classification performance of the proposed classifier and controller models is compared using different input image sizes: 128 × 128, 228 × 128, 256 × 256, and 465 × 256. Both models are trained on the prepared datasets at these sizes, and the results on the test set are presented in Table 6.

As can be observed from Table 6, for both models, input sizes of 256 × 256 and 465 × 256 outperform the other input sizes in terms of accuracy. Although these larger inputs increase the training time, this cost is outweighed by their significant improvement in performance compared with input sizes of 128 × 128 and 228 × 128.

The classifier model achieved the same accuracy, 96.2%, with input sizes of 256 × 256 and 465 × 256. The controller model achieved an accuracy of 98.7% with an input size of 465 × 256, only 0.1 percentage points higher than with an input size of 256 × 256. Despite this negligible gain, the 465 × 256 input significantly increases the computational cost, adding up to four seconds to the training time of each epoch. Therefore, the input size of 256 × 256 is selected for further experiments.
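As a rough check on this cost difference, the number of input pixels can be compared across the candidate sizes. Treating pixel count as a proxy for computational cost is our assumption; actual training time also depends on the architecture and hardware.

```python
def pixel_ratio(width, height, reference=(256, 256)):
    """Pixels in a width x height input relative to the reference size."""
    return (width * height) / (reference[0] * reference[1])

for width, height in [(128, 128), (228, 128), (256, 256), (465, 256)]:
    print(f"{width} x {height}: {width * height} pixels, "
          f"{pixel_ratio(width, height):.2f}x the pixels of 256 x 256")
```

The 465 × 256 input carries about 1.8 times as many pixels as the 256 × 256 input, in line with the longer epoch times reported above.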

5.2.2. Training Testing Split Ratio

The training/testing split is a technique for evaluating how well a deep learning model predicts data that were never used during training. To achieve the best classification performance of the proposed models, the dataset is divided using five different training:testing ratios: 90:10, 80:20, 70:30, 60:40, and 50:50. An X:Y split ratio means that X% of the data are used to train the deep learning model and the remaining Y% are used to test it. Since each prepared dataset contains 534 images, a 90:10 split selects 481 images for training and 53 images for testing. Both proposed classifier and controller models are trained on the prepared datasets with each of these split ratios, and the results on the test set are presented in Table 7.

As presented in Table 7, the models trained with split ratios of 90:10 and 80:20 obtain almost identical results, which are better than those obtained with split ratios of 70:30, 60:40, and 50:50. Overall, both proposed models attained their best classification results with a split ratio of 80:20, achieving accuracies of 96.2% and 98.6% for the classifier and controller models, respectively.
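The number of training and testing images for each ratio follows from rounding X% of the 534 images. Rounding to the nearest integer (halves rounded up) is our assumption, chosen because it reproduces the 481/53 split quoted for 90:10.

```python
def split_counts(n_images, train_percent):
    """Training/testing image counts for an X:Y split, rounding to the nearest integer."""
    n_train = (n_images * train_percent + 50) // 100   # integer round-half-up
    return n_train, n_images - n_train

for ratio in (90, 80, 70, 60, 50):
    train, test = split_counts(534, ratio)
    print(f"{ratio}:{100 - ratio} -> {train} train / {test} test")
```

Under this rounding, the selected 80:20 split uses 427 training and 107 test images.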

5.2.3. Effect of $\alpha$ and $\beta$ on the Proposed Loss Function

As described in the methodology section, the parameters $\alpha$ and $\beta$ are defined to regularize the proposed loss function: $\alpha$ controls the scale of the loss, and $\beta$ controls the scale of the class weights. To evaluate the effect of $\alpha$ and $\beta$ on the performance of the proposed loss, the proposed models are trained on both prepared datasets with various values of $\alpha$ and $\beta$, and the results on the test set are presented in Figure 9.

When $\alpha = 0$, the proposed loss reduces to the weighted CE loss, which only considers the probability of the input data being allocated to its true class. With $\alpha > 0$, the proposed loss also considers the probability of allocating the input data to classes other than its actual class. Therefore, when $\alpha$ is small, the loss focuses more on the samples whose labels the model predicts correctly, and when $\alpha$ is large, the second part of the loss function (which is responsible for the samples whose labels are predicted incorrectly) becomes dominant. The best values of $\alpha$ and $\beta$ must be found empirically and adjusted separately for each dataset. On the prepared datasets, both proposed models perform best when $\alpha$ and $\beta$ are set to 2 and 5, respectively.

5.3. Comparison of Proposed Loss and Cross-Entropy Loss

The performance of the proposed loss is further compared to the CE loss. As mentioned earlier, the proposed loss is an extended version of the CE loss. The CE loss only considers the probability of the input data when it is allocated to its true class, but the proposed loss, in addition to the advantages of the CE, also considers the probability of allocating the input data to other classes rather than its true class. For comparison, the proposed classifier and controller models are trained in 10-fold cross-validation (run ten times) method once with employing the proposed loss and once with CE loss. In order to have a fair comparison, in both experiences, all the parameters and training strategies are the same, and the only difference is the loss function. The classification outcomes on the test set are monitored, and the best results are picked until the 25th, 50th, 75th, and 100th epochs. The results are shown in

Figure 10.

As shown in Figure 10, the proposed loss leads to better performance than the CE loss for both the classifier and controller models. Figure 10 also shows that the classification accuracy in both experiments increases with the number of epochs, and both proposed models reach their best performance at epoch 100. In addition, in almost all experiments, the error bars show a smaller standard deviation when the model is trained with the proposed loss than when it is trained with CE. Overall, based on these results, the proposed loss not only improves the accuracy of the models but also speeds up the optimization procedure and consequently reduces the training time. The results for 100 epochs are presented in more detail in Table 8.

5.4. Comparison of the Proposed CNN Model with State-of-the-Art Models

After evaluating the key parameters, the performance of the proposed classifier and controller models is compared with that of three state-of-the-art models: AlexNet, MobileNetV2, and VGG-16. As discussed in the methodology section, state-of-the-art models are designed for very large datasets and, because of their convolution size and depth, tend to overfit when trained on small datasets. Therefore, to make these models work properly on the prepared datasets, all of them are modified and their hyperparameters are tuned for the prepared datasets. The main modification is replacing the original fully connected layers of these models with the fully connected layers designed for the proposed model, which significantly reduces the number of parameters. In addition, layer normalization is added after the convolution layers in the main body of these models. Finally, a strong dropout [

33], with

, is applied after the first fully connected layer.
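To give a sense of why replacing the fully connected layers reduces the parameter count so strongly, the arithmetic below counts the weights and biases of a stack of dense layers. The original VGG-16 classifier (25,088 → 4096 → 4096 → 1000) accounts for 123,642,856 parameters. The replacement head used here (one 256-unit hidden layer and 10 output classes) is a hypothetical placeholder, not the fully connected design of the proposed model.

```python
def dense_params(in_features, out_features, bias=True):
    """Parameters of one fully connected layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)

def head_params(layer_sizes):
    """Total parameters of consecutive fully connected layers, e.g. [in, hidden, out]."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))

# Original VGG-16 classifier: flattened 7x7x512 feature map -> 4096 -> 4096 -> 1000.
VGG16_HEAD = [7 * 7 * 512, 4096, 4096, 1000]
# Hypothetical replacement head: one 256-unit hidden layer and 10 classes (placeholders).
SMALL_HEAD = [7 * 7 * 512, 256, 10]

print(head_params(VGG16_HEAD))  # 123642856
print(head_params(SMALL_HEAD))  # 6425354
```

Most of the reduction comes from the first two 4096-unit layers; normalization and dropout layers add few or no trainable parameters.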

The proposed classifier and controller models and the adjusted AlexNet, MobileNetV2, and VGG-16 are trained on the prepared datasets with a batch size of 16 for 100 epochs. All the models are trained once with CE and once with the proposed loss. For the proposed loss, the values of and are set to 2 and 5, respectively. SGD is employed as the optimizer with an initial learning rate of and a momentum of 0.9. Data are split into training and test sets with an 80:20 ratio, and all the models are trained on the augmented training set. The input sizes of the state-of-the-art models were left at their original values: 256 × 256 for AlexNet and 224 × 224 for MobileNetV2 and VGG-16.
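The training setup above can be summarized with a few small helpers: an 80:20 shuffle split, batches of 16, and the SGD-with-momentum update. The update follows the form used by PyTorch's `torch.optim.SGD` with `dampening=0` and `nesterov=False` (v ← μv + g, θ ← θ − ηv); whether the authors used exactly this variant is an assumption. The learning rate in the example is a hypothetical value, and the helper names are illustrative.

```python
import random

def train_test_split(samples, train_ratio=0.8, seed=0):
    """Shuffle a copy of the samples and split it into training and test sets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = round(train_ratio * len(shuffled))
    return shuffled[:cut], shuffled[cut:]

def minibatches(data, batch_size=16):
    """Yield consecutive mini-batches (the last one may be smaller)."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]

def sgd_momentum_step(params, grads, velocity, lr, momentum=0.9):
    """One SGD step with momentum (no dampening, no Nesterov):
    v <- momentum * v + g, then p <- p - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - lr * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity

# Usage with placeholder data; lr=0.01 is a hypothetical value.
train, test = train_test_split(range(100))
print(len(train), len(test))  # 80 20
params, velocity = [1.0], [0.0]
params, velocity = sgd_momentum_step(params, [0.5], velocity, lr=0.01)
```

Splitting before augmenting, and augmenting only the returned training portion, keeps augmented copies of test images out of training, which is consistent with training on the augmented training set only.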

As the results on the test sets of the prepared datasets in Table 9 show, the proposed models, when equipped with the proposed loss, outperformed the other models, obtaining accuracies of 96.2% and 89.6% for the classifier and controller models, respectively. Moreover, all the models perform slightly better with the proposed loss than with CE as the loss function. Other well-known classification models were also considered for comparison, but they performed similarly to the models presented here and are therefore not reported. These comparisons are not meant to demonstrate that the proposed classifier and controller models outperform the state-of-the-art models, but to illustrate that shallow networks such as the proposed classification model are simple yet accurate and effective for small datasets.

5.5. Evaluation of the Proposed System in a Real Environment

To evaluate the effectiveness of our approach, we examined the performance of the proposed mobile robot localization and navigation system in a real environment. As mentioned, a real apartment (Figure 2) was chosen for the experiments, and five different routes (see Table 2) were designed to evaluate the navigation system of the mobile robot.

For each route, if the robot executes all the commands correctly from the starting point and reaches the destination, the run is counted as a 100% success. Otherwise, if it makes a mistake along the route, the success rate is the fraction of the route's commands that were executed correctly before the mistake. For example, to move from workstation 1 to workstation 8 (route 1), the robot should first rotate to the angle of zero (T0), then move straight (Go), and finally stop at workstation 8 (SW8). In addition, before each “Go” command, the system checks the doorway and only allows the “Go” command to be executed if there is no obstacle in the way. Therefore, four commands must be executed correctly to complete route 1. If the system correctly detects an obstacle on the pathway (a closed or semi-closed door), it stops the operation. In this case, only two commands are performed, the rotation to the angle of zero (T0) and the obstacle check, and the run is considered a 100% success. However, if the system mistakenly detects an obstacle in the path and stops the operation, only the rotation to the angle of zero (T0) is executed correctly, which gives a 25% success rate (one out of four commands).

We tested each designed route fifteen times. Five times, we left all the doors open so that the robot could move along the pathway without facing any obstacles; five times, we left at least one of the pathway’s doors halfway open; and another five times, we left at least one of the pathway’s doors fully closed.
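The scoring rule above can be written as a small function. This is a sketch assuming that each run is scored as the fraction of planned commands executed correctly before the first mistake, with a correct stop at a blocked doorway counted as full success. `CHECK` is a hypothetical label for the doorway check that precedes each “Go” command, and averaging the fifteen trials of a route is an assumption about how per-route figures are aggregated.

```python
from statistics import mean

# Route 1 (workstation 1 -> workstation 8); "CHECK" is a hypothetical label
# for the doorway/obstacle check performed before each "GO" command.
ROUTE_1 = ["T0", "CHECK", "GO", "SW8"]

def route_success(commands, n_correct, stopped_correctly_at_obstacle=False):
    """Success rate (%) of a single run of a route.

    n_correct: number of commands executed correctly before the first mistake.
    stopped_correctly_at_obstacle: the robot correctly detected a blocked
    doorway and stopped, which is counted as a full success.
    """
    if stopped_correctly_at_obstacle:
        return 100.0
    if not 0 <= n_correct <= len(commands):
        raise ValueError("n_correct must be between 0 and len(commands)")
    return 100.0 * n_correct / len(commands)

def route_average(runs):
    """Mean success rate over several runs (assumed aggregation, e.g. 15 trials)."""
    return mean(runs)

# Example runs for the three cases described in the text.
full_run = route_success(ROUTE_1, 4)        # all commands correct
correct_stop = route_success(ROUTE_1, 2, stopped_correctly_at_obstacle=True)
false_alarm = route_success(ROUTE_1, 1)     # wrongly detects an obstacle after T0
print(full_run, correct_stop, false_alarm)  # 100.0 100.0 25.0
```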

We ran the experiments with the system using either the proposed backbone or the VGG-16 backbone for the classifier and controller models, each combined with either the proposed loss or the CE loss. The results of the proposed mobile robot localization and navigation system in the real apartment environment are presented in Table 10.

As the experimental results in Table 10 show, the designed system performs better with the proposed loss than with the CE loss. In addition, the best performance of the proposed localization and navigation system is obtained when the proposed backbone is used for the classifier and controller models together with the proposed loss function.