Implementing Internet of Things Service Platforms with Network Function Virtualization Serverless Technologies

Abstract

1. Introduction

- We analyze several key IoT use cases and discuss whether NFV can suitably be implemented through serverless computing functions, by matching their service requirements against serverless capabilities.

- We present a specific serverless computing architecture based on the integration of Firecracker and Kata Containers. It can run both classic containerized applications and new packet processing applications with minimal overhead. The packet processing applications are realized by means of NetBricks, a novel NFV framework optimized for execution speed and rapid code development.

- We present the results of an experimental campaign evaluating the overall costs and performance of the proposed serverless framework, which is based exclusively on open-source software, and discuss its suitability with respect to IoT service requirements.

2. Background and Related Work

2.1. Serverless Computing

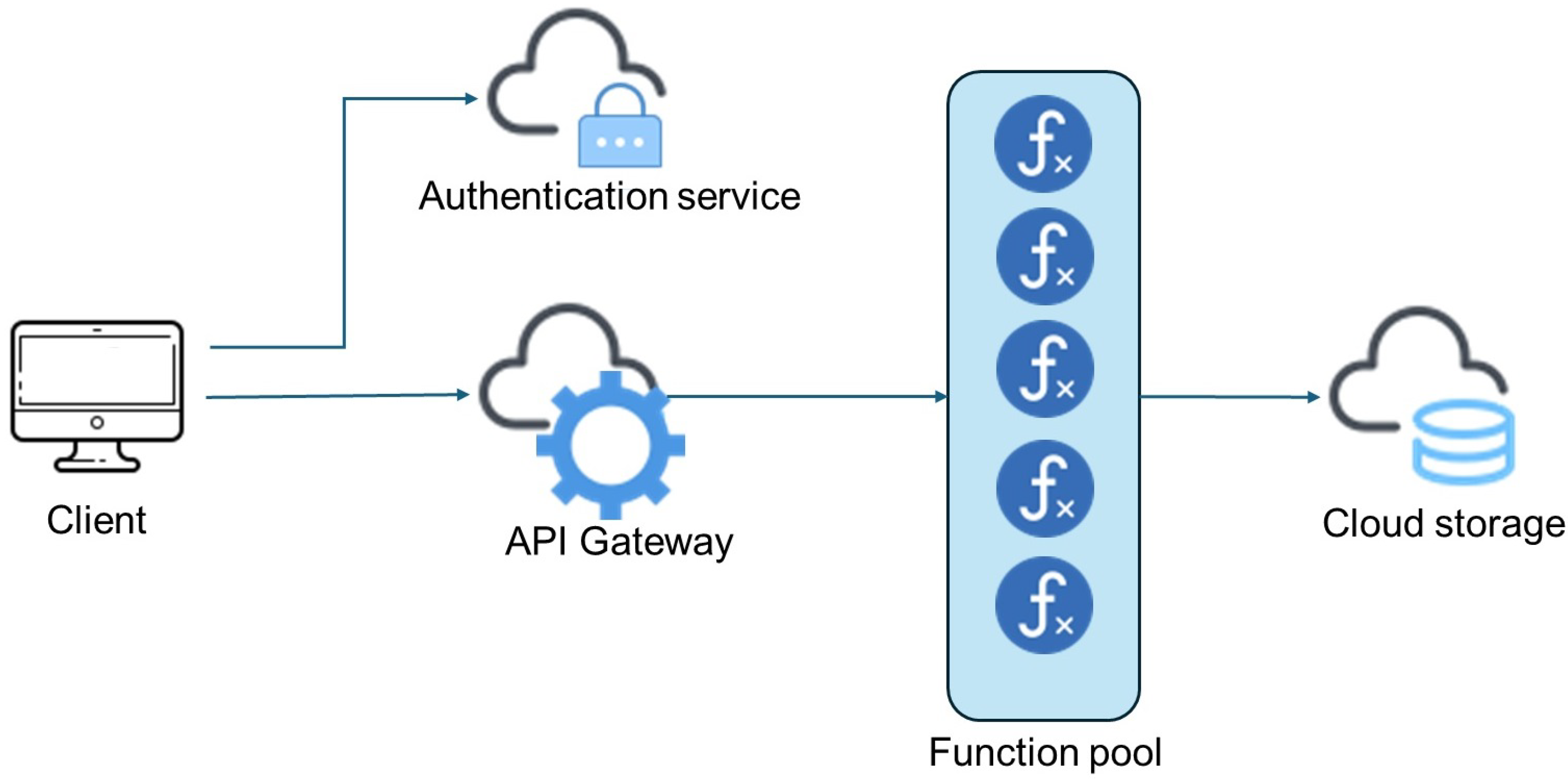

- An authentication service: Serverless customers use the API provided by the cloud service provider to authenticate and obtain authorization to use its services. Typically, this is handled via a security token.

- FaaS engine: This is the core of the serverless solution. It enables developers to build, run, deploy, and maintain applications without managing server infrastructure. Each application is divided into a number of functions, each of which performs a simple task and runs only when the service needs it. This also allows functions to be shared among different services. Access to the function pool is managed by an application engine named API Gateway, which triggers the execution of the appropriate function in response to events.

- Data storage: Although applications are developed and managed according to the FaaS paradigm, users’ data need to be stored in a database, which is an essential component of the overall solution.

- The developer writes a function, pushes it to the platform, and also defines the events that trigger its execution. The most common triggering event is an HTTP request.

- The event is triggered. For an HTTP request, a remote user triggers it with a click, or another IoT function or device sends a RESTful message to the target function.

- The function is executed. The cloud provider first checks whether an instance of the function is already running and can be reused. If not, it spawns a new instance of the function.

- The result is returned to the requesting entity or user, and is typically consumed within the composite application workflow.
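The components and the four-step workflow above can be illustrated with a minimal, provider-neutral Python sketch. Several parts are assumptions that do not come from any real platform. The token format `<user>:<hmac>` and the signing key are hypothetical. The `Gateway` class is a hypothetical stand-in for the API Gateway. In-process callables take the place of real function instances. Reusing an idle instance corresponds to what is often called a warm start, and spawning a new one corresponds to a cold start. The sketch does not reproduce any cloud provider's API.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], Any]

# Hypothetical signing key held by the authentication service.
SECRET = b"demo-secret"


def _sign(user: str) -> str:
    return hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()


def issue_token(user: str) -> str:
    """Authentication service: issue a token of the (hypothetical) form '<user>:<hmac>'."""
    return f"{user}:{_sign(user)}"


def verify_token(token: str) -> bool:
    """Check that the token signature matches the user it names."""
    user, _, sig = token.rpartition(":")
    return hmac.compare_digest(sig.encode(), _sign(user).encode())


@dataclass
class Instance:
    handler: Handler
    busy: bool = False


@dataclass
class Gateway:
    """Toy API gateway: routes events to functions, reusing idle (warm) instances."""
    functions: Dict[str, Handler] = field(default_factory=dict)
    pool: Dict[str, List[Instance]] = field(default_factory=dict)
    cold_starts: int = 0

    def register(self, trigger: str, handler: Handler) -> None:
        # Step 1: the developer pushes a function and binds it to a triggering event.
        self.functions[trigger] = handler
        self.pool[trigger] = []

    def _acquire(self, trigger: str) -> Instance:
        # Step 3: reuse an idle instance if one exists; otherwise spawn a new one.
        for inst in self.pool[trigger]:
            if not inst.busy:
                return inst
        self.cold_starts += 1
        inst = Instance(self.functions[trigger])
        self.pool[trigger].append(inst)
        return inst

    def handle(self, trigger: str, token: str, event: Dict[str, Any]) -> Any:
        # Step 2: an event (e.g., an HTTP request) reaches the gateway.
        if not verify_token(token):
            raise PermissionError("invalid security token")
        inst = self._acquire(trigger)
        inst.busy = True
        try:
            # Step 4: the function result is returned to the requester.
            return inst.handler(event)
        finally:
            inst.busy = False


if __name__ == "__main__":
    gw = Gateway()
    gw.register("POST /temperature", lambda e: {"celsius": (e["f"] - 32) * 5 / 9})
    token = issue_token("sensor-42")
    print(gw.handle("POST /temperature", token, {"f": 212}))  # {'celsius': 100.0}
    print(gw.handle("POST /temperature", token, {"f": 32}))   # {'celsius': 0.0}
    print(gw.cold_starts)  # 1: the second call reused the idle instance
```

Because the two calls are sequential, the first instance is idle again when the second event arrives. For that reason, only one instance is ever spawned.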

2.2. Serverless Technologies for the Internet of Things

- Resource footprint;

- Startup time;

- Supported network protocols for function interactions;

- Application portability;

- Security.

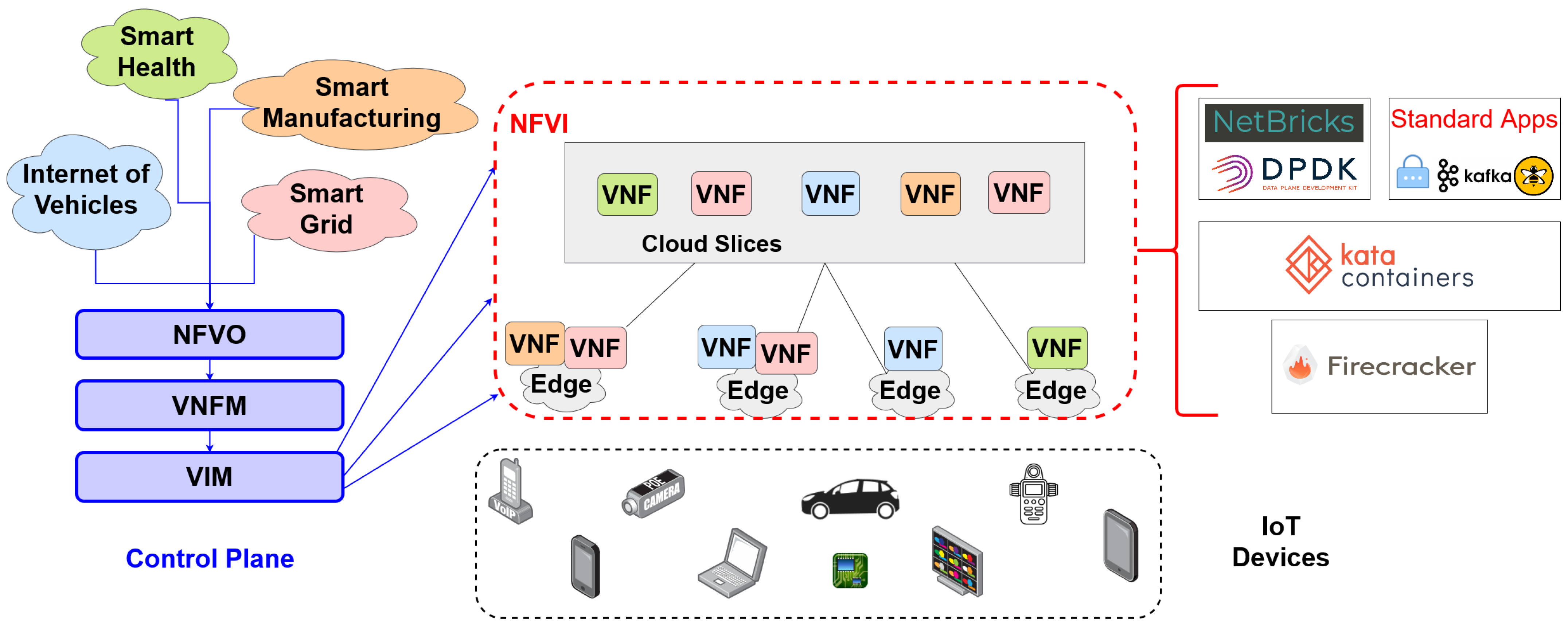

3. Key Internet of Things Scenarios

3.1. Internet of Vehicles

3.2. Smart Manufacturing

3.3. Smart Health

3.4. Smart Grid

4. New Virtualization Solutions for Network Functions

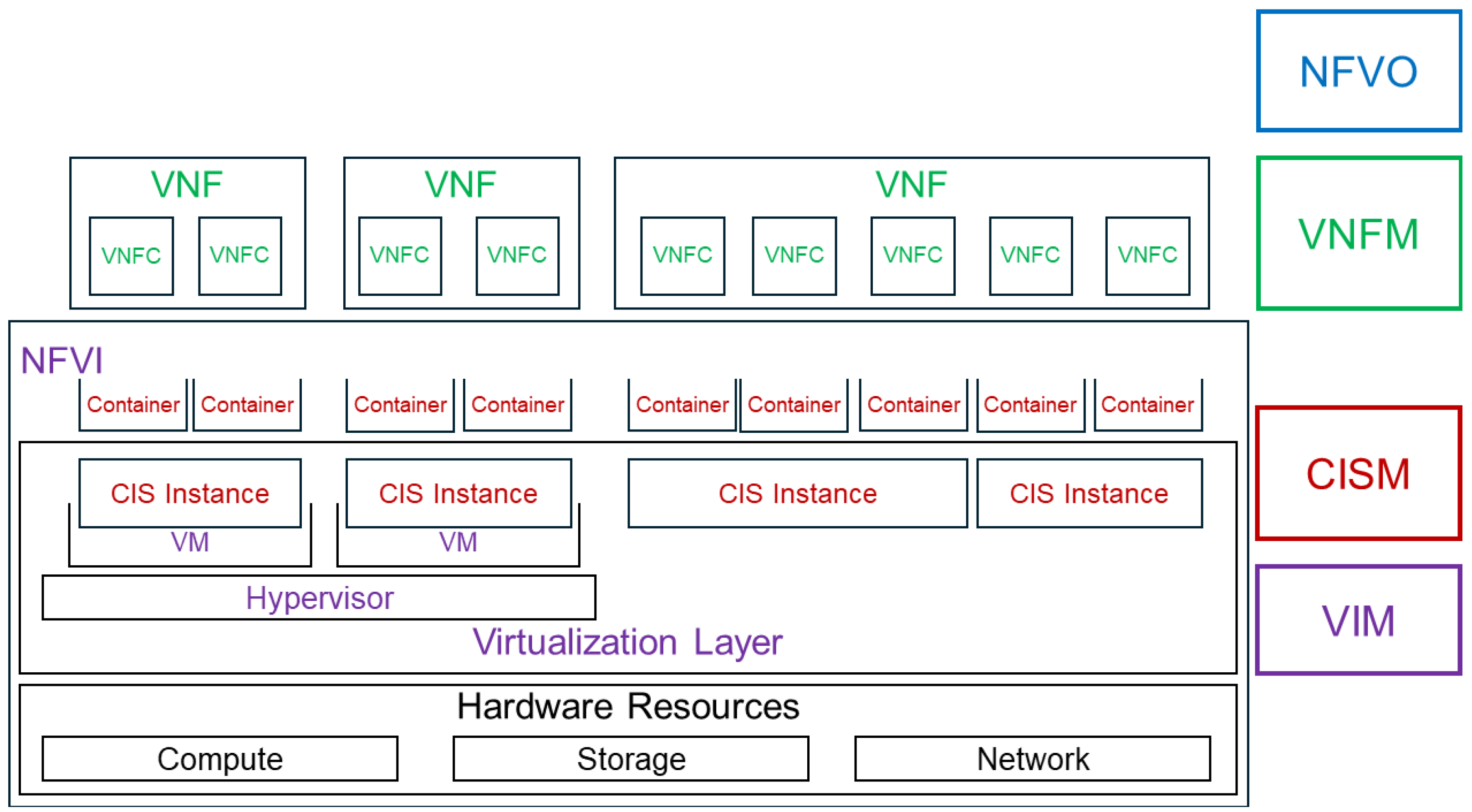

- CISM (container infrastructure service management) is the function that manages the CIS (container infrastructure service), a service offered by the NFVI that provides the runtime environment for one or more container virtualization technologies. In turn, the CIS is exposed by one or more CIS clusters. CNFs are deployed and managed on CIS instances and use the container cluster networks implemented in the CIS clusters [30].

- CCM (CIS cluster management) manages the CIS clusters, providing lifecycle, configuration, performance, and fault management [30].

- CIR (container image registry) manages OS container images.
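The relationships among CISM, CCM, CIS clusters, and CIR described above can be summarized in a deliberately simplified Python model. All class and method names here are hypothetical, and so are the cluster, network, and image names. They do not correspond to the interfaces defined in the ETSI specifications. CCM is reduced to cluster creation and deletion. A CNF deployment is modeled as placing the CNF on a cluster, using an image from the CIR and one of the cluster's container networks.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class CISCluster:
    """A CIS cluster: exposes the CIS and hosts container cluster networks."""
    name: str
    networks: Set[str]


@dataclass
class CIR:
    """Container image registry: stores OS container images."""
    images: Set[str] = field(default_factory=set)


@dataclass
class CCM:
    """CIS cluster management, reduced here to cluster creation and deletion."""
    clusters: Dict[str, CISCluster] = field(default_factory=dict)

    def create_cluster(self, name: str, networks: Set[str]) -> CISCluster:
        cluster = CISCluster(name, set(networks))
        self.clusters[name] = cluster
        return cluster

    def delete_cluster(self, name: str) -> None:
        self.clusters.pop(name, None)


@dataclass
class CISM:
    """CIS management: deploys CNFs onto clusters using images from the CIR."""
    ccm: CCM
    cir: CIR
    deployments: Dict[str, List[str]] = field(default_factory=dict)

    def deploy_cnf(self, cnf: str, image: str, cluster: str, network: str) -> None:
        if image not in self.cir.images:
            raise LookupError(f"image {image!r} is not in the CIR")
        target = self.ccm.clusters.get(cluster)
        if target is None:
            raise LookupError(f"unknown CIS cluster {cluster!r}")
        if network not in target.networks:
            raise LookupError(f"network {network!r} is not available in {cluster!r}")
        self.deployments.setdefault(cluster, []).append(cnf)


if __name__ == "__main__":
    ccm = CCM()
    ccm.create_cluster("edge-1", {"cnf-net"})
    cism = CISM(ccm, CIR({"firewall:1.0"}))
    cism.deploy_cnf("firewall-cnf", "firewall:1.0", "edge-1", "cnf-net")
    print(cism.deployments)  # {'edge-1': ['firewall-cnf']}
```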

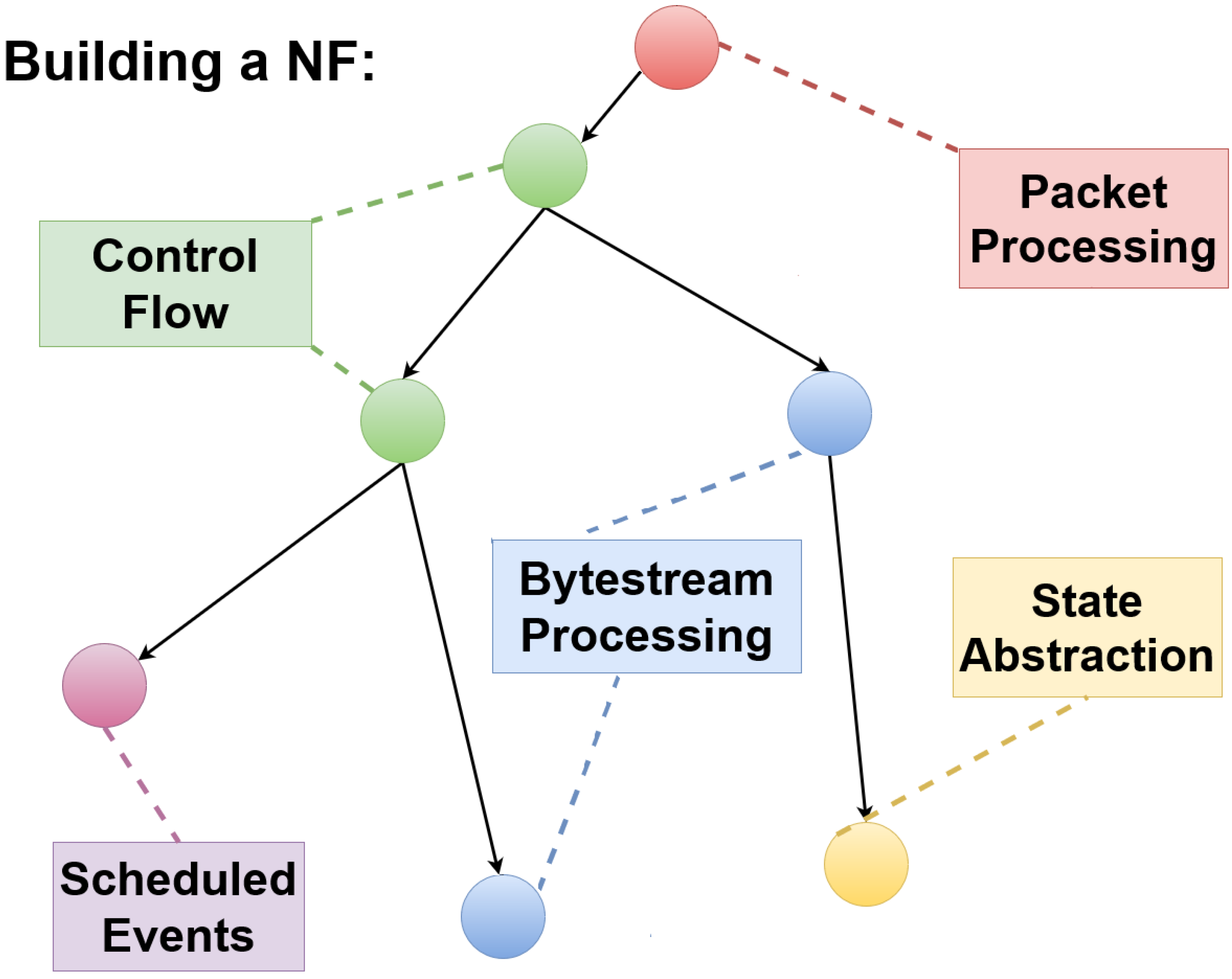

4.1. A Network Function Virtualization Specialized Framework: NetBricks

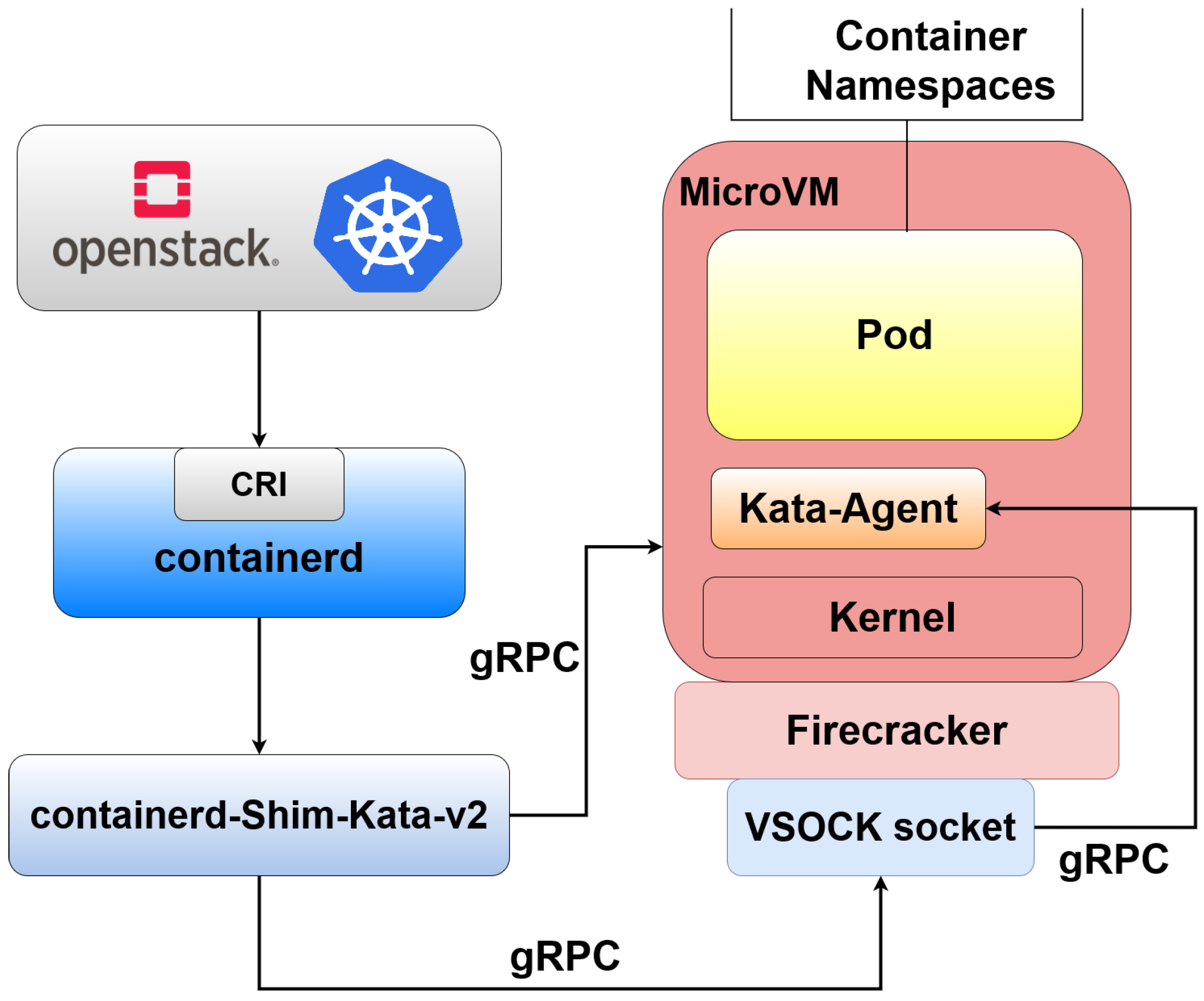

4.2. Nesting Virtualization with Kata Containers

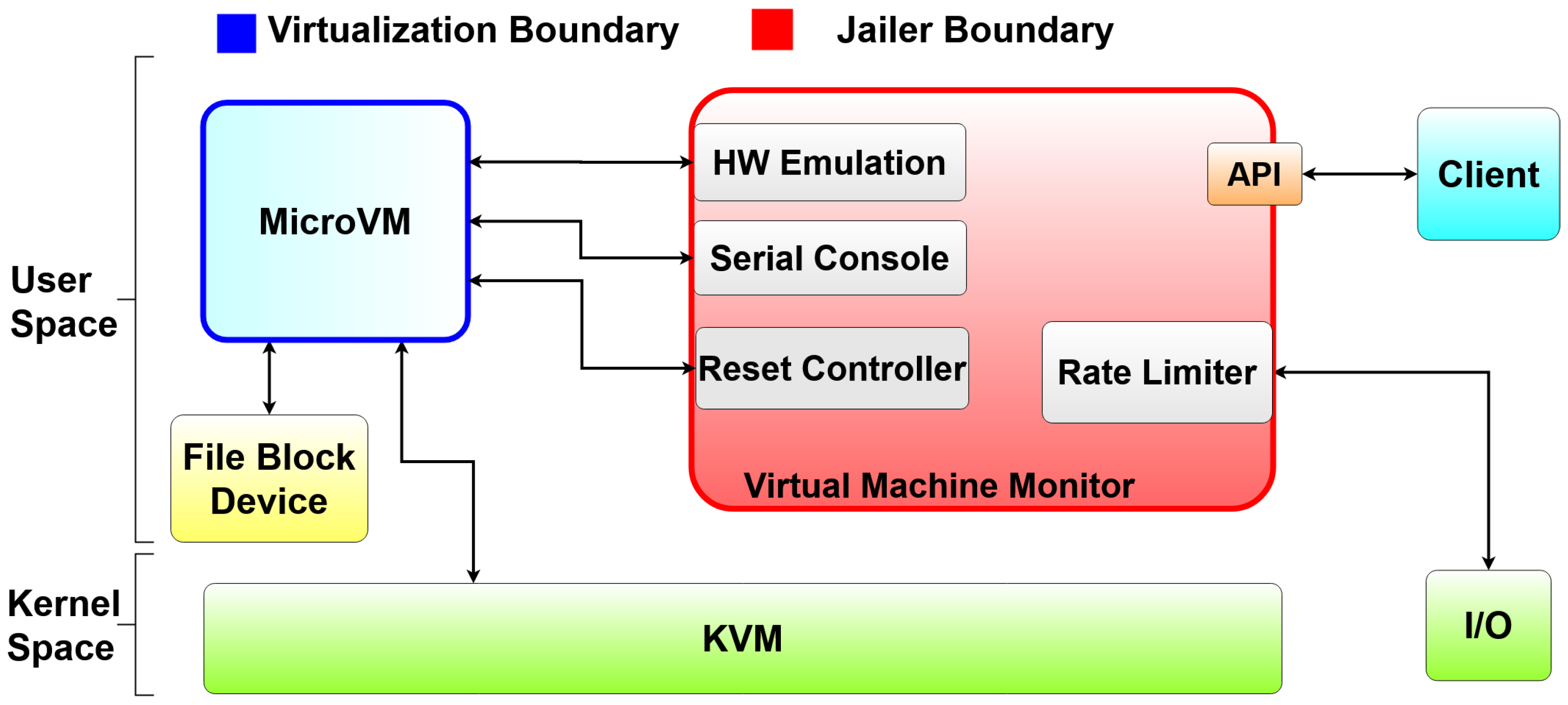

4.3. A Speed Focused Virtual Machine Manager: Firecracker

5. Performance Evaluation

5.1. Internet of Things Virtual Network Functions Requirements

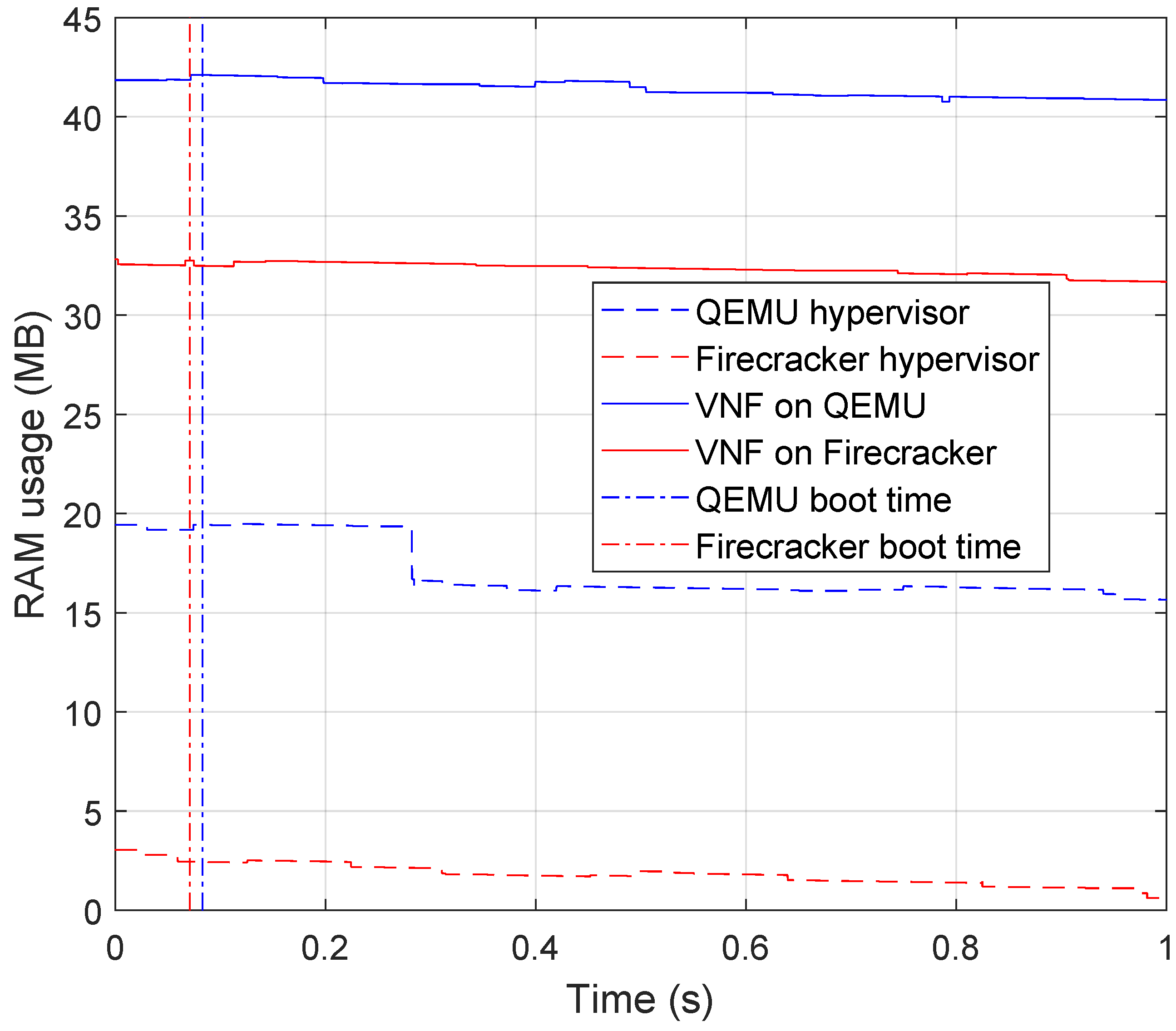

5.2. Experimental Results

5.2.1. NetBricks Virtual Network Function Experiment

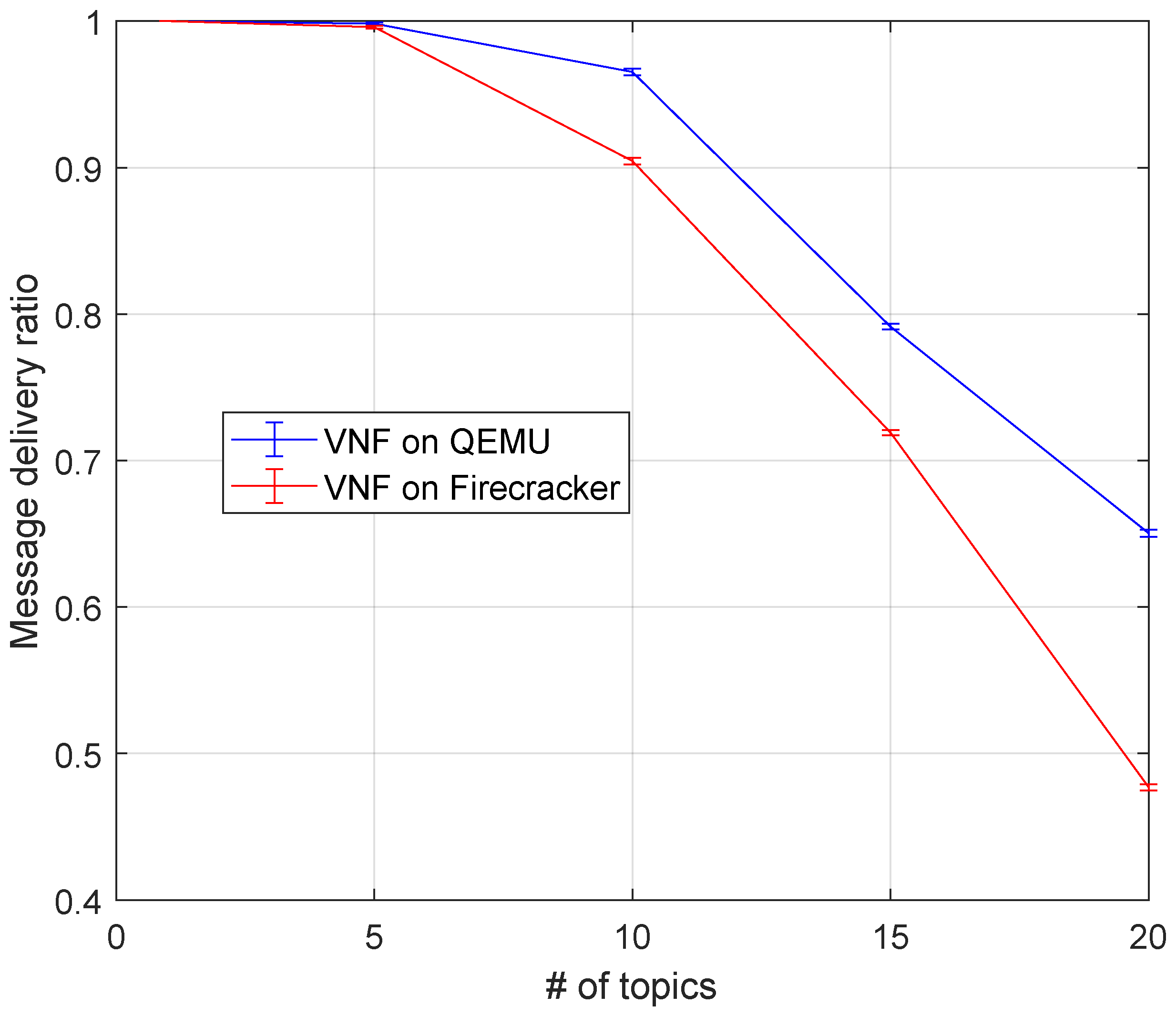

5.2.2. Kafka Broker Virtual Network Function Experiment

- I/O message rate: The handled throughput normalized to the publication rate, that is, the fraction of traffic that is correctly handled by a VNF. A Kafka message is considered undelivered if the broker does not return the corresponding ACK message to the Sangrenel client before the timeout expires.

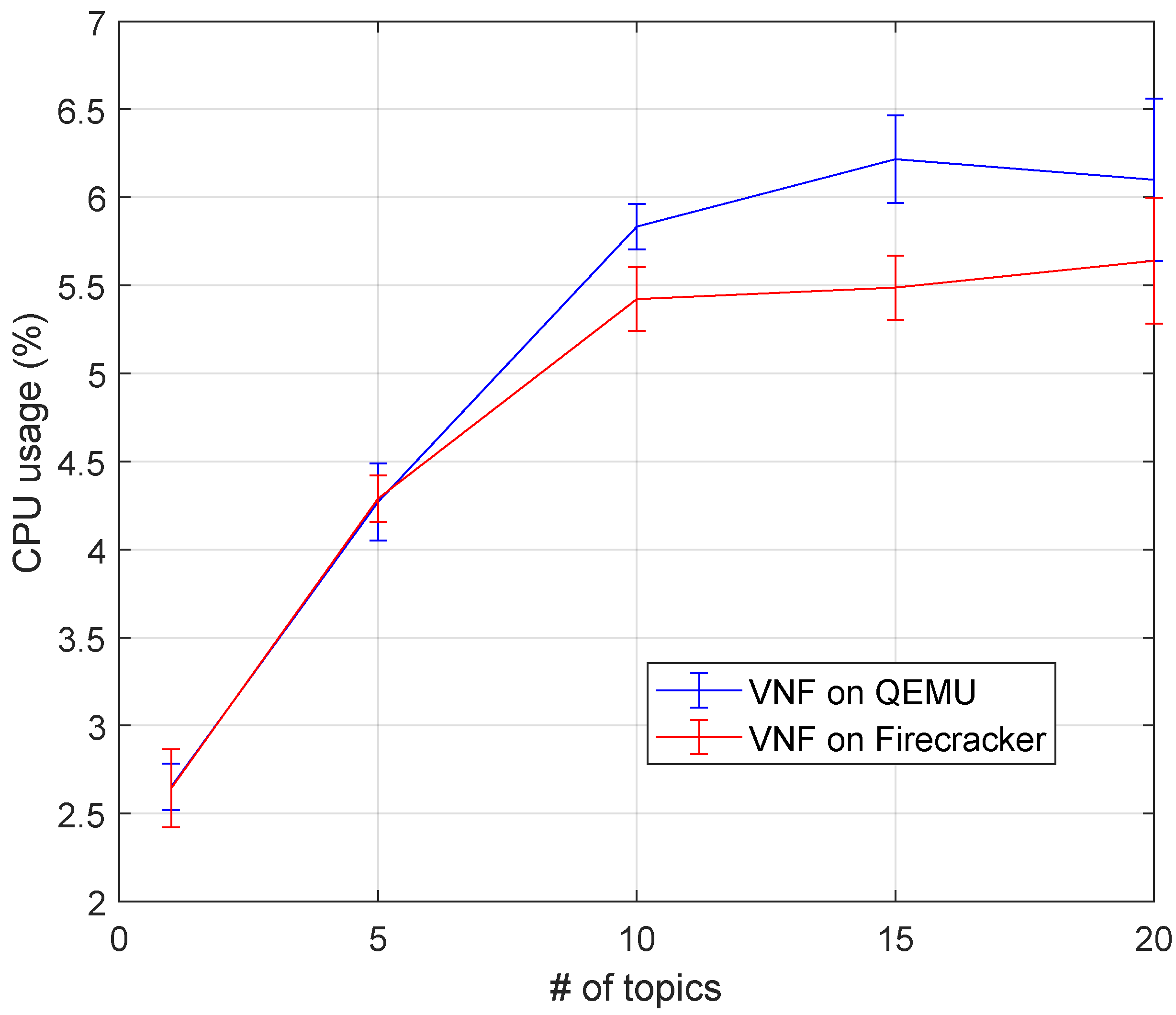

- CPU consumption: The percentage of a CPU core consumed by the VNF, measured on Server #1 using a CPU collector based on the mpstat command.

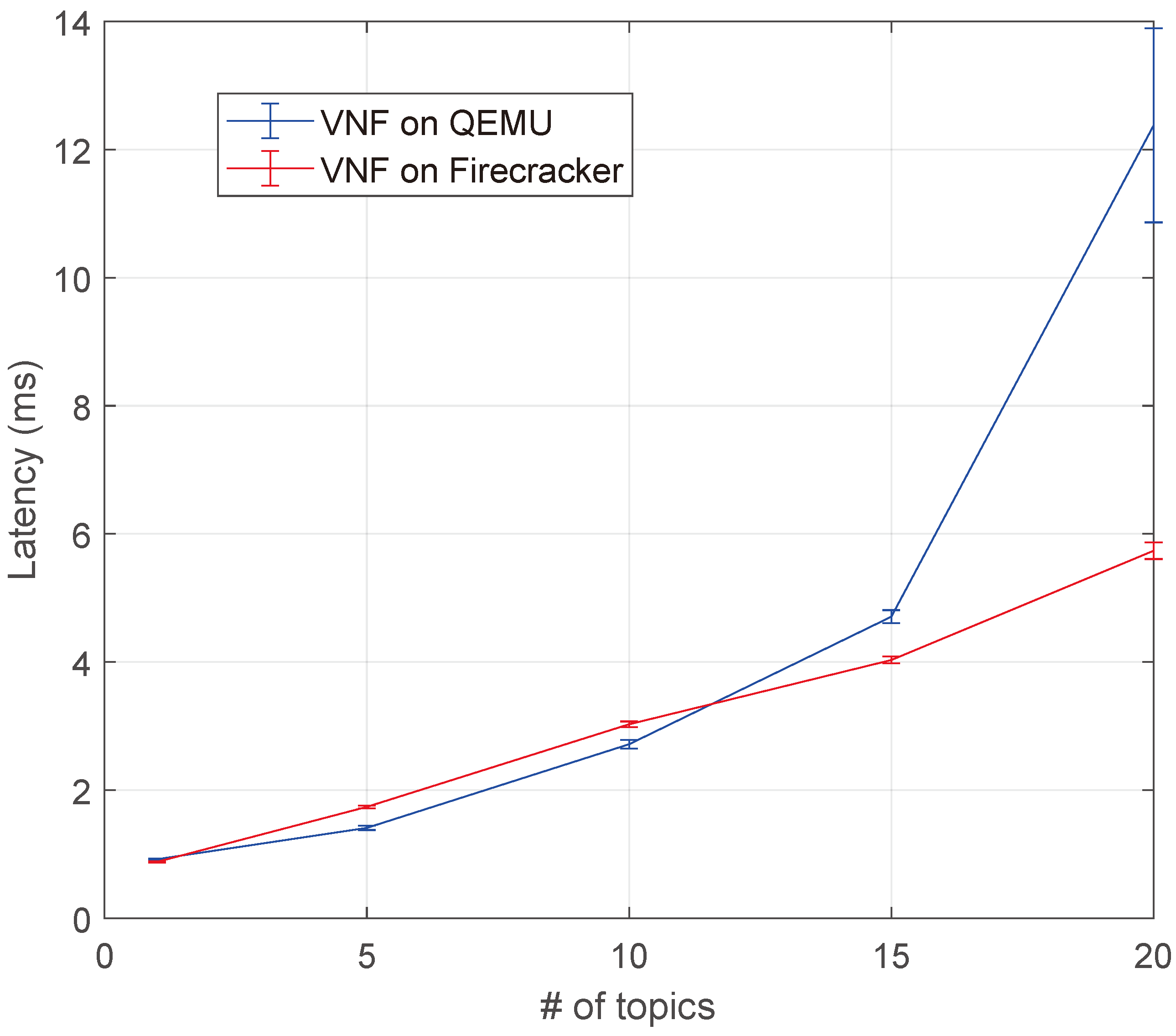

- Batch write latency (or simply latency): The time the client spends waiting for an ACK message from the Kafka broker. The Kafka client and broker run on two servers in the same rack, connected to the same 10 Gbps LAN, so the message propagation time is negligible. The measured latency is therefore a good estimate of the service response time. To minimize latency, the batch size was set to one Kafka message in our experiments.

- Kafka broker VNF, running inside a VM implemented with Ubuntu OS;

- Kafka broker VNF, running in a Docker container inside the same VM;

- Kafka broker VNF, running in a Kata container inside a Firecracker microVM.
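The I/O message rate and batch write latency defined above can both be derived from a per-message client log. The following Python sketch shows one way to compute them. Several details are assumptions and are not taken from Sangrenel. The `Record` log format is hypothetical. Times are expressed in milliseconds. An ACK that arrives exactly at the timeout is counted as delivered.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Record:
    """One published Kafka message as seen by the client (times in milliseconds)."""
    sent_ms: float
    acked_ms: Optional[float]  # None if no ACK was ever received


def delivered(r: Record, timeout_ms: float) -> bool:
    # Assumption: an ACK arriving exactly at the timeout still counts as delivered.
    return r.acked_ms is not None and r.acked_ms - r.sent_ms <= timeout_ms


def io_message_rate(records: List[Record], timeout_ms: float) -> float:
    """Handled throughput normalized to the publication rate: delivered / published."""
    if not records:
        return 0.0
    return sum(delivered(r, timeout_ms) for r in records) / len(records)


def write_latencies(records: List[Record], timeout_ms: float) -> List[float]:
    """Per-message ACK waiting time (batch size 1), over delivered messages only."""
    return [r.acked_ms - r.sent_ms for r in records if delivered(r, timeout_ms)]


if __name__ == "__main__":
    log = [
        Record(0.0, 2.0),
        Record(100.0, 103.0),
        Record(200.0, None),     # never acknowledged
        Record(300.0, 1500.0),   # ACK after the 1000 ms timeout
    ]
    print(io_message_rate(log, timeout_ms=1000.0))        # 0.5
    print(mean(write_latencies(log, timeout_ms=1000.0)))  # 2.5
```

Late ACKs are excluded from the latency list in the same way as from the rate. Under this choice, both metrics describe the same set of delivered messages.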

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3GPP | 3rd Generation Partnership Project |
| API | Application Programming Interface |
| C-V2X | Cellular Vehicle-to-Everything |
| CCM | CIS Cluster Management |
| CIR | Container Image Registry |
| CIS | Container Infrastructure Service |
| CISM | Container Infrastructure Service Management |
| CNF | Cloud-Native Network Function |
| CPU | Central Processing Unit |
| CRI | Container Runtime Interface |
| DPDK | Data Plane Development Kit |
| E2E | End-to-End |
| eMBB | Enhanced Mobile Broadband |
| eMTC | Enhanced Machine-Type Communications |
| FaaS | Function-as-a-Service |
| gRPC | General-Purpose Remote Procedure Call |
| IoT | Internet of Things |
| KVM | Kernel-Based Virtual Machine |
| MANO | Management and Orchestration |
| MEC | Multi-Access Edge Computing |
| mMTC | Massive Machine-Type Communications |
| NF | Network Function |
| NFV | Network Function Virtualization |
| NFVI | Network Function Virtualization Infrastructure |
| NFVO | Network Function Virtualization Orchestrator |
| OCI | Open Container Initiative |
| QoS | Quality of Service |
| RAM | Random Access Memory |
| uRLLC | Ultra-Reliable Low Latency Communications |
| VIM | Virtual Infrastructure Manager |
| VM | Virtual Machine |
| VMM | Virtual Machine Manager |
| VNF | Virtual Network Function |
| VNFC | Virtual Network Function Component |
| VNFM | Virtual Network Function Manager |
| VSOCK | VM Sockets |

References

- Vaezi, M.; Azari, A.; Khosravirad, S.R.; Shirvanimoghaddam, M.; Azari, M.M.; Chasaki, D.; Popovski, P. Cellular, Wide-Area, and Non-Terrestrial IoT: A Survey on 5G Advances and the Road Toward 6G. IEEE Commun. Surv. Tutor. 2022, 24, 1117–1174. [Google Scholar] [CrossRef]

- Veedu, S.N.K.; Mozaffari, M.; Hoglund, A.; Yavuz, E.A.; Tirronen, T.; Bergman, J.; Wang, Y.P.E. Toward Smaller and Lower-Cost 5G Devices with Longer Battery Life: An Overview of 3GPP Release 17 RedCap. IEEE Commun. Stand. Mag. 2022, 6, 84–90. [Google Scholar] [CrossRef]

- Wang, C.X.; You, X.; Gao, X.; Zhu, X.; Li, Z.; Zhang, C.; Wang, H.; Huang, Y.; Chen, Y.; Haas, H.; et al. On the Road to 6G: Visions, Requirements, Key Technologies, and Testbeds. IEEE Commun. Surv. Tutor. 2023, 25, 905–974. [Google Scholar] [CrossRef]

- Mijumbi, R.; Serrat, J.; Gorricho, J.L.; Bouten, N.; De Turck, F.; Boutaba, R. Network Function Virtualization: State-of-the-Art and Research Challenges. IEEE Commun. Surv. Tutor. 2016, 18, 236–262. [Google Scholar] [CrossRef]

- Aditya, P.; Akkus, I.E.; Beck, A.; Chen, R.; Hilt, V.; Rimac, I.; Satzke, K.; Stein, M. Will Serverless Computing Revolutionize NFV? Proc. IEEE 2019, 107, 667–678. [Google Scholar] [CrossRef]

- Milojicic, D. The Edge-to-Cloud Continuum. Computer 2020, 53, 16–25. [Google Scholar] [CrossRef]

- Wang, L.; Li, M.; Zhang, Y.; Ristenpart, T.; Swift, M. Peeking Behind the Curtains of Serverless Platforms. In Proceedings of the 2018 USENIX Annual Technical Conference (USENIX ATC 18), Boston, MA, USA, 11–13 July 2018; pp. 133–146. [Google Scholar]

- Raith, P.; Nastic, S.; Dustdar, S. Serverless Edge Computing—Where We Are and What Lies Ahead. IEEE Internet Comput. 2023, 27, 50–64. [Google Scholar] [CrossRef]

- Benedetti, P.; Femminella, M.; Reali, G.; Steenhaut, K. Reinforcement Learning Applicability for Resource-Based Auto-scaling in Serverless Edge Applications. In Proceedings of the 2022 IEEE International Conference on Pervasive Computing and Communications Workshops and Other Affiliated Events (PerCom Workshops), Pisa, Italy, 21–25 March 2022; pp. 674–679. [Google Scholar] [CrossRef]

- Cassel, G.A.S.; Rodrigues, V.F.; da Rosa Righi, R.; Bez, M.R.; Nepomuceno, A.C.; André da Costa, C. Serverless computing for Internet of Things: A systematic literature review. Future Gener. Comput. Syst. 2022, 128, 299–316. [Google Scholar] [CrossRef]

- Wang, I.; Liri, E.; Ramakrishnan, K.K. Supporting IoT Applications with Serverless Edge Clouds. In Proceedings of the 2020 IEEE 9th International Conference on Cloud Networking (CloudNet), Virtual Conference, 9–11 November 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Benedetti, P.; Femminella, M.; Reali, G.; Steenhaut, K. Experimental Analysis of the Application of Serverless Computing to IoT Platforms. Sensors 2021, 21, 928. [Google Scholar] [CrossRef]

- Djemame, K.; Parker, M.; Datsev, D. Open-source Serverless Architectures: An Evaluation of Apache OpenWhisk. In Proceedings of the 2020 IEEE/ACM 13th International Conference on Utility and Cloud Computing (UCC), Leicester, UK, 7–10 December 2020; pp. 329–335. [Google Scholar] [CrossRef]

- Persson, P.; Angelsmark, O. Kappa: Serverless IoT deployment. In Proceedings of the 2nd International Workshop on Serverless Computing, Las Vegas, NV, USA, 11–15 December 2017; pp. 16–21. [Google Scholar] [CrossRef]

- López Escobar, J.J.; Díaz-Redondo, R.P.; Gil-Castiñeira, F. Unleashing the power of decentralized serverless IoT dataflow architecture for the Cloud-to-Edge Continuum: A performance comparison. Ann. Telecommun. 2024. [Google Scholar] [CrossRef]

- Mistry, C.; Stelea, B.; Kumar, V.; Pasquier, T. Demonstrating the Practicality of Unikernels to Build a Serverless Platform at the Edge. In Proceedings of the 2020 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Bangkok, Thailand, 14–17 December 2020; pp. 25–32. [Google Scholar] [CrossRef]

- Pinto, D.; Dias, J.; Ferreira, H.S. Dynamic Allocation of Serverless Functions in IoT Environments. In Proceedings of the 2018 IEEE 16th International Conference on Embedded and Ubiquitous Computing (EUC), Los Alamitos, CA, USA, 29–31 October 2018; pp. 1–8. [Google Scholar] [CrossRef]

- Ferry, N.; Dautov, R.; Song, H. Towards a Model-Based Serverless Platform for the Cloud-Edge-IoT Continuum. In Proceedings of the 2022 22nd IEEE International Symposium on Cluster, Cloud and Internet Computing (CCGrid), Taormina, Italy, 16–19 May 2022; pp. 851–858. [Google Scholar] [CrossRef]

- Containerd—An Industry-Standard Container Runtime with an Emphasis on Simplicity, Robustness and Portability. Available online: https://containerd.io/ (accessed on 2 March 2024).

- Mahmoudi, N.; Khazaei, H. Performance Modeling of Serverless Computing Platforms. IEEE Trans. Cloud Comput. 2022, 10, 2834–2847. [Google Scholar] [CrossRef]

- Sultan, S.; Ahmad, I.; Dimitriou, T. Container Security: Issues, Challenges, and the Road Ahead. IEEE Access 2019, 7, 52976–52996. [Google Scholar] [CrossRef]

- Huawei Technologies. 5G Unlocks a World of Opportunities: Top Ten 5G Use Cases; Huawei Technologies: Shenzhen, China, 2017; Available online: https://www-file.huawei.com/-/media/corporate/pdf/mbb/5g-unlocks-a-world-of-opportunities-v5.pdf?la=en (accessed on 14 July 2020).

- Kumar, D.; Rammohan, A. Revolutionizing Intelligent Transportation Systems with Cellular Vehicle-to-Everything (C-V2X) technology: Current trends, use cases, emerging technologies, standardization bodies, industry analytics and future directions. Veh. Commun. 2023, 43, 100638. [Google Scholar] [CrossRef]

- Thalanany, S.; Hedman, P. Description of Network Slicing Concept; NGMN Alliance: Düsseldorf, Germany, 2016. [Google Scholar]

- Zhang, S. An Overview of Network Slicing for 5G. IEEE Wirel. Commun. 2019, 26, 111–117. [Google Scholar] [CrossRef]

- Ahmed, T.; Alleg, A.; Marie-Magdelaine, N. An Architecture Framework for Virtualization of IoT Network. In Proceedings of the IEEE Conference on Network Softwarization NetSoft, Paris, France, 24–28 June 2019. [Google Scholar]

- Zhang, Y.; Crowcroft, J.; Li, D.; Zhang, C.; Li, H.; Wang, Y.; Yu, K.; Xiong, Y.; Chen, G. KylinX: A Dynamic Library Operating System for Simplified and Efficient Cloud Virtualization. In Proceedings of the 2018 USENIX Conference on Usenix Annual Technical Conference, Boston, MA, USA, 11–13 July 2018; pp. 173–186. [Google Scholar]

- Talbot, J.; Pikula, P.; Sweetmore, C.; Rowe, S.; Hindy, H.; Tachtatzis, C.; Atkinson, R.; Bellekens, X. A Security Perspective on Unikernels. In Proceedings of the 2020 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Dublin, Ireland, 15–19 June 2020; pp. 1–7. [Google Scholar] [CrossRef]

- Cai, X.; Deng, H.; Lingli Deng, A.E.; Gao, S.; Nicolas, A.M.D.; Nakajima, Y.; Pieczerak, J.; Triay, J.; Wang, X.; Xie, B.; et al. Evolving NFV towards the Next Decade; ETSI White Paper No. 54; ETSI: Sophia Antipolis, France, 2023. [Google Scholar]

- ETSI GS NFV 006 V4.4.1 (2022-12); Network Functions Virtualisation (NFV) Release 4; Management and Orchestration; Architectural Framework Specification. European Telecommunications Standards Institute (ETSI): Sophia Antipolis, France, 2022.

- ETSI GS NFV-SOL 018 V4.3.1; Network Functions Virtualisation (NFV) Release 4; Protocols and Data Models; Profiling Specification of Protocol and Data Model Solutions for OS Container Management and Orchestration. European Telecommunications Standards Institute (ETSI): Sophia Antipolis, France, 2022.

- ETSI GS NFV-IFA 040 V4.2.1; Network Functions Virtualisation (NFV) Release 4; Management and Orchestration; Requirements for Service Interfaces and Object Model for OS Container Management and Orchestration Specification. European Telecommunications Standards Institute (ETSI): Sophia Antipolis, France, 2021.

- AWS Whitepaper. ETSI NFVO Compliant Orchestration in the Kubernetes/Cloud Native World; AWS: Seattle, WA, USA, 2022. [Google Scholar]

- Femminella, M.; Palmucci, M.; Reali, G.; Rengo, M. Attribute-Based Management of Secure Kubernetes Cloud Bursting. IEEE Open J. Commun. Soc. 2024, 5, 1276–1298. [Google Scholar] [CrossRef]

- ETSI GR NFV-IFA 029 V3.3.1; Network Functions Virtualisation (NFV) Release 3; Architecture; Report on the Enhancements of the NFV Architecture Towards “Cloud-Native” and “PaaS”; European Telecommunications Standards Institute (ETSI): Sophia Antipolis, France, 2019.

- Kata Containers Architecture. 2019. Available online: https://github.com/kata-containers/documentation/blob/master/design/architecture.md (accessed on 31 January 2024).

- Agache, A.; Brooker, M.; Iordache, A.; Liguori, A.; Neugebauer, R.; Piwonka, P.; Popa, D.M. Firecracker: Lightweight Virtualization for Serverless Applications. In Proceedings of the USENIX NSDI 20, Santa Clara, CA, USA, 25–27 February 2020; pp. 419–434. [Google Scholar]

- Panda, A.; Han, S.; Jang, K.; Walls, M.; Ratnasamy, S.; Shenker, S. NetBricks: Taking the V out of NFV. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016. [Google Scholar]

- Yu, Z. The Application of Kata Containers in Baidu AI Cloud. 2019. Available online: http://katacontainers.io/baidu (accessed on 31 January 2024).

- Firecracker Design. 2018. Available online: https://github.com/firecracker-microvm/firecracker/blob/master/docs/design.md (accessed on 22 January 2020).

- OpenStack Foundation. Open Collaboration Evolving the container landscape with Kata Containers and Firecracker. In Proceedings of the Open Infrastructure Summit 2019, Denver, CO, USA, 29 April–1 May 2019. [Google Scholar]

- Wang, X. Kata Containers: Virtualization for Cloud-Native; Medium: San Francisco, CA, USA, 2019; Available online: https://medium.com/kata-containers/kata-containers-virtualization-for-cloud-native-f7b11ead951 (accessed on 31 January 2024).

- Halili, R.; Yousaf, F.Z.; Slamnik-Krijestorac, N.; Yilma, G.M.; Liebsch, M.; Berkvens, R.; Weyn, M. Self-Correcting Algorithm for Estimated Time of Arrival of Emergency Responders on the Highway. IEEE Trans. Veh. Technol. 2023, 72, 340–356. [Google Scholar] [CrossRef]

- Yu, Y.; Lee, S. Remote Driving Control With Real-Time Video Streaming Over Wireless Networks: Design and Evaluation. IEEE Access 2022, 10, 64920–64932. [Google Scholar] [CrossRef]

- Apache Kafka. Available online: https://kafka.apache.org/ (accessed on 31 January 2024).

- Alquiza, J. Sangrenel. 2020. Available online: https://github.com/jamiealquiza/sangrenel (accessed on 31 January 2024).

- Kata Containers—The Speed of Containers, The Security of VMs. Available online: https://katacontainers.io (accessed on 31 January 2024).

- QEMU—A Generic and Open Source Machine Emulator and Virtualizer. Available online: https://www.qemu.org/ (accessed on 31 January 2024).

- Kingman, J.F.C. The single server queue in heavy traffic. Math. Proc. Camb. Philos. Soc. 1961, 57, 902–904. [Google Scholar] [CrossRef]

- Kingman, J.F.C. The first Erlang century—And the next. Queueing Syst. 2009, 63, 3–12. [Google Scholar] [CrossRef]

- Slamnik-Kriještorac, N.; Yousaf, F.Z.; Yilma, G.M.; Halili, R.; Liebsch, M.; Marquez-Barja, J.M. Edge-Aware Cloud-Native Service for Enhancing Back Situation Awareness in 5G-Based Vehicular Systems. IEEE Trans. Veh. Technol. 2024, 73, 660–677. [Google Scholar] [CrossRef]

- Coronado, E.; Raviglione, F.; Malinverno, M.; Casetti, C.; Cantarero, A.; Cebrián-Márquez, G.; Riggio, R. ONIX: Open Radio Network Information eXchange. IEEE Commun. Mag. 2021, 59, 14–20. [Google Scholar] [CrossRef]

| Solution | Resource Footprint | Startup Time | Supported Protocols | Application Portability | Security |
|---|---|---|---|---|---|
| VMs | Large: full operating system virtualization | Order of minutes | Any | Complete | Isolation granted, but potentially large attack surfaces |
| Traditional containers * | Medium: multiple abstraction layers and context switches | Order of seconds [20] | Any | Complete | Isolation issues, caused by the use of a shared kernel (see also Ref. [21]) |
| Calvin [14] | Limited memory overhead and optimized network functions | Potentially low | HTTP only | Only apps using HTTP and specifically built for Calvin | N/A |
| Wasm [15] | Low footprint | Fast scaling, but execution can be slowed down [10] | Any | Portable code written in high-level languages | Sandboxed environment |
| Unikernel [16] | Very low, designed for resource-constrained devices | Fast startup time (≪100 ms) | Any (but required libraries may need to be rewritten) | Complete app rewrite is needed | Potentially dangerous kernel functions can be invoked from applications |
| Proposed | Limited memory overhead and CPU consumption (shown in Section 5.2) | ≤100 ms (shown in Section 5.2) | Any | Complete: optimized VNFs written with NetBricks or standard containerized apps | Minimal attack surface: Kata container and microVM isolation from Firecracker |

| Architecture | Load Balancer | C-V2X Server | Video Streaming Server | Firewall |
|---|---|---|---|---|
| NetBricks | Limited memory overhead and optimized network functions | High processing rate (>25 Mbps) [38] | High processing rate (>25 Mbps) [38] | Memory and packet isolation and optimized network functions |
| Kata Containers | Reduced agent and VMM overhead (1 MB, 10 MB) [42] | VM-like isolation, reduced agent and VMM overhead (1 MB, 10 MB) [42] | Reduced agent and VMM overhead (1 MB, 10 MB) [42] | VM-like isolation |
| Firecracker | High density creation rate (150 microVM/s) [37] | Fast scaling and fast startup time (125 ms) [37] | Fast startup time (125 ms) [37] | Minimal attack surface |

| Configuration | Server #1 | Server #2 |
|---|---|---|
| CPU | Intel(R) Xeon(R) CPU E5-2640 v4 @ 2.40 GHz | Intel(R) Xeon(R) CPU E5-2650 v3 @ 2.30 GHz |
| RAM | 128 GB @ 2133 MT/s | 64 GB @ 2133 MT/s |
| Disk | 280 GB (145 MB/s write speed) | 280 GB (145 MB/s write speed) |
| Network interfaces | 1 × 10 Gbps, 4 × 1 Gbps | 1 × 10 Gbps, 4 × 1 Gbps |
| VNF packages | Kafka broker [45] and ZooKeeper; NetBricks packet generator: packet-test | Kafka publisher, based on Sangrenel [46] |
| Virtualization software | Kata Containers [36,47] as serverless container runtime with Docker; Firecracker [37,40] or QEMU [48] as microVM hypervisors | |

| Deployment Model for Kafka Broker VNF | Amount of CPU Power |
|---|---|
| VM | 0.045 |
| Docker in a VM | 0.054 |
| Kata + Firecracker | 0.034 |
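The table values can be turned into relative CPU savings with a few lines of arithmetic. This sketch assumes that all three values share the same unit, which the table header does not state. It computes how much less CPU the Kata + Firecracker deployment uses than each of the other two models. The function name `relative_saving` is hypothetical.

```python
# CPU values copied from the table above (assumed to share the same unit).
cpu = {"VM": 0.045, "Docker in a VM": 0.054, "Kata + Firecracker": 0.034}


def relative_saving(baseline: float, candidate: float) -> float:
    """Fraction of the baseline CPU that the candidate deployment avoids."""
    return (baseline - candidate) / baseline


for name in ("VM", "Docker in a VM"):
    saving = relative_saving(cpu[name], cpu["Kata + Firecracker"])
    print(f"Kata + Firecracker vs {name}: {saving:.1%} less CPU")
# Kata + Firecracker vs VM: 24.4% less CPU
# Kata + Firecracker vs Docker in a VM: 37.0% less CPU
```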

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Femminella, M.; Reali, G. Implementing Internet of Things Service Platforms with Network Function Virtualization Serverless Technologies. Future Internet 2024, 16, 91. https://doi.org/10.3390/fi16030091
