Abstract

Predicting the energy consumption of buildings plays a critical role in helping utility providers, users, and facility managers minimize energy waste and optimize operational efficiency. However, such prediction is difficult because of the limited availability of labeled data for training Artificial Intelligence (AI) models, since acquiring these data is often expensive or restricted by privacy protection. To overcome the scarcity of balanced labeled data, semi-supervised learning exploits abundant unlabeled data. Motivated by this, we propose a semi-supervised learning approach to train an AI model. As the AI model, we employ the Belief Rule-Based Expert System (BRBES) because of its domain knowledge-based prediction and its uncertainty handling mechanism. To improve the accuracy of the BRBES, we use the initial labeled data to optimize its parameters and structure through evolutionary learning until its accuracy reaches a confidence threshold. For semi-supervised learning, we employ a self-training model in which the BRBES assigns pseudo-labels to unlabeled data generated through weak and strong augmentation. We then reoptimize the BRBES with the labeled and pseudo-labeled data, resulting in a semi-supervised BRBES. Finally, this semi-supervised BRBES explains its prediction to the end user in nontechnical human language, fostering a relationship of trust. To validate the proposed semi-supervised explainable BRBES framework, a case study based in Skellefteå, Sweden, is used to predict and explain the energy consumption of buildings. Experimental results show that the semi-supervised BRBES achieves 20 ± 0.71% higher accuracy than state-of-the-art semi-supervised machine learning models. Moreover, the semi-supervised BRBES framework turns out to be 29 ± 0.67% more explainable than these models.

1. Introduction

Building energy consumption contributes significantly to climate change [1]. Approximately 40% of the world’s energy is consumed by the building sector [2]. Furthermore, significant population growth has raised the demand for energy in buildings [3]. Therefore, to hasten the shift to sustainable building practices, buildings must be energy efficient. Predictive analytics of building energy consumption is an effective method of achieving this energy efficiency [4]. Based on the insights provided by such predictions, utility companies, consumers, and facility managers can take the necessary steps to make their buildings energy efficient [5]. Such efficiency is crucial to reducing energy costs and increasing the energy security of buildings [6]. Moreover, energy prediction for buildings aids the implementation of urban greening policies [7].

Artificial Intelligence (AI) models, such as machine learning and deep learning models, offer meaningful predictions by discovering hidden patterns in historical energy consumption data [8]. These AI models learn from a large amount of labeled energy consumption data through supervised learning to offer such predictions [9]. However, obtaining labeled data for building energy consumption prediction is often challenging due to the high cost and effort involved in collecting accurate ground-truth measurements [10]. Many energy datasets lack sufficient labels because of sensor deployment limitations, data privacy concerns [11], and manual annotation burdens [10,12]. This scarcity of supervised labeled data hinders the development of robust and scalable AI models for energy consumption prediction in diverse building environments. A labeled dataset can also be imbalanced. Hence, using unlabeled energy data to train AI models can overcome the scarcity, inaccessibility, and imbalance issues of labeled energy data. Training an AI model with a small number of labeled data points and a large number of unlabeled data points is called semi-supervised learning (SSL) [13,14,15,16]. SSL learns knowledge from a small amount of labeled data to meaningfully label a large amount of unlabeled data. Thus, SSL facilitates the training of an AI model by capitalizing on the abundance of unlabeled data, resulting in higher prediction accuracy [12]. SSL methods are divided into five groups [17]: (a) deep generative methods, (b) consistency regularization methods, (c) graph-based methods, (d) pseudo-labeling methods, and (e) hybrid methods. Semi-supervised Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) are examples of deep generative methods. Although such methods generate new training examples, achieving optimal results for both the generative task and the downstream task is difficult. 
Virtual Adversarial Training (VAT) [16] is an example of consistency regularization methods. As a consistency-based SSL method, a novel Semi-supervised Universal Graph Representation Learning (SUGRL) framework was proposed by [18]. This framework performs graph classification in semi-supervised settings when graphs originate from diverse domains with heterogeneous structures. For this purpose, it learns domain-invariant representations through contrastive self-supervised learning and adversarial domain alignment. This method significantly improves generalization across domains using limited labeled data and abundant unlabeled graphs. Another consistency-based SSL method, Rationale-Informed Graph Neural Network (RIGNN), was proposed by [19]. This method identifies and leverages task-relevant subgraphs (rationales) to enhance classification robustness. By disentangling discriminative information from noisy or out-of-distribution data, RIGNN improves generalization under open-world and label-sparse settings. This work underscores the importance of interpretability and selective representation in advancing semi-supervised graph learning. Although these consistency-based methods construct consistency constraints with clear assumptions, data augmentation and perturbation methods may not be fully reliable [17]. Graph Neural Network (GNN) and Autoencoder-based models fall under graph-based methods. However, conventional graph structures are inherently limited to modeling pairwise relationships, which may not fully capture the higher-order dependencies often present in complex real-world data. To address this limitation, Hypergraph-enhanced Dual Semi-supervised Graph Classification (HDSGC) has been proposed as a powerful alternative that incorporates both graph and hypergraph representations to better capture complex and high-order relationships among data points [20]. 
HDSGC utilizes hyperedges to represent multi-node interactions, enriching the contextual information available for learning. It introduces a dual semi-supervised learning strategy that jointly optimizes node classification on both graph and hypergraph views, leveraging the complementary strengths of each structure. This method achieves improved performance on graph classification tasks with limited labeled data by enhancing representation learning through multi-relational modeling. Although such methods capture more information through graphs, the relationships they construct between training samples may not always be accurate [17]. Disagreement-based models and self-training models fall under pseudo-labeling methods. As an iterative self-training strategy, SemiEvol (Semi-supervised Fine-tuning for LLM Adaptation) [20] leverages both labeled and high-confidence pseudo-labeled data to progressively fine-tune Large Language Models (LLMs). By integrating teacher–student paradigms with dynamic data selection, SemiEvol facilitates robust adaptation across diverse tasks under semi-supervised conditions. Although these methods produce pseudo-labels for unlabeled examples using labeled examples, such pseudo-labels may be noisy [17]. Interpolation Consistency Training (ICT) [21], MixMatch [22], ReMixMatch [23], and FixMatch [24] are examples of hybrid methods. A hybrid SSL framework, Semi-supervised Active Learning for Graph-level Classification [25], combines semi-supervised learning with active learning to label graph-structured data efficiently. It strategically selects the most informative graph instances for labeling, thereby maximizing learning performance with minimal annotation cost. By leveraging both labeled and unlabeled graphs during training, the method balances exploration and exploitation to enhance graph-level classification. 
This approach demonstrates state-of-the-art results by integrating uncertainty estimation with structure-aware sampling strategies. Another hybrid SSL method, proposed by [26], combines curriculum learning with pseudo-labeling to improve node classification in graph-based SSL. This method iteratively selects pseudo-labels for unlabeled nodes based on both confidence and the complexity of the node’s features, forming a hybrid curriculum. This curriculum-guided strategy helps the model progressively learn from easier to harder instances, leading to better generalization and faster convergence. This approach outperforms traditional pseudo-labeling methods by incorporating a structured learning process that adapts to the evolving uncertainty of the model [26]. Although such hybrid methods offer more robustness and efficiency, they tend to be larger because they integrate various learning strategies. Moreover, based on output, SSL is of four types [27]: semi-supervised classification, semi-supervised clustering, semi-supervised dimension reduction, and semi-supervised regression. The energy consumption of a building is a continuous value that needs to be predicted [28]. However, among these four categories, research on semi-supervised regression, which predicts the value of a continuous variable, is relatively scarce [29].

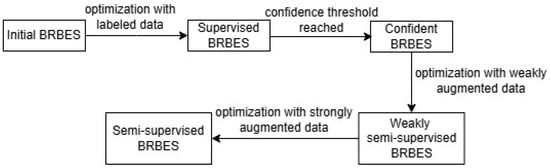

Prediction can be obtained in two ways: a data-driven approach and a knowledge-driven approach [30]. A data-driven approach learns from data to predict output. Machine learning, a data-driven approach, extracts actionable insight from training data through statistical techniques [31]. Deep learning models also learn hidden representations by applying representation learning methods to preprocessed raw data [32]. Various data-driven approaches, such as Random Forest (RF) [33], Light Gradient Boosting Machine (LightGBM) [34], Automated Machine Learning (AutoML) [35], and Artificial Neural Network (ANN) [36], are employed to predict the energy consumption of buildings. However, a data-driven approach can produce misleading output due to a biased or erroneous training dataset [37]. On the other hand, a knowledge-driven approach obtains a predictive output based on knowledge, which is represented by rules [38]. This approach is constituted by an expert system consisting of two components: a knowledge base and an inference engine. The knowledge base represents knowledge of the relevant domain or application area, and the inference engine performs reasoning over this knowledge base to infer predictive output. Examples of knowledge-driven approaches include the Belief Rule-Based Expert System (BRBES), MYCIN [39], and fuzzy logic. A fuzzy logic algorithm was proposed by [40] to predict short-term energy consumption in individual buildings. Their fuzzy logic system comprises fuzzification, rule definition, inference, and defuzzification steps, enabling effective day-to-day consumption predictions. Another study combined a fuzzy rule-based prediction system with multi-objective evolutionary optimization to predict residential building energy consumption [41]. The BRBES was employed by [37] to predict the energy consumption of buildings in a transparent manner. They trained the BRBES with evolutionary optimization using supervised labeled data to improve its accuracy. 
A knowledge-driven approach is not dependent on training data [37]. Its knowledge base is formulated by experts with relevant domain knowledge. Hence, a knowledge-driven approach is free from the risk of bias or errors in a training dataset [37]. Property managers are also more inclined to trust a prediction that is based on knowledge of the energy consumption domain than one derived from an ad hoc training dataset [37]. Moreover, domain knowledge can capture the complex interplay among the underlying physical and operational factors of a building that influence its energy use [42]. Such factors include building design, occupancy patterns, HVAC (Heating, Ventilation, and Air Conditioning) systems, and external environmental conditions. Therefore, we opt for the knowledge-driven approach to predict the energy consumption of buildings in this paper. However, predictive energy consumption output can become erroneous due to wrong input data caused by human error or blank input data caused by human ignorance, resulting in uncertainties [43]. Such uncertainties are caused by imprecise or incomplete human knowledge [44,45]. Therefore, these uncertainties have to be addressed properly to uphold prediction accuracy. The belief rule base of the BRBES, formulated based on a belief structure, can capture uncertainties and nonlinear causal relationships [46]. This rule base, formulated by domain experts, represents knowledge of the energy consumption domain. When some or all of the input data are unavailable, the BRBES updates the belief degrees of its rule base [47]. The BRBES obtains its predictive output by applying Evidential Reasoning (ER) to the rule base [8,48]. Thus, the BRBES outperforms other knowledge-driven approaches in handling uncertainties, especially those caused by ignorance [47]. Hence, as the knowledge-driven approach, we employ the BRBES in this research to obtain predictive energy consumption output. 
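To make the inference step concrete, the following is a minimal Python sketch of belief rule-based inference, assuming the standard formulation from the BRB literature: each crisp input is matched against its referential values, rule activation weights are computed from the matching degrees and rule weights, and the activated rules are aggregated with the analytical ER algorithm. The attributes, referential values, belief degrees, and consequent utilities (in kWh) below are hypothetical placeholders, not the rule base used in this work, and all attribute weights are assumed to be 1.

```python
from math import prod
from typing import Dict, List, Sequence, Tuple

# A rule maps a tuple of referential-value indices (one per antecedent attribute)
# to (rule weight theta_k, belief degrees over the consequent grades).
Rules = Dict[Tuple[int, ...], Tuple[float, List[float]]]


def input_matching(x: float, refs: Sequence[float]) -> List[float]:
    """Distribute a crisp input over its two neighbouring referential values
    (refs sorted ascending); inputs outside the range are clipped."""
    degrees = [0.0] * len(refs)
    if x <= refs[0]:
        degrees[0] = 1.0
    elif x >= refs[-1]:
        degrees[-1] = 1.0
    else:
        for i in range(len(refs) - 1):
            if refs[i] <= x <= refs[i + 1]:
                share = (refs[i + 1] - x) / (refs[i + 1] - refs[i])
                degrees[i], degrees[i + 1] = share, 1.0 - share
                break
    return degrees


def activation_weights(inputs, refs_per_attr, rules: Rules) -> Dict[Tuple[int, ...], float]:
    """w_k proportional to theta_k times the product of matching degrees (attribute weights assumed 1)."""
    matches = [input_matching(x, refs) for x, refs in zip(inputs, refs_per_attr)]
    raw = {k: theta * prod(matches[i][r] for i, r in enumerate(k))
           for k, (theta, _) in rules.items()}
    total = sum(raw.values())
    return {k: v / total for k, v in raw.items()}


def evidential_reasoning(weights: List[float], beliefs: List[List[float]]) -> List[float]:
    """Analytical ER aggregation of the activated rules' belief distributions."""
    n = len(beliefs[0])
    sums = [sum(b) for b in beliefs]
    prod_j = [prod(w * b[j] + 1 - w * s for w, b, s in zip(weights, beliefs, sums))
              for j in range(n)]
    prod_rest = prod(1 - w * s for w, s in zip(weights, sums))
    prod_w = prod(1 - w for w in weights)
    mu = 1.0 / (sum(prod_j) - (n - 1) * prod_rest)
    return [mu * (p - prod_rest) / (1 - mu * prod_w) for p in prod_j]


def predict(inputs, refs_per_attr, rules: Rules, utilities: Sequence[float]):
    """Return the crisp prediction (utility-weighted beliefs) and the aggregated beliefs."""
    w = activation_weights(inputs, refs_per_attr, rules)
    keys = list(rules)
    beliefs = evidential_reasoning([w[k] for k in keys], [rules[k][1] for k in keys])
    return sum(u * b for u, b in zip(utilities, beliefs)), beliefs


# Hypothetical rule base: outdoor temperature (degC) and floor area (m^2) as antecedents;
# consequent grades Low/Medium/High with assumed utilities in kWh.
REFS = [[-20.0, 20.0], [100.0, 1000.0]]
UTILITIES = [50.0, 200.0, 400.0]
RULES: Rules = {
    (0, 0): (1.0, [0.2, 0.7, 0.1]),  # cold, small
    (0, 1): (1.0, [0.0, 0.2, 0.8]),  # cold, large
    (1, 0): (1.0, [0.9, 0.1, 0.0]),  # mild, small
    (1, 1): (1.0, [0.3, 0.6, 0.1]),  # mild, large
}

if __name__ == "__main__":
    kwh, grades = predict([0.0, 550.0], REFS, RULES, UTILITIES)
    print(f"predicted consumption: {kwh:.1f} kWh, beliefs: {[round(b, 3) for b in grades]}")
```

Because every rule's belief distribution is complete in this toy rule base, the aggregated beliefs also sum to one. Incomplete distributions (for example, under ignorance) would leave some belief unassigned, which this sketch does not treat separately when computing the crisp output.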
By representing energy consumption domain knowledge through a rule base, the BRBES offers higher explainability [37]. However, it has lower accuracy than conventional machine learning models. To improve its accuracy, we jointly optimize both the parameters and the structure of the BRBES with the Joint Optimization of Parameter and Structure (JOPS) algorithm [49]. With this joint optimization using supervised labeled data, the initial BRBES transitions to the supervised BRBES. We continue to optimize this supervised BRBES until the confidence level of its accuracy reaches a certain threshold. After reaching this accuracy threshold, the supervised BRBES transitions to the “confident BRBES”. We then integrate SSL with this confident BRBES to train it with a larger synthetic dataset. For the SSL method, we employ the self-training model [50] in this research. As part of the self-training model, we perform data augmentation to synthetically create new unlabeled data by transforming the existing labeled data points [51]. Through data augmentation, we add more training examples of energy consumption to address overfitting and poor generalization [52]. In this study, we employ two types of data augmentation: “weak” and “strong”. Weak augmentation involves mild transformation of the original data points within a predefined range to increase data variability and generalization. Strong augmentation, on the other hand, involves more complex transformation to create challenging training examples that force the prediction model to learn more robust features. First, we apply weak augmentation to synthetically generate new unlabeled energy consumption data that are evenly distributed within a predefined range for each antecedent attribute of the BRBES. This ensures proper diversity and density of the unlabeled data, resulting in a balanced unlabeled energy dataset. We then employ the confident BRBES to assign pseudo-labels to the weakly augmented unlabeled data. 
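One way to obtain weakly augmented data that are evenly distributed within each antecedent attribute's predefined range is stratified (Latin-hypercube-style) sampling, sketched below in Python. The function name `weak_augment`, the example ranges, and the choice of stratified sampling itself are assumptions made for illustration; the exact augmentation procedure used in this work may differ.

```python
import random
from typing import List, Sequence, Tuple


def weak_augment(ranges: Sequence[Tuple[float, float]], n_samples: int,
                 seed: int = 0) -> List[List[float]]:
    """Hypothetical weak augmentation: generate n_samples unlabeled points whose
    values are evenly spread over each attribute's predefined range.

    Each range is split into n_samples equal-width strata and exactly one value is
    drawn uniformly inside every stratum, so every part of every attribute's range
    is covered at the same density.
    """
    rng = random.Random(seed)
    columns = []
    for lo, hi in ranges:
        width = (hi - lo) / n_samples
        column = [lo + (k + rng.random()) * width for k in range(n_samples)]
        rng.shuffle(column)  # pair strata randomly across attributes
        columns.append(column)
    return [list(row) for row in zip(*columns)]


if __name__ == "__main__":
    # Assumed ranges, e.g. outdoor temperature (degC) and floor area (m^2).
    for point in weak_augment([(-20.0, 20.0), (100.0, 1000.0)], n_samples=5):
        print([round(v, 1) for v in point])
```

Compared with plain uniform sampling, stratification guarantees that no sub-range of an attribute is left empty, which matches the stated goal of a balanced unlabeled dataset.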
With this self-training model, we ensure that the pseudo-labels are assigned by the BRBES alone. This makes the pseudo-labels representative of domain knowledge while handling the associated data uncertainties. We then optimize the confident BRBES with both the labeled and the weakly augmented pseudo-labeled energy data. If the accuracy increases, the confident BRBES transitions to the “weakly semi-supervised BRBES”. We then apply strong augmentation to generate more complex unlabeled energy data. To assign pseudo-labels to these strongly augmented unlabeled data, we apply the weakly semi-supervised BRBES. We then optimize this weakly semi-supervised BRBES with the labeled data as well as the weakly and strongly augmented pseudo-labeled energy data. If the accuracy increases through this optimization, the weakly semi-supervised BRBES transitions to the “semi-supervised BRBES”. With this semi-supervised BRBES, we provide energy consumption predictions with improved accuracy by overcoming the scarcity and imbalance of supervised labeled data. After obtaining the predictive energy consumption output with this semi-supervised BRBES, we provide an explanation and a counterfactual of this output to the end user through a user interface. The explanation and counterfactual are prepared in nontechnical human language so that any layperson can understand them. Formulated based on domain knowledge, the explanation and counterfactual create a relationship of trust between our model and the human user [37]. Thus, we propose a semi-supervised explainable BRBES framework that accurately predicts the continuous value of the energy consumption of buildings in kilowatt-hours (kWh) despite the scarcity of labeled data, while providing an explanation and a counterfactual based on knowledge of the energy consumption domain. In other words, we perform semi-supervised regression while also explaining the regression output. 
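The staged transitions described above (confident, weakly semi-supervised, semi-supervised) can be summarized as an accept-if-better loop. The Python sketch below assumes that "accuracy increases" is judged by a lower RMSE on a held-out validation set, and that `reoptimize` stands in for JOPS-based optimization. The names `strong_augment`, `reoptimize`, and the toy slope model `fit_slope` are hypothetical placeholders, not components of the actual framework.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, ...]
Model = Callable[[Point], float]
Dataset = List[Tuple[Point, float]]


def rmse(model: Model, data: Dataset) -> float:
    """Root-mean-square error, used here as the (assumed) accuracy criterion."""
    return (sum((model(x) - y) ** 2 for x, y in data) / len(data)) ** 0.5


def staged_self_training(
    model: Model,                              # the confident model
    labeled: Dataset,
    validation: Dataset,                       # assumed held-out set for judging accuracy
    weak_pool: Sequence[Point],                # weakly augmented unlabeled points
    strong_augment: Callable[[Point], Point],  # hypothetical strong augmentation
    reoptimize: Callable[[Dataset], Model],    # hypothetical stand-in for JOPS
) -> Tuple[Model, str]:
    best_err = rmse(model, validation)

    # Stage 1: the confident model pseudo-labels the weakly augmented points.
    weak_pl = [(x, model(x)) for x in weak_pool]
    candidate = reoptimize(labeled + weak_pl)
    err = rmse(candidate, validation)
    if err >= best_err:  # no accuracy gain: keep the confident model
        return model, "confident"
    model, best_err = candidate, err  # now the weakly semi-supervised model

    # Stage 2: the weakly semi-supervised model pseudo-labels strongly augmented points.
    strong_pl = [(x, model(x)) for x in map(strong_augment, weak_pool)]
    candidate = reoptimize(labeled + weak_pl + strong_pl)
    if rmse(candidate, validation) >= best_err:
        return model, "weakly semi-supervised"
    return candidate, "semi-supervised"


def fit_slope(data: Dataset) -> Model:
    """Toy reoptimizer: least-squares slope through the origin on the first feature."""
    a = sum(x[0] * y for x, y in data) / sum(x[0] ** 2 for x, _ in data)
    return lambda x: a * x[0]


if __name__ == "__main__":
    validation = [((float(t),), 2.0 * t) for t in range(1, 6)]
    labeled = [((1.0,), 2.0), ((2.0,), 4.0)]
    confident = lambda x: 1.5 * x[0]
    weak_pool = [(3.0,), (4.0,)]
    final_model, stage = staged_self_training(
        confident, labeled, validation, weak_pool, lambda x: (1.1 * x[0],), fit_slope)
    print(stage, rmse(final_model, validation))
```

Each stage only replaces the current model when it improves the assumed accuracy criterion, so a stage that adds noisy pseudo-labels falls back to the previous model instead of degrading it.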
We evaluate the accuracy, explainability, and counterfactual of our proposed semi-supervised framework with relevant metrics. In terms of accuracy, our proposed framework outperforms the supervised BRBES through semi-supervised learning. This framework also offers higher accuracy than conventional machine learning models by dealing with data uncertainties and optimizing itself with JOPS. In terms of explainability, this framework outperforms machine learning models by providing domain knowledge-based explanation in human-understandable language. Moreover, we assess our proposed framework’s balance between explainability and accuracy with the Belief Rule-Based adaptive Balance Determination (BRBaBD) algorithm [37]. To achieve our research objective, we focus on the following four research questions in this paper:

- How to address the scarcity of supervised labeled training data of energy consumption? We employ SSL to overcome the scarcity of labeled energy data with weakly and strongly augmented pseudo-labeled data.

- What is the benefit of predicting with the BRBES? The benefit is prediction based on knowledge of the energy domain while dealing with data uncertainties.

- Why and how to integrate a self-training model with the BRBES? For the SSL model, we employ self-training so that pseudo-labels of unlabeled energy data are obtained with the BRBES only. A mathematical model is proposed to combine self-training with the BRBES.

- How to make the output of the BRBES trustworthy to a human user? We make the predictive energy consumption output of the semi-supervised BRBES trustworthy by explaining it in nontechnical human language to a user through a user interface. This explanation is based on knowledge of the concerned domain.

The remainder of the paper is structured as follows: Relevant works are presented in Section 2. We then demonstrate our proposed semi-supervised explainable BRBES framework in Section 3. In Section 4, we present our experimental results. These results are discussed in Section 5. We conclude the paper and present our future research direction in Section 6.

2. Related Work

In this section, existing SSL methods, techniques for labeling unlabeled data, and the types of data and predictive output are briefly covered. Then, we explain the motivation of the research.

2.1. SSL Methods

Sahoo et al. [11] employed an SSL approach to predict COVID-19 cases accurately from digital chest X-rays and Computed Tomography (CT) scans. Their proposed COVIDCon algorithm consisted of data augmentation (weak and strong), consistency regularization, and multi-contrastive learning. However, the COVIDCon algorithm’s classification is based on multi-contrastive learning, with no consideration of knowledge related to the COVID-19 domain. Moreover, this algorithm classifies images instead of performing regression over numerical input values. COVIDCon also provides no explanation in support of its predictive diagnosis of COVID-19. Sahoo et al. [12] proposed a new multi-contrastive semi-supervised learning algorithm, “MultiCon”, to classify drug function into 12 categories by analyzing the features of two-dimensional images of drug chemical structures. This algorithm used a multi-contrastive loss with consistency regularization to predict drug function. However, its multi-contrastive learning approach distinguishes image features through clustering and does not take into account domain knowledge of drug function. Moreover, this algorithm is restricted to image classification rather than numerical data regression. “MultiCon” also does not explain the rationale behind its predictive output on drug function. Li et al. [53] proposed a new semi-supervised learning methodology, Mixup Contrastive Mixup (MCoM), to address the imbalanced data problem in tabular cyber security datasets. To tackle this imbalance, MCoM employed triplet mixup data augmentation on the minority (vulnerable) class. The authors then used contrastive and feature reconstruction losses to train the encoder and the decoder, and a label propagation technique to pseudo-label a subset of the unlabeled data. For the predictor, they used a Multi-Layer Perceptron (MLP). However, an MLP is a data-driven approach, with no consideration of knowledge of the cyber security domain. 
MCoM performs classification of security data instead of regression. Moreover, MCoM does not explain its security classification output. Silva et al. [54] employed an evolutionary multi-objective algorithm, SPEA2, for semi-supervised training of a Least Squares Support Vector Machine (LSSVM) with both labeled and unlabeled data. However, LSSVM is a data-driven approach, with no consideration of domain knowledge. That study performs binary classification with LSSVM instead of regression. Moreover, LSSVM does not explain its classification output, resulting in a lack of explainability for the end user. Donyavi et al. [55] proposed a model to perform classification with K-nearest neighbor (KNN). For semi-supervised learning of KNN, they proposed a synthetic labeled data generation method called Diverse Training Dataset Generation based on a Multi-objective Optimization for Semi-Supervised Classification (DTGMO-SSC). However, KNN is a data-driven approach with no domain knowledge. Moreover, this method does not explain its classification output. Jin et al. [56] proposed Evolutionary Optimization-based Pseudo Labeling (EOPL) for semi-supervised soft sensor development of industrial processes. They employed Gaussian Process Regression (GPR) as the base learner. They enlarged the labeled training dataset by combining high-confidence pseudo-labeled data with the initial labeled data. Then, the GPR model was rebuilt with this enlarged dataset. They also extended EOPL to ensemble EOPL (EnEOPL) for enhanced prediction performance. However, GPR does not take domain knowledge into account. Moreover, EOPL and EnEOPL do not explain their predictive output. The diversity of the unlabeled data in the feature space is also not emphasized in this research. Gao et al. [57] proposed the Evolutionary Multi-Tasking Semi-Supervised Classification (EMT-SSC) method by combining a Support Vector Machine (SVM) with a modified Z-score. 
However, SVM does not take domain knowledge into account. In addition, EMT-SSC relies on fuzzy logic to label unlabeled data, whereas the BRBES outperforms fuzzy logic in dealing with uncertainties, especially those caused by ignorance [47]. The Z-score used by this method may not be reliable if the training dataset does not cover all possible data instances. EMT-SSC also performs classification rather than regression, so it does not provide continuous estimates of the target variable. Moreover, this method offers no explanation in support of its classification output. Cococcioni et al. [58] presented a semi-supervised-learning-aided evolutionary approach to enhance workplace safety. They used a semi-supervised learning approach to formulate the population for NSGA-II. Each chromosome of the population consisted of two classifiers: one classified the risk perception of a worker, and the other classified the level of caution of the same worker. Each classifier was implemented with an MLP, which is a data-driven approach without any domain knowledge. This framework neither performs regression nor explains its classification output to the end user.

2.2. Labeling the Unlabeled Data

Sahoo et al. [11] used both weak and strong augmentation to generate unlabeled data. For weak augmentation, they used a flip-shift strategy; for strong augmentation, they used a RandAugment-based strategy. They assigned a pseudo-label to the weakly augmented version of each unlabeled image. Then, using a cross-entropy loss, they enforced this high-confidence pseudo-label on the strongly augmented version of the same image. They enforced consistency regularization by combining the supervised cross-entropy loss of labeled images with this unsupervised loss. Thus, they ensured that the distribution of strongly augmented unlabeled images was consistent with that of labeled images. With contrastive learning, they maximized the similarity between augmented views of the same image. The “MultiCon” algorithm, proposed by [12], also utilized weakly augmented versions of drug chemical structure images to pseudo-label the corresponding strongly augmented versions of the same images. The MCoM methodology, proposed by [53], employed a triplet mixup data augmentation approach to generate synthetic numerical data for underrepresented samples of cyber security classes, resulting in a balanced dataset. The authors then used a label propagation technique to pseudo-label the unlabeled data. Silva et al. [54] formulated the assignment of labels to the unlabeled data as a multi-objective optimization problem. SPEA2 was used to generate the best combination of labels for the unlabeled training data by optimizing the parameters of LSSVM. In [55], the positions of the data generated with DTGMO-SSC were improved through a multi-objective evolutionary algorithm called Non-dominated Sorting Genetic Algorithm II (NSGA-II). This algorithm had two objectives: accuracy and density. The accuracy function eliminated outlier data, and the density function ensured appropriate distribution and diversity in the feature space. 
Simultaneous consideration of accuracy and diversity was the advantage of this method over existing ones. In [56], pseudo-labeling of the unlabeled data was formulated as an optimization problem in which the pseudo-labels served as the decision variables of a Genetic Algorithm. Then, the high-confidence pseudo-labeled data were combined with the initial labeled data to formulate an extended training dataset. In [57], unlabeled data were labeled based on fuzzy logic and the cluster assumption. In [58], semi-supervised learning consisted of two stages. In the first stage, each classifier was trained independently with its own supervised training data. In the second stage, training was continued for both classifiers with an unlabeled dataset, and the output of one classifier was used as a supervised labeled example for the other.
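The weak-to-strong pseudo-labeling scheme described for [11] closely resembles FixMatch-style training. The following Python sketch computes such a combined loss on class-probability vectors, assuming a fixed confidence threshold (0.95 here, a common FixMatch default rather than a value reported in [11]) and a weighting factor `lam`. The function name `fixmatch_style_loss` is hypothetical, and the contrastive term used by COVIDCon is omitted.

```python
import math
from typing import List, Sequence, Tuple


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of the target class (clamped for numerical safety)."""
    return -math.log(max(probs[label], 1e-12))


def fixmatch_style_loss(
    labeled: List[Tuple[Sequence[float], int]],  # (predicted probs, true label)
    weak_probs: List[List[float]],               # predictions on weakly augmented views
    strong_probs: List[List[float]],             # predictions on strongly augmented views
    threshold: float = 0.95,                     # assumed confidence threshold
    lam: float = 1.0,                            # assumed weight of the unsupervised term
) -> float:
    supervised = sum(cross_entropy(p, y) for p, y in labeled) / len(labeled)
    unsupervised = 0.0
    for weak, strong in zip(weak_probs, strong_probs):
        confidence = max(weak)
        if confidence >= threshold:
            pseudo_label = weak.index(confidence)  # hard pseudo-label from the weak view
            unsupervised += cross_entropy(strong, pseudo_label)  # enforced on the strong view
    unsupervised /= len(weak_probs)  # masked samples still count in the average
    return supervised + lam * unsupervised
```

Low-confidence weak predictions contribute nothing to the unsupervised term, which is how this kind of scheme limits the effect of noisy pseudo-labels.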

2.3. Type of Data and Predictive Output

The COVIDCon algorithm [11] classified two-dimensional images of chest X-rays and CT scans as COVID-19, pneumonia, or normal cases. The “MultiCon” algorithm, proposed by [12], classified two-dimensional images of drug chemical structures into 12 categories based on their therapeutic applications. MCoM, proposed by [53], classified numerical tabular security data as either positive (vulnerable case) or negative (nonvulnerable case). The evolutionary multi-objective approach based on SPEA2 in [54] was tested on a set of toy problems to evaluate its suitability for classification tasks. The DTGMO-SSC method, proposed by [55], was applied to 55 semi-supervised datasets from the KEEL and UCI machine learning repositories to perform classification. The effectiveness of the semi-supervised regression of the EOPL and EnEOPL methods, proposed by [56], was verified on the Tennessee Eastman (TE) chemical process and an industrial fed-batch chlortetracycline (CTC) fermentation process. In [57], numerical experiments were performed on two Multi-Objective Multi-Tasking Optimization (MO-MTO) benchmarks and a case study on a stationary gas turbine’s combustion processes to validate the performance of the proposed EMT-SSC algorithm. The effectiveness of the semi-supervised framework proposed by [58] was demonstrated on real-world data gathered from shoe manufacturing enterprises. For 130 out of 140 workers, this framework predicted both risk perception and caution level with good accuracy.

We illustrate the categorization of all of the aforementioned semi-supervised prediction models, with regard to their specifications and limitations, in Table 1.

Table 1.

Categorization of existing literature.

2.4. Motivation

Data scarcity presents a significant practical challenge in predicting building energy consumption, primarily due to the complexity and cost of acquiring high-quality labeled datasets. Factors such as limited sensor deployments [10], privacy concerns [11], and the labor-intensive nature of manual data annotation [10,12] contribute to this scarcity. This limitation hinders the development of robust predictive models, as insufficient data can lead to overfitting and reduced generalizability across diverse building types and operational conditions. To address this limitation, various recent SSL methods have been extensively explored, in which machine learning, deep learning, or the cluster assumption is employed as the base model to predict output. Such models use statistical learning algorithms or neural network architectures to discover hidden patterns in training data for prediction. These models neither contain knowledge of the relevant domain nor deal with uncertainties associated with input data. However, domain knowledge is particularly critical for building energy consumption prediction due to the complex interplay of various factors influencing energy use [42]. These factors include building design, occupancy patterns, HVAC systems, and external environmental conditions. Such factors cannot be fully captured from historical energy consumption data or building images [37]. Incorporating domain expertise enables transparent modeling by capturing the underlying physical and operational characteristics of buildings [42]. Some of the SSL methods studied in the literature are restricted to image or numerical data classification rather than numerical data regression. Many of the studied SSL methods do not consider diversity and density when generating unlabeled data in the feature space. 
In pseudo-labeling-based semi-supervised approaches, no strong augmentation is performed to reduce the noise of pseudo-labels already assigned to numerical unlabeled data. Moreover, none of the SSL methods we have studied in the literature provide explanations in support of their predictive output.

To address these shortcomings, this research proposes a semi-supervised explainable BRBES framework to predict the energy consumption of buildings. This framework handles energy consumption domain knowledge and data uncertainties with the BRBES; generates evenly distributed unlabeled energy data through weak and strong augmentation; assigns pseudo-labels to the unlabeled data with a self-training model; applies strong augmentation to reduce the noise of the pseudo-labels assigned to the weakly augmented unlabeled energy data; performs regression by predicting a continuous numerical value of energy consumption in kWh; and explains the predictive output in nontechnical human language through a user interface.

3. Method

This section describes the proposed semi-supervised explainable BRBES framework in detail. First, the self-training method is introduced. Then, the proposed framework is described. Finally, metrics to evaluate the framework are presented.

3.1. Self-Training Method

The self-training method belongs to the pseudo-labeling approach of SSL [59]. It iteratively trains a supervised classifier with both labeled data and data that were pseudo-labeled in previous iterations. Initially, the classifier is trained only with labeled data. It is then used to predict labels for the unlabeled data points, and the predictions with the highest confidence are added to the training dataset as pseudo-labels. This extended training dataset, consisting of the initial labeled data and the new pseudo-labeled data, is used to retrain the classifier. The iteration typically continues until all unlabeled data are pseudo-labeled [60].
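The self-training loop just described can be sketched in a few lines of Python. This is a minimal illustration of the generic method, not the BRBES pipeline: the supervised classifier is a hypothetical one-dimensional nearest-centroid model, and its confidence is an assumed margin score that compares the distances to the nearest and second-nearest class centroids.

```python
from statistics import mean


def fit_centroids(xs, ys):
    """Train a nearest-centroid classifier on 1-D features: one mean per class."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}


def predict_with_confidence(centroids, x):
    """Return (label, confidence); the margin-based confidence is an assumed score in [0, 1].

    Requires at least two classes so that a second-nearest centroid exists.
    """
    ranked = sorted((abs(x - m), c) for c, m in centroids.items())
    (d1, label), (d2, _) = ranked[0], ranked[1]
    confidence = (d2 - d1) / (d2 + d1) if d1 + d2 > 0 else 0.0
    return label, confidence


def self_train(xs_labeled, ys_labeled, xs_unlabeled, batch_size=1):
    """Pseudo-label the most confident points, retrain, and repeat until the pool is empty."""
    xs, ys = list(xs_labeled), list(ys_labeled)
    pool = list(xs_unlabeled)
    while pool:
        model = fit_centroids(xs, ys)  # retrain on labeled + pseudo-labeled data
        scored = [(predict_with_confidence(model, x), x) for x in pool]
        scored.sort(key=lambda item: item[0][1], reverse=True)  # most confident first
        for (label, _), x in scored[:batch_size]:
            xs.append(x)
            ys.append(label)  # the pseudo-label joins the training set
            pool.remove(x)
    return fit_centroids(xs, ys), xs, ys
```

With `batch_size=1`, only the single most confident prediction is accepted per iteration, so each later pseudo-label benefits from the retrained model.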

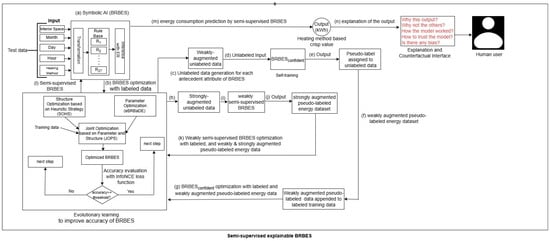

In line with this self-training method, we propose a semi-supervised explainable BRBES framework in this paper. This framework trains the BRBES with both labeled and pseudo-labeled data to provide more accurate prediction and explanation. As shown in Figure 1, this framework consists of the following three major components:

Figure 1.

System Architecture of semi-supervised explainable BRBES framework to provide energy consumption prediction.

- Prediction Model: For the prediction model, we use the BRBES, a symbolic AI model, to provide domain knowledge-based prediction while handling the associated uncertainties (Figure 1a). We then optimize this initial BRBES with supervised labeled training data using JOPS, which makes it a supervised BRBES. We continue optimizing the supervised BRBES until its accuracy reaches the confidence threshold, at which point it becomes a confident BRBES that can predict pseudo-labels for the unlabeled data points with due confidence (Figure 1b).

- Data Augmentation: To overcome the shortage of labeled data, we synthetically generate unlabeled data. For this purpose, we leverage two kinds of augmentation, “weak” and “strong”, which are briefly described below.
  - Weak Augmentation: We generate unlabeled integer values for the relevant antecedent attributes of the BRBES and predict labels for these weakly augmented unlabeled data points with the confident BRBES. We then train the confident BRBES with both the labeled and the weakly augmented pseudo-labeled datasets. If training with the weakly augmented pseudo-labeled dataset improves the accuracy, the confident BRBES transitions to a weakly semi-supervised BRBES (Figure 1c–g).
  - Strong Augmentation: A dataset can contain two types of noise: class noise and attribute noise [61]. Class noise arises when an incorrect class label is assigned to a data instance, whereas attribute noise reflects erroneous or missing values for one or more input features (independent variables) of the dataset [61]. To reduce both kinds of noise in the weakly augmented pseudo-labeled dataset, we apply strong augmentation: we synthetically generate unlabeled float values for the same antecedent attributes of the BRBES as in weak augmentation and predict labels for these strongly augmented data points with the weakly semi-supervised BRBES. We then optimize the weakly semi-supervised BRBES with the labeled dataset together with the weakly and strongly augmented pseudo-labeled datasets. If training with the strongly augmented pseudo-labeled dataset improves the accuracy, the weakly semi-supervised BRBES transitions to a semi-supervised BRBES (Figure 1h–l).

- Prediction and Explanation: This semi-supervised BRBES provides more accurate prediction, along with an explanation and counterfactual, through a user interface (Figure 1m,n).

3.2. Proposed Semi-Supervised Explainable BRBES Framework

Initial Facts: The architectural design of the proposed semi-supervised explainable BRBES framework for predicting energy consumption in buildings is shown in Figure 1. Figure 1a represents the BRBES as a symbolic AI model with five input values: interior space, month, day, hour, and heating method. Interior space is measured in square meters; the month ranges from January to December, the day from Monday to Sunday, and the hour from 00:00 to 23:00; and the heating method is either district or electric. These five values constitute the five initial facts from which the BRBES provides an energy consumption prediction. Among these facts, month, day, and hour are used to calculate the solar illumination and interior inhabitance level of a building at a specific time instance. From these initial facts, the BRBES predicts energy consumption in kWh by reasoning over its rule base. We now illustrate how this rule base models energy consumption knowledge to deliver transparent and explainable prediction.

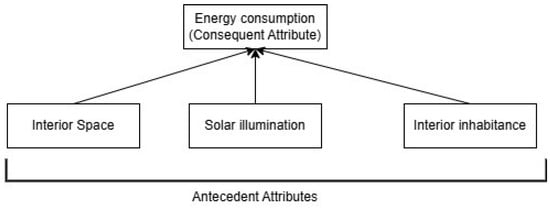

Formalization of Domain Knowledge: The body of knowledge that individuals have acquired in a particular area of expertise is called domain knowledge [62]. It can be viewed as a specialized manifestation of prior knowledge held by a domain expert or individual [63]. Here, energy consumption is the domain of interest, and its knowledge is represented by the rule base of the BRBES. A belief rule consists of two components: an antecedent part and a consequent part. In the rule base of the BRBES within our proposed semi-supervised framework, the antecedent part comprises three attributes: interior space, solar illumination, and interior inhabitance. The solar illumination value, ranging from 0 to 1, is derived from the month and hour, as illustrated in Table 2; sunrise and sunset times are used to determine the corresponding solar illumination level. Table 3 and Table 4 present the calculation of interior inhabitance (ranging from 0 to 1) in an apartment according to the hour of the day, the month of the year, and the category of the day (weekday/weekend). Each antecedent attribute is assigned three referential values: high (H), medium (M), and low (L). The consequent attribute, Energy Consumption, shares the same referential value structure. Figure 2 shows the hierarchical relationship between the three antecedent attributes and the consequent attribute. With three referential values for each of the three antecedent attributes, the rule base of the BRBES contains (3 × 3 × 3) = 27 rules. There are four ways to create such a rule base [47]: transforming expert knowledge into belief rules, formulating belief rules from historical data, leveraging an existing rule base, and generating rules at random without any prior information. In this article, the initial rule base of the BRBES is constructed from knowledge provided by energy experts.
These rules, outlined in Table 5, serve as the formal representation of the domain knowledge employed in this work. Initially, we assign an equal value (“1”) to the rule weight and the antecedent attribute weights of every rule. The consequent part of each rule contains numerical belief degrees for the associated referential values. The initial belief degrees of the consequent attribute’s referential values are assigned based on the opinions of two energy experts. These belief degrees enable the BRBES to handle uncertainties effectively [47]. The ‘Activation weight’ column of Table 5 is explained later in this subsection.
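The 27-rule skeleton can be generated mechanically from the attribute and referential-value lists. This is a sketch under two assumptions: rules are enumerated with interior space varying slowest and each attribute ordered H, M, L (an ordering that happens to place (M, H, L) at Rule No. 12, consistent with the worked example's explanation), and the consequent belief degrees are a uniform placeholder, because the expert-assigned values are in Table 5.

```python
from itertools import product

ANTECEDENTS = ("interior space", "solar illumination", "interior inhabitance")
LEVELS = ("H", "M", "L")  # assumed ordering of referential values


def initial_rule_base():
    """Build one belief rule per combination of antecedent referential values (3^3 = 27)."""
    rules = []
    for number, levels in enumerate(product(LEVELS, repeat=len(ANTECEDENTS)), start=1):
        rules.append({
            "number": number,
            "antecedents": dict(zip(ANTECEDENTS, levels)),
            "rule_weight": 1.0,  # equal initial rule weight, as stated in the text
            # Placeholder consequent belief degrees; the expert values come from Table 5.
            "beliefs": {"H": 1 / 3, "M": 1 / 3, "L": 1 / 3},
        })
    return rules


# Equal initial antecedent attribute weights, as stated in the text.
ATTRIBUTE_WEIGHTS = dict.fromkeys(ANTECEDENTS, 1.0)
```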

Table 2.

Solar illumination calculation from January to December.

Table 3.

Interior inhabitance computation (weekdays).

Table 4.

Interior inhabitance computation (weekend).

Figure 2.

Three antecedent attributes and one consequent attribute of BRBES.

Table 5.

BRBES’s initial rule base (knowledge of energy consumption domain).

Symbolic AI: As a symbolic AI model, we employ the BRBES in our proposed framework, as shown in Figure 1a. The reasoning mechanism of the BRBES comprises four stages: input transformation, rule activation weight calculation, belief degree update, and rule aggregation [46].

Transformation of Input. In this step, the input data corresponding to the three antecedent attributes of the rule base (interior space, solar illumination, and interior inhabitance) are transformed into matching degrees of their respective referential values. For interior space, the utility values for low (L), medium (M), and high (H) are set to 10, 85, and 200, respectively. For solar illumination, the L, M, and H utility values are 0, 0.50, and 1; for interior inhabitance (occupancy), they are 0.10, 0.55, and 1, respectively. These utility values were initially set based on the opinions of two energy experts and two civil engineers. To illustrate our proposed framework, an apartment in Skellefteå, Sweden, is considered as a case study. This apartment has an interior space of 142 square meters and uses electric heating. The goal is to predict its hourly energy consumption at 11:00 AM on a Saturday in July. The input values are converted into referential values as follows. For interior space, M = (200 − 142)/(200 − 85) = 0.51, H = 1 − 0.51 = 0.49, and L = 0. For solar illumination, H = 1, M = 1 − 1 = 0, and L = 0. For occupancy, L = 1, M = 0, and H = 0.
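The transformation above is a piecewise-linear split of the input between the two adjacent utility values that bracket it. A minimal sketch, assuming ascending utilities and clamping of out-of-range inputs (the clamping is an assumption, not stated in the text). It returns unrounded degrees, so the interior-space case gives M = 58/115 ≈ 0.504.

```python
def to_referential(x, utilities, labels=("L", "M", "H")):
    """Spread an input over referential values by linear interpolation between adjacent utilities.

    utilities must be ascending and aligned with labels, e.g. (10, 85, 200) for interior space.
    """
    x = min(max(x, utilities[0]), utilities[-1])  # clamp inputs outside the utility range
    degrees = dict.fromkeys(labels, 0.0)
    for k in range(len(utilities) - 1):
        lower, upper = utilities[k], utilities[k + 1]
        if lower <= x <= upper:
            degrees[labels[k]] = (upper - x) / (upper - lower)
            degrees[labels[k + 1]] = 1.0 - degrees[labels[k]]
            break
    return degrees
```

For example, `to_referential(142, (10, 85, 200))` splits the 142 sqm apartment between M and H, and an inhabitance input of 0.10 (an assumed value consistent with L = 1) maps entirely to L.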

Rule Activation Weight Calculation. In this step, the activation weight for each of the twenty-seven rules in the rule base is calculated. The activation weight is influenced by the input values corresponding to the three antecedent attributes. To determine the activation weight (ranging from 0 to 1) of each rule, we take into account factors such as the rule’s matching degree, rule weight, the total number of antecedent attributes, and the weight assigned to each antecedent attribute [47]. The mathematical formulations for calculating the matching degree and activation weight of each rule are provided in Equations (A1) and (A2) in Appendix A, respectively. The activation weights of the rules are presented in the last column of Table 5, where Rule No. 12 exhibits the highest activation weight of 0.51 for the given example.
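The activation step can be sketched with the commonly used RIMER-style formulation: a rule's matching degree is the product of the input's matching degrees for that rule's referential values, each raised to its normalized attribute weight, and activation weights are the rule-weighted matching degrees normalized to sum to 1. This is an assumed form; Equations (A1) and (A2) in Appendix A may differ in detail. The rule ordering follows the same H, M, L enumeration assumption as before. Fed with the rounded degrees reported above, it reproduces Rule No. 12 with an activation weight of 0.51.

```python
from itertools import product
from math import prod

LEVELS = ("H", "M", "L")  # assumed rule enumeration order


def activation_weights(inputs, rule_weights=None, attribute_weights=None):
    """Matching degrees and normalized activation weights for a full-combination rule base.

    inputs: one dict per antecedent attribute, mapping referential value -> matching degree.
    """
    rules = list(product(LEVELS, repeat=len(inputs)))
    theta = rule_weights or [1.0] * len(rules)
    delta = attribute_weights or [1.0] * len(inputs)
    max_delta = max(delta)
    # Matching degree: product of the input degrees, each raised to its normalized attribute weight.
    matching = [
        prod(degrees[level] ** (d / max_delta) for degrees, level, d in zip(inputs, rule, delta))
        for rule in rules
    ]
    total = sum(t * a for t, a in zip(theta, matching))
    return rules, [t * a / total for t, a in zip(theta, matching)]


example = [
    {"H": 0.49, "M": 0.51, "L": 0.0},  # interior space (142 sqm)
    {"H": 1.0, "M": 0.0, "L": 0.0},  # solar illumination
    {"H": 0.0, "M": 0.0, "L": 1.0},  # interior inhabitance
]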

Belief Degree Update. Uncertainty due to ignorance arises when input data for one or more antecedent attributes are unavailable. To address this issue, the BRBES updates the initial belief degrees of the referential values of its consequent attribute, as outlined in Equation (A3) of Appendix A [46]. In this manner, the BRBES manages uncertainty due to ignorance.
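One common form of this update (assumed here; Equation (A3) may differ) scales each rule's initial belief degrees by the average completeness of the inputs the rule references: a fully observed input contributes matching degrees summing to 1, while a missing input contributes 0. With all three inputs present, the beliefs are unchanged; with one missing, they shrink to two-thirds, leaving the remainder unassigned. The belief values in the usage below are hypothetical.

```python
def update_belief_degrees(initial_beliefs, input_degrees, used=None):
    """Scale a rule's initial consequent belief degrees by how complete its inputs are.

    input_degrees: per antecedent attribute, a dict of matching degrees, or None if missing.
    used: per attribute, True if the rule references it; defaults to all True.
    """
    used = used or [True] * len(input_degrees)
    referenced = sum(used)
    completeness = sum(
        sum(degrees.values()) if degrees is not None else 0.0
        for degrees, is_used in zip(input_degrees, used)
        if is_used
    ) / referenced
    return {level: beta * completeness for level, beta in initial_beliefs.items()}


hypothetical_beliefs = {"H": 0.0, "M": 0.8, "L": 0.2}
full_inputs = [
    {"L": 0.0, "M": 0.51, "H": 0.49},
    {"L": 0.0, "M": 0.0, "H": 1.0},
    {"L": 1.0, "M": 0.0, "H": 0.0},
]
```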

ER-based Inference. In this step, we utilize an analytical ER approach to aggregate all twenty-seven rules of the BRBES [64,65]. The final aggregated belief degree for each of the three referential values of the consequent attribute is calculated using the analytical ER equations, as presented in Equations (A4) and (A5) of Appendix A. The resulting aggregated belief degrees for the referential values H, M, and L of the consequent attribute are 0, 0.73, and 0.27, respectively. Subsequently, the crisp value of energy consumption is determined from these three aggregated belief degrees of the consequent attribute.
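The analytical ER aggregation can be sketched directly from its widely used closed form, in which each rule k contributes its activation weight w_k and belief vector β_k. This is offered as an illustration of that standard formula, not as a transcription of Equations (A4) and (A5). Two sanity checks follow from the algebra: a single fully activated rule returns its own beliefs, and two equally weighted, opposite rules split belief evenly. The rule beliefs of Table 5 are not reproduced here, so the sketch does not attempt to recompute the 0, 0.73, and 0.27 reported above.

```python
from math import prod


def analytical_er(weights, beliefs):
    """Aggregate rules with the analytical evidential reasoning (ER) formula.

    weights: activation weight w_k of each rule.
    beliefs: for each rule, its (updated) belief degrees over the N consequent referential values.
    Returns the aggregated belief degree of each referential value.
    """
    n = len(beliefs[0])
    totals = [sum(b) for b in beliefs]  # sum of belief degrees of each rule
    per_level = [
        prod(w * b[j] + 1 - w * t for w, b, t in zip(weights, beliefs, totals))
        for j in range(n)
    ]
    residual = prod(1 - w * t for w, t in zip(weights, totals))
    mu = 1.0 / (sum(per_level) - (n - 1) * residual)
    denominator = 1 - mu * prod(1 - w for w in weights)
    return [mu * (p - residual) / denominator for p in per_level]
```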

Prediction of Consumed Energy. The three aggregated belief degrees of the consequent attribute in the BRBES are converted into a single numerical crisp value. Table 6 outlines the calculation procedure for this crisp value, which quantifies energy consumption in kWh. In addition to the belief degrees, the apartment’s heating method—either district or electric—is incorporated into the calculation. As noted by [66], electric heating typically results in higher energy consumption compared to district heating. Based on the values presented in Table 6, the final crisp value of energy consumption for the example under consideration is 1.41 kWh, which closely approximates the actual measured consumption of 1.38 kWh.
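Table 6 is not reproduced in this text, so the following is only a sketch of one common way to collapse belief degrees into a crisp value: a belief-weighted sum of kWh utilities, with a separate utility set per heating method. The utility numbers in the usage are hypothetical and are not meant to reproduce the 1.41 kWh result.

```python
def crisp_energy(beliefs, utilities_by_heating, heating):
    """Collapse aggregated belief degrees into one kWh value as a belief-weighted sum of utilities.

    utilities_by_heating maps a heating method ("district" or "electric") to the kWh utility of
    each consequent referential value; these utilities are hypothetical inputs, since Table 6
    defines the document's actual calculation.
    """
    utilities = utilities_by_heating[heating]
    return sum(beta * utilities[level] for level, beta in beliefs.items())


# Hypothetical utilities: electric heating is given higher kWh levels than district heating.
HYPOTHETICAL_UTILITIES = {
    "electric": {"H": 3.0, "M": 1.5, "L": 0.5},
    "district": {"H": 2.0, "M": 1.0, "L": 0.3},
}
```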

Table 6.

Quantification of consumed energy in kWh using crisp value.

However, as a knowledge-driven approach, the BRBES tends to exhibit lower accuracy compared to machine learning and deep learning models [67]. Therefore, to enhance the accuracy of the BRBES, we focus on integrating learning-based AI techniques with the framework.

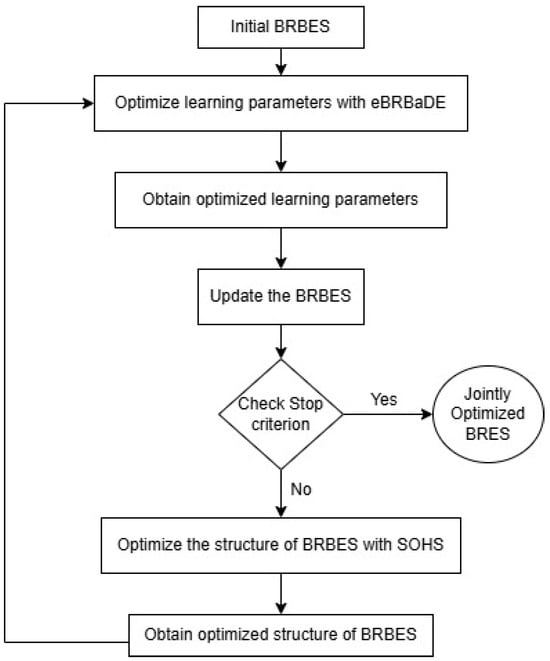

Learning AI. To improve the accuracy of the initial BRBES, we trained it with labeled training data. As shown in Figure 1b, we performed this learning by optimizing both the parameters and the structure of the BRBES. The learning parameters of the BRBES include its rule weights, antecedent attribute weights, and consequent belief degrees [68]. These parameters were initially set by a human expert and may not be accurate for a large dataset [69]. Therefore, many optimization techniques, such as Particle Swarm Optimization (PSO), Differential Evolution (DE), and Sequential Quadratic Programming (SQP), have been employed to learn BRBES parameters and increase the accuracy of the initial BRBES [49,70,71]. However, SQP-based optimal training approaches tend to become trapped in local optima, so the global optimum in the search space may not be found [69]. Evolutionary algorithms such as PSO, DE, the Genetic Algorithm (GA), and the Bat algorithm (BAT) circumvent this limitation by conducting random searches from any location inside the search space. Owing to its optimization strategy, DE outperforms other evolutionary algorithms in optimizing BRBES parameters [72]. DE relies on two main mechanisms: exploration and exploitation. Exploitation creates a new solution using information from the currently focused area of the search space [73], whereas exploration enlarges the search to stay out of local optima. Finding a solution’s global optimum depends heavily on two DE control parameters: the crossover rate (CR) and the mutation factor (F) [74]. Most DE studies have adapted the CR and F values according to the fitness values of the objective function [75,76]; however, they did not take into account the various kinds of uncertainty associated with the DE algorithm.
To address these uncertainties of DE, an enhanced Belief Rule-Based adaptive Differential Evolution (eBRBaDE) algorithm was proposed in [69]. eBRBaDE uses the inherent uncertainty handling capacity of the BRBES to address the uncertainties of DE, integrating DE with the BRBES to optimize the parameters of the BRBES. It contains two BRBESs, named BRBES_F and BRBES_CR, which calculate the values of F and CR, respectively. By recalculating F and CR in each iteration from population changes and objective function values, these BRBESs help achieve an ideal balance between exploration and exploitation of the search space. A new population is created by carrying out the DE mutation, crossover, and selection operations with the updated F and CR values. Once the stopping criteria are satisfied, the individual of the final population with the highest fitness value is chosen as the best solution. Thus, by balancing exploration and exploitation through its uncertainty handling mechanism, eBRBaDE produces more accurate values than DE for the learning parameters of the initial BRBES [69]. The initial BRBES is then updated with the optimized values of its learning parameters, and the stopping criterion is examined. Because this criterion is false in the first iteration, the procedure advances to the structure optimization stage. To optimize the structure of the rule base, we selected the optimum number of referential values for its antecedent attributes with the Structure Optimization based on Heuristic Strategy (SOHS) algorithm [49]. The parameters of the BRBES with the new structure are then optimized again, and these iterations continue until the structure no longer changes. In this way, we jointly optimize the parameters and structure of the BRBES with the JOPS algorithm [49]. The flowchart of this joint optimization is presented in Figure 3.
To determine the optimal parameters and structure of the BRBES, JOPS uses the generalization error instead of the training error [49]. A BRBES with a small training error may still fail to generate ideal inference output on its testing dataset because of overfitting [49]. In contrast, generalization capability, quantified by the generalization error, is an important criterion for evaluating how consistently a prediction model performs on data not encountered during training [77,78,79]. Using Hoeffding’s inequality from probability theory [80], it has been shown that the generalization error is a more useful criterion than the training error for identifying the ideal parameters and structure of the BRBES [49]. Hoeffding’s inequality gives an upper bound on the probability that a sum of bounded independent random variables deviates from its expected value by more than a given amount. Hence, JOPS selects the BRBES with the minimum generalization error as the optimal BRBES. The generalization error, ge, is shown in Equation (1):

Here, the predictive output of the BRBES for the ith input data is represented by f(x_i), the actual output of the ith input data is y_i, and T is the total number of independent input–output data pairs (x_i, y_i). The total number of referential values of all antecedent attributes also enters the bound, r is a probability value within the interval (0, 1], and the predictive output f(x) lies within the interval spanned by the utility values u(·) of the consequent attribute. We use the labeled training dataset to optimize the BRBES with JOPS. With this optimization, the initial BRBES transitions to a supervised BRBES, as shown in Equation (2):

Here, the training set in Equation (2) is the initial labeled training data. In the next step, we ensure that the accuracy of this supervised BRBES reaches a certain confidence threshold.
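For reference, the textbook form of Hoeffding’s inequality that underlies this kind of argument is shown below for a single fixed model whose per-sample loss ℓ lies in [a, b]; it is not a reconstruction of Equation (1), whose bound additionally depends on the size of the rule base. Setting the right-hand side equal to the probability value r shows that, with probability at least 1 − r, the expected loss R differs from the training loss by at most ε, which shrinks as T grows. Bounded losses are needed, which is one reason the interval of f(x) matters.

```latex
\begin{align*}
\Pr\left( \left| \hat{R}_T - R \right| \ge \varepsilon \right)
  &\le 2\exp\left( -\frac{2T\varepsilon^{2}}{(b-a)^{2}} \right),
  \qquad \hat{R}_T = \frac{1}{T}\sum_{i=1}^{T} \ell\big(f(x_i), y_i\big),
  \quad R = \mathbb{E}\big[\ell\big(f(x), y\big)\big], \\
2\exp\left( -\frac{2T\varepsilon^{2}}{(b-a)^{2}} \right) = r
  &\;\Longrightarrow\;
  \varepsilon = (b-a)\sqrt{\frac{\ln(2/r)}{2T}} .
\end{align*}
```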

Figure 3.

Flowchart of joint optimization of BRBES.
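The DE loop that eBRBaDE builds on (mutation, crossover, and selection driven by F and CR) can be sketched as a classic DE/rand/1/bin minimizer. In this sketch F and CR are fixed; eBRBaDE would instead recompute them every generation with BRBES_F and BRBES_CR, which is not shown. The objective is a generic function of a parameter vector, standing in for, say, an error measure of a BRBES parameterized by its rule weights, attribute weights, and belief degrees.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, generations=200, F=0.5, CR=0.9, seed=0):
    """Minimal DE/rand/1/bin minimizer with fixed control parameters F and CR."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fitness = [objective(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation (exploration): perturb one random individual by a scaled difference of two others.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][d] + F * (pop[b][d] - pop[c][d]) for d in range(dim)]
            # Binomial crossover: j_rand guarantees at least one gene comes from the mutant.
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < CR or d == j_rand) else pop[i][d] for d in range(dim)]
            # Keep the trial vector inside the search bounds.
            trial = [min(max(x, lo), hi) for x, (lo, hi) in zip(trial, bounds)]
            # Greedy selection (exploitation): keep the trial only if it is no worse.
            f_trial = objective(trial)
            if f_trial <= fitness[i]:
                pop[i], fitness[i] = trial, f_trial
    best = min(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]
```

A larger F widens the mutation steps (more exploration), while a larger CR copies more mutant genes into each trial vector; adapting both per generation is exactly what eBRBaDE delegates to its two BRBESs.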

Confident BRBES. As part of self-training-based semi-supervised regression, we generate unlabeled data through weak and strong augmentation. Here, an unlabeled data point is a set of antecedent attribute values for which the actual value of the consequent attribute is unknown. In this research, we generate data through augmentation for the antecedent attribute “interior space” of the BRBES. These augmented data are unlabeled because the actual values of their consequent attribute (the crisp value of energy consumption in kWh) are unknown. We then predict the energy consumption for these unlabeled antecedent values using the supervised BRBES; this predicted value is the pseudo-label that we assign to the unlabeled data. However, before it predicts pseudo-labels, the accuracy of the supervised BRBES has to reach the confidence threshold; otherwise, the BRBES will not be confident enough to predict pseudo-labels with reasonable accuracy. To evaluate whether the supervised BRBES has reached this confidence level, we use a contrastive loss [81], which measures how similar a predictive output is to the actual output. As the contrastive loss function, we use the InfoNCE loss [82], as shown in Equation (3):

where the anchor is a labeled data point with its actual output in the test dataset; the positive is the predictive output that is similar to the anchor (positive pair); the negative is the predictive output that is dissimilar to the anchor (negative pair); N is the total number of anchors in the test dataset; and the leading negative sign turns the log-probability of the positive pair into a loss to be minimized. The similarity function measures the similarity of the anchor to the positive predictive output and its dissimilarity to the negative one, as shown below.

Table 7.

Values of InfoNCE loss function against different levels of similarity.
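The generic InfoNCE computation can be sketched as the negative log of the softmax probability that each anchor assigns to its positive among the positive and the negatives, averaged over the N anchors. Assumptions: no temperature parameter is used, and the similarity for scalar kWh values is a hypothetical negative absolute difference (the document’s own similarity function is not reproduced here). When the positive and negative are equally similar to the anchor, the loss is ln 2; a closer positive gives a lower loss.

```python
import math


def info_nce(anchors, positives, negatives, sim):
    """Mean InfoNCE loss: -log softmax probability of each anchor's positive pair.

    negatives[i] is a list of negative predictive outputs for anchors[i].
    """
    total = 0.0
    for anchor, positive, negs in zip(anchors, positives, negatives):
        logits = [sim(anchor, positive)] + [sim(anchor, n) for n in negs]
        peak = max(logits)  # subtract the maximum for numerical stability
        log_denominator = peak + math.log(sum(math.exp(s - peak) for s in logits))
        total += log_denominator - logits[0]
    return total / len(anchors)


def negative_abs_difference(a, b):
    """Hypothetical similarity for scalar kWh values: closer outputs score higher."""
    return -abs(a - b)
```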

Weak Augmentation. In this step, we apply weak augmentation to generate unlabeled data for the antecedent attribute “interior space” of the BRBES (Figure 1c). For interior space, we generate integer values from 10 (minimum interior space) to 200 (maximum interior space) with an interval of 1, covering every whole number between the minimum and maximum interior space. We do not augment solar illumination and interior inhabitance, because all possible time instances and seasonal variations are already covered in Table 2 (solar illumination) and Table 3 and Table 4 (interior inhabitance). Instead, for these two antecedent attributes, we use the float values from 0 to 1 specified in those tables. With these values, we formulate a weakly augmented unlabeled dataset that combines every integer value of interior space with the specified values of solar illumination and interior inhabitance.
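Both interior-space grids, the integer grid used here and the 0.20 sqm grid that the strong augmentation step uses while skipping whole numbers, can be generated as follows. The solar illumination and inhabitance values are hypothetical placeholders; only their counts (three and seven, per Section 4.2) are taken from the text. With those counts, the sketch reproduces the row counts reported in Section 4.2: 4011 weakly and 15,960 strongly augmented rows.

```python
from itertools import product

# Hypothetical placeholders: Table 2 holds three solar illumination values and Tables 3-4
# seven interior inhabitance values; replace these with the actual table entries.
SOLAR_VALUES = (0.0, 0.5, 1.0)
INHABITANCE_VALUES = (0.10, 0.25, 0.40, 0.55, 0.70, 0.85, 1.0)


def weak_interior_spaces(min_space=10, max_space=200):
    """Every whole-number interior space (sqm) in the range, step 1."""
    return list(range(min_space, max_space + 1))


def strong_interior_spaces(min_space=10, max_space=200, step_tenths=2):
    """A 0.20 sqm grid that skips the whole numbers already used by weak augmentation.

    The grid is built from integer tenths so floating-point drift cannot add or drop points.
    """
    tenths = range(min_space * 10, max_space * 10 + 1, step_tenths)
    return [t / 10 for t in tenths if t % 10 != 0]


def unlabeled_rows(spaces):
    """Cross interior spaces with the tabulated solar illumination and inhabitance values."""
    return [
        {"interior_space": s, "solar_illumination": si, "interior_inhabitance": ih}
        for s, si, ih in product(spaces, SOLAR_VALUES, INHABITANCE_VALUES)
    ]
```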

Pseudo-Label for Weakly Augmented Unlabeled Data. We then employ the confident BRBES to predict the value of the consequent attribute ‘energy consumption’ for each set of unlabeled antecedent attribute values (Figure 1d,e). We assign these predicted values as pseudo-labels to the weakly augmented unlabeled dataset (Figure 1f). We mathematically show this process in Equation (4):

Weakly semi-supervised BRBES. In this step, we optimize the confident BRBES with both the initial labeled dataset and the weakly augmented pseudo-labeled dataset using JOPS (Figure 1g). We then evaluate, in terms of the InfoNCE loss on the labeled test dataset, whether this pseudo-labeled dataset improves the accuracy of the confident BRBES. If the accuracy is the same or higher, we include the weakly augmented pseudo-labeled dataset in the training dataset of the BRBES, and the confident BRBES becomes a weakly semi-supervised BRBES (Figure 1i). If the weakly augmented pseudo-labeled dataset reduces the accuracy of the confident BRBES, we discard it. We then review the initial rule base (Table 5) of the BRBES for possible misrepresentation of the domain knowledge, which might have reduced the accuracy of the pseudo-labels. After fixing this misrepresentation, we return to Equation (2) to optimize the BRBES with the initial labeled training data. We show this transition from confident BRBES to weakly semi-supervised BRBES in Equation (5):

Noisy data can greatly reduce predictive accuracy; noise in datasets has been empirically shown to significantly deteriorate model accuracy [61]. Addressing noise is therefore of paramount importance for improved prediction accuracy. Two types of noise are present in a dataset: class noise and attribute noise [61]. Class noise refers to an incorrect class assigned to a data point; it can come from contradictory instances (identical data instances with different class labels) or mislabeled instances (instances labeled with the wrong class). Attribute noise, on the other hand, reflects erroneous values for one or more input features (independent variables) of the dataset [61]. There are three kinds of attribute noise: erroneous attribute values, missing or “do not know” values, and incomplete or “do not care” values [83]. To reduce both the class noise and the attribute noise of the weakly augmented pseudo-labeled dataset, we perform strong augmentation, which is intended to make this pseudo-labeled dataset more robust.

Strong Augmentation. In this stage, we perform strong augmentation by generating float values for the antecedent attribute “interior space” of the BRBES (Figure 1h). These float values range from 10.00 (minimum interior space) to 200.00 (maximum interior space) with an interval of 0.20, excluding the integer values already in the weakly augmented dataset. In terms of attribute noise, these decimal values address the “missing or do not know values” of the antecedent attribute “interior space”. These floating-point values of interior space are combined with the specified values of solar illumination and interior inhabitance to constitute new sets of antecedent attribute values. For these sets, we employ the weakly semi-supervised BRBES to predict the value of the consequent attribute ‘energy consumption’ (Figure 1j) and assign the predicted values as pseudo-labels to the strongly augmented unlabeled dataset. We mathematically show this process in Equation (6):

Semi-supervised BRBES. In this step, we append the strongly augmented pseudo-labeled dataset to the initial labeled and weakly augmented pseudo-labeled datasets. This forms an extended dataset that covers the maximum possible instances of antecedent attributes for predicting energy consumption. We then employ JOPS to optimize the weakly semi-supervised BRBES with this extended dataset (Figure 1k). If, after this optimization, the accuracy increases or remains the same, the weakly semi-supervised BRBES transitions to a semi-supervised BRBES (Figure 1l). Otherwise, we consider the strong augmentation noisy and return to Equation (6) to refine its design. We mathematically show this transition from weakly semi-supervised to semi-supervised BRBES in Equation (7):

Figure 4.

Transition steps from initial to semi-supervised BRBES.
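Each transition in Figure 4 applies the same accept/reject rule: pseudo-label the unlabeled data with the current model, re-optimize on the extended training set, and keep the result only if the test loss does not increase. A minimal sketch, in which the callables `model`, `fit`, and `loss` are hypothetical stand-ins for BRBES inference, JOPS, and the InfoNCE evaluation; the toy least-squares `fit_line` and mean-absolute-error loss exist only to exercise the function.

```python
def self_training_round(model, fit, loss, labeled, unlabeled, test):
    """One pseudo-labeling round with an accept/reject gate.

    model(x) -> prediction; fit(rows) -> new model; loss(model, test_rows) -> float.
    """
    pseudo_labeled = [(x, model(x)) for x in unlabeled]  # assign pseudo-labels
    candidate = fit(labeled + pseudo_labeled)  # re-optimize on the extended training set
    if loss(candidate, test) <= loss(model, test):  # same or better accuracy: keep them
        return candidate, labeled + pseudo_labeled, True
    return model, labeled, False  # accuracy dropped: discard the pseudo-labels


def fit_line(rows):
    """Toy least-squares line, used only to exercise the round."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in rows) / sum((x - mean_x) ** 2 for x, _ in rows)
    return lambda x: mean_y + slope * (x - mean_x)


def mean_abs_error(model, rows):
    """Toy loss standing in for the InfoNCE evaluation."""
    return sum(abs(model(x) - y) for x, y in rows) / len(rows)
```

Because the gate compares against the current model on held-out labeled data, a noisy batch of pseudo-labels is rejected rather than silently absorbed, mirroring the fallback steps described for Equations (5) and (7).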

Explanation. To make the predicted energy consumption trustworthy, we provide an explanation in support of this predictive output to the user through a user interface (Figure 1n). We provide this explanation in nontechnical human language so that any layperson can understand it. The explanation is based on the rule with the maximum activation weight, which is Rule No. 12 (Table 5) in the present example. Our explanation pattern is as follows:

“Solar illumination is [e1] in a [e2] [e3], leading to [e4] likelihood for inhabitants to remain inside on a [e5] [e3]. Therefore, because of [e6] interior space, [e1] solar illumination, [e4] interior inhabitance, and [e7] heating technique, amount of consumed energy has been predicted to be mainly [e8]”.

Here:

- e1 = the highest activated rule’s referential value for ‘solar illumination’;
- e2 = season of the year, where summer lasts from June to August, fall from September to October, winter from November to March, and spring from April to May;
- e3 = daytime: morning is from 4:00 a.m. to 11:59 a.m., noon (midday) is at 12:00, afternoon is from 12:01 p.m. to 5:59 p.m., evening is from 6:00 p.m. to 8:00 p.m., and night is from 8:01 p.m. to 3:59 a.m.;
- e4 = the highest activated rule’s referential value for ‘interior inhabitance’;
- e5 = day type: “weekday” covers Monday to Thursday, “weekend” is Saturday, and Friday and Sunday are referred to by their own names;
- e6 = the highest activated rule’s referential value for ‘interior space’;
- e7 = heating approach, either district or electric;
- e8 = the consequent attribute’s referential value with the highest belief degree.

According to this pattern, the explanation of our example case’s predictive output is as follows:

“Solar illumination is high in a summer morning, leading to low likelihood for inhabitants to remain inside on a weekend morning. Therefore, because of medium interior space, high solar illumination, low interior inhabitance, and electric heating technique, amount of consumed energy has been predicted to be mainly medium”.
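Filling the explanation pattern is a straightforward templating step. The sketch below encodes the season, daytime, and day-type definitions listed above and reproduces the example explanation from Rule No. 12’s referential values (M, H, L) and the aggregated belief degrees. The function names and argument layout are illustrative assumptions, not the framework’s C++ interface.

```python
LEVEL_WORDS = {"H": "high", "M": "medium", "L": "low"}


def season(month):
    """Season of a month number (1-12), following the document's definitions."""
    if month in (6, 7, 8):
        return "summer"
    if month in (9, 10):
        return "fall"
    if month in (4, 5):
        return "spring"
    return "winter"  # November to March


def daytime(hour, minute=0):
    """Daytime label for a clock time, following the listed boundaries."""
    t = hour * 60 + minute
    if 4 * 60 <= t < 12 * 60:
        return "morning"
    if t == 12 * 60:
        return "noon"
    if 12 * 60 < t < 18 * 60:
        return "afternoon"
    if 18 * 60 <= t <= 20 * 60:
        return "evening"
    return "night"


def day_type(day_name):
    if day_name in ("Monday", "Tuesday", "Wednesday", "Thursday"):
        return "weekday"
    if day_name == "Saturday":
        return "weekend"
    return day_name  # Friday and Sunday are named as themselves


def explain(rule_levels, beliefs, heating, month, day_name, hour):
    """Fill the explanation pattern.

    rule_levels: (interior space, solar illumination, interior inhabitance) referential values
    of the most activated rule; beliefs: aggregated belief degree of each consequent level.
    """
    space, solar, inhabitance = (LEVEL_WORDS[level] for level in rule_levels)
    when = daytime(hour)
    energy = LEVEL_WORDS[max(beliefs, key=beliefs.get)]  # e8: highest aggregated belief
    return (
        f"Solar illumination is {solar} in a {season(month)} {when}, leading to {inhabitance} "
        f"likelihood for inhabitants to remain inside on a {day_type(day_name)} {when}. "
        f"Therefore, because of {space} interior space, {solar} solar illumination, "
        f"{inhabitance} interior inhabitance, and {heating} heating technique, "
        f"amount of consumed energy has been predicted to be mainly {energy}"
    )
```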

From this explanation, a user can understand how the pertinent factors lead to the predicted energy consumption. The interface also provides counterfactuals that inform the user of the preconditions for attaining a different level of energy consumption. As shown in Table 8, we prepare these counterfactual statements in natural human language, based on the aggregated final belief degrees of all three referential values of the consequent attribute ‘energy consumption’. The following is a counterfactual for the explanation of our example:

Table 8.

Counterfactual against the explanation.

“However, if it had been winter, when people would have stayed inside more often owing to the cold and lack of solar illumination, energy consumption might have been higher. Furthermore, if the apartment had been heated using the district approach, it might have consumed lesser energy”.

Thus, by combining explanation and counterfactual, we make the predicted energy consumption trustworthy to the end user. We express the semi-supervised explainable BRBES framework mathematically in Equation (9):

Here, the framework’s output consists of the crisp value of predicted energy consumption in kWh calculated in Equation (8), the explanation text, and the counterfactual statement.

3.3. Framework Evaluation

We evaluated the accuracy of our proposed semi-supervised framework with three metrics: InfoNCE loss, Mean Absolute Error (MAE), and the coefficient of determination (R²). Explainability was assessed with five metrics: feature coverage, relevance [84], test–retest reliability [85], coherence [86], and the difference between explanation logic and model logic [87]. To assess the counterfactuals produced by our framework, we used two metrics: pragmatism [86] and connectedness.
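For reference, the two regression metrics follow their standard definitions: MAE is the mean absolute difference between actual and predicted values, and R² is one minus the ratio of the residual sum of squares to the total sum of squares. The framework computes them in C++; this Python sketch only illustrates the formulas.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot
```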

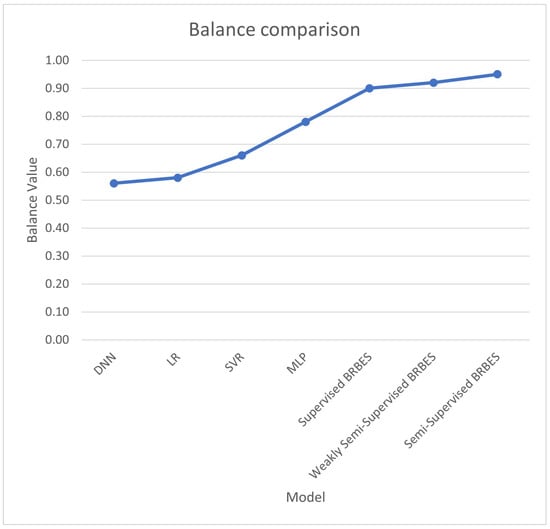

We used the Belief Rule-Based (BRB) adaptive Balance Determination (BRBaBD) method [37] to assess how well our proposed framework balances explainability and accuracy. BRBaBD is a multi-level BRBES whose final consequent attribute is the balance between explainability and accuracy, ranging from 0 (least ideal) to 1 (most ideal). Its two antecedent attributes, explainability level and accuracy level, are each the consequent attribute of another BRBES, whose antecedent attributes are the five explainability metrics and the three accuracy metrics, respectively.

4. Results

4.1. Experimental Configuration

We implemented our proposed semi-supervised explainable BRBES system in Python (version 3.10) and C++ (C++20). A first .cpp file implemented the initial BRBES, including the explanation and counterfactual statements and the heating-dependent crisp value computation. A second .cpp file implemented JOPS to determine the ideal structure and parameter values of the BRBES, which were then fed to the initial BRBES in the first .cpp file. The first .cpp file also calculated the InfoNCE loss, MAE, R², coherence, the gap between explanation and model logic, and the counterfactual assessment metrics (connectedness and pragmatism). A third .cpp file produced unlabeled data for each of the three antecedent attributes of the BRBES.

A Python script then applied the “SHAP” package to our optimized BRBES. This script quantified three evaluation metrics (feature coverage, relevance, and test–retest reliability) from the SHAP values (feature importance) of the three antecedent attributes of our rule base (Table 5). Feature coverage is determined as the average percentage of nonzero SHAP values of each antecedent attribute. Relevance is quantified as each antecedent attribute’s average absolute SHAP value. Test–retest reliability is measured by computing the Intraclass Correlation Coefficient (ICC) among the SHAP values produced by different model runs and then taking the mean ICC over many runs. Finally, to construct BRBaBD, we created a fourth .cpp file, entered the values of all eight evaluation metrics, and computed the balance between accuracy and explainability.
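Given a matrix of SHAP values (one row per explained instance, one column per antecedent attribute), feature coverage and relevance reduce to simple column statistics. This sketch assumes the SHAP values have already been computed and converted to nested lists; the optional `eps` tolerance for “nonzero” is an added assumption. ICC-based test–retest reliability is omitted because the ICC variant is not specified.

```python
def feature_coverage(shap_rows, eps=0.0):
    """Per-feature share (%) of instances with a nonzero SHAP value, and its average over features."""
    n_rows, n_features = len(shap_rows), len(shap_rows[0])
    per_feature = [
        100.0 * sum(1 for row in shap_rows if abs(row[j]) > eps) / n_rows
        for j in range(n_features)
    ]
    return sum(per_feature) / n_features, per_feature


def relevance(shap_rows):
    """Mean absolute SHAP value of each feature."""
    n_rows = len(shap_rows)
    return [sum(abs(row[j]) for row in shap_rows) / n_rows for j in range(len(shap_rows[0]))]
```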

4.2. Dataset

Skellefteå Kraft of Sweden provided us with hourly energy consumption data for 62 residential apartments in the city of Skellefteå, Sweden [88]. The interior space of these apartments averages 58 square meters (sqm), ranging from 23 sqm to 142 sqm. Some apartments use electric heating and the others use district heating. Each apartment is 2.40 m high. This anonymized dataset contains energy consumption, in kWh, from 1 January 2022 to 31 December 2022, and it served as our initial labeled training dataset.

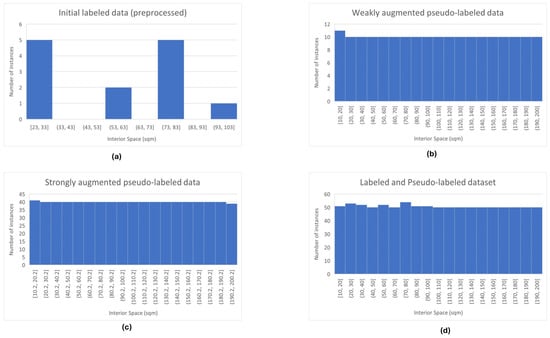

This 62-apartment dataset contains 24 hourly energy consumption values per day for 365 days, giving a total of (62 × 24 × 365) = 543,120 rows. During preprocessing, we excluded apartments with the same interior space, which left 13 apartments with unique interior space values. We replaced each missing hourly energy consumption value of these 13 apartments with the mean of the previous and next hour's values. We then combined the interior space values of these 13 apartments with three solar illumination values (Table 2) and seven occupancy values (Tables 3 and 4), resulting in a dataset of (13 × 3 × 7) = 273 rows. To train and test our proposed framework, we divided these 13 apartments into 10 training apartments and 3 testing apartments. Of the 951 augmented apartments, the weakly augmented pseudo-labeled dataset contains 191 apartments with unique interior space, giving (191 × 3 × 7) = 4011 rows, and the strongly augmented pseudo-labeled dataset contains the remaining (951 − 191) = 760 apartments with unique interior space, giving (760 × 3 × 7) = 15,960 rows. Thus, our extended training dataset, consisting of labeled as well as weakly and strongly augmented pseudo-labeled data, has (10 + 191 + 760) = 961 training apartments, of which only about 1% are labeled and the remaining 99% are pseudo-labeled. Table 9 lists the number of rows of the labeled, pseudo-labeled, and extended datasets, and Figure 5 shows histograms of the number of instances across interior space ranges in each type of dataset. According to Figure 5a, the data distribution in the initial labeled dataset (preprocessed) is uneven, with most interior space instances concentrated in the (23, 33] and (73, 83] ranges, while several intervals, namely (33, 43], (43, 53], (63, 73], (83, 93], and (103, 200], contain no instances. Such imbalance indicates potential bias in the dataset.
The weakly augmented pseudo-labeled dataset (Figure 5b) shows a near-uniform distribution across all bins, suggesting that weak augmentation introduced an even representation of interior space values. The strongly augmented pseudo-labeled dataset (Figure 5c) shows the same near-uniform distribution, with higher counts in every interior space range. The extended dataset of labeled and pseudo-labeled data (Figure 5d) has the highest number of instances across the interior space ranges.
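The imputation rule and the row counts described above can be checked with a short sketch. It assumes isolated missing hours (a gap whose previous and next hours are both present), and it uses placeholder indices in place of the actual interior space, solar illumination (Table 2), and occupancy (Tables 3 and 4) values, which are not reproduced here. `impute_hourly` and `rows_for` are hypothetical helpers.

```python
from itertools import product


def impute_hourly(values):
    """Replace an isolated missing hour (None) with the mean of its neighbouring hours."""
    filled = list(values)
    for h in range(1, len(values) - 1):
        prev_v, cur, next_v = values[h - 1], values[h], values[h + 1]
        if cur is None and prev_v is not None and next_v is not None:
            filled[h] = (prev_v + next_v) / 2
    return filled


SOLAR_LEVELS = range(3)      # placeholders for the three solar illumination values (Table 2)
OCCUPANCY_LEVELS = range(7)  # placeholders for the seven occupancy values (Tables 3 and 4)


def rows_for(n_apartments):
    """Rows obtained by crossing each apartment's interior space with every solar/occupancy value."""
    apartments = range(n_apartments)  # placeholders for unique interior space values
    return len(list(product(apartments, SOLAR_LEVELS, OCCUPANCY_LEVELS)))


labeled = rows_for(10)        # 10 labeled training apartments
weak = rows_for(191)          # weakly augmented, pseudo-labeled
strong = rows_for(951 - 191)  # strongly augmented, pseudo-labeled
extended = labeled + weak + strong
print(labeled, weak, strong, extended)  # 210 4011 15960 20181
print(f"labeled share: {labeled / extended:.1%}")
```

The extended total of 20,181 rows is the figure used later for the batch arithmetic and the margin-of-error calculation.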

Table 9.

Labeled, pseudo-labeled, and extended datasets.

Figure 5.

Histogram showing the number of instances against interior space intervals in (a) initial labeled dataset (preprocessed), (b) weakly augmented pseudo-labeled dataset, (c) strongly augmented pseudo-labeled dataset, and (d) labeled and pseudo-labeled dataset.

4.3. Results

With our proposed semi-supervised explainable BRBES framework, we performed regression rather than classification. The clustering assumptions used in semi-supervised classification do not always hold for semi-supervised regression [89], so most semi-supervised classification techniques are not directly applicable to regression [89]. Because data augmentation is simple for language and image data, many semi-supervised learning techniques target such datasets [53]. Our numerical energy consumption data, however, are tabular and lack such context, which impedes augmentation because augmenting them causes significant information loss [53]. Moreover, traditional data augmentation methods introduce too much noise into tabular data. Therefore, because it performs regression on numerical tabular data, our proposed framework is not directly comparable with traditional semi-supervised learning methods such as the consistency regularization methods FixMatch, MixMatch, and ReMixMatch [17].

We compared our proposed semi-supervised explainable BRBES framework with four state-of-the-art semi-supervised models: Support Vector Regressor (SVR), Linear Regressor (LR), Multi-layer Perceptron (MLP) regressor, and Deep Neural Network (DNN). For the SVR, we used a Radial Basis Function (RBF) kernel, a regularization parameter (C) of 100, a kernel coefficient (gamma) of 0.10, and an epsilon of 0.20. For the LR, both the y-intercept and the slope were estimated with the least squares method. Our MLP regressor consists of two hidden layers with six neurons each and a dropout of 0.40; it uses the Rectified Linear Unit (ReLU) activation function and the Stochastic Gradient Descent (SGD) optimizer. We trained this regressor for 50 epochs with a batch size of 70, i.e., about 288 iterations per epoch (20,181/70 ≈ 288.3) over the extended training dataset. For the DNN, we used eight hidden layers of 24 neurons each, the same activation and optimization functions as the MLP, and a dropout of 0.50. We trained the DNN for 100 epochs with a batch size of 70, again giving about 288 iterations per epoch. Initially, we trained these four models on the labeled training dataset of 10 apartments. To reduce selection bias and prediction variance, we performed five-fold cross-validation on the original labeled dataset of 13 apartments. We then used each of these four supervised models to assign pseudo-labels to the weakly and strongly augmented unlabeled dataset of 951 apartments, and retrained each model on the extended training dataset consisting of the labeled data and the corresponding pseudo-labeled data. In this way, the four models transitioned from supervised to semi-supervised.
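The supervised-to-semi-supervised transition described above follows the self-training pattern: fit a teacher on labeled data, pseudo-label the unlabeled data, and refit on the union. The sketch below shows one round of that loop with a single-feature least-squares regressor standing in for the LR baseline. The single feature and the helper names (`fit_ols`, `predict_ols`, `self_train`) are assumptions for illustration; the other baselines would plug in through the same `fit`/`predict` interface.

```python
def fit_ols(xs, ys):
    """Least-squares intercept and slope for a single input feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict_ols(model, xs):
    """Predictions of a fitted (intercept, slope) model."""
    intercept, slope = model
    return [intercept + slope * x for x in xs]


def self_train(fit, predict, x_labeled, y_labeled, x_unlabeled):
    """One self-training round: supervised fit, pseudo-labeling, refit on labeled + pseudo-labeled."""
    teacher = fit(x_labeled, y_labeled)             # supervised model on labeled data only
    pseudo_labels = predict(teacher, x_unlabeled)   # pseudo-labels for augmented, unlabeled inputs
    return fit(list(x_labeled) + list(x_unlabeled),
               list(y_labeled) + list(pseudo_labels))
```

For example, `self_train(fit_ols, predict_ols, [1.0, 2.0, 3.0], [3.0, 5.0, 7.0], [4.0, 5.0])` fits the teacher, labels the two unlabeled inputs, and returns the refitted (intercept, slope) pair.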

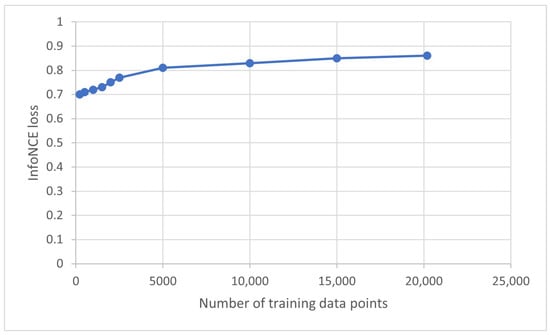

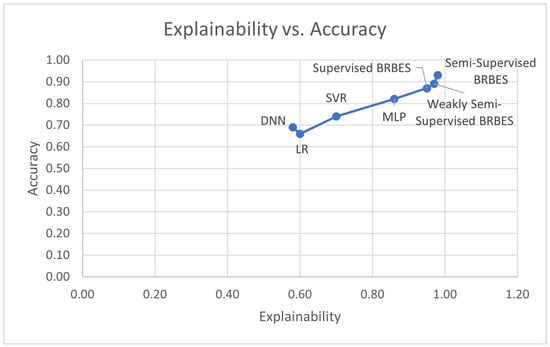

According to Table 10, the InfoNCE loss value of the initial BRBES was only 0.16, indicating low accuracy. To improve its accuracy, we employed JOPS to optimize the parameters and structure of the initial BRBES using the initial labeled data. Table 11 analyzes the sensitivity of the accuracy of the BRBES to its parameters and structure [90]. It shows the effect of optimizing each of the three parameters and the structure of the rule base, where P1 = rule weight, P2 = weight of each antecedent attribute, P3 = belief degrees of the consequent attribute, S1 = two referential values per antecedent attribute, S2 = three referential values per antecedent attribute, and S3 = four referential values per antecedent attribute. For the structure of the rule base, three referential values per antecedent attribute (S2) turned out to be optimal. Table 12 shows this trained rule base with the optimal belief degrees of the consequent attribute. We then combined the optimal values of all three parameters with the optimal structure using JOPS. This joint optimization produced a supervised BRBES with improved accuracy, as shown in Table 10. Because the InfoNCE loss value of the supervised BRBES exceeded the confidence threshold of 0.60, it became a confident BRBES. Table 10 compares the accuracy of the confident BRBES, the weakly semi-supervised BRBES, the semi-supervised BRBES, and the four state-of-the-art semi-supervised models. According to this table, the semi-supervised BRBES is more accurate than its supervised counterpart, which we attribute to the use of pseudo-labeled training data. Moreover, the semi-supervised BRBES is more accurate than the four state-of-the-art semi-supervised models. JOPS optimized a larger number of learning parameters in the BRBES than are optimized in SVR, LR, MLP, and DNN [68].
MLP and DNN optimized only one type of learning parameter, the weights, using the backpropagation learning algorithm [68]. Hence, because a larger number of learning parameters was optimized [68], the semi-supervised BRBES is more accurate than these four state-of-the-art semi-supervised models. Figure 6 shows how the accuracy of the semi-supervised BRBES, in terms of the InfoNCE loss value, increases with the number of pseudo-labeled training data points. Table 13 compares the explainability metrics.
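The structures S1 to S3 in Table 11 differ in the number of referential values per antecedent attribute. Assuming the usual conjunctive BRBES rule base, with one rule per combination of referential values, the number of rules is the product of those counts across the three antecedent attributes. The sketch below enumerates the rule antecedents for each structure; the grade labels are hypothetical placeholders, not the labels used in Table 12.

```python
from itertools import product

ANTECEDENTS = ("interior space", "solar illumination", "interior inhabitance")

# Hypothetical grade labels for the three candidate structures in Table 11.
STRUCTURES = {
    "S1": ("Low", "High"),
    "S2": ("Low", "Medium", "High"),
    "S3": ("Very low", "Low", "High", "Very high"),
}


def enumerate_rules(grades):
    """Antecedent part of every rule in a conjunctive rule base."""
    return list(product(grades, repeat=len(ANTECEDENTS)))


for name, grades in STRUCTURES.items():
    print(name, len(enumerate_rules(grades)))  # S1 8, S2 27, S3 64
```

Under this assumption, the optimal structure S2 corresponds to 3 × 3 × 3 = 27 rules, compared with 8 rules for S1 and 64 for S3, so each added referential value enlarges the set of belief degrees that JOPS has to optimize.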

Table 10.

Metrics quantifying accuracy of various models.

Table 11.

Sensitivity analysis of optimization of BRBES.

Table 12.

BRBES’s trained rule base (after optimization).

Figure 6.

InfoNCE loss value of semi-supervised BRBES with different numbers of training data points.

Table 13.

Metrics quantifying explainability of various models.

Every model achieved 100% feature coverage for all three antecedent attributes of the rule base. The relevance metric is the average absolute SHAP value of the corresponding input feature. The third column of Table 13 shows the relevance of each of the three antecedent attributes (interior space, solar illumination, and interior inhabitance) for each model. Across all models, the most relevant attribute (highest SHAP value) for predicting the level of energy consumption is “interior space”, followed by “interior inhabitance” and “solar illumination”. The semi-supervised BRBES has higher relevance for every antecedent attribute than all other models. Furthermore, the correct formulation of the rule base and the explanation interface of the semi-supervised BRBES contributed to high test–retest reliability; the explanation of the semi-supervised framework has 98.83% coherence with background knowledge and is fully compliant with the domain knowledge represented by the rule base [91]. A high coherence score means that similar inputs activate similar rules of the semi-supervised BRBES, indicating that the model is logically stable [92]. To evaluate the coherence of the four state-of-the-art semi-supervised models, we calculated their global feature importance (SHAP values) for each input feature across all predictions and treated these values as the models' explanations. We then evaluated coherence by measuring how consistent these SHAP-based explanations are across similar inputs [84]. We calculated the difference for the four state-of-the-art semi-supervised models by measuring the discrepancy (Hamming distance) between their SHAP feature importance values and the domain knowledge (ground truth) [91]. Table 14 evaluates the counterfactual statements of our semi-supervised framework with two counterfactual metrics.
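The difference metric for the four baseline models compares their SHAP importances with domain knowledge. One plausible reading, sketched below, ranks the attributes by global mean absolute SHAP value and counts the rank positions at which that ordering disagrees with a reference ordering, giving a normalized Hamming distance. The reference ordering and the importance values are illustrative only, not results from this study, and the helper names are hypothetical.

```python
def importance_ranking(global_importance):
    """Attribute names sorted by descending global mean |SHAP|."""
    return [name for name, _ in sorted(global_importance.items(),
                                       key=lambda kv: kv[1], reverse=True)]


def hamming_difference(ranking, reference):
    """Fraction of rank positions where the model ordering disagrees with the reference."""
    if len(ranking) != len(reference):
        raise ValueError("rankings must have the same length")
    return sum(a != b for a, b in zip(ranking, reference)) / len(reference)


# Illustrative reference ordering standing in for domain knowledge (ground truth).
DOMAIN_ORDER = ["interior space", "interior inhabitance", "solar illumination"]

# Made-up global importances for a hypothetical baseline model.
model_importance = {"interior space": 0.42, "solar illumination": 0.15, "interior inhabitance": 0.09}
print(hamming_difference(importance_ranking(model_importance), DOMAIN_ORDER))
```

Here the hypothetical model swaps the second and third attributes, so two of the three positions disagree; a model whose ordering matches the reference scores 0.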
The first part of our counterfactual statement addresses seasonal fluctuation, and the second part addresses the heating technique. Because summer and winter inevitably arrive, the first part is fully pragmatic. Because switching from electric to district heating is expensive, the second part is only partially pragmatic. Since the counterfactual is fully compatible with the rule base of the BRBES (ground truth), its connectedness is 100%. As the other four models do not generate counterfactuals, these two counterfactual metrics do not apply to them.

Table 14.

Metrics quantifying counterfactual.

We used the multi-level BRBaBD to assess each model's balance between explainability and accuracy. In the first layer of BRBaBD, we predicted each model's explainability and accuracy values from the relevant evaluation metrics. Figure 7 compares our proposed semi-supervised BRBES with the four state-of-the-art semi-supervised models in terms of explainability and accuracy values (between 0 and 1). In the second layer of BRBaBD, we predicted the explainability versus accuracy balance of the semi-supervised BRBES and of the four state-of-the-art semi-supervised models. As Figure 8 shows, the semi-supervised BRBES achieved a greater balance than the supervised BRBES and the four state-of-the-art semi-supervised models. Thus, our proposed semi-supervised framework strikes a better balance between explainability and accuracy than both the supervised BRBES and the state-of-the-art semi-supervised models. These four state-of-the-art semi-supervised models follow a data-driven approach [8]: they learn representations from historical energy consumption data and contain no knowledge of the energy consumption domain [37]. For a biased or imbalanced dataset, the predictions of these models will be erroneous [37], so predicting energy consumption with such models is risky when the historical dataset is underrepresentative [37]. Our proposed semi-supervised BRBES framework overcomes this limitation by basing its energy predictions on domain knowledge (ground truth).

Figure 7.

Comparison between explainability and accuracy of different models.

Figure 8.

Comparison of balance between explainability and accuracy of different models.

According to Figure 7, on a scale of 0 to 1, the accuracy of the semi-supervised BRBES is 0.93, against an average accuracy of 0.73 for the four state-of-the-art semi-supervised models. Hence, our semi-supervised BRBES offers (0.93 − 0.73) = 0.20, or 20%, higher accuracy. We now calculate the Margin of Error (ME) [93] of this higher accuracy on the 20,181 instances of labeled and pseudo-labeled data using Equation (10):