Abstract

The lack of transparency in many AI systems continues to hinder their adoption in critical domains such as healthcare, finance, and autonomous systems. While recent explainable AI (XAI) methods—particularly those leveraging large language models—have enhanced output readability, they often lack traceable and verifiable reasoning that is aligned with domain-specific logic. This paper presents Nomological Deductive Reasoning (NDR), supported by Nomological Deductive Knowledge Representation (NDKR), as a framework aimed at improving the transparency and auditability of AI decisions through the integration of formal logic and structured domain knowledge. NDR enables the generation of causal, rule-based explanations by validating statistical predictions against symbolic domain constraints. The framework is evaluated on a credit-risk classification task using the Statlog (German Credit Data) dataset, demonstrating that NDR can produce coherent and interpretable explanations consistent with expert-defined logic. While primarily focused on technical integration and deductive validation, the approach lays a foundation for more transparent and norm-compliant AI systems. This work contributes to the growing formalization of XAI by aligning statistical inference with symbolic reasoning, offering a pathway toward more interpretable and verifiable AI decision-making processes.

1. Introduction

Artificial Intelligence (AI) is increasingly being deployed in high-stakes domains such as healthcare, finance, autonomous systems, and law, where decisions have significant real-world consequences. Despite their notable performance, many modern AI models—especially deep-learning-based systems—operate as “black boxes” [1,2], lacking the transparency and traceable logic necessary for human understanding [3,4,5,6]. This opacity undermines user trust, accountability, and interpretability.

Explainable AI (XAI) has thus emerged as a critical area of research, driven by the need for AI systems to generate understandable and justifiable decisions. In domains like healthcare and finance, explainability is essential for professionals to evaluate AI-generated outputs [7,8,9]. Autonomous vehicles face similar scrutiny, where the lack of interpretability hampers public acceptance and regulation [10]. This concern has been institutionalized in policy frameworks such as the EU’s GDPR [11], the OECD’s AI principles [12], and the proposed AI Act [13], with comparable guidelines developed across Asia [14,15,16] and Africa [17,18,19].

Academic progress in XAI has yielded a variety of methods, including model-agnostic techniques like LIME [20] and SHAP [21], and more structured approaches like Anchors [22] and prototype-based explanations [23]. More recently, large language models (LLMs) have been employed to generate human-readable explanations. However, many of these solutions still fall short in providing causally grounded, verifiable reasoning [24], limiting their value in critical contexts.

Rule-based expert systems have historically addressed this gap by embedding human-like reasoning through symbolic inference. Recent hybrid methods have revisited this paradigm, combining statistical learning with structured logic—for instance, via the Shapley–Lorenz approach [25] or confident itemsets [26]. These efforts emphasize how rule-based models can support traceable, actionable decision-making.

Yet, challenges persist. Existing XAI techniques may oversimplify, omit critical details, or overwhelm users with complexity. Furthermore, there remains no consensus on what constitutes a “good” explanation, with effectiveness varying by context [27,28]. Ultimately, XAI must align with human cognitive expectations to foster trust and usability [29,30].

To address these gaps, this paper introduces a novel framework grounded in Nomological Deductive Reasoning (NDR) and built upon Nomological Deductive Knowledge Representation (NDKR). By integrating domain-specific rules with formal logic, the approach aims to produce transparent, law-based explanations that align with normative and epistemic standards.

The rest of the paper is organized as follows: Section 2 outlines the methodology, including the literature review and framework development. Section 3 analyzes existing XAI techniques and their limitations. Section 4 identifies gaps in explanation clarity and trust. Section 5 details the proposed NDR framework and presents experimental validations, and Section 6 concludes with key contributions and future directions.

2. Methodology

This study combines a systematic literature review with empirical evaluation to develop and assess the Nomological Deductive Reasoning (NDR) framework for explainable Artificial Intelligence (XAI). The approach addresses both theoretical gaps in explainability research and practical challenges in generating trustworthy, traceable AI decisions.

2.1. Conceptual Framework Development

The conceptual design of the NDR framework was informed by a structured literature review of XAI methods published between 2010 and 2024, conducted in accordance with PRISMA guidelines. The review focused on explanation formats, logical structure, and epistemic soundness, emphasizing the importance of producing explanations that are not only interpretable but also grounded in valid reasoning [31,32]. The analysis revealed a prevailing reliance on post hoc and correlation-based interpretability techniques, which often lack formal causal justification. In response, the NDR framework was developed to integrate statistical predictions with symbolic deductive logic, guided by Hempel’s deductive–nomological (D-N) model. In this approach, AI outputs must be derivable from a structured knowledge base of domain-specific rules and antecedent conditions, embedding explainability directly into the reasoning process.
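For orientation, Hempel's D-N schema is commonly written as below. The symbols $L_i$, $C_j$, and $E$ follow the usual textbook presentation and are not notation taken from this paper.

```latex
\[
\underbrace{L_1, L_2, \ldots, L_m}_{\text{general laws}},\quad
\underbrace{C_1, C_2, \ldots, C_n}_{\text{antecedent conditions}}
\;\vdash\; E
\]
```

Read in NDR terms, the $L_i$ would correspond to domain rules in the knowledge base, the $C_j$ to the observed facts about an instance, and $E$ to the model output that has to be derivable from them.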

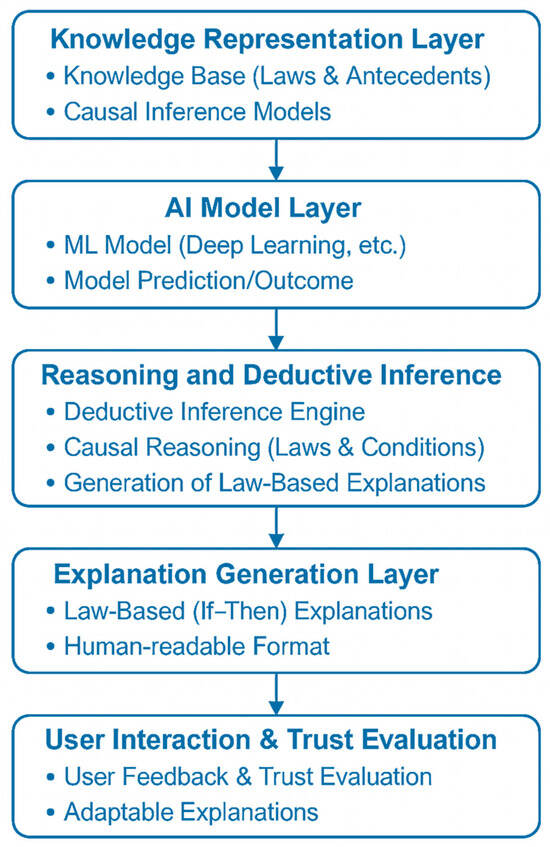

2.2. Architecture and Workflow

The proposed NDR framework comprises five sequential layers: (1) knowledge representation, (2) the AI model, (3) reasoning and deductive inference, (4) explanation generation, and (5) user interaction and trust evaluation. Each layer contributes to generating rule-consistent, human-readable justifications grounded in domain knowledge.

2.3. Experimental Setup

To evaluate the framework, experiments were conducted using the Statlog (German Credit Data) dataset [33], which includes 1000 samples with 20 features related to creditworthiness. Data preprocessing involved the normalization of numerical attributes and the encoding of categorical variables. The dataset was split into training (70%) and test (30%) sets, with a fixed random seed (42) to ensure reproducibility. A Random Forest classifier [34] was trained to generate initial credit-risk predictions. This model was chosen for its robustness and interpretability in structured financial data contexts.
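The preprocessing and split described above can be sketched with the standard library alone. This is a minimal illustration, not the authors' pipeline: the text does not say which normalization or encoding was used, so min-max scaling and one-hot encoding are assumptions, and all function names are hypothetical. The partition produced here will generally differ from one produced by another library with the same seed.

```python
import random

def train_test_split_indices(n_samples, test_fraction=0.30, seed=42):
    """Shuffle row indices with a fixed seed and split them into train/test."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]

def min_max_normalize(values):
    """Scale a numerical column to [0, 1] (assumed normalization scheme)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot_encode(values):
    """Encode a categorical column as one-hot vectors (assumed encoding)."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]

# 1000 samples, as in the Statlog (German Credit Data) dataset.
train_idx, test_idx = train_test_split_indices(1000)
print(len(train_idx), len(test_idx))  # 700 300
```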

2.4. Deductive Validation and Explanation Generation

Each prediction produced by the classifier was evaluated by the NDR deductive engine. This component queried a formalized financial knowledge base containing symbolic rules such as: “Loan duration > 12 months indicates credit stability” or “Multiple debtors suggest increased financial risk”. The engine determined whether the prediction followed logically from the rules and, if so, generated a corresponding explanation.
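A minimal sketch of such a deductive check follows. It is an illustration under stated assumptions rather than the engine used in the paper: the field names (`duration_months`, `num_debtors`), the `Rule` structure, and the acceptance criterion (at least one applicable rule supports the prediction and none contradicts it) are all hypothetical. Only the two rule statements are taken from the text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Applicant = Dict[str, float]

@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Applicant], bool]  # antecedent condition on the instance
    supports: str                           # label the rule argues for: "good" or "bad"
    text: str                               # human-readable statement of the rule

# The two example rules quoted in the text; field names are hypothetical.
KNOWLEDGE_BASE: List[Rule] = [
    Rule("long_duration", lambda a: a["duration_months"] > 12, "good",
         "Loan duration > 12 months indicates credit stability"),
    Rule("multiple_debtors", lambda a: a["num_debtors"] > 1, "bad",
         "Multiple debtors suggest increased financial risk"),
]

def validate(prediction: str, applicant: Applicant,
             rules: List[Rule] = KNOWLEDGE_BASE) -> Optional[str]:
    """Return an explanation if the prediction follows from the rules, else None."""
    fired = [r for r in rules if r.condition(applicant)]
    supporting = [r for r in fired if r.supports == prediction]
    conflicting = [r for r in fired if r.supports != prediction]
    # Assumed criterion: some rule supports the prediction and none contradicts it.
    if not supporting or conflicting:
        return None
    reasons = "; ".join(r.text for r in supporting)
    return f"Predicted '{prediction}' because: {reasons}."

print(validate("good", {"duration_months": 24, "num_debtors": 1}))
```

Treating any conflicting rule as a failed derivation is a deliberately strict choice; a real knowledge base would likely need rule priorities or weights to resolve conflicts.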

2.5. Evaluation Metrics

The framework was assessed along two dimensions: predictive accuracy and interpretability. Specifically, the following parameters were assessed:

- Accuracy: Classification correctness of the machine learning model.

- Rule Consistency: Agreement of predictions with symbolic knowledge.

- Rule Coverage: Percentage of predictions for which valid rule-based explanations were generated.

- Mismatch Penalty: Count of predictions inconsistent with the knowledge base.
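The four metrics listed above can be computed from per-prediction records, as in the sketch below. The text does not give exact formulas, so the definitions here are assumptions: each prediction carries a hypothetical verdict (`"entailed"`, `"contradicted"`, or `"undetermined"`), rule coverage is the share of entailed predictions, the mismatch penalty is the number of contradicted predictions, and rule consistency is the share of predictions that are not contradicted.

```python
from typing import Dict, List, NamedTuple

class Outcome(NamedTuple):
    y_true: str
    y_pred: str
    verdict: str  # "entailed", "contradicted", or "undetermined" (hypothetical labels)

def evaluate(outcomes: List[Outcome]) -> Dict[str, float]:
    """Compute accuracy and the three rule-based metrics under the assumed definitions."""
    n = len(outcomes)
    if n == 0:
        raise ValueError("no outcomes to evaluate")
    correct = sum(o.y_true == o.y_pred for o in outcomes)
    entailed = sum(o.verdict == "entailed" for o in outcomes)
    contradicted = sum(o.verdict == "contradicted" for o in outcomes)
    return {
        "accuracy": correct / n,
        "rule_consistency": (n - contradicted) / n,
        "rule_coverage": entailed / n,
        "mismatch_penalty": contradicted,
    }

sample = [
    Outcome("good", "good", "entailed"),
    Outcome("bad", "good", "contradicted"),
    Outcome("bad", "bad", "entailed"),
    Outcome("good", "good", "undetermined"),
]
print(evaluate(sample))
```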

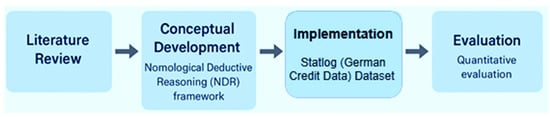

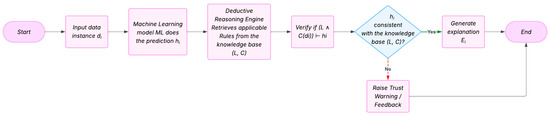

Figure 1 summarizes the methodological flow for developing and evaluating the NDR framework.

Figure 1.

Methodological workflow for developing and evaluating the NDR framework.

2.6. Reproducibility and Future Work

To support transparency and reproducibility, all implementation artifacts, including the source code and the structured knowledge base, are available in a public GitHub repository. Future work includes a planned user study to assess explanation clarity, trustworthiness, and usability in real-world decision-making scenarios, particularly in credit assessment and clinical diagnostics.

3. Current XAI State of the Art

This research focuses on the formats of the explanations generated by current XAI methods, for three reasons. First, the way explanations are presented plays a crucial role in how effectively users understand, trust, and act on AI decisions. Second, examining different explanation formats makes it easier to bridge the gap between complex AI systems and users with varying technical backgrounds, so that explanations are both accurate and accessible. Third, because the content and the format of an explanation both matter, placing explanator formats at the center of the research clarifies how users' cognitive load can be reduced and their decision-making supported, ultimately improving the usability and trustworthiness of AI models across diverse application domains.

The explainable Artificial Intelligence (XAI) field has grown exponentially, with more than 200 explainability methods on the one hand and a continuously growing need for human-readable explanations on the other. Explanation formats were therefore examined deliberately in this paper, to better understand why a gap in producing human-readable explanations persists. XAI methods vary widely in how they present explanations and in the types of AI systems they aim to explain [35,36,37], and understanding this variety of formats is key to grasping the current landscape of XAI research. The remainder of this section reviews explanation formats in the current state of the art as broadly classified by various scholars, including Vilone and Longo [38], whose classification appears to be the most comprehensive and structured. It distinguishes five main types of explanators: visual, textual, rule-based, numerical/graphical, and mixed explanations.

3.1. Explanator Formats

In the context of explainable Artificial Intelligence (XAI), explanators are the formats or mechanisms through which explanations of model decisions or behaviors are conveyed to users [28,39]. These explanations aim to make complex AI models, particularly black-box models such as deep learning networks, more understandable and interpretable. The nature of these explanators significantly influences how effectively users can comprehend, trust, and act upon the AI’s outputs [31,40,41]. In the current XAI state of the art, there is no one-size-fits-all type of explanation: different explanatory formats serve distinct purposes, each tailored to different stakeholders, such as developers, domain experts, or end-users. Next, we review the current formats of explanations generated by different XAI methods.

3.1.1. Visual Explanations

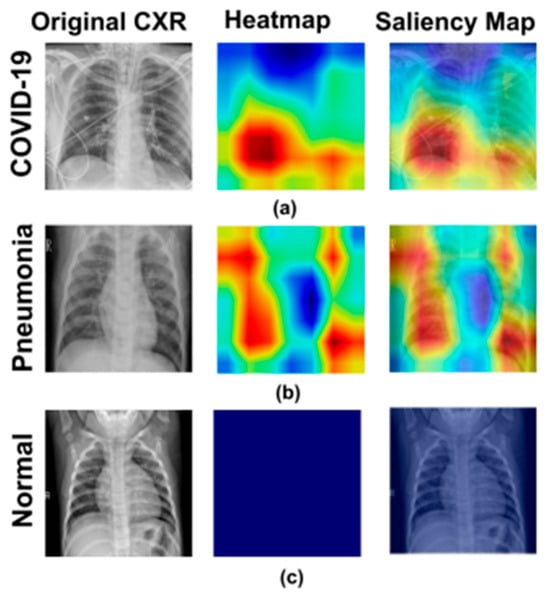

In the XAI field, visual explanations are outputs that use visual cues to convey the reasoning behind machine learning models, particularly in image processing. They are considered especially useful for interpreting image-based models and computer-vision tasks. Visual explanations aim to highlight the parts of the input (such as an image or video) that are most relevant to a model’s decision-making process [42]. They typically take the form of heatmaps, saliency maps, or attention maps that visually guide the user’s understanding of the AI model’s focus areas, as illustrated in Figure 2.

Figure 2.

Chest X-ray examples labeled as (a) COVID-19, (b) Pneumonia, and (c) Normal. Each row (from left to right) shows the input image, AI prediction, and Grad-CAM heatmap. Highlights reflect regions influencing the model’s decision. Source: [43].

The key methods used to generate visual explanations include the following:

Grad-CAM (Gradient-weighted Class Activation Mapping) [44]: This is one of the most popular methods found in the literature for generating visual explanations. Grad-CAM generates heatmaps by computing the gradient of the output category with respect to the feature maps of the last convolutional layer. This heatmap helps visualize which regions of an image contributed most to the model’s prediction.
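The computation described above is usually written as follows, using the notation of the original Grad-CAM formulation [44]: $y^c$ is the score for class $c$, $A^k$ is the $k$-th feature map of the last convolutional layer, and $Z$ is the number of spatial positions in that map.

```latex
\[
\alpha_k^c \;=\; \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} \;=\; \operatorname{ReLU}\!\Big( \sum_{k} \alpha_k^c \, A^k \Big)
\]
```

The weights $\alpha_k^c$ are the spatially averaged gradients, and the ReLU keeps only the regions that contribute positively to class $c$.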

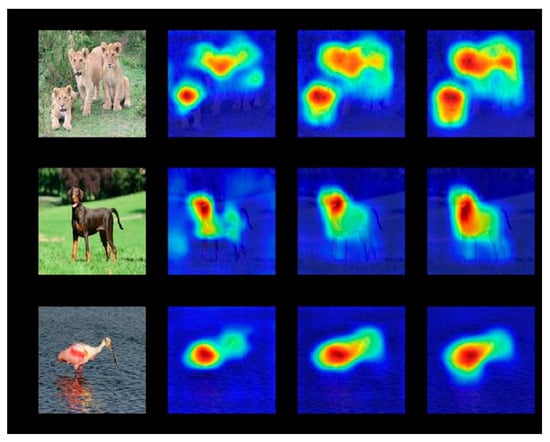

Saliency Maps [45]: This method computes the gradient of the predicted class with respect to the input image and then highlights the areas where small changes in the input would most influence the prediction. It is particularly useful for CNNs in image classification. Practically, saliency maps are the images that highlight the region of interest in a given computer-vision model, where a heatmap is generally superimposed over the input image to highlight the pixels of the image that provided the most important contributions in the prediction task. Figure 3 is an example of such images.

Figure 3.

Example of a saliency map. The pictures on the right are saliency maps of the image on the left and show the regions to which the CNN attends most. (Source: geeksforgeeks.org/what-is-saliency-map; accessed on 25 January 2025).
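The gradient-based idea behind saliency maps can be illustrated without a deep learning library by approximating the gradient numerically. The sketch below is a toy under stated assumptions: `toy_score` stands in for a class score of a real CNN, and central finite differences replace the backpropagated gradient that practical implementations use.

```python
from typing import Callable, List

Image = List[List[float]]

def saliency_map(score: Callable[[Image], float], image: Image,
                 eps: float = 1e-4) -> Image:
    """Approximate |d score / d pixel| for every pixel with central differences."""
    saliency = [[0.0] * len(row) for row in image]
    for i, row in enumerate(image):
        for j, original in enumerate(row):
            row[j] = original + eps
            up = score(image)
            row[j] = original - eps
            down = score(image)
            row[j] = original  # restore the pixel before moving on
            saliency[i][j] = abs(up - down) / (2 * eps)
    return saliency

def toy_score(img: Image) -> float:
    """Hypothetical class score that depends on two pixels only."""
    return 3.0 * img[0][0] - 2.0 * img[1][1]

image = [[0.5, 0.1], [0.9, 0.2]]
for row in saliency_map(toy_score, image):
    print([round(v, 3) for v in row])
```

Pixels the score does not depend on receive a saliency of zero, while the two influential pixels receive values close to the magnitudes of their coefficients.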

Activation Maximization [46]: This technique seeks to visualize the features that maximally activate specific neurons in the convolutional network. By generating inputs that lead to high activation in specific neurons, it provides insight into the types of patterns that a deep learning model is sensitive to.

DeepLIFT (Deep Learning Important FeaTures) [47]: Unlike Grad-CAM, DeepLIFT computes the contribution of each input feature by comparing the activations to a reference activation, offering a clearer breakdown of the model’s reasoning.

Other XAI methods used to generate visual explanations include, but are not limited to, Layer-wise Relevance Propagation (LRP) [48], Integrated Gradients (IG) [49], Guided Backpropagation [50], RISE [51], Class-Enhanced Attentive Response (CLEAR) [52], and Vision Transformer (ViT) [53].

3.1.2. Textual Explanations

Textual explanations in Artificial Intelligence are sentences in natural language that provide human-readable narratives of the model’s decision-making process. This makes them suitable for non-technical stakeholders, since they describe in plain language why a model made a particular prediction or decision. Technically, the approach gives human users of AI systems clues about the process behind the decision of an ML model, such as the labels assigned to image parts in an image classification task [54]. Table 1 gives examples of textual explanations drawn from the literature.

Table 1.

Examples of textual explanations as found in the XAI literature.

The key methods used to generate textual explanations include the following:

LIME (Local Interpretable Model-Agnostic Explanations) [20]: Although its explanations are essentially mixed in format, LIME builds local surrogate models that approximate the decision boundary of a black-box model in a specific region of interest. It then provides natural-language text describing the important features in that region, which makes it easier for humans to understand why the model made a particular decision.
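The local-surrogate idea can be sketched in a few lines: sample points around the instance, weight them by proximity, and fit a weighted linear model. This is a simplified illustration and not the LIME library itself. The Gaussian perturbation, the exponential kernel, and every function and parameter name below are assumptions made for the example.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def local_surrogate(black_box: Callable[[Sequence[float]], float],
                    instance: Sequence[float], n_samples: int = 500,
                    scale: float = 0.5, kernel_width: float = 1.0,
                    seed: int = 0) -> Tuple[float, List[float]]:
    """Fit a proximity-weighted linear model around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]  # perturbed sample
        dist2 = sum((zi - vi) ** 2 for zi, vi in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)            # proximity weight
        row = [1.0] + z                                     # intercept + features
        y = black_box(z)
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    coef = solve_linear_system(xtwx, xtwy)  # weighted least-squares solution
    return coef[0], coef[1:]

# Hypothetical black box: the sign and size of each coefficient is the explanation.
intercept, weights = local_surrogate(lambda z: 2.0 + 4.0 * z[0] - 1.0 * z[1], [1.0, 2.0])
print(round(intercept, 3), [round(w, 3) for w in weights])
```

For a black box that is already linear, as here, the surrogate recovers its coefficients; for a nonlinear model it would only describe the behavior near the chosen instance, which is the source of the limitations discussed later in this section.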

SHAP (Shapley Additive Explanations) [21]: Although this method generates more than just texts, as will be shown later, SHAP provides a game-theoretic approach to explain the output of any machine learning model. By computing Shapley values, the method determines the contribution of each feature to a model’s prediction and provides textual explanations that describe the impact of each feature.
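For a handful of features, Shapley values can be computed exactly by averaging each feature's marginal contribution over all orderings, as sketched below. This is not the SHAP library: replacing "absent" features with a fixed baseline value is a simplifying assumption, and `toy_model` and the feature names are hypothetical.

```python
from itertools import permutations
from typing import Callable, Dict

Features = Dict[str, float]

def shapley_values(model: Callable[[Features], float],
                   instance: Features, baseline: Features) -> Dict[str, float]:
    """Exact Shapley values; cost grows factorially with the number of features."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)            # start with every feature "absent"
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # add the feature to the coalition
            value = model(current)
            totals[name] += value - previous  # marginal contribution
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}

def toy_model(x: Features) -> float:
    """Hypothetical score with an interaction between the two features."""
    return 2.0 * x["income"] + 1.0 * x["duration"] + x["income"] * x["duration"]

instance = {"income": 1.0, "duration": 2.0}
baseline = {"income": 0.0, "duration": 0.0}
phi = shapley_values(toy_model, instance, baseline)
print(phi)  # {'income': 3.0, 'duration': 3.0}
```

The two values sum to the difference between the model output for the instance (6.0) and for the baseline (0.0), and the interaction term is split evenly between the features. The numbers say how much each feature contributed, not why.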

Various other methods for generating textual explanations exist. These include, but are not limited to, Rationales [58], InterpNet [59], Relevance Discriminative Loss [60], Most-Weighted-Path, Most-Weighted-Combination and Maximum-Frequency-Difference [61], and Neural-Symbolic Integration [62].

3.1.3. Rule-Based Explanations

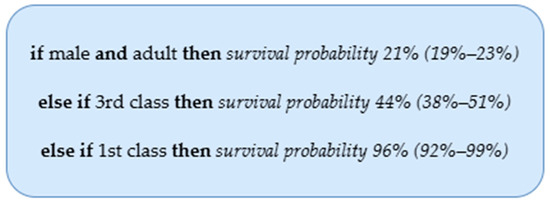

Rule-based explanations are a popular explanation technique in data mining, machine learning, and Artificial Intelligence in which regularities in data are identified through various rule-extraction techniques. These regularities are mostly presented as IF-THEN rules, sometimes with AND/OR operators, as illustrated in Figure 4. The figure shows an example decision list created from the Titanic dataset available in R for the task of predicting whether a passenger survived the Titanic tragedy based on features such as age (adult or child), gender (male or female), and passenger class (first, second, third, or crew) [63]. The “if” statements express conditions on features comparable to the model’s inputs, and the “then” statements give the predicted outcome when those conditions hold.

Figure 4.

Example of rule-based explanations. Source: [64].
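A decision list of this kind is evaluated top to bottom, and the first rule whose condition holds determines both the prediction and its explanation. The sketch below shows the mechanism only: the rules are hypothetical and are not the ones shown in Figure 4.

```python
from typing import Callable, Dict, List, Tuple

Passenger = Dict[str, str]
Rule = Tuple[Callable[[Passenger], bool], str, str]  # (condition, outcome, readable rule)

# Hypothetical rules for illustration; not learned from the Titanic data.
DECISION_LIST: List[Rule] = [
    (lambda p: p["age"] == "child" and p["pclass"] in ("first", "second"),
     "survived",
     "IF age = child AND class IN (first, second) THEN survived"),
    (lambda p: p["sex"] == "female" and p["pclass"] != "third",
     "survived",
     "ELSE IF sex = female AND class != third THEN survived"),
    (lambda p: True,
     "died",
     "ELSE died"),
]

def classify(passenger: Passenger) -> Tuple[str, str]:
    """Return the outcome and the text of the first rule that fires."""
    for condition, outcome, text in DECISION_LIST:
        if condition(passenger):
            return outcome, text
    raise ValueError("decision list has no default rule")

print(classify({"age": "adult", "sex": "female", "pclass": "first"}))
```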

Among the current XAI methods, this approach provides high interpretability of black boxes [37,64], although there is room for improvement when it is assessed from the holistic perspective of the logic and theory of explanation. The key methods used to provide such explanations are the following:

Decision Trees [65]: One of the oldest and most intuitive methods for model explanation. Decision trees split input data into various branches based on specific features, making them highly interpretable. These trees provide explicit rules that explain how a decision is made.

Anchors [66]: Anchors generate simple, understandable decision rules that are highly accurate in specific regions of the input space. These rules are based on the important features that “anchor” the decision in a given region of the input space.

Scalable Rule Lists and Rule Sets [67,68,69]: These methods extract human-readable decision rules directly from a model. They are often used with ensemble methods like decision forests or other machine learning algorithms that rely on multiple decision-making processes.

Various other methods exist, such as Discriminative Patterns [70], MYCIN [71], Fuzzy Inference Systems [72], Automated Reasoning [73], Genetic Rule Extraction (G-REX) [74], Global to Local eXplainer (GLocalX) [75], Mimic Rule Explanation (MRE) [76], and many others detailed in [37]. These can generate rules that explain the decision made by the model, although the rules may take a form other than IF…THEN.

3.1.4. Numerical/Graphical Explanations

As indicated by their name, numerical or graphical explanations provide a quantitative understanding of the model’s predictions or decisions. These explanations often rely on numbers, charts, or statistical representations to help users understand the model’s behavior. The key methods in this category include:

Partial Dependence Plots (PDPs) [77,78]: PDPs provide a graphical representation of the relationship between a feature and the predicted outcome, averaged over the observed values of the other features. This method helps to visualize the average effect of a feature on the model’s prediction.

Feature Importance Scores [79]: Feature importance methods rank the features based on their contribution to the prediction. For example, Permutation Feature Importance measures the change in the model’s performance when a feature’s values are shuffled, providing insights into how much that feature impacts the model.
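Permutation feature importance can be sketched as follows: shuffle one column, re-score the model, and record the drop in accuracy. The helper names, the toy model, and the data are hypothetical, and accuracy is assumed as the performance measure.

```python
import random
from typing import Callable, Dict, List

Row = Dict[str, float]

def accuracy(model: Callable[[Row], str], rows: List[Row], labels: List[str]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model: Callable[[Row], str], rows: List[Row],
                           labels: List[str], feature: str,
                           n_repeats: int = 10, seed: int = 0) -> float:
    """Mean drop in accuracy when the values of `feature` are shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats

def toy_model(row: Row) -> str:
    """Hypothetical classifier that looks at loan duration only."""
    return "good" if row["duration"] > 12 else "bad"

rows = [{"duration": d, "noise": n}
        for d, n in [(6, 1), (24, 5), (36, 2), (9, 7), (48, 3), (10, 4)]]
labels = [toy_model(r) for r in rows]

print("duration:", permutation_importance(toy_model, rows, labels, "duration"))
print("noise:", permutation_importance(toy_model, rows, labels, "noise"))
```

Shuffling a feature the model ignores leaves accuracy unchanged, so its importance is zero, whereas shuffling a feature the model relies on can only lower accuracy in this toy setting.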

SHAP Values [80]: SHAP values provide a numerical explanation by attributing each feature’s contribution to the final prediction. These values can be visualized through force plots, offering a combination of both numerical and graphical insights into the model’s decision-making process.

ICE (Individual Conditional Expectation) Plots [81]: These plots illustrate how the model’s prediction changes when a particular feature varies while others remain fixed, offering a detailed visualization of the impact of specific features.
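The relationship between ICE curves and partial dependence is easy to state in code: an ICE curve varies one feature for a single instance, and the partial dependence curve is the pointwise average of the ICE curves over the dataset. The sketch below assumes a hypothetical linear risk model and made-up data.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]

def ice_curve(model: Callable[[Row], float], instance: Row,
              feature: str, grid: Sequence[float]) -> List[float]:
    """Predictions for one instance as `feature` sweeps the grid, others fixed."""
    return [model({**instance, feature: value}) for value in grid]

def partial_dependence(model: Callable[[Row], float], dataset: List[Row],
                       feature: str, grid: Sequence[float]) -> List[float]:
    """Pointwise average of the ICE curves of every row in the dataset."""
    curves = [ice_curve(model, row, feature, grid) for row in dataset]
    return [sum(curve[k] for curve in curves) / len(curves)
            for k in range(len(grid))]

def toy_risk(row: Row) -> float:
    """Hypothetical risk score used only for illustration."""
    return 0.1 * row["duration"] + 0.5 * row["debtors"]

data = [{"duration": 12.0, "debtors": 1.0}, {"duration": 24.0, "debtors": 3.0}]
grid = [0.0, 10.0]

print(ice_curve(toy_risk, data[0], "duration", grid))    # [0.5, 1.5]
print(partial_dependence(toy_risk, data, "duration", grid))  # [1.0, 2.0]
```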

Testing with Concept Activation Vectors (TCAV) [82]: TCAV is an explanation method for interpreting neural networks in which the language of explanation is expanded from input features to human-friendly concepts for greater intelligibility.

XAI methods that provide numerical or graphical explanations are abundant, including, but not limited to, Distill-and-Compare [83], Feature Contribution [84,85], Gradient Feature Auditing (GFA) [86], Shapley–Lorenz–Zonoid Decomposition [25], Probes [87], and Contextual Importance and Utility [88]. Illustrations of numerical and graphical explanations can be found in [81].

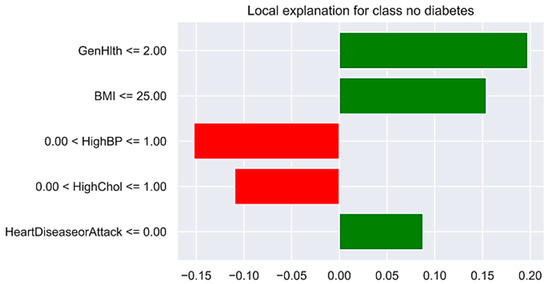

3.1.5. Mixed (Multiformat) Explanations

Mixed explanations combine two or more of the aforementioned formats in a hybrid approach aimed at providing richer, multi-dimensional insights into the model’s behavior by combining the strengths of various formats, as illustrated in Figure 5.

Figure 5.

Illustration of an SP-LIME algorithm calculated from 500 candidate explanations sampled from the data uniformly at random. Red indicates a contribution to non-diabetes and green indicates a contribution to diabetes. Source: [89].

The key methods in this class include, but are not limited to, the following:

LIME and SHAP: Both methods are inherently model-agnostic and allow for explanations that integrate numerical/graphical and textual components. Specifically, LIME explains a model’s decision by approximating it with a simple, interpretable surrogate model, which can be accompanied by a textual explanation of the prediction along with graphical visualizations of feature importance.

Interactive Dashboards: Some systems such as L2X (Learning to Explain) [90], Partial Dependence Plots (PDP) [77,78], and DeepLIFT (Deep Learning Important FeaTures) [47] use interactive visualizations that combine graphical components, like feature importance graphs, with textual summaries or natural-language explanations. These hybrid methods are increasingly being used in domains like healthcare, where practitioners require a combination of quantitative insights and verbal explanations to make informed decisions.

Counterfactual Explanations [91]: These explanations involve presenting a user with an alternative scenario, i.e., what would need to change in the input to alter the prediction. These can be accompanied by both textual and graphical explanations showing the differences between the original and counterfactual instances.
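A minimal counterfactual search over a single feature might look like the sketch below: try candidate values in order of distance from the original and return the first one that flips the prediction. The approval threshold, the feature name, and the candidate grid are hypothetical, and practical methods search over several features with additional plausibility constraints.

```python
from typing import Callable, Dict, Iterable, Optional

Applicant = Dict[str, float]

def find_counterfactual(model: Callable[[Applicant], str], instance: Applicant,
                        feature: str, candidates: Iterable[float]) -> Optional[Applicant]:
    """Return the closest single-feature change that alters the prediction, if any."""
    original = model(instance)
    for value in sorted(candidates, key=lambda v: abs(v - instance[feature])):
        changed = {**instance, feature: value}
        if model(changed) != original:
            return changed
    return None

def toy_model(applicant: Applicant) -> str:
    """Hypothetical decision rule: approve loans of at most 5000."""
    return "approved" if applicant["credit_amount"] <= 5000 else "rejected"

applicant = {"credit_amount": 8000, "duration": 24}
counterfactual = find_counterfactual(toy_model, applicant, "credit_amount",
                                     range(1000, 10001, 1000))
print(toy_model(applicant), "->", counterfactual)
```

The result can be phrased for the user as "the loan would have been approved had the requested amount been 5000 instead of 8000".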

There exist other XAI methods in this category, including, but not limited to, Image Caption Generation with Attention Mechanism [92], Bayesian Teaching [93], Neighborhood-based Explanations [94], ExplAIner [95], Sequential Bayesian Quadrature (SBQ) [96], and Pertinent Negatives [97].

3.2. Shortfall in Conveying Structured Human-Readable Explanations That Make Transparent the Reasoning Process

Despite the emergence of over 200 XAI techniques and various explanation formats, current methods often fail to deliver cognitively accessible and structured explanations that clearly reveal the model’s reasoning process. This shortfall undermines user trust and hampers the adoption of AI in critical fields such as healthcare, finance, and autonomous systems [98,99,100].

3.2.1. Lack of Contextual Relevance in Visual Explanations

Visual tools like saliency maps, Grad-CAM, and activation maximization help localize influential input regions, yet they often lack contextual grounding. For example, saliency maps may highlight areas without indicating why these features matter, especially when domain-specific knowledge is required to interpret them [101,102]. Techniques like SmoothGrad and Guided Grad-CAM improve the visual clarity but still lack semantic context integration [42]. Consequently, while useful for model debugging, these methods offer limited support for end-users needing actionable insights. As seen in medical imaging applications (e.g., pneumonia/COVID-19 diagnosis), such visual outputs can be unintelligible without prior expertise, limiting their transparency and auditability.

3.2.2. Ambiguity and Imprecision in Textual Explanations

Textual explanation methods like LIME, SHAP, and Anchors aim for interpretability but often oversimplify complex models or use jargon inaccessible to non-experts [103]. LIME, for instance, approximates black-box behavior with local linear models, potentially misrepresenting global reasoning. SHAP provides feature importance grounded in game theory yet lacks insight into why such features contribute, especially when multiple interactions are involved [26]. These methods often fail to communicate the reasoning process, leaving users unable to trace or verify the model’s logic, which critically undermines trust.

3.2.3. Limitations of Rule-Based Explanations

Rule-based approaches—like decision trees, anchors, and model-agnostic logic rules—are intuitive but struggle with scalability in high-dimensional contexts [69]. As complexity increases, rule sets become large and fragmented, overwhelming users and obscuring global model behavior. Local rule-based methods like Anchors, while interpretable in isolation, often lack generalizability and fail to provide a holistic view of model reasoning [104,105].

3.2.4. Overload from Numerical and Graphical Explanations

Quantitative methods—such as feature-importance rankings, PDPs, ICE plots, and SHAP visualizations—effectively quantify feature contributions but often overwhelm users with data in high-dimensional settings [106]. Graphs like SHAP summary plots demand mathematical literacy to interpret, excluding non-experts. For instance, interpreting Figure 4 requires an understanding of symbolic data on 2D plots, which restricts accessibility. Without simplified, domain-aligned presentations, these tools hinder rather than support comprehension, especially where interpretability and accountability are essential.

3.2.5. Mixed-Explanation Methods and Cognitive Overload

Multiformat approaches combining more than one format, such as textual, visual, graphical and rule-based explanations, offer comprehensive insights but often at the cost of usability. Users must navigate multiple formats, risking redundancy, inconsistency, and cognitive overload. The added complexity can obscure rather than clarify the model’s behavior, raising questions about the necessity and effectiveness of combining multiple explanation modalities.

3.2.6. Confabulation and Lack of a Formal Logical Structure in LLM-Based Explanations

Recent efforts to integrate Large Language Models (LLMs) into XAI frameworks show promise due to their natural-language capabilities. However, LLM-generated explanations often suffer from confabulations and lack a formal logical structure [24]. Their outputs, while fluent, may be inconsistent or unverifiable, particularly in high-stakes domains. For example, in the case of bank loan evaluations, stating that an applicant was approved because they “resembled others who were approved” lacks transparency about the criteria applied in the current case and fails to support trustworthy decision-making.

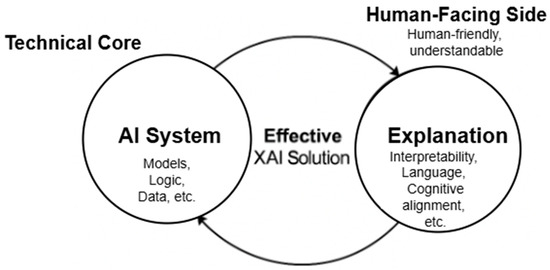

4. Twofold Need for Improvement in XAI Research

Explainable Artificial Intelligence (XAI) presents a dual-faceted challenge: the technical imperative of producing faithful and accurate representations of model behavior, and the human-centered need for interpretability, usefulness, and actionability. While significant advances have been made in developing explanation techniques, current methods often struggle to reconcile these two dimensions, as discussed in Section 3. As illustrated in Figure 6, this bifurcation reflects the tension between extracting and representing information from inherently opaque models and addressing the cognitive, psychological, and social factors that influence human understanding, trust, and interaction with AI systems [107,108,109,110]. Bridging this gap remains a critical concern for the XAI research community: effective solutions must not only uncover the rationale behind algorithmic decisions but also render them transparent and cognitively accessible, integrating algorithmic transparency with human-centered communication strategies.

Figure 6.

The two facets of XAI challenges in the field.

In light of the construct presented in Figure 6 (the two facets of XAI), two fundamental questions emerge: (1) From a technical standpoint, is it possible to discuss intelligence—or intelligent systems—without invoking the notion of “knowledge”? If not, how adequate are current knowledge representation approaches in existing XAI methods? (2) Can the challenge of explainability be effectively addressed without engaging with the underlying theoretical foundations of the “explanation” concept itself? The intersection of these two questions highlights the need to assign greater importance to both knowledge representation and the theory of explanation as the XAI community continues its efforts to foster effective user trust in AI systems. These issues are further explored in the following paragraphs.

4.1. The Need for Enhanced Knowledge Representation

From cognitive, philosophical, and psychological perspectives, intelligence and knowledge are fundamentally intertwined. Intelligence is broadly defined as the capacity to apply knowledge for problem-solving, adapt to new situations, and understand complex relationships, while knowledge refers to the facts, information, and skills acquired through experience or education. It is the dynamic interaction between the two that underpins intelligent behavior.

Cognitive science provides a structured view of this relationship, emphasizing that intelligence emerges from both the storage and effective application of structured knowledge. Anderson’s cognitive architecture and the ACT-R theory, for instance, illustrate the centrality of schemas and mental representations in enabling intelligent action [111]. Likewise, Newell and Simon’s problem-solving theory posits that intelligent behavior involves navigating a solution space by leveraging prior knowledge [112].

Philosophical discourse reinforces this perspective. Plato, in The Republic, asserts that knowledge is the foundation of intellectual virtue and a prerequisite for achieving truth and understanding [113]. Descartes’ famous assertion, “Cogito, ergo sum” (“I think, therefore I am”), underscores the indivisibility of thought and knowledge, suggesting that clear and distinct thinking is contingent upon well-grounded knowledge [114].

Psychological theories echo this view. Piaget emphasizes that intelligence develops through the assimilation and accommodation of new knowledge into existing mental schemas [115], while Vygotsky argues that cognitive development—and by extension, intelligence—is socially and culturally mediated through knowledge acquisition and transmission [116].

Building on these foundational insights, the formalization of knowledge for artificial systems began in the 1950s, coinciding with the emergence of early AI. Pioneers such as Marvin Minsky [117], John McCarthy [118,119], and Seymour Papert [120], drawing inspiration from classical philosophers like Aristotle and Plato [121], laid the groundwork for what is now recognized as the field of knowledge representation (KR). Contributions from ancient Eastern traditions, including the Nalanda School, also played a critical role in the early development of KR by promoting structured thought as a cognitive tool [122].

Over the past seven decades, KR has matured into a robust scientific discipline—particularly in ontology engineering—featuring a wide array of techniques that model knowledge through symbolic systems, natural language, and imagery to support intelligent and autonomous software systems [123].

However, despite this rich foundation, many contemporary explainable AI (XAI) approaches inadequately integrate core KR principles. This oversight is most evident in data-driven methods, but even knowledge-based approaches, which should enhance interpretability, often misrepresent the knowledge they encode. These methods frequently fall short of the established criteria for KR adequacy, resulting in representations that are incomplete, ambiguous, or opaque [124].

To mitigate these issues, hybrid approaches such as neuro-symbolic AI have emerged that combine the learning capacity of neural networks with the interpretability of symbolic reasoning [125]. While promising in theory, these models encounter challenges in aligning sub-symbolic and symbolic components, often leading to inconsistencies. This misalignment can undermine interpretability, scalability, and transparency—especially in complex or high-stakes domains.

Similarly, knowledge graphs have gained popularity for encoding semantic relationships and supporting AI decision-making processes [126]. They are particularly effective for modeling static relationships and offer high interpretability. However, unless integrated with reasoning engines, they lack the logical rigor required for dynamic or causal inference, thus limiting their utility in domains where explainability is critical.

In sum, accurately and explicitly representing knowledge in a reasoning-compatible manner is essential for developing transparent and trustworthy AI systems, ensuring compliance with representational adequacy standards [124].

4.2. The Need for Effective and Efficient Integration of Explanation Theory into Explainability Design

As explicit knowledge representation emerges as a candidate solution to the first facet of explainable AI—enabling AI systems that are inherently capable of being explained, as discussed and illustrated in Figure 6—the theory of explanation presents itself as the complementary solution to the second critical facet: generating human-compatible explanations grounded in explanation constructs.

A critical challenge in current XAI methods is the insufficient integration of formal explanation theories, which contributes to opaque decision-making in AI systems. Without a clear theoretical foundation, these systems often lack transparency, limiting the traceability and auditability of their reasoning processes. While some efforts have incorporated explanation theories—yielding promising results—the absence of a widely accepted, foundational framework within the XAI community remains a major obstacle.

Several scholars have advocated integrating causal explanation theories, particularly Judea Pearl’s framework of causal inference [127], which models relationships using Bayesian networks [128] and supports counterfactual reasoning through directed acyclic graphs (DAGs). These tools enable mathematically rigorous intervention-based explanations. However, their complexity often hinders accessibility for non-experts, limiting their ability to foster trust. The transparency offered by causal models is indirect, relying on a user’s capacity to interpret statistical relationships and manipulate causal structures.

Other approaches, such as Granger causality [129], causal discovery algorithms [130], and structural causal models [131], have also been explored for their potential to enhance interpretability. Granger causality, while useful for detecting temporal dependencies in time-series data, offers limited support for non-temporal causal reasoning and often lacks intuitive interpretability. Similarly, causal discovery methods—though promising in extracting causal graphs from data—depend heavily on assumptions (e.g., no hidden confounders), and the resulting models are typically opaque to end-users without domain expertise.

Collectively, these methods underscore the promise of causal reasoning for improving explainability and trust in AI. Yet their effectiveness is contingent upon translating complex mathematical outputs into accessible, human-understandable explanations—a challenge that remains unresolved in current XAI practices.

In sum, advancing explainability in AI requires a deliberate and systematic integration of explanation theory. In scientific disciplines, theory serves as the backbone of research, guiding experimental design, methodological development, and result interpretation [132,133]. Without it, explainability research risks becoming fragmented and methodologically incoherent. The lack of a unified theoretical framework has led to ad hoc and domain-specific XAI solutions that are often incapable of delivering transparent reasoning or actionable insights.

This is particularly problematic in high-stakes domains such as healthcare, finance, transportation, and law, where understanding why an AI system makes a decision is as crucial as understanding what that decision is. To achieve truly interpretable and end-to-end explainable AI, the field must adopt a general, formal theory of explanation as the foundation for method development and evaluation. Only then can XAI systems consistently produce explanations that are transparent, trustworthy, and aligned with domain knowledge.

5. Nomological Deductive Reasoning (NDR): A Proposed Solution

Building on the foundations laid in Section 4.1 and Section 4.2, we propose the Nomological Deductive Reasoning (NDR) framework—an approach grounded in Nomological Deductive Knowledge Representation (NDKR)—to address the persistent transparency gap in contemporary explainable AI (XAI) methods. NDR bridges this gap by integrating explicit knowledge representation with Hempel’s deductive–nomological model, thereby enabling the generation of explanations that are both logically rigorous and readily interpretable by human users. At its core, the framework is based on the premise that meaningful explanation requires structured, domain-specific knowledge. Consequently, knowledge representation is treated not merely as a design component but as a foundational mechanism for explainability.

Crucially, the NDKR technique introduces a formal constraint: machine learning models must consult a structured knowledge base—comprising general laws and antecedent conditions—prior to making predictions. This constraint ensures that decision-making is not solely data-driven but also aligned with established causal and logical principles. As a result, the model embeds an internal mechanism for consistency checking and epistemic validation.

Moreover, the NDR framework is inspired by Hempel’s covering-law model of explanation [134], a foundational theory in the philosophy of science that continues to shape formal approaches to explanation. Although more recent models have sought to better reflect human cognitive processes [26,135], rule-based methods remain particularly effective in producing explanations that are transparent, trustworthy, and computationally efficient. Accordingly, by integrating causal inference, deductive reasoning, and domain-specific knowledge, NDR enhances system interpretability and fosters user confidence—without compromising algorithmic performance.

In operational terms, the framework constructs explanations through deductive inference: outcomes are logically derived from general laws and specific conditions. This process mirrors human causal reasoning [136,137,138,139], thereby yielding explanations that are both logically coherent and cognitively aligned. Within the deductive–nomological (D-N) model, a valid explanation demonstrates how the explanandum (the phenomenon to be explained) necessarily follows from the explanans (a set of laws and initial conditions).

This methodological choice is further supported by its effectiveness in addressing “why” questions with clarity and precision. As detailed in Appendix A, our analysis of competing explanatory theories reveals a common emphasis on intelligibility, testability, and causal grounding as key criteria for effective explanation. Furthermore, as noted by Hume [140], human knowledge presupposes that the universe operates under consistent and predictable laws, even when such laws are not immediately observable. Explanations framed in terms of domain-specific laws or principles are therefore more readily understood by human users.

Under the covering-law model, as expressed in the deductive–nomological schema, a phenomenon may be described in many ways, but it is explained by subsuming it under general laws, i.e., by showing that it occurred in accordance with those laws by virtue of the realization of certain specified antecedent conditions. A D-N explanation is therefore a deductive argument in which the explanandum statement follows logically from the explanans [134,141]. Formally, the explanans consist of general laws $L_1, L_2, \ldots, L_n$ and antecedent conditions $C_1, C_2, \ldots, C_m$, as summarized by Equation (1):

$$\underbrace{L_1, L_2, \ldots, L_n,\; C_1, C_2, \ldots, C_m}_{\text{explanans}} \;\vdash\; \text{explanandum} \tag{1}$$

where $L_1, \ldots, L_n$ represent the universal generalizations or laws in a certain domain of knowledge, and $C_1, \ldots, C_m$ represent the antecedent conditions that must hold for the law(s) to apply. This covering-law approach can be applied to explanations in any domain, so that any explanation can be analyzed through the lens of the explanation premises captured in Table 2.

Table 2.

Premises of explanation arguments.

5.1. Mathematical Model of the NDR Framework

- Laws (L): Let $L = \{L_1, L_2, \ldots, L_n\}$ represent the set of laws or rules governing a certain real-world domain (healthcare diagnosis, bank credit scoring, traffic code for mobility applications, criminal justice, etc.). These laws are formalized as logical statements or principles that provide the foundation for reasoning in the system. Each law $L_i$ corresponds to a specific rule or law within the system. Example (in credit assessment settings):

  - $L_1$: “If an applicant has multiple financial obligations and a credit purpose that is considered risky, then they are at high risk of default”.

  - $L_2$: “If the loan duration is 12 months and the applicant has stable employment, then the credit is classified as ‘Good’”.

- Conditions (C): Let $C = \{C_1, C_2, \ldots, C_m\}$ denote the set of antecedents or conditions that must hold true in order for a law to be applicable to a particular data instance. Each condition $C_j$ is a prerequisite that must be satisfied for the corresponding law to be activated or relevant. Example (in credit assessment settings):

  - $C_1$: “Applicant has stable employment”.

  - $C_2$: “The loan duration is 12 months”.

- Data instances (D): Let $D = \{D_1, D_2, \ldots, D_k\}$ represent the set of input data fed into the AI system. Each $D_i$ represents a specific instance or data sample. Example (in credit assessment):

  - $D_1$: A data sample where the applicant has stable employment and is applying for a loan payable in more than 12 months.

  - $D_2$: A data sample where the applicant does not have any job and is asking for a loan payable in 3 months.

- Hypothesis or Prediction (H): Let $H = \{H_1, H_2, \ldots, H_k\}$ represent the set of predictions or outcomes generated by the AI model. Each $H_i$ corresponds to the prediction or outcome generated for the instance $D_i$. Example (in credit assessment):

  - $H_1$: “The applicant is at high risk for loan payment default”.

  - $H_2$: “The applicant is not at high risk for loan payment default”.

- Formalized Deductive Inference: The key goal of the NDR framework is to use deductive reasoning to formalize how the AI model generates a prediction based on the combination of conditions $C$ and laws $L$ applied to the input data $D_i$. The formalized deductive inference is represented as

  $$D_i \wedge C \wedge L \;\vdash\; H_i$$

  where

  - $D_i$ is an input data instance;

  - $C$ are the conditions (e.g., characteristics of the applicant);

  - $L$ are banking principles or laws (e.g., rules about how conditions relate to a prediction such as good or bad credit);

  - $\vdash$ denotes the deductive reasoning process, where the combination of applicant conditions and banking laws leads to the prediction $H_i$ for the given instance $D_i$.

- Formalized Explanation Generation: Once we have the laws, conditions, and input data, the explanation for the prediction can be expressed as

  $$E_i = f(L, C, D_i) \Rightarrow H_i$$

  where

  - $E_i$ is the explanation for the prediction $H_i$;

  - $f$ is the function that describes how the laws $L$, conditions $C$, and data $D_i$ combine to generate the outcome $H_i$;

  - $\Rightarrow$ indicates the reasoning flow from the combination of laws and conditions to the prediction.
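As a worked illustration of the deductive schema, the credit example above can be written out as a single derivation. The notation is an assumption made for this sketch: $a$ is a hypothetical applicant, and the predicate names $\mathrm{Duration12}$, $\mathrm{StableEmployment}$, and $\mathrm{GoodCredit}$ are illustrative labels for the quoted law and conditions, not identifiers defined by the framework.

```latex
\[
\begin{array}{ll}
L_2: & \forall x\,\bigl(\mathrm{Duration12}(x) \wedge \mathrm{StableEmployment}(x)
        \rightarrow \mathrm{GoodCredit}(x)\bigr) \\
C_1: & \mathrm{StableEmployment}(a) \\
C_2: & \mathrm{Duration12}(a) \\
\hline
H:   & \mathrm{GoodCredit}(a)
\end{array}
\]
```

The conclusion below the line follows by universal instantiation of the law at $a$ and modus ponens on the two conditions. The explanation of the prediction is then the list of premises above the line.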

5.2. NDR Framework Architecture and Operational Integration with Machine Learning

The Nomological Deductive Reasoning (NDR) framework is designed to produce interpretable, actionable decisions by combining data-driven feature extraction with symbolic reasoning grounded in domain knowledge. This section describes the full system architecture, explains how knowledge is encoded, and details the interaction between machine learning and deductive logic. A structured flow and pseudocode are also provided to operationalize the theory in computational terms.

5.2.1. System Architecture Overview

To operationalize the Nomological Deductive Reasoning (NDR) framework, we propose a multi-layered architecture that integrates symbolic knowledge representation, statistical learning, and deductive inference into a unified explanation pipeline. Figure 7 illustrates the overall system design, detailing how each component—from domain knowledge encoding to user interaction—contributes to generating transparent, norm-compliant, and causally grounded explanations. This architecture supports the core objective of aligning machine learning outputs with structured, law-based reasoning to enhance trust and accountability in AI decision-making.

Figure 7.

Proposed architecture for an NDR-based explanation approach.

The Knowledge Representation Layer encodes structured domain-specific knowledge (such as legal statutes, clinical guidelines, or financial regulations) into a semantic ontology using symbolic rules and causal relationships. This layer forms the epistemic foundation for deductive reasoning. The Machine Learning Layer (or AI Model Layer) performs statistical learning using traditional [78,142] or deep learning models [143]. It extracts salient features from input data and provides probabilistic or deterministic predictions. The Reasoning and Deductive Inference Engine then evaluates these predictions against the symbolic rules encoded in the knowledge base: using logical inference and causal reasoning, it verifies whether the AI’s output aligns with normative expectations and domain constraints. The inferred decision is then passed to the Explanation Generation Layer, which produces human-readable, law-based explanations grounded in the domain-specific knowledge encoded in the knowledge base. These explanations are crafted to be both comprehensible and traceable. Finally, the generated explanation is sent to the User Interaction and Trust Evaluation Layer, which interfaces with end-users and supports transparent decision communication. Each component contributes to explainability, traceability, and robustness in high-stakes decision-making scenarios such as medical diagnosis, credit-risk assessment, and criminal justice.

5.2.2. Knowledge Encoding: Symbolic Rule Definition

The knowledge base in NDR is composed of two components: domain-specific laws (L) and antecedent conditions (C). These are hand-encoded as logical rules formalized using first-order logic and operationalized as IF–THEN statements. The following are example rules (symbolic format) in a financial-use case:

- IF checking_account = None AND savings < 100 THEN LiquidityRisk = TRUE;

- IF employment_duration < 1 year AND age < 30 THEN IncomeInstability = TRUE;

- IF LiquidityRisk = TRUE AND IncomeInstability = TRUE THEN CreditRisk = High.

Each rule maps observed conditions to semantic risk categories. These rules are stored in a structured ontology that defines the hierarchy and relationships between classes (e.g., ApplicantProfile, RiskIndicator) and properties (e.g., hasCreditBehavior, leadsToRecommendation).
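The three IF–THEN rules above can be sketched as a small forward-chaining loop. This is a minimal illustration rather than the framework's implementation: the rule identifiers (`R1` to `R3`), the `forward_chain` helper, and the encoding of a missing checking account as the string `"none"` are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, List, Tuple

Facts = Dict[str, Any]
# A rule is (name, condition over the facts, (derived key, derived value)).
Rule = Tuple[str, Callable[[Facts], bool], Tuple[str, Any]]

# Hypothetical encoding of the three example rules from the text.
# Missing numeric fields default to +inf so that a "<" test cannot fire by accident.
RULES: List[Rule] = [
    ("R1",
     lambda f: f.get("checking_account") == "none"
     and f.get("savings", float("inf")) < 100,
     ("LiquidityRisk", True)),
    ("R2",
     lambda f: f.get("employment_duration", float("inf")) < 1
     and f.get("age", float("inf")) < 30,
     ("IncomeInstability", True)),
    ("R3",
     lambda f: f.get("LiquidityRisk") is True
     and f.get("IncomeInstability") is True,
     ("CreditRisk", "High")),
]


def forward_chain(facts: Facts, rules: List[Rule]) -> Tuple[Facts, List[str]]:
    """Apply rules repeatedly until no new fact is derived."""
    derived = dict(facts)          # do not mutate the caller's facts
    fired: List[str] = []          # names of activated rules, in firing order
    changed = True
    while changed:
        changed = False
        for name, condition, (key, value) in rules:
            if name not in fired and condition(derived):
                derived[key] = value
                fired.append(name)
                changed = True
    return derived, fired


applicant = {"checking_account": "none", "savings": 50,
             "employment_duration": 0.5, "age": 22}
derived_facts, fired_rules = forward_chain(applicant, RULES)
print(fired_rules, derived_facts.get("CreditRisk"))
```

Keeping the list of fired rules alongside the derived facts is what later allows an explanation to cite the exact rules that led to a risk category.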

5.2.3. Integration of Machine Learning and Deductive Reasoning

In the NDR framework, machine learning (ML) and symbolic reasoning interact sequentially and complementarily. The ML model is responsible for feature extraction—identifying which attributes are most predictive for the task at hand. However, the final decision is not directly adopted from the ML model. Instead, the most influential features are passed onto the reasoning layer for formal evaluation. This decoupling ensures that ML contributes empirical insights by identifying relevant features, and the deductive system ensures interpretability, logical consistency, and compliance with domain laws. This integration follows a two-stage architecture:

- Stage 1 (ML Model Prediction): A supervised ML model (e.g., deep neural network, random forest, or any other model) generates a prediction $H_i$ for input $D_i$.

- Stage 2 (Deductive Reasoning Engine): The reasoning module takes the input instance $D_i$, the prediction $H_i$, and the knowledge base components $L$ and $C$, and then verifies whether the prediction can be deduced from the knowledge base, i.e., whether $D_i \wedge C \wedge L \vdash H_i$. If the deduction is consistent, the prediction is validated, and a logical explanation is generated. If not, the system raises a trust warning or requests further input.
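The Stage 2 check can be sketched as follows. This is an illustrative reading, not the published implementation: the `Law` class, the `validate_prediction` function, and the field names are hypothetical. The sketch also makes one assumption that the text does not state, namely that a prediction is validated only when at least one applicable law supports it and no applicable law contradicts it.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Instance = Dict[str, Any]


@dataclass(frozen=True)
class Law:
    name: str
    antecedent: Callable[[Instance], bool]  # conditions C evaluated on the instance
    conclusion: str                         # label the law entails, e.g. "Bad"


# Laws paraphrased from the Section 5.1 examples; field names are hypothetical.
LAWS: List[Law] = [
    Law("L1", lambda d: d["num_obligations"] > 1 and d["purpose_risky"], "Bad"),
    Law("L2", lambda d: d["duration_months"] == 12 and d["stable_employment"], "Good"),
]


def validate_prediction(instance: Instance, prediction: str,
                        laws: List[Law] = LAWS) -> Dict[str, Any]:
    """Check whether the ML prediction is deducible from the applicable laws."""
    fired = [law for law in laws if law.antecedent(instance)]
    supporting = [law.name for law in fired if law.conclusion == prediction]
    conflicting = [law.name for law in fired if law.conclusion != prediction]
    if supporting and not conflicting:
        return {"status": "validated", "supporting": supporting, "conflicting": []}
    # No supporting law, or a contradicting one: flag instead of explaining.
    return {"status": "trust_warning", "supporting": supporting,
            "conflicting": conflicting}


applicant = {"num_obligations": 3, "purpose_risky": True,
             "duration_months": 24, "stable_employment": False}
print(validate_prediction(applicant, "Bad"))
print(validate_prediction(applicant, "Good"))
```

Returning the supporting and conflicting law names, rather than a bare boolean, gives the explanation layer the material it needs in the validated case and gives the user a concrete reason in the warning case.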

5.2.4. Inference Flow and Operationalization

The following step-by-step flow describes the full inference pipeline from the raw input to the final recommendation:

- Input: For example, in a bank loan application scenario, applicant data are provided as structured records and constitute the system input.

- ML Feature Extraction: A trained model (e.g., Random Forest) identifies the most influential features. These features are passed as symbolic assertions onto the knowledge layer.

- Symbolic Assertion Mapping: Features are translated into logical predicates (e.g., SavingsLow(Applicant123)).

- Deductive Inference: The reasoning engine applies the rules using forward chaining (modus ponens) to derive intermediate-risk indicators and final recommendations.

- Explanation Generation: Activated rules are converted into natural-language templates.

- Output: A classification decision (e.g., “High Credit Risk”) with justification is presented to the user.

The flowchart of the process is presented in Figure 8.

Figure 8.

Flowchart diagram of NDR-based explanations.
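The symbolic assertion mapping and explanation generation steps of this flow can be sketched as below. Only the predicate `SavingsLow(Applicant123)` and the threshold `savings < 100` come from the text. The second predicate, the template wording, and the helper names `to_predicates` and `explain` are assumptions made for illustration.

```python
from typing import Any, Callable, Dict, List

Features = Dict[str, Any]

# Predicate name -> test on raw feature values (thresholds assumed where noted).
PREDICATE_TESTS: Dict[str, Callable[[Features], bool]] = {
    "SavingsLow": lambda f: f.get("savings", float("inf")) < 100,
    "NoCheckingAccount": lambda f: f.get("checking_account") == "none",  # assumed encoding
}

# Predicate name -> natural-language fragment (hypothetical wording).
TEMPLATES: Dict[str, str] = {
    "SavingsLow": "savings are below 100",
    "NoCheckingAccount": "there is no checking account",
}


def to_predicates(applicant_id: str, features: Features) -> List[str]:
    """Translate feature values into logical assertions about the applicant."""
    return [f"{name}({applicant_id})"
            for name, test in PREDICATE_TESTS.items() if test(features)]


def explain(decision: str, predicates: List[str]) -> str:
    """Render the activated predicates as a single human-readable sentence."""
    reasons = [TEMPLATES[p.split("(", 1)[0]] for p in predicates]
    if not reasons:
        return f"No rule supports the decision '{decision}'."
    return f"The decision is '{decision}' because " + " and ".join(reasons) + "."


assertions = to_predicates("Applicant123", {"savings": 50, "checking_account": "none"})
print(assertions)
print(explain("High Credit Risk", assertions))
```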

5.3. Use-Case Implementation of the NDR-Based Explanations

The initial testing of the NDR framework was conducted for credit scoring on the German Credit dataset [33] by integrating NDR with a Random Forest Classifier algorithm. The experiment aimed to evaluate the framework’s performance in explaining the reasoning behind the predicted creditworthiness based on the input data. Algorithm 1 was used.

5.3.1. Knowledge Base and Ontology Construction in NDR

In the Nomological Deductive Reasoning (NDR) architecture, the knowledge base (KB) serves as the epistemic substrate enabling norm-guided reasoning and explainable inference. Built atop a semantically structured ontology, the KB integrates two core components: domain-specific laws (L) and antecedent conditions (C). These components are formalized using first-order logic (FOL) and operationalized as IF–THEN rules, ensuring that decisions made by machine learning models are both interpretable and normatively aligned. This section details how the KB was constructed using the German Credit Data [33] and aligned with the NDR framework to support high-stakes decision-making in credit-risk assessment.

Ontology Design

The Nomological Deductive Reasoning (NDR) ontology serves as a formal semantic framework for representing the entities, attributes, and interrelations essential for reasoning over structured credit assessment data. It facilitates interpretable, logic-based inference by encoding both normative domain knowledge and case-specific facts in a machine-understandable format. This ontology is structured around key concept classes, which include Applicant, CreditApplication, FinancialProfile, CreditHistory, EmploymentStatus, PropertyOwnership, and RiskAssessment. These classes collectively model an applicant’s demographic attributes, financial behavior, loan parameters, and creditworthiness outcome.

Data properties define attributes such as age, loan amount, duration, employment duration, and credit history. For example, hasAge, hasLoanAmount, hasSavings, and hasNumExistingLoans support quantitative and categorical assessment criteria central to credit decision-making logic.

Object properties (e.g., appliesFor, hasProfile, hasEmploymentStatus) capture relationships between entities, enabling integrated reasoning across multiple dimensions of credit evaluation.

To support logical deduction, the ontology defines derived classes through rule-based expressions using thresholds. For instance,

- Young(x) ≡ hasAge(x) < 25;

- HighLoan(x) ≡ hasLoanAmount(x) > 10,000;

- StableEmployment(x) ≡ hasEmploymentDuration(x) > 1;

- OwnsProperty(x) ≡ ownsProperty(x) = true.

These formally defined concepts underpin the NDR framework’s rule-based laws (e.g., L1–L15 as defined in the Domain-specific Laws’ subsection), enabling precise, explainable reasoning over structured representations aligned with financial norms.

Domain-Specific Laws (L)

Domain-specific laws encode general principles about credit risk that are derived from financial expertise and empirical patterns. In the NDR framework, these laws are formalized in FOL and expressed as deductive rules that define the logical conditions under which an applicant is expected to be high- or low-risk.

L1: High Loan + No Checking Account ⟹ High Risk: Applicants requesting high loan amounts without a checking account are likely to be high-risk: ∀x (HighLoan(x) ∧ NoCheckingAccount(x) → HighRisk(x)).

L2: Young Age + Long Loan Duration ⟹ High Risk: Young applicants with long loan durations are more likely to default: ∀x (Young(x) ∧ LongLoanDuration(x) → HighRisk(x)).

L3: Stable Employment + High Savings ⟹ Low Risk: Stable employment and substantial savings indicate financial reliability: ∀x (StableEmployment(x) ∧ HighSavings(x) → LowRisk(x)).

L4: Multiple Existing Loans ⟹ High Risk: Multiple existing credits increase the financial burden and risk: ∀x (NumExistingLoans(x) > 2 → HighRisk(x)).

L5: Long Loan Duration Indicates Lower Risk: Longer loan durations are typically associated with structured repayment plans, which reflect lender confidence.

L6: Full Repayment of Previous Credits Implies Trustworthiness: Applicants who have fully repaid past credits are less likely to default.

L7: Stable Employment (>1 year) Signals Financial Stability: Consistent employment indicates a reliable income source.

L8: Ownership of a House or Real Estate Reduces Risk: Property ownership suggests financial maturity and collateral availability.

L9: Very Young Applicants (<25) Have Elevated Default Risk: This reflects the behavioral and income uncertainty that are often correlated with young age groups.

L10: Long-Term Employment (>2 years) Significantly Reduces Risk: Extended employment history contributes strongly to financial reliability.

L11: Absence of a Foreign Worker Status Positively Influences Creditworthiness: Applicants who are not classified as foreign workers exhibit stronger integration and employment stability, which favorably impact credit assessments.

L12: Certain Personal Status Categories Contribute Positively to Credit Assessment: Applicants falling within specific personal status categories (e.g., single male, divorced female) are associated with slightly improved creditworthiness, potentially due to social or economic correlations.

L13: A Low Job Classification Marginally Enhances the Credit Evaluation: Contrary to conventional assumptions, being in the lowest job tier may correlate with basic but stable employment, contributing weakly positively to credit decisions.

L14: A Limited Credit History Increases the Risk of Default: Applicants with minimal or unfavorable credit histories demonstrate higher uncertainty in repayment behavior, which slightly increases risk.

L15: A Low Checking Account Status Slightly Decreases Creditworthiness: Applicants without a robust checking account history tend to receive lower credit assessments due to their perceived lack of financial infrastructure.

Each predicate (e.g., HighLoan, Young, HighRisk) is grounded in ontological definitions, where threshold values and categorical labels (e.g., “young” = age < 25) are specified as concept constraints or semantic annotations.

Antecedent Conditions (C)

Antecedent conditions represent instance-specific facts, derived from input data and expressed as logical assertions. These instantiate the ontology with individual-level information. As an example, applicant A123 has the following information:

- Age: 22;

- Checking Account Status: None;

- Employment duration: 0.5;

- Credit history: critical;

- Number of existing loans: 3;

- Savings: less than 100.

These facts map onto the ontology and activate predicates such as

- Young(A123), HighLoan(A123), NoCheckingAccount(A123), UnstableEmployment(A123), NumExistingLoans(A123) > 2.

Logical Reasoning and Inference

Using the logical rules (L) and instantiated conditions (C), the NDR inference engine performs deductive reasoning to assess whether the AI model’s prediction aligns with the domain norms. For example, applying laws L1, L2, and L4 to A123:

HighLoan(A123) ∧ NoCheckingAccount(A123) → HighRisk(A123)

Young(A123) ∧ LongLoanDuration(A123) → HighRisk(A123)

NumExistingLoans(A123) > 2 → HighRisk(A123)
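The derivation for A123 can be reproduced with a few lines of code. The thresholds for `Young` and `HighLoan` follow the ontology definitions given earlier. The loan amount and loan duration of A123 are not listed among the facts above, so the values used here are hypothetical, as are the 24-month cut-off for `LongLoanDuration` and the concrete savings figure.

```python
from typing import Any, Callable, Dict, List

Applicant = Dict[str, Any]

A123: Applicant = {
    "age": 22,
    "checking_account": None,      # "None" in the text: no checking account
    "employment_duration": 0.5,
    "credit_history": "critical",
    "num_existing_loans": 3,
    "savings": 50,                 # illustrative value for "less than 100"
    "loan_amount": 12_000,         # hypothetical: not listed in the text
    "loan_duration": 36,           # hypothetical: not listed in the text
}


def young(a: Applicant) -> bool:
    return a["age"] < 25                     # Young(x) from the ontology


def high_loan(a: Applicant) -> bool:
    return a["loan_amount"] > 10_000         # HighLoan(x) from the ontology


def no_checking_account(a: Applicant) -> bool:
    return a["checking_account"] is None


def long_loan_duration(a: Applicant, threshold_months: int = 24) -> bool:
    return a["loan_duration"] > threshold_months   # threshold is an assumption


# Antecedents of the three laws applied in the text; each concludes HighRisk.
HIGH_RISK_LAWS: Dict[str, Callable[[Applicant], bool]] = {
    "L1": lambda a: high_loan(a) and no_checking_account(a),
    "L2": lambda a: young(a) and long_loan_duration(a),
    "L4": lambda a: a["num_existing_loans"] > 2,
}


def laws_implying_high_risk(a: Applicant) -> List[str]:
    """Return the names of the laws whose antecedents hold for the applicant."""
    return [name for name, antecedent in HIGH_RISK_LAWS.items() if antecedent(a)]


print(laws_implying_high_risk(A123))
```

Under these assumed values all three laws apply, so `HighRisk(A123)` is derivable by three independent routes, each of which can be cited in the explanation.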

5.3.2. Data Processing and Model Training

The German Credit Dataset [33] comprises 1000 credit applicants, each described by 20 financial and demographic attributes, including features such as credit history, loan duration, employment status, savings level, and age. The target variable, Class, indicates the applicant’s creditworthiness, where a value of 1 represents Good Credit and a value of 0 denotes Bad Credit. The dataset contains a mixture of categorical and numerical variables, offering a comprehensive profile of each individual.

Data Preprocessing:

Categorical variables were transformed into numerical representations using label encoding. Numerical attributes were preserved in their original form. To ensure experimental reproducibility, the dataset was partitioned into a training set (70%) and a testing set (30%) using a fixed random seed (random_state = 42).

Model Training:

A Random Forest classifier was trained on the processed training set with the following settings:

- Number of trees: n_estimators = 100;

- Criterion: default Gini impurity;

- Random seed for reproducibility: random_state = 42;

- Other hyperparameters retained their default Scikit-learn values.

No additional hyperparameter optimization (e.g., grid search or randomized search) was performed, as the objective was to demonstrate baseline model behavior integrated with the NDR framework.

Hardware and Software Environment:

The implementation environment consisted of Python 3.10.11 and Scikit-learn 1.5.1. Model training and evaluation were performed on a machine featuring an Intel Core i5-4200M CPU @ 2.5 GHz (2 cores) and 4 GB of RAM, running Windows 10.

Given the relatively small size of the German Credit dataset (1000 instances), computational resource limitations did not impact the training or evaluation performance. The model was trained and evaluated within a reasonable timeframe without any notable delays or constraints.

Model Evaluation and Explanation Generation:

After training, the Random Forest model was used to generate predictions on the test set. These predictions were assessed using standard evaluation metrics (accuracy and confusion matrix). Additionally, a feature importance analysis was performed to identify the most influential variables, supporting the subsequent deductive reasoning.
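The preprocessing, training, and evaluation settings described above can be sketched with Scikit-learn as follows. To keep the example self-contained, it uses a small synthetic stand-in for the German Credit data: the column names, the value ranges, and the labeling rule are invented for illustration and do not reproduce the paper's data or results. The split ratio, seed, and forest settings are the ones stated in the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder

# Synthetic stand-in data (NOT the German Credit dataset).
rng = np.random.default_rng(0)
n = 200
checking = rng.choice(["none", "low", "high"], size=n)
history = rng.choice(["critical", "paid", "delayed"], size=n)
duration = rng.integers(6, 61, size=n)
age = rng.integers(19, 70, size=n)
# Arbitrary labeling rule so that the classifier has something to learn.
y = ((checking != "none") & (duration < 36)).astype(int)

# Label-encode the categorical columns; keep numerical columns unchanged.
feature_names = ["checking_account", "credit_history", "duration", "age"]
X = np.column_stack([
    LabelEncoder().fit_transform(checking),
    LabelEncoder().fit_transform(history),
    duration,
    age,
])

# 70/30 split with the fixed seed stated in the text.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=42
)

# 100 trees, default Gini criterion, fixed seed; other settings left at defaults.
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred, labels=[0, 1])

# Rank features by importance; the top ones would be handed to the reasoning layer.
ranked = sorted(zip(feature_names, model.feature_importances_),
                key=lambda item: item[1], reverse=True)
print(f"accuracy={accuracy:.2f}")
print(cm)
print(ranked)
```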

The predictions were then passed onto the Nomological Deductive Reasoning (NDR) framework, where they were evaluated against a structured financial knowledge base encoded with domain-specific laws. This process generated semantically grounded, human-readable explanations, aligning the model’s decision-making with established banking norms and ontological definitions. The whole process is described by Algorithm 1, and the implementation code is publicly available at https://github.com/HakizimanaGedeon/NDR-Framework-Explainable-AI, accessed on 20 March 2025.

| Algorithm 1. NDR Framework: SP Algorithm with Formal Inference |
| --- |
| Input: I (input data), KB (Knowledge Base), M (Model), F (Feature Extraction function), R (Reasoning function), E (Explanation Generation function) |
| Output: Explanation Ei for each prediction Hi in the set of predictions |
| Step 1: Preprocess the input data |
| I′ ← Preprocess(I) {Clean and format input data} |
| Step 2: Extract features from the preprocessed input |
| F ← ExtractFeatures(I′) {Feature extraction using ML model} |
| Step 3: Retrieve relevant laws from the Knowledge Base |
| L ← GetLaws(KB) {Retrieve laws from Knowledge Base} |
| Step 4: Retrieve the conditions or antecedents for the relevant laws |
| C ← GetConditions(KB) {Retrieve conditions that must hold true for laws} |
| Step 5: Perform reasoning based on the extracted features and laws |
| C′ ← Reason(F, L, C) {Reason using features, laws, and conditions} |
| Step 6: Perform prediction based on reasoning and extracted features |
| Hi ← Predict(M, C′) {Model prediction based on reasoning output and features} |
| Step 7: Generate the explanation based on reasoning, features, and prediction |
| Ei ← E(L, C′, I′, Hi) {Explanation generation based on laws, conditions, data, and prediction} |
| Step 8: Output the generated explanation |
| Return Ei |

5.3.3. Experimental Results

To assess the effectiveness of the proposed Nomological Deductive Reasoning (NDR) framework, we conducted a series of experiments using the Statlog (German Credit Data) dataset [33]. Our evaluation focused on three key dimensions: predictive performance, quality of the rule-based explanations, and alignment with the domain-specific knowledge encoded in the Knowledge Base (KB).

Prediction Accuracy

The NDR-augmented model achieved a high prediction accuracy of 97%, correctly classifying individuals as either “Good Credit” or “Bad Credit”. This demonstrates strong statistical performance on the credit-risk classification task.

Rule-Based Explanation Generation

For each prediction, the NDR framework applied relevant rules from the KB to generate structured explanations. These explanations link individual feature values to domain-specific rules grounded in banking regulations, ensuring that decisions are causally interpretable and auditable.

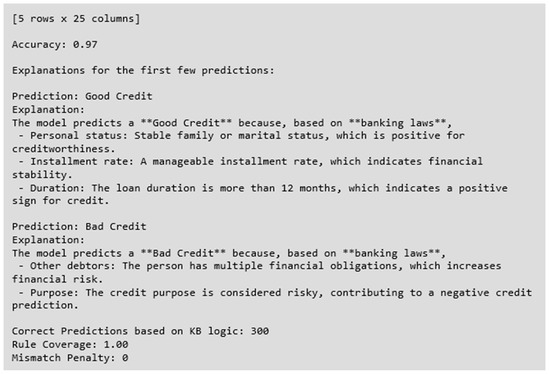

- Example (see Figure 9):

Figure 9. Sample explanations generated by the NDR framework for credit-risk predictions based on the German Credit dataset [33]. Each explanation illustrates how specific input features are mapped to domain rules defined in the Knowledge Base, enabling transparent, causal reasoning behind the model’s decision. The examples demonstrate both positive and negative classifications, with logical justifications grounded in financial regulations.


- Prediction Case 1: “Good Credit”

- Explanation: “The model predicts a ‘Good credit’ because based on banking laws, the applicant has a stable family or marital status, which is positive for creditworthiness; manageable installment rates, which indicate financial stability; and the loan duration is for more than 12 months, which indicates a positive sign of credit”.

- Model Reasoning: (i) Personal Status: Stable marital/family status → positively influences creditworthiness, (ii) Installment Rate: Manageable installment amount → indicates financial responsibility, and (iii) Duration: Loan duration > 12 months → considered favorable under domain rules.

- Prediction Case 2: “Bad Credit”

- Explanation: “The model predicts a bad credit because based on banking laws, the person has multiple financial obligations which increases financial risk, and the credit purpose is considered risky, contributing to a negative credit prediction”.

- Model Reasoning: (i) Other Debtors: Multiple financial obligations → higher perceived risk and (ii) Purpose: Loan purpose considered high-risk → contributes to a negative classification.
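The mapping from feature values to rule-based reasons shown in the two cases above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `Rule` class, the feature names, and all thresholds (for example, treating an installment rate of 2 or less as "manageable") are hypothetical choices made only for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """One symbolic rule: a test on a single feature plus a readable reason."""
    feature: str
    condition: Callable[[object], bool]
    reason: str
    supports: str  # label the rule argues for: "Good Credit" or "Bad Credit"


# Hypothetical knowledge base; feature names and thresholds are assumptions.
RISKY_PURPOSES = {"business"}
RULES: List[Rule] = [
    Rule("duration_months", lambda v: v > 12,
         "the loan duration is longer than 12 months", "Good Credit"),
    Rule("installment_rate", lambda v: v <= 2,
         "the installment rate is manageable", "Good Credit"),
    Rule("other_debtors", lambda v: v != "none",
         "the applicant has multiple financial obligations", "Bad Credit"),
    Rule("purpose", lambda v: v in RISKY_PURPOSES,
         "the credit purpose is considered risky", "Bad Credit"),
]


def explain(applicant: Dict[str, object], prediction: str) -> str:
    """Collect the rules that fire for this applicant and support the prediction."""
    fired = [
        r for r in RULES
        if r.supports == prediction
        and r.feature in applicant
        and r.condition(applicant[r.feature])
    ]
    if not fired:
        return f"No rule in the knowledge base supports '{prediction}' for this applicant."
    reasons = ", and ".join(r.reason for r in fired)
    return f"The model predicts '{prediction}' because, based on banking laws, {reasons}."


print(explain({"duration_months": 24, "installment_rate": 2}, "Good Credit"))
```

Keeping each rule as data (feature, condition, reason) rather than as hard-coded branches means the same rule list can serve both the validation step and the explanation step.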

Alignment with Domain Knowledge

The deductive consistency of the model’s outputs was validated by comparing its generated explanations with the logic encoded in the Knowledge Base. The following were found:

- Rule Consistency: All 300 predictions were logically consistent with the rules defined in the Knowledge Base.

- Rule Coverage: Every prediction was fully accounted for by one or more symbolic rules, giving a Rule Coverage of 1.00; that is, 100% of the predictions were covered by the rule base before a decision was issued.

- Mismatch Penalty: There were no mismatches between the model’s explanations and the rule base, giving a Mismatch Penalty of 0 and indicating full fidelity to the rule base.
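To make the three alignment measures above concrete, the sketch below computes them from pairs of (predicted label, label deduced from the rule base). The definitions used here are assumptions made for illustration and may differ from the exact formulas used in the experiments: coverage is taken as the share of predictions for which at least one rule applies, the mismatch penalty as the share of covered predictions whose deduced label disagrees with the prediction, and consistency as the share that are covered and agree. The function name `alignment_metrics` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def alignment_metrics(pairs: List[Tuple[str, Optional[str]]]) -> Dict[str, float]:
    """Compute coverage, mismatch, and consistency rates.

    Each pair is (predicted_label, deduced_label); deduced_label is None
    when no rule in the knowledge base applies to the instance.
    """
    n = len(pairs)
    if n == 0:
        raise ValueError("no predictions to evaluate")
    covered = sum(1 for _, deduced in pairs if deduced is not None)
    mismatches = sum(
        1 for predicted, deduced in pairs
        if deduced is not None and deduced != predicted
    )
    return {
        "rule_coverage": covered / n,
        "mismatch_penalty": mismatches / n,
        "rule_consistency": (covered - mismatches) / n,
    }


# Toy data (not the experimental data): every prediction is covered and agrees.
toy = [("Good Credit", "Good Credit"), ("Bad Credit", "Bad Credit")]
print(alignment_metrics(toy))
# {'rule_coverage': 1.0, 'mismatch_penalty': 0.0, 'rule_consistency': 1.0}
```

Under these assumed definitions, the reported outcome (coverage of 1.00 and a penalty of 0) corresponds to every deduced label being present and equal to the predicted label.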

Summary of Results

Table 3 summarizes the key evaluation metrics, highlighting the NDR framework’s ability to combine high predictive performance with complete logical consistency and rule-based explainability.

Table 3. Summary of the NDR framework experiment results.

These results demonstrate that NDR not only preserves predictive accuracy but also guarantees that every decision is logically grounded, transparent, and normatively aligned—a crucial requirement for AI adoption in high-stakes domains such as finance.

5.3.4. Evaluation of the Explanatory Quality of the NDR Framework

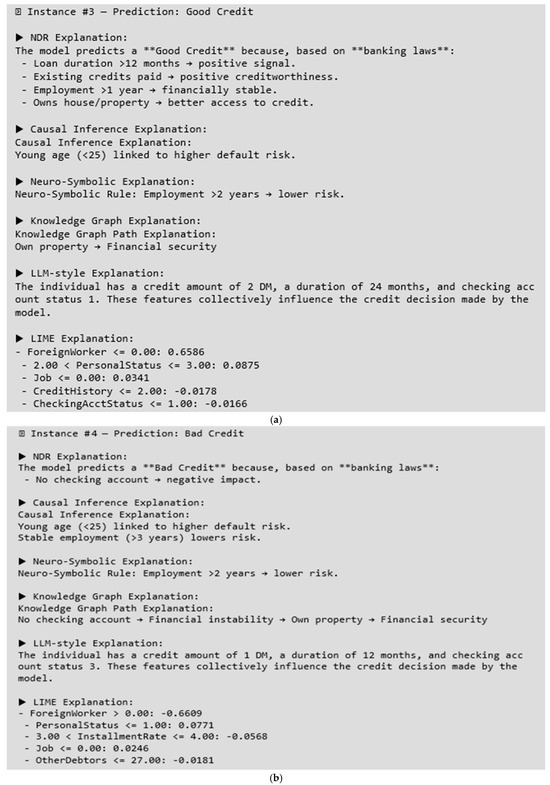

To assess the quality of the explanatory outputs generated by the NDR framework, we focused on three key dimensions of explainability: trustworthiness, human-readability, and actionability. These criteria were selected based on a comprehensive review of the literature and the practical needs of high-stakes domains like finance, where decisions must be interpretable, transparent, and actionable. Consider the following explanation produced by NDR for a credit application decision: “The model predicts a *Bad Credit* because based on banking laws, the applicant has multiple financial obligations which increases the financial risk, and the credit purpose is considered risky, contributing to a negative credit prediction”. This explanation scores highly on trustworthiness, as it aligns with established lending criteria and domain-specific reasoning. It also exhibits strong human-readability, using plain language and avoiding technical jargon, which makes it accessible to non-technical users. Finally, the explanation is actionable: it points to concrete steps the applicant can take to influence future outcomes (for example, reducing financial obligations by clearing existing debts, and better justifying the purpose of the loan). This kind of output reflects the core strengths of the NDR framework and illustrates its advantage over alternative methods that may produce less coherent, overly technical, or non-instructive explanations for an end user who may be a lay consumer. A comparative qualitative analysis of NDR alongside other emerging methods (LIME, causal inference models, neuro-symbolic reasoning, knowledge graph-based approaches, and LLM-driven narratives) is presented in Figure 10a,b, using consistent instances to highlight interpretive differences. The full code of this comparison is available at https://github.com/HakizimanaGedeon/NDR-Framework-Explainable-AI.

Figure 10. (a) Comparative explanation outputs for an instance number # predicted as **Good Credit** using six interpretability methods: Nomological Deductive Reasoning (NDR), Causal Inference, Neuro-Symbolic Reasoning, Knowledge Graph Paths, LLM-style explanations, and LIME. NDR stands out by providing a structured, law-based rationale for predictions, offering enhanced transparency compared with the data-driven feature attributions and descriptive summaries from other approaches. (b) Comparative explanation outputs for an instance number # predicted as **Bad Credit** using six interpretability methods: Nomological Deductive Reasoning (NDR), Causal Inference, Neuro-Symbolic Reasoning, Knowledge Graph Paths, LLM-style explanations, and LIME. While the LIME and LLM approaches highlight influential features and descriptive summaries, only NDR provides a normative, rule-based justification anchored in domain knowledge, thereby enhancing interpretability and decision transparency.

As illustrated in Figure 10b, the prediction of “Bad Credit” for Instance #4 is accompanied by explanations generated from six different interpretability frameworks, each offering varying degrees of clarity and utility. Among these, the Nomological Deductive Reasoning (NDR) approach delivers the most structured and contextually grounded explanation. It explicitly attributes the adverse credit decision to the absence of a checking account, referencing domain-specific banking norms that associate such financial behavior with increased risk. This reasoning aligns closely with human cognitive expectations, offering both traceability and normative justification. In contrast, the Causal Inference explanation identifies statistically relevant factors, such as younger age (<25) being linked to a higher default risk and stable employment mitigating risk, but lacks decisiveness in synthesizing these competing signals into a coherent conclusion. Similarly, the Neuro-Symbolic explanation emphasizes employment stability as a favorable indicator but does not resolve the tension between this positive factor and the final negative prediction. The Knowledge Graph explanation constructs a semantic path that includes both risk (absence of a checking account) and security (property ownership) yet stops short of articulating how these are weighed in the final decision. The LLM-style explanation, while human-readable, primarily restates input features without embedding them in a domain-specific interpretive framework. Lastly, the LIME explanation provides local feature-importance scores, showing that a “ForeignWorker” status exerted the strongest negative influence, but it does not offer a global rationale or domain-based interpretation. Overall, the NDR explanation distinguishes itself by integrating formal logic with domain-relevant rules, enabling it to generate actionable, transparent justifications. This makes NDR particularly suitable for high-stakes applications, where explanation fidelity and alignment with institutional norms are critical.

As the illustrations in Figure 10a,b show, the trustworthiness of NDR-generated explanations stems, first, from their foundation in formal deductive reasoning. Unlike post hoc or correlation-based techniques, NDR explanations are derived from domain-specific causal laws and antecedent conditions encoded in a structured knowledge base. This ensures that each prediction is not only statistically plausible but also epistemically justified. The deductive inference engine enforces consistency by penalizing predictions that violate predefined rules, thereby improving reliability. The empirical results showed that all 300 evaluated predictions remained consistent with the knowledge base (Rule Coverage of 1.00 and a Mismatch Penalty of 0), supporting the explanatory rigor of the framework.
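One simple way to picture how rule violations can be penalized is to subtract a fixed penalty from a label's predicted probability for each rule that label would violate, and then pick the highest remaining score. The sketch below is only an assumption about how such a penalty could work; the function name `penalized_choice`, the additive form of the penalty, and the value 0.5 are all hypothetical and are not taken from the framework's implementation.

```python
from typing import Dict


def penalized_choice(
    probs: Dict[str, float],
    violations: Dict[str, int],
    penalty: float = 0.5,
) -> str:
    """Pick the label with the best score after penalizing rule violations.

    probs:      classifier probability per label.
    violations: number of knowledge-base rules each label would violate.
    penalty:    hypothetical cost subtracted per violated rule.
    """
    scores = {
        label: p - penalty * violations.get(label, 0)
        for label, p in probs.items()
    }
    return max(scores, key=scores.get)


probs = {"Good Credit": 0.6, "Bad Credit": 0.4}
# With no violations, the statistical prediction stands.
print(penalized_choice(probs, {}))
# If "Good Credit" would violate one rule, the penalized score favors "Bad Credit".
print(penalized_choice(probs, {"Good Credit": 1}))
```

A soft penalty of this kind lets a strongly supported statistical prediction survive a single weak conflict, whereas a hard constraint would reject any label that violates a rule.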

Second, human-readability is achieved through structured natural-language explanations that mirror human causal reasoning. Explanations are delivered in an “if–then” format, with clearly articulated reasons based on interpretable features. For example:

Prediction: “Good Credit”

Explanation:

- “The model predicts a ‘Good Credit’ because, based on banking laws, the duration of the loan is longer than 12 months, which indicates a positive sign for credit, and the installment rate is manageable, which suggests financial stability”.

This is the more human-friendly form that the NDR explanation layer adapts from the underlying if–then rule:

- “If the duration of the loan is longer than 12 months, which indicates a positive sign for credit, and if the installment rate is manageable, suggesting financial stability, then the model predicts a ‘Good Credit’ based on banking laws”.
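The relationship between the two forms above can be sketched with a pair of small formatting functions that render the same list of reasons either as an if–then rule or as a "because" sentence. The function names and the exact sentence templates are hypothetical and serve only to illustrate the idea of an explanation layer sitting on top of the rules.

```python
from typing import List


def if_then_form(prediction: str, reasons: List[str]) -> str:
    """Render the reasons as an explicit if-then rule."""
    conditions = ", and ".join(f"if {r}" for r in reasons)
    conditions = conditions[0].upper() + conditions[1:]
    return f"{conditions}, then the model predicts '{prediction}' based on banking laws."


def because_form(prediction: str, reasons: List[str]) -> str:
    """Render the same reasons as a reader-friendly 'because' sentence."""
    joined = ", and ".join(reasons)
    return f"The model predicts '{prediction}' because, based on banking laws, {joined}."


reasons = [
    "the duration of the loan is longer than 12 months",
    "the installment rate is manageable",
]
print(if_then_form("Good Credit", reasons))
print(because_form("Good Credit", reasons))
```

Both functions consume the same reasons, so the readable sentence cannot drift from the rule it was derived from.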

These explanations are designed to highlight key variables, offer concise reasoning, and use plain language free of technical jargon. Although a formal user study is planned for future work, the current framework was developed with interpretability and end-user accessibility as guiding principles.

Third, the actionability of NDR outputs is supported by their alignment with modifiable input features and real-world financial decision logic. By identifying contributing factors (e.g., installment rate, loan duration, personal status) and explaining their relevance through legal or policy-based rules, the explanations offer users a pathway for improving or justifying outcomes. This makes them particularly useful in domains where decision support is essential.

To summarize the quality of explanations, Table 4 outlines the relationship between NDR’s design mechanisms and the corresponding dimensions of explainability:

Table 4. Justification matrix: explanation quality in the NDR framework.

These findings reinforce that NDR not only enhances model transparency but also improves the practical utility of AI systems in real-world contexts. By combining formal logic with domain-grounded reasoning, the framework closes the transparency gap identified in current XAI methods.

6. Discussion and Future Work

The initial experimental findings demonstrate the efficacy of the Nomological Deductive Reasoning (NDR) framework in integrating structured domain knowledge into machine learning models for credit scoring. By formalizing and embedding financial principles as logical rules, the NDR framework provides transparent, interpretable, and human-aligned explanations for model predictions. This approach not only bridges the gap between data-driven modeling and domain expertise but also offers a pathway to more trustworthy AI systems through explanations that are logically consistent and verifiable.

A key strength of the NDR framework lies in its ability to generate explanations that align with human reasoning, particularly in high-stakes domains such as finance, where regulatory compliance, user trust, and interpretability are paramount. From the financial experiment, the explanations derived from the NDR model are not only readable and actionable but also grounded in established financial logic, offering stakeholders clarity on why certain predictions are made. This contributes significantly to the field of explainable AI (XAI) by moving beyond statistical approximations toward logic-based, nomologically valid justification. The perfect Rule Coverage and absence of any Mismatch Penalty further underscore the alignment between model behavior and the domain knowledge encoded in the framework.

From a practical standpoint, the NDR framework offers a valuable tool for institutions seeking to improve transparency in their credit-risk assessments, enabling easier auditability and more defensible decision-making. Furthermore, its domain-agnostic architecture opens up possibilities for deployment in other critical areas such as healthcare diagnostics, criminal justice, insurance underwriting, and legal reasoning, where similar demands for explainability and rule adherence exist.

From a theoretical perspective, this work contributes to the ongoing discourse on hybrid AI systems by demonstrating how symbolic reasoning and machine learning can coexist in a mutually reinforcing manner. It also emphasizes the importance of ontology-driven explanations in contexts where decision-making must be not only correct but justifiable.

However, several limitations point to promising avenues for future research. The current implementation of NDR assumes a moderately sized, static rule base, which may face scalability challenges in domains with large, dynamic, or probabilistic rule sets. Enhancing the framework’s ability to operate efficiently with extensive or evolving ontologies remains an open challenge. Additionally, while the deductive component excels in rule-based reasoning, it currently lacks mechanisms to accommodate uncertainty or conflicting rules, which are common in real-world decision-making environments. To address these limitations, future research will focus on three key directions:

- Scalability and Automation: Developing methods for automated rule induction from structured data and text, as well as mechanisms for dynamic rule updates, will be crucial for broadening the applicability of the NDR framework in real-time and large-scale systems.

- Handling Uncertainty: Integrating probabilistic reasoning or fuzzy logic into the NDR architecture could improve its robustness in domains where knowledge is imprecise, incomplete, or uncertain.