Abstract

This paper presents a systematic literature review, based on the PRISMA model, of machine learning (ML)-based detection of Distributed Denial of Service (DDoS) attacks in Internet of Things (IoT) networks. The primary objective of the review is to compare research trends in deployment options, datasets, and ML techniques used in the domain between 2019 and 2024. The results highlight the dominance of certain datasets (BoT-IoT and TON_IoT) in combination with Decision Tree (DT) and Random Forest (RF) models, achieving high median accuracy rates (>99%). The paper also discusses the datasets used to train and evaluate ML models for DDoS detection in IoT networks and how they affect model performance. Furthermore, the findings suggest that, due to hardware limitations, there is a preference for lightweight ML solutions and preprocessed datasets. Current trends indicate that larger or industry-specific datasets will continue to gain popularity alongside more complex ML models, such as deep learning. This emphasizes the need for robust and scalable deployment options, with Software-Defined Networks (SDNs) offering flexibility, edge computing being extensively explored in cloud environments, and blockchain-integrated networks emerging as a promising approach for enhancing security.

1. Introduction

The IoT refers to devices that are connected and communicate with one another, typically described as an IoT network. Whether they are used in smart homes, agriculture, or medical tools, IoT devices are important to the functioning of the digital world, yet they are quite vulnerable from a cybersecurity perspective. One of the key properties of these devices is that they are resource-limited, which makes them an obvious target for different forms of cyberattacks, including DDoS. Given their wide adoption in daily life and industry, estimates of the actual number of IoT devices in use vary: some sources expected approximately 20 billion IoT devices [1] by the end of 2024, whereas Forbes claimed a figure more than ten times higher [2]. Either way, the need for a robust security solution for this popular technology is obvious.

Intrusion detection systems (IDSs) based on ML appear to be a natural first choice to explore. ML’s flexibility offers a number of benefits, including real-time detection of DDoS attacks through network analysis. Furthermore, the option of running ML algorithms on different systems can prove useful when memory and computational power are limited. Hence, we use this review as a foundational point to discuss the ML techniques that the current literature proposes. In order to draw more accurate conclusions from our research, we identify the datasets that the ML models are trained and tested on, as datasets may differ in important properties, which may in turn affect the performance metrics of the proposed models. Finally, we also consider the deployment environments proposed by the reviewed papers. Given the constraints of IoT technology, the deployment options can have an impact on the construction of the ML models and vice versa.

This paper presents a large-scale, in-depth analysis with a distinct focus on DDoS attacks, distinguishing it from more general or smaller-scale surveys, which are discussed in greater detail in Section 3. Additionally, our work provides a comprehensive, multi-dimensional examination of the field, covering deployment strategies, dataset usage, and a comparative evaluation of ML models, offering a level of detail and a combination of insights not found in previous reviews.

This paper aims to follow the PRISMA structure in building up a literature review on ML-based IDSs for DDoS attacks in IoT networks. Our contributions to the topic include creating an updated survey on the deployment options, datasets, and ML techniques used in the academic literature published on the topic. We also compare our results in order to find consistent characteristics as well as gaps in current research and offer direction for future research. In particular, we aim to answer the following research questions:

- What are the deployment/platform solutions using machine learning proposed for mitigating DDoS attacks on IoT networks?

- Which datasets are used to train and evaluate ML models for detecting DDoS attacks in IoT networks and how do they impact model performance?

- How do different ML models compare based on performance metrics, and what factors contribute to achieving high accuracy rates?

- What trends have emerged in the use of machine learning for DDoS detection in IoT networks?

While other reviews on this topic have been published in the past 6 years, this paper stands out in that it includes significantly more reviewed literature than the comparable surveys. Moreover, this paper focuses on DDoS attacks and creates a broad survey of relevant surrounding elements, such as the datasets used, various performance metrics, and deployment contexts, which other papers may omit. Furthermore, with the PRISMA framework, this paper is replicable and follows a standardized research structure.

The remainder of this paper is organized as follows. Section 1 introduces the paper and its motivation, while Section 2 describes the methodology on which it is based. Section 3 provides the reader with a foundational understanding of the discussed domain, while Sections 4, 5, and 6 offer a full overview of the literature reviewed. We discuss the results in Section 7, propose future research directions in Section 8, and conclude the survey in Section 9.

2. Methodology

2.1. Systematic Literature Review Strategy

Selecting a structured framework for conducting a systematic literature review is a customary and essential practice in academic research. It ensures methodological precision, transparency, and reproducibility, enabling readers to trust the review and draw reliable conclusions from it. Because it is widely accepted and used in the scientific community, we elected to conduct our review using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [3] framework. PRISMA, first introduced in 2009 and later refined, is a widely recognized standard for systematic reviews and meta-analyses. It provides comprehensive guidelines for conducting research, making it particularly suitable for studies synthesizing evidence from multiple sources. By adopting PRISMA, this study adheres to best practices in research synthesis, ensuring clarity and credibility. In this paper, we use the PRISMA 2020 version.

The framework consists of two main items: the PRISMA flow diagram [4] and the PRISMA checklist [5].

The PRISMA flow diagram visually represents the process of paper selection for the study in four key stages:

- Identification—records identified through database searches and additional sources.

- Screening—records screened based on titles and abstracts after duplicates are removed.

- Eligibility—full text of articles assessed against predefined criteria for inclusion or exclusion.

- Inclusion—final studies included in qualitative and quantitative synthesis.

The PRISMA checklist is a structured set of 27 points, aimed at ensuring all critical aspects of a systematic review are addressed, concerning search strategies in databases, the selection process, and assessing other markers.

2.2. Databases and Paper Selection

Our main search tool was the DTU FindIt [6] tool/library, which offers access to a wide variety of papers and articles provided to DTU students and staff. As students of the university, we had access to a larger variety of resources compared to a user without login credentials; therefore, a logged-in account is necessary to replicate the results described in this section. This tool aggregates search results from different research databases (although sometimes partially [7]), including the Institute of Electrical and Electronics Engineers (IEEE), Association for Computing Machinery (ACM), and arXiv, and provides access to full-text papers.

Additionally, we also used IEEE Xplore and its search tool. This library is a leading digital collection for scientific and technical research, providing access to peer-reviewed journals, conference proceedings, and technical standards published by the IEEE and its partners. It is widely recognized as a critical resource for engineering, computer science, and technology research. Compared to our search with DTU FindIt, we were able to find new studies with IEEE Xplore and append our list of publications considered.

We also inspected the Association for Computing Machinery (ACM) library, a widely respected resource offering access to peer-reviewed journal articles, and arXiv, an open access repository for pre-prints and research articles independent of DTU FindIt; however, the results searching these databases were already covered by DTU FindIt’s search results in full.

As these databases’ search tools allow for an advanced query, we opted for a detailed search term with multiple keywords to identify the specific papers to be included in this review. Our query process went as follows:

- Locate DTU FindIt and IEEE Xplore database (DTU login necessary);

- Search for all metadata containing (“IoT” OR “Internet of Things”) AND (“Intrusion Detection System” OR “IDS”);

- Search for abstract criteria, containing (“ML” OR “Machine Learning”) AND (“IoT network” OR “IoT networks”) AND (“identification” OR “detection”) AND (“DDOS” OR “Distributed Denial of Service”);

- Keep only items published between 2019 and 30 November 2024.
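To make the search terms easier to reproduce, the two Boolean expressions from the steps above can be assembled programmatically. The following is only a minimal sketch: the helper names `any_of` and `all_of` are hypothetical, and the exact query syntax accepted by DTU FindIt or IEEE Xplore is an assumption that should be checked against each tool's advanced search interface.

```python
def any_of(*terms: str) -> str:
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def all_of(*groups: str) -> str:
    """Join already-built groups with AND."""
    return " AND ".join(groups)


# Metadata criterion, as listed in the query process.
metadata_query = all_of(
    any_of("IoT", "Internet of Things"),
    any_of("Intrusion Detection System", "IDS"),
)

# Abstract criterion, as listed in the query process.
abstract_query = all_of(
    any_of("ML", "Machine Learning"),
    any_of("IoT network", "IoT networks"),
    any_of("identification", "detection"),
    any_of("DDOS", "Distributed Denial of Service"),
)

print(metadata_query)
print(abstract_query)
```

Keeping the term groups in code makes it straightforward to rerun the same search later or to vary a single keyword group.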

Our searches resulted in the following number of hits per database:

- DTU FindIt: 65

- IEEE Xplore: 20

These contained 15 duplicates in total; therefore, we concluded our search by identifying 70 unique papers.
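The duplicate removal step can be illustrated with a short sketch. It assumes that records are matched on a normalized title, which is a simplification rather than a description of the procedure actually used; the records below are hypothetical placeholders sized to match the reported hit counts (65 and 20, with 15 overlapping).

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and whitespace into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_unique(*result_sets):
    """Merge search results, keeping the first record seen for each normalized title."""
    seen = {}
    for records in result_sets:
        for record in records:
            seen.setdefault(normalize_title(record["title"]), record)
    return list(seen.values())


# Hypothetical placeholder records; 15 titles overlap between the two sources.
findit = [{"title": f"Paper {i}", "source": "DTU FindIt"} for i in range(65)]
xplore = [{"title": f"paper  {i}.", "source": "IEEE Xplore"} for i in range(50, 70)]

unique = merge_unique(findit, xplore)
duplicates = len(findit) + len(xplore) - len(unique)
print(f"{duplicates} duplicates, {len(unique)} unique papers")
```

With these placeholder inputs the arithmetic mirrors the reported counts: 65 + 20 hits minus 15 duplicates leaves 70 unique papers.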

During the selection process, certain papers identified in the initial database searches were excluded for a variety of reasons. Some studies were removed due to incomplete documentation, lack of peer review, or insufficient methodological details, which limited their reliability for synthesis. Others were excluded because their scope extended beyond the focus of this review. Duplicate records and papers presenting redundant findings were also filtered out to ensure a focused and high-quality set of papers for further analysis.

2.3. Conformance with PRISMA Criteria

As outlined in the PRISMA checklist, we have adapted our methodology to ensure the validity and transparency of our research. Our approach follows a structured process to enhance reproducibility and adherence to best practices.

The selection process involved four reviewers who independently screened the records. Initially, titles and abstracts were evaluated to exclude irrelevant studies. Full-text reviews were then conducted for studies that met the preliminary criteria, with eligibility assessed through a detailed evaluation by all four reviewers. Discrepancies between reviewers were resolved through discussion. Similarly, data extraction was performed independently by all four reviewers, focusing on selecting key points, which included study objectives, infrastructure, datasets, ML methods, evaluation metrics, constraints, and results. Verification steps ensured data consistency, and the reviewers worked to resolve situations where information was unclear or unspecified. The research included quantitative outcomes focused on performance metrics (accuracy, precision, recall, and F1 score) and qualitative insights into methodologies, and, where applicable, confidence intervals were reported. Missing data were handled through sensitivity checks, with independent reviews determining whether missing details were genuinely unavailable. Based on these evaluations, decisions were made to either exclude, ignore, or estimate missing values using a best-effort approach. Additional variables, such as dataset types and model architectures, were recorded, while assumptions were documented. In reference to the heterogeneity of our data, it was examined by comparing methodologies, datasets, and performance measures, with no formal meta-regression conducted.

Regarding the finalized results, they were tabulated and visualized using performance comparison tables and appropriate graphs, and a meta-analysis discovering trends and patterns is described in the text. Furthermore, sensitivity analyses were conducted by separating outliers, and their impact was assessed on performance. Certainty in the evidence was assessed based on the consistency and transparency of reporting across studies, while confidence levels were evaluated through qualitative assessments rather than statistical grading systems.

To determine the risk of bias, all reviewers independently evaluated the selected papers, comparing published results and identifying discrepancies. Any inconsistencies or missing data were noted, with study design, dataset quality, and reporting transparency considered in the assessment. Discrepancies were resolved through discussion to ensure a fair and unbiased evaluation process.

3. Field Assessment

This chapter defines the key components of the reviewed topic. The components discussed in the literature review are examined individually, but their interconnections—including associated challenges—are also emphasized.

3.1. IoT

There are many definitions to describe IoT. According to [8], “The IoT is a system of networked physical objects that contain embedded hardware and software to sense or interact with the physical world, including human beings”. Based on this definition, IoT devices operate in a system or network, forming an interconnected ecosystem where devices communicate and collaborate to perform specific tasks or achieve shared goals. This connection enables seamless data exchange and interaction between devices and their environments, driving automation, efficiency, and advanced analytics capabilities. We can refer to such interconnected systems as IoT networks. The number of connected IoT devices was expected to grow by 13% in 2024, reaching 18.8 billion, up from 16.6 billion in 2023, which marked a 15% increase over 2022 [1]. IoT devices are now present in nearly every industry, with some also forming part of critical infrastructure.

IoT security has become a crucial topic due to the inherent limitations of many IoT devices. These include low computational power, as the devices were originally created to perform specific tasks efficiently. Furthermore, security-related features, such as encryption, may not be fully implemented. While data collection and processing are essential for IoT applications, privacy issues arise at various stages of this process [9]. In addition, the nodes within an IoT network are susceptible to numerous attacks aiming to disrupt the services provided by the IoT or to take over the entire network. One of the most significant security threats to IoT systems is DDoS attacks [10,11]. As such, IoT network security has become a well-researched field in the scientific community. For the keywords ‘IoT security’, IEEE Xplore [12] returns 4405 results between 2024 and 2025. This systematic literature review examines a subfield of IoT security and aims to present the current state of that subfield.

3.2. Machine Learning

ML is a set of methods used to train machines to make decisions or predictions based on patterns learned from data. According to [13], ML involves creating programs that optimize performance based on past data and experiences. It focuses on developing algorithms that can learn from experience and enhance their performance over time. This learning process may involve modifications to the program’s structure or data. Essentially, machine learning aims to design programs that automatically improve their effectiveness through experience. There are many types of ML algorithms that can be used, each with different advantages and drawbacks. Various techniques exist to determine which methods should be tested in a given scenario, considering factors such as available data, computational resources, time constraints for decision-making, and other relevant parameters [14]. Machine learning usually involves a model and a method for training that model, and both depend on the type of ML, that is, supervised learning, unsupervised learning, or reinforcement learning. ML is a complicated process in itself, and the performance of the model after training depends on several factors, such as the quality of the test dataset and how performance is measured. As a result, comparing different models can be challenging.

Since we consider DDoS detection to be a classification task (binary or multiclass), we also chose the metrics/Key Performance Indicators (KPIs) accordingly. In the reviewed papers, multiple KPIs were considered, including accuracy, precision, recall, and F1 score, which allowed us to draw certain conclusions during analysis. However, performance prediction and measurement are also highly dependent on other factors, such as the used dataset and preprocessing of the data.
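For reference, the four KPIs can be computed from the confusion-matrix counts of a binary classifier using their standard definitions. The sketch below is illustrative only: the function name `classification_kpis` and the example counts are hypothetical and are not taken from any reviewed paper.

```python
def classification_kpis(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp/fn: attack samples classified as attack/benign.
    tn/fp: benign samples classified as benign/attack.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical detector evaluated on 100 attack and 900 benign flows.
balanced = classification_kpis(tp=90, fp=10, tn=890, fn=10)

# Hypothetical degenerate detector that labels everything benign
# on a dataset with 10 attack and 990 benign flows.
always_benign = classification_kpis(tp=0, fp=0, tn=990, fn=10)

print(balanced)
print(always_benign)
```

The second case shows why accuracy alone can mislead on imbalanced data: the detector reaches 99% accuracy while its recall is zero, which is one reason the dataset composition matters when comparing reported results.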

3.3. DDoS

A Denial of Service (DoS) attack (commonly referred to as a flood attack), in its simplest form, involves configuring a device on the internet to repeatedly send requests to another computer, bypassing the default settings of the command. The data size of each request can be significantly increased, and the time interval between transmissions can be greatly reduced. As a result, the target device becomes overwhelmed with an excessive amount of unnecessary data, ultimately causing it to stop functioning properly. DDoS attacks are highly covert and cause significant damage by allowing attackers to stay anonymous. The process involves creating malicious code designed to target specific systems when triggered. This code spreads across poorly secured systems on the internet and, once activated, launches an attack from these infected systems simultaneously [15].

These types of attacks (DoS and DDoS) not only prevent legitimate users from accessing (essential) services, but may lead to further consequences, including increased costs due to service downtime, recovery efforts, or missed opportunities. Consumed bandwidth, processing power, and other network resources cause collateral damage to surrounding systems.

DDoS attacks, while impossible to completely prevent due to the decentralized nature of the internet, can be effectively managed through a combination of strategies. Adjusting infrastructure configurations is a crucial step, as demonstrated in the late 1990s when default router settings were changed to counter Smurf attacks. A Smurf attack is a type of amplification attack where an attacker sends Internet Control Message Protocol (ICMP) echo request packets (pings) to a network’s broadcast address, spoofing the source Internet Protocol (IP) address to be the victim’s address. This causes all devices on the network to respond with ICMP echo replies to the victim, overwhelming their system with traffic. Similarly, addressing vulnerabilities like open recursive Domain Name System (DNS) servers is critical to preventing DNS amplification attacks, although progress in reconfiguring these servers remains slow. Filtering distinct or unusual traffic patterns at ingress points is an effective method to minimize disruption by DoS or DDoS attacks. For instance, upstream routers can block ICMP echo request traffic to stop ping flood attacks, while other anomalous traffic can be safely discarded based on profile analysis. Distributed hosting infrastructures, such as Akamai, are also useful for dispersing attack traffic across multiple highly connected nodes, reducing the impact on any single target. However, short DNS Time-To-Live (TTL) values provide limited benefit unless TTL entries are completely removed. A robust mitigation strategy typically involves a multi-layered approach. Identifying and shutting down source IP addresses, deploying routing tricks to drop malicious traffic, and using high-speed line filtering devices to manage extraneous traffic are all effective techniques. Defensive measures like SYN proxies, which intercept and manage TCP handshake requests, can also reduce the effectiveness of certain types of attacks. In large-scale scenarios where attack traffic reaches tens of gigabits per second, collaboration with Internet Service Providers (ISPs) to filter incoming traffic becomes essential to maintain normal operations and protect the network [16].

This literature review aims to help further researchers in leveraging machine learning and artificial intelligence to identify and respond to DDoS attacks in real-time by presenting the current state of the field. To achieve this, we take a closer look at popular datasets and ML techniques already used in relevant studies. We try to identify general trends and compare the outcomes of the respective studies.

3.4. IDS

According to Lee et al. [17], an IDS is a device or software application that monitors a network for malicious activity or policy violations.

IDSs are essential tools for identifying unauthorized access or malicious activities in a system. An intrusion involves accessing a system without authentication or authorization, including activities like tampering with files, malware execution, or remote attempts to compromise a system. Basic protective measures such as antivirus software and firewalls are often insufficient, as malware signatures can be altered and firewall rules can be bypassed. An IDS provides comprehensive monitoring by analyzing incoming and outgoing network traffic as well as detecting intrusions, malicious packets, and policy violations. It records logs and alerts system administrators in real time, offering robust security for organizations. IDS solutions are also available as hardware platforms or software applications and are increasingly adopting machine learning algorithms to predict attacks and classify legitimate traffic. IDS systems are categorized into three main types: Network-based IDS (NIDS), Host-based IDS (HIDS), and Distributed IDS (DIDS) [18].

IDSs can be classified by their detection methods, including signature-based analysis, which identifies known attack patterns; protocol-based analysis, which monitors compliance with protocol rules to detect violations; and anomaly-based analysis, which focuses on spotting unusual behavior to identify potential unknown threats. These methods collectively strengthen IDS capabilities in detecting and mitigating cyberattacks. The anomaly-based analysis addresses limitations in signature-based approaches by detecting unknown and known attacks through abnormal network behavior. Unlike relying on predefined signatures, this method uses heuristic rules or machine learning to classify traffic. It operates in two phases: training to learn the normal behavior of the system and testing to identify deviations indicating anomalies. Techniques such as neural networks, data mining, and artificial immune systems are used, supported by other tools. However, a notable drawback is the occurrence of false positives, where alarms are triggered without actual threats. Research continues to improve accuracy and reduce false alarms [18].
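The two-phase operation of anomaly-based analysis can be sketched with a deliberately simple statistical detector. This is an illustration under stated assumptions, not a method from the reviewed literature: the class name `ZScoreDetector`, the single packets-per-second feature, the threshold of three standard deviations, and the sample values are all hypothetical, and a z-score rule stands in for the neural networks or data-mining techniques mentioned above.

```python
from statistics import mean, stdev


class ZScoreDetector:
    """Flags traffic rates that deviate strongly from the learned normal behavior."""

    def __init__(self, threshold: float = 3.0):
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.mu = None
        self.sigma = None

    def fit(self, benign_rates):
        """Training phase: learn the normal profile from benign traffic only."""
        self.mu = mean(benign_rates)
        self.sigma = stdev(benign_rates)

    def is_anomalous(self, rate: float) -> bool:
        """Testing phase: report whether a new observation deviates from the profile."""
        if self.mu is None:
            raise RuntimeError("call fit() before is_anomalous()")
        if self.sigma == 0:
            return rate != self.mu
        return abs(rate - self.mu) / self.sigma > self.threshold


# Hypothetical packets-per-second samples observed during normal operation.
detector = ZScoreDetector(threshold=3.0)
detector.fit([100, 110, 95, 105, 90, 102, 98, 107])

print(detector.is_anomalous(104))   # typical load
print(detector.is_anomalous(5000))  # flood-like burst
print(detector.is_anomalous(130))   # legitimate but unusual peak
```

The last call illustrates the false-positive drawback: a harmless peak that was not represented in the training data is still flagged, because the detector only knows what it saw during training.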

3.5. ML in IDS for DDoS

This paper’s aim is to review the current state of the ML-based IDS for DDoS attack detection. Based on the preliminary searches in the field, it can be seen that there are many different approaches. As we discuss in Section 4, there are many possible ways to implement the IDS from an infrastructural point of view. This impacts how the data are processed, how quickly the system can give an alarm (or take action), and the overall effectiveness of the system. Although this is an important question, many papers are mostly focused on implementing the ML-based detection-related part and emphasize researching an optimal method in terms of accuracy, precision, or other metrics, like F1 score.

3.6. Literature Reviews and Surveys

It is important to know which literature reviews are currently available on this topic. Among the papers obtained with our search term, we also marked the literature reviews and surveys, and found two literature reviews in this domain. Additionally, we conducted another search on DTU FindIt, where four additional relevant studies were found.

The found literature reviews are the following: [19] (2024), [20] (2021), [21] (2020), [22] (2023), [23] (2024), and [24] (2022). This is the number of surveys found with the original query in the mentioned database, so the actual number of reviews—if we considered more databases—would be higher. It should be mentioned that the found literature reviews, in some cases, present a broader perspective of the topic, and are not specifically looking into DDoS detection. An overview of recent advancements in ML-based IDS systems for DDoS detection in IoT networks can be seen in Table 1.

Table 1.

State-of-the-art surveys in ML-based IDS systems on DDoS attacks in IoT network domain (1: threats and attacks, 2: mitigation, 3: performance metrics, 4: research gap), [✓: included, -: not included].

The reviewed studies highlight the significance of ML- and DL-based IDS solutions for detecting DDoS in IoT networks. While various approaches have been explored, including anomaly detection, ensemble learning, and adaptive models, challenges such as dataset diversity, real-time implementation, and scalability remain. Compared to previous works, our paper provides a more detailed evaluation of ML-based IDSs, particularly focusing on performance metrics, dataset utilization, and model comparisons. By addressing gaps such as underexplored datasets and improving detection accuracy, our study contributes to advancing IDS research in IoT security. Moreover, we prioritized including the latest studies (published between 2019 and 2024) in our paper, further distinguishing it from other surveys.

4. Deployment of the IDS

Deployment refers to the process of implementing and integrating a system or application within a specific environment. In the context of intrusion detection systems for DDoS attack mitigation in IoT networks, deployment defines the physical and virtual infrastructure where the IDS is installed and operated.

The infrastructure and deployment environment are critical because they directly affect the performance, scalability, and security of the IDS. Factors such as network topology, device count, data flow patterns, and computational resources play a significant role in determining the effectiveness of detection mechanisms. Understanding deployment environments also helps assess practical constraints, such as latency, processing overhead, and hardware limitations.

In this chapter, we look at the different deployment types found in our review and discuss them in detail.

Table 2 shows the categorization of all the papers in our research. Table 3 contains details of papers where a specific infrastructure has been explicitly mentioned or discussed; papers targeting general networks or not relating to deployment are excluded.

4.1. General IoT Networks

In most studies analyzed, there is no explicit discussion of specific network infrastructures or deployment. Instead, the primary focus is on the datasets employed to train and evaluate machine learning models. This suggests that researchers prioritize data characteristics over network configurations, as robust data are essential for building accurate and generalized models.

Several datasets are frequently used in IoT-focused intrusion detection studies. These datasets provide labeled traffic data, simulating both benign and malicious activities, which are essential for training machine learning algorithms.

Architecturally, the datasets often mimic centralized IoT networks, with sensor nodes transmitting data to a central server for processing. However, the specifics of these simulated architectures remain secondary to data quality and distribution.

While datasets are discussed in more detail in Section 5, some comments can be made on the infrastructure behind the most popular datasets in our studies: TON_IoT, Bot-IoT, and CIC-IDS2017. The TON_IoT dataset [25] comprises diverse data sources gathered from telemetry datasets of IoT and IIoT (Industrial IoT) sensors, collected from a realistic, large-scale network environment. The Bot-IoT dataset [26] was generated in a network cluster connected to a public IoT hub by AWS via the Message Queuing Telemetry Transport (MQTT) protocol. CIC-IDS2017 [27] includes the profiles of 25 users working with many IP-based protocols (HTTPS, FTP, SSH, etc.) in a complete network topology that includes a modem, firewall, switches, and routers, with Windows, Ubuntu, and Mac OS X operating systems.

The emphasis on data rather than deployment infrastructure suggests an effort to ensure machine learning solutions are broadly applicable across multiple IoT environments. Researchers appear to be developing models that generalize well rather than optimizing for specific setups, which could limit applicability. This focus on generalization is particularly relevant given the heterogeneity of IoT systems, where devices, communication protocols, and deployment scales vary widely.

There are some studies that, while mostly focusing on the dataset and the ML methods, mention a specific type of infrastructure, which receives more consideration.

In [28,29,30], there is a focus on fog computing, an approach to network architecture that delegates a larger amount of computing power near the edge devices. As [28] suggests, fog computing is a defensive strategy that improves security, while also improving network routing performance. In [30]’s case, the fog cloud layer processes data and training, and executes the IDS filtering at a lower level, at the IoT gateway.

4.2. Software-Defined Networks (SDNs)

Software-Defined Networking (SDN) is a modern network architecture that separates the network into three distinct layers. At the application layer, business logic interacts with the SDN controller to request network services and set configuration rules. The control layer manages traffic and makes routing decisions. Finally, the data layer consists of physical or virtual network devices, which forward data packets based on the rules set by the SDN controller.
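The division of responsibilities between the three layers can be sketched as a toy model. The classes and function below (`Switch`, `Controller`, `block_source`) are hypothetical names chosen for illustration and do not correspond to any real SDN controller API such as OpenFlow; the sketch only assumes that rules match on a source address.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Data layer: forwards packets strictly according to its installed rules."""
    name: str
    flow_table: dict = field(default_factory=dict)  # source address -> action

    def handle(self, src_ip: str) -> str:
        # Default action is to forward when no rule matches.
        return self.flow_table.get(src_ip, "forward")


@dataclass
class Controller:
    """Control layer: decides on rules and pushes them to every managed switch."""
    switches: list = field(default_factory=list)

    def install_rule(self, src_ip: str, action: str) -> None:
        for switch in self.switches:
            switch.flow_table[src_ip] = action


def block_source(controller: Controller, src_ip: str) -> None:
    """Application layer: expresses intent and leaves enforcement to the controller."""
    controller.install_rule(src_ip, "drop")


edge = Switch("edge-1")
core = Switch("core-1")
controller = Controller(switches=[edge, core])

block_source(controller, "10.0.0.66")
print(edge.handle("10.0.0.66"), core.handle("10.0.0.66"), edge.handle("10.0.0.7"))
```

A single call at the application layer changes the behavior of every switch, which is the property that makes a centralized controller a convenient place to attach a detection module.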

With centralization, SDN eliminates the need for each device to make independent routing decisions. This architecture allows for the dynamic configuration and optimization of network resources. Administrators can manage the entire network from a single console or through automated software, enabling dynamic scaling and responding to demand.

In SDN-based systems, for instance, in [31,32,33], the control plane, which handles traffic routing and management, operates as a centralized software module. As per [31], this separation means adaptive traffic control with added flexibility and scalability. It also helps with implementing machine learning models, as SDN controllers can monitor traffic patterns easily. In general, it reduces operational complexity while allowing for automatic load-balancing and dynamic resource allocation. Refs. [32,34] use a special component in the controller, called the SDNWISE Flow Table, which specializes in WIreless SEnsors (WISEs) and uses matches to filter traffic.

Ref. [35] created an SDN-integrated pyramidal, multi-level, conceptually decentralized multi-controller structured IDS system. The paper combines this structure with SDN data plane configurations and ML techniques to achieve a robust, real-data-trained IDS. The MULTI-BLOCK is a multi-layered defence strategy. By leveraging SDN, the framework implements granular traffic control measures, isolating infected devices and disrupting botnets. The goal is to contain attacks at the LAN level, minimizing the burden on central controllers and servers, ensuring critical network availability. It prioritizes the protection of controllers and distant nodes.

The first module is a controller-to-controller (C2C) communication framework within SD-IoT networks. It aims to reduce communication overhead and enhance intrusion detection. A secure decentralized communication scheme is introduced, with the goal of synchronized communication and minimized data exchange between controllers. Within C2C communication, one interface handles general communications, whereas the other is reserved for alerts. By adopting this approach, the system reduces both data control overhead and communication overhead among controllers.

The second module, the proposed P4-Enabled Decentralized Traffic Monitoring System (P4-DTMS), provides an efficient approach to managing IoT network traffic. The P4-DTMS module introduces a pipeline, consisting of 24 P4-enabled state tables that capture specific aspects of traffic. These state tables collectively contribute to creating a comprehensive network overview and insights into the network’s traffic patterns. Their algorithm then delves into the state table configuration.

The third module uses the extracted features to implement an ML-based, state-driven attack identifier at the data plane stage. Deployed as a firewall, it first parses inbound packets, recording and updating statistics as it goes. When the designated time window closes, the results are sent to the higher-level switch and the rules for forwarding or stopping packets are updated; while the window is still open, packets are handled according to the existing protocols.
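The window-driven behaviour described for the third module can be illustrated with a minimal Python sketch. It is not the implementation from [35]: the class name, the per-source packet counter, and the fixed threshold that stands in for the ML classifier are all assumptions made for illustration, and the `reports` list is a placeholder for the messages sent to the higher-level switch.

```python
from collections import Counter


class WindowedDetector:
    """Hypothetical sketch of a time-windowed, state-driven packet filter."""

    def __init__(self, window_seconds, max_packets_per_source):
        self.window_seconds = window_seconds
        # Assumption: a simple threshold replaces the ML model of the original work.
        self.max_packets_per_source = max_packets_per_source
        self.window_start = None
        self.counts = Counter()   # per-source statistics for the open window
        self.blocked = set()      # current drop rules
        self.reports = []         # stand-in for reports to the higher-level switch

    def on_packet(self, timestamp, source):
        if self.window_start is None:
            self.window_start = timestamp
        # When the window has closed, report statistics and refresh the rules.
        if timestamp - self.window_start >= self.window_seconds:
            self._close_window(timestamp)
        # Record the packet, then apply whatever rules are currently in force.
        self.counts[source] += 1
        return "drop" if source in self.blocked else "forward"

    def _close_window(self, now):
        self.reports.append(dict(self.counts))
        self.blocked = {
            src for src, n in self.counts.items()
            if n > self.max_packets_per_source
        }
        self.counts.clear()
        self.window_start = now


detector = WindowedDetector(window_seconds=1.0, max_packets_per_source=3)
packets = [(0.0, "a"), (0.1, "a"), (0.2, "a"), (0.3, "a"), (0.4, "a"),
           (0.5, "b"), (1.0, "a"), (1.1, "b")]
decisions = [detector.on_packet(t, src) for t, src in packets]
```

In this toy trace, source `a` is only dropped once the first window has closed and the rules have been refreshed, which mirrors the point that decisions follow the existing rules while a window is still open.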

In [10,36,37], control plane detection is supplemented by application layer modules, which are used to describe the requirements or desired behavior of the network. In these cases, this layer and the IDS module receive network data and perform ML tasks. Mazhar et al. [37] specifically highlighted the performance and scaling capabilities of their approach, suggesting that high-throughput research centers could be beneficiaries. The paper compares the performance of their IDS in a centralized vs. distributed context of IoT networks and finds that the tested IDS uses fewer resources and is well suited to low-power devices, such as IoT devices.

Ref. [38] establishes the GADAD (Genetic Algorithm DDoS Attack Detection) system, which focuses on edge-based technologies in stateful SDN-based networks. The GADAD system employs tree-based learning techniques and is designed to be deployed on edge devices in IoT networks to detect both high- and low-volume DDoS attacks. There are three main phases: network traffic preprocessing, feature engineering, and learning. In the first phase, it captures network traffic data exchanged between sensors and the edge server using Wireshark, and flow features are extracted using Zeek. The flow features are effective in both high- and low-volume attacks compared to packet-based features. The system introduces feature and depth tuning, a dual method that reduces memory usage without compromising the system’s detection capabilities. These trained models are then employed to detect and classify incoming network traffic data on the edge server.
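To make the distinction between packet-based and flow-based features concrete, the following Python sketch groups packets into flows and derives a few per-flow statistics. It is an illustrative assumption, not the output format of Zeek or the feature set of [38]: the 5-tuple key, the tuple layout of `packets`, and the chosen statistics are hypothetical.

```python
def packets_to_flows(packets):
    """Aggregate packets into flows keyed by a 5-tuple.

    Each packet is a tuple:
    (timestamp, src_ip, dst_ip, src_port, dst_port, protocol, size_bytes).
    """
    flows = {}
    for ts, src, dst, sport, dport, proto, size in packets:
        key = (src, dst, sport, dport, proto)
        flow = flows.get(key)
        if flow is None:
            flows[key] = {"packets": 1, "bytes": size, "first": ts, "last": ts}
        else:
            flow["packets"] += 1
            flow["bytes"] += size
            flow["first"] = min(flow["first"], ts)
            flow["last"] = max(flow["last"], ts)

    # Turn the running counters into flow-level features.
    return {
        key: {
            "packets": f["packets"],
            "bytes": f["bytes"],
            "duration": f["last"] - f["first"],
            "mean_packet_size": f["bytes"] / f["packets"],
        }
        for key, f in flows.items()
    }


packets = [
    (0, "10.0.0.1", "10.0.0.9", 1000, 80, "tcp", 100),
    (2, "10.0.0.1", "10.0.0.9", 1000, 80, "tcp", 300),
    (5, "10.0.0.1", "10.0.0.9", 1000, 80, "tcp", 200),
    (1, "10.0.0.2", "10.0.0.9", 2000, 80, "tcp", 60),
]
features = packets_to_flows(packets)
```

A flow summarizes many packets in a single record, so a slow attack that sends few packets still shows up through features such as duration and mean packet size.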

However, SDNs introduce trade-offs, such as the centralized controller being a single point of failure (SPOF). Additionally, as noted in [10], low-resource IoT devices remain a bottleneck despite improvements in SDNs; hence, scaling issues can appear when low-latency response times require optimized, high-performance algorithms. Ref. [35] likewise states that the standard single-controller SDN structure suffers from SPOF limitations, which also hinder scalability and performance.

4.3. Edge–Industrial IoT (IIoT) and Wireless Sensor Networks (WSNs)

Edge computing is a term indicating data processing closer to the source of information. These systems prioritize local computing and routing, minimizing latency and reducing the load on centralized servers. Ref. [39] describes edge networks as an architecture consisting of two components: edge servers and edge devices, where the latter forward computationally intensive tasks and data to the former, closer-positioned servers, rather than a central cloud or other servers.

While edge computing disperses the load of central computing across many different nodes, these edge devices have limited resources, leading to less efficient encryption/decryption and an overall lower quality of data collected from these nodes, as highlighted in [40]. Edge computing represents a decentralized computing paradigm where data processing occurs as close to the source of data generation as possible, rather than relying solely on centralized infrastructure. This shift addresses several challenges faced by IoT deployments, particularly those requiring low-latency responses and real-time decision-making.

IIoT networks are considered to be the numerous sensors and interconnected devices that monitor and control critical systems, such as manufacturing processes, manufacturing plants, power grids, etc. Combined with Edge, they form the category of Edge–IIoT networks, as discussed in [41,42,43]. Ref. [43] describes IIoT networks as larger-scale and more machine-based compared to small-scale home networks. The paper also shows their disadvantages, such as how the heterogeneity of devices and protocols often complicates integration.

Wireless Sensor Networks (WSNs), as described by [44], consist of spatially distributed sensors that monitor and collect data about their environment. The paper highlights problems and solutions of using the MQTT protocol; specifically, these networks are widely used due to their scalability and ease of deployment. Energy efficiency is a primary concern, however, as sensor nodes are often powered by batteries with limited lifespans. Mishra et al. [44] describe utilizing a technology that had its limits. It took several minutes before the test case anomaly was discovered, which could lead to major losses in real life. Their findings concur that while simulation has been very helpful in gathering data and identifying abnormalities, there are still many other routes to explore to enhance this research.

IIoT, Edge–IoT, and WSNs highlight the diversity of infrastructure approaches in IoT deployments, each addressing specific performance, scalability, and security needs. Edge–IoT prioritizes low-latency processing, IIoT emphasizes reliability and uptime in industrial contexts, while WSNs provide a flexible and scalable framework for distributed data collection. Despite their distinct focuses, these architectures often overlap in practice, creating hybrid networks that leverage their combined strengths.

4.4. Blockchain-Integrated Networks (BINs)

Blockchain technology introduces a decentralized ecosystem that enhances security and privacy in IoT networks. These networks can leverage features like immutable data records, distributed consensus, and smart contracts.

The blockchain is a decentralized and immutable storage model that consists of all transaction details that have been initiated by the peer node in the network, stored in a decentralized distributed ledger. Any transaction processed is verified by the consent of the majority of network devices [45].

Studies such as [45,46,47,48] highlight how implementing blockchain-enabled solutions eliminates the need for a trusted central authority by distributing control across all network participants, which enhances fault tolerance and reduces the risk of single points of failure. It also guarantees data integrity and a verifiable trail, building on the extra security of cryptographic hashing and digital signatures. The automated contracts stored in the blockchain can enforce predefined rules and execute actions without intervention, enabling in-place decision-making and alerting or taking appropriate actions. Some proposed systems integrate blockchain into their established IoT network [45,47], while others [46,48] append it with a separate blockchain authentication managing network.
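The data-integrity property mentioned above rests on hash chaining, which the following Python sketch illustrates. It is a deliberately reduced model under stated assumptions: the block layout and function names are hypothetical, and consensus, digital signatures, and smart contracts are left out entirely.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, payload):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    data = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a block that commits to the hash of its predecessor."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })


def is_valid(chain):
    """Recompute every hash and check that each block links to the previous one."""
    prev_hash = GENESIS_PREV
    for i, block in enumerate(chain):
        expected = block_hash(i, prev_hash, block["payload"])
        if block["index"] != i or block["prev"] != prev_hash or block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


chain = []
for event in ["device-1 joined", "device-2 joined", "alert: flood from device-2"]:
    append_block(chain, event)

valid_before = is_valid(chain)
chain[1]["payload"] = "device-2 never joined"  # tamper with a stored record
valid_after = is_valid(chain)
```

Because each block commits to the hash of the one before it, altering a stored record invalidates the chain from that point on, which is what makes the trail verifiable.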

Ref. [49] utilizes blockchain technology in a smart city/urban environment; however, it mentions that this approach introduces computational overhead, which can limit performance in resource-constrained environments. Furthermore, storage requirements increase because each node, of which there can be thousands, stores a complete copy of the blockchain ledger. These resource-heavy protocols also introduce latency, throughput bottlenecks, and higher energy consumption, which makes them unsuitable for some use cases. Ref. [45] states that although blockchain is verifiable and immutable, it is vulnerable to different, increasingly common attacks. DDoS attacks, often caused by flooding the memory pool (mempool) of a blockchain network, have severe consequences for legitimate users.

4.5. Medium-Sized Networks

In a two-part paper, Guerra-Manzanares et al. [50,51] explore IDSs for medium-sized networks, which are described as having “up to 83 devices”. In [50], it is mentioned that such networks represent a balance between scalability and performance. These networks are often employed in testbeds and simulations to evaluate IDS performance under realistic loads without overwhelming computational resources.

In [51], Guerra-Manzanares et al. detail how the dataset was created for this specific kind of circumstance. They state that this research aims to fill this substantial gap by providing a novel IoT dataset acquired from a medium-sized IoT network architecture, containing both real and emulated devices with 80 virtual devices and 3 physical devices deployed. They mention that the size extension allows for the capture of malware spreading patterns and interactions that cannot be observed in small-sized networks and that no dataset uses the combination of emulated and real devices within the same network. The dataset is composed of normal and actual botnet malicious network data acquired from all the endpoints and servers during the initial propagation steps performed by Mirai, BashLite, and Torii botnet malware.

4.6. Vehicle-Related Networks

Vehicle-related networks, also known as the Internet of Vehicles (IoV), are an infrastructure based on connectivity between vehicles with road-side units, such as cameras or other electronic devices [52,53]. Smart vehicular networks improve many factors, such as road safety, driving experience, and decision-making based on the collected information, and are used to reduce accidents and increase the performance of driving. Securing against the alteration, monitoring, and removal of vital messages has been a big concern due to the vehicle’s connection over a wireless medium making several types of attacks viable, including DoS/DDoS. Additional difficulties arise due to the traditional design of vehicles, which often lacks comprehensive security considerations, particularly regarding autonomous functionality and communication capabilities. Furthermore, the growing number of networked vehicles increases the attack surface, introduces resource constraints, and adds complexity to modern automotive systems.

Because of these reasons, reactive/predictive IDSs have recently received more attention. Refs. [52,53] emphasize real-time communication and low-latency operations. These networks are essential for autonomous vehicles and smart traffic systems, where the speed of data exchange and reliability can impact safety and performance.

Gad et al. [53] train classifiers on the TON_IoT dataset and apply them to vehicular ad hoc networks, showing strong results despite the dataset being general, supported by several measures taken against overfitting. Their aim is to employ their model on both the vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) parts of the network.

Ullah et al. [52] used specific car-hacking datasets. In their paper, they propose an ML model with high accuracy, with the intent for it to be deployed on the vehicles’ firewall. In the intra-vehicle network, the internal smart devices of a vehicle communicate with each other and control the communication of the vehicle in the inter-network. The hacker can target the internal network of the vehicle, which is challenging to defend against, as it is already met with high demand and resource requirements due to its low latency and high-pace environment.

4.7. Home-Built Networks

One study took the approach of a custom-built IoT network deployed in a controlled environment. Kalnoor and Gowrishankar [54] constructed a physical IoT setup in an office space, featuring typical devices like smart cameras, thermostats, and sensors (the exact list of peripherals can be found in Table A1).

This approach allows for real-world validation of IDS performance but is less common due to higher resource demands. While it makes a case for robust, well-supported data, the fixed setup may also come at the cost of flexibility and of coverage of edge cases.

4.8. Performance

For performance, the majority of papers focus on the machine learning aspect for optimization. The authors of [55] propose that their model is much more effective than the previous state-of-the-art models, thanks to feature selection methods that narrow the feature dimensionality down to only 15 features. In comparison, the authors of [56] defined novel traffic flow features for their ML model, which fit within the limited resources of IoT network platforms.

Others, such as Ref. [57], use specific tools, such as Spark, a widely popular data engineering tool in Big Data contexts, for fast processing time and efficiency; utilized in the cloud layer, it improves ML training, and the model is then deployed at the edge. Ref. [58] states that the improved correctness and application performance brought on by deep learning can be used to detect novel attacks in IoT systems, which is vital in the context of securing lightweight IoT networks. The authors of [59] report that their ML model, after training and installation on the network’s firewall, classified almost 3 million messages in 1 s.

There have also been different approaches to deploying IDSs, either integrating them into the existing network or appending an additional system to it. Ref. [46] uses a designated Blockchain Server (BCS) responsible for recording and validating transactions, while Ref. [60] chooses to add a dedicated ML server to combat these attacks on the network. In contrast, SDN-based approaches such as [37] often use the flexibility of SDN to integrate the IDS into the already established network; there, it is said that employing an SDN core enables real-time intrusion detection and mitigation. In [34], Bhayo et al. adjusted the existing network by adding a sink module to the IoT controller, containing a logging module that logs all incoming packets in the forwarding layer. These logs are recorded in the controller’s directory.

Table 2.

Studies categorized by infrastructure.

| Infrastructure | # of Papers | Citations |
|---|---|---|
| General IoT network, dataset-focused | 32 | [28,29,30,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82] |
| Software-Defined Networks (SDNs) | 9 | [10,31,32,33,34,35,36,37,38] |
| Edge, Edge–IIoT, Wireless Sensor Network (WSN) | 6 | [39,40,41,42,43,44] |
| Blockchain-integrated networks (BINs) | 5 | [45,46,47,48,49] |
| Vehicle-related, Internet of Vehicles | 2 | [52,53] |
| Medium-sized networks | 2 | [50,51] |
| Home-built network | 1 | [54] |

4.9. Conclusion

The analysis highlights that researchers aim to build adaptable solutions capable of functioning across diverse environments, leading to minimal focus on specific deployments. In these general cases, high-quality data take precedence, often overshadowing considerations related to network infrastructure.

Nevertheless, some architectural patterns stand out. For well-established technologies, SDNs, Edge, and Edge–IIoT emerge as popular choices. SDNs have been widely adopted due to their scalability and programmability. Similarly, edge computing has emerged as a vital component in distributed computing by bringing computation closer to data sources, reducing latency, and improving efficiency. Additionally, WSNs have been instrumental in industrial contexts, supporting distributed sensor networks for real-time data collection and analysis. While these technologies are widely known, blockchain has emerged as a research topic in recent years. Beyond its origins, blockchain and its ledger system have since been explored for other use cases and have evolved into a versatile, decentralized framework across various domains. It offers promising enhancements in security but introduces performance trade-offs, as it is costly to monitor all transactions.

Table 3.

Studies assessed by their IDS deployment (only including ones that focused, or made remarks about, infrastructure).

| Paper | Network Type | Deployment Strategy | Data Source | IDS Detection Location | Response Timing |
|---|---|---|---|---|---|
| [45] | BIN | Fog cloud IDS | Nodes + Blockchain host | on site | instant |
| [46] | BIN | Separate blockchain network | Nodes + Blockchain host | on site | instant |
| [47] | BIN | Transactions in blockchain blocks | Blockchain network | on site | instant |
| [48] | BIN | Gateway with blockchain network access | Gateway traffic | on site | instant |
| [49] | BIN, smart city | Device + network | Blockchain Authenticator | on site | instant |
| [39] | Edge–IIoT | Flow controller Edge server | Edge–IoT devices | on site | instant |
| [40] | Edge, Sensors | IDS installed on cloud servers | Sensors | off site | after analysis |
| [43] | Edge–IIoT, 6LoWPAN | Sensor traffic-based | Sensors | off site | after analysis |
| [28] | General | Fog layer | IoT/non-IoT devices | - | - |
| [29] | General | Fog computing | - | - | - |
| [59] | General | Network firewall | - | - | - |
| [60] | General | Dedicated ML server | - | - | - |
| [30] | General | Fog/cloud layer + IoT gateway | Gateway traffic | on site | instant |
| [57] | General | Cloud layer ML training via Spark | IDS in edge layer | on site | instant |
| [52] | IoV | Intra/inter-vehicle data | Combined datasets | - | - |
| [53] | IoV | VANET servers | Dataset based | - | - |
| [51] | Medium-sized network | Monitoring server separately | Monitoring server | off site | after analysis |
| [50] | Medium-sized network | Monitoring server separately | Monitoring server | off site | after analysis |
| [44] | WSN, MQTT protocol | Integrated IDS | IoT devices | on site | after analysis + instant |
| [32] | SDN WISE | SDN WISE controller | SDN traffic data | on site | instant |
| [10] | SDN | SDN data + application plane | SDN traffic data | on site | instant |
| [36] | SDN | SDN control + application layer | SDN traffic data | on site | instant |
| [31] | SDN | SDN control plane | SDN traffic data | on site | instant |
| [33] | SDN | SDN control plane | SDN traffic data | on site | instant |
| [37] | SDN | SDN application layer | SDN traffic data | on site | instant |
| [34] | SDN WISE | SDN WISE control plane | SDN traffic data | on site | instant |
| [35] | SDN w/PCDMCS | Decentralized IDS + SDN data plane | SDN traffic data | on site | instant |
| [38] | SDN, Edge | Edge servers | Gateway traffic | on site | instant |
| [54] | WSN, Home built | IoT gateway | Gateway traffic | on site | instant |

5. Overview of Reviewed Datasets for ML-Powered IDS

Different datasets are necessary to train and test the machine learning algorithms, which are the foundation of this literature review. This chapter aims to examine the data more closely, highlighting the most used datasets, exploring them in more detail, and identifying some common patterns and differences between them. All papers in this survey were reviewed, and different insights came from this research.

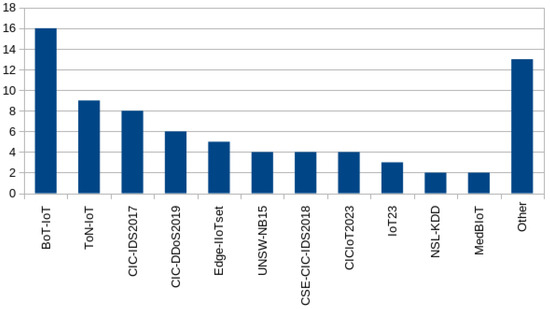

Datasets are usually made up of one or more files that may represent a specific file or attack type. The most common file types are pcap, csv, and txt. These files may have the same number of features, records, and benign/attack flows, constituting a balanced dataset; however, unbalanced datasets are far more common. From our research, only the Edge_IIoT dataset [83] came close to being considered balanced, the sole one out of the eight most popular datasets used according to Figure 1. Using imbalanced datasets may lead to biased trained models, though depending on the specifics of the ML algorithm and data preprocessing, this does not have to be the case.

Figure 1.

Dataset distribution among reviewed papers.

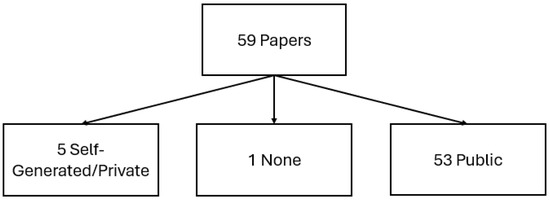

Generally, datasets can be divided into public and private, that is, those one can openly access and use and those one cannot. Figure 2 showcases how many papers utilized public or private datasets, excluding the papers that only include literature reviews. As is noted, in one case [32], no dataset was specified whatsoever. The 53 papers that included public datasets are listed in Table 4, alongside their year of publishing, the number of both records and features, as claimed by the authors, and whether DDoS was included as an attack. What must be noted is that due to the high variance in the way these datasets are structured and created, some values in the table may represent an approximation of such values.

Figure 2.

Overview of dataset distribution.

Furthermore, among the papers that included public datasets, on three occasions [38,39,54], the paper also made use of self-generated or private datasets on top of the public one to enhance or diversify the data or for testing purposes.

In the cases where self-generated or private datasets were used, the description of how these datasets were acquired varied heavily. For example, Ref. [84] creates a tool that is a network traffic generator for IoT devices and tests the proposed tool as well as the generated malicious and benign data in the paper. In other cases [37,38], the paper describes which existing tools were used to gather the data points and gives a general idea of the number of flows, features, and/or traffic types that are a part of the dataset.

The overwhelming majority of papers carried out some form of data processing, e.g., feature filtering or extraction, as the typical first step. In general, the papers chose a subset of both the features and flows available in the dataset. In fact, this step seems to be the foundation in the entire domain of ML-based IDSs, as several papers [40,78] were primarily written with the purpose of proposing improved data preprocessing. One particular paper [72] stands out from the rest as a standard dataset was used, but the network traffic data were turned into images, and computer vision ML was utilised to identify DDoS attacks.

Among the papers, several common themes were repeated. Firstly, we found that multiple papers noted the general lack of publicly available, quality IoT datasets to be used in their research. The stated reason for the lack of datasets was privacy, as large companies tend not to share their data with researchers [85]. In particular, in cases where industry-specific IoT network traffic was necessary, the papers claimed there to be no available datasets, which typically resulted in them creating and publishing their own, as was the case for MedBIoT [51], or self-generating a private dataset, as the paper with a focus on smart agriculture did [86]. Further concerns were noted regarding unbalanced datasets, which may have an uneven benign-to-attack traffic ratio, and unlabeled datasets. While the majority of the reviewed datasets explicitly included DDoS attacks, a small subset did not specify their presence, instead relying on DoS or other flooding attacks that may serve as proxies for DDoS. As shown in Table 4, most studies in our survey utilized datasets that explicitly feature DDoS attacks. In contrast, a few older or industry-specific datasets, such as X-IIoTID [87], MedBIoT [51], and KDDCUP 1999 [88], which are also relatively infrequent in the literature, either lacked explicit DDoS attack labels or did not provide sufficient information to confirm their inclusion.

From Figure 1, we observe the most popular datasets to be BoT-IoT [26], TON_IoT [85], and CIC-IDS2017 [89]. Other CIC datasets are also seen among the most popular datasets. For the sake of brevity and clarity, TON_IoT and BoT-IoT will be described in greater detail alongside the CIC datasets, with a special focus on CIC-IDS2017.

5.1. BoT-IoT

The BoT-IoT dataset [26] was created by the Cyber Range Lab of UNSW Canberra in 2019. The dataset is labeled, imbalanced, and was generated in a realistic testbed, usable for both binary and multiclass classification. The researchers collected data from five simulated IoT scenarios (weather station, motion-activated lights, garage door, smart fridge, and smart thermostat) in a testbed environment. Originally, 32 features were collected, such as IP addresses or port numbers, from which an additional 14 flow features were generated, like total or average bytes per IP, totalling 46 features for the dataset. Five types of attacks are represented in the dataset: DDoS, DoS, OS and Service Scan, Keylogging, and Data exfiltration. There are over 73,000,000 attack instances and just under 10,000 normal traffic instances in BoT-IoT, constituting an imbalanced dataset. Of the attack records, over 38,000,000 account for DDoS attacks specifically. This imbalance has prompted researchers in the domain to develop solutions to overcome the challenges surrounding the use of the dataset, either by merging it with other datasets [90] or by applying various algorithms [91] to balance the dataset out.
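To give a sense of the scale of this imbalance, the short Python sketch below computes the attack-to-normal ratio and inverse-frequency class weights from rounded counts. The counts are approximations of the figures quoted above (about 73,000,000 attack and 10,000 normal records), and the weighting formula is a common general-purpose heuristic used here as an assumption; it is not the balancing method of [90] or [91].

```python
def balanced_class_weights(counts):
    """Inverse-frequency weights: total / (number_of_classes * class_count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Rounded approximations of the BoT-IoT record counts quoted in the text.
bot_iot_counts = {"attack": 73_000_000, "normal": 10_000}

imbalance_ratio = bot_iot_counts["attack"] / bot_iot_counts["normal"]
weights = balanced_class_weights(bot_iot_counts)
```

With these rounded counts there are roughly 7300 attack records for every normal record, so a weighting scheme of this kind would have to up-weight normal traffic by more than three orders of magnitude relative to attack traffic.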

Table 4.

Table of public datasets (✓: includes DDoS attacks in the dataset; -: not included or unknown).

| Dataset | Year | DDoS | # of Records | # of Features | References of Papers |
|---|---|---|---|---|---|
| KDDCUP 1999 [88] | 1999 | - | 4,898,431 | 41 | [78] |
| DARPA2000 [92] | 2000 | ✓ | 200,000+ | 41 | [54] |
| NSL-KDD [93] | 2009 | - | 150,000+ | 43 | [33,66] |
| ISCX 2012 [94] | 2012 | ✓ | 2,450,324 | - | [31] |
| CTU-13 [95] | 2014 | ✓ | 15,000,000 | - | [31] |
| UNSW-NB15 [96] | 2015 | - | 250,000+ | 48 | [56,57,66,70] |
| SNMP-MIB Dataset [97] | 2016 | - | 4998 | 34 | [44] |
| CIC-IDS2017 [89] | 2017 | ✓ | 2,830,743 | 80 | [29,33,52,64,68,69,71,79] |
| N_BaIoT [98] | 2018 | - | 7,062,606 | 23 | [40] |
| Mirai [99] | 2018 | ✓ | 750,000+ | 115 | [56] |
| CSE-CIC-IDS2018 [100] | 2018 | ✓ | 16,232,943 | 79–83 | [52,68,69,80] |
| DS2OS [101] | 2018 | ✓ | 355,902 | 11 | [76] |
| CIC-DDoS2019 [102] | 2019 | ✓ | 94,000+ | 74 | [39,49,61,65,68,72] |
| BoT-IoT [26] | 2019 | ✓ | 73,360,900 | 46 | [28,30,45,48,55,56,58,61,62,63,67,73,75,76,78,82] |
| IoT23 [103] | 2020 | ✓ | 325,307,990 | 23 | [57,60,81] |
| TON_IoT [85] | 2020 | ✓ | 22,000,000+ | 22–52 | [35,38,53,55,56,57,58,61,75] |
| IoTID20 [104] | 2020 | - | 100,000+ | 86 | [36] |
| Application-Layer DDoS Dataset [105] | 2020 | - | 346,869 | 78 | [47] |
| IoT-CIDDS [77] | 2021 | ✓ | 95,299 | 21 | [77] |
| ETF IoT Botnet [106] | 2021 | - | 2245 | 9 | [56] |
| Edge-IIoTset [83] | 2022 | ✓ | 20,952,648 | 61 | [10,35,41,42,43] |
| X-IIoTID [87] | 2022 | - | 820,834 | 68 | [35] |
| MedBIoT [51] | 2022 | - | 17,845,567 | 100 | [50,51] |
| CICIoT2023 [107] | 2023 | ✓ | 45,000,000+ | 47 | [46,59,74,76] |

5.2. TON_IoT

The TON_IoT dataset [85] was also created by the Cyber Range Lab of UNSW Canberra in 2020. The dataset is labeled, unbalanced, and was generated from an IoT/IIoT network testbed. The dataset contains data from heterogeneous sources, gaining its name (TON) from the data it includes: telemetry, operating systems, and network. The researchers include simulated sensor data from seven IoT/IIoT sensors (weather station, motion-activated lights, garage door, smart fridge, smart thermostat, Modbus service, and GPS) as well as real devices: two phones and a smart TV. Nine types of attacks are represented in the dataset, including Scanning, DoS, DDoS, ransomware, backdoor, data injection, Cross-site Scripting, password cracking attack, and Man-in-The-Middle. There are over 22,000,000 total data records, of which just under 800,000 are normal traffic, which means TON_IoT is also an imbalanced dataset. Over 6,000,000 of the records are from DDoS attacks. Since the dataset contains multiple sub-datasets with unique processed or raw data [25], it is not clear how many features the dataset holds in total, though the combined dataset called combined_IoT_dataset proposed by the original paper [85] uses a total of 22 features.

5.3. CIC-IDS2017

All of the CIC datasets are created by the Canadian Institute for Cybersecurity, sometimes in collaboration with an external institution [27]. CIC-IDS2017 [89] is the first IDS dataset that the CIC created, back in 2017. The dataset is labeled, imbalanced, and was generated based on the most common attacks in 2016. The researchers aimed to create naturalistic benign background traffic and set out to mimic a real network traffic capture in a complete network configuration. Seven types of attacks are represented in the dataset: DoS, DDoS, Brute Force, Heartbleed, Web, Infiltration, and Botnet. Almost 2,300,000 of the records account for benign traffic, while the remaining 500,000 are attacks, with the DoS (250,000) and DDoS (128,000) attacks accounting for over half of the attack records. The researchers mention that the dataset contains over 80 features, though in all analyses with this dataset, including the researchers’ own [89], only 80 or fewer features are extracted. Given the ratio of benign to attack records, this dataset is considered to be imbalanced, and, similar to the previously discussed datasets, different mitigation strategies for this imbalance are employed by further research [108].

6. ML Performance Review in IDS

6.1. ML Performance Comparison

This chapter reviews various ML techniques for DDoS attack detection, comparing their performance across multiple datasets. The analysis aims to identify patterns and determine the most effective models for this task.

For each paper reviewed, the analysis prioritized the highest-performing models as reported for a given dataset. When a model was evaluated across multiple datasets, preference was given to the dataset containing the largest number of records, as larger datasets often provide more reliable performance insights. Although the BoT-IoT dataset is frequently used in these studies, this review preferred the TON_IoT dataset. This choice reflects the higher number of classes in the TON_IoT dataset in comparison to BoT-IoT (Section 5).
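The selection rule described above can be written down as a short Python sketch. This is one possible reading of the rule, not the authors' actual procedure: the function name and tuple layout are hypothetical, the record counts are taken from Table 4 (with TON_IoT's "22,000,000+" rounded down), and the accuracy values in the example are made-up placeholders rather than results from any reviewed paper.

```python
# Record counts as listed in Table 4 (TON_IoT appears there as "22,000,000+").
DATASET_RECORDS = {
    "BoT-IoT": 73_360_900,
    "TON_IoT": 22_000_000,
    "CIC-IDS2017": 2_830_743,
}


def select_result(results):
    """Pick one (dataset, model, accuracy) entry per paper.

    results: list of (dataset, model, accuracy) tuples reported by one paper.
    """
    datasets = {dataset for dataset, _, _ in results}
    # Exception stated in the text: TON_IoT is preferred over BoT-IoT.
    if "TON_IoT" in datasets:
        datasets.discard("BoT-IoT")
    # Otherwise prefer the dataset with the largest number of records.
    chosen = max(datasets, key=DATASET_RECORDS.__getitem__)
    # Within the chosen dataset, keep the highest-performing model.
    return max((r for r in results if r[0] == chosen), key=lambda r: r[2])


# Placeholder values for a hypothetical paper, for illustration only.
example_paper = [
    ("BoT-IoT", "RF", 0.999),
    ("TON_IoT", "RF", 0.991),
    ("TON_IoT", "DT", 0.987),
]
selected = select_result(example_paper)
```

Writing the rule out this way makes the tie-breaking order explicit: dataset choice comes first, and model performance is compared only within the chosen dataset.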

However, identifying the “best” model without a settled benchmark is not an easy task. The performance metrics used, such as accuracy, precision, and recall, often vary significantly depending on the dataset characteristics, evaluation methodologies, experimental setups, and preprocessing. Understanding the specific contexts and limitations of each dataset is crucial. These are discussed in detail in Section 5.

It is also important to note that the optimal models for binary classification tasks are not always the best for multiclass classification, as these tasks aim for different objectives and face various dataset characteristics, e.g., Ref. [45] proposed XGBoost for binary classification and Random Forest (RF) for multiclass classification.

Inconsistent reporting of evaluation metrics across studies (e.g., [78]) is another challenge, which complicates direct comparisons. DDoS detection can be approached as either binary (e.g., distinguishing between attack and normal traffic) or multiclass (e.g., identifying specific types of traffic or attack categories). To perform the analysis, specific metrics were extracted from each study, including accuracy, precision, recall, and F1 score, whenever reported. These metrics were selected based on their usefulness for the task and their ability to provide a balanced measure of model performance, particularly in the presence of class imbalance.

Some studies, such as [31], were excluded from this comparison due to ambiguity or the absence of precise numerical metrics.

The datasets employed in the reviewed studies were examined to provide context for interpreting the findings. This was followed by the construction of the comprehensive performance tables (Table 5 and Table 6). These tables, sorted by accuracy for both binary and multiclass classification tasks, reveal several significant trends in the field. A notable observation is that most studies report accuracy above 90%; only two cases fall below this threshold [36,66]. This high performance often results from sophisticated methods such as feature engineering, dimensionality reduction, and algorithm-level optimizations, which play a significant role in DDoS detection tasks.

Table 5.

Binary classification performance (sorted by accuracy).

Table 6.

Multiclass classification performance (sorted by accuracy).

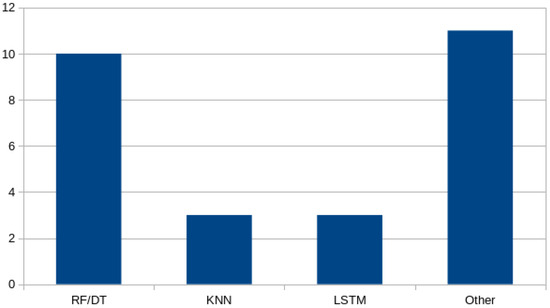

There were significant performance variations between the ML models. For binary classification, RF, DT, and XGBoost were the best performers overall. In contrast, for multiclass classification, the RF, K-Nearest Neighbors (KNN), and Long Short-Term Memory (LSTM) models performed better, suggesting that the flexibility and robustness of these methods make them better suited to the increased complexity of the multiclass problem. These findings confirm the importance of algorithm selection for maximizing outcomes.

While the majority of studies emphasize accuracy as the primary measure, accuracy is sensitive to class distribution and can be misleading when the datasets are highly imbalanced. In response to this limitation, numerous studies have placed emphasis on measures such as the F1 score, which balances recall and precision. This shift underlines the need for standard reporting practices, since they enable fair and meaningful comparisons among studies and support the development of robust DDoS detection methodologies.
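The gap between accuracy and F1 score under class imbalance can be illustrated with a minimal sketch. The confusion-matrix counts below are hypothetical and are not taken from any reviewed study; the helper name `binary_metrics` is likewise only illustrative.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical test set of 10,000 flows in which the positive class is rare
# (100 records). The classifier finds only 10 of them and raises no false alarms.
metrics = binary_metrics(tp=10, fp=0, fn=90, tn=9900)

for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this constructed case the accuracy stays above 99% even though nine out of ten minority-class records are missed, whereas the F1 score falls below 0.2.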

It is hard to compare the time complexity of different machine learning techniques across various datasets due to differences in implementation, hardware, and the nature of the datasets. Some of the papers reported ADT (Average Detection Time), but most did not. To address this, we chose to calculate the Average Validation Time (AVT), i.e., the time it took to run models on test datasets, grouped by dataset. Although AVT is a useful rough estimate of model efficiency, it is by no means flawless. It does not account for preprocessing steps or the specific feature sets used, both of which can have a significant impact. However, we believe that AVT is a handy metric for gaining insight into the relative computational expense of different models on the given datasets.
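As a rough illustration of how a validation-time figure of this kind could be measured, the sketch below times a prediction function on a test set and normalizes by the number of records. The per-record normalization, the averaging over repeated runs, and the names `average_validation_time_ms` and `threshold_predict` are assumptions made for this example; the text above does not specify how AVT was normalized.

```python
import time


def average_validation_time_ms(predict, test_records, repeats=3):
    """Mean wall-clock prediction time per test record, in milliseconds.

    Assumption: the figure is normalized per record and averaged over
    `repeats` runs; preprocessing time is deliberately not included.
    """
    if not test_records:
        raise ValueError("test_records must not be empty")
    elapsed = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        predict(test_records)
        elapsed += time.perf_counter() - start
    mean_run_seconds = elapsed / repeats
    return mean_run_seconds * 1000.0 / len(test_records)


def threshold_predict(records):
    """Hypothetical stand-in for a trained model: flags records whose first feature exceeds 0.5."""
    return [1 if record[0] > 0.5 else 0 for record in records]


# Synthetic single-feature test set, used only to exercise the timer.
records = [(i / 1000.0,) for i in range(1000)]
avt_ms = average_validation_time_ms(threshold_predict, records)
print(f"AVT: {avt_ms:.6f} ms per record")
```

Values obtained this way depend on the hardware and on what `predict` includes, which is one reason such figures are only comparable within a single experimental setup.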

6.2. Binary Classification

6.2.1. Observations

Table 7, Table 8 and Table 9 indicate that most of the studies achieved an accuracy rate higher than 90%, with a few achieving near-perfect accuracy. More advanced preprocessing techniques like dimensionality reduction and feature engineering often resulted in improved performance. DT, RF, and ensemble algorithms like XGBoost repeatedly ranked high in the best-performing category.

Table 7.

Binary classification performance for BoT-IoT (sorted by accuracy); AVT: Average Validation Time.

Table 8.

Binary classification performance for TON_IoT (sorted by accuracy); AVT: Average Validation Time, ADT: Average Detection Time.

Table 9.

Binary classification performance for CIC-DDoS2019 (sorted by accuracy); AVT: Average Validation Time.

In the TON_IoT dataset, DT and RF achieved perfect accuracy, benefiting from the preprocessing employed in [55,61]. Similarly, XGBoost demonstrated exceptional accuracy (99.9987%) on the BoT-IoT dataset, underscoring its robustness [45]. This can also be attributed to carefully designed features and a controlled attack setting. High performance often reflects an ideal scenario where the distinguishing features are quite clear. However, the controlled nature of the dataset might not capture the full complexity found in real-world environments.

The CIC-DDoS2019 dataset showed the lowest accuracies and the widest performance spread of the three datasets. This variation could reflect the complexity of the DDoS attack patterns present in the dataset. The use of deep learning techniques such as CNNs may be a further indication of this complexity, since such methods can improve performance when they operate on spatial or sequential representations of the data.

The general tendency in the performance of the studied models indicates that models based on neural networks require more time and impose higher computational overhead [61], while XGBoost, DT, RF, and ET are faster. However, in the CIC-DDoS2019 dataset, CNN-based models outperformed tree-based and SVM-based alternatives (Table 9).

6.2.2. Challenges in Comparison

Many studies prioritize accuracy, neglecting other key metrics like recall, precision, or F1 score, masking the impact of class imbalance in the datasets. The choice of dataset significantly affects model performance due to variations in traffic volume and class distribution. Hyperparameter tuning and dataset-specific optimizations further limit the validity of direct comparisons.

6.2.3. Trends and Insights, Table 7, Table 8 and Table 9

Ensemble models such as RF and XGBoost are usually the preferred choices in binary classification problems since they can efficiently capture complex patterns. Custom preprocessing methods, as seen in studies [61,72], significantly enhance performance, highlighting the importance of dataset-specific adjustments.

6.3. Multiclass Classification

6.3.1. Observations

Similar to binary classification, many studies also achieved high accuracy in multiclass classification. Models based on the RF technique dominate in the BoT-IoT dataset. All of the studies using this dataset for multiclass classification exceeded 99% accuracy.

In the case of the TON_IoT dataset, the techniques used are more diverse. RF (including GADAD-RF) achieved perfect scores of 100% [38,55]. Decision Trees and LSTM were just behind the RFs, with accuracies of 99.9% [61] and 99% [58], respectively. However, the studies using KNN and voting performed worse (F1 scores: 97.4% [53] and 88.63% [75]). These results suggest that, on this dataset, more elaborate model combinations did not translate into better predictions.

Unfortunately, the studies covering NN models did not provide testing time; thus, we were unable to benchmark them against tree-based models for multiclass classifiers. However, Table 10 shows that [52] performed ≈45 and ≈19 times slower than the two tree-based equivalents [67].

Table 10.

Multiclass classification performance for BoT-IoT (sorted by accuracy); AVT: Average Validation Time, ADT: Average Detection Time.

6.3.2. Challenges in Comparison

Incomplete reporting of metrics, such as missing F1 scores, limits the reliability of comparisons. Skewed class distributions in multiclass datasets inflate accuracy but obscure the performance of minority classes.
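How a skewed class distribution can hide weak minority-class performance may be sketched with per-class recall. The labels and counts below are hypothetical and chosen only for illustration, and the function name `per_class_recall` is an assumption of this example.

```python
from collections import Counter


def per_class_recall(y_true, y_pred):
    """Recall for each class that appears in y_true."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / support[label] for label in support}


# Hypothetical 1,000-record test set dominated by one attack class.
y_true = ["ddos"] * 950 + ["dos"] * 40 + ["scan"] * 10
# The model labels every "scan" record and 10 of the "dos" records as "ddos".
y_pred = ["ddos"] * 950 + ["dos"] * 30 + ["ddos"] * 10 + ["ddos"] * 10

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recalls = per_class_recall(y_true, y_pred)
macro_recall = sum(recalls.values()) / len(recalls)

print(f"accuracy: {accuracy:.3f}")
print(f"macro-averaged recall: {macro_recall:.3f}")
for label, value in recalls.items():
    print(f"recall[{label}]: {value:.2f}")
```

Here the overall accuracy is 98% while the smallest class is never detected, which only becomes visible once per-class or macro-averaged figures are reported.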

6.3.3. Trends and Insights, Table 10 and Table 11

While RF dominates multiclass tasks, models based on neural networks such as LSTM [52,58] demonstrate growing potential, particularly for sequential data. Tailored preprocessing and feature engineering are critical, as evidenced by studies [38,61]. Standardized reporting practices remain essential for ensuring fair comparisons and reproducibility.

Table 11.

Multiclass classification performance for TON_IoT (sorted by accuracy); AVT: Average Validation Time, ADT: Average Detection Time.

| Paper | Accuracy [%] | Precision [%] | Recall [%] | F1 Score [%] | AVT [ms] | ADT [ms] | Highest Accuracy ML Technique |
|---|---|---|---|---|---|---|---|
| [38] | 100 | 100 | - | 100 | 0.022 | - | GADAD-RF |
| [55] | 100 | 100 | 100 | 100 | 0.795 | - | RF |
| [61] | 99.9 | 99.9 | 99.9 | 99.9 | - | 170 | DT |
| [58] | 99 | 99 | 99 | 99 | - | - | LSTM |
| [53] | 98.5 | 98.2 | 95.9 | 97.4 | - | - | KNN |
| [75] | 96.32 | 93.12 | 84.55 | 88.63 | - | - | Voting (DT, MLP RProp, Logistic Regression) |

7. Discussion

7.1. Datasets

The reviewed studies reveal a strong preference for the BoT-IoT and TON_IoT datasets due to their suitability for both binary and multiclass classification tasks. Both datasets offer rich, labeled data gathered from real IoT/IIoT environments and are therefore highly relevant for cybersecurity research. However, their significant class imbalances pose challenges that require additional preprocessing or algorithmic strategies to ensure balanced performance across classes. The BoT-IoT dataset, with its smaller number of attack classes, is particularly popular, while the more diverse TON_IoT dataset provides opportunities to evaluate model performance across a wider range of scenarios. By comparison, datasets like CIC-IDS2017 are also used, but their focus on particular attack types and features makes them less generally applicable to IoT use cases. The distribution of datasets among the reviewed papers is summarized in Figure 1.

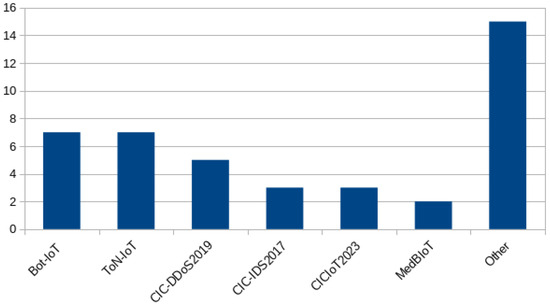

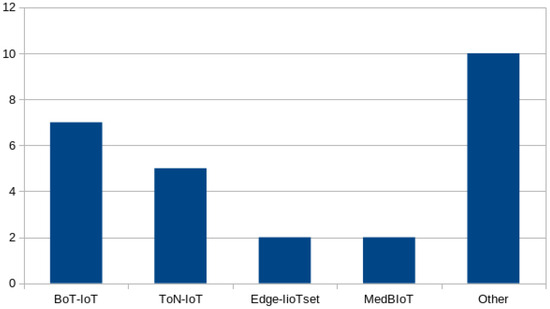

There is no significant difference in the popularity of datasets used for binary and multiclass classification tasks. BoT-IoT remains the most popular dataset, followed very closely by TON_IoT, because both datasets are suitable for both tasks. Binary classification (labeling data flows as benign or attack) appears to be tackled more often (42 studies) than multiclass classification, which focuses on distinguishing between different attack types (27 studies). The distribution of datasets employed for binary and multiclass classification comparisons is presented in Figure 3 and Figure 4, respectively.

Figure 3.

Distribution of datasets used for comparison of binary classification.

Figure 4.

Distribution of datasets used for comparison of multiclass classification.

7.2. Machine Learning Techniques

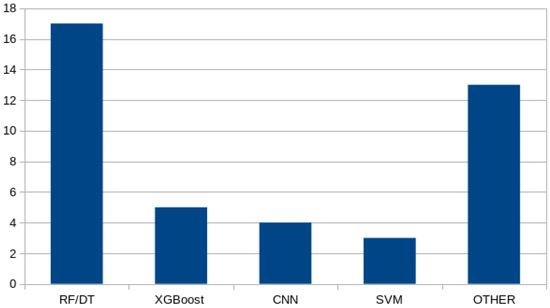

In binary classification tasks, the Random Forest (RF) and Decision Tree (DT) algorithms perform exceptionally well, along with XGBoost. However, for multiclass classification, XGBoost is notably absent as a standalone model. Simple tree-based models appear to outperform others, likely because of their robustness against overfitting. Figure 5 and Figure 6 present the distribution of ML techniques employed for binary and multiclass classification, respectively.

Figure 5.

Distribution of ML techniques used for comparison of binary classification.

Figure 6.

Distribution of ML techniques used for comparison of multiclass classification.

7.3. Resource Usage

The hardware used varies from basic laptops with low-end configurations to high-performance computing (HPC) facilities and cloud setups, as can be seen in Table A1. For instance, the majority of the studies used personal computers or laptops with Intel Core i5 or i7 processors and 8–32 GB of RAM. While these setups are sufficient for small datasets and general machine learning models, they struggle to handle large datasets or deep, computationally intensive models. In contrast, more powerful systems, such as HPC clusters or machines equipped with GPUs such as the NVIDIA Tesla or RTX 4070 Ti, allow researchers to process large datasets and to train computationally intensive models such as CNNs and LSTMs considerably faster.

One of the most noticeable trends is the dominance of Windows operating systems in most experiments, with Ubuntu or other Linux distributions used mainly where cloud or server-based setups were involved. Programming environments consist largely of Python-based libraries (e.g., Scikit-learn and TensorFlow), indicating the dominance of Python as the standard language for ML research. Other approaches, such as using Rust in the interest of efficiency, were reported but were less common.

The choice of infrastructure can significantly impact the scalability, efficiency, and overall feasibility of experiments. HPC or cloud-based experiments benefit from reduced computation time and task parallelization. This is particularly evident in experiments based on deep learning frameworks or utilizing large datasets such as BoT-IoT and TON_IoT. In contrast, experiments run on personal computers or laptops tend to rely on straightforward models such as Decision Trees or Random Forests due to hardware limits.

7.4. Performance