Explainable AI Frameworks: Navigating the Present Challenges and Unveiling Innovative Applications

Abstract

1. Introduction

1.1. Problem Statement

- Explanation types (extrinsic or intrinsic);

- Model-dependence (model-agnostic or model-specific);

- Explanation scope (global or local);

- Output format (arguments, text, visualizations, etc.);

- Use cases;

- Programming language compatibility;

- Applicability to different ML and deep learning paradigms;

- Model complexity considerations;

- Open-source availability and license type;

- Associated challenges and limitations;

- Primary strengths and potential benefits.

1.2. Objectives

- Categorize XAI frameworks: Develop a methodical framework for classifying XAI solutions based on their core attributes and mechanisms.

- Comparative Analysis: Perform a detailed comparison of the major XAI frameworks, evaluating their strengths, weaknesses, and suitability for various use cases and ML model types.

- Technical Considerations: Examine the technical implications of different XAI frameworks, including programming language dependencies, compatibility with ML and deep learning models, and performance considerations.

- XAI Selection Guidance: Create guidelines and recommendations for practitioners to aid in selecting the most appropriate XAI framework for their specific application scenarios.

- Identify Challenges and Opportunities: Highlight the existing limitations and challenges associated with the current XAI frameworks and pinpoint areas for future research and development.

1.3. Methodology

- Literature review: An extensive review of the existing research on XAI frameworks and the established classification schemes.

- Framework selection: Identifying and selecting a representative set of prominent XAI frameworks based on their popularity, application domains, and technical characteristics.

- Attribute analysis: Performing a comprehensive analysis of each selected framework based on predefined attributes, such as explanation type, model dependence, output format, and use cases. This may involve a documentation review, code analysis (if open-source), and potentially direct interaction with the frameworks through application programming interfaces (APIs) or simple test cases.

- Comparative analysis: Evaluating and comparing the capabilities and limitations of different XAI frameworks across various attributes, highlighting their strengths and weaknesses and suitability for different use cases.

1.4. Related Works

1.5. Our Contribution

1.6. Paper Structure

2. Research Background

2.1. What Is Explainable AI?

2.2. Traditional AI vs. Explainable AI

2.3. Frameworks in XAI

2.4. Categories in XAI

3. Research Methodology

3.1. Literature Review

3.1.1. Constructing the Search Terms

3.1.2. Inclusion Criteria

3.1.3. Exclusion Criteria

- Studies that proved misleading or were not relevant to our research objectives.

- Papers that focused on a single application concept and said little about the XAI framework itself.

- Papers that did not mention any of the key attributes of the XAI framework.

3.2. Selecting Primary Sources

3.3. Framework Selection

3.4. Attribute Analysis

- Year Introduced: This indicates the framework’s maturity and potential level of development.

- Reference Paper: The core research paper describing the framework’s theoretical foundation and methodology.

- XAI Category: This classifies the framework based on its explanation approach (e.g., extrinsic, intrinsic, model-agnostic, model-specific, global, or local).

- Output Formats: The types of explanations the framework generates (e.g., arguments, textual explanations, or visualizations).

- Current Use Cases: Examples of real-world applications where the framework has been employed.

- Programming Language: The primary programming language used to develop the framework.

- Machine- and Deep Learning Scope: Whether the framework is designed for traditional ML models or specifically caters to deep learning models.

- Understanding Model Complexity: The framework’s ability to explain complex models effectively.

- Development Model: Categorization as a commercial or open-source framework.

- License Type: Applicable only to open-source frameworks, specifying the license terms under which they are distributed.
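As an illustration of how the attributes listed above could be recorded uniformly for each framework, the following minimal sketch defines one record per framework. The class name `FrameworkProfile`, its field names, and the example values are assumptions introduced here for illustration only; they are not taken from any surveyed framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FrameworkProfile:
    """One record of the attribute analysis (all names are illustrative)."""

    name: str
    year_introduced: int
    reference_paper: str
    xai_categories: List[str]      # e.g., ["model-agnostic", "local"]
    output_formats: List[str]      # e.g., ["visualizations"]
    programming_language: str
    ml_scope: str                  # e.g., "ML", "deep learning", or "both"
    handles_complex_models: bool
    development_model: str         # "open-source" or "commercial"
    use_cases: List[str] = field(default_factory=list)
    license_type: Optional[str] = None

    def __post_init__(self) -> None:
        if self.development_model not in ("open-source", "commercial"):
            raise ValueError("development_model must be 'open-source' or 'commercial'")
        # License Type is recorded only for open-source frameworks.
        if self.development_model == "commercial" and self.license_type is not None:
            raise ValueError("license_type applies only to open-source frameworks")


# Hypothetical entry; the values are placeholders, not survey results.
example = FrameworkProfile(
    name="ExampleExplainer",
    year_introduced=2020,
    reference_paper="(placeholder citation)",
    xai_categories=["model-agnostic", "local"],
    output_formats=["visualizations"],
    programming_language="Python",
    ml_scope="both",
    handles_complex_models=True,
    development_model="open-source",
    license_type="MIT",
)
```

Enforcing the license rule in `__post_init__` keeps the "applicable only to open-source frameworks" constraint next to the data, so an inconsistent row fails when it is created rather than during the later comparison.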

3.5. Comparative Analysis

4. Discussion

- Explanation Focus: This distinction clarifies whether the framework provides explanations for the overall model’s behavior (global explanations) or focuses on explaining individual predictions (local explanations).

- Model Integration: This categorization highlights whether the framework operates post hoc, analyzing a model after training, or is intrinsically integrated during training.

- Model Compatibility: This attribute specifies whether the framework is model-agnostic, applicable to various AI models, or model-specific, designed for a particular model type (potentially offering deeper insights but limited applicability).
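The three dimensions above can be treated as independent axes along which a practitioner narrows down candidates. The sketch below is one possible encoding, assuming hypothetical names (`Scope`, `Integration`, `Compatibility`, `Classification`, `shortlist`) and placeholder frameworks `framework_a` and `framework_b`; it does not reproduce the classification of any specific framework discussed in this paper.

```python
from enum import Enum
from typing import FrozenSet, List, NamedTuple, Optional


class Scope(Enum):
    GLOBAL = "global"
    LOCAL = "local"


class Integration(Enum):
    POST_HOC = "post hoc"
    INTRINSIC = "intrinsic"


class Compatibility(Enum):
    MODEL_AGNOSTIC = "model-agnostic"
    MODEL_SPECIFIC = "model-specific"


class Classification(NamedTuple):
    name: str
    scopes: FrozenSet[Scope]       # a framework may offer both scopes
    integration: Integration
    compatibility: Compatibility


def shortlist(
    candidates: List[Classification],
    scope: Optional[Scope] = None,
    integration: Optional[Integration] = None,
    compatibility: Optional[Compatibility] = None,
) -> List[Classification]:
    """Keep the candidates that match every dimension that was specified."""
    return [
        c for c in candidates
        if (scope is None or scope in c.scopes)
        and (integration is None or c.integration is integration)
        and (compatibility is None or c.compatibility is compatibility)
    ]


# Placeholder entries, not real frameworks.
candidates = [
    Classification("framework_a", frozenset({Scope.LOCAL}),
                   Integration.POST_HOC, Compatibility.MODEL_AGNOSTIC),
    Classification("framework_b", frozenset({Scope.GLOBAL, Scope.LOCAL}),
                   Integration.INTRINSIC, Compatibility.MODEL_SPECIFIC),
]
local_agnostic = shortlist(
    candidates, scope=Scope.LOCAL, compatibility=Compatibility.MODEL_AGNOSTIC
)
```

Explanation focus is stored as a set because a single framework may provide both global and local explanations, whereas model integration and model compatibility are treated here as mutually exclusive choices.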

4.1. XAI Frameworks

4.1.1. LIMEs

4.1.2. GraphLIMEs

4.1.3. SHAPs

4.1.4. Anchors

4.1.5. LOREs

4.1.6. Grad-CAM

4.1.7. CEM

4.1.8. LRP

4.1.9. ELI5

4.1.10. What-If Tool

4.1.11. AIX360

4.1.12. Skater

4.1.13. Captum

4.1.14. DLIME

4.1.15. EBM

4.1.16. RISE

4.1.17. Kernel SHAPs

4.1.18. Integrated Gradients

4.1.19. TNTRules

4.1.20. ClAMPs

4.1.21. OAK4XAI

4.1.22. TreeSHAP

4.1.23. CausalSHAPs

4.1.24. iNNvestigate

4.1.25. Explain-IT

4.1.26. DeepLIFT

4.1.27. ATTN

4.1.28. Alteryx Explainable AI

4.1.29. InterpretML

4.1.30. DALEX

4.1.31. TCAV

4.2. Comparative Analysis

4.2.1. Finance

4.2.2. Healthcare

4.2.3. Cybersecurity

4.2.4. Legal

4.2.5. Marketing

4.2.6. Aviation

5. Challenges

| XAI Framework | Key Limitations/Challenges | Primary Strengths |

|---|---|---|

| LIMEs [56] | May not fully reflect complex model behavior; computationally expensive for large datasets | Simple, intuitive explanations; works with various models |

| SHAPs [48] | Computationally expensive, explanations can become complex for large feature sets | Grounded in game theory, offers global and local insights |

| Anchors [42] | Reliance on rule generation may not cover all decision boundaries | Provides high-precision, human-understandable rules |

| LOREs [31] | Reliance on an external rule generator; may not scale well with large datasets | Can explain decisions using existing rules, offering fast results |

| Grad-CAM [49] | Primarily for convolutional neural networks (CNNs); may not work well with other architectures | Efficiently visualizes heatmaps for image classification |

| Integrated Gradients [50] | Relies on choosing a baseline input, can be computationally expensive | Offers smooth visualizations of feature attributions |

| CEM [51] | Relies on choosing an appropriate reference class, which may not be intuitive for complex models | Offers class-specific explanations, interpretable through saliency maps |

| LRP [57] | Computational costs can be high for complex models, explanations can be complex to interpret | Provides comprehensive attribution scores for each input feature |

| What-If Tool [58] | Requires expert knowledge to design effective explanations, limited to specific model types | Allows interactive exploration of model behavior through hypothetical scenarios |

| AIX360 [59] | Primarily focused on fairness analysis; may not provide detailed explanations for individual predictions | Offers various tools for detecting and mitigating bias in models |

| Skater [1] | Limited model support, requires knowledge of counterfactual reasoning | Enables counterfactual explanations, providing insights into “what-if” scenarios |

| Captum [43] | Requires familiarity with the library’s API, not as beginner-friendly as other options | Offers a variety of explainability techniques in a unified Python library |

| Explain-IT [41] | The supervised learning step applied to the clustering results may introduce bias because it depends on a specific model | Provides improved insights into unsupervised data analysis results, enhancing the interpretability of clustering outcomes |

| TCAV [55] | Primarily for image classification; may not be applicable to other domains | Generates interpretable visualizations through concept activation vectors |

| EBMs [60] | Limited to decision tree-based models, can be computationally expensive for large datasets | Offers inherent interpretability through decision trees, facilitates feature importance analysis |

| RISE [44] | Primarily for image classification tasks; may not generalize well to other domains | Generates interpretable heatmaps by identifying relevant regions of an image |

| Kernel SHAPs [34,48] | Relies on kernel functions, which can be computationally expensive and require careful selection | Offers flexibility in handling complex relationships between features through kernels |

| ClAMPs [30] | Limited publicly available information about specific limitations and challenges | Aims to explain model predictions using Bayesian hierarchical clustering |

| OAK4XAI [33] | Relies on finding optimal anchors, which can be computationally expensive | Aims to identify concise and interpretable anchors using game theory concepts |

| TreeSHAPs [34] | Primarily focused on tree-based models, limiting its usage with other model architectures. Explanations for very complex decision trees can become intricate and challenging to interpret. | Offers greater clarity in understanding how tree-based models arrive at predictions. |

| CausalSHAPs [40] | Requires access to additional data and assumptions about causal relationships, which might not always be readily available or reliable. Can be computationally expensive, especially for large datasets. | Provides potential insights into which features drive outcomes, going beyond mere correlation. |

| iNNvestigate [45,46] | While aiming for interpretability, the internal workings of the framework itself might not be readily understandable for non-experts. Explanations for intricate deep learning models might still require some expertise to grasp fully. | Makes the decision-making process of “black-box” deep learning models more transparent. |

| DeepLIFT [67,68] | Hyperparameters can significantly impact the explanation results, requiring careful tuning. May not be suitable for all types of deep learning models or tasks. | Pinpoints the importance of individual neurons within a neural network, offering insights into its internal workings. |

| ATTN [54] | Primarily focused on explaining attention mechanisms, limiting its use for broader model interpretability. Understanding attention weights and their impact on the model’s output can be challenging for non-experts. | Helps decipher how attention mechanisms are used in deep learning models, especially for NLP and computer vision tasks. |

| DALEX [36] | Compared to some more established frameworks, DALEX might have less readily available documentation and resources. DALEX might still be under development, and its capabilities and limitations might evolve. | Facilitates “what-if” scenarios for deep learning models, enhancing understanding of how input changes might impact predictions. |

| ELI5 [71] | Limited to scikit-learn and XGBoost models; might not generalize to all ML models | Provides clear, human-readable explanations for decision trees, linear models, and gradient-boosting models |

| GraphLIMEs [39] | Computationally intensive; not ideal for real-time applications | Explains tabular data predictions by modeling feature relationships as graphs; especially useful when feature interactions are important |

| DLIME [32] | Relatively new framework with less mature documentation and community support | Aims to combine global and local explanations using game theory and feature attributions for diverse model types |

| InterpretML [47,102] | Requires additional installation and integration to work. Might not be as user-friendly for beginners | Offers a diverse set of interpretability techniques as part of a larger ML toolkit |

| Alteryx Explainable AI [38] | Commercial offering with potential cost implications | Provides various visual and interactive tools for model interpretability within the Alteryx platform |

| TNTRules [29] | The black-box nature may limit the collaborative tuning process, potentially introducing biases in decision-making | Aims to generate high-quality explanations through multiobjective optimization |

5.1. Applicable Recommendations

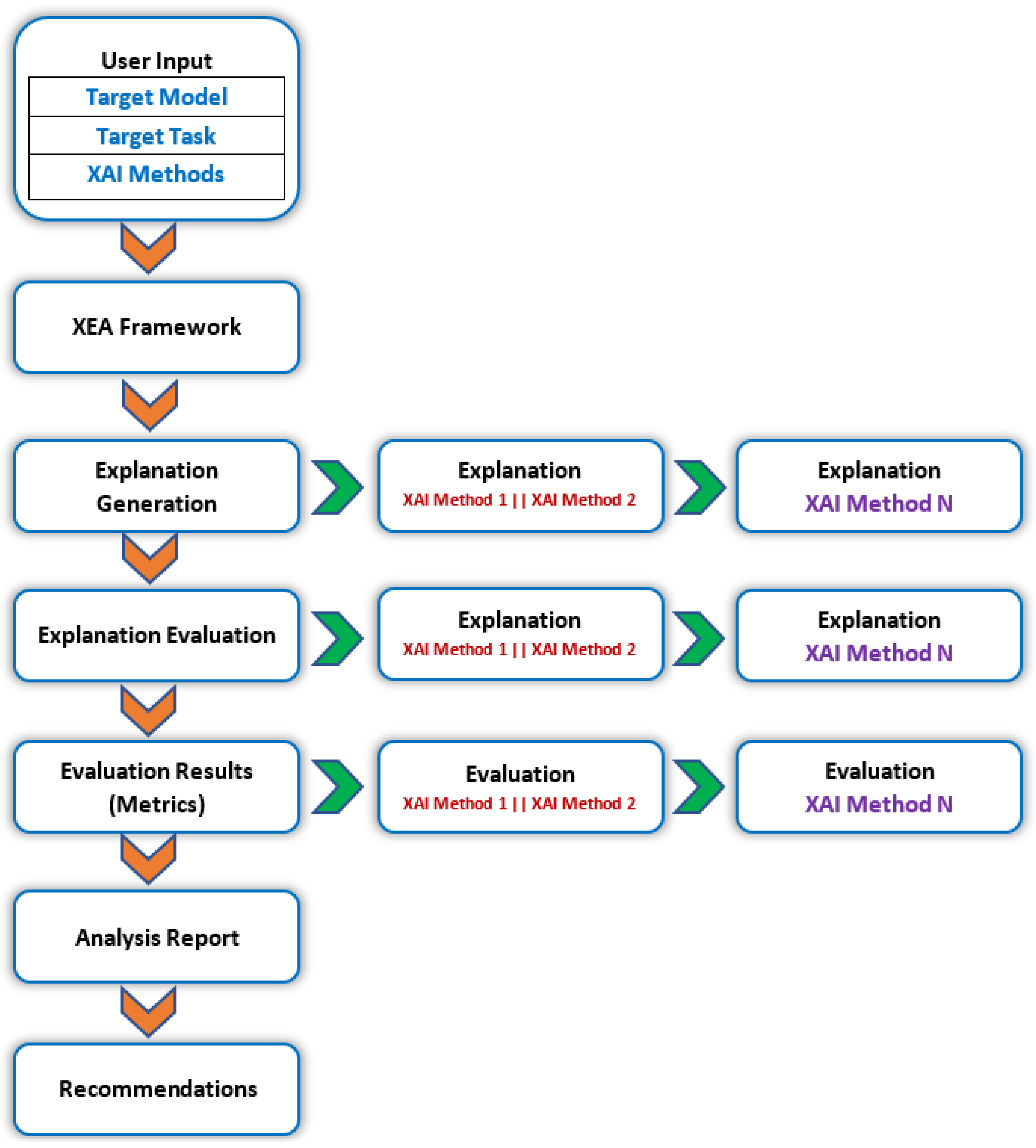

5.2. New Proposed XAI Framework

- Fidelity: Measures how accurately the explanation reflects the true decision-making process of the underlying AI model.

- Fairness: Evaluates whether the explanation highlights or perpetuates any biases present within the model.

- Efficiency: Analyzes the computational cost associated with generating explanations using the XAI method.

- User-Centricity: Assesses the understandability and interpretability of the explanations for users with varying levels of technical expertise.

- Users will specify the XAI methods they wish to compare.

- Details regarding the target AI model and its designated task (e.g., image classification or loan approval prediction) will also be provided by the user.

- The XAIE framework will execute each selected XAI method on the target model, prompting the generation of explanations.

- The generated explanations will then be evaluated against the chosen benchmarks from the comprehensive suite.

- Upon completion, the framework will deliver a comprehensive report comparing the XAI methods based on the evaluation results. This report will include the following:

- A detailed breakdown of the strengths and weaknesses of each XAI method for the specific model and task.

- Visual representations of explanation fidelity and user-friendliness.

- Recommendations for the most suitable XAI method based on user priorities (e.g., prioritizing explainability over efficiency).
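The workflow described above can be sketched in a few lines. The code below is a minimal illustration under stated assumptions, not the implementation of the proposed XAIE framework: the function names (`deletion_fidelity`, `evaluate`), the single-feature deletion proxy used for fidelity, the wall-clock measure used for efficiency, the weighted score, and the toy model and explainers are all hypothetical. Fairness and user-centricity are omitted because they require bias annotations and user studies that cannot be simulated here.

```python
import time
from typing import Callable, Dict, List, Sequence

Model = Callable[[Sequence[float]], float]
Explainer = Callable[[Model, Sequence[float]], List[float]]


def deletion_fidelity(model: Model, x: Sequence[float],
                      attributions: Sequence[float], baseline: float = 0.0) -> float:
    """Proxy fidelity: output change caused by removing the top-attributed
    feature, relative to the largest change any single removal causes."""
    original = model(x)
    changes = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline
        changes.append(abs(original - model(perturbed)))
    best = max(changes)
    if best == 0.0:
        return 1.0  # no single feature matters, so any ranking is equally faithful
    top = max(range(len(x)), key=lambda i: abs(attributions[i]))
    return changes[top] / best


def evaluate(methods: Dict[str, Explainer], model: Model,
             samples: List[Sequence[float]],
             weights: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """Run every method on the model and score it by user priorities."""
    report: Dict[str, Dict[str, float]] = {}
    for name, explain in methods.items():
        start = time.perf_counter()
        explanations = [explain(model, x) for x in samples]
        seconds = time.perf_counter() - start
        fidelity = sum(deletion_fidelity(model, x, a)
                       for x, a in zip(samples, explanations)) / len(samples)
        report[name] = {"fidelity": fidelity, "seconds": seconds}
    slowest = max(r["seconds"] for r in report.values()) or 1.0
    for r in report.values():
        efficiency = 1.0 - r["seconds"] / slowest  # 0 for the slowest method
        r["score"] = (weights["fidelity"] * r["fidelity"]
                      + weights["efficiency"] * efficiency)
    return report


# Toy target model and two toy explainers (all hypothetical).
def linear_model(x: Sequence[float]) -> float:
    return 3.0 * x[0] + 0.5 * x[1]


def occlusion(model: Model, x: Sequence[float]) -> List[float]:
    # Attribution of feature i = output drop when feature i is set to zero.
    return [model(x) - model([0.0 if j == i else v for j, v in enumerate(x)])
            for i in range(len(x))]


def always_last(model: Model, x: Sequence[float]) -> List[float]:
    # Deliberately poor explainer: always blames the last feature.
    return [0.0] * (len(x) - 1) + [1.0]


report = evaluate({"occlusion": occlusion, "always_last": always_last},
                  linear_model, [[1.0, 1.0], [2.0, -1.0]],
                  weights={"fidelity": 0.8, "efficiency": 0.2})
recommended = max(report, key=lambda name: report[name]["score"])
```

With fidelity weighted at 0.8, the occlusion explainer is recommended for this toy model because it always ranks the dominant feature first. A user who prioritizes efficiency could shift the weights, which mirrors the priority-based recommendation step in the report.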

6. Future Works

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core Ideas, Techniques, and Solutions. ACM Comput. Surv. 2023, 55, 194:1–194:33.

- Palacio, S.; Lucieri, A.; Munir, M.; Ahmed, S.; Hees, J.; Dengel, A. XAI handbook: Towards a unified framework for explainable AI. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3766–3775.

- Holzinger, A.; Saranti, A.; Molnar, C.; Biecek, P.; Samek, W. Explainable AI Methods—A Brief Overview. In xxAI—Beyond Explainable AI: International Workshop, Held in Conjunction with ICML 2020, July 18, 2020, Vienna, Austria, Revised and Extended Papers; Holzinger, A., Goebel, R., Fong, R., Moon, T., Müller, K.R., Samek, W., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 13–38.

- Le, P.Q.; Nauta, M.; Nguyen, V.B.; Pathak, S.; Schlötterer, J.; Seifert, C. Benchmarking eXplainable AI—A Survey on Available Toolkits and Open Challenges. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, Macau, China, 19–25 August 2023; pp. 6665–6673.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Langer, M.; Oster, D.; Speith, T.; Hermanns, H.; Kästner, L.; Schmidt, E.; Sesing, A.; Baum, K. What do we want from Explainable Artificial Intelligence (XAI)?—A stakeholder perspective on XAI and a conceptual model guiding interdisciplinary XAI research. Artif. Intell. 2021, 296, 103473.

- Liao, V.; Varshney, K. Human-Centered Explainable AI (XAI): From Algorithms to User Experiences. arXiv 2021, arXiv:2110.10790.

- Mohseni, S.; Zarei, N.; Ragan, E.D. A Multidisciplinary Survey and Framework for Design and Evaluation of Explainable AI Systems. arXiv 2020, arXiv:1811.11839.

- Hu, Z.F.; Kuflik, T.; Mocanu, I.G.; Najafian, S.; Shulner Tal, A. Recent Studies of XAI-Review. In Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, New York, NY, USA, 21–25 June 2021; UMAP ’21. pp. 421–431.

- Das, A.; Rad, P. Opportunities and Challenges in Explainable Artificial Intelligence (XAI): A Survey. arXiv 2020, arXiv:2006.11371.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Ser, J.D.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI. Inf. Fusion 2020, 58, 82–115.

- Hanif, A.; Beheshti, A.; Benatallah, B.; Zhang, X.; Habiba; Foo, E.; Shabani, N.; Shahabikargar, M. A Comprehensive Survey of Explainable Artificial Intelligence (XAI) Methods: Exploring Transparency and Interpretability. In Web Information Systems Engineering—WISE 2023; Zhang, F., Wang, H., Barhamgi, M., Chen, L., Zhou, R., Eds.; Springer Nature: Berlin/Heidelberg, Germany, 2023; pp. 915–925.

- Hanif, A.; Zhang, X.; Wood, S. A Survey on Explainable Artificial Intelligence Techniques and Challenges. In Proceedings of the 2021 IEEE 25th International Enterprise Distributed Object Computing Workshop (EDOCW), Gold Coast, Australia, 25–29 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 81–89.

- Salimzadeh, S.; He, G.; Gadiraju, U. A Missing Piece in the Puzzle: Considering the Role of Task Complexity in Human-AI Decision Making. In Proceedings of the 31st ACM Conference on User Modeling, Adaptation and Personalization, New York, NY, USA, 26–29 June 2023; UMAP ’23. pp. 215–227.

- Grosan, C.; Abraham, A. Intelligent Systems; Springer: Berlin/Heidelberg, Germany, 2011; Volume 17.

- Rong, Y.; Leemann, T.; Nguyen, T.T.; Fiedler, L.; Qian, P.; Unhelkar, V.; Seidel, T.; Kasneci, G.; Kasneci, E. Towards Human-Centered Explainable AI: A Survey of User Studies for Model Explanations. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2104–2122.

- Došilović, F.K.; Brčić, M.; Hlupić, N. Explainable artificial intelligence: A survey. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 0210–0215.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2021, 23, 18.

- Duval, A. Explainable Artificial Intelligence (XAI); MA4K9 Scholarly Report; Mathematics Institute, The University of Warwick: Coventry, UK, 2019; Volume 4.

- Kim, M.Y.; Atakishiyev, S.; Babiker, H.K.B.; Farruque, N.; Goebel, R.; Zaïane, O.R.; Motallebi, M.H.; Rabelo, J.; Syed, T.; Yao, H.; et al. A Multi-Component Framework for the Analysis and Design of Explainable Artificial Intelligence. Mach. Learn. Knowl. Extr. 2021, 3, 900–921.

- John-Mathews, J.M. Some critical and ethical perspectives on the empirical turn of AI interpretability. Technol. Forecast. Soc. Chang. 2022, 174, 121209.

- Dwivedi, Y.K.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Duan, Y.; Dwivedi, R.; Edwards, J.; Eirug, A.; et al. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2021, 57, 101994.

- Ribes, D.; Henchoz, N.; Portier, H.; Defayes, L.; Phan, T.T.; Gatica-Perez, D.; Sonderegger, A. Trust Indicators and Explainable AI: A Study on User Perceptions. In Human-Computer Interaction—INTERACT 2021; Ardito, C., Lanzilotti, R., Malizia, A., Petrie, H., Piccinno, A., Desolda, G., Inkpen, K., Eds.; Springer: Cham, Switzerland, 2021; pp. 662–671.

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current Challenges and Future Opportunities for XAI in Machine Learning-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2021, 11, 5088.

- Ehsan, U.; Saha, K.; Choudhury, M.; Riedl, M. Charting the Sociotechnical Gap in Explainable AI: A Framework to Address the Gap in XAI. Proc. ACM Hum.-Comput. Interact. 2023.

- Lopes, P.; Silva, E.; Braga, C.; Oliveira, T.; Rosado, L. XAI Systems Evaluation: A Review of Human and Computer-Centred Methods. Appl. Sci. 2022, 12, 9423.

- Naiseh, M.; Simkute, A.; Zieni, B.; Jiang, N.; Ali, R. C-XAI: A conceptual framework for designing XAI tools that support trust calibration. J. Responsible Technol. 2024, 17, 100076.

- Capuano, N.; Fenza, G.; Loia, V.; Stanzione, C. Explainable Artificial Intelligence in CyberSecurity: A Survey. IEEE Access 2022, 10, 93575–93600.

- Chakraborty, T.; Seifert, C.; Wirth, C. Explainable Bayesian Optimization. arXiv 2024, arXiv:2401.13334.

- Bobek, S.; Kuk, M.; Szelążek, M.; Nalepa, G.J. Enhancing Cluster Analysis with Explainable AI and Multidimensional Cluster Prototypes. IEEE Access 2022, 10, 101556–101574.

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Pedreschi, D.; Turini, F.; Giannotti, F. Local Rule-Based Explanations of Black Box Decision Systems. arXiv 2018, arXiv:1805.10820.

- Zafar, M.R.; Khan, N. Deterministic Local Interpretable Model-Agnostic Explanations for Stable Explainability. Mach. Learn. Knowl. Extr. 2021, 3, 525–541.

- Ngo, Q.H.; Kechadi, T.; Le-Khac, N.A. OAK4XAI: Model Towards Out-of-Box eXplainable Artificial Intelligence for Digital Agriculture. In Artificial Intelligence XXXIX; Bramer, M., Stahl, F., Eds.; Springer: Cham, Switzerland, 2022; pp. 238–251.

- Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020, 2, 56–67.

- Radhakrishnan, A.; Beaglehole, D.; Pandit, P.; Belkin, M. Mechanism of feature learning in deep fully connected networks and kernel machines that recursively learn features. arXiv 2023, arXiv:2212.13881.

- Biecek, P. DALEX: Explainers for Complex Predictive Models in R. J. Mach. Learn. Res. 2018, 19, 1–5.

- Dhurandhar, A.; Chen, P.Y.; Luss, R.; Tu, C.C.; Ting, P.; Shanmugam, K.; Das, P. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 2–8 December 2018; NIPS’18. pp. 590–601.

- Alteryx. The Essential Guide to Explainable AI (XAI). Available online: https://www.alteryx.com/resources/whitepaper/essential-guide-to-explainable-ai (accessed on 1 April 2024).

- Huang, Q.; Yamada, M.; Tian, Y.; Singh, D.; Yin, D.; Chang, Y. GraphLIME: Local Interpretable Model Explanations for Graph Neural Networks. arXiv 2020, arXiv:2001.06216.

- Heskes, T.; Bucur, I.G.; Sijben, E.; Claassen, T. Causal shapley values: Exploiting causal knowledge to explain individual predictions of complex models. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 6–12 December 2020; NIPS ’20. pp. 4778–4789.

- Morichetta, A.; Casas, P.; Mellia, M. EXPLAIN-IT: Towards Explainable AI for Unsupervised Network Traffic Analysis. In Proceedings of the 3rd ACM CoNEXT Workshop on Big DAta, Machine Learning and Artificial Intelligence for Data Communication Networks, New York, NY, USA, 9 December 2019; Big-DAMA ’19. pp. 22–28.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-Precision Model-Agnostic Explanations. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Kokhlikyan, N.; Miglani, V.; Martin, M.; Wang, E.; Alsallakh, B.; Reynolds, J.; Melnikov, A.; Kliushkina, N.; Araya, C.; Yan, S.; et al. Captum: A unified and generic model interpretability library for PyTorch. arXiv 2020, arXiv:2009.07896.

- Petsiuk, V.; Das, A.; Saenko, K. RISE: Randomized Input Sampling for Explanation of Black-box Models. arXiv 2018, arXiv:1806.07421.

- Alber, M.; Lapuschkin, S.; Seegerer, P.; Hägele, M.; Schütt, K.T.; Montavon, G.; Samek, W.; Müller, K.R.; Dähne, S.; Kindermans, P.J. iNNvestigate Neural Networks! J. Mach. Learn. Res. 2019, 20, 1–8.

- Garcea, F.; Famouri, S.; Valentino, D.; Morra, L.; Lamberti, F. iNNvestigate-GUI - Explaining Neural Networks Through an Interactive Visualization Tool. In Artificial Neural Networks in Pattern Recognition; Schilling, F.P., Stadelmann, T., Eds.; Springer: Cham, Switzerland, 2020; pp. 291–303.

- Nori, H.; Jenkins, S.; Koch, P.; Caruana, R. InterpretML: A Unified Framework for Machine Learning Interpretability. arXiv 2019, arXiv:1909.09223.

- Lundberg, S.M.; Allen, P.G.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2017, 128, 336–359.

- Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic Attribution for Deep Networks. arXiv 2017, arXiv:1703.01365.

- Smilkov, D.; Thorat, N.; Kim, B.; Viégas, F.; Wattenberg, M. SmoothGrad: Removing noise by adding noise. arXiv 2017, arXiv:1706.03825.

- Zhang, Z.; Cui, P.; Zhu, W. Deep Learning on Graphs: A Survey. IEEE Trans. Knowl. Data Eng. 2022, 34, 249–270.

- Miković, R.; Arsić, B.; Gligorijević, Đ. Importance of social capital for knowledge acquisition—DeepLIFT learning from international development projects. Inf. Process. Manag. 2024, 61, 103694.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Kim, B.; Wattenberg, M.; Gilmer, J.; Cai, C.; Wexler, J.; Viegas, F.; Sayres, R. Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV). In Proceedings of the International Conference on Machine Learning. PMLR, Stockholm, Sweden, 10–15 July 2018; Volume 6, pp. 2668–2677, ISSN 2640-3498.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; KDD ’16; pp. 1135–1144.

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation. PLoS ONE 2015, 10, e0130140.

- Wexler, J.; Pushkarna, M.; Bolukbasi, T.; Wattenberg, M.; Viegas, F.; Wilson, J. The what-if tool: Interactive probing of machine learning models. IEEE Trans. Vis. Comput. Graph. 2020, 26, 56–65.

- Arya, V.; Bellamy, R.K.E.; Chen, P.Y.; Dhurandhar, A.; Hind, M.; Hoffman, S.C.; Houde, S.; Liao, Q.V.; Luss, R.; Mojsilović, A.; et al. AI Explainability 360: An Extensible Toolkit for Understanding Data and Machine Learning Models. J. Mach. Learn. Res. 2020, 21, 1–6.

- Liu, G.; Sun, B. Concrete compressive strength prediction using an explainable boosting machine model. Case Stud. Constr. Mater. 2023, 18, e01845.

- Kawakura, S.; Hirafuji, M.; Ninomiya, S.; Shibasaki, R. Adaptations of Explainable Artificial Intelligence (XAI) to Agricultural Data Models with ELI5, PDPbox, and Skater using Diverse Agricultural Worker Data. Eur. J. Artif. Intell. Mach. Learn. 2022, 1, 27–34.

- Asif, N.A.; Sarker, Y.; Chakrabortty, R.K.; Ryan, M.J.; Ahamed, M.H.; Saha, D.K.; Badal, F.R.; Das, S.K.; Ali, M.F.; Moyeen, S.I.; et al. Graph Neural Network: A Comprehensive Review on Non-Euclidean Space. IEEE Access 2021, 9, 60588–60606.

- Binder, A.; Montavon, G.; Bach, S.; Müller, K.R.; Samek, W. Layer-wise Relevance Propagation for Neural Networks with Local Renormalization Layers. arXiv 2016, arXiv:1604.00825.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016.

- Arya, V.; Bellamy, R.K.E.; Chen, P.Y.; Dhurandhar, A.; Hind, M.; Hoffman, S.C.; Houde, S.; Liao, Q.V.; Luss, R.; Mojsilović, A.; et al. One Explanation Does Not Fit All: A Toolkit and Taxonomy of AI Explainability Techniques. arXiv 2019, arXiv:1909.03012.

- Klaise, J.; Looveren, A.V.; Vacanti, G.; Coca, A. Alibi Explain: Algorithms for Explaining Machine Learning Models. J. Mach. Learn. Res. 2021, 22, 1–7.

- Shrikumar, A.; Greenside, P.; Shcherbina, A.; Kundaje, A. Not just a black box: Learning important features through propagating activation differences. arXiv 2016, arXiv:1605.01713.

- Shrikumar, A.; Greenside, P.; Kundaje, A. Learning important features through propagating activation differences. In Proceedings of the International Conference on Machine Learning. PMLR, Sydney, NSW, Australia, 6–11 August 2017; pp. 3145–3153.

- Li, J.; Zhang, C.; Zhou, J.T.; Fu, H.; Xia, S.; Hu, Q. Deep-LIFT: Deep Label-Specific Feature Learning for Image Annotation. IEEE Trans. Cybern. 2022, 52, 7732–7741.

- Soydaner, D. Attention mechanism in neural networks: Where it comes and where it goes. Neural Comput. Appl. 2022, 34, 13371–13385.

- Fan, A.; Jernite, Y.; Perez, E.; Grangier, D.; Weston, J.; Auli, M. ELI5: Long Form Question Answering. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D., Màrquez, L., Eds.; pp. 3558–3567. [Google Scholar] [CrossRef]

- Cao, L. AI in Finance: A Review. 2020. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3647625 (accessed on 1 April 2024). [CrossRef]

- Goodell, J.W.; Kumar, S.; Lim, W.M.; Pattnaik, D. Artificial intelligence and machine learning in finance: Identifying foundations, themes, and research clusters from bibliometric analysis. J. Behav. Exp. Financ. 2021, 32, 100577. [Google Scholar] [CrossRef]

- Zheng, X.L.; Zhu, M.Y.; Li, Q.B.; Chen, C.C.; Tan, Y.C. FinBrain: When finance meets AI 2.0. Front. Inf. Technol. Electron. Eng. 2019, 20, 914–924. [Google Scholar] [CrossRef]

- Cao, N. Explainable Artificial Intelligence for Customer Churning Prediction in Banking. In Proceedings of the 2nd International Conference on Human-Centered Artificial Intelligence (Computing4Human 2021), Danang, Vietnam, 28–29 October 2021; pp. 159–167. [Google Scholar]

- Weber, P.; Carl, K.V.; Hinz, O. Applications of Explainable Artificial Intelligence in Finance—A systematic review of Finance, Information Systems, and Computer Science literature. Manag. Rev. Q. 2023, 74, 867–907. [Google Scholar] [CrossRef]

- van der Velden, B.H.M.; Kuijf, H.J.; Gilhuijs, K.G.A.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470. [Google Scholar] [CrossRef]

- Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. In Proceedings of the Workshop at International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014. [Google Scholar]

- Joyce, D.W.; Kormilitzin, A.; Smith, K.A.; Cipriani, A. Explainable artificial intelligence for mental health through transparency and interpretability for understandability. NPJ Digit. Med. 2023, 6, 6. [Google Scholar] [CrossRef] [PubMed]

- Zebin, T.; Rezvy, S.; Luo, Y. An Explainable AI-Based Intrusion Detection System for DNS Over HTTPS (DoH) Attacks. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2339–2349. [Google Scholar] [CrossRef]

- Andresini, G.; Appice, A.; Caforio, F.P.; Malerba, D.; Vessio, G. ROULETTE: A neural attention multi-output model for explainable Network Intrusion Detection. Expert Syst. Appl. 2022, 201, 117144. [Google Scholar] [CrossRef]

- Reyes, A.A.; Vaca, F.D.; Castro Aguayo, G.A.; Niyaz, Q.; Devabhaktuni, V. A Machine Learning Based Two-Stage Wi-Fi Network Intrusion Detection System. Electronics 2020, 9, 1689. [Google Scholar] [CrossRef]

- Górski, Ł.; Ramakrishna, S. Explainable artificial intelligence, lawyer’s perspective. In Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law, New York, NY, USA, 21–25 June 2021; ICAIL ’21. pp. 60–68. [Google Scholar] [CrossRef]

- Bringas Colmenarejo, A.; Beretta, A.; Ruggieri, S.; Turini, F.; Law, S. The Explanation Dialogues: Understanding How Legal Experts Reason About XAI Methods. In Proceedings of the 2nd European Workshop on Algorithmic Fairness, Winterthur, Switzerland, 7–9 June 2023. [Google Scholar]

- Ramon, Y.; Vermeire, T.; Toubia, O.; Martens, D.; Evgeniou, T. Understanding Consumer Preferences for Explanations Generated by XAI Algorithms. arXiv 2021, arXiv:2107.02624. [Google Scholar] [CrossRef]

- Feng, X.F.; Zhang, S.; Srinivasan, K. Marketing Through the Machine’s Eyes: Image Analytics and Interpretability; Emerald Publishing Limited: England, UK, 2022. [Google Scholar]

- Sutthithatip, S.; Perinpanayagam, S.; Aslam, S.; Wileman, A. Explainable AI in Aerospace for Enhanced System Performance. In Proceedings of the 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 October 2021; pp. 1–7, ISSN 2155-7209. [Google Scholar] [CrossRef]

- Hernandez, C.S.; Ayo, S.; Panagiotakopoulos, D. An Explainable Artificial Intelligence (xAI) Framework for Improving Trust in Automated ATM Tools. In Proceedings of the 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 October 2021; pp. 1–10, ISSN 2155-7209. [Google Scholar] [CrossRef]

- Vowels, M.J. Trying to Outrun Causality with Machine Learning: Limitations of Model Explainability Techniques for Identifying Predictive Variables. arXiv 2022, arXiv:2202.09875. [Google Scholar] [CrossRef]

- Samek, W.; Wiegand, T.; Müller, K.R. Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models. arXiv 2017, arXiv:1708.08296. [Google Scholar]

- Letzgus, S.; Wagner, P.; Lederer, J.; Samek, W.; Muller, K.R.; Montavon, G. Toward Explainable Artificial Intelligence for Regression Models: A methodological perspective. IEEE Signal Process. Mag. 2022, 39, 40–58. [Google Scholar] [CrossRef]

- Ribeiro, J.; Silva, R.; Cardoso, L.; Alves, R. Does Dataset Complexity Matters for Model Explainers? In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; pp. 5257–5265. [Google Scholar] [CrossRef]

- Helgstrand, C.J.; Hultin, N. Comparing Human Reasoning and Explainable AI. 2022. Available online: https://www.diva-portal.org/smash/record.jsf?pid=diva2%3A1667042&dswid=-8097 (accessed on 1 April 2024).

- Dieber, J.; Kirrane, S. Why model why? Assessing the strengths and limitations of LIME. arXiv 2020, arXiv:2012.00093. [Google Scholar] [CrossRef]

- El-Mihoub, T.A.; Nolle, L.; Stahl, F. Explainable Boosting Machines for Network Intrusion Detection with Features Reduction. In Proceedings of the Artificial Intelligence XXXIX, Cambridge, UK, 13–15 December 2021; Bramer, M., Stahl, F., Eds.; Springer: Cham, Switzerland, 2022; pp. 280–294. [Google Scholar] [CrossRef]

- Jayasundara, S.; Indika, A.; Herath, D. Interpretable Student Performance Prediction Using Explainable Boosting Machine for Multi-Class Classification. In Proceedings of the 2022 2nd International Conference on Advanced Research in Computing (ICARC), Belihuloya, Sri Lanka, 23–24 February 2022; pp. 391–396. [Google Scholar] [CrossRef]

- Roshan, K.; Zafar, A. Utilizing XAI Technique to Improve Autoencoder based Model for Computer Network Anomaly Detection with Shapley Additive Explanation(SHAP). Int. J. Comput. Netw. Commun. 2021, 13, 109–128. [Google Scholar] [CrossRef]

- Roshan, K.; Zafar, A. Using Kernel SHAP XAI Method to Optimize the Network Anomaly Detection Model. In Proceedings of the 2022 9th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 23–25 March 2022; pp. 74–80. [Google Scholar] [CrossRef]

- Sahakyan, M.; Aung, Z.; Rahwan, T. Explainable Artificial Intelligence for Tabular Data: A Survey. IEEE Access 2021, 9, 135392–135422. [Google Scholar] [CrossRef]

- Hickling, T.; Zenati, A.; Aouf, N.; Spencer, P. Explainability in Deep Reinforcement Learning: A Review into Current Methods and Applications. ACM Comput. Surv. 2023, 56, 125:1–125:35. [Google Scholar] [CrossRef]

- Šimić, I.; Sabol, V.; Veas, E. XAI Methods for Neural Time Series Classification: A Brief Review. arXiv 2021, arXiv:2108.08009. [Google Scholar] [CrossRef]

- Agarwal, N.; Das, S. Interpretable Machine Learning Tools: A Survey. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, ACT, Australia, 1–4 December 2020; pp. 1528–1534. [Google Scholar] [CrossRef]

- Beheshti, A.; Yang, J.; Sheng, Q.Z.; Benatallah, B.; Casati, F.; Dustdar, S.; Nezhad, H.R.M.; Zhang, X.; Xue, S. ProcessGPT: Transforming Business Process Management with Generative Artificial Intelligence. In Proceedings of the 2023 IEEE International Conference on Web Services (ICWS), Chicago, IL, USA, 2–8 July 2023; pp. 731–739. [Google Scholar] [CrossRef]

| Paper | Year | Contribution | Limitation |

|---|---|---|---|

| [4] | 2023 | The research highlights that current toolkits only cover a limited range of explanation quality aspects. This work guides researchers in selecting evaluation methods that offer a broader assessment of explanation quality. The research suggests cross-evaluation between toolkits/frameworks and original metric implementations to identify and address inconsistencies. | This research focuses on the limitations of existing toolkits and does not propose a solution (e.g., a new evaluation framework). The specific details of identified evaluation gaps (modalities, explanation types, and missing metrics) are not explicitly mentioned in the paper. |

| [1] | 2023 | The research provides a broad overview of various XAI techniques, encompassing white-box models (interpretable by design) and black-box models paired with techniques such as LIME and SHAP to enhance interpretability. The research highlights the need for a deeper understanding of XAI limitations and explores the importance of considering data explainability, model explainability, fairness, and accountability in tandem. It emphasizes the need for a holistic approach to XAI implementation and deployment. | While the research is comprehensive, it might not delve deep into the specifics of each XAI technique or provide detailed comparisons. The research focuses on identifying limitations and considerations, but it might not offer specific solutions or explain how to address the challenges raised. |

| [7] | 2022 | The chapter overviews available resources and identifies open problems in utilizing XAI techniques for user experience design. The chapter highlights the emergence of a research community dedicated to human-centered XAI, providing references for further exploration. | The chapter focuses on the need for bridging design and XAI techniques but does not provide specific details on how to achieve this in practice. The chapter also focuses on the high-level importance of human-centered design but does not delve into specific design techniques for XAI user experiences. |

| [8] | 2021 | The authors reviewed existing research and categorized various design goals and evaluation methods for XAI systems. To address the need for a comprehensive approach, the authors propose a new framework that connects design goals with evaluation methods. | The framework provides a high-level overview of a multidisciplinary approach but does not delve into the specific details of interface design, interaction design, or the development of interpretable ML techniques. |

| [2] | 2021 | The research demonstrates how the framework can be used to compare various XAI methods and understand the aspects of explainability addressed by each. The framework situates explanations and interpretations within the bigger picture of an XAI pipeline, considering input/output domains and the gap between mathematical models and human understanding. | The review of current XAI practices reveals a focus on developing explanation methods, often neglecting the role of interpretation. It acknowledges the current limitations of XAI practices and highlights areas for future research, such as explicitly defining non-functional requirements and ensuring clear interpretations accompany explanations. |

| [6] | 2021 | The research acknowledges the growing emphasis on human stakeholders in XAI research and the need for evaluating explainability approaches based on their needs. The research emphasizes the importance of empirically evaluating explainability approaches to assess how well they meet stakeholder needs. | The research emphasizes the need for empirical evaluation but does not discuss specific methods for conducting such evaluations. While advocating for a broader view, the research does not delve into specific considerations for different stakeholder groups. |

| [9] | 2021 | The research proposes a classification framework for explanations considering six aspects: purpose, interpretation method, context, presentation format, stakeholder type, and domain. The research acknowledges the need to expand the framework by including more explanation styles and potentially sub-aspects like user models. | The research focuses on a review of existing explanations, and the provided classification might not encompass all possible XAI explanation styles. While acknowledging the need for personalization, the research does not delve deep into how the framework can be used to personalize explanations based on user needs. |

| [10] | 2020 | The research emphasizes the importance of XAI in today’s ML landscape, considering factors like data bias, trustability, and adversarial examples. The research provides a survey of XAI frameworks, with a focus on model-agnostic post hoc methods due to their wider applicability. | The research specifically focuses on XAI for deep learning models, potentially limiting its generalizability to other ML algorithms. While providing a survey of frameworks, the research does not delve into the specifics of individual XAI methods or their functionalities. |

| [11] | 2019 | The research clarifies key concepts related to model explainability and the various motivations for seeking interpretable ML methods. The study proposes a global taxonomy for XAI approaches, offering a unified classification of different techniques based on common criteria. | Although it provides a taxonomy, the research offers limited detail on frameworks and might not delve into the specifics of individual XAI techniques or their functionalities. The research focuses on identifying limitations and future considerations, but it does not offer specific solutions or explain how to address the challenges raised. |

| [5] | 2018 | The research provides a comprehensive background on XAI, leveraging the “Five Ws and How” framework (What, Who, When, Why, Where, and How) to cover all key aspects of the field. The research emphasizes the impact of XAI beyond academic research, highlighting its applications across different domains. | The research identifies challenges and open issues in XAI but does not offer specific solutions or explain how to address them. Although it provides a broad overview of XAI approaches, it offers limited technical detail and does not delve into the technical specifics of each method. |

| Stage | Description | Details |
|---|---|---|
| Search Terms | Keywords used for searching | XAI Explainable AI, XAI frameworks, XAI techniques, XAI challenges, specific framework names (e.g., XAI LIME and XAI SHAP) |
| Databases | Platforms used for searching | IEEE Xplore, Science Direct, MDPI, Google Scholar, ACM Digital Library, Scopus, and arXiv. |
| Inclusion Criteria | Criteria for selecting relevant papers | • Papers matching key terms |
| | | • High publication quality |
| | | • Strong experimental design with relevant results |
| | | • High citation count (in some cases) |
| | | • Extensive focus on XAI frameworks |
| Exclusion Criteria | Reasons for excluding papers | • Misleading content irrelevant to research objectives |
| | | • Focus on single application concept with limited XAI framework discussion |
| | | • Lack of mention of key XAI framework attributes |
| | | • Duplicate ideas or methods mentioned in the paper |
| Primary Source Selection | Process for selecting key papers | Phase 1: |
| | | • Review the title, keywords, and abstract to assess relevance. |
| | | • Select papers that align with research needs. |
| | | • Reject irrelevant papers. |
| | | Phase 2: |
| | | • Conduct an in-depth review of selected papers. |
| | | • Analyze objectives, generate ideas, understand the experimental setup, and identify gaps and limitations. |

| | | XAI Categories | | |
|---|---|---|---|---|
| Year | XAI Frameworks | Extrinsic (Post Hoc)/Intrinsic | Model-Agnostic/Model-Specific | Global/Local |
| 2024 | TNTRules [29] | Extrinsic | Agnostic | Global |
| 2022 | ClAMPs [30] | Extrinsic | Agnostic | Both |
| 2021 | LOREs [31] | Extrinsic | Agnostic | Local |
| 2021 | DLIME [32] | Extrinsic | Specific | Global |
| 2021 | OAK4XAI [33] | Extrinsic | Specific | Local |
| 2021 | TreeSHAPs [34,35] | Extrinsic | Agnostic | Global |
| 2021 | DALEX [36] | Extrinsic | Agnostic | Local |
| 2020 | CEM [37] | Extrinsic | Agnostic | Local |
| 2020 | Alteryx Explainable AI [38] | Extrinsic | Agnostic | Global |
| 2019 | GraphLIMEs [39] | Extrinsic | Agnostic | Local |
| 2019 | Skater [1] | Extrinsic | Agnostic | Global |
| 2019 | CausalSHAPs [40] | Extrinsic | Agnostic | Global |
| 2019 | Explain-IT [41] | Extrinsic | Agnostic | Local |
| 2018 | Anchors [42] | Extrinsic | Agnostic | Local |
| 2018 | Captum [43] | Intrinsic | Agnostic | Global |
| 2018 | RISE [44] | Extrinsic | Agnostic | Local |
| 2018 | INNvestigate [45,46] | Extrinsic | Specific | Global |
| 2018 | InterpretML [47] | Extrinsic | Agnostic | Global |
| 2017 | SHAPs [48] | Extrinsic | Agnostic | Both |
| 2017 | GRAD-CAM [49] | Extrinsic | Specific | Local |
| 2017 | Kernel SHAPs [48] | Extrinsic | Agnostic | Global |
| 2017 | Integrated Gradients [50,51] | Extrinsic | Agnostic | Global |
| 2017 | DeepLIFT [52,53] | Extrinsic | Agnostic | Global |
| 2017 | ATTN [54] | Extrinsic | Specific | Local |
| 2017 | TCAV [55] | Extrinsic | Agnostic | Local |
| 2016 | LIMEs [56] | Extrinsic | Agnostic | Local |
| 2016 | LRP [57] | Extrinsic | Agnostic | Local |
| 2016 | What-IF Tool [58] | Extrinsic | Agnostic | Local |
| 2016 | AIX360 [59] | Intrinsic | Agnostic | Global |
| 2016 | EBM [60] | Extrinsic | Specific | Both |
| 2015 | Eli5 [59,61] | Extrinsic | Agnostic | Local |
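
The categorization above can serve as a simple lookup when shortlisting frameworks. The following is a minimal sketch rather than part of the original study: it transcribes a handful of rows from the table into Python records and filters them by category. The `Framework` record and the `shortlist` helper are hypothetical names introduced purely for illustration, and treating a scope of "Both" as matching either "Global" or "Local" is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Framework:
    """One row of the categorization table (hypothetical record type)."""
    year: int
    name: str
    explanation_type: str  # "Extrinsic" or "Intrinsic"
    dependence: str        # "Agnostic" or "Specific"
    scope: str             # "Global", "Local", or "Both"


# A few rows transcribed from the table above; this is not the full list.
FRAMEWORKS = [
    Framework(2021, "LOREs", "Extrinsic", "Agnostic", "Local"),
    Framework(2021, "DLIME", "Extrinsic", "Specific", "Global"),
    Framework(2018, "Anchors", "Extrinsic", "Agnostic", "Local"),
    Framework(2018, "Captum", "Intrinsic", "Agnostic", "Global"),
    Framework(2017, "SHAPs", "Extrinsic", "Agnostic", "Both"),
    Framework(2017, "GRAD-CAM", "Extrinsic", "Specific", "Local"),
    Framework(2016, "LIMEs", "Extrinsic", "Agnostic", "Local"),
    Framework(2016, "EBM", "Extrinsic", "Specific", "Both"),
]


def shortlist(frameworks, dependence=None, scope=None):
    """Return the names of frameworks matching the requested categories.

    Assumption of this sketch: a framework whose scope is "Both"
    satisfies a request for either "Global" or "Local".
    """
    result = []
    for fw in frameworks:
        if dependence is not None and fw.dependence != dependence:
            continue
        if scope is not None and fw.scope not in (scope, "Both"):
            continue
        result.append(fw.name)
    return result


local_agnostic = shortlist(FRAMEWORKS, dependence="Agnostic", scope="Local")
print(local_agnostic)
```

Keeping the records immutable and the filter criteria optional means the same helper can answer narrower or broader questions, for example listing every model-specific framework regardless of scope.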

| Year | XAI Framework | Output Format | Use Cases | Programming Language | ML/DL | Model Complexity | Open Source/Commercial | License Type |

|---|---|---|---|---|---|---|---|---|

| 2024 | TNTRules [29] | Textual Rules, Visualizations | Optimization tasks for parameter tuning of cyber–physical systems | Python | Unsupervised ML | Flexible | Open Source | Apache 2.0 |

| 2022 | ClAMPs [30] | Human-readable Rules, Visualization | Translating unsupervised problems into human-readable rules | Python | Unsupervised ML, scikit-learn, TensorFlow, and PyTorch | Flexible | Open Source | Apache 2.0 |

| 2021 | LOREs [31] | Decision and Counterfactual Rules | Understanding Individual Predictions | Python | Various | Simple to Complex | Open Source | MIT |

| 2021 | DLIME [32] | Text, Visualization | Understanding model behavior, identifying bias, debugging models | Python | Primarily TensorFlow models | Flexible | Open Source | Apache 2.0 |

| 2021 | OAK4XAI [33] | Text, factual grounding highlights | Understanding the reason behind generated outputs, identifying influential features, analyzing model behavior | Python | Various | Simple to Complex | Open Source | N/A |

| 2021 | TreeSHAPs [34,35] | Text, Visualizations (force Plots, dependence Plots) | Understanding feature importance, identifying influential features, analyzing model behavior | Python | Decision trees and Random Forests | Flexible | Open Source | MIT |

| 2021 | DALEX [36] | Visual Explanations including interactive what-if plots and feature attribution heatmaps | Understanding the reasoning behind specific model predictions, identifying influential factors in individual cases. | Python | TensorFlow, PyTorch and scikit-learn | Simple to Complex | Open Source | Apache 2.0 |

| 2020 | CEM [37] | Counterfactual Examples | Understanding individual Predictions, identifying Biases | Python | Various | Simple to Complex | Open Source | Apache 2.0 |

| 2020 | Alteryx Explainable AI [38] | Primarily visual explanation through interactive dashboards within the Alteryx platform | Understanding model behavior, identifying influential features, debugging models, and improving model performance | Python or R | Agnostic or Specific to Model | Varying Complexity from simple linear model to complex deep learning models | Commercial | Proprietary |

| 2019 | Explain-IT [41] | Visualization, feature contributions | Understanding individual predictions, identifying feature contribution | Python | Unsupervised ML | Simple to complex | N/A | N/A |

| 2019 | GraphLIMEs [39] | Feature contributions, Textual explanations, Visualizations | Understanding individual predictions in graph data | Python | Graph ML | Simple to complex | Open Source | Apache 2.0 |

| 2019 | Skater [1] | Text, Visualizations | Understanding Model behavior, identifying bias, debugging models | Python | Framework Agnostic | Flexible | Open Source | Apache 2.0 |

| 2019 | CausalSHAPs [40] | Feature Importance with Visualizations | Understanding causal relationships between features and predictions, identifying features with the strongest causal effect | Python | Primarily Focused on tree-based models | Flexible | Open Source | Apache 2.0 |

| 2018 | Anchors [42] | Feature Importance, Anchor set | Understanding individual predictions, debugging models | Python | ML | Simple to Complex | Open Source | Apache 2.0 |

| 2018 | Captum [43] | Text, Visualizations (integrated with various libraries) | Understanding Model behavior, identifying bias, debugging models, counterfactual explanations | Python | Framework Agnostic, various PyTorch Models | Flexible | Open Source | Apache 2.0 |

| 2018 | RISE [44] | Visualizations (Saliency maps) | Understanding local feature importance in image classification models | Python | Primarily focused on Image classification models | Flexible | Open Source | MIT |

| 2018 | INNvestigate [45,46] | Text, Visualizations (integrated with Matplotlib) | Understanding model behavior, identifying important features, debugging models | Python | Keras models | Flexible | Open Source | Apache 2.0 |

| 2018 | InterpretML [47] | Textual explanations, and visualizations (including feature importance plots, partial dependence plots, and SHAP values) | Understanding model behavior, identifying influential features, debugging models, and improving model performance | Python | Agnostic to specific frameworks | Can handle models of varying complexity | Open Source | Apache 2.0 |

| 2017 | SHAP [48] | Feature importance, Shapley values | Understanding individual predictions, Debugging models | Python | Various | Simple to complex | Open Source | MIT |

| 2017 | Grad-CAM [49] | Heatmaps highlighting important regions | Understanding individual predictions in CNNs | Python | CNNs | Simple to complex | Open Source | Varies |

| 2017 | Kernel SHAP [48] | Text, Visualizations (force plots, dependence plots) | Understanding feature importance, identifying bias, debugging models | Python | Framework agnostic | Flexible | Open Source | Apache 2.0 |

| 2017 | Integrated Gradients [50] | Saliency maps or attribution scores | Understanding feature importance, identifying influential factors in model predictions, debugging models. | Varies | Varies | Varies | Open Source | Varies |

| 2017 | DeepLIFT [67,68] | Textual explanations, visualizations (heatmaps for saliency scores) | Understanding feature importance, identifying influential regions in input data, debugging models | Python | TensorFlow, PyTorch, and Keras | Designed for complex deep learning models | Open Source | Apache 2.0 |

| 2017 | ATTN [54] | Visualizations (heatmaps highlighting attention weights) | Understanding how transformers attend to different parts of the input sequence, identifying which parts are most influential for specific predictions, debugging NLP models | Python | TensorFlow and PyTorch | Primarily designed for complex deep learning models like transformers | Open Source | Varies |

| 2017 | TCAV [55] | Visualizations (heatmaps highlighting concept activation regions) | Understanding which image regions contribute to specific model predictions, identifying biases in image classification models | Python | TensorFlow, PyTorch, and scikit-learn | Designed for complex deep learning models for image classification | Open Source | Apache 2.0 |

| 2016 | LIMEs [56] | Arguments, Text, Visualizations | Understanding individual predictions, debugging models, Identifying biases, Image Classification, Text Classification, Tabular Data Analysis | Python | TensorFlow, PyTorch, and Keras | Simple to complex | Open Source | BSD 3-Clause |

| 2016 | LRP [57] | Relevance scores | Understanding individual predictions in deep neural networks | Python | Deep neural networks | Simple to complex | Open Source | Varies |

| 2016 | What-IF Tool [58] | Feature contributions, Visualizations | Exploring hypothetical scenarios, Debugging models | Python/TensorFlow/JavaScript | Various | Simple to complex | Discontinued | N/A |

| 2016 | AIX360 [65] | Text, Visualizations | Understanding model behavior, identifying bias, debugging models | Python | Framework agnostic | Flexible | Open Source | Apache 2.0 |

| 2016 | EBMs [60] | Text, Visualizations (partial dependence plots, feature importance) | Understanding model behavior, identifying feature interactions, debugging models | Python | Gradient boosting models | Flexible | Open Source | Varies |

| 2015 | ELI5 [71] | Textual explanations, Arguments, Visualizations | Understanding individual predictions, Image Classification, Text Classification, Tabular Data Analysis | Python | Various | Simple to complex | Open Source | Varies |

| XAI Framework | Text | Visualization | Feature Importance | Arguments |

|---|---|---|---|---|

| TNTRules | ✓ | ✓ | ||

| ClAMPs | ✓ | ✓ | ||

| LOREs | ✓ | ✓ | ||

| DLIME | ✓ | ✓ | ||

| OAK4XAI | ✓ | ✓ | ||

| TreeSHAPs | ✓ | ✓ | ||

| DALEX | ✓ | ✓ | ||

| CEM | ✓ | |||

| Alteryx | ✓ | |||

| GraphLIMEs | ✓ | ✓ | ✓ | |

| Skater | ✓ | ✓ | ||

| CausalSHAPs | ✓ | ✓ | ||

| Explain-IT | ✓ | ✓ | ||

| Anchors | ✓ | ✓ | ||

| Captum | ✓ | ✓ | ||

| RISE | ✓ | |||

| INNvestigate | ✓ | |||

| InterpretML | ✓ | ✓ | ✓ | |

| SHAPs | ✓ | ✓ | ||

| GRAD-CAM | ✓ | |||

| Kernel SHAPs | ✓ | ✓ | ||

| Integrated Gradients | ✓ | |||

| DeepLIFT | ✓ | ✓ | ||

| ATTN | ✓ | |||

| TCAV | ✓ | |||

| LIMEs | ✓ | ✓ | ||

| LRP | ✓ | |||

| What-IF Tool | ✓ | ✓ | ||

| AIX360 | ✓ | ✓ | ||

| EBM | ✓ | ✓ | ✓ | |

| Eli5 | ✓ | ✓ | ✓ |

| | Flexible | Simple to Complex | Complex |
|---|---|---|---|
| XAI Framework | TNTRules | LOREs | OAK4XAI |
| | ClAMPs | DALEX | InterpretML |
| | DLIME | CEM | DeepLIFT |
| | TreeSHAPs | Alteryx | ATTN |
| | Skater | GraphLIMEs | TCAV |
| | CausalSHAPs | Explain-IT | |
| | Captum | Anchors | |
| | RISE | SHAPs | |
| | INNvestigate | GRAD-CAM | |
| | Kernel SHAPs | Integrated Gradients | |
| | AIX360 | LIMEs | |
| | EBM | LRP | |
| | | What-IF Tool | |
| | | Eli5 | |
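
The grouping above amounts to pivoting the Model Complexity column of the attribute table into one list per complexity level. As a rough sketch under stated assumptions (the `group_by_complexity` helper is a hypothetical name, and only a subset of frameworks is included), the pivot could be expressed as follows:

```python
from collections import defaultdict

# (framework, model complexity) pairs copied from a subset of the
# attribute table; the selection of rows is illustrative only.
ROWS = [
    ("TNTRules", "Flexible"),
    ("LOREs", "Simple to Complex"),
    ("DLIME", "Flexible"),
    ("DALEX", "Simple to Complex"),
    ("CEM", "Simple to Complex"),
    ("Skater", "Flexible"),
    ("Captum", "Flexible"),
]


def group_by_complexity(rows):
    """Group framework names by complexity label, preserving row order."""
    groups = defaultdict(list)
    for name, complexity in rows:
        groups[complexity].append(name)
    return dict(groups)


groups = group_by_complexity(ROWS)
for label, names in groups.items():
    print(f"{label}: {', '.join(names)}")
```

Deriving the grouping from the per-framework rows, rather than maintaining it by hand, keeps the two views consistent when a framework's complexity label changes.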

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sharma, N.A.; Chand, R.R.; Buksh, Z.; Ali, A.B.M.S.; Hanif, A.; Beheshti, A. Explainable AI Frameworks: Navigating the Present Challenges and Unveiling Innovative Applications. Algorithms 2024, 17, 227. https://doi.org/10.3390/a17060227