Abstract

The Transportation Problem (TP) is a special type of linear programming problem, where the objective is to minimize the cost of distributing a product from a number of sources to a number of destinations. Many methods for solving the TP have been studied over time. However, exact methods do not always succeed in finding the optimal solution or a solution that effectively approximates the optimal one. This paper introduces two new variations of the well-established Particle Swarm Optimization (PSO) algorithm, named the Trigonometric Acceleration Coefficients-PSO (TrigAC-PSO) and the Four Sectors Varying Acceleration Coefficients PSO (FSVAC-PSO), and applies them to solve the TP. The performance of the proposed variations is examined and validated through extensive experimental tests on thirty-two problems of different sizes. Moreover, the proposed PSO variations were compared with exact methods such as Vogel’s Approximation Method (VAM), the Total Differences Method 1 (TDM1), the Total Opportunity Cost Matrix-Minimal Total (TOCM-MT), the Juman and Hoque Method (JHM) and the Bilqis Chastine Erma method (BCE). Finally, the proposed variations were also compared with other PSO variations that are well known for their completeness and efficiency, such as Decreasing Weight Particle Swarm Optimization (DWPSO) and Time Varying Acceleration Coefficients (TVAC). Experimental results show that the proposed variations achieve very satisfactory results in terms of efficiency and effectiveness compared to existing exact and heuristic methods.

1. Introduction

The Transportation Problem (TP) is one of the most significant types of linear programming problems. The aim of the TP is to minimize the cost of transporting a given commodity from a number of sources or origins (e.g., factory, manufacturing facility) to a number of destinations (e.g., warehouse, store) [1]. Over the years, many classical and stochastic search approaches have been applied to solve the TP.

The Northwest Corner method (NWC) is one of the methods that obtain a basic feasible solution to various transportation problems [2]. It allocates the amounts very easily when only a few supply and destination stations exist. However, the resulting solution is frequently far from the optimal one. The Minimum Cost Method (MCM) [3] is an alternative method which can yield an initial basic feasible solution. The MCM succeeds in lowering total costs by taking into consideration the lowest available cost values while finding the initial solution. An innovative approach comes from the Vogel Approximation Method (VAM); the VAM is an upgraded version of the MCM which results in a basic feasible solution close to the optimal solution [3]. Both the MCM and the VAM take the unit transportation costs into account and obtain satisfactory results; however, the VAM is rather slow and computationally intensive for a large range of values. Nevertheless, it has been proven that in problems with a small range of values and a relatively small number of variables, the above exact methods are quite efficient.

In some cases, the TP has a complex structure, multifaceted parameters and a huge amount of data to be studied. Therefore, exact methods do not succeed in finding a suitable solution in an acceptable time period; as a result, it is impractical to use them. Taking the above into consideration, apart from conventional solution techniques, various heuristic and metaheuristic methods have been designed to capitalize on their potential capabilities. Specifically, metaheuristic algorithms attempt to find the best feasible solution, outperforming the other techniques in terms of both solution quality and computational time [4]. Mitsuo Gen, Fulya Altiparmak and Lin Lin applied a Genetic Algorithm (GA) to a two-stage TP using priority-based encoding, showing that the GA has been receiving great attention and can be successfully applied to combinatorial optimization problems [5]. Ant Colony Optimization (ACO) algorithms have already proven their efficiency in many complex problems; they constitute a very useful optimization tool for many transportation problems in cases where no algorithm is known that finds the optimal solution or where the available time does not allow this solution to be obtained [6]. Hybrid methods that combine two or more heuristic, metaheuristic or even exact methods are also widespread. Interesting research was undertaken in 2019 by Mohammad Bagher Fakhrzad, Fariba Goodarzian and Golmohammadi [7]. In their study, four metaheuristic algorithms, namely the Red Deer Algorithm (RDA), Stochastic Fractal Search (SFS), the Genetic Algorithm (GA) and Simulated Annealing (SA), as well as two hybrid algorithms, the RDA and GA (HRDGA) algorithm and the Hybrid SFS and SA (HRDGA) algorithm, were utilized to solve the TP, demonstrating significant effectiveness [7].

Motivated by the above-mentioned applications of metaheuristic algorithms to the TP, this work applies Particle Swarm Optimization (PSO) to solve the TP effectively. The PSO algorithm was first introduced by Dr. Kennedy and Dr. Eberhart in 1995 as a novel population-based stochastic algorithm for complex non-linear optimization problems [8]. The basic idea was originally inspired by simulations of the social behavior of animals, such as bird flocking and fish schooling. Possessing their own intelligence, the birds of a group connect with each other, share their experiences, and follow and trust the flock in order to reach their food or migrate safely without knowing in advance the optimal way to achieve it. The proposed research aims to enhance both the social behavior and the personal behavior of the birds. It has been observed that the original PSO suffers from premature convergence, especially for problems with multiple local optima [9]. The swarm’s ability to function with social experience as well as personal experience is determined in the algorithm through two stochastic acceleration components, known as the cognitive and social components [10]. These components guide the particles in the original PSO method toward the optimum point, and the correct selection of their values is the key influence on the success and efficiency of the algorithm. Much research has focused on finding the best combination of these components [10].

First, this paper examines approaches that have already been applied with great success to solve the TP. In addition, two new PSO variations are presented and applied to solve the TP by suitably transforming the main PSO parameters. Experimental results show that these new PSO variations perform very well and efficiently in solving the TP compared to the former methods.

In order to confirm the technical merit and the applied value of our study, 32 instances of the TP with different sizes have been solved to evaluate and demonstrate the performance of the proposed PSO variations. Their experimental results are compared with those of well-known exact methods, showing their superiority over them. One major innovation of the proposed variations is the appropriate combination of acceleration coefficients (parameters c1, c2) and inertia weight (parameter w) [11] (see Section 3) in order to obtain better computational results than existing approaches. Exhaustive experimental results demonstrate that the new PSO variations perform significantly better not only than the exact methods already applied to solve the TP but also than the other PSO variations already introduced in the respective literature. Furthermore, in order to check the stability of the proposed PSO variations, many different combinations of the main PSO parameters were tested and validated.

The contribution of the paper is as follows:

- To the best of our knowledge, PSO has already been applied to the fixed-charge TP, and a heuristic approach was used in order to find the shortest path in a network of routes with a standard number of points connected to each other. For the first time, PSO-based algorithms are applied to solve the basic TP effectively on a large number of test instances, not only finding the optimal distribution of items but also discovering the optimal value.

- Moreover, two new PSO variations are introduced, which sustain balance between exploration and exploitation of the search space. These variations proved to be very efficient in solving the TP, achieving better results compared not only to deterministic but also to other already-known PSO-based methods.

- A thorough experimental analysis has been performed on the PSO variations applied to solve the TP to prove their efficiency and stability.

The remainder of the paper is organized as follows: Section 2 presents the mathematical formulation of the TP. The PSO algorithm is briefly described in Section 3. Section 4 presents the initialization procedure of the basic feasible solutions and the steps of the PSO algorithm for the TP. Both the existing PSO variations as well as the new ones are presented in detail in Section 5. A well-documented case study is conducted in Section 6, in order to compare the performance of five exact methods with the classic PSO and its variations. Lastly, conclusive remarks and future recommendations are presented in Section 7.

2. Transportation Problem (TP)

Many researchers have developed various types of transportation models. The most prevalent was presented by Hitchcock in 1941 [12]. Similar studies were conducted later by Koopmans in 1949 [13] and in 1951 by Dantzig [14]. It is well known that the problem has become quite widespread, so several extensions of transportation model and methods have been subsequently developed. However, how is the Transportation Problem defined?

The TP can be described as a distribution problem with m suppliers (warehouses or factories) and n destinations (customers or demand points). Each of the m suppliers can ship to any of the n destinations at a unit shipping cost $c_{ij}$, which corresponds to the route from point i to point j. The available quantity of each supplier $S_i$, i = 1, 2, …, m, is denoted as $a_i$, and the quantity required by each destination $D_j$, j = 1, 2, …, n, is denoted as $b_j$. The objective is to determine how to allocate the available amounts from the supply stations to the destination stations while simultaneously achieving the minimum transport cost and satisfying the demand and supply constraints [12].

The mathematical model of the TP, where $x_{ij}$ denotes the amount transported from supplier i to destination j, can be formulated as follows:

$$\min Z = \sum_{i=1}^{m} \sum_{j=1}^{n} c_{ij} x_{ij} \tag{1}$$

$$\sum_{j=1}^{n} x_{ij} \le a_i, \quad i = 1, 2, \ldots, m \tag{2}$$

$$\sum_{i=1}^{m} x_{ij} \ge b_j, \quad j = 1, 2, \ldots, n \tag{3}$$

$$x_{ij} \ge 0 \text{ and integer}, \quad i = 1, 2, \ldots, m, \; j = 1, 2, \ldots, n \tag{4}$$

Equation (1) represents the objective function to be minimized. Equation (2) contains the supply constraints, according to which the total amount shipped from each source must be less than or equal to its available quantity. Correspondingly, Equation (3) contains the demand constraints, according to which the total amount delivered to each destination must be greater than or equal to the quantity it demands. Equation (4) states a necessary condition: the transported units must take non-negative integer values. Without loss of generality, we assume in this paper that total supply equals total demand, $\sum_{i=1}^{m} a_i = \sum_{j=1}^{n} b_j$ (the balanced model), in which case constraints (2) and (3) hold with equality.
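As a small illustration of the model, the following Python sketch evaluates the objective (1) and checks the balanced form of constraints (2) to (4) for a given shipment matrix. The function names and the 2 x 3 instance are hypothetical and were invented for this example; they do not come from the test set used in this paper.

```python
import numpy as np

def total_cost(cost, x):
    """Objective (1): unit cost times shipped amount, summed over all routes."""
    return int(np.sum(np.asarray(cost) * np.asarray(x)))

def is_feasible(x, supply, demand):
    """Balanced-TP check: row sums equal supply, column sums equal demand,
    and every shipment is a non-negative integer."""
    x = np.asarray(x)
    return bool(
        np.all(x >= 0)
        and np.all(x == np.round(x))
        and np.array_equal(x.sum(axis=1), supply)
        and np.array_equal(x.sum(axis=0), demand)
    )

# Hypothetical instance (values invented for illustration only).
cost = np.array([[4, 6, 8],
                 [5, 3, 7]])
supply = np.array([30, 40])
demand = np.array([20, 25, 25])
plan = np.array([[20, 0, 10],
                 [0, 25, 15]])

print(is_feasible(plan, supply, demand), total_cost(cost, plan))
```

The plan shown is merely feasible; nothing in the sketch claims that it is optimal.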

As already mentioned, there are several methods which can lead to a basic feasible solution. However, most of the currently used methods for solving transportation problems are considered complex and very expensive in terms of execution time. As a result, it is appealing to seek a metaheuristic approach based on the PSO algorithm to solve the TP efficiently and effectively.

3. Particle Swarm Optimization (PSO)

The Particle Swarm Optimization (PSO) algorithm is considered one of the modern innovative heuristic algorithms. Over the years it has become extremely prevalent because it is simple to implement and leads very easily to satisfactory solutions [15]. PSO builds on a detailed analysis of the collective behavior of animals, with a view to serving as a robust method for solving optimization problems in a wide variety of applications [16].

In PSO, each candidate solution can be defined as a particle and the whole swarm can be considered as the population of the algorithm. The particles improve themselves by cooperating and sharing information among the swarm, and they succeed in learning and improving to provide the highest possible efficiency. More precisely, each particle through the search space is intended to find the best value for its individual fitness and, simultaneously, to minimize the objective function by satisfying all the constraints of the problem. Each particle is studied from a perspective that contains three different parameters: position; velocity; and its previous best positions.

Consequently, in an n-dimensional search space, each particle i of the swarm is represented by its position vector $x_i = (x_{i1}, x_{i2}, \ldots, x_{in})$, and the equation of its position is as follows:

$$x_i(t+1) = x_i(t) + v_i(t+1) \tag{5}$$

where $x_i(t+1)$ is the current position, $x_i(t)$ is the previous position and $v_i(t+1)$ is the velocity which determines the movement of each particle in the current iteration ($t+1$).

Respectively, the velocity of the particle is denoted by $v_i = (v_{i1}, v_{i2}, \ldots, v_{in})$ and is given by the following equation:

$$v_i(t+1) = w\, v_i(t) + c_1 r_1 \left( pbest_i(t) - x_i(t) \right) + c_2 r_2 \left( gbest(t) - x_i(t) \right) \tag{6}$$

where

- $v_i(t+1)$ denotes the velocity in the current iteration and $v_i(t)$ is the velocity in the previous iteration.

- $w$ is the inertia weight, used to balance global exploration and local exploitation; it provides a memory of the particle’s previous direction, which prevents major changes in the suggested direction of the particles.

- $r_1$ and $r_2$ are two variables which are randomly drawn from the uniform distribution in the range [0, 1].

- $c_1$ and $c_2$ are defined as “acceleration coefficients”, which have a huge effect on the efficiency of the PSO method. The constant $c_1$ conveys how much confidence a particle has in itself, while $c_2$ expresses how much confidence a particle has in the swarm.

- The variable $pbest_i(t)$ is the best position of particle i until iteration $t$, whereas $gbest(t)$ is the best position of the whole swarm until the same iteration.

- The term $c_1 r_1 \left( pbest_i(t) - x_i(t) \right)$ is known as the cognitive component; it acts as a kind of memory that stores the best previous positions that the particle has achieved. The cognitive component reflects the tendency of the particles to return to their best positions.

- The term $c_2 r_2 \left( gbest(t) - x_i(t) \right)$ is called the social component. In this case, the particles behave according to the knowledge that they have obtained from the swarm, guided by the swarm’s best position.

The acceleration coefficients $c_1$ and $c_2$, together with the random variables $r_1$ and $r_2$, greatly affect the evolution of the cognitive and social components and hence the velocity value, which is mainly responsible for the ultimate direction of the particles.
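The velocity and position updates described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the MATLAB implementation used in this study: the function name `pso_step` is hypothetical, positions are NumPy arrays, and the two random numbers are drawn as scalars once per particle update.

```python
import numpy as np

def pso_step(x, v, pbest, gbest, w, c1, c2, rng):
    """One PSO update of a single particle.

    x, v, pbest and gbest are arrays of the same shape; w, c1 and c2 are
    scalars. The random factors are scalars here (an assumption).
    """
    r1 = rng.random()  # uniform in [0, 1)
    r2 = rng.random()
    cognitive = c1 * r1 * (pbest - x)  # pull toward the particle's own best
    social = c2 * r2 * (gbest - x)     # pull toward the swarm's best
    v_new = w * v + cognitive + social
    x_new = x + v_new
    return x_new, v_new

rng = np.random.default_rng(0)
x = np.array([[20.0, 10.0], [0.0, 30.0]])
pbest = np.array([[15.0, 15.0], [5.0, 25.0]])
gbest = np.array([[10.0, 20.0], [10.0, 20.0]])
x_new, v_new = pso_step(x, np.zeros_like(x), pbest, gbest, 0.7, 2.0, 2.0, rng)
```

With scalar random factors, the new position is a linear combination of matrices that share the same row and column sums, so those sums are preserved whenever the velocity itself has zero row and column sums. Individual entries may still become negative or fractional, however.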

4. The Basic PSO for Solving the TP

The proposed PSO algorithm used to solve the TP is presented in this section. The primary goal is the initialization of the particles according to the problem’s instances. This is achieved through a sub-algorithm (an initialization algorithm), as presented below. Initially, the amounts of the supply and demand were defined in tables. Subsequently, through control conditions, the amounts were randomly distributed, satisfying the constraints of the sums of supply and demand.

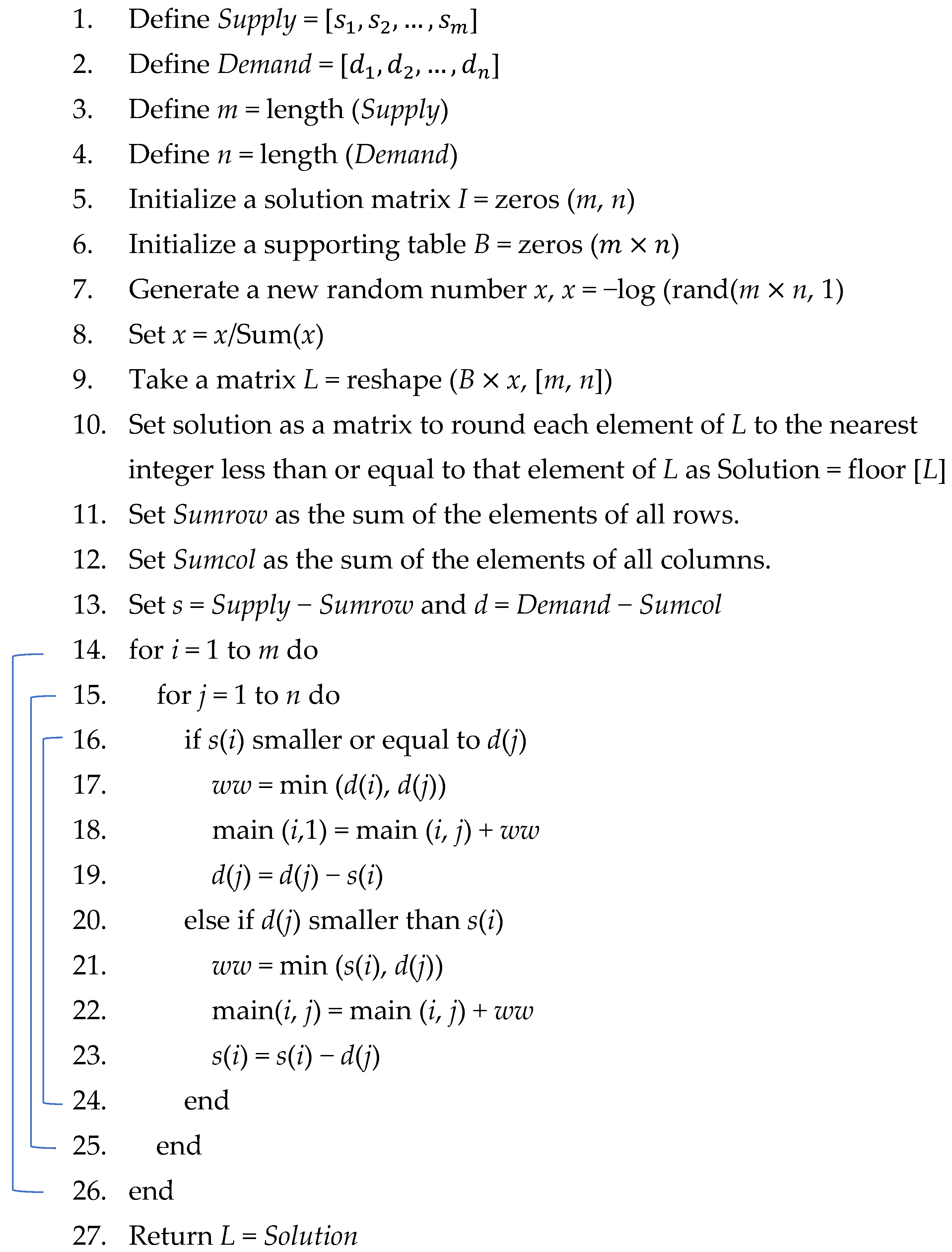

Algorithm 1 takes two vectors, Supply and Demand, as its input (lines 1 and 2). Next, variables m and n are computed; they are equal to the lengths of the vectors Supply and Demand, respectively. Then, a matrix L is created consisting of random real numbers (line 7). In line 10, the elements of the candidate solution matrix are rounded to the nearest integer, as the amounts of commodities should be non-negative integer values. In the following lines of the algorithm, a process of readjustment and redistribution of matrix L begins so that its values correspond to the given Supply and Demand amounts. In lines 11 and 12, the sum of all elements of each row of matrix L is stored in vector Sumrow, while the sum of all elements of each column of matrix L is stored in vector Sumcol. Then, two new vectors, s and d, are created by subtracting Sumrow from Supply and Sumcol from Demand, respectively. In the following lines, the shortfalls recorded in s and d are located and allocated to the appropriate cells of the matrix until both vectors are reduced to zero. The output of Algorithm 1 is a matrix consisting of the initial solutions (Initial Basic Feasible Solutions, IBFS), which serves as the input of Algorithm 2 (see below). All Initial Basic Feasible Solutions (IBFS) consist of non-negative integer values satisfying the supply and demand constraints.

Algorithm 1: Initialization algorithm
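The initialization idea can be sketched in Python as follows. This is a simplified sketch in the spirit of Algorithm 1 and not a transcription of it: the function name `random_feasible_plan` is hypothetical, the random start is scaled so that no row or column total is exceeded (an assumption made here to keep the shortfall vectors s and d non-negative), and the problem is assumed to be balanced.

```python
import numpy as np

def random_feasible_plan(supply, demand, rng):
    """Random non-negative integer matrix with the given row and column sums.

    Assumes integer data and a balanced problem (sum(supply) == sum(demand)).
    """
    supply = np.asarray(supply, dtype=int)
    demand = np.asarray(demand, dtype=int)
    m, n = len(supply), len(demand)
    # Random integer start, scaled so row sums stay <= supply and
    # column sums stay <= demand.
    cap = np.minimum.outer(supply, demand) / max(m, n)
    L = np.floor(rng.random((m, n)) * cap).astype(int)
    # Amounts still missing in each row (s) and each column (d).
    s = supply - L.sum(axis=1)
    d = demand - L.sum(axis=0)
    # Place the missing amounts cell by cell, visiting cells in random order.
    for u in rng.permutation(m):
        for v in rng.permutation(n):
            q = min(s[u], d[v])
            L[u, v] += q
            s[u] -= q
            d[v] -= q
    return L

rng = np.random.default_rng(42)
plan = random_feasible_plan([20, 30, 50], [10, 40, 50], rng)
```

Calling the function repeatedly with the same generator yields different feasible matrices, which is what a swarm of distinct initial particles requires.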

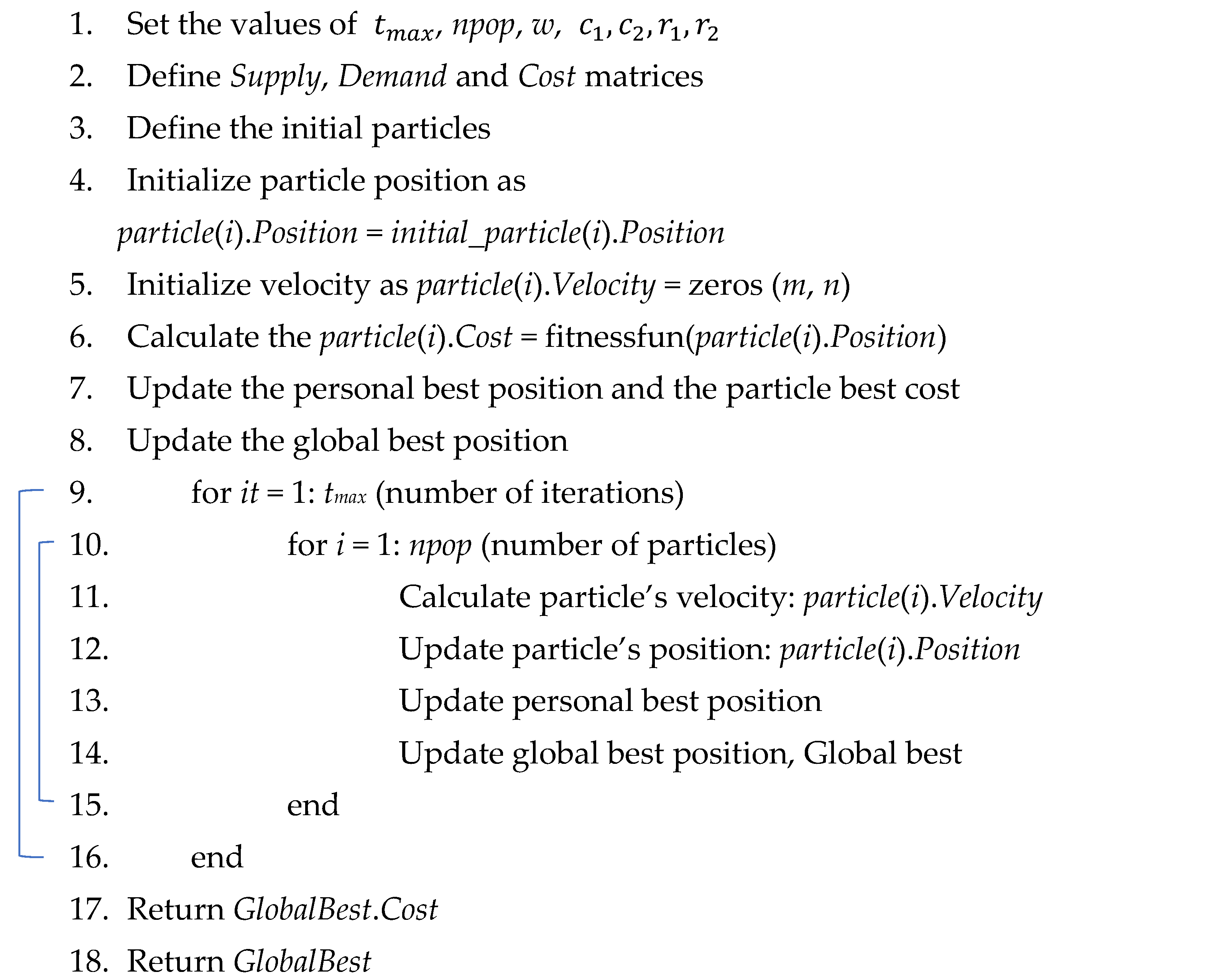

Next, we present the structure of the basic PSO algorithm, which will be applied to solve the TP (Algorithm 2). The process starts with the initialization of the population size npop, the maximum number of iterations $t_{max}$, the personal and social acceleration coefficients $c_1$ and $c_2$, the random variables $r_1$ and $r_2$ and, finally, the inertia weight w (line 1). Subsequently, the Supply, Demand and Cost matrices are defined (line 2).

Line 6 calculates the total transport cost of each particle. Then, lines 7 and 8 check whether the total cost of the current particle is less than the minimum transport cost found so far. If it is, the global best cost is updated, and this particle is now defined as the best. This process is repeated for all candidate particles. In lines 9 to 14, through an iterative loop, the position and velocity of the particles are calculated using Equations (5) and (6). The algorithm returns the particle with the best position and its respective transport cost.

Algorithm 2: Particle Swarm Optimization algorithm

The resulting values of the particle’s position, although satisfying the demand and supply constraints, were observed to occasionally be negative and/or fractional. Such values are not admissible in a solution, since they represent quantities (only non-negative integer values are allowed); therefore, appropriate modifications have been made to the final form of the particle position.

Two sub-algorithms were designed to repair the particle positions, replacing negative and fractional amounts with natural numbers without violating the supply and demand conditions.

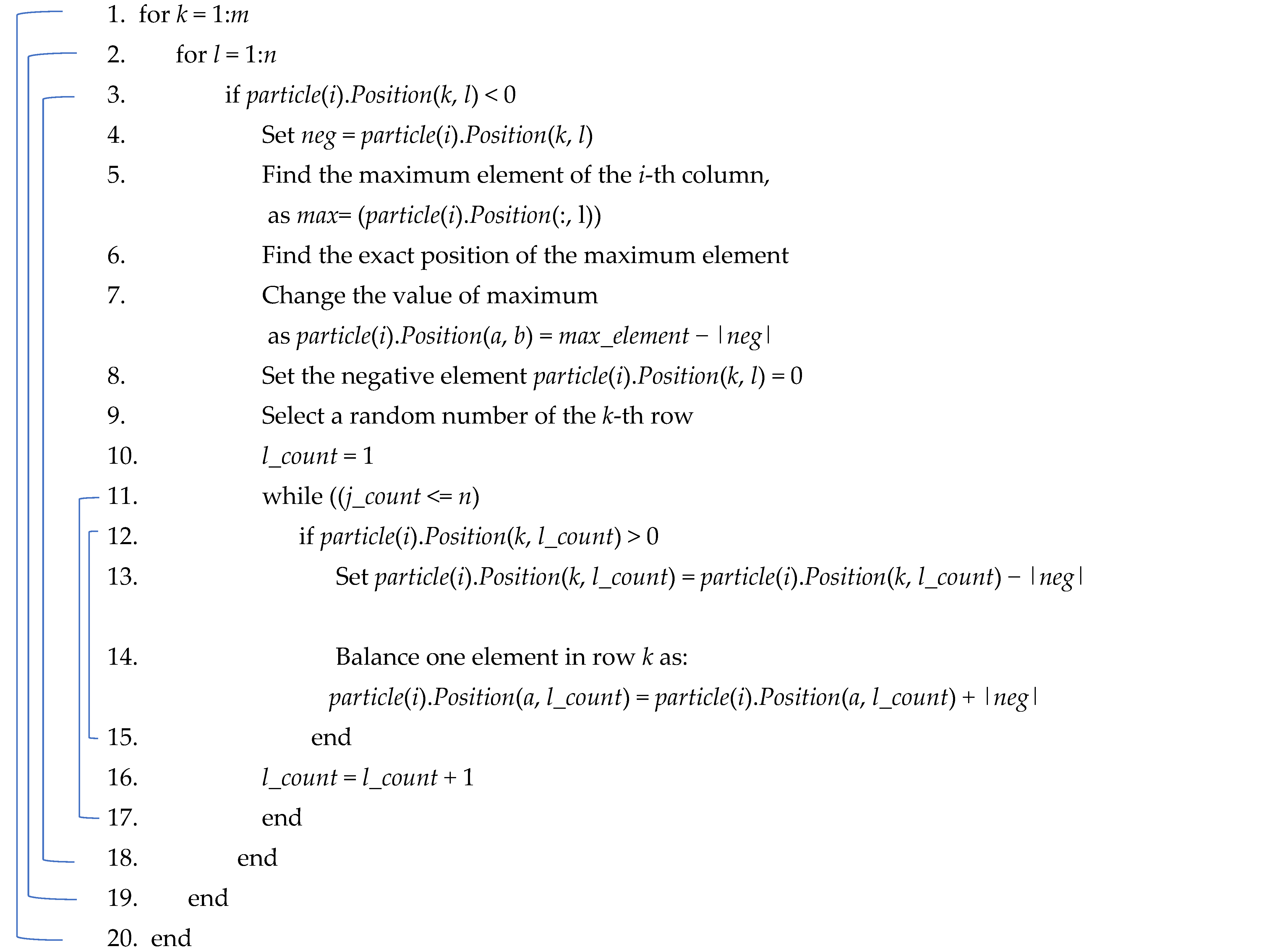

Algorithm 3 takes as input a matrix, particle(i).Position, that has negative values in some of its cells. The aim is to eliminate the negative elements, as in [17]. Through an iterative process, which begins in line 3, the algorithm scans each row of the matrix and stores in neg the value of a cell that is negative. Subsequently, it searches for the maximum element of the column in which the negative element was found, as shown in lines 5 and 6. The absolute value of the negative element is subtracted from the cell holding this maximum, while the cell with the negative value is set to zero. In line 9, a cell is randomly selected from the row that corresponds to the negative element. If its value is positive, the absolute value of the negative element is subtracted from it. Simultaneously, a cell of this row is counterbalanced by adding to it the absolute value of the negative element, as shown in line 13. Algorithm 3 returns particle(i).Position with non-negative values, while preserving the supply and demand conditions.

Algorithm 3: Negative values repair algorithm
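A repair step of this kind can be sketched in Python as shown below. The sketch is a variant and should not be read as Algorithm 3 itself: the function name `repair_negatives` is hypothetical, the cell in the row of the negative element is chosen as the row maximum rather than at random, and the transferred amount is capped so that no new negative entry is created. Each adjustment changes four cells forming a rectangle, which leaves every row and column sum unchanged.

```python
import numpy as np

def repair_negatives(x, tol=1e-9, max_iter=10_000):
    """Remove negative entries while keeping all row and column sums.

    Assumes every row sum and column sum of x is non-negative.
    """
    x = np.array(x, dtype=float)
    for _ in range(max_iter):
        i, j = np.unravel_index(np.argmin(x), x.shape)  # most negative cell
        if x[i, j] >= -tol:
            break
        k = np.argmax(x[:, j])  # row holding the largest entry of column j
        l = np.argmax(x[i, :])  # column holding the largest entry of row i
        delta = min(-x[i, j], x[k, j], x[i, l])
        if delta <= 0:
            break  # input violates the assumption above
        # Rectangle adjustment: +delta, -delta, -delta, +delta.
        x[i, j] += delta
        x[k, j] -= delta
        x[i, l] -= delta
        x[k, l] += delta
    return x

position = np.array([[5.0, -3.0, 8.0],
                     [4.0, 6.0, 0.0],
                     [1.0, 7.0, 2.0]])
repaired = repair_negatives(position)
```

The result may still contain fractional amounts, which is why a second repair step for fractions is needed.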

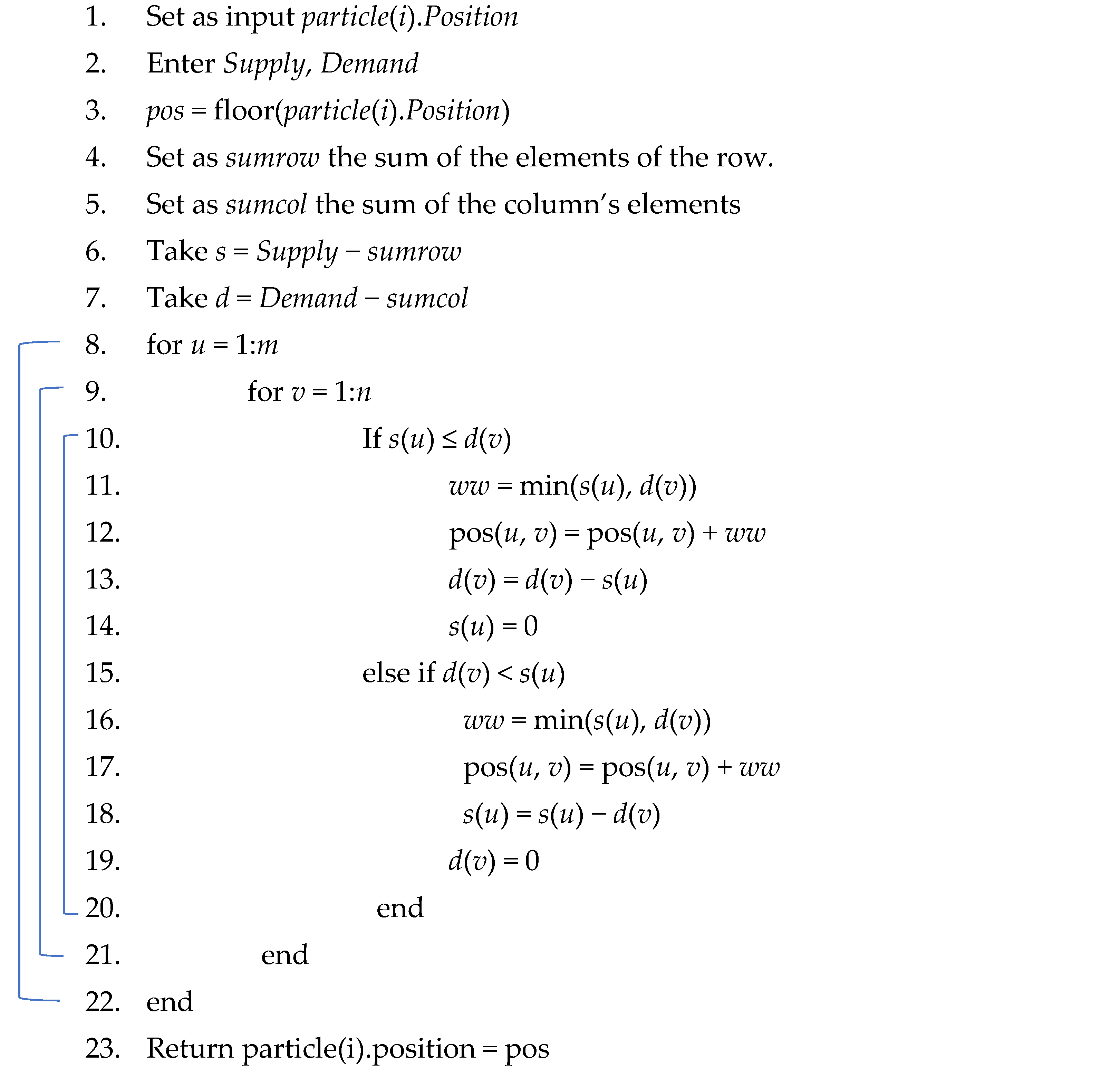

After the above transformation, the result is a matrix with non-negative but possibly fractional elements. Algorithm 4 takes as its input the matrix of the particle’s positions after the negative elements have been removed. In line 3, a new matrix named pos is defined, containing the integer parts of the elements of matrix particle(i).Position. In line 4, a vector named sumrow is created which contains the sum of each row of the pos matrix, while in line 5, a vector named sumcol is created, containing the sum of each column. In lines 6 and 7, the differences between Supply and sumrow and between Demand and sumcol are stored in vectors s and d, respectively, in order to record the quantities missing from the pos matrix. Then, through an iterative loop, element u of s, s(u), is compared with element v of d, d(v). The minimum of these two quantities is selected and added to the pos matrix, reallocating the integer amounts in an appropriate manner to satisfy the available supply and demand. The algorithm terminates when vectors s and d are zeroed and the integer quantities have been inserted into the pos matrix, which is the output of Algorithm 4. The final solution is a non-negative integer matrix satisfying the required constraints.

Algorithm 4: Amend fractions
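The fraction repair described above can be sketched in Python as follows. The function name `repair_fractions` is hypothetical, and the sketch assumes a non-negative input whose row and column sums already match integer supply and demand vectors; it illustrates the idea and is not the MATLAB code of Algorithm 4.

```python
import numpy as np

def repair_fractions(x, supply, demand):
    """Round a non-negative matrix to integers while restoring the
    supply (row) and demand (column) totals."""
    pos = np.floor(np.asarray(x, dtype=float)).astype(int)  # integer parts
    s = np.asarray(supply, dtype=int) - pos.sum(axis=1)     # missing per row
    d = np.asarray(demand, dtype=int) - pos.sum(axis=0)     # missing per column
    for u in range(len(s)):
        for v in range(len(d)):
            q = min(s[u], d[v])  # largest amount both row u and column v accept
            pos[u, v] += q
            s[u] -= q
            d[v] -= q
    return pos

fixed = repair_fractions([[2.5, 7.5], [7.5, 2.5]], [10, 10], [10, 10])
print(fixed)
```

Because rounding down never exceeds a row or column total, the vectors s and d start non-negative and are both reduced to zero by the double loop in a balanced problem.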

5. Variations of PSO

This section presents two already-known and two new variations of the classical implementation of the PSO, which are presented and used in this contribution to solve the TP. These variations are investigated in order to improve the performance of the classical PSO algorithm.

5.1. Decreasing Weight Particle Swarm Optimization (DWPSO)

The inertia weight w is the most influential parameter with respect to both the success rate and the number of function evaluations [18]. In DWPSO, the inertia factor decreases linearly. The decision to use this variation was not arbitrary; DWPSO is one of the classic and very effective PSO variations, and it has remained competitive over the years. Through DWPSO, the algorithm focuses on diversity in early iterations and on convergence in later ones [18]. A proper selection of the inertia weight provides a balance between global exploration and local exploitation and results in fewer iterations, on average, to find a sufficiently good solution [19]. Exploitation is the capacity of particles to converge to the same peak of the objective function and remain there without seeking better solutions in their wider neighborhood. On the contrary, in exploration, the particles are in constant search, discovering beneficial solutions. After extensive research regarding the configuration of the inertia weight, Shi and Eberhart concluded that values in the interval [0.9, 1.2] have a positive effect on the improvement of the solution [20]. A linearly decreasing inertia weight, with w between 0.4 and 0.9, was also used by Shi and Eberhart. According to them, w is the new inertia weight, which linearly decreases from 0.9 to 0.4.

Equation (7) for DWPSO is given as

$$w = w_{max} - \left( w_{max} - w_{min} \right) \frac{t}{t_{max}} \tag{7}$$

where $w_{max}$ is set to 0.9, favoring extensive exploration, and $w_{min}$ is equal to 0.4, favoring exploitation. Moreover, t is the current iteration of the algorithm and $t_{max}$ is the maximum number of iterations. The results of many researchers illustrate that a linearly decreasing inertia weight can greatly improve the performance of PSO, yielding better results than the classic implementation of the algorithm.
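The linearly decreasing inertia weight can be written as a one-line Python function. The name `inertia_weight` is hypothetical, and the sketch assumes that the iteration counter t runs from 0 to the maximum number of iterations, so that the weight starts at 0.9 and ends at 0.4.

```python
def inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia weight: w_max at t = 0, w_min at t = t_max."""
    return w_max - (w_max - w_min) * t / t_max

# Weight at the start, the middle and the end of a 100-iteration run.
schedule = [inertia_weight(t, 100) for t in (0, 50, 100)]
```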

5.2. Time-Varying Acceleration Coefficients (TVAC)

In population-based optimization methods, proper control of global exploration and local exploitation is essential for the efficient identification of the optimum solution.

Ratnaweera et al. introduced the TVAC in PSO [11]. According to their research, the cognitive parameter starts with a high value and linearly decreases to a low value, whereas the social parameter starts with a low value and linearly increases to a high value [21]. On the one hand, with a large value for the cognitive parameter and a small value for the social parameter at the beginning, particles move according to their own experience and their own best positions, being able to move freely without following the mass. On the other hand, a small value for the cognitive parameter and a large value for the social parameter help the particles to escape from the area around their personal best positions and allow them to enhance the global search in the later stages of the optimization procedure, converging toward the global optimum. This concept can be mathematically represented as

$$c_1 = \left( c_{1f} - c_{1i} \right) \frac{t}{t_{max}} + c_{1i} \tag{8}$$

$$c_2 = \left( c_{2f} - c_{2i} \right) \frac{t}{t_{max}} + c_{2i} \tag{9}$$

where $c_{1i}$ defines the value of $c_1$ in the first iteration, equal to 2.5, and $c_{1f}$ defines the value of $c_1$ in the last iteration, equal to 0.5. Respectively, the value of $c_2$ in the first iteration is $c_{2i}$ and is set to 0.5, while the value of $c_2$ in the last iteration is $c_{2f}$ and is set to 2.5 [21]. Here, t is the current iteration and $t_{max}$ is the maximum number of iterations.
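The two linear schedules of TVAC can be sketched in Python as follows. The function name `tvac_coefficients` is hypothetical, and the sketch assumes that the iteration counter t runs from 0 to the maximum number of iterations.

```python
def tvac_coefficients(t, t_max, c1_i=2.5, c1_f=0.5, c2_i=0.5, c2_f=2.5):
    """Time-varying acceleration coefficients.

    c1 (cognitive) falls linearly from c1_i to c1_f while
    c2 (social) rises linearly from c2_i to c2_f.
    """
    frac = t / t_max
    c1 = (c1_f - c1_i) * frac + c1_i
    c2 = (c2_f - c2_i) * frac + c2_i
    return c1, c2

start = tvac_coefficients(0, 100)
middle = tvac_coefficients(50, 100)
end = tvac_coefficients(100, 100)
```

With the default values, the two coefficients cross at the midpoint of the run, where both are equal to 1.5.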

5.3. Trigonometric Acceleration Coefficients-PSO (TrigAC-PSO)

In this subsection, a new variation is introduced, in which the impact of parameters $c_1$ and $c_2$ is extensively studied. First, each particle is guided by the knowledge and experience gained by the swarm (the value of $c_2$ is considerably larger than the value of $c_1$). Next, relying on the learning mechanism, each particle builds its own strategy and acquires its own experience (the value of $c_2$ becomes smaller while the value of $c_1$ becomes larger; see Equations (10) and (11)). This decrement of $c_2$ and increment of $c_1$ continue until both parameters are equal to 2 in the last generation of the algorithm.

The following equations are used to calculate the cognitive and social acceleration parameters:

Here, in the first iteration, $c_1$, which is the personal acceleration value, is equal to 0.5, while $c_2$, which is the social acceleration value, is equal to 3.5. In the last iteration of the algorithm, both the personal and the social acceleration values are equal to 2.

The value of the inertia weight w varies according to the number of the current iteration t and the maximum number of iterations $t_{max}$.

It is described as follows in Equation (12):

5.4. Four Sectors Varying Acceleration Coefficients PSO (FSVAC-PSO)

In this subsection, a new variation is developed. This variation is novel and comprises the major technical merit of this contribution. Its key feature is the multiple changes of the acceleration coefficients $c_1$ and $c_2$. In this case, the solution is approached both from the knowledge of the particle and from the experience of the whole swarm. The number of iterations is divided into four sectors. In the first iteration, the social and cognitive acceleration coefficients are both initialized to 2. In the first sector of iterations, the value of $c_1$ is increasing while the value of $c_2$ is decreasing. As a result, the particle is mostly influenced by its own knowledge, while the influence of the swarm on it is limited. In the second sector, the value of $c_1$ is decreasing while the value of $c_2$ is increasing, toward an equilibrium between the knowledge the particle gained in the previous sector and the experience of the swarm. In the third sector, the value of $c_1$ is decreasing while the value of $c_2$ is increasing; that is, the particles are allowed to move toward the global best position, following the swarm’s movements. As a result, information about the global best is reallocated to all the particles for more exploration before the swarm finally converges [11]. In the fourth sector, the particles head toward both their own personal best and the global best observed by the whole swarm. The concept of this variation is based on the combination of all the different searching behaviors that arise for different values of the acceleration coefficients, culminating in an equilibrium between exploitation and exploration of the search space. Finally, in the last iteration, the two coefficients are equal.

The formulation is represented in detail below:

- In the first Iteration, as already mentioned:

- In the first sector:where , , and ;

- In the second sector:where , ;

- In the third sector:where ;

- In the fourth sector:where ;

- In the last iteration:

In the above formulations, and are initial and final values of cognitive and social components acceleration factors, respectively. To improve the solution quality, these coefficients are updated in such a way that the values increase and decrease at a steady pace. According to this approach, the solution avoids being trapped into a local optimum, as shown by the experimental results presented in Section 6.

As for the inertia weight w, Equation (12) is used to provide the necessary momentum for particles to roam across the search space.

6. Case Studies and Experimental Results

In this section, the proposed variations of the PSO algorithm are applied to thirty-two well-known numerical examples of the TP, as shown in Table 1. The numerical examples of this study come from the research of B. Amaliah, who compared five different methods, briefly presented below, regarding their performance in solving the TP [22].

Table 1.

Detail of 32 numerical examples of the TP.

Vogel’s Approximation Method (VAM) is an iterative procedure in which, at each step, penalties for each available row and column are computed from the least cost and the second-least cost of the transportation matrix [22]. The Total Differences Method 1 (TDM1) was introduced by Hosseini in 2017. The method builds on VAM’s idea of using penalties for all rows and columns of the transportation matrix, but TDM1 calculates penalty values only for the rows of the transportation matrix [23]. Amaliah et al., in 2019, presented their new method, known as the Total Opportunity Cost Matrix-Minimal Total (TOCM-MT). This method has a mechanism with which to check the value of the least-cost cell before allocating the maximum units; this is in contrast to the TDM1, which directly allocates the maximum units to the least-cost cell [24]. Juman and Hoque, in 2015, developed a method called the Juman and Hoque method (JHM). Their study is based on the distribution of supply and demand quantities, taking into account the two minimum-cost cells and their redistribution through penalties [25]. Finally, the last method presented is known as the Bilqis Chastine Erma Method (BCE), which constitutes an enhanced version of the JHM [26].

The whole algorithmic approach was implemented using MATLAB R2021b. The algorithm was tested on a set of problems of different dimensions. All parameters of the proposed algorithm were selected after exhaustive experimental testing. Each of the four variations was tested using different parameter values, and the values that produced the best computational results were selected. The number of iterations is set to 100. The parameter $r_1$ is set as a random number drawn from the uniform distribution in the range [0, 1], and $r_2$ is set as the complement of $r_1$; that is, $r_2 = 1 - r_1$. This modification plays a significant part, as it differs from the customary application where both $r_1$ and $r_2$ are drawn independently and uniformly from the range [0, 1]. Using this relationship between $r_1$ and $r_2$, we achieve stronger control over these parameters’ values.
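The complementary choice of the two random factors can be illustrated with a short Python sketch. The function name `complementary_randoms` is hypothetical and the sketch only shows how the pair is drawn; it is not taken from the MATLAB implementation.

```python
import numpy as np

def complementary_randoms(rng):
    """Draw r1 uniformly from [0, 1) and set r2 to its complement."""
    r1 = rng.random()
    r2 = 1.0 - r1
    return r1, r2

rng = np.random.default_rng(7)
pairs = [complementary_randoms(rng) for _ in range(5)]
```

Since the two factors always sum to one, a strong cognitive pull in a given update implies a weak social pull and vice versa, which is one way to read the stronger control mentioned above.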

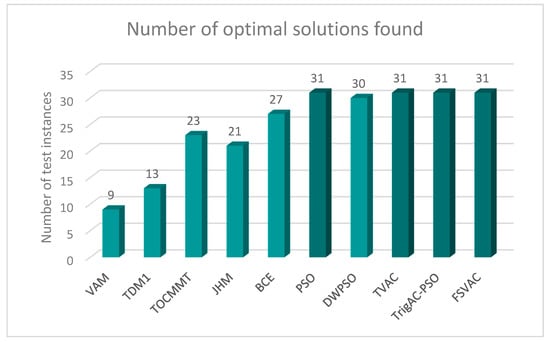

In the following table (Table 2) and Figure 1, the performance of both the exact methods and the PSO-based ones is presented for 30 Monte Carlo runs; more precisely, the best value achieved by each method is depicted. The last column presents the optimal solution of each numerical problem. As shown, VAM finds the optimum in 9 of the 32 test instances (28.13%). The Total Differences Method 1 (TDM1) finds more optimal solutions than VAM, namely 13 out of 32 (40.63%). The TOCM-MT method performs better still, finding the optimum in 23 out of 32 test instances (71.88%). The JHM method, which found 21 optimal solutions (65.63%), was less effective than TOCM-MT. The BCE method, which found the optimum in 27 out of 32 test instances (84.38%), proved to be the most efficient of all the previously mentioned methods. The classic PSO, the TVAC, the TrigAC-PSO and the FSVAC-PSO achieve the optimum in 31 out of 32 test instances (96.88%), while the DWPSO achieves the optimum in 30 out of 32 (93.75%).

Table 2.

The optimal solution of each method for the 32 test instances.

Figure 1.

The number of optimal solutions that every method achieved.

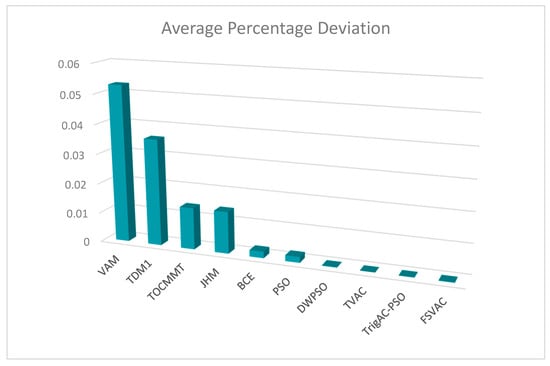

One significant finding of our research is that the new PSO variation, TrigAC-PSO, which is first presented in this study, achieved very good results. Table 3 examines the deviation of VAM, TDM1, TOCM-MT, JHM, BCE, PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC-PSO. The deviation measures the difference between the observed value and the expected value of a variable, expressed here as a percentage of the optimal value, and it is given by the following formula:

$$\mathrm{dev} = \frac{F_{sol} - F_{opt}}{F_{opt}} \times 100\%$$

where $F_{sol}$ is the current solution and $F_{opt}$ is the optimal solution.
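As a small worked illustration, the deviation can be computed as below, assuming it is the relative gap between the obtained cost and the optimal cost, expressed as a percentage. The function name and the numbers are made up for illustration and are not taken from Table 3.

```python
def percentage_deviation(solution_cost, optimal_cost):
    """Relative gap of a solution to the optimum, in percent (assumed definition)."""
    if optimal_cost == 0:
        raise ValueError("optimal_cost must be non-zero")
    return 100.0 * (solution_cost - optimal_cost) / optimal_cost


# Hypothetical costs: a method returns 105 where the optimum is 100.
print(percentage_deviation(105, 100))  # 5.0
print(percentage_deviation(100, 100))  # 0.0
```

A deviation of zero means the method reached the optimum on that instance; averaging the deviations over all instances gives a single figure per method.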

Table 3.

The deviation (dev) of the methods for 32 numerical examples.

Considering Table 3 and Figure 2, it is evident that VAM, TDM1, TOCM-MT and JHM are less efficient, deviating from the optimal solution by a significant margin. More precisely, the results of Table 3 show that the solutions achieved by VAM differ from the optimal solution by 5.29%, those of TDM1 by 3.58%, those of TOCM-MT by 1.4% and those of JHM by 1.4%. The BCE method was more efficient, since its deviation values were negligible. The data of Table 3 also reveal that the percentage deviation of the classic PSO, as well as of its variations, was almost zero. Furthermore, the TVAC method was nearest to the optimal solution, followed by TrigAC-PSO, FSVAC-PSO and finally DWPSO.

Figure 2.

Average percentage deviation for each method.

The findings from the current study provide the basic information for an extensive meta-analysis, allowing us to investigate which of the presented PSO variations performs better in solving the TP. To this end, many experiments were carried out with different values of the PSO population size (number of particles). The classic PSO, as well as each of its variations (DWPSO, TVAC, TrigAC-PSO, FSVAC-PSO), was tested with 10, 15 and 20 particles on all 32 numerical examples. The results presented in Table 4, Table 5 and Table 6 show the performance of the classic PSO as well as of its variations for 30 independent runs. The number of generations was fixed at 100 for all runs.

Table 4 reports the accuracy rate of each algorithm for 10 particles. The accuracy rate is given by the following formula:

$$\mathrm{Accuracy} = \frac{N_{opt}}{N_{runs}}$$

where $N_{opt}$ is the total number of runs in which the optimal solution was found and $N_{runs}$ is the number of runs.
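The accuracy rate described above, that is, the fraction of independent runs that reach the optimum, can be sketched in a few lines of Python. The function name is an assumption; the example uses 17 successful runs out of 30, which reproduces a value of the form that appears in the accuracy tables (0.5667).

```python
def accuracy_rate(optimal_runs, total_runs):
    """Fraction of runs in which the optimal solution was found."""
    if total_runs <= 0:
        raise ValueError("total_runs must be positive")
    return optimal_runs / total_runs


print(round(accuracy_rate(17, 30), 4))  # 0.5667
print(round(accuracy_rate(1, 30), 4))   # 0.0333
```

With 30 runs per instance, every per-instance accuracy is a multiple of 1/30, and the figure quoted for a method is the mean of these values over the 32 test instances.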

Table 4 shows that the classic PSO obtained a 38.33% accuracy rate. A significant increase in accuracy rate, using 10 particles, was evident in DWPSO, which achieved 59.58% accuracy, roughly one and a half times that of the classic PSO. Moreover, TVAC obtained a 61.15% accuracy rate. The best results came from TrigAC-PSO, which achieved a 62.81% accuracy rate. Last but not least, FSVAC achieved a 59.46% accuracy rate.

Table 4.

Accuracy of PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC for 10 particles.

| Problem | PSO | DWPSO | TVAC | TrigAC-PSO | FSVAC |
|---|---|---|---|---|---|
| Pr.01 | 0.0333 | 0.2 | 0.4666 | 0.5667 | 0.2333 |
| Pr.02 | 0.7667 | 1 | 1 | 1 | 1 |
| Pr.03 | 1 | 1 | 1 | 1 | 1 |
| Pr.04 | 1 | 1 | 1 | 1 | 1 |
| Pr.05 | 0.2333 | 0.6667 | 0.8333 | 0.8667 | 0.8333 |
| Pr.06 | 0.3667 | 1 | 0.9667 | 1 | 1 |
| Pr.07 | 0.7667 | 0.9 | 1 | 1 | 0.9 |
| Pr.08 | 0 | 0 | 0.2667 | 0.1667 | 0.1334 |
| Pr.09 | 0.0333 | 0.3 | 0.2 | 0.2667 | 0.1 |
| Pr.10 | 0 | 0 | 0.0333 | 0 | 0.0333 |
| Pr.11 | 0.1 | 0.1 | 0.1333 | 0.1 | 0.0667 |
| Pr.12 | 0 | 0.3 | 0.4 | 0.4667 | 0.3667 |
| Pr.13 | 0.5334 | 1 | 1 | 0.8667 | 1 |
| Pr.14 | 0 | 0 | 0 | 0.3333 | 0 |
| Pr.15 | 0.7 | 1 | 1 | 1 | 1 |
| Pr.16 | 0 | 0.4667 | 0.4667 | 0.6667 | 0.5667 |
| Pr.17 | 0.4333 | 0.9 | 0.9333 | 0.9 | 0.8333 |
| Pr.18 | 1 | 1 | 1 | 1 | 1 |
| Pr.19 | 0.4667 | 0.7 | 0.7667 | 0.5 | 0.7333 |
| Pr.20 | 0.4667 | 0.8333 | 0.6667 | 0.8667 | 0.7667 |
| Pr.21 | 0.5667 | 0.5333 | 0.3333 | 0.5333 | 0.3939 |
| Pr.22 | 0.3667 | 1 | 0.9667 | 0.8333 | 1 |
| Pr.23 | 0.0333 | 0.0333 | 0.1667 | 0.1667 | 0.1667 |
| Pr.24 | 0 | 0 | 0 | 0 | 0 |
| Pr.25 | 0.5333 | 0.9667 | 0.9333 | 0.9667 | 1 |
| Pr.26 | 0.4667 | 0.6 | 0.7 | 0.7 | 0.7333 |
| Pr.27 | 0.0333 | 0.4667 | 0.5667 | 0.4 | 0.4667 |
| Pr.28 | 0.2 | 0.2667 | 0.0667 | 0.2 | 0 |
| Pr.29 | 0.8333 | 1 | 1 | 1 | 1 |
| Pr.30 | 0.8667 | 1 | 1 | 1 | 1 |
| Pr.31 | 0.4 | 0.8333 | 0.7 | 0.7 | 0.7 |
| Pr.32 | 0.0667 | 0 | 0 | 0.0333 | 0 |
| Average | 0.383338 | 0.595834 | 0.611459 | 0.6281313 | 0.594603 |

The accuracy rate results for 15 particles are presented in Table 5. DWPSO achieved 65.31% accuracy, whereas TVAC reached 66.99%. It is of particular interest that TrigAC-PSO achieved the highest accuracy rate once again by reaching 69.8%. Finally, FSVAC obtained an accuracy rate equal to 66.56%.

Table 5.

Accuracy of PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC for 15 particles.

| Problem | PSO | DWPSO | TVAC | TrigAC-PSO | FSVAC |
|---|---|---|---|---|---|
| Pr.01 | 0.0667 | 0.6 | 0.6333 | 0.6333 | 0.4333 |
| Pr.02 | 0.9667 | 1 | 1 | 1 | 1 |
| Pr.03 | 1 | 1 | 1 | 1 | 1 |
| Pr.04 | 1 | 1 | 1 | 1 | 1 |
| Pr.05 | 0.7 | 0.9 | 1 | 1 | 1 |
| Pr.06 | 0.7 | 1 | 0.9667 | 1 | 1 |
| Pr.07 | 0.7667 | 1 | 1 | 0.9667 | 0.9667 |
| Pr.08 | 0 | 0.0667 | 0.3333 | 0.1667 | 0.0333 |
| Pr.09 | 0.0667 | 0.2333 | 0.2333 | 0.2 | 0.1333 |
| Pr.10 | 0.2667 | 0 | 0 | 0.0667 | 0 |
| Pr.11 | 0.2333 | 0.0667 | 0.3 | 0.2 | 0.0333 |
| Pr.12 | 0.1 | 0.4 | 0.4333 | 0.3333 | 0.2 |
| Pr.13 | 0.5667 | 1 | 1 | 0.9667 | 1 |
| Pr.14 | 0 | 0.0333 | 0 | 0.0667 | 0.1 |
| Pr.15 | 0.3 | 0.9333 | 0.9667 | 1 | 1 |
| Pr.16 | 0 | 0.6 | 0.5 | 0.9333 | 0.5333 |
| Pr.17 | 0.5667 | 0.9 | 1 | 0.9667 | 1 |
| Pr.18 | 1 | 1 | 1 | 1 | 1 |
| Pr.19 | 0.5333 | 0.9 | 0.9 | 0.6667 | 1 |
| Pr.20 | 0.6334 | 0.9667 | 0.8667 | 0.9333 | 1 |
| Pr.21 | 0.6667 | 0.4333 | 0.6667 | 1 | 0.4667 |
| Pr.22 | 0.6 | 1 | 1 | 1 | 1 |
| Pr.23 | 0.0667 | 0.2 | 0.3667 | 0.2333 | 0.2 |
| Pr.24 | 0.6666 | 0 | 0 | 0 | 0 |
| Pr.25 | 0.6333 | 1 | 0.8333 | 1 | 1 |
| Pr.26 | 0.3333 | 1 | 0.8 | 0.6667 | 1 |
| Pr.27 | 0.3667 | 0.4 | 0.4667 | 0.8333 | 1 |
| Pr.28 | 0.3333 | 0.1333 | 0.1 | 0.3333 | 0.1667 |
| Pr.29 | 1 | 1 | 1 | 1 | 1 |
| Pr.30 | 0.9333 | 1 | 1 | 1 | 1 |
| Pr.31 | 0.3667 | 1 | 0.9333 | 1 | 1 |
| Pr.32 | 0.1333 | 0.1333 | 0.1 | 0.1667 | 0.0333 |
| Average | 0.486463 | 0.653122 | 0.669894 | 0.69791875 | 0.665622 |

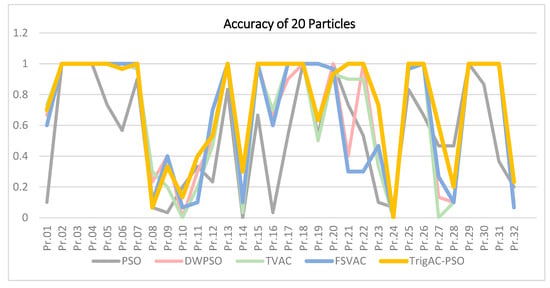

The accuracy rate results for 20 particles are presented in Table 6 and Figure 3. The classic PSO obtained an accuracy rate of 53.33%. The rates of DWPSO and TVAC were very close, at 66.77% and 66.56%, respectively. FSVAC, one of the variations proposed in this research, achieved an accuracy rate of approximately 66%, which supports its validity and effectiveness. Last but not least, TrigAC-PSO demonstrated the best performance of all variations, achieving 74.3%. Using 20 particles, TrigAC-PSO found the optimum in 31 out of 32 test instances (96.88%). Moreover, in 20 out of 32 numerical examples (62.5%), this variation reached the optimum in all 30 runs. With an accuracy approaching 75%, this variation emerges as the preferred option among those compared for solving the TP.

Table 6.

Accuracy of PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC for 20 particles.

| Problem | PSO | DWPSO | TVAC | TrigAC-PSO | FSVAC |
|---|---|---|---|---|---|
| Pr.01 | 0.1 | 0.6667 | 0.7333 | 0.7 | 0.6 |
| Pr.02 | 1 | 1 | 1 | 1 | 1 |
| Pr.03 | 1 | 1 | 1 | 1 | 1 |
| Pr.04 | 1 | 1 | 1 | 1 | 1 |
| Pr.05 | 0.7333 | 1 | 1 | 1 | 1 |
| Pr.06 | 0.5667 | 1 | 1 | 0.9667 | 1 |
| Pr.07 | 0.9 | 0.9667 | 0.9667 | 1 | 1 |
| Pr.08 | 0.0667 | 0.2333 | 0.3 | 0.0667 | 0.1 |
| Pr.09 | 0.0333 | 0.4 | 0.2 | 0.3333 | 0.4 |
| Pr.10 | 0.2 | 0 | 0 | 0.1333 | 0.0667 |
| Pr.11 | 0.3333 | 0.3 | 0.2 | 0.4 | 0.1 |
| Pr.12 | 0.2333 | 0.5 | 0.4667 | 0.5333 | 0.7 |
| Pr.13 | 0.8333 | 1 | 1 | 1 | 1 |
| Pr.14 | 0 | 0.0333 | 0.0333 | 0.3 | 0.1 |
| Pr.15 | 0.6667 | 1 | 0.9667 | 1 | 1 |
| Pr.16 | 0.0333 | 0.6667 | 0.7 | 1 | 0.6 |
| Pr.17 | 0.5333 | 0.9 | 1 | 1 | 1 |
| Pr.18 | 1 | 1 | 1 | 1 | 1 |
| Pr.19 | 0.5333 | 0.6333 | 0.5 | 0.6333 | 1 |
| Pr.20 | 1 | 1 | 0.9333 | 0.9333 | 0.9667 |
| Pr.21 | 0.7333 | 0.4 | 0.9 | 1 | 0.3 |
| Pr.22 | 0.5333 | 1 | 0.9 | 1 | 0.3 |
| Pr.23 | 0.1 | 0.3333 | 0.3333 | 0.7333 | 0.4667 |
| Pr.24 | 0.0667 | 0 | 0 | 0 | 0 |
| Pr.25 | 0.8333 | 1 | 1 | 1 | 0.9667 |
| Pr.26 | 0.6667 | 1 | 1 | 1 | 1 |
| Pr.27 | 0.4667 | 0.1333 | 0 | 0.6 | 0.2667 |
| Pr.28 | 0.4667 | 0.1 | 0.1 | 0.2 | 0.1 |
| Pr.29 | 1 | 1 | 1 | 1 | 1 |
| Pr.30 | 0.8667 | 1 | 1 | 1 | 1 |
| Pr.31 | 0.3667 | 1 | 1 | 1 | 1 |
| Pr.32 | 0.2 | 0.1 | 0.0667 | 0.2333 | 0.0667 |
| Average | 0.533331 | 0.667706 | 0.665625 | 0.7427031 | 0.659381 |

Figure 3.

Accuracy for 20 particles.

In summary, the proposed FSVAC-PSO, although it did not demonstrate the highest average success rate, was very accurate in calculating the optimal solution in cases where the other variations were unable to approach it. In more detail, this research experimented with population sizes of 10, 15 and 20 particles over 32 well-known test instances from the literature. For each problem, as already mentioned, 30 independent experimental runs were conducted. In the case of 10 particles, the classic PSO found the optimal solution in all 30 runs in only 3 out of 32 test instances (9.4%); DWPSO did so in 10 out of 32 test instances (31.25%); TVAC and TrigAC-PSO did so in 9 out of 32 test instances (28.13%); finally, FSVAC was the best PSO variation in this respect, finding the optimal solution in all 30 runs in 11 out of 32 test instances (34.4%).
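The distinction between the mean accuracy rate and the number of instances solved in every run can be checked directly from the 10-particle results. The Python sketch below copies the TrigAC-PSO and FSVAC columns of Table 4; the variable and function names are assumptions made for illustration.

```python
# Per-instance accuracy for 10 particles, Pr.01 to Pr.32 (values from Table 4).
TRIGAC_10 = [0.5667, 1, 1, 1, 0.8667, 1, 1, 0.1667, 0.2667, 0, 0.1, 0.4667,
             0.8667, 0.3333, 1, 0.6667, 0.9, 1, 0.5, 0.8667, 0.5333, 0.8333,
             0.1667, 0, 0.9667, 0.7, 0.4, 0.2, 1, 1, 0.7, 0.0333]
FSVAC_10 = [0.2333, 1, 1, 1, 0.8333, 1, 0.9, 0.1334, 0.1, 0.0333, 0.0667,
            0.3667, 1, 0, 1, 0.5667, 0.8333, 1, 0.7333, 0.7667, 0.3939, 1,
            0.1667, 0, 1, 0.7333, 0.4667, 0, 1, 1, 0.7, 0]


def mean_accuracy(column):
    """Average accuracy rate over all test instances."""
    return sum(column) / len(column)


def fully_solved(column):
    """Number of instances where every run reached the optimum."""
    return sum(1 for value in column if value >= 1.0)


for name, column in (("TrigAC-PSO", TRIGAC_10), ("FSVAC", FSVAC_10)):
    print(name, round(mean_accuracy(column), 4), fully_solved(column))
# TrigAC-PSO 0.6281 9
# FSVAC 0.5946 11
```

The two measures rank the variations differently: TrigAC-PSO has the higher mean accuracy, while FSVAC reaches the optimum in all 30 runs on more instances.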

In the case of 15 particles, FSVAC also showed the best performance, finding the optimal solution in all 30 runs in 18 out of 32 test instances (56.25%); the classic PSO did so in 4 out of 32 test instances (12.5%) and TVAC in 11 out of 32 (34.38%); last but not least, both DWPSO and TrigAC-PSO found the optimal value in all 30 runs in 13 out of 32 test instances (40.63%).

In the case of 20 particles, the variations TrigAC-PSO and FSVAC are still more accurate than the other PSO variations since they succeeded in finding the optimal solution in 18 out of 32 and in 17 out of 32 test instances in all 30 runs, respectively. The other PSO variations attained relatively lower success rates in finding the optimal solution in all of their runs.

Table 7 presents the most important statistical measures for all PSO variations in the case of 20 particles and 30 independent runs. These experimental results demonstrate the very good performance and stability of the proposed PSO variations in solving the TP. In all cases, the mean value is very close to the best one, showing that these variations are not only efficient but also quite stable. The Coefficient of Variation (CV), which is the basic measure of the stability of stochastic algorithms, is quite small for all PSO variations; more precisely, the mean CV value for each PSO variation is as follows: Classic PSO, 2.12%; DWPSO, 1.32%; TVAC, 0.87%; TrigAC-PSO, 0.66%; and FSVAC, 1.26%. These values show that TrigAC-PSO, which is one of the new PSO variations presented in this work, is the most stable one.
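For reference, the CV of a set of run results is the standard deviation divided by the mean, expressed as a percentage. The Python sketch below is illustrative only: the function name is an assumption, the use of the sample (rather than population) standard deviation is an assumption, and the run costs are made up rather than taken from Table 7.

```python
import statistics


def coefficient_of_variation(values):
    """CV in percent: sample standard deviation divided by the mean."""
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("mean must be non-zero")
    return 100.0 * statistics.stdev(values) / mean


# Hypothetical best costs from three independent runs on one instance.
print(round(coefficient_of_variation([98, 100, 102]), 6))    # 2.0
print(round(coefficient_of_variation([100, 100, 100]), 6))   # 0.0
```

A CV close to zero means that the independent runs return nearly the same cost, which is the sense in which a variation is called stable here.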

Table 7.

Statistical measures for 20 particles.

The above results urged us to continue the research for an even greater number of particles, in order to study the behavior of new variations in a multi-solution environment.

More specifically, the aforementioned variations were also tested with 40 and 50 particles. In this case, only 10 independent runs were carried out for each test instance, which gives fewer chances of finding the optimal solution than the 30 independent runs performed previously. Selecting more particles nevertheless revealed significant results.

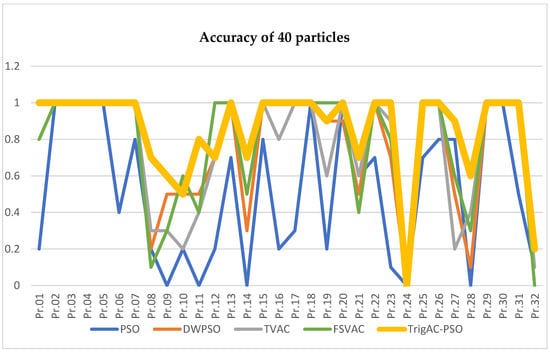

The results showed, once again, the consistent superiority of the proposed variations. Table 8 and Figure 4 show the accuracy achieved by each variation for 40 particles. These results provide further support for the hypothesis that TrigAC-PSO and FSVAC are more accurate than the other PSO variations, since they attained accuracy rates of 88.31% and 77.5%, respectively; DWPSO follows with 75.94%, and TVAC with 74.38%; last is the classic PSO with 51.56%, which is about 13 percentage points above its 10-particle accuracy rate but at a similar level to its rates for 15 and 20 particles.

Table 8.

Accuracy of PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC for 40 particles.

Figure 4.

Accuracy for 40 particles.

Table 9 presents the most important statistical measures for all PSO variations in the case of 40 particles and 10 independent runs. From the particularly low values of the Coefficient of Variation (CV), we can infer that the PSO variations are extremely stable; more precisely, the mean CV value for each PSO variation is as follows: Classic PSO, 2.14%; DWPSO, 0.93%; TVAC, 0.86%; TrigAC-PSO, 0.47%; and FSVAC, 0.81%. These values show that TrigAC-PSO, which is one of the new PSO variations presented in this work, is once again the most stable method.

Table 9.

Statistical measures for 40 particles.

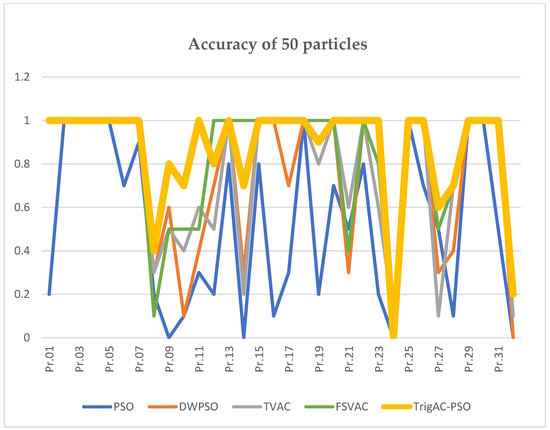

Table 10 and Figure 5 present the accuracy for 50 particles. The accuracy for each PSO variation is as follows: Classic PSO, 52.5%; DWPSO, 74.3%; TVAC, 76.56%; TrigAC-PSO, 86.88%; and FSVAC, 82.19%. The two new variations attain the highest levels. These are particularly promising results, demonstrating that an increase in the number of particles leads to an increase in the accuracy of the PSO variations, especially in the case of TrigAC-PSO and FSVAC. For most variations, the results for 50 particles are equal to or better than those presented for smaller numbers of particles. Overall, TrigAC-PSO was the one that obtained the most robust results.

Table 10.

Accuracy of PSO, DWPSO, TVAC, TrigAC-PSO and FSVAC for 50 particles.

Figure 5.

Accuracy for 50 particles.

The results of Table 11 lead to similar conclusions. In order to examine the stability for 50 particles, it is worth comparing the CV values of the proposed variations with those of the traditional variations. The best results are obtained by TrigAC-PSO, with a CV value of 0.4%, followed by FSVAC with 0.59%. The CV values of the other variations were as follows: Classic PSO, 2.19%; DWPSO, 0.77%; and TVAC, 0.83%.

Table 11.

Statistical measures for 50 particles.

The overall results support two inferences of decisive importance: first, the PSO algorithm and its variations have solved the TP with high accuracy and efficiency; second, TrigAC-PSO is the leading option among the methods compared for solving the TP, in terms of both stability and solution quality.

7. Conclusions

As technology develops, the need for product improvement and trading makes obtaining a more economical solution a high priority. The PSO algorithm was applied successfully to solve the TP. Furthermore, two new variations were introduced and compared to already-known variations. These variations produced exceptional results and proved superior to the existing variations and to the well-known exact methods in the literature. The proposed PSO variations have been tested on a variety of test instances with different combinations of values for the inertia weight and for the social and personal acceleration parameters. The experiments showed that solution quality is closely linked to the selection of proper values for the parameters that control the algorithm. In order to assess the effectiveness and stability of the proposed variations, we compared their results with those of other PSO variations on the same instances. Remarkably, the accuracy of one of our variations, TrigAC-PSO, rose to 88%, and it was established as the preferred option among all the variations compared for the solution of the TP.

It can be easily observed that this PSO variation is simple compared to other variations with complex structures. It was a challenge to achieve better results by creating and running simple computational algorithms, proving that keeping a balance between human and artificial intelligence is the key to the success of computational intelligence.

A more comprehensive analysis may be needed in order to examine the TP to a greater extent. Moreover, the proposed PSO variations could be applied to more complex networks, such as the Sioux Falls network [27], in order to demonstrate the algorithm’s good performance and its independence of the network’s size. In addition, other real-world constraints could be introduced in future work, so that the PSO algorithm variations find the optimal solution for the TP not only in balanced instances but also in more realistic unbalanced instances. Finally, combining the proposed PSO variations with other meta-heuristic methods to solve the TP will be an interesting challenge.

Author Contributions

Conceptualization, C.A. and G.N.B.; methodology, C.A. and G.N.B.; software, C.A.; validation, C.A. and G.N.B.; formal analysis, C.A. and G.N.B.; investigation, C.A. and G.N.B.; resources, C.A.; data curation, C.A.; writing—original draft preparation, C.A.; writing—review and editing, C.A. and G.N.B.; visualization, C.A.; supervision, G.N.B.; project administration, G.N.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data and the programming code used in this paper can be sent, upon request, to the interested reader. Please contact: gbeligia@upatras.gr.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Karagul, K.; Sahin, Y. A novel approximation method to obtain initial basic feasible solution of transportation problem. J. King Saud Univ. Eng. Sci. 2019, 32, 211–218.

2. Deshpande, V.A. An optimal method for obtaining initial basic feasible solution of the transportation problem. In Proceedings of the National Conference on Emerging Trends in Mechanical Engineering Patel College of Engineering & Technology (GCET), Vallabh Vidyanagar (ETME-2009), Vallabh Vidyanagar, India, 5–6 March 2010; Volume 20, p. 21.

3. Taha, H.A. Operations Research: An Introduction, 8th ed.; Pearson Prentice Hall: Hoboken, NJ, USA, 2007.

4. Mostafa, R.R.; El-Attar, N.E.; Sabbeh, S.F.; Vidyarthi, A.; Hashim, F.A. ST-AL: A hybridized search based metaheuristic computational algorithm towards optimization of high dimensional industrial datasets. Soft Comput. 2022, 27, 13553–13581.

5. Gen, M.; Altiparmak, F.; Lin, L. A genetic algorithm for two-stage transportation problem using priority-based encoding. OR Spectr. 2006, 28, 337–354.

6. Swiatnicki, Z. Application of ant colony optimization algorithms for transportation problems using the example of the travelling salesman problem. In Proceedings of the 2015 4th International Conference on Advanced Logistics and Transport (ICALT), Valenciennes, France, 20 May 2015.

7. Fakhrzadi, M.; Goodarziani, F.; Golmohammadi, A.M. Addressing a fixed charge transportation problem with multiroute and different capacities by novel hybrid meta-heuristics. J. Ind. Syst. Eng. 2019, 12, 167–184.

8. Eberhart, R.; Kennedy, J. A New Optimizer Using Particle Swarm Theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4 October 1995.

9. Salehizadeh, S.M.A.; Yadmellat, P.; Menhaj, M.B. Local Optima Avoidable Particle Swarm Optimization. In Proceedings of the IEEE Swarm Intelligence Symposium, Nashville, TN, USA, 15 May 2009.

10. Lin, S.-W.; Ying, K.-C.; Chen, S.-C.; Lee, Z.-J. Particle swarm optimization for parameter determination and feature selection of support vector machines. Expert Syst. Appl. 2008, 35, 1817–1824.

11. Ratnaweera, A.; Halgamuge, S.K.; Watson, H.C. Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans. Evol. Comput. 2004, 8, 240–255.

12. Hitchcock, F.L. The Distribution of a Product from Several Sources to Numerous Localities. J. Math. Phys. 1941, 20, 224–230.

13. Koopmans, T. Optimum Utilization of the Transportation System. Econometrica 1949, 17, 136–146.

14. Dantzig, G.B. Application of the simplex method to a transportation problem. In Activity Analysis of Production and Allocation; Koopmans, T.C., Ed.; John Wiley and Sons: New York, NY, USA, 1951; pp. 359–373.

15. Rosendo, M.; Pozo, A. A hybrid particle swarm optimization algorithm for combinatorial optimization problems. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

16. Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2017, 22, 387–408.

17. Huang, H.; Zhifang, H. Particle swarm optimization algorithm for transportation problems. In Particle Swarm Optimization; Intech: Shanghai, China, 2009; pp. 275–290.

18. Wang, J.; Wang, X.; Li, X.; Yi, J. A Hybrid Particle Swarm Optimization Algorithm with Dynamic Adjustment of Inertia Weight Based on a New Feature Selection Method to Optimize SVM Parameters. Entropy 2023, 25, 531.

19. Jain, M.; Saihjpal, V.; Singh, N.; Singh, S.B. An Overview of Variants and Advancements of PSO Algorithm. Appl. Sci. 2022, 12, 8392.

20. Shi, Y.; Eberhart, R. A modified particle swarm optimizer. In Proceedings of the IEEE International Conference on Evolutionary Computation, Anchorage, AK, USA, 4–9 May 1998; pp. 69–73.

21. Sengupta, S.; Basak, S.; Peters, R.A., II. Particle Swarm Optimization: A Survey of Historical and Recent Developments with Hybridization Perspectives. Mach. Learn. Knowl. Extr. 2019, 1, 157–191.

22. Amaliah, B.; Fatichah, C.; Suryani, E. A new heuristic method of finding the initial basic feasible solution to solve the transportation problem. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 2298–2307.

23. Hosseini, E. Three new methods to find initial basic feasible solution of transportation problems. Appl. Math. Sci. 2017, 11, 1803–1814.

24. Amaliah, B.; Fatichah, C.; Suryani, E. Total opportunity cost matrix-Minimal total: A new approach to determine initial basic feasible solution of a transportation problem. J. Egypt. Inform. 2019, 20, 131–141.

25. Juman, Z.A.M.S.; Hoque, M.A. An efficient heuristic to obtain a better initial feasible solution to the transportation problem. Appl. Soft Comput. 2015, 34, 813–826.

26. Amaliah, B.; Fatichah, C.; Suryani, E. A Supply Selection Method for better Feasible Solution of balanced transportation problem. Expert Syst. Appl. 2022, 203, 117399.

27. Sun, D.; Chang, Y.; Zhang, L. An ant colony optimisation model for traffic counting location problem. Transport 2012, 165, 175–185.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).