An Adversarial DBN-LSTM Method for Detecting and Defending against DDoS Attacks in SDN Environments

Abstract

1. Introduction

- (1)

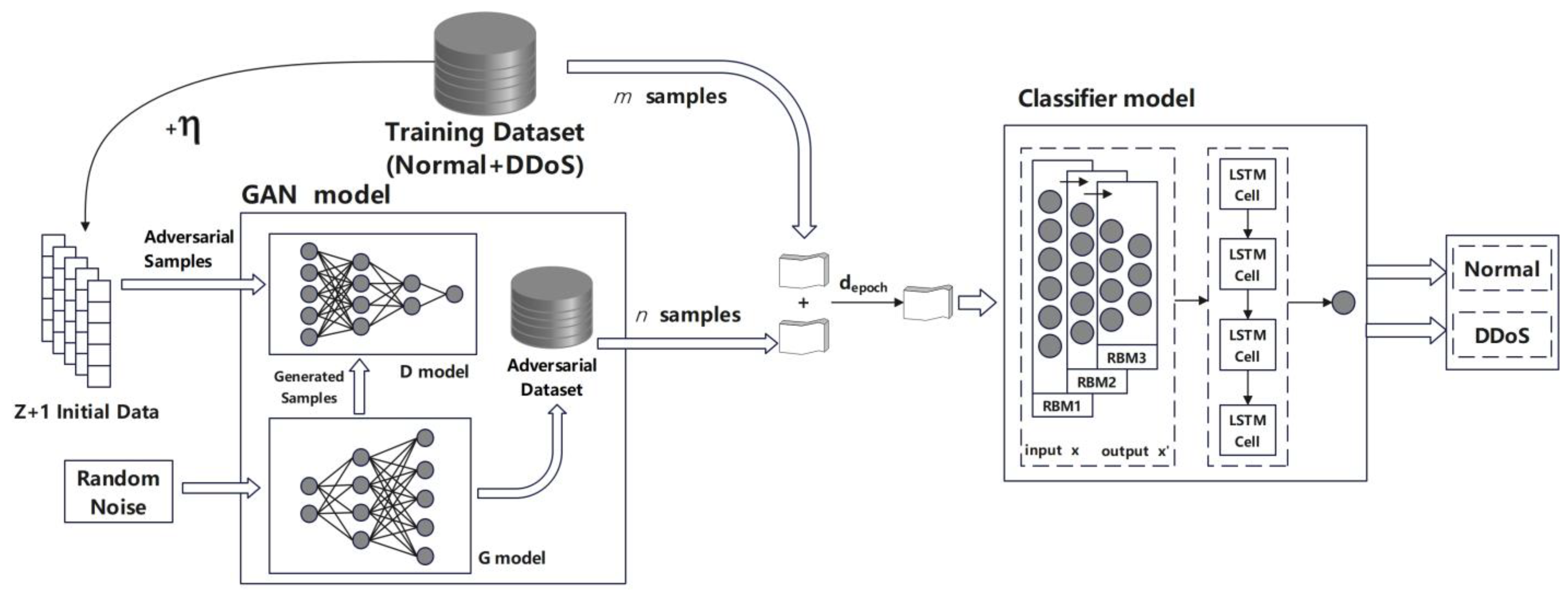

- We utilize the GAN model to generate many examples and construct an adversarial dataset. The perturbed samples from the adversarial dataset are added to the training data, which already contains normal and unperturbed DDoS samples, creating augmented data samples for adversarial training.

- (2)

- We construct a DBN-LSTM model to represent the characteristics of DDoS attacks in SDN. This model extracts the time series information, completes the classification of dimension-reduction data, and significantly reduces the computational cost of large-scale data.
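The augmentation step in contribution (1) amounts to merging three labeled pools into one training set. The following is a minimal sketch, not the authors' code. It assumes each sample is a feature vector, uses the hypothetical label constants `NORMAL = 0` and `DDOS = 1`, and assumes the GAN-perturbed samples are adversarial DDoS samples that keep the DDoS label, so the detector learns to flag them. The function name `augment` is likewise hypothetical.

```python
import random

# Hypothetical label constants (not defined in the paper)
NORMAL, DDOS = 0, 1


def augment(normal, ddos, adversarial, shuffle=True, seed=0):
    """Merge clean and GAN-perturbed samples into one labeled training set."""
    data = [(x, NORMAL) for x in normal]   # unperturbed benign traffic
    data += [(x, DDOS) for x in ddos]      # unperturbed DDoS traffic
    # Assumption: perturbed samples keep the DDoS label of the attack they mimic
    data += [(x, DDOS) for x in adversarial]
    if shuffle:
        # Seeded shuffle: reproducible order, no long runs of one pool
        random.Random(seed).shuffle(data)
    return data
```

Shuffling with a seeded `random.Random` keeps the merged order reproducible while preventing the clean and adversarial samples from appearing as contiguous blocks during training.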

2. Related Work

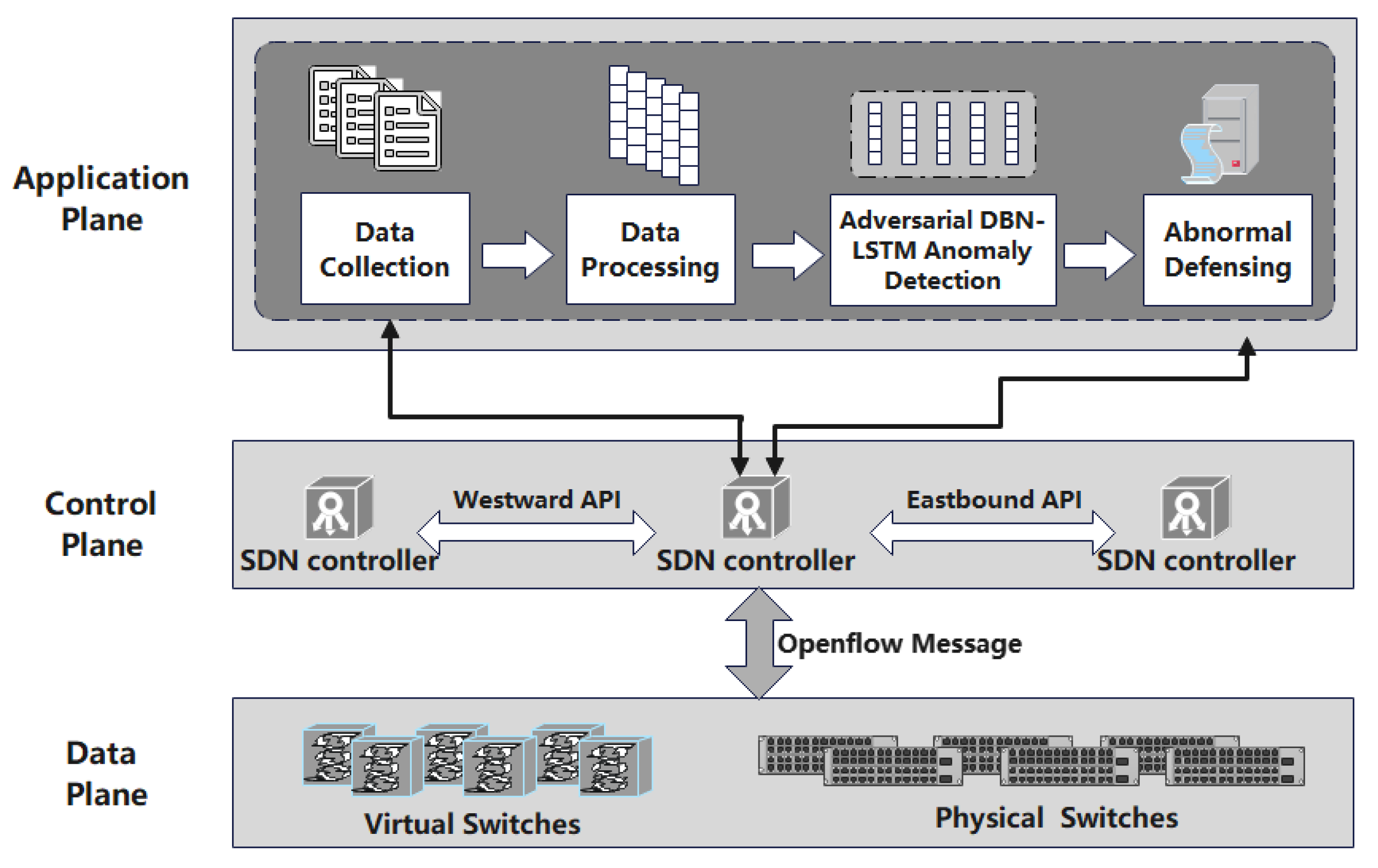

3. Proposed System

- Data Collection: the SDN controller collects data from the physical and virtual switches in the data layer.

- Data Processing: non-numerical features collected from the switches are converted into numerical features.

- Adversarial Deep Learning Anomaly Detection: this module detects and labels DDoS attacks using the trained deep learning model.

- Abnormal Defending: this module acts on detected DDoS attacks, forwarding or dropping the offending packets, to limit the harm they cause.
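The Data Processing module only states that non-numerical switch features become numerical. One simple way to do that is integer (label) encoding, sketched below under assumptions. The field names `protocol`, `packet_count` and `byte_count` and the function `encode_records` are hypothetical, and the paper does not say which encoding it uses. One-hot encoding is a common alternative when the integer codes should not imply an order.

```python
def encode_records(records, categorical_keys):
    """Label-encode categorical fields; cast every other field to float.

    Hypothetical helper: `records` is a list of dicts, one per flow entry.
    Returns the encoded rows and the value-to-code vocabulary per field.
    """
    vocab = {key: {} for key in categorical_keys}
    encoded = []
    for record in records:
        row = {}
        for key, value in record.items():
            if key in vocab:
                codes = vocab[key]
                # First occurrence of a value gets the next free integer code
                row[key] = codes.setdefault(value, len(codes))
            else:
                # Numeric fields (possibly read as strings) become floats
                row[key] = float(value)
        encoded.append(row)
    return encoded, vocab
```

At detection time the vocabulary built from the training data should be reused, so that the same protocol string always maps to the same code.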

3.1. Data Collection

3.2. Data Processing

3.3. Adversarial DBN-LSTM Anomaly Detection

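| Algorithm 1 The GAN model training steps |
|---|
| Require: the G model; the D model; the training dataset |
| 1: Initialize the parameters of G and D |
| 2: while fewer than t training iterations have run and the stop condition is not met do |
| 3: for each discriminator update step do |
| 4: Sample a minibatch of noise samples from the noise prior |
| 5: Sample a minibatch of real samples from the training dataset |
| 6: Generate fake samples from the noise with G |
| 7: Calculate the discriminator loss and update D |
| 8: end for |
| 9: Sample a minibatch of noise samples |
| 10: Calculate the generator loss and update G |
| 11: end while |
| 12: return G and D |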
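Algorithm 1 alternates discriminator and generator updates. The toy sketch below mirrors that loop under loudly stated assumptions, none of which come from the paper: the data are one-dimensional, the generator is the hypothetical linear map G(z) = a*z + b, the discriminator is a hypothetical logistic regression D(x) = sigmoid(w*x + c), the number of discriminator steps per iteration is a hypothetical parameter `k`, and the generator uses the common non-saturating loss -log D(G(z)). These choices only keep the gradients simple enough to derive by hand.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_sampler, iterations=500, k=1, m=64, lr=0.01, seed=0):
    """Toy version of the alternating loop in Algorithm 1 (hypothetical models).

    G(z) = a*z + b maps noise z ~ N(0, 1) to a fake sample.
    D(x) = sigmoid(w*x + c) is the estimated probability that x is real.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters (step 1: initialize)
    w, c = 0.1, 0.0  # discriminator parameters
    history = []
    for _ in range(iterations):  # step 2: outer training loop
        for _ in range(k):  # steps 3-8: k discriminator updates
            z = [rng.gauss(0.0, 1.0) for _ in range(m)]  # noise minibatch
            x = [real_sampler(rng) for _ in range(m)]  # real minibatch
            g = [a * zi + b for zi in z]  # fake samples from G
            # D minimizes -log D(x) - log(1 - D(G(z))); derivatives w.r.t. the logit:
            dr = [sigmoid(w * xi + c) - 1.0 for xi in x]
            df = [sigmoid(w * gi + c) for gi in g]
            grad_w = (sum(d * xi for d, xi in zip(dr, x))
                      + sum(d * gi for d, gi in zip(df, g))) / m
            grad_c = (sum(dr) + sum(df)) / m
            w -= lr * grad_w
            c -= lr * grad_c
        # Steps 9-10: fresh noise, then update G with the loss -log D(G(z))
        z = [rng.gauss(0.0, 1.0) for _ in range(m)]
        dg = [(sigmoid(w * (a * zi + b) + c) - 1.0) * w for zi in z]  # dLoss/dG(z)
        a -= lr * sum(d * zi for d, zi in zip(dg, z)) / m
        b -= lr * sum(dg) / m
        history.append((a, b, w, c))
    return (a, b), (w, c), history  # return G and D parameters
```

For example, `train_toy_gan(lambda r: r.gauss(3.0, 1.0))` trains against real samples drawn from N(3, 1). Because this discriminator is linear, it can separate the two distributions only by their means, so it mainly pushes the generator offset `b` toward the real mean, and plain alternating gradient steps may oscillate around that point rather than settle. A practical implementation would use neural networks for both G and D.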

3.4. Abnormal Defending

| Algorithm 2 Abnormal Defending Process |
|---|
| Require: suspect flows |
| 1: Identify the suspect flows based on the IP addresses and ports that make the analysis interval anomalous |
| 2: Identify the destination IP address that receives the most flows |
| 3: Identify, among those flows, the attackers' IP addresses, which share the same destination port |
| 4: If the IPs and ports are on the Safe List then |
| 5: Forward packets |
| 6: Else |
| 7: Drop packets |
| 8: End if |
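Steps 2 to 8 of Algorithm 2 can be expressed as a small function. This sketch is hypothetical: it assumes step 1 (finding the suspect flows) has already been done by the detection module, represents each flow as a `Flow(src_ip, src_port, dst_ip, dst_port)` tuple, and interprets the Safe List as a set of (source IP, destination port) pairs. The names `Flow` and `defend` are illustrative. Ties between equally common destinations or ports are broken by first occurrence, which is how `collections.Counter.most_common` orders equal counts.

```python
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def defend(suspect_flows, safe_list):
    """Map each suspected (src_ip, dst_port) pair to "forward" or "drop"."""
    if not suspect_flows:
        return {}
    # Step 2: the destination IP that receives the most suspect flows (likely victim)
    victim_ip, _ = Counter(f.dst_ip for f in suspect_flows).most_common(1)[0]
    to_victim = [f for f in suspect_flows if f.dst_ip == victim_ip]
    # Step 3: sources that hit the victim on the same (most frequent) destination port
    target_port, _ = Counter(f.dst_port for f in to_victim).most_common(1)[0]
    attackers = {f.src_ip for f in to_victim if f.dst_port == target_port}
    # Steps 4-8: forward pairs on the Safe List, drop everything else
    return {
        (ip, target_port): "forward" if (ip, target_port) in safe_list else "drop"
        for ip in sorted(attackers)
    }
```

In an SDN deployment, each "drop" decision would typically be turned into a flow rule installed by the controller. That step is outside this sketch.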

4. Experimental Results and Discussion

4.1. Evaluation Indicators

4.2. Experimental Setup

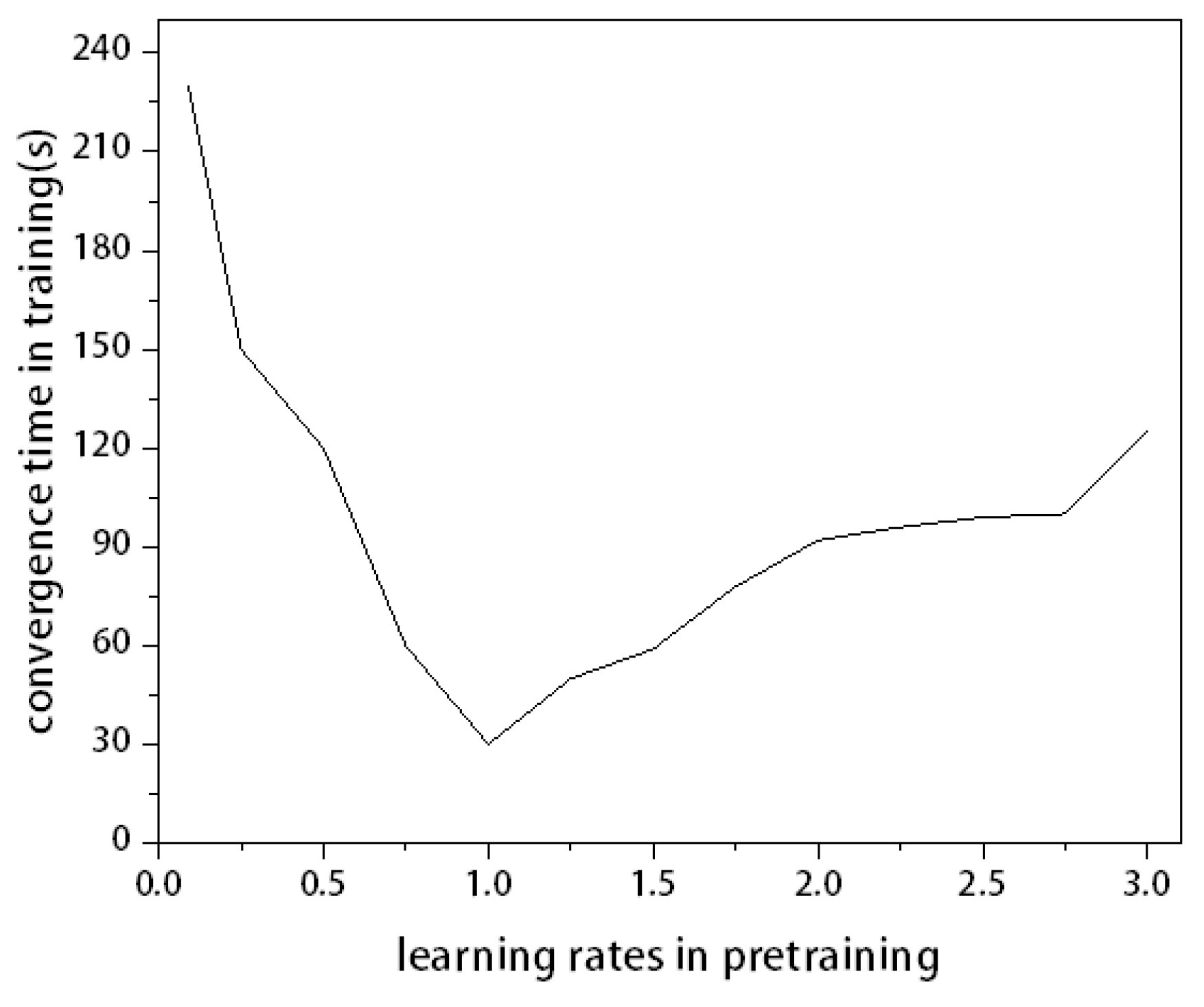

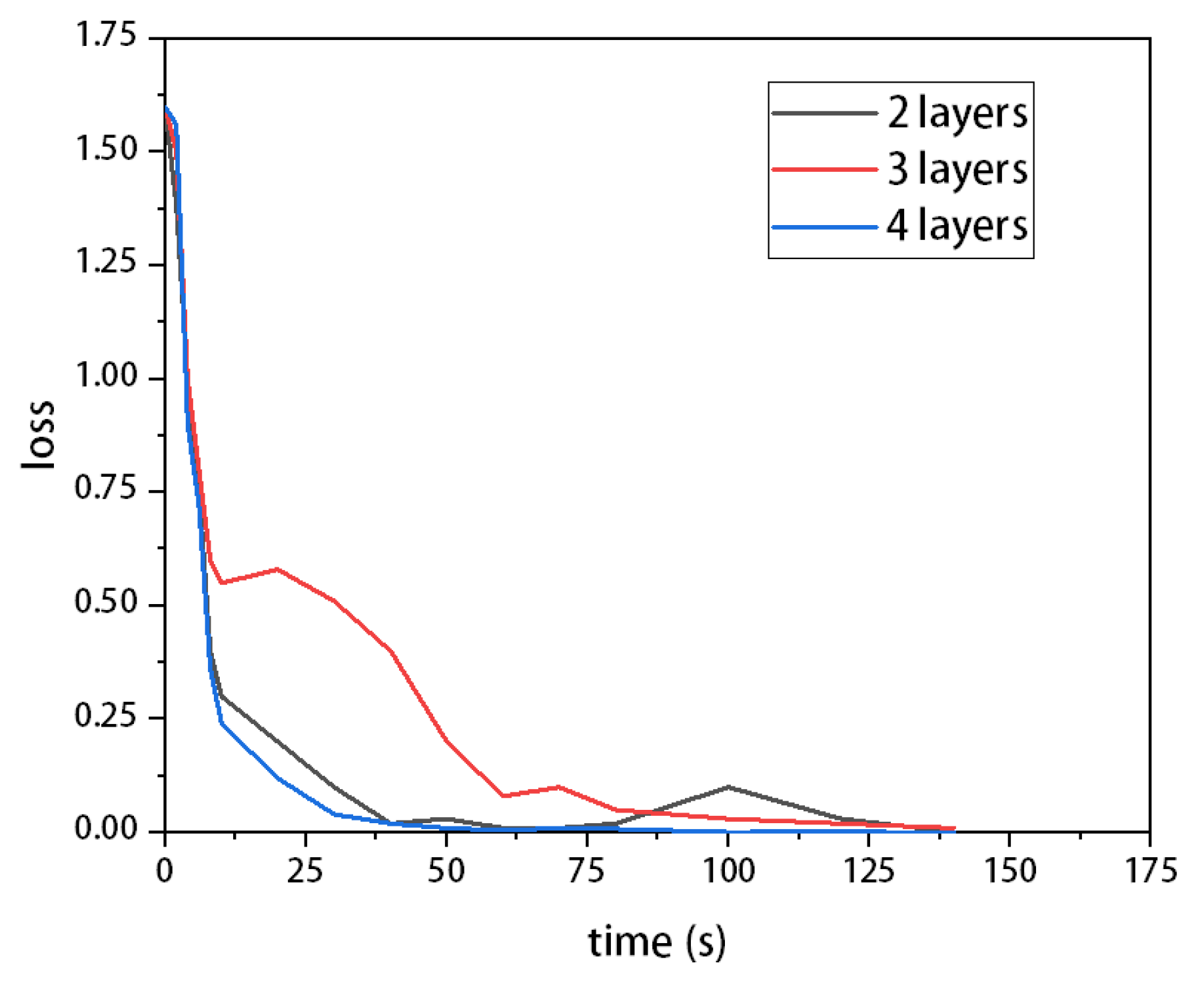

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Accuracy | False |
|---|---|---|
| Our method | 91.23% | 8.77% |
| DBN + LSTM | 7.62% | 92.38% |
| LSTM | 6.34% | 93.77% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, L.; Wang, Z.; Huo, R.; Huang, T. An Adversarial DBN-LSTM Method for Detecting and Defending against DDoS Attacks in SDN Environments. Algorithms 2023, 16, 197. https://doi.org/10.3390/a16040197
