Deep Learning Based on EfficientNet for Multiorgan Segmentation of Thoracic Structures on a 0.35 T MR-Linac Radiation Therapy System

Abstract

1. Introduction

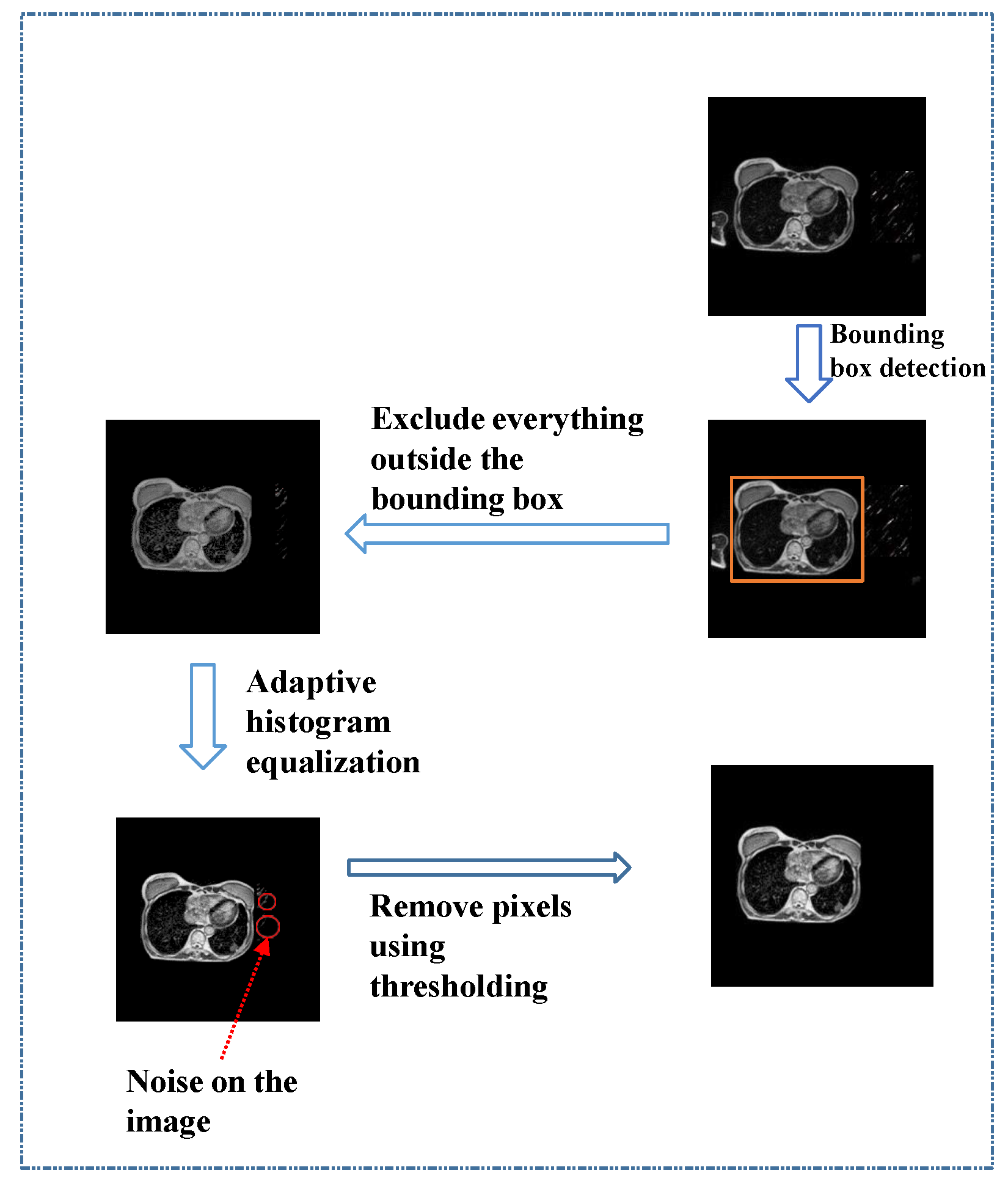

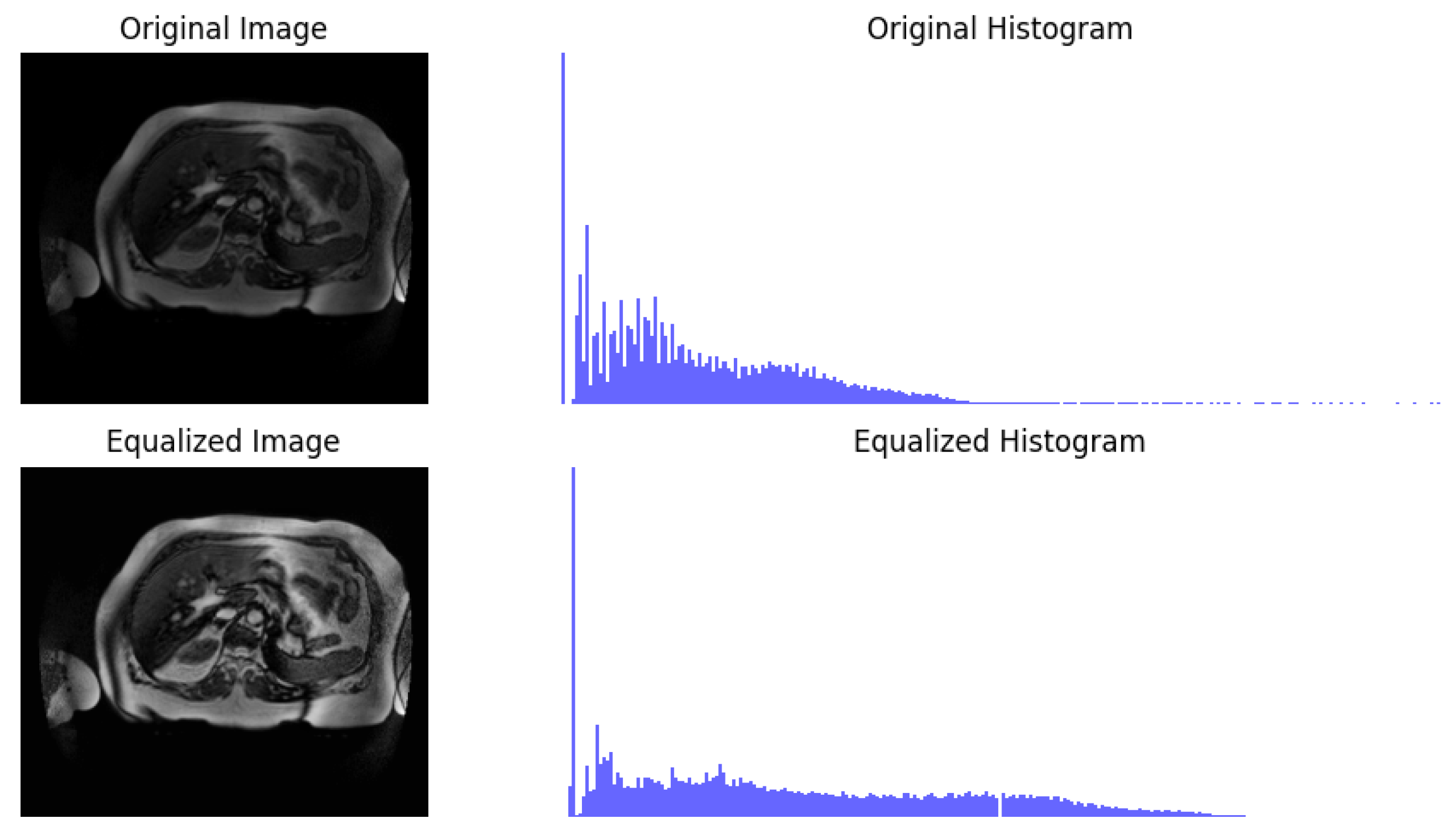

- A preprocessing pipeline is presented for MRI datasets: YOLO first detects the region of interest, unwanted regions outside it are removed, and adaptive histogram equalization and thresholding are then applied to enhance dataset quality and completeness.

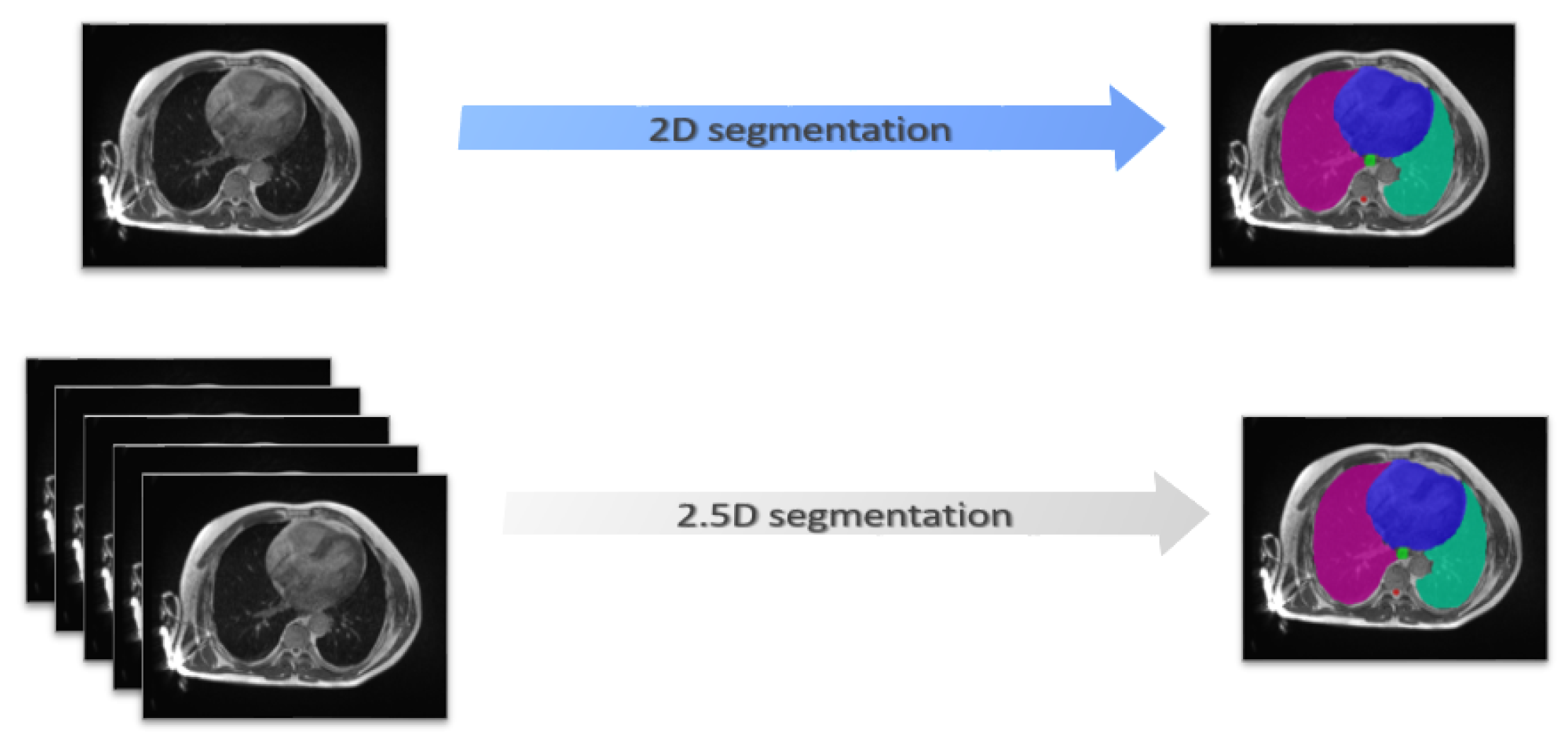

- A novel approach is proposed for multiorgan segmentation of thoracic MR images acquired on a 0.35 T MR-Linac radiation therapy system, using EfficientNet as the encoder of a UNet combined with a 2.5D strategy.

- The efficiency of the proposed model is demonstrated through extensive experimentation on an internal dataset of 81 patients.

2. Materials and Methods

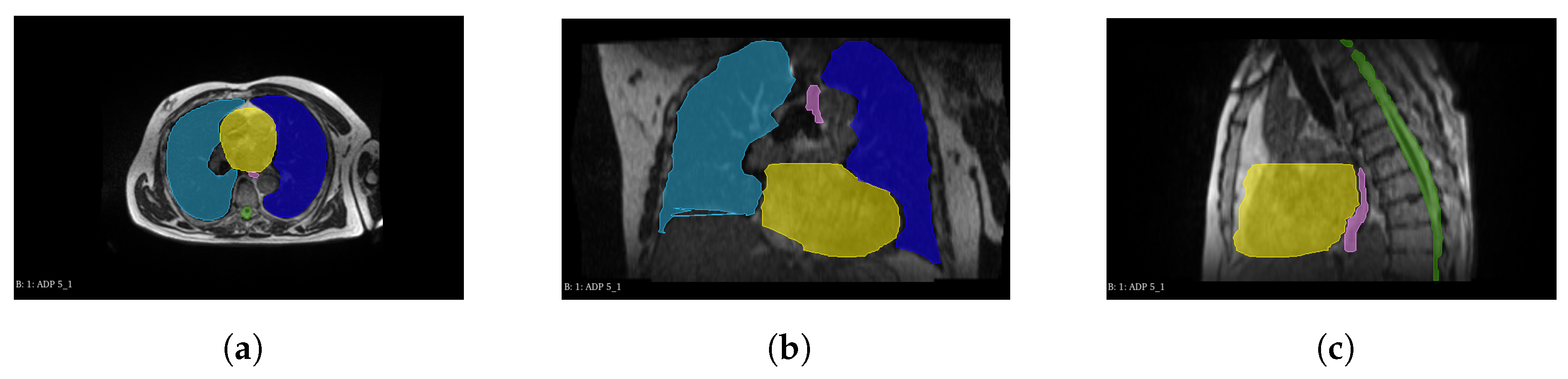

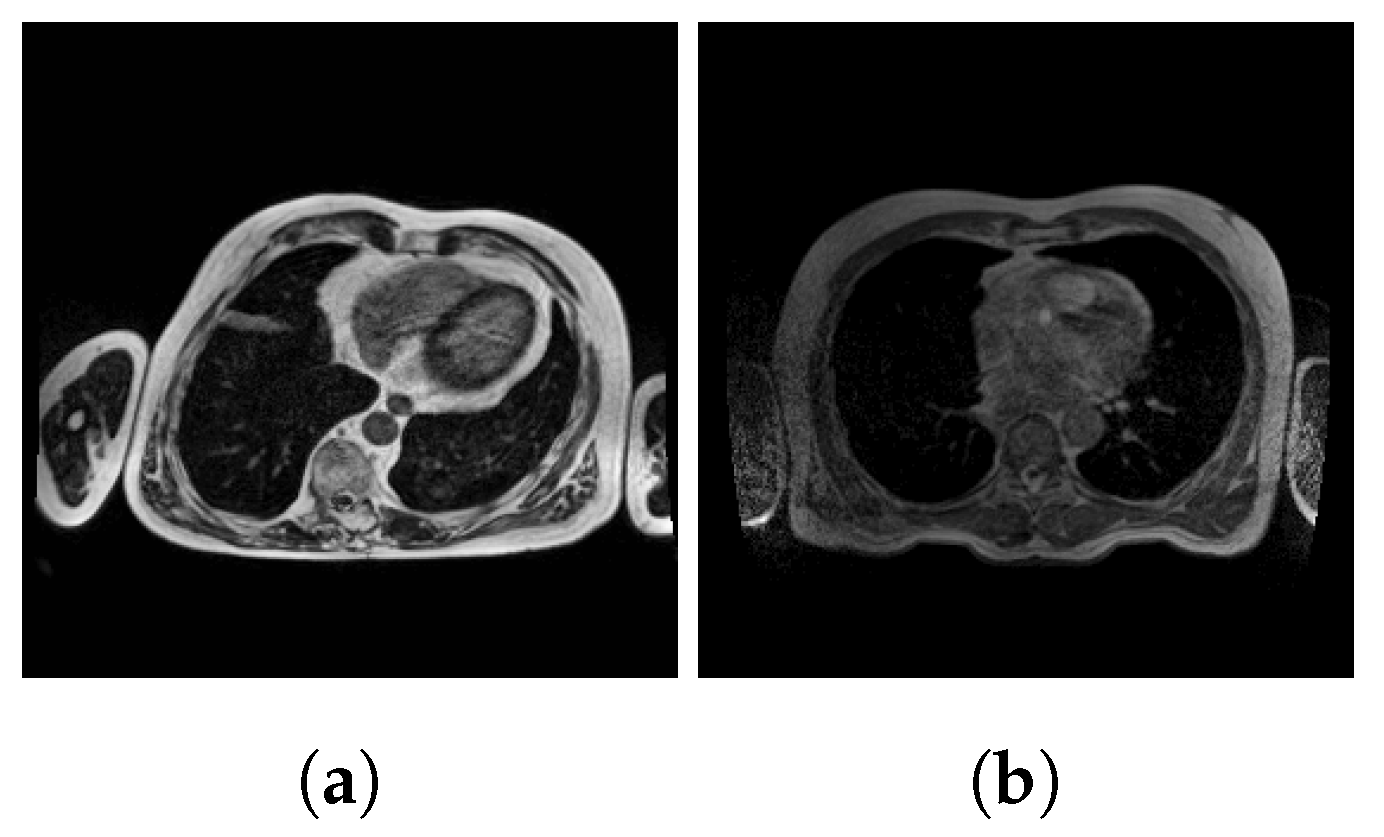

2.1. Dataset

2.2. Preprocessing
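The preprocessing chain listed among the contributions (ROI detection, removal of unwanted regions, adaptive histogram equalization, thresholding) can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: the ROI box is assumed to be supplied by a detector such as YOLO (not implemented here), "removal" is modelled as cropping to that box, the adaptive step is a simplified per-tile equalization rather than full CLAHE, and the threshold level of 0.1 is a hypothetical value. All function names are illustrative.

```python
import numpy as np


def crop_to_roi(image, box):
    """Remove everything outside a detected region of interest.

    `box` = (row0, col0, row1, col1) is assumed to come from an ROI detector
    such as YOLO; the detector itself is not implemented here.
    """
    r0, c0, r1, c1 = box
    return image[r0:r1, c0:c1]


def equalize_block(block, n_bins=256):
    """Histogram-equalize one block, mapping intensities into (0, 1]."""
    hist, edges = np.histogram(block, bins=n_bins)
    cdf = np.cumsum(hist).astype(float)
    cdf /= cdf[-1]
    # Each intensity is mapped through the cumulative histogram of its own block.
    return np.interp(block.ravel(), edges[:-1], cdf).reshape(block.shape)


def tiled_equalization(image, tiles=(4, 4)):
    """Simplified adaptive equalization: equalize each tile independently.

    Unlike CLAHE, this omits contrast clipping and the bilinear blending
    between neighbouring tiles, so tile borders can remain visible.
    """
    out = np.empty(image.shape, dtype=float)
    for rows in np.array_split(np.arange(image.shape[0]), tiles[0]):
        for cols in np.array_split(np.arange(image.shape[1]), tiles[1]):
            if rows.size == 0 or cols.size == 0:
                continue  # more tiles than pixels along this axis
            out[np.ix_(rows, cols)] = equalize_block(image[np.ix_(rows, cols)])
    return out


def threshold(image, level=0.1):
    """Zero out low-intensity (background) pixels below `level`."""
    return np.where(image >= level, image, 0.0)


def preprocess(image, box, tiles=(4, 4), level=0.1):
    """Hypothetical end-to-end chain: crop to ROI, equalize per tile, threshold."""
    roi = crop_to_roi(np.asarray(image, dtype=float), box)
    return threshold(tiled_equalization(roi, tiles), level)
```

In practice a library CLAHE implementation would normally replace `tiled_equalization`; the per-tile version is kept here only because it shows the "adaptive" idea in a few lines.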

2.3. Efficient-UNet Model for Multiorgan Segmentation

2.3.1. Encoder Blocks
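As background on the encoder family only: the cited EfficientNet paper (Tan and Le) scales a baseline network with a single compound coefficient φ, multiplying depth by α^φ, width by β^φ and input resolution by γ^φ, with α = 1.2, β = 1.1, γ = 1.15 chosen so that α·β²·γ² ≈ 2, so FLOPs grow roughly as 2^φ. The sketch below merely evaluates these multipliers; which EfficientNet variant the article uses is not stated in this section, and the published B1–B7 configurations do not follow these multipliers exactly, so the output is illustrative.

```python
# Compound-scaling constants reported in the EfficientNet paper (Tan & Le, 2019).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scaling(phi):
    """Return (depth, width, resolution, FLOPs) multipliers for coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # FLOPs scale with depth, width squared and resolution squared.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{f:.2f}")
```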

2.3.2. Decoder

2.4. Training Strategies
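One common way to realise a 2.5D strategy is to give the 2D network a slice together with its neighbouring slices as extra input channels, so the model sees some through-plane context at close to 2D cost. The sketch below assumes a volume stored as (slices, H, W), one neighbour on each side, and edge replication at the first and last slice; the number of neighbours and the edge handling used in the article are not given here, and `make_25d_input` is a hypothetical helper.

```python
import numpy as np


def make_25d_input(volume, index, context=1):
    """Stack slice `index` with `context` neighbours on each side as channels.

    volume: array of shape (n_slices, H, W).
    Returns an array of shape (2 * context + 1, H, W).
    Out-of-range neighbours are replaced by the nearest valid slice (an assumption).
    """
    n_slices = volume.shape[0]
    neighbours = np.arange(index - context, index + context + 1)
    return volume[np.clip(neighbours, 0, n_slices - 1)]
```

With `context=1` the network input has three channels, which also matches the three-channel input that ImageNet-pretrained encoders expect; whether the authors relied on this is not stated here.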

2.5. Evaluation Metrics
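The overlap and distance metrics reported in the result tables (DSC, IoU and Hausdorff distance) can be written compactly for binary masks represented as sets of pixel coordinates. This is a readable sketch, not the evaluation code used in the article: the Hausdorff distance is computed by brute force over all foreground pixels (some toolkits use boundary points only, and the article's convention is not stated here), and treating two empty masks as a perfect match is an assumed convention.

```python
import math


def to_points(mask):
    """Convert a 2D binary mask (nested lists of 0/1) to a set of (row, col) points."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def dice(a, b):
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    if not a and not b:
        return 1.0  # both empty: treated as perfect agreement (a convention)
    return 2 * len(a & b) / (len(a) + len(b))


def iou(a, b):
    """Intersection over union (Jaccard index): |A ∩ B| / |A ∪ B|."""
    union = a | b
    if not union:
        return 1.0
    return len(a & b) / len(union)


def hausdorff(a, b, spacing=(1.0, 1.0)):
    """Symmetric Hausdorff distance; in mm when `spacing` is the pixel size in mm."""
    if not a or not b:
        return math.inf
    pa = [(r * spacing[0], c * spacing[1]) for r, c in a]
    pb = [(r * spacing[0], c * spacing[1]) for r, c in b]

    def directed(src, dst):
        # For every point of src, distance to its nearest point of dst; keep the worst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(pa, pb), directed(pb, pa))
```

For a single case the two overlap scores are linked by DSC = 2·IoU / (1 + IoU), so they rank predictions identically; averages over many cases need not satisfy this identity exactly.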

2.6. Implementation

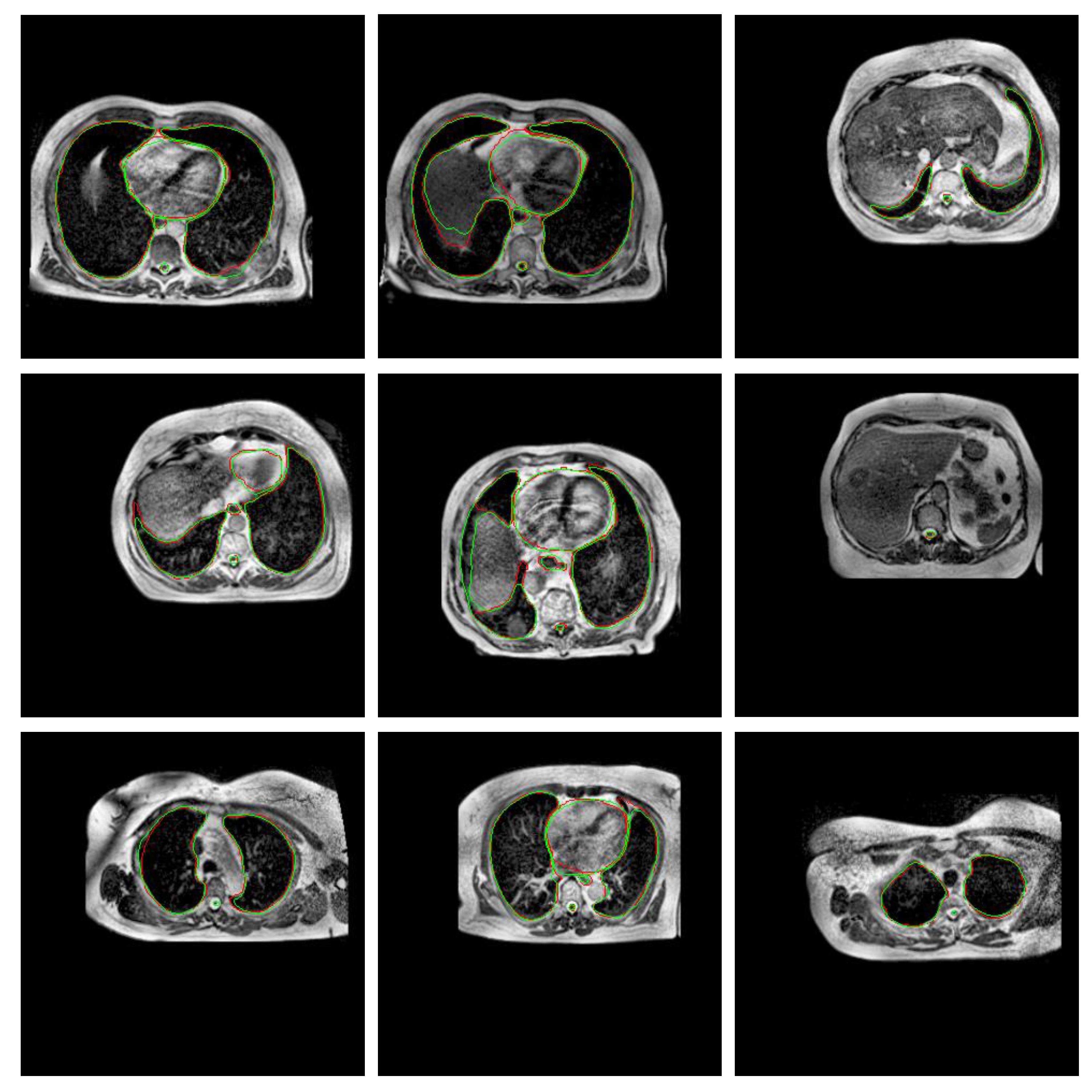

3. Experiments and Results

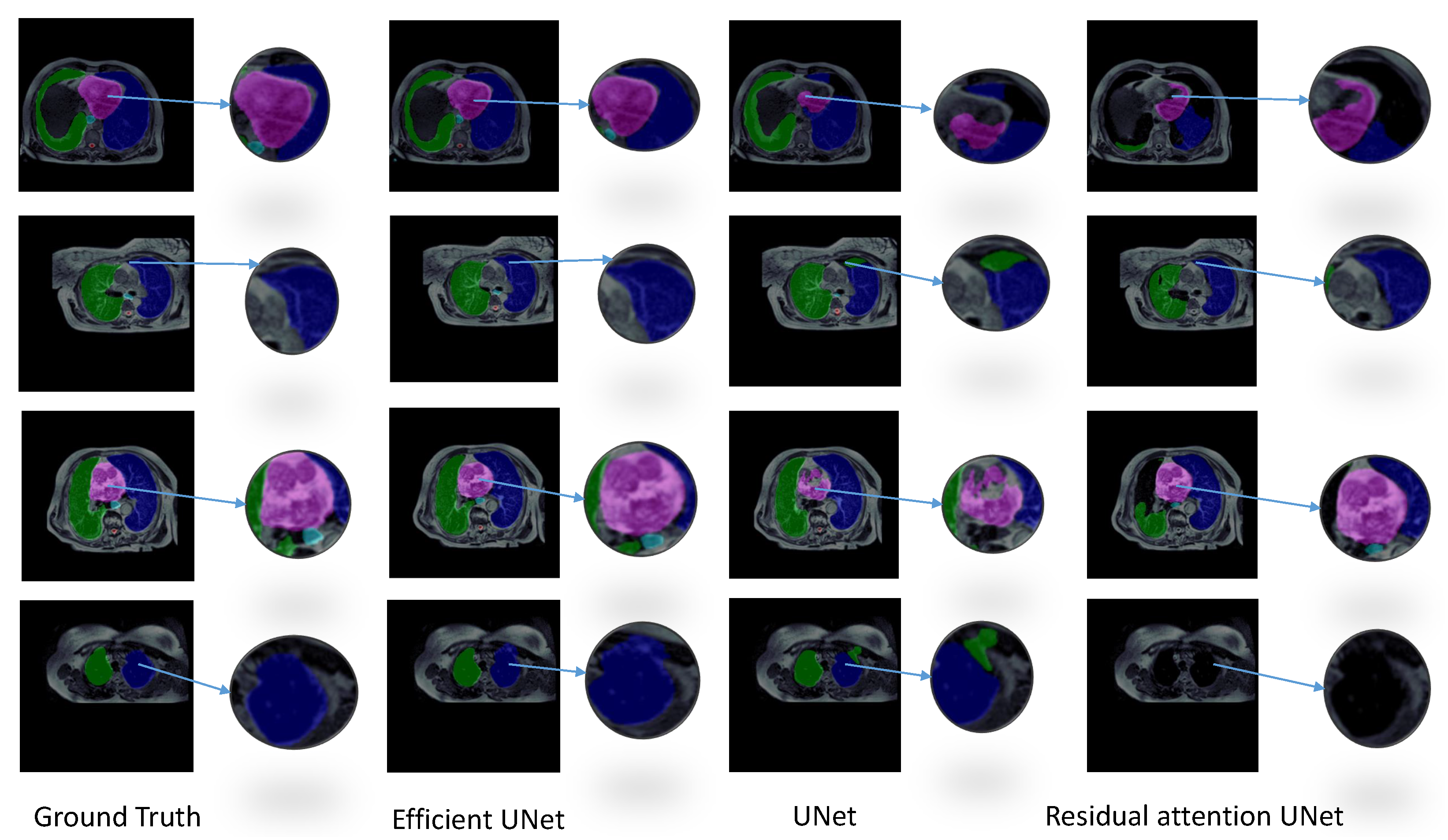

3.1. Experiment 1: Model Selection

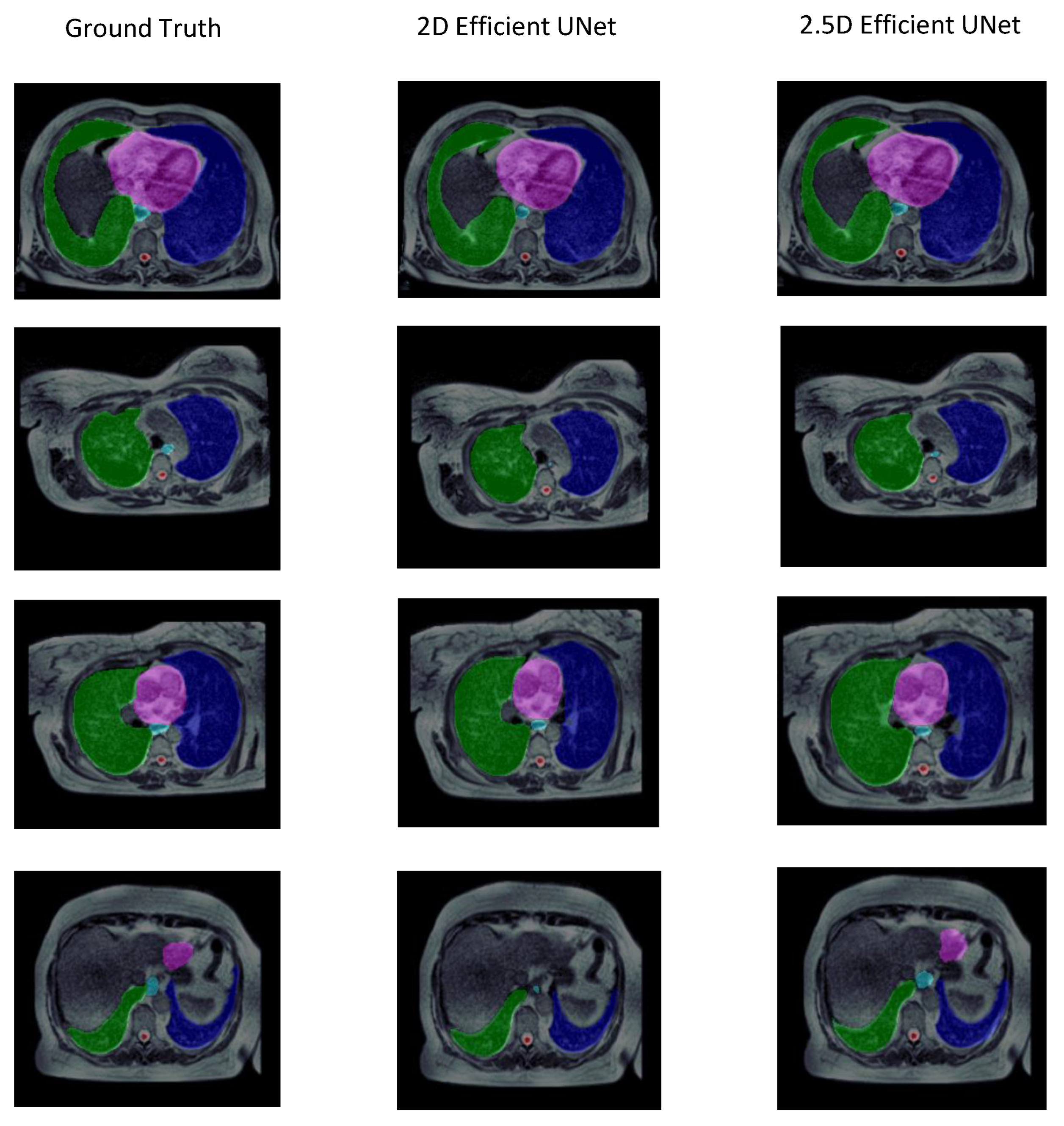

3.2. Experiment 2: Training Strategy Selection

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Winkel, D.; Bol, G.H.; Kroon, P.S.; van Asselen, B.; Hackett, S.S.; Werensteijn-Honingh, A.M.; Intven, M.P.; Eppinga, W.S.; Tijssen, R.H.; Kerkmeijer, L.G.; et al. Adaptive radiotherapy: The Elekta Unity MR-linac concept. Clin. Transl. Radiat. Oncol. 2019, 18, 54–59.

- Klüter, S. Technical design and concept of a 0.35 T MR-Linac. Clin. Transl. Radiat. Oncol. 2019, 18, 98–101.

- Tong, N.; Cao, M.; Sheng, K. Shape constrained fully convolutional DenseNet with adversarial training for multi-organ segmentation on head and neck low field MR images. Int. J. Radiat. Oncol. Biol. Phys. 2019, 105, S93.

- Liu, Y.; Lei, Y.; Fu, Y.; Wang, T.; Zhou, J.; Jiang, X.; McDonald, M.; Beitler, J.J.; Curran, W.J.; Liu, T.; et al. Head and neck multi-organ auto-segmentation on CT images aided by synthetic MRI. Med. Phys. 2020, 47, 4294–4302.

- Chen, Y.; Ruan, D.; Xiao, J.; Wang, L.; Sun, B.; Saouaf, R.; Yang, W.; Li, D.; Fan, Z. Fully automated multiorgan segmentation in abdominal magnetic resonance imaging with deep neural networks. Med. Phys. 2020, 47, 4971–4982.

- Sharp, G.; Fritscher, K.D.; Pekar, V.; Peroni, M.; Shusharina, N.; Veeraraghavan, H.; Yang, J. Vision 20/20: Perspectives on automated image segmentation for radiotherapy. Med. Phys. 2014, 41, 050902.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Trullo, R.; Petitjean, C.; Ruan, S.; Dubray, B.; Nie, D.; Shen, D. Segmentation of organs at risk in thoracic CT images using a SharpMask architecture and conditional random fields. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, VIC, Australia, 18–21 April 2017; pp. 1003–1006.

- Im, J.H.; Lee, I.J.; Choi, Y.; Sung, J.; Ha, J.S.; Lee, H. Impact of Denoising on Deep-Learning-Based Automatic Segmentation Framework for Breast Cancer Radiotherapy Planning. Cancers 2022, 14, 3581.

- Khalil, M.I.; Tehsin, S.; Humayun, M.; Jhanjhi, N.; AlZain, M.A. Multi-Scale Network for Thoracic Organs Segmentation. Comput. Mater. Contin. 2022, 70, 3251–3265.

- Mahmood, H.; Islam, S.M.S.; Hill, J.; Tay, G. Rapid segmentation of thoracic organs using U-Net architecture. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 29 November–1 December 2021; pp. 1–6.

- Simpson, A.L.; Antonelli, M.; Bakas, S.; Bilello, M.; Farahani, K.; Van Ginneken, B.; Kopp-Schneider, A.; Landman, B.A.; Litjens, G.; Menze, B.; et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. arXiv 2019, arXiv:1902.09063.

- Lambert, Z.; Petitjean, C.; Dubray, B.; Kuan, S. SegTHOR: Segmentation of thoracic organs at risk in CT images. In Proceedings of the 2020 Tenth International Conference on Image Processing Theory, Tools and Applications (IPTA), Paris, France, 9–12 November 2020; pp. 1–6.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Ni, Z.L.; Bian, G.B.; Zhou, X.H.; Hou, Z.G.; Xie, X.L.; Wang, C.; Zhou, Y.J.; Li, R.Q.; Li, Z. RAUNet: Residual attention U-Net for semantic segmentation of cataract surgical instruments. In Proceedings of the International Conference on Neural Information Processing, Sydney, NSW, Australia, 12–15 December 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 139–149.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Baheti, B.; Innani, S.; Gajre, S.; Talbar, S. Eff-UNet: A novel architecture for semantic segmentation in unstructured environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 358–359.

- Trullo, R.; Petitjean, C.; Nie, D.; Shen, D.; Ruan, S. Joint segmentation of multiple thoracic organs in CT images with two collaborative deep architectures. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the Third International Workshop, DLMIA 2017, and 7th International Workshop, ML-CDS 2017, Held in Conjunction with MICCAI 2017, Québec City, QC, Canada, 14 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 21–29.

- Han, L.; Chen, Y.; Li, J.; Zhong, B.; Lei, Y.; Sun, M. Liver segmentation with 2.5D perpendicular UNets. Comput. Electr. Eng. 2021, 91, 107118.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Costa, L.d.F. Further generalizations of the Jaccard index. arXiv 2021, arXiv:2110.09619.

- Huttenlocher, D.P.; Klanderman, G.A.; Rucklidge, W.J. Comparing images using the Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 850–863.

- Altman, D.G.; Bland, J.M. Measurement in medicine: The analysis of method comparison studies. J. R. Stat. Soc. Ser. D Stat. 1983, 32, 307–317.

- Mazonakis, M.; Damilakis, J.; Varveris, H.; Prassopoulos, P.; Gourtsoyiannis, N. Image segmentation in treatment planning for prostate cancer using the region growing technique. Br. J. Radiol. 2001, 74, 243–249.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Zhang, F.; Wang, Q.; Yang, A.; Lu, N.; Jiang, H.; Chen, D.; Yu, Y.; Wang, Y. Geometric and dosimetric evaluation of the automatic delineation of organs at risk (OARs) in non-small-cell lung cancer radiotherapy based on a modified DenseNet deep learning network. Front. Oncol. 2022, 12, 861857.

- Gali, M.S.K.; Garg, N.; Vasamsetti, S. Dilated U-Net based Segmentation of Organs at Risk in Thoracic CT Images. In Proceedings of the SegTHOR@ISBI, Venice, Italy, 8–11 April 2019.

- Larsson, M.; Zhang, Y.; Kahl, F. Robust abdominal organ segmentation using regional convolutional neural networks. Appl. Soft Comput. 2018, 70, 465–471.

- Cao, Z.; Yu, B.; Lei, B.; Ying, H.; Zhang, X.; Chen, D.Z.; Wu, J. Cascaded SE-ResUnet for segmentation of thoracic organs at risk. Neurocomputing 2021, 453, 357–368.

- Güngör, G.; Serbez, İ.; Temur, B.; Gür, G.; Kayalılar, N.; Mustafayev, T.Z.; Korkmaz, L.; Aydın, G.; Yapıcı, B.; Atalar, B.; et al. Time analysis of online adaptive magnetic resonance–guided radiation therapy workflow according to anatomical sites. Pract. Radiat. Oncol. 2021, 11, e11–e21.

| Model | DSC | IoU | HD (mm) | # Parameters | Training Time | Inference Time |
|---|---|---|---|---|---|---|
| Efficient-UNet | 0.804 ± 0.058 | 0.711 ± 0.062 | 25.663 ± 18.724 | 20,225,550 | 4 h | 3.71 s |
| UNet | 0.761 ± 0.078 | 0.657 ± 0.086 | 35.915 ± 13.632 | 30,106,806 | 3.9 h | 7.07 s |
| ResAtt UNet | 0.677 ± 0.105 | 0.561 ± 0.115 | 21.536 ± 8.803 | 34,877,746 | 13.9 h | 5.37 s |
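Read as relative savings, the table shows Efficient-UNet using roughly a third fewer parameters than UNet and about 42% fewer than ResAtt UNet, with an inference time about half that of UNet, while its training time (4 h) is close to UNet's (3.9 h). The snippet below only performs this arithmetic on the reported values; the table does not state whether inference time is per patient or per volume, and `relative_reduction` is a hypothetical helper.

```python
# Parameter counts and inference times (s) copied from the model-comparison table.
MODELS = {
    "Efficient-UNet": (20_225_550, 3.71),
    "UNet": (30_106_806, 7.07),
    "ResAtt UNet": (34_877_746, 5.37),
}


def relative_reduction(value, baseline):
    """Fraction by which `value` is smaller than `baseline`."""
    return 1 - value / baseline


params, seconds = MODELS["Efficient-UNet"]
for name in ("UNet", "ResAtt UNet"):
    base_params, base_seconds = MODELS[name]
    print(
        f"vs {name}: {relative_reduction(params, base_params):.1%} fewer parameters, "
        f"{relative_reduction(seconds, base_seconds):.1%} shorter inference"
    )
```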

| Strategy | DSC | IoU | HD (mm) | Volume (Bland–Altman) | Volume (Correlation) |
|---|---|---|---|---|---|
| 2.5D | 0.820 ± 0.041 | 0.725 ± 0.052 | 10.353 ± 4.974 | 153.943 ± 50.149 | 0.734 ± 0.090 |
| 2D | 0.804 ± 0.058 | 0.711 ± 0.062 | 25.663 ± 18.724 | 151.915 ± 55.747 | 0.726 ± 0.073 |
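The volume columns compare predicted and manually contoured organ volumes. The table does not say which Bland–Altman quantity it reports, so the sketch below only shows the standard computation from Altman and Bland: the bias is the mean of the paired differences and the 95% limits of agreement are bias ± 1.96 SD, with the Pearson correlation computed alongside. The inputs are assumed to be per-patient volumes for one organ, and the function names are illustrative.

```python
import math
import statistics


def bland_altman(pred, ref):
    """Bias and 95% limits of agreement between paired measurements."""
    if len(pred) != len(ref) or len(pred) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [p - r for p, r in zip(pred, ref)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A high correlation alone does not imply agreement: a prediction that is consistently offset from the reference can still correlate perfectly, which is why the two volume columns are reported side by side.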

| Organ | Strategy | IoU | DSC | HD (mm) | Volume (Bland–Altman) | Volume (Correlation) |
|---|---|---|---|---|---|---|
| Left Lung | 2D | 0.895 ± 0.033 | 0.944 ± 0.018 | 14.492 ± 13.280 | 225.900 ± 101.430 | 0.978 ± 0.009 |
| Left Lung | 2.5D | 0.895 ± 0.031 | 0.944 ± 0.017 | 9.443 ± 1.953 | 216.580 ± 78.003 | 0.976 ± 0.008 |
| Right Lung | 2D | 0.912 ± 0.011 | 0.953 ± 0.006 | 70.157 ± 43.920 | 209.380 ± 47.580 | 0.994 ± 0.001 |
| Right Lung | 2.5D | 0.904 ± 0.017 | 0.949 ± 0.009 | 9.739 ± 2.853 | 236.69 ± 69.311 | 0.992 ± 0.004 |
| Heart | 2D | 0.860 ± 0.018 | 0.924 ± 0.010 | 19.403 ± 19.860 | 235.939 ± 108.410 | 0.983 ± 0.009 |
| Heart | 2.5D | 0.856 ± 0.037 | 0.922 ± 0.021 | 11.270 ± 8.454 | 237.638 ± 84.649 | 0.975 ± 0.018 |
| Esophagus | 2D | 0.356 ± 0.116 | 0.513 ± 0.134 | 10.772 ± 5.280 | 74.510 ± 16.860 | 0.454 ± 0.205 |
| Esophagus | 2.5D | 0.384 ± 0.078 | 0.551 ± 0.080 | 11.710 ± 5.689 | 66.017 ± 13.650 | 0.422 ± 0.254 |
| Spinal Cord | 2D | 0.534 ± 0.133 | 0.685 ± 0.121 | 13.490 ± 11.250 | 13.835 ± 4.430 | 0.223 ± 0.138 |
| Spinal Cord | 2.5D | 0.585 ± 0.096 | 0.733 ± 0.077 | 9.600 ± 5.920 | 12.770 ± 5.130 | 0.304 ± 0.164 |
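The strategy-level IoU, DSC and HD values appear to be unweighted means of the five per-organ values (and the 2D strategy row matches the Efficient-UNet row of the model-comparison table). The check below recomputes those means from the per-organ table; differences in the third decimal are expected because the per-organ entries are themselves rounded. It also makes visible how the esophagus and spinal cord pull the averages down.

```python
from statistics import mean

# Per-organ mean (IoU, DSC, HD in mm), copied from the per-organ table.
PER_ORGAN = {
    "2D": {
        "Left Lung": (0.895, 0.944, 14.492),
        "Right Lung": (0.912, 0.953, 70.157),
        "Heart": (0.860, 0.924, 19.403),
        "Esophagus": (0.356, 0.513, 10.772),
        "Spinal Cord": (0.534, 0.685, 13.490),
    },
    "2.5D": {
        "Left Lung": (0.895, 0.944, 9.443),
        "Right Lung": (0.904, 0.949, 9.739),
        "Heart": (0.856, 0.922, 11.270),
        "Esophagus": (0.384, 0.551, 11.710),
        "Spinal Cord": (0.585, 0.733, 9.600),
    },
}

# Strategy-level (IoU, DSC, HD in mm), copied from the strategy table.
REPORTED = {"2D": (0.711, 0.804, 25.663), "2.5D": (0.725, 0.820, 10.353)}


def organ_average(strategy):
    """Unweighted mean of each metric over the five organs."""
    return tuple(mean(column) for column in zip(*PER_ORGAN[strategy].values()))


for strategy, reported in REPORTED.items():
    averaged = organ_average(strategy)
    print(strategy, [round(v, 4) for v in averaged], "reported:", reported)
```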

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chekroun, M.; Mourchid, Y.; Bessières, I.; Lalande, A. Deep Learning Based on EfficientNet for Multiorgan Segmentation of Thoracic Structures on a 0.35 T MR-Linac Radiation Therapy System. Algorithms 2023, 16, 564. https://doi.org/10.3390/a16120564


Chicago/Turabian style: Chekroun, Mohammed, Youssef Mourchid, Igor Bessières, and Alain Lalande. 2023. "Deep Learning Based on EfficientNet for Multiorgan Segmentation of Thoracic Structures on a 0.35 T MR-Linac Radiation Therapy System" Algorithms 16, no. 12: 564. https://doi.org/10.3390/a16120564
