Abstract

This article proposes an approach to the numerical study of problems that require finding a global optimum. The approach combines global nature-inspired methods for exploration with local descent methods for exploitation. Three hybrid nonconvex minimization algorithms are developed and implemented. Modifications of the flower pollination, teacher-learner, and firefly algorithms serve as the nature-inspired methods for global search. A modified trust region method based on the main diagonal approximation of the Hessian matrix is applied for local refinement. Variants of the approach are compared numerically on a representative collection of multimodal objective functions. The implemented nonconvex optimization methods are then applied to two problems: optimization of the Sutton-Chen potentials of low-energy metal clusters with a very large number of atoms, and parametric identification of a nonlinear dynamic model. The results confirm the performance of the proposed algorithms.

1. Introduction

1.1. Background and Related Work

Global optimization of multimodal objective functions remains an important and difficult mathematical problem. Much attention in the current literature is paid to approximate methods for global optimization [1], which can find a “high quality” solution in a practically acceptable time. Among them, metaheuristic optimization methods are widely used [2]. Unlike classical optimization methods, metaheuristic methods can be used when information about the nature and properties of the function under study is almost completely absent. Heuristic methods, which rely on intuitive approaches, make it possible to find sufficiently good solutions without proving the correctness of the procedures used or the optimality of the result obtained. Metaheuristic methods combine one or more heuristic methods (procedures) under a higher-level search strategy. They are able to leave the vicinity of local extrema and explore the set of feasible solutions fairly thoroughly.

The classification of metaheuristic methods is currently somewhat arbitrary, since the characteristic groups of methods are based on similar ideas. For example, well-known bioinspired algorithms can be divided into the following categories:

- Evolutionary algorithms (evolutionary strategies, genetic search, biogeography-based algorithms, differential evolution, others) [2,3,4,5,6,7,8,9,10,11,12];

- “Wildlife-inspired” algorithms [2,13,14,15,16,17,18,19,20,21];

- Algorithms inspired by human society or inanimate nature [2,13,22,23,24,25,26], and others.

The Flower Pollination (FP) algorithm was proposed by X.S. Yang in 2012 at the University of Cambridge (UK) (see, for example, [9,14]). The Teaching-Learning-based optimization (TL) algorithm was proposed by R. Rao in 2011 (see, e.g., [9,22,23]).

The firefly swarm optimization method borrows its ideas from the behavior of fireflies, which attract partners with their light. The agents (fireflies) forming a swarm use dynamic decision sets to choose neighbors and determine the direction of movement from the strength of the signal that the neighbors emit. X.S. Yang from the University of Cambridge proposed the firefly algorithm (FA) in 2007 (see, for instance, [15,16,17,18]). Known modifications of the method include the Gaussian FA [17], the chaotic FA [18], and others.

Modern methods of molecular physics make it possible to gain a meaningful understanding of the structure of different substances [27]. Searching for atomic-molecular clusters with a low potential is one of the classical problems of computational chemistry (see, for example, [28]). The problem reduces to finding the minimum of potential functions given by special mathematical models, which have already been created for several hundred structures. The Cambridge Energy Landscape Database [29] presents the results of global optimization for various types of models: inert gas clusters, metal clusters, ionic clusters, silicon and germanium clusters, and others. The main difficulty of problems in this class is their non-convexity, which manifests itself in a huge number of local extrema of the potential functions. According to expert estimates, which in some cases are proven, the number of local extrema grows exponentially with the number of atoms or optimized variables. The potential functions most frequently considered in the scientific literature are the models of Lennard-Jones, Morse, Keating, Gupta, Dzugutov, Sutton-Chen, and others. Since the exact value of the global minimum is unknown in most cases, work in this area follows the principle of presenting the “best known” solutions. This means that a presented solution is “probably optimal” until a lower value is found.

Systematic studies of optimization problems for the potentials of atomic-molecular clusters were begun in the 1990s by specialists from Great Britain and the USA [28]. At this stage, for the Sutton-Chen potential, for example, the largest recorded problems involve only 80 atoms, which corresponds to 240 variables of the optimized function, yet the number of local extrema in these problems is already estimated to be astronomically large. Finding solutions to optimization problems for low-potential clusters of ever-increasing dimension remains an urgent problem, because similar formulations arise in chemistry, physics, materials science, nanoelectronics, nanobiology, pharmaceuticals, and other fields [30].

1.2. Question and Contributions

The article proposes an approach that combines the advantages of nature-inspired algorithms for global exploration with those of first-order methods for local optimization. For global search, we apply modifications of the following bioinspired methods: the flower pollination, teacher-learner, and firefly algorithms. For local minimization, we use a modified trust region method [31,32] based on the main diagonal approximation of the Hessian matrix [33]. The proposed approach makes it possible to create computational schemes that solve global optimization problems efficiently. The population must also remain sufficiently diverse at each iteration of the algorithm; for this purpose, a biodiversity assessment is introduced into the proposed algorithm schemes.
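To illustrate the local component, the following is a minimal sketch of a trust-region descent whose quadratic model uses only the main diagonal of the Hessian. It is not the modification described in [33]: the finite-difference estimate of the diagonal, the box-shaped trust region, the acceptance thresholds, and all names (`diag_trust_region`, `radius`, `h`) are assumptions made for this example.

```python
import numpy as np

def diag_trust_region(f, grad, x0, lo, hi, radius=1.0, iters=50, h=1e-4):
    """Box-constrained trust-region descent with a diagonal quadratic model."""
    x = np.clip(np.asarray(x0, dtype=float), lo, hi)
    fx = f(x)
    for _ in range(iters):
        g = grad(x)
        # Diagonal Hessian estimate: forward differences of the gradient (assumed scheme).
        d = np.array([(grad(x + h * e)[i] - g[i]) / h
                      for i, e in enumerate(np.eye(x.size))])
        d = np.maximum(d, 1e-8)                  # keep the model convex
        # The model is separable, so each coordinate is minimized independently
        # inside the trust region |s_i| <= radius and inside the box.
        s = np.clip(-g / d, -radius, radius)
        s = np.clip(x + s, lo, hi) - x
        predicted = -(g @ s + 0.5 * d @ (s * s)) # decrease promised by the model
        if predicted <= 1e-16:
            break                                # model sees no further progress
        f_new = f(x + s)
        rho = (fx - f_new) / predicted           # actual versus predicted decrease
        if rho > 0.1:
            x, fx = x + s, f_new                 # accept the step
        if rho > 0.75:
            radius *= 2.0                        # model is reliable: expand
        elif rho < 0.25:
            radius *= 0.5                        # model is poor: shrink
    return x, fx
```

Because the model is separable, the subproblem has a closed-form solution and requires no matrix factorization, which is the main attraction of a diagonal approximation in high dimensions.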

The article is organized as follows: Section 1.3 states the non-local optimization problem; Section 2 presents the developed hybrid algorithms based on nature-inspired and local descent methods; Section 3 describes the computational experiments performed on a set of test and applied optimization problems and presents the results of the numerical study; Section 4 concludes the paper.

1.3. The Global Optimization Problem

We consider a global optimization problem with parallelepiped constraints. The problem statement is the following:

Here, is the smooth and multiextremal objective function; is the number of arguments (dimension); are box constraints.
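In commonly used notation, a box-constrained global minimization problem of this kind can be written as follows. The symbols $f$, $n$, $\alpha_i$, and $\beta_i$ are assumed here for illustration and are not necessarily those of the original formula.

```latex
f(x) \to \min_{x \in B}, \qquad
B = \{\, x \in \mathbb{R}^n : \alpha_i \le x_i \le \beta_i,\ i = 1, \dots, n \,\}.
```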

2. Description of Proposed Algorithms

Three multiextremal optimization algorithms based on the flower pollination, teacher-learner, firefly, and trust region methods have been developed and implemented. This section introduces them.

2.1. Algorithm Based on Flower Pollination and Local Search Methods

2.1.1. Parameters

- —number of agents;

- – number of iterations of the local search method;

- —biodiversity factor;

- —switching probability, it puts control on global and local pollination (search) procedures.

2.1.2. Step-by-Step Algorithm

We have implemented two variants of FP: the original one, which uses only objective function values (v.1, ), and a modified one (v.2, ), in which a minimization trajectory is constructed by the modified trust region method starting from each point produced by the “flower pollination” procedure. In both variants, a measure of biodiversity is calculated at the sixth step. See Algorithm 1.

| Algorithm 1 FP |

The iteration is complete. |
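For orientation, a minimal sketch of the basic flower pollination scheme in the spirit of [14] is given below. It omits the biodiversity step and the local trust-region refinement, so it does not reproduce Algorithm 1. The Lévy-flight generator (Mantegna's method), the step scale `gamma`, the default parameter values, and all function names are assumptions of this example.

```python
import math
import numpy as np

def levy(rng, size, lam=1.5):
    """Heavy-tailed step lengths generated by Mantegna's method."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.normal(0.0, sigma, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / lam)

def flower_pollination(f, lo, hi, n_agents=20, iters=200, p=0.8, gamma=0.1, seed=0):
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lo, float), np.asarray(hi, float)
    pop = rng.uniform(lo, hi, (n_agents, lo.size))
    vals = np.array([f(x) for x in pop])
    for _ in range(iters):
        best = pop[vals.argmin()].copy()
        for i in range(n_agents):
            if rng.random() < p:
                # Global pollination: Levy flight relative to the current best flower.
                cand = pop[i] + gamma * levy(rng, lo.size) * (best - pop[i])
            else:
                # Local pollination: move along the difference of two random flowers.
                j, k = rng.choice(n_agents, 2, replace=False)
                cand = pop[i] + rng.random() * (pop[j] - pop[k])
            cand = np.clip(cand, lo, hi)
            fc = f(cand)
            if fc < vals[i]:                     # greedy replacement
                pop[i], vals[i] = cand, fc
    b = vals.argmin()
    return pop[b].copy(), vals[b]
```

The switching probability `p` plays the role described in the parameter list: it decides, for each agent, between the global and the local pollination move.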

2.2. Algorithm Based on Teacher-Learner and Local Descent Methods

2.2.1. Parameters

- —number of students;

- —number of iterations of the local search method;

- —biodiversity factor.

2.2.2. Step-by-Step Algorithm

Two variants of TL have been implemented: the original one (v.1, ) and one hybridized with the modified trust region method for local descent (v.2, ). See Algorithm 2.

| Algorithm 2 TL |

The iteration is complete. |
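As a point of reference, the basic teaching-learning-based scheme of [22] can be sketched as follows. This is the textbook two-phase method without the biodiversity assessment and without local descent, so it is not Algorithm 2 itself; the function name `tlbo` and the default values are assumptions.

```python
import numpy as np

def tlbo(f, lo, hi, n_students=20, iters=100, seed=0):
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lo, float), np.asarray(hi, float)
    pop = rng.uniform(lo, hi, (n_students, lo.size))
    vals = np.array([f(x) for x in pop])

    def try_replace(i, cand):
        """Keep the candidate only if it improves student i (greedy rule)."""
        cand = np.clip(cand, lo, hi)
        fc = f(cand)
        if fc < vals[i]:
            pop[i], vals[i] = cand, fc

    for _ in range(iters):
        for i in range(n_students):
            # Teacher phase: shift towards the best student, away from the class mean.
            teacher = pop[vals.argmin()]
            tf = rng.integers(1, 3)              # teaching factor, 1 or 2
            try_replace(i, pop[i] + rng.random(lo.size) * (teacher - tf * pop.mean(axis=0)))
            # Learner phase: interact with a random classmate j != i.
            j = rng.choice([k for k in range(n_students) if k != i])
            direction = pop[i] - pop[j] if vals[i] < vals[j] else pop[j] - pop[i]
            try_replace(i, pop[i] + rng.random(lo.size) * direction)
    b = vals.argmin()
    return pop[b].copy(), vals[b]
```

Apart from the population size and the iteration budget, this scheme has no tuning parameters, which is consistent with the short parameter list in Section 2.2.1.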

2.3. Algorithm Based on Firefly and Local Search Methods

2.3.1. Parameters

- —number of fireflies;

- —number of iterations of the local search method;

- —biodiversity factor;

- —mutation coefficient;

- —attraction ratio;

- —light absorption factor;

- —power rate.

2.3.2. Step-by-Step Algorithm

We have implemented two variants of FA: the classic version (v.1, ) and one combined with the modified trust region method for local search (v.2, ). The proposed algorithm uses a biodiversity coefficient, which makes it possible to evaluate the measure of biodiversity and, if necessary, to increase the representativeness of the training set and thereby the final efficiency of the algorithm. See Algorithm 3.

| Algorithm 3 FA |

The iteration is complete. |
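The classic firefly move of [15] can be sketched as follows. The mapping of the listed parameters onto `alpha` (mutation coefficient), `beta0` (attraction ratio), `gamma` (light absorption factor), and `power` (power rate) is an assumption of this example, as are the default values and the damping of `alpha`; the biodiversity assessment and the local search of Algorithm 3 are not included.

```python
import numpy as np

def firefly(f, lo, hi, n_fireflies=15, iters=100, alpha=0.2, beta0=1.0,
            gamma=1.0, power=2.0, seed=0):
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lo, float), np.asarray(hi, float)
    pop = rng.uniform(lo, hi, (n_fireflies, lo.size))
    vals = np.array([f(x) for x in pop])
    best_x, best_f = pop[vals.argmin()].copy(), vals.min()
    for _ in range(iters):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if vals[j] < vals[i]:            # firefly j is brighter, so i moves towards j
                    r = np.linalg.norm(pop[i] - pop[j])
                    beta = beta0 * np.exp(-gamma * r ** power)   # attractiveness decays with distance
                    noise = alpha * (rng.random(lo.size) - 0.5) * (hi - lo)
                    pop[i] = np.clip(pop[i] + beta * (pop[j] - pop[i]) + noise, lo, hi)
                    vals[i] = f(pop[i])
                    if vals[i] < best_f:         # the move is not greedy, so track the record
                        best_x, best_f = pop[i].copy(), vals[i]
        alpha *= 0.97                            # gradually damp the random walk
    return best_x, best_f
```

Unlike the two previous sketches, a firefly accepts its move even when the objective value worsens, so the record point is stored separately.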

3. Numerical Study of Developed Algorithms

The algorithms were implemented in C (GCC/MinGW) using uniform software standards and studied on a set of test problems [34,35]. Table 1 contains the values of the algorithmic parameters. The properties of the proposed algorithms are studied numerically in comparison with the algorithms we presented earlier in [36]: modifications of the genetic (GA), biogeography-based (BBO), and particle swarm (PSO) algorithms.

Table 1. The values of algorithmic parameters.

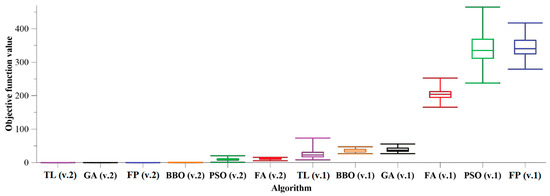

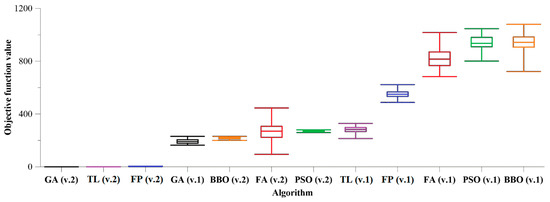

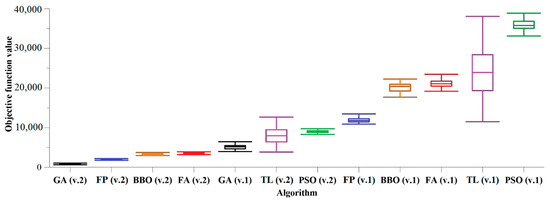

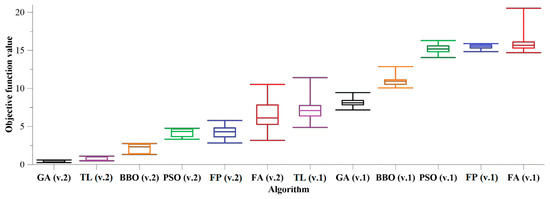

Equal testing conditions were ensured for every algorithm. The number of agents for all algorithms is . The number of variables in each problem is 100. Each algorithm was launched 50 times from the same uniformly distributed initial approximations. An additional stopping criterion is exceeding 10,000 calls to the objective function. Each test problem is described below, and the results of the numerical comparison of the algorithms are presented as box-and-whisker diagrams (Figure 1, Figure 2, Figure 3 and Figure 4). The ordinate axis shows the mean values of the objective function over the set of agents, obtained from 50 launches. Table 2 shows statistics on the algorithm launches.

Figure 1. Box-and-whisker diagrams of algorithm comparison (Griewank problem).

Figure 2. Box-and-whisker diagrams of algorithm comparison (Rastrigin problem).

Figure 3. Box-and-whisker diagrams of algorithm comparison (Schwefel problem).

Figure 4. Box-and-whisker diagrams of algorithm comparison (Ackley problem).

Table 2. Statistics on algorithm launches: mean and standard deviation (SD).

We conducted the numerical experiments on a computer with the following characteristics: 2x Intel Xeon E5-2680 v2 2.8 GHz (20 cores); 128 GB DDR3 1866 MHz.

3.1. The Griewank Problem

Absolute minimum point and value:

3.2. The Rastrigin Problem

Absolute minimum point and value:

3.3. The Schwefel Problem

Absolute minimum point and value:

3.4. The Ackley Problem

Absolute minimum point and value:
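The four test problems of Sections 3.1 to 3.4 can be illustrated with their standard textbook definitions, sketched below. The exact variants, scaling constants, and search domains used in the experiments are not shown in this text, so these forms are an assumption. In these forms, the Griewank, Rastrigin, and Ackley functions attain the global minimum value 0 at the origin, and the Schwefel function attains a value close to 0 at the point with all coordinates approximately equal to 420.9687.

```python
import numpy as np

def griewank(x):
    x = np.asarray(x, float)
    i = np.arange(1, x.size + 1)
    return 1.0 + np.sum(x ** 2) / 4000.0 - np.prod(np.cos(x / np.sqrt(i)))

def rastrigin(x):
    x = np.asarray(x, float)
    return 10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x))

def schwefel(x):
    x = np.asarray(x, float)
    # The constant 418.9829 shifts the global minimum value to approximately zero.
    return 418.9829 * x.size - np.sum(x * np.sin(np.sqrt(np.abs(x))))

def ackley(x):
    x = np.asarray(x, float)
    return (-20.0 * np.exp(-0.2 * np.sqrt(np.mean(x ** 2)))
            - np.exp(np.mean(np.cos(2.0 * np.pi * x))) + 20.0 + np.e)
```

All four functions accept a vector of any length, so the 100-variable setting of the experiments corresponds to calls such as `rastrigin(np.zeros(100))`.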

3.5. The Sutton-Chen Problem

We consider the problem of finding low-energy Sutton-Chen clusters [37,38,39,40] of extremely large dimensions. The Sutton-Chen potential is often used to compute the properties of nanoclusters of metals such as silver, rhodium, nickel, copper, gold, platinum, and others [38,39,40]. The following global optimization problem is considered:

Here, are special parameters.
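A widely used form of the Sutton-Chen potential is $E = \varepsilon \sum_i \big[ \tfrac{1}{2} \sum_{j \ne i} (a / r_{ij})^n - c \sqrt{\rho_i} \big]$ with $\rho_i = \sum_{j \ne i} (a / r_{ij})^m$, where $r_{ij}$ is the distance between atoms $i$ and $j$. The sketch below evaluates this form in reduced units ($\varepsilon = a = 1$). The notation and the function name `sutton_chen_energy` are assumptions of this example and may differ from the formulation used in the article; the values of `n`, `m`, and `c` are left to the caller.

```python
import numpy as np

def sutton_chen_energy(coords, n, m, c):
    """Sutton-Chen energy in reduced units for N atoms given as 3N coordinates."""
    x = np.asarray(coords, float).reshape(-1, 3)   # a flat vector of 3N variables also works
    diff = x[:, None, :] - x[None, :, :]
    r = np.sqrt(np.sum(diff ** 2, axis=-1))        # matrix of pairwise distances
    np.fill_diagonal(r, np.inf)                    # exclude self-interaction: (1/inf)**k == 0
    pair = 0.5 * np.sum((1.0 / r) ** n, axis=1)    # repulsive pair term per atom
    rho = np.sum((1.0 / r) ** m, axis=1)           # local density per atom
    return float(np.sum(pair - c * np.sqrt(rho)))
```

The objective takes three coordinates per atom, which matches the count given in the Introduction: a cluster of 80 atoms yields 240 optimized variables.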

To investigate problems of optimizing atomic-molecular cluster potentials, we implemented a three-phase computational technology, which combines, at each phase, several methods from a collection of basic algorithms [41].

Phase 1 concentrates on random approximation of a neighborhood of the record point and is based on a collection of algorithms that generate starting approximations: “level” (LG), “averaged” (AG), “gradient” (GG), “level-averaged” (LAG), “level-gradient” (LGG), “averaged-gradient” (AGG), and “level-averaged-gradient” (LAGG). In phase 2, the approximations produced in phase 1 are driven downhill [42] by one of the “starter algorithms”, which can find low-energy configurations of atoms. We implemented six variants of “starter algorithms”: the three bioinspired algorithms described in this paper (FP, TL, FA), a variant of the method proposed by B.T. Polyak [43,44], the “raider method” [45], and a modification of the multivariate dichotomy method [46,47]. In all these algorithms, the modified trust region method is applied for local search (phase 3).

At present, the Cambridge Energy Landscape Database [29] and other public sources present solutions only for Sutton-Chen configurations of up to 80 atoms. We evaluated the performance of the developed techniques on clusters of 3–80 atoms and compared the solutions obtained with the best known ones [48]. The solutions obtained in the numerical study coincided with the known results reported in [29].

We investigated very large problems for configurations of 81 to 100 atoms. Computational experiments were performed for each generator algorithm and each starter method. Exceeding the time limit (24 h) served as the stopping criterion. Table 3 presents the results of the numerical testing of the computational technology for the problem in which the cluster consists of 85 atoms.

Table 3. The results of the numerical testing of the computational technology (N = 85).

Table 3 shows that, among all the investigated variants, the leading computational technology combines LAGG as the generator of initial approximations with the raider algorithm as the starter.

Table 4 presents the best-found values obtained. It was not possible to compare our results with the computations of other researchers due to the absence of such information.

Table 4. The best-found values (number of atoms: from 81 to 100).

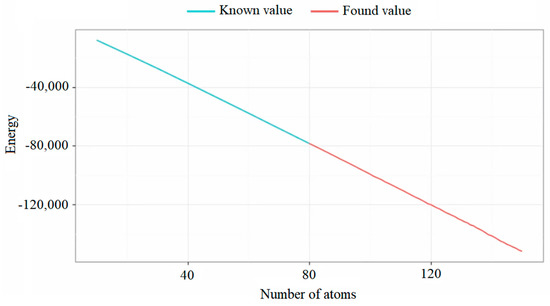

Figure 5 shows the dependence of the best-found values on the dimension. The number of atoms is plotted along the horizontal axis, and the found values along the vertical axis.

Figure 5. Dependence of the best-found values on the dimension.

3.6. The Parametric Identification Problem for the Nonlinear Dynamic Model

The proposed algorithms are universal and make it possible to investigate a wide class of problems. For example, dynamic problems go beyond the finite-dimensional setting considered above. The task of parametric identification is to find values of the model parameters that bring the model close to the experimental data.

Table 5 presents the known data corresponding to the dynamics of the changes in the trajectories over time .

Table 5. The changes in the trajectories of the system over time.

With the help of the proposed algorithms, the model problem of the parametric identification of the following dynamic system was solved:

As the functional to be optimized, we used the integral of the absolute discrepancy between the values of system (7) and the known values (see Table 5) over the time span :

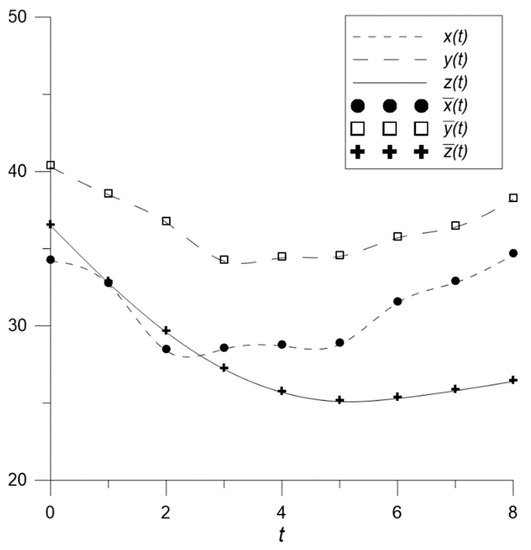
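A functional of this type can be evaluated numerically as sketched below: the model is integrated with the classical fourth-order Runge-Kutta scheme on the grid of tabulated times, and the integral of the absolute discrepancy is estimated by the trapezoidal rule. The model `decay` is a hypothetical one-parameter system chosen only for illustration, not the system (7) studied in the article; the function names and the integration settings are likewise assumptions.

```python
import numpy as np

def rk4_trajectory(rhs, x0, t_grid, params, substeps=20):
    """Integrate dx/dt = rhs(t, x, params) and return the states on t_grid."""
    x = np.asarray(x0, float)
    out = [x.copy()]
    for t0, t1 in zip(t_grid[:-1], t_grid[1:]):
        h = (t1 - t0) / substeps
        t = t0
        for _ in range(substeps):
            k1 = rhs(t, x, params)
            k2 = rhs(t + h / 2, x + h / 2 * k1, params)
            k3 = rhs(t + h / 2, x + h / 2 * k2, params)
            k4 = rhs(t + h, x + h * k3, params)
            x = x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
            t += h
        out.append(x.copy())
    return np.array(out)

def discrepancy(params, rhs, x0, t_grid, data):
    """Trapezoidal estimate of the integral of |model - data| over the time span."""
    err = np.abs(rk4_trajectory(rhs, x0, t_grid, params) - data).sum(axis=1)
    dt = np.diff(t_grid)
    return float(np.sum(0.5 * (err[:-1] + err[1:]) * dt))

def decay(t, x, params):
    """Hypothetical model dx/dt = -k x, not the system from the article."""
    return -params[0] * x
```

With the data and the model fixed, `discrepancy` becomes an ordinary function of the parameter vector and can be passed to any of the global optimization algorithms of Section 2.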

Figure 6 shows the trajectories obtained in solving the model parametric identification problem, compared with the tabular data presented in Table 5. Time is plotted along the abscissa.

Figure 6. Trajectories obtained when solving the model identification problem.

3.7. Statistical Testing of Proposed Algorithms

We tested the implemented algorithms on the optimization benchmark [34]. As test problems, we selected 22 multimodal objective functions (numbered 7 to 28 in the benchmark). All problems had 100 variables. We compared the algorithms with each other and ranked them on each problem. For each algorithm, we calculated the percentage of problems on which it took a given place in the ranking. Table 6 shows the results of the statistical testing of the combined algorithms in the following format: “percentage of reaching a certain place in the rating (the number of tasks on which this was achieved)”.
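The ranking statistic described above can be computed as in the following sketch. The input layout (a dictionary mapping an algorithm name to its final objective values, one per problem), the tie-breaking by input order, and the function name `place_percentages` are assumptions of this example.

```python
import numpy as np

def place_percentages(results):
    """results[a][t]: final objective value of algorithm a on problem t (lower is better).

    Returns, for each algorithm, a list of (percentage, count) pairs, one per place.
    """
    names = list(results)
    table = np.array([results[a] for a in names], dtype=float)   # shape: (algorithms, problems)
    # argsort applied twice gives the 0-based place of each algorithm on each problem.
    places = table.argsort(axis=0, kind="stable").argsort(axis=0, kind="stable")
    n_alg, n_tasks = table.shape
    out = {}
    for a, name in enumerate(names):
        counts = np.bincount(places[a], minlength=n_alg)
        out[name] = [(100.0 * c / n_tasks, int(c)) for c in counts]
    return out
```

Each returned pair follows the format quoted above: the percentage of problems on which the place was reached, followed by the number of such problems.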

Table 6. Results of statistical testing of combined algorithms.

Based on the computational experiments performed, we can assert that none of the investigated nature-inspired methods is a definite leader that demonstrates the best results on all test problems. However, Table 6 shows that, in order of decreasing efficiency, the algorithms can be arranged as follows: GA, TL, FP, BBO, FA, and PSO. In all cases considered, the combined algorithms based on bioinspired and local search methods showed substantial improvements over the basic multi-agent methods.

4. Conclusions

The proposed approach to the numerical investigation of problems of finding a global optimum of multimodal functions is based on the flower pollination, teacher-learner, firefly, and modified trust region algorithms, together with an assessment of a biodiversity measure. Hybrid methods for non-convex optimization were proposed and implemented.

The developed algorithms were studied on a collection of test problems of various levels of complexity. All variants of the algorithms combined with the modified trust region method for local search showed substantial improvements over the original ones. Statistical testing of the proposed algorithms showed that the modifications of the teacher-learner and flower pollination methods were in the lead more often than the variants of the firefly method.

The implemented technology was used to explore a promising problem from the applied field of atomic-molecular modeling. In the course of the investigation, we obtained probably optimal configurations for Sutton-Chen clusters of extremely large dimensions (81–100 atoms). A comparative analysis of the results of the numerical experiments did not reveal any sharp deviations from the observed regularity between the found values of the potential function and the number of atoms. In addition, the parametric identification problem for the nonlinear dynamic model was solved. The solutions obtained admit a meaningful interpretation.

Author Contributions

Conceptualization, P.S. and A.G.; methodology, P.S. and A.G.; software, P.S.; validation, P.S. and A.G.; formal analysis, P.S.; investigation, P.S.; resources, P.S. and A.G.; data curation, P.S. and A.G.; writing—original draft preparation, P.S.; writing—review and editing, P.S. and A.G.; visualization, P.S.; supervision, A.G.; project administration, A.G.; funding acquisition, P.S. and A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the grant from the Ministry of Education and Science of Russia within the framework of the project “Theory and methods of research of evolutionary equations and controlled systems with their applications” (state registration number 121041300060-4).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Floudas, C.A.; Pardalos, P.M. Encyclopedia of Optimization; Springer: New York, NY, USA, 2009.

2. Karpenko, A.P. Modern Search Optimization Algorithms. Algorithms Inspired by Nature; BMSTU Publishing: Moscow, Russia, 2014. (In Russian)

3. Ashlock, D. Evolutionary Computation for Modeling and Optimization; Springer: New York, NY, USA, 2006.

4. Back, T. Evolutionary Algorithms in Theory and Practice: Evolution Strategies, Evolutionary Programming, Genetic Algorithms; Oxford University Press: Oxford, UK, 1996.

5. Whitley, D. A genetic algorithm tutorial. Stat. Comput. 1994, 4, 65–85.

6. Price, K.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer: New York, NY, USA, 2006.

7. Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

8. Neri, F.; Tirronen, V. Recent advances in differential evolution: A survey and experimental analysis. Artif. Intell. Rev. 2010, 33, 61–106.

9. Xing, B.; Gao, W.J. Innovative Computational Intelligence: A Rough Guide to 134 Clever Algorithms; Springer: Cham, Switzerland, 2014.

10. Sopov, E. Multi-strategy genetic algorithm for multimodal optimization. In Proceedings of the 7th International Joint Conference on Computational Intelligence (IJCCI), Lisbon, Portugal, 12–14 November 2015; pp. 55–63.

11. Semenkin, E.; Semenkina, M. Self-configuring genetic algorithm with modified uniform crossover operator. Adv. Swarm Intelligence. Lect. Notes Comput. Sci. 2012, 7331, 414–421.

12. Das, S.; Maity, S.; Qu, B.-Y.; Suganthan, P.N. Real-parameter evolutionary multimodal optimization: A survey of the state-of-the art. Swarm Evol. Comput. 2011, 1, 71–88.

13. Yang, X.S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: Frome, UK, 2010.

14. Yang, X.S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation (UCNC 2012), Orléans, France, 3–7 September 2012; pp. 240–249.

15. Yang, X.S. Firefly algorithm, stochastic test functions and design optimization. Int. J. Bio-Inspired Comput. 2010, 2, 78–84.

16. Yang, X.S.; He, X. Firefly algorithm: Recent advances and applications. Int. J. Swarm Intell. 2013, 1, 36–50.

17. Farahani, S.M.; Abshouri, A.A.; Nasiri, B.; Meybodi, M.R. A Gaussian firefly algorithm. Int. J. Mach. Learn. Comput. 2011, 1, 448–453.

18. Gandomi, A.H.; Yang, X.S.; Talatahari, S.; Alavi, A.H. Firefly algorithm with chaos. Commun. Nonlinear Sci. Numer. Simul. 2013, 1, 89–98.

19. Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

20. Liu, Y.; Ling, X.; Shi, Z.; Lv, M.; Fang, J.; Zhang, L. A survey on particle swarm optimization algorithms for multimodal function optimization. J. Softw. 2011, 6, 2449–2455.

21. Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

22. Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

23. Rao, R.V.; Patel, V. An elitist teaching-learning-based optimization algorithm for solving complex constrained optimization problems. Int. J. Ind. Eng. Comput. 2012, 3, 535–560.

24. Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

25. Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

26. Nasuto, S.; Bishop, M. Convergence analysis of stochastic diffusion search. Parallel Algorithms Appl. 1999, 14, 89–107.

27. Brooks, R.L. The Fundamentals of Atomic and Molecular Physics; Springer: New York, NY, USA, 2013.

28. Doye, J.P.K.; Wales, D.J. Structural consequences of the range of the interatomic potential: A menagerie of clusters. J. Chem. Soc. Faraday Trans. 1997, 93, 4233–4243.

29. The Cambridge Energy Landscape Database. Available online: http://www.wales.ch.cam.ac.uk/CCD.html (accessed on 15 August 2022).

30. Cruz, S.M.A.; Marques, J.M.C.; Pereira, F.B. Improved evolutionary algorithm for the global optimization of clusters with competing attractive and repulsive interactions. J. Chem. Phys. 2016, 145, 154109.

31. Yuan, Y. A review of trust region algorithms for optimization. Iciam 2000, 99, 271–282.

32. Yuan, Y. Recent advances in trust region algorithms. Math. Program. 2015, 151, 249–281.

33. Gornov, A.Y.; Anikin, A.S.; Zarodnyuk, T.S.; Sorokovikov, P.S. Modified trust region algorithm based on the main diagonal approximation of the Hessian matrix for solving optimal control problems. Autom. Remote Control 2022, 10, 122–133. (In Russian)

34. Ding, K.; Tan, Y. CuROB: A GPU-based test suit for real-parameter optimization. In Proceedings of the Advances in Swarm Intelligence: 5th International Conference. Part II, Hefei, China, 17–20 October 2014; pp. 66–78.

35. Rastrigin, L.A. Extreme Control Systems; Nauka: Moscow, Russia, 1974. (In Russian)

36. Sorokovikov, P.S.; Gornov, A.Y. Modifications of genetic, biogeography and particle swarm algorithms for solving multiextremal optimization problems. In Proceedings of the 10th International Workshop on Mathematical Models and their Applications (IWMMA 2021), Krasnoyarsk, Russia, 16–18 November 2021. in print.

37. Doye, J.P.K.; Wales, D.J. Global minima for transition metal clusters described by Sutton–Chen potentials. New J. Chem. 1998, 22, 733–744.

38. Todd, B.D.; Lynden-Bell, R.M. Surface and bulk properties of metals modelled with Sutton-Chen potentials. Surf. Sci. 1993, 281, 191–206.

39. Liem, S.Y.; Chan, K.Y. Simulation study of platinum adsorption on graphite using the Sutton-Chen potential. Surf. Sci. 1995, 328, 119–128.

40. Ozgen, S.; Duruk, E. Molecular dynamics simulation of solidification kinetics of aluminium using Sutton–Chen version of EAM. Mater. Lett. 2004, 58, 1071–1075.

41. Sorokovikov, P.S.; Gornov, A.Y.; Anikin, A.S. Computational technology for the study of atomic-molecular Morse clusters of extremely large dimensions. IOP Conf. Ser. Mater. Sci. Eng. 2020, 734, 012092.

42. Wales, D.J.; Doye, J.P.K. Global optimization by basin-hopping and the lowest energy structures of Lennard-Jones clusters containing up to 110 atoms. J. Phys. Chem. A 1997, 101, 5111–5116.

43. Polyak, B.T. Minimization of nonsmooth functionals. USSR Comput. Math. Math. Phys. 1969, 9, 14–29.

44. Gornov, A.Y.; Anikin, A.S. Modification of the Eremin-Polyak method for multivariate optimization problems. In Proceedings of the Conference “Lyapunov Readings”, Irkutsk, Russia, 30 November–2 December 2015; p. 20. (In Russian)

45. Gornov, A.Y. Raider method for quasi-separable problems of unconstrained optimization. In Proceedings of the Conference “Lyapunov Readings”, Irkutsk, Russia, 3–7 December 2018; p. 28. (In Russian)

46. Gornov, A.Y.; Andrianov, A.N.; Anikin, A.S. Algorithms for the solution of huge quasiseparable optimization problems. In Proceedings of the International workshop “Situational management, intellectual, agent-based computing and cybersecurity in critical infrastructures”, Irkutsk, Russia, 11–16 March 2016; p. 76.

47. Levin, A.Y. On a minimization algorithm for convex functions. Rep. Acad. Sci. USSR 1965, 160, 1244–1247. (In Russian)

48. Sorokovikov, P.S.; Gornov, A.Y.; Anikin, A.S. Computational technology for studying atomic-molecular Sutton-Chen clusters of extremely large dimensions. In Proceedings of the 8th International Conference on Systems Analysis and Information Technologies, Irkutsk, Russia, 8–14 July 2019; pp. 86–94. (In Russian)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).