Traditional vs. Automated Computer Image Analysis—A Comparative Assessment of Use for Analysis of Digital SEM Images of High-Temperature Ceramic Material

Abstract

1. Introduction

2. Methods and Materials

3. Traditional Methods of Image Analysis

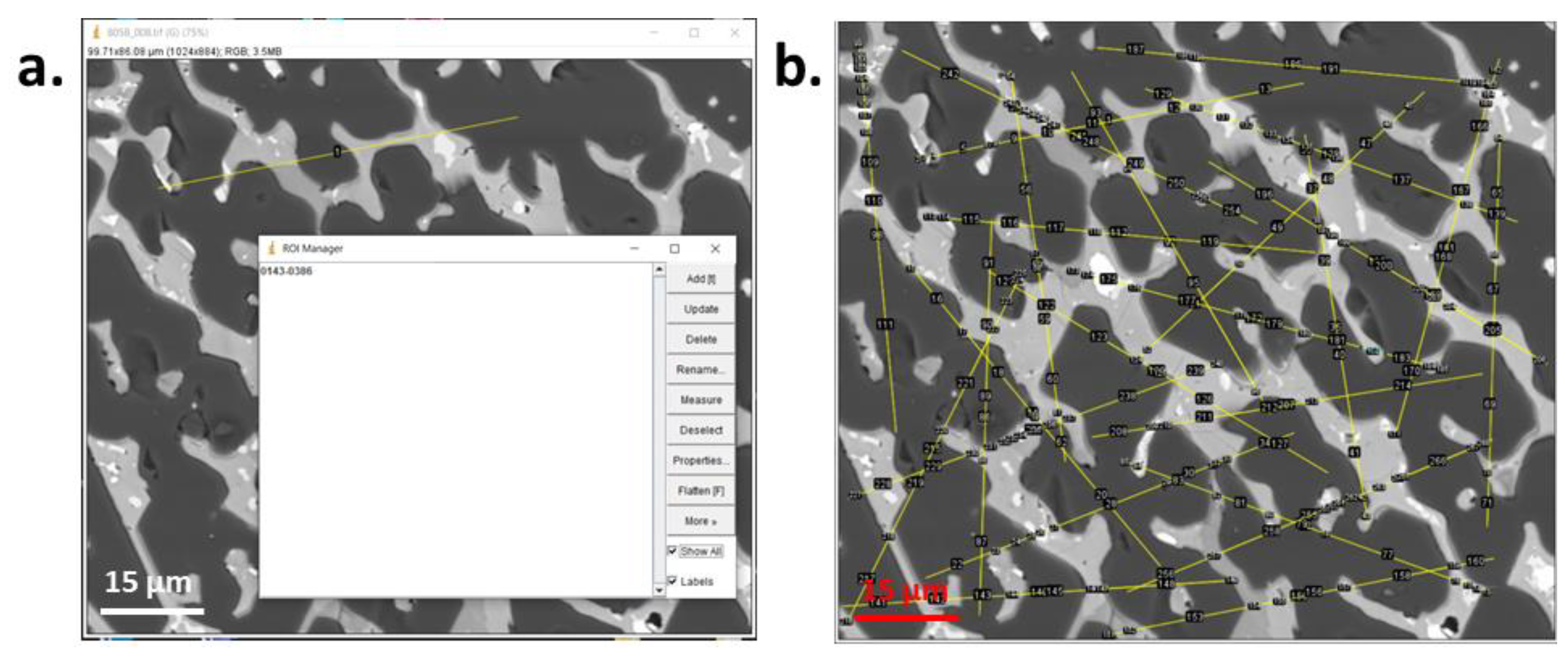

3.1. Linear Analysis

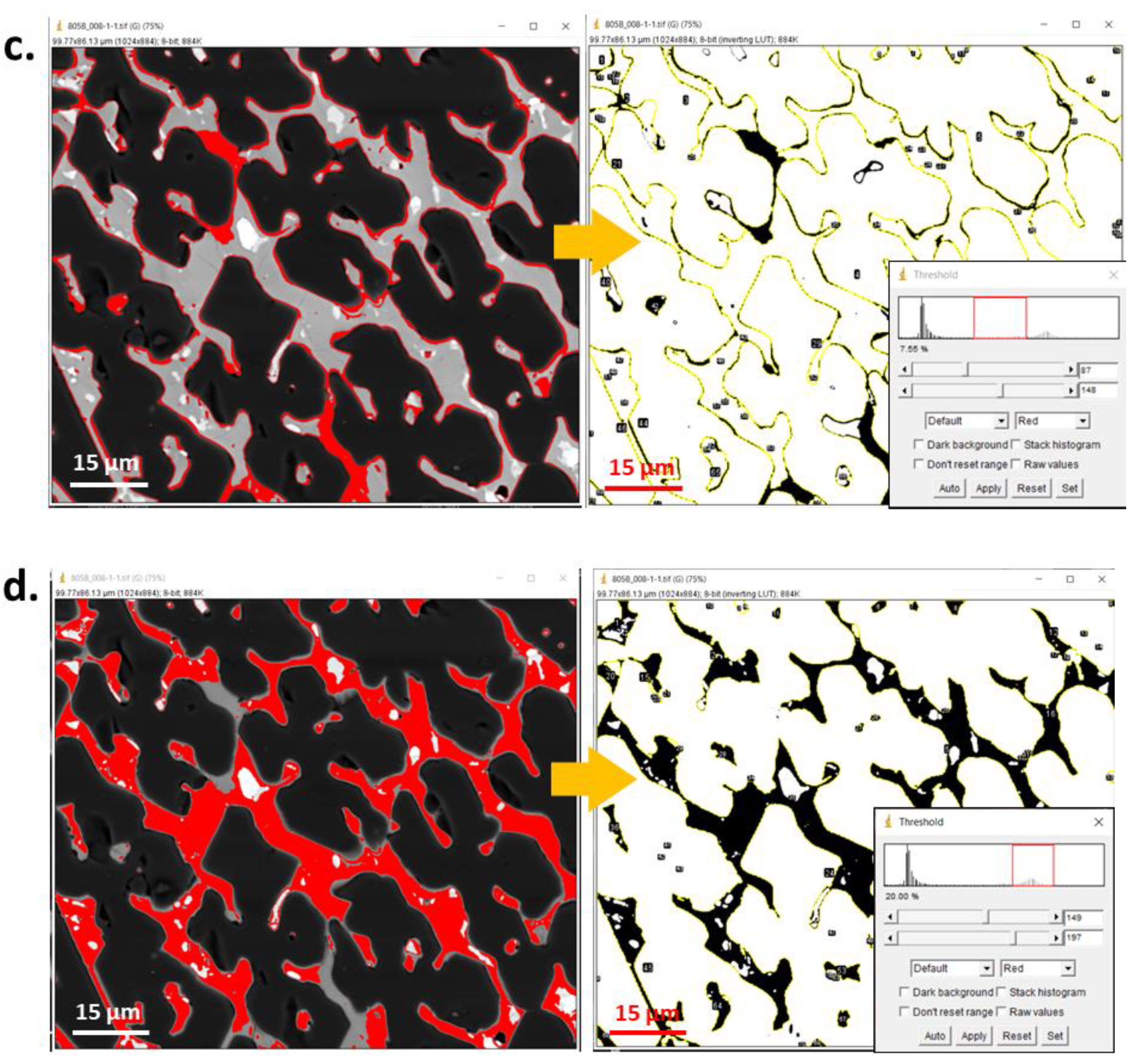

3.2. Planimetry

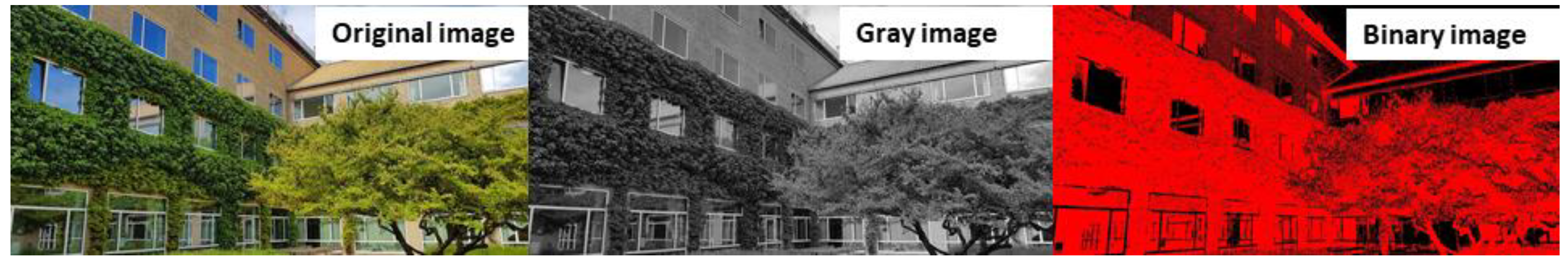

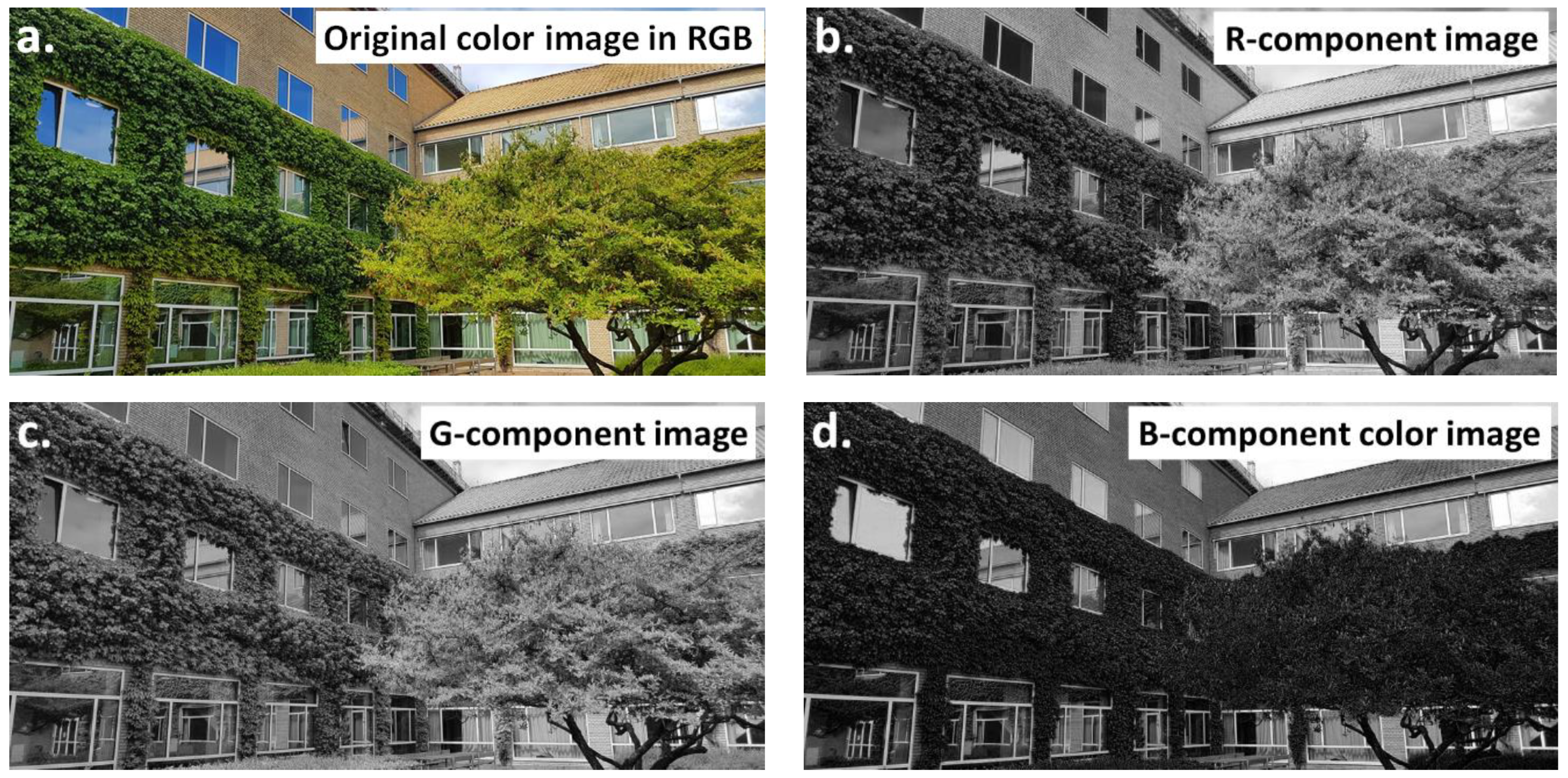

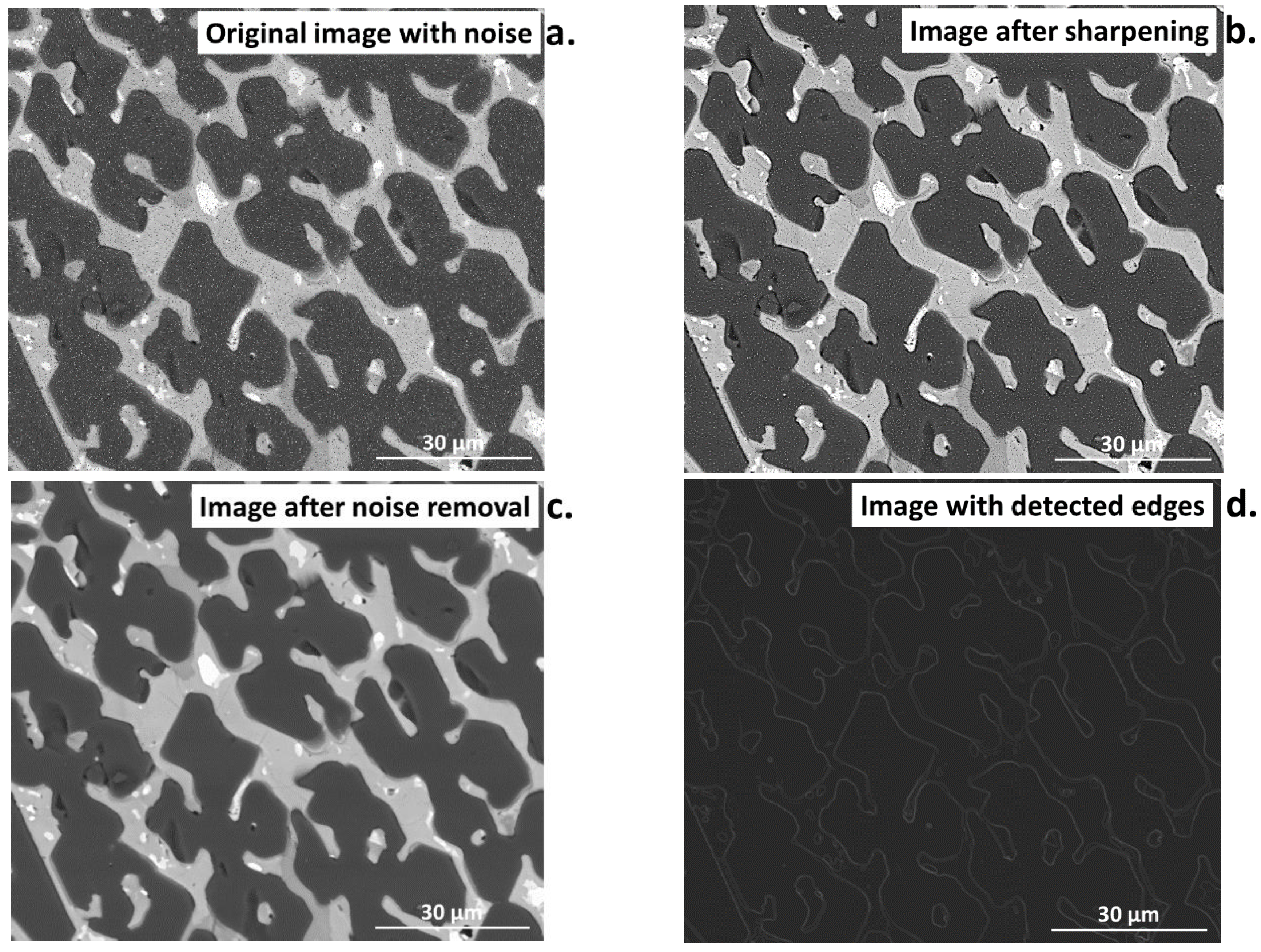

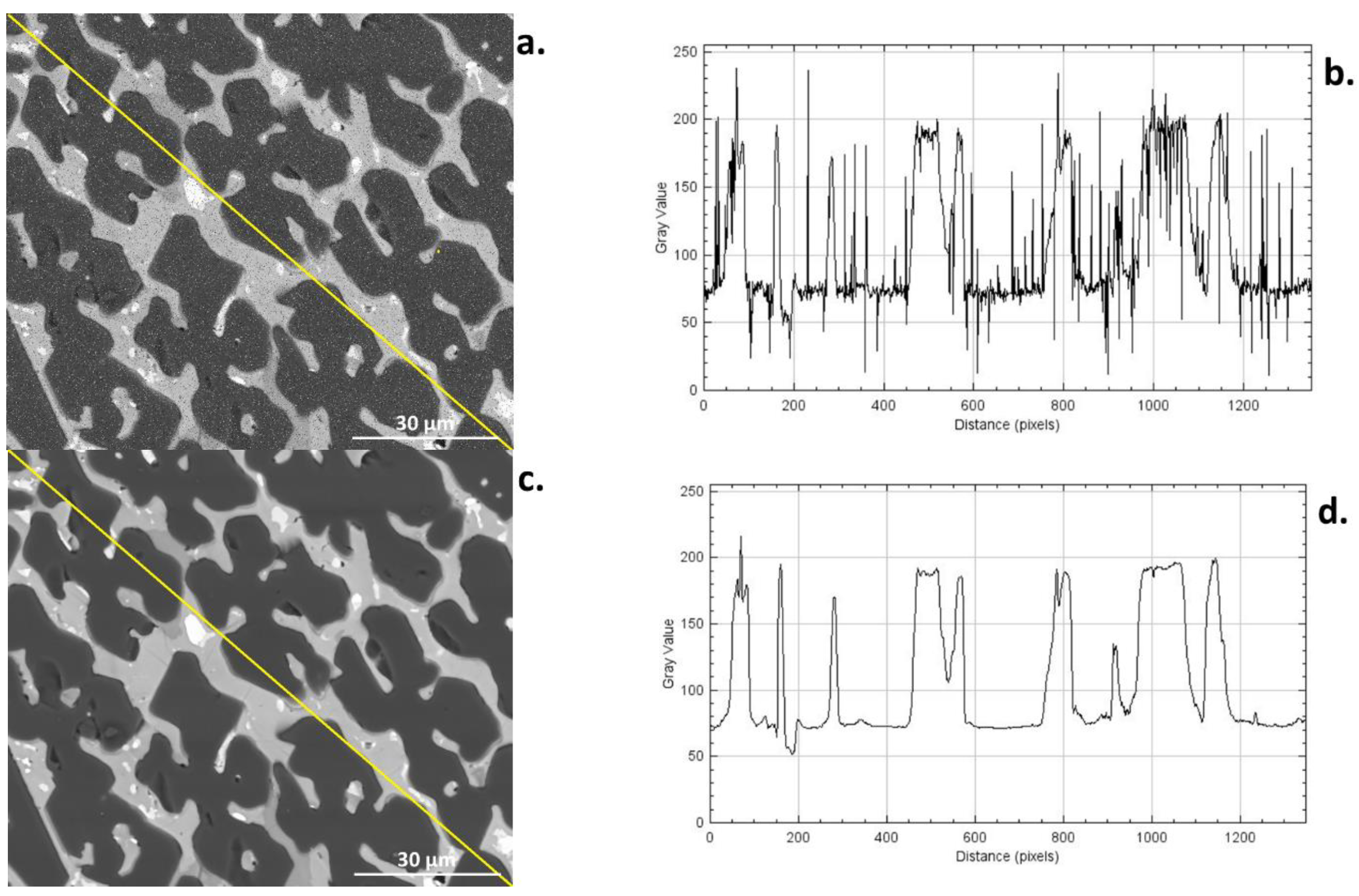

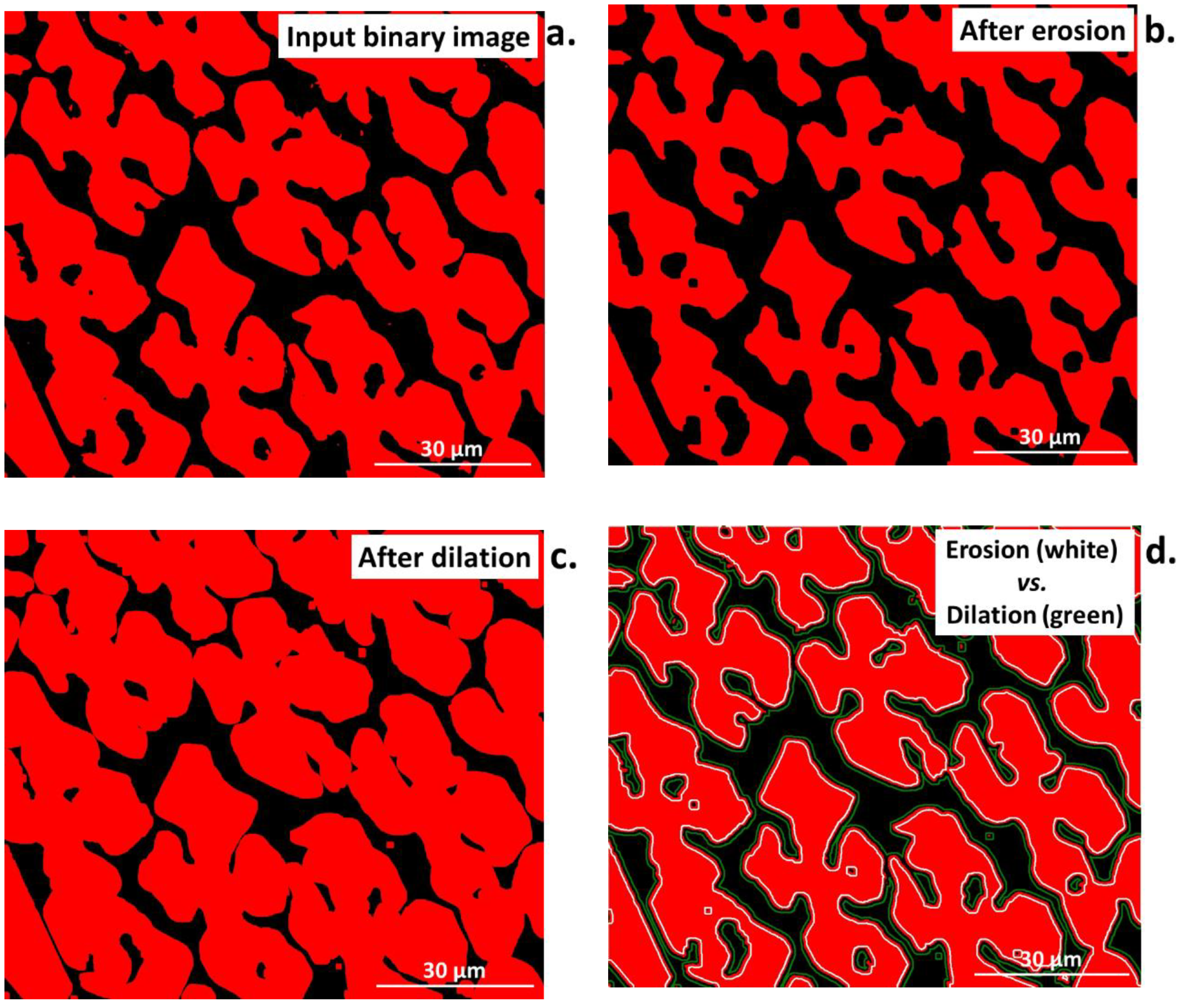

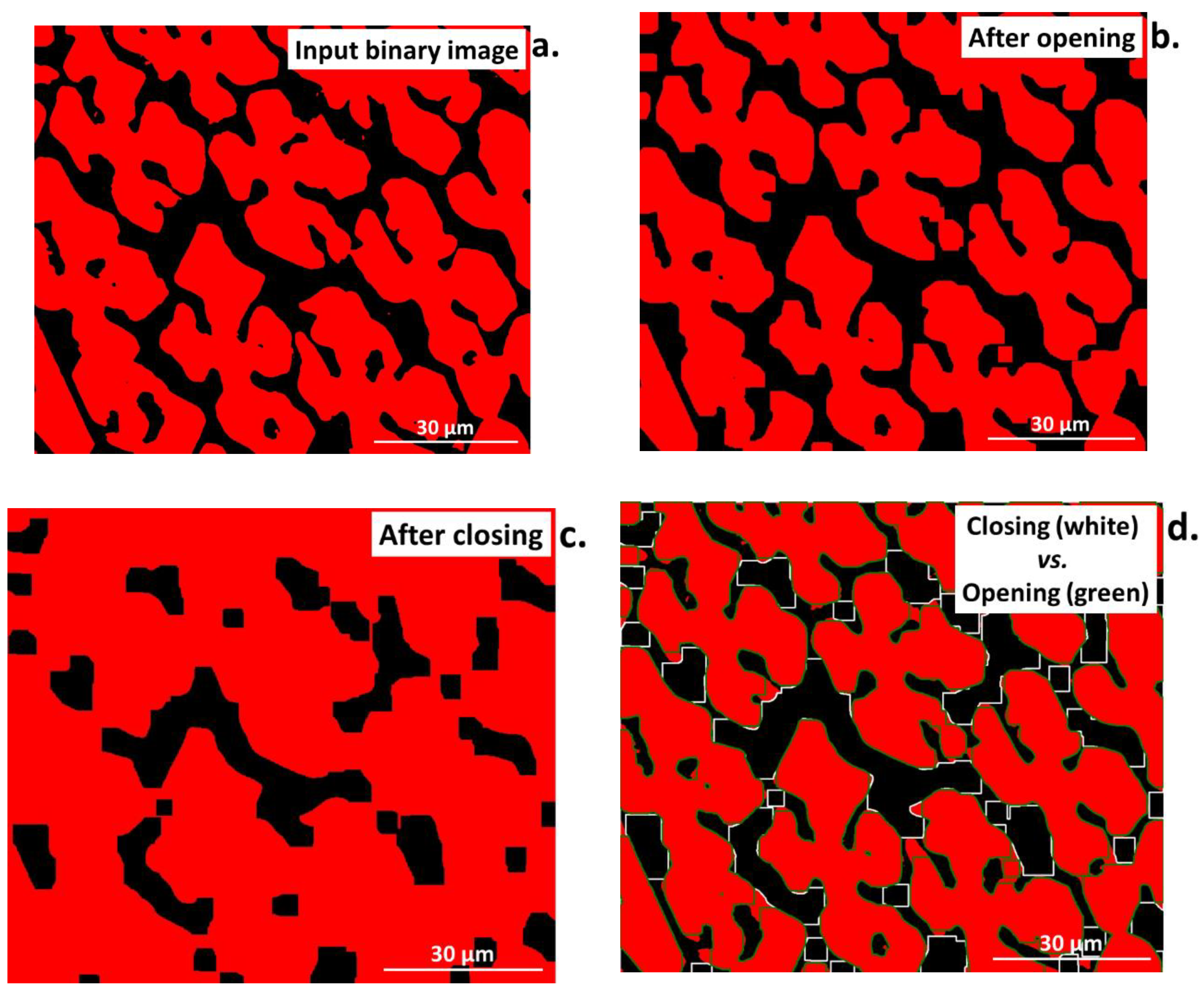

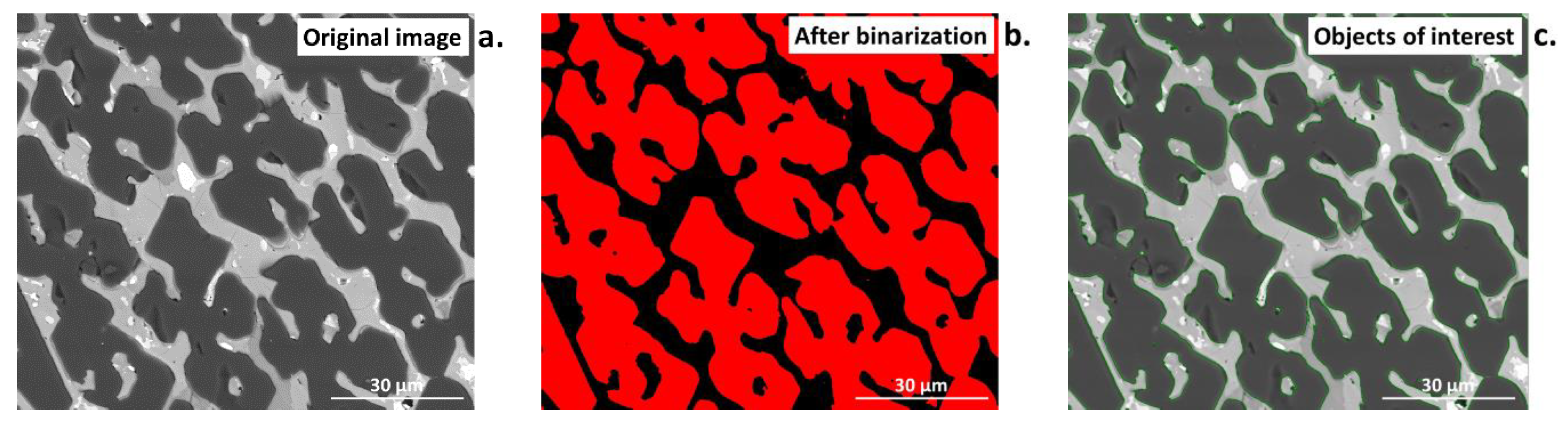

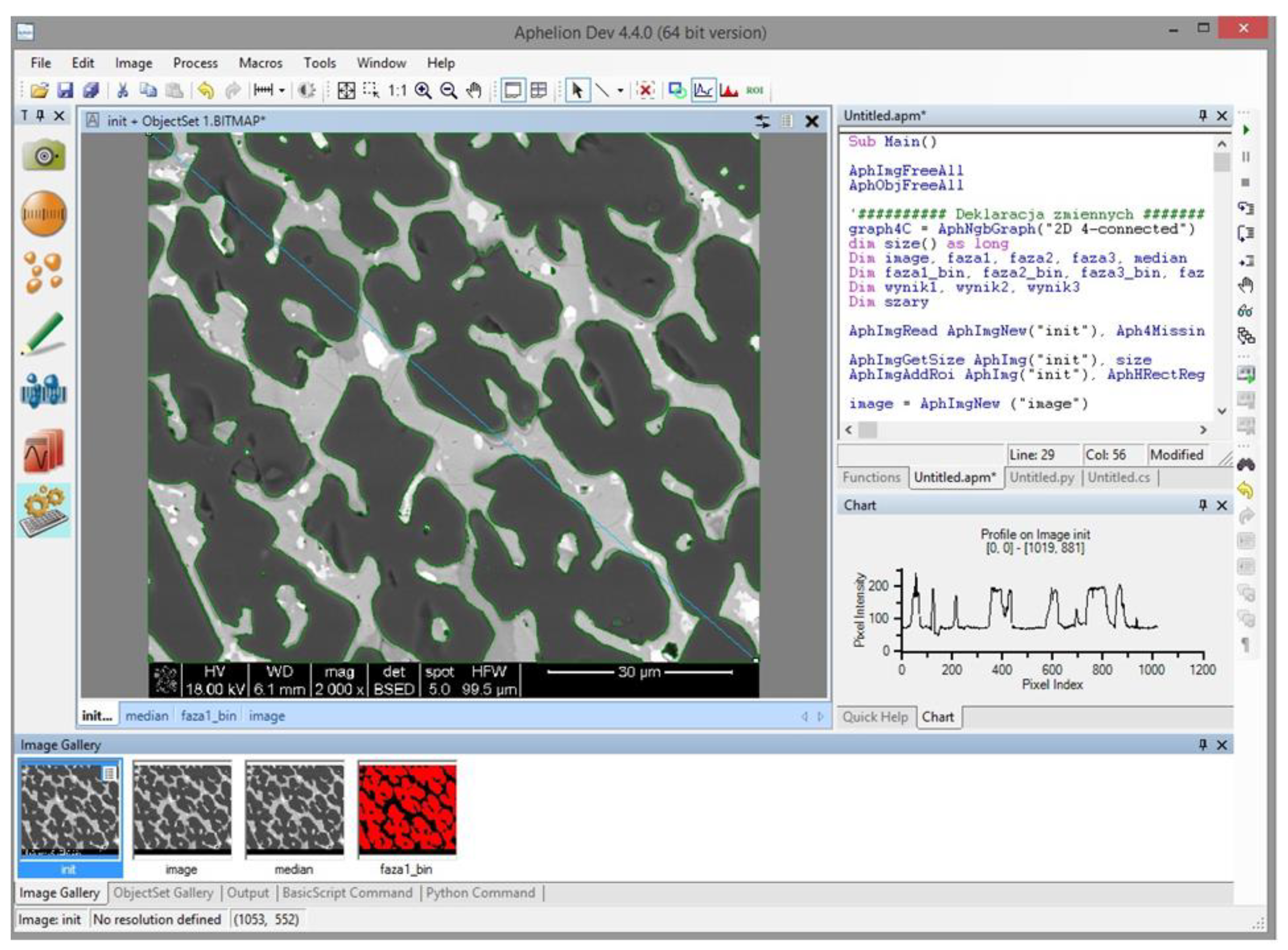

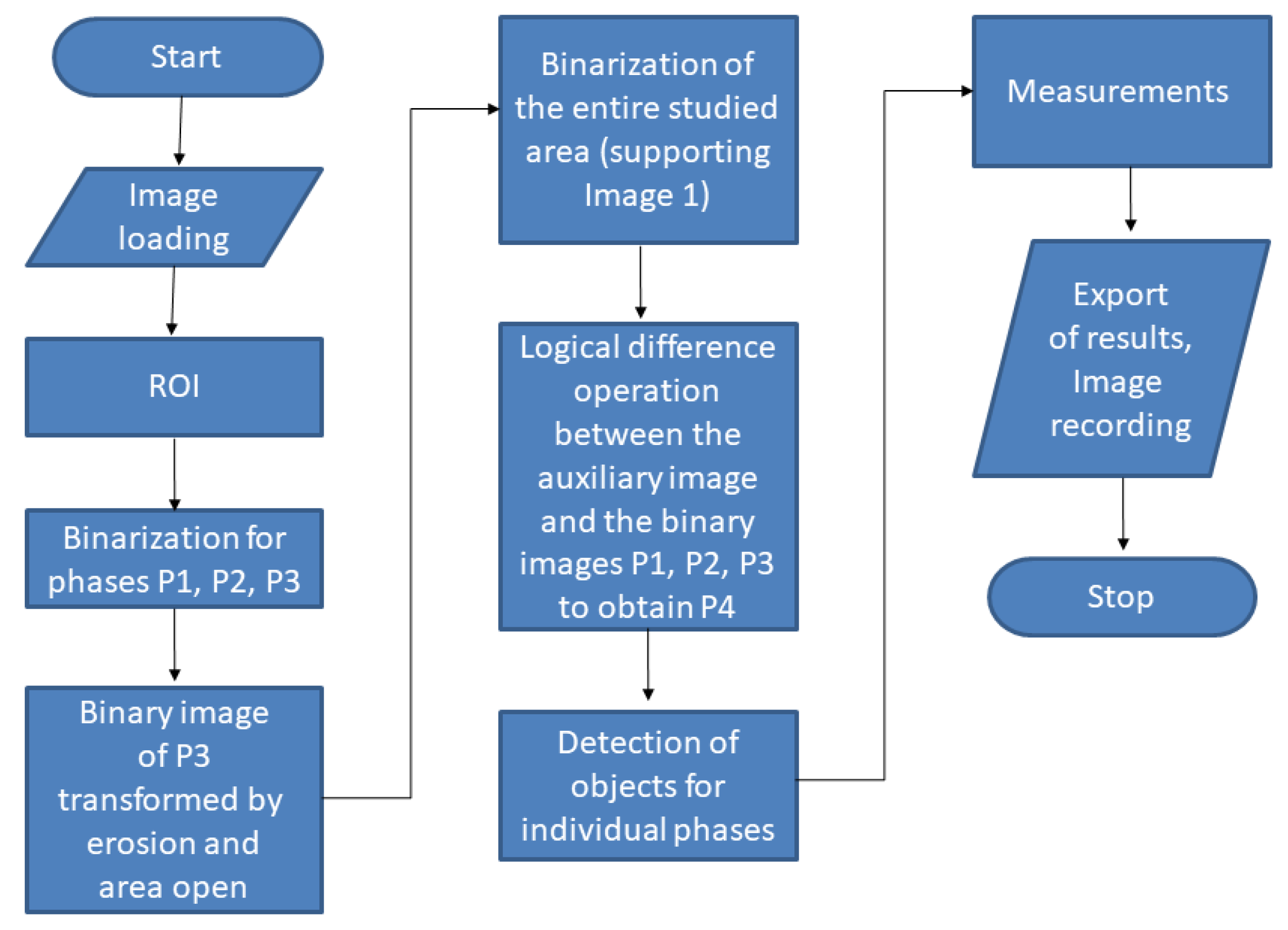

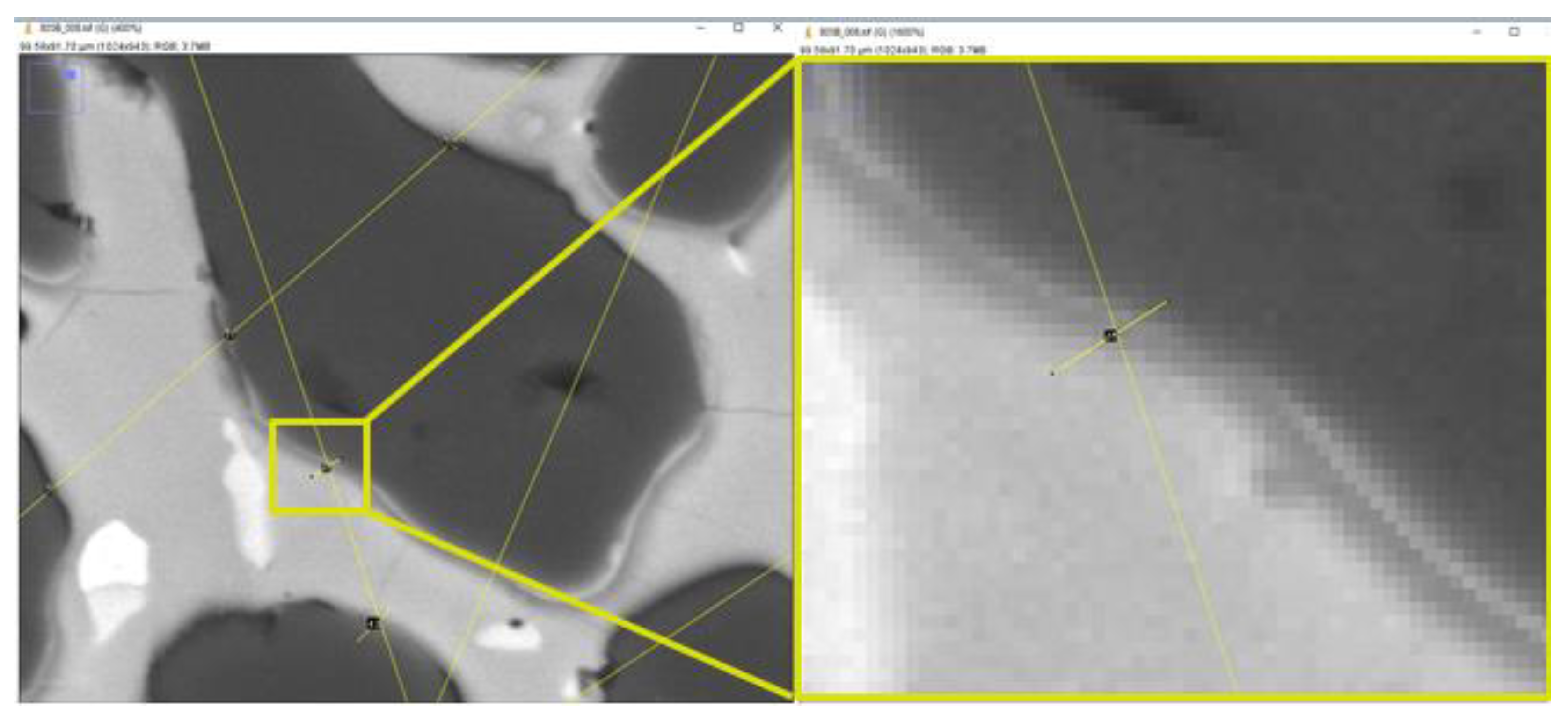

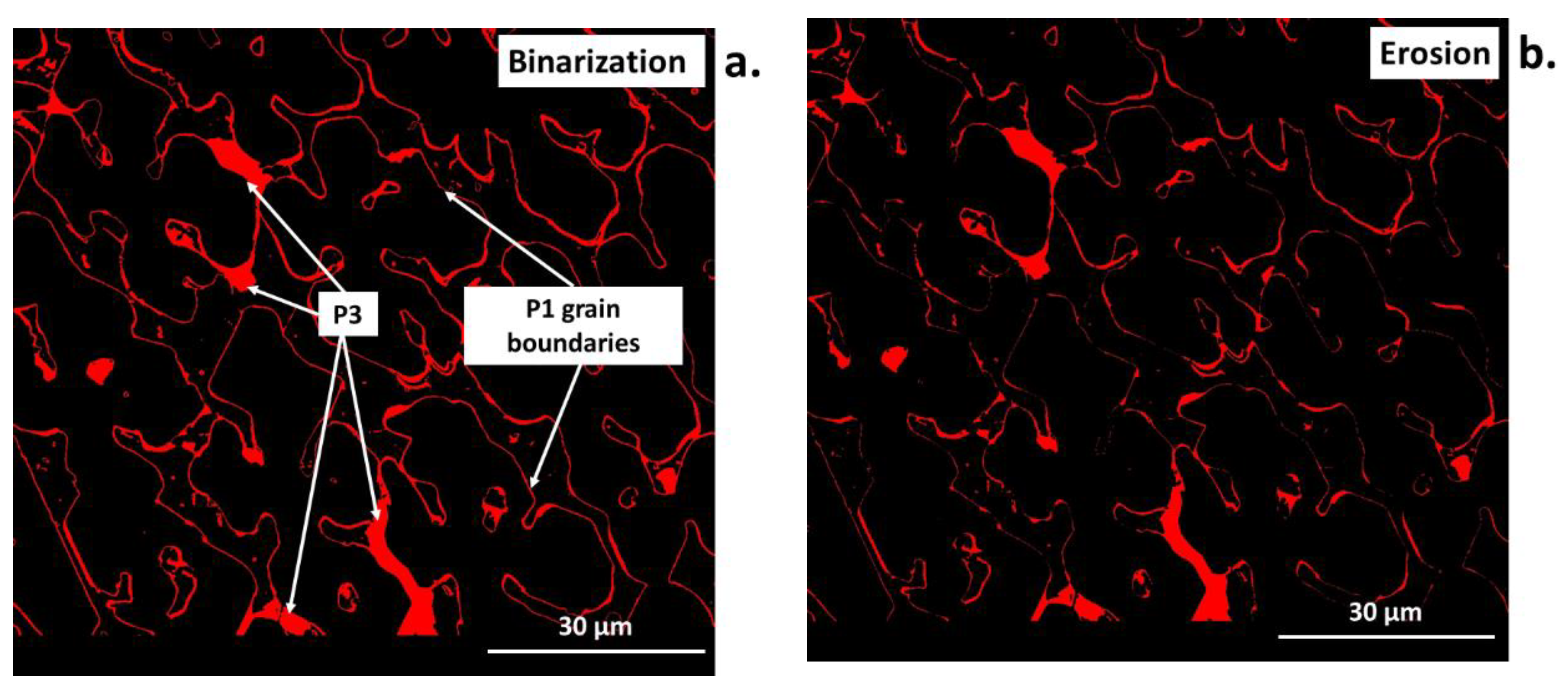

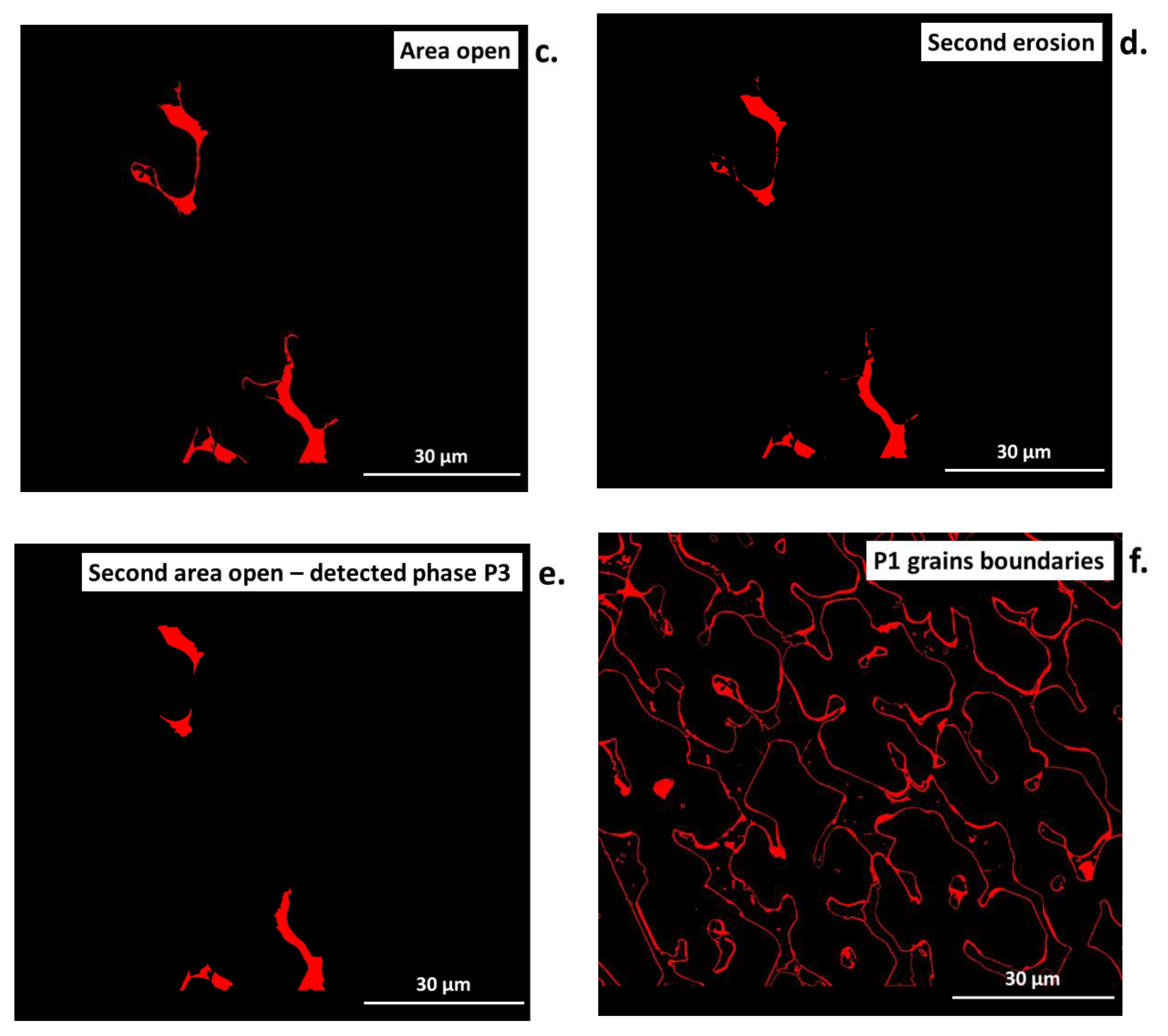

4. Operations in Computer Image Analysis

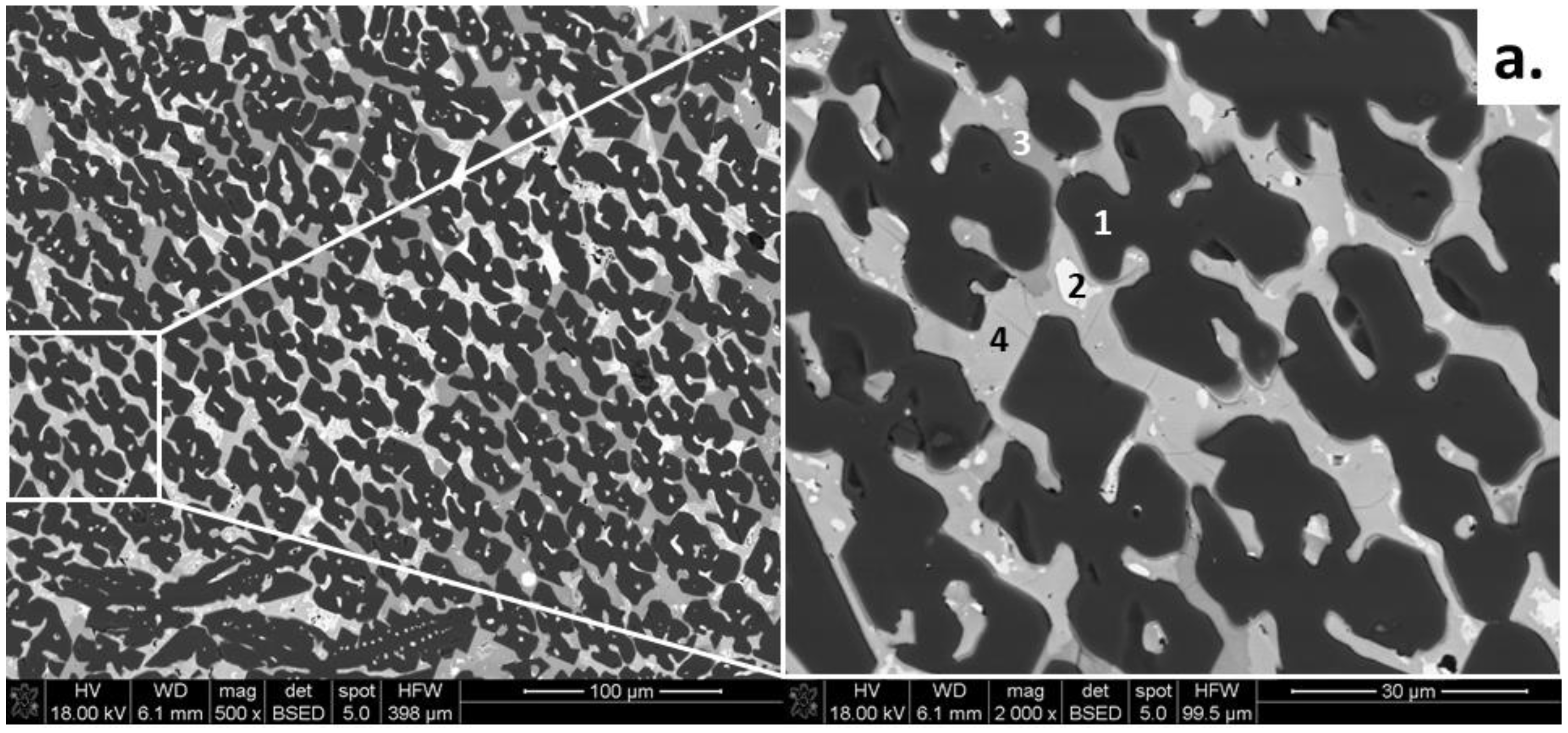

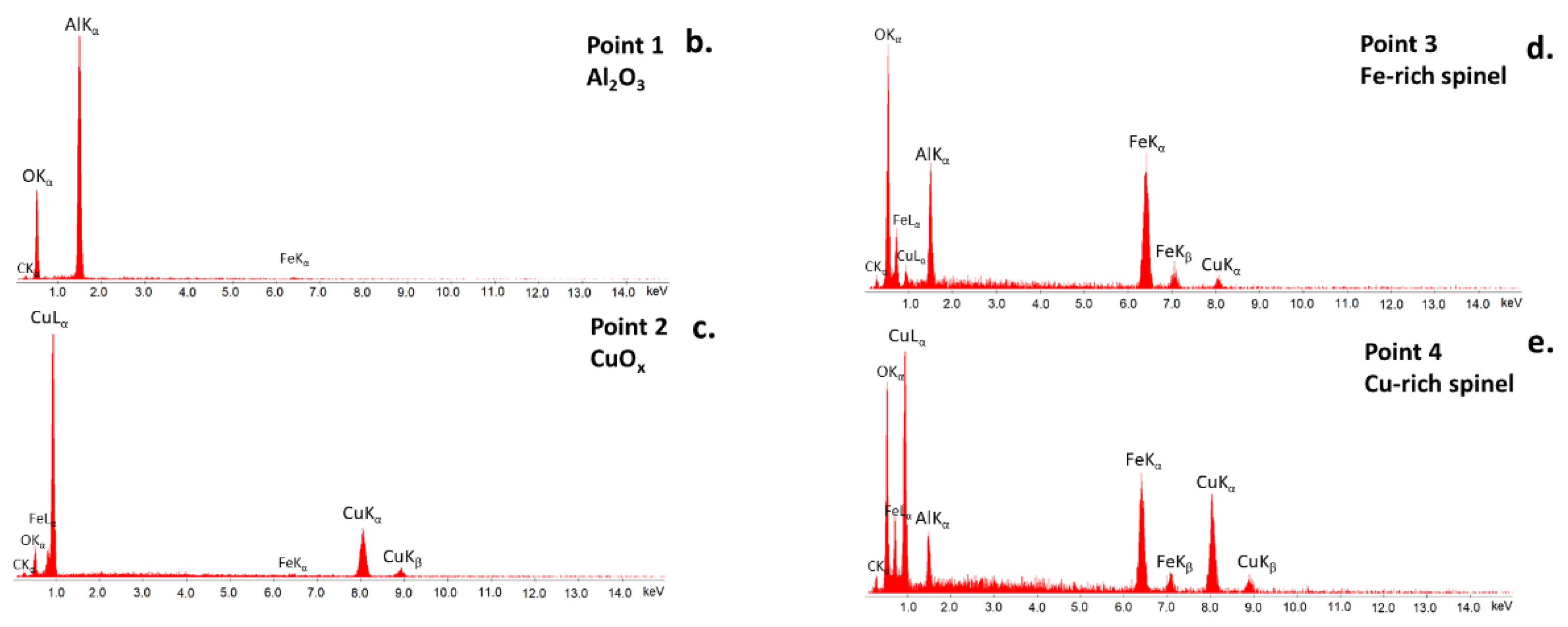

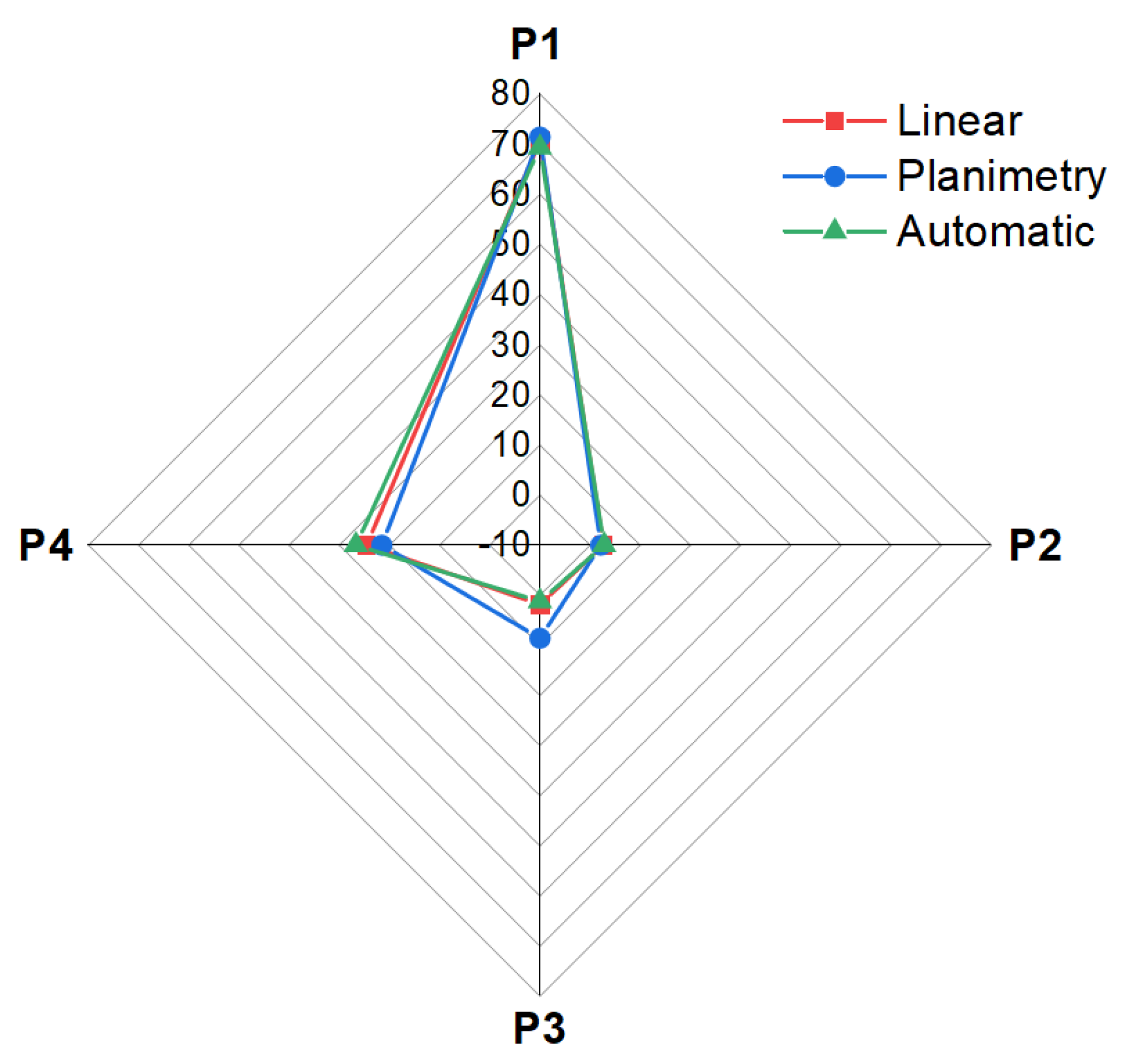

5. Results of the Quantification Analysis of the SEM Image

5.1. Traditional Stereology-Based Methods

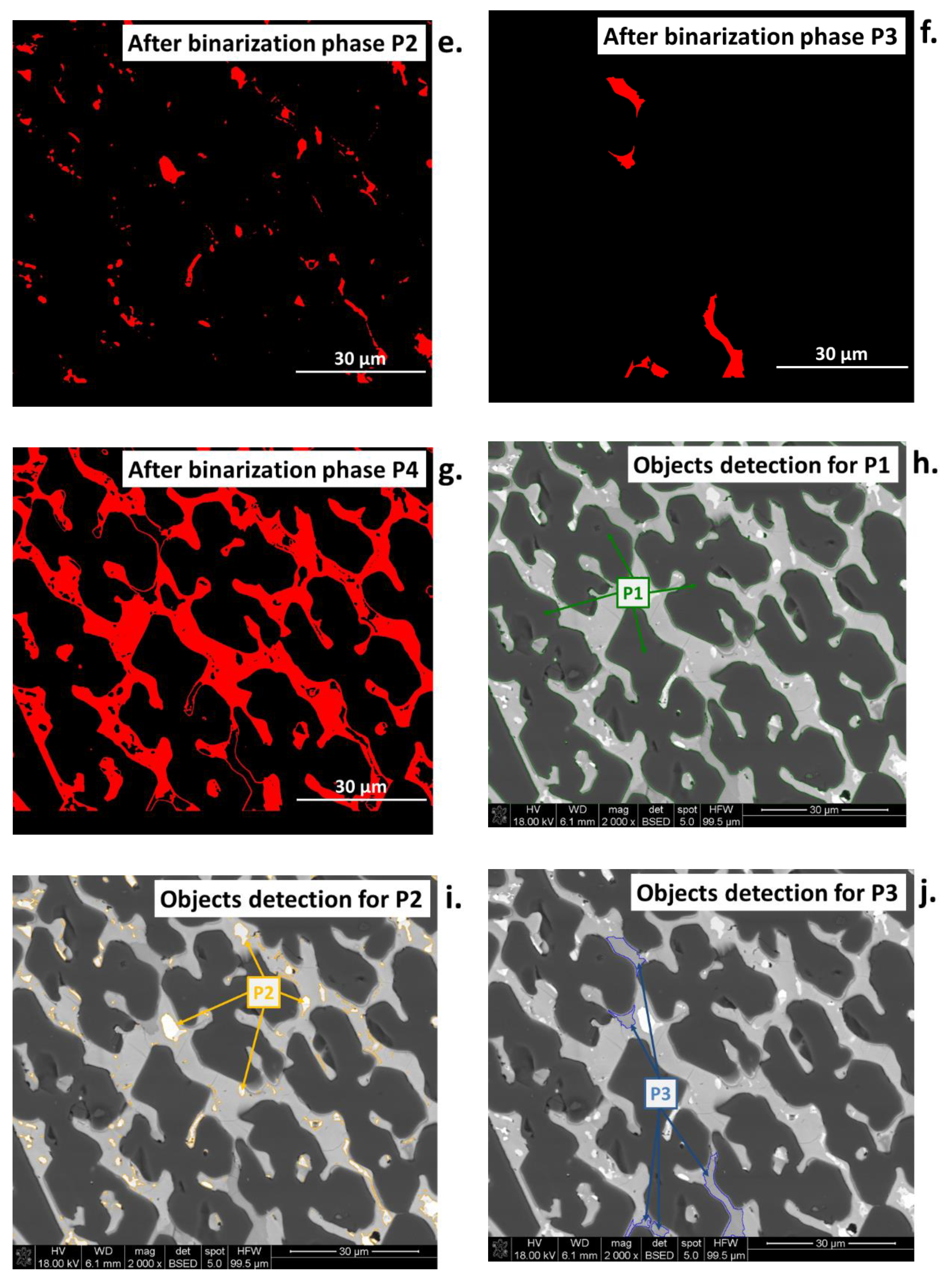

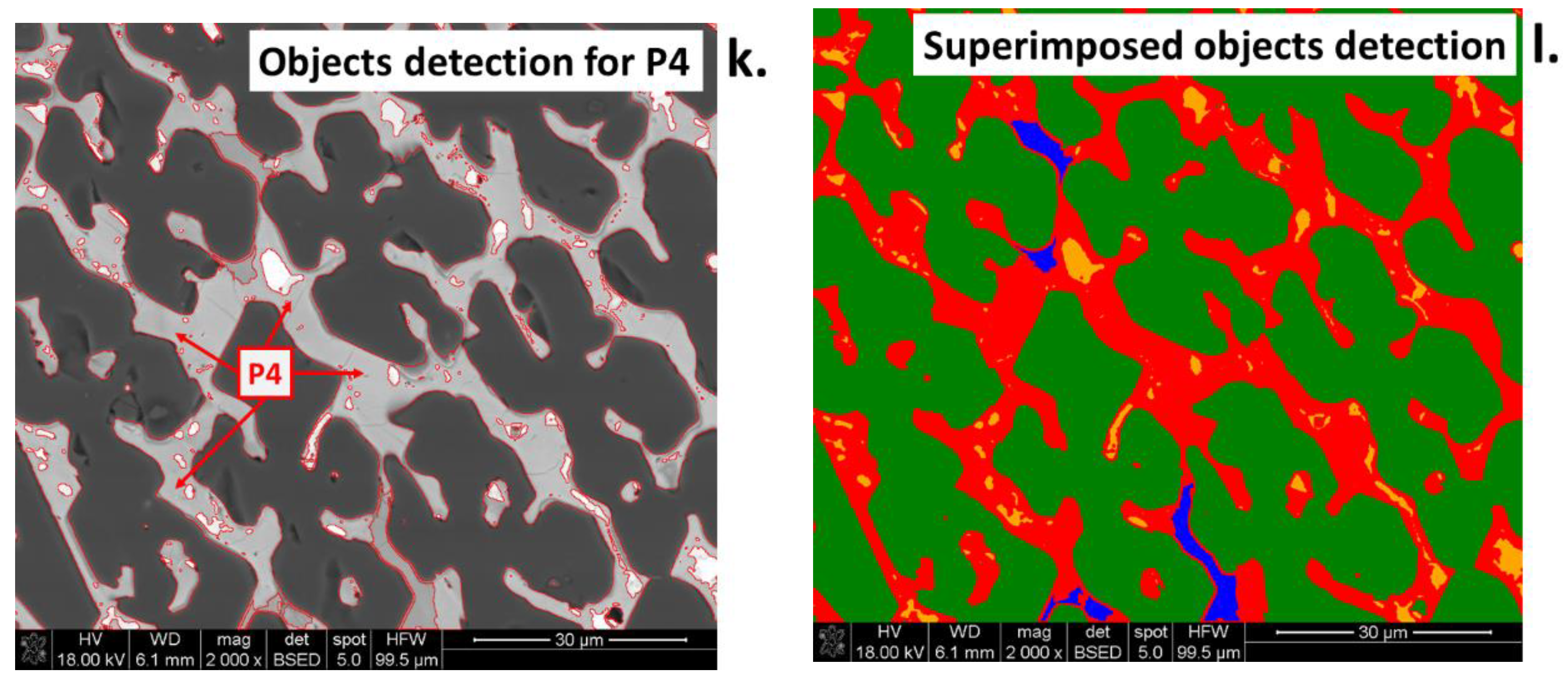

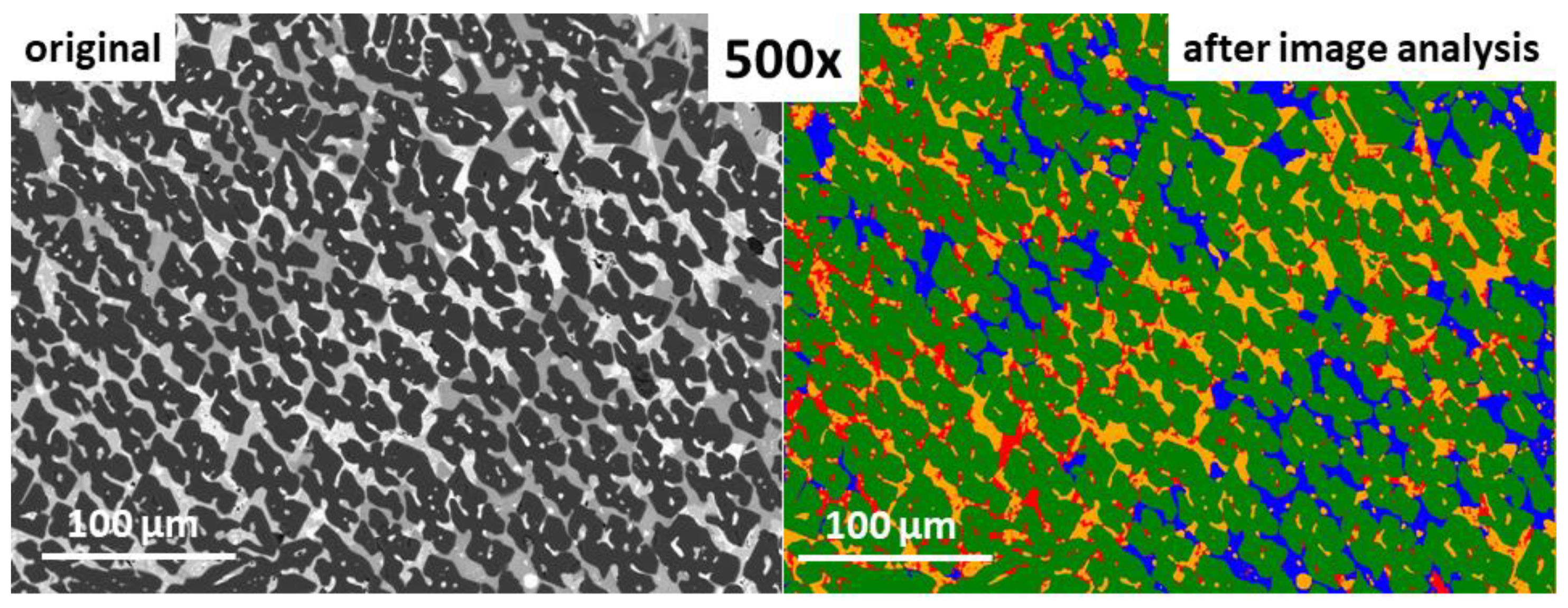

5.2. Automated Method

| Phase Name * | Amount of Phase at 2000×, % | Amount of Phase at 500×, % |
|---|---|---|
| P1 (green) | 66.3 ± 0.1 * | 69.3 ± 0.1 |
| P2 (orange) | 10.7 ± 0.4 | 2.8 ± 1.3 |
| P3 (blue) | 9.9 ± 0.2 | 1.2 ± 2.1 |
| P4 (red) | 13.1 ± 0.2 | 26.7 ± 0.1 |
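
As an illustration of how phase amounts such as those tabulated above can be obtained from a segmented image, the following minimal sketch counts the pixels assigned to each phase and converts the counts to area percentages. It is not the software used in this work: the function name `phase_fractions` and the small label map `demo` are hypothetical, and the segmentation step itself is assumed to have been done already.

```python
from collections import Counter


def phase_fractions(label_image):
    """Return {phase label: area percent} for a 2D list of phase labels."""
    # Count how many pixels carry each label.
    counts = Counter(label for row in label_image for label in row)
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


# Hypothetical 4 x 5 segmented image; each entry is the phase of one pixel.
demo = [
    ["P1", "P1", "P1", "P4", "P4"],
    ["P1", "P1", "P2", "P4", "P1"],
    ["P1", "P3", "P1", "P1", "P1"],
    ["P1", "P1", "P1", "P4", "P1"],
]

fractions = phase_fractions(demo)
for phase in sorted(fractions):
    print(f"{phase}: {fractions[phase]:.1f} %")
```

Because every pixel is assigned to exactly one phase, the percentages returned by this sketch always sum to 100.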

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Point | Phase | Cu, mol. % * | Fe, mol. % * | Al, mol. % * |
|---|---|---|---|---|
| 1 | Alumina Al2O3 | - | 0.9 | 47.0 |
| 2 | Copper oxide CuOx | 67.3 | 1.8 | 0.6 |
| 3 | Fe-rich spinel (Fe,Cu)(Fe,Al,Cu)2O4 | 2.6 | 31.9 | 13.0 |
| 4 | Cu-rich spinel (Cu,Fe)(Cu,Fe,Al)2O4 | 30.7 | 19.4 | 7.7 |

| Name | Color Space Definition |
|---|---|
| RGB | Red, Green, and Blue |
| HSI | Hue, Saturation, and Intensity |
| HSV | Hue, Saturation, and Value |
| YUV | Luminance and Chrominance |
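
The relationship between the color spaces listed above can be illustrated with a short sketch that converts one RGB pixel into HSV, the intensity component of HSI, and the luminance component of YUV. The helper name `describe_pixel` is hypothetical; the HSV conversion relies on the Python standard library module `colorsys`, and the luminance weights are assumed to be the common ITU-R BT.601 coefficients, which may differ from those of a particular image analysis package.

```python
import colorsys


def describe_pixel(r, g, b):
    """Express one RGB pixel (channels scaled to 0..1) in other color spaces."""
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    # HSI intensity: plain average of the three channels.
    intensity = (r + g + b) / 3.0
    # YUV luminance with BT.601 weights (an assumption, see the text above).
    luminance = 0.299 * r + 0.587 * g + 0.114 * b
    return {
        "hsv": (hue, saturation, value),
        "intensity": intensity,
        "luminance": luminance,
    }


pure_red = describe_pixel(1.0, 0.0, 0.0)
print(pure_red)
```

For a grayscale SEM image the three RGB channels are equal, so saturation is zero and value, intensity, and luminance coincide; the differences between the color spaces matter only after pseudo-coloring of the phases.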

| Phase Name | Phase Amount by Linear Analysis, % | Phase Amount by Planimetry, % |
|---|---|---|
| P1 (darkest) | 70.6 | 71.4 |
| P2 (lightest) | 2.7 | 2.1 |
| P3 (dark grey) | 1.9 | 8.6 |
| P4 (light grey) | 24.6 | 21.6 |
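
The principle of linear analysis can be sketched in a few lines: test lines are drawn across the image, each line is split into chords lying within a single phase, and the share of the total line length covered by a phase is taken as its amount. The sketch below is an assumption-laden illustration, not the manual procedure applied in this work: the names `chords` and `lineal_fractions`, the label map `demo`, and the choice of horizontal test lines given by row indices are all hypothetical.

```python
from itertools import groupby


def chords(line):
    """Split one test line into (phase, chord length in pixels) runs."""
    return [(phase, sum(1 for _ in run)) for phase, run in groupby(line)]


def lineal_fractions(label_image, rows):
    """Percent of the total test-line length that falls within each phase."""
    totals = {}
    length = 0
    for r in rows:
        for phase, n in chords(label_image[r]):
            totals[phase] = totals.get(phase, 0) + n
            length += n
    return {phase: 100.0 * n / length for phase, n in totals.items()}


# Hypothetical segmented image; rows 0 and 2 serve as test lines.
demo = [
    ["P1", "P1", "P1", "P4", "P4"],
    ["P1", "P1", "P2", "P4", "P1"],
    ["P1", "P3", "P1", "P1", "P1"],
    ["P1", "P1", "P1", "P4", "P1"],
]

line_result = lineal_fractions(demo, rows=[0, 2])
print(chords(demo[2]))
print(line_result)
```

In this example the two test lines never cross P2, so that phase is missing from the result. A sparse set of lines can therefore misestimate minor phases, which is one plausible reason why the two traditional methods may disagree for a phase present in small amounts.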

| Parameter | Description |
|---|---|
| Pixel Count | Number of pixels making up the object |
| Height | The difference between an object’s highest Y coordinate and its lowest Y coordinate |
| Width | The difference between an object’s rightmost X coordinate and its leftmost X coordinate |
| Centroid | The average position of all pixels in an object expressed as a pair of x, y coordinates (i.e., the center of mass of the object) |
| Major Axis | Angle, in radians, between the X-axis and the principal axis of inertia. This attribute gives the main orientation of the object relative to the X-axis. |
| BR Fill Ratio | The ratio between the area of an object and the area of its bounding rectangle. The bounding rectangle has the same orientation as the X, Y coordinate system of the image. |
| Perimeter | An estimate of the object perimeter based on the number of 4-connected neighboring pixels along the object boundary |
| Crofton Perimeter | An estimate of the object perimeter based on a more complex analysis than 4-connectivity |
| Compactness | An object attribute equal to 16·Area/Perimeter² |
| Bounding Rectangle To Perimeter | The ratio between the perimeter of an object and the perimeter of its bounding rectangle, where the latter is oriented along the X and Y axes. The perimeter measure used for this ratio is Perimeter, as described above. |
| Number of Holes | The number of holes in an object. A hole is one or more connected background pixels completely contained within an object. |
| Area | Object area |
| Elongation | The absolute value of the difference between the inertias of the major and minor axes, divided by the sum of these inertias. The minor axis is defined as the axis perpendicular to the major axis. |
| Circularity | For a given object this attribute is equal to: |
| Intercepts | Number of transitions from background to object in the 0°, 45°, 90°, and 135° directions |
| Equivalent Diameter | The diameter of a circle whose area is equal to the area of the object |
| Convexity | The area of the object divided by the area of its convex hull |
| Perimeter Variation | The sum of the changes in direction between the boundary pixels, where a change of 45 degrees equals 1, a change of 90 degrees equals 2, and a change of 135 degrees equals 3 |
| Convex Min Angle | The minimum of the angles, in radians, formed by adjacent pairs of line segments comprising a polygonal object boundary |
| Symmetry Mean Difference | The average of the absolute values of the difference in length between the centroid and the two opposite boundary points of the object |
| Convex Area | The convex hull area of the object |
| Convex Perimeter | Perimeter of the object’s convex hull using the Perimeter measure |
| Holes Area | A vector containing the areas of the holes in the object |
| Holes Total Area | The total area of the holes in the object |
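
Several of the parameters defined above follow directly from the pixel coordinates of an object. The sketch below computes a few of them for a binary mask. It is only an illustration under stated assumptions: the function name `describe_object` and the test mask are hypothetical, and Perimeter is approximated here as the number of pixel edges facing the background (4-connectivity), which may differ from the estimate used by a given image analysis package.

```python
import math


def describe_object(mask):
    """Compute selected shape parameters of the single object in a binary mask."""
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    occupied = set(pixels)
    area = len(pixels)  # Pixel Count, also used as Area
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    # Assumed perimeter estimate: count pixel edges that face the background.
    perimeter = sum(
        (x + dx, y + dy) not in occupied
        for x, y in pixels
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )
    return {
        "pixel_count": area,
        "height": max(ys) - min(ys),  # difference of extreme Y coordinates
        "width": max(xs) - min(xs),   # difference of extreme X coordinates
        "centroid": (sum(xs) / area, sum(ys) / area),
        "perimeter": perimeter,
        "compactness": 16.0 * area / perimeter ** 2,
        "equivalent_diameter": 2.0 * math.sqrt(area / math.pi),
    }


# Hypothetical object: a 3 x 3 pixel square inside a 5 x 5 image.
square = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

params = describe_object(square)
print(params)
```

With this perimeter estimate, the factor 16 in the Compactness definition normalizes the parameter to 1 for a square, since a square of side a has an area of a² and a perimeter of 4a.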

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jastrzębska, I.; Piwowarczyk, A. Traditional vs. Automated Computer Image Analysis—A Comparative Assessment of Use for Analysis of Digital SEM Images of High-Temperature Ceramic Material. Materials 2023, 16, 812. https://doi.org/10.3390/ma16020812
