1. Introduction

As a critical component of renewable energy systems, photovoltaic (PV) power generation plays an increasingly vital role in the global transformation of energy infrastructure [1]. Nevertheless, PV power output exhibits significant variability and instability because it depends on meteorological factors such as air temperature, relative humidity, wind speed, wind direction, and solar irradiance [2]. These fluctuations complicate the maintenance of grid stability, the formulation of dispatch strategies, and the operation of electricity markets [3]. Consequently, high-accuracy forecasting of PV power generation enables more efficient allocation of grid resources, lowers peak-shaving costs, and improves renewable energy absorption capacity, which has made it a pivotal research topic in the energy sector [4].

Traditional methods for photovoltaic power prediction are generally classified into two main streams: physical model-based approaches and statistical data-driven techniques [5]. Physical approaches build mathematical representations by combining numerical weather prediction (NWP) information with the physical characteristics of PV systems. Because this methodology does not require historical operational records, it is especially advantageous for newly commissioned power stations [6]. However, its predictive accuracy depends heavily on the precision of the NWP inputs and the completeness of the underlying physical representations [7]. In contrast, statistical techniques rely on historical power generation data to establish predictive models, employing conventional time-series algorithms such as the Autoregressive Integrated Moving Average (ARIMA) [8] and Autoregressive Moving Average (ARMA) [9] models. Despite their widespread application, these conventional methods have notable limitations in modeling nonlinear relationships and processing high-dimensional datasets. Specifically, physical models are highly sensitive to the quality and resolution of meteorological data, whereas statistical approaches often fail to represent complex dynamic behavior under rapidly changing weather conditions. These shortcomings have accelerated the adoption of machine learning in PV forecasting, promoting a transition from traditional modeling paradigms toward data-driven analytical frameworks [10].

The proliferation of big data technologies and the growing availability of high-quality datasets have led to increasing use of machine learning techniques in photovoltaic power forecasting [11]. Methods including Support Vector Machines (SVM) [12], Random Forest (RF) [13], and gradient boosting tree variants such as XGBoost [14] and LightGBM [15] can automatically capture intricate nonlinear interactions and coupled multivariate characteristics from historical records, thereby substantially improving prediction accuracy [16]. These data-driven algorithms have clear advantages over conventional approaches, particularly in recognizing complex patterns and processing high-dimensional datasets, and they address many of the constraints of traditional forecasting frameworks. Through adaptive learning, machine learning models uncover latent data patterns while maintaining considerable robustness and generalization performance [17]. Nonetheless, as prediction scenarios grow more complex and data volumes continue to expand, these methods increasingly reveal limitations in modeling long-term temporal dependencies and capturing spatiotemporal relationships [18]. This gap has accelerated the development and deployment of more advanced deep learning architectures in the PV forecasting domain [19].

Deep learning architectures have driven substantial advances in photovoltaic power forecasting, largely owing to their capabilities in automated feature representation and sequential pattern modeling [20]. In particular, recurrent neural network variants such as long short-term memory (LSTM) [21] and the gated recurrent unit (GRU) [22] excel at modeling temporal relationships across extended time horizons and have proven effective in ultra-short-term prediction scenarios [23]. Their gating mechanisms dynamically regulate information flow, selectively preserving relevant features while discarding redundant information, and thereby alleviate the vanishing and exploding gradient problems that affect conventional recurrent networks [6].
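For reference, one common formulation of the GRU gating described above is written out below. The notation is an assumption and is not taken from this paper: $x_t$ is the input at time $t$, $h_t$ the hidden state, $\sigma$ the logistic sigmoid, $\odot$ the element-wise product, and $W$, $U$, $b$ are learned parameters. References differ on whether $z_t$ or $1 - z_t$ multiplies the previous state.

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh\big(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\big) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

In this convention, when the update gate $z_t$ is close to 0 the previous state $h_{t-1}$ passes through almost unchanged, which is the path that lets information and gradients persist over many time steps.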

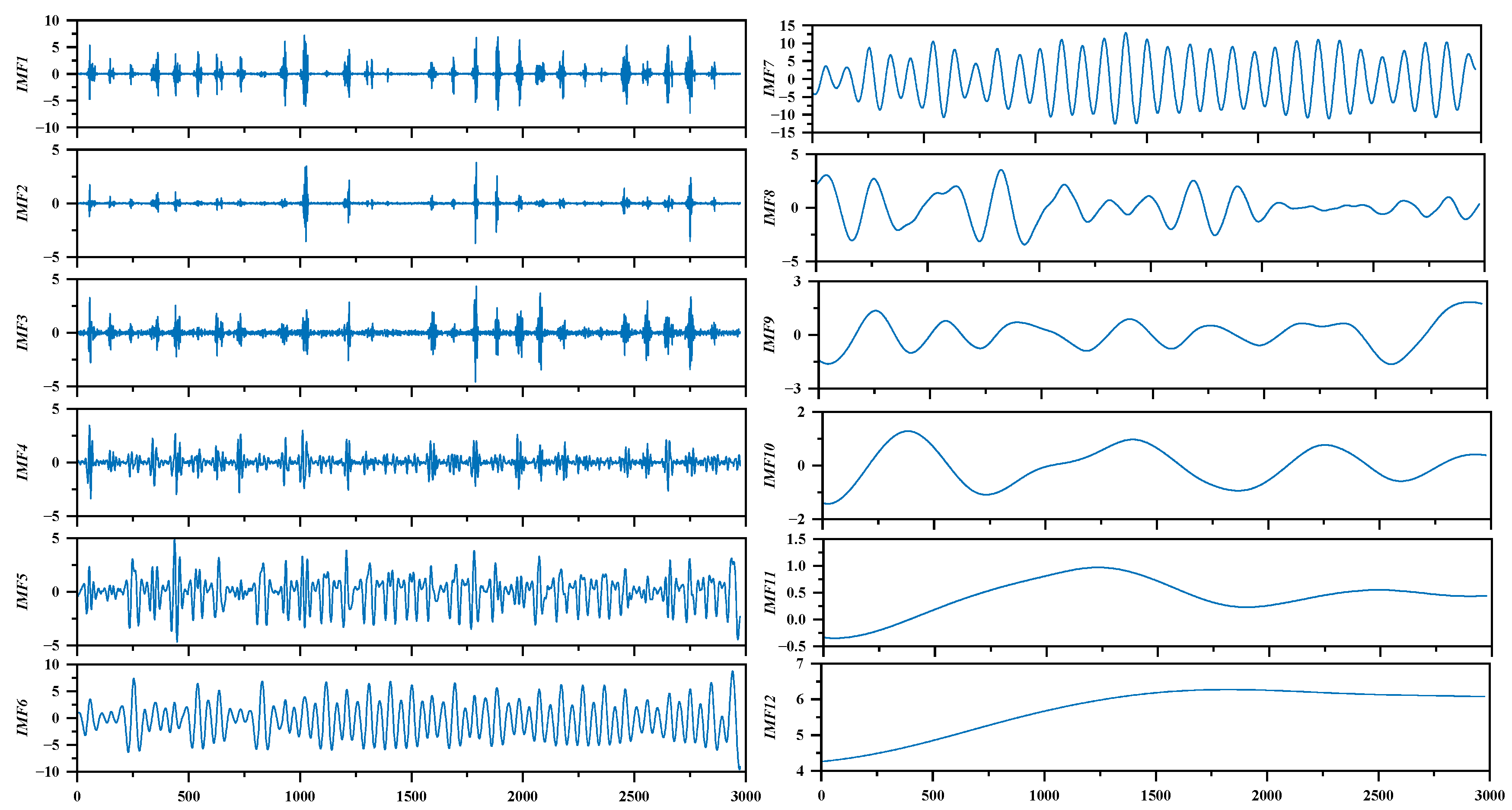

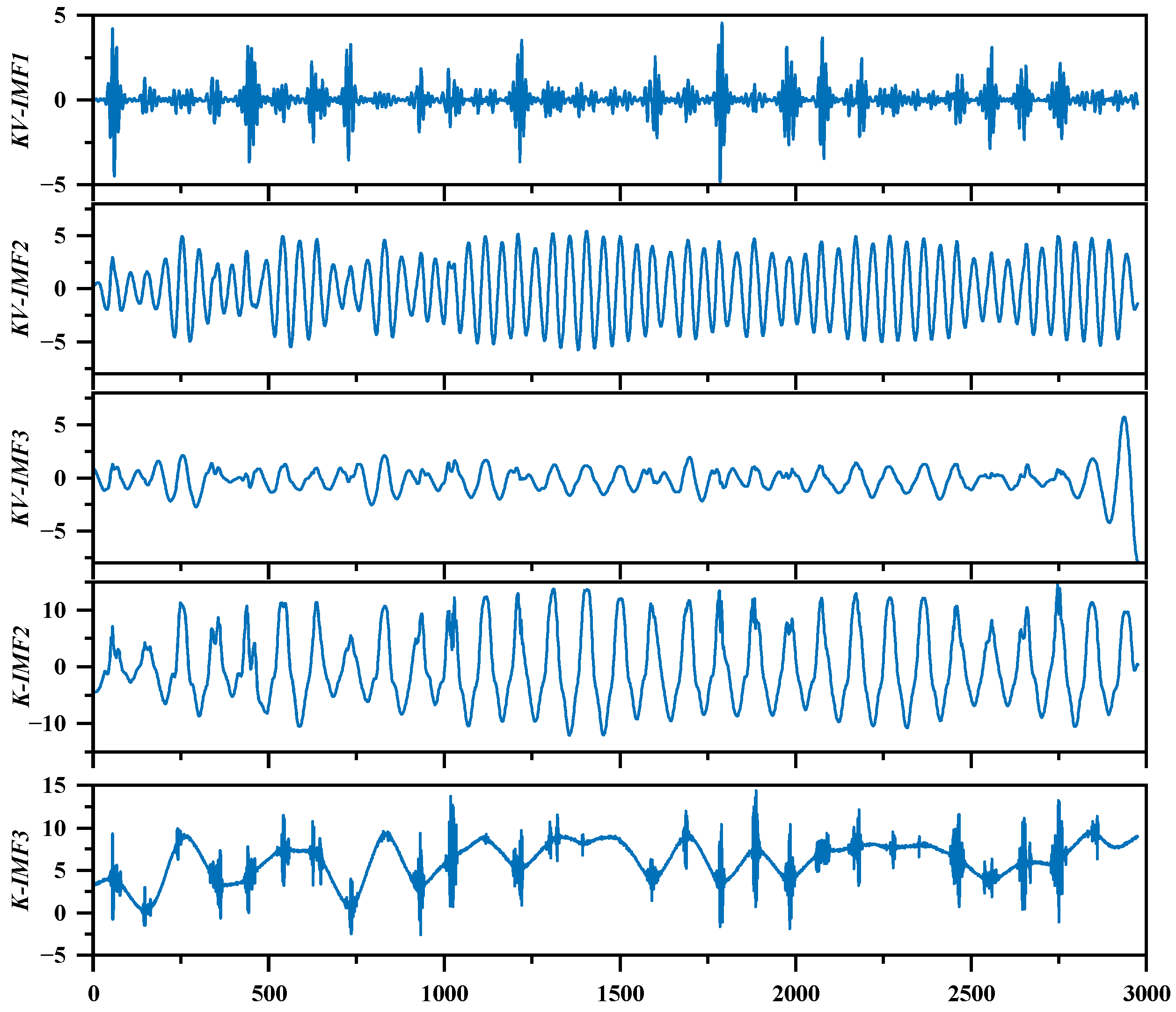

In pursuit of augmented predictive capabilities in deep learning frameworks, numerous optimization and integration methodologies have been introduced. One prominent strategy involves the coupling of variational mode decomposition (VMD) with gated recurrent units refined by metaheuristic optimization techniques. This hybrid approach dissects raw photovoltaic data into multiple intrinsic mode functions (IMFs) spanning distinct frequency bands, effectively attenuating non-stationary characteristics in the original sequences. Concurrently, the implementation of intelligent optimization algorithms for comprehensive hyperparameter configuration significantly enhances the development of more resilient forecasting architectures [

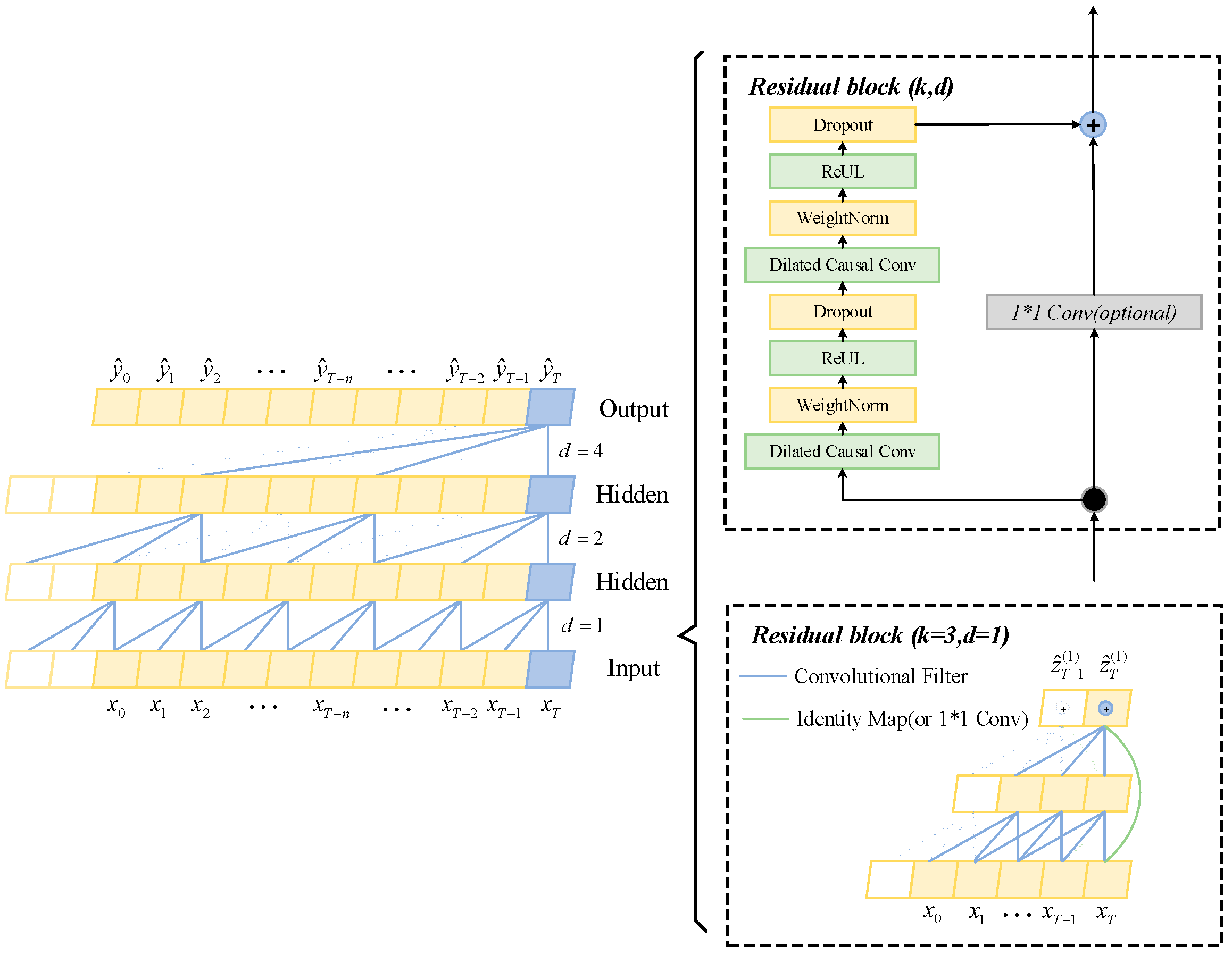

24]. As deep learning methodologies continue to evolve, composite structures incorporating temporal convolutional networks (TCN) [

25], bidirectional gated recurrent units (BiGRU) [

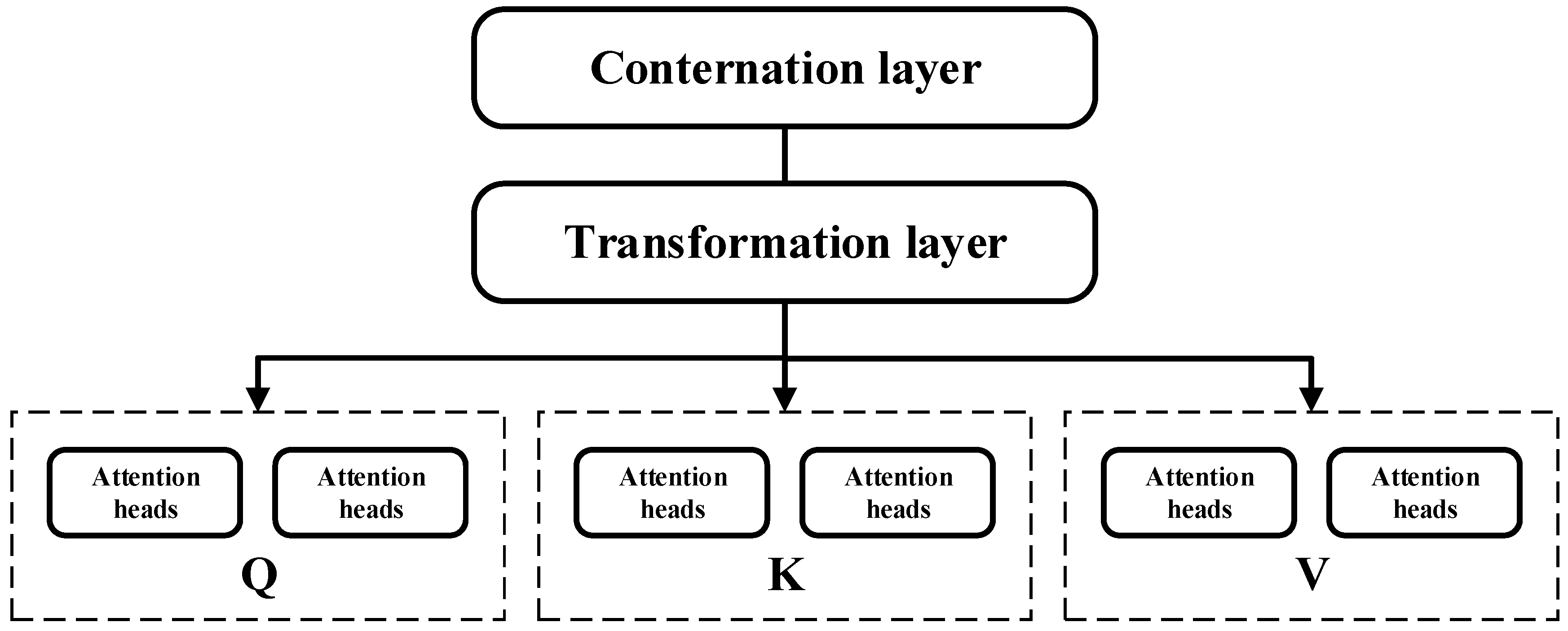

26], and attention mechanisms have manifested pronounced superiority. The prototypical TCN-BiGRU-Attention framework employs a staged processing pipeline: initially, the TCN component utilizes dilated convolutional operations to derive multi-scale temporal attributes from input sequences; subsequently, the BiGRU module models both forward and backward temporal relationships within the extracted features; ultimately, the attention component dynamically prioritizes salient temporal information through adaptive weighting of BiGRU outputs, synthesizing the final forecast [

27]. This synergistic architecture capitalizes on the extended temporal dependency modeling of TCN, the bidirectional context encoding of BiGRU, and the discriminative feature emphasis enabled by attention, collectively elevating both predictive precision and operational stability [

28].
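Two building blocks of the staged pipeline described above can be illustrated in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the network used in this paper: the function names are hypothetical, the BiGRU stage is omitted, hidden states are treated as scalars, and the receptive-field formula assumes one convolution per dilated layer.

```python
import math


def dilated_causal_conv(x, kernel, dilation):
    """Causal 1-D convolution: y[t] = sum_k kernel[k] * x[t - k * dilation].

    Positions before the start of the sequence are treated as zero, so y[t]
    never depends on future samples.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked dilated layers (assumes one conv per layer)."""
    return 1 + (kernel_size - 1) * sum(dilations)


def attention_pool(states, scores):
    """Softmax-normalize the scores and return (weights, weighted sum of states)."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = sum(w * h for w, h in zip(weights, states))
    return weights, context
```

For example, `dilated_causal_conv([1, 2, 3, 4, 5], [1, 1], 2)` returns `[1.0, 2.0, 4.0, 6.0, 8.0]`, because each output adds the current sample to the one two steps earlier. With a kernel size of 2 and dilations 1, 2, and 4, `receptive_field` gives 8, which shows how doubling the dilation widens the temporal context without adding kernel weights.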

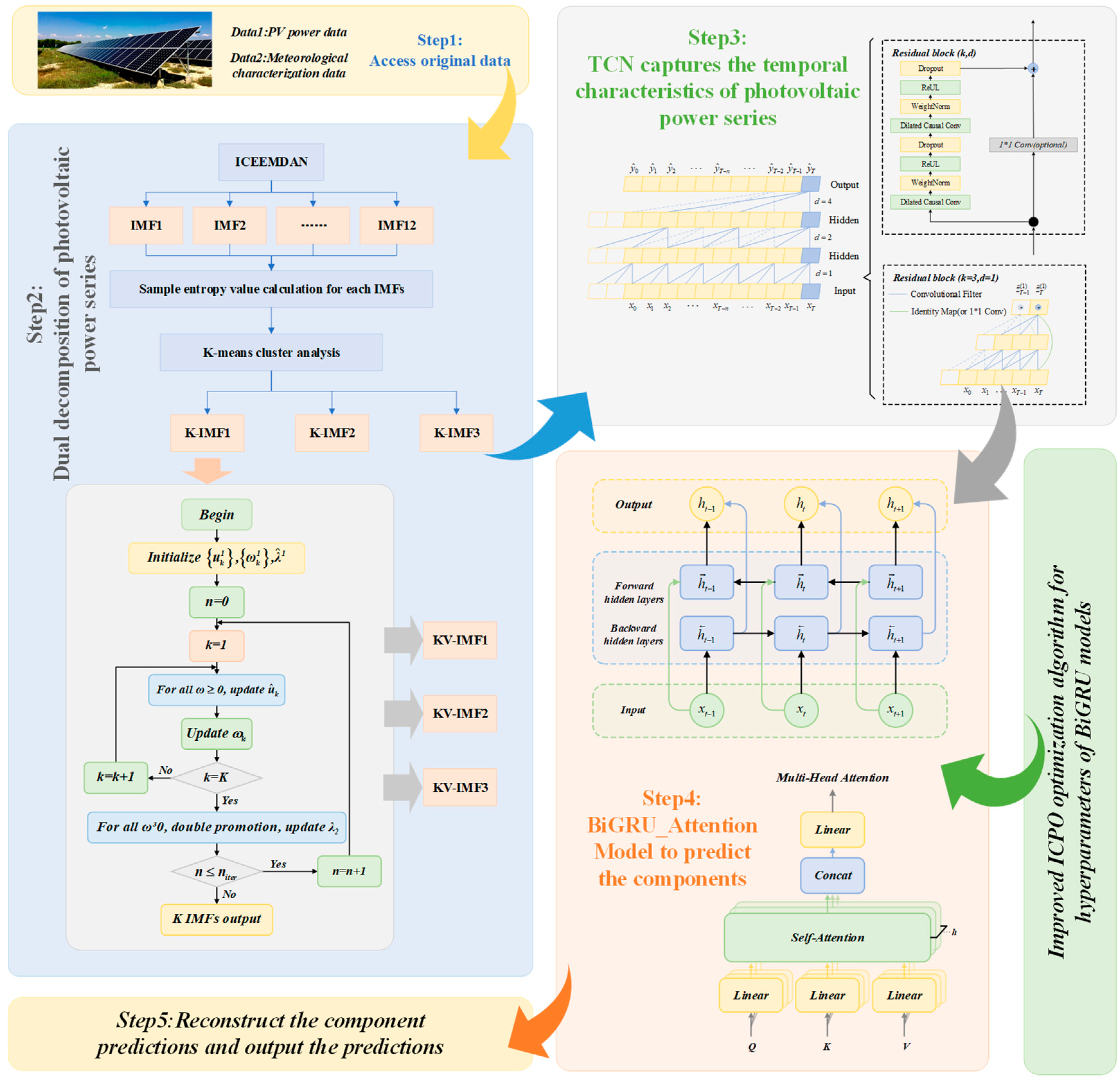

This paper presents a hybrid forecasting framework for short-term photovoltaic power prediction, built upon a dual-stage decomposition architecture. First, Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (ICEEMDAN) decomposes the original PV power sequence into its constituent components. The sample entropy of each component is then computed, and K-means clustering groups the components into high-, medium-, and low-frequency categories. The highest-frequency cluster undergoes a secondary decomposition via Variational Mode Decomposition (VMD) to further refine its characteristics. Each resulting subcomponent is predicted individually by a TCN_BiGRU_Attention network, and the final PV power forecast is obtained by reconstructing the result from all subsequence predictions. Experimental validation confirms the superior predictive accuracy of this integrated approach compared to conventional forecasting techniques. The principal contributions of this research are as follows:

A novel hybrid forecasting methodology is introduced, integrating ICEEMDAN, VMD, TCN, BiGRU, Attention mechanism, and ICPO optimization. The TCN_BiGRU_Attention composite architecture demonstrates enhanced capability in capturing long-range temporal dependencies within power sequences while leveraging attention mechanisms to accentuate critical temporal features, thereby effectively modeling complex nonlinear relationships inherent in PV power data.

A comprehensive feature extraction strategy is implemented through the synergistic application of ICEEMDAN, K-means clustering, and VMD techniques. This multi-layered decomposition approach facilitates thorough exploitation of data characteristics across different frequency domains, substantially improving the model’s predictive performance.

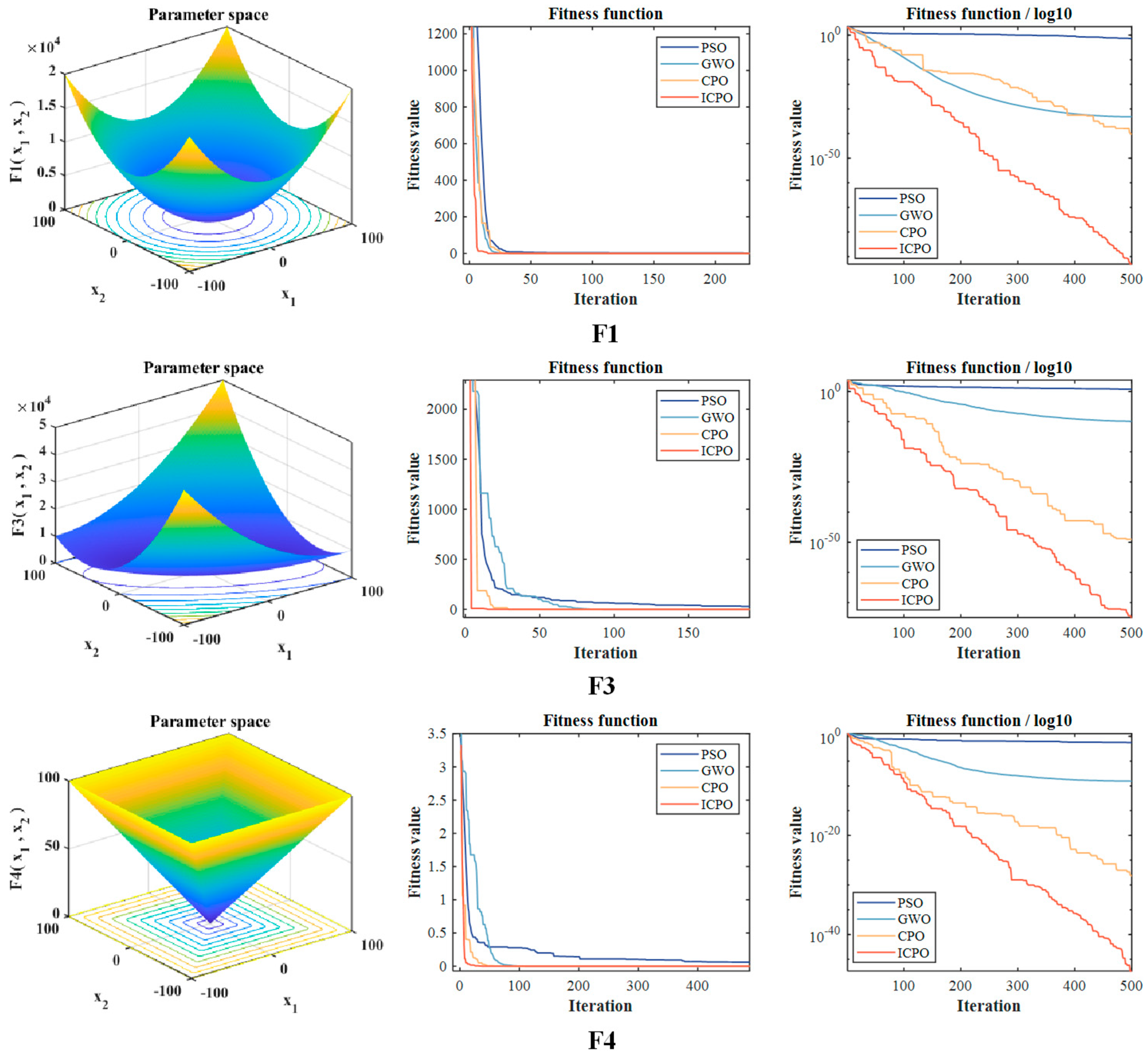

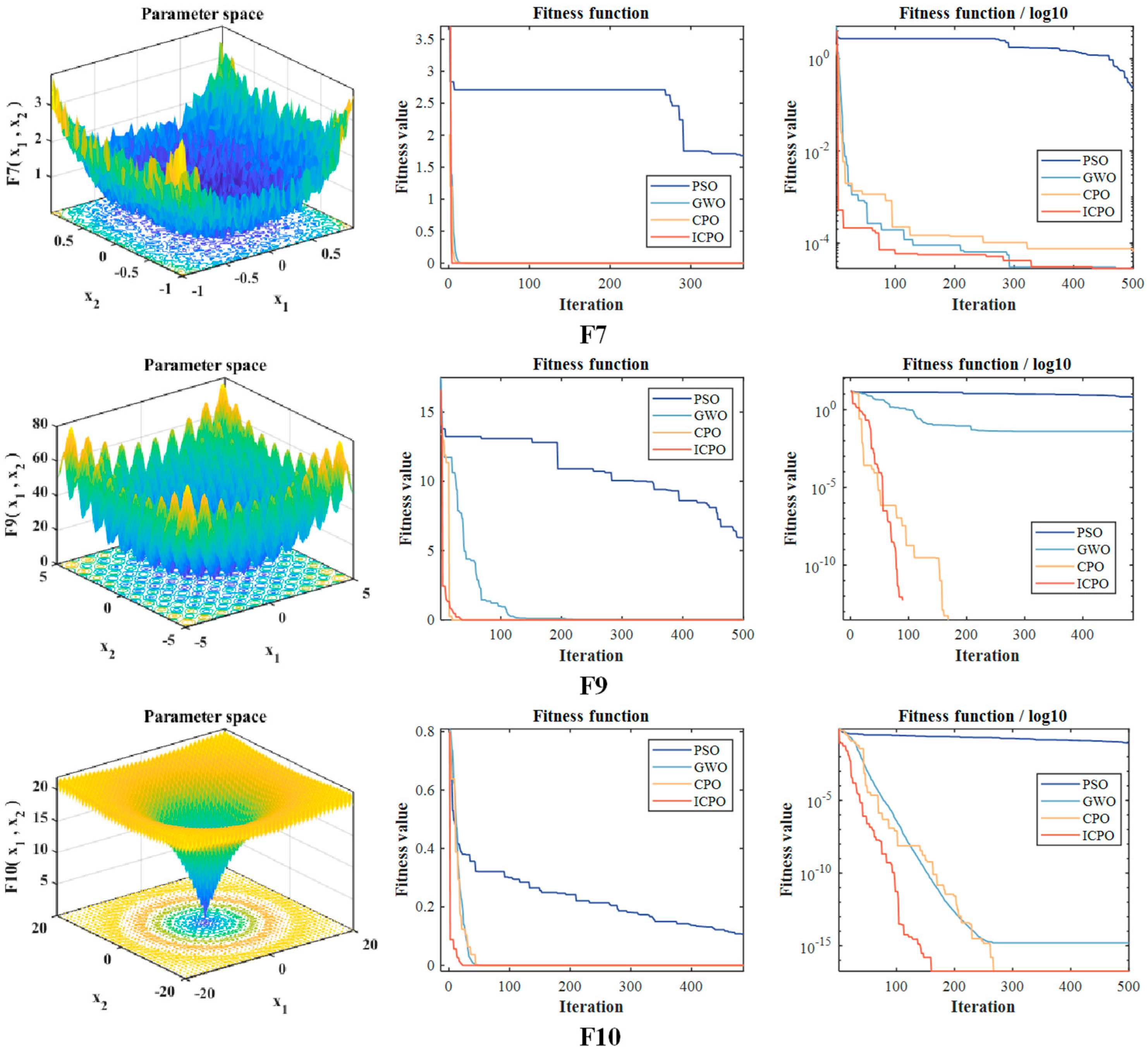

Significant enhancements to the Crested Porcupine Optimizer (CPO) are achieved through incorporating Chebyshev Chaotic Mapping, Triangular Wandering Strategy, and Lévy Flight mechanisms. These modifications promote superior initial population distribution within the search space, consequently accelerating the convergence rate of the TCN_BiGRU_Attention model during training.
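Two of the ingredients named in the last contribution can be sketched generically. The code below is a minimal illustration under stated assumptions, not the ICPO update rules of this paper, which are not given in this section: it uses the textbook Chebyshev map $x_{k+1} = \cos(n \arccos x_k)$ to spread an initial population over hypothetical hyperparameter bounds, and Mantegna's algorithm to draw a heavy-tailed Lévy step. The function names, the map order, the seed value, and the exponent `beta` are all illustrative choices, and the Triangular Wandering Strategy is not shown.

```python
import math
import random


def chebyshev_sequence(x0, order, length):
    """Iterate x_{k+1} = cos(order * arccos(x_k)); values stay in [-1, 1]."""
    seq = []
    x = x0
    for _ in range(length):
        x = math.cos(order * math.acos(x))
        x = max(-1.0, min(1.0, x))  # guard against rounding outside [-1, 1]
        seq.append(x)
    return seq


def chaotic_population(pop_size, lower, upper, order=4, x0=0.7):
    """Map a Chebyshev sequence onto per-dimension [lower, upper] bounds."""
    dim = len(lower)
    chaos = chebyshev_sequence(x0, order, pop_size * dim)
    population = []
    for i in range(pop_size):
        individual = []
        for j in range(dim):
            u = (chaos[i * dim + j] + 1.0) / 2.0  # rescale [-1, 1] to [0, 1]
            individual.append(lower[j] + u * (upper[j] - lower[j]))
        population.append(individual)
    return population


def levy_step(beta=1.5, rng=random):
    """Draw one Levy-distributed step length using Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)
```

A call such as `chaotic_population(5, [0.0, 10.0], [1.0, 100.0])` yields five two-dimensional candidates inside the given bounds. Seeds such as `x0 = 1.0` are fixed points of the map and should be avoided, since every generated value would then be identical.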

3. Flowchart of the Probabilistic Prediction Model

This paper introduces an integrated framework for PV power forecasting, which synergistically combines Variational Mode Decomposition (VMD) for sequence processing and an Improved CPO (ICPO) algorithm to enhance the TCN_BiGRU_Attention model’s efficacy. The role of ICPO is specifically dedicated to the hyperparameter tuning of the deep learning network, thereby refining its predictive performance.

Figure 3 depicts the overall structure of this proposed system, with its operational sequence detailed in the following steps:

Step 1: Decompose the PV power sequence using VMD to obtain multiple relatively smooth, less complex components, which improves the model’s ability to capture power generation features.

Step 2: Divide the dataset into a training set and a test set for input to the prediction model.

Step 3: Construct the TCN_BiGRU_Attention prediction model. Multiple TCN layers extract the temporal features of the PV power series, BiGRU captures the bidirectional information of the time series, and the attention mechanism weights the importance of the different temporal features.

Step 4: Optimize the hyperparameters of the TCN_BiGRU_Attention prediction model using the ICPO algorithm.

Step 5: Feed the test samples to the optimized TCN_BiGRU_Attention prediction model to predict the PV power.
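The control flow of the steps above can be sketched as follows. This is a hedged skeleton with deliberately simple stand-ins, all of them assumptions: a trailing moving average replaces VMD, a persistence forecast replaces the trained TCN_BiGRU_Attention model, the ICPO tuning of Step 4 is omitted, and the component forecasts are recombined by summation. Only the decompose, split, predict-per-component, and recombine structure is meant to carry over.

```python
def moving_average_decompose(series, window=3):
    """Stand-in for VMD: split a series into a smooth trend and a residual.

    The two components sum back to the original series exactly.
    """
    trend = []
    for t in range(len(series)):
        start = max(0, t - window + 1)
        chunk = series[start:t + 1]
        trend.append(sum(chunk) / len(chunk))
    residual = [s - tr for s, tr in zip(series, trend)]
    return [trend, residual]


def chronological_split(series, train_ratio=0.8):
    """Split in time order, so the test set always follows the training set."""
    cut = int(len(series) * train_ratio)
    return series[:cut], series[cut:]


def persistence_forecast(train, horizon):
    """Stand-in for the trained network: repeat the last training value."""
    return [train[-1]] * horizon


def forecast_by_components(series, train_ratio=0.8):
    """Forecast each component separately, then sum the component forecasts."""
    total = None
    for component in moving_average_decompose(series):
        train, test = chronological_split(component, train_ratio)
        pred = persistence_forecast(train, len(test))
        total = pred if total is None else [a + b for a, b in zip(total, pred)]
    return total
```

Running `forecast_by_components(list(range(10)))` returns `[7.0, 7.0]`: the last training value of the trend (6.0) plus the last training value of the residual (1.0), repeated over the two test steps. A chronological rather than random split is used here because shuffling a time series would let the model see samples that come after the ones it is asked to predict.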

6. Conclusions

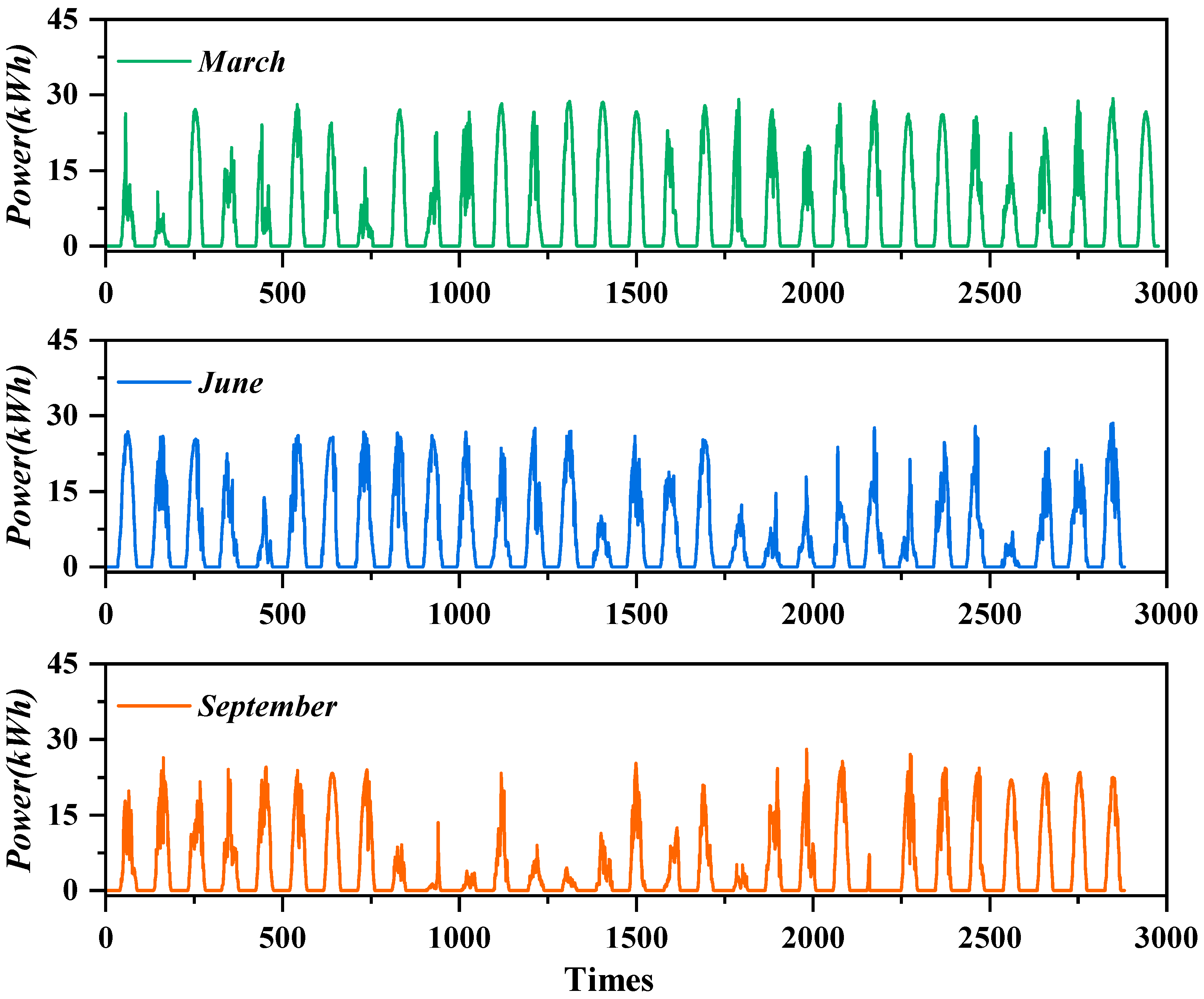

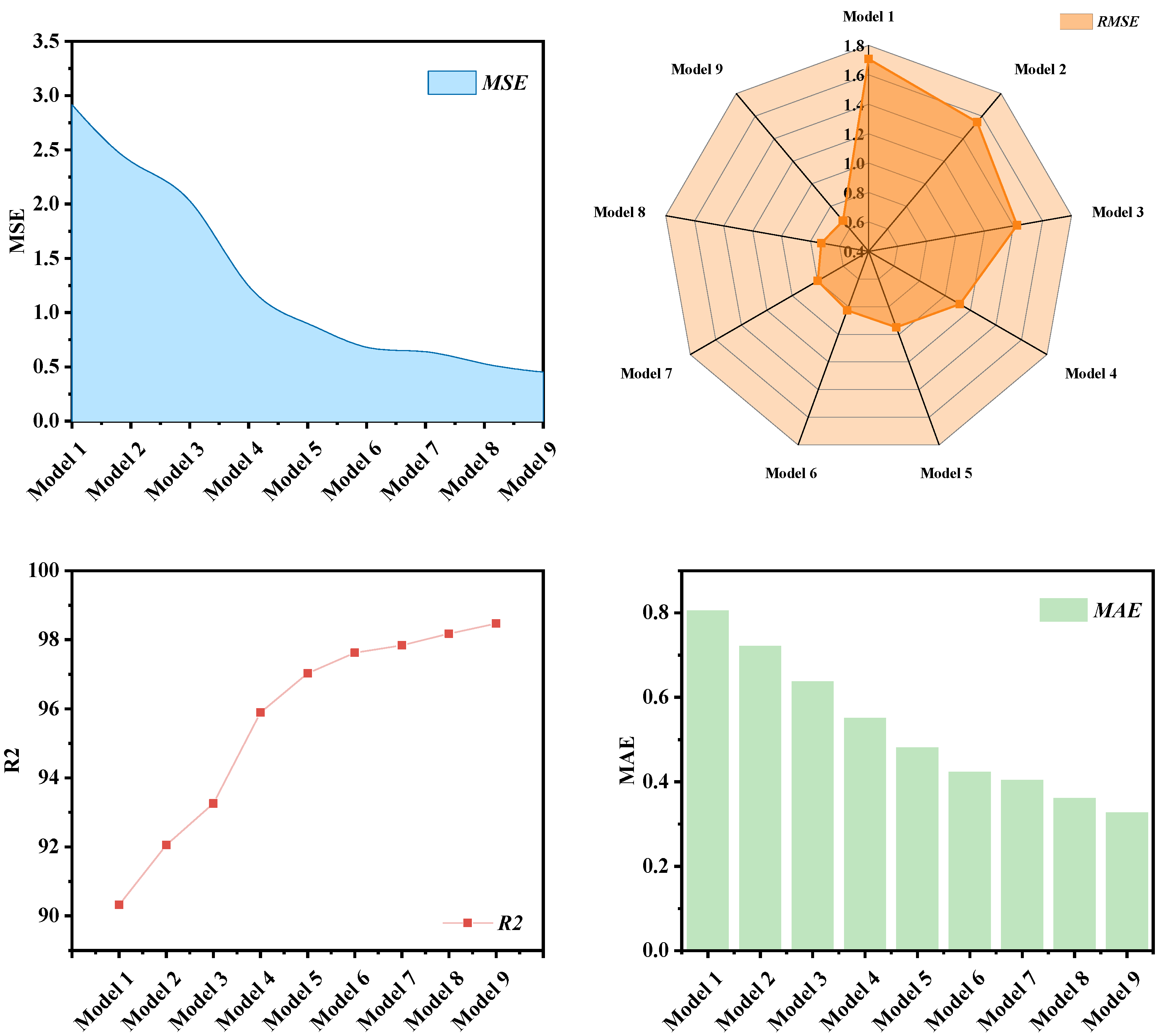

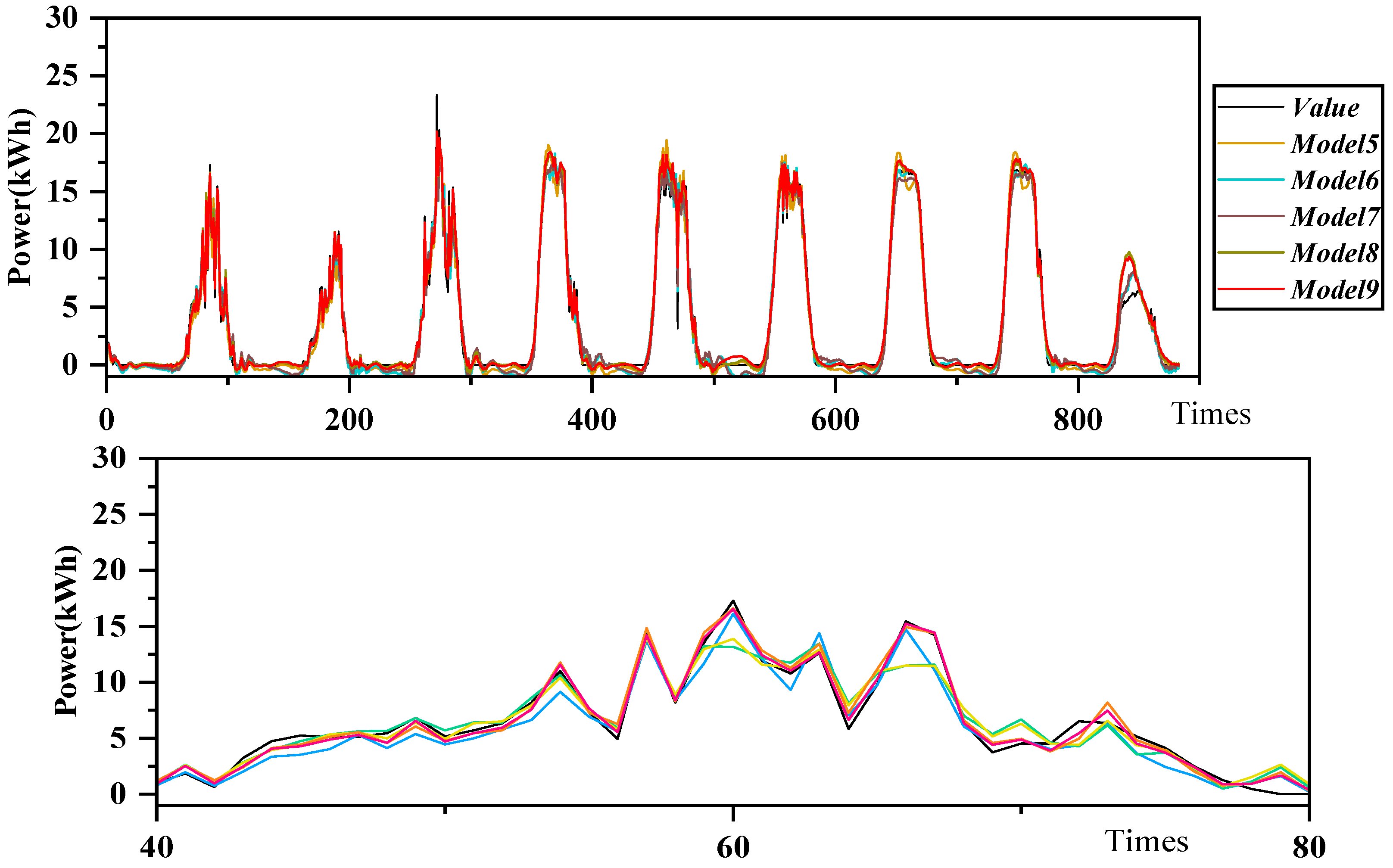

This paper proposes a hybrid learning model that combines dual decomposition with an optimized prediction network. The PV power sequence is first decomposed using ICEEMDAN; K-means cluster analysis then groups the decomposed components into high-frequency, mid-frequency, and low-frequency components; VMD further decomposes the high-frequency component; and finally the decomposed components, together with the feature data, are input into the TCN_BiGRU_Attention prediction model. To improve the accuracy of the TCN_BiGRU_Attention model, ICPO is used to optimize its hyperparameters. The study takes March data from a photovoltaic power station in northern China as the main example and uses January and July data from this region to test generalization ability. The results are summarized as follows.

(a) The ICEEMDAN algorithm decomposes the original data and extracts feature signals across various frequency bands. K-means clustering then groups these decomposed signals into several subsequences, exposing more of the underlying structure of the data. Finally, VMD further decomposes the high-frequency subsequence, extracting more refined feature details.

(b) The standard CPO algorithm is improved, and the resulting ICPO method is used to optimize the hyperparameters of the TCN_BiGRU_Attention model. This reduces manual tuning of the TCN_BiGRU_Attention model’s parameters and shortens the computation time.

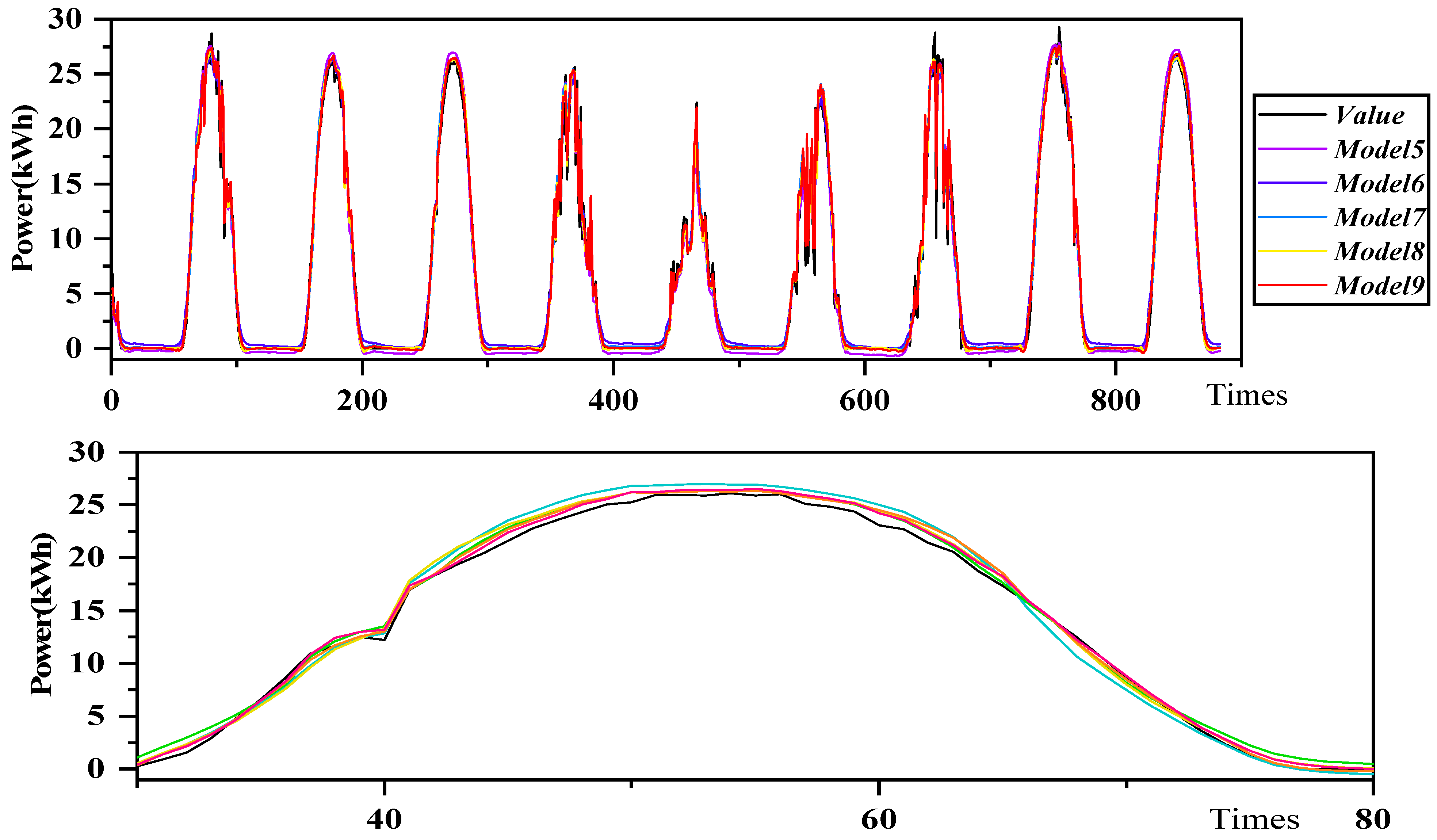

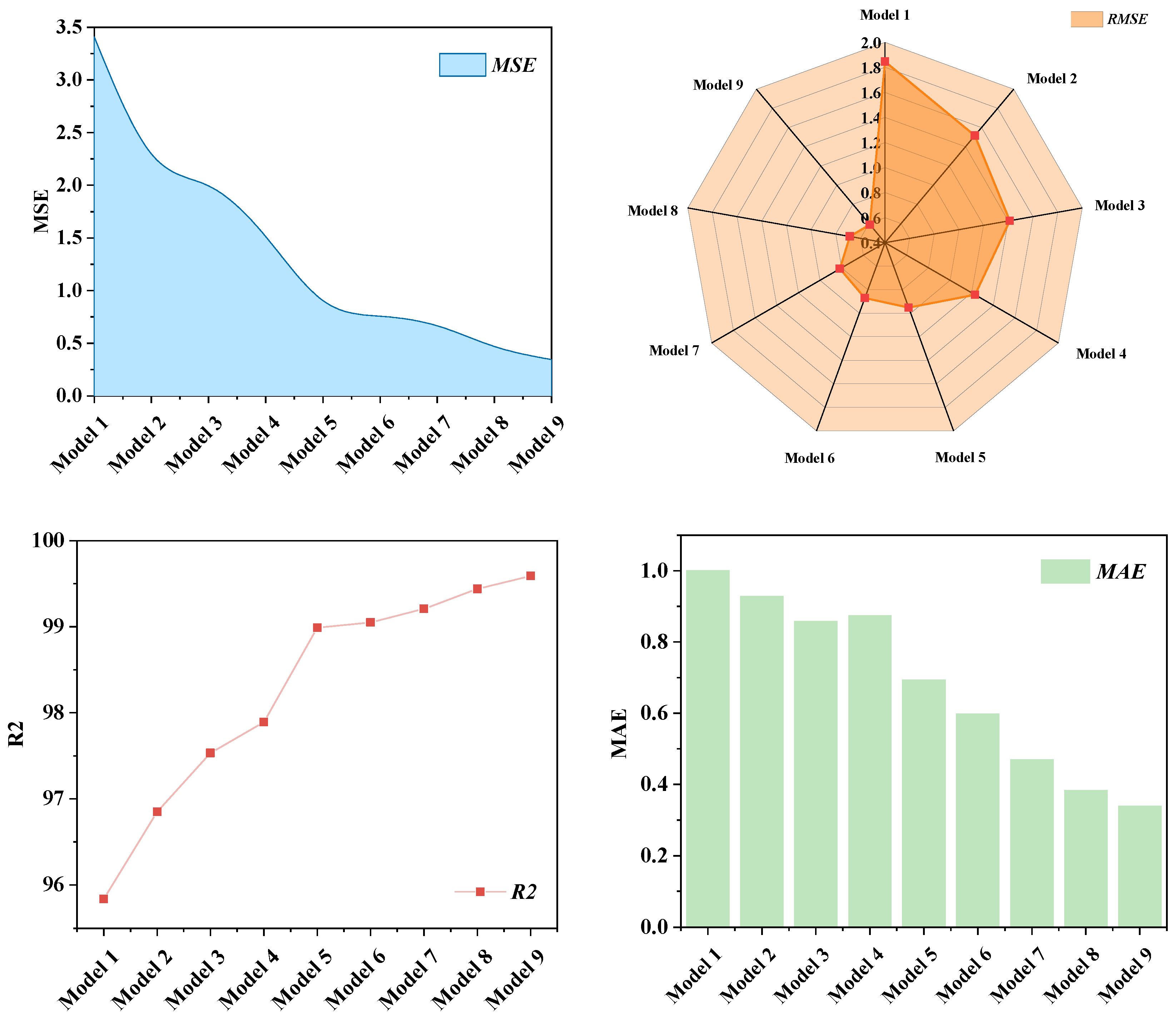

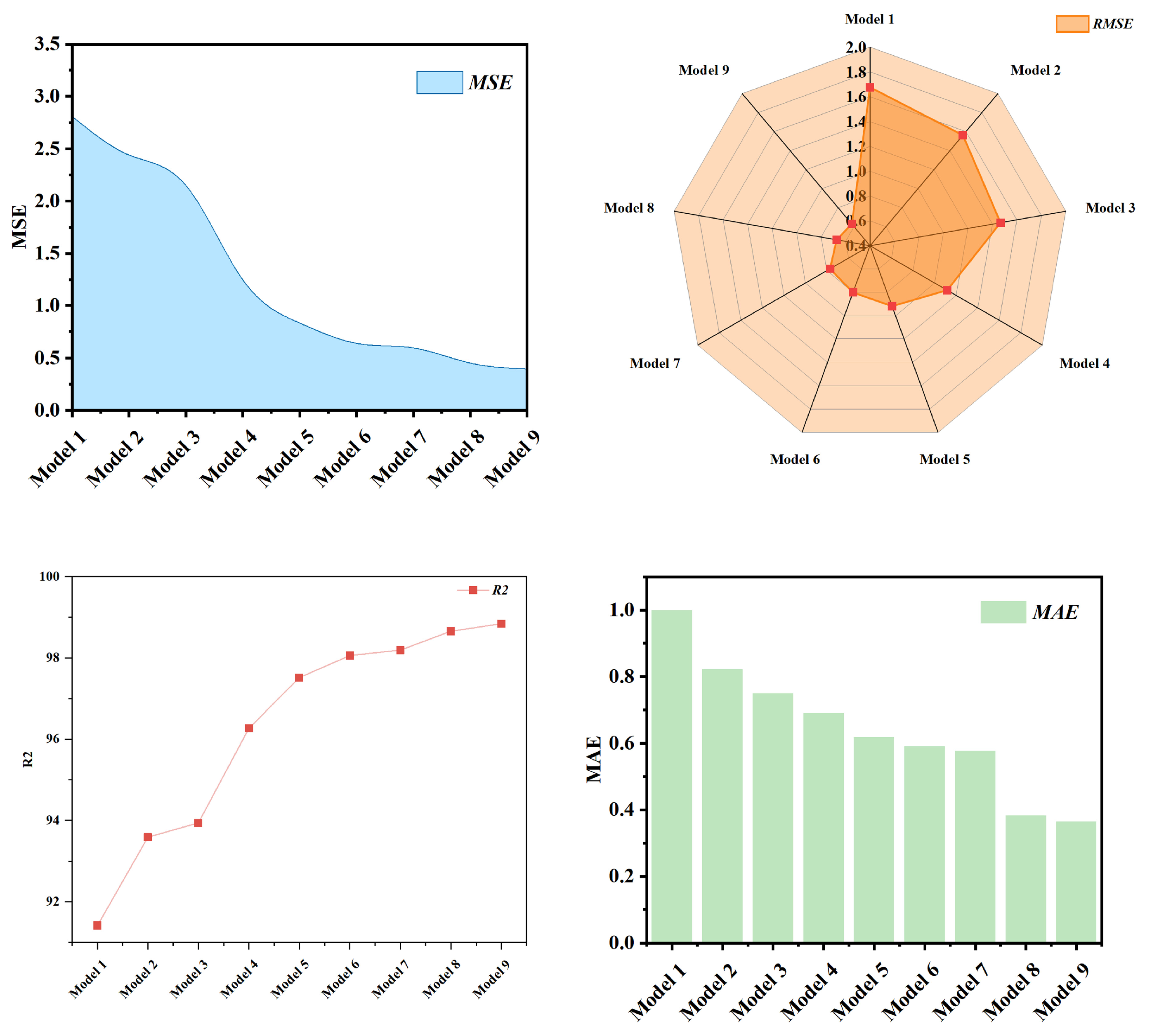

(c) Hybrid learning models achieve better prediction accuracy than single prediction models. Each PV power sequence has different features and attributes, which makes it difficult for a single prediction model such as BiGRU to predict all types of sequences. The MSE, RMSE, MAE, and R² of the hybrid learning model are 0.3456, 0.5879, 0.3396, and 99.59%, respectively, improvements of 3.0627, 1.2583, 0.6619, and 3.876% over the single model.
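The four metrics quoted in (c) follow standard definitions, sketched below in plain Python. The function name and the returned dictionary keys are illustrative assumptions, and R² is returned as a fraction, whereas the text reports it as a percentage.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, and R^2 for paired observations and forecasts."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)  # RMSE is the square root of MSE by definition
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - (mse * n) / ss_tot  # 1 - SS_res / SS_tot
    return {"mse": mse, "rmse": rmse, "mae": mae, "r2": r2}
```

Because RMSE is the square root of MSE, the two reported values can be cross-checked: the square root of 0.3456 is approximately 0.5879, which matches the figures above.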

The developed hybrid learning paradigm demonstrates robust PV power forecasting capabilities through three interconnected methodological dimensions: advanced data preprocessing, systematic hyperparameter optimization, and sophisticated predictive model architecture. Experiments verify the stability and reliability of the model.