Abstract

The growing complexity of electric vehicle charging station (EVCS) operations—driven by grid constraints, renewable integration, user variability, and dynamic pricing—has positioned reinforcement learning (RL) as a promising approach for intelligent, scalable, and adaptive control. After outlining the core theoretical foundations, including RL algorithms, agent architectures, and EVCS classifications, this review presents a structured survey of influential research, highlighting how RL has been applied across various charging contexts and control scenarios. This paper categorizes RL methodologies from value-based to actor–critic and hybrid frameworks, and explores their integration with optimization techniques, forecasting models, and multi-agent coordination strategies. By examining key design aspects—including agent structures, training schemes, coordination mechanisms, reward formulation, data usage, and evaluation protocols—this review identifies broader trends across central control dimensions such as scalability, uncertainty management, interpretability, and adaptability. In addition, the review assesses common baselines, performance metrics, and validation settings used in the literature, linking algorithmic developments with real-world deployment needs. By bridging theoretical principles with practical insights, this work provides comprehensive directions for future RL applications in EVCS control, while identifying methodological gaps and opportunities for safer, more efficient, and sustainable operation.

1. Introduction

1.1. General

The accelerating adoption of electric vehicles (EVs) is reshaping both the transportation and energy sectors, particularly through the growing demand for electric vehicle charging stations (EVCSs) [1,2,3]. As countries pursue decarbonization and sustainable mobility, EVCSs have become essential infrastructure, enabling broader EV integration by ensuring convenient and reliable access to charging. This accessibility plays a vital role in encouraging consumers to shift away from fossil-fuel-based transportation [4]. However, incorporating EVCSs into existing power distribution networks presents several technical challenges, such as load balancing, managing peak demand, and optimizing infrastructure planning [5,6,7,8,9]. As a result, the effective deployment, coordination, and operation of EVCSs have become key concerns for urban planners, utility operators, and policymakers working toward smarter and more sustainable cities [10,11,12].

In the early stages, EVCS control relied on basic rule-based methods, such as immediate charging upon connection or simple time-of-use (TOU) schedules, which, while straightforward, lacked the flexibility to adapt to real-time grid and user dynamics [13]. As EV usage increased, such approaches began to show clear limitations, including grid stress, inefficient energy usage, and a lack of responsiveness [14]. This led to the emergence of more advanced control strategies: first optimization-based techniques, including linear programming and offline scheduling approaches [15,16], and then model predictive control (MPC), which forecasts over a predefined horizon to anticipate and adjust for future events [17,18,19,20,21]. However, the reliance of these methods on accurate system models, together with their computational complexity, stimulated interest in more flexible, learning-based approaches [22,23]. In this context, novel machine learning (ML) methods, particularly those capable of adaptive, intelligent control, emerged as promising tools for managing the uncertainty, variability, and scale of EVCS systems [24,25,26,27].

Reinforcement learning (RL), a subfield of ML, has attracted significant attention as a key approach for adaptive control due to its ability to learn optimal decision policies through direct interaction with dynamic environments [28,29]. Unlike traditional approaches, RL does not require explicit modeling of system behavior; instead, it learns from experience by trial and error, optimizing for long-term cumulative rewards. This methodology has been successfully applied in numerous contexts characterized by complex, uncertain dynamics, such as building energy management [30,31,32], demand response [33,34], traffic control [35,36,37,38], and robotic navigation [39,40]. In the EVCS domain, RL appears particularly effective due to its ability to adapt to uncertainties such as variable user behavior, fluctuating electricity prices, and intermittent renewable generation [41,42,43]. Its strength in handling complex, multi-agent environments also renders it suitable for EVCS control, where numerous interconnected decisions need to take place in real time [44,45,46,47,48].

When compared to conventional methods like rule-based control (RBC) or MPC, RL offers considerable advantages in adaptability, scalability, and handling system nonlinearity and stochasticity [49,50]. It is particularly well-suited to managing large-scale, heterogeneous EVCS networks with minimal reliance on precise modeling. Moreover, RL agents may be tailored to optimize multiple, often competing objectives, such as user satisfaction, grid stability, and energy cost, by continuously adjusting charging strategies in response to real-time operational data [51,52,53]. RL-based control also supports integration with vehicle-to-grid (V2G) technologies [54,55,56], renewable energy sources (RESs) [57,58], and shared infrastructure scenarios [59]. Such features position RL as a powerful tool for next-generation EVCS management, especially in contexts where traditional optimization methods struggle to scale or adapt effectively [11]. In particular, where adaptive control such as RL is integrated into a hybrid control scheme, methodological advances further demonstrate its potential for optimization and decision-making in complex, non-linear domains [60,61].

Despite its potential, RL faces several challenges. For instance, long training times, sample inefficiency, and potential instability during the learning process [6,31] may hinder efficient control. Additionally, ensuring explainability and safety is crucial in critical infrastructure applications such as EVCSs. Another limitation concerns the heavy reliance on simulation environments, with limited real-world implementation to date, which raises valid questions about generalizability and robustness under real-world conditions [6,11]. Moreover, designing effective state and action representations, reward functions, and coordination mechanisms in multi-agent settings remains complex, particularly in the absence of standardized benchmarks and frameworks tailored to EVCSs [45]. Overcoming these challenges requires a thorough understanding of RL behavior in diverse operational scenarios, along with a careful balance of performance trade-offs.

1.2. Motivation

With growing interest in using RL for EVCS control, a wide range of studies has emerged, demonstrating both the potential of RL and the challenges involved in its practical application. The current review responds to that momentum by offering a focused, RL-specific analysis of the field. After introducing the different EVCS types and the key mathematical RL concepts relevant to EVCSs, this paper maps out and categorizes the most influential frameworks developed over the past decade. Given the diversity of RL methods, which span algorithms, agent structures, state–action representations, reward designs, and evaluation practices, there is a clear need for systematic synthesis. This review addresses that need by identifying common patterns, open challenges, and research gaps through a structured statistical analysis of high-impact studies. To this end, this paper is intended as a resource for researchers, practitioners, and system designers interested in the current state and future direction of RL-based EVCS control. By organizing the literature into a consistent comparison framework and highlighting practical insights, this paper aims to help readers understand the technical landscape and support the informed development of future, real-world-ready solutions.

1.3. Previous Works

The academic literature includes numerous review studies examining EVCSs, each focusing on distinct frameworks and methodologies. Abdullah et al. [62] conducted a focused review on the application of RL in EV charging coordination. The paper emphasized the shortcomings of traditional RBC and centralized optimization approaches under uncertainty, advocating for decentralized RL methods as a scalable and adaptive alternative. The study discussed the integration of vehicle-to-grid (V2G) capabilities and categorized RL strategies, though it did not delve into algorithmic details or benchmark environments. In a broader context, Al-Oagili et al. [63] explored EV charging control strategies through scheduling, clustering, and forecasting approaches. While not specific to RL, this work contributed valuable insights into data-driven coordination techniques and distinguished between probabilistic and AI-based methodologies. The work also identified practical implementation challenges, which added to the real-world applicability of its findings. Similarly, Shahriar et al. [64] examined various machine learning approaches for predicting EV charging behavior, covering supervised, unsupervised, and deep learning techniques. Although RL was not the primary focus, this work underscored the importance of user behavior modeling and high-quality data for effective EVCS control, laying the groundwork for the integration of predictive analytics with real-time RL systems. More recently, Zhao et al. [65] presented a comprehensive and systematic review dedicated to RL-based scheduling for EV charging. The analysis covered different RL algorithms, agent configurations, training methodologies, and evaluation metrics across diverse stakeholder objectives. By mapping technical advances, identifying challenges, and highlighting future research avenues, the work provided a thorough exploration of the role of RL in EVCS optimization.

1.4. Novelty and Contribution

This review offers a comprehensive and methodologically structured analysis of RL applications in the control and optimization of EVCSs, setting itself apart from prior surveys by focusing exclusively on RL-based frameworks tailored for intelligent EV charging management. Unlike broader reviews that address AI in energy systems or general EV infrastructure planning, this study concentrates specifically on RL techniques designed to improve EVCS operational efficiency, enhance user satisfaction, and strengthen grid integration. A key contribution of this paper lies in its large-scale, in-depth evaluation of RL-based EVCS control strategies. It systematically summarizes and categorizes a wide range of high-impact publications from the past decade (including more than fifty impactful papers), with inclusion based on academic influence (more than 30 citations, or a minimum of 5–15 for recent studies), as indexed in Scopus. Such rigorous filtering ensures the relevance and quality of the analyzed content, enabling a focused synthesis of the most significant research developments and insights into how RL has evolved to address the growing complexity of EVCS control. The review begins by outlining the foundational theory of EVCS and RL algorithms commonly used in EVCS applications, followed by detailed tables that summarize key studies—highlighting their algorithmic structures, evaluation methods, and control objectives. Beyond a general overview, this work introduces a structured classification scheme that evaluates RL frameworks along critical dimensions—algorithm type (value-based, policy-based, actor–critic), agent structure (single vs. multi-agent), forecast horizon and control intervals, baseline methods, datasets, performance indexes, deployment settings (e.g., residential, public, fleet-based), and power grid interaction features such as V2G or renewable energy integration. 
By synthesizing results from a wide array of studies, this review not only highlights the most impactful approaches but also identifies major challenges and emerging trends and outlines future directions for advancing RL-driven EVCS control. Table 1 presents a detailed comparison matrix highlighting this review’s contributions in relation to existing surveys in the literature:

Table 1. Contribution comparison between current and previous works on RL for EVCSs, based on key evaluation attributes.

At a glance, the current review makes several distinct contributions beyond prior surveys. More specifically, this review achieves the following:

- Covers a large number of RL applications for EV charging stations, achieving a scope not reached by earlier surveys. It systematically captures algorithmic design details such as explicit state, action, and reward formulations; the choice of forecast horizons and control time steps; and the baseline control strategies (rule-based, greedy, MILP, MPC, etc.) against which RL methods are compared. This focus allows not only a high-level overview but also a reproducible and technical understanding of how RL has been implemented across diverse studies.

- Introduces a new unified evaluation schema that classifies the literature along multiple dimensions—algorithmic family (value-based, policy-based, actor–critic, hybrid), agent structure (single vs. multi-agent), state space variables and action definitions, reward design principles, forecast horizon and control time step, baseline methodology, deployment environment (residential, workplace, public, depot, highway, renewable-integrated), smart grid interaction features (V2G, bidirectional, renewable integration, connectivity), type of data and simulation platforms used, and the performance metrics reported. These dimensions are consolidated into comprehensive key attribute tables and concise per-paper summaries, enabling transparent cross-comparison of results and establishing a standardized template for reporting future work.

- Derives practical deployment insights by explicitly mapping charger categories and their smart grid features to feasible control cadences, communication requirements, and V2G or renewable integration readiness. By linking the operational characteristics of EVCSs to the RL state–action–reward choices and control horizons, the review highlights what control designs are viable in practice, what simulation assumptions break down in real deployments, and where the main research gaps remain for achieving safe, scalable, and grid-friendly RL-based charging management.

1.5. Paper Structure

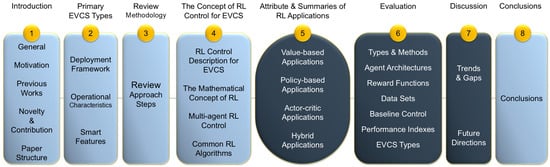

As illustrated in Figure 1, this review is structured to guide the reader from foundational concepts to advanced methodological analysis and synthesis:

Figure 1. Paper structure.

- Section 1 introduces the general status of RL for EVCSs, the motivation for this review, previous contributions, and the paper structure.

- Section 2 presents the methodological approach adopted for the literature analysis, describing the sequential steps undertaken to integrate the relevant studies.

- Section 3 presents a classification of EVCS types across multiple dimensions, including deployment framework, operational characteristics, and smart features, offering a foundational taxonomy for understanding control requirements.

- Section 4 provides a step-by-step description of RL control in EVCSs, together with the mathematical framework of RL methodologies for EVCSs.

- Section 5 presents the tables of key attributes and the summaries of the reviewed RL applications.

- Section 6 conducts a comprehensive review and evaluation of RL-based EVCS studies published between 2015 and 2025, synthesizing them in terms of their control strategies, agent design, reward formulations, baselines, datasets, performance indexes, and deployment settings.

- Section 7 synthesizes the identified emerging trends and methodological gaps, and proposes future directions toward scalable, explainable RL systems for EVCSs.

- Section 8 concludes this paper by summarizing the key contributions and outlining the implications for both research and practice.

2. Review Methodology

To provide a structured and in-depth synthesis of existing research, the reviewed studies are systematically categorized based on the type of RL approach—value-based, policy-based, actor–critic, or hybrid—alongside agent configurations (single-agent or multi-agent systems) and specific smart charging objectives. Further dimensions such as reward design, baseline control strategies, datasets, performance evaluation metrics, and deployment contexts are also examined to ensure a holistic understanding of RL-driven EVCS optimization:

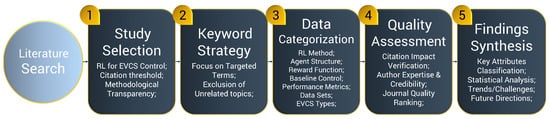

- Study Selection: A rigorous study selection protocol was followed to ensure the quality and relevance of the included works (see Figure 2). Only peer-reviewed articles and top-tier conference papers indexed in Scopus and Web of Science were considered. An initial screening yielded over 200 publications, from which a refined subset was chosen for full-text analysis based on a combination of quality and thematic alignment. The inclusion criteria were as follows: (a) Citation Threshold: A minimum of 30 citations was required, excluding self-citations. For recent publications (2022–2025), a relaxed threshold of 5–15 citations was applied to accommodate their growing influence. (b) Topical Relevance: Only studies directly addressing RL-based control of EVCSs were included. Papers solely focused on EV routing, mobility patterns, or market mechanisms, without an RL control component, were excluded. (c) Peer Review Status: Only peer-reviewed journal articles and reputable conference proceedings (e.g., IEEE, Elsevier, MDPI, Springer) were retained; pre-prints and non-reviewed material were excluded. (d) Methodological Transparency: Studies had to clearly define their RL framework, including state and action space design, reward formulation, and evaluation protocol. (e) Algorithmic Diversity: A balanced representation across different RL algorithm families was maintained to reflect methodological variety.

Figure 2. Article selection process.

- Keyword Strategy: A focused keyword strategy was used to ensure that the search captured only studies relevant to RL applications in EVCSs. Core search terms included “Reinforcement Learning for Electric Vehicle Charging Stations”, “RL-based Smart EV Charging”, “Multi-agent RL for EV Charging Control”, “Deep Reinforcement Learning for EVCS Optimization”, and “Intelligent Charging Control with Reinforcement Learning”. Efforts were made to exclude unrelated domains such as electric mobility logistics, EV routing, or broader smart grid topics not involving EVCS-specific RL control.

- Data Categorization: Each selected study was systematically classified across multiple dimensions critical to RL-based EVCS control. These dimensions included the specific RL algorithm employed (e.g., Q-learning, DDPG, PPO, A2C), agent architecture (single vs. multi-agent), state and action space design, and reward modeling approach. Additional classification covered simulation or deployment environments, control time steps, prediction horizons, and the type of EVCS implementation (e.g., residential, public, workplace, fleet, mobile, depot-based, or integrated with renewables). Information about benchmark control methods—such as RBC logic, TOU strategies, greedy algorithms, offline optimization, MPC, or RL—was also collected for comparative analysis.

- Quality Assessment: To maintain analytical rigor, a quality-assessment framework was applied. Citation counts from Scopus served as a primary filter, based on the thresholds noted earlier. Beyond citation metrics, the academic standing of the authors and their institutional affiliations were also considered, particularly their contributions to RL, energy systems, or EV-infrastructure research. Studies with well-defined experimental setups, clearly justified reward structures, and comprehensive comparisons with baselines were prioritized. Emphasis was placed on works that introduced methodological or architectural innovations and reported extensive evaluations using recognized performance indicators.

- Findings Synthesis: The extracted insights were thematically synthesized to allow for cross-comparison of RL applications in EVCS control. This synthesis involved grouping studies based on RL architecture, deployment environments, interaction with the power grid (e.g., V2G or RES integration), and evaluation objectives (e.g., energy cost savings, peak load reduction, user comfort). Such a structured approach also enabled basic meta-analyses across studies, facilitating the identification of emerging trends, key limitations, and underexplored areas. The resulting framework formed a comprehensive knowledge base to support future advancements in RL-driven EVCS management.
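The quantitative part of the inclusion criteria above can be expressed as a simple screening rule. The following is a minimal sketch under stated assumptions, not the tooling used for this review: the `Study` record, its field names, and the function `passes_screening` are hypothetical, and the relaxed threshold for recent work is set to 5 citations (the lower end of the 5–15 range) purely for illustration. Criteria (d) and (e) are qualitative and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record for one candidate publication."""
    title: str
    year: int
    citations: int            # assumed to already exclude self-citations
    peer_reviewed: bool       # criterion (c)
    rl_control_of_evcs: bool  # criterion (b)


def passes_screening(study: Study,
                     base_threshold: int = 30,
                     recent_threshold: int = 5,   # assumption: lower end of 5-15
                     recent_from: int = 2022) -> bool:
    """Apply criteria (a)-(c): citations, topical relevance, peer review."""
    if study.year >= recent_from:
        threshold = recent_threshold
    else:
        threshold = base_threshold
    return (study.peer_reviewed
            and study.rl_control_of_evcs
            and study.citations >= threshold)


candidates = [
    Study("Older, well-cited study", 2019, 45, True, True),
    Study("Older, rarely cited study", 2019, 12, True, True),
    Study("Recent study", 2023, 7, True, True),
    Study("Recent pre-print", 2024, 20, False, True),
]
selected = [s.title for s in candidates if passes_screening(s)]
```

In this illustrative list, only the first and third entries satisfy all three modeled criteria.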

The methodology employed for the literature analysis is outlined in Figure 3.

Figure 3. Literature approach.

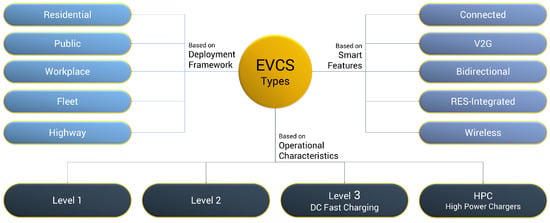

3. Primary EVCS Types

EVCS frameworks may be systematically categorized along three key dimensions: deployment context, operational characteristics, and smart feature capabilities. The deployment context defines where and for whom the charger is installed, such as residential, public, workplace, or fleet environments. The operational characteristics refer to the charger’s power level and speed, ranging from slow residential units to ultra-fast highway systems, which determine how quickly vehicles can be recharged. Lastly, the smart features and grid interaction capabilities describe the technological sophistication of the charger, including connectivity, bidirectional energy flow, renewable integration, and wireless functionality [66,67]. This three-fold classification framework is essential for understanding the technical and contextual diversity of EVCSs in both research and real-world applications. Figure 4 presents this three-dimensional classification of EVCS frameworks, and the following subsections describe each dimension.

Figure 4. Primary EVCS types based on deployment framework, smart features, and operational characteristics.

3.1. EVCS Types Based on Deployment Framework

The deployment framework of an EVCS refers to the physical setting and functional purpose of the charging infrastructure, which varies significantly based on user needs, usage behavior, and local infrastructure constraints. Different deployment contexts demand tailored design and control strategies. For instance, residential setups prioritize user convenience and integration with home energy systems, while public or fleet-based deployments require scalability, fast service, and grid coordination. Recognizing these deployment types is essential for designing effective and context-aware RL optimization strategies. Broadly, EVCS deployment categories include the following:

- Residential EVCSs: Typically installed in private homes or apartment complexes, residential chargers offer Level 1 or Level 2 charging and are intended for individual users. These systems are often part of broader smart home energy networks and are integrated into demand-side management programs [68]. A notable subset is community or shared residential charging, found in multi-unit dwellings or energy communities. These setups prioritize equitable access, cooperative scheduling, and shared infrastructure ownership among residents [69].

- Public EVCSs: These chargers are accessible to all users and are located in urban areas such as shopping malls, city centers, and public parking facilities [70]. They typically include Level 2 and DC fast-charging stations and are essential for alleviating range anxiety. An overlapping category includes workplace chargers with public access during non-business hours [71], sharing operational concerns such as pricing models, accessibility, and utilization rates.

- Workplace EVCSs: Installed at corporate campuses or business facilities, workplace chargers primarily serve employees during work hours. They support strategies like load balancing, integration with solar energy systems, and sustainability initiatives [72]. Unlike public-access chargers, these systems benefit from predictable charging patterns and controlled access.

- Fleet EVCSs: Designed for centralized, high-power charging of commercial fleets such as buses, taxis, or delivery vans, fleet EVCSs enable scheduled charging aligned with route planning and operational needs [73]. These stations play a vital role in logistics electrification and often use advanced dispatch algorithms and energy cost optimization techniques.

- Highway EVCSs: Positioned along highways or intercity routes, these fast and ultra-fast charging stations support long-distance travel. They are optimized for short dwell times, high user throughput, and wide-area-network planning [74]. Fleet operators, particularly those in freight or long-haul transport, may also use these facilities, resulting in overlap with depot-type charging infrastructure [75].

- Mobile EVCSs: Mobile EVCSs consist of portable charging units that can be rapidly deployed in temporary or emergency scenarios, including rural zones, outdoor events, or disaster recovery operations [76]. They offer unmatched flexibility and are often used as backup or supplemental charging for residential or fleet operations [77].

3.2. EVCS Types Based on Operational Characteristics

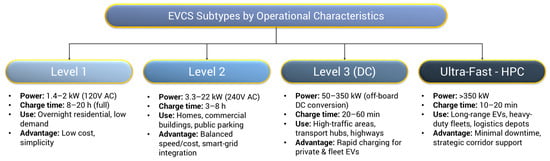

Another important classification of EVCSs relates to their operational characteristics, specifically the power output and corresponding charging speed (see Figure 5). These factors not only influence the charger’s suitability for different contexts (e.g., residential vs. highway) but also affect energy management, grid load, and user convenience. The four primary EVCS types vary significantly in terms of power capacity, infrastructure cost, and deployment use cases [78,79]:

Figure 5. EVCS subtypes based on operational characteristics.

- Level 1 Chargers: These are the most basic and slowest charging systems, providing 1.4–2 kW via a standard 120 V AC outlet. A full charge typically takes 8 to 20 h, making them ideal for overnight residential use where charging speed is not critical [80]. Their affordability and simplicity make them suitable for households with low daily driving demands [79,80].

- Level 2 Chargers: Offering 3.3–22 kW of power through a 240 V connection, Level 2 chargers significantly reduce charging time to around 3–8 h. They are widely used in homes with higher electrical capacity, commercial buildings, and public parking areas. Balancing performance and cost, Level 2 chargers are commonly integrated into smart grid systems for improved load management [78].

- Level 3—DC Fast Chargers: These high-powered systems deliver 50–350 kW and can charge most EVs up to 80% in just 20 to 60 min. By converting AC to DC within the charging station itself, they bypass the vehicle’s onboard charger to allow rapid energy transfer [80]. DC fast chargers are typically found in high-traffic commercial areas, transportation hubs, and along highways, serving both private users and fleet vehicles [81].

- Ultra-Fast–High-Power Chargers (HPCs): Exceeding 350 kW, these next-generation chargers are designed for ultra-rapid refueling of heavy-duty or long-range EVs. HPC stations can deliver a near-full charge in just 10–20 min [82,83]. Their deployment is common along strategic transport corridors, logistics hubs, and in fleet depots where minimizing vehicle downtime is essential [83].
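The power ranges listed above can be turned into a small lookup, together with an idealized charging-time estimate. The sketch below is illustrative only: the function names, the 40 kWh battery, and the 20% to 80% charging window are assumptions rather than values from the reviewed literature, and the estimate ignores charging losses and the power taper that real sessions exhibit.

```python
def classify_charger(power_kw: float) -> str:
    """Map a rated power (kW) to the charger classes quoted in the list above."""
    if 1.4 <= power_kw <= 2.0:
        return "Level 1"
    if 3.3 <= power_kw <= 22.0:
        return "Level 2"
    if 50.0 <= power_kw <= 350.0:
        return "DC fast"
    if power_kw > 350.0:
        return "HPC"
    # The quoted ranges leave gaps (e.g., 2-3.3 kW and 22-50 kW).
    return "unclassified"


def ideal_charge_hours(battery_kwh: float, power_kw: float,
                       soc_start: float = 0.2, soc_end: float = 0.8) -> float:
    """Idealized time (h): energy to deliver divided by constant power."""
    if power_kw <= 0:
        raise ValueError("power_kw must be positive")
    if not 0.0 <= soc_start <= soc_end <= 1.0:
        raise ValueError("require 0 <= soc_start <= soc_end <= 1")
    energy_kwh = battery_kwh * (soc_end - soc_start)
    return energy_kwh / power_kw


# Assumed example: 40 kWh battery charged from 20% to 80% (24 kWh delivered).
for power in (1.4, 7.0, 50.0):
    hours = ideal_charge_hours(40.0, power)
    print(f"{classify_charger(power)}: {power} kW -> {hours:.2f} h")
```

Under these assumptions, the 24 kWh delivered takes about 17 h at 1.4 kW, about 3.4 h at 7 kW, and about 29 min at 50 kW, which is broadly in line with the time ranges quoted for each class.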

3.3. EVCS Types Based on Smart Features

As EVCSs evolve beyond basic power delivery, their integration into intelligent and flexible energy ecosystems has become increasingly critical. This classification dimension focuses on the smart features of EVCSs—specifically their communication, control, and interaction capabilities with users and the power grid. These features not only enhance user experience but also facilitate load balancing, renewable energy integration, and overall grid reliability. Smart-enabled EVCSs are essential building blocks for future smart cities and resilient transportation infrastructures.

- Smart-Connected EVCSs: These chargers are equipped with communication interfaces that enable real-time data exchange with users, utilities, and energy management platforms [84]. Smart functionalities may include user authentication, remote diagnostics, usage-based billing, dynamic charging schedules, and intelligent load management [85]. Such chargers play a pivotal role in demand response (DR) programs and are commonly linked to mobile applications or cloud-based control systems [86].

- V2G-Enabled EVCSs: Vehicle-to-grid (V2G)-enabled chargers allow energy to flow bidirectionally—enabling EVs not only to charge from the grid but also to return excess energy to it [87,88]. This bidirectional capability supports grid services such as frequency regulation, peak demand mitigation, and emergency power supply. V2G systems are typically implemented in scenarios where EVs function as distributed energy storage assets, enhancing grid flexibility and stability [89].

- Bidirectional EVCSs: While V2G represents a subset, the broader category of bidirectional EVCSs includes support for vehicle-to-home (V2H), vehicle-to-building (V2B), and vehicle-to-load (V2L) applications. These systems allow energy to flow between the EV and various endpoints, enabling use cases like home backup power during outages or supporting microgrid operations. Such functionality increases energy autonomy and system resilience.

- RES-Integrated EVCSs: These charging systems are coupled with on-site renewable energy sources (RESs), most commonly solar photovoltaic (PV) or small-scale wind turbines [90]. Often paired with battery energy storage systems (ESSs), RES-integrated EVCSs help mitigate renewable variability and allow EVs to be charged using clean, locally generated power [91]. This configuration is particularly valuable in eco-villages, rural areas, and sustainability-focused communities.

- Wireless EVCSs: Wireless charging stations transfer energy via inductive coupling between a ground pad and a receiver mounted beneath the vehicle [92,93]. Although still in early commercial or pilot stages, this technology eliminates the need for physical connectors, offering a seamless and automated charging experience. Wireless EVCSs are particularly promising for autonomous vehicle fleets, shared mobility hubs, and curbside urban charging, where automation and user convenience are key priorities.

4. The Concept of RL Control for EVCSs

RL presents a powerful paradigm for optimizing the operation of EVCSs, especially under dynamic and uncertain conditions. In an RL-based system, an intelligent agent learns to make sequential decisions by interacting with its environment, which comprises EV arrivals and departures, user preferences, electricity prices, grid conditions, and renewable energy availability.

At each decision step, the agent observes the current state of the environment—such as battery levels, charger occupancy, and time constraints—and selects an action, for example, when and how much to charge a vehicle. After executing this action, the environment transitions to a new state, and the agent receives a reward signal that quantifies the effectiveness of its decision. This reward may reflect objectives such as minimizing energy costs, avoiding grid overloads, maximizing renewable energy usage, or meeting user deadlines. Over time, the RL agent learns an optimal policy that balances these trade-offs, enabling real-time, adaptive control of the EV charging process.
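The observe, act, and reward cycle described above can be illustrated with a deliberately small example. The sketch below uses tabular Q-learning on a toy single-charger problem; it is not taken from any reviewed study. The price vector, the required energy, the deadline penalty, and all function names are hypothetical, and the state is reduced to the pair (time step, remaining energy need).

```python
import random

PRICES = [0.30, 0.10, 0.25, 0.05, 0.20, 0.15]  # hypothetical price per energy unit
NEED = 3        # assumed energy units the EV requires before departure
PENALTY = 1.0   # assumed cost per undelivered unit at departure


def step(t, need, action):
    """Apply one decision (0 = idle, 1 = charge); return (next_state, reward, done)."""
    delivered = min(action, need)        # nothing is delivered once the need is met
    reward = -PRICES[t] * delivered      # energy cost at the current price
    need -= delivered
    done = t == len(PRICES) - 1          # the EV departs after the last step
    if done:
        reward -= PENALTY * need         # penalize a missed user deadline
    return (t + 1, need), reward, done


def train(episodes=5000, alpha=0.5, gamma=1.0, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {}  # maps state (t, need) -> [Q(idle), Q(charge)]
    for _ in range(episodes):
        state, done = (0, NEED), False
        while not done:
            values = q.setdefault(state, [0.0, 0.0])
            if rng.random() < epsilon:
                action = rng.randrange(2)            # explore
            else:
                action = values.index(max(values))   # exploit
            next_state, reward, done = step(state[0], state[1], action)
            target = reward
            if not done:
                target += gamma * max(q.setdefault(next_state, [0.0, 0.0]))
            values[action] += alpha * (target - values[action])
            state = next_state
    return q


def greedy_schedule(q):
    """Roll out the learned policy without exploration."""
    state, done, schedule = (0, NEED), False, []
    while not done:
        values = q.get(state, [0.0, 0.0])
        action = values.index(max(values))
        schedule.append(action)
        state, _, done = step(state[0], state[1], action)
    return schedule


q_table = train()
schedule = greedy_schedule(q_table)
cost = sum(p * a for p, a in zip(PRICES, schedule))
print("schedule:", schedule, "cost:", round(cost, 2))
```

With these illustrative prices, the three cheapest slots are time steps 1, 3, and 5, so a converged policy is expected to charge in those slots and meet the deadline at the lowest cost. Practical EVCS controllers face far larger state spaces and stochastic arrivals, which is why the reviewed studies typically rely on deep RL rather than a lookup table.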

4.1. RL Control Description for EVCSs

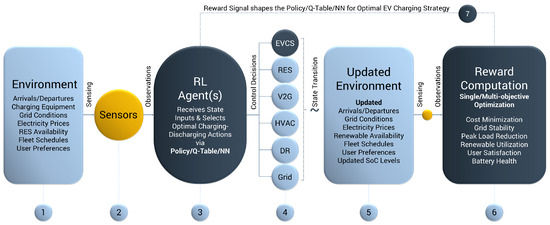

A step-by-step description of the RL control optimization procedure for EVCSs—along with interactions with other energy system components—is illustrated in Figure 6 and outlined as follows:

Figure 6. Step-by-step RL control optimization procedure for EVCSs.

- Environment: The environment includes the EVCS infrastructure and its broader energy ecosystem. This encompasses EV arrivals and departures, energy availability from the grid or renewables, dynamic electricity prices, user preferences (e.g., departure time, desired state of charge), battery limitations, and possible grid-side constraints. In more complex scenarios, the environment may involve multiple stations, aggregators, and fleet operators. While the RL agent does not control the environment, it continuously adapts to its evolving state.

- Sensors and Observations: Real-time data is collected through smart meters, session logs, reservation systems, user applications, and grid communication interfaces. Observed variables include EV arrival times, current SoC, time-to-departure, energy demand, charger availability, historical usage patterns, grid signals (e.g., time-of-use tariffs, demand response events), and renewable generation levels. This data forms the observable state space for the RL agent.

- RL Agent(s): The RL agent is responsible for making optimal charging decisions. It may be centralized—controlling one or more stations—or decentralized, where each EV or charger acts independently. The agent selects actions such as which EV to charge, how much energy to deliver, or when to pause or resume charging. These decisions aim to optimize multiple objectives, including energy cost reduction, peak load mitigation, user satisfaction, and renewable energy utilization. Through continuous interaction with the environment, the agent improves its policy over time.

- Control Decision Application: Once the RL agent selects an action, it is translated into executable commands such as initiating charging, adjusting power levels, or deferring sessions. In depot or fleet contexts, this may include multi-vehicle scheduling. These actions are applied in real time through the electric vehicle supply equipment (EVSE), subject to physical constraints such as charger capacity and transformer limits.

- Environment Update: Following the action, the environment evolves naturally. Vehicles gain charge, some depart, others arrive; electricity prices fluctuate; renewable output varies; and user behavior introduces further uncertainty. This results in a new system state, which becomes the next input to the agent’s learning process.

- Reward Computation: The system computes a numerical reward that reflects how well the agent’s action aligned with predefined objectives. Reward components may include energy cost savings, success in peak shaving, alignment with renewable output, user satisfaction (e.g., full charge before departure), or penalties for poor performance. In V2G scenarios, rewards may also account for grid services provided or revenue from energy discharge.

- Reward Signal Feedback: The reward is fed back into the RL algorithm, enabling the agent to refine its policy. Algorithms such as Q-learning, DDPG, or PPO are used to guide this learning process. With each interaction cycle, the agent improves its ability to handle diverse conditions, including fluctuating prices, variable loads, and unpredictable user demands—ultimately enabling intelligent, real-time control of EV charging operations.

Through continuous learning and adaptation, RL-based EVCS control frameworks have the potential to outperform traditional rule-based or pre-scheduled methods. By dynamically responding to real-world complexities such as stochastic EV arrivals, time-varying pricing, intermittent renewable energy, and system constraints, RL enables smarter, user-centric, and grid-supportive charging strategies.
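The reward computation step listed above combines several objectives into one number. One simple way to do this, shown here only as a sketch, is a weighted sum of a cost term, a peak-load penalty, a renewable-use bonus, and a penalty for unmet charge at departure. The function name, its arguments, and all weights are hypothetical; the surveyed studies use a variety of reward formulations.

```python
def charging_reward(energy_kwh, price, station_load_kw, peak_limit_kw,
                    pv_used_kwh, soc, target_soc, departing,
                    w_cost=1.0, w_peak=0.5, w_pv=0.2, w_user=5.0):
    """Weighted-sum reward for one time step (all weights are assumptions)."""
    # Energy cost: paying for purchased energy lowers the reward.
    cost_term = -w_cost * price * energy_kwh
    # Peak shaving: penalize only the load above the agreed limit.
    peak_term = -w_peak * max(0.0, station_load_kw - peak_limit_kw)
    # Renewable alignment: reward energy drawn from on-site PV.
    pv_term = w_pv * pv_used_kwh
    # User satisfaction: penalize any SoC shortfall when the EV leaves.
    shortfall = max(0.0, target_soc - soc) if departing else 0.0
    user_term = -w_user * shortfall
    return cost_term + peak_term + pv_term + user_term


example = charging_reward(energy_kwh=10.0, price=0.2,
                          station_load_kw=60.0, peak_limit_kw=50.0,
                          pv_used_kwh=4.0, soc=0.8, target_soc=0.9,
                          departing=True)
print(example)
```

The relative weights determine which objective dominates, so in practice they need tuning against the operator's priorities.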

4.2. The Mathematical Concept of RL

RL models EVCS control as a Markov decision process (MDP), enabling agents to learn optimal charging strategies through continuous interaction with a dynamic environment. This formalism allows the agent to make sequential decisions under uncertainty, adapting to real-time variables such as electricity pricing, EV arrival and departure times, grid load conditions, renewable energy output, and user demand. In its model-free form, RL is a data-driven approach that removes the need for manually crafted rules or pre-defined schedules, offering adaptive control that evolves over time.

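The mathematical foundation of RL is defined by the MDP tuple $(S, A, P, R, \gamma)$, where

- $S$ represents the set of environment states (e.g., SoC levels, grid load, energy prices, EV requests);

- $A$ denotes the set of actions (e.g., charging rate adjustment, delay charging, activate V2G);

- $P(s' \mid s, a)$ is the transition probability to a new state $s'$ from state $s$ under action $a$;

- $R(s, a)$ defines the immediate reward for executing action $a$ in state $s$;

- $\gamma \in [0, 1]$ is the discount factor used to balance short- and long-term rewards.

The agent aims to learn a policy $\pi$ that maximizes the expected cumulative return:

$$J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t} R(s_t, a_t)\right]$$

This leads to the definition of the state–value function:

$$V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t} R(s_t, a_t) \,\middle|\, s_0 = s\right]$$

and the action-value function:

$$Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t} R(s_t, a_t) \,\middle|\, s_0 = s,\ a_0 = a\right]$$

In model-free learning approaches like Q-learning, the Q-function is iteratively updated using the following:

$$Q(s, a) \leftarrow Q(s, a) + \alpha\left[R(s, a) + \gamma \max_{a'} Q(s', a') - Q(s, a)\right]$$

where $\alpha$ denotes the learning rate that determines the step size in learning.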
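As a concrete illustration of the discounted return and the tabular Q-learning update, the sketch below moves the current estimate toward the reward plus the discounted best next-state value, scaled by the learning rate. The state labels, the two-action set, and the numeric values are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
from collections import defaultdict


def discounted_return(rewards, gamma=0.95):
    """Sum of gamma**t * r_t, accumulated backwards for numerical simplicity."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.95):
    """One tabular Q-learning step; returns the updated Q(s, a)."""
    best_next = max(Q[(s_next, a2)] for a2 in actions)   # greedy bootstrap
    td_target = r + gamma * best_next
    Q[(s, a)] += alpha * (td_target - Q[(s, a)])
    return Q[(s, a)]


ACTIONS = ("idle", "charge")        # hypothetical discrete action set
Q = defaultdict(float)              # unseen state-action pairs start at 0

# One illustrative transition: charging from a low-SoC state at a cost of 1.
new_q = q_learning_update(Q, s="low_soc", a="charge", r=-1.0,
                          s_next="mid_soc", actions=ACTIONS)
print(new_q)
```

Because the update bootstraps from the greedy next-state value rather than from the action actually taken next, the rule is off-policy.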

4.3. Multi-Agent Reinforcement Learning

While single-agent RL is suitable for isolated EVCS control, real-world scenarios often involve multiple interacting entities—EVs, chargers, aggregators, and grid operators—each with their own objectives and decision-making capabilities. In such settings, multi-agent reinforcement learning (MARL) becomes essential, enabling decentralized or distributed control via multiple coordinated RL agents.

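In MARL, we define a set of agents $\mathcal{N} = \{1, \dots, N\}$, each operating with its own policy $\pi_i$. The environment is modeled as a stochastic game represented by the tuple $(S, \{A_i\}_{i \in \mathcal{N}}, P, \{R_i\}_{i \in \mathcal{N}}, \gamma)$, where

- $S$ is the global state space;

- $A_i$ is the action space of agent $i$;

- $P(s' \mid s, a_1, \dots, a_N)$ is the state transition probability;

- $R_i(s, a_1, \dots, a_N)$ is the reward for agent $i$;

- $\gamma$ is the discount factor for future rewards.

Each agent seeks to maximize its expected return:

$$J_i(\pi_i) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t} R_i(s_t, a_{1,t}, \dots, a_{N,t})\right]$$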

However, from an individual agent’s perspective, the environment becomes non-stationary as other agents simultaneously update their strategies. This complexity introduces significant challenges, necessitating specialized coordination and training mechanisms.

Value and Action Networks in MARL

In both single-agent and multi-agent RL, decision-making is commonly supported by two core components [31]:

- Value Networks (Critics): These networks evaluate the quality of a given state or state–action pair. The state-value function $V^{\pi}(s)$ predicts the long-term return when following policy $\pi$ from state $s$, while the action-value function $Q^{\pi}(s, a)$ estimates the expected return when taking action $a$ in state $s$. In actor–critic algorithms, the critic informs and guides policy improvement.

- Action Networks (Policies): These networks determine the agent’s actions. In discrete spaces, the policy outputs action probabilities; in continuous settings, it generates control commands (e.g., specific charging rates). Policies are optimized using signals from the critic to favor actions with higher expected returns.

In MARL environments, value and policy networks require careful design due to the presence of multiple agents and shared decision spaces. Their structure, training strategy, and coordination mechanism are critical to achieving robust and scalable learning outcomes [31,37]:

- Structure: Describes how networks are distributed or shared among agents. Common configurations include the following:

  - Centralized Critic with Separate Policies: A single critic observes the global state; each agent maintains an independent policy.

  - Fully Separate Critics and Policies: Agents operate entirely independently, which maximizes autonomy but can hinder learning stability.

  - Shared Critic and Policy: All agents share a common network, promoting unified control but limiting agent specialization.

  - Hybrid–Partially Shared: Network layers are partially shared to capture global patterns, while deeper layers remain agent-specific.

- Training: Refers to how agents update their policies and what information is available during learning. Variants include the following:

  - Centralized Training with Decentralized Execution (CTDE): Global information is used during training; agents act based on local data at runtime.

  - Fully Decentralized Training: Agents learn from their own experience only, enabling privacy but risking convergence issues.

  - Fully Centralized Training: A central controller manages training across agents; computationally intensive but often stable.

  - Hybrid Approaches: Combine centralized and local training, e.g., agents train locally with periodic global updates.

- Coordination: Focuses on how agents cooperate or share information:

  - Implicit Coordination: Achieved via shared objectives or rewards, without direct communication.

  - Explicit Coordination: Involves message exchange, negotiation, or prediction sharing.

  - Emergent Coordination: Cooperation arises naturally as agents learn interdependencies through interaction.

  - Hierarchical Coordination: Involves structured agent roles, such as leaders assigning tasks to sub-agents.

In the context of EVCSs, MARL provides the foundation for enabling decentralized yet coordinated decision-making across vehicles, stations, and energy systems, supporting intelligent, adaptive control that balances local autonomy with global optimization.
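The centralized-critic, separate-policy structure trained under CTDE can be sketched on a deliberately tiny example. The game below is a hypothetical one-shot problem with two chargers behind a shared transformer: each charging EV earns +1, and simultaneous charging incurs an overload penalty of 3. The critic sees the joint action during training and supplies each actor with a counterfactual baseline (in the spirit of COMA), while each actor samples from its own preferences only. All class names, rewards, and step sizes are assumptions for illustration, and no convergence claim is implied.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


class LocalActor:
    """Decentralized policy: acts from its own preferences only."""

    def __init__(self):
        self.logits = [0.0, 0.0]             # actions: 0 = idle, 1 = charge

    def act(self, rng):
        probs = softmax(self.logits)
        return 0 if rng.random() < probs[0] else 1

    def update(self, action, advantage, lr=0.05):
        # Policy-gradient step: grad of log softmax = one_hot(action) - probs.
        probs = softmax(self.logits)
        for k in range(2):
            grad = (1.0 if k == action else 0.0) - probs[k]
            self.logits[k] += lr * advantage * grad


class CentralCritic:
    """Training-time critic that sees the joint action of all agents."""

    def __init__(self):
        self.q = {}                          # joint action -> estimated reward

    def value(self, joint):
        return self.q.get(joint, 0.0)

    def update(self, joint, reward, lr=0.1):
        self.q[joint] = self.value(joint) + lr * (reward - self.value(joint))


def shared_reward(joint):
    # +1 per charging EV; both charging at once overloads the transformer.
    return sum(joint) - (3.0 if all(joint) else 0.0)


def train(steps=3000, seed=0):
    rng = random.Random(seed)
    actors, critic = [LocalActor(), LocalActor()], CentralCritic()
    for _ in range(steps):
        joint = tuple(a.act(rng) for a in actors)        # decentralized acting
        critic.update(joint, shared_reward(joint))       # centralized learning
        for i, actor in enumerate(actors):
            # Counterfactual baseline: average the critic over agent i's own
            # actions while keeping the other agent's action fixed.
            probs = softmax(actor.logits)
            baseline = sum(
                probs[k] * critic.value(joint[:i] + (k,) + joint[i + 1:])
                for k in range(2)
            )
            actor.update(joint[i], critic.value(joint) - baseline)
    return actors, critic


actors, critic = train()
print({joint: round(v, 2) for joint, v in critic.q.items()})
```

At execution time only `LocalActor.act` is needed, so the critic and its global information can be discarded once training ends.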

4.4. Common RL Algorithms for EVCS Control

RL algorithms used for EVCS management can be broadly categorized into three main types: value-based, policy-based, and actor–critic approaches [37]. Each class offers distinct advantages in handling control challenges related to discrete versus continuous action spaces, centralized versus decentralized control, and the presence of dynamic or stochastic environments.

4.4.1. Value-Based Algorithms

Value-based methods focus on estimating the expected return of each action and selecting the action with the highest value. These algorithms are particularly well-suited for EVCS environments involving discrete decisions, such as choosing between charging, idling, or discharging [94,95].

- Q-Learning (QL): Q-Learning is a widely used off-policy algorithm that learns the optimal action-value function independently of the current policy. It is especially effective in small-scale EVCS systems and updates the Q-values using the following:

  $$Q(s, a) \leftarrow Q(s, a) + \alpha\left[R(s, a) + \gamma \max_{a'} Q(s', a') - Q(s, a)\right]$$

  Despite its simplicity, Q-Learning struggles with scalability in large or high-dimensional EVCS networks [58].

- Deep Q-Networks (DQNs): DQN extends Q-Learning by approximating the Q-function using deep neural networks. It incorporates experience replay and target networks to stabilize training [96]:

  $$L(\theta) = \mathbb{E}\left[\left(R(s, a) + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta)\right)^{2}\right]$$

  where $\theta$ and $\theta^{-}$ denote the parameters of the online and target networks, respectively. DQN has been applied in community-scale EVCS deployments and load balancing tasks where temporal variability is a key factor [97].
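The two stabilizing ingredients of DQN mentioned above, experience replay and a periodically synchronized target network, can be sketched without a deep-learning library. In the example below a linear function with one weight vector per action stands in for the neural network, which is a simplification made purely for brevity. The transitions, the two-feature state (SoC, normalized price), and the three-action set are hypothetical.

```python
import random
from collections import deque

GAMMA = 0.95
N_ACTIONS = 3        # assumed: 0 = discharge, 1 = idle, 2 = charge


def q_values(weights, state):
    """Linear stand-in for the Q-network: one weight vector per action."""
    return [sum(w * x for w, x in zip(weights[a], state))
            for a in range(N_ACTIONS)]


def td_target(target_weights, reward, next_state, done):
    """Reward plus discounted max target-network value; no bootstrap if done."""
    if done:
        return reward
    return reward + GAMMA * max(q_values(target_weights, next_state))


def sgd_step(weights, target_weights, batch, lr=0.01):
    """Semi-gradient step on the squared TD error for each replayed sample."""
    for state, action, reward, next_state, done in batch:
        error = (td_target(target_weights, reward, next_state, done)
                 - q_values(weights, state)[action])
        weights[action] = [w + lr * error * x
                           for w, x in zip(weights[action], state)]


replay = deque(maxlen=10_000)                        # experience replay buffer
online = [[0.0, 0.0] for _ in range(N_ACTIONS)]      # online parameters
target = [row[:] for row in online]                  # frozen target copy

# Hypothetical transitions: (state, action, reward, next_state, done).
replay.append(((0.2, 0.5), 2, -0.5, (0.4, 0.8), False))
replay.append(((0.9, 0.8), 1, 0.0, (0.9, 0.3), True))

rng = random.Random(0)
for step in range(200):
    batch = rng.sample(list(replay), k=2)            # sample stored experience
    sgd_step(online, target, batch)
    if (step + 1) % 50 == 0:
        target = [row[:] for row in online]          # periodic target sync

print(q_values(online, (0.2, 0.5)))
```

Keeping the target parameters fixed between synchronizations means the regression target does not shift with every gradient step, which is the stabilizing effect the text refers to.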

4.4.2. Policy-Based Algorithms

Unlike value-based methods, policy-based algorithms directly optimize the policy without explicitly estimating the value function. These are especially useful in continuous action domains, such as fine-tuning the charging rate over time.

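- Proximal Policy Optimization (PPO): PPO achieves a balance between policy exploration and training stability by constraining the magnitude of policy updates [98,99]:

  $$L^{\mathrm{CLIP}}(\theta) = \mathbb{E}_{t}\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \mathrm{clip}\left(r_t(\theta),\, 1 - \epsilon,\, 1 + \epsilon\right)\hat{A}_t\right)\right]$$

  where $r_t(\theta) = \pi_{\theta}(a_t \mid s_t) / \pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)$ and $\hat{A}_t$ is the advantage estimate. PPO has demonstrated strong performance in scalable EVCS applications, particularly under dynamic pricing conditions.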
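The constraint on update magnitude in PPO comes from clipping the probability ratio between the new and old policies before it multiplies the advantage. The sketch below evaluates that clipped surrogate for a small batch; the function name and the sample values are illustrative, and `eps=0.2` is a commonly used setting rather than a value taken from the surveyed studies.

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, eps=0.2):
    """Mean clipped surrogate objective over a batch of samples."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)               # pi_new / pi_old
        clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
        # Taking the minimum removes any incentive to push the ratio
        # outside [1 - eps, 1 + eps] in the direction that helps.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Ratio 1.5 with a positive advantage is capped at 1 + eps.
capped = ppo_clip_objective([math.log(0.75)], [math.log(0.5)], [1.0])
print(capped)
```

In a full implementation this quantity is maximized by gradient ascent on the policy parameters, usually together with a value-function loss.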

4.4.3. Actor–Critic Algorithms

Actor–critic methods combine the strengths of value-based and policy-based algorithms. The actor proposes actions, while the critic evaluates them, enabling efficient and stable learning in complex environments.

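- Deep Deterministic Policy Gradient (DDPG): DDPG is well-suited for continuous control tasks, such as modulating EV charging rates. The critic and actor are updated as follows [100]:

  $$L(\theta^{Q}) = \mathbb{E}\left[\left(y - Q(s, a; \theta^{Q})\right)^{2}\right], \qquad y = R(s, a) + \gamma\, Q'\left(s', \mu'(s'; \theta^{\mu'}); \theta^{Q'}\right)$$

  $$\nabla_{\theta^{\mu}} J \approx \mathbb{E}\left[\nabla_{a} Q(s, a; \theta^{Q})\big|_{a = \mu(s; \theta^{\mu})}\, \nabla_{\theta^{\mu}} \mu(s; \theta^{\mu})\right]$$

  where $\mu$ is the deterministic actor and primed symbols denote the target networks.

- Soft Actor–Critic (SAC): SAC enhances exploration by adding an entropy term to the learning objective, promoting robustness in uncertain environments [101]:

  $$J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t}\left(R(s_t, a_t) + \beta\, \mathcal{H}\left(\pi(\cdot \mid s_t)\right)\right)\right]$$

  where $\mathcal{H}$ denotes the policy entropy and $\beta$ is the temperature coefficient that weights it. This algorithm has been adopted in V2G-enabled EVCS settings, where the environment is highly dynamic and unpredictable [102].

- Advantage Actor–Critic (A2C): A2C improves training efficiency and reduces variance using the advantage function [103]:

  $$A(s_t, a_t) = Q(s_t, a_t) - V(s_t), \qquad \nabla_{\theta} J(\theta) = \mathbb{E}\left[\nabla_{\theta} \log \pi_{\theta}(a_t \mid s_t)\, A(s_t, a_t)\right]$$

  A2C has been used in decentralized, multi-agent EVCS control, where minimal communication among agents is required but cooperative behavior is still essential.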
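Two quantities recur across the actor–critic family above: an advantage estimate supplied by the critic and, in entropy-regularized variants, an entropy bonus. The sketch below computes a one-step temporal-difference advantage (a common practical estimate of the advantage function) and the resulting actor loss for a discrete policy. The function names and the entropy coefficient are assumptions for illustration, not settings from the cited works.

```python
import math


def td_advantage(reward, value_s, value_next, gamma=0.95, done=False):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    bootstrap = 0.0 if done else gamma * value_next
    return reward + bootstrap - value_s


def entropy(probs):
    """Shannon entropy of a discrete action distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def actor_loss(logp_action, advantage, probs, entropy_coef=0.01):
    """Negative policy-gradient surrogate with an entropy bonus.

    Minimizing this loss raises the log-probability of actions with positive
    advantage and, through the entropy term, discourages premature collapse
    to a deterministic policy.
    """
    return -(logp_action * advantage) - entropy_coef * entropy(probs)


adv = td_advantage(reward=1.0, value_s=2.0, value_next=3.0, gamma=0.5)
loss = actor_loss(math.log(0.5), adv, [0.5, 0.5])
print(adv, loss)
```

The critic itself would be trained separately, for example by regressing its value estimate toward the same one-step target used in `td_advantage`.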

Each of these RL paradigms enables EVCS control systems to autonomously learn optimal strategies that adapt to user preferences, grid constraints, and cost objectives—offering a scalable and intelligent alternative to traditional rule-based or model-driven approaches.

5. Attribute Tables and Summaries of RL Applications

This section surveys high-impact RL applications in EVCSs published from 2015 to 2025, grouped into value-based, policy-based, actor–critic, and hybrid approaches. The structure allows readers to quickly identify relevant applications in the attribute tables and refer to the detailed summaries for deeper insights into their methodologies and findings.

5.1. Attribute Tables and Summaries Description

The key attribute tables systematically describe each application according to the following characteristics, ensuring a comprehensive understanding of the overall approach:

- Ref.: Contains the reference application, which is listed in the first column.

- Year: Contains the publication year for each research application.

- Method: Contains the specific RL algorithmic methodology applied in each application.

- Agent: Contains the agent type of the concerned methodology (single-agent or multi-agent RL approach).

- FH/TS: Illustrates the forecast horizon (FH) and the time step (TS) intervals for the RL control application considering the EVCSs.

- Baseline: Illustrates the comparison methods used to evaluate the proposed RL approach (such as RBC, MPC, greedy, fixed, MILP, or other RL-based strategies).

- Type: Contains the primary deployment type of the concerned EVCSs (e.g., residential, public, fleet, highway, etc.).

- Integration: Indicates additional energy systems and mechanisms interacting with the EVCSs (e.g., RES, ESS, HVAC, DR, V2G, main grid, etc.).

- Data: Describes the type of data used to train the RL algorithms. Studies utilizing actual measurements from real-world EVCS deployments are labeled as real; those using simulated or synthetic data are labeled as synth; and those combining both sources are marked as Both.

Moreover, the summaries are integrated in the tables that follow each key attribute table, providing a brief synopsis of the applications. More specifically:

- Author: Contains the name of the author along with the reference application.

- Summary: Contains a brief description of the research work.

The abbreviations “-” or “N/A” represent the “not identified” elements in tables and figures.

5.2. Value-Based RL Applications

The value-based RL applications for EVCSs and their primary attributes are integrated in Table 2, while the brief summaries of the applications are illustrated in Table 3.

Table 2. Key attributes of value-based RL applications for EVCSs.

Table 3. Summaries of value-based RL applications for EVCSs.

5.3. Policy-Based RL Applications

The policy-based RL applications for EVCSs and their primary attributes are integrated in Table 4, while brief summaries of the applications are illustrated in Table 5:

Table 4. Key attributes of policy-based RL applications for EVCSs.

Table 5. Summaries of policy-based RL applications for EVCSs.

5.4. Actor–Critic RL Applications

The actor–critic RL applications for EVCSs and their primary attributes are depicted in Table 6, while brief summaries of the applications are illustrated in Table 7:

Table 6. Key attributes of actor–critic RL applications for EVCSs.

Table 7. Summaries of actor–critic RL applications for EVCSs.

5.5. Hybrid RL Applications

The hybrid RL applications for EVCSs and their primary attributes are depicted in Table 8, while brief summaries of the applications are illustrated in Table 9:

Table 8. Key attributes of hybrid RL applications for EVCSs.

Table 9. Summary of hybrid RL applications for EVCSs.

6. Evaluation

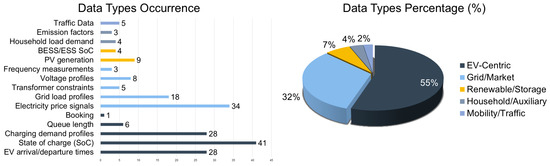

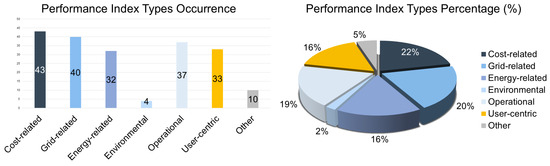

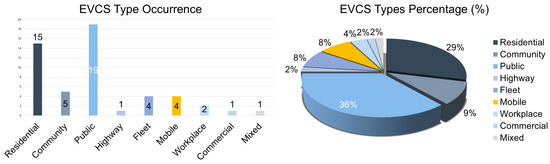

To offer a structured and comparative overview of existing RL applications for EVCSs, the evaluation focuses on seven key attributes:

- Methodology and Type: Defines the core structure and features of each RL algorithm.

- Agent Architectures: Explores prevailing agent architectures, emphasizing multi-agent RL trends.

- Reward Functions: Analyzes reward design variations across RL control implementations.

- Baseline Control: Reviews baseline strategies used to compare and validate RL performance.

- Datasets: Reviews the utilized data types for RL implementation in EVCS frameworks.

- Performance Indexes: Identifies commonly used evaluation metrics and their characteristics.

- EVCS Types: Identifies the EVCS types most commonly studied in research, highlighting trends and limitations.

These dimensions capture the critical factors in designing, deploying, and benchmarking RL-based controllers. Dissecting the literature along these axes helps readers understand how RL techniques align with various EVCS control frameworks, design constraints, and performance objectives, supporting informed choices for different network scenarios.

6.1. RL Types and Methodologies

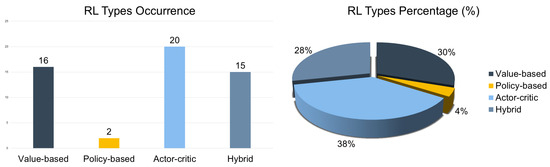

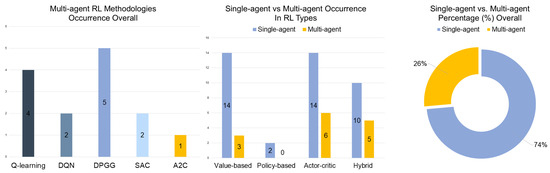

The analysis of RL methodologies applied to EVCS control reveals that actor–critic approaches dominate in volume, reflecting their strong capability to manage continuous action spaces, multi-agent interactions, and constraint-aware decision-making (see Figure 7—right). Techniques such as DDPG and SAC are frequently employed in grid-integrated and scalable scheduling frameworks [126,129,137,138], where they often outperform value-based methods in high-dimensional, dynamic environments (see Figure 7—left). Value-based RL algorithms—such as Q-learning and DQN—remain widely used, particularly in simpler, single-agent, or discretized scenarios where cost optimization is the primary focus [104,106,117]. In contrast, policy-based RL methods appear far less frequently in the literature, with only a few notable studies. Nevertheless, in those limited cases, policy-based strategies have demonstrated considerable promise, especially in embedding safety constraints directly into the EVCS decision-making pipeline [120,121]. Such methodological distribution reflects a broader evolution in the field—while value-based methods laid the groundwork for early EVCS control solutions, actor–critic architectures have since emerged as the dominant paradigm for managing complex, real-world, and multi-agent charging environments. More specifically:

Figure 7. (Left): RL type occurrence overall; (Right): RL type percentage (%) overall.

Value-based: The development of value-based RL in EVCS applications illustrates a clear trajectory, evolving from early model-free Q-learning toward more advanced deep and hybrid formulations that address the increasing complexity of charging systems. Initial studies, such as those using fitted Q-Iteration [104,109], validated the feasibility of batch-mode RL, enabling training from historical data and reducing the risks associated with real-world exploration. These methods proved especially effective in residential and public charging environments by offering scalability without needing detailed grid models. However, their offline learning nature limited adaptability in real-time scenarios, encouraging a shift toward online learning and function approximation. This shift became evident in studies like [108,120], where SARSA with linear approximators and deep value networks improved the responsiveness and scalability of control systems for dynamic pricing and power flow management. Meanwhile, deep Q-learning frameworks—such as those presented in [106,111,113]—incorporated temporal representation learning (e.g., LSTM networks) to model sequential patterns in charging behavior, improving forecasts and dynamic adaptability. While these innovations marked major advancements, they also introduced new challenges—deep value-based RL models required significant training data and were often sensitive to poorly shaped or sparse reward functions. Multi-agent extensions, as seen in [110,112], broadened the applicability of value-based approaches by decentralizing decision-making across multiple EVs or distributed loads. Such methods tackled issues of fairness and coordination in community-level EVCS operations, but also exposed vulnerabilities such as slow convergence and increased instability due to non-stationary learning environments. To address these, enhancements like prioritized experience replay (PER) and hierarchical coordination schemes were integrated [117].

Notably, hybrid architectures that blend value-based learning with actor–critic principles or incorporate advanced replay mechanisms (e.g., PER, HER) have shown promising results in continuous control environments [114,115,116]. These demonstrate the flexibility of value-based RL to adapt when appropriately extended. Nonetheless, persistent limitations remain—particularly in handling continuous action spaces, which often necessitate integration with policy-gradient methods [115]. Additionally, extensive reliance on simulation restricts real-world deployment potential, a challenge shared across most RL methodologies. Reward engineering remains a central obstacle. For example, while DQN-based methods such as [118] demonstrated effective cost and grid optimization, they lacked explicit modeling of long-term battery degradation or user-centric objectives. These gaps are prompting a convergence in algorithmic design, incorporating hierarchical RL, model-based components, and transfer learning to reduce data requirements and improve generalizability.

Looking ahead, value-based RL is expected to evolve from its traditional single-agent, cost-minimization role toward more sophisticated multi-agent, multi-objective frameworks that jointly optimize user satisfaction, renewable integration, and grid flexibility. Emerging works—such as the cooperative DDQN-PER model of [117] and the dynamic frameworks of [115]—have illustrated this direction. Ultimately, the next generation of value-based RL will need to bridge discrete and continuous control strategies, integrate domain-aware reward structures, and deliver scalable, real-world-ready policies.
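Prioritized experience replay (PER), referred to several times above, samples stored transitions in proportion to the magnitude of their temporal-difference error and corrects the resulting bias with importance weights. The following is a minimal sketch of the proportional variant. The exponents `alpha` and `beta`, the small constant `eps`, and the in-batch weight normalization are common choices assumed here for illustration, not settings reported by the cited studies.

```python
import random


def per_probabilities(td_errors, alpha=0.6, eps=1e-3):
    """Sampling probabilities proportional to (|TD error| + eps) ** alpha."""
    priorities = [(abs(e) + eps) ** alpha for e in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]


def per_sample(buffer, td_errors, batch_size, beta=0.4, rng=random):
    """Draw a prioritized batch; returns (transition, importance weight) pairs."""
    probs = per_probabilities(td_errors)
    n = len(buffer)
    idx = rng.choices(range(n), weights=probs, k=batch_size)
    # Importance weights offset the non-uniform sampling; dividing by the
    # largest weight in the batch keeps every weight in (0, 1].
    raw = [(n * probs[i]) ** (-beta) for i in idx]
    max_w = max(raw)
    return [(buffer[i], w / max_w) for i, w in zip(idx, raw)]


# Hypothetical buffer of three transitions with their latest TD errors.
transitions = ["t0", "t1", "t2"]
errors = [0.1, 1.0, 2.0]
batch = per_sample(transitions, errors, batch_size=4, rng=random.Random(0))
print(batch)
```

Setting `alpha=0` recovers uniform replay, which makes the exponent a convenient knob for comparing the two schemes.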

Policy-based: Although policy-based RL approaches remain limited in EVCS literature, they have addressed key limitations of value-based and actor–critic methods by focusing on direct policy optimization and the integration of safety guarantees. For example, Ref. [121] introduced Constrained Policy Optimization (CPO) to enforce grid and operational constraints directly during training, thereby ensuring safe and constraint-compliant charging behavior without the need for post hoc corrections. In a similar direction, Ref. [120] proposed a DNN-enhanced policy-gradient framework, augmented by dynamic programming techniques for real-time power flow control. This approach demonstrated rapid convergence and scalability in complex grid environments. Collectively, these studies highlight the potential of policy-gradient methods to effectively manage continuous control tasks and embed safety-critical features within the decision-making process. However, their broader adoption remains limited, potentially due to high computational demands and relatively low sample efficiency, which constrain their scalability and implementation in larger EVCS systems.

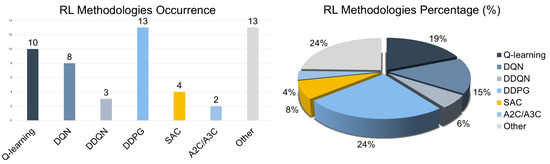

Actor–Critic: Actor–critic RL methodologies have rapidly evolved from basic single-agent implementations to advanced multi-agent and hybrid frameworks capable of addressing large-scale coordination, grid integration, and safety-aware control. Foundational contributions—e.g., the goal representation adaptive dynamic programming (GrADP) approach by [122]—have demonstrated early on the suitability of actor–critic methods for continuous control tasks, particularly in frequency regulation and ancillary services. Building on this foundation, deterministic policy gradient algorithms like DDPG became widely adopted (see Figure 8—left) for their ability to operate in continuous action spaces without discretization overheads [123,128,132]. The integration of recurrent structures, such as LSTM, further improved temporal decision-making in applications like dynamic pricing and SoC-constrained energy scheduling.

Figure 8. (Left): RL methodology occurrence overall; (Right): RL methodology percentage (%) overall.

Scalability and decentralization have emerged as prominent research directions with the advent of multi-agent actor–critic frameworks. Studies such as [129,135] employed centralized training with decentralized execution (CTDE) architectures, leveraging mechanisms like counterfactual baselines (e.g., COMA) and game-theoretic coordination to mitigate credit assignment challenges while promoting cooperative autonomy. Similarly, in [130], researchers introduced adaptive gradient re-weighting among critics to reduce policy conflict, whereas [134] extended multi-agent DDPG for V2G-enabled frequency regulation, enabling collaborative EV decision-making in grid-supportive scenarios.

Recent advances have also prioritized constraint-aware learning. For instance, Ref. [125] incorporated second-order cone programming (SOCP) into DDPG to enforce voltage stability constraints, while [138] applied a constrained soft actor–critic (CSAC) method to embed grid and operational constraints directly into the policy-learning process. Similarly, Ref. [133] proposed a bilevel DDPG framework that combines predictive LSTM modules with safety shields, enabling feasible and reliable scheduling decisions under uncertainty. Such innovations underscored the growing emphasis on safe and risk-aware RL—an especially important consideration for real-world EVCS operations within tightly constrained grid infrastructures.
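The idea of keeping learned actions inside physical limits can be illustrated with a very simple projection step placed between the policy output and the chargers. This is a stand-in written for illustration only: it is not the SOCP, CSAC, or shielding method of any study cited above, and the function name and limits are hypothetical. Requested rates are first clipped to the per-charger maximum and then scaled down proportionally if their sum exceeds the transformer limit.

```python
def project_to_limits(requested_kw, charger_max_kw, transformer_limit_kw):
    """Map raw charging-rate requests onto a feasible set of rates.

    Assumes unidirectional charging (no V2G), so negative requests map to 0.
    """
    # Per-charger bound: no negative rates, none above the charger rating.
    clipped = [max(0.0, min(r, charger_max_kw)) for r in requested_kw]
    total = sum(clipped)
    if total <= transformer_limit_kw or total == 0.0:
        return clipped
    # Station-level bound: shrink all rates by the same factor.
    scale = transformer_limit_kw / total
    return [r * scale for r in clipped]


safe = project_to_limits([12.0, 5.0, -3.0], charger_max_kw=10.0,
                         transformer_limit_kw=100.0)
shared = project_to_limits([10.0, 10.0, 10.0], charger_max_kw=10.0,
                           transformer_limit_kw=15.0)
print(safe, shared)
```

A post hoc projection of this kind guarantees feasibility at execution time but does not by itself teach the policy about the constraints, which is one motivation for the constraint-aware training schemes discussed above.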

Entropy-regularized actor–critic methods such as SAC are also gaining traction due to their improved exploration capabilities and higher sample efficiency in stochastic environments [136,139]. Meanwhile, Ref. [141] combined actor–critic RL with probabilistic forecasting and metaheuristics to develop a risk-sensitive, multi-objective scheduling framework. In parallel, Ref. [131] employed TD3 to address value overestimation and improve the training stability of battery-enabled charging infrastructure. Collectively, these innovations illustrate a trajectory toward integrated, hybrid actor–critic systems that balance learning efficiency with operational safety, grid stability, and market responsiveness.

Although actor–critic RL has shown promise, several challenges still limit its practical use in EVCS control. One major issue is its high sample complexity—these methods often need millions of state–action interactions to learn effectively, which makes real-world training difficult. Another common problem is training instability: if the critic’s estimates are inaccurate, they can misguide the actor, leading to unstable or even failed learning. To tackle such challenges, several strategies have been proposed. Some studies use offline pre-training with historical charging or mobility data to give the model a strong starting point, reducing the need for extensive online learning [106,115]. Others rely on expert demonstrations or simpler rule-based/MPC controllers to guide early learning, which helps improve stability [109,127]. In addition, actor–critic models are increasingly incorporating learned models of the environment or predictive demand approximations to reduce the number of real-world interactions needed. These efforts aim to bridge the gap between data-hungry simulations and the more demanding conditions of real-world EVCS deployment, where efficiency and stability are key.

Furthermore, multi-agent actor–critic models often suffer from non-stationarity and increased coordination overhead in large-scale networks—a limitation only partially addressed through hierarchical control decomposition [124] or federated learning architectures [126]. Going forward, future research should prioritize the integration of hierarchical actor–critic schemes, safety-focused RL, and hybrid decision-making frameworks informed by predictive modeling, in order to bridge the persistent gap between simulation-based learning and real-world deployment in EVCS systems.

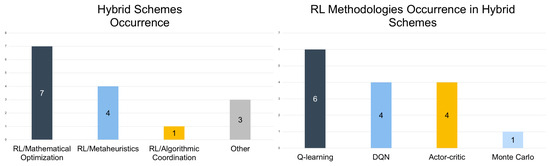

Hybrids: Hybrid RL approaches have also emerged as a prominent direction in EVCS control research, representing a significant evolution of RL by integrating complementary methodologies to overcome the individual limitations of model-free RL, mathematical optimization, and forecasting. Early contributions, such as [142], combined multi-step Q($\lambda$) learning with multi-agent coordination to enhance convergence speed and scalability in mobile EVCS scheduling under dynamic grid conditions. Likewise, Ref. [143] proposed a hybrid of model-based and model-free RL, using value iteration for rapid policy initialization followed by Q-learning refinement, thereby reducing exploration overhead while preserving adaptability.

According to the evaluation, hybrid RL schemes are predominantly characterized by combinations of RL with mathematical optimization techniques (such as MILP, ILP, BLP, SP, and game theory) for feasibility and constraint satisfaction [149,151] (see Figure 9, left and right). This hybridization illustrates how the real-time adaptability of RL can be effectively fused with the rigor of optimization frameworks to yield high-quality, feasible charging strategies. For instance, Ref. [149] integrated multi-agent DQN with MILP post-optimization to enable decentralized agents to learn local policies, while MILP ensured coordinated scheduling across battery-swapping and fast-charging stations. Similarly, Ref. [151] used RL in conjunction with MILP to maintain grid-compliant station operations, and [152] merged RL with LSTM-based forecasting and ILP for adaptive yet constraint-respecting V2G scheduling. Additional works have explored RL in tandem with game-theoretic models [145] or surrogate optimization [153], and hybridized RL with metaheuristics like GA, DE, WOA, and MOAVOA to support large-scale planning and global search tasks [148,156]. A smaller subset of hybrid studies also focused on algorithmic coordination and matching: for instance, Ref. [143] demonstrated how local RL decisions can be augmented through tailored global coordination schemes.

Figure 9. (Left): Hybrid RL scheme occurrence; (Right): RL methodology occurrence as a counterpart in hybrid schemes.

Recent developments in hybrid RL have expanded into multi-agent and actor–critic combinations. For example, Ref. [150] combined soft actor–critic (SAC) for virtual power plant (VPP) energy trading with TD3 for EVCS-level scheduling in a cooperative, multi-agent setting—demonstrating how hybrid RL can co-optimize grid-scale operations and local EV management. Similarly, Ref. [154] introduced a two-level P-DQN-DDPG architecture that integrates discrete booking decisions with continuous pricing control, a particularly valuable advancement for real-time, market-driven EVCS operations. These multi-layered frameworks signal a broader trend in hybrid RL: toward holistic, multi-agent, multi-objective, and multi-level optimization across operational, economic, and grid-interactive domains. In addition, surrogate modeling and simulation-based planning have been incorporated into hybrid RL designs. For instance, Ref. [153] fused Monte Carlo RL with surrogate optimization to solve large-scale EVCS siting problems, while [146] combined DQN with binary linear programming for efficient fleet and charger coordination. These innovations illustrate the potential of hybrid RL to act as an orchestrator—merging data-driven learning with analytical models to enhance convergence speed, policy quality, and scalability.

Overall, hybrid RL has matured into a unifying framework in which RL is no longer treated as a standalone controller, but as the intelligent core of integrated decision-making systems. This paradigm shift effectively addresses challenges such as scalability, safety, and multi-objective optimization in EVCS management, while paving the way for real-world deployment. Future research is expected to build on these foundations by exploring risk-aware scheduling strategies [141], scalable multi-agent architectures [155], and hierarchical RL–optimization pipelines that support real-time, grid-interactive EVCS control.
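The RL-plus-optimization pattern discussed in this subsection can be illustrated with a minimal sketch in which a learned policy proposes charger set-points and a separate layer repairs them to satisfy station constraints. The sketch below is an assumption-laden stand-in: it replaces the MILP/ILP layers used in the reviewed studies with a simple clip-and-scale projection, and the function name, charger ratings, and station limit are hypothetical values chosen for illustration only.

```python
from typing import List


def repair_to_feasible(proposed_kw: List[float],
                       charger_max_kw: List[float],
                       station_limit_kw: float) -> List[float]:
    """Map an RL-proposed charging vector onto simple station constraints.

    Simplified stand-in for an optimization layer (not a MILP): clip each
    charger to [0, rating], then scale all chargers down proportionally if
    the aggregate station limit is still exceeded.
    """
    clipped = [min(max(p, 0.0), cap) for p, cap in zip(proposed_kw, charger_max_kw)]
    total = sum(clipped)
    if total <= station_limit_kw or total == 0.0:
        return clipped
    scale = station_limit_kw / total
    return [p * scale for p in clipped]


# Hypothetical example: the policy proposes an infeasible set-point for three chargers.
proposed = [12.0, 9.0, -1.0]  # kW, as emitted by some learned policy
feasible = repair_to_feasible(proposed,
                              charger_max_kw=[11.0, 11.0, 11.0],
                              station_limit_kw=15.0)
print(feasible)
```

In a full hybrid scheme the repair step would typically be an optimization problem with scheduling and network constraints; the point of the sketch is only the division of labor between the learned proposal and the feasibility layer.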

6.2. Agent Architectures

MARL has emerged as a critical trend in EVCS control, addressing the scalability and coordination challenges posed by large-scale EV integration. As illustrated in Figure 10 (center and right), MARL methodologies account for a notable, though still minority, share of EVCS-related research. Early decentralized Q-learning studies, such as [110,112], demonstrated how independent agents can learn charging policies based solely on local observations, enabling scalable and modular control architectures without the need for centralized oversight. However, such fully decentralized frameworks often suffer from suboptimal global coordination, leading to the rise of centralized training with decentralized execution (CTDE) paradigms.

Figure 10.

(Left): Multi-agent applications by RL methodology; (Center): single-agent vs. multi-agent distribution by RL type; (Right): overall percentage of single-agent vs. multi-agent applications.

Recent works such as [129,130,134,150,155] employed shared critics with decentralized policy networks, allowing agents to access global grid-level knowledge during training while preserving execution autonomy. Moreover, advanced designs such as the non-cooperative game-theoretic MARL framework by [135] introduced spatially discounted rewards to balance local competitiveness with system-level coordination. Hybrid MARL frameworks have also emerged—for instance, the integration of MILP post-optimization in [149] and the hierarchical Kuhn-Munkres matching algorithm in [145]—showcasing how optimization techniques can enhance MARL to ensure grid compliance and infrastructure-wide efficiency. Overall, MARL research in EVCS control is clearly progressing toward hierarchical, hybrid, and cooperative architectures capable of addressing diverse objectives such as grid stability, fairness, and renewable energy integration.

An essential consideration in MARL control lies in the clear identification and implementation of three foundational elements: Structure, Training, and Coordination, as introduced in Section 3. These dimensions are crucial, as they define how knowledge is shared, how policies are learned, and how agent collaboration is orchestrated across the system. A well-defined strategy for each of these dimensions directly impacts scalability, learning stability, and adaptability to complex, dynamic environments like EVCS systems. A closer look reveals the following:

- Structure: Analysis of structural choices in MARL for EVCSs shows a clear dominance of architectures using a shared centralized critic with separate policy networks (see Figure 11—left). This configuration, adopted in studies such as [129,130,134,150], enables agents to benefit from a global value function during training while preserving decentralized policy execution for scalability. In contrast, architectures with fully independent critics and policies, as found in [110,112], prioritize agent autonomy and implementation simplicity at the cost of coordinated global optimization. Partially shared or hybrid structures are rare, appearing in only a few studies such as [142], with most research favoring CTDE-compatible architectures due to their balance of coordination and independence. Some advanced variants, like [130], further enhanced MARL scalability by employing multi-critic architectures with adaptive gradient re-weighting to mitigate inter-agent policy conflict.

Figure 11. (Left): MARL structure-type occurrence in EVCS frameworks; (Center): MARL training-type occurrence in EVCS frameworks; (Right): MARL coordination-type occurrence in EVCS frameworks.

- Training: Centralized training with decentralized execution (CTDE) has become the prevailing training paradigm in MARL-based EVCS control (Figure 11, center), enabling agents to incorporate global system information during learning while executing actions locally [126,135,155]. CTDE effectively mitigates non-stationarity in multi-agent settings and promotes stable convergence. Fully decentralized training appears mainly in simpler settings involving tabular or basic function-approximation Q-learning [110,112]. Some studies also explored mixed training schemes, such as [145], which combines decentralized Q-learning with periodic centralized matching, demonstrating how hybrid paradigms can enable scalable yet coordinated scheduling.

- Coordination: Implicit coordination has dominated the MARL landscape for EVCS control (see Figure 11, right), relying on shared reward functions or centralized critics to align agent behaviors without direct communication [129,134,150]. Such an approach can reduce communication overhead and simplify implementation, which makes it well suited to scalable and deployable systems. Emergent coordination, in which agents collaborate through shared interactions with the environment, has been observed in decentralized frameworks like [110,112]. Explicit or hierarchical coordination mechanisms remain relatively rare; for example, Ref. [145] used a Kuhn-Munkres algorithm to coordinate agents periodically for efficient V2V charging. The general absence of explicit communication-based coordination reflects a broader focus on lightweight, communication-efficient MARL solutions tailored to real-world EVCS deployment.
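The information flow behind the shared-critic, CTDE arrangement described in the three items above can be sketched as follows. This is a minimal illustration under stated assumptions, not the architecture of any cited study: the linear actors, the linear critic, the `[SoC, normalized price]` observation layout, and all numeric values are hypothetical, and only a single semi-gradient TD(0) step for the critic is shown (the actor update is omitted).

```python
import random
from typing import List, Sequence


class LocalActor:
    """Decentralized policy: sees only its own observation at execution time."""

    def __init__(self, obs_dim: int, rng: random.Random) -> None:
        self.weights = [rng.uniform(-0.1, 0.1) for _ in range(obs_dim)]

    def act(self, local_obs: Sequence[float]) -> float:
        score = sum(w * o for w, o in zip(self.weights, local_obs))
        return min(max(0.5 + score, 0.0), 1.0)  # fraction of rated power in [0, 1]


def joint_features(joint_obs: Sequence[Sequence[float]],
                   joint_actions: Sequence[float]) -> List[float]:
    """Concatenate every agent's observation and action (global information)."""
    return [x for obs in joint_obs for x in obs] + list(joint_actions)


class SharedCritic:
    """Centralized linear critic, used during training only."""

    def __init__(self, joint_dim: int) -> None:
        self.weights = [0.0] * joint_dim

    def value(self, joint_obs, joint_actions) -> float:
        phi = joint_features(joint_obs, joint_actions)
        return sum(w * f for w, f in zip(self.weights, phi))

    def td_update(self, joint_obs, joint_actions, reward: float,
                  next_value: float, lr: float = 0.01, gamma: float = 0.99) -> float:
        """One semi-gradient TD(0) step on the joint value estimate."""
        phi = joint_features(joint_obs, joint_actions)
        td_error = reward + gamma * next_value - self.value(joint_obs, joint_actions)
        self.weights = [w + lr * td_error * f for w, f in zip(self.weights, phi)]
        return td_error


rng = random.Random(0)
joint_obs = [[0.4, 0.2], [0.7, 0.2], [0.1, 0.2]]  # hypothetical [SoC, normalized price]
actors = [LocalActor(obs_dim=2, rng=rng) for _ in joint_obs]
critic = SharedCritic(joint_dim=3 * 2 + 3)

# Execution: each agent acts on its local observation alone.
actions = [actor.act(obs) for actor, obs in zip(actors, joint_obs)]
# Training: the shared critic is updated from the joint observation-action vector.
td_error = critic.td_update(joint_obs, actions, reward=1.0, next_value=0.0)
```

The asymmetry is the design choice of interest: `SharedCritic` consumes all observations and actions, whereas `LocalActor.act` never does, so the critic can be discarded once training ends.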

Overall, MARL remains relatively underexplored in EVCS control, with only 14 of 52 studies (26%; see Figure 10, right) employing decentralized or distributed RL methodologies, despite its suitability for large-scale, decentralized charging coordination. Current applications, such as decentralized Q-learning [110,112] and CTDE-based actor–critic methods [129,130,134], demonstrate MARL’s potential to manage grid-constrained, multi-agent environments while enabling scalable cooperation among EVs, aggregators, and grid entities. However, challenges including non-stationarity, high sample complexity, and coordination overhead appear to hinder broader adoption. Future research is expected to pursue MARL more intensively, focusing specifically on hierarchical coordination mechanisms [145], federated and privacy-preserving training schemes [126], and integration with forecasting and optimization layers [149,155] to improve convergence, safety, and real-world deployability. Such advancements may establish MARL as a next-generation approach to EVCS control, enabling self-organizing, grid-interactive, and fairness-aware charging ecosystems.

6.3. Reward Functions

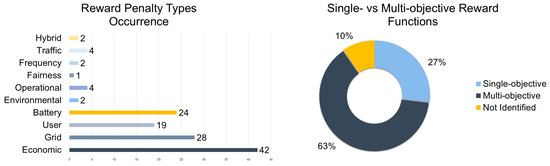

Reward design is a foundational element in RL-based EVCS control, as it directly shapes agent behavior and learning convergence. A clear trend in recent literature reveals a shift toward multi-objective reward formulations, which have become significantly more prevalent than single-objective designs (see Figure 12, right). Only a limited subset of studies, such as [104,108,110,113,114,123,127,131,135,136,144,145,148,151], have relied on single-objective rewards, often focused on isolated goals such as economic optimization or specific grid performance metrics. In contrast, the majority of RL implementations adopted composite reward functions that integrated multiple objectives, spanning economic, grid, user-experience, battery-health, environmental-sustainability, and fairness-related terms, reflecting the complexity of modern EVCS ecosystems.

Figure 12.

(Left): Frequency of Reward Components in Overall RL Applications; (Right): Percentage of Single vs. Multi-objective Reward Functions.

Among these objectives, economic-oriented terms are the most widely utilized (see Figure 12, left). Such terms typically aim to minimize charging costs or maximize operational profit through dynamic pricing strategies and energy arbitrage mechanisms [104,105,114,136,150]. However, recent work has increasingly paired these with grid-supportive components (such as transformer overload mitigation, load balancing, and voltage/frequency stabilization) to enhance alignment with distribution network performance and reliability [109,110,117,134,137,152].

In parallel, user-centric reward terms have gained traction. These include penalties for unmet SoC targets, excessive waiting times, or high levels of range anxiety [106,112,118,129,130]. Additionally, some studies incorporate battery degradation costs to ensure the long-term health of EV batteries, an increasingly relevant concern for both fleet operators and private users [117,129,131]. Though less common, environmental-oriented rewards—particularly those targeting CO2 reduction and renewable energy utilization—are beginning to emerge, highlighting a growing emphasis on sustainability and carbon neutrality in EVCS design [155,156].

Despite this increasing sophistication in reward structures, several critical challenges remain. Sparse reward environments, such as those described in [115], may significantly hinder learning convergence, particularly in complex or sequential decision-making tasks. Additionally, linear weighting schemes used in multi-objective formulations (e.g., [135,152]) are often sensitive to hyperparameter tuning, potentially introducing bias in the prioritization of objectives. Penalty-based constraint handling also dominates the field, which can lead to transient constraint violations during training [138].

Another notable trend emerging from the evaluation is that, despite the prevalence of multi-objective formulations, almost all existing EVCS RL studies ultimately relied on scalarized rewards through weighted sums or penalties [106,112,115,116,121,129,135,139,155,156]. Almost no work has been found to employ genuine multi-objective RL methods, such as Pareto front learning, multi-objective policy gradients, or separate critics per objective. This highlights a significant research opportunity to move beyond ad hoc weighting schemes toward principled frameworks that can capture diverse trade-offs and provide more robust charging policies.
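The distinction between scalarized and genuinely multi-objective treatment can be made concrete with a small sketch. The example below contrasts a weighted-sum reward, whose preferred action flips with the choice of weights, against a Pareto-dominance filter that retains every non-dominated trade-off. All action names, cost triples, and weights are hypothetical values for illustration and do not come from any reviewed study.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical per-step costs (lower is better) for candidate charging actions:
# (energy cost, grid overload penalty, unmet-SoC penalty)
candidates: Dict[str, Tuple[float, float, float]] = {
    "charge_now": (4.0, 3.0, 0.0),
    "charge_later": (1.5, 0.5, 2.0),
    "charge_half": (2.5, 1.0, 1.0),
    "wasteful": (4.5, 3.5, 0.5),
}


def scalarized_reward(costs: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted-sum scalarization: reward is the negative weighted cost."""
    return -sum(w * c for w, c in zip(weights, costs))


def best_action(options: Dict[str, Tuple[float, ...]], weights: Sequence[float]) -> str:
    """Action with the highest scalarized reward under the given weights."""
    return max(options, key=lambda name: scalarized_reward(options[name], weights))


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b on every objective and better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(options: Dict[str, Tuple[float, ...]]) -> List[str]:
    """Names of all actions that no other action dominates."""
    return [name for name, costs in options.items()
            if not any(dominates(other, costs)
                       for other_name, other in options.items() if other_name != name)]


print(best_action(candidates, weights=(1.0, 1.0, 0.1)))  # cost- and grid-heavy weights
print(best_action(candidates, weights=(0.1, 0.1, 5.0)))  # user-heavy weights
print(sorted(pareto_front(candidates)))
```

With these illustrative numbers, the two weight vectors select different actions, while the Pareto filter keeps three of the four candidates and discards only the one that is worse on every objective. A scalarized agent commits to one point on this front at design time, which is the weighting sensitivity noted above.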

Looking ahead, the development of more nuanced reward mechanisms is essential. Promising directions include risk-aware and probabilistic reward shaping strategies, such as those demonstrated in [141], where penalties are dynamically weighted based on uncertainty or event severity. Hierarchical or modular reward architectures may also offer greater clarity and flexibility, enabling agents to independently learn sub-policies for economic efficiency, grid compliance, and user satisfaction, while maintaining coherence at the system level. Finally, the integration of real-world feedback, particularly in federated or decentralized learning setups [126], will be vital in ensuring that reward functions are practically grounded, robust, and capable of generalization to large-scale EVCS deployment scenarios.

6.4. Baseline Control

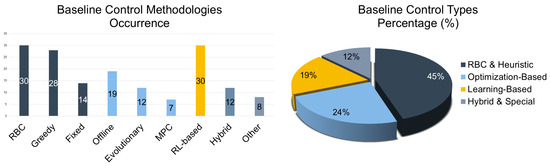

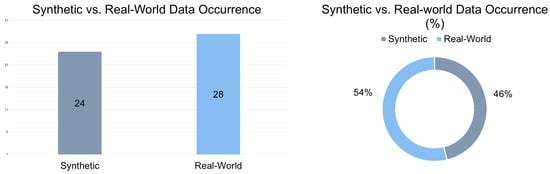

The analysis of baselines employed across RL-based EVCS studies reveals clear patterns regarding how researchers benchmark their approaches, reflecting both methodological maturity and evolving expectations in the field. RBC strategies, typically based on Time-of-Use (TOU) pricing or immediate charging policies, remain among the most widely adopted baselines [104,109,137] (see Figure 13, left). These methods are computationally simple, forecasting-free, and reflect legacy charging practices, making them well suited for demonstrating how RL can dynamically adapt to real-time pricing signals and grid conditions. Particularly in residential and public charging scenarios, outperforming RBC allows RL to establish its relevance in moving from static heuristics to context-aware optimization [118,140]. Another frequently used, yet simplistic, heuristic is the greedy or charge-when-plugged strategy, where EVs immediately draw maximum available power upon connection until fully charged [105,118,152]. Despite its lack of intelligence, this baseline provides a clear lower bound for evaluating RL effectiveness, especially in minimizing peak demand, transformer stress, and overall charging costs in single-agent and residential settings [106,118,137,138]. Together with fixed control strategies, these heuristic approaches constitute the dominant form of baseline control in current literature (see Figure 13, right).

Figure 13.

(Left): Occurrence of baseline methodologies in overall RL applications; (Right): percentage of baseline control types used.

Offline optimization methods, particularly mixed-integer linear programming (MILP), have also been widely used to benchmark RL against theoretically optimal schedules with perfect foresight (see Figure 13, left). Such approaches offer a valuable upper bound on achievable performance when assessing cost minimization, load balancing, and constraint satisfaction [106,149,151]. For instance, Ref. [116] compared a cooperative DDQN framework with MILP optimization assuming full future knowledge to highlight RL’s capacity to approximate optimal solutions in real-time settings. Similarly, Refs. [111,118] employed offline MILP or deterministic optimization to quantify the performance gap between centralized planning and RL-based adaptive control. These comparisons reinforce RL’s advantage in uncertain or computationally constrained environments where offline methods may be impractical.
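To make the role of these baselines concrete, the sketch below compares a charge-when-plugged schedule, a threshold-based TOU rule, and a perfect-foresight optimum for a single EV. It is a simplified illustration under stated assumptions: the price vector, energy demand, per-slot limit, and threshold are hypothetical, and the perfect-foresight schedule is obtained by filling the cheapest slots first, which is exact only for this reduced problem (one EV, a per-slot energy cap, and no network constraints) and stands in for the MILP formulations used in the literature.

```python
from typing import List


def greedy_schedule(prices: List[float], energy_kwh: float, max_kwh: float) -> List[float]:
    """Charge-when-plugged: draw the maximum from the first slot until full."""
    schedule, remaining = [], energy_kwh
    for _ in prices:
        e = min(max_kwh, remaining)
        schedule.append(e)
        remaining -= e
    return schedule


def tou_rule_schedule(prices: List[float], energy_kwh: float, max_kwh: float,
                      threshold: float) -> List[float]:
    """Rule-based control: charge only in slots priced at or below the threshold."""
    schedule, remaining = [], energy_kwh
    for price in prices:
        e = min(max_kwh, remaining) if price <= threshold else 0.0
        schedule.append(e)
        remaining -= e
    return schedule


def foresight_schedule(prices: List[float], energy_kwh: float, max_kwh: float) -> List[float]:
    """Perfect foresight for this reduced problem: fill the cheapest slots first."""
    schedule, remaining = [0.0] * len(prices), energy_kwh
    for t in sorted(range(len(prices)), key=lambda i: prices[i]):
        e = min(max_kwh, remaining)
        schedule[t] = e
        remaining -= e
    return schedule


def cost(prices: List[float], schedule: List[float]) -> float:
    return sum(p * e for p, e in zip(prices, schedule))


# Hypothetical setting: six slots, 20 kWh needed, at most 10 kWh per slot.
prices = [0.30, 0.20, 0.10, 0.25, 0.10, 0.20]  # currency units per kWh
greedy_cost = cost(prices, greedy_schedule(prices, 20.0, 10.0))
rule_cost = cost(prices, tou_rule_schedule(prices, 20.0, 10.0, threshold=0.20))
optimal_cost = cost(prices, foresight_schedule(prices, 20.0, 10.0))
print(greedy_cost, rule_cost, optimal_cost)
```

An RL policy evaluated on the same setting would be expected to fall between the heuristic costs and the foresight optimum, which is the bracketing role that heuristic and offline baselines play in the reviewed studies.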