1. Introduction

Interior permanent-magnet synchronous machines (IPMSMs) are critical components of modern electric vehicle (EV) drivetrains, valued for their high efficiency, compact design, and robust operational reliability. However, inter-turn short-circuit (ITSC) faults represent significant operational risks, as even minimal winding defects can escalate into severe, irreversible damage such as permanent magnet demagnetization and diminished machine reliability [1, 2]. Timely detection and accurate diagnosis of these faults are essential to avoid costly downtime, enhance operational safety, and sustain machine performance, especially given the increasing adoption of and reliance on EVs globally. Despite these imperatives, the availability of comprehensive and realistic datasets tailored specifically for ITSC fault analysis remains limited.

Approaches that involve physically induced faults, such as those derived from measurements under controlled short-circuit conditions [3], deliver critical insights but entail destructive tests, extensive experimental setups, and limited generalizability across various machine designs. Alternatively, finite element method (FEM) simulations offer high-quality synthetic datasets but require intricate machine models, significant computational efforts, and careful modeling of transient states to detect subtle fault signatures [4]. For instance, the approach proposed in [5], utilizing stray magnetic field sensing, effectively captures flux variations from faults via FEM simulations and experimental validations. However, it neglects magnetic saturation effects, which are pivotal in fault evolution during high-load conditions. Additionally, intellectual property constraints and highly specific machine configurations further inhibit data sharing, consequently forcing redundant data generation efforts and impeding technological progress.

The growing reliance on machine learning (ML) and artificial intelligence (AI) techniques in motor and EV diagnostics highlights the increasing need for large, high-fidelity datasets [6, 7]. These techniques thrive on diverse and accurate data to train and validate advanced diagnostic algorithms. However, recent studies [8] have identified significant gaps in the diagnostic landscape, particularly the lack of datasets and fault features tailored for incipient faults involving minimal shorted turns under transient or saturation conditions. Traditional diagnostic methods and recent ML-based approaches [9] have demonstrated efficacy in steady-state analysis and multi-source fault identification but fail to provide actionable data or features under non-stationary and saturated operating scenarios. Addressing these limitations is critical for advancing robust ITSC diagnostics in PMSMs suitable for real-world EV applications. For example, the ANN-based fault diagnosis framework proposed in [10] leverages features such as Total Instantaneous Power (TIP), Phase Shift (PS), and Negative Sequence Voltage (NSV) for SITSC fault detection. While effective under steady-state conditions, these features lack the robustness needed to capture transient dynamics and magnetic saturation effects, which are essential for real-time fault diagnostics in EV drivetrains. This highlights the urgent need for advanced feature identification methods and models that can address the nonlinear and dynamic behaviors characteristic of EV applications.

The absence of robust tools and datasets capturing transient behaviors and saturation effects in ITSC fault models has resulted in reliance on oversimplified models that fail to accurately represent early-stage fault characteristics. Few state-of-the-art studies address the detailed, operation-specific requirements for EVs. For example, the model presented in [11] analyzes faults with as few as one shorted turn, providing insights into current behavior under varying fault resistance and operational conditions. This model explicitly incorporates magnetic saturation effects through inductance variations derived from FEM simulations in a four-dimensional lookup table, accounting for nonlinearities caused by high fault currents. However, transient dynamics are not explicitly modeled, and while the study employs Field-Oriented Control (FOC) to simulate closed-loop machine responses, it does not propose specific small-scale features suitable for ML applications. Furthermore, the dataset is limited to steady-state conditions and specific machine configurations, lacking the transient fault features essential for developing ML-based diagnostic tools. Although the model demonstrates high consistency between experimental and simulated results, it does not provide quantified detection metrics (e.g., accuracy or error rates) for fault classification.

Similarly, research by Zafarani et al. [12] investigates low-intensity faults, such as the case of 8/71 (approximately 11%) of one phase’s coils being shorted. However, it does not explore finer granularity for fewer shorted turns. Magnetic saturation effects are partially considered, revealing how flux density distribution changes with fault intensity, but the study does not address transient behaviors specific to EVs, such as rapid torque and speed changes. Both open-loop and closed-loop conditions are analyzed, including the impact of controllers on fault dynamics, but the dataset is not optimized for ML feature extraction, particularly for incipient faults. Furthermore, the dataset lacks transient dynamics, low-speed saturation effects, and generalized fault conditions across diverse machine types, limiting its applicability for ML-based methods aimed at detecting incipient faults during dynamic EV operations. These limitations emphasize the pressing need for datasets and simulation frameworks that enable the identification of fault features under real operating conditions, facilitating the development of high-precision fault-diagnostic methods.

As EV systems grow more complex and interconnected, integrating fault detection models into digital twins and virtual prototyping platforms, as demonstrated in the VISION-xEV framework [13], can enable agile predictive maintenance strategies. Such frameworks facilitate the simulation of real-world operating conditions, optimizing system-level performance while reducing development cycles. However, existing methods, including VISION-xEV, still lack explicit consideration of magnetic saturation effects and transient fault feature extraction, further highlighting the need for advanced diagnostic solutions tailored to these scenarios.

Despite their proven effectiveness across various domains, Transformer models have remained largely unexplored in electrical machine fault detection, particularly for ITSC faults in PMSMs, where non-stationary operating conditions and transient fault events present unique challenges. Existing fault detection methodologies struggle to capture these dynamic behaviors effectively, underscoring the need for advanced modeling approaches like Transformers that can autonomously learn and adapt to such complex temporal patterns. Existing research predominantly relies on signal processing-based methodologies, including motor current signature analysis (MCSA), wavelet transformations, or hybrid approaches combining distance metrics and time-frequency decompositions [14, 15, 16]. While these methods offer valuable insights in certain conditions, they often necessitate manual feature extraction, empirical thresholding, or visual scalogram analysis, limiting their scalability and adaptability to complex, non-stationary environments typical of EV applications. Recent work such as [17] illustrates the use of Gamma indices and feature engineering in field-oriented control (FOC)-driven induction motors for fault detection, yet these approaches remain confined to specific drive conditions and lack generalizability to inverter-fed PMSMs under transient scenarios.

In contrast, recent advancements in deep learning, particularly Transformer architectures, have revolutionized sequential modeling in domains such as natural language processing, computer vision, and time-series forecasting [18, 19]. Transformers leverage attention mechanisms to capture local and global dependencies, overcoming the limitations of recurrent networks. However, most Transformer adaptations for time-series applications, including those presented by Kämäräinen [19], focus on periodic signal forecasting, addressing relatively simple datasets and overlooking the non-stationary, transient characteristics inherent in PMSM fault signals.

In this work, a Transformer-based diagnostic scheme is introduced in which wavelet-transformed stator-current components are supplied to the network for automated detection of incipient inter-turn short-circuit (ITSC) faults in inverter-driven permanent-magnet synchronous machines (PMSMs). For this purpose, a dedicated high-fidelity finite element framework is designed to generate flux-linkage look-up tables (LUTs) that encompass both linear and saturated regimes; the inversion of these tables enables efficient real-time execution on FPGA and System-on-Chip (SoC) targets. The inverter-controlled transient behavior of the drive is subsequently co-simulated in Simulink over an extended operating envelope. From these simulations, short time-localized windows of the stator currents are extracted and subjected to wavelet analysis, yielding time-frequency representations that serve as input tokens to a Transformer encoder. Owing to its self-attention mechanism, the network autonomously learns discriminative temporal features, obviating the manual thresholding and feature engineering required by conventional motor-current signature analysis and wavelet-only techniques. Therefore, the pipeline is able to identify early-stage ITSCs under strongly non-stationary conditions, including rapid torque transients and magnetic saturation. To the authors’ knowledge, this constitutes the first application of a Transformer architecture to early ITSC diagnosis in PMSMs suitable for real-time application under transient conditions. It also demonstrates that the combination of physics-based simulation data and sequential attention modeling provides robust, real-time fault detection suitable for next-generation electric-vehicle powertrains.

3. High-Fidelity Dataset Generation for ITSC Fault Detection

3.1. Static Data Generation

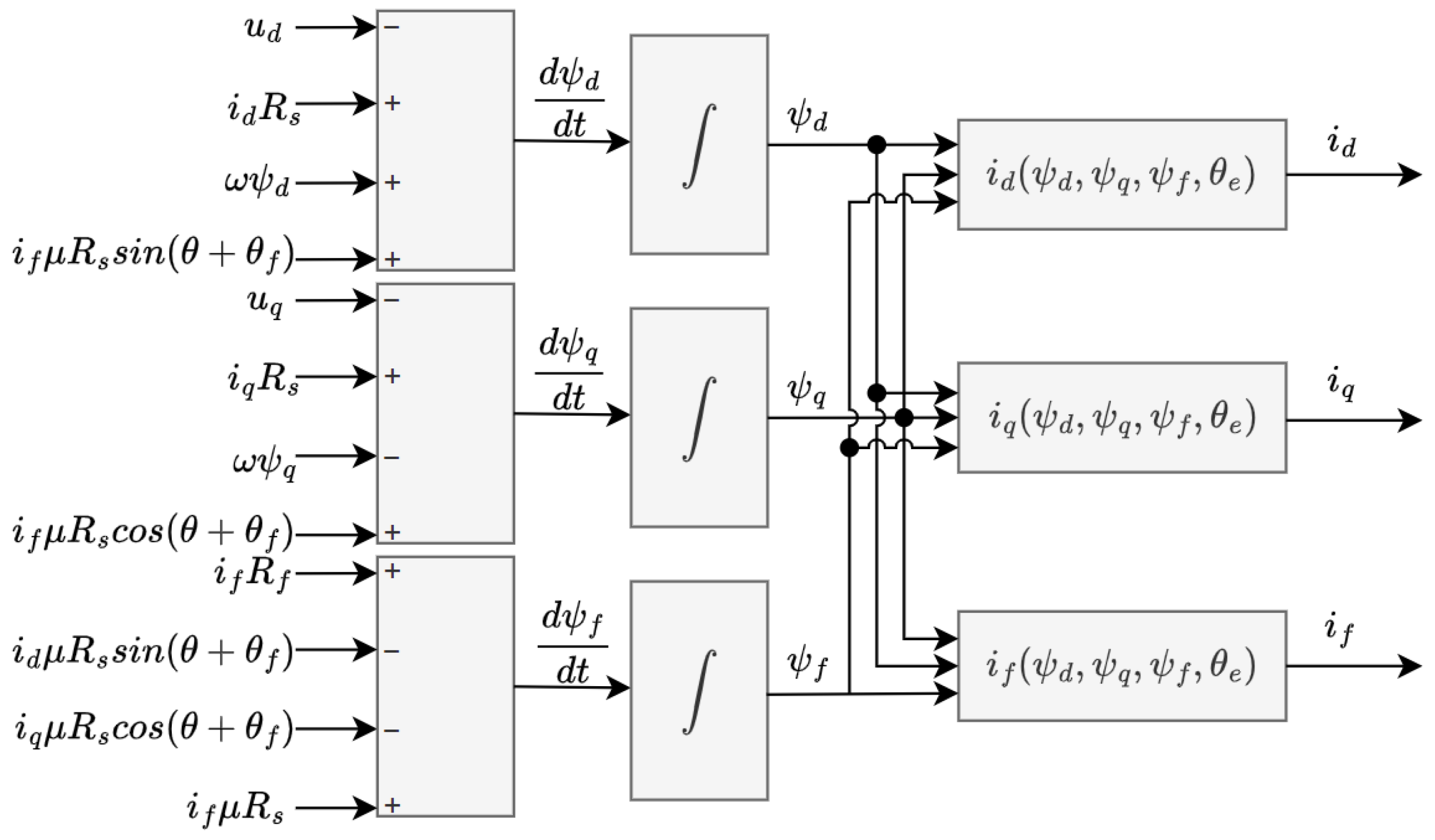

3.1.1. Mapping the Flux-Linkage Relationships

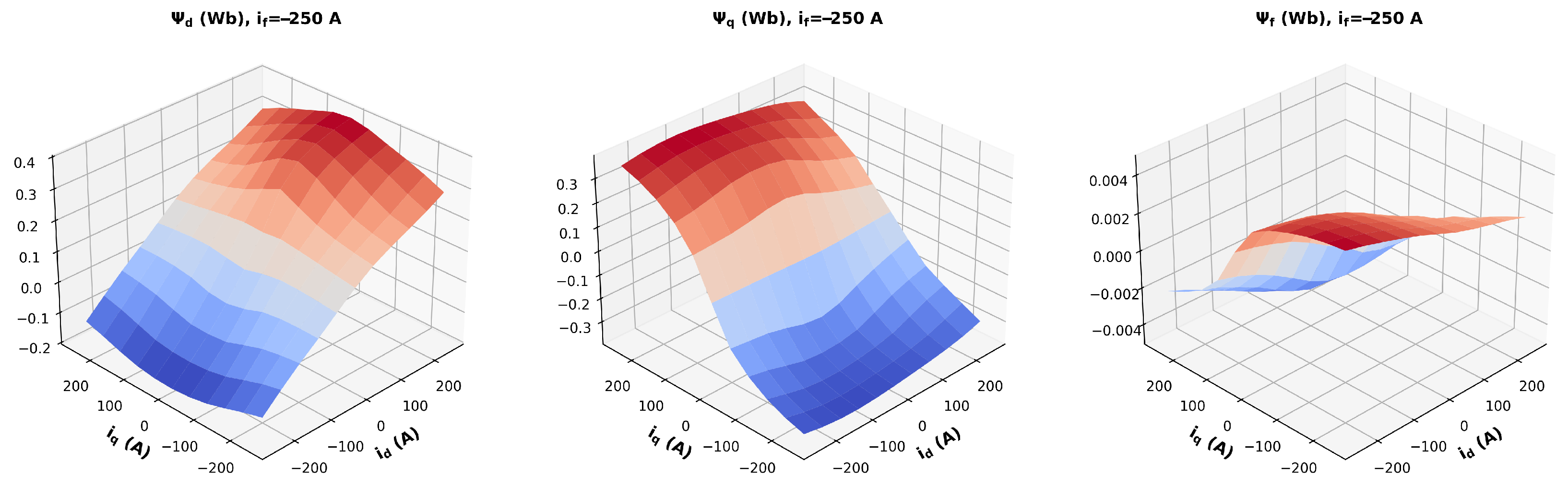

In this section, the framework and procedures for generating the flux maps, current maps, and torque map are presented. The dataset is constructed from flux maps, current maps, and torque data samples obtained through static finite element method (FEM) simulations of an IPMSM. Static FEM simulations are used to map the machine’s flux-linkage relationships under various current excitations and rotor positions, providing the necessary nonlinear characteristics for subsequent dynamic simulations without the computational burden of transient FEM analyses.

3.1.2. Simulation Workflow

The simulation workflow for synthetic data generation is shown in Figure 4. The FEM model of the machine is developed using the open-source FEMM software. To reduce computational time, static FEMM simulations are executed in parallel across multiple CPU cores, controlled by the Python 3.11 multiprocessing library. Machine identification employs equidistant grid points defined by Equation (14) based on the nominal current range.
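The parallel sweep described above can be sketched as follows. This is a minimal illustration, not the authors' script: `solve_static_point` is a hypothetical placeholder that returns toy saturating fluxes instead of calling FEMM, and the axis names (`i_d`, `i_q`, `theta`), the grid limits, and the helper names are assumptions made for the example.

```python
import math
from multiprocessing import Pool


def equidistant_grid(i_max, n_points):
    """Return n_points equally spaced current values on [-i_max, i_max]."""
    step = 2.0 * i_max / (n_points - 1)
    return [-i_max + k * step for k in range(n_points)]


def build_points(i_max, n_points, angles):
    """Cartesian product of two current axes and the rotor angles (assumed axes)."""
    grid = equidistant_grid(i_max, n_points)
    return [(i_d, i_q, th) for i_d in grid for i_q in grid for th in angles]


def solve_static_point(point):
    """Hypothetical stand-in for one static FEM solve (does NOT call FEMM).

    A real worker would load the model, impose the currents and rotor angle,
    run the solver, and read back the flux linkages.
    """
    i_d, i_q, theta = point
    psi_d = 0.1 * math.tanh(i_d / 150.0) + 0.05  # toy saturating flux
    psi_q = 0.2 * math.tanh(i_q / 150.0)         # toy saturating flux
    return (i_d, i_q, theta, psi_d, psi_q)


def run_sweep(points, workers=1):
    """Evaluate all grid points, serially or across worker processes."""
    if workers <= 1:
        return [solve_static_point(p) for p in points]
    with Pool(processes=workers) as pool:
        # Each grid point is independent, so a plain map is sufficient.
        return pool.map(solve_static_point, points)
```

When more than one worker is requested, `run_sweep` should be called from under an `if __name__ == "__main__":` guard so that platforms which spawn new interpreters do not re-run the sweep on import.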

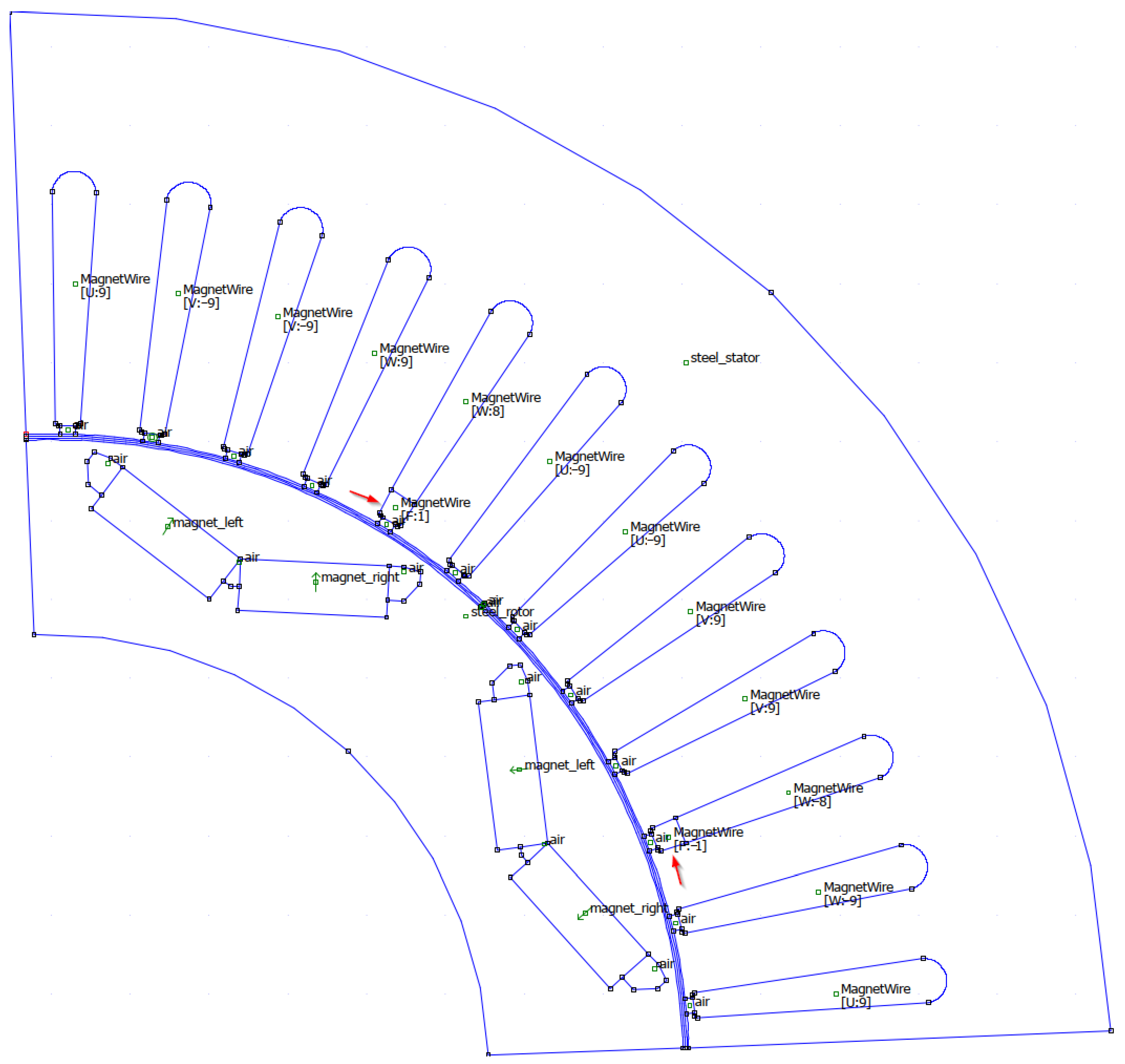

3.1.3. FEM Model

A thoroughly validated IPMSM FEM model of the 2004 hybrid electric Toyota Prius, developed in [

22] and widely characterized in the literature [

23,

24], serves as the baseline for this study. The main specifications of the machine are summarized in

Table 1 [

23]. Compared to the Toyota Prius 2004 IPMSM baseline FEM model, the developed model introduces explicit simulation of fault-induced coil segmentation and asymmetric flux-linkage conditions, which is essential for representing ITSC fault dynamics accurately. Furthermore, the flux-linkage lookup tables (LUTs) generated from FEM simulations facilitate direct, real-time implementation in FPGA-based automotive simulation frameworks commonly used in industry for rapid, high-fidelity EV drivetrain prototyping, eliminating the need for repeated FEM computations during testing. Since an ITSC fault causes asymmetric behavior, it is necessary to simulate the entire machine geometry. In order to simulate the fault, the FEM model is modified by partitioning the affected phase coils into two segments. One segment represents the shorted turn, while the other reflects the remaining healthy turns, with a reduced effective number of turns. This arrangement for one pole pair is depicted in

Figure 5. The maps are created for one shorted turn; therefore, it is possible to scale fluxes to a higher number of shorted turns [

25] (e.g., a fault flux in Equation (

15)). The identified flux maps are depicted in

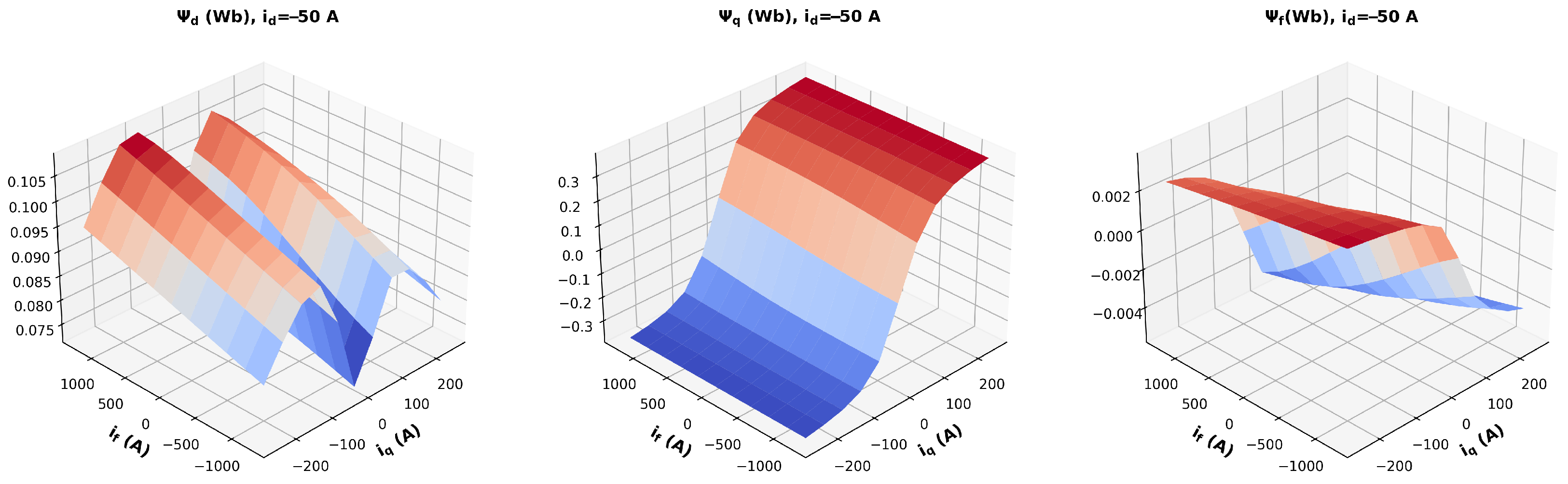

Figure 6 and

Figure 7.

3.1.4. Flux-Map Inversion

For the current calculations in Equation (14), the inverse mapping of the generated flux maps is performed, interchanging the three current domains with the corresponding flux-linkage co-domains [26]. The equidistant grid points for the inverse map are determined from the original flux map by using Equation (16).

Here, m corresponds to the number of grid points in the original map. The inverse solution is obtained by minimizing the root-mean-square flux error residual, as described in [4].

The algorithm employs MATLAB’s fmincon function to iteratively determine the currents that align with the pre-computed flux domain. Normalization using the calculated mid-value is essential for numerical stability, as the fault flux is significantly smaller than the other fluxes [4]. Within the algorithm, the flux function is approximated by using linear interpolation. This inversion process enables the incorporation of complex flux nonlinearity into real-time-capable models, supporting implementations on FPGA or SoC platforms where computational resources are limited.
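The inversion idea can be illustrated on a reduced, one-dimensional case. The sketch below is an assumption-laden simplification and not the authors' implementation: it inverts a single monotonic flux curve instead of the multi-dimensional maps, replaces `fmincon` with a plain golden-section search, and takes the normalization constant as the mean of the absolute flux limits. All function names are hypothetical.

```python
import math


def interp_linear(xs, ys, x):
    """Piecewise-linear interpolation of (xs, ys) at x; xs must be ascending."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for k in range(1, len(xs)):
        if x <= xs[k]:
            t = (x - xs[k - 1]) / (xs[k] - xs[k - 1])
            return ys[k - 1] + t * (ys[k] - ys[k - 1])
    return ys[-1]


def golden_min(f, lo, hi, tol=1e-9):
    """Golden-section search for the minimiser of a unimodal f on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c, d = b - g * (b - a), a + g * (b - a)
        if f(c) < f(d):
            b = d
        else:
            a = c
    return 0.5 * (a + b)


def invert_flux_map(i_grid, psi_grid, m):
    """Return (psi_targets, currents) on an equidistant flux grid of m points."""
    psi_lo, psi_hi = min(psi_grid), max(psi_grid)
    # Assumed normalisation constant; keeps the residual of order one.
    scale = 0.5 * (abs(psi_lo) + abs(psi_hi)) or 1.0
    psi_targets = [psi_lo + k * (psi_hi - psi_lo) / (m - 1) for k in range(m)]
    currents = []
    for target in psi_targets:
        def cost(i, t=target):
            # Normalised squared flux residual at trial current i.
            return ((interp_linear(i_grid, psi_grid, i) - t) / scale) ** 2
        currents.append(golden_min(cost, i_grid[0], i_grid[-1]))
    return psi_targets, currents
```

For a strictly monotonic curve the squared residual is unimodal, which is what makes the golden-section search valid here; the full multi-dimensional problem needs a constrained optimizer, as stated in the text.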

3.1.5. Technical Validation of Data

The generated flux data is validated by comparing the results and model behavior with findings reported in existing literature [4]. For this purpose, a dynamic Simulink model directly incorporates the FEM-generated flux data [27], and the resulting simulation outputs are compared with those obtained from the inverse flux-map model using the calculated current maps. The model behavior is also compared with experimental measurements from a real-world test bench [28]. The dataset contains measurements of a special-purpose PMSM that realistically emulates the ITSC fault. The main quantities are recorded for different phase faults, numbers of shorted turns, and load torques.

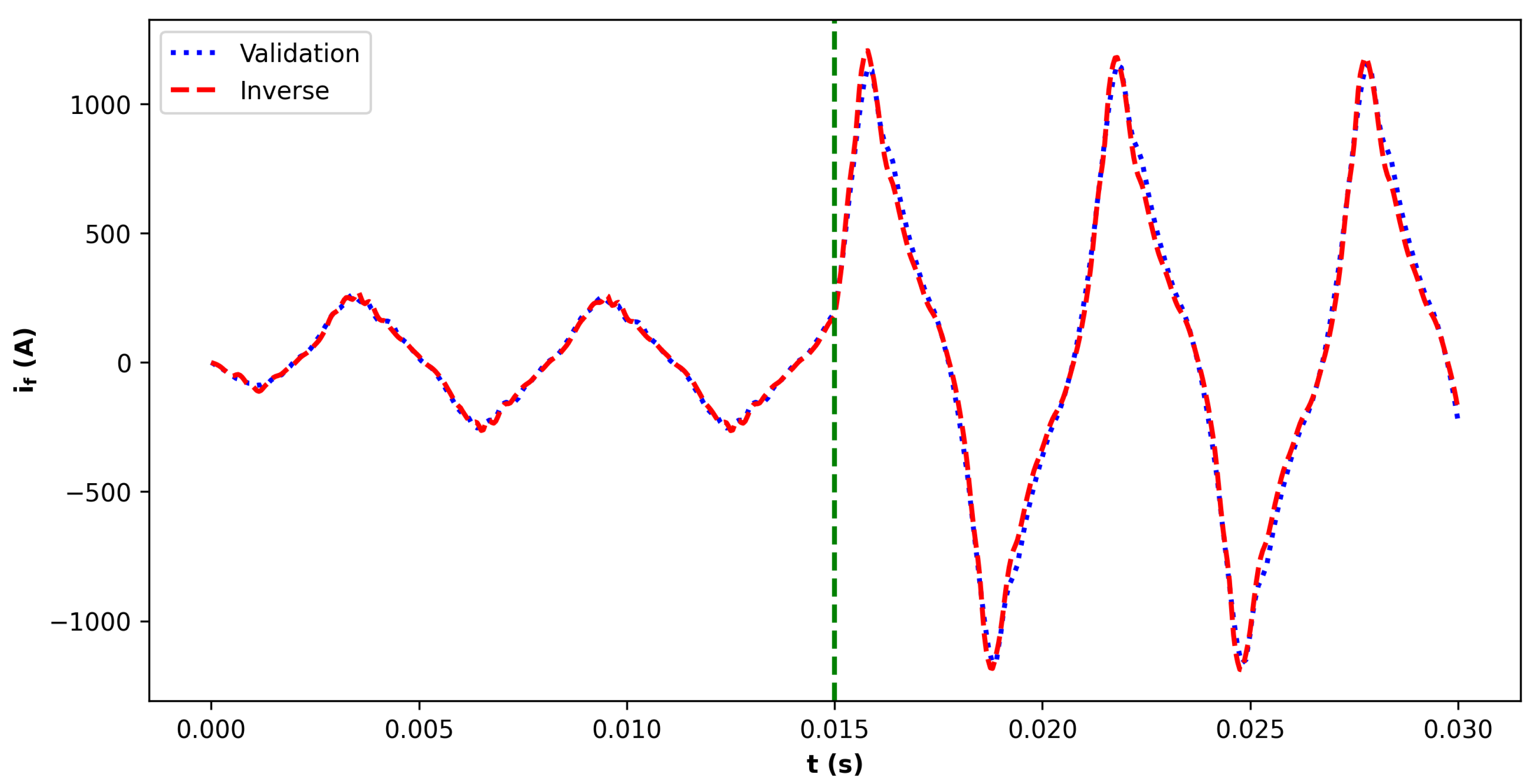

A comparison of the fault current between the flux map-based model and the inverse map model is shown in Figure 8. The detailed W-phase comparison is shown in Figure 9, while the three-phase stator currents of the inverse model are illustrated in Figure 10. In these simulations, the machine operates in generator mode at a speed of 2500 RPM with a 2.2 Ω resistive load. The fault resistance is varied stepwise, decreasing from 10 mΩ to 1 mΩ, to analyze the model behavior under different fault current magnitudes.

Figure 11 shows the waveform of the experimental data with four shorted turns in phase V. The machine is operating in motor mode with a 35 Nm load torque.

As demonstrated, the inverse map model closely approximates the flux map-based model, and the flux map-based model itself aligns well with findings in the literature. The asymmetric behavior shown by the experimental data can also be observed in the high-fidelity simulation of the faulted PMSM model. These results affirm the reliability of the dataset and the methodology for ITSC fault diagnosis and real-time simulation implementations. While the current approach provides high-fidelity results, further improvements may be achieved by employing finer discretization, more advanced optimization techniques, or higher-resolution solvers to yield even more accurate inverse mappings. This potential enhancement is particularly relevant for critical applications requiring the highest possible accuracy and reliability.

3.2. Transient Data Generation with Simulink

3.2.1. Model

The generated flux maps are integrated into a Simulink-based simulation of an electric vehicle drivetrain controlled using a Field-Oriented Control (FOC) algorithm. The simulated drivetrain includes models of the battery, DC link, inverter, faulty PMSM, and a simplified mechanical system. The inverter model incorporates PWM generation based on duty cycles from the controller and simulates switching behavior. It also generates trigger signals at PWM half-periods to replicate the sampling and computation processes of a real microcontroller-based FOC.

3.2.2. Data Recording

The model simulates intermittent operation modes over a 1 s time window, with a transient event randomly introduced between 0.2 and 0.6 s. The simulation begins at 1000 RPM, and experiments are conducted for different acceleration and deceleration cycles using varying values of n and the other parameters defined in Equation (18). The simulations are also repeated for faults introduced in phases U and V by shifting the electrical angle of the current maps by the corresponding phase offsets.

The inverter switching frequency is set to 10 kHz. With the triggering mechanism described above, this results in a current sampling rate of 20 kHz for the controller. According to [24], the maximum speed of the machine is approximately 6500 RPM, corresponding to a fundamental current frequency of around 433.33 Hz. The sampling rate is therefore 46.15 times the fundamental frequency and 23.08 times the corresponding Nyquist rate (twice the fundamental). Consequently, this sampling rate is sufficient to capture the higher-order harmonic components induced by inter-turn short-circuit (ITSC) faults.

Given the 20 kHz sampling rate over a 1 s window and assuming each value is stored as a 32-bit floating-point number, the total data storage required per signal is approximately 78.13 kB. As both stator-current components are stored, the total memory requirement becomes approximately 156.25 kB per simulation. This storage demand is well within the capabilities of modern embedded microcontroller platforms [29], and memory resources can be extended with external devices if required [30]. The above-mentioned data recording process is depicted in Figure 12.
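The sampling and storage figures quoted above follow from a few lines of arithmetic, reproduced here as a check. The pole-pair count of 4 is not stated in this section; it is an assumption inferred from the 433.33 Hz fundamental at 6500 RPM. The storage values are computed with 1024 bytes per unit, which is what reproduces the quoted 78.13 and 156.25.

```python
SWITCHING_HZ = 10_000.0        # inverter switching frequency (from the text)
SAMPLES_PER_PWM_PERIOD = 2     # one trigger per PWM half-period
MAX_SPEED_RPM = 6500.0         # maximum machine speed (from the text)
POLE_PAIRS = 4                 # assumption: implied by 433.33 Hz at 6500 RPM
WINDOW_S = 1.0                 # recorded time window
BYTES_PER_VALUE = 4            # 32-bit floating-point storage
N_SIGNALS = 2                  # two stator-current components are stored

sampling_hz = SWITCHING_HZ * SAMPLES_PER_PWM_PERIOD
fundamental_hz = MAX_SPEED_RPM * POLE_PAIRS / 60.0

# Ratio of the sampling rate to the fundamental and to twice the fundamental.
ratio_fundamental = sampling_hz / fundamental_hz
ratio_nyquist_rate = sampling_hz / (2.0 * fundamental_hz)

samples = int(sampling_hz * WINDOW_S)
kib_per_signal = samples * BYTES_PER_VALUE / 1024.0
kib_total = N_SIGNALS * kib_per_signal

print(f"fundamental: {fundamental_hz:.2f} Hz")
print(f"fs / f1: {ratio_fundamental:.2f}, fs / (2 f1): {ratio_nyquist_rate:.2f}")
print(f"storage: {kib_per_signal:.2f} per signal, {kib_total:.2f} total")
```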

3.3. Dataset

The simulations described above yielded a comprehensive dataset comprising 6667 transient samples. Each sample contains a 1 s time series of the two stator-current components sampled at 20 kHz, providing detailed temporal resolution under both healthy and faulty operating conditions. The dataset covers a wide range of operating speeds, mechanical loads, and fault resistances. Faults are introduced independently in all three stator phases (U, V, and W), ensuring exposure to diverse fault conditions.

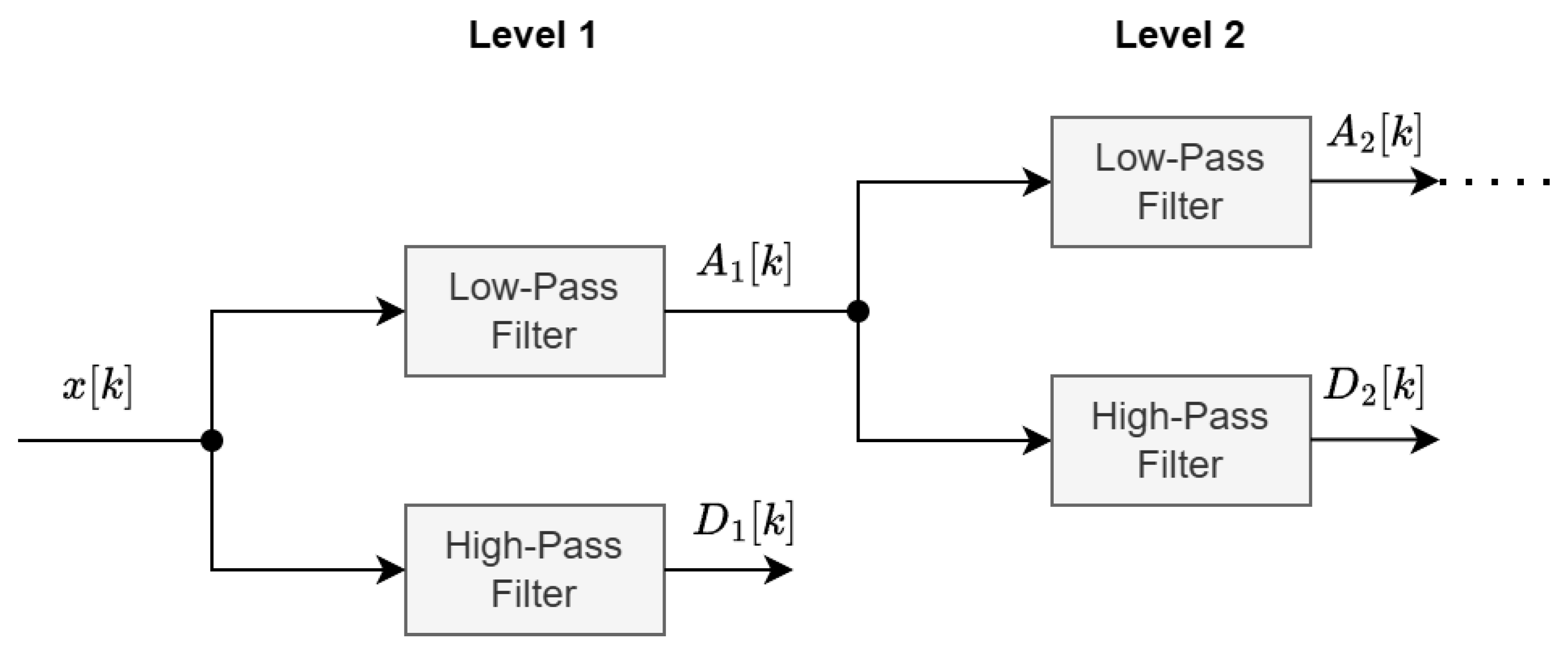

For feature extraction, the Park-vector modulus, computed from the two stator-current components, is subjected to a discrete wavelet transform (DWT), enabling time-frequency decomposition of the current signals and capturing both transient and steady-state characteristics. The Daubechies-38 mother wavelet is used with eight levels of decomposition.
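A dependency-free sketch of this feature-extraction chain is given below. It is illustrative only: the Haar wavelet is swapped in for Daubechies-38 so that the example needs no external library, the two input components are treated as generic orthogonal current components, and the function names are hypothetical. The eight-level decomposition structure is the same.

```python
import math


def park_vector_modulus(comp_a, comp_b):
    """Sample-wise magnitude of the current vector formed by two components."""
    return [math.hypot(a, b) for a, b in zip(comp_a, comp_b)]


def haar_step(x):
    """One DWT level with the Haar wavelet (stand-in for Daubechies-38)."""
    if len(x) % 2:
        x = x + [x[-1]]  # repeat the last sample for odd-length input
    r = math.sqrt(0.5)
    approx = [r * (x[k] + x[k + 1]) for k in range(0, len(x), 2)]
    detail = [r * (x[k] - x[k + 1]) for k in range(0, len(x), 2)]
    return approx, detail


def dwt_multilevel(x, levels):
    """Return (final approximation, [detail level 1, ..., detail level N])."""
    approx, details = list(x), []
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.append(detail)
    return approx, details


# Toy usage: a decaying oscillation on top of a constant current vector.
a = [3.0 + 0.1 * math.exp(-k / 50.0) * math.sin(0.8 * k) for k in range(1024)]
b = [4.0] * 1024
modulus = park_vector_modulus(a, b)
approx, details = dwt_multilevel(modulus, levels=8)
print([len(d) for d in details])  # coefficient count per level, finest first
```

Each level halves the number of coefficients, so the coarser levels yield shorter sequences than the finer ones. With a long filter such as Daubechies-38 the per-level lengths also depend on the boundary-extension mode, which this sketch does not model.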

The Daubechies-38 wavelet is employed due to its high number of vanishing moments, compact support, and capacity to resolve local signal irregularities across multiple scales. This choice is supported by the theoretical relationship between wavelet coefficients and pointwise Hölder regularity: for a function with a given Hölder exponent at a point, the magnitude of the wavelet coefficients near that point decays with the scale at a rate governed by that exponent [31]. This property allows for the detection of signal singularities such as those associated with incipient inter-turn short circuits, which typically correspond to regions of low Hölder regularity.
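For orientation, one common textbook statement of this decay bound is reproduced below. The notation is an assumption of this sketch ($f$ for the signal, $\alpha$ for the Hölder exponent at the point $x_0$, $A$ for a positive constant, and an $L^2$-normalized wavelet with more than $\alpha$ vanishing moments), and the exact form and normalization used in [31] may differ.

```latex
\left| W_f(a, b) \right| \;\le\; A \, a^{\alpha + 1/2}
\left( 1 + \left| \frac{b - x_0}{a} \right|^{\alpha} \right)
\qquad \text{for all scales } a > 0 \text{ and positions } b .
```

Read at positions close to $x_0$, the bound says that the coefficients shrink like $a^{\alpha + 1/2}$ as the scale decreases, so a small $\alpha$ (a sharp local irregularity) leaves comparatively large coefficients at fine scales.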

This interpretation is supported both theoretically and empirically. In the context of electrical machine fault diagnostics, Zhang et al. [32] demonstrated that higher-order Daubechies wavelets (e.g., db45) combined with level-6 decomposition improved sensitivity to inter-turn short circuits in induction machines. In a broader context, the capacity of wavelet coefficients to capture local regularity has also been exploited in the study of interplanetary magnetic field fluctuations [33]. The general mathematical foundation for this behavior is rigorously described in [31], confirming the suitability of wavelet-based analysis for identifying localized non-stationary phenomena across multifractal signals.

The DWT coefficients of the last four levels from the currents are concatenated to form the final feature vectors. This representation is particularly well-suited for detecting inter-turn short-circuit (ITSC) faults, as these faults often manifest as localized, non-stationary anomalies that are not easily captured by conventional Fourier-based spectral methods. The selection of 386 DWT coefficients ensures that the full time-frequency structure of the current signals is captured across multiple decomposition levels, providing the model with detailed representations of both low- and high-frequency components. Unlike approaches that rely on specific harmonic components or statistical summaries, this method retains the raw, fine-grained coefficients, allowing the Transformer model to autonomously learn and extract relevant patterns from the entire time-frequency space.

The raw dataset exhibits an imbalanced distribution, with faulty samples outnumbering healthy ones. To address this issue, oversampling is applied to the healthy class during the hold-out split training phase, and a stratified k-fold algorithm is applied for cross-validation. Specifically, the indices of healthy samples (labeled as 0.0) are identified within the training subset. These healthy samples are then duplicated five times and combined with the original training data, which balances the class distribution and ensures that the machine learning model is exposed to an equal representation of healthy and faulty samples during training. The selection of wavelet levels and the five-fold oversampling factor were determined empirically through iterative evaluation of model performance on validation data. This approach aligns with best practices in handling imbalanced datasets for fault detection, where oversampling the minority class mitigates bias during training. The chosen factor ensured stable convergence and balanced sensitivity between healthy and faulty class predictions, without introducing overfitting artifacts commonly associated with excessive duplication.

The final dataset, enriched with high-fidelity transient simulation data and time-frequency features, provides a solid foundation for the training of complex deep learning models, particularly Transformer architectures. Its design ensures the dataset’s alignment with real-world EV drivetrain dynamics, supporting the development of robust and generalizable ITSC fault detection systems.
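The oversampling step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: samples are held in plain Python lists, the healthy label is 0.0 as in the text, and `oversample_healthy` is a hypothetical helper name rather than the authors' code.

```python
def oversample_healthy(features, labels, copies=5, healthy_label=0.0):
    """Append `copies` duplicates of every healthy sample to the training set.

    Only the training subset should be passed in, so that validation and
    test data keep their original class distribution.
    """
    healthy_idx = [k for k, y in enumerate(labels) if y == healthy_label]
    extra_x = [features[k] for k in healthy_idx] * copies
    extra_y = [healthy_label] * (len(healthy_idx) * copies)
    # New lists are returned; the inputs are left unmodified.
    return features + extra_x, labels + extra_y


# Toy usage: 1 healthy and 6 faulty samples become 6 healthy and 6 faulty.
x_train = [[float(k)] for k in range(7)]
y_train = [0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
x_bal, y_bal = oversample_healthy(x_train, y_train)
print(y_bal.count(0.0), y_bal.count(1.0))
```

Appending five copies multiplies the healthy count by six, so the classes end up exactly balanced only when faulty samples outnumber healthy ones by about six to one in the training subset.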

5. Results

The high-fidelity transient dataset used for model training consists of 6667 samples generated from inverter-driven PMSM simulations. To improve generalization and mitigate the effect of class imbalance, three-fold stratified cross-validation is applied, ensuring that the class distribution is preserved in each fold. The use of stratified cross-validation has been shown to reduce the variance of evaluation metrics in classification tasks with limited or imbalanced data, as also reported in [38].
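A stratified three-fold split can be sketched as below. This is a simplified, standard-library illustration of the idea, not the routine used in the study (which is not named in the text); `stratified_kfold` and the toy label vector are assumptions of the example.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, n_splits=3, seed=0):
    """Yield (train_idx, val_idx) pairs that preserve the class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(n_splits)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets its share.
        for pos, idx in enumerate(indices):
            folds[pos % n_splits].append(idx)
    for k in range(n_splits):
        val_idx = sorted(folds[k])
        train_idx = sorted(i for j, f in enumerate(folds) if j != k for i in f)
        yield train_idx, val_idx


# Toy usage: 30 healthy and 90 faulty samples, 3 folds.
toy_labels = [0.0] * 30 + [1.0] * 90
for train_idx, val_idx in stratified_kfold(toy_labels, n_splits=3):
    n_healthy = sum(1 for i in val_idx if toy_labels[i] == 0.0)
    print(len(train_idx), len(val_idx), n_healthy)
```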

In addition, a separate hold-out validation strategy is employed with a 50%/40%/10% split for training, validation, and testing, respectively. This setup enables a fixed evaluation scenario and is specifically used to analyze the attention behavior of the Transformer model. The hold-out ratio was chosen to provide sufficient training capacity while retaining unbiased validation and testing sets. This is a common practice in classification scenarios involving medium-sized datasets.

Finally, in order to evaluate the advantages of the Transformer architecture in the case of long-term dependencies in the input sequence, an LSTM-RNN model is trained with the same dataset, and the final results of the hold-out validation are compared.

5.1. Cross-Validation Results

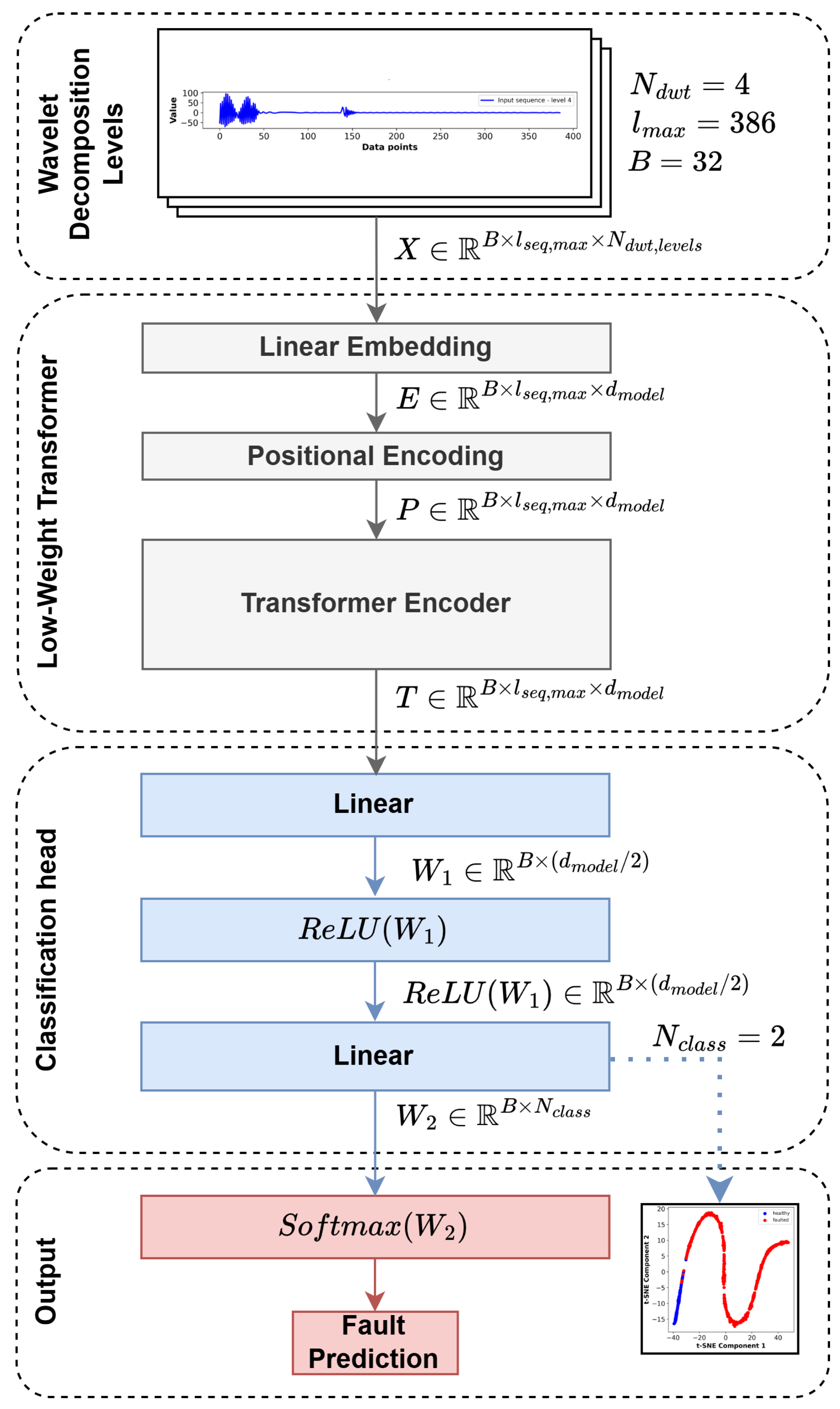

The training configuration consists of the Adam optimization algorithm with a fixed learning rate of 0.0001. The model architecture employs a single encoder layer with an embedding dimension of 32 distributed across 16 attention heads. The feedforward network within the encoder uses a dimensionality of 64. The dropout hyperparameter is set to 0 in order to ensure the proper fit of the low-weight transformer model. Input features are linearly projected into the embedding space, and sequence summarization is performed using mean pooling.
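To give a feel for how small this configuration is, the sketch below counts the trainable parameters of one encoder layer with the stated dimensions. It assumes a standard encoder layer (multi-head self-attention with biases, a two-layer feedforward block, and two layer normalizations); the input projection and the classification head are left out because their sizes are not given in this section.

```python
D_MODEL = 32   # embedding dimension (from the text)
N_HEADS = 16   # attention heads (from the text)
D_FF = 64      # feedforward dimension (from the text)


def linear_params(n_in, n_out):
    """Weights plus biases of a dense layer."""
    return n_in * n_out + n_out


def encoder_layer_params(d_model, d_ff):
    """Parameter count of one standard Transformer encoder layer (assumed layout)."""
    # Query, key, and value projections, followed by the output projection.
    attention = linear_params(d_model, 3 * d_model) + linear_params(d_model, d_model)
    feedforward = linear_params(d_model, d_ff) + linear_params(d_ff, d_model)
    layer_norms = 2 * (2 * d_model)  # scale and shift for two normalisations
    return attention + feedforward + layer_norms


assert D_MODEL % N_HEADS == 0, "embedding must split evenly across heads"
head_dim = D_MODEL // N_HEADS
print(f"per-head dimension: {head_dim}")
print(f"encoder-layer parameters: {encoder_layer_params(D_MODEL, D_FF)}")
```

With 16 heads sharing a 32-dimensional embedding, each head works on a two-dimensional subspace, and under the stated assumptions the whole encoder layer holds fewer than ten thousand parameters.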

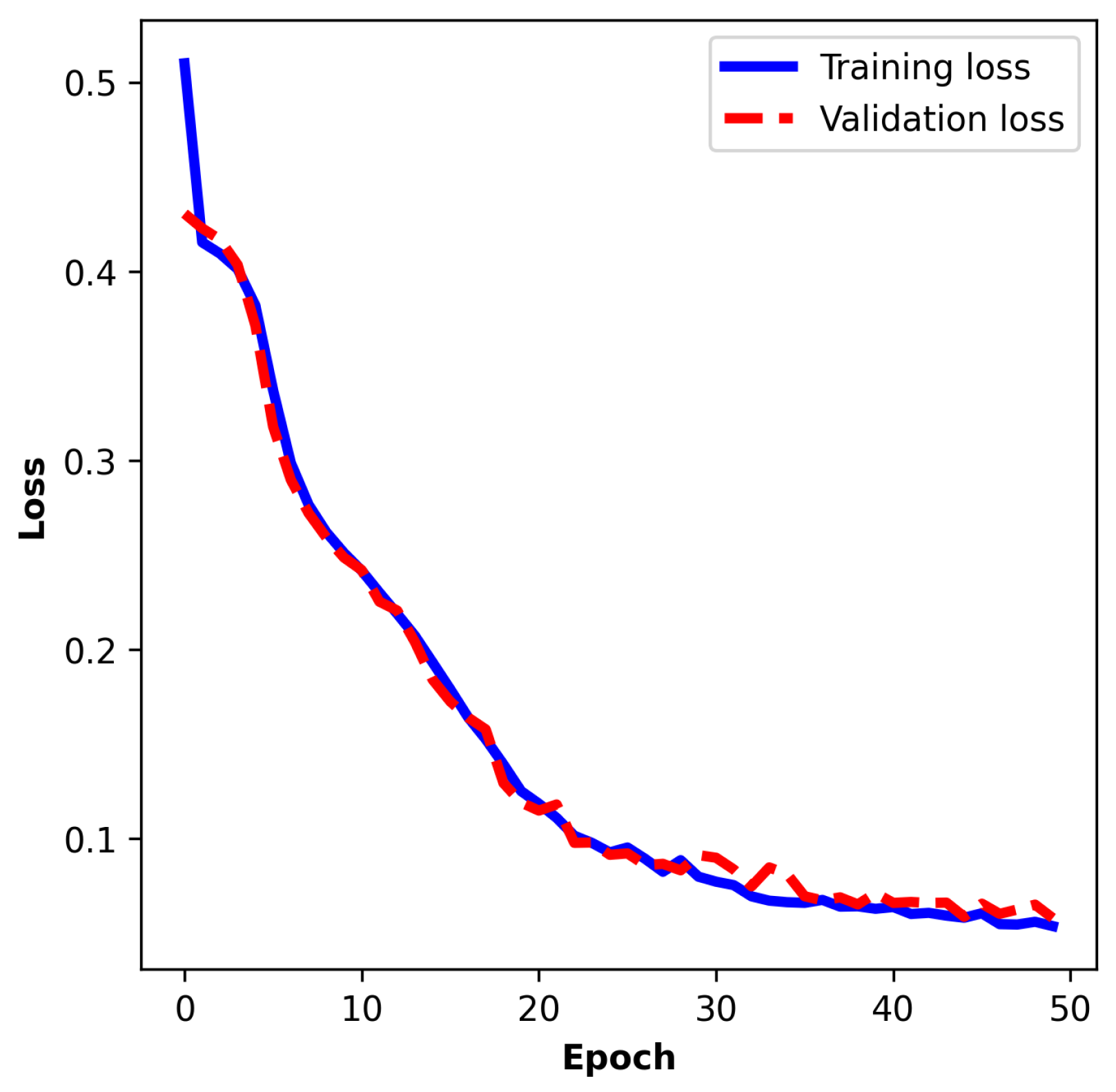

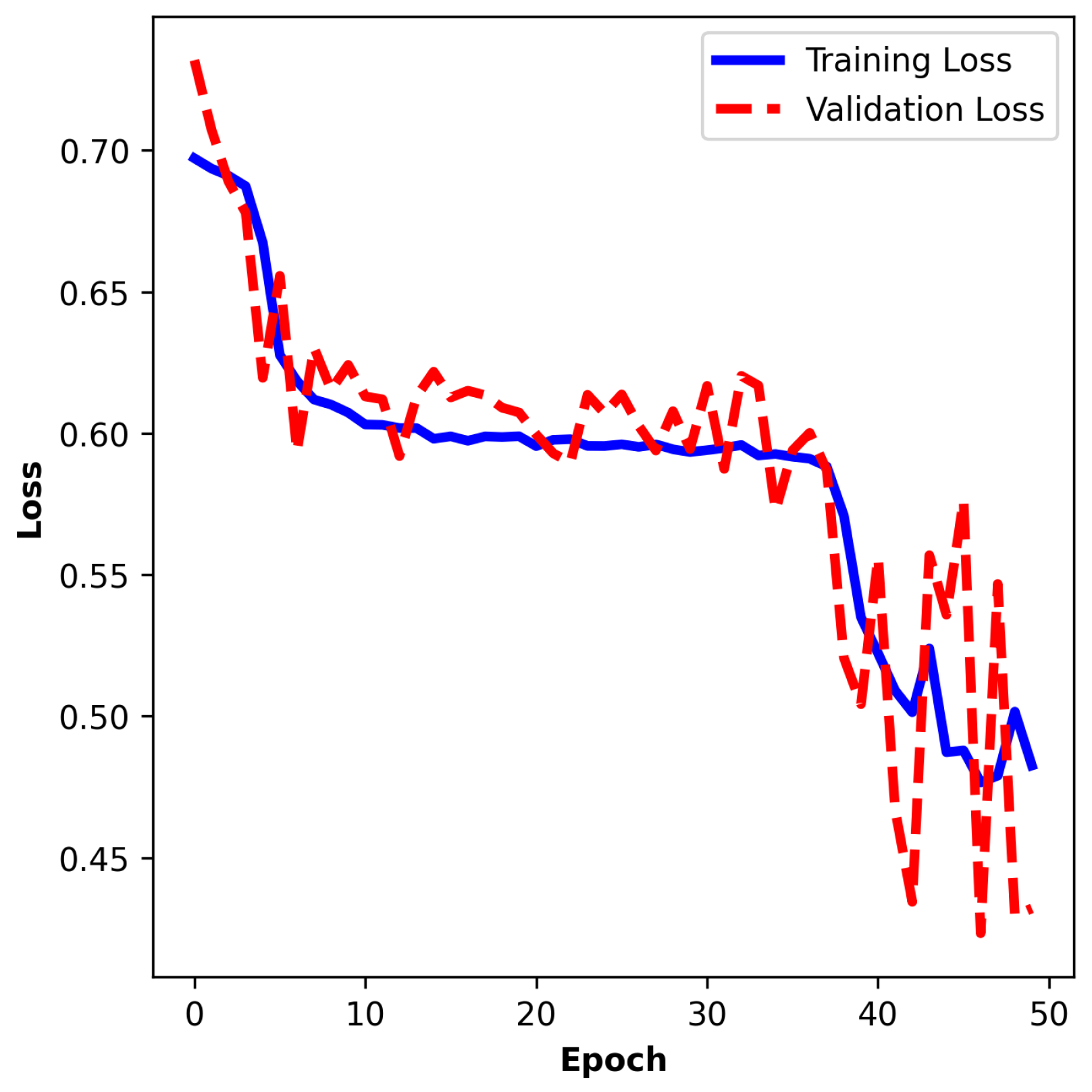

The training process spans 50 epochs, with a batch size of 32 samples and 3 folds. Model evaluation during training reveals steady convergence.

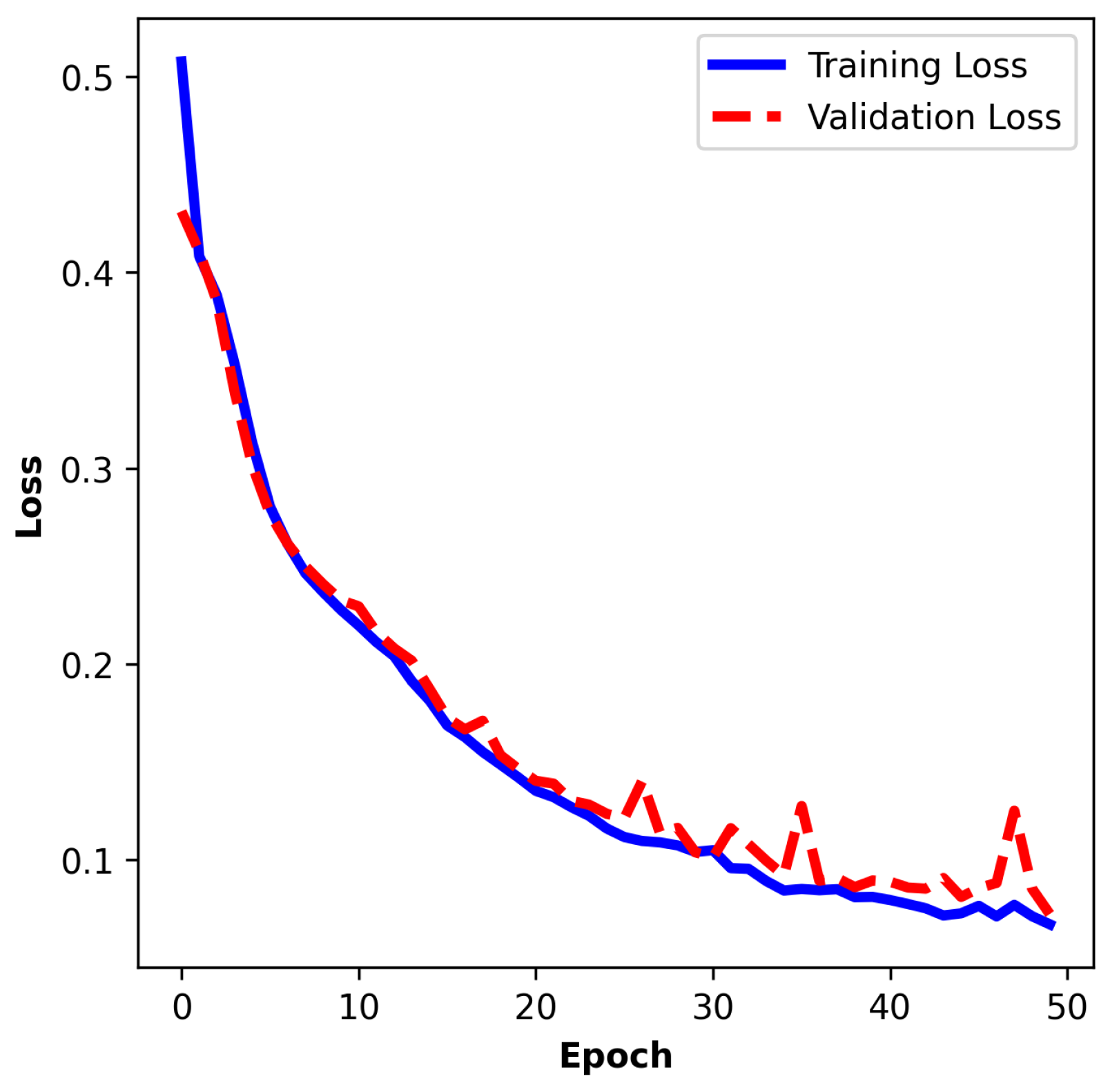

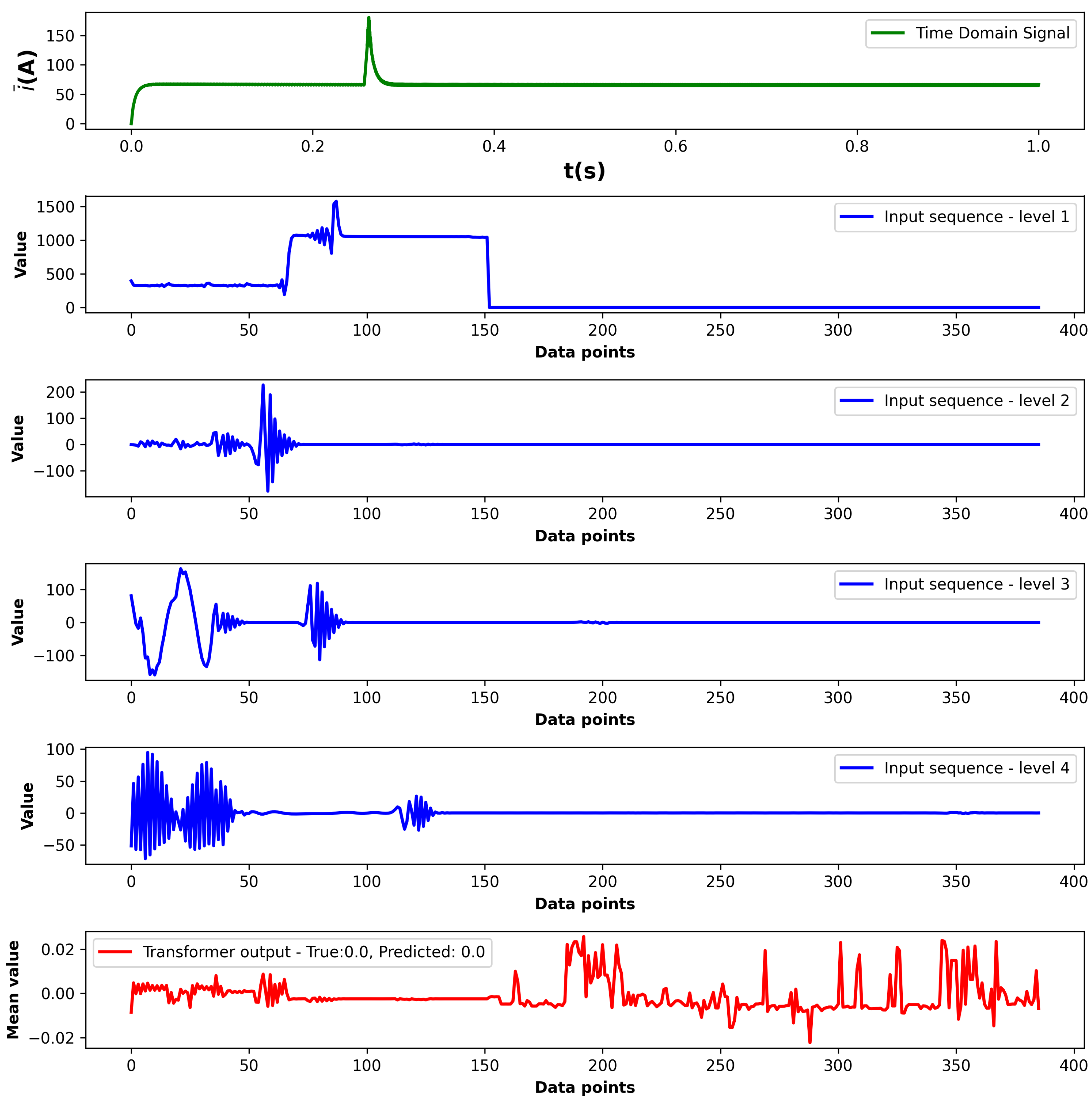

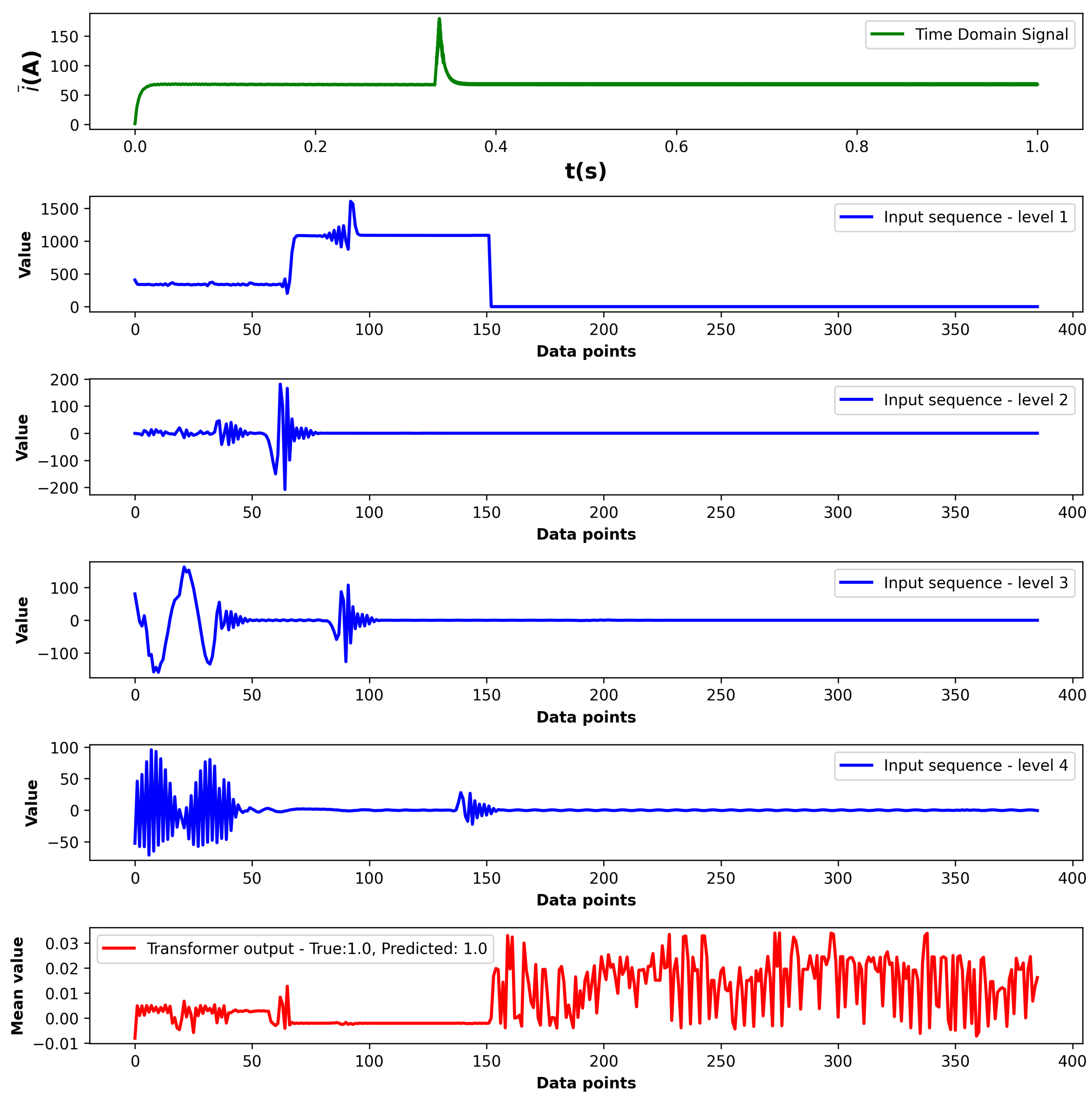

Figure 14, Figure 15, and Figure 16 illustrate the training and validation loss curves over the course of three folds. In the first fold, the training loss decreases from an initial value of 0.5136 to 0.0638, while the validation loss drops from 0.4314 to 0.0818 by the final epoch. Correspondingly, training accuracy improves from 80.15% to 97.59%, and validation accuracy increases from 85.47% to 96.72%, demonstrating effective generalization without overfitting. The validation loss exhibits a rapid decline during the early stages of training, indicating efficient pattern learning. Similar results can be observed in the other two folds. The close alignment between the two curves suggests good generalization, with minimal divergence between training and validation performance.

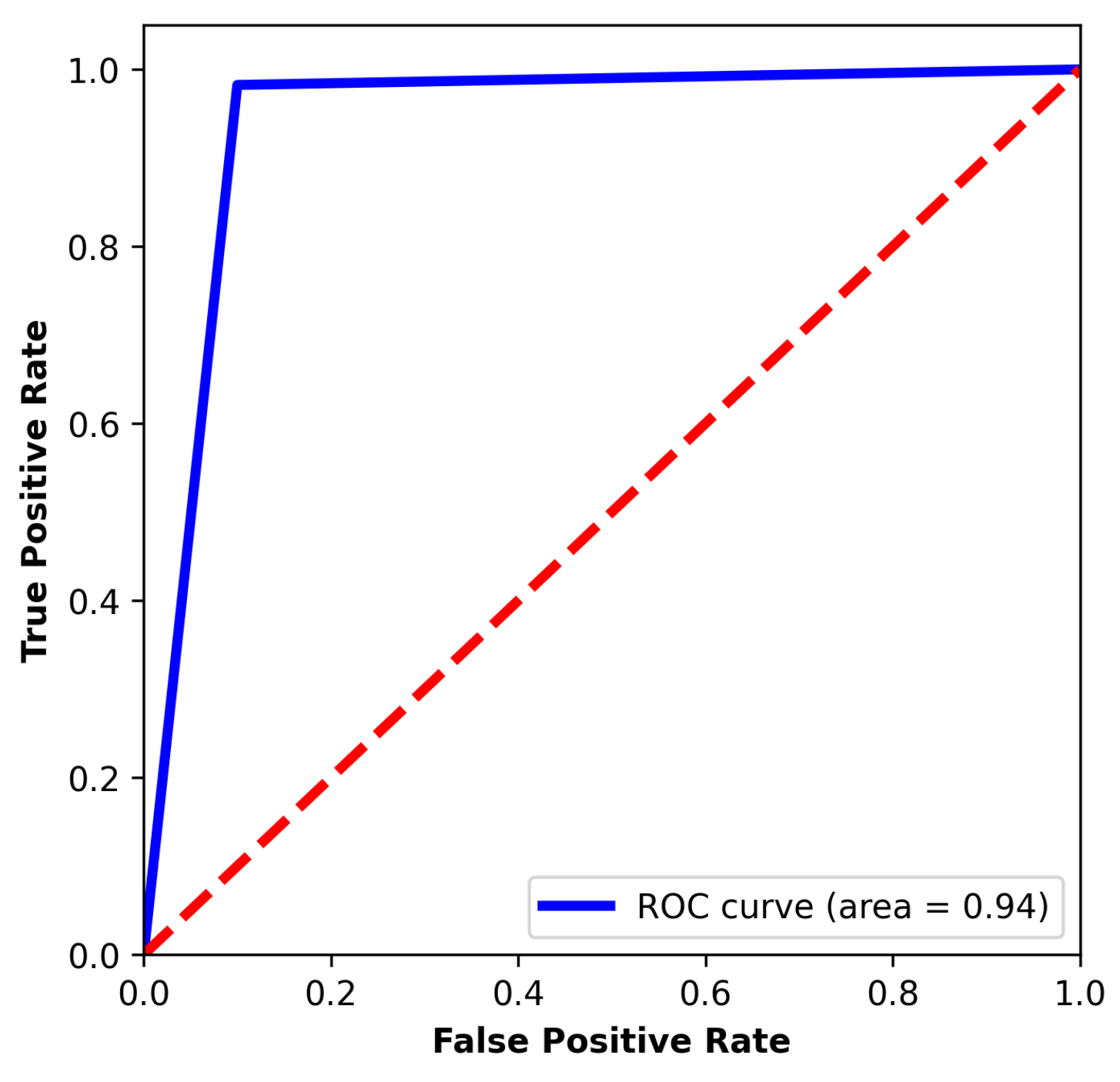

Table 3 shows the main performance metrics of the experiment. The average scores are above 94%, which shows notable generalization performance for the fault classification of transient signals.

5.2. Hold-Out Training Evaluation

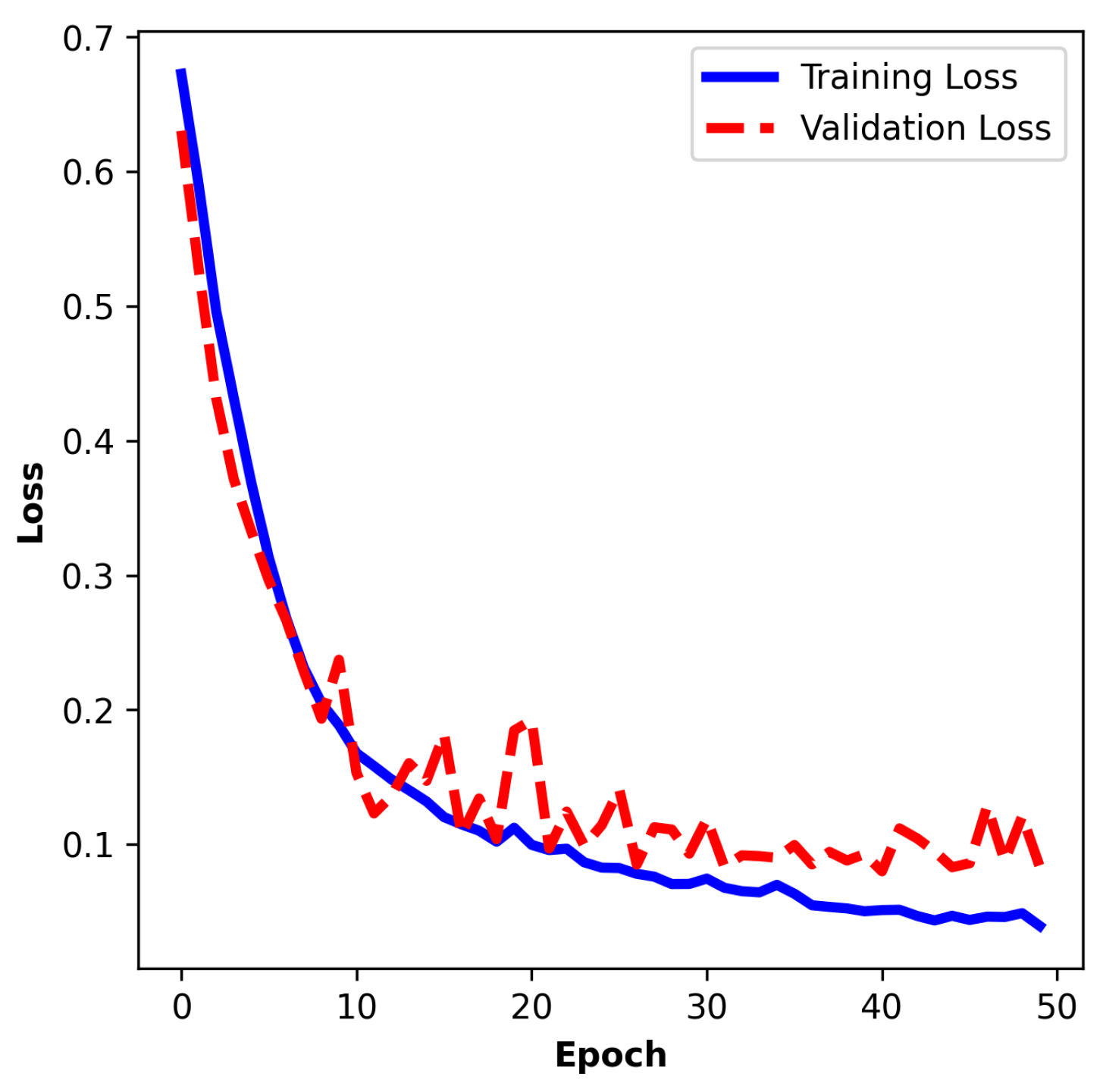

The training curve of the hold-out split training depicted in

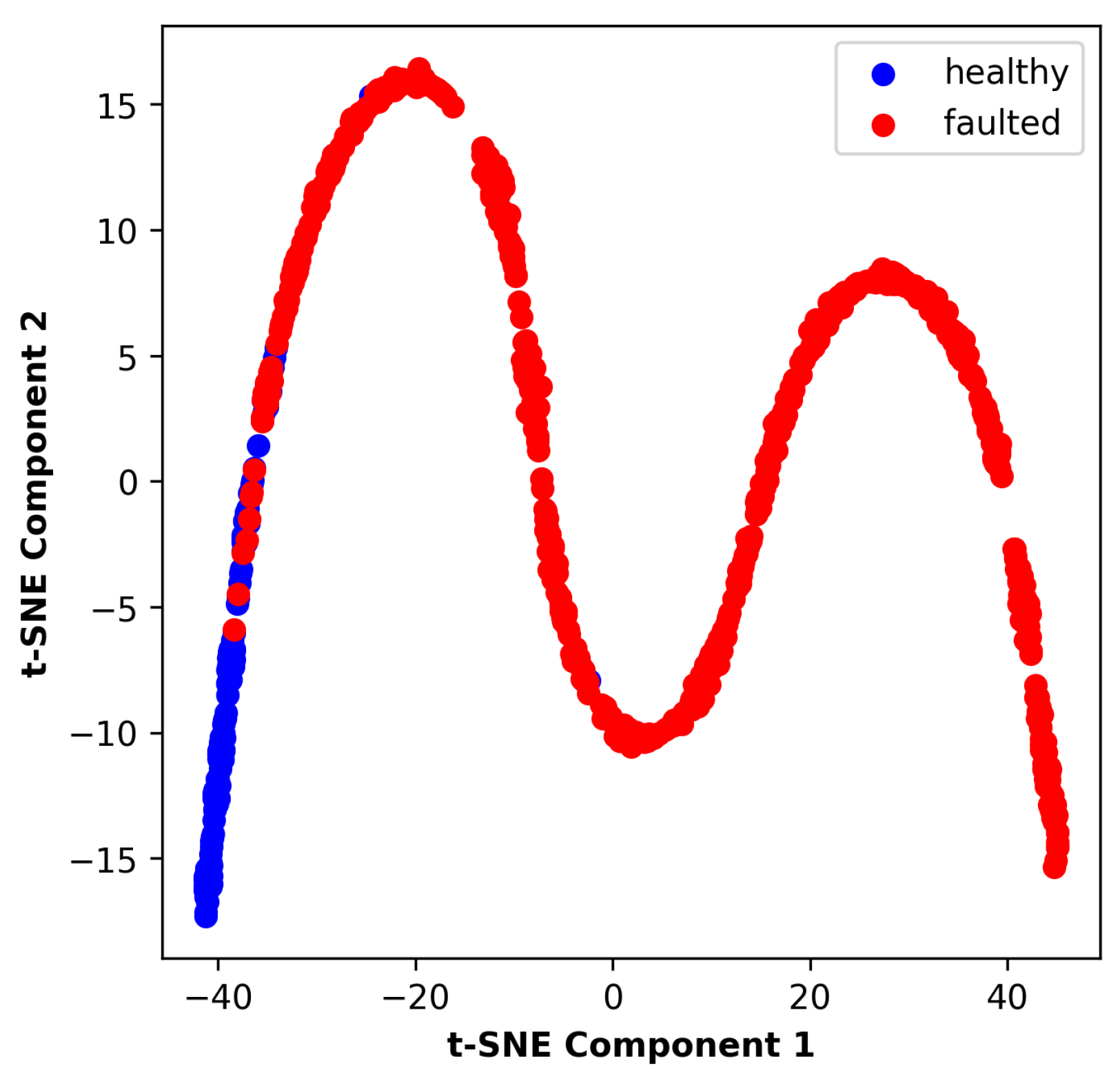

Figure 17 shows performance similar to the K-fold cross-validation training results. The training loss improves from 0.6741 to 0.0767, and the validation loss improves from 0.7008 to 0.0797. The accuracy changes from 59.53% to 96.88% and from 48.80% to 96.92%, respectively. As can be observed in

Figure 18 and

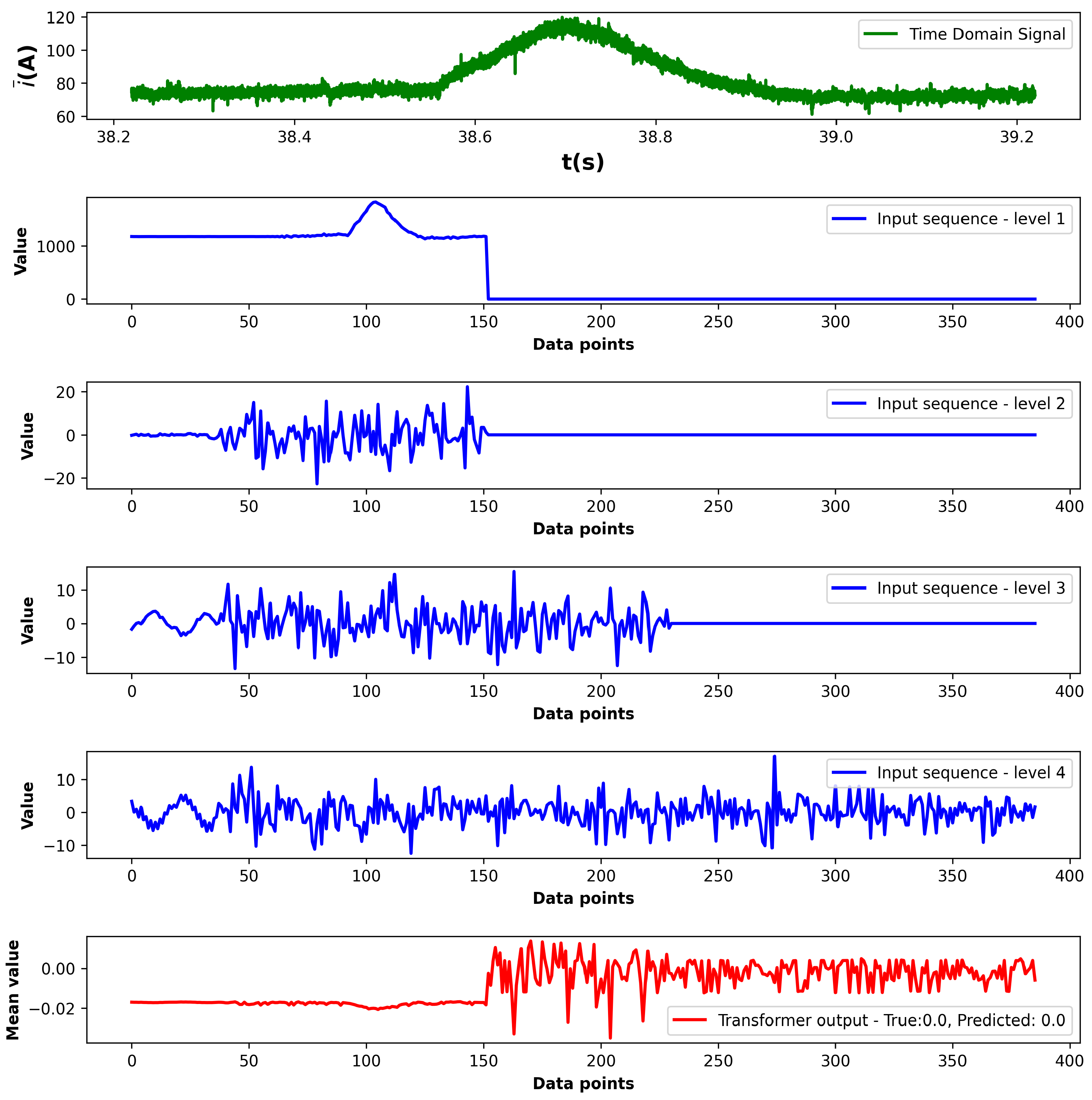

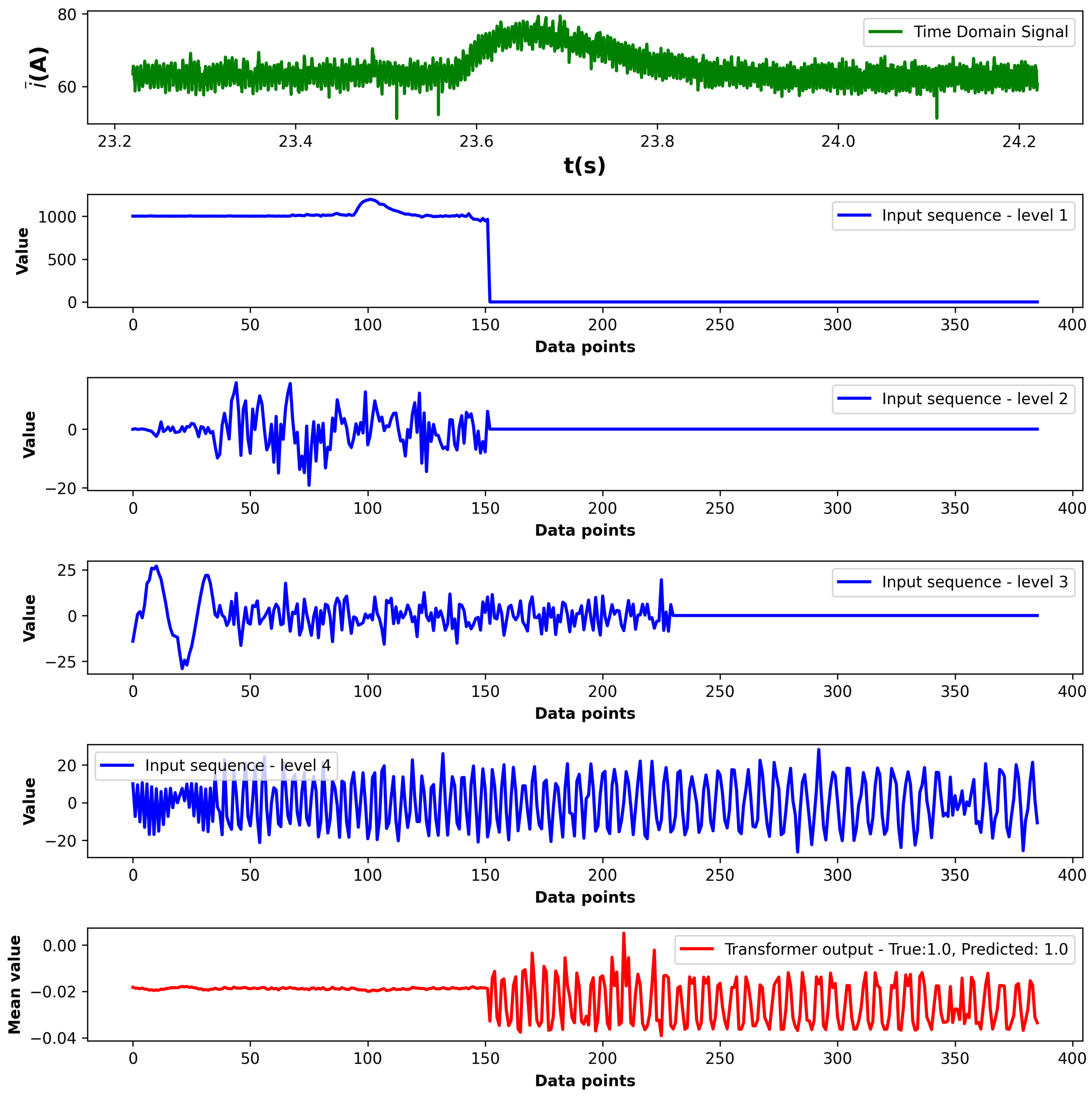

Figure 19, the model demonstrates strong classification performance on the test set and good separability of the output embeddings. Examples of correct healthy and faulted predictions are depicted in

Figure 20 and

Figure 21. The time-domain waveform shows an acceleration transient to 2050

with a constant load of 90 Nm on the machine. The input sequence lengths are not equivalent; therefore, the lower frequency levels are extended with zero padding. To exclude padded parts from the embedding, a source mask is created for the transformer. The mean values of the transformer encoder outputs shows that the post-transient oscillations in level four have the greatest impact on the prediction. In the case of the healthy sample, it can be observed that the fluctuations in the transformer output are much less frequent compared with the faulted sample.
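The padding and masking step can be sketched without any deep-learning framework. The helper names below are hypothetical, and the convention that `True` marks a padded position is an assumption chosen to mirror the key-padding masks commonly used with Transformer encoders.

```python
def pad_and_mask(sequences, pad_value=0.0):
    """Zero-pad sequences to a common length and flag the padded positions."""
    max_len = max(len(s) for s in sequences)
    padded = [list(s) + [pad_value] * (max_len - len(s)) for s in sequences]
    mask = [[k >= len(s) for k in range(max_len)] for s in sequences]
    return padded, mask  # mask entry True = padded, to be ignored


def masked_mean(values, mask_row):
    """Mean over the unpadded positions only (as used for mean pooling)."""
    kept = [v for v, is_pad in zip(values, mask_row) if not is_pad]
    return sum(kept) / len(kept)


# Toy usage: a fine level with four coefficients and a coarse level with two.
levels = [[1.0, 2.0, 3.0, 4.0], [5.0, 7.0]]
padded, mask = pad_and_mask(levels)
print(padded[1], mask[1])
print(masked_mean(padded[1], mask[1]))  # padding does not dilute the mean
```

Without the mask, the zeros appended to the shorter, lower-frequency sequences would pull the pooled value toward zero and would be treated as genuine coefficients by the attention layers.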

The combination of wavelet-based feature extraction and Transformer modeling provides a powerful framework for incipient fault detection in PMSMs. The attention mechanism enables the model to prioritize critical time segments associated with fault onset, facilitating real-time implementation in embedded diagnostic systems and supporting predictive maintenance strategies in EV drivetrains.

5.3. Comparison with LSTM-RNN Model

For the comparison, a simple, low-weight LSTM model was built based on the basic architecture proposed in [39]. The hyperparameters of the LSTM-RNN model can be seen in Table 4. The model has four input dimensions for each DWT sequence and 32 hidden states in the recurrent architecture. Since it is a low-weight model, the dropout rate is set to zero.

Although the LSTM-RNN converges after a few epochs, its validation loss remains relatively high and unstable (see Figure 22), confirming the model’s limitations in capturing the transient characteristics of the input sequences. As can be seen from the results collected in Table 5, the Transformer model outperforms the low-weight LSTM model, since, in contrast with the LSTM, it classifies based on the complete sequence. The inference time of the LSTM model is significantly lower in this setup; however, its classification performance is not adequate for the targeted low-weight, real-time embedded application.

While LSTM-RNN networks excel in traditional time-series prediction tasks, the implemented low-weight architecture lacks the ability to analyze the complex dynamics and long-range temporal dependencies of the transient DWT sequences of an EV drive.

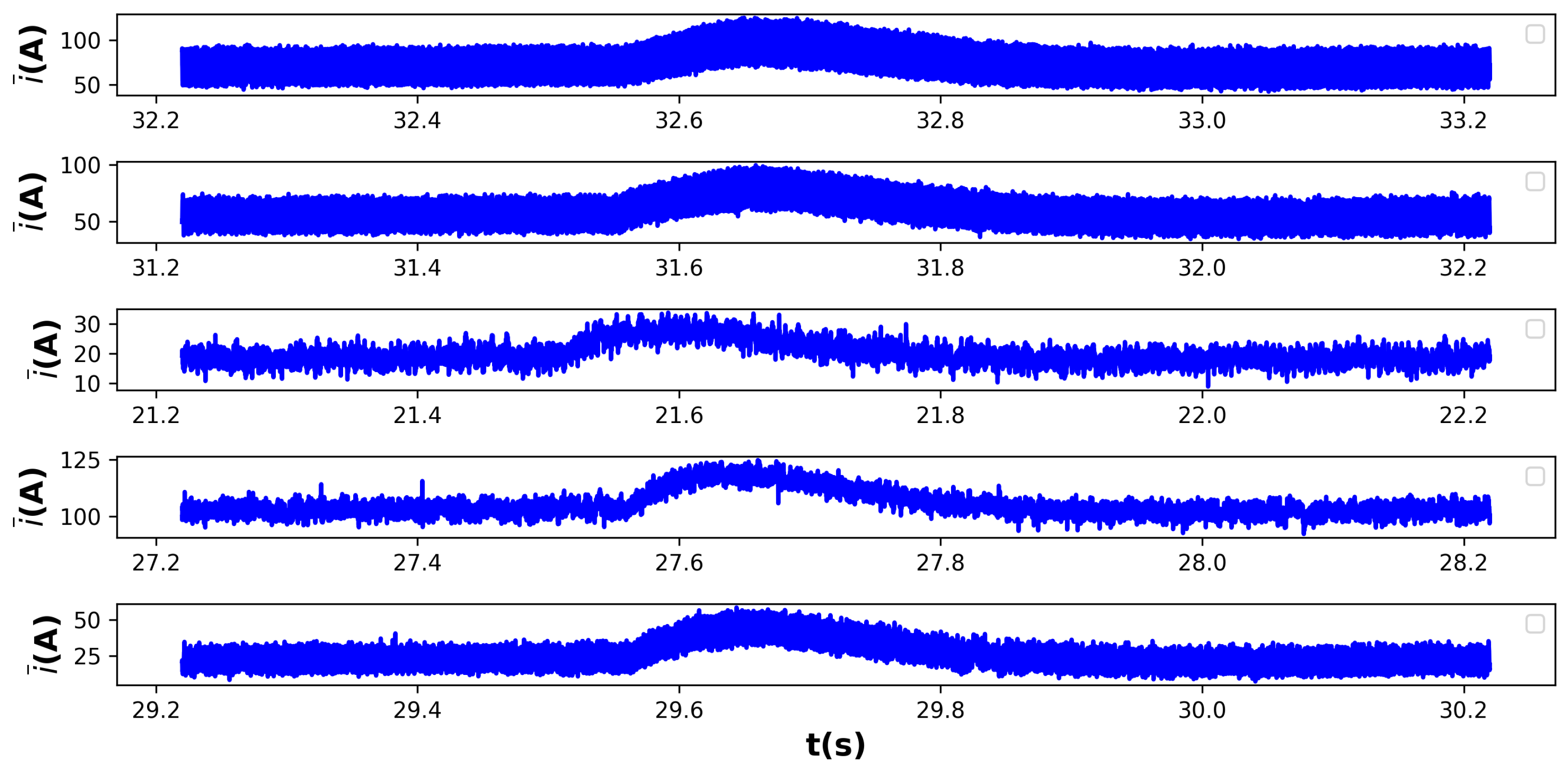

5.4. Assessing the Real-World Generalization of a Transformer-Based ITSC Classifier via Transfer Learning

The robustness and generalization capability of the proposed Transformer-based fault detection model are evaluated using publicly available experimental PMSM measurements presented in [28]. This external dataset includes real-world waveforms collected from a dual three-phase PMSM specifically configured for ITSC fault emulation. Fault intensities cover one to four shorted turns in phases U and V under varied load torque conditions. Examples of acceleration transients from the dataset are depicted in Figure 23.

These measurement scenarios represent realistic EV operating environments and provide a meaningful benchmark for the testing of cross-domain applicability. The model trained on FEM-based synthetic transient signals undergoes transfer learning to adapt to the motor architecture described in [28, 40]. This dual three-phase PMSM consists of two electrically separated but magnetically coupled sub-systems and employs a concentric coil structure with 24 stator slots and 10 pole pairs. The motor is explicitly designed to support fail-operational behavior and includes dedicated winding taps for precise ITSC fault injection [40]. In comparison, the motor model used in this paper to generate the synthetic dataset is characterized by a classical three-phase topology and a different winding layout. The dual three-phase configuration [28, 40] presents a significantly different internal coil connection scheme and mutual inductance profile, which affect fault current behavior and flux-linkage dynamics. By fine-tuning the pretrained Transformer model on this structurally different motor’s measurement data, its ability to generalize across PMSM configurations is evaluated.

Although the dataset [28,40] reflects real PMSM operation, the noise characteristics and filtering procedures used during acquisition remain undocumented. To compensate for this uncertainty and to simulate a more representative industrial environment, additive zero-mean Gaussian noise is introduced to the measured current signals. The standard deviation of the noise is set to 1.5% of the maximum signal amplitude. This augmentation accounts for typical inverter switching noise and sensor inaccuracies, enabling a realistic robustness evaluation under noisy conditions.
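The noise augmentation described above can be illustrated with a minimal NumPy sketch. The function name `add_gaussian_noise`, the seeded generator, and the synthetic 10 A sine wave are assumptions made for illustration only. Whether the maximum amplitude is taken per phase or over all phases is not stated in the text; the sketch takes it over the whole array passed in.

```python
import numpy as np


def add_gaussian_noise(currents, rel_sigma=0.015, rng=None):
    """Add zero-mean Gaussian noise scaled to the peak signal amplitude.

    rel_sigma = 0.015 corresponds to the 1.5% stated in the text.
    Assumption: the peak is taken over the entire input array.
    """
    rng = np.random.default_rng() if rng is None else rng
    currents = np.asarray(currents, dtype=float)
    sigma = rel_sigma * np.max(np.abs(currents))
    noisy = currents + rng.normal(0.0, sigma, size=currents.shape)
    return noisy, sigma


# Placeholder signal (not measurement data): 5 Hz sine with 10 A amplitude.
t = np.linspace(0.0, 1.0, 10001)
i_phase = 10.0 * np.sin(2.0 * np.pi * 5.0 * t)
i_noisy, sigma = add_gaussian_noise(i_phase, rng=np.random.default_rng(0))
```

Scaling the standard deviation to the peak amplitude keeps the relative noise level comparable across operating points with different current magnitudes.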

By validating the model on noisy real-world data, the study further examines its transferability to unseen environments. In addition, the FEM-based simulation framework proposed in this paper supports the generation of new synthetic datasets for arbitrary PMSM configurations. This modularity facilitates adaptation of the diagnostic approach to other machines with different pole counts, winding structures, or operational constraints, supporting broader applicability in practical EV systems.

The Transformer model, initially trained on the synthetic transient dataset, is fine-tuned using the experimental transient waveforms reported in [28]. During fine-tuning, the early Transformer encoder layers responsible for feature extraction remain fixed, while the classification layers are trained for a further 75 epochs at a reduced learning rate with the Adam optimizer. This approach supports adaptation to the experimental data characteristics while limiting overfitting. Classification results after transfer learning are summarized in Table 6, confirming robust generalization performance across different fault severities. The validation loss shown in Figure 24 decreases consistently over the epochs even though only the classification head of the model is trained. This indicates that the Transformer encoder, pretrained on the synthetic dataset, captures the main features of the faulted transient waveforms. The attention weights presented in Figure 25 and Figure 26 behave similarly to those obtained for the synthetic data samples.
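The fine-tuning procedure described above (frozen feature-extraction layers, classification layers trained for 75 epochs with Adam) can be sketched in PyTorch as follows. This is an illustrative sketch rather than the model used in this paper: the class `TinyTransformerClassifier`, its layer sizes, the five output classes, the learning rate of 1e-4, and the random placeholder tensors are all assumptions. Here the whole encoder is frozen so that only the classification head is updated.

```python
import torch
from torch import nn


class TinyTransformerClassifier(nn.Module):
    """Hypothetical stand-in for the pretrained model (sizes are assumptions)."""

    def __init__(self, n_features=8, d_model=32, n_layers=4, n_classes=5):
        super().__init__()
        self.embed = nn.Linear(n_features, d_model)
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=4, dim_feedforward=64,
            dropout=0.0, batch_first=True,
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=n_layers)
        self.head = nn.Linear(d_model, n_classes)

    def forward(self, x):  # x: (batch, sequence, n_features)
        h = self.encoder(self.embed(x))
        return self.head(h.mean(dim=1))  # mean-pool over the sequence


def freeze_early_layers(model, n_frozen):
    """Freeze the embedding and the first n_frozen encoder layers.

    Returns the parameters that remain trainable.
    """
    for module in [model.embed, *model.encoder.layers[:n_frozen]]:
        for p in module.parameters():
            p.requires_grad_(False)
    return [p for p in model.parameters() if p.requires_grad]


def fine_tune(model, trainable, x, y, epochs=75, lr=1e-4):
    """Full-batch fine-tuning; lr is an assumed placeholder value."""
    optimizer = torch.optim.Adam(trainable, lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    model.train()
    losses = []
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses


torch.manual_seed(0)
model = TinyTransformerClassifier()
trainable = freeze_early_layers(model, n_frozen=len(model.encoder.layers))
x = torch.randn(16, 20, 8)        # placeholder inputs, not measurement data
y = torch.randint(0, 5, (16,))    # placeholder class labels
losses = fine_tune(model, trainable, x, y)
```

Passing only the trainable parameters to the optimizer keeps the pretrained encoder weights untouched, so any reduction in loss comes from the classification head alone.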

This transfer learning setup highlights the model’s capacity to adapt to new domains with distinct electromagnetic characteristics, thereby reinforcing its potential for real-world ITSC fault detection in diverse PMSM-based EV drivetrain architectures. The ability to generalize across motor types, fault severities, and noisy real-world conditions demonstrates not only the flexibility but also the robustness of the proposed diagnostic approach. In particular, the model maintains stable performance despite measurement uncertainties and added Gaussian noise, reflecting its resilience under practical signal quality constraints. Furthermore, the modular synthetic data generation framework enables extension to additional motor designs, supporting future applications in adaptive, data-driven diagnostic systems.

5.5. Benchmarking Against State-of-the-Art ITSC Diagnostic Architectures

Table 7 presents a side-by-side comparison of six representative ITSC diagnosis approaches and our proposed solution. Existing methods either rely on purely analytical or FEM-only studies without machine learning metrics ([41,42]), are validated under steady-state bench conditions only ([43,44,45,46]), or require external sensors and lack EV-specific transient validation ([45,46]). Two references from recent years (2024–2025) have also been considered. A paper by Fan et al. [47] proposed a high-sensitivity hybrid CNN–GRU model using fractional Fourier Mel-spectrogram features to monitor insulation degradation in PMSMs; however, their approach does not target ITSCs and lacks an EV drive cycle and transient testing. Nandakumar and Gunasekaran [48] introduced a Bi-LSTM + FFNN hybrid model optimized by the Walrus Optimization Algorithm for detection of inter-turn faults in induction motors under steady-state simulations, yet it has not been validated for EV settings or transient scenarios. None of the surveyed methods concurrently (i) leverages a high-fidelity EV-specific FEM dataset that captures both static operation and high-duty inverter-drive transients with full magnetic saturation; (ii) implements a compact DWT–Transformer topology optimized for sensorless, current-only real-time inference on embedded hardware; and (iii) validates incipient inter-turn fault detection under aggressive inverter-drive transient load profiles typical of traction-drive dynamics.

6. Conclusions

This paper presents a new diagnostic framework for detecting incipient inter-turn short-circuit (ITSC) faults in permanent-magnet synchronous machines (PMSMs), integrating discrete wavelet transform (DWT) feature extraction with a Transformer-based classification model. Unlike conventional motor current signature analysis (MCSA) techniques, which often rely on steady-state spectral features or manual scalogram interpretation, the proposed method employs time-frequency decomposition to capture transient, non-stationary current disturbances characteristic of inverter-driven EV operations.

A key contribution of this work is the development of a high-fidelity simulation environment, including a flux saturation-aware PMSM model utilizing four-dimensional flux-linkage lookup tables (LUTs). This approach enables realistic modeling of fault-induced flux distortions under varying load, speed, and saturation conditions—scenarios often neglected in existing studies. The resulting dataset, covering diverse operating points and transient dynamics, provides a solid foundation for the training of data-driven models.

The Transformer architecture, with its self-attention mechanism, proved effective in capturing both localized transient patterns and long-range dependencies within the wavelet-transformed current signals. Compared with recurrent models such as LSTMs and other RNNs, this structure offers superior efficiency due to its parallel processing capability and reduced parameter count, making it suitable for real-time, embedded fault detection applications.

To the best of the authors’ knowledge, this is the first application of Transformer models for ITSC fault detection in PMSMs during transient operation, leveraging a comprehensive and realistic simulation dataset, as well as automatic feature extraction. The proposed framework demonstrated high classification accuracy, with the potential to support predictive maintenance strategies in electric vehicle (EV) drivetrains. Future work may include experimental validation on physical test benches and further optimization for ultra-low-power embedded platforms.