Enhancing PV Hosting Capacity of Electricity Distribution Networks Using Deep Reinforcement Learning-Based Coordinated Voltage Control

Abstract

1. Introduction

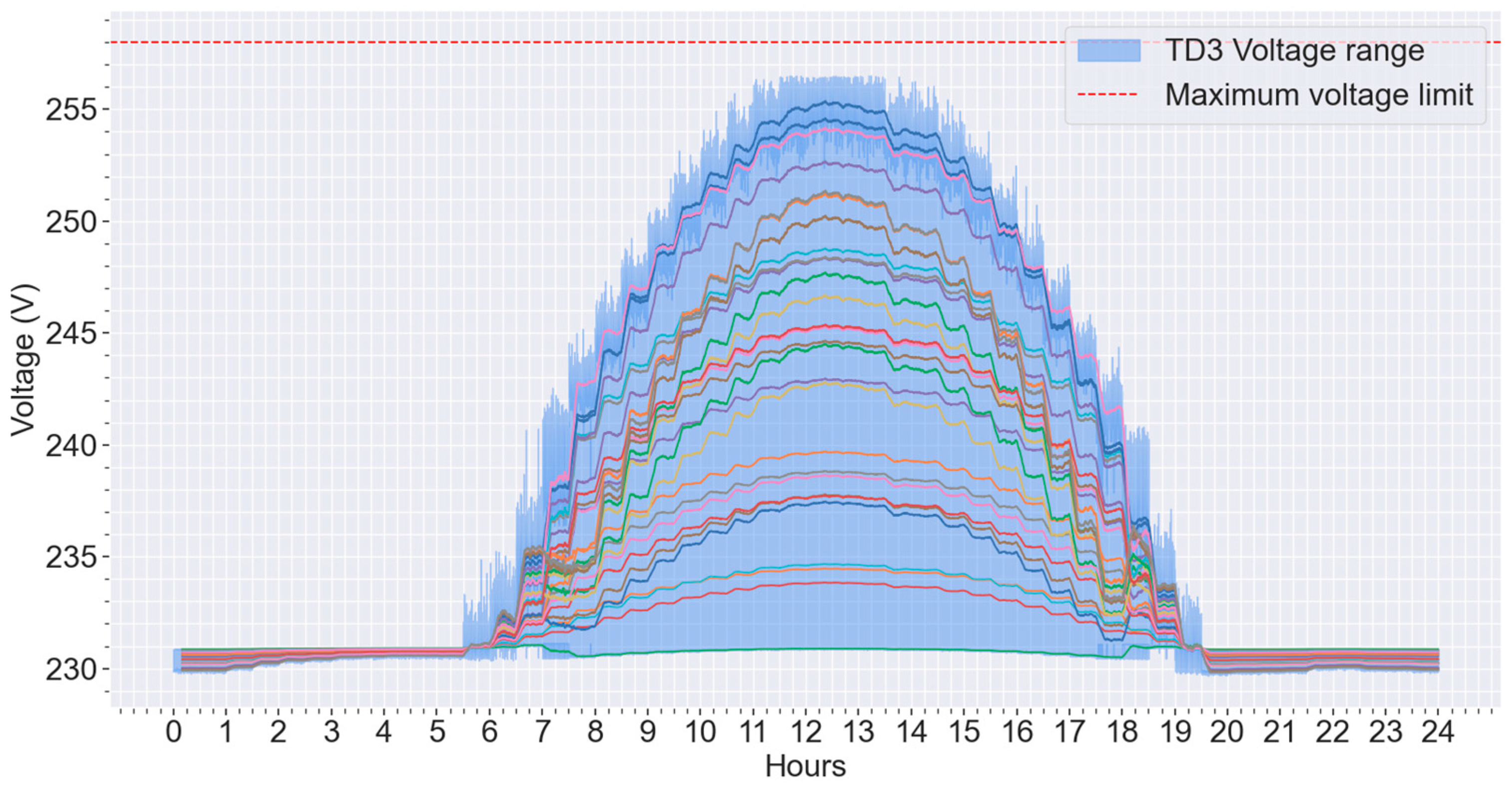

- A methodology to implement coordinated voltage control using the TD3 algorithm.

- Quantification of enhanced HC due to the proposed TD3-based coordinated voltage control scheme.

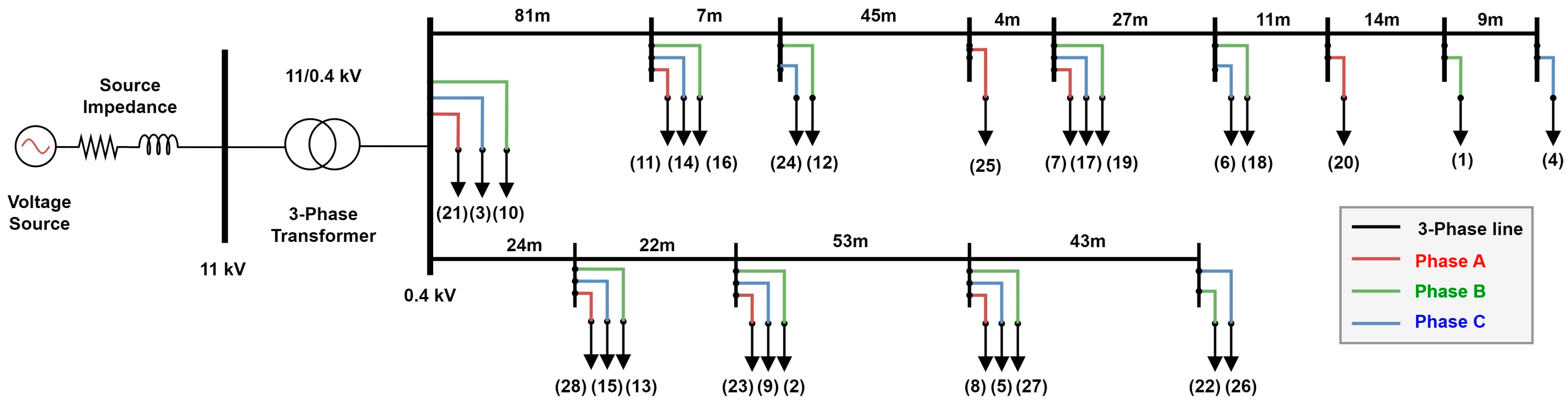

- Performance evaluation of the proposed scheme using a model of a real-world unbalanced LV distribution network.

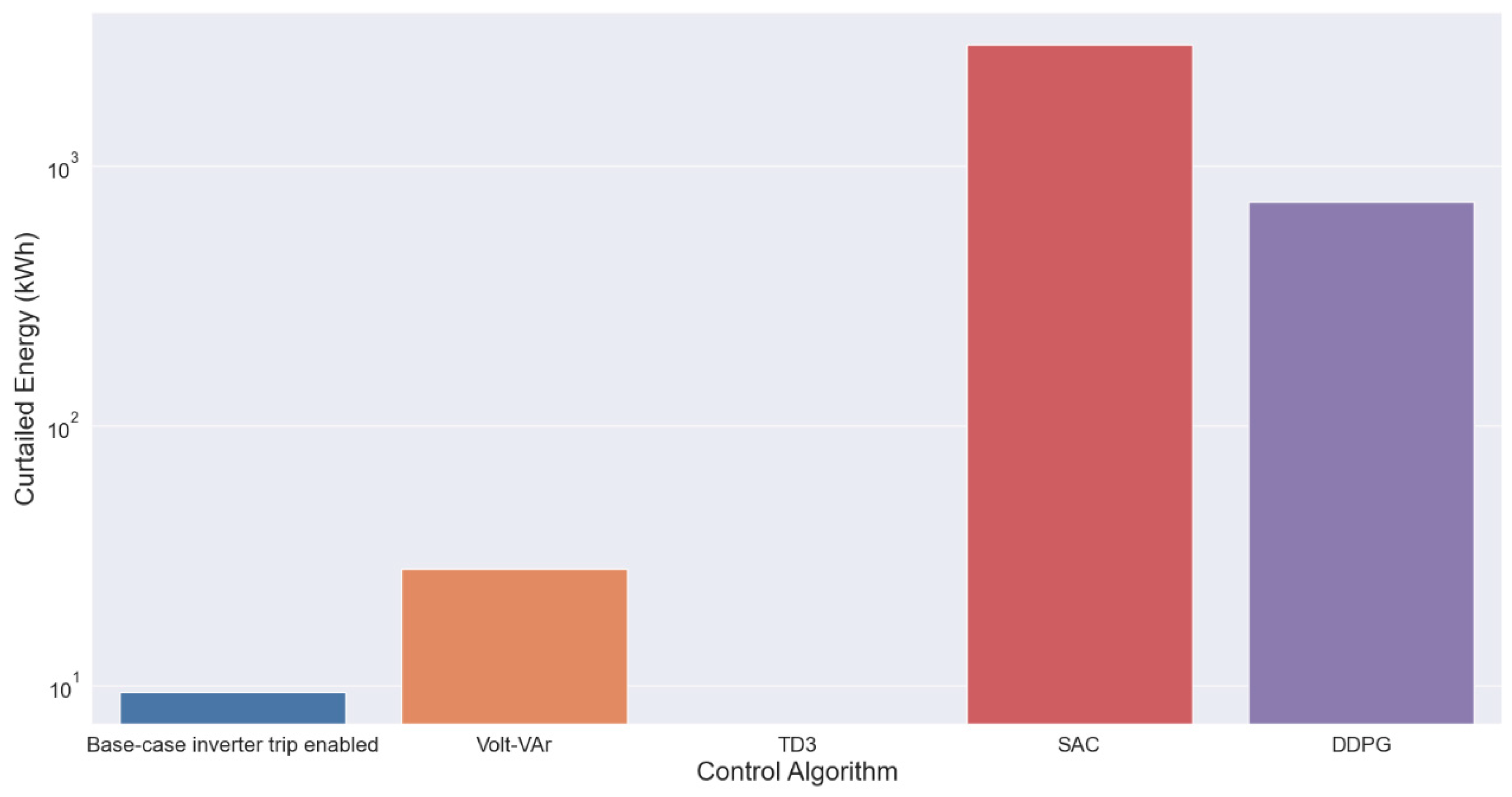

- Comparative analysis with other control algorithms such as Volt-VAr/Volt-Watt and DRL algorithms such as DDPG and SAC.

- A discussion detailing the implementation safety, scalability, sample efficiency, and constraint satisfaction of the proposed coordinated control scheme.

2. Problem Formulation

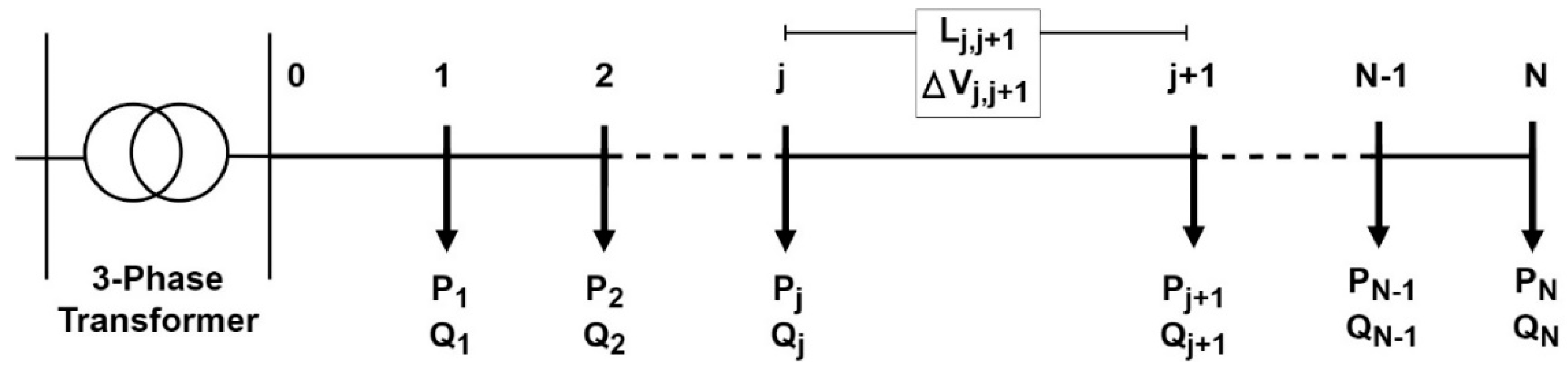

2.1. Preliminaries

2.2. Markov Decision Process

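- $\mathcal{S}$ represents the state space, with $s \in \mathcal{S}$.

- $\mathcal{A}$ represents the action space, with $a \in \mathcal{A}$.

- $\mathcal{P}$ is the transition probability function, with $\mathcal{P}(s' \mid s, a)$ being the probability of transitioning into the next state $s'$ due to an action $a$ taken in the current state $s$.

- $\mathcal{R}$ is the reward function, with $\mathcal{R}(s, a, s')$ being the immediate reward received by an agent after transitioning to the state $s'$ from the state $s$ due to an action $a$.

- $\gamma \in [0, 1]$ is the discount factor.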
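To make the role of the discount factor concrete, the following minimal Python sketch computes the discounted return that an agent in such an MDP tries to maximize. The function name `discounted_return` and the example reward sequence are illustrative assumptions, not part of the original formulation.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_k gamma**k * r_k for a finite reward sequence (k starts at 0)."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    total = 0.0
    # Work backwards through the episode: G_k = r_k + gamma * G_{k+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical three-step episode with unit rewards.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```

A discount factor close to 1 makes the agent weigh future rewards almost as much as immediate ones, whereas a value near 0 makes it short-sighted.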

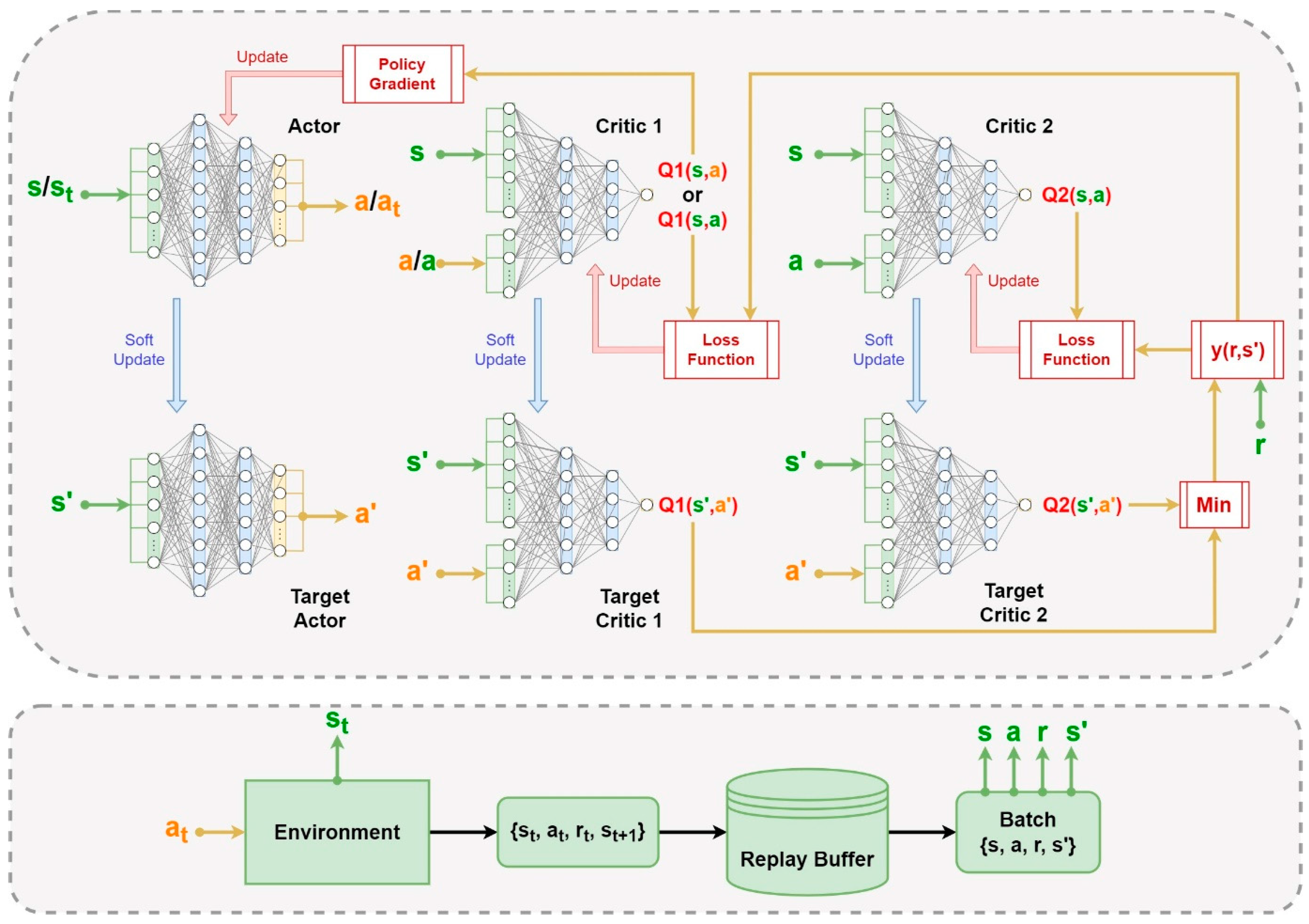

2.3. Twin Delayed Deep Deterministic Policy Gradient Algorithm

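| Algorithm 1. TD3 Algorithm: Twin delayed deep deterministic policy gradient. | |
|---|---|
| 1: | Initialize critic networks $Q_{\theta_1}$, $Q_{\theta_2}$ and actor network $\pi_{\phi}$ with random parameters $\theta_1$, $\theta_2$, and $\phi$, respectively. |
| 2: | Initialize target networks $\theta'_1 \leftarrow \theta_1$, $\theta'_2 \leftarrow \theta_2$, $\phi' \leftarrow \phi$ |
| 3: | Initialize replay buffer $\mathcal{B}$ |
| 4: | for $t = 1$ to $T$ do: |
| 5: | Observe state $s$ and select action $a = \pi_{\phi}(s) + \epsilon$, $\epsilon \sim \mathcal{N}(0, \sigma)$ |
| 6: | Execute action $a$ in the environment. Then observe reward $r$ and next state $s'$ |
| 7: | Store experience tuple $(s, a, r, s')$ in $\mathcal{B}$ |
| 8: | if : |
| 9: | Randomly sample a batch of $N$ transitions $(s, a, r, s')$ from $\mathcal{B}$ |
| 10: | Calculate target actions with clipped noise: $\tilde{a} \leftarrow \pi_{\phi'}(s') + \epsilon$, $\epsilon \sim \mathrm{clip}(\mathcal{N}(0, \tilde{\sigma}), -c, c)$ |
| 11: | Calculate the target for the critic update: $y \leftarrow r + \gamma \min_{i=1,2} Q_{\theta'_i}(s', \tilde{a})$ |
| 12: | Update critics $\theta_1$ and $\theta_2$, respectively: $\theta_i \leftarrow \arg\min_{\theta_i} N^{-1} \sum \left(y - Q_{\theta_i}(s, a)\right)^2$ |
| 13: | if $t \bmod d$ then: |
| 14: | Update $\phi$ by the deterministic policy gradient: $\nabla_{\phi} J(\phi) = N^{-1} \sum \nabla_{a} Q_{\theta_1}(s, a)\big\vert_{a = \pi_{\phi}(s)} \nabla_{\phi} \pi_{\phi}(s)$ |
| 15: | Update target networks with $\tau$: $\theta'_i \leftarrow \tau \theta_i + (1 - \tau)\theta'_i$, $\phi' \leftarrow \tau \phi + (1 - \tau)\phi'$ |
| 16: | end if |
| 17: | end if |
| 18: | end for |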
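The three ingredients that distinguish TD3 in Algorithm 1 (clipped noise on the target action, a target built from the smaller of two critics, and delayed actor and target updates) can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the function names, the callables passed in as networks, and the default values of `noise_std`, `noise_clip`, and `policy_delay` are assumptions, while `gamma=0.99` and `tau=0.005` follow the hyperparameter tables.

```python
import numpy as np


def td3_target(reward, next_state, done, actor_target, critic1_target,
               critic2_target, rng, gamma=0.99, noise_std=0.2,
               noise_clip=0.5, act_limit=1.0):
    """Critic target y = r + gamma * (1 - done) * min(Q1', Q2')(s', a_tilde)."""
    mu = actor_target(next_state)
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = np.clip(rng.normal(0.0, noise_std, size=mu.shape),
                    -noise_clip, noise_clip)
    next_action = np.clip(mu + noise, -act_limit, act_limit)
    # Clipped double-Q learning: use the smaller of the two target critics.
    q_min = np.minimum(critic1_target(next_state, next_action),
                       critic2_target(next_state, next_action))
    return reward + gamma * (1.0 - done) * q_min


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * p + (1.0 - tau) * tp
            for tp, p in zip(target_params, online_params)]


def is_policy_update_step(t, policy_delay=2):
    """Actor and target networks are updated only every policy_delay steps."""
    return t % policy_delay == 0
```

In a full training loop, `td3_target` would be evaluated on each sampled batch before the critic regression, and `soft_update` would be called only when `is_policy_update_step(t)` is true.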

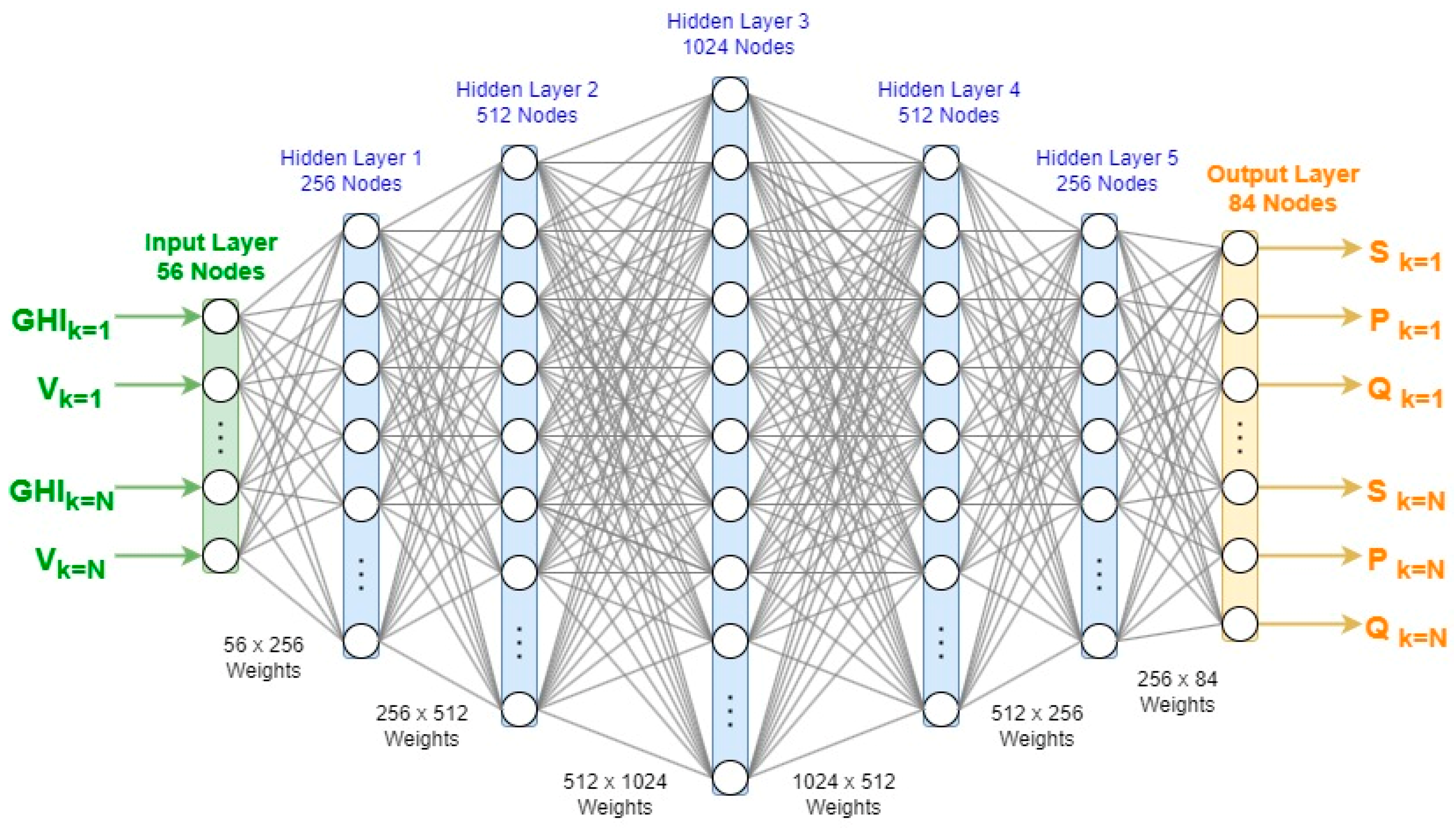

3. Design of the TD3 Agent

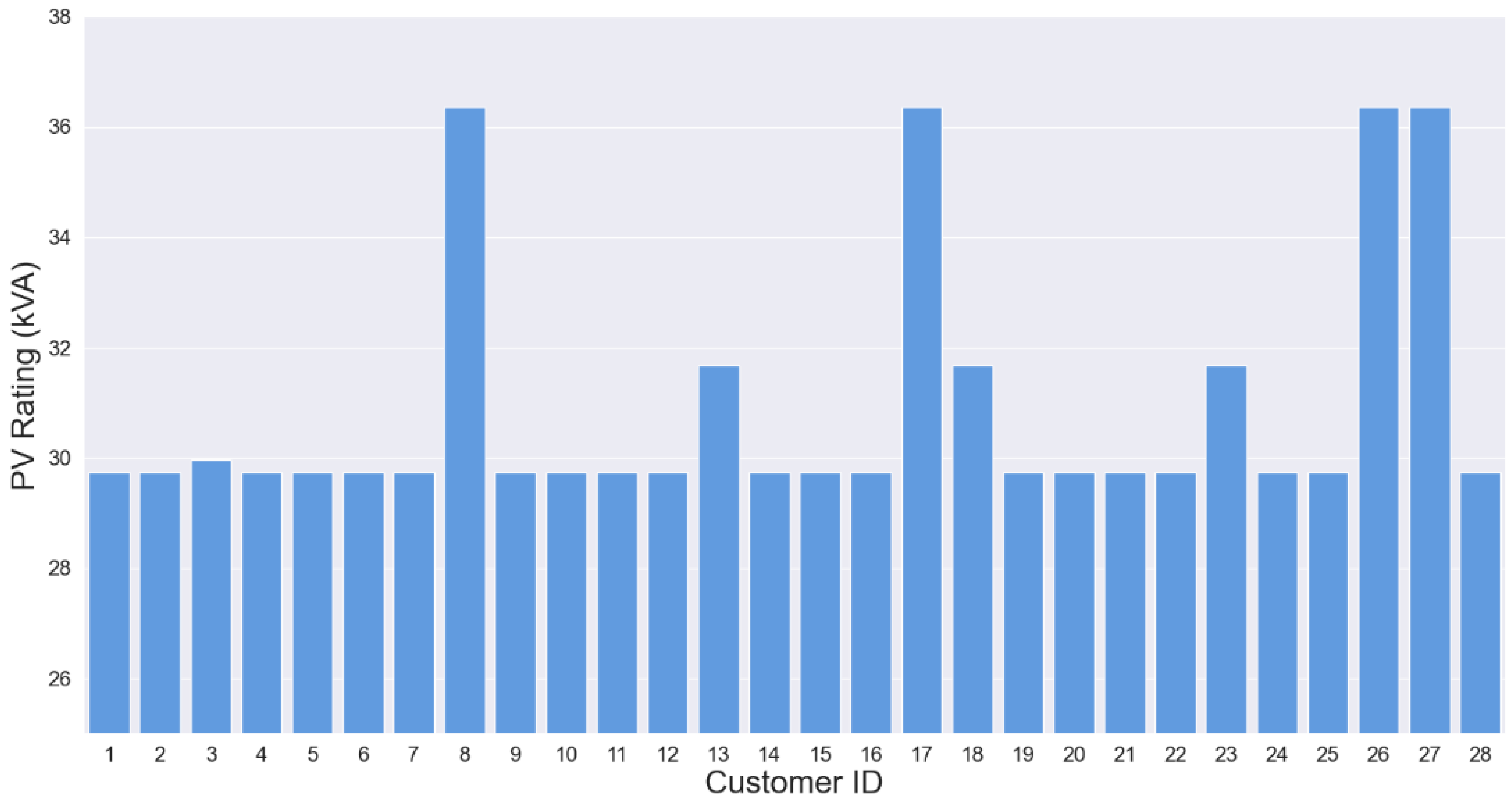

3.1. Hosting Capacity Assessment

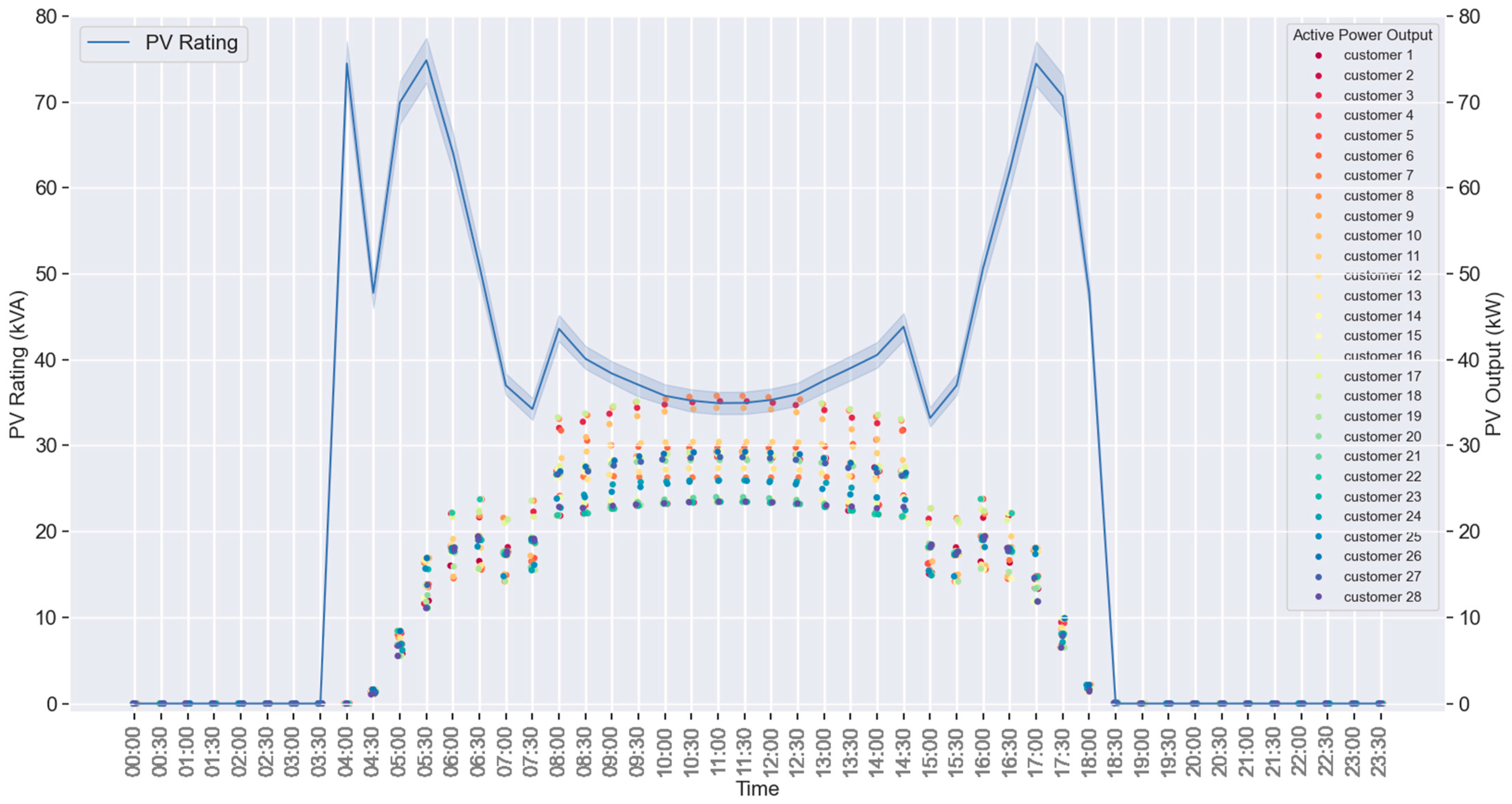

- global horizontal irradiance at each customer;

- voltage at the customer connection point (CCP) of each customer.

- actor network output that determines the maximum PV inverter rating for the customers;

- actor network output that determines the active power output of the inverters;

- actor network output that determines the reactive power output of the inverters.
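Because the actor's output layer uses a tanh activation, each of the three outputs listed above lies in [-1, 1] and has to be mapped to a physical quantity before the power flow is run. The sketch below shows one plausible mapping. It is an assumption made for illustration only: the function `decode_hc_action`, the rating ceiling `s_max_kva`, the linear irradiance model with a 1000 W/m² reference, and the apparent-power limit on reactive power are not taken from the paper.

```python
import math


def _clip_unit(a: float) -> float:
    """Clip a raw actor output to [-1, 1]."""
    return min(max(a, -1.0), 1.0)


def decode_hc_action(a_rating, a_p, a_q, ghi_w_m2, s_max_kva=10.0):
    """Map three tanh outputs to (rating in kVA, P in kW, Q in kvar)."""
    # Rating: rescale [-1, 1] to [0, s_max_kva] (hypothetical ceiling).
    rating = 0.5 * (_clip_unit(a_rating) + 1.0) * s_max_kva
    # Available PV power, assuming output is linear in irradiance up to 1000 W/m2.
    p_avail = rating * min(ghi_w_m2 / 1000.0, 1.0)
    # Active power: fraction of the available power that is actually injected.
    p = 0.5 * (_clip_unit(a_p) + 1.0) * p_avail
    # Reactive power: signed share of the remaining apparent-power headroom.
    q_cap = math.sqrt(max(rating ** 2 - p ** 2, 0.0))
    q = _clip_unit(a_q) * q_cap
    return rating, p, q


# Hypothetical example: mid-range rating, full active power, full absorption.
print(decode_hc_action(0.0, 1.0, -1.0, ghi_w_m2=600.0))
```

Keeping the reactive power inside the apparent-power headroom guarantees that the decoded set-point never exceeds the inverter rating, whatever the actor outputs.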

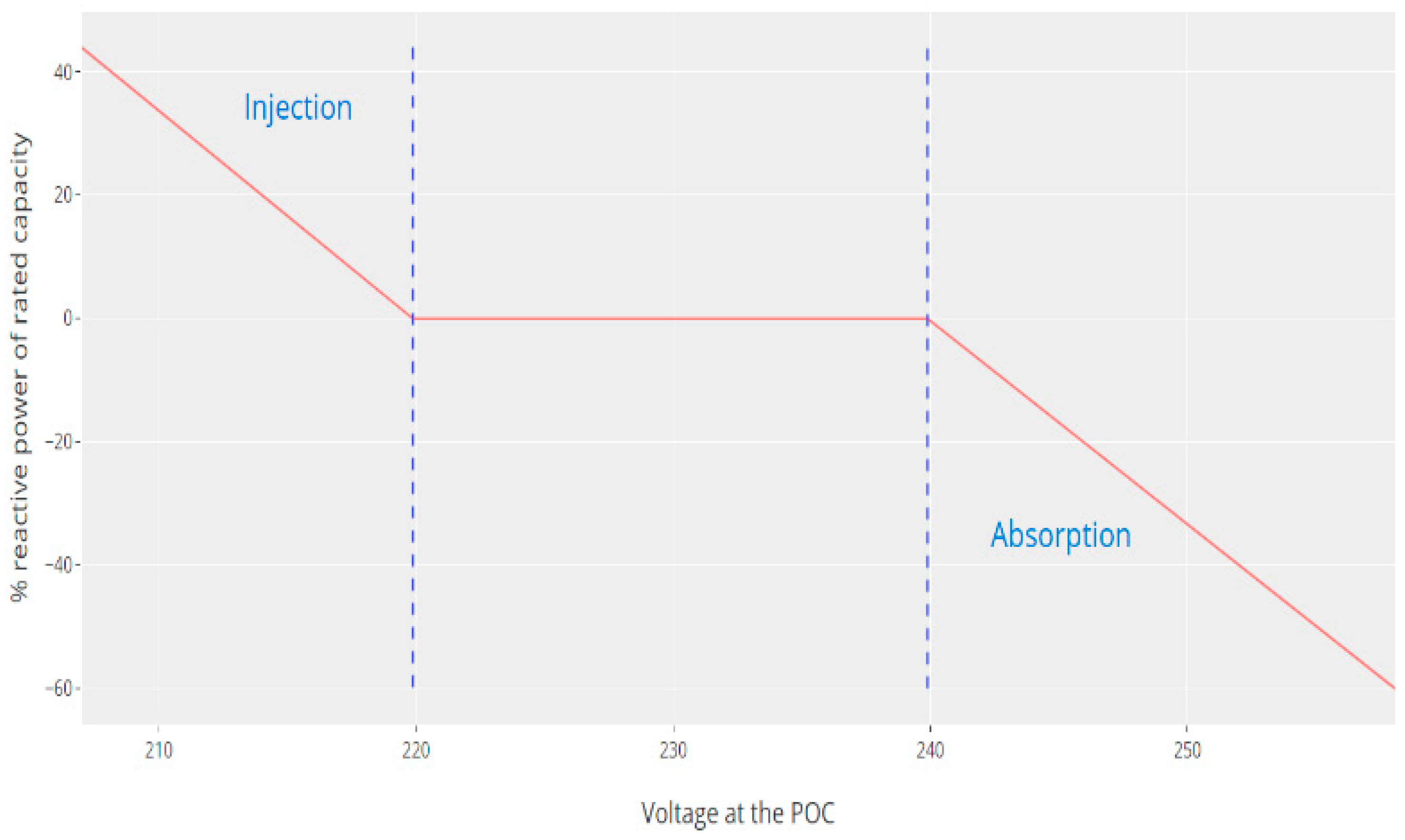

3.2. Coordinated Voltage Control

- global horizontal irradiance at each customer;

- voltage at the CCP of each customer;

- active power at the CCP of each customer;

- reactive power at the CCP of each customer.

- actor network output that determines the active power output of the inverters;

- actor network output that determines the reactive power output of the inverters.
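One way to picture the interface of the coordinated voltage control agent is as a flat observation vector built from the four per-customer measurements, paired with an action vector that splits into active and reactive set-points. The sketch below assumes a single centralized agent observing all customers, and the normalization constants `ghi_ref` and `s_ref` are hypothetical. None of these choices are confirmed by the source.

```python
import numpy as np


def build_vc_state(ghi, v_pu, p_kw, q_kvar, ghi_ref=1000.0, s_ref=10.0):
    """Stack per-customer measurements into one roughly unit-scaled vector."""
    ghi, v_pu, p_kw, q_kvar = (np.asarray(x, dtype=float)
                               for x in (ghi, v_pu, p_kw, q_kvar))
    if not (ghi.shape == v_pu.shape == p_kw.shape == q_kvar.shape):
        raise ValueError("all measurement arrays must have one entry per customer")
    # Voltages are centred on 1 pu so that deviations, not magnitudes, dominate.
    return np.concatenate([ghi / ghi_ref, v_pu - 1.0, p_kw / s_ref, q_kvar / s_ref])


def split_vc_action(action):
    """Split a length-2N actor output into N active and N reactive set-points."""
    action = np.asarray(action, dtype=float)
    if action.size % 2:
        raise ValueError("action length must be even")
    n = action.size // 2
    return action[:n], action[n:]


# Hypothetical two-customer snapshot.
state = build_vc_state([500, 1000], [1.02, 0.98], [2.0, 4.0], [0.0, -1.0])
print(state.shape)  # (8,)
```

With N customers, this layout gives an input layer of size 4N and an output layer of size 2N.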

4. Case Study

4.1. Experimental Setup

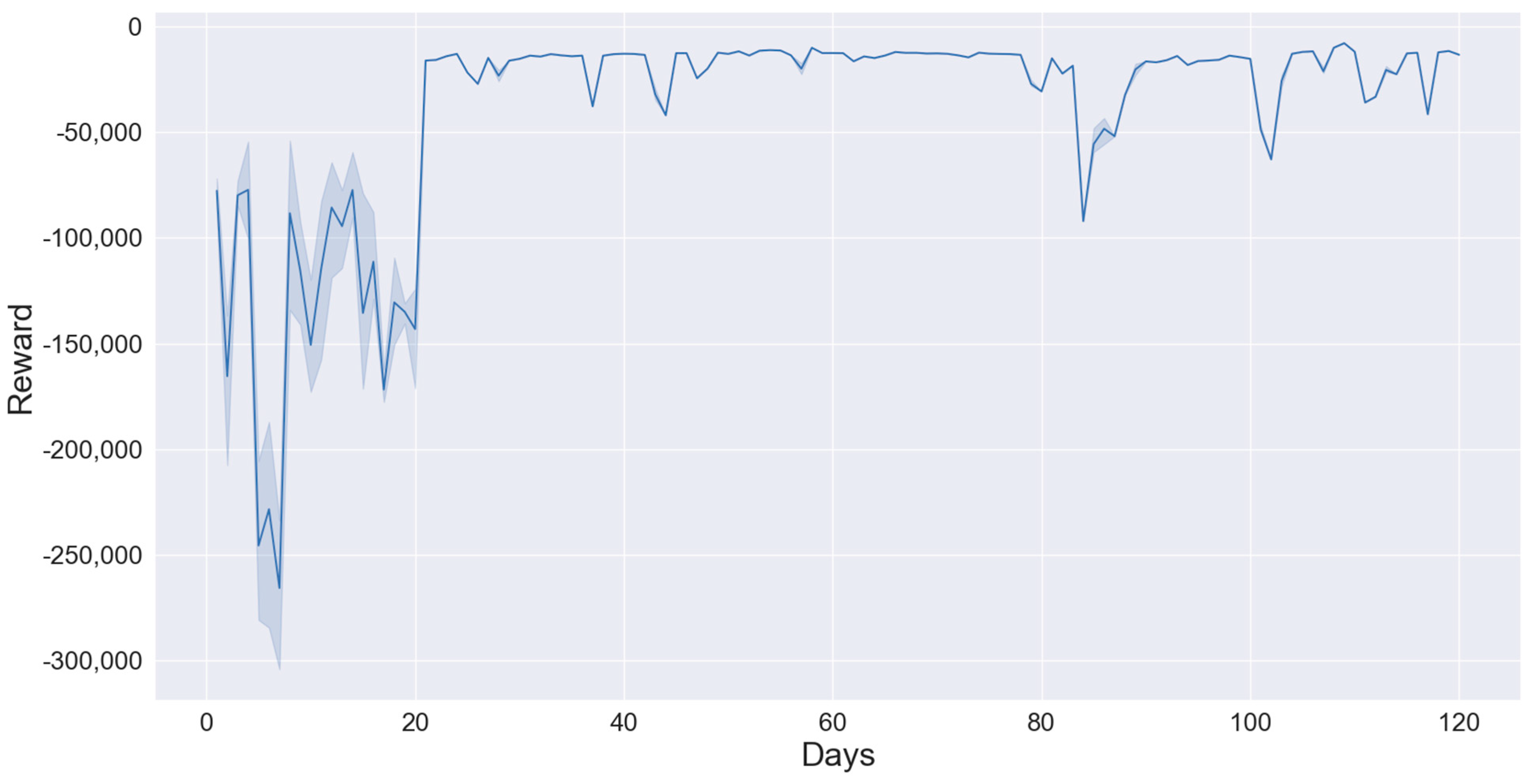

4.2. Quantification of the Enhanced HC

| Algorithm 2. Summary of the enhanced HC assessment methodology: Training and evaluation process of TD3 agent for HC assessment | |
|---|---|
| 1: | Collect data sets 1 and 2. Define |
| 2: | Define the values for reward parameters and hyperparameters |
| 3: | Initialize agent for HC assessment with random weights |
| 4: | Using Data Set 1: for each time step $t$ do: |
| 5: | Take action and execute power flow calculation for time step $t$ |
| 6: | Train the agent (steps 6 to 17 in Algorithm 1) |
| 7: | end for |
| 8: | |
| 9: | for HC evaluation |
| 10: | for each time step $t$ do: |
| 11: | Take action and execute power flow calculation for time step $t$ |
| 12: | |
| 13: | end for |
| 14: | if total operational constraint violations = 0 then: |
| 15: | Identify the HC limit |
| 16: | else: go back to step 2 |
| 17: | end if |
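The control flow of Algorithm 2 (train, evaluate, accept only if no constraint is violated, otherwise retune and repeat) can be sketched as a plain Python loop. Everything named below is a stand-in introduced for illustration: `run_hc_assessment`, the `act`/`learn` agent interface, the `power_flow` callable returning a `"violations"` count, and the toy agent used in the usage example are assumptions, not the authors' code.

```python
def run_hc_assessment(make_agent, train_data, eval_data, power_flow, configs):
    """Try each parameter set until evaluation finishes with zero violations."""
    for config in configs:                    # step 2: reward/hyperparameters
        agent = make_agent(config)            # step 3: freshly initialized agent
        for obs in train_data:                # steps 4-7: training on data set 1
            action = agent.act(obs, explore=True)
            result = power_flow(obs, action)
            agent.learn(obs, action, result)
        violations = 0
        for obs in eval_data:                 # steps 9-13: evaluation on data set 2
            action = agent.act(obs, explore=False)
            violations += power_flow(obs, action)["violations"]
        if violations == 0:                   # steps 14-15: accept this agent
            return agent, config
    return None, None                         # no configuration was violation-free


class ToyAgent:
    """Hypothetical agent whose action is a fixed multiple of the observation."""

    def __init__(self, scale):
        self.scale = scale

    def act(self, obs, explore):
        return self.scale * obs

    def learn(self, obs, action, result):
        pass  # a real agent would run the TD3 update here


def toy_power_flow(obs, action):
    """Hypothetical stand-in: a 'violation' occurs when the action exceeds 1."""
    return {"violations": int(action > 1.0)}


agent, accepted = run_hc_assessment(ToyAgent, [0.4, 0.8], [0.4, 0.8, 1.0],
                                    toy_power_flow, configs=[2.0, 0.5])
print(accepted)  # 0.5
```

In the toy run, the first configuration violates the limit during evaluation and is discarded, mirroring the "go back to step 2" branch.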

4.3. Training and Performance Testing of the TD3 Agent Used for Coordinated Voltage Control

| Algorithm 3. Summary of the training and performance evaluation methodology for the proposed coordinated voltage control scheme: Training and evaluation process of TD3 agent for coordinated voltage control | |
|---|---|
| 1: | |
| 2: | Set the PV ratings of customers to their HC limit identified in step 15 of Algorithm 2 |
| 3: | |
| 4: | |
| 5: | for each time step $t$ do: |
| 6: | Take action |
| 7: | Train the agent (steps 6 to 17 in Algorithm 1) |
| 8: | end for |
| 9: | |
| 10: | for performance evaluation |
| 11: | for each time step $t$ do: |
| 12: | Take action |
| 13: | Check for operational constraint violations |
| 14: | end for |
| 15: | if total operational constraint violations > 0, then: go back to step 3, end if |
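The check for operational constraint violations in steps 13 and 15 amounts to counting how many simulated samples fall outside the permitted operating range. The sketch below does this for voltage only. The function name and the limits `v_min=0.94` and `v_max=1.10` (per unit) are placeholder assumptions, since the network constraints used in the study are not recoverable from the text here.

```python
import numpy as np


def count_voltage_violations(v_pu, v_min=0.94, v_max=1.10):
    """Count samples in a (time step, customer) voltage array outside the band."""
    v = np.asarray(v_pu, dtype=float)
    # A sample violates the constraint if it is strictly below or above the band.
    return int(np.count_nonzero((v < v_min) | (v > v_max)))


# Hypothetical voltages for two time steps and two customers.
voltages = [[1.00, 1.11],
            [0.93, 1.05]]
print(count_voltage_violations(voltages))  # 2
```

A total of zero over the whole evaluation data set corresponds to the acceptance condition, and any positive count sends the procedure back to retraining.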

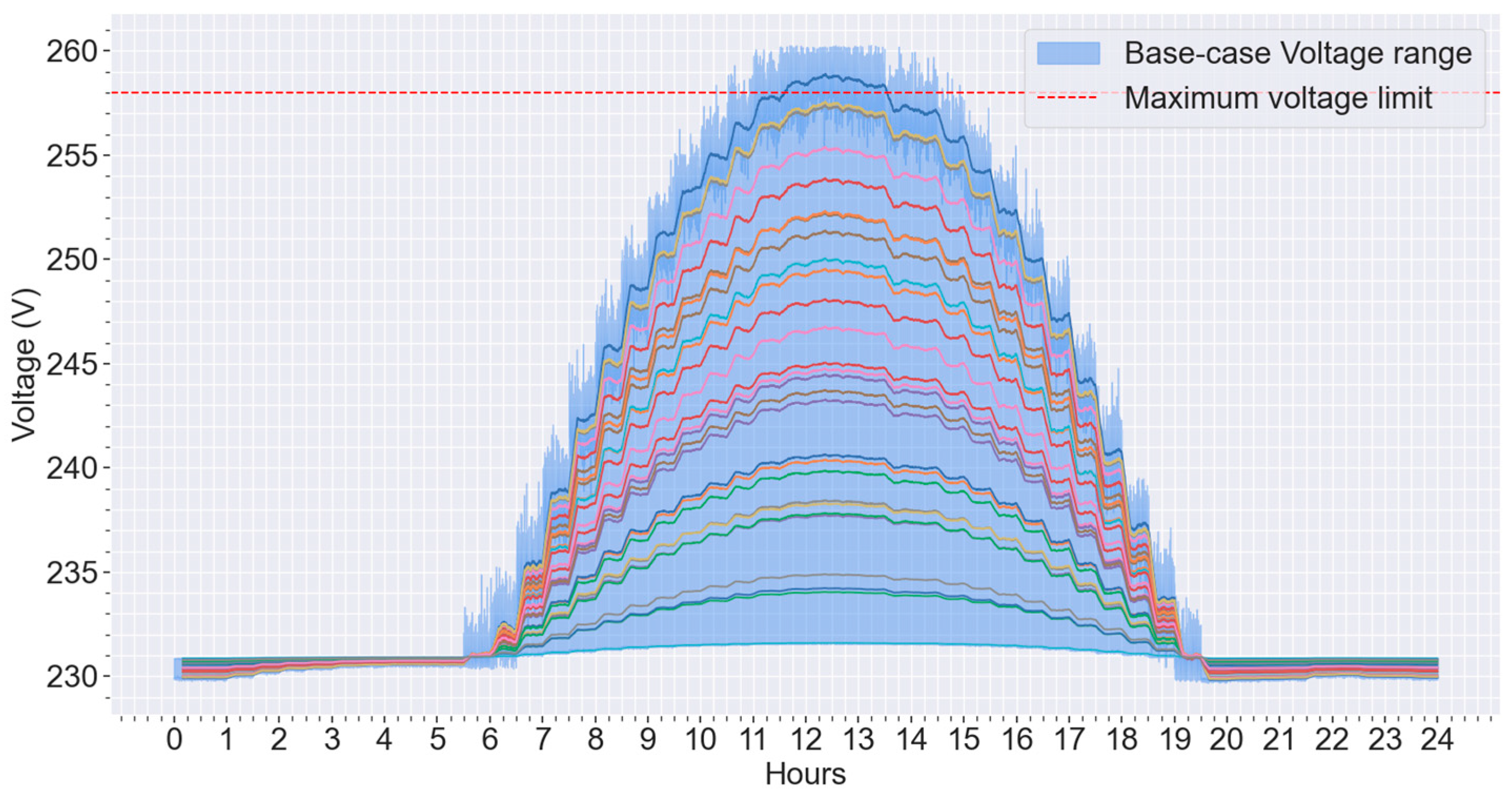

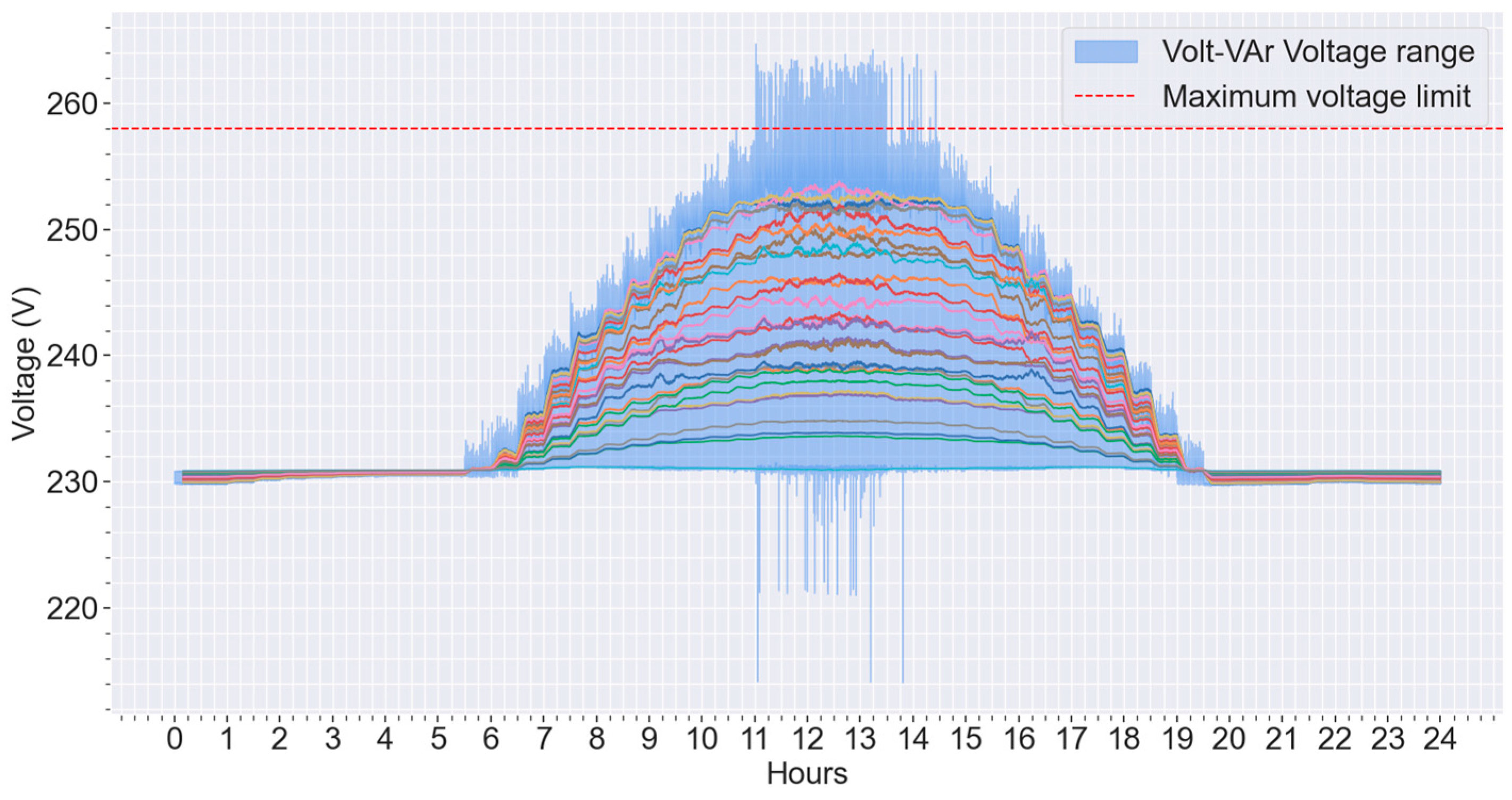

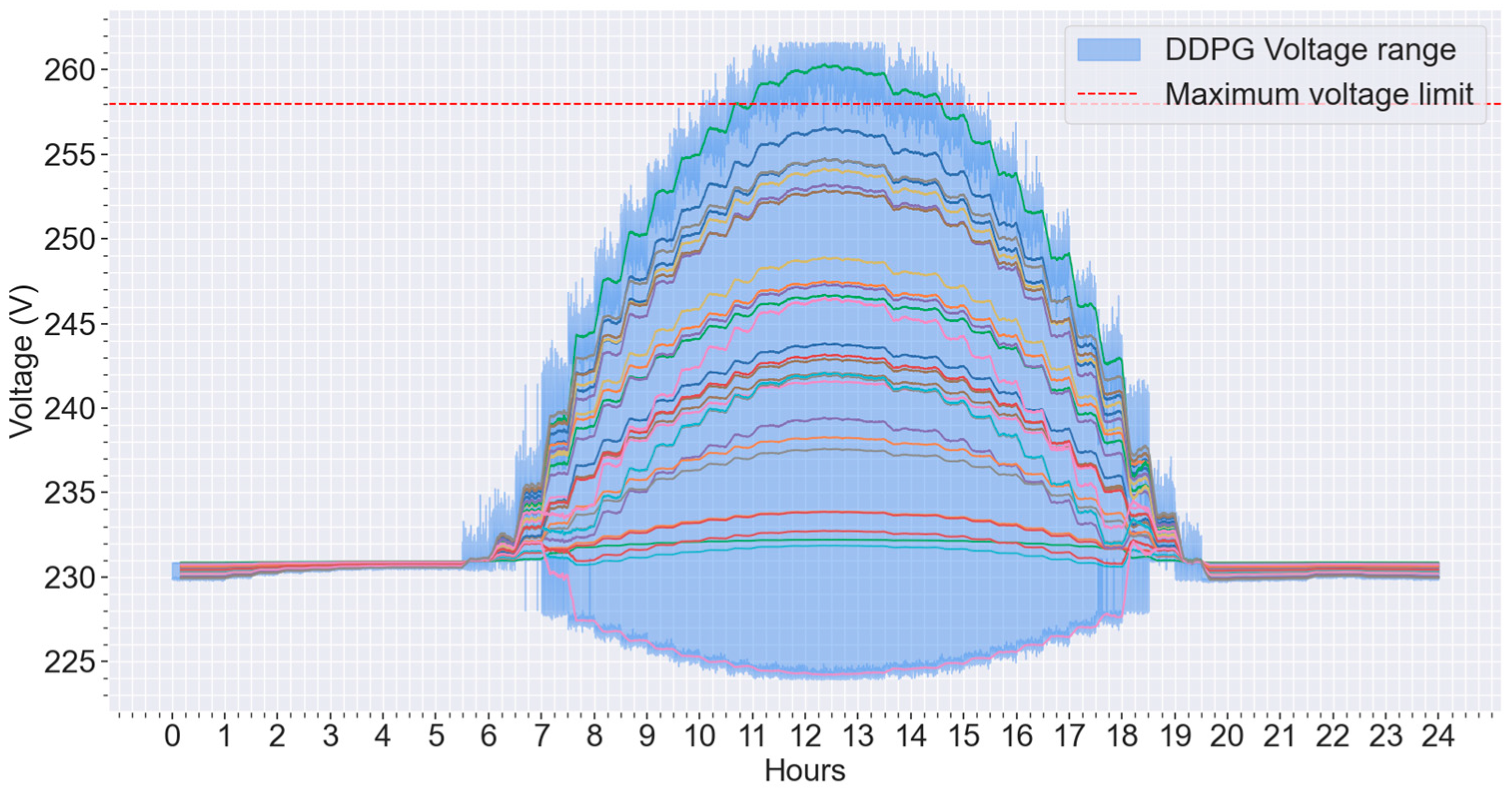

4.4. Comparative Analysis of Different Voltage Control Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ding, F.; Mather, B.; Gotseff, P. Technologies to Increase PV Hosting Capacity in Distribution Feeders. In Proceedings of the 2016 IEEE Power and Energy Society General Meeting (PESGM), Boston, MA, USA, 17–21 July 2016.

2. Ismael, S.M.; Abdel Aleem, S.H.E.; Abdelaziz, A.Y.; Zobaa, A.F. State-of-the-Art of Hosting Capacity in Modern Power Systems with Distributed Generation. Renew. Energy 2019, 130, 1002–1020.

3. Torquato, R.; Salles, D.; Pereira, C.O.; Meira, P.C.M.; Freitas, W. A Comprehensive Assessment of PV Hosting Capacity on Low-Voltage Distribution Systems. IEEE Trans. Power Deliv. 2018, 33, 1002–1012.

4. Kharrazi, A.; Sreeram, V.; Mishra, Y. Assessment Techniques of the Impact of Grid-Tied Rooftop Photovoltaic Generation on the Power Quality of Low Voltage Distribution Network—A Review. Renew. Sustain. Energy Rev. 2020, 120, 109643.

5. Zubo, R.H.A.; Mokryani, G.; Rajamani, H.S.; Aghaei, J.; Niknam, T.; Pillai, P. Operation and Planning of Distribution Networks with Integration of Renewable Distributed Generators Considering Uncertainties: A Review. Renew. Sustain. Energy Rev. 2017, 72, 1177–1198.

6. Rajabi, A.; Elphick, S.; David, J.; Pors, A.; Robinson, D. Innovative Approaches for Assessing and Enhancing the Hosting Capacity of PV-Rich Distribution Networks: An Australian Perspective. Renew. Sustain. Energy Rev. 2022, 161, 112365.

7. Mulenga, E.; Bollen, M.H.J.; Etherden, N. A Review of Hosting Capacity Quantification Methods for Photovoltaics in Low-Voltage Distribution Grids. Int. J. Electr. Power Energy Syst. 2020, 115, 105445.

8. Carollo, R.; Chaudhary, S.K.; Pillai, J.R. Hosting Capacity of Solar Photovoltaics in Distribution Grids under Different Pricing Schemes. In Proceedings of the 2015 IEEE PES Asia-Pacific Power and Energy Engineering Conference (APPEEC), Brisbane, QLD, Australia, 15–18 November 2015.

9. Heslop, S.; Macgill, I.; Fletcher, J.; Lewis, S. Method for Determining a PV Generation Limit on Low Voltage Feeders for Evenly Distributed PV and Load. Energy Procedia 2014, 57, 207–216.

10. Ebe, F.; Idlbi, B.; Morris, J.; Heilscher, G.; Meier, F. Evaluation of PV Hosting Capacities of Distribution Grids with Utilisation of Solar Roof Potential Analyses. CIRED Open Access Proc. J. 2017, 2017, 2265–2269.

11. Ebe, F.; Idlbi, B.; Morris, J.; Heilscher, G.; Meier, F. Evaluation of PV Hosting Capacity of Distribution Grids Considering a Solar Roof Potential Analysis—Comparison of Different Algorithms. In Proceedings of the 2017 IEEE Manchester PowerTech, Powertech 2017, Manchester, UK, 18–22 June 2017.

12. Heslop, S.; MacGill, I.; Fletcher, J. Maximum PV Generation Estimation Method for Residential Low Voltage Feeders. Sustain. Energy Grids Netw. 2016, 7, 58–69.

13. Bracale, A.; Caramia, P.; Carpinelli, G.; Di Fazio, A.R.; Varilone, P. A Bayesian-Based Approach for a Short-Term Steady-State Forecast of a Smart Grid. IEEE Trans. Smart Grid 2013, 4, 1760–1771.

14. Panigrahi, B.K.; Sahu, S.K.; Nandi, R.; Nayak, S. Probabilistic Load Flow of a Distributed Generation Connected Power System by Two Point Estimate Method. In Proceedings of the 2017 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Kollam, India, 20–21 April 2017; pp. 1–5.

15. Aien, M.; Fotuhi-Firuzabad, M.; Aminifar, F. Probabilistic Load Flow in Correlated Uncertain Environment Using Unscented Transformation. IEEE Trans. Power Syst. 2012, 27, 2233–2241.

16. Schwippe, J.; Krause, O.; Rehtanz, C. Extension of a Probabilistic Load Flow Calculation Based on an Enhanced Convolution Technique. In Proceedings of the 2009 IEEE PES/IAS Conference on Sustainable Alternative Energy (SAE), Valencia, Spain, 28–30 September 2009; pp. 1–6.

17. Schellenberg, A.; Rosehart, W.; Aguado, J. Cumulant-Based Probabilistic Optimal Power Flow (P-OPF) with Gaussian and Gamma Distributions. IEEE Trans. Power Syst. 2005, 20, 773–781.

18. Deboever, J.; Grijalva, S.; Reno, M.J.; Broderick, R.J. Fast Quasi-Static Time-Series (QSTS) for Yearlong PV Impact Studies Using Vector Quantization. Sol. Energy 2018, 159, 538–547.

19. López, C.D.; Idlbi, B.; Stetz, T.; Braun, M. Shortening Quasi-Static Time-Series Simulations for Cost-Benefit Analysis of Low Voltage Network Operation with Photovoltaic Feed-In. In Proceedings of the Power and Energy Student Summit (PESS) 2015, Dortmund, Germany, 13–14 January 2015.

20. Qureshi, M.U.; Grijalva, S.; Reno, M.J.; Deboever, J.; Zhang, X.; Broderick, R.J. A Fast Scalable Quasi-Static Time Series Analysis Method for PV Impact Studies Using Linear Sensitivity Model. IEEE Trans. Sustain. Energy 2019, 10, 301–310.

21. Reno, M.J.; Deboever, J.; Mather, B. Motivation and Requirements for Quasi-Static Time Series (QSTS) for Distribution System Analysis. In Proceedings of the 2017 IEEE Power & Energy Society General Meeting, Chicago, IL, USA, 16–20 July 2017; pp. 1–5.

22. Jain, A.K.; Horowitz, K.; Ding, F.; Sedzro, K.S.; Palmintier, B.; Mather, B.; Jain, H. Dynamic Hosting Capacity Analysis for Distributed Photovoltaic Resources—Framework and Case Study. Appl. Energy 2020, 280, 115633.

23. Antoniadou-Plytaria, K.E.; Kouveliotis-Lysikatos, I.N.; Georgilakis, P.S.; Hatziargyriou, N.D. Distributed and Decentralized Voltage Control of Smart Distribution Networks: Models, Methods, and Future Research. IEEE Trans. Smart Grid 2017, 8, 2999–3008.

24. Pippi, K.D.; Kryonidis, G.C.; Nousdilis, A.I.; Papadopoulos, T.A. A Unified Control Strategy for Voltage Regulation and Congestion Management in Active Distribution Networks. Electr. Power Syst. Res. 2022, 212, 108648.

25. Xu, T.; Wade, N.S.; Davidson, E.M.; Taylor, P.C.; McArthur, S.D.J.; Garlick, W.G. Case-Based Reasoning for Coordinated Voltage Control on Distribution Networks. Electr. Power Syst. Res. 2011, 81, 2088–2098.

26. Jabr, R.A. Linear Decision Rules for Control of Reactive Power by Distributed Photovoltaic Generators. IEEE Trans. Power Syst. 2018, 33, 2165–2174.

27. Li, P.; Zhang, C.; Wu, Z.; Xu, Y.; Hu, M.; Dong, Z. Distributed Adaptive Robust Voltage/VAR Control with Network Partition in Active Distribution Networks. IEEE Trans. Smart Grid 2020, 11, 2245–2256.

28. Li, J.; Liu, C.; Khodayar, M.E.; Wang, M.H.; Xu, Z.; Zhou, B.; Li, C. Distributed Online VAR Control for Unbalanced Distribution Networks with Photovoltaic Generation. IEEE Trans. Smart Grid 2020, 11, 4760–4772.

29. Liu, H.J.; Shi, W.; Zhu, H. Distributed Voltage Control in Distribution Networks: Online and Robust Implementations. IEEE Trans. Smart Grid 2018, 9, 6106–6117.

30. Papadimitrakis, M.; Kapnopoulos, A.; Tsavartzidis, S.; Alexandridis, A. A Cooperative PSO Algorithm for Volt-VAR Optimization in Smart Distribution Grids. Electr. Power Syst. Res. 2022, 212, 108618.

31. Nayeripour, M.; Fallahzadeh-Abarghouei, H.; Waffenschmidt, E.; Hasanvand, S. Coordinated Online Voltage Management of Distributed Generation Using Network Partitioning. Electr. Power Syst. Res. 2016, 141, 202–209.

32. Zhao, B.; Xu, Z.; Xu, C.; Wang, C.; Lin, F. Network Partition-Based Zonal Voltage Control for Distribution Networks with Distributed PV Systems. IEEE Trans. Smart Grid 2018, 9, 4087–4098.

33. Li, Z.; Wu, Q.; Chen, J.; Huang, S.; Shen, F. Double-Time-Scale Distributed Voltage Control for Unbalanced Distribution Networks Based on MPC and ADMM. Int. J. Electr. Power Energy Syst. 2023, 145, 108665.

34. Li, P.; Ji, H.; Yu, H.; Zhao, J.; Wang, C.; Song, G.; Wu, J. Combined Decentralized and Local Voltage Control Strategy of Soft Open Points in Active Distribution Networks. Appl. Energy 2019, 241, 613–624.

35. Farina, M.; Guagliardi, A.; Mariani, F.; Sandroni, C.; Scattolini, R. Model Predictive Control of Voltage Profiles in MV Networks with Distributed Generation. Control Eng. Pract. 2015, 34, 18–29.

36. Zhang, Y.; Wang, X.; Wang, J.; Zhang, Y. Deep Reinforcement Learning Based Volt-VAR Optimization in Smart Distribution Systems. IEEE Trans. Smart Grid 2021, 12, 361–371.

37. El Helou, R.; Kalathil, D.; Xie, L. Fully Decentralized Reinforcement Learning-Based Control of Photovoltaics in Distribution Grids for Joint Provision of Real and Reactive Power. IEEE Open Access J. Power Energy 2021, 8, 175–185.

38. Liu, H.; Wu, W. Federated Reinforcement Learning for Decentralized Voltage Control in Distribution Networks. IEEE Trans. Smart Grid 2022, 13, 3840–3843.

39. Kou, P.; Liang, D.; Wang, C.; Wu, Z.; Gao, L. Safe Deep Reinforcement Learning-Based Constrained Optimal Control Scheme for Active Distribution Networks. Appl. Energy 2020, 264, 114772.

40. Yang, Q.; Wang, G.; Sadeghi, A.; Giannakis, G.B.; Sun, J. Two-Timescale Voltage Control in Distribution Grids Using Deep Reinforcement Learning. IEEE Trans. Smart Grid 2020, 11, 2313–2323.

41. Lee, X.Y.; Sarkar, S.; Wang, Y. A Graph Policy Network Approach for Volt-Var Control in Power Distribution Systems. Appl. Energy 2022, 323, 119530.

42. Cao, D.; Hu, W.; Xu, X.; Wu, Q.; Huang, Q.; Chen, Z.; Blaabjerg, F. Deep Reinforcement Learning Based Approach for Optimal Power Flow of Distribution Networks Embedded with Renewable Energy and Storage Devices. J. Mod. Power Syst. Clean Energy 2021, 9, 1101–1110.

43. Xing, Q.; Chen, Z.; Zhang, T.; Li, X.; Sun, K.H. Real-Time Optimal Scheduling for Active Distribution Networks: A Graph Reinforcement Learning Method. Int. J. Electr. Power Energy Syst. 2023, 145, 108637.

44. Qi, Y. TD3-Based Voltage Regulation for Distribution Networks with PV and Energy Storage System. In Proceedings of the 2023 Panda Forum on Power and Energy (PandaFPE), Chengdu, China, 27–30 April 2023; pp. 505–509.

45. Liu, Q.; Guo, Y.; Deng, L.; Tang, W.; Sun, H.; Huang, W. Robust Offline Deep Reinforcement Learning for Volt-Var Control in Active Distribution Networks. In Proceedings of the 5th IEEE Conference on Energy Internet and Energy System Integration: Energy Internet for Carbon Neutrality, EI2 2021, Taiyuan, China, 22–24 October 2021; pp. 442–448.

46. Liu, H.; Wu, W.; Wang, Y. Bi-Level Off-Policy Reinforcement Learning for Two-Timescale Volt/VAR Control in Active Distribution Networks. IEEE Trans. Power Syst. 2022, 38, 385–395.

47. Cao, D.; Zhao, J.; Hu, W.; Ding, F.; Yu, N.; Huang, Q.; Chen, Z. Model-Free Voltage Control of Active Distribution System with PVs Using Surrogate Model-Based Deep Reinforcement Learning. Appl. Energy 2022, 306, 117982.

48. Fujimoto, S.; Van Hoof, H.; Meger, D. Addressing Function Approximation Error in Actor-Critic Methods. In Proceedings of the 35th International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018.

49. Wu, J.; Yuan, J.; Weng, Y.; Ayyanar, R. Spatial-Temporal Deep Learning for Hosting Capacity Analysis in Distribution Grids. IEEE Trans. Smart Grid 2022, 14, 354–364.

50. Xu, X.; Chen, X.; Wang, J.; Fang, L.; Xue, F.; Lim, E.G. Cooperative Multi-Agent Deep Reinforcement Learning Based Decentralized Framework for Dynamic Renewable Hosting Capacity Assessment in Distribution Grids. Energy Rep. 2023, 9, 441–448.

51. Yao, Y.; Ding, F.; Horowitz, K.; Jain, A. Coordinated Inverter Control to Increase Dynamic PV Hosting Capacity: A Real-Time Optimal Power Flow Approach. IEEE Syst. J. 2022, 16, 1933–1944.

52. AS/NZS 4777.2:2020; Grid Connection of Energy Systems via Inverters, Part 2: Inverter Requirements. Standards New Zealand: Wellington, New Zealand, 2020.

53. IEC/TR 61000-3-14:2011; Electromagnetic Compatibility (EMC) Part 3.14: Limits-Assessment of Emission Limits for Harmonics, Interharmonics, Voltage Fluctuations and Unbalance for the Connection of Disturbing Installations to LV Power Systems. International Electrotechnical Commission: Geneva, Switzerland, 2011.

| Features | [39,45,46,47] | [37] | [49] | [50] | [51] | Current Work |
|---|---|---|---|---|---|---|
| DRL-based coordinated voltage control | ✓ | ✓ | × | ✓ | × | ✓ |
| Comparative analysis with other voltage control algorithms | ✓ | ✓ | × | ✓ | ✓ | ✓ |
| Implementation on real-world unbalanced LV network models | × | ✓ | ✓ | × | × | ✓ |
| Deep learning-based real-time HC assessment | × | × | ✓ | ✓ | × | ✓ |
| Quantification of enhanced HC | × | × | × | × | ✓ | ✓ |
| A discussion on performance level and limitations | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Hyperparameter | Value |
|---|---|
| Actor learning rate | 0.001 |
| Critic learning rate | 0.001 |
| Discount factor | 0.99 |
| Batch size | 750 |
| Standard deviation of the added Gaussian noise | 0.01 |
| Target network update rate | 0.005 |
| Activation function of hidden layers | ReLU |
| Activation function of output layer | tanh |
| Input layer size | 2 |
| Output layer size | 3 |
| Size of hidden layers | {256, 512, 1024, 512, 256} |
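To give a sense of scale for the architecture listed above (two inputs, three outputs, five ReLU hidden layers, tanh output), the following NumPy sketch builds a network of that shape and counts its trainable parameters. The He-style random initialization and the function names are assumptions made for illustration. The layer sizes and activations are taken from the table.

```python
import numpy as np

HIDDEN = [256, 512, 1024, 512, 256]  # hidden layer sizes from the table


def init_mlp(n_in, n_out, hidden=HIDDEN, seed=0):
    """Create (weight, bias) pairs for a fully connected network."""
    rng = np.random.default_rng(seed)
    sizes = [n_in, *hidden, n_out]
    # He-style scaling is an assumption; the source does not state the scheme.
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def actor_forward(params, state):
    """Forward pass: ReLU on hidden layers, tanh on the output layer."""
    x = np.asarray(state, dtype=float)
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)
    w, b = params[-1]
    return np.tanh(x @ w + b)  # outputs are bounded in [-1, 1]


params = init_mlp(n_in=2, n_out=3)
n_params = sum(w.size + b.size for w, b in params)
print(n_params)  # 1314563
```

Roughly 1.3 million parameters for a two-input actor is large, so most of the capacity sits in the 512- and 1024-unit hidden layers rather than at the interface to the network measurements.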

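| Hyperparameter | Value |
|---|---|
| Actor learning rate | 0.001 |
| Critic learning rate | 0.001 |
| Discount factor | 0.99 |
| Batch size | 500 |
| Standard deviation of the added Gaussian noise | 0.1 |
| Target network update rate | 0.005 |
| Activation function of hidden layers | ReLU |
| Activation function of output layer | tanh |
| Input layer size | |
| Output layer size | |
| Size of hidden layers | {256, 512, 1024, 512, 256} |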

| | R1 (Ω/km) | X1 (Ω/km) | R0 (Ω/km) | X0 (Ω/km) |
|---|---|---|---|---|
| Main Feeder | 0.298557 | 0.259633 | 1.132508 | 0.945961 |
| Service Feeder | 1.480003 | 0.088 | - | - |
| Network Constraints | | | | |

| Data Set No. | Number of Days | Time Step Resolution | Total Time Steps | Simulation |
|---|---|---|---|---|
| 1 | 120 | 30 min | 5760 | Training of HC and VC agents |
| 2 | 120 | 30 min | 5760 | Evaluation of HC agent |
| 3 | 1 | 5 s | 17,280 | Evaluation of VC agent |
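The total number of time steps for each data set follows directly from its duration and resolution, which the short check below reproduces. The helper name `total_time_steps` is an illustrative assumption.

```python
def total_time_steps(days: int, step_seconds: int) -> int:
    """Number of samples covering `days` full days at a fixed resolution."""
    seconds = days * 24 * 60 * 60
    if seconds % step_seconds:
        raise ValueError("resolution does not divide the simulated period")
    return seconds // step_seconds


print(total_time_steps(120, 30 * 60))  # 5760  (data sets 1 and 2)
print(total_time_steps(1, 5))          # 17280 (data set 3)
```

The single-day evaluation set at 5 s resolution therefore contains three times as many steps as each 120-day set at 30 min resolution.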


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Suchithra, J.; Rajabi, A.; Robinson, D.A. Enhancing PV Hosting Capacity of Electricity Distribution Networks Using Deep Reinforcement Learning-Based Coordinated Voltage Control. Energies 2024, 17, 5037. https://doi.org/10.3390/en17205037
