Stress-Testing MQTT Brokers: A Comparative Analysis of Performance Measurements

Abstract

1. Introduction

2. Background

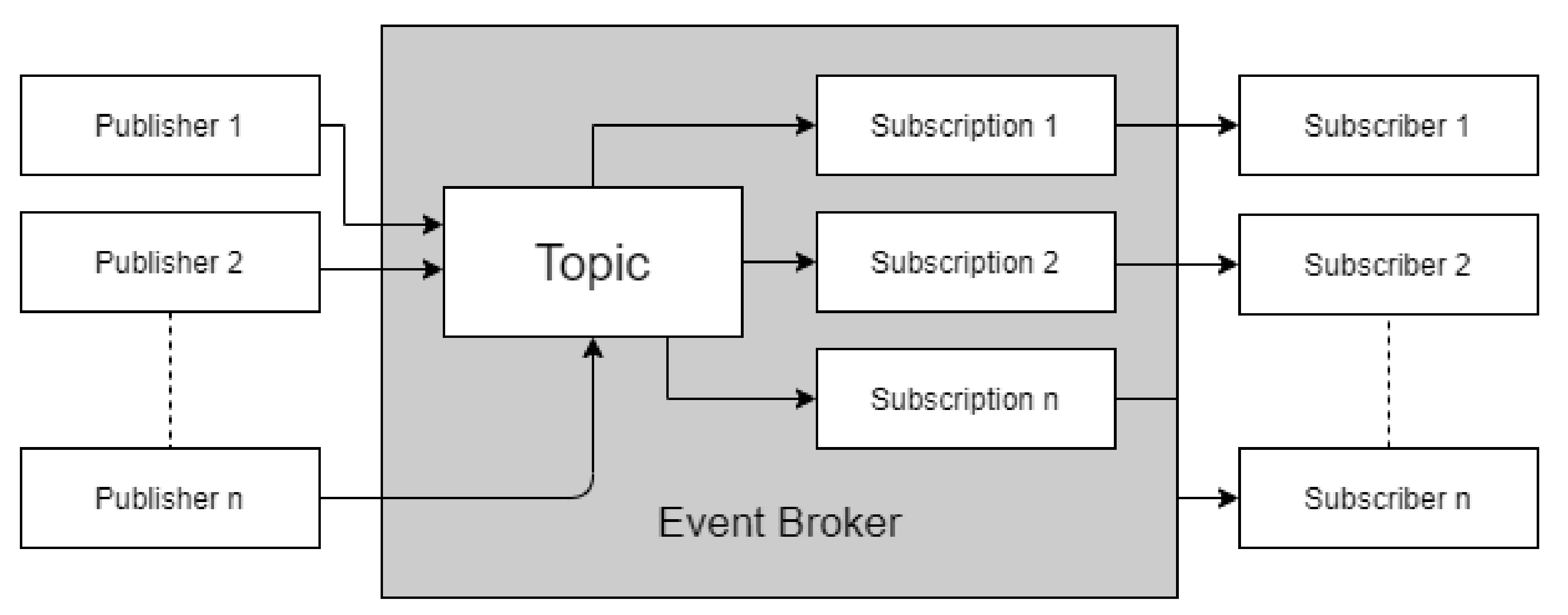

2.1. Basics of a Publish/Subscribe Messaging Service

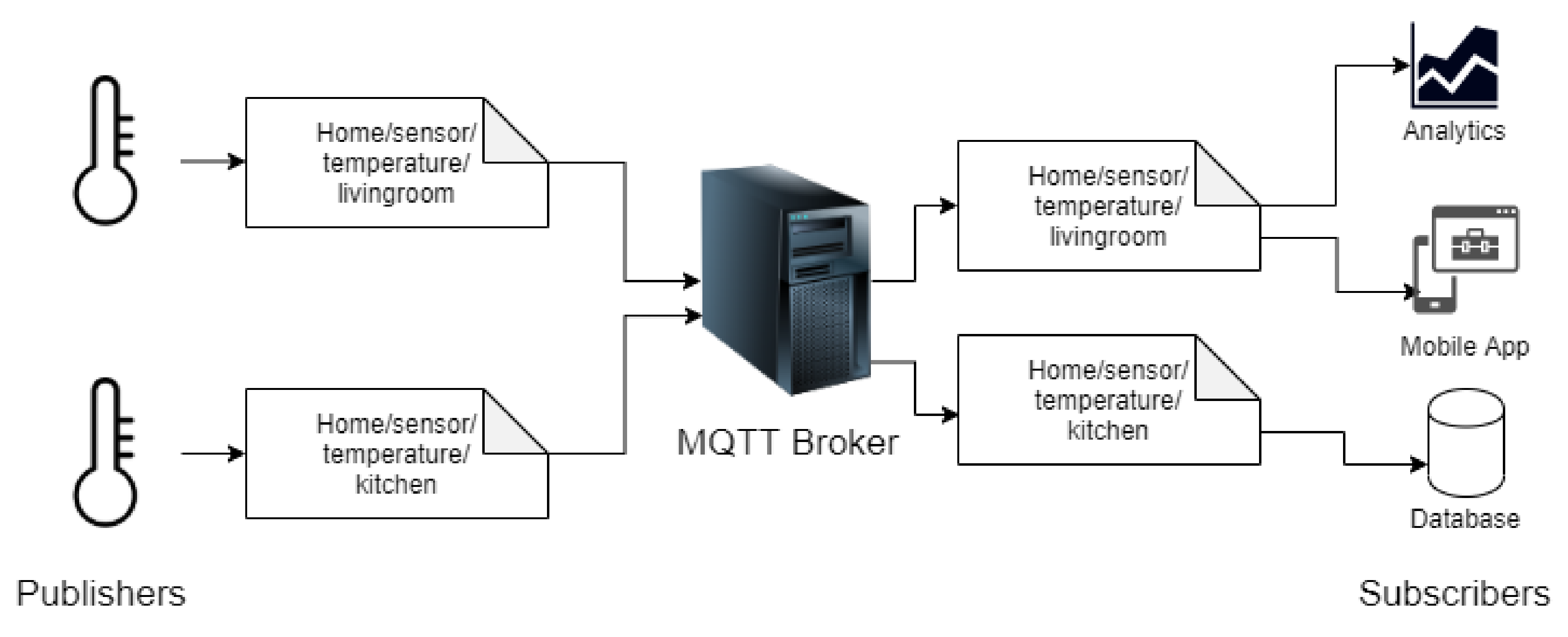

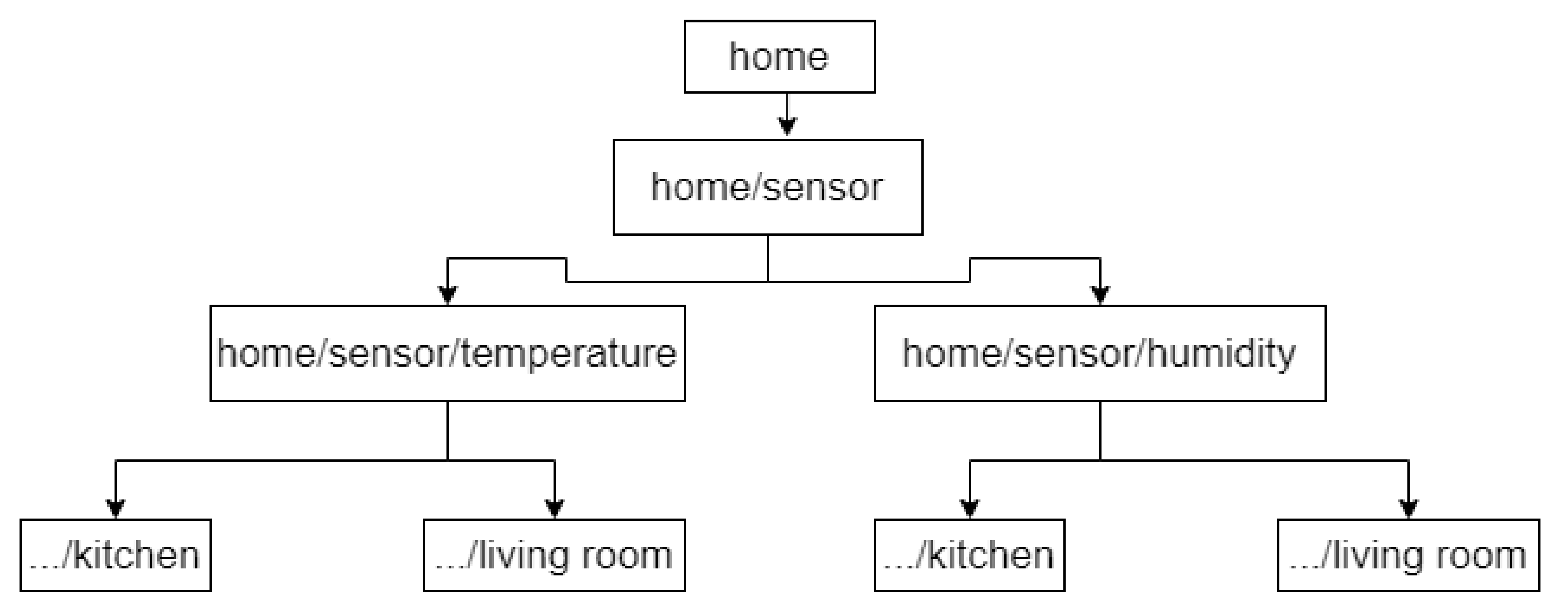

2.2. Overview of MQTT Architecture

- A publisher or producer (an MQTT client).

- A broker (an MQTT server).

- A consumer or subscriber (an MQTT client).

2.3. Scalability and Types of MQTT Broker Implementations

2.4. Evaluating the Performance of a Messaging Service

2.4.1. Latency

2.4.2. Scalability

2.4.3. Availability

3. Related Work

4. Local and Cloud Test Environment Settings and Benchmarking Results

- one is a local testing environment, and

- the other one is a cloud-based testing environment.

4.1. Evaluation Scenario

4.1.1. Evaluation Conditions

4.1.2. Latency Calculations

4.1.3. Message Payload

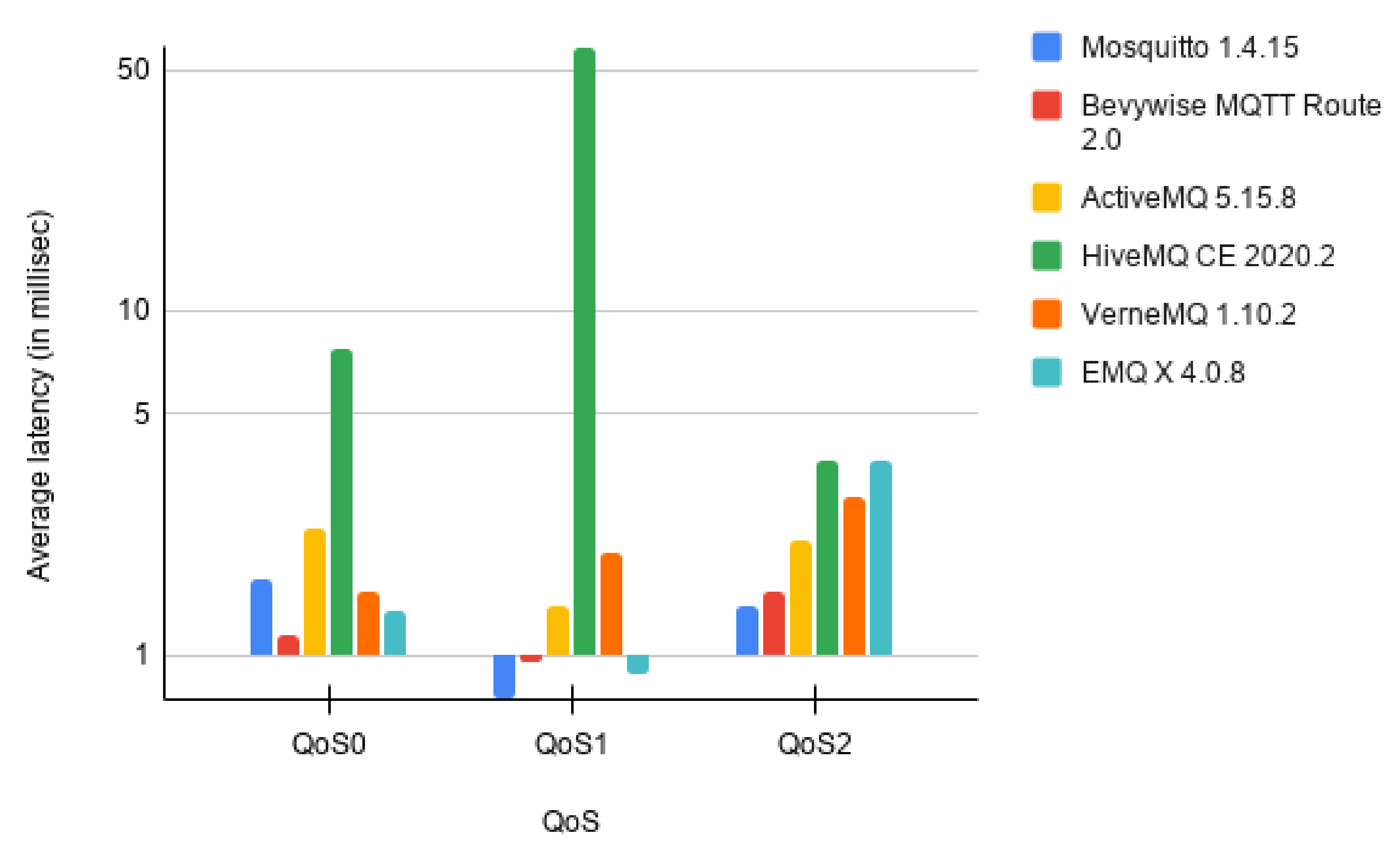

4.2. Benchmarking Results

5. Discussion

5.1. Local Evaluation Results

5.2. Cloud-Based Evaluation Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- IHS Markit, Number of Connected IoT Devices Will Surge to 125 Billion by 2030. Available online: https://technology.ihs.com/596542/ (accessed on 6 August 2020).

- Karagiannis, V.; Chatzimisios, P.; Vazquez-Gallego, F.; Alonso-Zarate, J. A survey on application layer protocols for the Internet of Things. Trans. IoT Cloud Comput. 2015, 3, 11–17.

- Naik, N. Choice of effective messaging protocols for IoT systems: MQTT, CoAP, AMQP and HTTP. In Proceedings of the 2017 IEEE International Systems Engineering Symposium (ISSE), Vienna, Austria, 11–13 October 2017.

- Bandyopadhyay, S.; Bhattacharyya, A. Lightweight Internet protocols for web enablement of sensors using constrained gateway devices. In Proceedings of the 2013 International Conference on Computing, Networking and Communications (ICNC), San Diego, CA, USA, 28–31 January 2013.

- MQTT v5.0. Available online: https://docs.oasis-open.org/mqtt/mqtt/v5.0/mqtt-v5.0.html (accessed on 6 August 2020).

- Messaging Technologies for the Industrial Internet and the Internet Of Things. Available online: https://www.smartindustry.com/assets/Uploads/SI-WP-Prismtech-Messaging-Tech.pdf (accessed on 6 August 2020).

- Han, N.S. Semantic Service Provisioning for 6LoWPAN: Powering Internet of Things Applications on Web. Other [cs.OH]. Institut National des Télécommunications, Paris. 2015. Available online: https://tel.archives-ouvertes.fr/tel-01217185/document (accessed on 6 August 2020).

- Kawaguchi, R.; Bandai, M. Edge Based MQTT Broker Architecture for Geographical IoT Applications. In Proceedings of the 2020 International Conference on Information Networking (ICOIN), Barcelona, Spain, 7–10 January 2020.

- Sasaki, Y.; Yokotani, T. Performance Evaluation of MQTT as a Communication Protocol for IoT and Prototyping. Adv. Technol. Innov. 2019, 4, 21–29.

- Al-Fuqaha, A.; Guizani, M.; Mohammadi, M.; Aledhari, M.; Ayyash, M. Internet of Things: A Survey on Enabling Technologies, Protocols, and Applications. IEEE Commun. Surv. Tutorials 2015, 17, 2347–2376.

- Farhan, L.; Kharel, R.; Kaiwartya, O.; Hammoudeh, M.; Adebisi, B. Towards green computing for Internet of things: Energy oriented path and message scheduling approach. Sustain. Cities Soc. 2018, 38, 195–204.

- Mishra, B.; Mishra, B. Evaluating and Analyzing MQTT Brokers with stress testing. In Proceedings of the 12th Conference of PHD Students in Computer Science, CSCS 2020, Szeged, Hungary, 24–26 June 2020; pp. 32–35. Available online: http://www.inf.u-szeged.hu/~cscs/pdf/cscs2020.pdf (accessed on 6 January 2021).

- Mishra, B.; Kertesz, A. The use of MQTT in M2M and IoT systems: A survey. IEEE Access 2020, 8, 201071–201086.

- Pub/Sub: A Google-Scale Messaging Service|Google Cloud. Available online: https://cloud.google.com/pubsub/architecture (accessed on 6 August 2020).

- Liubai, C.W.; Zhenzhu, F. Bayesian Network Based Behavior Prediction Model for Intelligent Location Based Services. In Proceedings of the 2006 2nd IEEE/ASME International Conference on Mechatronics and Embedded Systems and Applications, Beijing, China, 13–16 August 2006; pp. 1–6.

- Jutadhamakorn, P.; Pillavas, T.; Visoottiviseth, V.; Takano, R.; Haga, J.; Kobayashi, D. A scalable and low-cost MQTT broker clustering system. In Proceedings of the 2017 2nd International Conference on Information Technology (INCIT), Nakhonpathom, Thailand, 2–3 November 2017.

- MQTT and CoAP, IoT Protocols|The Eclipse Foundation. Available online: https://www.eclipse.org/community/eclipse_newsletter/2014/february/article2.php (accessed on 6 June 2020).

- Mishra, B. Performance evaluation of MQTT broker servers. In Proceedings of the International Conference on Computational Science and Its Applications; Springer: Cham, Switzerland, 2018; pp. 599–609.

- Eugster, P.T.; Felber, P.A.; Guerraoui, R.; Kermarrec, A.-M. The Many Faces of Publish/Subscribe. ACM Comput. Surv. 2003, 35, 114–131.

- Lee, S.; Kim, H.; Hong, D.; Ju, H. Correlation analysis of MQTT loss and delay according to QoS level. In Proceedings of the International Conference on Information Networking 2013 (ICOIN), Bangkok, Thailand, 28–30 January 2013; pp. 714–717.

- Pipatsakulroj, W.; Visoottiviseth, V.; Takano, R. muMQ: A lightweight and scalable MQTT broker. In Proceedings of the 2017 IEEE International Symposium on Local and Metropolitan Area Networks (LANMAN), Osaka, Japan, 12–14 June 2017.

- Bondi, A.B. Characteristics of scalability and their impact on performance. In Proceedings of the 2nd International Workshop on Software and Performance, Ottawa, ON, Canada, 1 September 2000.

- Detti, A.; Funari, L.; Blefari-Melazzi, N. Sub-linear Scalability of MQTT Clusters in Topic-based Publish-subscribe Applications. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1954–1968.

- Thangavel, D.; Ma, X.; Valera, A.; Tan, H.X.; Tan, C.K. Performance evaluation of MQTT and CoAP via a common middleware. In Proceedings of the 2014 IEEE Ninth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Singapore, 21–24 April 2014; IEEE: Piscataway, NJ, USA, 2014.

- Chen, Y.; Kunz, T. Performance evaluation of IoT protocols under a constrained wireless access network. In Proceedings of the 2016 International Conference on Selected Topics in Mobile & Wireless Networking (MoWNeT), Cairo, Egypt, 11–13 April 2016; IEEE: Piscataway, NJ, USA, 2016.

- Pham, M.L.; Nguyen, T.T.; Tran, M.D. A Benchmarking Tool for Elastic MQTT Brokers in IoT Applications. Int. J. Inf. Commun. Sci. 2019, 4, 70–78.

- Bertrand-Martinez, E.; Dias Feio, P.; Brito Nascimento, V.D.; Kon, F.; Abelém, A. Classification and evaluation of IoT brokers: A methodology. Int. J. Netw. Manag. 2020, 31, e2115.

- Koziolek, H.; Grüner, S.; Rückert, J. A Comparison of MQTT Brokers for Distributed IoT Edge Computing; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12292.

- Documentation|Google Cloud. Available online: https://cloud.google.com/docs (accessed on 24 March 2021).

- Using Network Service Tiers|Google Cloud. Available online: https://cloud.google.com/network-tiers/docs/using-network-service-tiers (accessed on 24 March 2021).

- Machine Types|Compute Engine Documentation|Google Cloud. Available online: https://cloud.google.com/compute/docs/machine-types (accessed on 24 March 2021).

- paho-mqtt PyPI. Available online: https://pypi.org/project/paho-mqtt/ (accessed on 10 August 2020).

- MQTT Blaster. Available online: https://github.com/MQTTBlaster/MQTTBlaster (accessed on 28 June 2021).

- Mosquitto Man Page|Eclipse Mosquitto. Available online: https://mosquitto.org/man/mosquitto-8.html (accessed on 10 August 2020).

- MQTT Broker Developer Documentation-MQTT Broker-Bevywise. Available online: https://www.bevywise.com/mqtt-broker/developer-guide.html (accessed on 10 August 2020).

- ActiveMQ Classic. Available online: https://activemq.apache.org/components/classic/ (accessed on 10 August 2020).

- HiveMQ Community Edition 2020.3 Is Released. Available online: https://www.hivemq.com/blog/hivemq-ce-2020-3-released/ (accessed on 10 August 2020).

- Getting Started—VerneMQ. Available online: https://docs.vernemq.com/getting-started (accessed on 10 August 2020).

- MQTT Broker for IoT in 5G Era|EMQ. Available online: https://www.EMQX.io/ (accessed on 10 August 2020).

- Understanding CPU Usage in Linux|OpsDash. Available online: https://www.opsdash.com/blog/cpu-usage-linux.html/ (accessed on 3 September 2021).

- System CPU Utilization Workspace|IBM. Available online: https://www.ibm.com/docs/en/om-zos/5.6.0?topic=workspaces-system-cpu-utilization-workspace/ (accessed on 3 September 2021).

| Paper | Publication Year | Aim |
|---|---|---|
| Thangavel, D., et al. [24] | 2014 | Testing the performance of the MQTT and CoAP protocols in terms of end-to-end delay and bandwidth consumption |
| Chen, Y., & Kunz, T. [25] | 2016 | Evaluating the performance of MQTT, CoAP, DDS and a custom UDP-based protocol in a medical test environment |
| Mishra, B. [18] | 2018 | Comparing the performance of MQTT brokers under basic domestic use conditions |
| Pham, M. L., Nguyen, T. T., et al. [26] | 2019 | Introducing an MQTT benchmarking tool named MQTTBrokerBench |
| Bertrand-Martinez, E., et al. [27] | 2020 | Proposing a methodology for the classification and evaluation of IoT brokers |
| Koziolek, H., Grüner, S., et al. [28] | 2020 | Comparing the performance of three distributed MQTT brokers |
| Our work | 2020 | Comparing and analyzing the performance of MQTT brokers by putting them under stress test, taking both scalable and non-scalable brokers into consideration |

| HW/SW Details | Publisher | MQTT Broker | Subscriber |
|---|---|---|---|
| CPU | 64-bit AMD Ryzen 5 2500U @ 3.6 GHz | 64-bit Intel(R) Core(TM) i5-7260U CPU @ 2.20 GHz | Intel(R) Pentium(R) CPU 2020M @ 2.40 GHz |
| Memory | 8 GB, SODIMM DDR4 Synchronous Unbuffered (Unregistered) 2400 MHz (0.4 ns) | 8 GB, SODIMM DDR4 Synchronous Unbuffered (Unregistered) 2400 MHz (0.4 ns) | 2 GB, SODIMM DDR3 Synchronous 1600 MHz (0.6 ns) |
| Network | 1 Gbit/s, RTL8111/8168/8411 PCI Express Gigabit Ethernet Controller | 1 Gbit/s, Intel Ethernet Connection (4) I219-V | 1 Gbit/s, AR8161 Gigabit Ethernet |
| HDD | WDC WD10SPZX-24Z (5400 rpm), 1 TB, connected over SATA 6 Gb/s interface | WDC WD5000LPCX-2 (5400 rpm), 500 GB, connected over SATA 6 Gb/s interface | HGST HTS545050A7 |
| OS, Kernel | Elementary OS 5.1.4, Kernel 4.15.0-74-generic | Elementary OS 5.1.4, Kernel 4.15.0-74-generic | Linux Mint 19, Kernel 4.15.0-20-generic |

| HW/SW Details | Publisher/Subscriber/Server |
|---|---|
| Machine type | c2-standard-8 [31] |
| CPU | 8 vCPUs |
| Memory | 32 GB |
| Disk size | local 30 GB SSD |
| Disk type | Standard persistent disk |
| Network Tier | Premium |
| OS, Kernel | 18.04.1-Ubuntu SMP x86_64 GNU/Linux, 5.4.0-1038-gcp |

| MQTT Brokers | Mosquitto | Bevywise MQTT Route | ActiveMQ | HiveMQ CE | VerneMQ | EMQ X |
|---|---|---|---|---|---|---|
| Open source | Yes | No | Yes | Yes | Yes | Yes |
| Written in (primary programming language) | C | C, Python | Java | Java | Erlang | Erlang |
| MQTT Version | 3.1.1, 5.0 | 3.x, 5.0 | 3.1 | 3.x, 5.1 | 3.x, 5.0 | 3.1.1 |
| QoS Support | 0, 1, 2 | 0, 1, 2 | 0, 1, 2 | 0, 1, 2 | 0, 1, 2 | 0, 1, 2 |
| Operating System Support | Linux, Mac, Windows | Windows, Windows Server, Linux, Mac and Raspberry Pi | Windows, Unix/Linux/Cygwin | Linux, Mac, Windows | Linux, Mac OS X | Linux, Mac, Windows, BSD |

| Parameter | Value |
|---|---|
| Number of topics | 3 (via 3 publisher threads) |
| Number of publishers | 3 |
| Number of subscribers | 15 (subscribing to all 3 topics) |
| Payload | 64 bytes |
| Topic names used to publish a large number of messages | ‘topic/0’, ‘topic/1’, ‘topic/2’ |
| Topic used to calculate latency | ‘topic/latency’ |
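The evaluation parameters listed above can be captured in a small configuration object. The following is a minimal sketch, not the authors' test harness: the class name `Workload`, its method names, and the filler byte used for the payload are all assumptions made for illustration. Only the counts, the payload size, and the topic names come from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical container for the stress-test parameters in the table."""
    publishers: int = 3            # one publisher thread per load topic
    subscribers: int = 15          # each subscribes to all load topics
    payload_bytes: int = 64
    latency_topic: str = "topic/latency"

    def load_topics(self):
        # Topic names follow the 'topic/<index>' pattern given in the table.
        return [f"topic/{i}" for i in range(self.publishers)]

    def payload(self):
        # Assumption: the content is arbitrary filler; only the size is specified.
        return b"x" * self.payload_bytes

    def deliveries_per_round(self):
        # One message on every load topic reaches every subscriber once.
        return len(self.load_topics()) * self.subscribers


workload = Workload()
print(workload.load_topics())
print(len(workload.payload()))
print(workload.deliveries_per_round())
```

Under these assumptions, a single round of one message per publisher results in 45 deliveries by the broker, which is why the subscriber count matters as much as the publisher count when interpreting the message rates.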

| QoS | QoS0 | QoS0 | QoS1 | QoS1 | QoS2 | QoS2 |
|---|---|---|---|---|---|---|
| Observations/Broker (non-scalable) | Mosquitto 1.4.15 | Bevywise MQTT Route 2.0 | Mosquitto 1.4.15 | Bevywise MQTT Route 2.0 | Mosquitto 1.4.15 | Bevywise MQTT Route 2.0 |
| Peak message rate (msgs/sec) | 32,016.00 | 32,839.00 | 9488.00 | 3542.49 | 6585.00 | 2649.00 |
| Average process CPU usage (%) at above message rate | 84.29 | 97.93 | 89.00 | 95.79 | 96.73 | 98.20 |
| Projected message processing rate at 100% process CPU usage (msgs/sec) | 37,983.15 | 33,533.14 | 10,660.67 | 3698.18 | 6807.61 | 2697.56 |
| Average latency (in ms) | 1.65 | 1.13 | 0.74 | 0.96 | 1.38 | 1.53 |
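The projected rates in the table are consistent with a simple linear extrapolation: the peak message rate divided by the fraction of CPU in use. The sketch below illustrates that arithmetic on three columns of the table. The function name `projected_rate` and the dictionary layout are assumptions for illustration, and the linear scaling is inferred from the tabulated numbers rather than quoted from the text.

```python
def projected_rate(peak_rate, cpu_percent):
    """Linearly extrapolate a measured peak rate (msgs/sec) to 100% CPU usage."""
    if cpu_percent <= 0:
        raise ValueError("cpu_percent must be positive")
    return peak_rate * 100.0 / cpu_percent


# (broker, QoS) -> (peak msgs/sec, average process CPU %), taken from the table
measurements = {
    ("Mosquitto 1.4.15", "QoS0"): (32016.00, 84.29),
    ("Bevywise MQTT Route 2.0", "QoS0"): (32839.00, 97.93),
    ("Mosquitto 1.4.15", "QoS1"): (9488.00, 89.00),
}

projections = {
    key: round(projected_rate(peak, cpu), 2)
    for key, (peak, cpu) in measurements.items()
}

for (broker, qos), rate in projections.items():
    print(f"{broker} {qos}: {rate} msgs/sec")
```

The computed values should agree with the corresponding table entries up to rounding. Note that this projection assumes throughput scales linearly with CPU usage, which may not hold once a broker becomes limited by I/O or memory.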

| QoS | QoS0 | QoS1 | QoS2 | |||

|---|---|---|---|---|---|---|

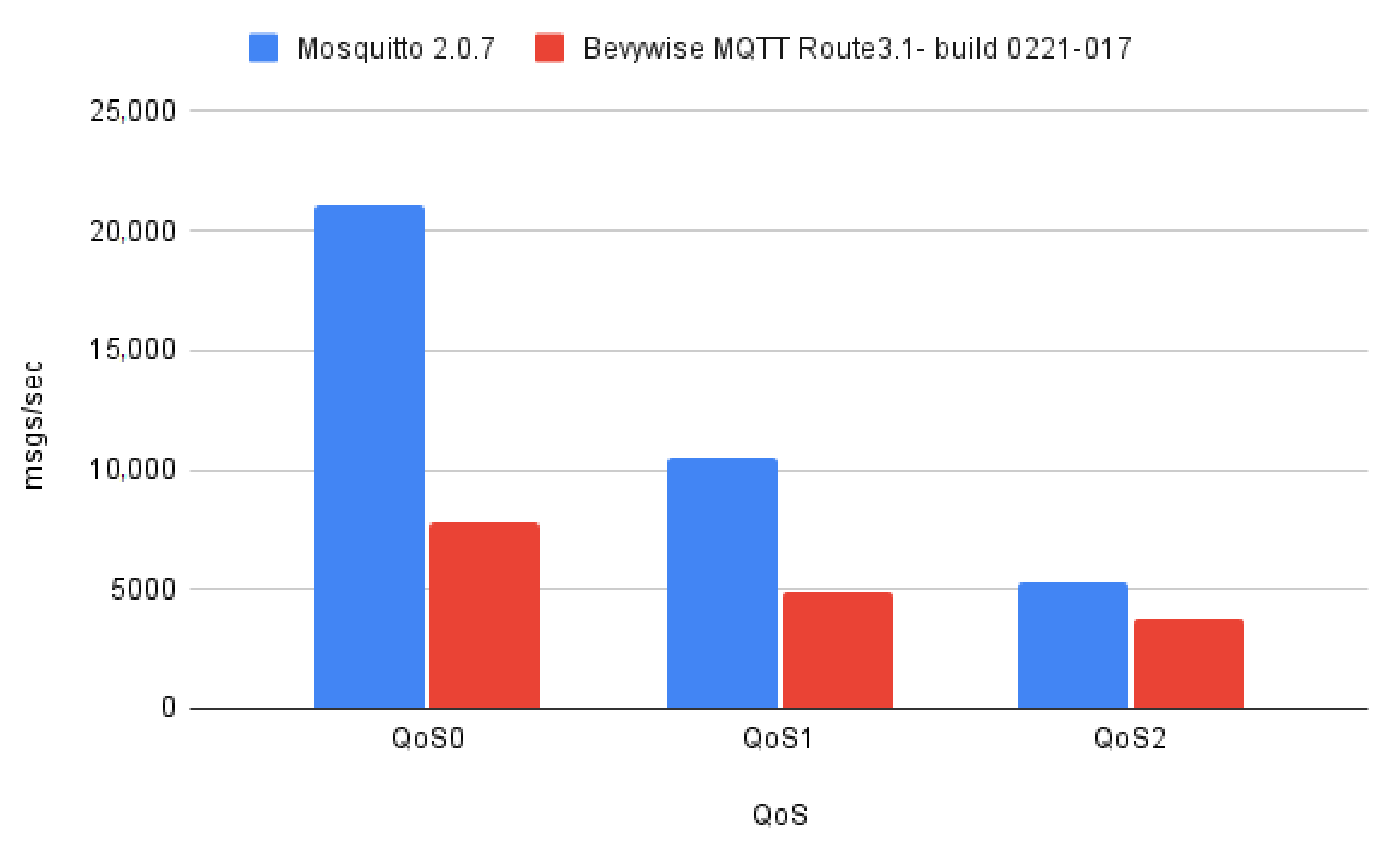

| Observations/Broker (non-scalable) | Mosquitto 2.0.7 | Bevywise MQTT Route 3.1- build 0221-017 | Mosquitto 2.0.7 | Bevywise MQTT Route 3.1- build 0221-017 | Mosquitto 2.0.7 | Bevywise MQTT Route 3.1- build 0221-017 |

| Peak message rate (msgs/sec) | 17,946.00 | 7815.00 | 8927.00 | 4861.00 | 4423.00 | 3688.00 |

| Average process CPU usage(%) at above message rate | 85.12 | 100.31 | 84.70 | 100.34 | 83.24 | 97.91 |

| Projected message processing rate at 100% process CPU usage. | 21083.18 | 7790.85 | 10539.55 | 4844.53 | 5313.55 | 3766.72 |

| Average latency (in ms) | 0.47 | 0.89 | 0.50 | 0.69 | 0.98 | 1.30 |

| QoS | QoS0 | QoS1 | QoS2 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

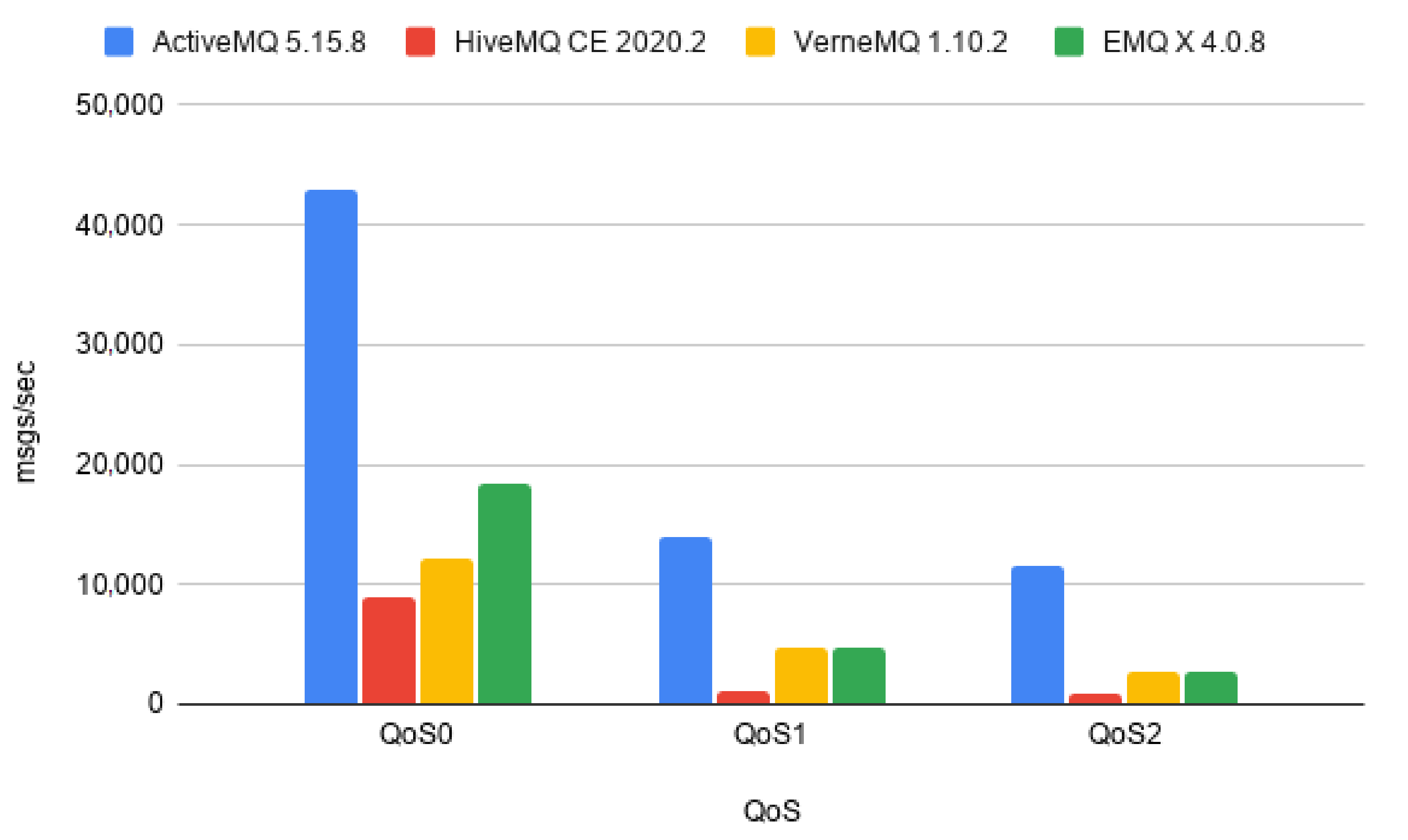

| Observations/Broker (scalable) | ActiveMQ 5.15.8 | HiveMQ CE 2020.2 | VerneMQ 1.10.2 | EMQ X 4.0.8 | ActiveMQ 5.15.8 | HiveMQ CE 2020.2 | VerneMQ 1.10.2 | EMQ X 4.0.8 | ActiveMQ 5.15.8 | HiveMQ CE 2020.2 | VerneMQ 1.10.2 | EMQ X 4.0.8 |

| Peak message rate (msgs/sec) | 39,479.00 | 8748.00 | 11,760.00 | 18,034.00 | 12,873.00 | 708.00 | 4655.00 | 4633.41 | 10,508.00 | 579.00 | 2614.00 | 2627.31 |

| Average system CPU usage(%) at above message rate | 91.78 | 97.93 | 96.51 | 98.71 | 92.56 | 63.44 | 97.34 | 96.82 | 90.91 | 64.28 | 96.79 | 95.54 |

| Projected message processing rate at 100% system CPU usage | 43,014.82 | 8932.91 | 12,185.27 | 18,269.68 | 13,907.74 | 1116.02 | 4782.21 | 4785.59 | 11,558.68 | 900.75 | 2700.69 | 2749.96 |

| Average latency (in ms) | 2.33 | 7.69 | 1.53 | 1.34 | 1.38 | 58.48 | 1.98 | 0.87 | 2.14 | 3.66 | 2.89 | 3.68 |

| QoS | QoS0 | QoS1 | QoS2 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

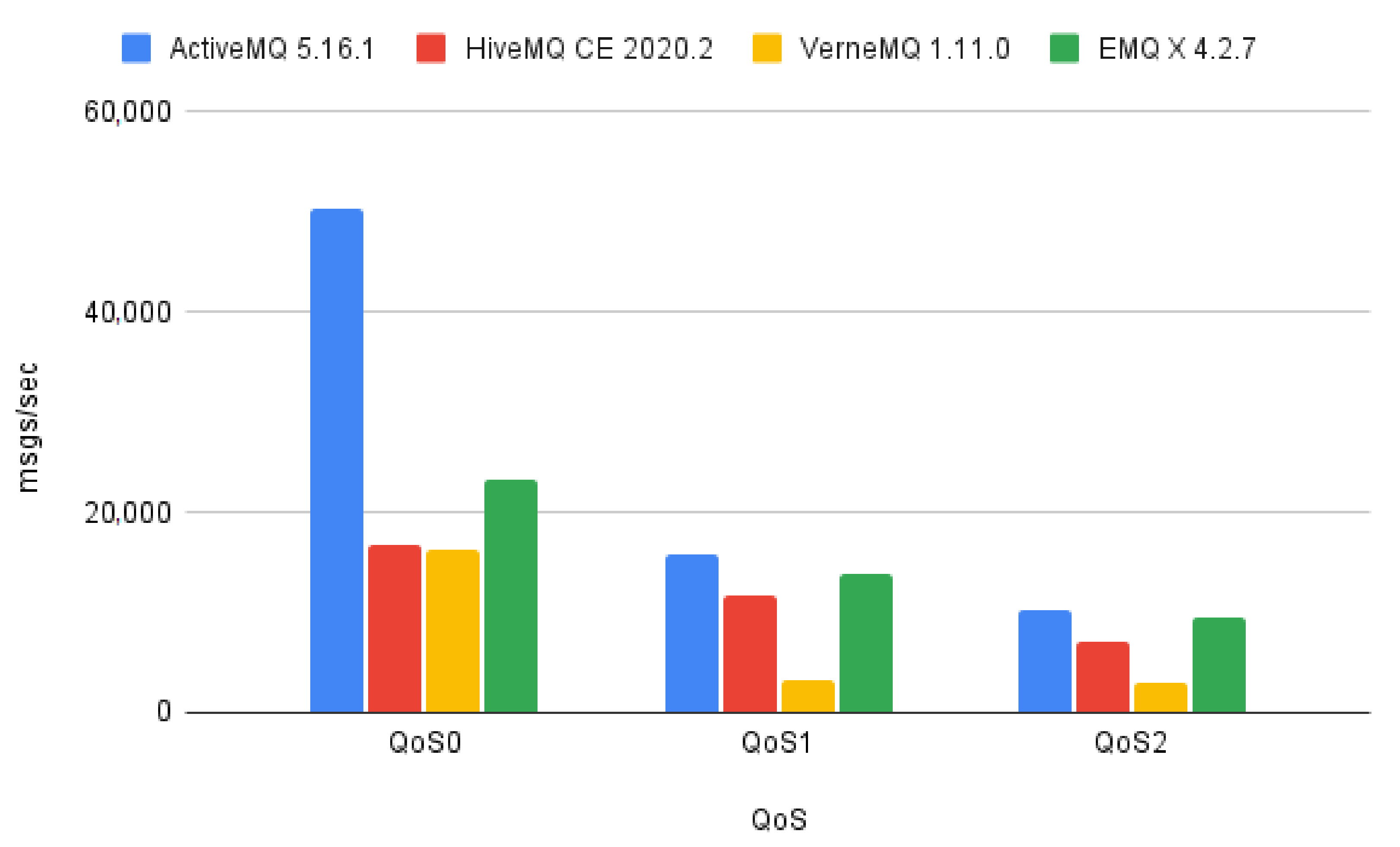

| Observations/Broker (non-scalable) | ActiveMQ 5.16.1 | HiveMQ CE 2020.2 | VerneMQ 1.11.0 | EMQX Broker 4.2.7 | ActiveMQ 5.16.1 | HiveMQ CE 2020.2 | VerneMQ 1.11.0 | EMQX Broker 4.2.7 | ActiveMQ 5.16.1 | HiveMQ CE 2020.2 | VerneMQ 1.11.0 | EMQX Broker 4.2.7 |

| Peak message rate (msgs/sec) | 41,697.00 | 13,338.00 | 14,332.00 | 17,838.00 | 9663.00 | 8188.00 | 2622.00 | 11,054.00 | 6196.00 | 4887.00 | 2240.00 | 7342 |

| Average process CPU usage (%) at above message rate | 82.77 | 80.09 | 88.29 | 76.83 | 60.73 | 70.43 | 82.16 | 79.28 | 59.97 | 68.32 | 72.70 | 76.84 |

| Projected message rate at 100% system CPU usage. | 50,376.95 | 16,653.76 | 16,232.87 | 23,217.49 | 15,911.41 | 11,625.73 | 3191.33 | 13,942.99 | 10,331.83 | 7153.10 | 3081.16 | 9554.92 |

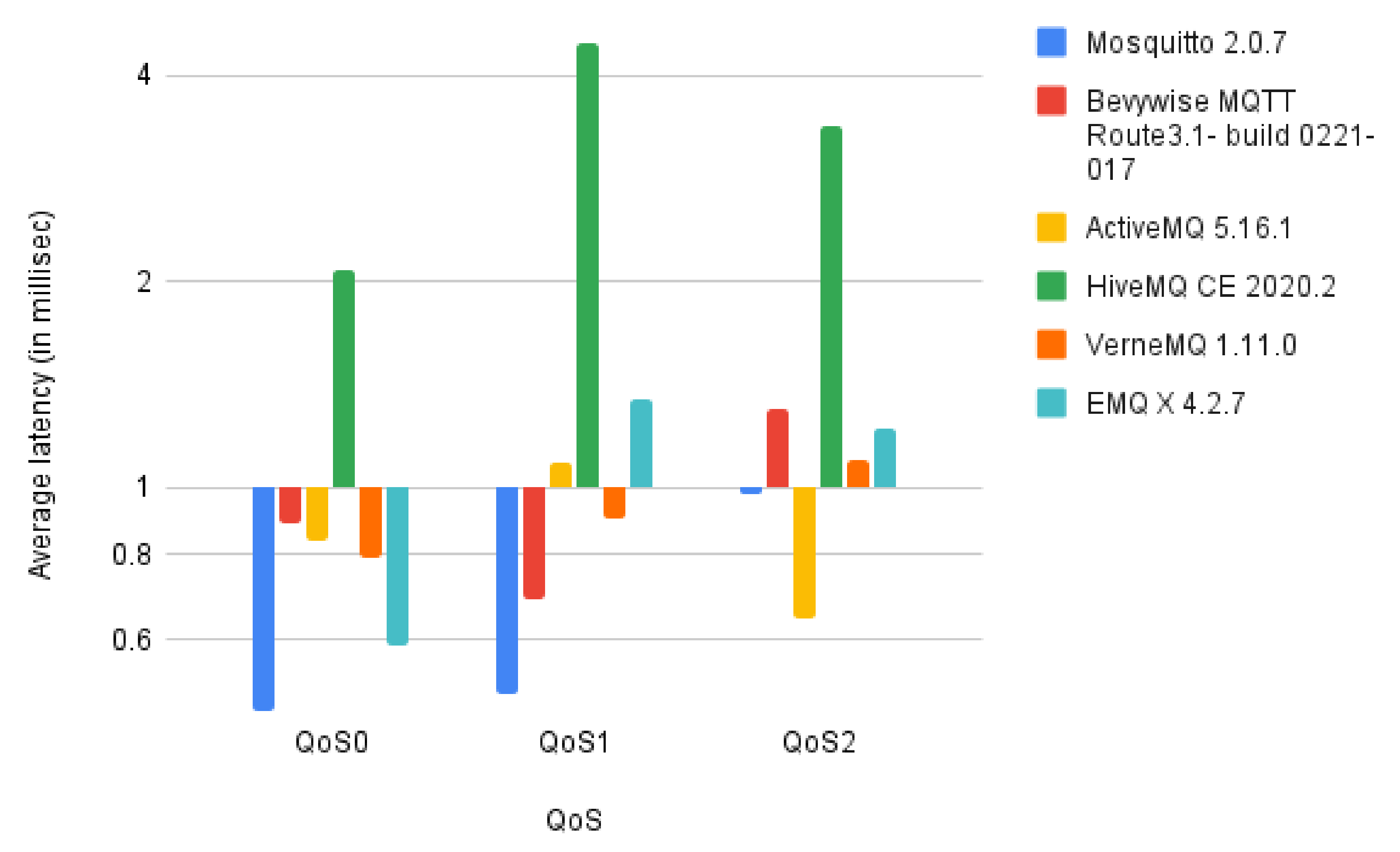

| Average latency (in ms) | 0.83 | 2.07 | 0.79 | 0.59 | 1.09 | 4.48 | 0.90 | 1.35 | 0.64 | 3.38 | 1.10 | 1.22 |

| Broker | Average latency at QoS0 (ms) | Average latency at QoS1 (ms) | Average latency at QoS2 (ms) |
|---|---|---|---|
| Mosquitto 1.4.15 | 1.65 | 0.74 | 1.38 |
| Bevywise MQTT Route 2.0 | 1.13 | 0.96 | 1.53 |
| ActiveMQ 5.15.8 | 2.33 | 1.38 | 2.14 |
| HiveMQ CE 2020.2 | 7.69 | 58.48 | 3.66 |
| VerneMQ 1.10.2 | 1.53 | 1.98 | 2.89 |
| EMQ X 4.0.8 | 1.34 | 0.87 | 3.68 |

| Broker | Average latency at QoS0 (ms) | Average latency at QoS1 (ms) | Average latency at QoS2 (ms) |
|---|---|---|---|
| Mosquitto 2.0.7 | 0.47 | 0.50 | 0.98 |
| Bevywise MQTT Route 3.1- build 0221-017 | 0.89 | 0.69 | 1.30 |
| ActiveMQ 5.16.1 | 0.83 | 1.09 | 0.64 |
| HiveMQ CE 2020.2 | 2.07 | 4.48 | 3.38 |
| VerneMQ 1.11.0 | 0.79 | 0.90 | 1.10 |
| EMQ X 4.2.7 | 0.59 | 1.35 | 1.22 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mishra, B.; Mishra, B.; Kertesz, A. Stress-Testing MQTT Brokers: A Comparative Analysis of Performance Measurements. Energies 2021, 14, 5817. https://doi.org/10.3390/en14185817
