Deep Neural Network Approach for Prediction of Heating Energy Consumption in Old Houses

Abstract

1. Introduction

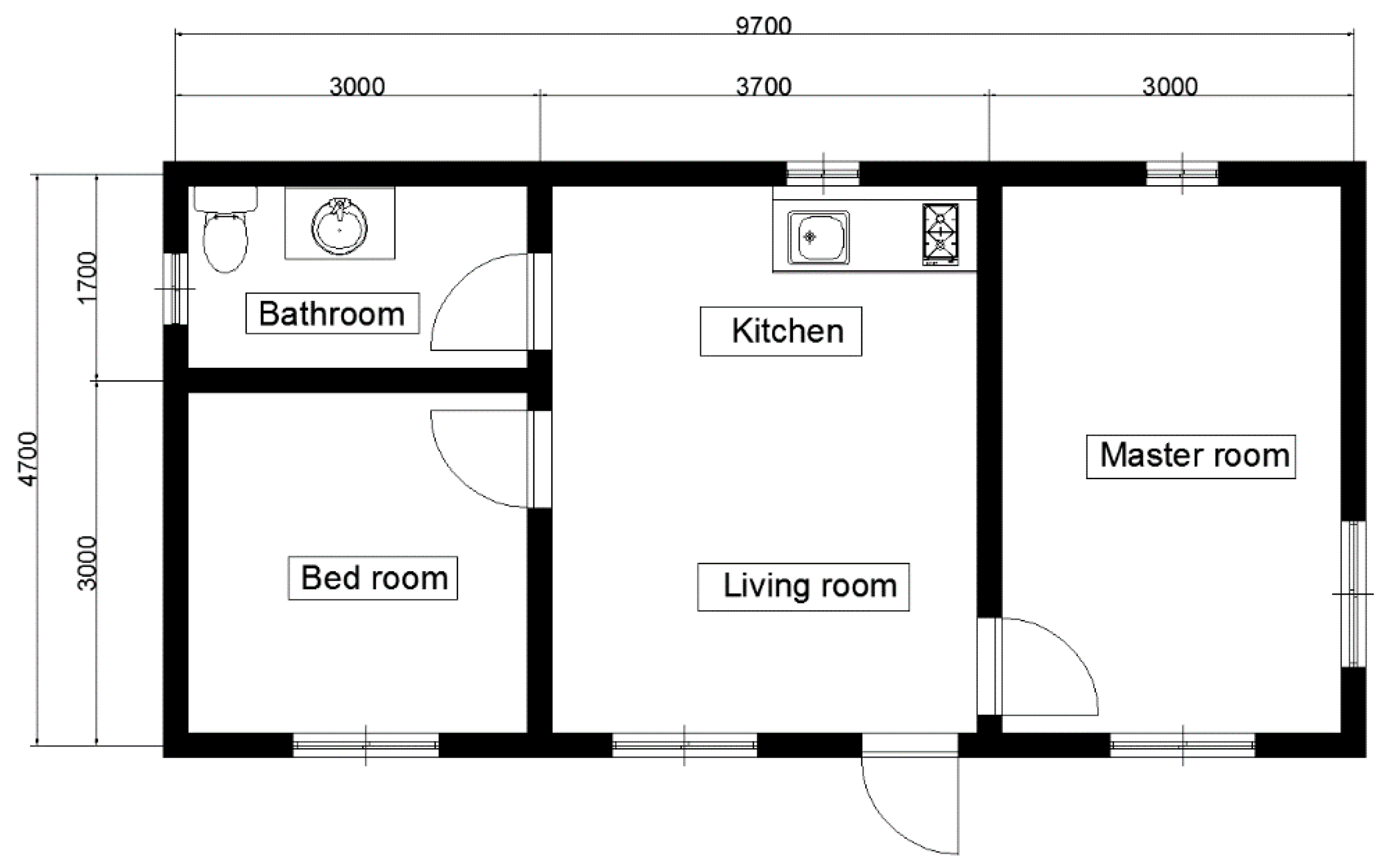

2. Characteristics of Old Houses

3. Input Data and Configuration of DNN Model

3.1. Preparation of Input Data

- Error elimination: inaccurate records were removed from the collected input data. For example, entries whose address or meteorological data did not match, or contradicted, the actual data for the old detached houses in this study were removed.

- Missing data elimination: collected data may contain missing values, and statistical analysis of such datasets can give unreliable results. Therefore, the data were checked for missing values, and each record containing missing values was either removed or replaced with one containing the correct values.

- Outlier elimination: in statistics, an outlier is a data point that differs significantly from other observations. An outlier may be due to variability in the measurement, or it may indicate experimental error; the latter are sometimes excluded from the data set. Outliers can seriously distort analytical results. A value was regarded as an outlier when it fell in the upper or lower 2.5% tail of the normal distribution (above 97.5% or below 2.5%). This study removed outliers using the Mahalanobis distance method [26]. The Mahalanobis distance (MD) measures the distance between a point and the mean of a multivariate distribution, scaled by the covariance of the data. The MD calculation and the p-value test on the resulting Mahalanobis score (probability) were conducted using IBM SPSS Statistics software. Through this process, 16,158 records were retained out of the 17,008 collected.

- Normalization: variables measured on different scales are rescaled to a common range between 0 and 1. In this study, data normalization was performed using the following Equation (1):
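The preparation steps listed above can be sketched in a few lines of Python. This is a minimal illustration, not the study's procedure: the study used IBM SPSS, Equation (1) is not reproduced in this text, and min-max scaling is assumed here because it is the usual way to map data onto the range 0 to 1. The function names, the restriction to two variables, and the `alpha=0.025` cutoff (chosen to mirror the 2.5% tail mentioned above) are all assumptions. The sample records are a few (heating space area, energy consumption) pairs in the style of the appendix table, with one area blanked out purely for illustration.

```python
import math

def drop_missing(rows):
    """Missing-data elimination: keep rows in which no field is None."""
    return [row for row in rows if all(v is not None for v in row)]

def mahalanobis_sq_2d(rows):
    """Squared Mahalanobis distance of each 2-variable row from the sample mean."""
    n = len(rows)
    mx = sum(r[0] for r in rows) / n
    my = sum(r[1] for r in rows) / n
    # Entries of the sample covariance matrix (n - 1 denominator).
    sxx = sum((r[0] - mx) ** 2 for r in rows) / (n - 1)
    syy = sum((r[1] - my) ** 2 for r in rows) / (n - 1)
    sxy = sum((r[0] - mx) * (r[1] - my) for r in rows) / (n - 1)
    det = sxx * syy - sxy ** 2
    distances = []
    for x, y in rows:
        dx, dy = x - mx, y - my
        # d^T S^-1 d, using the closed-form inverse of a 2x2 matrix.
        distances.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return distances

def remove_outliers(rows, alpha=0.025):
    """Drop rows whose chi-square p-value (2 degrees of freedom) is below alpha."""
    d2 = mahalanobis_sq_2d(rows)
    # With 2 degrees of freedom the chi-square survival function is exp(-d2 / 2).
    return [r for r, d in zip(rows, d2) if math.exp(-d / 2) >= alpha]

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

# Illustrative (heating space area [m2], energy consumption [kWh/a]) records;
# the None is injected here to demonstrate missing-data removal.
records = [(16.0, 2524), (50.0, 6172), (None, 3553), (37.0, 10489), (45.0, 6165)]
clean = drop_missing(records)
scaled_area = min_max_scale([area for area, _ in clean])
```

With more than two variables the same idea applies, but the covariance matrix has to be inverted numerically and the p-value taken from a chi-square distribution with as many degrees of freedom as there are variables.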

3.2. Neural Network Model

3.3. Modeling Approach

- Correlation analysis: the Pearson correlation coefficient between each input variable and the target variable was calculated using the IBM Statistical Package for the Social Sciences (SPSS) [32]. Input variables that correlated strongly with the target variable were selected as the key input variables.

- Prediction accuracy analysis using an initial DNN model: a stepwise method was applied. Candidate combination cases were created from the key input variables selected in the correlation analysis above, and the coefficient of determination (R2) was calculated for each case. The final key input variables were determined by stepwise exclusion of the combination cases with the lowest coefficient of determination.
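The two steps above can be illustrated with a short Python sketch. It is a sketch under stated assumptions rather than the study's implementation (which used SPSS and a DNN model): the function names and the toy data are hypothetical, and ranking by the absolute value of the coefficient is an assumption that appears consistent with the rank column of the correlation table. The case table lists 16 combinations, which is what results from keeping eight variables fixed and toggling four optional ones.

```python
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def rank_by_correlation(features, target):
    """Return (name, r) pairs sorted by |r|, strongest first."""
    scored = [(name, pearson(values, target)) for name, values in features.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)

def combination_cases(fixed, optional):
    """Candidate input sets: the fixed variables plus every subset of the optional ones."""
    cases = []
    for k in range(len(optional) + 1):
        for subset in combinations(optional, k):
            cases.append(list(fixed) + list(subset))
    return cases

# Hypothetical toy data, for illustration only (not from the study).
features = {
    "roof_u_value": [0.5, 0.6, 1.0, 1.5, 1.5],
    "year_of_completion": [1995, 1990, 1980, 1970, 1964],
    "ach": [1.0, 1.1, 0.9, 1.0, 1.05],
}
energy = [3000, 5500, 9000, 12000, 15000]
ranking = rank_by_correlation(features, energy)
cases = combination_cases(["heating_area", "wall_area"], ["window_area", "door_area"])
```

Each entry of `cases` would then be used to train the initial model and record its R2, so that the weakest combinations can be excluded.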

4. Results and Discussion

4.1. Selection of Key Input Variables

4.2. Optimal DNN Model

4.3. Summary and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

1. Korean Statistical Information Service (KSIS). Population and Housing Census. 2018. Available online: http://kosis.kr/index/index.do (accessed on 4 September 2020).

2. Bourdeau, M.; Zhai, X.Q.; Nefzaoui, E.; Guo, X.; Chatellier, P. Modeling and forecasting building energy consumption: A review of data-driven techniques. Sustain. Cities Soc. 2019, 48, 101533.

3. O’Neill, Z.; Narayanan, S.; Brahme, R. Model-based thermal load estimation in buildings. Proc. Simbuild 2010, 4, 474–481.

4. Naganathan, H.; Chong, W.O.; Chen, X. Building energy modeling (BEM) using clustering algorithms and semi-supervised machine learning approaches. Autom. Constr. 2019, 72, 187–194.

5. Ryan, E.M.; Sanquist, T.F. Validation of building energy modeling tools under idealized and realistic conditions. Energy Build. 2012, 47, 375–382.

6. Esen, H.; Escen, M.; Ozsolak, O. Modelling and experimental performance analysis of solar-assisted ground source heat pump system. J. Exp. Theor. Artif. Intell. 2017, 29, 1–17.

7. Kang, I. Development of ANN (Artificial Neural Network) Based Predictive Model for Energy Consumption of HVAC System in Office Building. Master’s Thesis, Chung-Ang University, Seoul, Korea, 2017.

8. Robinson, C.; Dilkina, B.; Hubbs, J.; Zhang, W.; Guhathakurta, S.; Brown, M.A.; Pendyala, R.M. Machine learning approaches for estimating commercial building energy consumption. Appl. Energy 2017, 208, 889–904.

9. Ahmad, T.; Chen, H.; Huang, R.; Yabin, G.; Wang, J.; Shair, J.; Akram, H.M.A.; Mohsan, S.A.H.; Kazime, M. Supervised based machine learning models for short, medium and long-term energy prediction in distinct building environment. Energy 2018, 158, 17–32.

10. Chou, J.-S.; Tran, D.-S. Forecasting energy consumption time series using machine learning techniques based on usage patterns of residential householders. Energy 2018, 165 Pt B, 709–726.

11. D’Amico, A.; Ciulla, G.; Traverso, M.; Brano, V.L.; Palumbo, E. Artificial Neural Networks to assess energy and environmental performance of buildings: An Italian case study. J. Clean. Prod. 2019, 239, 117993.

12. Ciulla, G.; D’Amico, A.; Brano, V.L.; Traverso, M. Application of optimized artificial intelligence algorithm to evaluate the heating energy demand of non-residential buildings at European level. Energy 2019, 176, 380–391.

13. Katsatos, A.L.; Moustris, K.P. Application of Artificial Neuron Networks as energy consumption forecasting tool in the building of Regulatory Authority of Energy, Athens, Greece. Energy Procedia 2019, 157, 851–861.

14. Satrio, P.; Mahlia, T.M.I.; Giannetti, N.; Saito, K. Optimization of HVAC system energy consumption in a building using artificial neural network and multi-objective genetic algorithm. Sustain. Energy Technol. Assess. 2019, 35, 48–57.

15. Deb, C.; Lee, S.E.; Santamouris, M. Using artificial neural networks to assess HVAC related energy saving in retrofitted office buildings. Sol. Energy 2018, 163, 32–44.

16. González, P.A.; Zamarreño, J.M. Prediction of hourly energy consumption in buildings based on a feedback artificial neural network. Energy Build. 2005, 37, 595–601.

17. Huang, Y.; Yuan, Y.; Chen, H.; Wang, J.; Guo, Y.; Ahmad, T. A novel energy demand prediction strategy for residential buildings based on ensemble learning. Energy Procedia 2019, 158, 3411–3416.

18. Biswas, M.A.R.; Robinson, M.D.; Fumo, N. Prediction of residential building energy consumption: A neural network approach. Energy 2016, 117 Pt 1, 84–92.

19. Tardioli, G.; Kerrigan, R.; Oates, M.; O’Donnell, J.; Finn, D. Data Driven Approaches for Prediction of Building Energy Consumption at Urban Level. Energy Procedia 2015, 78, 3378–3383.

20. Mohandes, S.R.; Zhang, X.; Mahdiyar, A. A comprehensive review on the application of artificial neural networks in building energy analysis. Neurocomputing 2019, 340, 55–75.

21. Luo, X.J.; Oyedele, L.O.; Ajayi, A.O.; Akinade, O.O.; Owolabi, H.A.; Ahmed, A. Feature extraction and genetic algorithm enhanced adaptive deep neural network for energy consumption prediction in buildings. Renew. Sustain. Energy Rev. 2020, 131, 109980.

22. Korea Institute of Energy Research (KIER). Low-Income Household Energy Efficiency Improvement Project Report; Korea Institute of Energy Research (KIER): Daejeon, Korea, 2018.

23. Kim, J. Heating Energy Baseline and Saving Model Development of Detached Houses for Low-Income Households. Master’s Thesis, University of Science and Technology, Daejeon, Korea, 2015.

24. International Organization for Standardization (ISO). Energy Performance of Buildings—Energy Needs for Heating and Cooling, Internal Temperatures and Sensible and Latent Heat Loads—Part 1: Calculation Procedures; Standard No. 52016-1; ISO: Geneva, Switzerland, 2017.

25. Lee, S.-J.; Kim, J.; Jeong, H.; Yoo, S.; Lee, S. Heating Energy Efficiency Improvement Analysis of Low-income Houses. J. KIAEBS 2017, 11, 212–218.

26. Hazewinkel, M. Encyclopedia of Mathematics; Springer: Berlin, Germany, 2001.

27. Aggarwal, C.C. Neural Networks and Deep Learning: A Textbook, 1st ed.; Springer: Berlin, Germany, 2018.

28. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; The MIT Press: Cambridge, MA, USA, 2016.

29. Ye, Z.; Kim, M.K. Predicting electricity consumption in a building using an optimized back-propagation and Levenberg-Marquardt back-propagation neural network: Case study of a shopping mall in China. Sustain. Cities Soc. 2018, 42, 176–183.

30. Hinton, P.R.; McMurray, I.; Brownlow, C. SPSS Explained, 2nd ed.; Routledge: London, UK, 2014.

31. Ahmad, M.W.; Mourshed, M.; Rezgui, Y. Trees vs Neurons: Comparison between random forest and ANN for high-resolution prediction of building energy consumption. Energy Build. 2017, 147, 77–89.

32. IBM. IBM SPSS Software. Available online: https://www.ibm.com/kr-ko/analytics/spss-statistics-software (accessed on 4 September 2020).

33. Mathworks. Matlab Software. Available online: https://www.mathworks.com/products/matlab.html (accessed on 4 September 2020).

34. Wang, J.C. A study on the energy performance of hotel buildings in Taiwan. Energy Build. 2012, 49, 268–275.

35. Reed, R.; Marks II, R.J. Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks; A Bradford Book: Cambridge, MA, USA, 1999.

36. Department of Energy (DOE). EnergyPlus Software. Available online: https://energyplus.net/ (accessed on 4 September 2020).

37. Department of Energy (DOE). M&V Guidelines: Measurement and Verification for Federal Energy Projects, Version 3.0; Department of Energy (DOE): Washington, DC, USA, 2008; pp. 21–22.

| Number | City | Building Orientation | Structure | Region | Year of Completion | Heating Space Area | Wall Area | Window Area | Door Area | Roof Area | Floor Area | Wall U-Value | Window U-Value | Door U-Value | Roof U-Value | Floor U-Value | ACH | Boiler | Efficiency | Heating Energy Consumption |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Daegu | East | Heavy | Gyeongsang | 25-Jan-90 | 16.30 | 34.22 | 0.86 | 1.44 | 16.32 | 16.32 | 0.76 | 3.90 | 2.70 | 0.52 | 0.76 | 1.00 | Oil | 90.00 | 2985 |

| 2 | Busan | South | Heavy | Busan | 01-Jan-70 | 40.00 | 56.88 | 4.35 | 1.89 | 0.00 | 40.08 | 1.54 | 3.90 | 2.70 | 0.00 | 1.54 | 1.00 | Gas | 83.50 | 6823 |

| 3 | Wando | South | Heavy | Jeollanam-do | 07-May-64 | 60.00 | 53.88 | 0.24 | 2.88 | 60.00 | 60.00 | 1.54 | 5.29 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,975 |

| 4 | Wando | South | Heavy | Jeollanam-do | 03-Jul-80 | 60.00 | 76.81 | 3.08 | 7.28 | 60.00 | 60.00 | 1.05 | 5.30 | 2.40 | 1.05 | 1.05 | 1.00 | Oil | 80.00 | 12,701 |

| 5 | Wando | South | Heavy | Jeollanam-do | 08-Aug-70 | 53.00 | 54.98 | 5.24 | 4.00 | 53.00 | 53.00 | 1.54 | 5.30 | 2.50 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,486 |

| 6 | Wando | South | Heavy | Jeollanam-do | 11-Sep-65 | 51.00 | 57.54 | 3.08 | 5.82 | 51.00 | 51.00 | 1.54 | 5.30 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,243 |

| 7 | Wando | South | Heavy | Jeollanam-do | 29-May-85 | 16.00 | 33.37 | 1.54 | 1.89 | 16.00 | 16.00 | 0.58 | 2.80 | 2.70 | 0.58 | 0.58 | 1.00 | Oil | 80.00 | 2524 |

| 8 | Wando | East | Heavy | Jeollanam-do | 07-Jul-87 | 50.00 | 74.38 | 3.79 | 3.46 | 50.00 | 50.00 | 0.58 | 4.47 | 2.58 | 0.58 | 0.58 | 1.00 | Oil | 80.00 | 6172 |

| 9 | Wando | North | Heavy | Jeollanam-do | 07-Jul-83 | 25.00 | 30.40 | 5.44 | 1.36 | 25.00 | 25.00 | 0.58 | 6.60 | 2.40 | 0.58 | 1.16 | 1.00 | Oil | 80.00 | 3553 |

| 10 | Wando | South | Heavy | Jeollanam-do | 08-Jun-68 | 37.00 | 49.98 | 8.50 | 1.36 | 37.00 | 37.00 | 1.54 | 5.30 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 10,489 |

| 11 | Wando | North | Heavy | Jeollanam-do | 06-Apr-85 | 50.00 | 69.73 | 5.88 | 1.89 | 50.00 | 50.00 | 0.58 | 2.80 | 2.70 | 0.58 | 0.58 | 1.00 | Oil | 80.00 | 5502 |

| 12 | Wando | North-East | Heavy | Jeollanam-do | 02-Apr-95 | 21.00 | 41.28 | 2.42 | 0.00 | 21.00 | 21.00 | 0.76 | 6.60 | 0.00 | 0.52 | 0.76 | 1.00 | Oil | 80.00 | 3148 |

| 13 | Wando | South | Heavy | Jeollanam-do | 07-May-03 | 53.00 | 67.66 | 8.00 | 3.78 | 53.00 | 53.00 | 0.58 | 6.60 | 2.55 | 0.35 | 0.41 | 1.00 | Briquette | 70.00 | 4213 |

| 14 | Wando | South | Heavy | Jeollanam-do | 18-Apr-64 | 100.00 | 96.76 | 8.40 | 0.00 | 100.00 | 100.00 | 1.54 | 3.87 | 0.00 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 24,453 |

| 15 | Wando | South | Heavy | Jeollanam-do | 03-Jul-90 | 30.00 | 62.74 | 0.00 | 1.89 | 30.00 | 30.00 | 0.76 | 0.00 | 2.70 | 0.52 | 0.76 | 1.00 | Oil | 80.00 | 5495 |

| 16 | Wando | South | Heavy | Jeollanam-do | 06-Jul-89 | 45.00 | 57.52 | 5.04 | 3.69 | 45.00 | 45.00 | 0.76 | 3.90 | 2.55 | 0.52 | 0.76 | 1.00 | Oil | 80.00 | 6165 |

| 17 | Wando | South | Heavy | Jeollanam-do | 07-Jun-86 | 50.00 | 65.58 | 6.45 | 1.89 | 50.00 | 50.00 | 0.58 | 5.30 | 2.70 | 0.58 | 0.58 | 1.00 | Oil | 80.00 | 6533 |

| 18 | Wando | South | Heavy | Jeollanam-do | 20-Aug-88 | 50.00 | 65.47 | 3.41 | 1.89 | 50.00 | 50.00 | 0.76 | 3.90 | 2.70 | 0.52 | 0.76 | 1.00 | Oil | 85.00 | 5382 |

| 19 | Wando | North | Heavy | Jeollanam-do | 04-Aug-88 | 86.00 | 81.90 | 8.96 | 1.89 | 172.00 | 0.00 | 0.76 | 3.90 | 2.70 | 0.52 | 0.00 | 1.00 | Oil | 80.00 | 12,023 |

| 20 | Wando | South | Heavy | Jeollanam-do | 16-Sep-00 | 60.00 | 68.08 | 6.16 | 1.89 | 60.00 | 60.00 | 0.76 | 5.30 | 2.40 | 0.52 | 0.76 | 1.00 | Oil | 80.00 | 8290 |

| 21 | Wando | South | Heavy | Jeollanam-do | 22-May-70 | 38.00 | 55.63 | 3.49 | 1.36 | 38.00 | 38.00 | 1.54 | 5.30 | 2.70 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 10,057 |

| 22 | Wando | North-East | Heavy | Jeollanam-do | 23-Sep-77 | 28.00 | 44.41 | 1.76 | 1.44 | 28.00 | 28.00 | 1.54 | 6.60 | 2.70 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 9014 |

| 23 | Busan | South | Heavy | Gyeongsang | 08-Apr-71 | 16.00 | 16.60 | 1.08 | 0.00 | 17.00 | 17.00 | 1.54 | 3.90 | 0.00 | 1.54 | 1.54 | 1.00 | - | 50.00 | 4232 |

| 24 | Wando | South | Heavy | Jeollanam-do | 13-Jul-78 | 50.00 | 60.06 | 5.94 | 0.00 | 50.00 | 50.00 | 1.54 | 6.60 | 0.00 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 15,076 |

| 25 | Wando | South | Heavy | Jeollanam-do | 07-Jul-85 | 45.00 | 49.58 | 13.34 | 0.00 | 45.00 | 45.00 | 0.58 | 5.11 | 0.00 | 0.58 | 0.58 | 1.00 | Oil | 85.00 | 6360 |

| 26 | Wando | South | Heavy | Jeollanam-do | 07-Jun-65 | 45.00 | 75.76 | 9.88 | 5.00 | 45.00 | 45.00 | 1.54 | 5.30 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,025 |

| 27 | Wando | South | Heavy | Jeollanam-do | 20-Jun-59 | 25.00 | 43.18 | 10.26 | 1.12 | 25.00 | 25.00 | 1.54 | 5.30 | 2.70 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 8623 |

| 28 | Wando | South | Heavy | Jeollanam-do | 08-Jun-83 | 40.00 | 64.73 | 5.07 | 2.88 | 40.00 | 40.00 | 0.58 | 5.61 | 2.40 | 0.58 | 1.16 | 1.00 | Oil | 80.00 | 6932 |

| 29 | Wando | South | Heavy | Jeollanam-do | 08-Mar-86 | 65.00 | 68.71 | 3.92 | 1.89 | 65.00 | 65.00 | 0.58 | 3.90 | 2.70 | 0.58 | 0.58 | 1.00 | Oil | 90.00 | 6329 |

| 30 | Wando | South | Heavy | Jeollanam-do | 10-Aug-63 | 40.00 | 68.64 | 2.64 | 3.52 | 40.00 | 40.00 | 1.54 | 5.30 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 13,233 |

| 31 | Wando | North-East | Heavy | Jeollanam-do | 05-Jun-85 | 28.00 | 53.55 | 3.52 | 1.89 | 28.00 | 28.00 | 0.58 | 6.60 | 2.70 | 0.58 | 0.58 | 1.00 | Oil | 80.00 | 4378 |

| 32 | Wando | South | Heavy | Jeollanam-do | 18-Jun-83 | 45.00 | 57.36 | 8.64 | 0.00 | 45.00 | 45.00 | 0.58 | 6.60 | 0.00 | 0.58 | 1.16 | 1.00 | Electricity | 100.00 | 5939 |

| 33 | Wando | West | Heavy | Jeollanam-do | 08-May-65 | 48.00 | 59.17 | 0.00 | 9.91 | 48.00 | 48.00 | 1.54 | 0.00 | 2.44 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,901 |

| 34 | Wando | South | Heavy | Jeollanam-do | 18-Jun-77 | 45.00 | 57.70 | 13.83 | 1.95 | 45.00 | 45.00 | 1.54 | 5.30 | 2.40 | 1.54 | 1.54 | 1.00 | Oil | 80.00 | 14,866 |

| 35 | Wando | South-East | Heavy | Jeollanam-do | 14-Sep-02 | 40.00 | 60.74 | 7.42 | 5.76 | 40.00 | 40.00 | 0.58 | 6.18 | 2.40 | 0.35 | 0.41 | 1.00 | Oil | 80.00 | 5843 |

| Type | Input Variable | Target Variable |

|---|---|---|

| General details | Region (32 cities); Building orientation (E, W, S, N, NE, NW, SE, SW); Structure (heavy, light); Year of completion; ACH; Type of boiler; Boiler efficiency | Energy consumption [kWh/a] |

| Area [m2] | Heating space area; Wall; Roof; Floor; Window; Door | |

| U-value [W/(m2·K)] | Wall; Roof; Floor; Window; Door | |

| Parameters | | Feature | Value |

|---|---|---|---|

| Model | | Back propagation efficiently computes the gradient of the loss function with respect to the weights of the network for a single input-output example | LMA (Levenberg-Marquardt algorithm) [29] |

| Data division (%) | | Training:Validation:Testing | 70:15:15 |

| Structural parameters | Hidden layers | Increasing the number of hidden layers or neurons improves prediction performance but lengthens the calculation time | 1 |

| Structural parameters | Neurons per hidden layer | (as above) | 10 |

| Learning parameters | Learning rate | Smaller values improve prediction performance but lengthen training | 0.2 |

| Learning parameters | Momentum | Typically starts at 0.1; larger values speed up learning | 0.6 |

| Learning parameters | Epochs | Maximum number of training iterations | 1000 |

| Learning parameters | Goal | Target error ratio between actual and predicted values | 0.01 |
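The 70:15:15 data division in the table above can be sketched as follows. This is a minimal illustration under assumptions: the text does not say how records were assigned to each subset, so a seeded random shuffle is assumed, and `split_indices` is a hypothetical helper name. The record count of 16,158 is the number remaining after outlier removal.

```python
import random

def split_indices(n, train_pct=70, val_pct=15, seed=0):
    """Shuffle range(n) and cut it into training, validation and test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    # Integer arithmetic avoids floating-point rounding in the subset sizes.
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder, so no record is dropped
    return train, val, test

train, val, test = split_indices(16158)
```

Assigning the remainder to the test subset keeps every record in exactly one subset even when the percentages do not divide the record count evenly.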

| Type | Input Variable | Pearson Correlation Coefficient | Rank |

|---|---|---|---|

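| General details | Region | 0.162 | 13 |

| General details | Building orientation | −0.012 | 17 |

| General details | Structure | 0.159 | 14 |

| General details | Year of completion | −0.539 | 6 |

| General details | ACH | 0.012 | 16 |

| General details | Type of boiler | N/A | N/A |

| General details | Boiler efficiency | −0.276 | 9 |

| Area [m2] | Heating space area | 0.430 | 8 |

| Area [m2] | Wall | 0.488 | 7 |

| Area [m2] | Roof | 0.617 | 2 |

| Area [m2] | Floor | 0.557 | 4 |

| Area [m2] | Window | 0.252 | 12 |

| Area [m2] | Door | 0.275 | 10 |

| U-value (Heat transmission coefficient) [W/(m2·K)] | Wall | 0.604 | 3 |

| U-value (Heat transmission coefficient) [W/(m2·K)] | Roof | 0.636 | 1 |

| U-value (Heat transmission coefficient) [W/(m2·K)] | Floor | 0.550 | 5 |

| U-value (Heat transmission coefficient) [W/(m2·K)] | Window | 0.269 | 11 |

| U-value (Heat transmission coefficient) [W/(m2·K)] | Door | −0.051 | 15 |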

| Parameter | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Case 7 | Case 8 | Case 9 | Case 10 | Case 11 | Case 12 | Case 13 | Case 14 | Case 15 | Case 16 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Window U-value [W/(m2·K)] | × | ○ | × | × | × | ○ | × | × | ○ | ○ | × | ○ | ○ | ○ | × | ○ |

| Window area [m2] | × | × | ○ | × | × | ○ | ○ | × | × | × | ○ | ○ | ○ | × | ○ | ○ |

| Door area [m2] | × | × | × | ○ | × | × | ○ | ○ | ○ | × | × | ○ | × | ○ | ○ | ○ |

| Boiler efficiency [%] | × | × | × | × | ○ | × | × | ○ | × | ○ | ○ | × | ○ | ○ | ○ | ○ |

| Heating space area [m2] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Wall area [m2] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Year of completion | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Floor U-value [W/(m2·K)] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Floor area [m2] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Wall U-value [W/(m2·K)] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Roof area [m2] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| Roof U-value [W/(m2·K)] | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

| R2-value | 0.893 | 0.890 | 0.896 | 0.895 | 0.931 | 0.896 | 0.896 | 0.933 | 0.896 | 0.929 | 0.933 | 0.898 | 0.936 | 0.935 | 0.935 | 0.935 |

| Number of Hidden Layer | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| R2-value | 0.936 | 0.945 | 0.949 | 0.951 | 0.954 | 0.953 | 0.952 | 0.953 | 0.952 | 0.950 |

| Number of Neurons in Hidden Layer | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| R2-value | 0.954 | 0.955 | 0.955 | 0.954 | 0.956 | 0.958 | 0.954 | 0.958 | 0.956 | 0.956 | 0.959 | 0.958 | 0.961 | 0.958 | 0.959 | 0.958 | 0.959 | 0.958 | 0.960 | 0.959 | 0.958 |

| Division | Values |

|---|---|

| Window U-value [W/(m2·K)] | 5.84 |

| Window area [m2] | 8.05 |

| Heating space area [m2] | 44.52 |

| Wall area [m2] | 56.09 |

| Year of completion | 1980 |

| Floor U-value [W/(m2·K)] | 1.05 |

| Floor area [m2] | 44.52 |

| Wall U-value [W/(m2·K)] | 1.05 |

| Roof area [m2] | 44.52 |

| Roof U-value [W/(m2·K)] | 1.05 |

| Boiler efficiency [%] | 80 |

| Energy consumption (Calculated) [kWh/yr] | 12,143 |

| Energy consumption (Calculated/m2) [kWh/m2·yr] | 272.75 |

| Division | Values |

|---|---|

| Optimal DNN model-predicted annual heating consumption [kWh/yr] | 13,307 |

| Optimal DNN model-predicted annual heating consumption per unit area [kWh/m2·yr] | 298.90 |

| EnergyPlus-calculated annual heating consumption [kWh/yr] | 12,143 |

| EnergyPlus-calculated annual heating consumption per unit area [kWh/m2·yr] | 272.75 |

| Cv(RMSE) [%] | 8.74 |

| Division | Monthly | Hourly |

|---|---|---|

| Tolerance limits (Cv(RMSE)) | 15% | 30% |
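To show how a Cv(RMSE) value relates to the tolerance limits in the table above, the sketch below computes it for a pair of series and checks it against the 15% (monthly) and 30% (hourly) limits. It assumes the common definition, the root-mean-square error divided by the mean of the reference values with n in the denominator; some guidelines divide by n − p instead, and the formula used in the study is not shown in this text. The function names are hypothetical.

```python
import math

# Tolerance limits for Cv(RMSE), in percent, as listed in the table above.
TOLERANCE_LIMITS = {"monthly": 15.0, "hourly": 30.0}

def cv_rmse(reference, predicted):
    """Coefficient of variation of the RMSE, in percent of the mean reference value."""
    n = len(reference)
    mse = sum((r - p) ** 2 for r, p in zip(reference, predicted)) / n
    return 100.0 * math.sqrt(mse) / (sum(reference) / n)

def within_tolerance(value_percent, resolution):
    """True if a Cv(RMSE) value meets the limit for 'monthly' or 'hourly' data."""
    return value_percent <= TOLERANCE_LIMITS[resolution]
```

For example, `within_tolerance(cv_rmse(reference, predicted), "monthly")` reports whether a set of monthly predictions stays inside the 15% limit.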

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, S.; Cho, S.; Kim, S.-H.; Kim, J.; Chae, S.; Jeong, H.; Kim, T. Deep Neural Network Approach for Prediction of Heating Energy Consumption in Old Houses. Energies 2021, 14, 122. https://doi.org/10.3390/en14010122
