BEACH-Gaze: Supporting Descriptive and Predictive Gaze Analytics in the Era of Artificial Intelligence and Advanced Data Science

Highlights

- This paper presents a novel analytical platform designed to support the sequential simulation of real-time gaze analytics with integrated machine learning models that allow researchers to perform gaze-driven predictions without extensive technical expertise.

- Three use cases in autism support, adaptive visualization, and civil aviation safety showcase the effectiveness of the proposed platform.

- This work contributes to the increased accessibility of eye tracking by empowering a broader community of practitioners across disciplines to leverage gaze in machine intelligence and scientific discovery.

Abstract

1. Introduction

2. Related Work

3. BEACH-Gaze

3.1. Design Overview

3.2. Descriptive Gaze Analytics

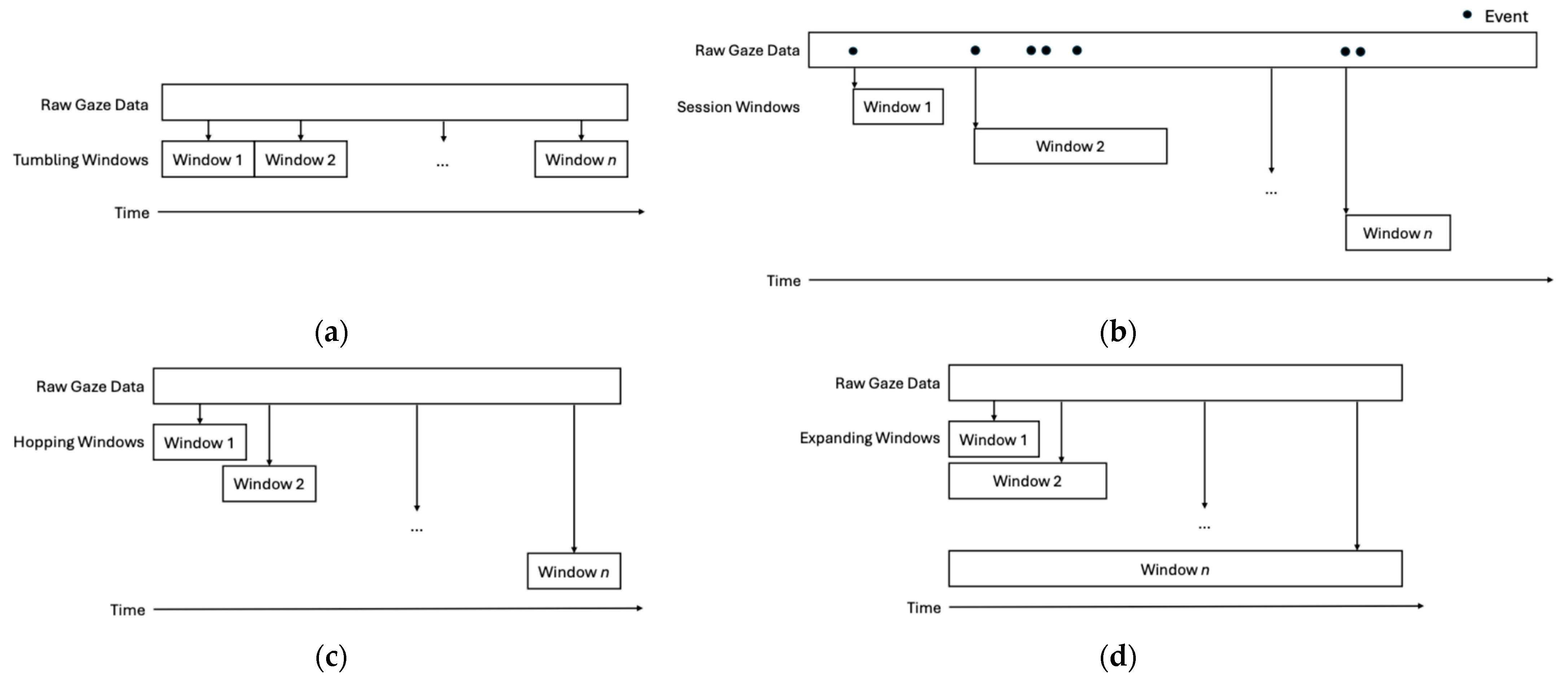

3.3. Evolution of DGMs Captured via Window Segmentation

3.4. Predictive Gaze Analytics

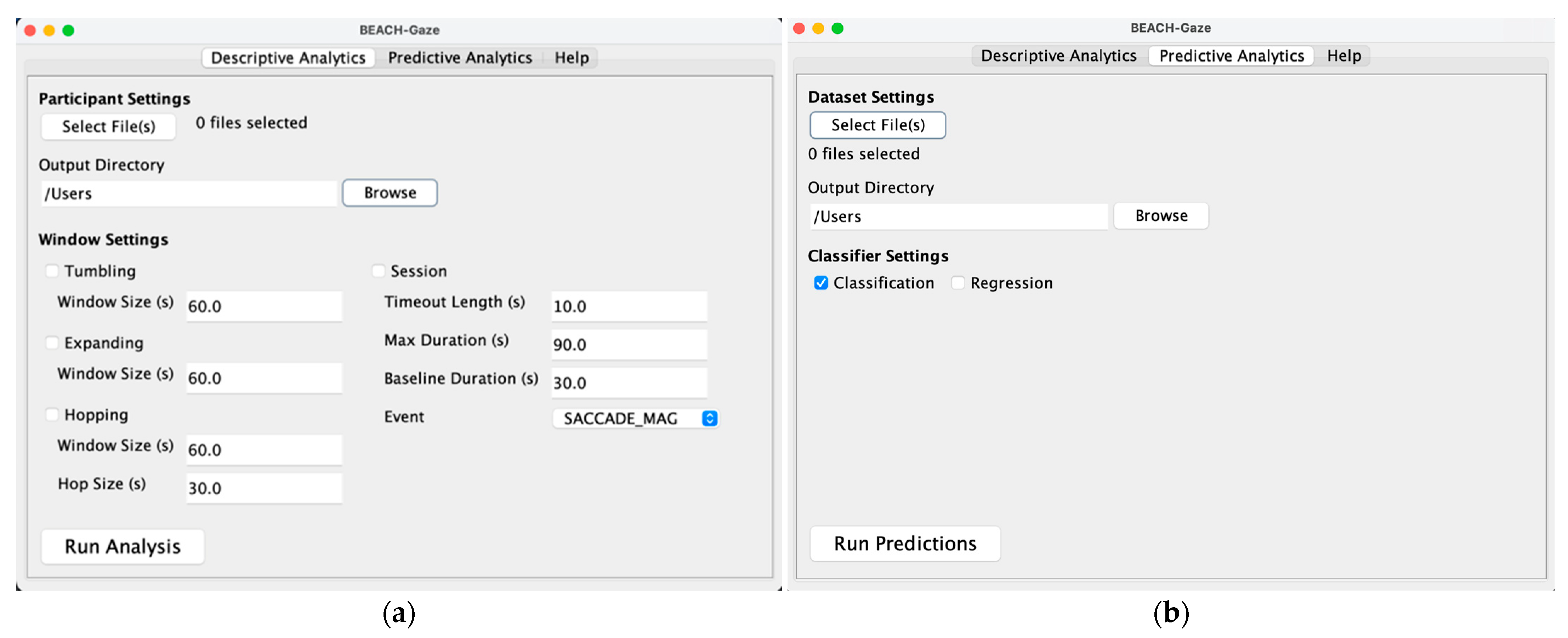

3.5. User Interface

4. Use Cases

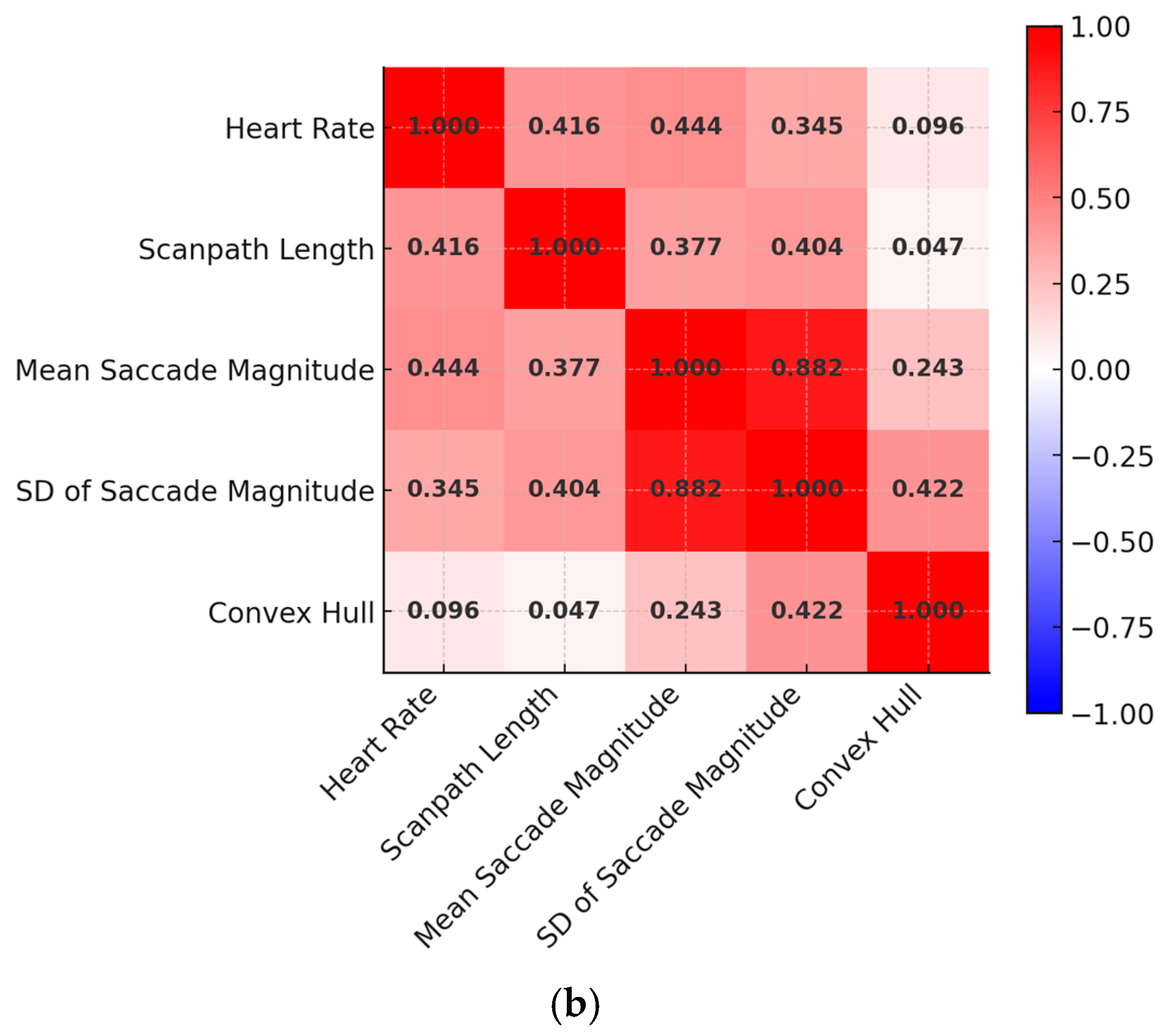

4.1. Technology Assisted Exercise for Individuals with Autism Spectrum Disorder

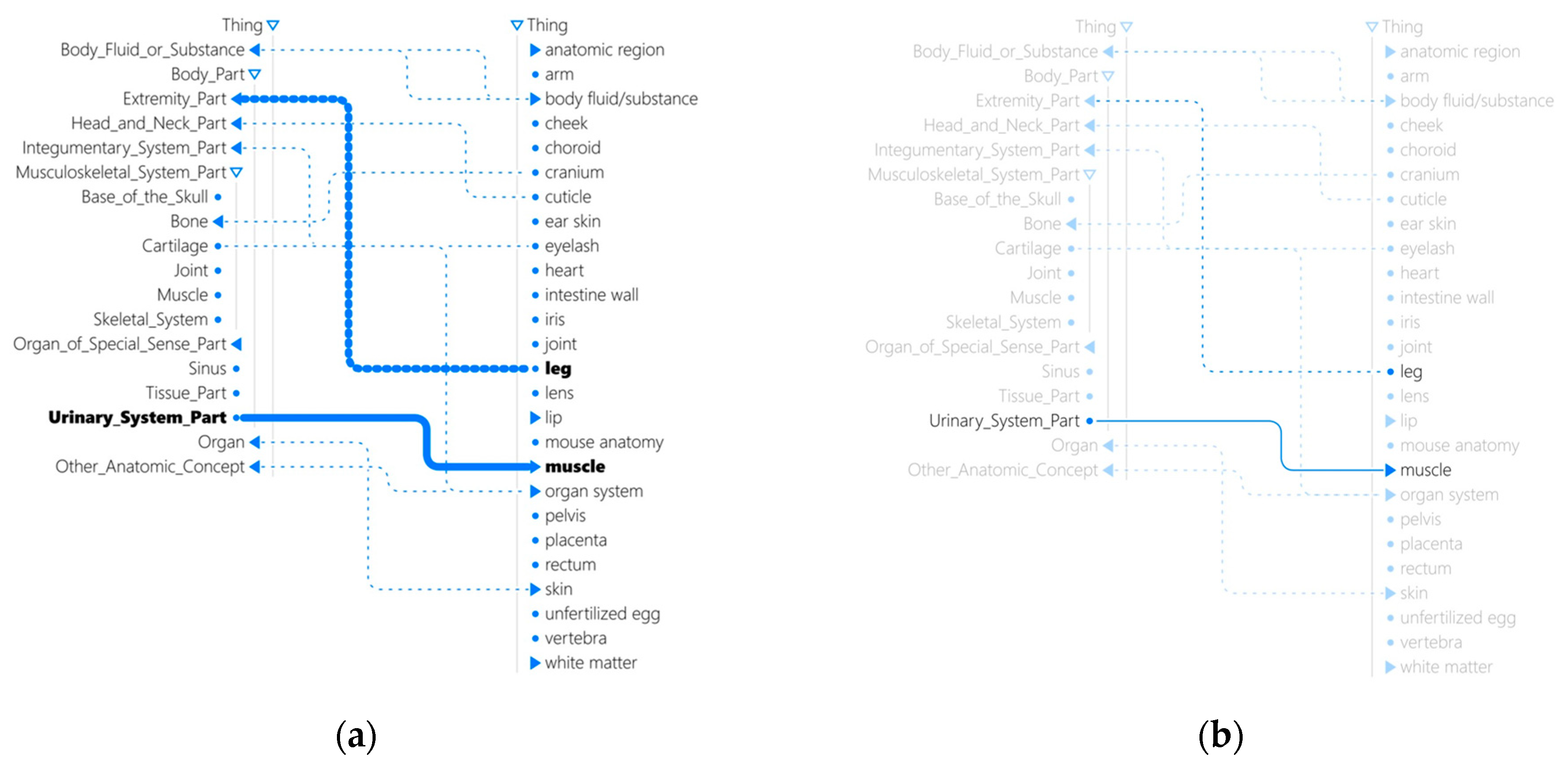

4.2. Physiologically Adaptive Visualization for Mappings Between Ontologies

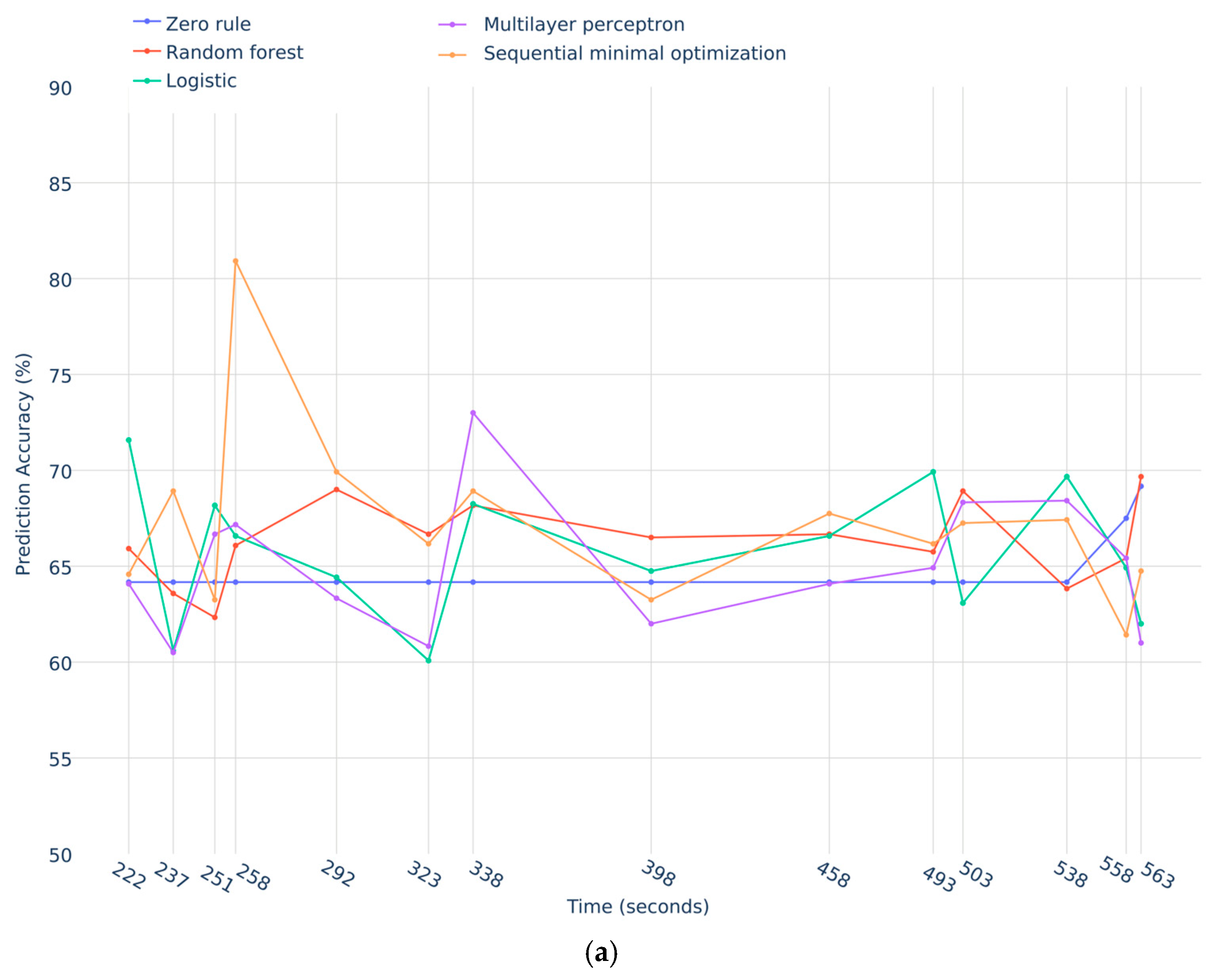

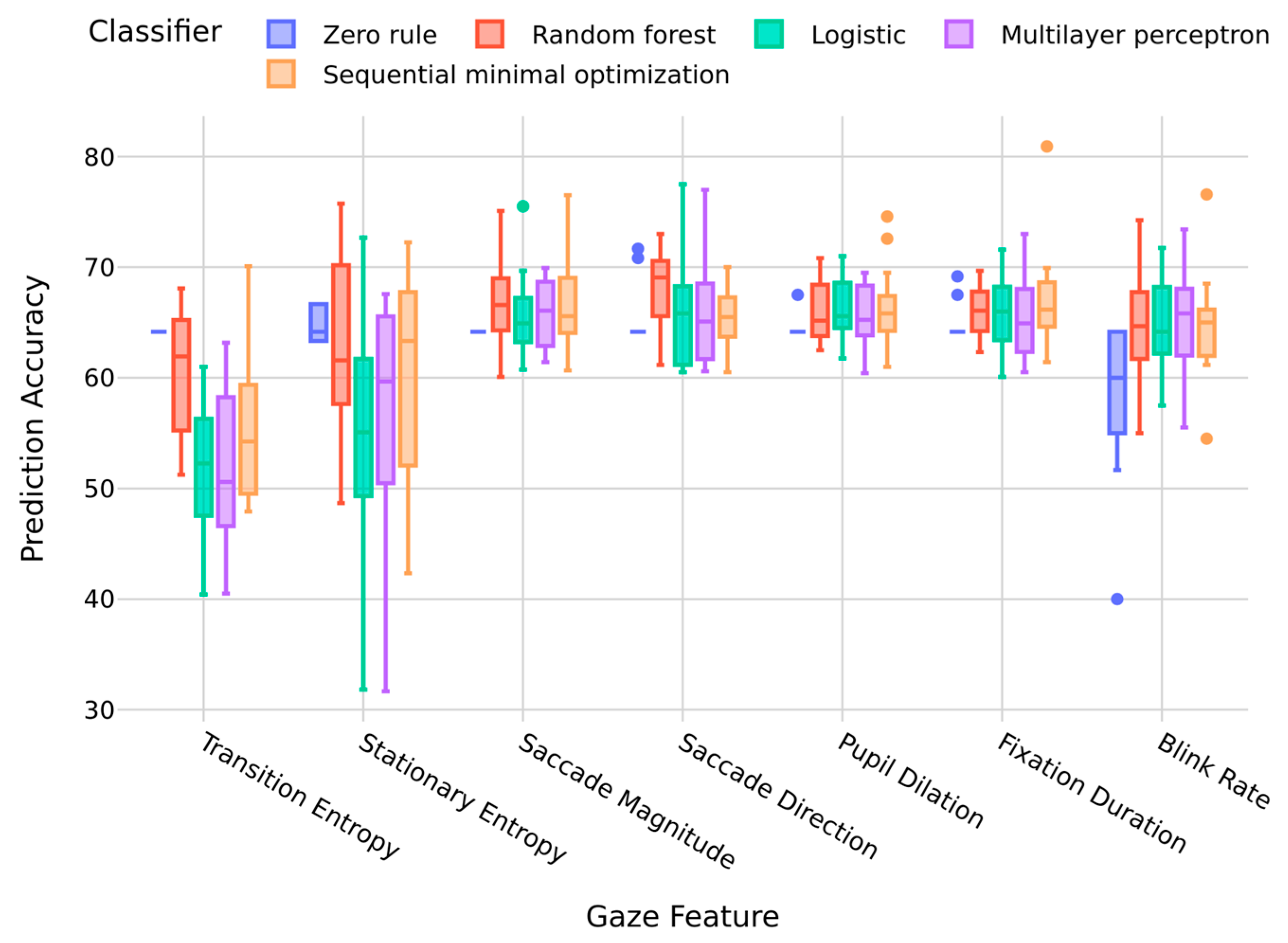

4.3. Enhanced Aviation Safety via Gaze-Driven Predictions of Pilot Performance

5. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BEACH-Gaze | Beach Environment for the Analytics of Human Gaze |
| AOI | area of interest |
| GUI | graphical user interface |
| DGM | descriptive gaze measure |
| SD | standard deviation |
| WEKA | Waikato Environment for Knowledge Analysis |
| ASD | autism spectrum disorder |
| BPM | beats per minute |
| ILS | Instrument Landing System |

References

- Zammarchi, G.; Conversano, C. Application of Eye Tracking Technology in Medicine: A Bibliometric Analysis. Vision 2021, 5, 56.

- Ruppenthal, T.; Schweers, N. Eye Tracking as an Instrument in Consumer Research to Investigate Food from A Marketing Perspective: A Bibliometric and Visual Analysis. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1095–1117.

- Moreno-Arjonilla, J.; López-Ruiz, A.; Jiménez-Pérez, J.R.; Callejas-Aguilera, J.E.; Jurado, J.M. Eye-tracking on Virtual Reality: A Survey. Virtual Real. 2024, 28, 38.

- Arias-Portela, C.Y.; Mora-Vargas, J.; Caro, M. Situational Awareness Assessment of Drivers Boosted by Eye-Tracking Metrics: A Literature Review. Appl. Sci. 2024, 14, 1611.

- Ke, F.; Liu, R.; Sokolikj, Z.; Dahlstrom-Hakki, I.; Israel, M. Using Eye-Tracking in Education: Review of Empirical Research and Technology. Educ. Technol. Res. Dev. 2024, 72, 1383–1418.

- Duchowski, A.T. Eye Tracking Methodology: Theory and Practice, 3rd ed.; Springer: Cham, Switzerland, 2017.

- Dalmaijer, E.S.; Mathôt, S.; Van der Stigchel, S. PyGaze: An open-source, cross-platform toolbox for minimal-effort programming of eyetracking experiments. Behav. Res. Methods 2014, 46, 913–921.

- Sogo, H. GazeParser: An open-source and multiplatform library for low-cost eye tracking and analysis. Behav. Res. Methods 2013, 45, 684–695.

- Peirce, J.W. PsychoPy—Psychophysics software in Python. J. Neurosci. Methods 2007, 162, 8–13.

- Straw, A. Vision Egg: An open-source library for realtime visual stimulus generation. Front. Neuroinform. 2008, 2, 4.

- Voßkühler, A.; Nordmeier, V.; Kuchinke, L.; Jacobs, A.M. OGAMA (Open Gaze and Mouse Analyzer): Open-source software designed to analyze eye and mouse movements in slideshow study designs. Behav. Res. Methods 2008, 40, 1150–1162.

- Zhang, X.; Sugano, Y.; Bulling, A. Evaluation of Appearance-Based Methods and Implications for Gaze-Based Applications. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Krause, A.F.; Essig, K. LibreTracker: A Free and Open-source Eyetracking Software for head-mounted Eyetrackers. In Proceedings of the 20th European Conference on Eye Movements, Alicante, Spain, 18–22 August 2019; p. 391.

- De Tommaso, D.; Wykowska, A. TobiiGlassesPySuite: An open-source suite for using the Tobii Pro Glasses 2 in eye-tracking studies. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, New York, NY, USA, 25–28 June 2019; pp. 1–5.

- Akkil, D.; Isokoski, P.; Kangas, J.; Rantala, J.; Raisamo, R. TraQuMe: A tool for measuring the gaze tracking quality. In Proceedings of the Symposium on Eye Tracking Research and Applications, New York, NY, USA, 26–28 March 2014; pp. 327–330.

- Kar, A.; Corcoran, P. Development of Open-source Software and Gaze Data Repositories for Performance Evaluation of Eye Tracking Systems. Vision 2019, 3, 55.

- Ghose, U.; Srinivasan, A.A.; Boyce, W.P.; Xu, H.; Chng, E.S. PyTrack: An end-to-end analysis toolkit for eye tracking. Behav. Res. Methods 2020, 52, 2588–2603.

- Faraji, Y.; van Rijn, J.W.; van Nispen, R.M.A.; van Rens, G.H.M.B.; Melis-Dankers, B.J.M.; Koopman, J.; van Rijn, L.J. A toolkit for wide-screen dynamic area of interest measurements using the Pupil Labs Core Eye Tracker. Behav. Res. Methods 2023, 55, 3820–3830.

- Kardan, S.; Lallé, S.; Toker, D.; Conati, C. EMDAT: Eye Movement Data Analysis Toolkit, version 1.x.; The University of British Columbia: Vancouver, BC, Canada, 2021.

- Niehorster, D.C.; Hessels, R.S.; Benjamins, J.S. GlassesViewer: Open-source software for viewing and analyzing data from the Tobii Pro Glasses 2 eye tracker. Behav. Res. Methods 2020, 52, 1244–1253.

- Cercenelli, L.; Tiberi, G.; Corazza, I.; Giannaccare, G.; Fresina, M.; Marcelli, E. SacLab: A toolbox for saccade analysis to increase usability of eye tracking systems in clinical ophthalmology practice. Comput. Biol. Med. 2017, 80, 45–55.

- Andreu-Perez, J.; Solnais, C.; Sriskandarajah, K. EALab (Eye Activity Lab): A MATLAB Toolbox for Variable Extraction, Multivariate Analysis and Classification of Eye-Movement Data. Neuroinformatics 2016, 14, 51–67.

- Krassanakis, V.; Filippakopoulou, V.; Nakos, B. EyeMMV toolbox: An eye movement post-analysis tool based on a two-step spatial dispersion threshold for fixation identification. J. Eye Mov. Res. 2014, 7, 1–10.

- Berger, C.; Winkels, M.; Lischke, A.; Höppner, J. GazeAlyze: A MATLAB toolbox for the analysis of eye movement data. Behav. Res. Methods 2012, 44, 404–419.

- Mardanbegi, D.; Wilcockson, T.D.W.; Xia, B.; Gellersen, H.W.; Crawford, T.O.; Sawyer, P. PSOVIS: An interactive tool for extracting post-saccadic oscillations from eye movement data. In Proceedings of the 19th European Conference on Eye Movements, Wuppertal, Germany, 20–24 August 2017; Available online: http://cogain2017.cogain.org/camready/talk6-Mardanbegi.pdf (accessed on 4 November 2025).

- Zhegallo, A.V.; Marmalyuk, P.A. ETRAN—R: Extension Package for Eye Tracking Results Analysis. In Proceedings of the 19th European Conference on Eye Movements, Wuppertal, Germany, 20–24 August 2017.

- Gazepoint Product Page. Available online: https://www.gazept.com/shop/?v=0b3b97fa6688 (accessed on 24 July 2025).

- BEACH-Gaze Repository. Available online: https://github.com/TheD2Lab/BEACH-Gaze (accessed on 24 July 2025).

- Liu, G.T.; Volpe, N.J.; Galetta, S.L. Neuro-Ophthalmology Diagnosis and Management, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2018; Chapter 13; p. 429. ISBN 9780323340441.

- Spector, R.H. The Pupils. In Clinical Methods: The History, Physical, and Laboratory Examinations, 3rd ed.; Walker, H.K., Hall, W.D., Hurst, J.W., Eds.; Butterworths: Boston, MA, USA, 1990.

- Steichen, B. Computational Methods to Infer Human Factors for Adaptation and Personalization Using Eye Tracking. In A Human-Centered Perspective of Intelligent Personalized Environments and Systems; Human–Computer Interaction Series; Ferwerda, B., Graus, M., Germanakos, P., Tkalčič, M., Eds.; Springer: Cham, Switzerland, 2024.

- Bahill, T.; McDonald, J.D. Frequency limitations and optimal step size for the two-point central difference derivative algorithm with applications to human eye movement data. IEEE Trans. Biomed. Eng. 1983, BME-30, 191–194.

- McGregor, D.K.; Stern, J.A. Time on Task and Blink Effects on Saccade Duration. Ergonomics 1996, 39, 649–660.

- Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures, 1st ed.; Oxford University Press: Oxford, UK, 2011.

- Krejtz, K.; Duchowski, A.; Szmidt, T.; Krejtz, I.; González Perilli, F.; Pires, A.; Vilaro, A.; Villalobos, N. Gaze transition entropy. ACM Trans. Appl. Percept. 2015, 13, 1–20.

- Doellken, M.; Zapata, J.; Thomas, N.; Matthiesen, S. Implementing innovative gaze analytic methods in design for manufacturing: A study on eye movements in exploiting design guidelines. Procedia CIRP 2021, 100, 415–420.

- Steichen, B.; Wu, M.M.A.; Toker, D.; Conati, C.; Carenini, G. Te,Te,Hi,Hi: Eye Gaze Sequence Analysis for Informing User-Adaptive Information Visualizations. In Proceedings of the 22nd International Conference on User Modeling, Adaptation, and Personalization, Aalborg, Denmark, 7–11 July 2014; Volume 8538, pp. 183–194.

- West, J.M.; Haake, A.R.; Rozanski, E.P.; Karn, K.S. eyePatterns: Software for identifying patterns and similarities across fixation sequences. In Proceedings of the 2006 Symposium on Eye Tracking Research & Applications, San Diego, CA, USA, 27–29 March 2006; pp. 149–154.

- Frank, E.; Hall, M.A.; Witten, I.H. The WEKA Workbench. In Online Appendix for Data Mining: Practical Machine Learning Tools and Techniques, 4th ed.; Morgan Kaufmann: San Francisco, CA, USA, 2016.

- WEKA API. 2024. Available online: https://waikato.github.io/weka-wiki/downloading_weka/ (accessed on 24 July 2025).

- Parrott, M.; Ruyak, J.; Liguori, G. The History of Exercise Equipment: From Sticks and Stones to Apps and Phones. ACSM’s Health Fit. J. 2020, 24, 5–8.

- Maenner, M.J.; Shaw, K.A.; Baio, J.; Washington, A.; Patrick, M.; DiRienzo, M.; Christensen, D.L.; Wiggins, L.D.; Pettygrove, S.; Andrews, J.G.; et al. Prevalence of Autism Spectrum Disorder Among Children Aged 8 Years—Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2016. Morb. Mortal. Wkly. Rep. (MMWR) Surveill. Summ. 2020, 69, 1–12.

- Shaw, K.A.; Williams, S.; Hughes, M.M.; Warren, Z.; Bakian, A.V.; Durkin, M.S.; Esler, A.; Hall-Lande, J.; Salinas, A.; Vehorn, A.; et al. Statewide County-Level Autism Spectrum Disorder Prevalence Estimates—Seven U.S. States, 2018. Ann. Epidemiol. 2023, 79, 39–43.

- Dieringer, S.T.; Zoder-Martell, K.; Porretta, D.L.; Bricker, A.; Kabazie, J. Increasing Physical Activity in Children with Autism Through Music, Prompting and Modeling. Psychol. Sch. 2017, 54, 421–432.

- Fu, B.; Chao, J.; Bittner, M.; Zhang, W.; Aliasgari, M. Improving Fitness Levels of Individuals with Autism Spectrum Disorder: A Preliminary Evaluation of Real-Time Interactive Heart Rate Visualization to Motivate Engagement in Physical Activity. In Proceedings of the 17th International Conference on Computers Helping People with Special Needs, Online, 9–11 September 2020; Springer: New York, NY, USA; pp. 81–89.

- Fu, B.; Orevillo, K.; Lo, D.; Bae, A.; Bittner, M. Real-Time Heart Rate Visualization for Individuals with Autism Spectrum Disorder—An Evaluation of Technology Assisted Physical Activity Application to Increase Exercise Intensity. In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications—Human Computer Interaction Theory and Applications, Rome, Italy, 27–29 February 2024; SciTePress: Rome, Italy, 2024; pp. 455–463, ISBN 978-989-758-679-8, ISSN 2184-4321.

- Fu, B.; Chow, N. AdaptLIL: A Real-Time Adaptive Linked Indented List Visualization for Ontology Mapping. In Proceedings of the 23rd International Semantic Web Conference, Baltimore, MD, USA, 11–15 November 2024; Volume 15232, pp. 3–22.

- Chow, N.; Fu, B. AdaptLIL: A Gaze-Adaptive Visualization for Ontology Mapping; IEEE VIS: Washington, DC, USA, 2024.

- Fu, B. Predictive Gaze Analytics: A Comparative Case Study of the Foretelling Signs of User Performance During Interaction with Visualizations of Ontology Class Hierarchies. Multimodal Technol. Interact. 2024, 8, 90.

- Fu, B.; Steichen, B. Impending Success or Failure? An Investigation of Gaze-Based User Predictions During Interaction with Ontology Visualizations. In Proceedings of the International Conference on Advanced Visual Interfaces, Frascati, Italy, 6–10 June 2022; pp. 1–9.

- Fu, B.; Steichen, B. Supporting User-Centered Ontology Visualization: Predictive Analytics using Eye Gaze to Enhance Human-Ontology Interaction. Int. J. Intell. Inf. Database Syst. 2021, 15, 28–56.

- Fu, B.; Steichen, B.; McBride, A. Tumbling to Succeed: A Predictive Analysis of User Success in Interactive Ontology Visualization. In Proceedings of the 10th International Conference on Web Intelligence, Mining and Semantics, Biarritz, France, 30 June–3 July 2020; ACM: New York, NY, USA; pp. 78–87.

- Fu, B.; Steichen, B. Using Behavior Data to Predict User Success in Ontology Class Mapping—An Application of Machine Learning in Interaction Analysis. In Proceedings of the IEEE 13th International Conference on Semantic Computing, Newport Beach, CA, USA, 30 January–1 February 2019; pp. 216–223.

- Fu, B.; Steichen, B.; Zhang, W. Towards Adaptive Ontology Visualization—Predicting User Success from Behavioral Data. Int. J. Semant. Comput. 2019, 13, 431–452.

- Boeing. Statistical Summary of Commercial Jet Airplane Accidents: Worldwide Operations, 1959–2014; Aviation Safety, Boeing Commercial Airplanes: Seattle, WA, USA, 2015.

- Lee, J.D.; Seppelt, B.D. Human factors and ergonomics in automation design. In Handbook of Human Factors and Ergonomics, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2006; pp. 1570–1596.

- Shappell, S.; Detwiler, C.; Holcomb, K.; Hackworth, C.; Boquet, A.; Wiegmann, D. Human Error and Commercial Aviation Accidents: A Comprehensive, Fine-Grained Analysis Using HFACS. 2006. Available online: https://commons.erau.edu/publication/1218 (accessed on 24 July 2025).

- Fu, B.; Gatsby, P.; Soriano, A.R.; Chu, K.; Guardado, N.G. Towards Intelligent Flight Deck—A Case Study of Applied Eye Tracking in The Predictions of Pilot Success and Failure During Simulated Flight Takeoff. In Proceedings of the International Workshop on Designing and Building Hybrid Human–AI Systems, Arenzano, Italy, 3 June 2024; Volume 3701.

- Fu, B.; Soriano, A.R.; Chu, K.; Gatsby, P.; Guardado, N.G. Modelling Visual Attention for Future Intelligent Flight Deck—A Case Study of Pilot Eye Tracking in Simulated Flight Takeoff. In Proceedings of the 32nd ACM Conference on User Modeling, Adaptation and Personalization, Sardinia, Italy, 1–4 July 2024; pp. 170–175.

- Fu, B.; De Jong, C.; Guardado, N.G.; Soriano, A.R.; Reyes, A. Event-Driven Predictive Gaze Analytics to Enhance Aviation Safety. In Proceedings of the ACM on Human Computer Interaction, Yokohama, Japan, 26 April–1 May 2025; Volume 9.

- Poggi, I. The Gazeionary. In The Language of Gaze (Chapter 6), 1st ed.; Routledge: London, UK, 2024.

| Tool | GUI | Temporal Segmentation | Machine Learning | Compatible Eye Trackers | License |
|---|---|---|---|---|---|
| PyTrack | Data visualizations only | - | - | EyeLink, SMI, Tobii | GPL-3.0 |
| [17] | - | - | - | Pupil Core | GPL-3.0 |
| EMDAT | - | - | - | Tobii | BSD-2 |
| GlassesViewer | ✓ | - | - | Tobii Pro Glasses | CC BY 4.0 |
| SacLab | ✓ | - | - | ViewPoint | BSD |
| EALab | ✓ | - | ✓ | Tobii, SMI | BSD |
| EyeMMV | Data visualizations only | - | - | ViewPoint | GPL-3.0 |
| GazeAlyze | ✓ | - | - | Not specified | GNU GPL |
| PSOVIS | Data visualizations only | - | - | EyeLink | GPL-3.0 |
| PyGazeAnalyzer | - | - | - | Eye tracker agnostic | GPL-3.0 |
| ETRAN—R | - | - | - | SMI | CC BY-NC 4.0 |
| BEACH-Gaze | ✓ | ✓ | ✓ | Gazepoint | GNU GPL |

| Gaze Attribute | Descriptive Measure | Definition |
|---|---|---|
| Saccades | Count | The total number of valid saccades |
| | Magnitude (px) | The sum, mean, median, SD, minimum, and maximum distance between fixations |
| | Duration (s) | The sum, mean, median, SD, minimum, and maximum duration of the saccades |
| | Amplitude (°) | The sum, mean, median, SD, minimum, and maximum angular distance the eye travels during saccades |
| | Velocity (°/s) | The sum, mean, median, SD, minimum, and maximum speed of eye movements during saccades |
| | Peak velocity (°/s) | The sum, mean, median, SD, minimum, and maximum of the highest speeds of eye movements during saccades |
| | Relative direction (°) | The sum, mean, median, SD, minimum, and maximum angle between two saccades |
| | Absolute direction (°) | The sum, mean, median, SD, minimum, and maximum angle of the saccade with respect to the horizontal axis |
| Fixations | Count | The total number of valid fixations |
| | Duration (s) | The sum, mean, median, SD, minimum, and maximum duration of the fixations |
| | Convex hull (px²) | The smallest area that encompasses all fixations |
| AOI specific | Stationary entropy | The distribution of a person’s gaze across AOIs |
| | Transition entropy | The extent to which a person has transitioned between the different AOIs |
| | Proportion of fixations within AOI | The percentage of fixation counts that occurred in an AOI |
| | Proportion of fixation duration within AOI | The percentage of fixation durations that occurred in an AOI |
| | AOI pair | Identifies the two AOIs where a gaze transitions from the first to the second in sequence |
| | Transition count | The number of transitions that occurred for an AOI pair |
| | Proportion of transition including self-transitions | The proportion of total transitions that occurred between AOI pairs, inclusive of self-transitions |
| | Proportion of transition excluding self-transitions | The proportion of total transitions that occurred between AOI pairs, exclusive of self-transitions |
| | Pattern of AOI | A string of characters representing a sequence of AOIs, either expanded or collapsed |
| | Pattern frequency | The number of times a pattern appears in a person’s sequence of AOIs visited, either expanded or collapsed |
| | Sequence support | The proportion of sequences in a user group where the pattern appears, relative to the total number of sequences identified for that group, either expanded or collapsed |
| | Mean pattern frequency | The total occurrences of the AOI pattern across all sequences in a user group, divided by the group’s total number of sequences, either expanded or collapsed |
| | Proportional pattern frequency | The proportion of total AOI pattern occurrences within a group relative to the group’s overall number of patterns, either expanded or collapsed |
| Blinks | Average blink rate (blinks/min) | The average rate at which a person blinks per minute |
| Pupil | Left eye average pupil size (mm) | The average pupil size of the left eye |
| | Right eye average pupil size (mm) | The average pupil size of the right eye |
| | Average pupil size across both eyes (mm) | The average pupil size across both eyes |
| Combined | Fixation-to-saccade ratio | The sum of fixation duration divided by the sum of saccadic duration |
| | Scanpath duration (s) | The duration of all fixations and saccades |
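
Several of the AOI-specific measures defined in the table can be illustrated with a short Python sketch. It is not taken from the BEACH-Gaze code base: the function names are hypothetical, AOI visits are assumed to be encoded as a string with one character per fixation, entropies are assumed to be Shannon entropies in bits, and the transition entropy weights each source AOI by its empirical share of outgoing transitions. The platform's actual computations may differ in these details.

```python
import math
from collections import Counter


def collapse(sequence):
    """Collapse consecutive repeats of the same AOI, e.g. 'AABBBA' -> 'ABA'."""
    out = []
    for aoi in sequence:
        if not out or out[-1] != aoi:
            out.append(aoi)
    return "".join(out)


def stationary_entropy(sequence):
    """Shannon entropy (bits) of the share of fixations landing in each AOI."""
    counts = Counter(sequence)
    total = len(sequence)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def transition_entropy(sequence):
    """Entropy (bits) of AOI-to-AOI transitions, weighted by source-AOI share.

    Assumption: the weight of each source AOI is its empirical share of
    outgoing transitions, not a fitted stationary distribution.
    """
    pairs = Counter(zip(sequence, sequence[1:]))
    out_totals = Counter()
    for (src, _), n in pairs.items():
        out_totals[src] += n
    total = sum(pairs.values())
    entropy = 0.0
    for (src, _), n in pairs.items():
        p_src = out_totals[src] / total   # weight of the source AOI
        p_cond = n / out_totals[src]      # P(destination | source)
        entropy -= p_src * p_cond * math.log2(p_cond)
    return entropy


def pattern_frequency(sequence, pattern):
    """Number of (possibly overlapping) occurrences of pattern in one sequence."""
    k = len(pattern)
    return sum(sequence[i:i + k] == pattern for i in range(len(sequence) - k + 1))


def sequence_support(sequences, pattern):
    """Proportion of a group's sequences that contain the pattern at least once."""
    return sum(pattern in s for s in sequences) / len(sequences)


# Hypothetical example: one participant's expanded AOI sequence.
expanded = "AABBBA"
collapsed = collapse(expanded)  # self-transitions removed
print(collapsed, stationary_entropy(expanded), transition_entropy(expanded))
```

Passing the expanded string keeps self-transitions (repeated fixations within one AOI), whereas passing the output of `collapse` removes them, which mirrors the "expanded or collapsed" distinction in the table.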

| Type | Model |
|---|---|
| Classification | Zero Rule; Bayesian Network; Naive Bayes; Naive Bayes Multinomial Text; Naive Bayes Updateable; Naive Bayes Multinomial; Naive Bayes Multinomial Updateable; Logistic Regression; Multilayer Perceptron; Stochastic Gradient Descent; Stochastic Gradient Descent Text; Simple Logistic Regression; Sequential Minimal Optimization (for Support Vector Machines); Voted Perceptron; Instance-Based k (k-Nearest Neighbors); K* (K-Star); Locally Weighted Learning; Adaptive Boosting Method 1; Attribute Selected Classifier; Bootstrap Aggregating; Classification via Regression; Cross-Validation Parameter Selection; Filtered Classifier; Iterative Classifier Optimizer; Logistic Boosting; Multi-Class Classifier; Multi-Class Classifier Updateable; Multi-Scheme; Random Committee; Randomizable Filtered Classifier; Random Subspace; Stacking; Vote; Weighted Instances Handler Wrapper; Input Mapped Classifier; Decision Table; Repeated Incremental Pruning to Produce Error Reduction (RIPPER); One Rule; Partial Decision Trees; Decision Stump; Hoeffding Tree; C4.5 Decision Tree; Logistic Model Trees; Random Forest; Random Tree; Reduced Error Pruning Tree. |
| Regression | Zero Rule; Gaussian Processes; Linear Regression; Multilayer Perceptron; Simple Linear Regression; Sequential Minimal Optimization for Regression; Bootstrap Aggregating; Cross-Validation Parameter Selection; Regression By Discretization; Multiple Scheme; Random Committee; Randomizable Filtered Classifier; Random Subspace; Stacked Generalization; Voting; Weighted Instances Handler Wrapper; Decision Table; M5 Rules; M5 Prime; Reduced Error Pruning Tree; Instance-Based k-Nearest Neighbors; K* (K-Star); Locally Weighted Learning; Decision Stump; Random Forest; Additive Regression; Attribute Selected Classifier; Elastic Net; Isotonic Regression; Least Median of Squares; Iterative Absolute Error Regression. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fu, B.; Chu, K.; Soriano, A.R.; Gatsby, P.; Guardado, N.G.; Jones, A.; Halderman, M. BEACH-Gaze: Supporting Descriptive and Predictive Gaze Analytics in the Era of Artificial Intelligence and Advanced Data Science. J. Eye Mov. Res. 2025, 18, 67. https://doi.org/10.3390/jemr18060067
