Eye Movement Indicator Difference Based on Binocular Color Fusion and Rivalry

Abstract

1. Introduction

2. Methods

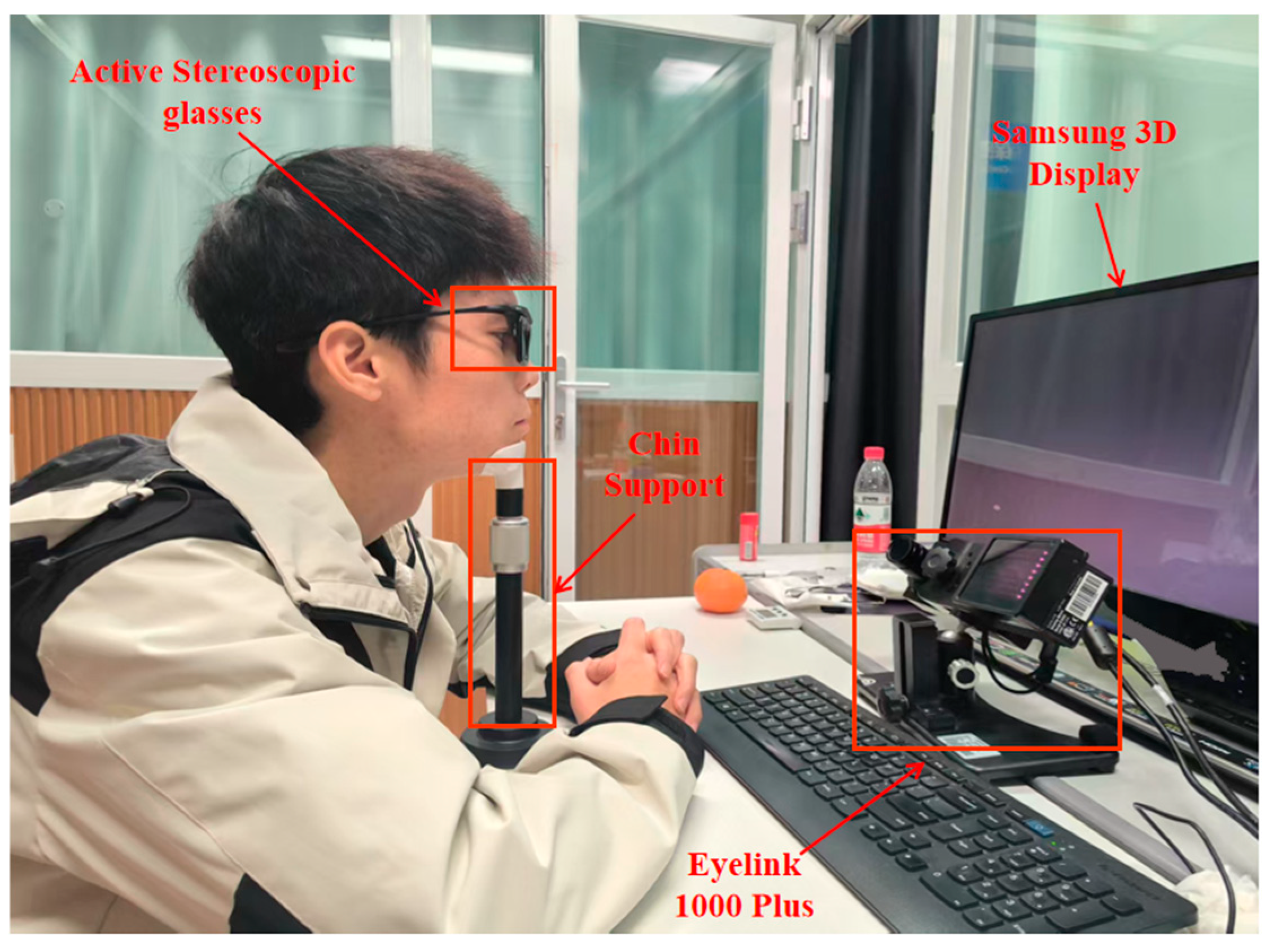

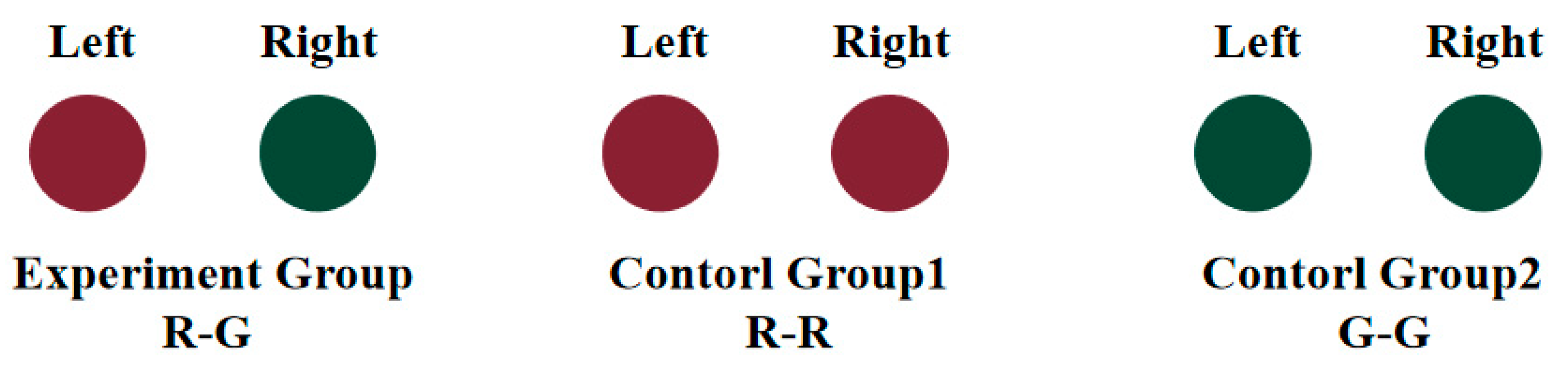

2.1. Instrumentation and Viewing Groups

2.2. Subjects

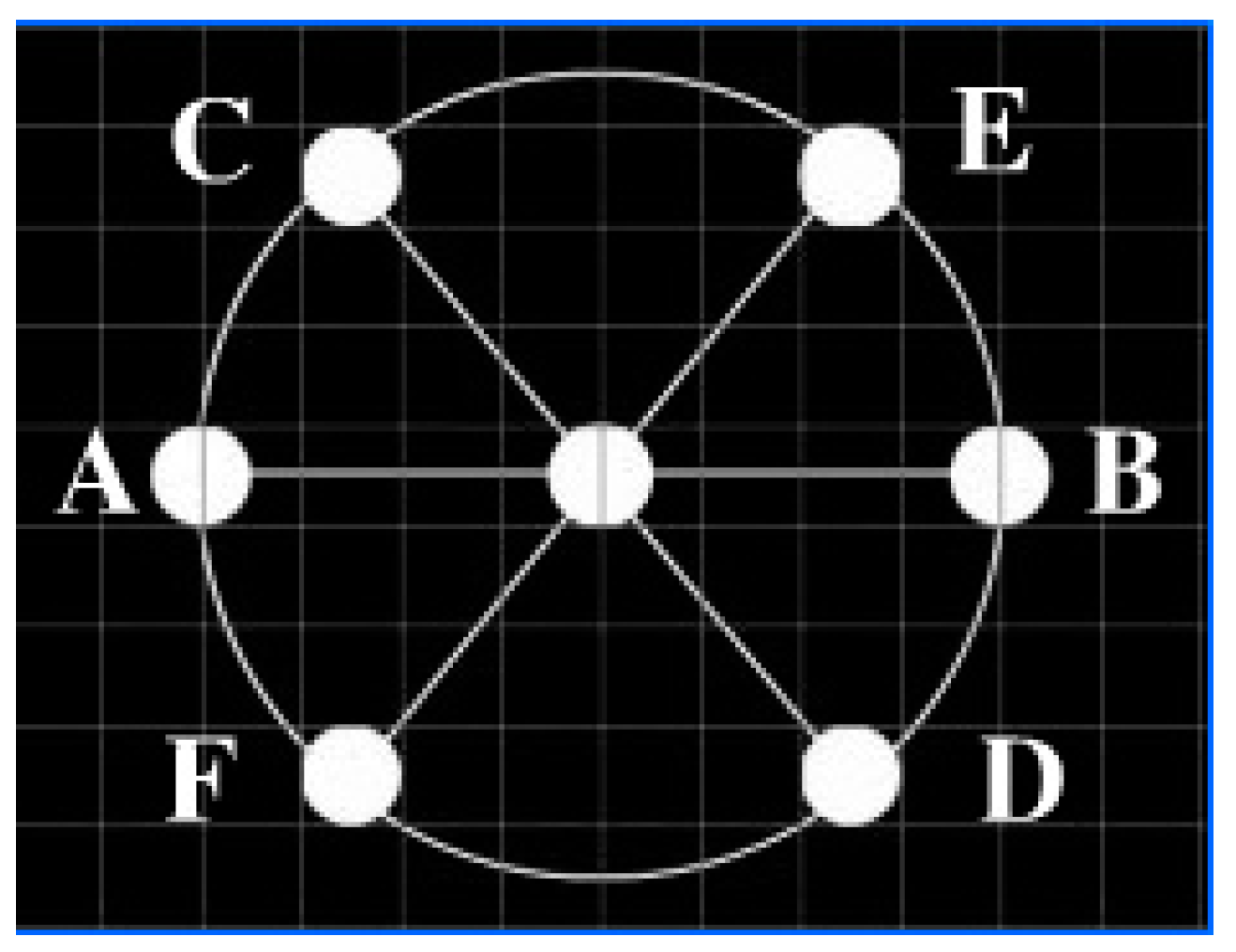

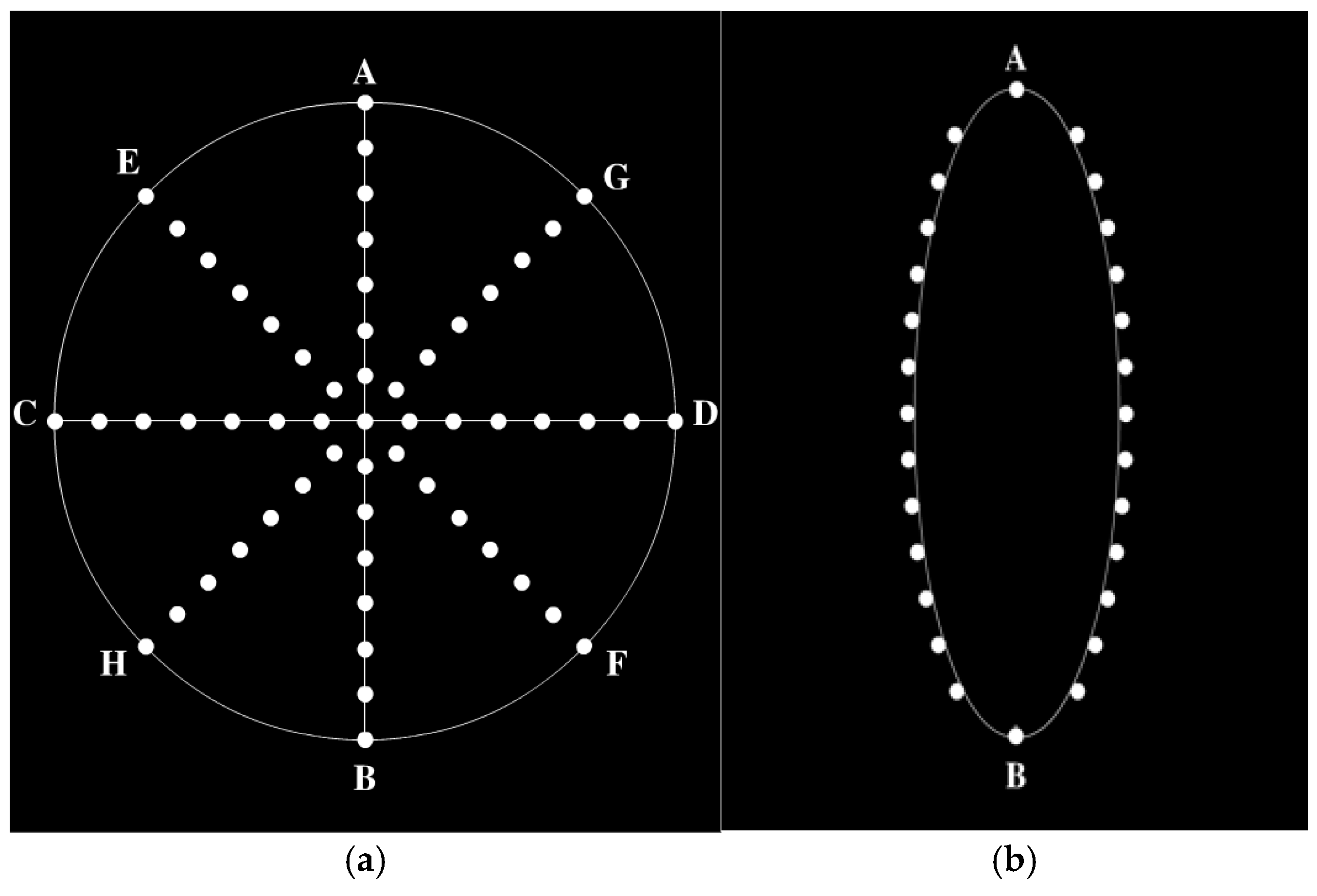

2.3. Stimuli Paradigm

2.4. Procedure

2.5. Data Processing and Statistical Analysis

3. Results

3.1. Results of Interocular Significant Difference

3.2. Results of Normal Distribution Analysis

3.3. Results of Z-Score Normalization Analysis

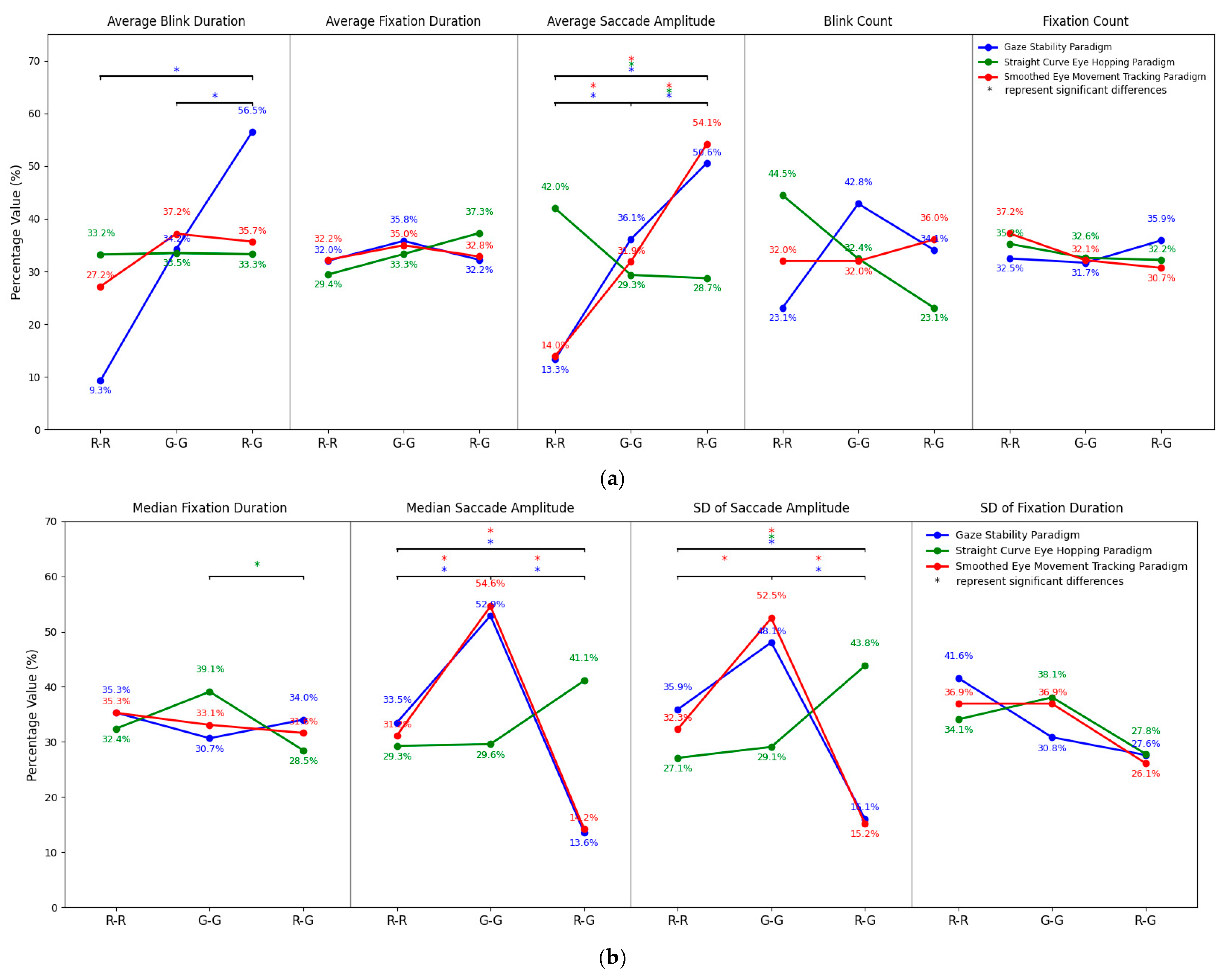

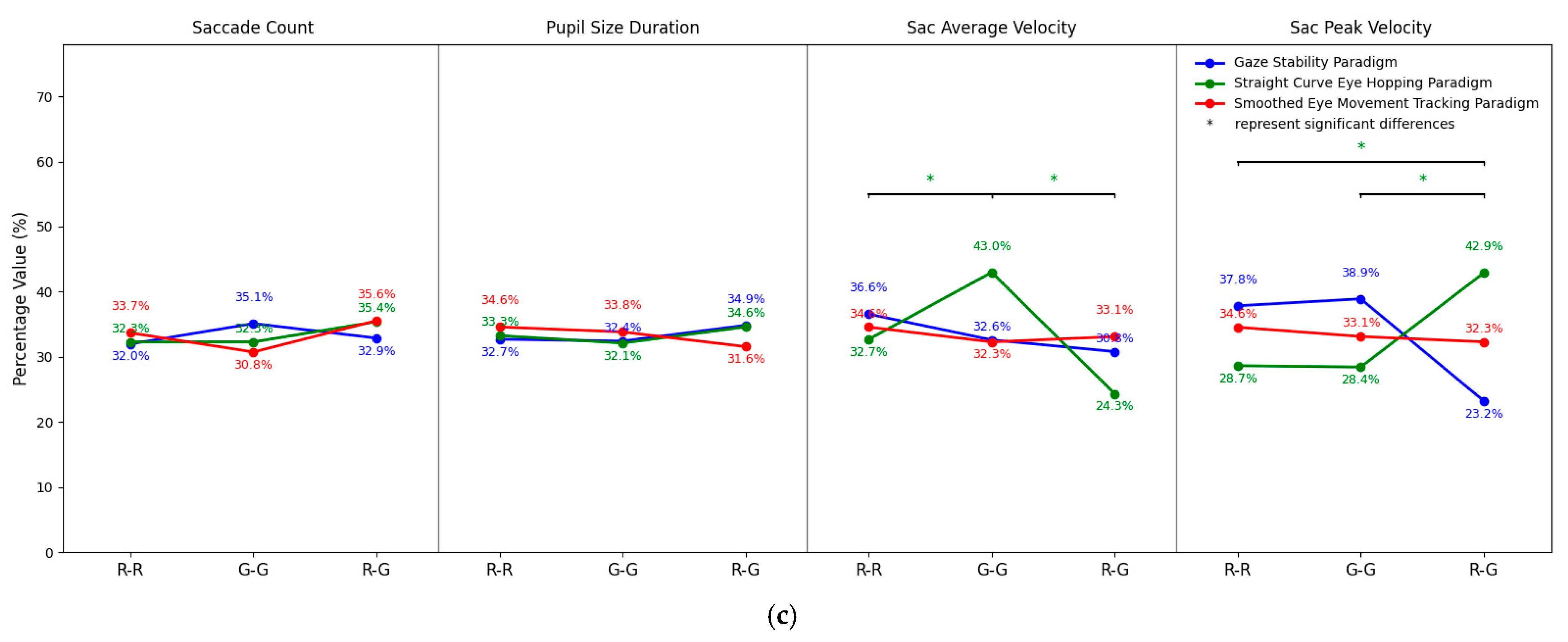

3.4. Results of Cross-Paradigm Merged Analysis

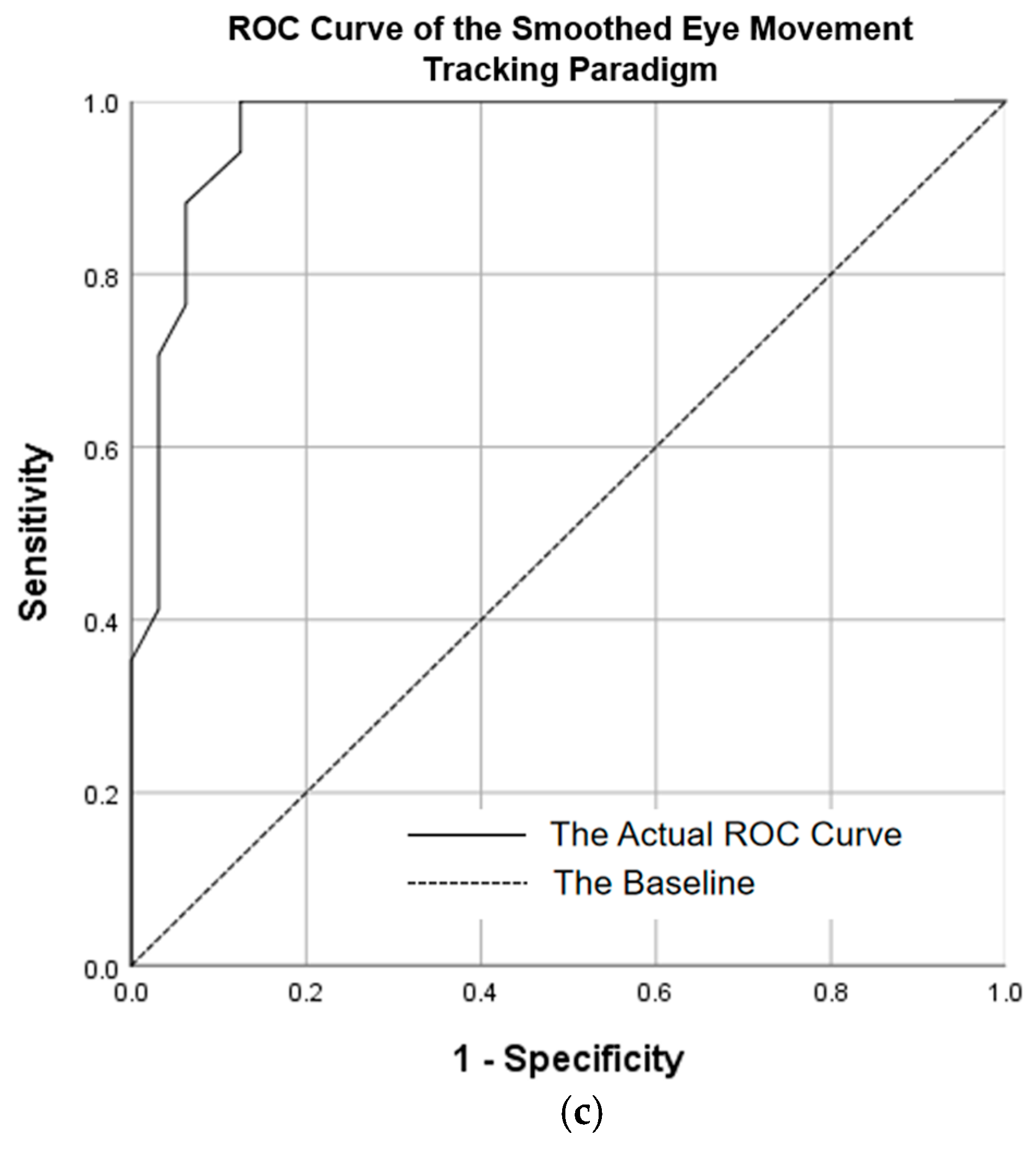

3.5. Results of ROC Curve Analysis

4. Discussion

1. Differences in Eye Movement Indicators
2. Difference Between Fusion and Rivalry States Based on Ranges
3. ROC Analysis and Classification Efficacy

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Total Participants (Non-Myopes/Myopes) | Non-Myopes: Naked-Eye Visual Acuity @ 4 m | Myopes: Naked-Eye Acuity @ 4 m | Myopes: Corrected Acuity @ 4 m | Myopes: Correction (D) | Stereoacuity @ 41 cm (arcsec) |
|---|---|---|---|---|---|
| 18 (11/7) | OS: 20/20; OD: 20/20 | OS: 20/40~20/25 | OS: 20/20 | OS: −1.75~−6.00 | 41~55 |
| | | OD: 20/40~20/25 | OD: 20/20 | OD: −2.75~−6.00 | |

| Indicators | Eye | Mean | SD | t | p | Cohen’s d |
|---|---|---|---|---|---|---|
| Acceleration X | Left | −10.235 | 1150.613 | 0.081 | 0.935 | 0.004 |
| | Right | −44.346 | 1233.721 | | | |
| Acceleration Y | Left | 9.048 | 520.388 | 0.337 | 0.736 | 0.015 |
| | Right | −32.468 | 3865.833 | | | |
| Gaze X | Left | 251.948 | 78.303 | 37.77 | 0.000 | 1.689 |
| | Right | 60.968 | 139.41 | | | |
| Gaze Y | Left | 377.523 | 5.743 | 107.402 | 0.000 | 4.803 |
| | Right | 787.018 | 120.432 | | | |
| Pupil Size | Left | 3799.668 | 839.031 | 9.425 | 0.000 | 0.422 |
| | Right | 3447.723 | 830.9 | | | |
| Velocity X | Left | 0.35 | 7.997 | 0.136 | 0.892 | 0.006 |
| | Right | 0.178 | 39.272 | | | |
| Velocity Y | Left | 0.363 | 3.82 | 1.225 | 0.221 | 0.055 |
| | Right | −0.131 | 12.183 | | | |
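The Cohen’s d column in the interocular table above can be roughly checked from the reported means and SDs alone. The following is a minimal sketch, assuming equal sample sizes for the two eyes and a pooled SD of the form sqrt((SD_left² + SD_right²) / 2); the function name `cohens_d` is illustrative, and the formula is an assumption rather than the authors' stated method. Under that assumption the Gaze X and Gaze Y rows are reproduced; the acceleration rows are not, so those effect sizes may have been derived differently.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d from summary statistics.

    Assumes both groups have the same sample size, so the pooled SD
    reduces to the root mean square of the two SDs (an assumption,
    not a detail confirmed by the source).
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2.0)
    return abs(mean_a - mean_b) / pooled_sd


# Left/right summary values copied from the Gaze X and Gaze Y rows.
gaze_x_d = cohens_d(251.948, 78.303, 60.968, 139.41)
gaze_y_d = cohens_d(377.523, 5.743, 787.018, 120.432)

print(round(gaze_x_d, 3))
print(round(gaze_y_d, 3))
```

Because only summary statistics are used, this check says nothing about the t and p columns, which also depend on the number of samples per eye.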

| Indicators | Eye | Mean | SD | t | p | Cohen’s d |
|---|---|---|---|---|---|---|
| Acceleration X | Left | −21.48 | 1268.941 | −0.545 | 0.586 | 0.023 |
| | Right | 37.983 | 3387.998 | | | |
| Acceleration Y | Left | −4.973 | 1272.723 | 0.895 | 0.371 | 0.038 |
| | Right | −112.16 | 3761.714 | | | |
| Gaze X | Left | 499.159 | 142.151 | −0.515 | 0.606 | 0.022 |
| | Right | 502.14 | 128.92 | | | |
| Gaze Y | Left | 396.257 | 127.343 | −4.794 | 0.000 | 0.204 |
| | Right | 421.58 | 120.324 | | | |
| Pupil Size | Left | 1597.771 | 801.02 | 7.565 | 0.000 | 0.323 |
| | Right | 1378.965 | 527.798 | | | |
| Velocity X | Left | −0.19 | 18.995 | −0.448 | 0.654 | 0.019 |
| | Right | 0.226 | 24.279 | | | |
| Velocity Y | Left | 1.33 | 11.553 | 0.509 | 0.611 | 0.022 |
| | Right | 0.989 | 19.003 | | | |

| Indicators | Eye | Mean | SD | t | p | Cohen’s d |
|---|---|---|---|---|---|---|
| Acceleration X | Left | 14.163 | 497.723 | 0.533 | 0.594 | 0.028 |
| | Right | −6.692 | 1056.822 | | | |
| Acceleration Y | Left | −29.448 | 864.872 | −0.125 | 0.9 | 0.006 |
| | Right | −19.085 | 2365.992 | | | |
| Gaze X | Left | 408.603 | 73.545 | −12.566 | 0.000 | 0.65 |
| | Right | 453.215 | 59.399 | | | |
| Gaze Y | Left | 487.712 | 55.7 | −1.94 | 0.053 | 0.1 |
| | Right | 493.012 | 47.51 | | | |
| Pupil Size | Left | 2725.338 | 672.047 | 22.001 | 0.000 | 1.138 |
| | Right | 1980.695 | 622.701 | | | |
| Velocity X | Left | 0.388 | 3.645 | −0.339 | 0.735 | 0.018 |
| | Right | 0.455 | 4.094 | | | |
| Velocity Y | Left | 0.324 | 7.426 | −0.04 | 0.968 | 0.002 |
| | Right | 0.342 | 10.539 | | | |

| Indicators | Gaze Stability (n = 54): Skewness | Gaze Stability (n = 54): Kurtosis | Gaze Stability (n = 54): S-W Test, W (p) | Smoothed Eye Movement Tracking (n = 108): Skewness | Smoothed Eye Movement Tracking (n = 108): Kurtosis | Smoothed Eye Movement Tracking (n = 108): S-W Test, W (p) | Straight Curve Eye Hopping (n = 54): Skewness | Straight Curve Eye Hopping (n = 54): Kurtosis | Straight Curve Eye Hopping (n = 54): S-W Test, W (p) |
|---|---|---|---|---|---|---|---|---|---|
| Average Blink Duration | 0.92 | −0.61 | 0.78 (0.000) | 1.88 | 2.25 | 0.59 (0.000) | 2.67 | 6.21 | 0.45 (0.000) |
| Average Fixation Duration | 0.54 | −0.31 | 0.95 (0.036) | 2.16 | 8.21 | 0.82 (0.000) | 0.39 | 0.19 | 0.98 (0.439) |
| Average Saccade Amplitude | −0.02 | −1.19 | 0.96 (0.047) | 2.96 | 11.22 | 0.69 (0.000) | 0.19 | 1.04 | 0.96 (0.083) |
| Blink Count | 0.92 | 0.66 | 0.77 (0.000) | 2.37 | 6.76 | 0.59 (0.000) | 2.80 | 7.46 | 0.45 (0.000) |
| Fixation Count | 0.85 | 0.36 | 0.85 (0.000) | 1.14 | 0.97 | 0.82 (0.000) | 0.53 | 0.27 | 0.88 (0.000) |
| Median Fixation Duration | 0.38 | −0.52 | 0.97 (0.181) | 2.03 | 7.14 | 0.83 (0.000) | 0.28 | 0.45 | 0.99 (0.765) |
| Median Saccade Amplitude | 0.001 | −1.14 | 0.96 (0.079) | 3.10 | 11.98 | 0.67 (0.000) | 0.14 | 1.12 | 0.96 (0.054) |
| SD Saccade Amplitude | 0.39 | −1.09 | 0.93 (0.004) | 2.17 | 5.59 | 0.77 (0.000) | 0.33 | 1.31 | 0.92 (0.001) |
| SD Fixation Duration | 1.19 | 1.60 | 0.90 (0.000) | 1.93 | 4.38 | 0.80 (0.000) | 0.18 | 0.26 | 0.95 (0.035) |
| Saccade Count | 0.59 | 0.38 | 0.87 (0.000) | 0.57 | −0.03 | 0.88 (0.000) | 0.04 | 0.67 | 0.85 (0.000) |
| Pupil Size Duration | −0.60 | 0.65 | 0.97 (0.152) | 0.52 | 1.43 | 0.95 (0.001) | 1.00 | 2.79 | 0.94 (0.009) |
| Sac Avg Velocity | 1.11 | 1.62 | 0.91 (0.001) | 0.01 | −1.21 | 0.95 (0.001) | 1.15 | 2.97 | 0.93 (0.003) |
| Sac Peak Velocity | 1.47 | 1.66 | 0.82 (0.000) | 2.28 | 4.07 | 0.52 (0.000) | 2.95 | 9.80 | 0.64 (0.000) |
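The normality results above appear to decide which test each indicator receives later in this appendix: indicators whose Shapiro-Wilk p-value exceeds 0.05 show up in a t-test table, and the rest in a Mann–Whitney U table. The sketch below illustrates that routing for the Straight Curve Eye Hopping column. The 0.05 threshold and the routing rule itself are inferred from the tables rather than stated in this section, and `split_by_normality` is a hypothetical helper name.

```python
ALPHA = 0.05  # assumed significance level; not stated in this section

# Shapiro-Wilk p-values copied from the Straight Curve Eye Hopping column.
sw_p_straight_curve = {
    "Average Blink Duration": 0.000,
    "Average Fixation Duration": 0.439,
    "Average Saccade Amplitude": 0.083,
    "Blink Count": 0.000,
    "Fixation Count": 0.000,
    "Median Fixation Duration": 0.765,
    "Median Saccade Amplitude": 0.054,
    "SD Saccade Amplitude": 0.001,
    "SD Fixation Duration": 0.035,
    "Saccade Count": 0.000,
    "Pupil Size Duration": 0.009,
    "Sac Avg Velocity": 0.003,
    "Sac Peak Velocity": 0.000,
}


def split_by_normality(p_values, alpha=ALPHA):
    """Route indicators to a parametric or non-parametric test.

    p > alpha: normality is not rejected, so a t-test is a candidate.
    p <= alpha: normality is rejected, so a rank-based test is used.
    """
    parametric = [name for name, p in p_values.items() if p > alpha]
    nonparametric = [name for name, p in p_values.items() if p <= alpha]
    return parametric, nonparametric


parametric, nonparametric = split_by_normality(sw_p_straight_curve)
print("t-test candidates:", parametric)
print("Mann-Whitney U candidates:", nonparametric)
```

With these inputs the four t-test candidates coincide with the four indicators listed in the independent-samples t-test table (df = 34), which is what motivates the inferred rule.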

| Indicators | Exp./Ctr. | Mean | SD | p | Cohen’s d |
|---|---|---|---|---|---|
| Median Fixation Duration (ms) | R-G/R-R | 244.9/254.1 | 105.7/135.1 | 0.823 | 0.1 |
| | R-G/G-G | 244.9/220.7 | 105.7/101.2 | 0.547 | 0.2 |
| | R-R/G-G | 254.1/220.7 | 135.1/101.2 | 0.408 | 0.3 |
| Median Saccade Amplitude (°) | R-G/R-R | 9.4/23.1 | 5.1/7.1 | 0.000 | 0.5 |
| | R-G/G-G | 9.4/36.4 | 5.1/6.9 | 0.000 | 0.5 |
| | R-R/G-G | 23.1/36.4 | 7.1/6.9 | 0.000 | 0.1 |
| Pupil Size Duration (ms) | R-G/R-R | 4773.0/4479.9 | 1418.3/767.2 | 0.476 | 0.3 |
| | R-G/G-G | 4773.0/4439.9 | 1418.3/1180.1 | 0.412 | 0.3 |
| | R-R/G-G | 4479.9/4439.9 | 767.2/1180.1 | 0.921 | 0.0 |

| Indicators | Exp./Ctr. | Mean | SD | p | Cohen’s d |
|---|---|---|---|---|---|
| Average Blink Duration (ms) | R-G/R-R | 1.0/9.5 | 1.6/6.3 | 0.002 | 1.451 |
| | R-G/G-G | 1.0/19.5 | 1.6/11.5 | 0.009 | 1.427 |
| | R-R/G-G | 9.5/19.5 | 6.3/11.5 | 0.145 | 0.546 |
| Average Fixation Duration (ms) | R-G/R-R | 199.4/260.5 | 120.5/118.8 | 0.752 | 0.155 |
| | R-G/G-G | 199.4/243.0 | 120.5/114.7 | 0.669 | 0.071 |
| | R-R/G-G | 260.5/243.0 | 118.8/114.7 | 0.874 | 0.087 |
| Average Saccade Amplitude (°) | R-G/R-R | 9.5/26.5 | 5.3/6.8 | 0.000 | 2.681 |
| | R-G/G-G | 9.5/38.5 | 5.3/10.4 | 0.000 | 3.230 |
| | R-R/G-G | 26.5/38.5 | 6.8/10.4 | 0.000 | 1.167 |
| Blink Count (times) | R-G/R-R | 0.0/1.0 | 0.6/0.9 | 0.035 | 0.739 |
| | R-G/G-G | 0.0/1.0 | 0.6/0.6 | 0.128 | 0.462 |
| | R-R/G-G | 1.0/1.0 | 0.9/0.6 | 0.375 | 0.372 |
| Fixation Count (times) | R-G/R-R | 2.0/2.0 | 1.2/0.9 | 0.495 | 0.101 |
| | R-G/G-G | 2.0/3.0 | 1.2/1.1 | 0.261 | 0.281 |
| | R-R/G-G | 2.0/3.0 | 0.9/1.1 | 0.477 | 0.216 |
| SD Saccade Amplitude (°) | R-G/R-R | 6.5/19.5 | 4.1/7.8 | 0.000 | 1.662 |
| | R-G/G-G | 6.5/30.5 | 4.1/13.1 | 0.001 | 1.760 |
| | R-R/G-G | 19.5/30.5 | 7.8/13.1 | 0.038 | 0.629 |
| SD Fixation Duration (ms) | R-G/R-R | 79.2/89.9 | 100.7/88.7 | 0.183 | 0.331 |
| | R-G/G-G | 79.2/90.7 | 100.7/71.3 | 0.516 | 0.044 |
| | R-R/G-G | 89.9/90.7 | 88.7/71.3 | 0.429 | 0.343 |
| Saccade Count (times) | R-G/R-R | 2.0/2.0 | 1.2/0.8 | 0.402 | 0.159 |
| | R-G/G-G | 2.0/2.0 | 1.2/0.9 | 0.227 | 0.306 |
| | R-R/G-G | 2.0/2.0 | 0.8/0.9 | 0.564 | 0.190 |
| Sac Avg Velocity (°/s) | R-G/R-R | 59.3/79.4 | 28.8/46.7 | 0.402 | 0.435 |
| | R-G/G-G | 59.3/78.2 | 28.8/24.0 | 0.669 | 0.088 |
| | R-R/G-G | 79.4/78.2 | 46.7/24.0 | 0.591 | 0.391 |
| Sac Peak Velocity (°/s) | R-G/R-R | 139.4/126.8 | 177.3/183.1 | 0.862 | 0.180 |
| | R-G/G-G | 139.4/187.9 | 177.3/213.1 | 0.150 | 0.418 |
| | R-R/G-G | 126.8/187.9 | 183.1/213.1 | 0.393 | 0.249 |

Independent Samples t-Test Result (df = 34)

| Indicators | Exp./Ctr. | Mean | SD | p | t | Cohen’s d |
|---|---|---|---|---|---|---|
| Average Fixation Duration (ms) | R-G/R-R | 260.6/283.1 | 83.8/94.2 | 0.461 | −0.7 | 0.3 |
| | R-G/G-G | 260.6/265.7 | 83.8/86.2 | 0.870 | −0.2 | 0.1 |
| | R-R/G-G | 283.1/265.7 | 94.2/86.2 | 0.581 | −9.6 | 3.4 |
| Average Saccade Amplitude (°) | R-G/R-R | 9.5/21.6 | 4.9/6.0 | 0.000 | −6.4 | 2.2 |
| | R-G/G-G | 9.5/36.7 | 4.9/7.8 | 0.000 | −9.4 | 3.3 |
| | R-R/G-G | 21.6/36.7 | 6.0/7.8 | 0.000 | −0.1 | 0.0 |
| Median Fixation Duration (ms) | R-G/R-R | 251.9/280.9 | 98.6/100.4 | 0.390 | −0.8 | 0.3 |
| | R-G/G-G | 251.9/263.5 | 98.6/91.9 | 0.738 | −0.3 | 0.1 |
| | R-R/G-G | 280.9/263.5 | 100.4/91.9 | 0.617 | 0.5 | 0.2 |
| Median Saccade Amplitude (°) | R-G/R-R | 9.8/21.5 | 5.3/8.0 | 0.000 | −6.2 | 2.1 |
| | R-G/G-G | 9.8/37.7 | 5.3/7.8 | 0.000 | −9.4 | 3.3 |
| | R-R/G-G | 21.5/37.7 | 8.0/7.8 | 0.000 | −0.2 | 0.1 |

Independent Sample Mann–Whitney U Test Result

| Indicators | Exp./Ctr. | Median | SD | p | Cohen’s d |
|---|---|---|---|---|---|
| Average Blink Duration (ms) | R-G/R-R | 1.0/1.0 | 0.5/1.6 | 0.428 | 0.528 |
| | R-G/G-G | 1.0/1.0 | 0.5/3.3 | 0.284 | 0.644 |
| | R-R/G-G | 1.0/1.0 | 1.6/3.3 | 0.492 | 0.391 |
| Blink Count (times) | R-G/R-R | 0.0/0.0 | 0.8/0.4 | 0.172 | 0.752 |
| | R-G/G-G | 0.0/0.0 | 0.8/0.9 | 0.435 | 0.133 |
| | R-R/G-G | 0.0/0.0 | 0.4/0.9 | 0.582 | 0.337 |
| Fixation Count (times) | R-G/R-R | 2.5/2.0 | 1.2/0.7 | 0.349 | 0.403 |
| | R-G/G-G | 2.5/2.0 | 1.2/0.8 | 0.331 | 0.391 |
| | R-R/G-G | 2.0/2.0 | 0.7/0.8 | 0.875 | 0.000 |
| SD Saccade Amplitude (°) | R-G/R-R | 8.5/20.5 | 4.5/8.3 | 0.001 | 1.493 |
| | R-G/G-G | 8.5/33.5 | 4.5/12.4 | 0.000 | 2.203 |
| | R-R/G-G | 20.5/33.5 | 8.3/12.4 | 0.002 | 0.996 |
| SD Fixation Duration (ms) | R-G/R-R | 102.5/129.9 | 61.4/75.6 | 0.168 | 0.489 |
| | R-G/G-G | 102.5/151.3 | 61.4/76.0 | 0.071 | 0.588 |
| | R-R/G-G | 129.9/151.3 | 75.6/76.0 | 0.874 | 0.092 |
| Saccade Count (times) | R-G/R-R | 2.0/2.0 | 1.0/0.7 | 0.683 | 0.127 |
| | R-G/G-G | 2.0/2.0 | 1.0/0.5 | 0.345 | 0.273 |
| | R-R/G-G | 2.0/2.0 | 0.7/0.5 | 0.548 | 0.177 |
| Pupil Size Duration (ms) | R-G/R-R | 3889.5/3843.5 | 1050.6/1334.5 | 0.591 | 0.22 |
| | R-G/G-G | 3889.5/3929.0 | 1050.6/1574.2 | 0.569 | 0.335 |
| | R-R/G-G | 3843.5/3929.0 | 1334.5/1574.2 | 0.849 | 0.126 |
| Sac Avg Velocity (°/s) | R-G/R-R | 53.4/53.6 | 12.7/15.6 | 0.569 | 0.254 |
| | R-G/G-G | 53.4/53.6 | 12.7/24.1 | 0.975 | 0.144 |
| | R-R/G-G | 53.6/53.6 | 15.6/24.1 | 0.704 | 0.041 |
| Sac Peak Velocity (°/s) | R-G/R-R | 71.8/82.0 | 31.0/75.3 | 0.411 | 0.36 |
| | R-G/G-G | 71.8/68.6 | 31.0/69.0 | 0.874 | 0.263 |

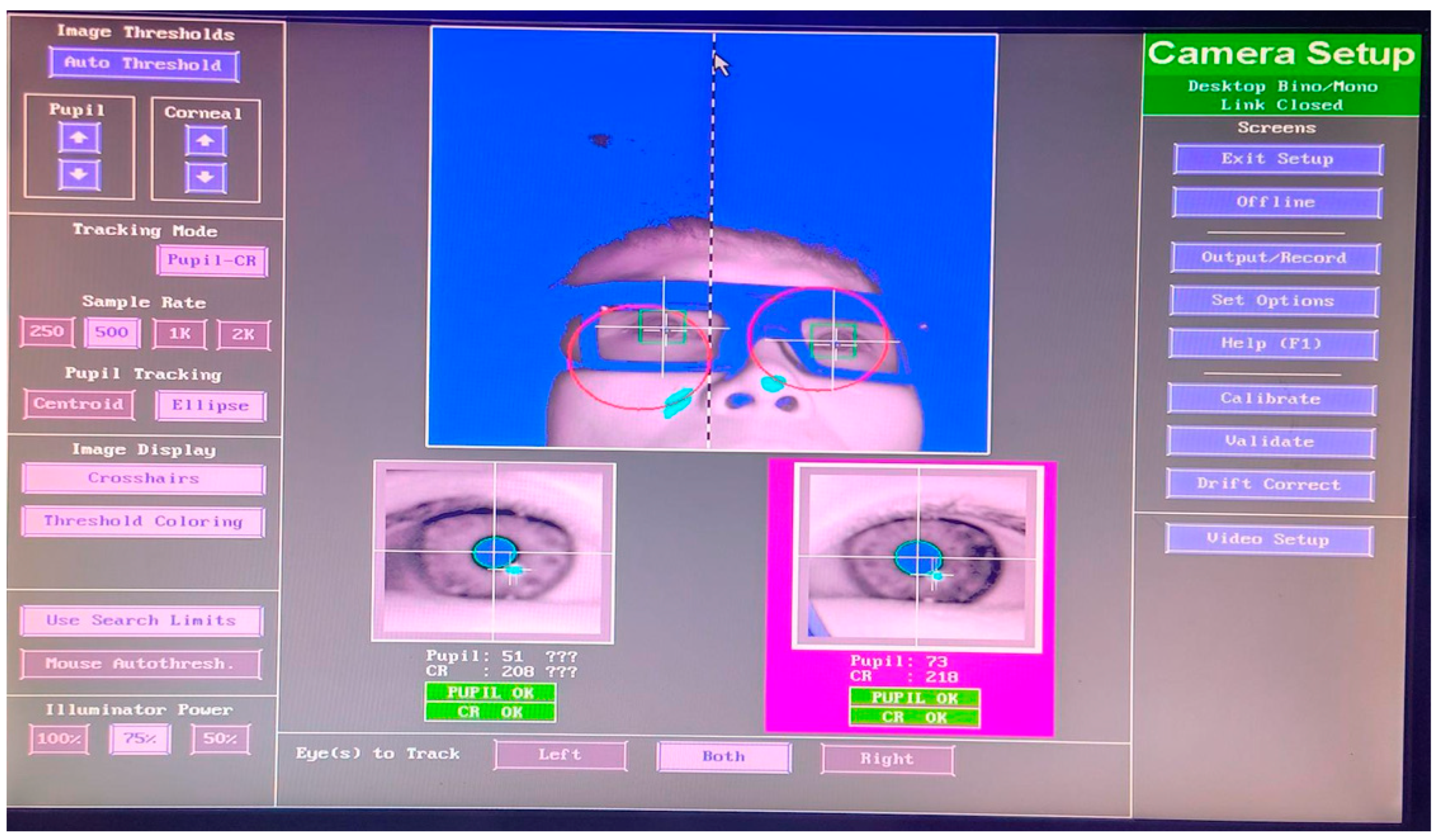

Appendix B. Eye Movement Instrument Calibration Process

- (1) Have the subject complete an informed consent form describing the general procedure.
- (2) Turn off or mute all distracting electronic devices.
- (3) Turn on the power supply, power on the host PC and display PC, and wait for the devices to display the interface normally.
- (4) Adjust the subject’s seat height so that they can comfortably rest their chin on the chin rest and lean their forehead against the forehead rest. Position the captured eye roughly in the center of the display screen, or about one quarter of the way down from the top of the screen.
- (5) On the host PC, click the red circle on the subject’s eye to lock the tracking range, press A to automatically adjust the threshold, and adjust the lens focus so that the eye is clearly visible.
- (6) Have the subject look at the four corners of the monitor, making sure that the pupil and corneal reflection are tracked over the entire surface of the monitor. If the pupil or corneal reflection is lost at the edges of the monitor, make sure the screen is far enough away from the subject. For subjects wearing eyeglasses, the frame can obscure part of the video image of the eye at extreme horizontal or vertical viewing angles; correct this by adjusting the position of the subject’s chin.

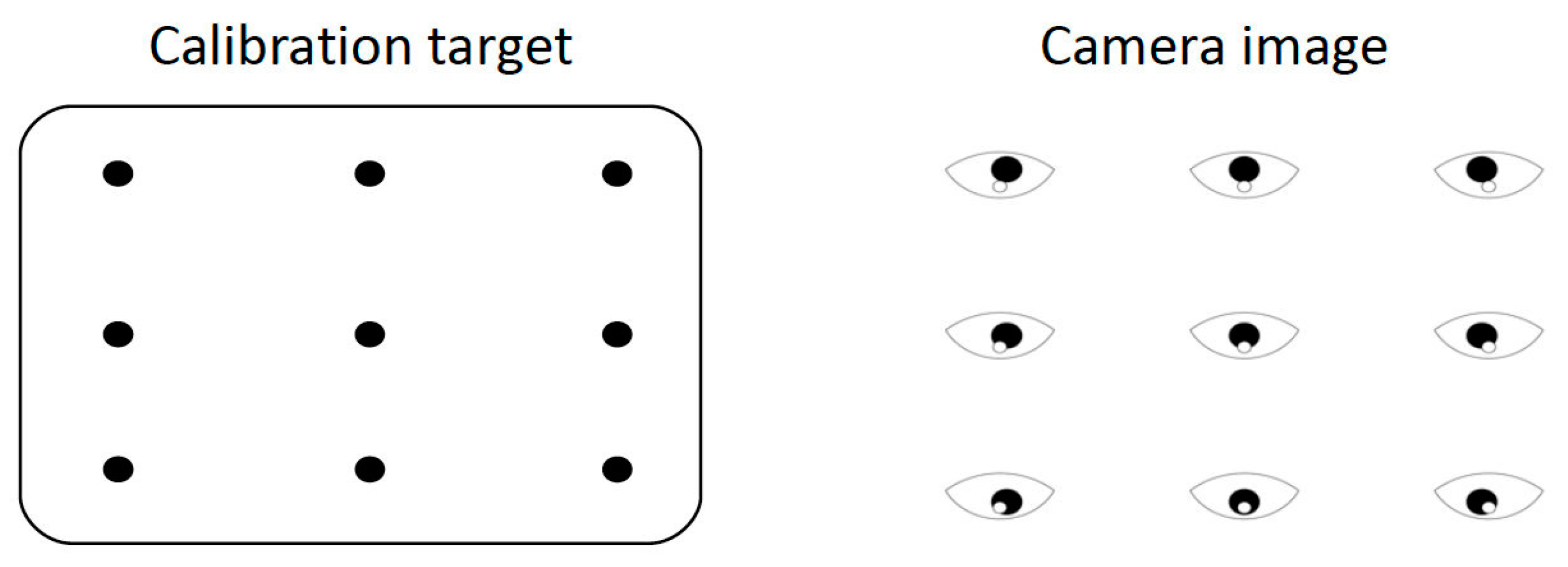

- (7) Calibration sets up the eye tracking software to track eye movements accurately; in this experiment, a nine-point calibration was used to optimize calibration accuracy.
- (8) Confirm that the subject’s eye signal is intact and click the Calibrate button on the host PC interface to begin calibration. During calibration, instruct the subject to look at each point until it disappears and then look at the next point.
- (9) Validate the calibration. Click the Validate button and decide, based on the feedback, whether the calibration is acceptable.
- (10) Start the experiment after an acceptable calibration. Click the Output/Record button to begin presenting the stimulus and recording data. Instruct the subject not to talk while the stimulus is displayed, because talking moves the head up and down on the chin rest and reduces eye tracking accuracy.
- (11) If the subject needs to rest at any time, or the quality of the eye movement data decreases, check the calibration and recalibrate as needed.
- (12) Upon completion of the experiment, check that the eye movement data are present in the data folder.
- (13) Ask the subject whether they feel any discomfort; if not, the subject may leave the laboratory.


| Paradigm | Indicators | Binocular Color Fusion (n = 36): Mean ± SD | Binocular Color Fusion (n = 36): Range | Binocular Color Rivalry (n = 18): Mean ± SD | Binocular Color Rivalry (n = 18): Range |
|---|---|---|---|---|---|
| Gaze Stability | Average Blink Duration | 0.317 ± 0.771 | −0.454~1.088 | −0.654 ± 0.2 | −0.854~−0.454 |
| | Average Saccade Amplitude | 0.525 ± 0.769 | −0.244~1.294 | −1.082 ± 0.389 | −1.471~−0.693 |
| | Median Saccade Amplitude | 0.527 ± 0.759 | −0.232~1.286 | −1.088 ± 0.405 | −1.493~−0.683 |
| | SD Saccade Amplitude | 0.381 ± 0.784 | −0.403~1.165 | −0.785 ± 0.382 | −1.167~−0.403 |
| Straight Curve Eye Hopping | Average Saccade Amplitude | −1.412 ± 0.507 | −1.919~−0.905 | 0.397 ± 1.302 | −0.905~1.700 |
| | Median Saccade Amplitude | −1.455 ± 0.525 | −1.980~−0.950 | 0.33 ± 1.28 | −0.950~1.610 |
| | SD Saccade Amplitude | −1.368 ± 0.541 | −1.909~−0.827 | 0.359 ± 1.186 | −0.827~1.545 |
| | Sac Avg Velocity | 0.747 ± 0.371 | 0.376~1.118 | −0.509 ± 0.895 | −1.404~0.376 |
| | Sac Peak Velocity | −0.760 ± 0.076 | −0.836~−0.684 | 0.816 ± 1.492 | −0.676~2.308 |
| Smoothed Eye Movement Tracking | Average Saccade Amplitude | 1.421 ± 0.813 | 0.608~2.234 | −1 ± 0.392 | −1.392~−0.608 |
| | Median Saccade Amplitude | 0.507 ± 0.855 | −0.348~1.362 | −0.955 ± 0.403 | −1.358~−0.552 |
| | SD Saccade Amplitude | 0.527 ± 0.971 | −0.444~1.498 | −0.82 ± 0.376 | −1.196~−0.444 |
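The Range columns in the table above are consistent with mean − SD to mean + SD computed on z-scored indicators. A minimal sketch of both steps follows, using only the Python standard library. The helper names `z_scores` and `mean_sd_range` are illustrative, and the use of the sample standard deviation for normalization is an assumption, since this section does not say which variant was used.

```python
import statistics


def z_scores(values):
    """Z-score normalization: subtract the mean, divide by the SD.

    Uses the sample standard deviation (n - 1 denominator); this is
    an assumption, not a detail confirmed by the source.
    """
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def mean_sd_range(mean, sd, ndigits=3):
    """Return the (mean - SD, mean + SD) interval, rounded for display."""
    return (round(mean - sd, ndigits), round(mean + sd, ndigits))


# Check against the Gaze Stability / Average Blink Duration fusion row.
fusion_range = mean_sd_range(0.317, 0.771)
print(fusion_range)

# Normalizing any sample yields mean 0 and (sample) SD 1.
z = z_scores([1.0, 2.0, 3.0, 4.0, 5.0])
print(z)
```

Defining the range as one SD around the mean keeps the interval narrow enough for fusion and rivalry ranges to separate, at the cost of excluding roughly a third of observations if the z-scores are approximately normal.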

| Indicators | Binocular Color Fusion (n = 36): Mean ± SD | Binocular Color Fusion (n = 36): Range | Binocular Color Rivalry (n = 18): Mean ± SD | Binocular Color Rivalry (n = 18): Range |
|---|---|---|---|---|
| Average Saccade Amplitude | 0.951 ± 0.343 | 0.608~1.294 | −0.799 ± 0.106 | −0.905~−0.693 |
| Median Saccade Amplitude | 0.527 ± 0.759 | −0.232~1.286 | −0.817 ± 0.134 | −0.950~−0.683 |
| SD Saccade Amplitude | 0.381 ± 0.784 | −0.403~1.165 | −0.636 ± 0.192 | −0.827~−0.444 |

| Indicators | Average Saccade Amplitude | Median Saccade Amplitude | SD Saccade Amplitude |
|---|---|---|---|
| Average Saccade Amplitude | 1 (0.000) | 0.95 (0.000) | 0.54 (0.000) |
| Median Saccade Amplitude | 0.95 (0.000) | 1 (0.000) | 0.52 (0.000) |
| SD Saccade Amplitude | 0.54 (0.000) | 0.52 (0.000) | 1 (0.000) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Dai, M.; Cheng, F.; Yun, L.; Chen, Z. Eye Movement Indicator Difference Based on Binocular Color Fusion and Rivalry. J. Eye Mov. Res. 2025, 18, 10. https://doi.org/10.3390/jemr18020010
