Primacy of Mouth over Eyes to Perceive Audiovisual Mandarin Lexical Tones

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Design and Materials

2.3. Procedure

3. Results

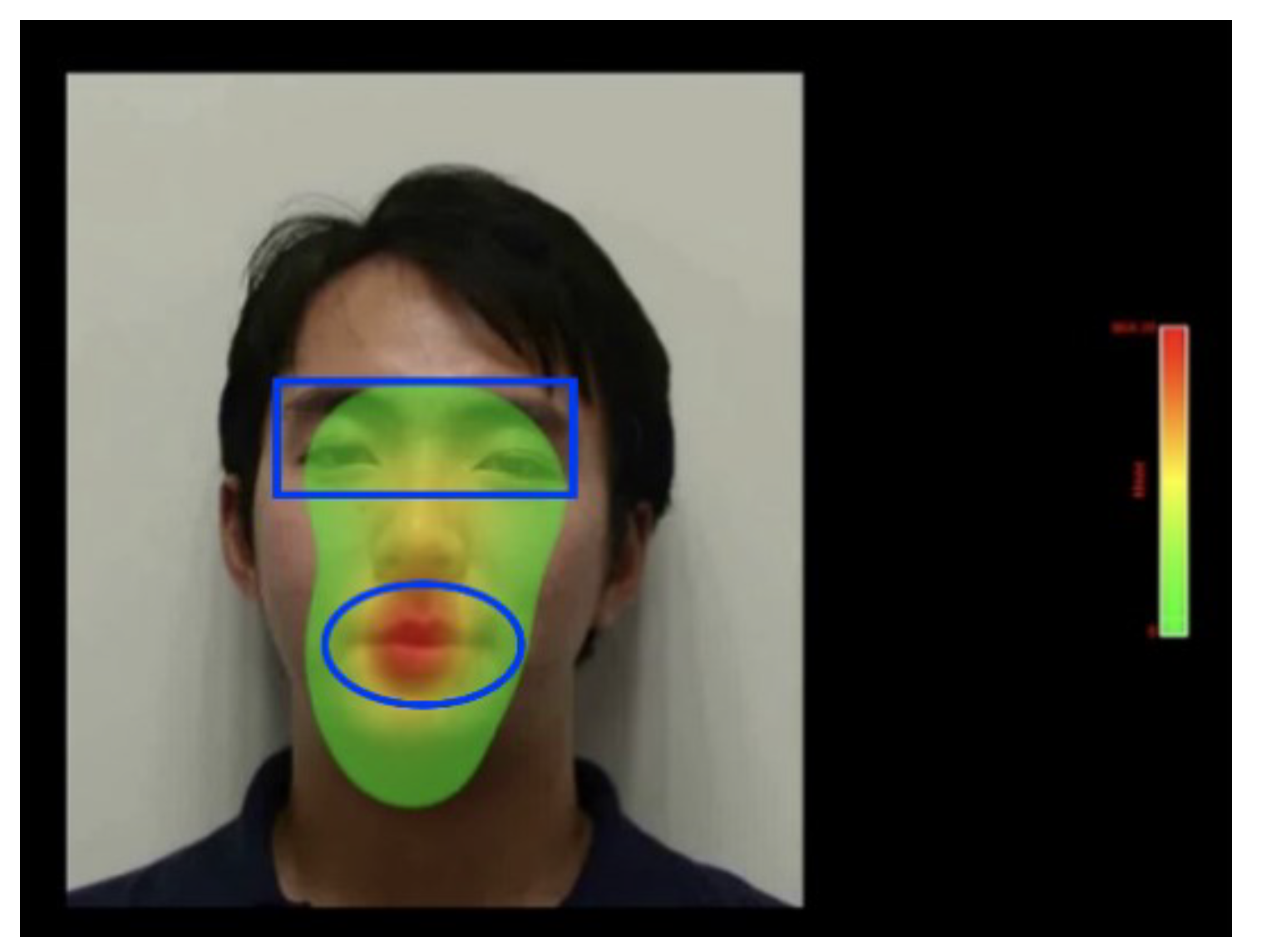

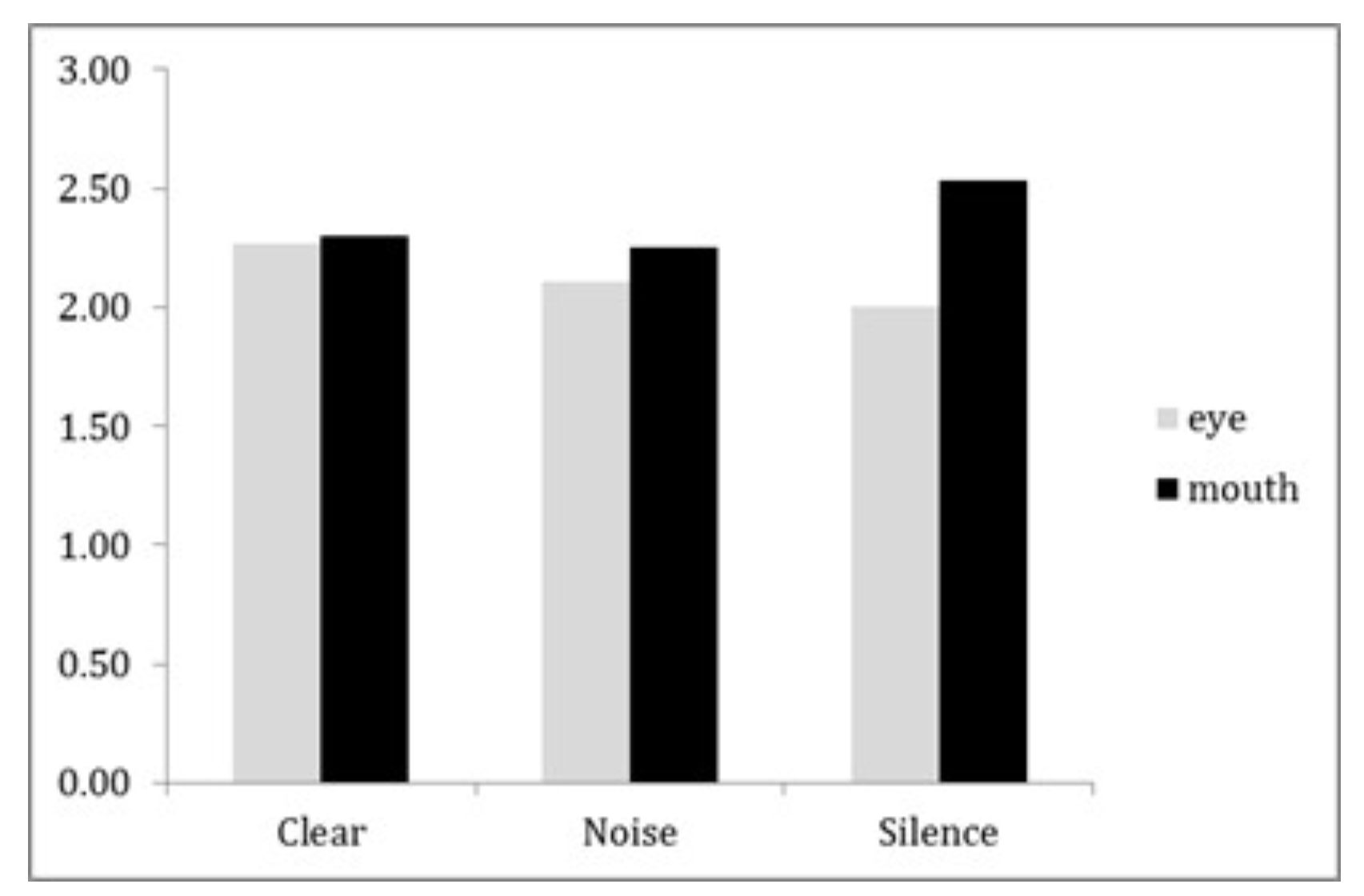

3.1. Fixations

3.2. Gaze Duration

4. Discussion

Ethics and Conflict of Interest

Acknowledgments


| Condition | Native Mandarin Mean | Native Mandarin SD | Native English Mean | Native English SD |
|---|---|---|---|---|
| Clear | 0.98 | 0.02 | 0.96 | 0.05 |
| Noisy | 0.76 | 0.08 | 0.69 | 0.13 |
| Silent | 0.54 | 0.07 | 0.56 | 0.07 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

This article is licensed under a Creative Commons Attribution 4.0 International license (https://creativecommons.org/licenses/by/4.0/).

Zeng, B.; Yu, G.; Hasshim, N.; Hong, S. Primacy of Mouth over Eyes to Perceive Audiovisual Mandarin Lexical Tones. J. Eye Mov. Res. 2023, 16, 1-12. https://doi.org/10.16910/jemr.16.4.4