Pun Processing in Advertising Posters: Evidence from Eye Tracking

Abstract

Introduction

Background

Image–text relations in a polycode text

A pun as a way to construct a polycode advertising poster

Eye-tracking as a method to study the processing of polycode texts

Research questions

Material and Methods

Hypothesis

Participants

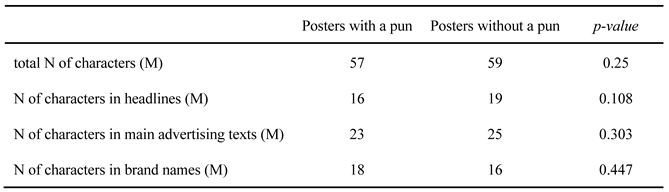

Material

Procedure

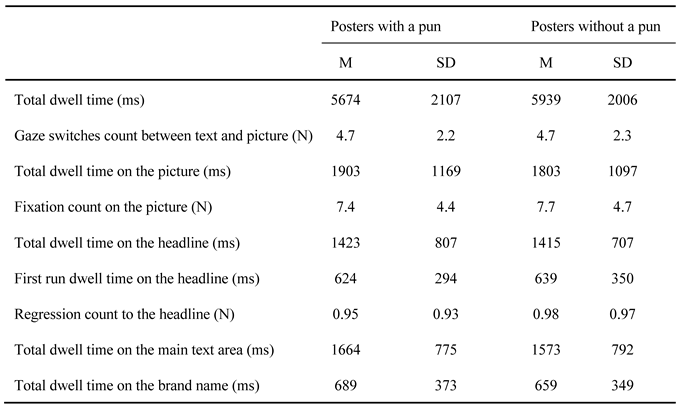

Results

Discussion

Conclusion

- It is advisable to incorporate puns into advertising posters, as they serve several purposes. First, ambiguity in the slogan encourages viewers to examine the main text area (the area where the primary advertising text is located) more thoroughly. Second, puns make the poster more recognizable, which leads to a more favorable subjective assessment and increases the likelihood that the poster attracts visual attention.

- When an advertising poster contains only one image element, that element need not be a portrait or an image of a person. The present results indicate no significant difference in how viewers look at posters featuring faces and posters featuring other visual elements.

- When constructing advertising posters, it is advisable to give extra consideration to the textual component, particularly when formulating the title. The title is attended to first and revisited later, contrary to the conventional belief that the visual processing of a multimodal text begins with the image.
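The recommendations above rest on area-of-interest (AOI) measures: which AOI is fixated first, how long gaze dwells in it, and how often it is revisited. The following is a minimal sketch of one common way to derive these measures from a single trial's fixation sequence. It assumes fixations have already been mapped to AOI labels; the labels (`title`, `image`, `main_text`), the `Fixation` type, the `aoi_metrics` function, the visit definition (a visit starts whenever gaze enters an AOI from elsewhere), and the sample trial are all hypothetical illustrations, not the authors' operationalization or data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fixation:
    aoi: Optional[str]   # hypothetical AOI label hit by the fixation; None = outside every AOI
    duration_ms: float


def aoi_metrics(fixations):
    """Return entry order, dwell time, visits and revisits per AOI for one trial (illustrative)."""
    metrics = {}
    previous = None
    for fix in fixations:
        if fix.aoi is None:
            previous = None  # gaze left all AOIs; the next AOI hit starts a new visit
            continue
        if fix.aoi not in metrics:
            # Entry order: 1 for the first AOI fixated, 2 for the second new AOI, and so on
            metrics[fix.aoi] = {"entry_order": len(metrics) + 1, "dwell_ms": 0.0, "visits": 0}
        m = metrics[fix.aoi]
        m["dwell_ms"] += fix.duration_ms  # total dwell time = sum of fixation durations in the AOI
        if fix.aoi != previous:
            m["visits"] += 1  # gaze has just entered this AOI
        previous = fix.aoi
    for m in metrics.values():
        m["revisits"] = m["visits"] - 1  # every visit after the first is a revisit
    return metrics


# Hypothetical trial: title first, then the image, back to the title, then the main text.
trial = [
    Fixation("title", 210), Fixation("title", 180),
    Fixation("image", 250),
    Fixation("title", 150),
    Fixation(None, 90),
    Fixation("main_text", 230), Fixation("main_text", 200),
]
result = aoi_metrics(trial)
print(result["title"])  # → {'entry_order': 1, 'dwell_ms': 540.0, 'visits': 2, 'revisits': 1}
```

In this made-up trial the title is entered first and revisited once, which is the pattern the third recommendation describes; aggregating such per-trial values across participants and conditions would be a separate statistical step.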

Ethics and Conflict of Interest

Acknowledgments

© 2023 by the authors (Konovalova, A. & Petrova, T.). This article is licensed under a Creative Commons Attribution 4.0 International License.

Konovalova, A.; Petrova, T. Pun Processing in Advertising Posters: Evidence from Eye Tracking. J. Eye Mov. Res. 2023, 16, 1-17. https://doi.org/10.16910/jemr.16.3.5