Eye-Hand Coordination Patterns of Intermediate and Novice Surgeons in a Simulation-Based Endoscopic Surgery Training Environment

Abstract

Introduction

Methods

Participants

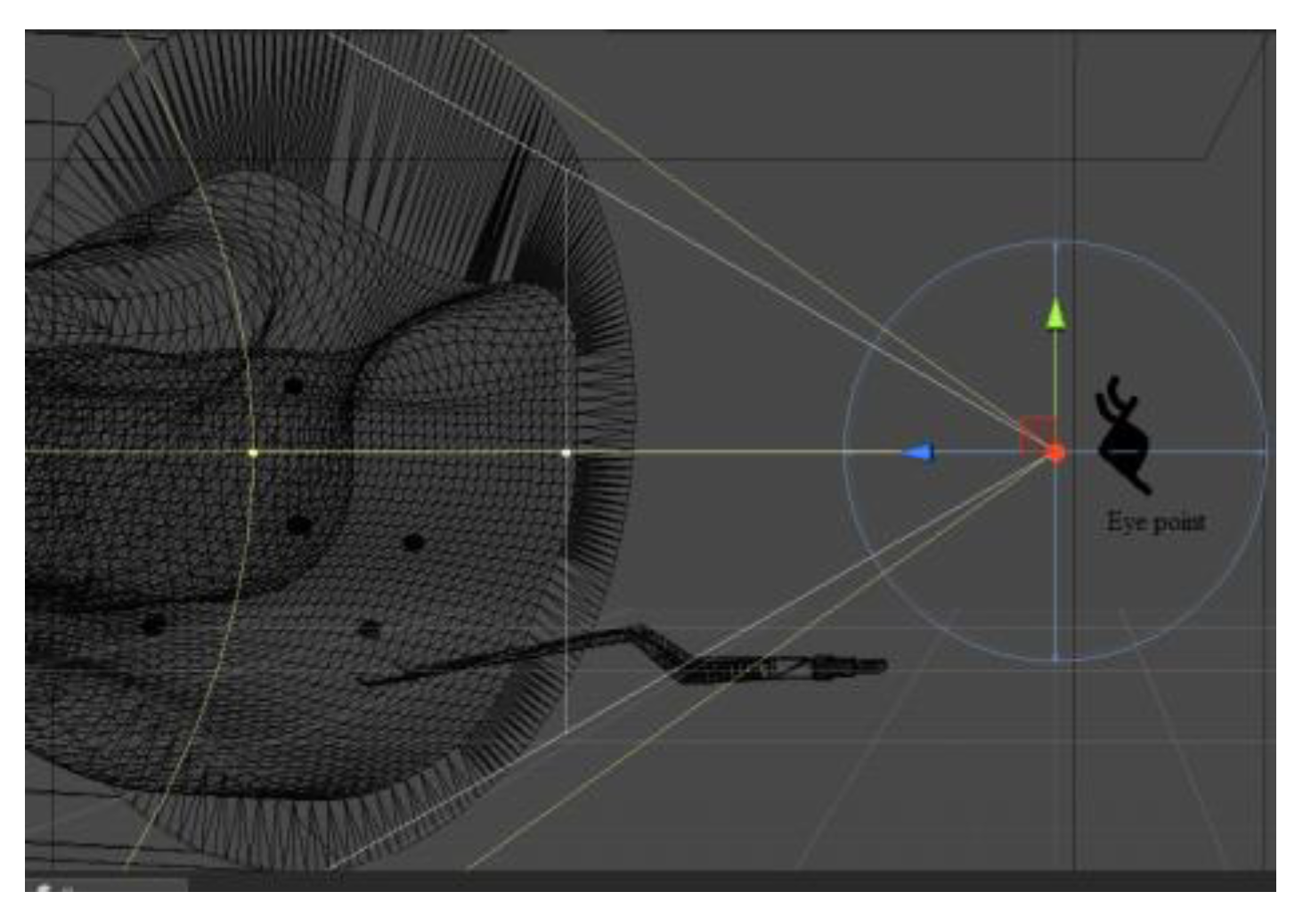

Apparatus

Design

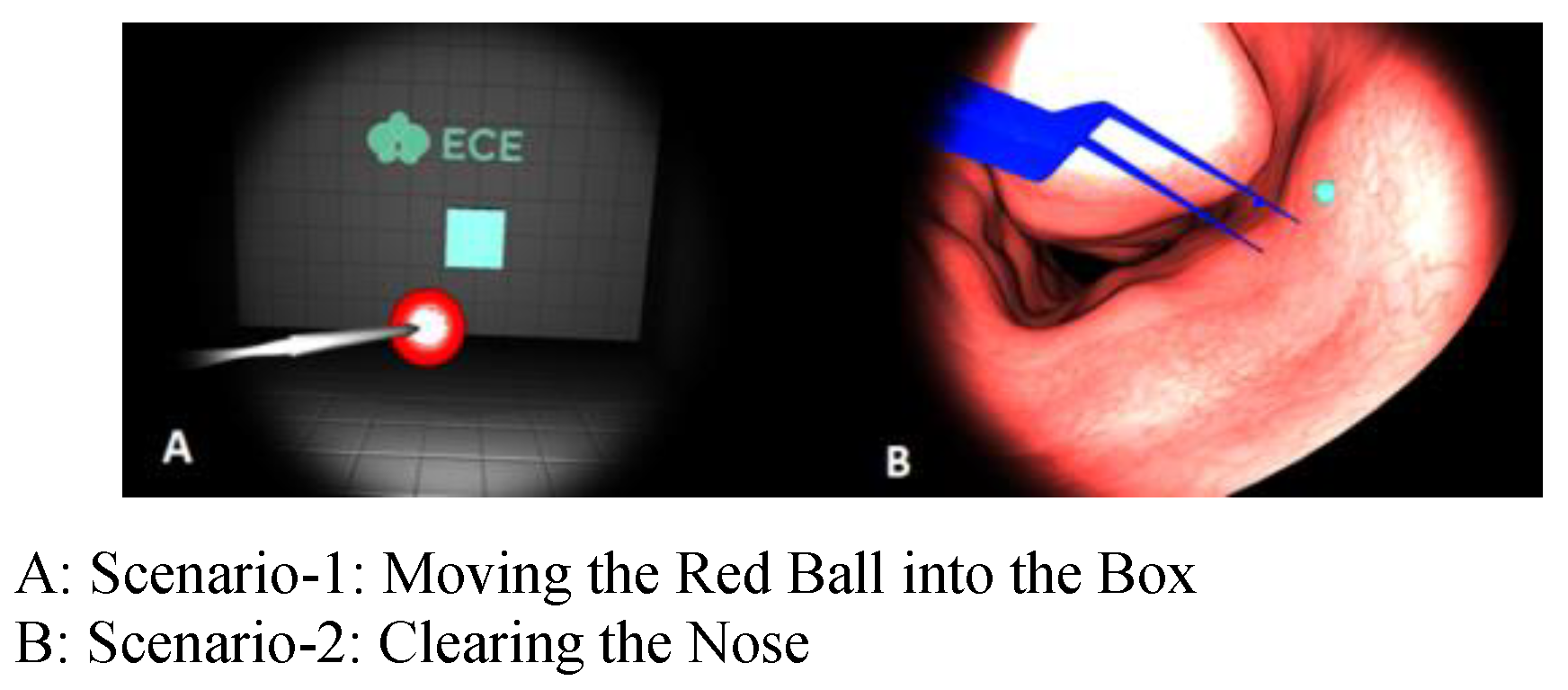

‘Moving the Red Ball into the Box’ Scenario

‘Clearing the Nose’ Scenario

Procedure

Metrics

Results

Eye-Hand Correlation Results for Scenario-1

Eye-Hand Correlation for Intermediates

Eye-Hand Correlation for Novices

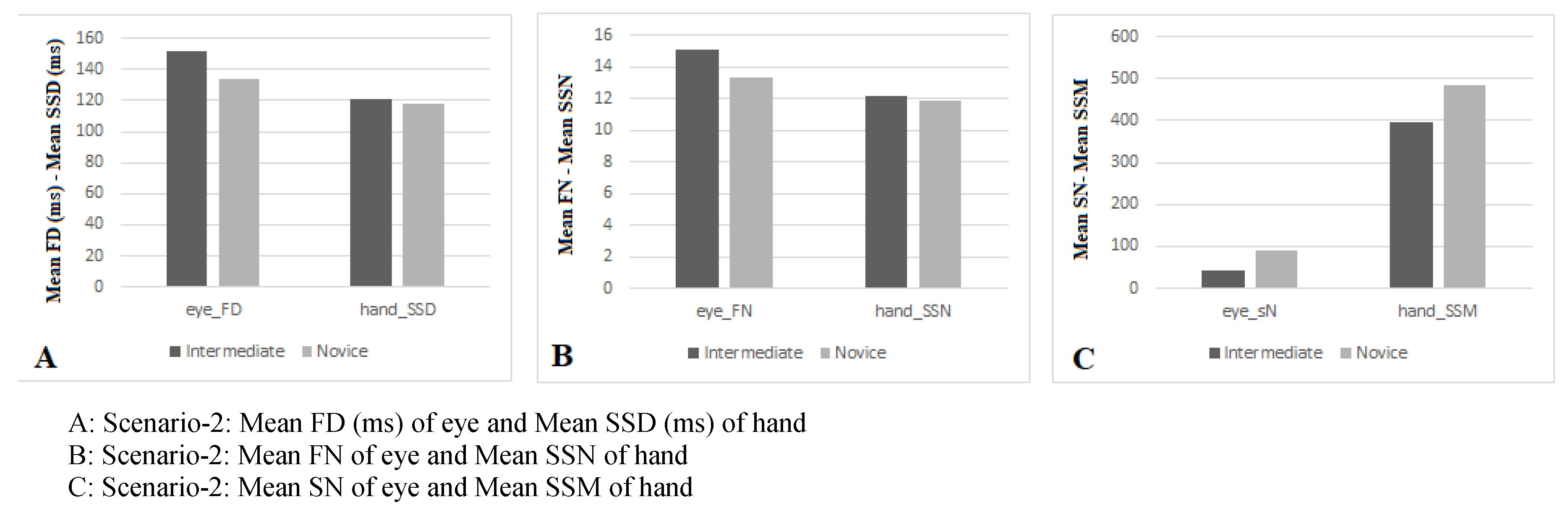

Eye-Hand Correlation Results for Scenario-2

Eye-Hand Correlation for Intermediates

Eye-Hand Correlation for Novices

Analyzing the Questionnaire Data

Discussion

Conclusion

Limitations and Future Work

Ethics and Conflict of Interest

Acknowledgements


| Skill Level | Age | Department: NRS | Department: ENT | Gender: F | Gender: M |
|---|---|---|---|---|---|
| Intermediate | 28.4 | 1 | 5 | 1 | 4 |
| Novice | 25.6 | 4 | 5 | 1 | 9 |

NRS: Neurosurgery; ENT: Ear Nose Throat.

| Skill Level | Average number of endoscopic surgeries observed | Average number of endoscopic surgeries assisted | Average number of endoscopic surgeries performed |
|---|---|---|---|
| Intermediate | 52.0 | 39.6 | 23.8 |
| Novice | 8.2 | 1.0 | 0.0 |

| Type | Metric | Intermediate M | Intermediate SD | Novice M | Novice SD |
|---|---|---|---|---|---|
| Eye | FD | 121.53 | 19.05 | 112.70 | 26.55 |
| Eye | FN | 12.14 | 1.91 | 11.26 | 2.66 |
| Eye | SN | 20.80 | 13.46 | 28.60 | 32.46 |
| Hand | SSD | 92.99 | 10.25 | 101.98 | 15.03 |
| Hand | SSN | 9.30 | 1.01 | 10.19 | 1.51 |
| Hand | SSM | 55.80 | 37.48 | 55.10 | 15.82 |

| Skill Level | FD - SSD | FN - SSN | SN - SSM |
|---|---|---|---|
| Intermediate | -.836 Strong- | -.837 Strong- | .755 Strong+ |
| Novice | .448 Moderate+ | .448 Moderate+ | .590 Strong+ |
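The strength and direction labels attached to each coefficient in the table above can be reproduced with a small helper. This is only a sketch: the cutoffs of 0.3 and 0.5 are an assumption, chosen because they reproduce every label in the table, and the function name `label_correlation` is hypothetical.

```python
def label_correlation(r: float) -> str:
    """Return a label such as 'Strong-' or 'Moderate+' for a correlation r.

    The cutoffs (0.3 and 0.5) are assumed, not taken from the article.
    """
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie in [-1, 1]")
    magnitude = abs(r)
    if magnitude >= 0.5:
        strength = "Strong"
    elif magnitude >= 0.3:
        strength = "Moderate"
    else:
        strength = "Small"
    # The trailing sign marks the direction of the relationship.
    sign = "-" if r < 0 else "+"
    return f"{strength}{sign}"


# Coefficients copied from the table above.
coefficients = {
    ("Intermediate", "FD - SSD"): -0.836,
    ("Intermediate", "FN - SSN"): -0.837,
    ("Intermediate", "SN - SSM"): 0.755,
    ("Novice", "FD - SSD"): 0.448,
    ("Novice", "FN - SSN"): 0.448,
    ("Novice", "SN - SSM"): 0.590,
}

for (group, pair), r in coefficients.items():
    print(f"{group:12s} {pair:9s} {r:+.3f} {label_correlation(r)}")
```

Under these assumed cutoffs, the intermediate group's fixation-related pairs come out as strong negative relationships, while the novice group's corresponding pairs are moderate and positive.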

| Type | Metric | Intermediate M | Intermediate SD | Novice M | Novice SD |
|---|---|---|---|---|---|
| Eye | FD | 151.25 | 30.48 | 133.42 | 42.49 |
| Eye | FN | 15.11 | 3.04 | 13.34 | 4.24 |
| Eye | SN | 41.40 | 23.58 | 91.90 | 82.30 |
| Hand | SSD | 121.37 | 16.60 | 118.28 | 15.13 |
| Hand | SSN | 12.15 | 1.65 | 11.84 | 1.52 |
| Hand | SSM | 395.00 | 143.63 | 486.00 | 143.86 |

| Skill Level | FD - SSD | FN - SSN | SN - SSM |
|---|---|---|---|
| Intermediate | -.900* Strong- | -.900* Strong- | .846 Strong+ |
| Novice | -.443 Moderate- | -.441 Moderate- | .06 Small+ |
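Each cell in the table above pairs one eye metric with one hand metric across the participants of a skill group. As a minimal sketch, assuming the coefficients are Pearson product-moment correlations (the table itself does not name the coefficient), the calculation can be written as follows. The per-participant values are hypothetical placeholders, not data from the study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    if n < 2:
        raise ValueError("at least two paired observations are required")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-participant values for one eye metric and one hand metric.
eye_metric = [150.0, 120.0, 180.0, 140.0, 165.0]
hand_metric = [118.0, 131.0, 104.0, 125.0, 112.0]

print(f"r = {pearson_r(eye_metric, hand_metric):.3f}")
```

With only a handful of participants per group, a single observation can shift r substantially, so coefficients from samples this small are best read as indicative rather than precise.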

| Questionnaire Item | Intermediate M | Intermediate SD | Novice M | Novice SD |
|---|---|---|---|---|
| The participant shows developed depth perception skills in a 3D environment. | 3.33 | .42 | 2.12 | .34 |
| The participant shows developed skills in eye-hand coordination. | 3.53 | .30 | 1.92 | .52 |

Copyright © 2018. This article is licensed under a Creative Commons Attribution 4.0 International License.


Topalli, D.; Cagiltay, N.E. Eye-Hand Coordination Patterns of Intermediate and Novice Surgeons in a Simulation-Based Endoscopic Surgery Training Environment. J. Eye Mov. Res. 2018, 11, 1-14. https://doi.org/10.16910/jemr.11.6.1
