Abstract

Although the 45-dots calibration routine of a previous study (Blignaut, 2016) provided very good accuracy, it requires intense mental effort, and the routine proved unsuccessful for young children who struggle to maintain concentration. The calibration procedures that are normally used for difficult-to-calibrate participants, such as autistic children and infants, do not suffice since they are not accurate enough and the reliability of research results might be jeopardised. Smooth pursuit has been used before for calibration and is applied in this paper as an alternative routine for participants who are difficult to calibrate with conventional routines. Gaze data is captured at regular intervals and many calibration targets are generated while the eyes are following a moving target. The procedure could take anything between 30 s and 60 s to complete, but since an interesting target and/or a conscious task may be used, participants are assisted to maintain concentration. It was shown that the accuracy that can be attained through calibration with a moving target along an even horizontal path is not significantly worse than the accuracy that can be attained with a standard method of watching dots appearing in random order. The routine was applied successfully for a group of children with ADD, ADHD and learning disabilities. This result is important as it provides for easier calibration – especially in the case of participants who struggle to keep their gaze focused and stable on a stationary target for long enough.

Introduction

Video-based eye tracking is based on the principle that near-infrared light shone onto the eyes is reflected off the different structures in the eye to create four Purkinje reflections (Crane & Steele, 1985). The standard way of calibrating such eye trackers is to present a series of dots (or gaze targets) at known positions on the display and to expect the participant to watch the dots until enough gaze data is sampled (Nyström, Andersson, Holmqvist, & van de Weijer, 2013). While expensive commercial systems utilise a model of the eye to compute the gaze direction (Hansen & Ji, 2010), self-assembled eye trackers mostly use polynomial expressions to map the position of the pupil relative to the corneal reflections (the so-called pupil-glint vector) to gaze coordinates. A least squares estimation is used to minimise the distances between the observed points and the actual points in the calibration grid (Hoormann, Jainta, & Jaschinski, 2008).

Normally, five or nine dots are used. The more dots that are used, the better the accuracy of the system should be. Good accuracy is important when the stimuli are close to each other as in reading, where a researcher wants to determine the number of fixations on individual syllables. A procedure is described in a previous study (Blignaut, 2016) where 45 dots are displayed in a 9×5 grid. Twenty-three of the dots are used as calibration targets, while the complete set of dots is used to select the best possible regression polynomial. The dots are displayed in random order to prevent participants from pre-empting the position of the next dot and taking their eyes away from a dot before the gaze was registered.

While the procedure described in Blignaut (2016) is accurate with a reported average offset of 0.32°, it requires intense and prolonged concentration and participants do not always understand that they have to keep their eyes fixated on a dot until the next one appears. Unsurprisingly, the routine proved to be unsuccessful for young children with Attention Deficit Disorder (ADD), Attention Deficit Hyperactivity Disorder (ADHD) and learning disabilities. An occupational therapist using the system complained that the young children with these conditions did not understand exactly what was expected of them and some of them could not maintain concentration for the entire period.

The challenge is, therefore, to capture gaze data at as many known locations as possible, with the least possible mental effort while maintaining attention on the target. In this paper, a smooth pursuit calibration routine is proposed with a target moving across the display at a constant speed. The target could also be an animated image of something of interest to a small child, such as a butterfly or an airplane. In order to further motivate the child participant to watch the target closely, it could change colour, shape or image at varying intervals and the child could be challenged to count the number of changes.

The need for calibration and existing calibration procedures are discussed in the following section. The difficulties that are experienced with the standard routines to calibrate certain groups of participants (collectively referred to as difficult-to-calibrate (DC) participants) are highlighted and previous attempts to solve the problem are discussed. Thereafter, the presentation of a moving target with a related task is offered as a solution to capture the attention of the DC participants for long enough so that the procedure can be completed.

The evaluation of smooth pursuit calibration (SPC) is done in two phases: First, the accuracy of the approach is validated based on comparison with a standard calibration procedure using able and cooperating participants. Second, the applicability of the approach is validated for a group of early primary school children with various forms of one or more cognitive disorders.

The paper concludes with a discussion of the results.

The Role of Calibration

The Need for Calibration

The output from eye-tracking devices varies with individual differences in the shape or size of the eyes, such as the corneal bulge and the relationship between the eye features (pupil and corneal reflections) and the foveal region on the retina. Ethnicity, viewing angle, head pose, colour, texture, light conditions, position of the iris within the eye socket and the state of the eye (open or closed) all influence the appearance of the eye (Hansen & Ji, 2010) and, therefore, the quality of eye-tracking data (Holmqvist et al., 2011). In particular, the individual shapes of participants' eyeballs and the varying positions of cameras and illumination require all eye trackers to be calibrated.

The Procedure

Calibration refers to a procedure to gather data so that the coordinates of the pupil and one or more corneal reflections in the coordinate system of the eye-video can be converted to x- and y-coordinates that represent the participant’s point of regard in the stimulus space. The procedure usually consists of asking the participant to look at a number of pre-defined points at known angular positions while storing samples of the measured quantity (Abe, Ohi, & Ohyama, 2007; Kliegl & Olson, 1981; Tobii, 2010). There is no consensus on exactly when to collect these samples, but Nyström et al. (2013) showed that participants know better than the operator or the system when they are looking at a target.

Mapping to Point of Regard

The transformation from eye-position to point of regard can be either model-based (geometric) or interpolation-based (Hansen & Ji, 2010). With model-based gaze estimation, a model of the eye is built from the observable eye features (pupil, corneal reflection, etc.) to compute the gaze direction. In this case, calibration is not used to determine the actual gaze position but rather to record the eye features from different angles. See Hansen and Ji (2010) for a comprehensive overview of possible transformations.

Interpolation might involve, for example, a linear regression between the known data set and the corresponding raw data, using a least squares estimation to minimize the distances between the observed points and the actual points (Hoormann et al., 2008). Other examples of 2-dimensional interpolation schemes can be found in McConkie (1981) as well as Kliegl and Olson (1981), while a cascaded polynomial curve fit method is described in Sheena and Borah (1981).
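As a minimal sketch of the least squares idea, the following Python fragment fits a straight line between one raw eye-feature component and the known target coordinate in the same dimension. The function names `fit_line` and `map_raw` are hypothetical, and a single linear term per dimension is an assumption made for brevity; the mapping used later in this paper is a polynomial set, not this simplified line.

```python
def fit_line(raw, known):
    """Ordinary least squares fit of known = a + b * raw (one dimension).

    raw:   raw eye-feature values, e.g. one component of the pupil-glint vector
    known: known target coordinates in the same dimension
    """
    n = len(raw)
    if n < 2 or n != len(known):
        raise ValueError("need at least two paired observations")
    mean_r = sum(raw) / n
    mean_k = sum(known) / n
    sxx = sum((r - mean_r) ** 2 for r in raw)
    if sxx == 0:
        raise ValueError("raw values must not all be identical")
    sxy = sum((r - mean_r) * (k - mean_k) for r, k in zip(raw, known))
    b = sxy / sxx            # slope that minimises the squared distances
    a = mean_k - b * mean_r  # intercept
    return a, b


def map_raw(a, b, raw_value):
    """Map a new raw value to a display coordinate with the fitted line."""
    return a + b * raw_value
```

With more calibration points than coefficients, the fit averages out random error in the individual points, which is why a larger number of targets is expected to improve accuracy.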

Theoretically, the transformation should remove any systematic error, but the limited number of calibration points that are normally used limits the accuracy that can be achieved. Typical calibration schemes require 5 or 9 pre-defined points, and rarely use more than 20 points (Borah, 1998).

Auto-Calibration

Huang, Kwok, Ngai, Chan, and Leong (2016) presented an auto-calibrating system that identifies and collects gaze data unobtrusively during user interaction events since there is a likely correlation between gaze and cursor and caret locations. The procedure presented by Huang et al. (2016) recalibrates continuously and becomes more and more accurate with additional use. They reported an average error of 2.56°, which is relatively poor, although the approach has the advantage that there is no need for an explicit calibration phase.

Swirski and Dodgson (2013) describe a procedure that fits a pupil motion model to a set of eye images. Information from multiple frames is combined to build a 3D eye model that is based on assumptions on how the motion of the pupil is constrained. No calibration is needed and since the procedure is based on pupil ellipse geometry alone, it is not necessary to illuminate the eyes to create a corneal reflex. At best, a mean error of 1.68° was reported.

In summary, while auto-calibration might solve the problem of calibrating for participants who struggle to maintain concentration, it is not good enough for studies where high accuracy is needed.

Previous Attempts to Track Difficult-to-Calibrate Participants

In the quest for a solution to calibrate young children who find it difficult to concentrate on a target for the duration of a calibration routine, one can learn from the experience of others who faced similar challenges, for example tracking infants, toddlers and children with autism.

Tracking infants and toddlers poses a challenge as it is hardly ever possible to get them to sit down long enough to focus on calibration targets. Aslin (2012) mentioned that small flashing (or shrinking) targets work well with infants, but argued that accuracy is unlikely to ever be better than 1° because infants are unable to precisely and reliably fixate small stimuli. He further asserted that if 1° of accuracy is insufficient to answer a particular question, then an eye tracker should not be used for the research and alternative methods should be implemented.

Sasson and Elison (2012) indicated that eye tracking of young children with autism involves unique challenges that are not present when tracking normal-developing older children or adults. They used the normal calibration routines provided by the manufacturer to track the gaze data of their participants, but used large stimuli, spanning more than 5°. Although participants find such stimuli pleasing to look at, the researcher cannot be exactly sure where the participant looked at the time of data capture. This will almost certainly result in bad accuracy that will not be feasible for tasks where high accuracy is required, such as reading.

In a study by Pierce, Conant, Hazin, Stoner, and Desmond (2011), toddlers were seated on their parent’s lap in front of a Tobii T120 eye tracker and a partition separated the operator from the toddler. To obtain calibration information, toddlers were shown images of an animated cat that appeared in 9 locations on the screen. Using a software facility that superimposes the point of regard on the test image in real time, the operator observed the infant’s gaze position and head position on a secondary monitor, making note of obvious deviations from expected gaze positions. The entire process was repeated if the infant’s eyes were no longer picked up. No mention was made of the achieved accuracy, but it is reasonable to expect that the accuracy could not be better than the size of the calibration stimulus (the animated cat).

Franchak, Kretch, Soska, and Adolph (2011) used a head-mounted eye tracker but displayed stimuli on a computer screen. A sounding target appeared at a single location within a 3×3 matrix on the monitor to induce eye movements. Calibration involved as few as 3 and as many as 9 points spread across visual space. Subjective judgement was used to determine whether fixations deviated from targets by more than about 2° and the procedure was repeated if necessary. Although the spatial accuracy is lower than that of typical desk-mounted systems, it was regarded as adequate for determining the target of fixations in natural settings. The entire process of preparing the equipment and calibrating the infant took about 15 minutes.

Corbetta, Guan, and Williams (2012) followed a similar procedure to calibrate an ETL-500 head-mounted eye tracker through timely coordination between one experimenter facing the child and another experimenter running the interactive calibration software of the eye tracker. The experimenter facing the child presented a small, visually attractive and sounding toy at one of five predefined spatial positions. When the child was staring at the toy in that position, the experimenter running the calibration software was prompted to capture the gaze data. The researchers did not report the accuracy achieved, but one can once again assume that the accuracy could not be better than the size of the toy used as calibration stimulus.

In summary, it is clear that in an attempt to calibrate so-called difficult-to-calibrate participants, various researchers used sound and animation of larger objects as calibration targets. Furthermore, the number of calibration points is mostly limited and the accuracy that can be achieved is not expected to be better than 2°. It is also difficult to tell the actual accuracy that was obtained during a specific experimental set-up or participant recording. The need exists, therefore, for a calibration routine that is easy to execute and can be used for difficult-to-calibrate participants, yet accurate enough to provide reliable research results – especially if the experiment involves smaller or closely spaced targets.

Smooth Pursuit Calibration

Smooth Pursuit Eye Movements

Smooth-pursuit eye movements are continuous, slow rotations of the eyes (Spering & Gegenfurtner, 2008) that are used to stabilise the image of a moving object of interest on the fovea, thus maintaining high acuity (Nagel, Sprenger, Steinlechner, Binkofski, & Lencer, 2012; Thier & Ilg, 2005). Conscious attention is needed to maintain accurate smooth pursuit (Hutton & Tegally, 2005; Madelain, Krauzlis, & Wallman, 2005).

Smooth pursuit gain is expressed as the ratio of smooth eye movement velocity to the velocity of a foveal target (Sharpe, 2008). If the gain is less than 1, gaze will fall behind the target to create a retinal slip that will have to be reduced by one or more "catch-up" saccades (Van Gelder, Lebedev, Liu, & Tsui, 1995). According to Meyer, Lasker, and Robinson (1985), normal subjects can follow a target with a gain of 90% up to a target velocity of 100 deg/s.

Smooth pursuit gain increases with age, especially for the first 3 months of an infant’s life (Von Hofsten & Rosander, 1997; Richards & Holley, 1999). Accardo, Pensiero, Da Pozzo, and Perissutti (1995) found that velocity gain of children aged 7-12 is slightly lower than that of adults.

Smooth pursuit can also be affected by attention (Souto & Kerzel, 2011; Van Donkelaar & Drew, 2002). More specifically, performance with smooth pursuit tasks can be dramatically improved when subjects are asked to analyse a changing characteristic of the target, such as reading a changing letter or number on the target (Holzman, Levy, & Proctor, 1976) or pressing a button (Iacono & Lykken, 1979).

Smooth pursuit performance is also affected by stimulus background (Brenner, Smeets, & van den Berg, 2001; Kerzel, Souto, & Ziegler, 2008; Lindner, Schwarz, & Ilg, 2001), target position (Pola & Wyatt, 2001), target velocity (Kowler & McKee, 1987; Meyer et al., 1985), target visibility (Becker & Fuchs, 1985; Pola & Wyatt, 1997), target direction (Engel, Anderson, & Soechting, 2000) and predictability of target direction (Soechting, Rao, & Juveli, 2010).

Smooth pursuit impairment and dysfunction can be linked to mental illnesses such as schizophrenia (Holzman & Levy, 1977; Holzman et al., 1976; Levin et al., 1988; O'Driscoll & Callahan, 2008), autism (Takarae, Minshew, Luna, Krisky, & Sweeney, 2004), physical anhedonia and perceptual aberrations (Gooding, Miller & Kwapil, 2000; O'Driscoll, Lenzenweger, & Holzman, 1998; Simons & Katkin, 1985), Alzheimer’s disease (Fletcher & Sharpe, 1988) and attention-deficit hyperactivity disorder (ADHD) (Fried et al., 2014).

Smooth Pursuit Calibration in General

The concept of calibrating while a participant follows a moving target has been exploited with success in the past. Pfeuffer, Vidal, Turner, Bulling, and Gellersen (2013) explain a procedure where gaze data for calibration is only sampled when the participant is attending to the target, as indicated by high correlation between eye and target movement. They showed that pursuit calibration is tolerant to interruption and can be used to calibrate without participants being aware of the procedure.

Pfeuffer et al. (2013) used a Tobii TX300 eye tracker to test their calibration procedure and collected gaze data at 60 Hz. At a target speed of 5.8°/s, it took 20 seconds to complete the target trajectory and an accuracy of just less than 0.6° was achieved. The results were compared with the 5-point calibration routine of Tobii, which took 19 seconds to complete and delivered an accuracy of ≈0.7°.

Celebi, Kim, Wang, Wall, and Shic (2014) follow the approach of Pfeuffer et al. (2013) but argue that an Archimedean spiral would provide better spatial coverage of the stimulus plane with little redundancy. At a linear velocity of 6.4°/s, the calibration procedure took 27 seconds during which 1600 data points were collected. Upon testing 10 healthy adults on an Eyelink 1000 eye tracker running at 500 Hz, their approach delivered an average accuracy of 0.84° compared to 1.39° with a standard 9-point calibration procedure (which took 23 seconds to complete).

Celebi et al. (2014) stated explicitly that the goal of their smooth pursuit approach towards calibration is to improve the calibration for toddlers and children with or without developmental disabilities, although they did not test the approach with such participants.

Gredebäck, Johnson, and Von Hofsten (2010) describe a calibration routine that makes use of a moving object to lure infants’ eyes to 2 or 5 calibration targets, but they reported accuracy according to the manufacturer’s specifications of 0.5°, a value which has been computed with a conventional calibration routine under ideal circumstances and with cooperating adult participants.

Smooth Pursuit Calibration for DC Participants

Participants who struggle to maintain concentration on tedious tasks can be cognitively stimulated by indicating or counting the number of transitions of the target from one stimulus to another (Holzman et al., 1976). In this study, participants were requested to follow a grey disk of 1.5° diameter on a white background (Figure 1) that contained a coloured dot (0.2°) in the centre. The dot changed colour in cycles of blue (2 seconds) and red (500 ms) and the participants were asked to say the word "Red" aloud every time that the dot changed to red.
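The colour cycle of the centre dot can be sketched as a simple function of elapsed time. The names `dot_colour` and `red_onsets` are hypothetical, and the sketch assumes that each cycle starts with the blue phase, which the text does not state explicitly.

```python
BLUE_S = 2.0  # duration of the blue phase in seconds
RED_S = 0.5   # duration of the red phase in seconds


def dot_colour(t):
    """Colour of the centre dot t seconds after the start of the movement.

    Assumption: every 2.5 s cycle begins with blue, followed by red.
    """
    phase = t % (BLUE_S + RED_S)
    return "blue" if phase < BLUE_S else "red"


def red_onsets(duration):
    """Times (in seconds) at which the dot turns red within the given duration."""
    onsets = []
    k = 0
    while BLUE_S + k * (BLUE_S + RED_S) < duration:
        onsets.append(BLUE_S + k * (BLUE_S + RED_S))
        k += 1
    return onsets
```

Under these assumptions, a 38 s trajectory would give the participant 15 occasions to respond, which keeps the task frequent enough to hold attention without interrupting pursuit.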

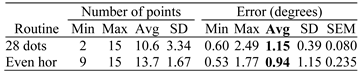

Figure 1.

Trajectories of moving target.

The target is initially displayed statically in the top left corner and the participant can be prepared as to the direction and nature of the motion that could be expected. The experimenter can initiate movement with a button as soon as the participant is ready. Three alternative trajectories were tested, with the target moving along an even horizontal path, a wavy horizontal path and a vertical path (cf. Figure 1).

Depending on the speed of movement, the interval between windows and the trajectory, a large number of targets can be extracted from the continuous gaze data. In the procedure of Pfeuffer et al. (2013), gaze data is collected whenever a participant attends to the moving target. In our approach, gaze samples (or more specifically, pupil-glint vectors for each eye) are captured for very short windows (100 ms) at intervals of 500 ms. When data is not available at a specific interval, the point is ignored. At a framerate of 200 Hz, 20 samples are recorded within each 100 ms window. At a velocity of 6.65°/s (gaze distance 700 mm, 300 px/s on a 19.5", 1600×900 screen), the samples would span 0.665° in the direction of movement.
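The arithmetic behind these figures can be checked with a short sketch. All function names are hypothetical. The conversion from pixels to degrees assumes square pixels and a displacement viewed from directly in front of the screen, so it reproduces the reported velocity only approximately (about 6.6°/s); the exact geometry used in the study is not given.

```python
import math


def screen_width_mm(diagonal_inch, res_x, res_y):
    """Physical screen width, assuming square pixels."""
    return diagonal_inch * 25.4 * res_x / math.hypot(res_x, res_y)


def speed_deg_per_s(speed_px, diagonal_inch, res_x, res_y, distance_mm):
    """Approximate angular speed of a target moving at speed_px pixels per second."""
    mm_per_px = screen_width_mm(diagonal_inch, res_x, res_y) / res_x
    return math.degrees(math.atan(speed_px * mm_per_px / distance_mm))


def samples_per_window(framerate_hz, window_ms):
    """Number of frames that fall inside one capture window."""
    return round(framerate_hz * window_ms / 1000)


def window_span_deg(speed_deg, window_ms):
    """Angular distance covered by the target during one window."""
    return speed_deg * window_ms / 1000


def targets_on_path(duration_s, interval_ms):
    """Number of capture windows along a trajectory of the given duration."""
    return int(duration_s * 1000) // interval_ms
```

For example, `samples_per_window(200, 100)` gives 20 and `targets_on_path(38, 500)` gives 76, in agreement with the values reported for the horizontal trajectories.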

The radius of curvature was set so that the horizontal and vertical trajectories would cover 6 and 9 distinct Y and X coordinates respectively (cf Figure 1). This resulted in 76 targets (in 38 s) being captured for both the even and wavy horizontal movements and 66 targets (in 33 s) for the vertical movement.

Since the eyes move smoothly to follow the target, it can be expected that a convex hull around the sample points would be elongated along the direction of movement. The samples within every window were sorted according to the x and y dimensions of the pupil-glint vectors and only the intersection of the centre 80% of samples around the median in each dimension is retained.
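One possible reading of this trimming step is sketched below. The names `central_indices` and `trim_window` are hypothetical, and the sketch assumes that "the centre 80% around the median" means discarding the lowest 10% and the highest 10% of samples in each dimension.

```python
def central_indices(values, keep_percent=80):
    """Indices of the values that lie in the central keep_percent of the sorted order."""
    n = len(values)
    drop = n * (100 - keep_percent) // 200  # samples dropped at each end
    order = sorted(range(n), key=lambda i: values[i])
    return set(order[drop:n - drop])


def trim_window(samples, keep_percent=80):
    """Keep only samples that are central in both the x and the y dimension.

    samples: list of (x, y) pupil-glint vectors captured in one window
    """
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    keep = central_indices(xs, keep_percent) & central_indices(ys, keep_percent)
    return [samples[i] for i in sorted(keep)]
```

For a window of 20 samples, two samples are dropped at each end of each dimension, so between 12 and 16 samples remain depending on how the extremes in x and y overlap.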

In contrast with a standard 5-point or 9-point calibration procedure where all points are needed for the regression, the multitude of points that are available with this procedure allows the removal of points where participants blinked or where their attention was distracted. All windows for which the dispersion, Max(maxX - minX, maxY - minY), of the contained samples is above 5° are also removed. For each of the remaining windows of gaze data samples, the average location and the average pupil-glint vector are calculated.
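The dispersion criterion and the averaging per window could be sketched as follows. The function names are hypothetical, and the sketch assumes that the sample coordinates passed in are already expressed in degrees so that the 5° threshold applies directly.

```python
def dispersion(points):
    """Max(maxX - minX, maxY - minY) over the samples of one window."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return max(max(xs) - min(xs), max(ys) - min(ys))


def mean_point(points):
    """Average (x, y) of the samples of one window."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def usable_windows(windows, max_dispersion=5.0):
    """Drop empty windows (e.g. blinks) and windows whose dispersion is above the limit."""
    return [w for w in windows if w and dispersion(w) <= max_dispersion]
```

Because many windows are available, discarding a few of them in this way still leaves ample points for the regression.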

From here on, the procedure as explained in Blignaut (2016) is followed. The gaze data windows represent calibration points at known locations and are used to determine a gaze mapping polynomial set per participant. Regression coefficients are recalculated in real-time – based on a subset of calibration points in the region of the current gaze. Real-time localized corrections are done that are based on calibration targets in the same region. See Blignaut (2016) for a detailed discussion of the procedure.

Accuracy of Smooth Pursuit Calibration

In this section, the accuracy of the approach is validated based on a comparison with a standard calibration procedure using healthy and cooperating adult participants. The applicability of the approach for difficult-to-calibrate participants will be addressed in the next section.

Equipment

For this study, an eye tracker with two infrared illuminators, 480 mm apart, and the UI-1550LE camera from IDS Imaging (https://en.ids-imaging.com) was assembled. All recordings were made at a framerate of 200 Hz.

Every frame that was captured by the eye camera was analysed and the centres of the pupils and the corneal reflections (glints) were identified. A regression-based approach was followed to map the pupil-glint vector to a point of regard in display coordinates. The regression coefficients were determined through a calibration process.

Method

Seventeen healthy and cooperating adult participants were recruited through convenience sampling and presented with four calibration routines: a moving target along an even horizontal path, a moving target along a wavy horizontal path, a target moving vertically (cf. Figure 1) and, for comparison purposes, the 45-dots routine as proposed in a previous study (Blignaut, 2016). The procedure was executed only once for every participant.

After every routine, a 7×4 grid of dots was displayed to determine the accuracy of the procedure. As for the 45 dots, the 28 dots appeared in random order to prevent participants from pre-empting the position of the next dot and prematurely looking away. The regression coefficients as determined in the preceding calibration routine were used to map the gaze data to screen coordinates. The accuracy for a specific participant was calculated as the average offset between the known locations and the reported gaze coordinates across the 28 dots. The performance of a calibration routine is expressed as the average accuracy over all participants.
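The accuracy measure can be illustrated with a short sketch. The function names are hypothetical, and the conversion of an on-screen offset to a visual angle assumes a displacement viewed from directly in front of the display at a fixed gaze distance, which is a simplification of the actual geometry.

```python
import math


def offset_deg(target_px, gaze_px, mm_per_px, distance_mm):
    """Angular offset between a known target and the reported gaze position."""
    d_px = math.hypot(gaze_px[0] - target_px[0], gaze_px[1] - target_px[1])
    return math.degrees(math.atan(d_px * mm_per_px / distance_mm))


def participant_accuracy(targets_px, gazes_px, mm_per_px, distance_mm):
    """Average offset across all validation dots for one participant."""
    offsets = [offset_deg(t, g, mm_per_px, distance_mm)
               for t, g in zip(targets_px, gazes_px)]
    return sum(offsets) / len(offsets)


def routine_accuracy(per_participant):
    """Performance of a routine: average accuracy over all participants."""
    return sum(per_participant) / len(per_participant)
```

Note that a smaller value indicates a better calibration, since the measure is an error expressed in degrees of visual angle.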

Recording of Calibration Points

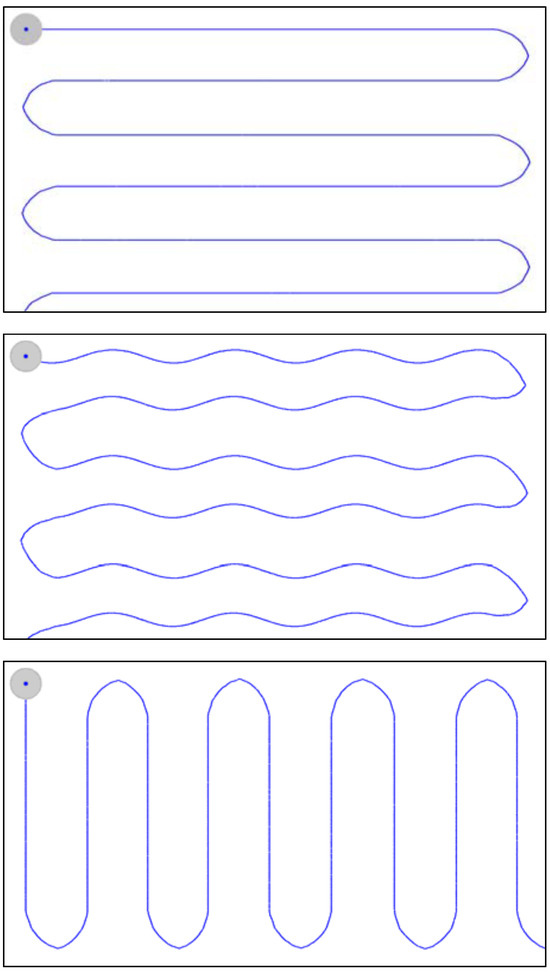

Figure 2 shows the calibration points that were recorded for a specific participant while the target was moving along an even horizontal path. The mapped gaze coordinates of the sample data are enclosed by convex hulls – green for the left eye and red for the right eye.

Figure 2.

Calibration points with accompanying gaze data samples as captured with a horizontally moving target. Missing points marked with circles.

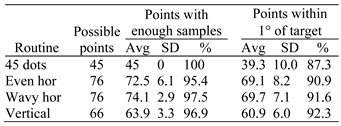

In the example presented in Figure 2, five of the calibration windows did not contain enough sample data – probably due to blinks. Table 1 shows that for routines that involve a moving target, on average between 2 and 4 calibration windows are lost in this way. Since there are more than enough other points to be used in the subsequent regression and because the lost points are seldom at successive locations, this does not pose a problem.

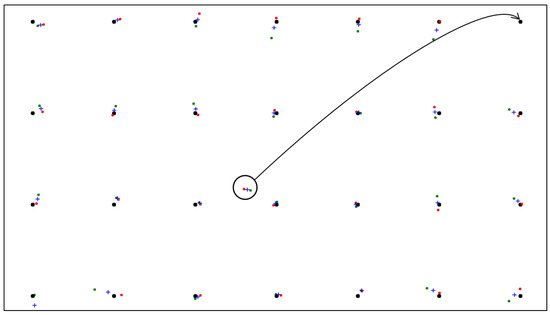

Table 1.

Average number of points (across participants) with enough samples and with mapped gaze coordinates within 1° of the target per calibration routine (SD: Standard deviation).

Initially, the set of calibration points was also used as validation targets and the offsets between the calibration points and the mapped gaze coordinates were calculated. All points with offsets larger than 1.0° were then removed from the set of calibration targets and the regression procedure was repeated. The remaining calibration points are shown in blue in Figure 2, while black dots indicate points that were excluded for real-time interpolation. Table 1 also shows the final number of points that was used to determine the polynomial coefficients through regression.
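The two-pass idea (fit, discard points with large offsets, fit again) is sketched below in one dimension. The names `fit_line` and `refit_without_outliers` are hypothetical, and a straight-line fit stands in for the polynomial set of the actual procedure purely to keep the example short.

```python
def fit_line(raw, known):
    """Ordinary least squares fit of known = a + b * raw."""
    n = len(raw)
    mean_r = sum(raw) / n
    mean_k = sum(known) / n
    sxx = sum((r - mean_r) ** 2 for r in raw)
    sxy = sum((r - mean_r) * (k - mean_k) for r, k in zip(raw, known))
    b = sxy / sxx
    return mean_k - b * mean_r, b


def refit_without_outliers(raw, known, max_offset=1.0):
    """Fit, drop points whose offset exceeds max_offset (degrees), then fit again."""
    a, b = fit_line(raw, known)
    kept = [(r, k) for r, k in zip(raw, known)
            if abs(a + b * r - k) <= max_offset]
    a2, b2 = fit_line([r for r, _ in kept], [k for _, k in kept])
    return (a2, b2), kept
```

A single distracted glance inflates the first fit only slightly when many points are available, so the outlier still stands out and can be removed before the second fit.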

Validation Results

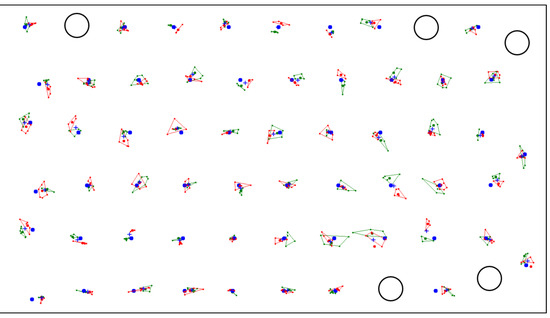

Figure 3 shows the validation points for a calibration that was done for a moving target along an even horizontal path. The average of all samples within a window is shown for the left and right eyes. A + indicates the average position between the left and right eyes.

Figure 3.

Validation points with left (green) and right (red) eye averages of samples per point. The average between eyes is indicated with a blue +. A point where the participant was distracted is also shown.

The example in Figure 3 was specifically selected to illustrate the occurrence of outliers. These outliers may occur if the participant loses concentration or is distracted by external stimuli. Sometimes (some of) the samples are captured during a blink, in which case the samples for the left and right eyes appear to be disconnected. Validation points were excluded from the calculation of average offset if the offset was larger than 3° or if the mapped gaze coordinates for the two eyes were more than 3° apart. These thresholds were set large enough not to exclude valid data but small enough to ensure that unwanted gaze behaviour is excluded. Table 2 shows the average number of validation points that were included for each of the calibration routines.
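The exclusion rule can be written as a small predicate. The function name is hypothetical, all coordinates are assumed to be in degrees, and the offset is assumed to be measured from the target to the average position between the two eyes (the + in Figure 3).

```python
import math


def include_validation_point(target, left, right,
                             max_offset=3.0, max_eye_gap=3.0):
    """Return True if a validation point passes both 3-degree criteria.

    target, left, right: (x, y) positions in degrees of visual angle
    """
    mean = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    offset = math.hypot(mean[0] - target[0], mean[1] - target[1])
    eye_gap = math.hypot(left[0] - right[0], left[1] - right[1])
    return offset <= max_offset and eye_gap <= max_eye_gap
```

The second criterion catches cases in which the two eyes disagree strongly, for example during a blink, even when their average happens to fall close to the target.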

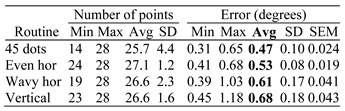

Table 2.

Average number of validation points that were included and the average error (over participants and validation targets) for each of the calibration routines. (SD: Standard deviation, SEM: Standard error of the mean).

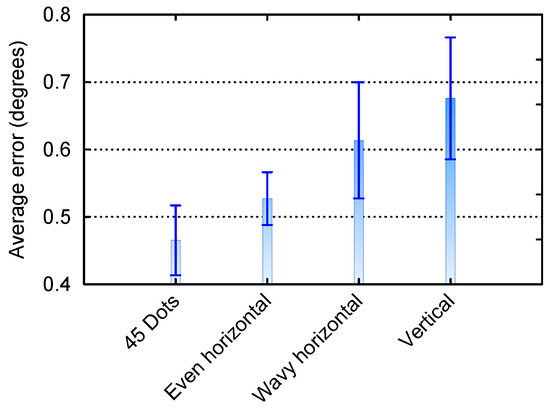

Table 2 also shows the average error across the 28 validation points and 17 participants per calibration routine. The important column that needs to be interpreted to compare the four calibration routines is boldfaced. Figure 4 provides a visualisation of the same results. The vertical bars denote the 95% confidence intervals of the means.

Figure 4.

Average error over participants and validation targets for four calibration routines. The vertical bars denote the 95% confidence intervals of the means.

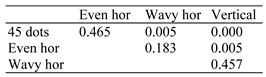

A repeated measures analysis of variance (each participant calibrated with four different routines) showed that the calibration routine has a significant (α = .001) effect on the magnitude of the error (F(3,48) = 9.74, p < .001). Table 3 shows the results of Tukey’s test for the honestly significant difference between pairs of means. The differences between the means for the 45-dots and vertical movement as well as the difference between 45-dots and horizontal movement along a wavy path were significant (α = .01). Although the accuracy for the moving target along an even horizontal path was worse than that of the 45-dots routine, it was not significantly so (α = .05).

Table 3.

p-Values for the significance of the difference in error between pairs of means.

It can, therefore, be concluded that a moving target along an even horizontal path has the potential to be used as an alternative calibration routine. This will be tested in the next section with a sample of difficult-to-calibrate participants.

Applicability of Smooth Pursuit Calibration for Difficult-to-Calibrate Participants

Equipment

The same self-assembled eye tracker was used as in the previous section.

Method

A school for learners with special education needs was visited and all learners from Grade 1 to Grade 3 (ages 6 – 11) for whom permission of the parents was obtained were tested. The school accommodates learners who are cerebrally palsied, physically and/or learning disabled. The school has specially qualified remedial teachers as well as a multi-disciplinary support structure that includes psychologists, social workers, occupational therapists, speech therapists, physiotherapists as well as a professional nurse. The school follows the normal mainstream syllabi but there are no more than 10 learners in a class to enable teachers to provide specialised and individual attention.

Several lessons were learned in the process of capturing data. Initially, learners were requested to follow the moving target without any further instruction. It soon became evident that they struggled to maintain focus on the target for the duration of the trajectory. The target was then programmed to change colour in cycles of blue (2 seconds) and red (500 ms) and learners were instructed to call out the word "Red" whenever the target changed to red. For the procedures where dots were involved, every dot appeared in a different colour and learners were instructed to call out the colour of the dot every time.

Although the system allows moderate head movements, many learners had excessive sideways and back and forth head movements – some of which were involuntary. A chinrest was then used to maintain head position, but it caused instability of the eyes every time that the learners vocalised their response on a colour change of the target. Finally, the learners were instructed to push their foreheads against a barrier that was set such that a fixed gaze distance of 700 mm was maintained.

It was also realised that the sets of 45 dots for calibration and 28 dots for validation were too exhausting and therefore these were limited to 23 calibration targets (in rows of 5, 4, 5, 4, 5 targets each) and 15 validation targets in a grid of 5×3.

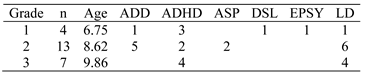

Eventually, 24 participants were tested with the final configuration of target movement, headrest and calibration sets. The number of learners per grade and condition is shown in Table 4. Note that some learners had more than one condition.

Table 4.

Number of learners per grade and condition. (ADD: Attention deficit disorder; ADHD: Attention deficit hyperactivity disorder; ASP: Asperger syndrome; DSL: Dyslexia; EPSY: Epilepsy; LD: Learning disability).

The learners were presented with a moving target along an even horizontal path (SP) (cf. Figure 1) and the 23-dots routine. After every routine, the 5×3 grid of dots was displayed to determine the accuracy of the procedure. As was the case for the validation of accuracy with healthy adults, the dots appeared in random order to prevent learners from pre-empting the position of the next dot and looking away prematurely. The performance of both the 23-dots and SP calibration routines was expressed as the average accuracy over all participants as determined through the 15-dots validation routine.

Validation Results

As for the validation of accuracy with healthy adults, validation points were excluded from the calculation of average offset if the offset was larger than 3° or if the mapped gaze coordinates for the two eyes were more than 3° apart. Table 5 shows the average number of validation points that were included for each of the calibration routines. A repeated measures analysis of variance for the effect of calibration routine (23-dots vs SP) on the number of valid points showed that the SP routine leads to more reliable data, as significantly fewer points have to be discarded (F(1,47) = 47.7, p < .001).
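The two exclusion rules can be illustrated with a short sketch. It assumes that gaze and target positions are available in millimetres on the display plane, that the 700 mm gaze distance applies, and that the offset is measured from the mean of the two eyes' mapped positions; the function names and the averaging of the two eyes are assumptions made for illustration.

```python
import math

GAZE_DISTANCE_MM = 700.0  # fixed gaze distance stated in the text
MAX_OFFSET_DEG = 3.0      # exclusion threshold for the offset
MAX_EYE_GAP_DEG = 3.0     # exclusion threshold for left/right disagreement


def angle_deg(p, q, distance_mm=GAZE_DISTANCE_MM):
    """Visual angle between two on-screen points given in mm.

    Approximation: the segment p-q is treated as centred on the line of sight.
    """
    return math.degrees(2 * math.atan(math.dist(p, q) / (2 * distance_mm)))


def mean_offset(samples):
    """samples: list of (target, left_gaze, right_gaze), each an (x, y) in mm.

    Returns (number of valid points, mean offset in degrees or None).
    """
    offsets = []
    for target, left, right in samples:
        if angle_deg(left, right) > MAX_EYE_GAP_DEG:
            continue  # the two eyes disagree by more than 3 degrees
        mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
        offset = angle_deg(mid, target)
        if offset > MAX_OFFSET_DEG:
            continue  # offset larger than 3 degrees
        offsets.append(offset)
    if not offsets:
        return 0, None
    return len(offsets), sum(offsets) / len(offsets)
```

At 700 mm, the 3° threshold corresponds to roughly 37 mm on the display.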

Table 5.

Average number of validation points that were included and the average error (over participants and validation targets) for each of the calibration routines. (SD: Standard deviation, SEM: Standard error of the mean).

Table 5 also shows the average error across the 15 validation points and 24 participants per calibration routine. A repeated measures analysis of variance for the effect of calibration routine (23-dots vs SP) on the accuracy of tracking showed that, for difficult-to-calibrate participants, the SP routine is significantly better than a dots-based routine (F(1,47) = 12.57, p < .001).

Summary

The conventional way of calibrating remote video-based eye trackers is through presentation of a series of gaze targets at known positions while participants are expected to watch the targets. For regression-based mapping of eye features to gaze coordinates, more gaze targets normally mean better (more accurate) calibration. Unfortunately, more gaze targets also require more mental effort from participants. Through informal observations, it was realised that, although the 45-dots routine of a previous study (Blignaut, 2016) provided very good accuracy, it demanded too much mental effort from participants who struggle to maintain concentration.

Depending on the type of experiment, better accuracy might be expected than can be achieved with calibration-free or auto-calibrating systems. The calibration procedures that are normally used for infants, toddlers and autistic children also do not suffice, since they are not accurate enough and the reliability of research results might be jeopardised.

In this paper, the use of smooth pursuit with a target moving across the display at a constant speed is proposed. This approach is motivated by the fact that attention to a moving target can be maintained more easily, especially if accompanied by a concurrent and related task such as analysis of some changing characteristic of the target (Holzman et al., 1976; Iacono & Lykken, 1979).

While the participant is following the target, gaze data is captured at regular intervals and many calibration targets are saved that can be used in subsequent regression and interpolation. Because of the abundance of points, the procedure allows the exclusion of points of dubious quality. Depending on the speed of movement and the trajectory, the procedure could take anything between 30 s and 60 s to complete.
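The idea of sampling many targets along the path and discarding dubious points before the final fit can be sketched as follows. This is a deliberately simplified illustration: it uses a first-order, one-dimensional least squares fit rather than the polynomial mapping of the pupil-glint vector, and the exclusion rule (residual larger than `k` times the RMS residual) is an assumption, not the criterion used by the authors. All names are hypothetical.

```python
def sweep_targets(x_start, x_end, y, speed, interval):
    """Target positions sampled every `interval` seconds while the target
    moves at constant `speed` from x_start to x_end along the row at height y."""
    duration = abs(x_end - x_start) / speed
    direction = 1 if x_end >= x_start else -1
    n = int(duration / interval + 1e-9) + 1  # small epsilon guards float error
    return [(x_start + direction * speed * i * interval, y) for i in range(n)]


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def fit_with_exclusion(features, targets, k=2.0):
    """Fit once, drop points whose residual exceeds k times the RMS residual,
    then refit on the remaining points. Returns (a, b, number excluded)."""
    a, b = fit_line(features, targets)
    residuals = [t - (a + b * f) for f, t in zip(features, targets)]
    rms = (sum(r * r for r in residuals) / len(residuals)) ** 0.5
    keep = [i for i, r in enumerate(residuals) if abs(r) <= k * rms]
    a, b = fit_line([features[i] for i in keep], [targets[i] for i in keep])
    return a, b, len(features) - len(keep)
```

Because a sweep yields many more points than a dots routine, a few samples can be dropped (for example, when the participant glanced away) without leaving any region of the display uncovered.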

Validation of the proposed routine was done in two phases. First, the accuracy of the routine was validated by comparing its performance with that of a standard calibration procedure for healthy and cooperating adults. Thereafter, the applicability of the approach for participants who are normally difficult to calibrate was validated by applying it to a group of early primary school children with one or more cognitive disorders.

It was shown through a repeated measures, within-participants analysis of variance that the accuracy that can be attained through calibration with a moving target along an even horizontal path is not significantly worse than the accuracy that can be attained with a standard method of watching dots. Accuracies of around 0.5° were obtained with both routines for a group of seventeen adults, which is comparable with the 0.6° attained by Pfeuffer et al. (2013) and better than the 0.84° attained by Celebi et al. (2014).

For a group of young children with various forms of cognitive disorders such as ADD, ADHD and learning disabilities, smooth pursuit calibration proved to be superior to the standard routine. For this group, an average accuracy of below 1° could be achieved with SP, which was not the case with the standard routine of 23 dots. This is a significant improvement on the 1.5°–2.5° errors that can be attained by calibration-free or auto-calibrating routines such as those of Huang et al. (2016) and Swirski and Dodgson (2013).

Future Research

Since smooth pursuit ability develops until adolescence (Accardo et al., 1995), one can expect that older children will benefit even more from the smooth pursuit approach. This needs to be investigated.

Furthermore, the smooth pursuit approach was tested above for children with ADD, ADHD and learning disabilities. No children with autism were tested, and it remains to be seen if the approach will work for such conditions, since it is known that smooth pursuit is impaired in autism and similar conditions (Pierce et al., 2011; Takarae et al., 2004).

References

- Abe, K., S. Ohi, and M. Ohyama. 2007. An eye-gaze input system using information on eye movement history. In Universal Access in HCI, Part II. HCII2007, LNCS 4555. Edited by C. Stephanidis. Springer Berlin Heidelberg: pp. 721–729.

- Accardo, A.P., S. Pensiero, S. Da Pozzo, and P. Perissutti. 1995. Characteristics of horizontal smooth pursuit eye movements to sinusoidal stimulation in children of primary school age. Vision Research 35, 4: 539–548.

- Aslin, R.N. 2012. Infant eyes: A window on cognitive development. Infancy 17, 1: 126–140.

- Becker, W., and A.F. Fuchs. 1985. Prediction in the oculomotor system: smooth pursuit during transient disappearance of a visual target. Experimental Brain Research 57, 3: 562–575.

- Blignaut, P.J. 2016. Idiosyncratic feature-based gaze mapping. Journal of Eye Movement Research 9, 3: 1–17.

- Borah, J. Technology and application of head based control. RTO Lecture series on Alternative Control Technologies, Brétigny, France, Ohio, USA, 8 October, 14-15 October.

- Brenner, E., J.B. Smeets, and A.V. van den Berg. 2001. Smooth eye movements and spatial localisation. Vision Research 41, 17: 2253–2259.

- Celebi, F.M., E.S. Kim, Q. Wang, C.A. Wall, and F. Shic. 2014. A smooth pursuit calibration technique. In Eye Tracking Research and Applications (ETRA). Safety Harbor, Florida, 26–28 March 2014.

- Corbetta, D., Y. Guan, and J.L. Williams. 2012. Infant eye-tracking in the context of goal-directed actions. Infancy 17, 1: 102–125.

- Crane, H.D., and C.M. Steele. 1985. Generation-V dual-Purkinje-image eyetracker. Applied Optics 24, 4: 527–537.

- Engel, K.C., J.H. Anderson, and J.F. Soechting. 2000. Similarity in the response of smooth pursuit and manual tracking to a change in the direction of target motion. Journal of Neurophysiology 84, 3: 1149–1156.

- Fletcher, W.A., and J.A. Sharpe. 1988. Smooth pursuit dysfunction in Alzheimer's disease. Neurology 38, 2: 272–277.

- Franchak, J.M., K.S. Kretch, K.C. Soska, and K.E. Adolph. 2011. Head-mounted eye tracking: A new method to describe infant looking. Child Development 82, 6: 1738–1750.

- Fried, M., E. Tsitsiashvili, Y.S. Bonneh, A. Sterkin, T. Wygnanski-Jaffe, T. Epstein, and U. Polat. 2014. ADHD subjects fail to suppress eye blinks and microsaccades while anticipating visual stimuli but recover with medication. Vision Research 101: 62–72.

- Gooding, D.C., M.D. Miller, and T.R. Kwapil. 2000. Smooth pursuit eye tracking and visual fixation in psychosis-prone individuals. Psychiatry Research 93, 1: 41–54.

- Gredebäck, G., S. Johnson, and C. Von Hofsten. 2010. Eye tracking in infancy research. Developmental Neuropsychology 35, 1: 1–19.

- Hansen, D.W., and Q. Ji. 2010. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Transactions on Pattern Analysis and Machine Intelligence 32, 3: 478–500.

- Holmqvist, K., M. Nyström, R. Andersson, R. Dewhurst, H. Jarodzka, and J. Van de Weijer. 2011. Eye tracking: A comprehensive guide to methods and measures. Oxford University Press: London.

- Holzman, P.S., D.L. Levy, and L.R. Proctor. 1976. Smooth pursuit eye movements, attention, and Schizophrenia. Archives of General Psychiatry 33, 12: 1415–1420.

- Holzman, P.S., and D.L. Levy. 1977. Smooth pursuit eye movements and functional psychoses: A review. Schizophrenia Bulletin 3, 1: 15–27.

- Hoormann, J., S. Jainta, and W. Jaschinski. 2008. The effect of calibration errors on the accuracy of the eye movement recordings. Journal of Eye Movement Research 1, (2):3: 1–7.

- Huang, M.X., T.C.K. Kwok, G. Ngai, S.C.F. Chan, and H.V. Leong. 2016. Building a personalized, auto-calibrating eye tracker from user interactions. CHI 2016, San Jose, California; 7-12 May.

- Hutton, S.B., and D. Tegally. 2005. The effects of dividing attention on smooth pursuit eye tracking. Experimental Brain Research 163, 3: 306–313.

- Iacono, W.G., and D.T. Lykken. 1979. Electro-oculographic recording and scoring of smooth pursuit and saccadic eye tracking: A parametric study using monozygotic twins. Psychophysiology 16, 2: 94–107.

- Kerzel, D., D. Souto, and N.E. Ziegler. 2008. Effects of attention shifts to stationary objects during steady-state smooth pursuit eye movements. Vision Research 48, 7: 958–969.

- Kliegl, R., and R.K. Olson. 1981. Reduction and calibration of eye monitor data. Behavior Research Methods & Instrumentation 13, 2: 107–111.

- Kowler, E., and S.P. McKee. 1987. Sensitivity of smooth eye movement to small differences in target velocity. Vision Research 27, 6: 993–1015.

- Levin, S., A. Luebke, D.S. Zee, T.C. Hain, D.A. Robinson, and P.S. Holzman. 1988. Smooth pursuit eye movements in schizophrenics: Quantitative measurements with the search-coil technique. Journal of Psychiatric Research 22, 3: 195–206.

- Lindner, A., U. Schwarz, and U.J. Ilg. 2001. Cancellation of self-induced retinal image motion during smooth pursuit eye movements. Vision Research 41, 13: 1685–1694.

- Madelain, L., R.J. Krauzlis, and J. Wallman. 2005. Spatial deployment of attention influences both saccadic and pursuit tracking. Vision Research 45, 20: 2685–2703.

- McConkie, G.W. 1981. Evaluating and reporting data quality in eye movement research. Behavior Research Methods & Instrumentation 13, 2: 97–106.

- Meyer, C.H., A.G. Lasker, and D.A. Robinson. 1985. The upper limit of human smooth pursuit velocity. Vision Research 25, 4: 561–563.

- Nagel, M., A. Sprenger, S. Steinlechner, F. Binkofski, and R. Lencer. 2012. Altered velocity processing in schizophrenia during pursuit eye tracking. PLoS One 7, 6: e38494.

- Nyström, M., R. Andersson, K. Holmqvist, and J. van de Weijer. 2013. The influence of calibration method and eye physiology on eyetracking data quality. Behavior Research Methods 45, 1: 272–288.

- O'Driscoll, G.A., and B.L. Callahan. 2008. Smooth pursuit in Schizophrenia: A meta-analytic review of research since 1993. Brain and Cognition 68, 3: 359–370.

- O'Driscoll, G.A., M.F. Lenzenweger, and P.S. Holzman. 1998. Antisaccades and smooth pursuit eye tracking and schizotypy. Archives of General Psychiatry 55, 9: 837–843.

- Pfeuffer, K., M. Vidal, J. Turner, A. Bulling, and H. Gellersen. 2013. Pursuit calibration: Making gaze calibration less tedious and more flexible. Proceedings of the 26th annual ACM symposium on user interface software and technology; pp. 261–270.

- Pierce, K., D. Conant, R. Hazin, R. Stoner, and J. Desmond. 2011. Preference for geometric patterns early in life as a risk factor for autism. Archives of General Psychiatry 68, 1: 101–109.

- Pola, J., and H.J. Wyatt. 1997. Offset dynamics of human smooth pursuit eye movements: Effects of target presence and subject attention. Vision Research 37, 18: 2579–2595.

- Pola, J., and H.J. Wyatt. 2001. The role of target position in smooth pursuit deceleration and termination. Vision Research 41, 5: 655–669.

- Richards, J.E., and F.B. Holley. 1999. Infant attention and the development of smooth pursuit tracking. Developmental Psychology 35, 3: 856–867.

- Sasson, N.J., and J.T. Elison. 2012. Eye tracking young children with autism. Journal of Visualized Experiments (61): e3675.

- Sharpe, J.A. 2008. Neurophysiology and neuroanatomy of smooth pursuit: lesion studies. Brain and Cognition 68, 3: 241–254.

- Sheena, D., and J. Borah. 1981. Compensation for some second order effects to improve eye position measurements. In Eye Movements: Cognition and Visual Perception. Edited by D.F. Fisher, R.A. Monty and J.W. Senders. Lawrence Erlbaum Associates: Hillsdale.

- Simons, R.F., and W. Katkin. 1985. Smooth pursuit eye movements in subjects reporting physical anhedonia and perceptual aberrations. Psychiatry Research 14, 4: 275–289.

- Soechting, J.F., H.M. Rao, and J.Z. Juveli. 2010. Incorporating prediction in models for two-dimensional smooth pursuit. PLoS One 5, 9: e12574.

- Souto, D., and D. Kerzel. 2011. Attentional constraints on target selection for smooth pursuit eye movements. Vision Research 51, 1: 13–20.

- Spering, M., and K.R. Gegenfurtner. 2008. Contextual effects on motion perception and smooth pursuit eye movements. Brain Research 1225: 76–85.

- Swirski, L., and N. Dodgson. 2013. A fully automatic, temporal approach to single camera, glint-free 3D eye model fitting. European Conference of Eye Movements (ECEM), Lund, Sweden.

- Takarae, Y., N.J. Minshew, B. Luna, C.M. Krisky, and J.A. Sweeney. 2004. Pursuit eye movement deficits in autism. Brain 127, 12: 2584–2594.

- Thier, P., and U.J. Ilg. 2005. The neural basis of smooth-pursuit eye movements. Current Opinion in Neurobiology 15, 6: 645–652.

- Tobii Technology. 2010. Product description, Tobii T/X series eye trackers, rev. 2.1. June 2010. Tobii Technology AB.

- Van Donkelaar, P., and A.S. Drew. 2002. The allocation of attention during smooth pursuit eye movements. Progress in Brain Research 140: 267–277.

- Van Gelder, P., S. Lebedev, P.M. Liu, and W.H. Tsui. 1995. Anticipatory saccades in smooth pursuit: Task effects and pursuit vector after saccades. Vision Research 35, 5: 667–678.

- Von Hofsten, C.V., and K. Rosander. 1997. Development of smooth pursuit tracking in young infants. Vision Research 37, 13: 1799–1810.

Copyright © 2017 International Association of Orofacial Myology