Sampling Rate Influences Saccade Detection in Mobile Eye tracking of a Reading Task

Abstract

Introduction

Methods

Participants

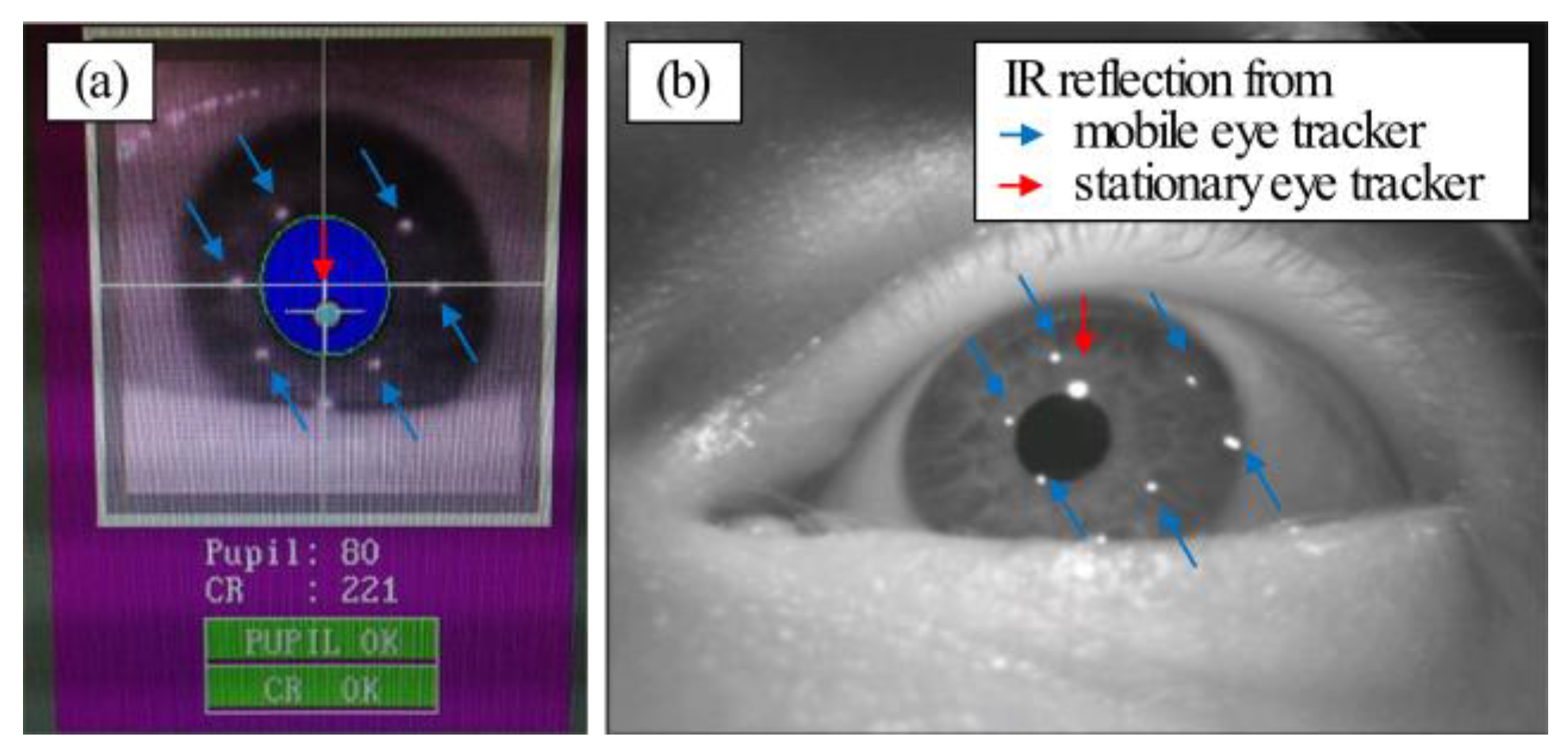

Equipment and Experimental Procedure

Analysis

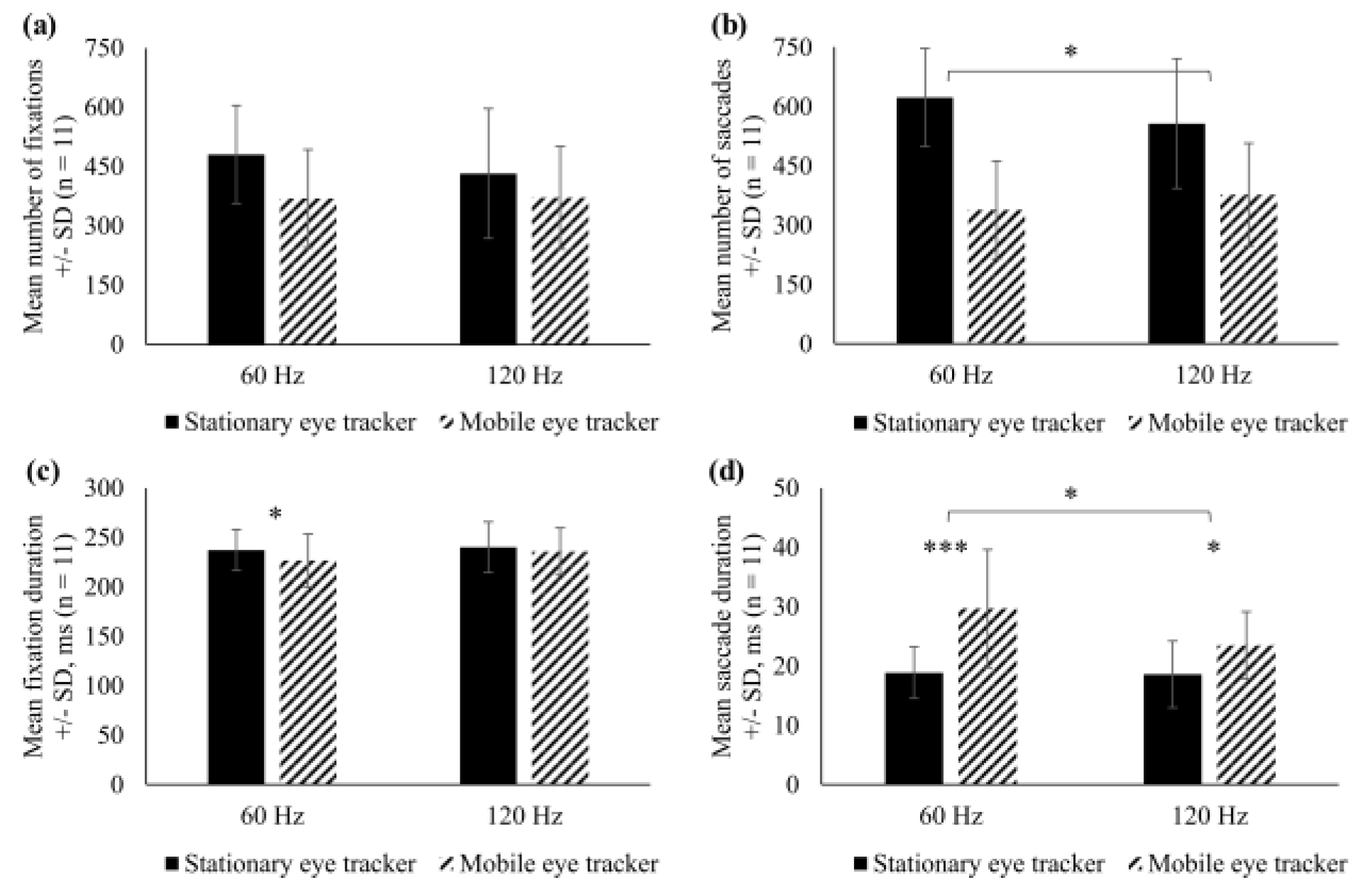

Results

Discussion

Mobile Eye Tracking and Reading

Event Detection Algorithms for Eye Movement Data

Head-Worn vs. Head-Fixed Eye Tracking

Fixation Statistics in Reading

Future Implications in Virtual Reality Applications

Conclusions

Ethics and Conflict of Interest

Acknowledgments

| Parameter | 60 Hz mobile eye tracker (mean ± SD) | 120 Hz mobile eye tracker (mean ± SD) | Relative difference between mobile eye trackers |
| --- | --- | --- | --- |
| Number of saccades | 56.11 ± 12.44 % | 68.37 ± 13.97 % | 12.25 % * |
| Duration of saccades (ms) | -10.81 ± 7.51 ms | -4.89 ± 2.76 ms | 5.91 ms * |
| Number of fixations | 76.72 ± 18.67 % | 86.41 ± 15.43 % | 9.69 % |
| Duration of fixations (ms) | 10.55 ± 10.13 ms | 4.30 ± 14.33 ms | 6.25 ms |

Copyright © 2017 International Association of Orofacial Myology

Share and Cite

Leube, A.; Rifai, K.; Wahl, S. Sampling Rate Influences Saccade Detection in Mobile Eye tracking of a Reading Task. J. Eye Mov. Res. 2017, 10, 1-11. https://doi.org/10.16910/jemr.10.3.3
