1. Introduction

Can Digital Twins (DTs) [1,2] really make cities smart? The pressure to transform cities into Smart Cities is mounting amongst urban policymakers worldwide [3]. Initially, the hope was that smartification would be instilled in cities by simply creating citywide Internet of Things (IoT) installations and remote sensing applications [4,5,6]. The idea behind this thinking is simple yet alluring: to have everything in the city attached to the Internet and make it proclaim its own state as if it were a smart object [7] remotely observing everything [4,6]. Once observed and connected, everything should become measurable. In such a Smart City, every minute detail, if measured, can be included in the analysis. This would allow for the uncovering of new insights [8] and give stakeholders a deeper understanding of processes that were previously thought to be fully understood.

However, the complexity of the collected data, the observed processes, and the city itself requires a unifying view. A DT [9] provides a platform on which cities can become really smart by shifting attention from data collection to data use. Here, a digital representation of the city allows for modelling and predicting its ever-changing state via simulations [10,11,12,13,14]. Unfortunately, until now, the Virtual and Physical Environments of cities remain insufficiently interconnected [15]. At best, DTs are remotely displayed in city administration offices to obtain insights [9,16]. However, DTs have the potential to inform decision-making beyond administration, on all levels from citizens to politicians and planners [17]. To realise such a wide-ranging implementation of a DT for a Smart City, its data must be accessible in situ within its physical context. Situated visualisations [18,19] enable sensing and actuating in the environment with which they are combined.

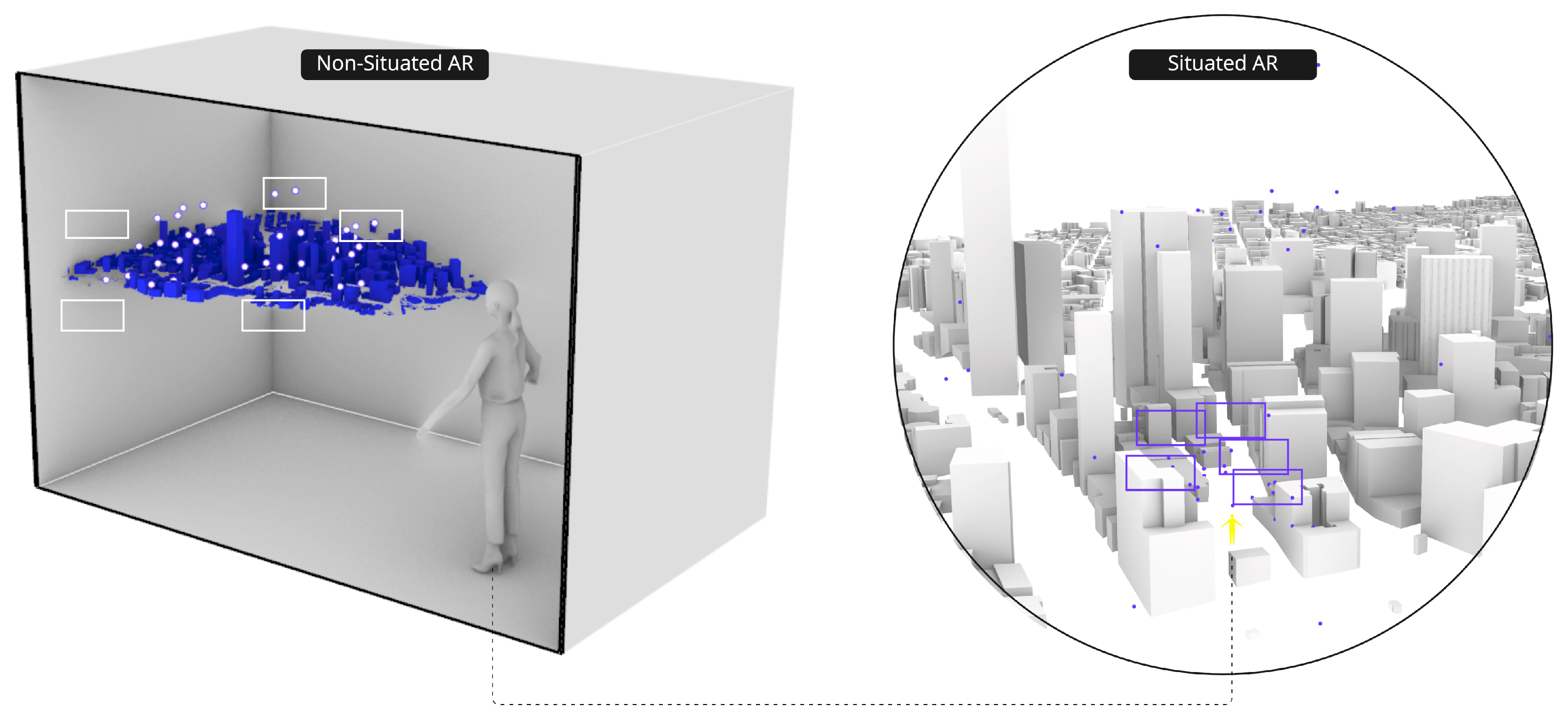

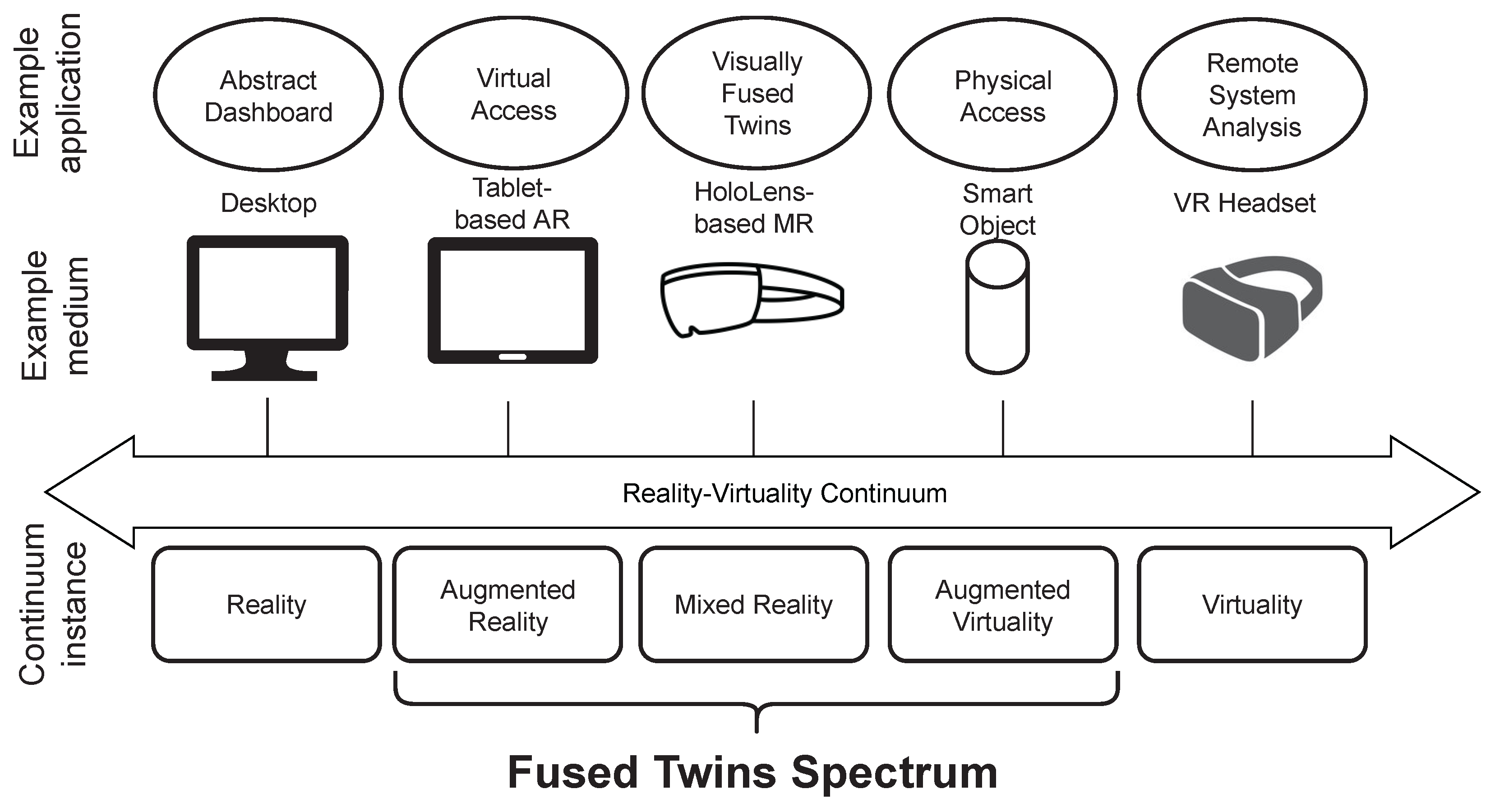

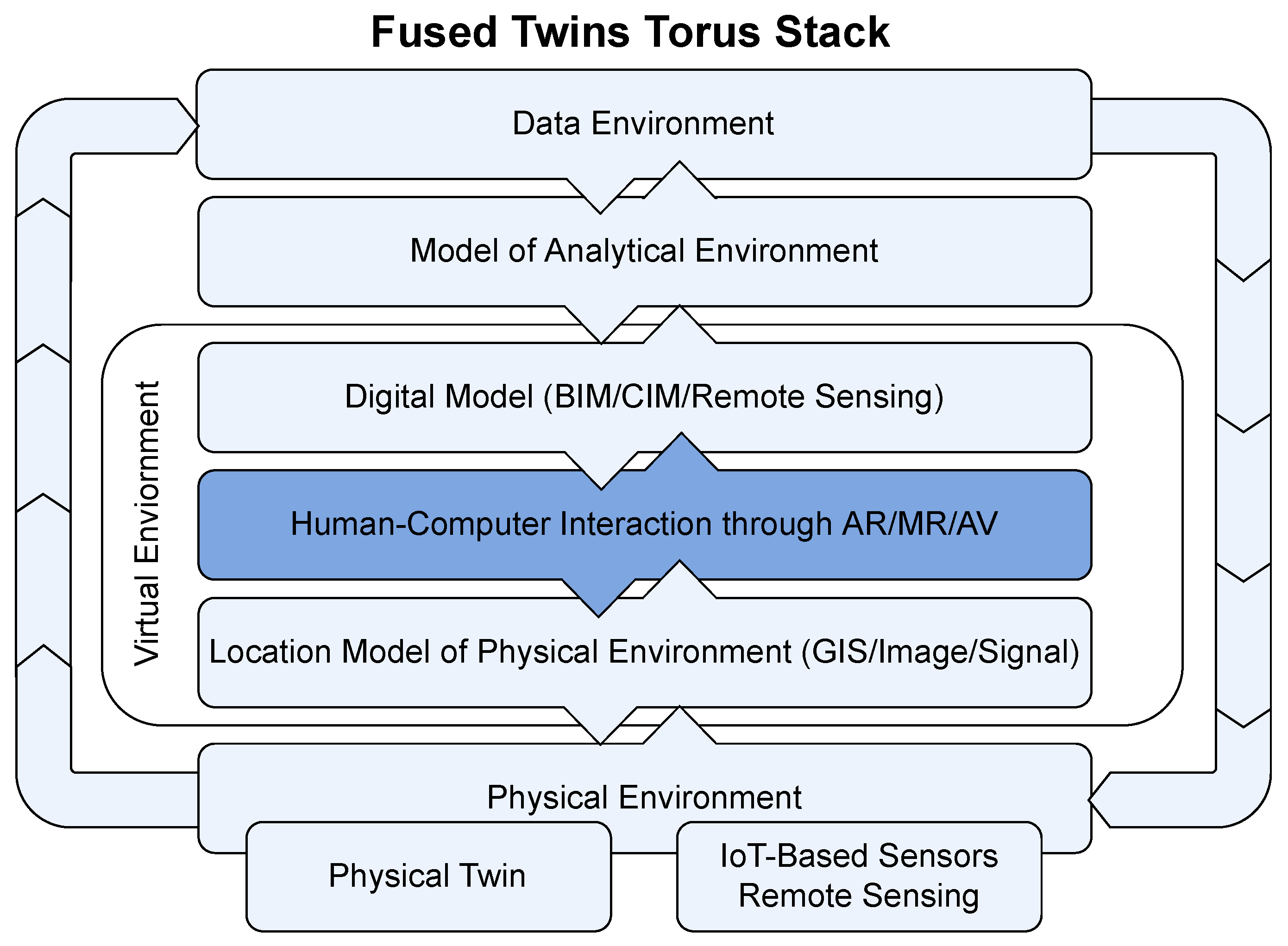

We introduce the Fused Twins (FTs) paradigm (see Figure 1) to enable the conceptualisation of an accessible Smart City. (On a grammatical side note, FTs are always plural because they consist of both the Physical Twin and the Digital Twin and their interaction.) We argue that there is a need to clarify the definitions of DTs, Situated Analytics, and Smart Cities with an emphasis on the visualisation and development of a practical interface. The FTs concept aims to embed the DT in its Physical Twin (PT), fusing them via Extended Reality (XR) applications [17]. Throughout this paper, we follow the working hypothesis that DTs combined with Situated Analytics enable lower cognitive load, enhanced cognition, and easier embodiment; and therefore, FTs can make Smart Cities more accessible to users ranging from managers and policy makers to citizens. Ultimately, only when data are accessible where they are most needed and understandable to those who consult them can DTs fulfil the promise that IoT alone could not: to power Smart City developments by interlinking the Physical and Virtual Environments.

This review is structured in four main sections. To define the term FTs, we need a thorough understanding of Smart Cities, Situated Analytics, and DTs. The first three sections cover the necessary background to develop, understand, and apply the FTs paradigm. We dedicate Section 2 to gaining an understanding of how Smart Cities arose as a research field and why they converge towards DT research. Section 3 covers Situated Analytics as a novel data exploration approach for Smart Cities. The similarity in the implementation structure leads us to the following section on DTs. Section 4 elaborates on DTs. We provide a definition following [23], where a DT is constructed using five components (see Figure 2). DT is a vague term that requires multiple clarifications in terms of composability (Section 4.1), servicisation (Section 4.2), and differences among cognate terms (i.e., functionally and historically related but slightly different terms [24]; Section 4.3). Finally, in Section 5, we cover the FTs concept in depth, including the required steps to implement FTs, and classify examples of de facto FTs in the recent literature. Section 6 provides an in-depth discussion on Smart Cities (Section 6.1), Situated Analytics (Section 6.2), DTs (Section 6.3), and the newly introduced FTs paradigm (Section 6.4).

Beyond the definition for FTs, we also provide several new points on a wide range of topics. These topics have often been covered shallowly in shorter research papers, which has left a great potential for confusion. In this review, we try to resolve some of this confusion, especially in the context of DTs.

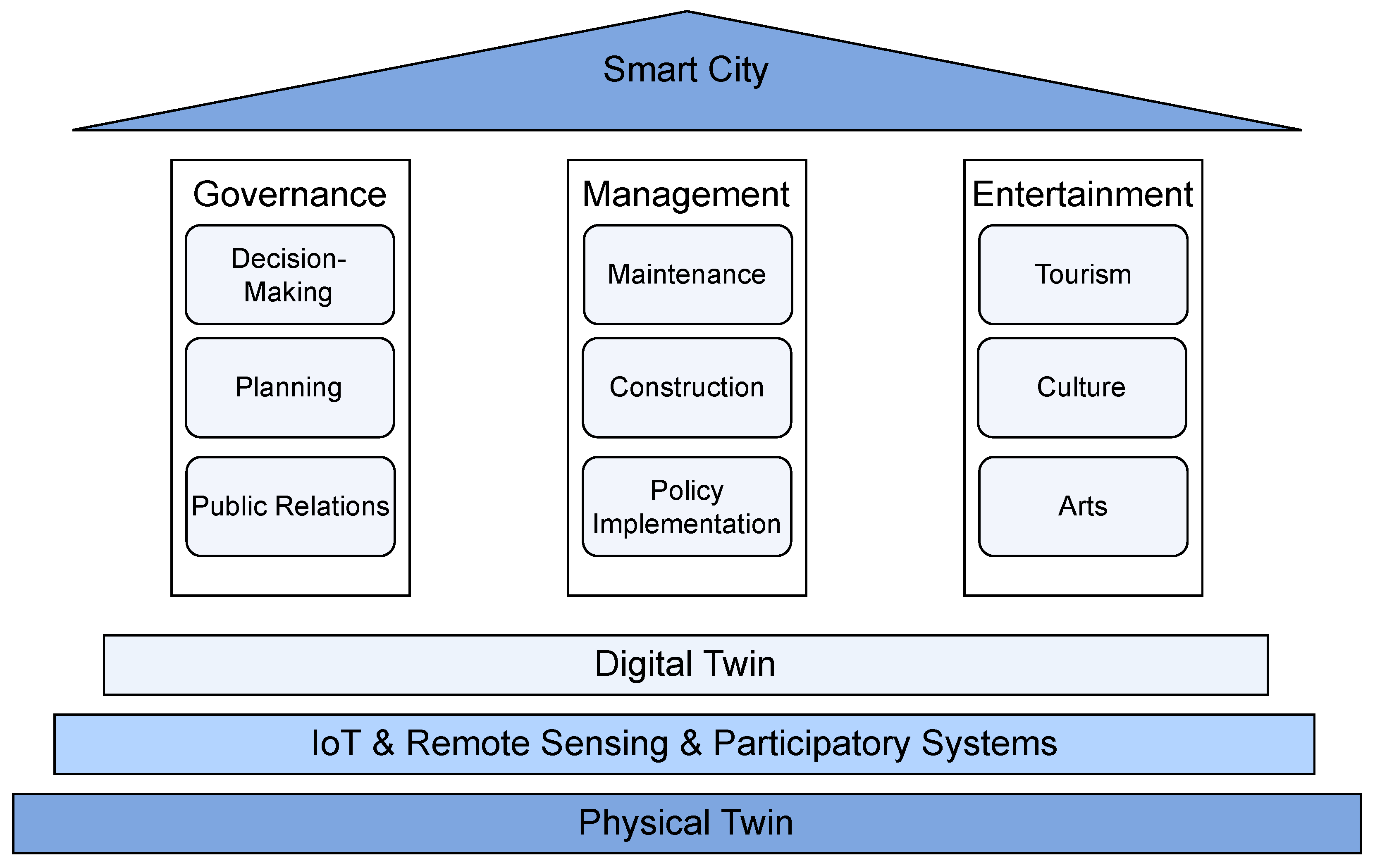

2. Smart Cities

Smart Cities are an inherently technological approach to resolving urban issues that span economic, social, environmental, and governmental aspects [

25] (see

Figure 3). Smart Cities are constituted using bench-marking indicators that can be constructed from technological sources [

26]. This technologist approach only provides a narrow perspective [

27] that is often divided along disciplinary boundaries [

28]. The weakness in the Smart City concept is juxtaposed with its necessity because technological advances are one of the few options to manage the complexity of modern cities [

25]. However, at the same time, it must be acknowledged that there are other perspectives or “urban imaginaries” [

29] beyond Smart Cities such as resilient cities [

30], intelligent cities [

26], and sustainable cities [

31]. In the remainder of this section, we will expand on the evolution of “Smart Cities” from the nineteenth century all the way to its portrayal in contemporary urban discourse.

Throughout their evolution, advances in ICT have repeatedly helped cities to cope with growing complexity [

32,

33,

34]. During the nineteenth century, industrial cities were faced with the management challenges of large industrial enterprises as well as new administration challenges due to unprecedented population growth. The need to process information and communicate it quickly over great distances led to the invention of the telegraph, which enabled the industrial city to keep growing [

32,

35].

This transformation, from a society in which communication was impeded by distance to a society in which distance is addressed using ICTs, has forever changed humanity and cities [

35]. On the one hand, critical obstacles to the growth of cities were removed with the integration of technologies that enabled businesses to flourish and municipalities to govern more effectively. On the other hand, futurists such as Toffler [

36] and Negroponte [

37] predicted that these technologies would lead to the “death of distance”, with improved telecommunications technologies making cities, and space itself, obsolete. Their basic proposition was that electronics would eliminate the need for face-to-face interactions and that cities, which at their core facilitate those interactions, would therefore themselves become obsolete [

36,

37]. Clearly, this is not yet the case. Major cities have become points of intense investment in ICT because the production, exchange, and dissemination of information were and still are critical to their function. Paraphrasing [

38], the shift from goods handling to service and information industries has made cities into communication systems that are central to information flow in the global economy [

38].

Today, the integration of ICTs into Smart Cities has become a complex man-made creation that is unparalleled in its cascading effects on every aspect of the city and its citizens [

32,

33]. Two innovations in the 20th and 21st centuries have enabled this evolution: the rise of the Internet infrastructure for networked computers and mobile personal computing. In the words of [

33], “The democrati[s]ation of computing power that started with the PC in the 1970s and leap[t] onto the Internet in the 1990s is now spilling out into the streets [

33]” which can be interpreted as the rise of IoT as part of the Smart City landscape.

Contemporary ICTs have restored the importance of space to the previous discourse on urban telecommunication [

39]. Everything and everyone in cities generate geo-taggable information which can reveal the position of a multitude of stationary or moving objects in real time [

39,

40]. Geo-tags enrich space with contextualised electronic content and are a fundamental dimension of a sort of return to space, or spatial turn, to use the expression coined by urban geographer Soja [

41]. This spatial turn of digital technologies has been reinforced by the proliferation of electronic interfaces and wireless communications, which realise the notion of Ubiquitous Computing or Ambient Intelligence [

33,

39,

42,

43,

44,

45] and ultimately underpin a DT.

Sometimes visible, but more often hidden, countless chips and sensors allow objects, people, and vehicles to be located; consumption levels and transactions to be recorded; and temperatures, pollution levels, population densities and flows to be measured [

46,

47,

48]. The possibility of transforming every Thing in the built environment into something more “smart” appears more feasible than ever before. Low cost, miniature sensors enable everything to sense and be sensed. The ability to perform analytics over the data collected has become ubiquitous, enabling Things to “think”, while wireless communication technology enables Things to “talk” to one another as part of the Internet of Things (IoT) [

33,

37,

39,

40]. However, as noted earlier, this dream of smartification has not been realised due to the increasing complexity of cities. It increasingly looks as if a DT is required to provide the necessary abstractions to enable the Smart City.

Ambient Intelligence has taken on many forms that resemble the functionality of DTs, but they remain disconnected and often disjoint. This leaves it up to the observer to connect the dots, forming the meta-analysis on the city level. Examples of Ambient Intelligence range from trash cans and parking lots to streetlights. Smart waste disposal containers in Barcelona are able to know when they have reached their maximum capacity and must be serviced [

49]. Smart parking uses sensors to know if a parking lot is free or not. Citizens can then be directed to available parking in the London borough of Westminster [

50]. In the Future City Glasgow project [

51], streetlights can be equipped to sense and analyse what is happening below them. Sensors collect data on temperature, humidity, noise, and movement, and interpret the data into a functional “information-scape”. The streetlights can then respond in real time by adapting their light intensity or by communicating the interpreted data to the nearby police station, reporting any ongoing crime.

There are also even more ambitious approaches towards constructing a DT. The Spanish city of Santander with 180,000 inhabitants installed 20,000 sensors to monitor temperature, luminosity, ground humidity, and available parking slots [

52]. Paris’s 120,000 trees have each been equipped with a radio-frequency identification (RFID) chip [

53]. In a large number of towns, vehicular traffic is monitored in real time through technology ranging from induction loops, to wireless sensors, to video cameras. In Singapore, this monitoring enabled the introduction of one of the first dynamic pricing systems, which consists of making drivers pay in proportion to the desirability and, more importantly, the congestion levels of thoroughfares [

39]. This new capacity of cities to detect, measure, and record what is happening within them is truly unprecedented in their history [

33,

39,

40] and yet requires new infrastructure to manage the sheer complexity [

54,

55]. In the words of [

56]: “If aerial photography revealed the skeletal structure of the city during the nineteenth century, the revolution brought about by contemporary ICTs is likely to reveal its circulatory and nervous systems—as it reveals the “real-time city”. For the first time in history, cities can be understood as a whole, the way biologists see a cell—instantaneously and in excruciating detail, but also alive [

56]”.

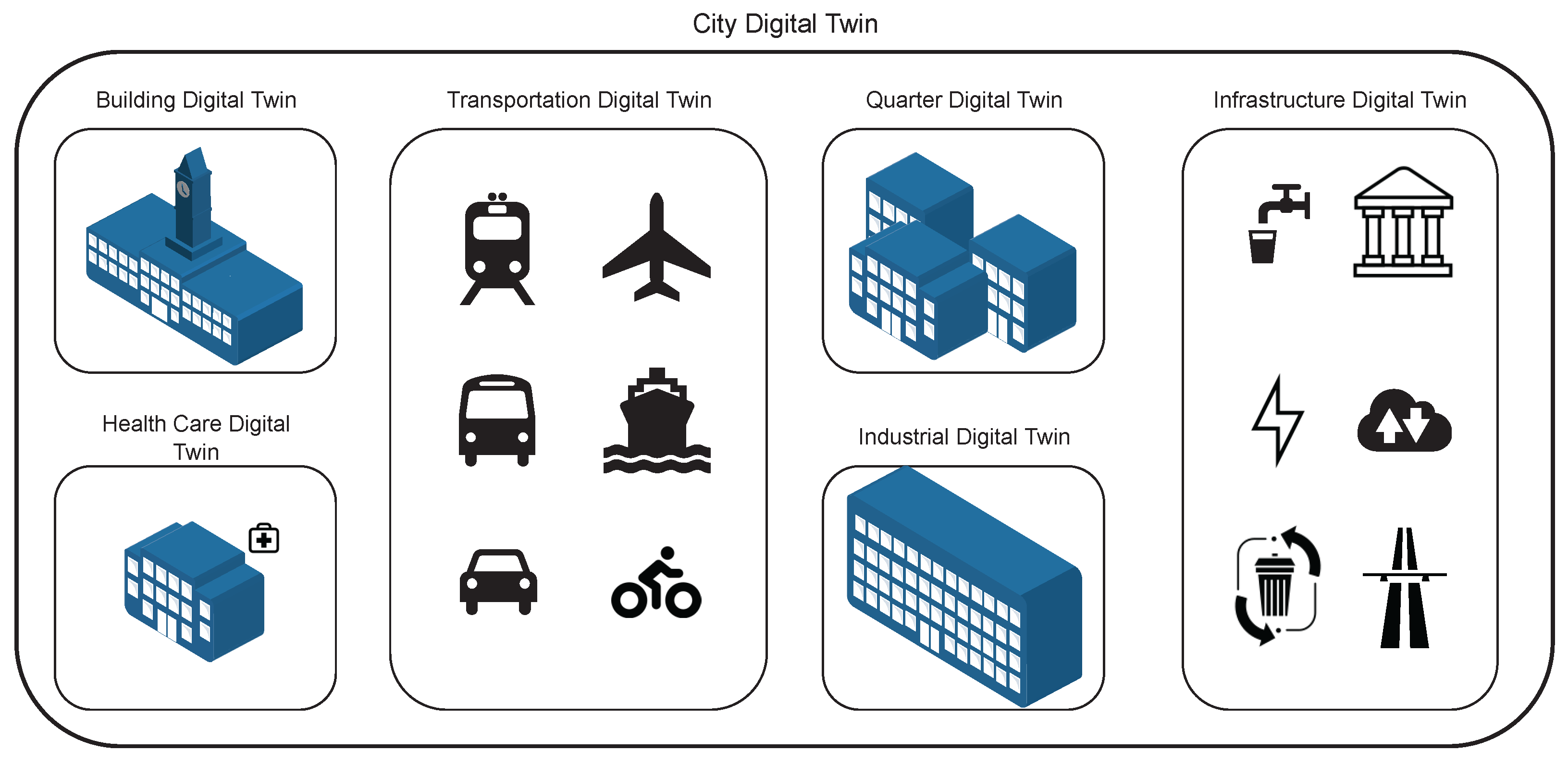

This new understanding of real-time urban dynamics is not (yet) centralised, nor is it guaranteed to be the property of city governments and planners. If servicised properly and arranged as DT components, it may remain distributed in nature with a low barrier to entry and can be accessed from nearly everywhere at nearly any time. The ongoing rise in personal mobile computing, especially smartphones, is transforming how individuals perceive and interact with the urban space that surrounds them. Situated Analytics powered by DT infrastructure would provide citizens, tourists, and other users unprecedented insights into the city to help them in their everyday life and in planning how to use the city in the future. Using DTs to power Smart Cities will make analytical tools available to everybody and will make them accessible in a spatial context that will democratise the understanding and use of the city. To realise this vision of a Smart City and deal with the complexity that is already plaguing current implementations of Smart Cities, DTs offer a bedrock. The composability of separate DTs into a city-wide DT, underpinning the Smart City, is a promising approach (see Figure 4).

3. Situated Analytics

Augmented Reality (AR) combines computer-generated visual content in 3D with the real world by superimposing virtual content onto a view of the real world [

57,

58]. The principles of an AR system are three-fold: combining real and virtual elements, registering in three dimensions, and, importantly, providing interactions in real time [

57]. There are at least two categories of approaches to produce AR. First, screen-based approaches, or “see-through AR”, can be divided into the optical combination of a screen with the user’s perception of the real world or 2D displays that replay a live recording of the real world and superimpose virtual content [

57,

59]. Second, projector-based approaches, or “spatial AR”, consist of projecting the virtual content directly onto the real world, benefiting from projection mapping algorithms and hardware [

59,

60,

61]. The last decades have refined see-through AR and, today, the main lines of AR systems are based either on the HoloLens for optical systems or on ARKit or ARCore for display-based AR on smartphones and tablets. With these systems maturing to the point where they are both easy to use and easy to generate content for, AR applications are going mainstream [

62] and find applications across all industries [

63], commerce [

64], entertainment [

65], education [

66], and medicine [

67].

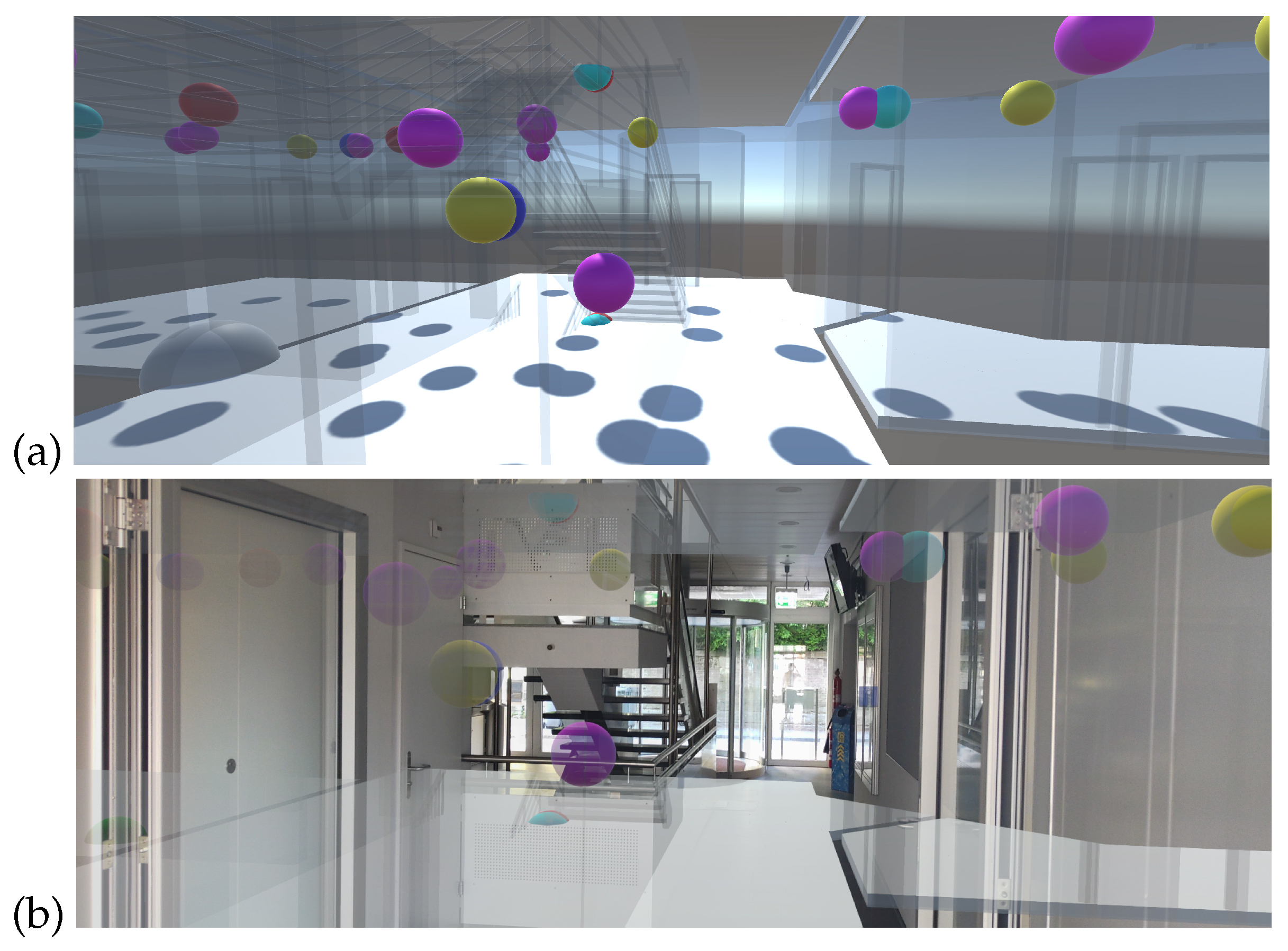

As AR becomes more than a gadget, it opens the door for immersive analytics [68] of data and information either in situ or remotely (see Figure 5). Whereas the systems to display AR have been developed over the last decades, the visualisation of information in this new medium is still in its infancy and yet rapidly evolving [

69]. For example, traditional visualisation on 2D screens and print is a well established field with many well known representations and toolboxes, and high literacy among producers and consumers. However, most spatial 3D visualisations are still hand-coded and often imitate 2D visualisations. 3D visualisations also offer the opportunity for interaction and embedding in the augmented space [

70,

71], possibly easing cognitive load and enhancing cognition [

72] through externalisation [

73].

The development of AR technologies also enables new types of interaction with digital content. Specifically, embodied interaction is a concept that reconnects the fields of tangible and social computing and argues that interaction is fundamentally physical and social. Thus, failing to consider these aspects in interaction design would be a fallacy [

74,

75]. Embodied interaction is defined as “the creation, manipulation, and sharing of meaning through engaged interaction with artifacts” and places the responsibility of meaning-making on users rather than designers [

74]. The level of responsibility for meaning-making at the object’s level is driven by different definitions of affordances across research fields that have yet to be reconciled [

76]. There is an epistemological difference between affordances as properties of the object or interaction [

77] and affordances as properties of the users’ mental representation [

78]. From both Normanian [

78] and Gibsonian [

77] perspectives, AR can be viewed as deeply anchored in the physical world and therefore demonstrates strong affordances for embodied interaction [

57]. Moreover, AR supports a higher degree of embodiment than 2D displays by enabling high levels of sensorimotor engagement and immersion [

79].

For example, anchoring and embedding visualisations in the physical 3D world can offer tangible ways to explore one’s physiological data [

80] and, to some extent, one’s own “personal” DT. Tobe, for instance, is an AR tangible humanoid DT used to explore one’s physiological data, such as heart rate and breathing patterns, and was used in a social context to let two users relax together via data observation [

81]. Similarly, Teegi is an AR tangible humanoid DT used to explore one’s brain activity via electroencephalogram visualisations [

82]. Non-humanoid representations have also been explored. Inner Garden is an augmented sandbox embodying one’s physiological state, representing breathing patterns as waves and cardiac coherence as weather [

83]. In the wider context of embodied interaction, users’ bodies have also been explored as canvases for digital content [

84], for example, by co-locating display and interaction on the users’ hands [

85] or utilising embodied metaphors, such as mimicking scissors with one’s hand to cut through digital content [

86]. Tests of embodied AR approaches have also been initiated for data exploration in the context of geographical data for visually impaired users [

87,

88,

89].

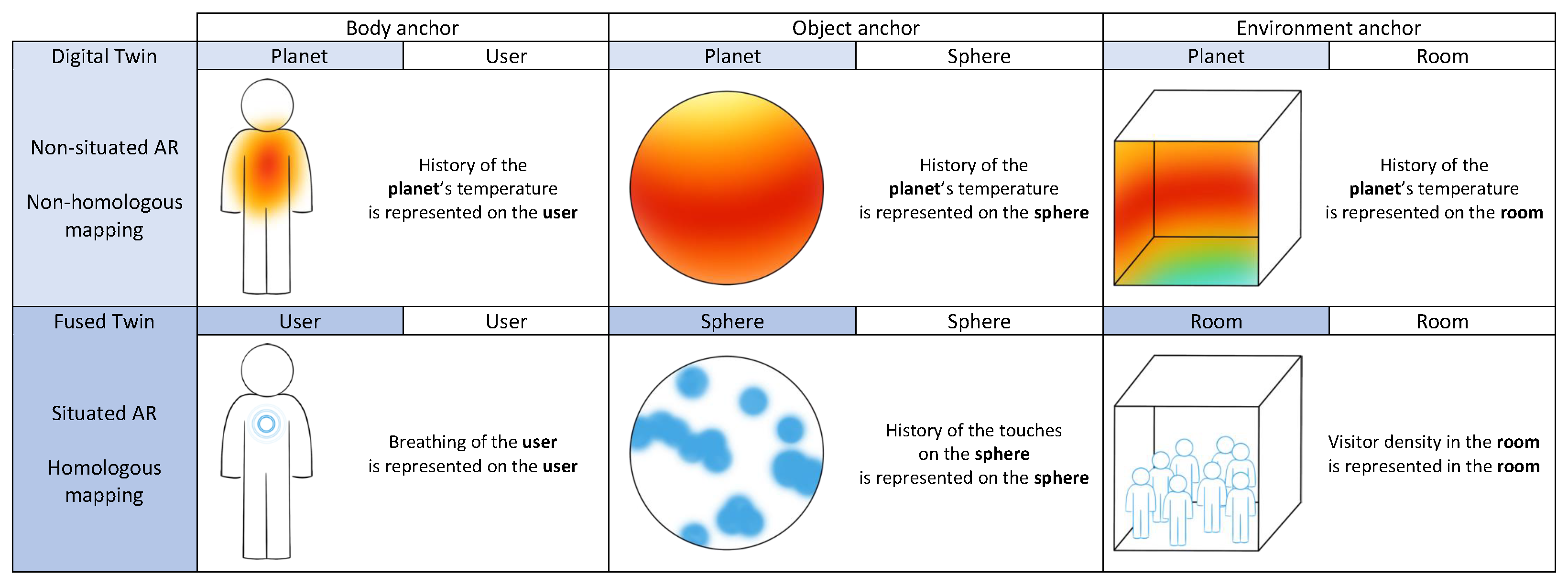

The integration of users’ bodies and their physical context during interaction with digital content has been conceptualised. Towards that end, different physicalities of embodiment have been described: body-centred, object-centred, and environment-centred embodiment [

90,

91]. We offer examples of embodied DTs and FTs following this categorisation in Figure 6. These categories themselves include direct-embodied, enacted, manipulated, surrogate, and augmented approaches [

90,

91]. The direct-embodied approach considers the users’ bodies as primary constituents of cognition and therefore the body state is used to convey information. In the enacted approach, bodily actions are considered a form of enacting knowledge through movement. The manipulated approach uses the body to directly manipulate a representative object. The surrogate approach uses a physical avatar that performs an interaction with the object. Finally, in the augmented approach, the body is used to manipulate an avatar to interact with the larger environment. The impact of the physicality of embodiment on interaction and cognition is not yet clear. However, these considerations are of importance because a mismatch between activity design and type of embodiment can result in decreased movements, persistence, and learning outcomes [

92].

When discussing the spatial context of data visualisation and data embodiment, we also ought to consider the role of the users’ bodies in the interaction. Often, the users’ bodies are solely considered as physical entities (i.e., Körper) utilised to push buttons and perform actions, rather than feeling entities (i.e., Leib) [

93]. Novel approaches such as somaesthetic appreciation design [

94,

95] describe the manner in which users’ bodies may be integrated in the design process as well as in the experience with digital content and data. Empirical evidence suggests that bodies’ representations impact the sense of embodiment [

96,

97], as well as the types of gestures performed to interact with a system and the time spent observing and interacting with data [

98]. In this regard, the challenge of FTs extends beyond meaningfully anchoring virtual data onto a physical context to include the design of the interaction with the augmentation from an embodied, somaesthetic perspective [

74,

94,

95].

Two further problems arise when visualising embodied information in augmented space. First, most traditional authoring tools are focused on 2D visualisations, and new toolkits such as DXR [

99] and RagRug [

100] have only provided the basis to display 2D visualisations of data in 3D. Second, the benefit of the third dimension is difficult to grasp and even more difficult to implement. Most modern immersive analytics actually rely on embedding 2D displays into the real world [

17,

99,

100,

101]. Visualisations in 3D are typically derived from the previous 2D media, but there is a trend towards exploring new possibilities. For example, multiple 2D visualisations can be arranged to use the third dimension to filter information and visualise this filtration [

102].

Situated visualisations are less represented in the literature. Recently, there has been some defining work that characterised the types of enhancements that immersive analytics may provide through enhanced vision and visual synesthesia [

72]. Addressing the question of how situated visualisations might improve cognition is an ongoing research area. The supposition has been put forth that cognition may be improved by enhanced attention, enhanced numeracy, enhanced recall, enhanced comparison, and enhanced prediction [

72]. Moreover, theoretical frameworks such as embodied, situated, and socially situated cognition support the idea that cognition is deeply rooted in its physical and social context [

103,

104,

105]. However, there are no empirical evaluations yet because, so far, researchers have not focused on the embodiment of the user in the analytics or on understanding how the spatial context can reduce cognitive load or enhance cognition.

Some research is starting to address the impact of the third immersive dimension on visualisation possibilities [

106]. For example, one small study demonstrated that a toolkit to create new immersive visualisations helped to keep users engaged for longer and produce more elaborate immersed visualisations. We have a clear understanding of how 2D visualisation improves cognitive load through externalisation [

73], but for AR, the evidence is less clear [

107,

108,

109,

110,

111]. Previous work has proposed that 3D visualisations can improve cognition by reducing task switching with visual cues [

112,

113] and context-sensitive displays [

114]. Moreover, recent work also explores the potential of AR to reduce negative split-attention effects in multimedia activities [

85,

115,

116]. Split-attention effects [

117] occur when two related sources of information are spatially or temporally distant. However, split attention is not always negative. For example, indexing (i.e., connecting multiple data representations through pointing gestures) is investigated as a mechanism for sense-making [

118]. The distance between information sources can also be reduced using AR, in particular the FTs paradigm, because it co-locates physical and virtual entities in real time [

57,

115,

116,

119,

120]. In addition, overwhelming displays of information have been shown to decrease performance [

121]. There is no definite answer as to how immersive analytics ultimately improves users’ performance, but theoretical arguments keep this research area active.

Furthermore, the spatial context in the augmented space has often been used as an empty canvas [

99] instead of a source of information [

122]. Most commonly, this spatial context has been used for geographic representations to show the built environment either in the past through remote sensing or as planned in the future [

122]. The subfield of Situated Analytics [

19] combines data with its spatial context to achieve a form of virtual data physicalisation [

123] and embodied interaction [

70,

74,

75,

85,

86] that enables humans to maximise the use of all senses to perceive the data (see Figure 7). Indeed, information on cities can be embedded in the situated context with meaningful 3D representations that users can explore. For example, situated AR has been used to teach historical events by anchoring the relevant data in the actual physical context of these events [

124]. The real world can become the canvas to help users make sense of the information in situ [

125].

4. The Digital Twin

Before we explore how FTs can make DTs more accessible, we will review DTs, their historical background, and their relations to other adjacent technologies and the wide range of definitions that accompany them. Historically, the conceptualisation of DTs originated from the industrial branch of IoT developments in 2003 [

1]. The concept was loosely defined at the time but already included the most important components: a physical object, a virtual object, and their connections [

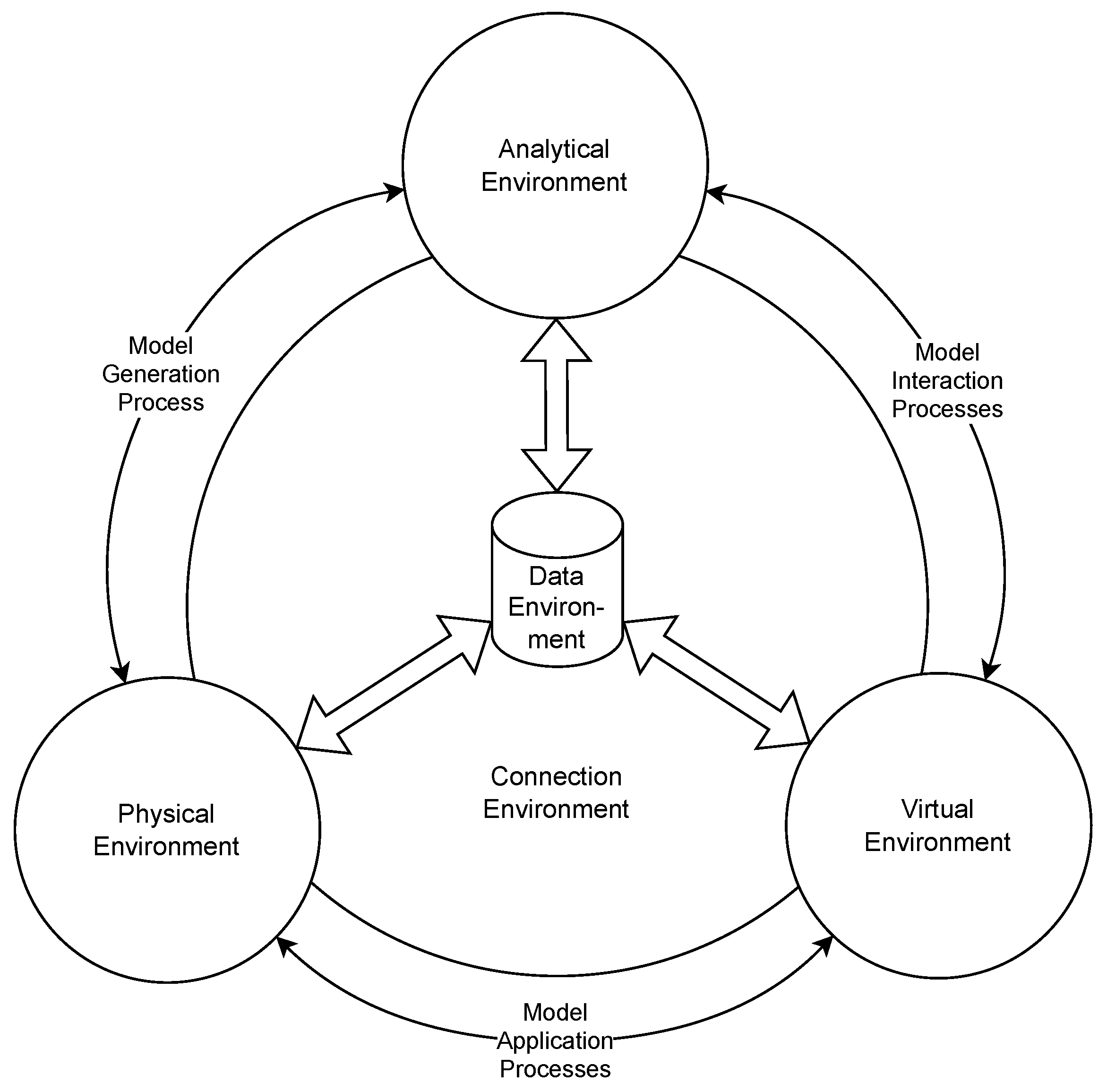

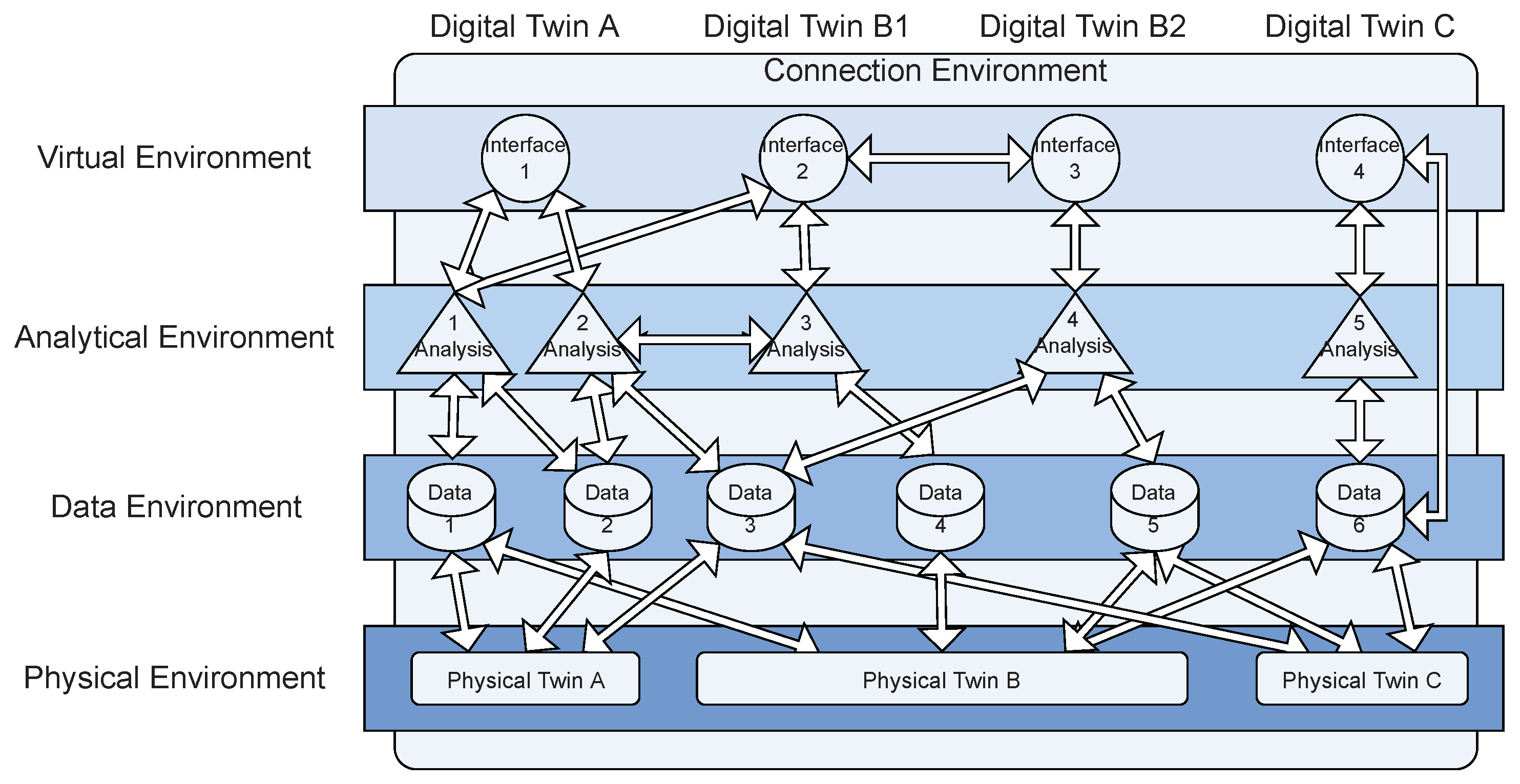

2]. Throughout this section, we will expand this understanding to reach our definition of a DT based on composability and the five components, the Physical Environment, the Data Environment, the Analytical Environment, the Virtual Environment, and the Connection Environment (see

Figure 2).

Historically, there was a lag in the adoption of DTs because the technologies necessary to effectively implement a DT were not yet developed [

8]. In the 2010s, the DT concept outgrew its industrial roots by capturing cyber–physical integration as well as representing the physical world virtually [

127,

128]. As DTs grow in size from factories and cities [

129] to the globe [

130], remote sensing with drones and satellites plays an increasingly important role in keeping DT representations up to date but also offers an opportunity for interactions with the city through interactive mobile display technology [

61]. For a full review on current DT applications, we refer to [

54]. Here, we will elaborate the definitions of DTs to form a common basis from where we can develop the concept of FTs.

DTs have been “hyped” according to Gartner as one of the top ten most promising technological trends of the previous decade [

8,

131,

132,

133,

134]. Beyond the hype, across many different definitions, DTs fuzzily describe any software aiming to closely represent something in reality [

135]. These qualities are in line with common and older definitions such as Cyber–Physical Systems (CPSs) [

136], Ubiquitous Computing (UC) [

46], and Mirror Worlds [

137], which raises the question of whether we can (and should) draw a line between DTs and these concepts. Here, we argue that DTs should be approached as an analogy to a mental model that helps to structure information and actions and to attach them to the real world through appropriate mechanisms such as the FTs concept.

In this review, we next situate DTs in the context of their predecessors. DTs are a specialised form of Cyber–Physical System (CPS) [

136] because they focus on the accurate representation and manipulation of processes in the real world with a model. DTs are similar to Ubiquitous Computing [

46] because they attach computations to real-world objects, but without the FTs paradigm, DTs are ultimately not Ubiquitous Computing because the computations are detached from the embodiment of the represented object. Fusing the PT and DT [

17,

101] joins CPS properties with the PT to produce truly Ubiquitous Computing as envisioned by Weiser [

46].

Whereas Ubiquitous Computing has no clear requirements for the kind of computations to be performed, there is a common theme across different definitions of DTs with respect to the need for simulations to predict a system’s response to critical events. The aim to predict often goes beyond simulating known events towards understanding deviations between the actual observations and the predictions to indicate unknown issues [

138,

139]. Furthermore, DTs are often expected not only to report on the state of the system, but to also actively manipulate the system through actuators [

2,

54,

139]. The tension between the accuracy of DT predictions and computational capacity is captured by the idea of Mirror Worlds [

137], which perfectly imitate reality like a mirror. Early ideations of the DT therefore required the underlying model to support similitude [138]. Similitude requires the implementation of a multi-physics, multi-scale, probabilistic, ultra-fidelity simulation that mirrors the PT based on historical data, real-time sensor data, and a physical model. The “ideal DT” requires similitude to become as close as physically possible to mirroring the real world. Indeed, DTs need to model the mutual dependency of hardware and software to tightly couple the PT and the DT, producing high-quality simulations. Until recently, most simulations were feasible only for certain sub-components of complex systems that relied on scientific knowledge and real observations to produce a more accurate offline simulation. Therefore, the requirements of similitude have only been satisfied in specialised cases such as with the components of flying vehicles [

138]. New computational methods increase simulation performance such that events in the DT can be simulated fast enough for predictive purposes in the real world [

140]. However, even with respect to aircraft, there are only plans to produce a DT of a whole vehicle [

141,

142] which are expected to be completed in the coming decade. Regarding the sheer scale of cities, the Mirror Worlds that would underlie a Smart City seem to be a distant dream. Due to the simulation complexity of large-scale systems such as cities, researchers have since called for a less rigorous model definition to make DTs relevant to real-world applications [

9].
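The idea mentioned above, that deviations between a DT's predictions and the actual observations can indicate unknown issues, can be illustrated with a minimal sketch. It assumes that predictions and observations arrive as equally long lists of numbers; the function name `flag_deviations`, the threshold `k`, and the `warmup` length are hypothetical choices for illustration and are not taken from the cited works.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_deviations(
    predicted: Sequence[float],
    observed: Sequence[float],
    k: float = 3.0,      # assumed threshold in standard deviations
    warmup: int = 5,     # assumed number of residuals needed before flagging
) -> List[int]:
    """Return indices where the observation departs from the prediction
    by more than k standard deviations of the earlier residuals."""
    residuals = [o - p for p, o in zip(predicted, observed)]
    flagged = []
    for i in range(warmup, len(residuals)):
        history = residuals[:i]            # residuals seen before step i
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(residuals[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged
```

In this sketch, a flagged index only signals that the model and the PT disagree more than usual; deciding whether the cause is a faulty sensor, an incomplete model, or a real event in the PT remains a separate step.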

In other words, stepping away from ideal DTs to “practical DTs” can allow for more practical applications. Easing any of the core aspects of similitude allows for the implementation of many useful concepts such as analytical assessment, predictive diagnosis, and performance optimisation [

8], even in large-scale systems. Limiting the functionality of DTs to a synchronous representation of reality can also make it possible to monitor complex production processes, adjust them, and possibly optimise them [

143]. Ideally, the model underlying a DT is complex enough to enable a system to continuously react to dynamic changes in physical space [

144], including unforeseen/unexpected changes [

145]. DTs also open the door to continuous design improvement through data feedback loops [

146] enabling data assimilation into a model [

147,

148]. These incomplete but practical DTs can become especially useful if they are composable to cover more features of the PT. Next, we will introduce specialised, practical DTs which answer questions on particular topics and can be composed to resemble ideal DTs.
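To make the notion of composing specialised, practical DTs more concrete, the following is a minimal sketch under strong simplifying assumptions: each practical DT is reduced to a set of topics it can answer and a numeric answer per topic. The names `PracticalTwin`, `StaticTwin`, `ComposedTwin`, and the example topics are hypothetical and serve illustration only.

```python
from dataclasses import dataclass
from typing import Dict, Protocol, Set


class PracticalTwin(Protocol):
    """Minimal interface assumed for a specialised, practical DT."""

    def topics(self) -> Set[str]: ...

    def query(self, topic: str) -> float: ...


@dataclass
class StaticTwin:
    """Toy practical DT that answers from a fixed table of values."""
    answers: Dict[str, float]

    def topics(self) -> Set[str]:
        return set(self.answers)

    def query(self, topic: str) -> float:
        return self.answers[topic]


class ComposedTwin:
    """Combines several practical DTs and routes each topic to its owner."""

    def __init__(self, *twins: PracticalTwin) -> None:
        self._routes: Dict[str, PracticalTwin] = {}
        for twin in twins:
            for topic in twin.topics():
                if topic in self._routes:
                    raise ValueError(f"topic {topic!r} is covered twice")
                self._routes[topic] = twin

    def topics(self) -> Set[str]:
        return set(self._routes)

    def query(self, topic: str) -> float:
        return self._routes[topic].query(topic)
```

Because `ComposedTwin` offers the same two methods as its parts, a composed DT can itself be composed again, for example from building to district to city. Real DTs would additionally need shared data models and synchronisation, which this sketch leaves out.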

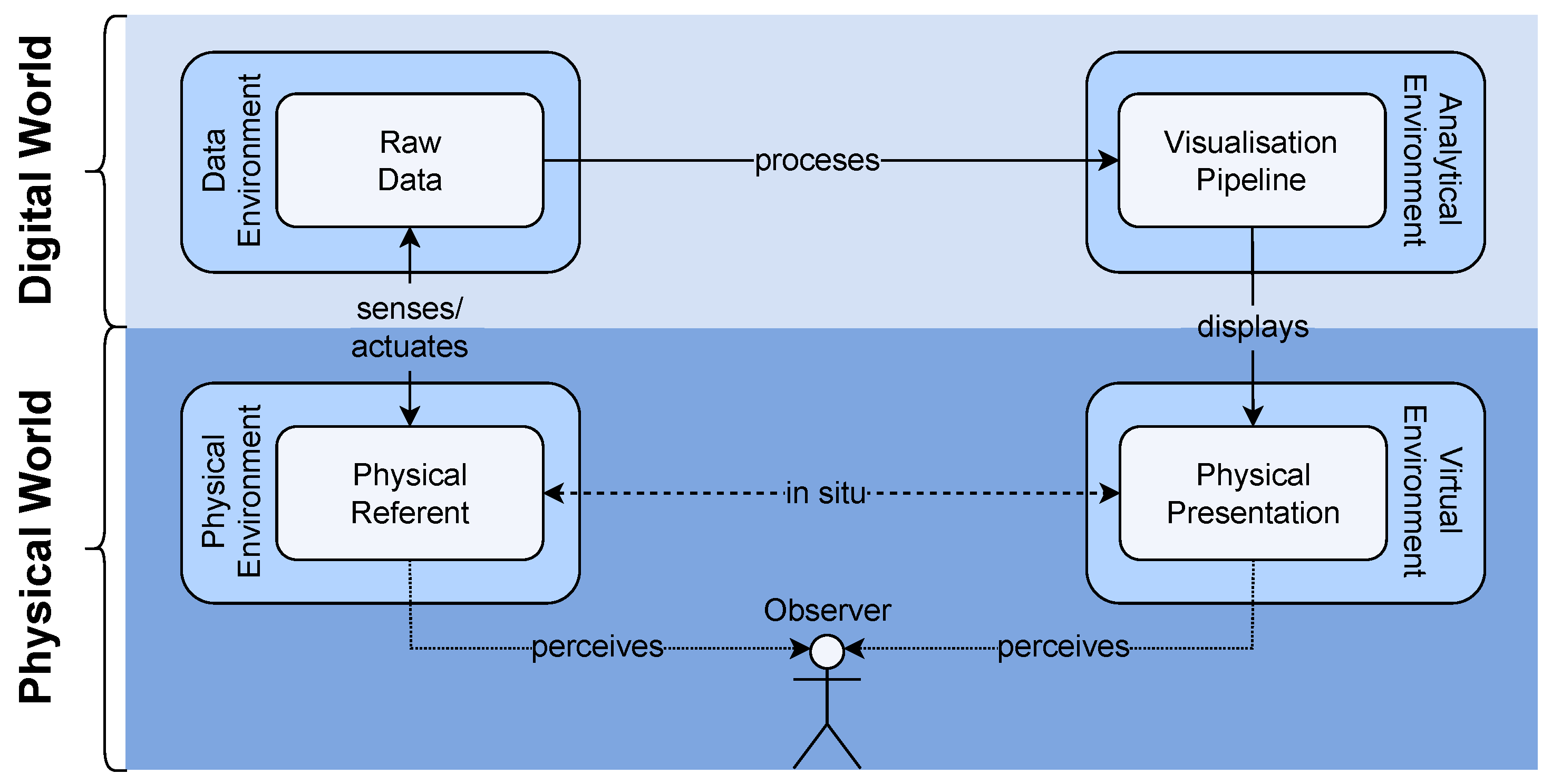

In our definition, we side-step the discussions surrounding ideal DTs by focusing on structural features of practical DTs. We refine the definition of a DT based on earlier work [8] as a five-component system: the Physical Environment, the Data Environment, the Analytical Environment, the Virtual Environment, and the Connection Environment (see Figure 2). In the Physical Environment, IoT systems [

47] and remote sensing units [

149] try to capture the PT and provide interactions with the PT through actuators. The Data Environment contains the DT’s memory and is usually located somewhere in the cloud but may also be stored locally or at the edge [

150,

151]. In the Analytical Environment, the processes and models of the DT are computed [

152], often in the cloud, and insights and predictions are communicated back to the Data Environment. The Virtual Environment makes the DT accessible to human users and possibly other machines [

17,

101,

153]. Finally, the Connection Environment provides an Application Programming Interface (API) to transfer data as required between the components. We consider two ways of designing the Connection Environment: (1) from a service perspective, where the Connection Environment is not an independent component but refers to the APIs exposed by the different components, and (2) from a compatibility/hypervisor layer perspective, where the Connection Environment is an independent component that mediates between the other components in the system. Each component could be implemented in many different ways, and making a sensible choice may be overwhelming. This review gives an overview to guide newcomers through the most important components and decisions that are required to implement the FTs concept with a DT.
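As a rough illustration of the five-component structure, the following minimal sketch wires the environments together using the second design, in which the Connection Environment is an independent mediator. All class and method names, the stand-in sensor callables, and the mean-value "analysis" are hypothetical and chosen only for illustration; they are not taken from any existing DT framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Reading:
    sensor_id: str
    value: float


class PhysicalEnvironment:
    """Captures the PT; sensors are stand-in callables (hypothetical)."""

    def __init__(self, sensors: Dict[str, Callable[[], float]]) -> None:
        self.sensors = sensors

    def sense(self) -> List[Reading]:
        return [Reading(name, read()) for name, read in self.sensors.items()]


class DataEnvironment:
    """The DT's memory: raw readings plus insights written back by analysis."""

    def __init__(self) -> None:
        self.readings: List[Reading] = []
        self.insights: Dict[str, float] = {}


class AnalyticalEnvironment:
    """Computes models on stored data; here only a mean, as a placeholder."""

    def analyse(self, readings: List[Reading]) -> Dict[str, float]:
        if not readings:
            return {}
        values = [r.value for r in readings]
        return {"mean": sum(values) / len(values)}


class VirtualEnvironment:
    """Makes the DT accessible to users; here as a plain text summary."""

    def render(self, insights: Dict[str, float]) -> str:
        return ", ".join(f"{k}={v:.1f}" for k, v in sorted(insights.items()))


class ConnectionEnvironment:
    """Mediator design: the only component that knows all the others."""

    def __init__(self, physical: PhysicalEnvironment, data: DataEnvironment,
                 analytical: AnalyticalEnvironment,
                 virtual: VirtualEnvironment) -> None:
        self.physical = physical
        self.data = data
        self.analytical = analytical
        self.virtual = virtual

    def step(self) -> str:
        # Physical -> Data: store new observations of the PT.
        self.data.readings.extend(self.physical.sense())
        # Data -> Analytical -> Data: write insights back to memory.
        self.data.insights.update(self.analytical.analyse(self.data.readings))
        # Data -> Virtual: expose the current state to the user.
        return self.virtual.render(self.data.insights)


twin = ConnectionEnvironment(
    PhysicalEnvironment({"t1": lambda: 20.0, "t2": lambda: 22.0}),
    DataEnvironment(),
    AnalyticalEnvironment(),
    VirtualEnvironment(),
)
summary = twin.step()
```

Under the service perspective, the `ConnectionEnvironment` class would disappear and each environment would instead call the methods exposed by the others directly.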

To understand DTs, it is necessary to comprehend the function and goal of the core components in relation to the literature. Specifically, we connect our definition of the Physical Environment to research on IoT and remote sensing, as well as our definitions of Analytical and Virtual Environments to research on simulation and interaction with DTs. Our definitions of the Data and Connection Environments are not broadly covered in previous research because previous implementations have used case-specific data solutions and were not focused on interoperability.

The Physical Environment component captures the PT and associated interactions via IoT and remote sensing infrastructure. The composition of the IoT stack is regularly repeated but rarely analytically decomposed with respect to its trade-offs. For most applications, the stack is designed with the purpose of underlining where and why a specific new technology is needed [

154,

155,

156,

157]. Technically, this is a useful approach to help experts situate a technology in the literature. However, these stacks typically make many unspecified assumptions that we disentangle here. We start with the notion that the DT perspective can be mapped to aspects of IoT and remote sensing. Following the semantic decomposition of the term IoT [

158], Things themselves are not actually

in the Internet, but information about them is perceived either with attached sensing units or with remote sensing and then shared via the Internet. The DT can be used as the necessary representation of the perceived Thing in the Internet.

Previous resource-oriented and service-oriented architectures have represented IoT hardware as practical DTs without using this particular nomenclature [

157,

159,

160,

161,

162,

163,

164]. In contrast to IoT definitions, the DT perspective does not necessitate the digital information to be shared globally as may be implied by the term Internet. The potential local scope of DTs is reflected in their origins in cyber–physical systems [

2,

136] where the application scope is usually limited to an industrial production site. Nonetheless, the DT perspective provides us with a useful definition of the properties that a Thing should have when represented digitally. A broad range of example IoT applications have been addressed in previous publications [

7,

45,

47,

165,

166,

167] and can be generalised to DT solutions.

The Analytical and Virtual Environments in DTs are difficult to precisely define because their development is multi-disciplinary and reaches beyond the simulation perspective. These paradigms have been advanced by independent research in the areas of information science, production engineering, data science, and computer science [

8] as well as electrical engineering [

168]. A DT is a highly complex system that combines infrastructure, simulation, data processing, human–computer interaction, and services [

8]. These approaches give rise to another understanding of DTs as an advanced digitalisation of a system that extends representation of the PT with operations. This produces a rich digital model that has many desired properties such as scalability, interoperability, extensibility, and high fidelity [

169]. To arrive at a digital model, digitalisation in a space can also be understood as a virtualisation process [

170]. Here, the system is represented in 3D space via modelling and remote sensing. First, behaviours are extracted and knowledge representations are created. Second, the interactions between its constituent elements are modelled and integrated into the knowledge representations. Third, operations and their effects are modelled. Lastly, everything is assembled in a simulation to make the system match the PT as closely as possible. Because digitalisation/virtualisation only creates the Analytical Environment (see

Figure 2), most research has clearly focused on the analysis and not interaction with the DT. Most research on DTs also follows the simple physical–digital split into PT and DT components [

2,

135], but this split often fails to capture the difference between the Analytical Environment for computation and the Virtual Environment for interaction (but see [

23]). The FTs paradigm offers a new perspective that highlights the unique contribution of the Virtual Environment, resulting in a more intuitive use of the DT in nearly every application domain.

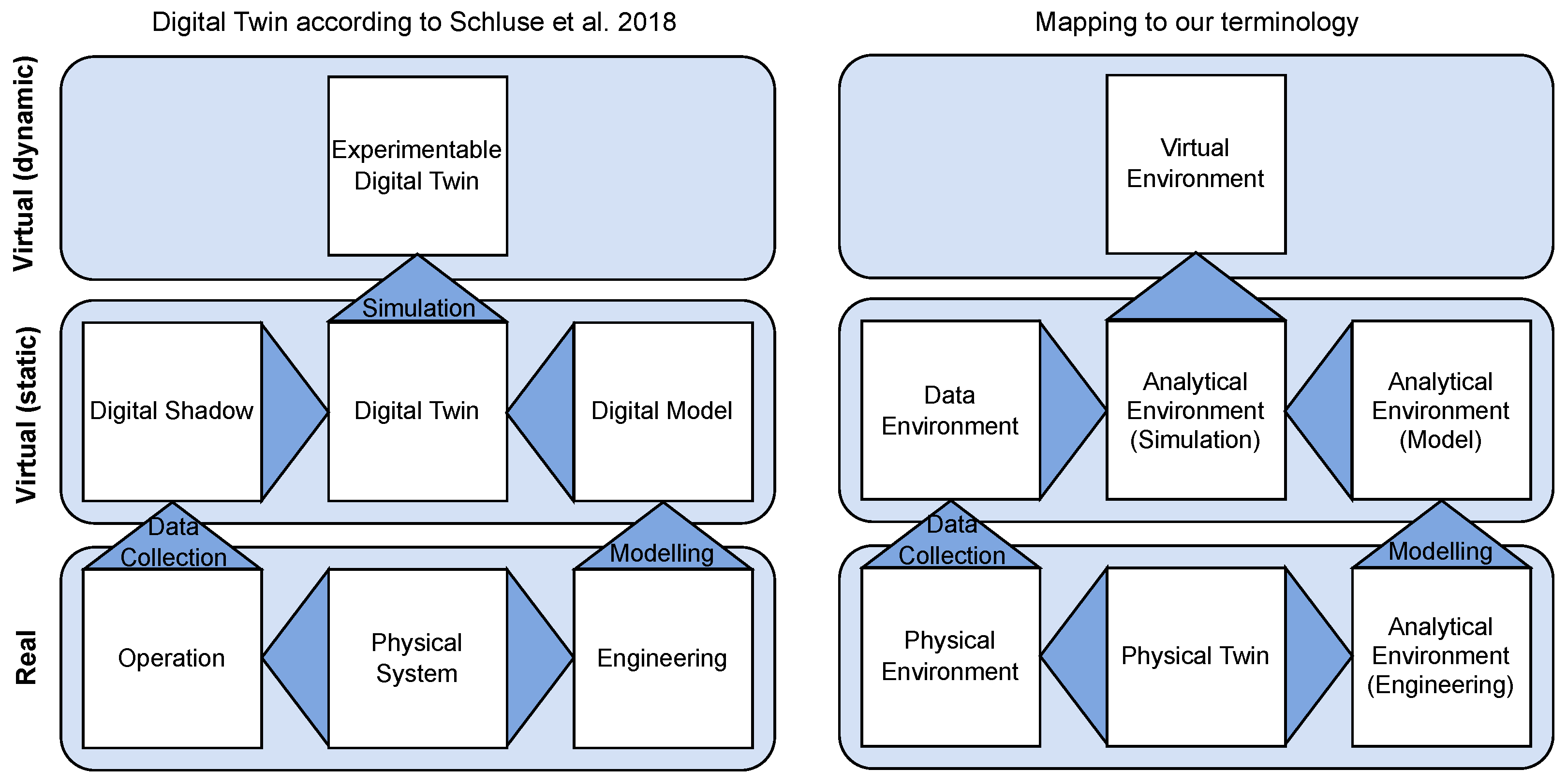

There may also be issues with many definitions of DTs because of the unrecognised difference between a universal ideal DT and a partial practical DT. Ultimately, universal ideal DTs are unachievable because of the need to integrate the complex requirements of similitude and to comply with the many definitions across different literatures. Therefore, any practical DT is only partially implemented compared to ideal DTs. Confusion regarding this distinction has led to a heated debate and has resulted in the differentiation of DTs according to their degree of completeness from digital models and Digital Shadows to (ideal) DTs [

152,

171], see

Figure 8.

A digital model is an independent representation of a real-world system that may be used in simulations but that is generally not fed real-time data. According to our interpretation, a digital model reflects the implementation of the Analytical Environment. A Digital Shadow is the assembly of data from the real-world system for descriptive purposes. By our interpretation, a Digital Shadow reflects the Physical Environment of the DT. Thus, our definitions can shift the discussion on Digital Shadows and digital models from whether something is a DT to which component of a DT is implemented. While a completely implemented, ideal DT at the urban scale is probably out of scope for now, it is feasible to implement and compose partial practical DTs that are capable of performing important tasks for Smart Cities. To elucidate IoT, DTs, and Smart Cities, the present paper adopts the FTs perspective because it unites three views on DTs that are often confounded: why to generate the DT (i.e., business case for the Analytical Environment), how to generate the DT (i.e., technological implementation of the Physical Environment), and how to use the DT (i.e., end-user interaction of the Virtual Environment).

4.1. Composition of Digital Twins

Composed DTs offer an integrative perspective to understand and address the complexity of the PT by using multiple abstractions and performing different tasks on each abstraction (see

Figure 9). Towards this end, DTs are composable through their Connection Environment allowing both for the nesting and recombination of components. This approach allows us to re-conceptualise any sensors in the Physical Environment as virtually belonging to all associated composites of the DTs. Composites of the DTs can be produced to offer previously unavailable information via new virtual sensors [

172] or virtual sensor networks [

173,

174].

For DTs to be composable, interoperability between components is the most important feature [

169,

175,

176,

177,

178]. An example composition of the different components is shown across associated DTs in

Figure 10. The Physical Environment contains sensory equipment within arbitrary boundaries to capture properties of the PT, which is immutable and equally anchors all overlapping DTs. The Data Environment is a heterogeneous composition of storage architectures that best befits the data requirements. The Analytical Environment is usually defined per task per DT. In addition, it may also have feedback loops to other DTs via the Data Environment. Outputs of the Analytical Environment are fed back into the Data Environment as virtual sensors to provide the information for display and interaction at a later time. The Virtual Environment forms a local Mirror World [

137] that allows for the visual inspection of information in the DTs. The scope of the Virtual Environment is limited to the current composition of DTs and allows for access to and interaction with both the data from the Physical Environment and the data from the Analytical Environment. The Connection Environment manages access rights between components and ensures that users (both humans and other DTs) have the appropriate access rights to use the respective environments, addressing concerns regarding trust, security, and privacy [

178,

179,

180,

181,

182,

183,

184,

185,

186,

187,

188,

189,

190,

191,

192].
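The idea of feeding analytical outputs back as virtual sensors can be sketched as follows. This is a minimal illustration under our own assumptions: the `Sensor` protocol, the class names, and the occupancy example (entries minus exits) are hypothetical and are not drawn from the cited virtual sensor literature.

```python
from typing import Callable, Protocol, Sequence


class Sensor(Protocol):
    def read(self) -> float: ...


class PhysicalSensor:
    """Stand-in for a device in the Physical Environment (fixed value here)."""

    def __init__(self, value: float) -> None:
        self.value = value

    def read(self) -> float:
        return self.value


class VirtualSensor:
    """Derives a new reading from other sensors, physical or virtual."""

    def __init__(self, inputs: Sequence[Sensor],
                 combine: Callable[[Sequence[float]], float]) -> None:
        self.inputs = inputs
        self.combine = combine

    def read(self) -> float:
        return self.combine([sensor.read() for sensor in self.inputs])


# Building-level DTs: occupancy = entries - exits (hypothetical counters).
occupancy_a = VirtualSensor([PhysicalSensor(120), PhysicalSensor(95)],
                            lambda v: v[0] - v[1])
occupancy_b = VirtualSensor([PhysicalSensor(40), PhysicalSensor(10)],
                            lambda v: v[0] - v[1])

# District-level DT: composes the building DTs through the same interface.
district_occupancy = VirtualSensor([occupancy_a, occupancy_b], sum)
```

Because physical and virtual sensors share one `read()` interface, composites can be nested to arbitrary depth, which mirrors the nesting and recombination of components described above.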

In practice, DTs are applicable to all fields in which IoT has been applied [

47,

165,

193], including industry [

159,

194], health care [

43,

44,

166,

195,

196,

197,

198], urban management [

48,

199,

200], vehicular application [

201], and mobile end-user applications [

202]. However, the composability of DTs suggests that, by extension, these applications should be composable, too. For example, DTs in industry, health care, and transportation could exchange vital information to improve their effectiveness, but, to the best of our knowledge, this has not been accomplished in practice. To understand how DTs of these varied systems could be composed in the context of true Smart Cities, we categorise each application by its system type and location type to form a taxonomy of DT contexts (see

Table 1).

The system type of a DT is either open or closed [

203]. Open systems are characterised by an exchange with the wider environment in which they are embedded, whereas closed systems do not directly exchange with the environment and are usually observed in isolation. In addition, closed systems usually supervise well defined processes, whereas open systems try to characterise ill defined processes. Obviously, no system is entirely isolated, but industrial processes or health applications can be considered relatively closed systems with usually well defined interactions with the environment. In contrast, cities and traffic [

178] have no clear limit to what is entering from the surrounding environment, and congestion may be a side effect of this ill defined boundary.

The location type of a DT describes the relation between the unit of observation and the sensors as active or passive sensing [

204]. In stationary systems, the sensors are fixed in the environment and observe throughput. In mobile systems, the sensors are moving around either with the observed unit or independent of it. They may also capture environmental conditions around the observed unit such as in human navigation [

205] and health monitoring [

206].

When composing different types of DTs, it is important to understand their characteristics. Mobile DTs may only temporarily compose with Stationary DTs, while Closed DTs often form units within Open DTs. Open Stationary DTs often form the overarching type of DT that contains other types of DTs, whereas Mobile Closed DTs usually describe atomic units in composed DTs. Typically, Mobile Closed DTs can be part of Mobile Open DTs when a person has a Body Area Network (BAN) [

207] for health care applications and a Personal Area Network (PAN) [

208] to interact with the environment. In this example, the PAN will provide a wide range of interactions that can be composed with the Open Stationary DT of a Smart City, whereas the BAN will only share limited information. Similarly, a Closed Stationary DT for a Smart Factory within the Open Stationary DT of a Smart City will only share limited information.
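The two taxonomy dimensions and the composition heuristics described above can be encoded in a few lines. The sketch below is a literal and deliberately simplified reading of the text: the type names and the two predicate functions are hypothetical, and real compositions will depend on more than these two attributes.

```python
from dataclasses import dataclass
from enum import Enum


class SystemType(Enum):
    OPEN = "open"
    CLOSED = "closed"


class LocationType(Enum):
    STATIONARY = "stationary"
    MOBILE = "mobile"


@dataclass(frozen=True)
class TwinContext:
    name: str
    system: SystemType
    location: LocationType


def composes_temporarily(a: TwinContext, b: TwinContext) -> bool:
    """Mobile DTs may only temporarily compose with Stationary DTs."""
    return a.location != b.location


def shares_limited_information(inner: TwinContext) -> bool:
    """Closed DTs nested in an Open DT are assumed to share only a subset."""
    return inner.system is SystemType.CLOSED


city = TwinContext("Smart City", SystemType.OPEN, LocationType.STATIONARY)
factory = TwinContext("Smart Factory", SystemType.CLOSED, LocationType.STATIONARY)
ban = TwinContext("Body Area Network", SystemType.CLOSED, LocationType.MOBILE)
pan = TwinContext("Personal Area Network", SystemType.OPEN, LocationType.MOBILE)
```

With these definitions, the PAN composes temporarily with the city and shares widely, whereas the BAN and the factory are both flagged as sharing limited information.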

4.2. Servicisation of Digital Twins

While the word DT suggests some form of unitary entity, it would be more accurate to understand DTs as context-aware representations of Things in the cloud composed of services that act upon the abstraction. Services can range from interacting with the environment to representing what is sensed, and they form the bedrock of ubiquitous computing [

46]. Basic services can, in turn, be composed into higher-order services to address more complex issues.

With digital representation by the DT, it is possible to turn everything into a service—or

servicise [

209,

210,

211,

212]. In other words, the customer buys the temporary use of a service associated with a product instead of the product itself. The cost of developing and maintaining a DT’s infrastructure justifies this approach because it is more efficient to reuse resources rather than to duplicate functionalities [

212]. Therefore, DTs can be freely composed of services that conform to the requirements of the Connection Environment.

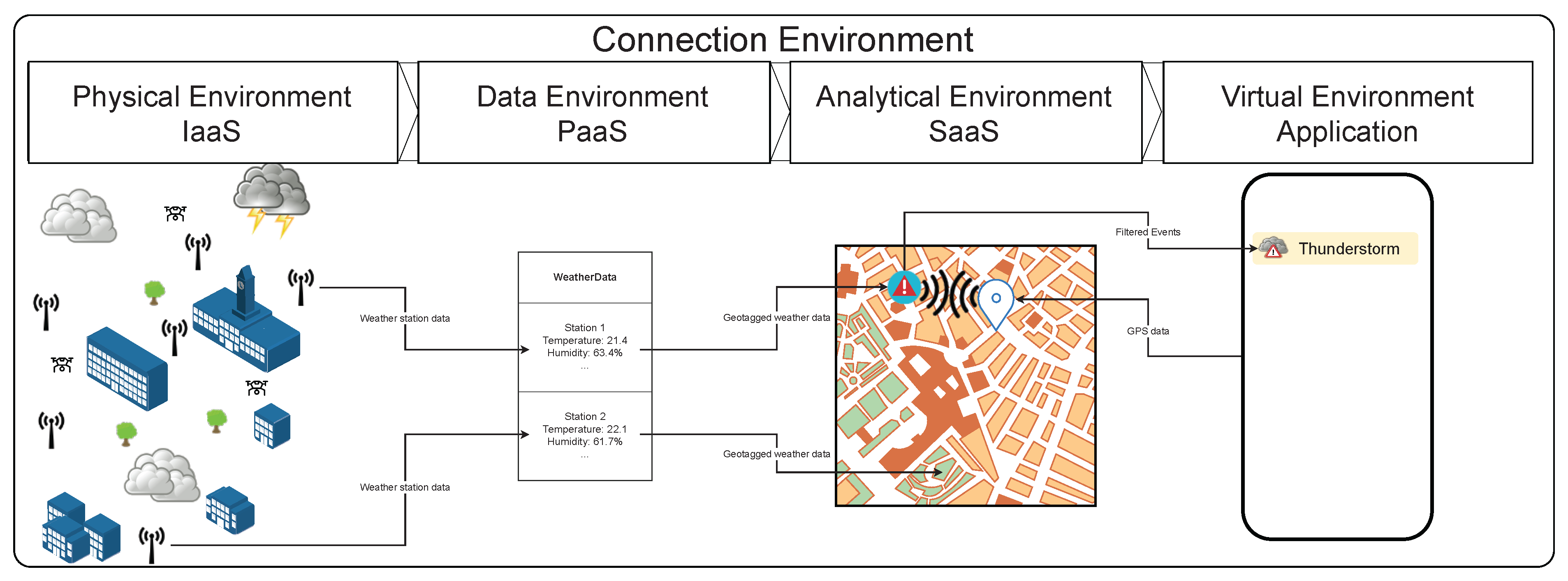

The three most common themes in “as a Service” (*aaS) are Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS). Newer types of services can be subsumed in these categories, including Sensing as a Service (SenaaS) [

213,

214], Semantics as a Service (SemaaS) [

176], and Robots as a Service (RaaS) [

215]. Notably, Infrastructure, Platform, and Software “as a Service” form an interesting interaction with the DT components because they can provide abstractions for particular components (see

Figure 2). From an abstraction perspective, each replaces the lower layers of the stack with an interface that hides implementation complexity. In this section, we will use the example of weather services as part of a Smart City to explain how IaaS, PaaS, and SaaS can map to the components of a DT (see

Figure 11).

4.2.1. Infrastructure as a Service (IaaS)

A DT requires the creation of a Physical Environment as the representation of the PT. The Physical Environment provides access to and measurement of the PT using infrastructure to collect raw data (see

Figure 2). Creating and maintaining the DT infrastructure is the most physically laborious part of developing a DT. This severely limits the number of entities that can afford to create a DT on a city scale. However, if the infrastructure is provided as a service, it may be possible to finance it in aggregate and reuse it across multiple DTs. When creating a DT, the IaaS approach allows for the reuse of a service provider’s communication network, sensor network, and remote sensing units rather than having to build one’s own system from scratch. Therefore, IaaS enables one to have alternative competing and composable DTs based on the same or different data source(s). There are also more specialised infrastructure services that are available such as Sensing as a Service (SenaaS) [

213,

214], Remote Sensing as a Service (RSaaS) [

216], and Robots as a Service (RaaS) [

215].

In our weather service example (see

Figure 11), the sensors, network, and remote sensing units in a Smart City could be servicised. The city government or a private provider could implement a policy to maintain such an IaaS to make digital services in a city more accessible, enabling the creation of different city DTs. This would allow new entrants to easily develop their own DTs that provide large-scale digital services without having to build physical hardware with redundant functionality [

210].

At the same time, IaaS may raise some concerns regarding security. The IoT used for IaaS consists of billions of locatable, addressable, and readable devices [

191]. There are three avenues by which security in IoT must be enforced: information, physical infrastructure, and management practices [

189]. Security breaches from unexpected behaviour may arise from data collection and management via privacy protection problems, heterogeneous network authentication, and access control problems [

189,

190,

191,

217]. The fundamental challenges for all of these topics are questions of trust: how to give whom access for what purpose and for what duration. Over the last decade, the IEEE and IETF have laid the foundations to build a security infrastructure along the IEEE 802.15.4 standard [

218,

219] and the IETF LLN stack [

208]. These efforts include sensor network security [

184], wireless sensor network security [

183], and dedicated secure protocols such as CoAP/Lithe [

220,

221], 6LowPSec [

222], and RPL [

223]. Ultimately, as with the Internet, security will be an ongoing process that must be attended to at all times.

4.2.2. Platform as a Service (PaaS)

PaaS extends access to hardware by simplifying interfaces. PaaS usually packages digital representations into a Data Environment and thus reduces the complexity of accessing the data. Platforms vary widely and are usually tied to one of the five IoT application areas: industry [

159,

194], health care [

43,

44,

166,

195,

196,

197,

198], urban management [

48,

199,

200], vehicular applications [

201], and end-user applications [

202]. The concept of PaaS can be vague, and its use may sometimes be difficult to differentiate from IaaS or SaaS. In the context of a DT, a PaaS ought to provide only

access to data as a Data Environment in contrast to an IaaS that collects

raw data from the physical world as a Physical Environment and a SaaS that

derives higher-order data from previously collected data as an Analytical Environment.

A DT probably cannot rely on a single PaaS to source all of the required data. More often, a DT will mix and match PaaS sources [

224] to fulfil its task. The Data Environment is more complex than simply making data available and needs to be curated, indexed, and integrated into a knowledge graph based on context-awareness [

156], ontologies [

225,

226,

227], and middleware [

228,

229,

230]. In reality, IaaS and PaaS are often mixed and sometimes offer a subset of services that should be associated with SaaS [

231].

In our weather service example, the PaaS stores the information of the weather-related sensor data from sensing units throughout the city, including their spatiotemporal context. Specifically, the PaaS does not include any analytical components but offers a raw data source upon which higher-order data services may be built. A clear distinction is necessary between services to disambiguate their different responsibilities in the DT and ensure composability.

4.2.3. Software as a Service (SaaS)

According to the classical SaaS literature, SaaS is often exposed directly to an end user [

202] and ought to follow a singular use case. However, in the context of the DT, SaaS could be mapped to both the Analytical Environment and the Virtual Environment. Following the “as a service” paradigm, we suggest limiting the use of SaaS to the Analytical Environment in DTs to reduce redundant functionality and to enable more servicisation in end-user applications (see

Figure 2). Conventionally, one may consider SaaS as built upon PaaS, but the relationship between the Data and Analytical Environments may actually be bidirectional. The results of the analyses may be written back to the Data Environment before being forwarded to the Virtual Environment for user interaction. Thus, there may be an ambiguity that makes it difficult to separate SaaS from PaaS. The difference lies in whether one considers the results of an analysis to be newly computed as a SaaS or retrieved from memory as a PaaS.

In our weather service example, providing predictive weather data to an end user could be classified as SaaS if the data are calculated directly. However, if the predictive weather data are provided as a component of a Smart City environmental observation system, the service would be classified as a PaaS. In general, a SaaS conducts some analytical tasks and represents the Analytical Environment of DTs because it generates the models to mirror reality in the DT.
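The layering in the weather service example can be made concrete with a small sketch. Everything here is an assumption made for illustration: the class names, the `Observation` record, and the naive forecast (the mean of the last few observations) are hypothetical and stand in for real sensing infrastructure, storage platforms, and weather models.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass(frozen=True)
class Observation:
    station: str
    hour: int
    temperature_c: float
    derived: bool = False  # True for values computed by an analysis


class WeatherIaaS:
    """Physical Environment: hands out raw data from (simulated) sensors."""

    def __init__(self, raw: Iterable[Observation]) -> None:
        self._raw = list(raw)

    def collect(self) -> List[Observation]:
        return list(self._raw)


class WeatherPaaS:
    """Data Environment: stores and serves data, performs no analysis."""

    def __init__(self) -> None:
        self._store: List[Observation] = []

    def ingest(self, observations: Iterable[Observation]) -> None:
        self._store.extend(observations)

    def query(self, station: str, include_derived: bool = False) -> List[Observation]:
        rows = [o for o in self._store
                if o.station == station and (include_derived or not o.derived)]
        return sorted(rows, key=lambda o: o.hour)


class WeatherSaaS:
    """Analytical Environment: derives higher-order data from stored data."""

    def __init__(self, platform: WeatherPaaS) -> None:
        self.platform = platform

    def forecast_next_hour(self, station: str, window: int = 3) -> Observation:
        recent = self.platform.query(station)[-window:]
        if not recent:
            raise ValueError(f"no observations for station {station!r}")
        prediction = Observation(
            station,
            recent[-1].hour + 1,
            mean(o.temperature_c for o in recent),  # placeholder model
            derived=True,
        )
        # Bidirectional link: the result is written back to the Data Environment.
        self.platform.ingest([prediction])
        return prediction


infrastructure = WeatherIaaS([
    Observation("roof", 0, 10.0),
    Observation("roof", 1, 12.0),
    Observation("roof", 2, 14.0),
])
platform = WeatherPaaS()
platform.ingest(infrastructure.collect())
forecast = WeatherSaaS(platform).forecast_next_hour("roof")
```

A later call to `platform.query("roof", include_derived=True)` retrieves the same forecast from memory, which illustrates the ambiguity discussed above: the value is a SaaS result when computed and a PaaS result when retrieved.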

4.3. The Cognates of Digital Twins

DTs are a relatively new perspective that shares a common but amorphous root with a variety of other perspectives. While this root may be difficult to trace precisely and may differ from the intent of the author, it may have originated with various thinkers such as Wiener [

136], Weiser [

46], or even Tesla ([

232], p. 122). Given this complexity, we borrowed the term “cognate” from linguistics [

24], which refers to words with a formal resemblance or a common historical root. The confusing overlap among DT cognates is driven by developments across a diversity of research backgrounds such as information science, production engineering, data science, and computer science [

8]. The different components of the DT are prioritised differently in each field, which results in similar yet slightly different meanings. For instance, the cognate term IoT is dominating the public sphere according to search word trends (see

Figure 12). However, as hype gives way to pragmatism, simply having the physical infrastructure necessary for the creation of DTs is insufficient by itself. The two cognates IoT and DTs are often confused, but IoT may form the basis of DTs in a similar manner that the Internet forms the basis of the Web. Although it is too early to determine, search word trends may eventually place DTs or another cognate as the ultimate concept to describe these systems. The following sections clarify the nuances associated with DTs and their cognates across different disciplines.

4.3.1. Cyber–Physical Systems

A Cyber–Physical System (CPS) represents physical objects as virtual objects. Users can interact with either physical or virtual objects to change the state of both. A DT is a special instance of a CPS that includes a model of the PT for predictive operations.

The term DT is relatively new, but the original idea of digital representation was first described in detail by Wiener [

136] in the field of control theory. Later, the term Cyber–Physical Systems (CPSs) Theory [

233,

234] was coined to describe a

physical object, homologous to a “Thing” [

158] in the IoT context and the PT in the DT context. This physical object has a

cyber correspondence, which is homologous to the “Digital Twin” [

2]. A priori, CPSs have no predetermined (physical) scope and are an idealised form to describe possible interactions with entities [

45,

235]. Therefore, the CPS literature is rarely linked directly to DTs, remote sensing, or the IoT (but see [

236,

237]). CPSs are increasingly used to capture social data as well [

238]. Their high level of abstraction makes CPSs a suitable paradigm to theorise about DTs, and findings in the CPS literature are very relevant to DTs.

4.3.2. (Wireless) Sensor (and Actuator) Networks

(Wireless) Sensor (and Actuator) Networks (WSN/SN/SAN/WSAN) provide sensing and actuating infrastructure that processes the signals to and from the physical world to enable a CPS or DT.

A DT cannot be conceptualised without having first digitised the PT. While the DT is usually presented as agnostic with respect to its underlying hardware, the DT cannot be constructed without it. Creating this representation requires sensing to comprehend the physical world and actuating to change the physical world [

168,

193]. Sensor networks ground any Cyber–Physical System in the physical world by providing readings thereof. For each device, questions of how to implement “autonomicity, scalability, energy efficiency, heterogeneity in terms of user equipment capabilities, complexity and environments” [

239] need to be answered. Sensor networks had been researched long before the origination of the terms IoT or DT. However, contemporary research focuses more on how sensor networks are seen as a component of an IoT system [

240].

In a sensor network context, there are active and passive devices that can be placed in the environment. Passive devices, called Radio Frequency Identification (RFID) tags, harvest energy from the transmission signal via induction to return a signal [

241]. The signal can be used as an identification of an object in order to trigger a software-based service or to determine its location depending on the RFID reader [

242]. Typically, RFID cannot return environmental information (but see [

243]). Active devices are usually battery powered and have actual computational infrastructure, sensory equipment, and possibly actuators to interact with the environment. Whether active or passive devices are required depends on the task. A DT will often require a combination of both active and passive devices to efficiently represent the PT and the processes therein. A comparison of tasks and the technology that best captures them can be found in

Table 2.

Without using the term DT, RFID implements a similar abstraction because a “physical object is accompanied by a rich, globally accessible virtual object that contains both current and historical information on that object’s physical properties, origin, ownership, and sensory context [

241]”. RFID helps to embed access to knowledge in the environment and can make access to the DT context-driven [

241]. For example, if users and environment are both tagged, the appropriate DT services can be called to facilitate the users’ task. At the same time, the system can track such interactions to help model activity for the DT. However, RFID tags could reveal sensitive information, and it is important to manage access control [

244].

In contrast to passive devices, active devices usually have local computational power but may be constrained, and these constraints should be classified according to usability. Resource constraints on active devices can be grouped into six categories: battery, communication range, bandwidth, memory storage, processing capability, and half-duplex communication [

178]. The IETF has classified devices based on hardware capabilities [

245], and each class requires different operating systems (OSs) to function optimally [

246,

247]. They range from Class 0 devices to Class 2 devices. Class 0 devices have a tailored OS that provides no functionality beyond the required features. In contrast, Class 1 devices and above may already run a generalised if bare OS fulfilling the criteria of a small memory footprint, support for heterogeneous hardware, network connectivity, energy efficiency, real-time capabilities, security, and opportune scheduling policies [

246,

247]. Active devices can be fully integrated into DTs and can be managed from within the DT, whereas passive devices currently only provide anchor points for DTs.
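As a hedged illustration, and assuming that the classification referred to above is the one in IETF RFC 7228 (Class 0 with far less than 10 KiB of RAM and 100 KiB of flash, Class 1 with roughly 10 KiB and 100 KiB, and Class 2 with roughly 50 KiB and 250 KiB), a device could be assigned to a class as follows. The RFC gives approximate orders of magnitude, so the hard thresholds and the function name below are simplifying assumptions of this sketch.

```python
def constrained_device_class(ram_kib: float, flash_kib: float) -> int:
    """Return 0, 1, or 2 based on approximate RFC 7228 memory sizes.

    The RFC states sizes as rough magnitudes ("~10 KiB"); treating them
    as strict lower bounds is a simplification made for this sketch.
    """
    if ram_kib >= 50 and flash_kib >= 250:
        return 2  # can typically run a general-purpose constrained OS
    if ram_kib >= 10 and flash_kib >= 100:
        return 1  # can run a bare, generalised OS
    return 0      # tailored firmware with only the required features


tiny_tag = constrained_device_class(ram_kib=2, flash_kib=32)
sensor_node = constrained_device_class(ram_kib=10, flash_kib=100)
gateway_mote = constrained_device_class(ram_kib=64, flash_kib=512)
```

Such a classification could help a DT decide which devices can be managed from within the DT and which can only be observed.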

4.3.3. Smart Objects

Smart Objects are physical objects that have embedded computational power to provide interactions for their virtual objects, which makes them more interesting, and may represent the fusion of the PT and DT.

A DT can be understood as a highly accurate model of a physical system [

2,

9,

135], whereas a Smart Object (SO) offers interesting interactions with a particular object through embedded or remote computing power [

7]. Both DTs and SOs represent a CPS [

234]. It could be argued that the concept of an SO either incorporates or encapsulates the concept of a DT. However, a DT is usually required to mirror the role of an actual object in a larger context, whereas SOs only provide end users with interactions with the particular object [

7]. Nonetheless, many SOs will represent the underlying physical object sufficiently closely to use them interchangeably with a DT. Assuming that an SO qualifies as a DT and that the SO can offer physical access to its digital representation, it can be considered a dual

embodiment for both the object (i.e., PT) and the DT, providing a physical instance of the FTs paradigm (see

Figure 9).

However, a DT may also describe an entity that is composed of multiple SOs, other Things, processes, and high-order entities. These entities may entail buildings, cities, or regions [

4,

248,

249,

250] modelled through remote sensing [

54,

129,

251]. Moreover, a DT may also be formed of Things that are immaterial or at least incomprehensible in the physical world such as phantom traffic jams [

252]. These can be considered purely virtual objects in contrast to virtualised objects [

253], which would have a mapping back to a physical object. Composition allows for the formation of even-higher-order virtual objects that form the basis of a DT in a complex system such as the processes or aggregates representing a city [

10,

254], traffic flow [

255], or production line [

23]. Furthermore, a DT may also be produced exclusively by remote sensing without having local sensors attached to the PT [

54]. A DT is thus a more encompassing concept than an SO.

4.3.4. Ubiquitous and Pervasive Computing/Ambient Intelligence

Ubiquitous Computing is a paradigm that expects computation to be available for all objects to form a CPS, whereas Pervasive Computing specifies the computation to occur at the object or close to it. SO and Ambient Intelligence are instances of Ubiquitous Computing in CPSs in which the sensing and actuating capacity is directly embedded into the object that is described by the corresponding DT.

Ubiquitous and Pervasive Computing are usually considered interchangeable. The former is an older term to link computations to any object in the physical world [

46]. The latter is a younger term and is associated with placing computing power within (or close to) any object in the physical world [

256]. Without loss of generality, we will only refer to Ubiquitous Computing in the remainder of the present paper. For a DT, any data collected in the physical world must be represented in “cyberspace” and thus requires computation. Many Ubiquitous Computing applications pre-date the IoT paradigm but have been subsumed by it (see [

257]).

The closeness between the Thing and computations performed on sensor data from the Thing has often resulted in them being regarded as equivalent to each other [

47]. Similarly, the concept of a DT is often used as a synonym for both the PT (homologous to the Thing) and the Physical Environment with WSAN (homologous to computations on sensor data from the Thing) from which the DT is created. Ubiquitous Computing offers us a lens to distinguish between PT and DT by considering the difference between computations on the state of an object and the actual object. SOs [

7,

258,

259,

260] are a typical example where the distinctions between PT, DT, sensors, actuators, and computations are blurred. SOs are expected to have awareness of their environment and have some model of the ongoing activities, policies, and processes around them. At the same time, SOs should represent functions, rules, and flows that allow for interactivity [

7,

258]. This formulation clearly indicates that the SOs have a Physical Environment in which they are embedded, an Analytical Environment to model their behaviour, a Data Environment to maintain their state, and a Virtual Environment in which their interactivity can be triggered. When the computations on the object or Thing become unrecognisable to humans because they are “woven into the background”, as predicted by Weiser [

46], the term Ambient Intelligence becomes more relevant [

42,

43,

44,

45]. Making a clear distinction between a Thing, an SO, and Ambient Intelligence is probably neither possible nor desirable.

4.3.5. Internet of Things

The IoT is an amalgam of competing technologies with the goal of digitally representing the physical world. It interconnects WSANs to be combined into local CPSs that in turn compose larger DTs.

The idea behind IoT was first hyped [

261,

262,

263], then overestimated, and then slowly became practical [

264]. The term IoT was probably coined towards the end of the last millennium [

265], but its roots are older, albeit unnamed. The functional similarity of CPSs [

136] and Ubiquitous Computing [

46], as well as stipulations from early thinkers such as Nikola Tesla ([

232], p. 122), make its historical provenance a question of choosing a remotely related cognate. Following Atzori and colleagues [

232], we take the probable coining of the term “Internet of Things” by Ashton in 1999 [

265] as a starting point to limit ourselves to cases where the general idea of IoT was already the goal of research.

Nearly anything remotely related to Information and Communication Technologies (ICT) [

264] is included in modern IoT research [

47,

165,

247,

266,

267,

268,

269,

270,

271,

272,

273,

274,

275,

276,

277] because IoT promises to be the invisible glue to digitally represent the world and offers new means of analysing and understanding [

46]. In part, this may be due to the well-known term

Internet that immediately allows people to associate it with global information infrastructure [

158]. On the other hand, a

Thing is vague enough to label any entity that can be distinguished by some measure [

158]. The Intranet of Things in a WSAN has been transformed through ICT [

277,

278,

279] and cloud-centric processing [

268,

280,

281] to the IoT. This loose description is what allowed the IoT to soak up many kinds of ICT developments for the last decade (see

Figure 12).

While the term IoT has recently become the most common term for the linkage between the virtual and the real, that was not always the case. Previously, a set of competing ideas (e.g., CPS, WSAN, and Ubiquitous Computing) co-created the ecosystem that today is generously labelled IoT and which laid the hardware foundation for DTs. These ideas were closely related but ultimately based on different assumptions because they originated from different fields. According to public perception, the terminology has settled on IoT even as the underlying technology is still quickly diversifying and expanding. With current trends, DTs appear to be a concept that is set to overtake the IoT in terms of public perception (see

Figure 12).

Another issue is that IoT is a buzzword that is difficult to grasp because it is overloaded with meaning. The literature is overwhelming in volume and, at the same time, highly specific. The many technologies marketed under the umbrella-term IoT would be easier to distinguish if they were labelled according to their function. While the technological umbrella-term is now well established, if ill-defined, there is still a lingering sense that different fields of application are uniquely distinct and require special attention. Research and development are thus heavily directed towards five real-world application areas that promise the biggest gains: industrial applications, urban management, health care, vehicular applications, and end-user applications [

47,

165,

202]. This ingrained belief regarding the distinction leads to difficulties in synthesising common knowledge across applications. Overcoming this barrier could help the interdisciplinary fields of IoT and DTs in many ways. Not only would it enable learning from each other to clarify their theoretical backgrounds, but it would also make the use of IoT as a basis for DTs more accessible. Indeed, a clearly communicated and unified approach to IoT would lower the barriers of entry tremendously.

Weiser [

46] postulated that the goal of Ubiquitous Computing is to make computations disappear into the mundane background of the environment. To obtain services that hide in plain sight, three categories of design considerations have been proposed for IoT to work: fundamental properties of a Thing, network communication properties, and real-time interaction properties. The fundamental properties of a Thing include existence, sense of self, connectivity, interactivity, dynamicity, and sometimes environmental awareness [

178,

191,

239]. Much research has also been conducted on properties to make network communication more efficient, including energy, latency, throughput, modular design for improved scalability, topology, security of information, safety [

42,

178,

239], deployment, mobility, cost, efficient resource usage, heterogeneity, communication modality, infrastructure, coverage, network size, and lifetime [

178,

239]. Real-time requirements in industrial and healthcare settings have brought forth another set of properties that need to be considered to enable real-time interaction with IoT networks: predictable delays and latencies, congestion-free communication, accurate time synchronisation, real-time assurance, interoperability with existing infrastructure, and prioritised communication [

178].

The historical development of IoT and the evolution of its definitions have been addressed in detail by [

232]. The work identified three generations of IoT development with different foci that have resulted in the current diffuse definitions (see

Table 3). An additional new generation is identified in this review paper that exemplifies the most current trends of DT research in IoT that were not reported in [

232]. Notably, while these four generations focus on distinctive features, they are effectively contemporary because these generations are still actively researched. To make sense of these concurrent generations, it is best to understand each generation as a necessary condition to start work on the following generations.

The first generation is driven by the rise of RFID tags [

265] to digitally distinguish Things. At this time, IoT was often taken to be synonymous with RFID. This generation is tightly coupled to Ubiquitous Computing [

46], Machine-to-Machine (M2M), and CPSs as the RFID technologies ultimately derive from these research fields. However, by the late 2000s, most of these technologies had been tagged IoT. The second generation is driven by interconnecting Things instead of just centralising the information flow. It focuses on the network component of IoT devices and realistically brings the

Internet into the IoT [

282]. Control of information becomes more central as well, reinforcing the connections to its roots in CPSs [

136]. The third generation is driven by a re-centralisation around cloud technologies that had matured in the meantime [

268,

280,

281]. This generation focuses on processing data and generating knowledge. The fourth generation is driven by a second wave of decentralisation due to time sensitivity of applications, fog computing [

283], and the rise of DTs [

2] and servicisation [

212]. As questions of processing have been addressed, the newest generation brings more efficiency to IoT. IoT is often mentioned as a multiplier that makes other technologies more attractive [

232], contributing to the diffusion of the term.

4.3.6. M2M

Machine-to-Machine (M2M) communication allows Things and network components to interact automatically with each other without human actions, thus enabling automated formation of CPSs or DTs on different scales.

The complexity of an IoT system underpinning a DT requires much communication between each involved Thing and the network infrastructure to support a control system [

136,

234,

285]. These communication protocols have been aggregated and named M2M communication. M2M is an ongoing research field and is usually required for all other IoT-related and DT-related activities [

286,

287,

288,

289]. Essentially, M2M describes how IoT components interact with each other and can be understood as part of the implementation of the Connection Environment of a DT. As such, M2M, IoT, and DTs cannot be meaningfully separated, although they do not constitute the same concept. Before IoT became the defining word for ICT, M2M was a contender for being the umbrella-term for these technologies.

The number of devices connected to IoT today surpasses the number of devices manually connected to the Internet. Thus, it is impossible to manually manage the communication between devices by connecting them or maintaining them [

178,

290,

291,

292]. This aspect triggered the development of the M2M concept in the early 2000s [

288,

293] followed by standardisations such as oneM2M [

153] in the 2010s. At the centre of M2M activities are the protocols that are used to exchange information between devices. Protocols describe predefined procedures of how to initiate, maintain, and reestablish communication [

153]. While some protocols are high-level, they are often bound by the physical limitations of the communication capabilities of the constrained devices. The classical standard Internet Protocol (IP) was quickly disqualified as too complex a platform [

294] because of the assumptions that the computing infrastructure was powerful, wired, and connected and that communication was a negligible overhead. However, constrained devices have been highly optimised in terms of energy consumption, size, and costs such that communication has become the major bottleneck [

295].
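The three protocol duties named above (initiating, maintaining, and reestablishing communication) can be illustrated with a minimal sketch. It is not taken from any M2M standard: the class `Link`, the missed-keepalive threshold, and the method names are hypothetical and serve only to make the life cycle concrete.

```python
from enum import Enum, auto


class LinkState(Enum):
    DISCONNECTED = auto()
    CONNECTED = auto()


class Link:
    """Hypothetical link life cycle: initiate, maintain, reestablish."""

    def __init__(self, keepalive_limit=3):
        # keepalive_limit is an assumed parameter: the number of consecutive
        # missed keepalives after which the link is considered lost.
        self.keepalive_limit = keepalive_limit
        self.state = LinkState.DISCONNECTED
        self.missed = 0
        self.reconnects = 0

    def initiate(self):
        """Open the link and reset the missed-keepalive counter."""
        self.state = LinkState.CONNECTED
        self.missed = 0

    def on_keepalive(self, received):
        """Maintain the link: count missed keepalives and drop the link
        once the limit is reached."""
        if self.state is not LinkState.CONNECTED:
            return
        if received:
            self.missed = 0
            return
        self.missed += 1
        if self.missed >= self.keepalive_limit:
            self.state = LinkState.DISCONNECTED

    def reestablish(self):
        """Reopen a lost link; a link that is still up is left untouched."""
        if self.state is LinkState.DISCONNECTED:
            self.reconnects += 1
            self.initiate()
```

Even this toy version shows why communication is costly for constrained devices: every keepalive is a transmission that must be paid for in energy.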

The physical infrastructure to connect constrained devices to the network is usually referred to as physical/PHY [

181], and the data link/MAC address is used to uniquely identify single units [

296,

297,

298]. These terms originate from the OSI model and refer to the physical and data link layers between devices [

299]. Originally, a link would be a copper or fibre cable. However, wireless transmission has adopted the same terminology, so PHY usually refers to some kind of antenna that uses a pattern of signals to encode information [

251]. To enable a more efficient use of bandwidth and potentially allow for more devices, Cognitive Radios allow secondary users to use communication channels while primary users are idle [

287,

300,

301]. The main trade-off for the PHY connection is between data rates, connection distances, and energy consumption. Currently, technology can be approximately grouped into four application areas: Body Area Networks (BAN), Personal Area Networks (PAN), Local Area Networks (LAN), and Wide Area Networks (WAN) (see

Table 4 for an overview).
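The opportunistic channel use of Cognitive Radios can be sketched as follows. This is an illustrative simplification under our own assumptions: channel occupancy is given as a list of booleans, secondary users are plain labels, and the function names are hypothetical. Real systems additionally need spectrum sensing and interference constraints.

```python
def assign_secondary_users(primary_busy, secondary_users):
    """Give each idle channel to at most one waiting secondary user.

    primary_busy: list of bool, True if the primary user occupies the channel.
    secondary_users: list of labels, served in order.
    Returns a dict mapping channel index to secondary user.
    """
    idle_channels = [ch for ch, busy in enumerate(primary_busy) if not busy]
    return dict(zip(idle_channels, secondary_users))


def vacate_reclaimed_channels(assignment, primary_busy):
    """Drop secondary users from channels that a primary user has reclaimed."""
    return {ch: user for ch, user in assignment.items() if not primary_busy[ch]}
```

For example, with occupancy `[True, False, False, True]` and three waiting secondary users, only two can be served, and they must yield as soon as a primary user returns to their channel.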

The large standardising bodies, IEEE and IETF, approach protocols in constrained environments based on expansion of the IEEE Standard 802.15 [

315,

316] and the IETF LLN protocol [

294,

295] to handle IP. In addition, there are technological niches that have not been fully covered by those standardising organisations such as the Low Power Wide Area Networks (LPWAN) where protocols vary and are highly incompatible, reflecting a low level of industry cooperation [

309,

310,

311,

312]. However, several consortia have developed around the different core technologies associated with each protocol, and only the coming years will show how prevalent each WAN technology becomes.

The commonality among all protocols is that they are simplified to move data from the constrained devices to some kind of translation unit that translates the data into IP, making it widely available on the Internet [

317,

318,

319]. This translation is necessary to cope with the reduced computing capacity of constrained devices as well as questions of how to transmit data and scale local IoT networks for use in a DT. A difficulty arises for these protocols because the translation unit of constrained devices not only sends data (such as with sensors) but may also receive data (such as with actuators). This aspect requires a bidirectional translation protocol that can uniquely address every node from the outside [

320].
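A bidirectional translation unit of this kind can be sketched in a few lines. The sketch rests on our own assumptions rather than on any specific protocol: the class `Gateway` and its methods are hypothetical, constrained devices are identified by small integer IDs, and each ID is mapped to an address inside the IPv6 documentation prefix `2001:db8::/64`.

```python
import ipaddress


class Gateway:
    """Hypothetical translation unit between a constrained network and IP."""

    def __init__(self, prefix="2001:db8::/64"):
        # Assumed prefix from the IPv6 documentation range.
        self.network = ipaddress.IPv6Network(prefix)
        self.nodes = set()

    def register(self, local_id):
        """Admit a constrained device and return its global address."""
        self.nodes.add(local_id)
        return self.network[local_id]

    def uplink(self, local_id, reading):
        """Sensor direction: attach the global address to a local reading."""
        if local_id not in self.nodes:
            raise KeyError(f"unknown node {local_id}")
        return self.network[local_id], reading

    def downlink(self, global_addr, command):
        """Actuator direction: resolve a global address to the local node."""
        addr = ipaddress.IPv6Address(global_addr)
        if addr not in self.network:
            raise KeyError(f"{addr} is outside {self.network}")
        local_id = int(addr) - int(self.network.network_address)
        if local_id not in self.nodes:
            raise KeyError(f"unknown node {local_id}")
        return local_id, command
```

Because the mapping between local ID and global address is a fixed offset within the prefix, the same table serves both directions, which is the property that lets an actuator be addressed uniquely from the outside.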

4.3.7. Computation-on-the-Wire/Dew/Mist/Fog/Cloud and Edge Computing

Computation-on-the-wire computes all necessary transformations on data at the best possible point in the network according to the subsidiarity principle to deliver Ubiquitous Computing for CPSs such as DTs.