Abstract

Background and Objectives: Diabetes is a global public health challenge, with increasing prevalence worldwide. The implementation of artificial intelligence (AI) in the management of this condition offers potential benefits in improving healthcare outcomes. This study primarily investigates the barriers and facilitators perceived by healthcare professionals in the adoption of AI. Secondarily, by analyzing both quantitative and qualitative data collected, it aims to support the potential development of AI-based programs for diabetes management, with particular focus on a possible bottom-up approach. Materials and Methods: A scoping review was conducted following PRISMA-ScR guidelines for reporting and registered in the Open Science Framework (OSF) database. The study selection process was conducted in two phases—title/abstract screening and full-text review—independently by three researchers, with a fourth resolving conflicts. Data were extracted and assessed using Joanna Briggs Institute (JBI) tools. The included studies were synthesized narratively, combining both quantitative and qualitative analyses to ensure methodological rigor and contextual depth. Results: The adoption of AI tools in diabetes management is influenced by several barriers, including perceived unsatisfactory clinical performance, high costs, issues related to data security and decision-making transparency, as well as limited training among healthcare workers. Key facilitators include improved clinical efficiency, ease of use, time-saving, and organizational support, which contribute to broader acceptance of the technology. Conclusions: The active and continuous involvement of healthcare workers represents a valuable opportunity to develop more effective, reliable, and well-integrated AI solutions in clinical practice. 
Our findings emphasize the importance of a bottom-up approach and highlight how adequate training and organizational support can help overcome existing barriers, promoting sustainable and equitable innovation aligned with public health priorities.

1. Introduction

1.1. Prevalence of Diabetes and Social Impact

According to the International Diabetes Federation (IDF), diabetes currently affects approximately 589 million individuals worldwide between the ages of 20 and 79. Among the various forms of diabetes, Type 2 Diabetes (T2D) accounts for the vast majority of cases, with a prevalence estimated between 87% and 91%. Projections for 2050 indicate a substantial increase in global prevalence, expected to reach 853 million cases within the same age range, which will likely be accompanied by a corresponding rise in healthcare costs [1,2,3,4,5]. The majority of individuals with diabetes are obese and physically inactive, particularly within the 45–64 age group (28.9% of males and 32.8% of females) [6,7,8]. In Italy alone, the prevalence of diabetes was estimated at 4 million individuals in 2023 [9,10]. While genetic predisposition and advancing age are recognized contributors to the pathogenesis of numerous chronic diseases such as diabetes [11,12], it is predominantly unhealthy lifestyle behaviors that significantly influence both the onset and progression of these conditions [13,14,15,16,17]. In particular, dietary patterns characterized by excessive caloric intake, a high consumption of refined grains in place of whole grains, and insufficient physical activity constitute the principal modifiable risk factors [18,19,20]. These detrimental habits not only facilitate the development of disease but also exacerbate its clinical course, substantially increasing the risk of complications. Such complications include both peripheral vascular disorders and more complex cardiovascular events, with potentially severe outcomes such as acute myocardial infarction and cerebrovascular accidents (stroke), which are associated with increased morbidity and mortality in both T2D and Type 1 Diabetes (T1D) [21,22,23]. In light of this evidence, the implementation of comprehensive primary and secondary prevention strategies aimed at promoting healthier lifestyles is imperative [24,25,26].
Emphasis should be placed on balanced nutritional intake, caloric moderation, and the adoption of regular physical activity, with the goal of reducing the global burden of disease and improving population health outcomes in T1D and T2D [27,28,29,30].

1.2. Use of Devices and Technology in Diabetes Management

Alongside the promotion of healthy lifestyle behaviors, diabetes management, particularly for T1D, has long benefited significantly from technological innovation applied in clinical settings, especially with regard to glucose self-monitoring and insulin delivery. Devices such as continuous glucose monitoring (CGM) systems, insulin pumps (IP), and smart multiple daily injection (MDI) systems, often integrated into hybrid closed-loop systems, have become indispensable supports in daily clinical practice [21,22]. They play a critical role in reducing glycemic variability and preventing hypoglycemic episodes, thereby helping to avert major diabetes-related complications. In the context of T2D as well, digital technologies, including self-management applications and telemonitoring tools, are proving valuable, particularly in the personalization of therapeutic interventions and the optimization of clinical outcomes and complication management [31,32,33,34]. In recent years, artificial intelligence (AI) has taken an increasingly prominent role in both diabetes research and clinical practice, owing to its capacity to process and analyze large volumes of clinical and behavioral data efficiently and accurately [35,36,37,38]. Predictive models based on AI techniques such as machine learning (ML) and deep learning (DL) are being developed and implemented to support clinical decision-making, enhance the prediction of complication risks, and personalize treatment pathways [39,40,41]. Applications of AI in diabetes care range from early diagnosis to automated insulin dosing management and the identification of critical glycemic patterns, thereby contributing to the emergence of a new paradigm in precision medicine for diabetes [35,36,37,38,39,40,41].
Nonetheless, the issue of potential barriers and facilitators perceived by healthcare professionals in the effective implementation of these AI-based technologies in routine clinical practice remains largely unexplored—a gap this study aims to address in a bottom-up view, defined as “an approach guided by healthcare workers’ insights and daily experiences” [42].

1.3. Study Aims

The primary objective of this study was to investigate the main barriers and facilitators perceived by healthcare professionals involved in the implementation of artificial intelligence (AI) in diabetes management:

- What are the barriers and facilitators to the use of AI by healthcare professionals in the management of diabetes?

Secondarily, the study aims to explore and support research through the collection of both quantitative and qualitative data to inform the development and implementation of specific AI-based programs for diabetes management, following a bottom-up approach involving healthcare professionals.

- Which quantitative and qualitative insights, as perceived by healthcare professionals, can most effectively inform the bottom-up implementation of AI in diabetes care?

2. Materials and Methods

2.1. Study Design and Registration

A scoping review was conducted to ensure methodological rigor and the relevance of selected studies. This review followed the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews guidelines (PRISMA-ScR) (PRISMA-ScR checklist available in Supplementary Table S1) [43]. The protocol for this review was registered in the Open Science Framework database (https://osf.io/xgy2z; accessed on 12 June 2025).

2.2. Search Strategy

The search strategy was developed using the Population, Concept, Context (PCC) framework (Table 1), without temporal restrictions [44]. The search, updated to 31 January 2025, combined keywords using the Boolean operators AND and OR in the PubMed/MEDLINE, Scopus, CINAHL, and Embase databases. Search strings are available in Supplementary Table S2.
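As an illustration of how PCC elements can be combined with Boolean operators, the following minimal sketch joins synonyms within each element with OR and joins the elements with AND. The terms below are hypothetical placeholders chosen for illustration only; they are not the search strings used in this review, which are reported in Supplementary Table S2. The function names `or_block` and `build_query` are likewise assumptions of this sketch.

```python
def or_block(terms):
    """Join the synonyms for one PCC element with OR, quoting each phrase."""
    quoted = [f'"{term}"' for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(population, concept, context):
    """Combine the three PCC elements with AND, skipping any empty element."""
    blocks = [or_block(terms) for terms in (population, concept, context) if terms]
    return " AND ".join(blocks)


# Hypothetical placeholder terms (NOT the review's actual search strings).
POPULATION = ["healthcare professionals", "nurses"]
CONCEPT = ["artificial intelligence", "machine learning"]
CONTEXT = ["diabetes"]

if __name__ == "__main__":
    print(build_query(POPULATION, CONCEPT, CONTEXT))
```

In practice, each database has its own field tags and controlled vocabulary, so a generic string such as this one would need to be adapted per database.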

Table 1.

Inclusion and exclusion criteria, described according to the PCC framework.

2.3. Eligibility Criteria

The inclusion criteria encompassed primary studies published in English, without temporal restriction, that were relevant to the study’s objectives and involved healthcare workers in AI processes. All studies that did not meet the stated inclusion criteria were excluded. The authors nevertheless attempted to include studies in Chinese as well, after evaluating the English-language abstract to ensure it met the inclusion criteria.

2.4. Study Selection Process

The study selection process for this review followed a two-phase procedure: an initial screening of titles and abstracts, followed by a detailed evaluation of full-text articles. All potentially relevant articles were imported into the reference manager Rayyan (https://www.rayyan.ai/; accessed on 20 June 2025) for data organization and management. The initial screening was conducted independently and in a blinded manner by three authors (G.C., A.M., and M.R.), who evaluated titles and abstracts based on their relevance to the study and in accordance with the predefined inclusion criteria. A fourth independent researcher (A.C.) resolved any disagreements at this stage. Following the initial screening, full-text articles meeting the preliminary criteria were independently assessed by the same three researchers. Any discrepancies were resolved through consensus meetings, with the fourth researcher (A.C.) acting as arbitrator to ensure integrity in the selection process. This systematic approach was intended to ensure a rigorous and unbiased selection of studies for this review.

2.5. Data Extraction and Quality Appraisal

Data extraction from the included studies was organized into key categories, consistent with the methodological framework [43,44]. The main categories were intervention, outer setting, inner setting, individual characteristics, and implementation process. This structured categorization facilitated both detailed reporting and thorough analysis. The extracted data were presented as a narrative summary, organized according to the review’s objectives and supplemented in Table 2. The risk of bias and methodological quality of the included studies were assessed following the JBI framework [43], using JBI checklists for qualitative [45] and cross-sectional studies [46] and the MMAT tool for mixed-methods studies [47]. Two reviewers (A.M. and A.C.) conducted the evaluation independently to ensure objectivity, and any disagreements were resolved through discussion with a third researcher (M.R.) until consensus was reached. Each study’s score was expressed as the percentage of checklist items present: a score lower than 70% was classified as low-quality, a score between 70 and 79% as medium–high-quality, a score between 80 and 90% as high-quality, and a score greater than 90% as excellent-quality [48]. However, due to the exploratory nature of the present work, no studies were excluded for insufficient quality.
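The scoring rule described above can be sketched as a small function. This is an illustrative sketch rather than the procedure actually used by the reviewers: the function names are hypothetical, and the handling of scores falling between 79% and 80% (which the stated bands do not cover explicitly) is an assumption, here assigned to the medium–high band.

```python
def quality_score(items_met, items_total):
    """Return the percentage of checklist items present in a study."""
    if items_total <= 0:
        raise ValueError("items_total must be positive")
    if not 0 <= items_met <= items_total:
        raise ValueError("items_met must be between 0 and items_total")
    return 100.0 * items_met / items_total


def classify_quality(score_pct):
    """Map a percentage score to the quality bands stated in the text.

    Assumption: scores of 79% up to (but not including) 80% are treated
    as medium-high, since the source bands leave this interval unspecified.
    """
    if score_pct < 70:
        return "low"
    if score_pct < 80:
        return "medium-high"
    if score_pct <= 90:
        return "high"
    return "excellent"


if __name__ == "__main__":
    # Example: a study meeting 8 of 10 checklist items.
    print(classify_quality(quality_score(8, 10)))
```

Because checklists differ in length, the same number of unmet items can place studies in different bands, which is worth keeping in mind when comparing ratings across study designs.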

2.6. Conceptual and Analytical Framework

The synthesis and presentation of study results followed established guidelines [43,44]. The description of the identified barriers and facilitators is structured according to the Consolidated Framework for Implementation Research (CFIR) [49], a comprehensive theoretical framework widely used to guide implementation research. CFIR comprises five major domains (intervention characteristics, outer setting, inner setting, characteristics of individuals, and implementation process) that offer a systematic approach to understanding factors influencing implementation. In this scoping review, CFIR was used as a guiding structure to map and interpret both qualitative and quantitative data extracted from the selected studies, enabling a comprehensive and theory-informed synthesis of the findings. Key statistical measures, including means (M), standard deviations (SD), and p-values, were integral to the analysis. To maintain the integrity of the original studies, statistical significance reporting was preserved as presented in each study. Consistent with scientific conventions, p-values of 0.05 or lower were considered statistically significant, ensuring the inclusion of robust and meaningful findings in the review. In addition to the quantitative synthesis, qualitative data were also systematically extracted and analyzed, where applicable, to capture nuanced insights and contextual dimensions of the study findings. The qualitative synthesis followed the principal thematic frameworks established in the included studies, in order to enhance and complete the analysis.

2.7. Synthesis of the Results

In this review, while the benefits of meta-analysis are acknowledged, a combined quantitative synthesis was deemed not feasible due to the heterogeneity of the included studies. This variability, characterized by differences in intervention types and methodologies for quantifying relationships between variables, led to inconsistencies in both methodological and statistical approaches. As a result, a detailed narrative synthesis was chosen, following established guidelines for synthesis without meta-analysis (SWiM) [50]. This approach was selected for its effectiveness in transparently and rigorously synthesizing diverse quantitative data, aligning with the PRISMA guidelines [43]. Data synthesis was performed based on the CFIR framework [49]. The CFIR is a well-established conceptual model in implementation science, and it is a comprehensive and standardized meta-theoretical framework. The updated version of the framework is organized into five domains: intervention, outer setting, inner setting, individual characteristics, and implementation process [49]. The CFIR served as a foundational structure for the exploratory assessment of barriers and facilitators to implementing imaging-based, AI-assisted diagnostic decision-making. The comprehensive and adaptable use of the updated CFIR throughout data collection, analysis, and reporting aimed to enhance research efficiency, generate generalizable findings to inform AI implementation practices, and contribute to a robust evidence base for tailoring implementation strategies to overcome key barriers.

3. Results

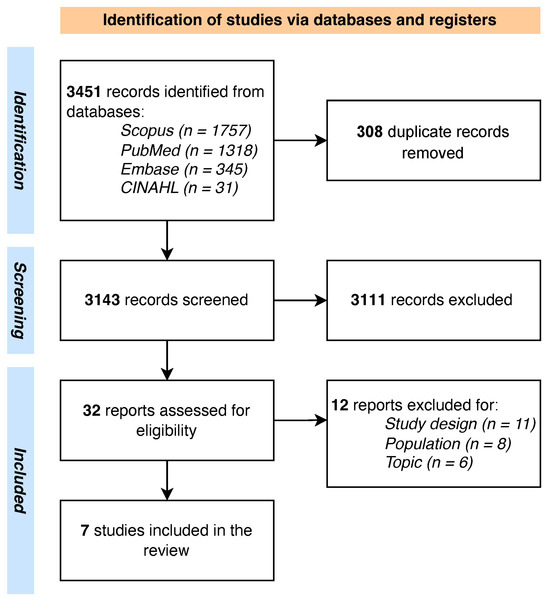

The PRISMA flowchart of the screening process is shown in Figure 1. A total of n = 3451 records were retrieved from the databases and, after removal of duplicates (n = 593), the researchers (G.C., A.M., A.C., and M.R.) screened a total of n = 3143 records by title and abstract. Thirty-two full texts were screened. Finally, a total of n = 7 studies, conducted between 2019 and 2024, were included [51,52,53,54,55,56,57].

Figure 1.

PRISMA flowchart.

Studies’ characteristics are shown in Table 2. The population varied from 10 participants to 207, with a range of ages from 40 to 60 years. The involved healthcare professionals were mainly nurses, healthcare assistants, and doctors (i.e., ophthalmologists, diabetologists, endocrinologists, physicians, and general practitioners).

Table 2.

Summary of the included studies.

| Study | Country | Study Design | Setting | Sample Size (N, % Female) | AI Type |
|---|---|---|---|---|---|
| Held et al., 2022 [51] | Germany | Qualitative | Primary care | 24 (42) | Smartphone-based and AI-supported diagnosis tools for the screening of diabetic retinopathy |
| Liao et al., 2024 [52] | China | Qualitative | Hospital and community healthcare center | 40 (42.5) | AI-assisted system for diabetic retinopathy screening |
| Petersen et al., 2024 [53] | Denmark | Qualitative | Hospital | 18 (61) | AI-assisted system for diabetic retinopathy screening |
| Romero et al., 2019 [55] | United States | Mixed-methods | Primary care outpatient clinics | 83 (N/A) | AI-powered clinical decision support system for identifying diabetes patients at risk of poor glycemic control |
| Roy et al., 2024 [54] | India | Cross-sectional | Physicians in clinical practice | 202 (N/A) | AI-based diabetes diagnostic interventions |
| Wahlich et al., 2024 [57] | United Kingdom | Qualitative | Hospital and community healthcare center | 98 (N/A) | AI-assisted system for diabetic retinopathy screening |
| Wewetzer et al., 2023 [56] | Germany | Cross-sectional | Primary care | 209 (107) | AI-assisted system for diabetic retinopathy screening |

AI: artificial intelligence; N: number; N/A: not applicable; %: percentage.

The study conducted by Liao et al. also included healthcare administrative staff and information technology experts [52]. The majority of the included studies were focused on the use of AI for diabetic retinopathy screening [51,52,53,56,57], with only two studies focused on AI as a tool for glycemic control and AI as a general tool for diagnostic intervention [54,55].

Most of the studies adopted a qualitative design (n = 4) that used semi-structured interviews [51,52,53,57]. Two quantitative cross-sectional studies were based on surveys [54,56], and finally, one study adopted a mixed-methods design [55].

All the included studies described research conducted in primary care settings, with the exception of the study conducted by Roy et al. [54], which was conducted in a clinic. Notably, two studies were performed inside community healthcare centers [52,57].

3.1. Quality Appraisal

Table 3 shows the overall quality of the included studies according to the JBI framework [44]. Complete quality appraisal is available in Supplementary Tables S3–S5.

Table 3.

Results of quality appraisal.

Regarding the four qualitative studies, Wahlich et al. [57] and Held et al. [51] were rated as medium-quality due to the absence of statements addressing the researchers’ cultural positions and the mutual influence between the researchers and the research process. The studies by Liao et al. [52] and Petersen et al. [53] were well designed.

Of the two studies with a cross-sectional design, the study by Roy et al. [54] was rated as low-quality due to missing information on the inclusion and exclusion criteria and on the study setting, while the study by Wewetzer et al. [56] was rated as medium-quality because confounding factors were not managed.

Finally, the mixed-methods study performed by Romero et al. [55] was well designed and conducted, with a high-quality rating.

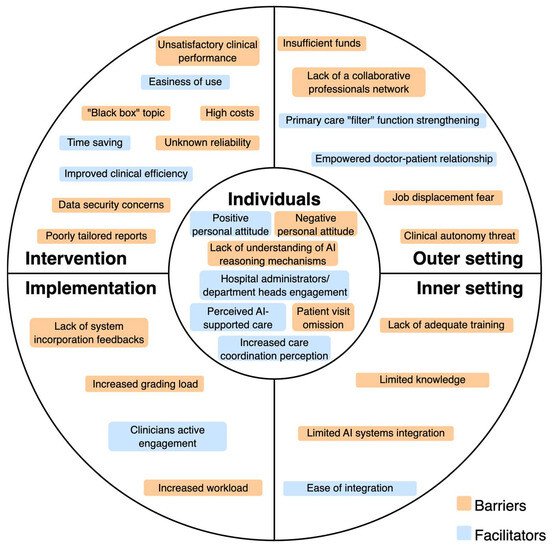

3.2. Barriers and Facilitators Identified According to the CFIR Framework

The following sections present findings according to the CFIR framework domains, and Figure 2 illustrates the main identified barriers and facilitators. Comprehensive data extraction is shown in Supplementary Table S6.

Figure 2.

Barriers and facilitators of AI implementation for diabetes management reported by healthcare professionals.

3.2.1. Individuals Domain

The principal barrier is a negative personal attitude towards AI systems, with associated skepticism [51], difficulty in understanding the AI reasoning mechanisms [52], or forgetting to visit the patient [53].

The facilitators belonging to this domain are engagement of hospital administrators or department heads [52], positive attitude towards the future of AI technology [57], perception of increased care coordination [55], or perception of AI as a support [57].

3.2.2. Intervention Domain

The relevant barrier under this domain is the unsatisfactory clinical performance of the AI system. This aspect is related to image recognition, time (duration of examination and latency of results), validity (the AI system may miss some retinopathy changes), and uncertainty about the accuracy and trustworthiness of AI outputs [51,52,53,54]. In addition, Romero et al. [55] reported the high false positive rate in patient risk classification as a barrier, whereas Wewetzer et al. [56] and Wahlich et al. [57] highlighted the diagnostic limitations of AI systems, which may not detect conditions other than the one for which they were designed, leading to incomplete diagnosis. Finally, doubts about reliability and accuracy may negatively impact physician confidence in the system [56,57]. Another common barrier is the financial burden of AI software and its high acquisition costs [52,53,56].

Another fundamental barrier to consider is the concern about data security, liability, and how the system made the decision (black box problem) [54,55,57]. Romero et al. [55] and Liao et al. [52] also reported the poorly tailored reporting of AI systems.

The identified facilitators are improved clinical efficiency [52,54,55], ease of use [52,54], and the time-saving effect, both for the patient and for the physician [53,56,57]. Only one study reported a financial facilitator [51].

3.2.3. Implementation Process Domain

The principal barriers are the lack of feedback in incorporation of the system [52], the increased workload due to extra steps outside the routine workflow [55], and the impact of a grading workload [57].

The only facilitator identified in the implementation process is the successful active engagement of the clinicians [52].

3.2.4. Inner Setting Domain

The principal barrier related to the inner setting is the lack of adequate training or limited knowledge and training on the AI system [52,54,55]. Also, the lack of integration of the AI system into the hospital/facility information system is another relevant concern that acts as a barrier [54,55,56].

The only facilitator identified under this domain is the ease of integration, with the associated simple installation [51,52].

3.2.5. Outer Setting Domain

For the outer setting domain, the crucial emerging barrier is the lack of a collaborative network between primary, secondary, and tertiary hospitals, which includes the related tensions between GPs and specialists with the concern of a lower referral rate [51,52]. Also, the fear of job displacement or changes in clinical autonomy is another concern that is reported as a barrier [54]. A study also reported that insufficient reimbursement by health care systems may act as a significant barrier [56].

The facilitators classified under this domain are the development of national guidelines related to AI [52], the strengthening of the primary care doctor’s filter function, and an associated closer relationship between patient and GP [51].
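To make the mapping in Sections 3.2.1 to 3.2.5 easier to inspect, the barriers can be arranged as a simple data structure keyed by CFIR domain. The sketch below is illustrative only: the short barrier labels are paraphrases introduced here, the numbers are the reference numbers cited in the text for each barrier, and the names `BARRIERS`, `studies_per_domain`, and `count_studies` are hypothetical.

```python
# Barriers per CFIR domain. Labels are short paraphrases of the text;
# each set holds the reference numbers cited for that barrier.
BARRIERS = {
    "individuals": {
        "negative attitude and skepticism": {51},
        "difficulty understanding AI reasoning": {52},
        "forgetting to visit the patient": {53},
    },
    "intervention": {
        "unsatisfactory clinical performance": {51, 52, 53, 54},
        "high false positive rate": {55},
        "diagnostic limitations": {56, 57},
        "high costs": {52, 53, 56},
        "data security, liability, black box": {54, 55, 57},
        "poorly tailored reporting": {52, 55},
    },
    "implementation process": {
        "lack of feedback": {52},
        "increased workload": {55},
        "grading workload": {57},
    },
    "inner setting": {
        "lack of training": {52, 54, 55},
        "lack of system integration": {54, 55, 56},
    },
    "outer setting": {
        "lack of collaborative network": {51, 52},
        "fear of job displacement": {54},
        "insufficient reimbursement": {56},
    },
}


def studies_per_domain(barriers):
    """Collect the distinct studies cited for any barrier in each domain."""
    return {
        domain: set().union(*items.values())
        for domain, items in barriers.items()
    }


def count_studies(barriers):
    """Count the distinct studies reporting at least one barrier per domain."""
    return {d: len(s) for d, s in studies_per_domain(barriers).items()}


if __name__ == "__main__":
    for domain, n in count_studies(BARRIERS).items():
        print(f"{domain}: {n} studies")
```

Counting distinct studies per domain, rather than barrier mentions, avoids weighting a domain more heavily simply because one study reported several related barriers.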

4. Discussion

The implementation of AI systems in healthcare represents one of the most significant challenges of the coming decade, not only for daily clinical practice but also for public health systems as a whole. The findings of this study, interpreted through the CFIR framework [49], clearly show that the barriers and facilitators to AI adoption operate on multiple levels, reflecting organizational, technological, individual, and systemic dynamics that influence full implementation and value realization.

4.1. Innovation, Effectiveness, and Trust in Technology

The most critical issue that emerged relates to the perceived unsatisfactory clinical performance—an aspect with profound implications for public health [58,59]. If AI systems are unable to ensure adequate levels of sensitivity and specificity—or if their reliability is perceived as uncertain—they risk undermining the quality of care and increasing the chances of missed or inappropriate diagnoses [60]. In the context of population screening (such as diabetic retinopathy), a high number of false positives or false negatives could either overload the system or falsely reassure patients. This confirms what has already been observed in international studies, which emphasize the need for rigorous clinical validation before the large-scale deployment of AI systems [61,62]. Furthermore, the lack of transparency in AI’s decision-making processes undermines the trust of healthcare professionals and patients, posing an ethical and regulatory challenge essential for the sustainable development of the technology [60,63]. This issue is currently the focus of attention within the European Health Data Space and the EU Artificial Intelligence Act, which introduces strict requirements for the “explainability” and traceability of collected data [64]. These concerns are rooted primarily in the CFIR domain of intervention, where the perceived evidence strength, complexity, and relative advantage of the AI tools are critical to their adoption. Additionally, elements from the individuals domain emerge, particularly regarding trust and acceptance by healthcare professionals.

4.2. Equity and Sustainability: AI Costs, Access, and Integration

The barrier represented by the costs of acquiring and managing AI can also be interpreted in terms of equity in access to healthcare innovations [65]. This economic barrier was identified within the CFIR domain of intervention characteristics, highlighting the perceived complexity and resource intensity associated with adopting AI-based tools. Mainly in publicly funded universal healthcare systems, the adoption of expensive technologies risks creating territorial inequalities, where larger or better-funded facilities can afford to implement AI, while peripheral or resource-limited ones risk being effectively excluded [66]. This issue was already highlighted by the World Health Organization (WHO) in the 2021 report “Ethics and Governance of Artificial Intelligence for Health”, which urges careful evaluation of AI’s redistributive impact in public health contexts [67]. Adding to this is the poor integration with existing information systems, which are often not designed to accommodate supplementary technologies, thereby hindering true interoperability. Without full connectivity between AI, electronic health records, and healthcare management systems, AI risks remaining an isolated technology, incapable of delivering real value to patient care [68,69]. These considerations also align with the principles of the United Nations Sustainable Development Goals (SDGs), particularly those promoting health, innovation, and equity in access to care [70].

4.3. Healthcare System and Multilevel Governance

In terms of governance and shared decision-making, it becomes clear that the lack of coordination between levels of care (e.g., primary care, specialists, hospitals) and potential communication difficulties among professionals represent critical barriers to AI implementation. This issue is part of a broader challenge in healthcare governance, which calls for dynamic and modern models of both vertical and horizontal service integration [71,72,73]. Technology alone does not solve these problems; on the contrary, it can amplify them if introduced without a clear and shared organizational framework. The concern, particularly regarding the potential loss of clinical autonomy—clearly emerging from the data reviewed—is a key issue for the “social” legitimacy of digital innovations in healthcare [74,75]. According to Greenhalgh et al. (2017), the adoption of new technologies requires a co-construction of change, in which professionals are actively involved not only in the use but also in the design and evaluation of the tools to be implemented in clinical practice [76]. These barriers align with the CFIR domains of inner setting and implementation process, emphasizing organizational coordination gaps and limited stakeholder engagement in technology adoption.

4.4. Training, Digital Literacy, and Empowerment

Training-related barriers fall within the CFIR domain of characteristics of individuals, particularly focusing on knowledge, self-efficacy, and the need for ongoing professional development to support effective AI adoption. From an internal perspective, the lack of adequate training on AI tools is a systemic barrier that must be urgently addressed, especially if the goal is to adopt AI-based care systems across multiple levels of healthcare delivery [77]. Structured pathways for continuous education are therefore essential, integrating “AI literacy” into university curricula, ongoing professional development programs, and career advancement tracks. This ensures that healthcare professionals do not become mere executors but rather informed actors who use technology as an extension of their clinical expertise [78,79].

4.5. Opportunities for Public Health

Despite the critical issues that have emerged, the identified facilitators show significant transformative potential. Time savings, increased clinical efficiency, the perception of greater care coordination and positive acceptance by some operators are valuable elements for the success of public policies that aim at the equitable and sustainable digitalization of healthcare [80,81,82].

The availability of national and international guidelines to support health professionals is perceived as an enabling element, and in this scenario, AI could represent a turning point if integrated with global implementation strategies, clinical audits, and impact assessment [64]. It would be desirable to establish an international public coordinating body (a “control room”) to oversee the implementation of AI, capable of providing technical standards, ethical assessments, and support to local decision makers and health service organizers, so that the use of AI in clinical practice meets all the necessary quality standards [67,83]. These facilitators reflect the CFIR domains of outer setting and implementation process, particularly highlighting the role of policy support, external incentives, and structured strategies to guide effective and equitable AI integration.

4.6. Barriers and Facilitators in a Bottom-Up Perspective

The introduction of AI and other technological tools into clinical settings—particularly for the management of chronic diseases—represents a significant opportunity to rethink traditional models of care and transition toward a more predictive, proactive, and personalized approach [84,85,86,87,88,89]. Chronic conditions such as diabetes, hypertension, cardiovascular, and respiratory diseases represent a substantial burden for public healthcare systems and require coordinated, continuous, and patient-centered management strategies [90,91]. In this context, AI can serve as a catalyst for innovation, provided that it is embedded in a well-structured and responsive clinical and organizational ecosystem [92]. Building on the CFIR framework, our analysis identified key barriers and facilitators across its five domains: characteristics of the intervention, outer setting, inner setting, individual characteristics, and implementation process. These domains provided the foundation for a structured and theory-informed classification of the data, while the discussion reframed these results in light of a bottom-up perspective—that is, how professionals working within healthcare systems perceive, experience, and respond to the integration of AI in their clinical practice [49,93,94]. From a practical standpoint, AI-based systems—such as those used for diabetic retinopathy screening or cardiovascular risk stratification [95,96]—can support the early detection of complications, thereby reducing the need for hospital-based interventions and enabling more timely, preventive care. This dual benefit supports both patients, who receive appropriate interventions sooner, and healthcare systems, which benefit from improved outcomes and resource efficiency [97]. However, integrating AI into routine clinical practice necessitates parallel transformations in organizational routines, workforce training, and digital competencies among healthcare professionals [98]. 
Rather than framing AI as a substitute for human expertise, it is essential to foster a collaborative model in which technology enhances the clinical judgment and skills of professionals. This reframing can help overcome cultural resistance to adoption, often rooted in concerns about professional identity and loss of autonomy [99,100]. Among the barriers, frontline professionals reported challenges such as poor interoperability of AI with existing electronic health records, lack of shared implementation strategies, and insufficient digital literacy. These aspects reflect issues related to the CFIR domains of intervention characteristics, inner setting, and outer setting. Additionally, fragmented care coordination and lack of shared governance structures were seen as limiting factors in the effective deployment of AI across healthcare levels [101,102]. Conversely, several facilitators emerged from a bottom-up viewpoint, highlighting how AI adoption can be positively influenced by perceived improvements in workflow efficiency, time savings, and greater integration between primary and specialty care. Professionals also reported a willingness to engage with new tools, and with technological devices in general, when training is adequate and when they are actively involved in implementation decisions at both the clinical and the social level [103,104,105].

4.7. A Public Health View on AI in Clinical Practice

For chronic disease management, where continuity and coordination of care are essential, AI systems can support transitions between care settings, enhance remote monitoring, and offer predictive insights that guide therapeutic decisions [106,107,108]. However, without appropriate regulation and inclusive planning, the risk of exacerbating health inequities remains high—particularly in underserved or digitally marginalized populations [109,110]. To prevent such disparities, healthcare professionals must be empowered not only as end users but also as digital mediators and educators, helping to guide patients and caregivers in the effective and ethical use of AI technologies [111,112,113,114,115]. Their proximity to patients and deep understanding of local care pathways position them as crucial actors in fostering responsible innovation. Finally, the development of AI for chronic care must be embedded in a broader cultural and ethical framework that views data as a collective asset and public health as the primary driver for technological development. This shift is necessary to ensure that data-driven medicine evolves in a fair, sustainable, and inclusive direction [116,117].

4.8. Strengths and Limitations

The study’s strengths include its solid methodological framework, supported by the adoption of the JBI methodology, the PRISMA-ScR guidelines for reporting [43,44], and the registration of the protocol in the OSF database, elements that ensure transparency and adherence to international standards of rigor. The use of the CFIR framework [49] allowed for an in-depth analysis of the factors influencing AI adoption by healthcare workers, facilitating a coherent classification that is useful from a public health perspective. However, some limitations should be considered. The exploratory nature of the scoping review did not allow for a quantitative synthesis of data, owing to the methodological heterogeneity of the included studies and of the cohorts considered. Additionally, many findings are based on the subjective perceptions of the healthcare workers involved in the included studies, which calls for caution in interpreting the collected data and highlights the need for future integration with objective data and evidence-driven research. Future studies are therefore required to address these gaps. In particular, longitudinal research and randomized studies should evaluate not only perceptions but also the real-world effectiveness and efficiency of AI in managing diabetes, and chronic diseases in general, from the healthcare worker’s point of view. This approach would help build more comprehensive and useful evidence for the sustainable implementation of technological innovation from a public health perspective.

5. Conclusions

The adoption of AI in chronic care, particularly in diabetes management, is shaped by a dynamic interplay of perceived barriers and facilitators among healthcare professionals. Their active and continuous involvement represents a key opportunity to develop more effective, reliable, and context-aware AI solutions that are better integrated into everyday clinical workflows. Promoting targeted training programs and sustained organizational support for healthcare workers involved in the complex management of diabetes may help overcome current challenges, advancing more equitable and sustainable innovation. Crucially, these findings point toward the value of a bottom-up approach—one that prioritizes the perspectives and practical needs of frontline professionals—as a promising pathway to support the successful implementation of AI in clinical practice. Framing AI adoption within a broader public health perspective, attentive to systemic readiness and social equity, can guide more inclusive strategies that align innovation with real-world healthcare priorities.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/medicina61081403/s1, Table S1: PRISMA-ScR checklist; Table S2: Search strategy; Table S3: Cross-sectional studies quality appraisal; Table S4: Qualitative studies quality appraisal; Table S5: Mixed-methods studies quality appraisal; Table S6: Complete data extraction.

Author Contributions

Conceptualization, G.C. (Giovanni Cangelosi), A.M., M.R., A.C., M.P. and F.P.; methodology, M.P., F.P., A.M. and G.C. (Giovanni Cangelosi); validation, M.P. and F.P.; formal analysis, G.C. (Giovanni Cangelosi), A.M., A.C., M.R., G.C. (Gabriele Caggianelli) and S.M.; investigation, G.C. (Giovanni Cangelosi), A.M., A.C., M.R., G.C. (Gabriele Caggianelli) and S.M.; data curation, A.M., M.R., G.C. (Giovanni Cangelosi), G.C. (Gabriele Caggianelli) and S.M.; writing – original draft preparation, A.C., G.C. (Giovanni Cangelosi), G.C. (Gabriele Caggianelli) and S.M.; writing – review and editing, A.M., M.R., M.P., F.P., A.C., G.C. (Giovanni Cangelosi) and G.C. (Gabriele Caggianelli); visualization, A.C.; supervision, M.P. and F.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article or Supplementary Materials.

Acknowledgments

Any use of artificial intelligence in the preparation of this manuscript was exclusively for final linguistic editing, supported by a native English-speaking author of the study. The content of this article is entirely the result of the original intellectual effort and research of the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| IDF | International Diabetes Federation |
| T2D | Type 2 Diabetes |
| T1D | Type 1 Diabetes |
| CGM | Continuous Glucose Monitoring |
| IP | Insulin Pump |
| MDI | Multiple Daily Injections |
| ML | Machine Learning |
| DL | Deep Learning |
| PRISMA-ScR | Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews |
| OSF | Open Science Framework |
| PCC | Population, Concept, Context |
| JBI | Joanna Briggs Institute |
| MMAT | Mixed-Methods Appraisal Tool |
| CFIR | Consolidated Framework for Implementation Research |
| SD | Standard Deviation |
| SWiM | Synthesis Without Meta-Analysis |
| GP | General Practitioner |
| WHO | World Health Organization |
| EU | European Union |

References

- Aschner, P.; Karuranga, S.; James, S.; Simmons, D.; Basit, A.; Shaw, J.E.; Wild, S.H.; Ogurtsova, K.; Saeedi, P. The International Diabetes Federation’s Guide for Diabetes Epidemiological Studies. Diabetes Res. Clin. Pract. 2021, 172, 108630.

- World Health Organization. Diabetes: Fact Sheets. Available online: https://www.who.int/news-room/fact-sheets/detail/diabetes (accessed on 13 June 2025).

- Chen, X.; Zhang, L.; Chen, W. Global, Regional, and National Burdens of Type 1 and Type 2 Diabetes Mellitus in Adolescents from 1990 to 2021, with Forecasts to 2030: A Systematic Analysis of the Global Burden of Disease Study 2021. BMC Med. 2025, 23, 48.

- Saeedi, P.; Petersohn, I.; Salpea, P.; Malanda, B.; Karuranga, S.; Unwin, N.; Colagiuri, S.; Guariguata, L.; Motala, A.A.; Ogurtsova, K.; et al. Global and Regional Diabetes Prevalence Estimates for 2019 and Projections for 2030 and 2045: Results from the International Diabetes Federation Diabetes Atlas, 9th Edition. Diabetes Res. Clin. Pract. 2019, 157, 107843.

- Nguyen, P.; Le, L.K.-D.; Ananthapavan, J.; Gao, L.; Dunstan, D.W.; Moodie, M. Economics of Sedentary Behaviour: A Systematic Review of Cost of Illness, Cost-Effectiveness, and Return on Investment Studies. Prev. Med. 2022, 156, 106964.

- Alkaf, B.; Blakemore, A.I.; Järvelin, M.-R.; Lessan, N. Secondary Analyses of Global Datasets: Do Obesity and Physical Activity Explain Variation in Diabetes Risk across Populations? Int. J. Obes. 2021, 45, 944–956.

- Komuro, K.; Kaneko, H.; Komuro, J.; Suzuki, Y.; Okada, A.; Mizuno, A.; Fujiu, K.; Takeda, N.; Morita, H.; Node, K.; et al. Differences in the Association of Lifestyle-Related Modifiable Risk Factors with Incident Cardiovascular Disease between Individuals with and without Diabetes. Eur. J. Prev. Cardiol. 2025, 32, 376–383.

- Chimoriya, R.; Rana, K.; Adhikari, J.; Aitken, S.J.; Poudel, P.; Baral, A.; Rawal, L.; Piya, M.K. The Association of Physical Activity With Overweight/Obesity and Type 2 Diabetes in Nepalese Adults: Evidence From a Nationwide Non-Communicable Disease Risk Factor Survey. Obes. Sci. Pract. 2025, 11, e70046.

- Istituto Superiore di Sanità. Giornata Mondiale Diabete: Dalla Prevalenza All’accesso Alle Cure, I Numeri Della Sorveglianza Passi. Available online: https://www.iss.it/-/giornata-mondiale-diabete-da-prevalenza-ad-accesso-cure-i-numeri-del-sistema-passi (accessed on 13 June 2025).

- Istituto Nazionale di Statistica. Il Diabete in Italia. Available online: https://www.istat.it/it/files/2017/07/REPORT_DIABETE.pdf (accessed on 13 June 2025).

- Jiang, Y.; Hu, J.; Chen, F.; Liu, B.; Wei, M.; Xia, W.; Yan, Y.; Xie, J.; Du, S.; Tian, X.; et al. Comprehensive Systematic Review and Meta-Analysis of Risk Factors for Childhood Obesity in China and Future Intervention Strategies. Lancet Reg. Health-West. Pac. 2025, 58, 101553.

- Blanken, C.P.S.; Bayer, S.; Buchner Carro, S.; Hauner, H.; Holzapfel, C. Associations Between TCF7L2, PPARγ, and KCNJ11 Genotypes and Insulin Response to an Oral Glucose Tolerance Test: A Systematic Review. Mol. Nutr. Food Res. 2025, 69, e202400561.

- Wang, Y.; Cao, P.; Liu, F.; Chen, Y.; Xie, J.; Bai, B.; Liu, Q.; Ma, H.; Geng, Q. Gender Differences in Unhealthy Lifestyle Behaviors among Adults with Diabetes in the United States between 1999 and 2018. Int. J. Environ. Res. Public Health 2022, 19, 16412.

- Teck, J. Diabetes-Associated Comorbidities. Prim. Care Clin. Off. Pract. 2022, 49, 275–286.

- Pearson-Stuttard, J.; Holloway, S.; Polya, R.; Sloan, R.; Zhang, L.; Gregg, E.W.; Harrison, K.; Elvidge, J.; Jonsson, P.; Porter, T. Variations in Comorbidity Burden in People with Type 2 Diabetes over Disease Duration: A Population-Based Analysis of Real World Evidence. eClinicalMedicine 2022, 52, 101584.

- Yu, M.G.; Gordin, D.; Fu, J.; Park, K.; Li, Q.; King, G.L. Protective Factors and the Pathogenesis of Complications in Diabetes. Endocr. Rev. 2024, 45, 227–252.

- Bech, A.A.; Madsen, M.D.; Kvist, A.V.; Vestergaard, P.; Rasmussen, N.H. Diabetes Complications and Comorbidities as Risk Factors for MACE in People with Type 2 Diabetes and Their Development over Time: A Danish Registry-Based Case–Control Study. J. Diabetes 2025, 17, e70076.

- Henney, A.E.; Gillespie, C.S.; Alam, U.; Hydes, T.J.; Boyland, E.; Cuthbertson, D.J. Ultra-processed Food and Non-communicable Diseases in the United Kingdom: A Narrative Review and Thematic Synthesis of Literature. Obes. Rev. 2024, 25, e13682.

- Chen, Z.; Khandpur, N.; Desjardins, C.; Wang, L.; Monteiro, C.A.; Rossato, S.L.; Fung, T.T.; Manson, J.E.; Willett, W.C.; Rimm, E.B.; et al. Ultra-Processed Food Consumption and Risk of Type 2 Diabetes: Three Large Prospective U.S. Cohort Studies. Diabetes Care 2023, 46, 1335–1344.

- Dai, W.; Albrecht, S.S. Sitting Time and Its Interaction With Physical Activity in Relation to All-Cause and Heart Disease Mortality in U.S. Adults with Diabetes. Diabetes Care 2024, 47, 1764–1768.

- Rafiei, S.K.S.; Fateh, F.; Arab, M.; Espanlo, M.; Dahaghin, S.; Gilavand, H.K.; Shahrokhi, M.; Fallahi, M.S.; Zardast, Z.; Ansari, A.; et al. Weight Change and the Risk of Micro and Macro Vascular Complications of Diabetes: A Systematic Review. Malays. J. Med. Sci. 2024, 31, 18–31.

- Costa Hoffmeister, M.; Hammel Lovison, V.; Priesnitz Friedrich, E.; Da Costa Rodrigues, T. Ambulatory Blood Pressure Monitoring and Vascular Complications in Patients with Type 1 Diabetes Mellitus: Systematic Review and Meta-Analysis of Observational Studies. Diabetes Res. Clin. Pract. 2024, 217, 111873.

- Perais, J.; Agarwal, R.; Evans, J.R.; Loveman, E.; Colquitt, J.L.; Owens, D.; Hogg, R.E.; Lawrenson, J.G.; Takwoingi, Y.; Lois, N. Prognostic Factors for the Development and Progression of Proliferative Diabetic Retinopathy in People with Diabetic Retinopathy. Cochrane Database Syst. Rev. 2023, 2023, CD013775.

- Cangelosi, G.; Grappasonni, I.; Nguyen, C.T.T.; Acito, M.; Pantanetti, P.; Benni, A.; Petrelli, F. Mediterranean Diet (MedDiet) and Lifestyle Medicine (LM) for Support and Care of Patients with Type II Diabetes in the COVID-19 Era: A Cross-Observational Study. Acta Biomed. Atenei Parm. 2023, 94, e2023189.

- Kanbour, S.; Ageeb, R.A.; Malik, R.A.; Abu-Raddad, L.J. Impact of Bodyweight Loss on Type 2 Diabetes Remission: A Systematic Review and Meta-Regression Analysis of Randomised Controlled Trials. Lancet Diabetes Endocrinol. 2025, 13, 294–306.

- Petrelli, F.; Cangelosi, G.; Scuri, S.; Cuc Thi Thu, N.; Debernardi, G.; Benni, A.; Vesprini, A.; Rocchi, R.; De Carolis, C.; Pantanetti, P.; et al. Food Knowledge of Patients at the First Access to a Diabetology Center. Acta Biomed. Atenei Parm. 2020, 91, 160–164.

- Aljawarneh, Y.M.; Wardell, D.W.; Wood, G.L.; Rozmus, C.L. A Systematic Review of Physical Activity and Exercise on Physiological and Biochemical Outcomes in Children and Adolescents with Type 1 Diabetes. J. Nurs. Scholarsh. 2019, 51, 337–345.

- Steiman De Visser, H.; Fast, I.; Brunton, N.; Arevalo, E.; Askin, N.; Rabbani, R.; Abou-Setta, A.M.; McGavock, J. Cardiorespiratory Fitness and Physical Activity in Pediatric Diabetes: A Systematic Review and Meta-Analysis. JAMA Netw. Open 2024, 7, e240235.

- Santos, L. The Impact of Nutrition and Lifestyle Modification on Health. Eur. J. Intern. Med. 2022, 97, 18–25.

- Amerkamp, J.; Benli, S.; Isenmann, E.; Brinkmann, C. Optimizing the Lifestyle of Patients with Type 2 Diabetes Mellitus: Systematic Review on the Effects of Combined Diet-and-Exercise Interventions. Nutr. Metab. Cardiovasc. Dis. 2025, 35, 103746.

- Mannoubi, C.; Kairy, D.; Menezes, K.V.; Desroches, S.; Layani, G.; Vachon, B. The Key Digital Tool Features of Complex Telehealth Interventions Used for Type 2 Diabetes Self-Management and Monitoring with Health Professional Involvement: Scoping Review. JMIR Med. Inf. 2024, 12, e46699.

- Jancev, M.; Vissers, T.A.C.M.; Visseren, F.L.J.; Van Bon, A.C.; Serné, E.H.; DeVries, J.H.; De Valk, H.W.; Van Sloten, T.T. Continuous Glucose Monitoring in Adults with Type 2 Diabetes: A Systematic Review and Meta-Analysis. Diabetologia 2024, 67, 798–810.

- Ferreira, R.O.M.; Trevisan, T.; Pasqualotto, E.; Chavez, M.P.; Marques, B.F.; Lamounier, R.N.; Van De Sande-Lee, S. Continuous Glucose Monitoring Systems in Noninsulin-Treated People with Type 2 Diabetes: A Systematic Review and Meta-Analysis of Randomized Controlled Trials. Diabetes Technol. Ther. 2024, 26, 252–262.

- Saetang, T.; Greeviroj, P.; Thavaraputta, S.; Santisitthanon, P.; Houngngam, N.; Laichuthai, N. The Effectiveness of Telemonitoring and Integrated Personalized Diabetes Management in People with Insulin-treated Type 2 Diabetes Mellitus. Diabetes Obes. Metab. 2024, 26, 5233–5238.

- Kiran, M.; Xie, Y.; Anjum, N.; Ball, G.; Pierscionek, B.; Russell, D. Machine Learning and Artificial Intelligence in Type 2 Diabetes Prediction: A Comprehensive 33-Year Bibliometric and Literature Analysis. Front. Digit. Health 2025, 7, 1557467.

- Yang, Z.; Tian, D.; Zhao, X.; Zhang, L.; Xu, Y.; Lu, X.; Chen, Y. Evolutionary Patterns and Research Frontiers of Artificial Intelligence in Age-Related Macular Degeneration: A Bibliometric Analysis. Quant. Imaging Med. Surg. 2025, 15, 813–830.

- Yang, Q.; Bee, Y.M.; Lim, C.C.; Sabanayagam, C.; Yim-Lui Cheung, C.; Wong, T.Y.; Ting, D.S.W.; Lim, L.-L.; Li, H.; He, M.; et al. Use of Artificial Intelligence with Retinal Imaging in Screening for Diabetes-Associated Complications: Systematic Review. eClinicalMedicine 2025, 81, 103089.

- Elmotia, K.; Abouyaala, O.; Bougrine, S.; Ouahidi, M.L. Effectiveness of AI-Driven Interventions in Glycemic Control: A Systematic Review and Meta-Analysis of Randomized Controlled Trials. Prim. Care Diabetes 2025, 19, 345–354.

- Yismaw, M.B.; Tafere, C.; Tefera, B.B.; Demsie, D.G.; Feyisa, K.; Addisu, Z.D.; Zeleke, T.K.; Siraj, E.A.; Worku, M.C.; Berihun, F. Artificial Intelligence Based Predictive Tools for Identifying Type 2 Diabetes Patients at High Risk of Treatment Non-Adherence: A Systematic Review. Int. J. Med. Inform. 2025, 198, 105858.

- Usman, T.M.; Saheed, Y.K.; Nsang, A.; Ajibesin, A.; Rakshit, S. A Systematic Literature Review of Machine Learning Based Risk Prediction Models for Diabetic Retinopathy Progression. Artif. Intell. Med. 2023, 143, 102617.

- Khokhar, P.B.; Gravino, C.; Palomba, F. Advances in Artificial Intelligence for Diabetes Prediction: Insights from a Systematic Literature Review. Artif. Intell. Med. 2025, 164, 103132.

- Senot, C.; Chandrasekaran, A.; Ward, P.T. Role of Bottom-Up Decision Processes in Improving the Quality of Health Care Delivery: A Contingency Perspective. Prod. Oper. Manag. 2016, 25, 458–476.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Pollock, D.; Peters, M.D.J.; Khalil, H.; McInerney, P.; Alexander, L.; Tricco, A.C.; Evans, C.; De Moraes, É.B.; Godfrey, C.M.; Pieper, D.; et al. Recommendations for the Extraction, Analysis, and Presentation of Results in Scoping Reviews. JBI Evid. Synth. 2023, 21, 520–532.

- Lockwood, C.; Munn, Z.; Porritt, K. Qualitative Research Synthesis: Methodological Guidance for Systematic Reviewers Utilizing Meta-Aggregation. Int. J. Evid.-Based Healthc. 2015, 13, 179–187.

- Moola, S.; Munn, Z.; Tufanaru, C.; Aromataris, E.; Sears, K.; Sfetcu, R.; Currie, M.; Qureshi, R.; Mattis, P.; Lisy, K.; et al. Chapter 7: Systematic Reviews of Etiology and Risk. In Joanna Briggs Institute Reviewer’s Manual; Aromataris, E., Munn, Z., Eds.; The Joanna Briggs Institute: North Adelaide, Australia, 2017.

- Hong, Q.N.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M.-P.; Griffiths, F.; Nicolau, B.; O’Cathain, A.; et al. The Mixed Methods Appraisal Tool (MMAT) Version 2018 for Information Professionals and Researchers. Educ. Inf. 2018, 34, 285–291.

- Camp, S.; Legge, T. Simulation as a Tool for Clinical Remediation: An Integrative Review. Clin. Simul. Nurs. 2018, 16, 48–61.

- Damschroder, L.J.; Aron, D.C.; Keith, R.E.; Kirsh, S.R.; Alexander, J.A.; Lowery, J.C. Fostering Implementation of Health Services Research Findings into Practice: A Consolidated Framework for Advancing Implementation Science. Implement. Sci. 2009, 4, 50.

- Campbell, M.; McKenzie, J.E.; Sowden, A.; Katikireddi, S.V.; Brennan, S.E.; Ellis, S.; Hartmann-Boyce, J.; Ryan, R.; Shepperd, S.; Thomas, J.; et al. Synthesis without Meta-Analysis (SWiM) in Systematic Reviews: Reporting Guideline. BMJ 2020, 368, l6890.

- Held, L.A.; Wewetzer, L.; Steinhäuser, J. Determinants of the Implementation of an Artificial Intelligence-Supported Device for the Screening of Diabetic Retinopathy in Primary Care: A Qualitative Study. Health Inform. J. 2022, 28, 14604582221112816.

- Liao, X.; Yao, C.; Jin, F.; Zhang, J.; Liu, L. Barriers and Facilitators to Implementing Imaging-Based Diagnostic Artificial Intelligence-Assisted Decision-Making Software in Hospitals in China: A Qualitative Study Using the Updated Consolidated Framework for Implementation Research. BMJ Open 2024, 14, e084398.

- Petersen, G.B.; Joensen, L.E.; Kristensen, J.K.; Vorum, H.; Byberg, S.; Fangel, M.V.; Cleal, B. How to Improve Attendance for Diabetic Retinopathy Screening: Ideas and Perspectives From People with Type 2 Diabetes and Health-Care Professionals. Can. J. Diabetes 2024, 49, 121–127.

- Roy, M.; Jamwal, M.; Vasudeva, S.; Singh, M. Physicians Behavioural Intentions towards AI-Based Diabetes Diagnostic Interventions in India. J. Public Health 2024.

- Romero-Brufau, S.; Wyatt, K.D.; Boyum, P.; Mickelson, M.; Moore, M.; Cognetta-Rieke, C. A Lesson in Implementation: A Pre-Post Study of Providers’ Experience with Artificial Intelligence-Based Clinical Decision Support. Int. J. Med. Inform. 2020, 137, 104072.

- Wewetzer, L.; Held, L.A.; Goetz, K.; Steinhäuser, J. Determinants of the Implementation of Artificial Intelligence-Based Screening for Diabetic Retinopathy: A Cross-Sectional Study with General Practitioners in Germany. Digit. Health 2023, 9, 20552076231176644.

- Wahlich, C.; Chandrasekaran, L.; Chaudhry, U.A.R.; Willis, K.; Chambers, R.; Bolter, L.; Anderson, J.; Shakespeare, R.; Olvera-Barrios, A.; Fajtl, J.; et al. Patient and Practitioner Perceptions around Use of Artificial Intelligence within the English NHS Diabetic Eye Screening Programme. Diabetes Res. Clin. Pract. 2025, 219, 111964.

- Lucero-Prisno, D.E.; Shomuyiwa, D.O.; Kouwenhoven, M.B.N.; Dorji, T.; Odey, G.O.; Miranda, A.V.; Ogunkola, I.O.; Adebisi, Y.A.; Huang, J.; Xu, L.; et al. Top 10 Public Health Challenges to Track in 2023: Shifting Focus beyond a Global Pandemic. Public Health Chall. 2023, 2, e86.

- Lucero-Prisno, D.E.; Shomuyiwa, D.O.; Kouwenhoven, M.B.N.; Dorji, T.; Adebisi, Y.A.; Odey, G.O.; George, N.S.; Ajayi, O.T.; Ekerin, O.; Manirambona, E.; et al. Top 10 Public Health Challenges for 2024: Charting a New Direction for Global Health Security. Public Health Chall. 2025, 4, e70022.

- Balasubramaniam, N.; Kauppinen, M.; Rannisto, A.; Hiekkanen, K.; Kujala, S. Transparency and Explainability of AI Systems: From Ethical Guidelines to Requirements. Inf. Softw. Technol. 2023, 159, 107197.

- Rajesh, A.E.; Davidson, O.Q.; Lee, C.S.; Lee, A.Y. Artificial Intelligence and Diabetic Retinopathy: AI Framework, Prospective Studies, Head-to-Head Validation, and Cost-Effectiveness. Diabetes Care 2023, 46, 1728–1739.

- Hasan, S.U.; Siddiqui, M.A.R. Diagnostic Accuracy of Smartphone-Based Artificial Intelligence Systems for Detecting Diabetic Retinopathy: A Systematic Review and Meta-Analysis. Diabetes Res. Clin. Pract. 2023, 205, 110943.

- Jha, D.; Durak, G.; Das, A.; Sanjotra, J.; Susladkar, O.; Sarkar, S.; Rauniyar, A.; Kumar Tomar, N.; Peng, L.; Li, S.; et al. Ethical Framework for Responsible Foundational Models in Medical Imaging. Front. Med. 2025, 12, 1544501.

- European Commission. Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 Laying down Harmonised Rules on Artificial Intelligence and Amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act) (Text with EEA Relevance) 2024. Available online: https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng (accessed on 28 July 2025).

- Ahmad, Z.; Rahim, S.; Zubair, M.; Abdul-Ghafar, J. Artificial Intelligence (AI) in Medicine, Current Applications and Future Role with Special Emphasis on Its Potential and Promise in Pathology: Present and Future Impact, Obstacles Including Costs and Acceptance among Pathologists, Practical and Philosophical Considerations. A Comprehensive Review. Diagn. Pathol. 2021, 16, 24.

- Wilkinson, D.; Savulescu, J. Cost-Equivalence and Pluralism in Publicly-Funded Health-Care Systems. Health Care Anal. 2018, 26, 287–309.

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health. WHO Guidance. Available online: https://www.who.int/publications/i/item/9789240029200 (accessed on 5 June 2025).

- Walker, D.M.; Tarver, W.L.; Jonnalagadda, P.; Ranbom, L.; Ford, E.W.; Rahurkar, S. Perspectives on Challenges and Opportunities for Interoperability: Findings From Key Informant Interviews with Stakeholders in Ohio. JMIR Med. Inf. 2023, 11, e43848.

- Garabedian, P.; Kain, J.; Emani, S.; Singleton, S.; Rozenblum, R.; Samal, L.; Mueller, S. User Requirements and Conceptual Design for an Electronic Data Platform for Interhospital Transfer Between Acute Care Hospitals: User-Centered Design Study. JMIR Hum. Factors 2025, 12, e67884.

- United Nations. Sustainable Development Goals. Available online: https://sdgs.un.org/goals (accessed on 21 July 2025).

- Gordon, D.; McKay, S.; Marchildon, G.; Bhatia, R.S.; Shaw, J. Collaborative Governance for Integrated Care: Insights from a Policy Stakeholder Dialogue. Int. J. Integr. Care 2020, 20, 3.

- Faijue, D.D.; Segui, A.O.; Shringarpure, K.; Razavi, A.; Hasan, N.; Dar, O.; Manikam, L. Constructing a One Health Governance Architecture: A Systematic Review and Analysis of Governance Mechanisms for One Health. Eur. J. Public Health 2024, 34, 1086–1094.

- Wagner, J.K.; Doerr, M.; Schmit, C.D. AI Governance: A Challenge for Public Health. JMIR Public Health Surveill. 2024, 10, e58358.

- Sharma, M.; Savage, C.; Nair, M.; Larsson, I.; Svedberg, P.; Nygren, J.M. Artificial Intelligence Applications in Health Care Practice: Scoping Review. J. Med. Internet Res. 2022, 24, e40238.

- Hassan, M.; Kushniruk, A.; Borycki, E. Barriers to and Facilitators of Artificial Intelligence Adoption in Health Care: Scoping Review. JMIR Hum. Factors 2024, 11, e48633.

- Greenhalgh, T.; Wherton, J.; Papoutsi, C.; Lynch, J.; Hughes, G.; A’Court, C.; Hinder, S.; Fahy, N.; Procter, R.; Shaw, S. Beyond Adoption: A New Framework for Theorizing and Evaluating Nonadoption, Abandonment, and Challenges to the Scale-Up, Spread, and Sustainability of Health and Care Technologies. J. Med. Internet Res. 2017, 19, e367.

- Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A Systematic Review of the Barriers to the Implementation of Artificial Intelligence in Healthcare. Cureus 2023, 15, e46454.

- Han, S.; Kang, H.S.; Gimber, P.; Lim, S. Nursing Students’ Perceptions and Use of Generative Artificial Intelligence in Nursing Education. Nurs. Rep. 2025, 15, 68.

- Chew, B.-H.; Ngiam, K.Y. Artificial Intelligence Tool Development: What Clinicians Need to Know? BMC Med. 2025, 23, 244.

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing Healthcare: The Role of Artificial Intelligence in Clinical Practice. BMC Med. Educ. 2023, 23, 689.

- Singh, K.; Prabhu, A.; Kaur, N. The Impact and Role of Artificial Intelligence (AI) in Healthcare: Systematic Review. CTMC 2025, 25. [Google Scholar] [CrossRef]

- Aravazhi, P.S.; Gunasekaran, P.; Benjamin, N.Z.Y.; Thai, A.; Chandrasekar, K.K.; Kolanu, N.D.; Prajjwal, P.; Tekuru, Y.; Brito, L.V.; Inban, P. The Integration of Artificial Intelligence into Clinical Medicine: Trends, Challenges, and Future Directions. Dis.-a-Mon. 2025, 71, 101882. [Google Scholar] [CrossRef] [PubMed]

- Corrêa, N.K.; Galvão, C.; Santos, J.W.; Del Pino, C.; Pinto, E.P.; Barbosa, C.; Massmann, D.; Mambrini, R.; Galvão, L.; Terem, E.; et al. Worldwide AI Ethics: A Review of 200 Guidelines and Recommendations for AI Governance. Patterns 2023, 4, 100857. [Google Scholar] [CrossRef]

- Ratti, M.; Ceriotti, D.; Rescinito, R.; Bibi, R.; Panella, M. Does Robotic Assisted Technique Improve Patient Utility in Total Knee Arthroplasty? A Comparative Retrospective Cohort Study. Healthcare 2024, 12, 1650. [Google Scholar] [CrossRef] [PubMed]

- Uwimana, A.; Gnecco, G.; Riccaboni, M. Artificial Intelligence for Breast Cancer Detection and Its Health Technology Assessment: A Scoping Review. Comput. Biol. Med. 2025, 184, 109391. [Google Scholar] [CrossRef] [PubMed]

- Sguanci, M.; Mancin, S.; Gazzelloni, A.; Diamanti, O.; Ferrara, G.; Morales Palomares, S.; Parozzi, M.; Petrelli, F.; Cangelosi, G. The Internet of Things in the Nutritional Management of Patients with Chronic Neurological Cognitive Impairment: A Scoping Review. Healthcare 2024, 13, 23. [Google Scholar] [CrossRef]

- Sguanci, M.; Palomares, S.M.; Cangelosi, G.; Petrelli, F.; Sandri, E.; Ferrara, G.; Mancin, S. Artificial Intelligence in the Management of Malnutrition in Cancer Patients: A Systematic Review. Adv. Nutr. 2025, 16, 100438. [Google Scholar] [CrossRef]

- Hosain, M.N.; Kwak, Y.-S.; Lee, J.; Choi, H.; Park, J.; Kim, J. IoT-Enabled Biosensors for Real-Time Monitoring and Early Detection of Chronic Diseases. Phys. Act. Nutr. 2024, 28, 60–69. [Google Scholar] [CrossRef]

- Pantanetti, P.; Cangelosi, G.; Morales Palomares, S.; Ferrara, G.; Biondini, F.; Mancin, S.; Caggianelli, G.; Parozzi, M.; Sguanci, M.; Petrelli, F. Real-World Life Analysis of a Continuous Glucose Monitoring and Smart Insulin Pen System in Type 1 Diabetes: A Cohort Study. Diabetology 2025, 6, 7.

- Global Burden of Disease Collaborative Network. Global Burden of Disease Study 2021 (GBD 2021) Results. 2024. Available online: https://www.healthdata.org/sites/default/files/2024-05/GBD_2021_Booklet_FINAL_2024.05.16.pdf (accessed on 5 June 2025).

- Subramanian, M.; Wojtusciszyn, A.; Favre, L.; Boughorbel, S.; Shan, J.; Letaief, K.B.; Pitteloud, N.; Chouchane, L. Precision Medicine in the Era of Artificial Intelligence: Implications in Chronic Disease Management. J. Transl. Med. 2020, 18, 472.

- Shao, H.; Shi, L.; Lin, Y.; Fonseca, V. Using Modern Risk Engines and Machine Learning/Artificial Intelligence to Predict Diabetes Complications: A Focus on the BRAVO Model. J. Diabetes Its Complicat. 2022, 36, 108316.

- Rodrigues, I.B.; Fahim, C.; Garad, Y.; Presseau, J.; Hoens, A.M.; Braimoh, J.; Duncan, D.; Bruyn-Martin, L.; Straus, S.E. Developing the Intersectionality Supplemented Consolidated Framework for Implementation Research (CFIR) and Tools for Intersectionality Considerations. BMC Med. Res. Methodol. 2023, 23, 262.

- Wienert, J.; Zeeb, H. Implementing Health Apps for Digital Public Health—An Implementation Science Approach Adopting the Consolidated Framework for Implementation Research. Front. Public Health 2021, 9, 610237.

- Scanzera, A.C.; Beversluis, C.; Potharazu, A.V.; Bai, P.; Leifer, A.; Cole, E.; Du, D.Y.; Musick, H.; Chan, R.V.P. Planning an Artificial Intelligence Diabetic Retinopathy Screening Program: A Human-Centered Design Approach. Front. Med. 2023, 10, 1198228.

- Pan, M.; Li, R.; Wei, J.; Peng, H.; Hu, Z.; Xiong, Y.; Li, N.; Guo, Y.; Gu, W.; Liu, H. Application of Artificial Intelligence in the Health Management of Chronic Disease: Bibliometric Analysis. Front. Med. 2025, 11, 1506641.

- Serin, O.; Yıldırım, B.F.; Duman, F.; Ercorumlu, K.; Yavas, R.; Tasar, M.A.; Celik, M. Physician Perspectives on Artificial Intelligence in Healthcare: A Cross-Sectional Study of Child-Focused Care in a Turkish Tertiary Hospital. Int. J. Med. Inform. 2025, 203, 106003.

- Gumus, E.; Alan, H. Perspectives of Physicians, Nurses, and Patients on the Use of Artificial Intelligence and Robotic Nurses in Healthcare. Int. Nurs. Rev. 2025, 72, e70017.

- Vanamali, D.R.; Gara, H.K.; Dronamraju, V.A.C. Evaluation of Knowledge, Attitudes, and Practices among Healthcare Professionals toward Role of Artificial Intelligence in Healthcare. J. Assoc. Phys. India 2025, 73, e6–e12.

- Singareddy, S.; Sn, V.P.; Jaramillo, A.P.; Yasir, M.; Iyer, N.; Hussein, S.; Nath, T.S. Artificial Intelligence and Its Role in the Management of Chronic Medical Conditions: A Systematic Review. Cureus 2023, 15, e46066.

- Nair, P.M.; Silwal, K.; Kodali, P.B.; Fogawat, K.; Binna, S.; Sharma, H.; Tewani, G.R. Impact of Holistic, Patient-Centric Yoga & Naturopathy-Based Lifestyle Modification Program in Patients with Musculoskeletal Disorders: A Quasi-Experimental Study. Adv. Integr. Med. 2023, 10, 184–189.

- Baines, R.; Bradwell, H.; Edwards, K.; Stevens, S.; Prime, S.; Tredinnick-Rowe, J.; Sibley, M.; Chatterjee, A. Meaningful Patient and Public Involvement in Digital Health Innovation, Implementation and Evaluation: A Systematic Review. Health Expect. 2022, 25, 1232–1245.

- Wong, A.; Berenbrok, L.A.; Snader, L.; Soh, Y.H.; Kumar, V.K.; Javed, M.A.; Bates, D.W.; Sorce, L.R.; Kane-Gill, S.L. Facilitators and Barriers to Interacting with Clinical Decision Support in the ICU: A Mixed-Methods Approach. Crit. Care Explor. 2023, 5, e0967.

- Scuri, S.; Tesauro, M.; Petrelli, F.; Argento, N.; Damasco, G.; Cangelosi, G.; Nguyen, C.T.T.; Savva, D.; Grappasonni, I. Use of an Online Platform to Evaluate the Impact of Social Distancing Measures on Psycho-Physical Well-Being in the COVID-19 Era. Int. J. Environ. Res. Public Health 2022, 19, 6805.

- Trinh, S.; Skoll, D.; Saxon, L.A. Health Care 2025: How Consumer-Facing Devices Change Health Management and Delivery. J. Med. Internet Res. 2025, 27, e60766.

- Awad, A.; Trenfield, S.J.; Pollard, T.D.; Ong, J.J.; Elbadawi, M.; McCoubrey, L.E.; Goyanes, A.; Gaisford, S.; Basit, A.W. Connected Healthcare: Improving Patient Care Using Digital Health Technologies. Adv. Drug. Deliv. Rev. 2021, 178, 113958.

- Neborachko, M.; Pkhakadze, A.; Vlasenko, I. Current Trends of Digital Solutions for Diabetes Management. Diabetes Metab. Syndr. Clin. Res. Rev. 2019, 13, 2997–3003.

- Yang, J.; Amrollahi, A.; Marrone, M. Harnessing the Potential of Artificial Intelligence: Affordances, Constraints, and Strategic Implications for Professional Services. J. Strateg. Inf. Syst. 2024, 33, 101864.

- Castiello, T. The Benefits and Challenges of Digitally-Enabled Cardiology. Br. J. Hosp. Med. 2025, 86, 1–6.

- Warraich, H.J.; Tazbaz, T.; Califf, R.M. FDA Perspective on the Regulation of Artificial Intelligence in Health Care and Biomedicine. JAMA 2025, 333, 241.

- Geukes Foppen, R.J.; Gioia, V.; Gupta, S.; Johnson, C.L.; Giantsidis, J.; Papademetris, M. Methodology for Safe and Secure AI in Diabetes Management. J. Diabetes Sci. Technol. 2025, 19, 620–627.

- Gundlack, J.; Negash, S.; Thiel, C.; Buch, C.; Schildmann, J.; Unverzagt, S.; Mikolajczyk, R.; Frese, T.; PEAK Consortium. Artificial Intelligence in Medical Care—Patients’ Perceptions on Caregiving Relationships and Ethics: A Qualitative Study. Health Expect. 2025, 28, e70216.

- Gundlack, J.; Thiel, C.; Negash, S.; Buch, C.; Apfelbacher, T.; Denny, K.; Christoph, J.; Mikolajczyk, R.; Unverzagt, S.; Frese, T. Patients’ Perceptions of Artificial Intelligence Acceptance, Challenges, and Use in Medical Care: Qualitative Study. J. Med. Internet Res. 2025, 27, e70487.

- Witkowski, K.; Okhai, R.; Neely, S.R. Public Perceptions of Artificial Intelligence in Healthcare: Ethical Concerns and Opportunities for Patient-Centered Care. BMC Med. Ethics 2024, 25, 74.

- Alsaleh, A. The Impact of Technological Advancement on Culture and Society. Sci. Rep. 2024, 14, 32140.

- Fatoum, H.; Hanna, S.; Halamka, J.D.; Sicker, D.C.; Spangenberg, P.; Hashmi, S.K. Blockchain Integration With Digital Technology and the Future of Health Care Ecosystems: Systematic Review. J. Med. Internet Res. 2021, 23, e19846.

- Xie, Y.; Zhai, Y.; Lu, G. Evolution of Artificial Intelligence in Healthcare: A 30-Year Bibliometric Study. Front. Med. 2025, 11, 1505692.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).