Artificial Intelligence for the Analysis of Biometric Data from Wearables in Education: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

- RQ1: How can biometric data collected via wearable devices and analyzed through AI algorithms provide reliable information in educational contexts?

- RQ2: How can these frameworks enable continuous personalization in education?

- Wearable sensors that are unobtrusive and accessible;

- Biometrics;

- AI algorithms;

- Stated in the abstract or introduction that the scope of the paper was within education.

(TITLE-ABS-KEY (“AI” OR “Artificial Intelligence” OR “Machine Learning” OR “Deep Learning” OR “Reinforcement Learning” OR “Neural Network*”) AND TITLE-ABS-KEY (“wearable” OR “wearable device*” OR “wearable sensor*” OR “wearable technology*” OR “smart wearable*” OR “biometric wearable*”) AND TITLE-ABS-KEY (“education*” OR “school*” OR “college*” OR “universit*” OR “lecture*” OR “student*” OR “learning environment*” OR “classroom*” OR “teacher*” OR “curriculum*” OR “pedagog*” OR “Intelligent tutoring system”) AND NOT TITLE-ABS-KEY ((“health*” AND NOT “mental”) OR “medicine*” OR “medical*” OR “patient*” OR “clinical*” OR “rehabilitation*” OR “therapy*” OR “sport*” OR “fitness*” OR “nursing*” OR “physiotherapy*”))

- a. The authors did not collect biometric data.

- b. The study did not involve AI algorithms.

3. Results

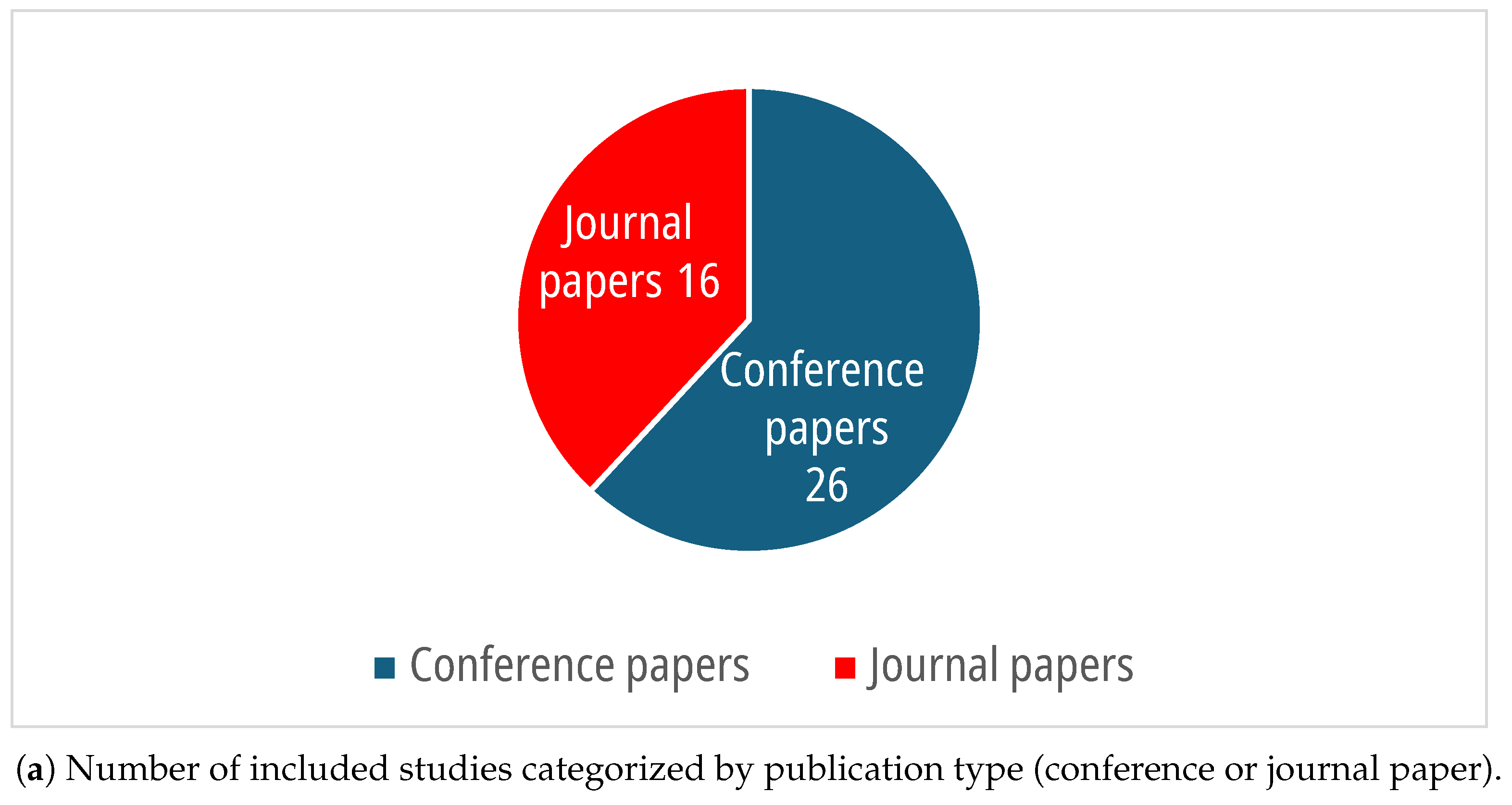

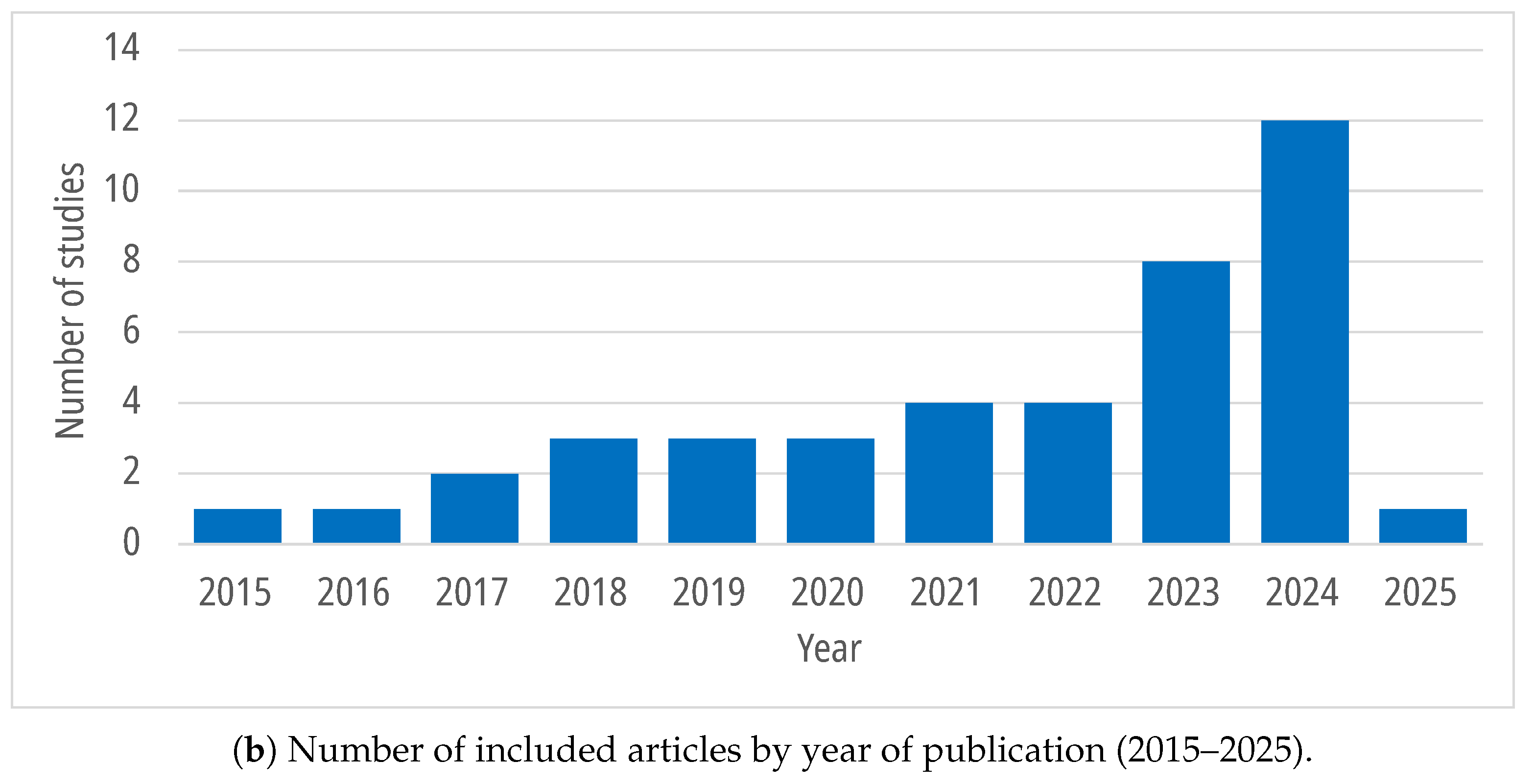

3.1. Characteristics of Included Studies

- Objective of the study;

- Sample size and description;

- Collected data and used devices;

- Proposed task;

- Tools and models used, including AI algorithms and datasets;

- Best accuracy score;

- Best F1 score.
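
For reference, the two headline metrics extracted from each study can be computed from confusion-matrix counts (TP, TN, FP, FN, as listed in the Abbreviations). The sketch below uses the standard binary definitions; the function names and the example counts are illustrative assumptions and are not taken from any included study.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that are correct."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion counts must not all be zero")
    return (tp + tn) / total


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical, imbalanced example: 10 positive windows among 100.
acc = accuracy(tp=5, tn=88, fp=2, fn=5)
f1 = f1_score(tp=5, fp=2, fn=5)
print(f"accuracy={acc:.3f}, F1={f1:.3f}")
```

With imbalanced labels, as in this made-up example, accuracy can remain high while F1 is much lower, which is one reason why studies reporting only accuracy are harder to compare.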

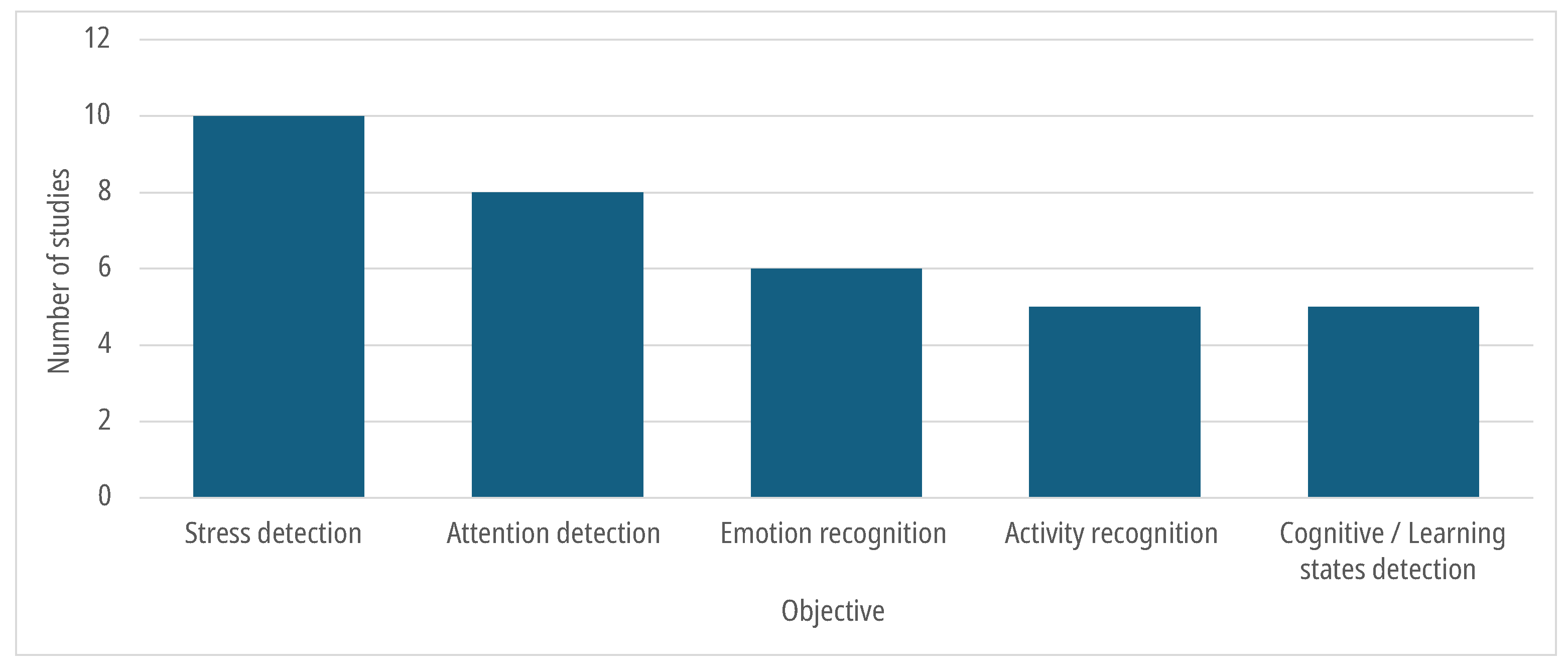

3.2. Objectives and Tasks

| Study | Objective | Sample | Data and Devices | Task | Tools | Best Accuracy | Best F1 |

|---|---|---|---|---|---|---|---|

| [25] | Concentration estimation | 13 students at a Japanese university | Accelerometer and gyroscope data (MetamotionS), heart rate (Fitbit), face orientation and eye gaze (webcam) | Watching two video lectures on YouTube, with participants reporting their feelings every 90 s | Gradient boosting, decision tree, logistic regression, random forest, and SVM (classification) | 74.4% random forest with user-dependent cross-validation | Not provided |

| [26] | Affective and motivational states measurement | 22 graduate and undergraduate students in Australia | EEG along with performance metrics (Emotiv EPOCX), eye tracking (Tobii Nano Pro), GSR (Empatica E4) | Pretest on the previous knowledge, essay reading and writing, post-task assessment | ConvTran (classification) | Metacognitive processes: 74.1% (EEG performance metrics), low cognitive processes: 91.5% (EEG), high cognitive processes: 92.2% (EEG) | Metacognitive processes: 73.5% (EEG performance metrics), low cognitive processes: 91.5% (EEG), high cognitive processes: 92.2% (EEG) |

| [24] | Stress level detection | 30 students at several universities | PPG (Polar Verity Sense), ECG (BMD101), EEG (Mindwave Mobile) | Sudoku solving task, divided into three scenarios, followed by self-assessment of stress level | StressNeXt, LRCN, self-supervised CNN (classification) | 93.42% LRCN with ECG data | 88.11% LRCN with ECG data |

| [27] | Activity recognition | 8 neurodiverse students | Accelerometer and gyroscope data, heart rate (Google Wear OS) | Reading and follow up Q&A, typing, prompt writing, reading and follow up Q&A | Logistic regression, MLP, CRNN, single LSTM, federated multi-task hierarchical attention model (FATHOM) (classification) | 97.5% CRNN, Leave-one-out cross-validation | 91.8% FATHOM, Leave-one-out cross validation |

| [28] | Cognitive states detection (focused attention and working memory skills level) | 86 undergraduate students | EEG (Emotiv EPOC) | CogniFit test, which stimulates perception, memory, attention, and other cognitive states | Logistic regression (feature selection), NN, linear SVC (classification) | 90% linear SVC, focused attention | Not provided |

| [29] | English communication enhancement | Not provided | Temperature sensors, blood pressure sensors, pulse oximeter, heartbeat sensors, ECG sensors, EEG sensors | Not provided | kNN, NB, SVM, SVM with an improved satin bowerbird optimization algorithm (SVM-ISBBO) (classification) | 92.34% SVM-ISBBO | Not provided |

| [30] | Attention and interest level detection | 30 students | PPG, acceleration, and gyroscope data (second generation Moto 360 smartwatch) | Two lectures, followed by administration of a questionnaire | Decision tree, NN, SVM, naïve Bayes (classification) | 98.99% Decision tree, interest level, 95.79% SVM, difficulty level | Not provided |

| [31] | Activity recognition (reading/relaxing with open eyes) | 14 college students | EEG (Muse portable brainwave reader) | MATH, SHUT (eyes), READ (and answer test), OPEN (relaxation with open eyes) | K-means (classification) | 71% K-means (K = 12) | Not provided |

| [32] | Teacher activity and social plane prediction of interaction | One teacher | Eye tracking, EEG, accelerometers, subjective video and audio | Lecture simulation: explanation, questioning, group work, whole-class game | Random forest, SVM, gradient boosted tree (classification) | 67.3% teacher activity, random forest (Markov chain, top 80 features), 89.9% social plane, gradient boosted tree (top 81 features) | Not provided |

| [33] | Classification of learning events, personalized learning system implementation | 15 healthy participants | EEG (Emotiv EPOC), Oculus | Wisconsin Card Sorting Test (WCST) (classification), 2D video watching, 3D video watching, questionnaire administration (personalization) | SVM (Gaussian kernel), CNN, deep spatiotemporal convolutional bidirectional LSTM network (DSTCLN) (classification), Q-learning (personalization) | 84.81% DSTCLN | Not provided |

| [34] | Learning states and learning analytics analysis | Two groups: 32 third-year high school students and 20 first-year high school students in Hong Kong | Heart rate, calorie consumption, accelerometer and gyroscope data (Fitbit Versa) | Wearing a smartwatch during school time and, preferably, all the time for one week. Reporting learning activities periodically through a mobile app | LSTM, hybrid algorithm integrating LSTM and CNN (classification) | 95.6% LSTM | 80% LSTM |

| [35] | Computing heart rate variability from heart rate and step count | 25 university students, Auckland | HRV ECG-based (Polar H10), HR PPG-based (Fitbit Sense) | Three days monitoring on weekdays from 9 am to 4 pm. Answering a questionnaire about worry, stress, and anxiety | Naïve Bayes, linear and logistic regression, decision tree, random forest, LSTM (classification) | Not provided | Not provided |

| [36] | Attention level prediction | 18 students aged 12–15 of a middle school in Chongqing, China | BVP, IBI, GSR, skin temperature (Empatica E4), EEG | Learning video watching, student action recording | SVM, decision tree, random forest, naïve Bayes, Bayesian network, logistic regression, kNN (classification) | 75.86% SVM | 70.1% SVM |

| [37] | Attention level detection | 100 participants | EEG (Neurosky device) | Video lesson | CART, XGBOOST (feature selection), K-means (clustering), SVM linear kernel, logistic regression, ridge Regression (classification) | 91.68% SVM | 91.53% SVM |

| [38] | Learning immersion experience evaluation | 37 college students in China | VR glasses (Pico Neo 2), EEG (BrainLink headband), PPG (KS-CM01 finger-clip) | Questions reading without answers, VR video about the city of Guilin and online teaching video on English words, questionnaire administration | SVM-RBF (radial basis function) (classification) | 89.72% SVM | Not provided |

| [39] | Self-assessed concentration detection | 16 students from Haaga-Helia University of Applied Sciences in Helsinki | HR, GSR, skin temperature, accelerometer data (Empatica E3) | Wearing device during home study, self-reporting concentration through mobile app | Boosted regression tree, CNN (classification) | 99.9% Boosted regression tree, pseudo-labeled set | Not provided |

| [40] | Fatigue level detection | 23 healthy undergraduate students | BVP, GSR, EEG-related features (Empatica E4) | Auditory Oddball (AO) test | Random forest (feature selection), multiple linear regression (classification) | 91% MLR | Not provided |

| [23] | Stress detection for autistic college students | 20 (10 neurotypical, 10 autistic) college students in the USA | Heart rate, sleep, GSR, temperature and accelerometer (Fitbit), step count, GPS location, sound intensity and light data (phone sensors) | Pre-interview, wearing a Fitbit during regular daily activities for at least one week, post-interview | Information Sieve algorithm (to label unlabeled data), logistic regression, kNN, SVM linear kernel, NN (classification) | 70% SVM | Not provided |

| [41] | Perceived satisfaction, usefulness, and performance estimation | 31 university students forming 6 groups | GSR, BVP, HR, skin temperature (Empatica E4) | Wearing device during each class, survey filling | GSR explorer (noise removal), random forest, SVM with linear, radial and polynomial kernels (classification) | Not provided | Not provided |

| [42] | Emotional state detection | 30 people from lectures and/or workshops in China | Heart rate, acceleration | Wearing device during 5 days of lectures/workshops | Decision tree, kNN, logistic regression, random forest, multilayer perceptron, SVM with linear, radial and polynomial kernels, gradient boost, XGBoost, LSTM (classification) | Activation: 89.53% random forest. Tiredness: 95.14% gradient boosting. Pleasant feelings: 91.65% random forest, gradient boosting. Quality: 93.13% gradient boosting. Understanding: 93.80% XGBoost | Not provided |

| [43] | Stress detection | 9 participants | GSR (custom-built device), heart rate (LG smartwatch and Polar H7) | Hand in ice (S), singing (S), game (S), stroop (S), math (S), light conversation (NS), homework (NS), emails (NS), eating (NS) | Correlation-based feature subset evaluation (feature selection), naïve Bayes, SVM, logistic regression, random forest (classification) | Not provided | Intended stress: 59.2% naïve Bayes. Self-reported stress: 78.8% random forest |

| [44] | Emotion detection | 4 students | Heart beat, step count (Xiaomi MIband 1 S) | Wearing a Xiaomi MIband for varying periods of time | SVM (classification) | Fusion model: 92.02% user 1, 94.07% user 2, 93.36% user 3, 96.81% user 4 | Not provided |

| [45] | Degree of retention and subjective difficulty detection | 8 healthy males among college students and social workers | Eye potentials, acceleration, and angular acceleration (JINS MEME), body temperature, RRI, LF/HF, HR, accelerations (MyBeat) | 210 English vocabulary questions from TOEIC, self-reporting degree of retention and subjective difficulty | Not provided | 81% LOSO and cross-validation | Not provided |

| [46] | Activities monitoring | 44% of a total of 18 undergraduate students of Computer Engineering | Accelerometer and gyroscope data, heart rate, pedometer, skin temperature, and calories (MSBand) | Activities monitoring for 8 weeks, self-report by the participants | MLP, naïve Bayes, J48, random forest, JRIP (classification) | 87.2% random forest | Not provided |

| [47] | Stress level recognition | 10 students of the Faculty of electrical engineering Tuzla | ECG, GSR | Relax, oral presentation, written exam | SVM linear kernel, linear discriminant analysis, ensemble, kNN, J4.8 (classification) | 91% SVM ECG and GSR | Not provided |

| [48] | Perceived difficulty level recognition and success prediction | 27 individuals | EEG (Emotiv EPOC), ECG, EMG (Shimmer v2) | English Text, 20 questions from Oxford Quick Placement Test | kNN (K = 1, 3, 5) SVM, linear and radial basis function kernel (LSVM, SVM-RBF), linear discriminant analysis (LDA), decision trees (DT) (classification) | 81.92% LSVM EEG-MFCC [0.5–40] mel frequency cepstral coefficients | 74.21% LSVM EEG-MFCC [0.5–40] |

| [49] | Critical thinking detection | Engineering undergraduate students | EEG (Muse headband) | Detecting false and irrelevant information from a video | SVM (linear, quadratic, cubic, medium Gaussian, coarse Gaussian), kNN, NB, decision tree (classification) | 100% | Not provided |

| [50] | Stress classification | 23 engineering students | EEG (Emotiv EPOC), GSR, skin temperature, HR (Empatica E4) | MIST (Montreal Imaging Stress Task) | Random forest, kNN (classification) | 99.98% random forest | Not provided |

| [51] | Stress detection | 21 participants of an algorithmic programming contest | Acceleration, PPG, GSR, skin temperature (Empatica E4) | Wearing device during free day, lectures and contest session | PCA and LDA, PCA and SVM (radial), logistic regression, random forest, multilayer perceptron (classification) | 92.15% logistic regression (HR and GSR), multilayer perceptron (HR, GSR, and ACC) | Not provided |

| [52] | User/device recognition, class/break recognition, estimating self-reported affect and mood state | 42 students and 2 professors from University of Italian-speaking Switzerland | GSR, BVP, acceleration, skin temperature (Empatica E4), heart rate derived from BVP | Wearing device during 26 classes (including exams), self-reporting lifestyle habits | Random forest, light gradient boosting machine (LGBM), spectro-temporal residual network (STResNet) (classification) | 56.63% STResNet (user/device), 90.8% LGBM (class/break) | 49% STResNet (user/device), 72% STResNet (class/break) |

| [53] | Cognitive state detection | 127 undergraduate university students (each day for 6 weeks) | EEG (Emotiv EPOC) | CogniFit test | Logistic regression (LR), NNs, SVMs, random forest, LSTM, ConvLSTM (classification) | RF: engagement (92.1%). LR: instantaneous attention (95%), focused attention (98%), working memory (94%), visual perception (95%). NN: planning (95.6%), shifting (95.6%) | Not provided |

| [54] | Physical, social and cognitive stressor identification | 26 university students | ECG (smartshirt), HRV extracted from ECG (Kubios Scientific software, unknown version), timestamps of activities (Empatica E4) | Cold pressor (physical), Trier Social Stress Test (social), Seated Stroop task (cognitive), State-Trait Anxiety Inventory (self-reported state anxiety) | SVM with linear kernel, random forest trees, naïve Bayes, kNN (classification) | 79.1% SVM (multi-class, 10-fold CV) | Not provided |

| [55] | Student grades prediction, considering the students’ stress factors | 10 students, augmented to 7680 students through data augmentation | GSR, Skin Temperature, Heart Rate (Empatica E4) | Students wore the device during three exams | Physionet dataset, CNN, decision tree regressor, support vector regressor (SVR), KNN regressor, random forest regressor (classification) | Not provided | Not provided |

| [56] | Developing an LSTM-based emotion recognition system | 30 participants | Respiration, GSR, ECG, EMG, skin temperature, and BVP | Watching relaxing, boring, amusing, and scary videos | CASE dataset, LSTM (classification) | Not provided | 95.1% LSTM, incorporating all eight sensing modalities |

| [57] | Stress level analysis | 10 university students | Physionet dataset, GSR, skin temperature (Empatica E4) | Students wore the device during three exams | SVM, KNN, 10-fold cross-validation (classification) | 70% KNN | 80% KNN |

| [58] | Providing educators with real-time insights into student engagement and cognitive responses | Not provided | Eye-tracking, typing behavior, heart rate, GSR, mouse movements, and click patterns | The data were collected during online exams | Distributed machine learning (DML), residual network (ResNet) (classification) | 85.7% ResNet + DML | Not provided |

| [59] | Prediction of depression, stress, and anxiety | 700 students at Notre Dame university in 2015–2017 period, dropped to 300 in the 2017–2019 period | Step counts, active minutes, heart rate, sleep metrics (Fitbit), bad habits, personal inventory, education, exercise, health, origin, personal information, sex, and sleep (self-reported survey) | Data collection during academic life | NetHealth dataset, Multitask learning (MTL), random forest, XGBoost, LSTM (classification) | Not provided | Not provided |

| [60] | Emotion recognition | 15 participants aged between 24 and 29 | GSR, respiration, skin temperature, weight | Exposure to four distinct emotional states: baseline, stress, amusement, and meditation, all of which were labeled accordingly | WESAD dataset, recursive feature elimination in random forest (REF-RF), through 10-fold cross-validation (feature selection), EmoMA-Net (classification) | 99.66% EmoMA-Net | 98.43% EmoMA-Net |

| [61] | PPG data generation | 10 university students | PPG signal | Students wore the device during three exams | Physionet dataset, conditional probabilistic auto-regressive (CPAR) model (classification) | Not applicable | Not applicable |

| [62] | Stress detection | 15 participants aged between 24 and 29 | ECG, GSR, EMG, respiration, skin temperature, and three-axis acceleration (RespiBAN), blood volume pulse (BVP), GSR, body temperature, and three-axis acceleration (Empatica E4) | Exposure to four distinct emotional states: baseline, stress, amusement, and meditation, all of which were labeled accordingly | WESAD dataset, extra tree classifier (feature selection), XGBoost, fine-tuning (classification) | Not provided | 96% fine-tuned XGBoost |

| [63] | Engagement recognition across classrooms, presentations and workplaces, under a unified methodological framework | 24 university students (SEED dataset), 10 audience members across multiple presentations (APSYNC dataset), 14 academic workers (Workplace dataset) | GSR (Empatica E4) | Wearing the device during nine lectures (SEED dataset), presentations (APSYNC dataset), and various tasks over 28 days (Workplace dataset) | SEED dataset, APSYNC dataset, Workplace dataset, 27 machine learning models, 5-fold cross-validation, Leave-One-Participant-Out (LOPO) and Leave-One-Session-Out (LOSO), single-dataset training, multi-dataset training, Leave-One-Dataset-Out (LODO) cross-validation, impurity-based feature importance analysis (classification) | 93.4% APSYNC dataset, single-dataset training, 5-fold validation | Not provided |

| [64,65] | Emotion recognition into human–computer interaction | 15 participants aged between 24 and 29 (WESAD), Not provided (self-collected dataset) | ECG | Exposure to four distinct emotional states: baseline, stress, amusement, and meditation, all of which were labeled accordingly (WESAD), Not provided (self-collected dataset) | WESAD dataset, CNN (classification) | 87.90% (WESAD) | 87.71% (WESAD) |
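
Several rows in the table report scores under subject-wise validation protocols (leave-one-out, LOSO, LOPO), while others use user-dependent or k-fold cross-validation. As a minimal sketch of what a leave-one-subject-out split does, the following pure-Python generator keeps every window of the held-out participant out of the training set. The function name and the toy subject labels are hypothetical and do not reproduce the code of any included study.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    by_subject: Dict[str, List[int]] = defaultdict(list)
    for index, subject in enumerate(subject_ids):
        by_subject[subject].append(index)
    for held_out, test_idx in by_subject.items():
        # Training data is every window that belongs to a different subject.
        train_idx = [i for s, idx in by_subject.items() if s != held_out for i in idx]
        yield held_out, train_idx, test_idx


# Hypothetical recording: six signal windows from three participants.
subjects = ["s1", "s1", "s2", "s2", "s3", "s3"]
folds = list(leave_one_subject_out(subjects))
for held_out, train_idx, test_idx in folds:
    print(held_out, "train:", train_idx, "test:", test_idx)
```

Because windows from the same person are strongly correlated, scores obtained with subject-wise splits and scores obtained with user-dependent splits are not directly comparable.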

3.3. Bias Assessment

3.4. AI Algorithms

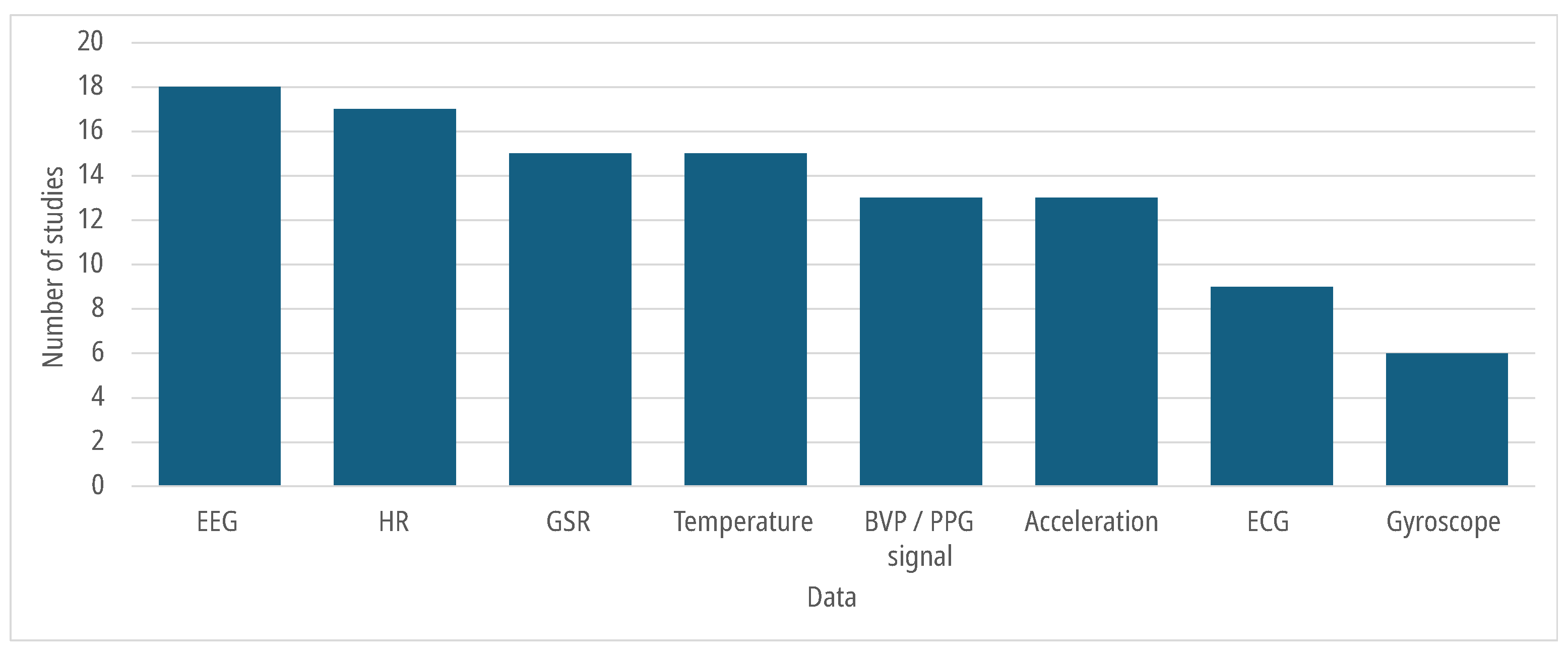

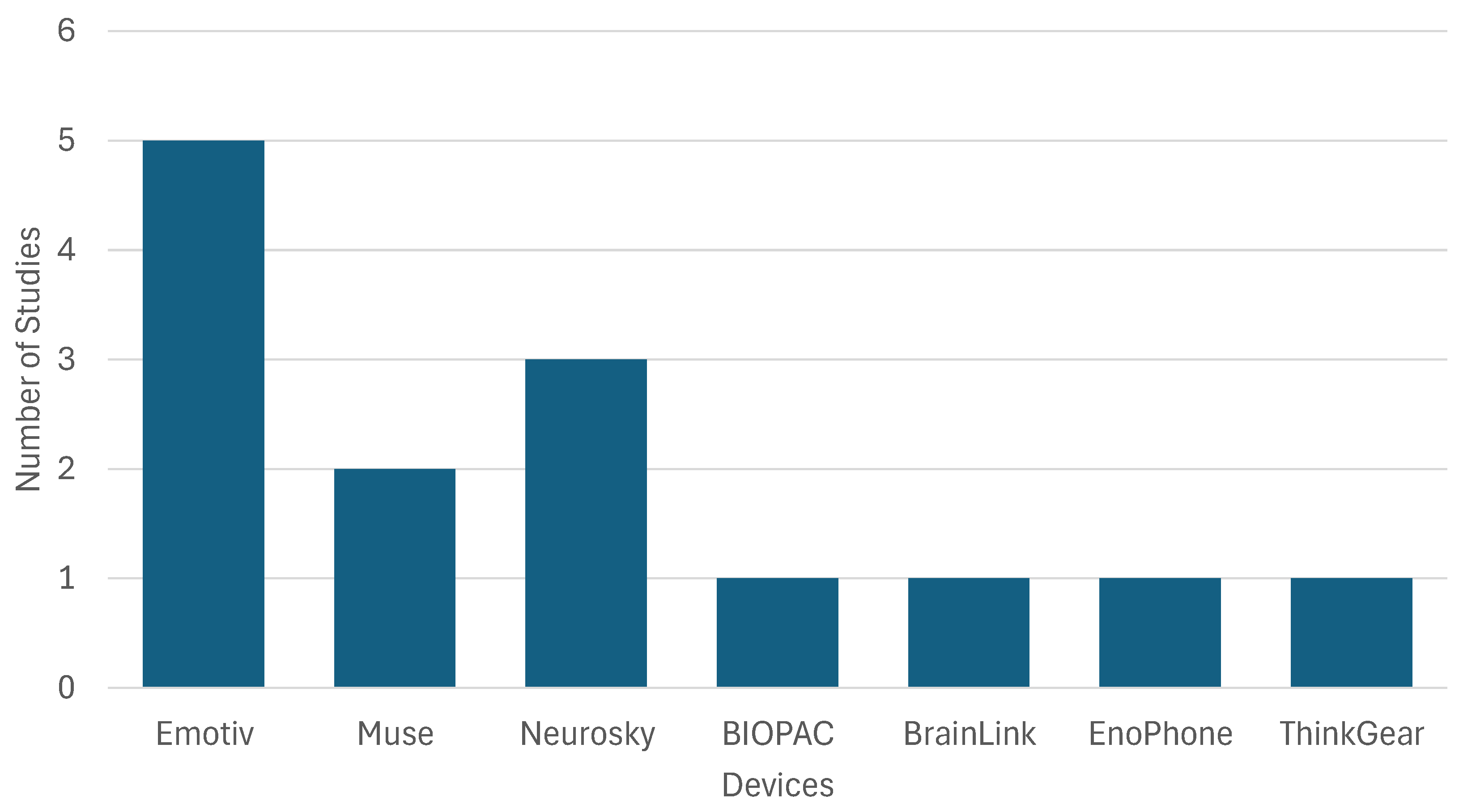

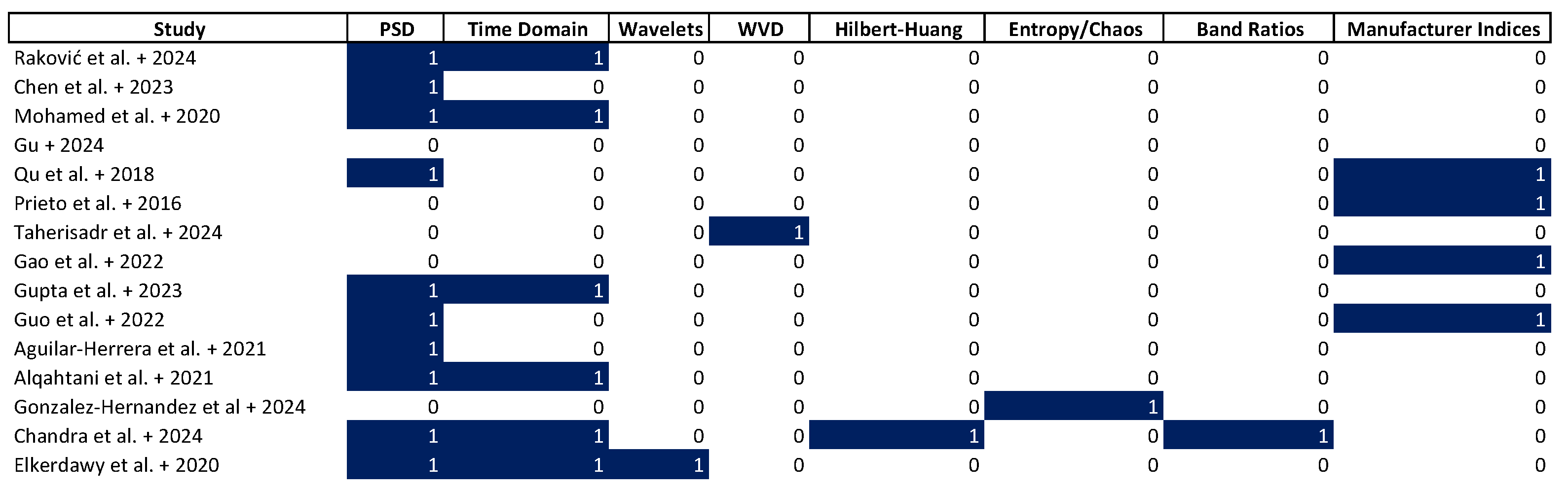

3.5. Devices and Collected Data

3.6. Multi-Model Approach

3.7. Datasets

3.8. Personalized Learning

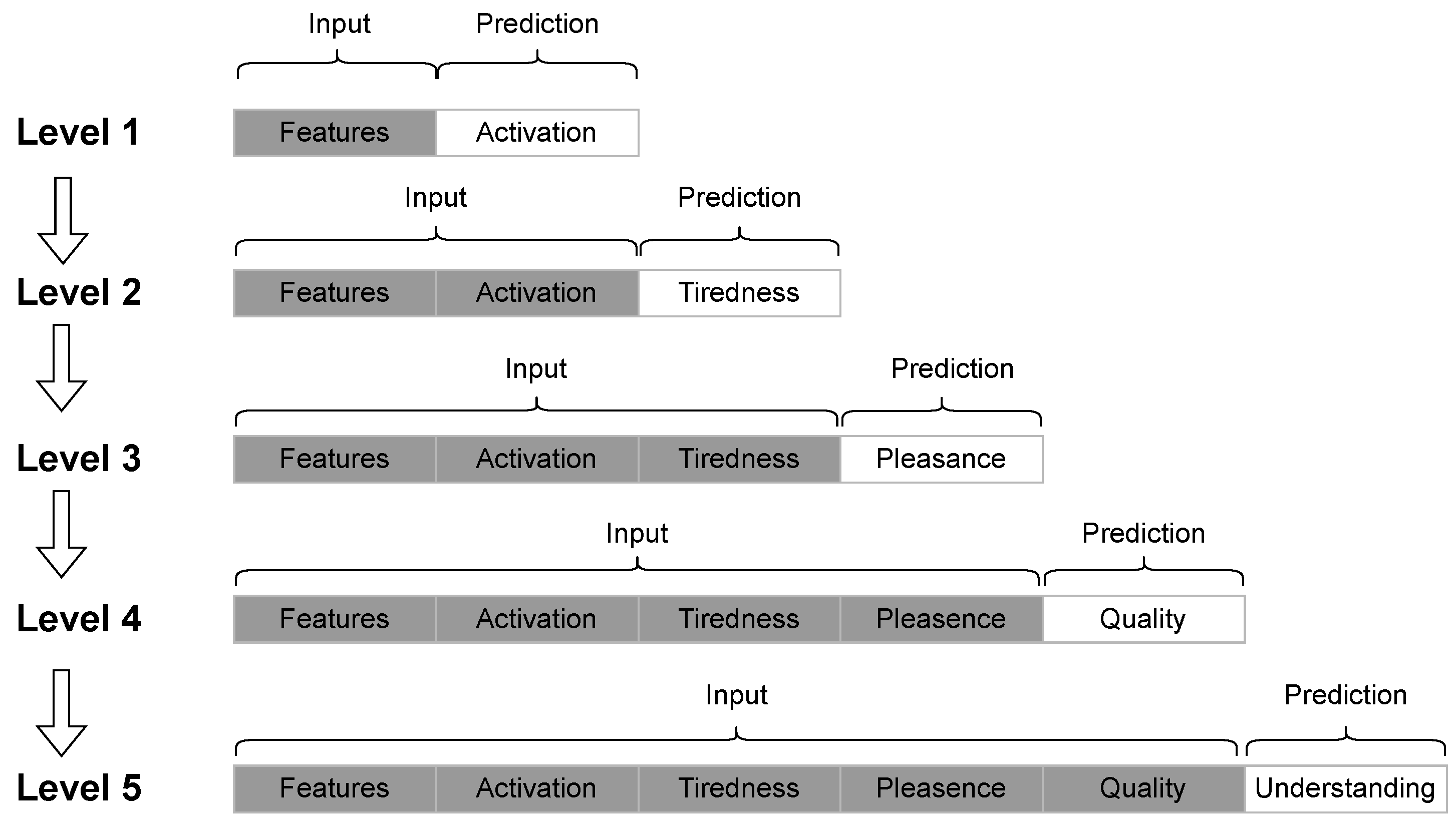

3.9. ERUDITE

- Give a break.

- Enable VR (Virtual Reality).

- Disable VR (Virtual Reality).

- Change the content of the presentation.

- No change.

3.10. Online Processing

4. Discussion

4.1. Strengths, Weaknesses, and Future Opportunities of the Considered Studies

4.2. Strengths and Weaknesses of This Review

4.3. Answers to Research Questions

- RQ1 How can biometric data collected via wearable devices and analyzed through AI algorithms provide reliable information in educational contexts?

- Answer: The included studies show that AI applied to wearable biosignals yields reliable indicators of stress, attention, cognitive engagement, perceived difficulty, fatigue, and learning-relevant activities under classroom-proximate tasks. For stress, models trained on ECG and HRV, GSR, and PPG reached high accuracy in validated protocols and authentic settings. For attention and engagement, wearable and EEG-based models achieved strong performance during lectures, videos, and cognitive tests, and high rates for multiple instantaneous and sustained attention constructs when using EEG with traditional and deep models. Human activity recognition relevant to classroom orchestration and inclusive support also performed well. Together, the results of this review indicate that wearable biometrics analyzed with standard machine learning and deep learning can provide valid task-level information about learners’ affective and cognitive states in educational contexts, if sensing, labeling, and validation are implemented carefully.

- RQ2 How can these frameworks enable continuous personalization in education?

- Answer: The review identifies several information types that are both detectable with wearables and directly actionable for continuous personalization. First, stress load and arousal derived from ECG or HRV, GSR, and PPG can guide pacing, breaks, and task sequencing during activities (e.g., moving from high-pressure tasks to relaxation when stress exceeds a threshold). Second, attention and engagement metrics inferred from EEG and wrist signals are suitable for dynamic difficulty control and modality adjustments during lectures and videos, and for daily study monitoring that can trigger guidance in self-regulated learning. Third, perceived difficulty and success likelihood, estimated from combined EEG, ECG, and EMG during testing, can be used to time hints, adjust item difficulty, or choose feedback modality within an intelligent tutoring workflow. A reinforcement learning prototype [33] illustrates a closed loop in which EEG-based learner states trigger actions such as breaks, VR on or off, and content changes, using performance-linked rewards to converge on effective policies. Fourth, profiling and orchestration information supports both individual and group personalization: repeated EEG-based estimates of cognitive skills can inform level placement, while activity recognition and smartwatch-based analytics provide context for inclusive support in neurodiverse populations and for routine classroom management. Together, these opportunities can enable continuous didactic personalization in educational settings using the signals and procedures proposed within the studies examined in this review.
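
The closed loop described for the reinforcement learning prototype [33] can be illustrated with a tabular Q-learning sketch. Only the five actions come from the review; the two learner states, the toy environment, the reward values, and the hyperparameters are hypothetical stand-ins for the EEG-derived states and performance-linked rewards, and this is not the authors' implementation.

```python
import random
from typing import Dict, List, Tuple

# Action set taken from the five actions listed for the prototype in [33].
ACTIONS: List[str] = ["give_break", "enable_vr", "disable_vr", "change_content", "no_change"]
# Hypothetical learner states; the real system derives its states from EEG.
STATES: List[str] = ["learning", "drowsy"]

QTable = Dict[Tuple[str, str], float]


def choose_action(q: QTable, state: str, epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy choice: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def q_update(q: QTable, state: str, action: str, reward: float,
             next_state: str, alpha: float = 0.1, gamma: float = 0.9) -> None:
    """Standard tabular Q-learning update."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def toy_environment(state: str, action: str) -> Tuple[float, str]:
    """Made-up dynamics for illustration only: a break wakes a drowsy learner,
    and leaving a learner who is already learning undisturbed is rewarded."""
    if state == "drowsy":
        return (1.0, "learning") if action == "give_break" else (0.0, "drowsy")
    return (1.0, "learning") if action == "no_change" else (0.2, "learning")


rng = random.Random(0)
q: QTable = {(s, a): 0.0 for s in STATES for a in ACTIONS}
for episode in range(500):
    state = rng.choice(STATES)
    for _ in range(10):
        action = choose_action(q, state, epsilon=0.2, rng=rng)
        reward, next_state = toy_environment(state, action)
        q_update(q, state, action, reward, next_state)
        state = next_state

# Greedy policy learned from the toy interaction data.
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
print(policy)
```

In a real deployment the environment function would be replaced by the learner: the state would come from a classifier over biosignal windows and the reward from observed performance, so convergence would be slower and noisier than in this toy setting.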

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| SVM | Support vector machine |

| LRCN | Long-term recurrent convolutional networks |

| CNN | Convolutional neural network |

| CRNN | Convolutional recurrent neural network |

| MLP | Multilayer perceptron |

| LSTM | Long short-term memory |

| DSTCLN | Deep spatiotemporal Convolutional bidirectional LSTM Network |

| NN | Neural network |

| SVC | Support vector classifier |

| kNN | K-nearest neighbors |

| NB | Naïve Bayes |

| SBBO | Satin bowerbird optimization |

| CART | Classification and regression tree |

| XGBOOST | Extreme gradient boosting |

| RBF | Radial basis function kernel |

| SVR | Support vector regressor |

| ResNet | Residual network |

| DML | Distributed Machine Learning |

| JRIP | Repeated incremental pruning to produce error reduction |

| PCA | Principal component analysis |

| LDA | Linear discriminant analysis |

| LGBM | Light gradient boosting machine |

| STResNet | Spectro-temporal residual network |

| TSMS | Time Series Memory System |

| REF–RF | Recursive feature elimination in random forest |

| CBAM | Convolutional Block Attention Module |

| CPAR | Conditional probabilistic auto-regressive model |

| MLR | Multiple linear regression |

| MTL | Multitask learning |

| MFCC | Mel frequency cepstral coefficients |

| ECG | Electrocardiography |

| HR | Heart rate |

| HRV | Heart rate variability |

| GSR | Galvanic skin response |

| PPG | Photoplethysmogram |

| BVP | Blood volume pulse |

| SpO2 | Peripheral oxygen saturation |

| EEG | Electroencephalography |

| ANS | Autonomic nervous system |

| HAR | Human activity recognition |

| BP | Blood pressure |

| ICG | Impedance cardiogram |

| AI | Artificial intelligence |

| MIST | Montreal Imaging Stress Task |

| TSS | Trier social stress test |

| WCST | Wisconsin Card Sorting Test |

| PSD | Power spectral density |

| FFT | Fast Fourier transform |

| WVD | Wigner–Ville distributions |

| LOO | Leave-one-out |

| LOSO | Leave-one-subject-out |

| LLM | Large language model |

| MSE | Mean squared error |

| TP | True positive |

| TN | True negative |

| FP | False positive |

| FN | False negative |

| ITS | Intelligent tutoring system |

| LS | Learning state |

| DS | Drowsiness state |

| SSQ | Simulator sickness questionnaire |

| VR | Virtual reality |

| IoT | Internet of Things |

| EMG | Electromyography |

Appendix A. Glossary

- Anxiety: Anticipation of a future threat, which causes muscle tension and alertness and prepares the body for danger [85].

- Attention: The behavioral and cognitive processes involved in focusing on certain information [86].

- Concentration: The ability to sustain attention on a task over a given period of time [87].

- Engagement: The state in which students are meaningfully involved in learning activities through interaction with others and worthwhile tasks; it involves active cognitive processes such as problem-solving and critical thinking [88].

- Stress: The non-specific response of the body to any demand made upon it [89].

References

- Doherty, C.; Baldwin, M.; Keogh, A.; Caulfield, B.; Argent, R. Keeping Pace with Wearables: A Living Umbrella Review of Systematic Reviews Evaluating the Accuracy of Consumer Wearable Technologies in Health Measurement. Sport. Med. 2024, 54, 2907–2926. [Google Scholar] [CrossRef]

- Li, R.T.; Kling, S.R.; Salata, M.J.; Cupp, S.A.; Sheehan, J.; Voos, J.E. Wearable Performance Devices in Sports Medicine. Sport. Health 2016, 8, 74–78. [Google Scholar] [CrossRef] [PubMed]

- Bayoumy, K.; Gaber, M.; Elshafeey, A.; Mhaimeed, O.; Dineen, E.H.; Marvel, F.A.; Martin, S.S.; Muse, E.D.; Turakhia, M.P.; Tarakji, K.G.; et al. Smart wearable devices in cardiovascular care: Where we are and how to move forward. Nat. Rev. Cardiol. 2021, 18, 581–599. [Google Scholar] [CrossRef]

- Voss, C.; Schwartz, J.; Daniels, J.; Kline, A.; Haber, N.; Washington, P.; Tariq, Q.; Robinson, T.N.; Desai, M.; Phillips, J.M.; et al. Effect of Wearable Digital Intervention for Improving Socialization in Children With Autism Spectrum Disorder: A Randomized Clinical Trial. JAMA Pediatr. 2019, 173, 446–454. [Google Scholar] [CrossRef]

- Ancillon, L.; Elgendi, M.; Menon, C. Machine Learning for Anxiety Detection Using Biosignals: A Review. Diagnostics 2022, 12, 1794. [Google Scholar] [CrossRef] [PubMed]

- Pittig, A.; Arch, J.J.; Lam, C.W.R.; Craske, M.G. Heart rate and heart rate variability in panic, social anxiety, obsessive-compulsive, and generalized anxiety disorders at baseline and in response to relaxation and hyperventilation. Int. J. Psychophysiol. Off. J. Int. Organ. Psychophysiol. 2013, 87, 19–27. [Google Scholar] [CrossRef]

- Barbayannis, G.; Bandari, M.; Zheng, X.; Baquerizo, H.; Pecor, K.W.; Ming, X. Academic Stress and Mental Well-Being in College Students: Correlations, Affected Groups, and COVID-19. Front. Psychol. 2022, 13, 886344. [Google Scholar] [CrossRef]

- Hernández-Mustieles, M.A.; Lima-Carmona, Y.E.; Pacheco-Ramírez, M.A.; Mendoza-Armenta, A.A.; Romero-Gómez, J.E.; Cruz-Gómez, C.F.; Rodríguez-Alvarado, D.C.; Arceo, A.; Cruz-Garza, J.G.; Ramírez-Moreno, M.A.; et al. Wearable Biosensor Technology in Education: A Systematic Review. Sensors 2024, 24, 2437. [Google Scholar] [CrossRef]

- Zhang, X.; Wu, C.W.; Fournier-Viger, P.; Van, L.D.; Tseng, Y.C. Analyzing students’ attention in class using wearable devices. In Proceedings of the 2017 IEEE 18th International Symposium on A World of Wireless, Mobile and Multimedia Networks (WoWMoM), Macau, China, 12–15 June 2017; pp. 1–9. [Google Scholar] [CrossRef]

- Prieto, L.P.; Sharma, K.; Kidzinski, Ł.; Rodríguez-Triana, M.J.; Dillenbourg, P. Multimodal Teaching Analytics: Automated Extraction of Orchestration Graphs from Wearable Sensor Data. J. Comput. Assist. Learn. 2018, 34, 193–203. [Google Scholar] [CrossRef] [PubMed]

- Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426. [Google Scholar] [CrossRef]

- Li, J.; Xue, J.; Cao, R.; Du, X.; Mo, S.; Ran, K.; Zhang, Z. Finerehab: A multi-modality and multi-task dataset for rehabilitation analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 3184–3193. [Google Scholar]

- Constable, M.D.; Zhang, F.X.; Conner, T.; Monk, D.; Rajsic, J.; Ford, C.; Park, L.J.; Platt, A.; Porteous, D.; Grierson, L.; et al. Advancing healthcare practice and education via data sharing: Demonstrating the utility of open data by training an artificial intelligence model to assess cardiopulmonary resuscitation skills. Adv. Health Sci. Educ. Theory Pract. 2025, 30, 15–35. [Google Scholar] [CrossRef]

- du Plooy, E.; Casteleijn, D.; Franzsen, D.L. Personalized adaptive learning in higher education: A scoping review of key characteristics and impact on academic performance and engagement. Heliyon 2024, 10, e39630. [Google Scholar] [CrossRef] [PubMed]

- Lindberg, R.; Seo, J.; Laine, T.H. Enhancing Physical Education with Exergames and Wearable Technology. IEEE Trans. Learn. Technol. 2016, 9, 328–341. [Google Scholar] [CrossRef]

- Silvis-Cividjian, N.; Kenyon, J.; Nazarian, E.; Sluis, S.; Gevonden, M. On Using Physiological Sensors and AI to Monitor Emotions in a Bug-Hunting Game. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education V. 1, Milan Italy, 8–10 July 2024; Association for Computing Machinery: New York, NY, USA, 2024. ITiCSE 2024. pp. 429–435. [Google Scholar] [CrossRef]

- DIGI-ME Project. DIGI-ME: Digital Skills for Transformative Innovation Management and Entrepreneurship. Available online: https://digime-project.eu/ (accessed on 8 November 2025).

- Pereira, T.M.C.; Conceição, R.C.; Sencadas, V.; Sebastião, R. Biometric Recognition: A Systematic Review on Electrocardiogram Data Acquisition Methods. Sensors 2023, 23, 1507.

- Yang, W.; Wang, S.; Cui, H.; Tang, Z.; Li, Y. A Review of Homomorphic Encryption for Privacy-Preserving Biometrics. Sensors 2023, 23, 3566.

- Schmitt, M.; Flechais, I. Digital deception: Generative artificial intelligence in social engineering and phishing. Artif. Intell. Rev. 2024, 57, 324.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Sterne, J.A.; Hernán, M.A.; Reeves, B.C.; Savović, J.; Berkman, N.D.; Viswanathan, M.; Henry, D.; Altman, D.G.; Ansari, M.T.; Boutron, I.; et al. ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ 2016, 355, i4919.

- Islam, T.Z.; Wu Liang, P.; Sweeney, F.; Pragner, C.; Thiagarajan, J.J.; Sharmin, M.; Ahmed, S. College Life is Hard!—Shedding Light on Stress Prediction for Autistic College Students using Data-Driven Analysis. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021; pp. 428–437, ISSN 0730-3157.

- Chen, Q.; Lee, B.G. Deep Learning Models for Stress Analysis in University Students: A Sudoku-Based Study. Sensors 2023, 23, 6099.

- Tanaka, N.; Watanabe, K.; Ishimaru, S.; Dengel, A.; Ata, S.; Fujimoto, M. Concentration Estimation in Online Video Lecture Using Multimodal Sensors. In Proceedings of the Companion of the 2024 on ACM International Joint Conference on Pervasive and Ubiquitous Computing, Melbourne, VIC, Australia, 5–9 October 2024; pp. 71–75.

- Raković, M.; Li, Y.; Foumani, N.M.; Salehi, M.; Kuhlmann, L.; Mackellar, G.; Martinez-Maldonado, R.; Haffari, G.; Swiecki, Z.; Li, X.; et al. Measuring Affective and Motivational States as Conditions for Cognitive and Metacognitive Processing in Self-Regulated Learning. In Proceedings of the 14th Learning Analytics and Knowledge Conference, New York, NY, USA, 18–22 March 2024; LAK ’24. pp. 701–712.

- Zheng, H.; Mahapasuthanon, P.; Chen, Y.; Rangwala, H.; Evmenova, A.S.; Genaro Motti, V. WLA4ND: A Wearable Dataset of Learning Activities for Young Adults with Neurodiversity to Provide Support in Education. In Proceedings of the 23rd International ACM SIGACCESS Conference on Computers and Accessibility, New York, NY, USA, 18–22 October 2021; ASSETS ’21. pp. 1–15.

- Mohamed, Z.; Halaby, M.E.; Said, T.; Shawky, D.; Badawi, A. Facilitating Classroom Orchestration Using EEG to Detect the Cognitive States of Learners. In Proceedings of the International Conference on Advanced Machine Learning Technologies and Applications (AMLTA2019), Cairo, Egypt, 28–30 March 2019; Hassanien, A.E., Azar, A.T., Gaber, T., Bhatnagar, R., Tolba, M.F., Eds.; Springer: Cham, Switzerland, 2020; pp. 209–217.

- Gu, Y. Research on Speech Communication Enhancement of English Web-based Learning Platform based on Human-computer Intelligent Interaction. Scalable Comput. Pract. Exp. 2024, 25, 709–720.

- Zhu, Z.; Ober, S.; Jafari, R. Modeling and detecting student attention and interest level using wearable computers. In Proceedings of the 2017 IEEE 14th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Eindhoven, The Netherlands, 9–12 May 2017; pp. 13–18.

- Qu, X.; Hall, M.; Sun, Y.; Sekuler, R.; Hickey, T. A Personalized Reading Coach using Wearable EEG Sensors—A Pilot Study of Brainwave Learning Analytics; SciTePress: Setúbal, Portugal, 2018; pp. 501–507.

- Prieto, L.; Sharma, K.; Dillenbourg, P.; Rodríguez-Triana, M. Teaching Analytics: Towards Automatic Extraction of Orchestration Graphs Using Wearable Sensors. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge (LAK ’16), Edinburgh, UK, 25–29 April 2016.

- Taherisadr, M.; Faruque, M.A.A.; Elmalaki, S. ERUDITE: Human-in-the-Loop IoT for an Adaptive Personalized Learning System. IEEE Internet Things J. 2024, 11, 14532–14550.

- Zhou, Z.; Tam, V.; Lui, K.; Lam, E.; Hu, X.; Yuen, A.; Law, N. A Sophisticated Platform for Learning Analytics with Wearable Devices. In Proceedings of the 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 300–304.

- Warren, J.; Ni, L.; Fry, B.; Stowell, M.; Gardiner, C.; Whittaker, R.; Tane, T.; Dobson, R. Predicting Heart Rate Variability from Heart Rate and Step Count for University Student Weekdays. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; pp. 1–4, ISSN 2694-0604.

- Gao, S.; Lai, S.; Wu, F. Learning Attention Level Prediction via Multimodal Physiological Data Using Wearable Wrist Devices. In Proceedings of the 2022 Eleventh International Conference of Educational Innovation through Technology (EITT), New York, NY, USA, 16–17 December 2022; pp. 13–18, ISSN 2166-0549.

- Gupta, S.; Kumar, P.; Tekchandani, R. A machine learning-based decision support system for temporal human cognitive state estimation during online education using wearable physiological monitoring devices. Decis. Anal. J. 2023, 8, 100280.

- Guo, J.; Wan, B.; Wu, H.; Zhao, Z.; Huang, W. A Virtual Reality and Online Learning Immersion Experience Evaluation Model Based on SVM and Wearable Recordings. Electronics 2022, 11, 1429.

- Södergård, C.; Laakko, T. Inferring Students’ Self-Assessed Concentration Levels in Daily Life Using Biosignal Data from Wearables. IEEE Access 2023, 11, 30308–30323.

- Aguilar-Herrera, A.J.; Delgado-Jiménez, E.A.; Candela-Leal, M.O.; Olivas-Martinez, G.; Álvarez Espinosa, G.J.; Ramírez-Moreno, M.A.; Lozoya-Santos, J.d.J.; Ramírez-Mendoza, R.A. Advanced Learner Assistance System’s (ALAS) Recent Results. In Proceedings of the 2021 Machine Learning-Driven Digital Technologies for Educational Innovation Workshop, Monterrey, Mexico, 15–17 December 2021; pp. 1–7.

- Giannakos, M.N.; Sharma, K.; Papavlasopoulou, S.; Pappas, I.O.; Kostakos, V. Fitbit for learning: Towards capturing the learning experience using wearable sensing. Int. J. Hum.-Comput. Stud. 2020, 136, 102384.

- Araño, K.A.; Gloor, P.; Orsenigo, C.; Vercellis, C. “Emotions are the Great Captains of Our Lives”: Measuring Moods Through the Power of Physiological and Environmental Sensing. IEEE Trans. Affect. Comput. 2022, 13, 1378–1389.

- Egilmez, B.; Poyraz, E.; Zhou, W.; Memik, G.; Dinda, P.; Alshurafa, N. UStress: Understanding college student subjective stress using wrist-based passive sensing. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017; pp. 673–678.

- Le-Quang, B.L.; Dao, M.S.; Nazmudeen, M.S.H. Wemotion: A System to Detect Emotion Using Wristbands and Smartphones. In Advances in Signal Processing and Intelligent Recognition Systems; Thampi, S.M., Marques, O., Krishnan, S., Li, K.C., Ciuonzo, D., Kolekar, M.H., Eds.; Springer: Singapore, 2019; pp. 92–103.

- Mori, T.; Hasegawa, T. Estimation of degree of retention and subjective difficulty of four-choice English vocabulary questions using a wearable device. In Proceedings of the TENCON 2018—2018 IEEE Region 10 Conference, Jeju, Republic of Korea, 28–31 October 2018; pp. 0605–0610, ISSN 2159-3450.

- Herrera-Alcántara, O.; Barrera-Animas, A.Y.; González-Mendoza, M.; Castro-Espinoza, F. Monitoring Student Activities with Smartwatches: On the Academic Performance Enhancement. Sensors 2019, 19, 1605.

- Hasanbasic, A.; Spahic, M.; Bosnjic, D.; Adazic, H.H.; Mesic, V.; Jahic, O. Recognition of stress levels among students with wearable sensors. In Proceedings of the 2019 18th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 20–22 March 2019; pp. 1–4.

- Alqahtani, F.; Katsigiannis, S.; Ramzan, N. Using Wearable Physiological Sensors for Affect-Aware Intelligent Tutoring Systems. IEEE Sens. J. 2021, 21, 3366–3378.

- Gonzalez-Hernandez, H.G.; Peña-Cortés, D.V.; Flores-Amado, A.; Oliart-Ros, A.; Martinez-Ayala, M.A.; Mora-Salinas, R.J. Towards the Automatic Detection of Critical Thinking Through EEG and Facial Emotion Recognition. In Proceedings of the 2024 IEEE Global Engineering Education Conference (EDUCON), Kos Island, Greece, 8–11 May 2024; pp. 1–8, ISSN 2165-9567.

- Chandra, V.; Sethia, D. Machine learning-based stress classification system using wearable sensor devices. IAES Int. J. Artif. Intell. (IJ-AI) 2024, 13, 337–347.

- Can, Y.S.; Chalabianloo, N.; Ekiz, D.; Ersoy, C. Continuous Stress Detection Using Wearable Sensors in Real Life: Algorithmic Programming Contest Case Study. Sensors 2019, 19, 1849.

- Laporte, M.; Gjoreski, M.; Langheinrich, M. LAUREATE: A Dataset for Supporting Research in Affective Computing and Human Memory Augmentation. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; Association for Computing Machinery: New York, NY, USA, 2023; Volume 7.

- Elkerdawy, M.; Elhalaby, M.; Hassan, A.; Maher, M.; Shawky, D.; Badawi, A. Building Cognitive Profiles of Learners Using EEG. In Proceedings of the 2020 11th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 7–9 April 2020; pp. 027–032, ISSN 2573-3346.

- He, M.; Cerna, J.; Alkurdi, A.; Dogan, A.; Zhao, J.; Clore, J.L.; Sowers, R.; Hsiao-Wecksler, E.T.; Hernandez, M.E. Physical, Social and Cognitive Stressor Identification using Electrocardiography-derived Features and Machine Learning from a Wearable Device. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; pp. 1–4.

- Agarwal, V.; Ahmad, N.; Hasan, M.G. Physiological Signals based Student Grades Prediction using Machine Learning. In Proceedings of the 2023 OITS International Conference on Information Technology (OCIT), Raipur, India, 13–15 December 2023; pp. 208–213.

- Awais, M.; Raza, M.; Singh, N.; Bashir, K.; Manzoor, U.; Islam, S.U.; Rodrigues, J.J. LSTM-Based Emotion Detection Using Physiological Signals: IoT Framework for Healthcare and Distance Learning in COVID-19. IEEE Internet Things J. 2020, 8, 16863–16871.

- Le Tran Thuan, T.; Nguyen, P.K.; Gia, Q.N.; Tran, A.T.; Le, Q.K. Machine Learning Algorithms for Stress Level Analysis Based on Skin Surface Temperature and Skin Conductance. In Proceedings of the 2024 IEEE 6th Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (ECBIOS), Tainan, Taiwan, 14–16 June 2024; pp. 421–424.

- Patil, S.; Sungheetha, A.; G, B.; Kalaivaani, P.T.; Kandaswamy, V.A.; Jagannathan, S.K. Design and Behavioral Analysis of Students during Examinations using Distributed Machine Learning. In Proceedings of the 2023 International Conference on Research Methodologies in Knowledge Management, Artificial Intelligence and Telecommunication Engineering (RMKMATE), Chennai, India, 1–2 November 2023; pp. 1–6.

- Saylam, B.; İncel, Ö.D. Multitask Learning for Mental Health: Depression, Anxiety, Stress (DAS) Using Wearables. Diagnostics 2024, 14, 501.

- Wu, T.; Huang, Y.; Purwanto, E.; Craig, P. EmoMA-Net: A Novel Model for Emotion Recognition Using Hybrid Multimodal Neural Networks in Adaptive Educational Systems. In Proceedings of the 2024 7th International Conference on Big Data and Education, Oxford, UK, 24–26 September 2024; Association for Computing Machinery: New York, NY, USA, 2025. ICBDE ’24. pp. 65–71.

- Chan, J.T.W.; Chui, K.T.; Lee, L.-K.; Paoprasert, N.; Ng, K.-K. Data Generation using a Probabilistic Auto-Regressive Model with Application to Student Exam Performance Analysis. In Proceedings of the 2024 International Symposium on Educational Technology (ISET), Macau, Macao, 29 July–1 August 2024; pp. 87–90.

- Hoang, T.H.; Dang, T.K.; Trang, N.T.H. Personalized Stress Detection for University Students Using Wearable Devices. In Proceedings of the 2025 19th International Conference on Ubiquitous Information Management and Communication (IMCOM), Bangkok, Thailand, 3–5 January 2025; pp. 1–7.

- Alchieri, L.; Alecci, L.; Abdalazim, N.; Santini, S. Recognition of Engagement from Electrodermal Activity Data Across Different Contexts. In Adjunct Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing (UbiComp/ISWC ’23 Adjunct), Cancun, Mexico, 8–12 October 2023; pp. 108–112.

- Qin, Y. Artificial Intelligence Technology-Driven Teacher Mental State Assessment and Improvement Method. Int. J. Inf. Commun. Technol. Educ. (IJICTE) 2024, 20, 1–17.

- Wu, W.; Zuo, E.; Zhang, W.; Meng, X. Multi-physiological signal fusion for objective emotion recognition in educational human–computer interaction. Front. Public Health 2024, 12, 1492375.

- Foumani, N.M.; Tan, C.W.; Webb, G.I.; Salehi, M. Improving position encoding of transformers for multivariate time series classification. Data Min. Knowl. Discov. 2024, 38, 22–48.

- Zhou, Z.; Tam, V.; Lui, K.; Lam, E.; Yuen, A.; Hu, X.; Law, N. Applying Deep Learning and Wearable Devices for Educational Data Analytics. In Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 871–878.

- Mekruksavanich, S.; Hnoohom, N.; Jitpattanakul, A. A Deep Residual-based Model on Multi-Branch Aggregation for Stress and Emotion Recognition through Biosignals. In Proceedings of the 2022 19th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Prachuap Khiri Khan, Thailand, 24–27 May 2022; pp. 1–4.

- Schmidt, P.; Reiss, A.; Duerichen, R.; Marberger, C.; Van Laerhoven, K. Introducing WESAD, a Multimodal Dataset for Wearable Stress and Affect Detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; Association for Computing Machinery: New York, NY, USA, 2018. ICMI ’18. pp. 400–408.

- Amin, M.R.; Wickramasuriya, D.S.; Faghih, R.T. A Wearable Exam Stress Dataset for Predicting Grades using Physiological Signals. In Proceedings of the 2022 IEEE Healthcare Innovations and Point of Care Technologies (HI-POCT), Houston, TX, USA, 10–11 March 2022.

- Sharma, K.; Castellini, C.; van den Broek, E.L.; Albu-Schaeffer, A.; Schwenker, F. A dataset of continuous affect annotations and physiological signals for emotion analysis. Sci. Data 2019, 6, 196.

- Purta, R.; Mattingly, S.; Song, L.; Lizardo, O.; Hachen, D.; Poellabauer, C.; Striegel, A. Experiences measuring sleep and physical activity patterns across a large college cohort with fitbits. In Proceedings of the 2016 ACM International Symposium on Wearable Computers (ISWC ’16), Heidelberg, Germany, 12–16 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 28–35.

- Cohen, S.; Kamarck, T.; Mermelstein, R. A global measure of perceived stress. J. Health Soc. Behav. 1983, 24, 385–396.

- Di Lascio, E.; Gashi, S.; Santini, S. Unobtrusive Assessment of Students’ Emotional Engagement during Lectures Using Electrodermal Activity Sensors. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; Association for Computing Machinery: New York, NY, USA, 2018; Volume 2, p. 103.

- Gashi, S.; Di Lascio, E.; Santini, S. Using unobtrusive wearable sensors to measure the physiological synchrony between presenters and audience members. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; Association for Computing Machinery: New York, NY, USA, 2019; Volume 3, pp. 1–19.

- Di Lascio, E.; Gashi, S.; Hidalgo, J.S.; Nale, B.; Debus, M.E.; Santini, S. A multi-sensor approach to automatically recognize breaks and work activities of knowledge workers in academia. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; Association for Computing Machinery: New York, NY, USA, 2020; Volume 4, pp. 1–20.

- Wang, H.; Li, Y.; Hu, X.; Yang, Y.; Meng, Z.; Chang, K.M. Using EEG to improve massive open online courses feedback interaction. CEUR Workshop Proc. 2013, 1009, 59–66.

- Hong, H.; Dai, L.; Zheng, X. Advances in Wearable Sensors for Learning Analytics: Trends, Challenges, and Prospects. Sensors 2025, 25, 2714.

- Khosravi, S.; Bailey, S.G.; Parvizi, H.; Ghannam, R. Wearable Sensors for Learning Enhancement in Higher Education. Sensors 2022, 22, 7633.

- Hashim, S.; Omar, M.; Ab Jalil, H.; Sharef, N. Trends on Technologies and Artificial Intelligence in Education for Personalized Learning: Systematic Literature Review. Int. J. Acad. Res. Progress. Educ. Dev. 2022, 11, 884–903.

- Ahmed, A.; Aziz, S.; Abd-alrazaq, A.; AlSaad, R.; Sheikh, J. Leveraging LLMs and wearables to provide personalized recommendations for enhancing student well-being and academic performance through a proof of concept. Sci. Rep. 2025, 15, 4591.

- Meng, L.; Guo, Y. Generative LLM-based distance education decision design in Argentine universities. Edelweiss Appl. Sci. Technol. 2025, 9, 2587–2599.

- Iren, D.; Marinucci, L.; Conte, R.; Billeci, L.; Jarodzka, H.; Kaakinen, J. Prohibited or Permitted? Navigating the Regulatory Maze of the AI Act on Eye Tracking and Emotion Recognition. In Proceedings of the 4th International Workshop on Imagining the AI Landscape After the AI Act, in Conjunction with HHAI2025, Pisa, Italy, 9 June 2025.

- European Union. Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 Laying down Harmonised Rules on Artificial Intelligence and Amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act) (Text with EEA Relevance). 2024. OJ L 2024/1689, 2024. Available online: https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng (accessed on 24 September 2025).

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Publishing: Washington, DC, USA, 2013.

- Anderson, J.R. Cognitive Psychology and Its Implications, 6th ed.; Worth Publishers: New York, NY, USA, 2004.

- Fernández-Castillo, A.; Caurcel, M.J. State test-anxiety, selective attention and concentration in university students. Int. J. Psychol. 2015, 50, 265–271.

- Kearsley, G.; Shneiderman, B. Engagement theory: A framework for technology-based teaching and learning. Educ. Technol. 1998, 38, 20–23.

- Selye, H. Stress and distress. Compr. Ther. 1975, 1, 9–13.

| Study | Interventions Classification | Missing Data | Outcome Measurement | Results Selection |
|---|---|---|---|---|
| [25] | Moderate | Low | Low | Moderate |
| [26] | Low | Low | Low | Low |
| [24] | Moderate | Low | Low | Low |
| [27] | Low | Low | Moderate | Low |
| [28] | Low | Low | Low | Low |
| [29] | No information | No information | Moderate | Low |
| [30] | Moderate | Low | Low | Low |
| [31] | Low | Moderate | Low | Low |
| [32] | Low | Low | Moderate | Moderate |
| [33] | Low | Low | Moderate | Low |
| [34] | Moderate | Moderate | Moderate | Low |
| [35] | Moderate | Low | Low | Low |
| [36] | Moderate | Moderate | Low | Low |
| [37] | Low | Low | Low | Low |
| [38] | Moderate | Low | Low | Low |
| [39] | Moderate | Moderate | Low | Low |
| [40] | Low | Low | Low | Low |
| [23] | Moderate | Serious | Moderate | Low |
| [41] | Low | Low | Low | Low |
| [42] | Moderate | Moderate | Moderate | Low |
| [43] | Serious | Moderate | Moderate | Low |
| [44] | Moderate | Low | Moderate | Low |
| [45] | Moderate | Low | Moderate | No information |
| [46] | Moderate | Low | Low | Low |
| [47] | Low | Low | Low | Low |
| [48] | Low | Low | Low | Moderate |
| [49] | Moderate | Low | Low | Low |
| [50] | Low | Low | Low | Low |
| [51] | Serious | Low | Low | Low |
| [52] | Moderate | Low | Low | Low |
| [53] | Low | Low | Low | Moderate |
| [54] | Low | Low | Moderate | Low |
| [55] | Serious | Low | Low | Low |
| [56] | Moderate | Low | Low | Low |
| [57] | Serious | Low | Moderate | Low |
| [58] | Serious | Low | Serious | Moderate |
| [59] | Low | Low | Low | Low |
| [60] | Moderate | Low | Low | Low |
| [61] | No information | Low | Moderate | Moderate |
| [62] | Moderate | Low | Low | Moderate |
| [63] | Moderate | Low | Low | Moderate |
| [64,65] | Moderate | Moderate | Low | Low |

| Group Description | Studies Included in the Group |
|---|---|
| EEG | [28,31,33,37,49,53] |
| EEG + GSR | [26,36,40,50] |
| EEG + ACC | [32] |
| EEG + BVP | [38] |
| EEG + ECG | [24,29,48] |
| ECG | [47,54,64,65] |
| ECG + GSR + BVP | [56] |
| HR + Step count | [35,44,59] |
| HR + ACC | [25,27,34,42,45,46] |
| HR + GSR | [41,43,55,58] |
| HR + ACC + GSR | [23,39] |
| ACC + BVP | [30,51,52] |
| ACC + BVP + GSR | [62] |
| GSR | [57,60,63] |
| PPG | [61] |

| LS | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
|---|---|---|---|---|---|---|---|---|
| DS | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 |
| SSQ | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| State |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Meini, V.; Bachi, L.; Omezzine, M.A.; Procissi, G.; Pigni, F.; Billeci, L. Artificial Intelligence for the Analysis of Biometric Data from Wearables in Education: A Systematic Review. Sensors 2025, 25, 7042. https://doi.org/10.3390/s25227042
