1. Introduction

State estimation is fundamental to a broad range of engineering and scientific fields [1,2,3,4], as it involves inferring non-measurable internal states of a system. These states are essential for understanding, predicting, and controlling system performance [5,6,7], yet they often remain inaccessible due to sensor limitations, challenging environmental conditions [1], or inherent system complexity. To address these challenges, various state estimation techniques have been developed, enabling the inference of internal states from available measurement data [8]. Among the widely used techniques are the Kalman Filter and its nonlinear extensions [9,10,11,12,13] and the Luenberger observer [14] for linear systems and its nonlinear system extensions [15,16,17,18].

Traditionally, state estimation techniques assume that model parameters are either (i) known a priori or (ii) unknown but constant throughout the estimation horizon (i.e., time-invariant) [15]. While this simplifies system modeling and analysis, it fails to account for the complexities of real-world systems where parameters may be uncertain or time-varying. In practice, model parameters often change over time due to environmental variations, operational shifts, or gradual system degradation. Neglecting these dynamics can lead to distorted representations of system behavior and inaccurate state predictions, as states and parameters are inherently interdependent. Therefore, it is crucial to employ methods capable of simultaneously estimating both system states and time-varying system parameters, enabling a more accurate and comprehensive characterization of system dynamics.

Extensive research has been devoted to state estimation in systems with known parameters, including numerous comparative analyses of classical filters and observers. Studies evaluating the Extended, Unscented, Cubature, and Ensemble Kalman Filters in nonlinear systems [19,20,21] have highlighted important trade-offs between estimation accuracy, numerical stability, and computational complexity, while comparative investigations of deterministic observers and adaptive Kalman filters under various noise and dynamic conditions [22,23] have provided valuable insights into estimator performance. However, these works primarily focus on state estimation alone and do not explicitly address the challenges associated with estimating system parameters that are uncertain or time-varying. Despite numerous review papers on Kalman filtering and observer design, to the best of the authors’ knowledge, no comprehensive review unifies and compares methods developed for simultaneous state and parameter estimation across both stochastic (Kalman-based) and deterministic (observer-based) frameworks. The present work therefore fills this gap by providing a structured, tutorial-style review that integrates theoretical foundations, algorithmic developments, and comparative perspectives on the principal approaches to concurrent state and parameter estimation.

The manuscript is structured as follows. Section 2 presents Kalman filter-based state and parameter estimation methods for both linear and nonlinear systems, along with their modified versions for joint state-parameter estimation. It also includes a comparative analysis of these methods based on the reviewed literature and highlights their key limitations. Section 3 provides a detailed examination of observer-based methods and their applications in state-parameter estimation. Section 4 provides a comparative discussion of Kalman-based and observer-based approaches. Finally, Section 5 summarizes the key insights from the reviewed methods, followed by the concluding remarks and future research directions.

2. State and Parameters Estimation: Kalman-Based Methods

The Kalman filter, introduced by Rudolf E. Kalman [24], is a widely used algorithm for estimating the internal states of linear dynamic systems from noisy or incomplete measurement data [25]. It operates recursively, processing a sequence of measurements over time to predict and refine state estimates. By accounting for uncertainties in both the system model and measurements, the Kalman filter produces optimal estimates of the system’s current state. Its strength lies in its ability to iteratively improve predictions by integrating new data, thereby reducing uncertainty over time. The filter operates in two key steps: the prediction (propagation) step, where the state estimate and covariance are projected forward in time, and the update (correction) step, where new measurements are incorporated to adjust the state estimate and minimize estimation error [26]. This recursive framework makes the Kalman filter highly effective for real-time applications in various fields, including control systems, navigation, and signal processing.

To describe the Kalman filter, consider the stochastic system

$$x_k = F_k x_{k-1} + G_k u_k + w_k, \qquad w_k \sim \mathcal{N}(0, Q_k) \tag{1}$$

$$y_k = H_k x_k + v_k, \qquad v_k \sim \mathcal{N}(0, R_k) \tag{2}$$

where $x_k$ is the state, $u_k$ the input, and $y_k$ the measurement. $F_k$, $G_k$, and $H_k$ are the state transition, input, and observation matrices; $Q_k$ and $R_k$ are the process and measurement noise covariances. Note that the time-invariant case is recovered by setting $F_k = F$, $G_k = G$, $H_k = H$, $Q_k = Q$, and $R_k = R$.

The Kalman filter algorithm uses the state transition in (1) to predict the states at time step $k$, incorporating the process noise as zero-mean Gaussian noise with covariance $Q_k$. These predictions are used to compute the predicted system output through the measurement model in (2). The uncertainties are captured in the error covariance matrix $P_k$, which influences the calculation of the Kalman gain. The Kalman gain optimally balances the predicted states and measured data by weighting them according to the noise statistics represented in the $Q_k$ and $R_k$ matrices. This recursive process improves the system behavior estimates.

The Kalman filter process is summarized in Table 1, where $\hat{x}_{k|k-1}$ represents the predicted state estimate, $\hat{x}_{k|k}$ is the updated state estimate, $P_{k|k-1}$ is the predicted error covariance, $P_{k|k}$ is the updated error covariance, $u_k$ is the control input, $y_k$ is the measurement at time $k$, $\tilde{y}_k$ is the measurement residual, and $K_k$ is the Kalman gain. $I$ is the identity matrix, employed in the update step to adjust the error covariance.
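The predict/update cycle described above can be sketched for a scalar system. This is a minimal illustration under stated assumptions, not a reproduction of Table 1: the function name `kf_step` and the lowercase scalars `f`, `g`, `h`, `q`, and `r` (standing in for the state transition, input, and observation matrices and the two noise covariances) are names chosen here for the example.

```python
def kf_step(x_est, p_est, u, y, f, g, h, q, r):
    """One predict/update cycle of a scalar Kalman filter (illustrative sketch)."""
    # Prediction (propagation): project the state and error covariance forward.
    x_pred = f * x_est + g * u
    p_pred = f * p_est * f + q
    # Update (correction): residual, gain, corrected state and covariance.
    residual = y - h * x_pred
    s = h * p_pred * h + r          # innovation variance
    k = p_pred * h / s              # Kalman gain
    x_new = x_pred + k * residual
    p_new = (1.0 - k * h) * p_pred
    return x_new, p_new, k


if __name__ == "__main__":
    # Hypothetical data: noisy readings of a constant close to 1.0.
    x, p = 0.0, 1.0
    for y in [1.1, 0.9, 1.05, 0.95]:
        x, p, k = kf_step(x, p, u=0.0, y=y, f=1.0, g=0.0, h=1.0, q=0.0, r=1.0)
        print(f"estimate={x:.3f} covariance={p:.3f} gain={k:.3f}")
```

With no process noise in this toy setup, the gain shrinks from 1/2 to 1/3 to 1/4 and so on as the error covariance decreases, which is the "reducing uncertainty over time" behavior in its simplest form.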

The use of the Kalman filter for simultaneous state estimation and parameter identification was first explored in the early 1970s [27,28,29,30]. Parameter identification involves utilizing the Kalman filter in a dynamically evolving system, where the state variables are augmented to incorporate the parameters being estimated [31]. This approach, known as joint state and parameter estimation, allows for the simultaneous estimation of both system states and parameters within a single filtering process, recursively updating the estimates over time as new measurements become available.

Dual state-parameter estimation is an alternative technique [32]. In this approach [33,34], the state vector $x_k$ and the parameter vector $\theta_k$ are treated separately using two distinct, concurrently executed filters: one for state estimation and the other for parameter estimation. This separation lets each filter specialize in its task, potentially improving accuracy and robustness. Other approaches combine a Kalman-based filter for state estimation with parameter estimation algorithms such as Least Squares Estimation (LSE) [35,36] or Recursive Least Squares Estimation (RLSE) [37]. In these hybrid solutions, the Kalman filter sequentially updates the state estimates while the parameters are periodically updated using a batch or recursive least squares approach [15].

2.1. Kalman-Based Filters

This section presents the different variants of Kalman-based algorithms and their adaptations for both joint and dual state-parameter estimation. A comparative analysis is also provided, drawing on representative applications and published studies to evaluate the performance of these approaches in simultaneous state and parameter estimation tasks.

2.1.1. Extended Kalman Filter

The Extended Kalman Filter (EKF) is a nonlinear extension of the standard Kalman Filter, designed to estimate the states of systems with nonlinear dynamics and measurements [38]. It accomplishes this by employing a first-order Taylor series expansion to linearize the nonlinear system dynamics around the current state estimate.

To illustrate the EKF, consider the following discrete-time nonlinear state-space model, described by the state and measurement equations [1]

$$x_k = f(x_{k-1}, u_k) + w_k \tag{3}$$

$$y_k = h(x_k) + v_k \tag{4}$$

where $x_k$ and $y_k$ are the state and the measurement vectors, respectively. The process noise $w_k$ and the measurement noise $v_k$ are assumed to be zero-mean with covariance matrices $Q_k$ and $R_k$, respectively. The functions $f$ and $h$ represent the nonlinear state transition function and the nonlinear measurement function, respectively. Defining the Jacobians $F_k$ and $H_k$ as

$$F_k = \left.\frac{\partial f}{\partial x}\right|_{\hat{x}_{k-1|k-1},\,u_k} \quad \text{(state transition Jacobian)} \tag{5}$$

$$H_k = \left.\frac{\partial h}{\partial x}\right|_{\hat{x}_{k|k-1}} \quad \text{(measurement Jacobian)} \tag{6}$$

where $F_k$ and $H_k$ are the Jacobian matrices of the nonlinear state transition function $f$ and measurement function $h$, evaluated at the estimated state $\hat{x}_{k-1|k-1}$ with control input $u_k$, and the predicted state $\hat{x}_{k|k-1}$, respectively.

The EKF is executed through the following two recursive steps [1].

State and Covariance Estimate Predictions: In the prediction step, the state estimate $\hat{x}_{k|k-1}$ is projected forward in time using the nonlinear state transition function $f$ based on the previous state estimate $\hat{x}_{k-1|k-1}$ and the current control input $u_k$. Simultaneously, the covariance estimate $P_{k|k-1}$ is predicted by propagating the previous covariance $P_{k-1|k-1}$ through the state transition Jacobian $F_k$, with the addition of the process noise covariance $Q_k$ to account for uncertainty

$$\hat{x}_{k|k-1} = f(\hat{x}_{k-1|k-1}, u_k) \tag{7}$$

$$P_{k|k-1} = F_k P_{k-1|k-1} F_k^T + Q_k \tag{8}$$

State and Covariance Estimate Update: In the update step, the Kalman gain $K_k$ is computed, which weighs the new measurement $y_k$ relative to the predicted measurement derived from the nonlinear measurement function $h(\hat{x}_{k|k-1})$. This gain is used to adjust the predicted state estimate $\hat{x}_{k|k-1}$, resulting in the updated state estimate $\hat{x}_{k|k}$. The covariance estimate is also updated by incorporating the measurement, so that the updated covariance $P_{k|k}$ reflects the reduced uncertainty after considering the new measurement

$$K_k = P_{k|k-1} H_k^T \left(H_k P_{k|k-1} H_k^T + R_k\right)^{-1} \tag{9}$$

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k \left(y_k - h(\hat{x}_{k|k-1})\right) \tag{10}$$

$$P_{k|k} = (I - K_k H_k) P_{k|k-1} \tag{11}$$

In Equations (7)–(11), $K_k$ represents the Kalman gain, which determines the weight given to the new measurement versus its estimate during the update process. The term $\hat{x}_{k|k-1}$ denotes the a priori state estimate, predicted before incorporating the current measurement, while $\hat{x}_{k|k}$ is the a posteriori state estimate, updated after accounting for the measurement. Similarly, $P_{k|k-1}$ is the a priori covariance matrix, representing the uncertainty in the state prediction before the update, and $P_{k|k}$ is the a posteriori error covariance matrix, which reflects the reduced uncertainty after the measurement has been processed. This process allows the EKF to refine both the state estimate and its uncertainty (covariance) using incoming measurements, iteratively improving the accuracy of the estimates as new data become available.
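The two EKF steps can be sketched for a scalar state as follows. This is a hedged illustration rather than the article's implementation: the function `ekf_step`, its argument names, and the example model in the demo (cubic damping with a squared-output sensor) are assumptions introduced here. The caller supplies the derivatives `f_jac` and `h_jac`, which play the role of the two Jacobians.

```python
def ekf_step(x_est, p_est, u, y, f, h, f_jac, h_jac, q, r):
    """One scalar EKF cycle; f_jac and h_jac return df/dx and dh/dx."""
    # Prediction: push the estimate through f, linearize around the old estimate.
    x_pred = f(x_est, u)
    jac_f = f_jac(x_est, u)
    p_pred = jac_f * p_est * jac_f + q
    # Update: linearize h around the predicted state, then correct.
    jac_h = h_jac(x_pred)
    k = p_pred * jac_h / (jac_h * p_pred * jac_h + r)
    x_new = x_pred + k * (y - h(x_pred))
    p_new = (1.0 - k * jac_h) * p_pred
    return x_new, p_new


if __name__ == "__main__":
    # Hypothetical model, chosen only for illustration.
    def f(x, u):
        return x + 0.1 * (u - x ** 3)

    def f_jac(x, u):
        return 1.0 - 0.3 * x ** 2

    def h(x):
        return x ** 2

    def h_jac(x):
        return 2.0 * x

    x_true, x_est, p_est = 1.2, 0.8, 0.5
    for _ in range(30):
        x_true = f(x_true, 1.0)
        x_est, p_est = ekf_step(x_est, p_est, 1.0, h(x_true), f, h, f_jac, h_jac, 1e-4, 1e-2)
    print(f"true={x_true:.4f} estimate={x_est:.4f}")
```

When `f` and `h` are linear, the derivatives are constants and the routine reduces to the standard Kalman filter cycle.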

2.1.2. Unscented Kalman Filter

The Extended Kalman Filter approximates nonlinear systems by linearizing them around the current state estimate, whereas the Unscented Kalman Filter (UKF) directly processes the system’s nonlinear functions. Instead of linearizing, the UKF propagates a set of sample points, called sigma points, through the system’s true nonlinear dynamics. This avoids the approximation errors inherent in the EKF’s linearization and offers greater accuracy, particularly in highly nonlinear and complex systems, making the UKF a more attractive choice in scenarios where the EKF’s performance is constrained. The UKF algorithm involves generating sigma points, predicting the state and covariance, incorporating new measurements, and refining the state estimate. The detailed steps are outlined as follows [39].

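Initialization: Begin by initializing the state estimate $\hat{x}_0$ and the covariance $P_0$

$$\hat{x}_0 = E[x_0], \qquad P_0 = E\left[(x_0 - \hat{x}_0)(x_0 - \hat{x}_0)^T\right]$$

Sigma Points Generation: Generate sigma points $\chi_{k-1}$ based on the current state estimate $\hat{x}_{k-1}$ and covariance $P_{k-1}$

$$\chi_{k-1} = \left[\hat{x}_{k-1}, \;\; \hat{x}_{k-1} + \sqrt{(L+\lambda)P_{k-1}}, \;\; \hat{x}_{k-1} - \sqrt{(L+\lambda)P_{k-1}}\right]$$

where $\lambda = \alpha^2 (L + \kappa) - L$, $L$ is the dimension of the state vector, and $\alpha$ and $\kappa$ are the scaling parameters.

State Prediction: Propagate sigma points through the nonlinear state transition function

$$\chi_{i,k|k-1} = f(\chi_{i,k-1}, u_k), \qquad i = 0, \dots, 2L$$

Calculate the predicted state mean $\hat{x}_{k|k-1}$ and covariance $P_{k|k-1}$

$$\hat{x}_{k|k-1} = \sum_{i=0}^{2L} W_i^{(m)} \chi_{i,k|k-1}$$

$$P_{k|k-1} = \sum_{i=0}^{2L} W_i^{(c)} \left(\chi_{i,k|k-1} - \hat{x}_{k|k-1}\right)\left(\chi_{i,k|k-1} - \hat{x}_{k|k-1}\right)^T + Q_k$$

where $Q_k$ is the process noise covariance, and $W_i^{(c)}$ and $W_i^{(m)}$ are the weights for the covariance and mean, respectively, defined as

$$W_0^{(m)} = \frac{\lambda}{L+\lambda}, \qquad W_0^{(c)} = \frac{\lambda}{L+\lambda} + (1 - \alpha^2 + \beta), \qquad W_i^{(m)} = W_i^{(c)} = \frac{1}{2(L+\lambda)}, \quad i = 1, \dots, 2L$$

Here, $\beta$ is a constant used to incorporate prior knowledge of the distribution of $x$ (e.g., choosing $\beta = 2$ is optimal for a Gaussian distribution) [39].

Measurement Update: Propagate the sigma points through the nonlinear measurement function

$$\mathcal{Y}_{i,k|k-1} = h(\chi_{i,k|k-1})$$

Calculate the predicted measurement mean $\hat{y}_{k|k-1}$ and its covariance $P_{yy}$

$$\hat{y}_{k|k-1} = \sum_{i=0}^{2L} W_i^{(m)} \mathcal{Y}_{i,k|k-1}$$

$$P_{yy} = \sum_{i=0}^{2L} W_i^{(c)} \left(\mathcal{Y}_{i,k|k-1} - \hat{y}_{k|k-1}\right)\left(\mathcal{Y}_{i,k|k-1} - \hat{y}_{k|k-1}\right)^T + R_k$$

where $R_k$ is the measurement noise covariance.

Kalman Gain and State Update: Compute the cross-covariance $P_{xy}$ and the Kalman gain $K_k$

$$P_{xy} = \sum_{i=0}^{2L} W_i^{(c)} \left(\chi_{i,k|k-1} - \hat{x}_{k|k-1}\right)\left(\mathcal{Y}_{i,k|k-1} - \hat{y}_{k|k-1}\right)^T, \qquad K_k = P_{xy} P_{yy}^{-1}$$

Finally, update the state estimate $\hat{x}_{k|k}$ and the covariance $P_{k|k}$ using the actual measurement $y_k$

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k \left(y_k - \hat{y}_{k|k-1}\right), \qquad P_{k|k} = P_{k|k-1} - K_k P_{yy} K_k^T$$

These steps collectively define the Unscented Kalman Filter (UKF) algorithm, where each stage, from sigma point generation to the final state update, is executed deterministically based on the underlying nonlinear functions. This structured process enables the UKF to deliver recursive state estimates without requiring explicit Jacobians, thereby simplifying implementation while preserving higher-order accuracy.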
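The UKF steps above can be sketched for a scalar state (state dimension 1). This is a simplified illustration under assumptions made here: the function names, the default scaling values `alpha=1.0`, `beta=2.0`, `kappa=2.0`, and the choice to reuse the propagated sigma points in the measurement update (rather than redrawing them) are choices for the example, not settings taken from the cited references.

```python
import math


def sigma_points(x, p, alpha=1.0, beta=2.0, kappa=2.0):
    """Sigma points and weights for a scalar state (dimension 1)."""
    dim = 1
    lam = alpha ** 2 * (dim + kappa) - dim
    spread = math.sqrt((dim + lam) * p)
    points = [x, x + spread, x - spread]
    w_mean = [lam / (dim + lam)] + [1.0 / (2.0 * (dim + lam))] * 2
    w_cov = [w_mean[0] + (1.0 - alpha ** 2 + beta)] + w_mean[1:]
    return points, w_mean, w_cov


def ukf_step(x_est, p_est, u, y, f, h, q, r):
    """One scalar UKF cycle; no Jacobians are required."""
    points, w_mean, w_cov = sigma_points(x_est, p_est)
    # State prediction: propagate the sigma points through f.
    fx = [f(s, u) for s in points]
    x_pred = sum(w * s for w, s in zip(w_mean, fx))
    p_pred = sum(w * (s - x_pred) ** 2 for w, s in zip(w_cov, fx)) + q
    # Measurement update: propagate the predicted points through h.
    hy = [h(s) for s in fx]
    y_pred = sum(w * s for w, s in zip(w_mean, hy))
    p_yy = sum(w * (s - y_pred) ** 2 for w, s in zip(w_cov, hy)) + r
    p_xy = sum(w * (a - x_pred) * (b - y_pred) for w, a, b in zip(w_cov, fx, hy))
    k = p_xy / p_yy
    return x_pred + k * (y - y_pred), p_pred - k * p_yy * k
```

For linear `f` and `h` the weighted sums recover the exact mean and covariance, so the step coincides with the standard Kalman filter; the sigma points only change the result when the functions are nonlinear.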

2.1.3. Cubature Kalman Filter

The Cubature Kalman Filter (CKF) is an advanced variant of the Kalman Filter designed to handle the complexities of nonlinear systems, particularly in high-dimensional spaces. Unlike the Unscented Kalman Filter, which relies on sigma points, the CKF employs a cubature rule based on spherical-radial integration, enhancing its ability to manage system nonlinearities and thereby improving estimation performance in complex scenarios [40,41]. The following presents a detailed formulation of the CKF, including its prediction and correction phases for a general nonlinear discrete-time system.

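For a nonlinear discrete-time system, the CKF operates through the following steps.

Initialization: Initialize state and covariance estimates

$$\hat{x}_{0|0} = E[x_0], \qquad P_{0|0} = E\left[(x_0 - \hat{x}_{0|0})(x_0 - \hat{x}_{0|0})^T\right]$$

Prediction Step: Compute the cubature points $X_{i,k-1|k-1}$ based on the current state estimate $\hat{x}_{k-1|k-1}$ and its covariance $P_{k-1|k-1} = S_{k-1|k-1} S_{k-1|k-1}^T$

$$X_{i,k-1|k-1} = S_{k-1|k-1}\,\xi_i + \hat{x}_{k-1|k-1}, \qquad i = 1, \dots, m$$

where $\xi_i$ are the cubature points chosen according to the spherical-radial cubature rule [41]. The cubature points are then propagated through the state transition function $f$

$$X^{*}_{i,k|k-1} = f(X_{i,k-1|k-1}, u_k)$$

Compute the predicted state mean as

$$\hat{x}_{k|k-1} = \frac{1}{m}\sum_{i=1}^{m} X^{*}_{i,k|k-1}$$

where $m = 2n$ and $n$ is the state dimension.

Compute the predicted state covariance as

$$P_{k|k-1} = \frac{1}{m}\sum_{i=1}^{m} X^{*}_{i,k|k-1} X^{*T}_{i,k|k-1} - \hat{x}_{k|k-1}\hat{x}_{k|k-1}^T + Q_k$$

Correction Step: Propagate the cubature points, redrawn as $X_{i,k|k-1} = S_{k|k-1}\,\xi_i + \hat{x}_{k|k-1}$ with $P_{k|k-1} = S_{k|k-1} S_{k|k-1}^T$, through the measurement function $h$

$$Y_{i,k|k-1} = h(X_{i,k|k-1})$$

Calculate the predicted measurement mean as

$$\hat{y}_{k|k-1} = \frac{1}{m}\sum_{i=1}^{m} Y_{i,k|k-1}$$

Next, compute the innovation covariance $P_{yy}$ and cross-covariance $P_{xy}$ as

$$P_{yy} = \frac{1}{m}\sum_{i=1}^{m} Y_{i,k|k-1} Y_{i,k|k-1}^T - \hat{y}_{k|k-1}\hat{y}_{k|k-1}^T + R_k$$

$$P_{xy} = \frac{1}{m}\sum_{i=1}^{m} X_{i,k|k-1} Y_{i,k|k-1}^T - \hat{x}_{k|k-1}\hat{y}_{k|k-1}^T$$

Calculate the Kalman gain $K_k$ as

$$K_k = P_{xy} P_{yy}^{-1}$$

Finally, the updated state estimate and the state covariance are

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k\left(y_k - \hat{y}_{k|k-1}\right), \qquad P_{k|k} = P_{k|k-1} - K_k P_{yy} K_k^T$$

The CKF algorithm, as detailed above, defines a structured and recursive process for nonlinear state estimation. By leveraging numerical integration through deterministic cubature points, it enables consistent prediction and correction without the need for linear approximations or heuristic sampling, making it suitable for a broad range of complex nonlinear system applications.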
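The cubature-point construction used in the prediction step can be sketched as follows. This is a minimal pure-Python illustration under assumptions made here: the helper names `cholesky`, `cubature_points`, and `cubature_mean_cov` are hypothetical, the square-root factor is taken to be the lower Cholesky factor, and the points are the standard third-degree spherical-radial set (plus and minus the square root of the state dimension times each column of the factor, all equally weighted).

```python
import math


def cholesky(p):
    """Lower-triangular factor S with S S^T = P (P symmetric positive definite)."""
    n = len(p)
    s = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = p[i][j] - sum(s[i][k] * s[j][k] for k in range(j))
            s[i][j] = math.sqrt(acc) if i == j else acc / s[j][j]
    return s


def cubature_points(x, p):
    """Return the 2n equally weighted cubature points for mean x and covariance P."""
    n = len(x)
    s = cholesky(p)
    scale = math.sqrt(n)
    points = []
    for sign in (1.0, -1.0):
        for col in range(n):
            # Shift the mean along column `col` of S, scaled by +/- sqrt(n).
            points.append([x[row] + sign * scale * s[row][col] for row in range(n)])
    return points


def cubature_mean_cov(points):
    """Equal-weight mean and covariance of a point set."""
    m, n = len(points), len(points[0])
    mean = [sum(pt[r] for pt in points) / m for r in range(n)]
    cov = [[sum((pt[a] - mean[a]) * (pt[b] - mean[b]) for pt in points) / m
            for b in range(n)] for a in range(n)]
    return mean, cov
```

Averaging the untransformed points returns the original mean and covariance, which is a quick sanity check before the points are pushed through the state transition or measurement function.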

2.1.4. Ensemble Kalman Filter

The Ensemble Kalman Filter (EnKF), introduced by Evensen in 1994 [42,43], is a Monte Carlo approximation of the traditional Kalman Filter, intended for high-dimensional, nonlinear systems applications [24]. Departing from the standard Kalman filter, which assumes Gaussian distributions to compute a single state estimate, the EnKF employs a large ensemble of state estimates to approximate the distribution of possible states. This produces more robust predictions in complex and uncertain environments [44,45]. The four primary steps in the EnKF process are: initialization, prediction, update, and iteration.

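Initialization: An ensemble of $N$ members is initialized, where each member represents a possible state of the system.

Prediction Step: Propagate each ensemble member $x^{a}_{i,k-1}$ using the state dynamics

$$x^{f}_{i,k} = f(x^{a}_{i,k-1}, u_k) + w_{i,k}, \qquad i = 1, \dots, N$$

where $f$ represents the system dynamics and $w_{i,k}$ the process noise for the $i$th ensemble member.

Update Step: Update each predicted ensemble member using the observation

$$x^{a}_{i,k} = x^{f}_{i,k} + K_k\left(y_k + v_{i,k} - H x^{f}_{i,k}\right)$$

where $x^{a}_{i,k}$ is the updated ensemble member, $K_k$ is the Kalman gain, $y_k$ is the output, $H$ is the observation operator, and $v_{i,k}$ is the measurement noise.

Kalman Gain Calculation: Compute the Kalman gain using the forecast covariance matrix $P^{f}_k$ derived from the ensemble members

$$K_k = P^{f}_k H^T \left(H P^{f}_k H^T + R_k\right)^{-1}$$

The forecast covariance matrix is approximated by

$$P^{f}_k = \frac{1}{N-1}\sum_{i=1}^{N} \left(x^{f}_{i,k} - \bar{x}^{f}_k\right)\left(x^{f}_{i,k} - \bar{x}^{f}_k\right)^T$$

where $\bar{x}^{f}_k$ is the sample mean of the forecasted ensemble

$$\bar{x}^{f}_k = \frac{1}{N}\sum_{i=1}^{N} x^{f}_{i,k}$$

Iteration: The updated ensemble is then used as the new initial condition for the next iteration of the process.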
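The prediction, gain, and update steps above can be sketched for a scalar state with a linear observation. This is an illustrative sketch under assumptions made here: the function name `enkf_step`, the perturbed-observation form of the update, and the argument names (`h_lin` for a scalar observation operator, `q_std` and `r_std` for noise standard deviations, `rng` for a `random.Random` instance) are choices for the example.

```python
import random


def enkf_step(ensemble, u, y, f, h_lin, q_std, r_std, rng):
    """One stochastic EnKF cycle for a scalar state observed as h_lin * x."""
    n = len(ensemble)
    # Prediction: propagate every member with its own process-noise draw.
    forecast = [f(x, u) + rng.gauss(0.0, q_std) for x in ensemble]
    # Forecast covariance approximated from the ensemble spread.
    mean_f = sum(forecast) / n
    p_f = sum((x - mean_f) ** 2 for x in forecast) / (n - 1)
    # Kalman gain computed from the sample covariance.
    k = p_f * h_lin / (h_lin * p_f * h_lin + r_std ** 2)
    # Update: each member sees the observation plus its own noise draw.
    return [x + k * (y + rng.gauss(0.0, r_std) - h_lin * x) for x in forecast]


if __name__ == "__main__":
    rng = random.Random(0)
    members = [rng.gauss(0.0, 1.0) for _ in range(200)]
    members = enkf_step(members, 0.0, 5.0, lambda x, u: x, 1.0, 0.0, 0.5, rng)
    print(f"posterior mean = {sum(members) / len(members):.3f}")
```

Passing the random generator explicitly keeps runs reproducible; the returned list serves as the initial ensemble for the next cycle.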

2.2. Joint State and Parameter Estimation Using Kalman-Based Algorithms

In joint state and parameter estimation, the system’s state vector is augmented to include both the dynamic states and the unknown parameters to be estimated. The standard filter equations are then applied, modified to facilitate the simultaneous estimation of both quantities, states and parameters, from system measurements.

Let $x_k$ denote the state vector and $\theta_k$ the model parameters vector; the augmented state vector is then defined as

$$z_k = \begin{bmatrix} x_k \\ \theta_k \end{bmatrix}$$

Note: When a system includes unknown parameters, even a model that is linear in the state variables may lead to a nonlinear estimation problem once the parameters are treated as additional states. This occurs because the parameters often appear multiplicatively in the system matrices, introducing nonlinear couplings between the original states and the parameters in the augmented model.

By incorporating the dynamics of the parameters into the system and measurement models, the updated representation becomes

$$z_k = \tilde{F}_k z_{k-1} + \tilde{G}_k u_k + \tilde{w}_k$$

$$y_k = \tilde{H}_k z_k + v_k$$

where $\tilde{F}_k$, $\tilde{G}_k$, and $\tilde{H}_k$ are the augmented state transition, input, and observation matrices, respectively, and $\tilde{w}_k$ is the augmented process noise vector. These matrices explicitly combine the nominal system matrices with the parameter-dependent components, embedding the parameter effects directly within the dynamic update. Specifically, $\tilde{F}_k$ incorporates both the system dynamics and parameter coupling terms, $\tilde{G}_k$ represents the input mapping including parameter dependencies, and $\tilde{H}_k$ defines how both the states and parameters influence the measured outputs. This representation preserves the discrete-time state-space structure while enabling simultaneous estimation of both system states and parameters within a unified framework.
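A small example makes the augmentation concrete. The sketch below assumes a hypothetical scalar plant `x_next = a*x + u` with measurement `y = x + noise`, where the coefficient `a` is unknown; the function name `joint_ekf_step` and the tuning arguments `q_x`, `q_a`, `r` are illustrative. Because `a` multiplies `x`, the augmented model is nonlinear even though the plant is linear in `x`, so an EKF-style Jacobian is used.

```python
def joint_ekf_step(z, p, u, y, q_x, q_a, r):
    """One EKF cycle on the augmented state z = [x, a]."""
    x, a = z
    # Prediction: the state follows the model, the parameter is held constant.
    z_pred = [a * x + u, a]
    # Jacobian of the augmented dynamics; the off-diagonal x couples state and parameter.
    jac = [[a, x], [0.0, 1.0]]
    fp = [[sum(jac[i][m] * p[m][j] for m in range(2)) for j in range(2)] for i in range(2)]
    p_pred = [[sum(fp[i][m] * jac[j][m] for m in range(2)) for j in range(2)] for i in range(2)]
    p_pred[0][0] += q_x
    p_pred[1][1] += q_a
    # Update: only x is measured (observation row [1, 0]).
    s = p_pred[0][0] + r
    k = [p_pred[0][0] / s, p_pred[1][0] / s]
    resid = y - z_pred[0]
    z_new = [z_pred[i] + k[i] * resid for i in range(2)]
    p_new = [[p_pred[i][j] - k[i] * s * k[j] for j in range(2)] for i in range(2)]
    return z_new, p_new


if __name__ == "__main__":
    # Hypothetical noise-free run with true a = 0.9 and a constant input.
    a_true, x_true = 0.9, 1.0
    z, p = [1.0, 0.5], [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(100):
        x_true = a_true * x_true + 1.0
        z, p = joint_ekf_step(z, p, 1.0, x_true, 1e-4, 1e-6, 1e-2)
    print(f"estimated a = {z[1]:.3f}")
```

The parameter is never measured directly; it is corrected only through the cross-covariance term `p_pred[1][0]`, which the Jacobian's off-diagonal entry builds up.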

Joint state and parameter estimation is widely applied in engineering. For both linear and nonlinear systems, the Joint Extended Kalman Filter (JEKF) is a commonly used method. A typical application is in battery management systems, where the JEKF is used to estimate the state of charge (SOC) and various model parameters [46]. However, in systems with significant nonlinearities, the performance of the JEKF may degrade due to the inaccuracies introduced by the linearization and Jacobian approximation.

To overcome these limitations, the Joint Unscented Kalman Filter (JUKF) is often employed as it avoids linearization by propagating sigma points through the nonlinear system models, capturing the true mean and covariance of the state distribution more accurately [39]. It has been successfully employed in many research studies. For instance, in [47], the JUKF was used for simultaneous state and parameter estimation in vehicle dynamics, enhancing the performance of driver assistance systems under nonlinear conditions. Similarly, in [48], the JUKF was applied to Managed Pressure Drilling (MPD) to estimate downhole states and drilling parameters using topside measurements, demonstrating reliable performance even during transient drilling operations.

An alternative to the JUKF is the Joint Cubature Kalman Filter (JCKF), which replaces sigma points with cubature points based on spherical-radial integration rules [41]. This method retains the benefits of derivative-free estimation while improving numerical stability and accuracy. In [49], the JCKF was employed for estimating vehicle states and road-dependent parameters, such as tire-road friction coefficients, which are critical for adaptive vehicle control under varying road conditions.

The Joint Ensemble Kalman Filter (JEnKF) leverages ensemble-based methods for joint estimation in high-dimensional, nonlinear systems. Rather than relying on analytical approximations of distributions, the JEnKF uses a Monte Carlo ensemble to represent the posterior distribution. In [44], the JEnKF was employed to estimate soil moisture states and land surface parameters, achieving high estimation accuracy. Additional applications of the JEnKF in joint state-parameter estimation can be found in [44,50,51,52].

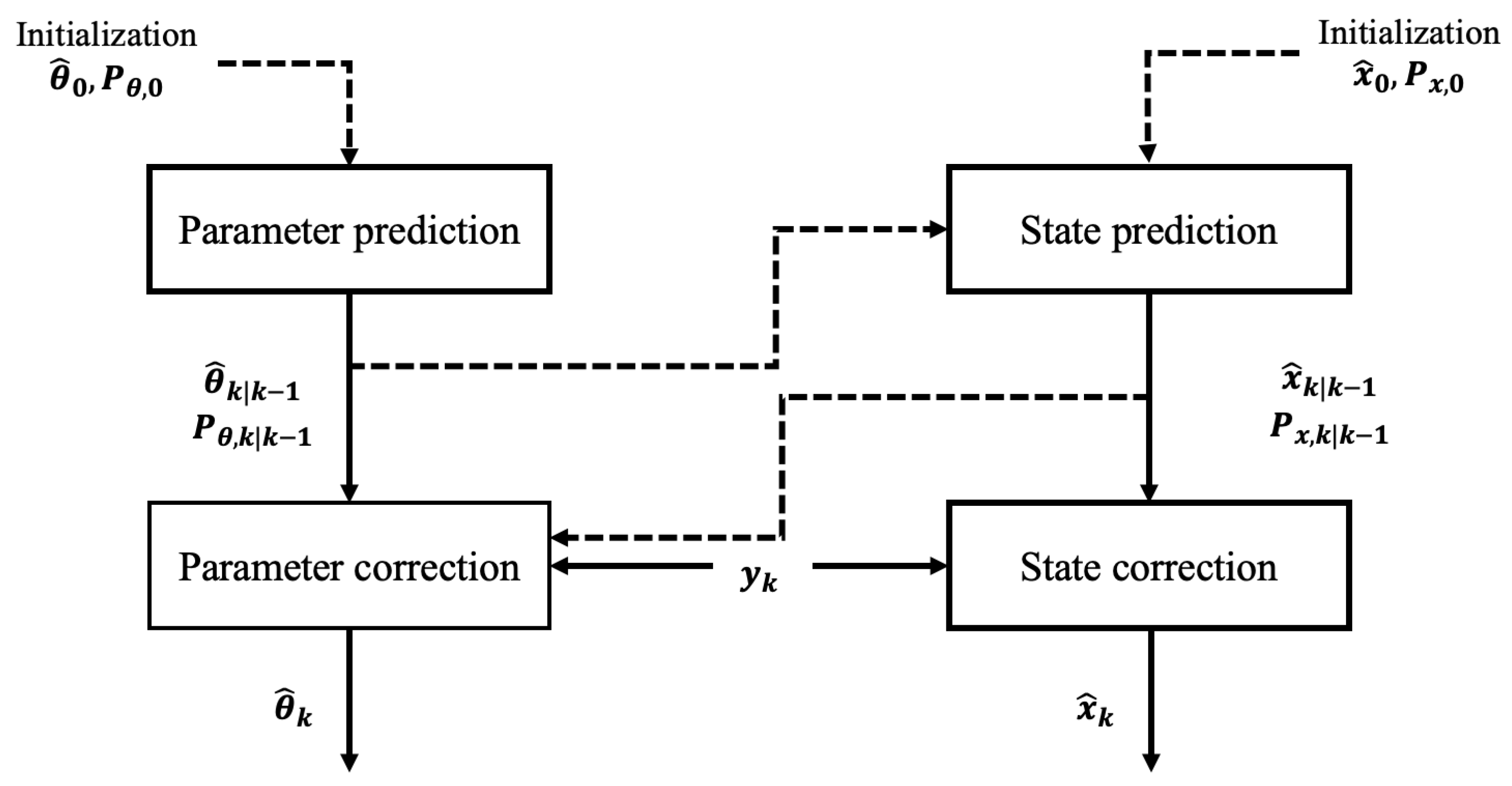

2.3. Dual State and Parameter Estimation

In dual estimation, two separate filters operate in parallel: one for state estimation and the other for model parameter identification. The state filter utilizes system measurements and the current model to estimate the dynamic state variables, while the parameter filter relies on the updated state estimates to refine the parameter estimates. This structure enables a decoupled yet interactive estimation process that enhances adaptability and robustness. A schematic of the dual estimation framework is illustrated in Figure 1.
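The interaction between the two filters can be sketched for a hypothetical scalar plant `x_next = a*x + u`, `y = x + noise`, with unknown coefficient `a`. This is a simplified illustration under assumptions made here: the function name `dual_kf_step`, the random-walk model for the parameter, and the use of the state filter's most recent estimate as the parameter filter's regressor are choices for the example rather than a specific published dual filter.

```python
def dual_kf_step(x_est, p_x, a_est, p_a, u, y, q_x, q_a, r):
    """One dual-filter cycle: a state filter and a parameter filter run side by side."""
    # State filter: treats the current parameter estimate as if it were exact.
    x_pred = a_est * x_est + u
    p_x_pred = a_est * p_x * a_est + q_x
    k_x = p_x_pred / (p_x_pred + r)
    x_new = x_pred + k_x * (y - x_pred)
    p_x_new = (1.0 - k_x) * p_x_pred
    # Parameter filter: random-walk model for a; the predicted output
    # a_est * x_est + u has sensitivity x_est with respect to a.
    p_a_pred = p_a + q_a
    h_a = x_est
    k_a = p_a_pred * h_a / (h_a * p_a_pred * h_a + r)
    a_new = a_est + k_a * (y - x_pred)
    p_a_new = (1.0 - k_a * h_a) * p_a_pred
    return x_new, p_x_new, a_new, p_a_new


if __name__ == "__main__":
    # Hypothetical noise-free run with true a = 0.9 and a constant input.
    a_true, x_true = 0.9, 1.0
    x_est, p_x, a_est, p_a = 1.0, 1.0, 0.5, 1.0
    for _ in range(100):
        x_true = a_true * x_true + 1.0
        x_est, p_x, a_est, p_a = dual_kf_step(x_est, p_x, a_est, p_a, 1.0, x_true, 1e-4, 1e-6, 1e-2)
    print(f"estimated a = {a_est:.3f}")
```

Each filter keeps its own covariance (`p_x` and `p_a`), so the parameter branch can be frozen by simply skipping its lines once the estimate has settled.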

The dual estimation approach has been applied successfully in various engineering domains. For example, in [53], the Dual Extended Kalman Filter (DEKF) was employed to estimate both vehicle states and parameters, specifically tire cornering stiffness, which changes under different driving conditions. By allowing continuous adaptation of the parameters as vehicle dynamics evolved, the method dramatically improved state estimation accuracy and enhanced real-time vehicle control performance.

Similarly, in [54] the Dual Unscented Kalman Filter (DUKF) was utilized for state and parameter estimation in surface-atmosphere exchange processes, addressing missing data in net ecosystem CO₂ exchange (NEE). The DUKF provided continuous updates that outperformed conventional gap-filling techniques by improving estimation accuracy and reducing subjectivity, while effectively accounting for uncertainties in both model structure and measurements.

In [55], the Dual Cubature Kalman Filter (DCKF) was utilized to estimate the state of charge and circuit model parameters in lithium-ion batteries, delivering accurate and reliable results under varying operational conditions. Additionally, in [56], the Dual Ensemble Kalman Filter (DEnKF) was implemented to enhance streamflow forecasting, enabling simultaneous estimation of hydrological states and parameters, such as soil moisture and storage capacities, within a dynamic model. This approach addressed limitations of traditional calibration techniques by enabling continuous updates in response to environmental changes.

2.4. Comparison

Both joint and dual state-parameter estimation methods aim to recover the same quantities, namely, the system states and the unknown parameters, from noisy measurements. The distinction lies not in the problem formulation but in the computational architecture used to achieve this goal, specifically: (1) In joint estimation, the state and parameter vectors are combined into a single augmented state vector, and one unified filter (e.g., EKF, UKF, CKF, or EnKF) simultaneously estimates them within a single recursive process. (2) In dual estimation, two filters operate in parallel: one estimates the states using the current parameter estimates, while the other updates the parameters based on the most recent state estimates. This decoupling reduces filter coupling errors and often improves numerical stability, at the cost of increased computational complexity and a possible time lag between state and parameter updates.

Numerous research studies have compared the performance of joint and dual estimation approaches across different applications, including chemical processes, vehicle dynamics, and environmental systems [44,49,53,54,56,57,58,59,60]. In [57] the Joint Extended Kalman Filter (JEKF) was evaluated against the Dual Extended Kalman Filter (DEKF) for simultaneous estimation of states and parameters in a highly nonlinear continuous stirred tank reactor (CSTR). The results highlighted the advantages of DEKF, particularly in reducing the risk of filter divergence by decoupling the state and parameter estimation processes. Moreover, the DEKF reduces computational load by allowing the parameter estimator to be turned off once the parameters converge to their optimal values, without compromising the accuracy of state estimation. This feature is particularly beneficial in systems where parameters stabilize over time, thus improving filter efficiency.

Another study [58] proposed a modified version of the Dual Unscented Kalman Filter (MDUKF). Unlike the standard DUKF, which continuously estimates state and parameter variables separately, the MDUKF uses a selective update mechanism that turns the parameter estimator off once a satisfactory accuracy level is reached. This strategy significantly reduces computational load while improving estimation performance. The effectiveness of this approach was demonstrated in the dual estimation of vehicle states and critical parameters such as side-slip angle, lateral tire-road forces, and the tire-road friction coefficient.

Similarly, the performance of the JCKF against the DCKF was assessed in [49]. The simulation results indicate that while both methods achieve accurate estimations, the DCKF consistently surpasses the JCKF in terms of accuracy, computational efficiency, and robustness, especially under varying conditions. These findings make the dual estimation approach the preferred choice for real-time applications, especially in scenarios where environmental conditions are unpredictable.

A concise summary of the main characteristics, advantages, and limitations of the joint and dual Kalman-based estimation methods is provided in Table 2.

2.5. Challenges with Kalman-Based Methods

The performance of Kalman-based filters heavily depends on the appropriate selection of the process and measurement noise covariance matrices, $Q$ and $R$ [1]. These matrices define the statistical properties of process and measurement noise, respectively. Achieving the right balance is crucial: if the covariance values are set too low, the filter may become overly sensitive to disturbances, leading to inaccurate estimates. Conversely, excessively high values can result in overly conservative estimates, diminishing the filter’s responsiveness and adaptability [1].
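How the noise covariances shape filter behavior can be seen in the steady-state gain of a scalar Kalman filter. The sketch below is an illustration under assumptions made here (the function name `steady_state_gain`, a scalar random-walk model with `f = h = 1` by default, and a fixed iteration count); it simply iterates the covariance recursion until the gain settles.

```python
def steady_state_gain(q, r, f=1.0, h=1.0, iterations=500):
    """Iterate the scalar covariance recursion and return the settled Kalman gain."""
    p = 1.0
    k = 0.0
    for _ in range(iterations):
        p_pred = f * p * f + q                  # predicted error covariance
        k = p_pred * h / (h * p_pred * h + r)   # Kalman gain
        p = (1.0 - k * h) * p_pred              # updated error covariance
    return k


if __name__ == "__main__":
    for q in (0.01, 1.0, 100.0):
        print(f"q={q:>6}: steady-state gain = {steady_state_gain(q, 1.0):.3f}")
```

In this toy setting only the ratio of `q` to `r` matters: a small ratio gives a small gain (the filter trusts the model and reacts slowly), while a large ratio pushes the gain toward one (the filter follows each measurement closely).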

One major challenge is accurately modeling the complex and nonlinear noise sources that are prevalent in real-world applications [61]. Noise characteristics often change over time and are affected by environmental factors and operating conditions, making it challenging to accurately capture their statistical properties. Inaccurate noise modeling can result in incorrect assumptions about system behavior, leading to degraded filter performance or even divergence [62,63].

Another challenge lies in tuning critical algorithm-specific parameters, such as the unscented transform parameters ($\alpha$, $\beta$, and $\kappa$) for the Unscented Kalman Filter (UKF) [64,65], the optimal placement and weights of cubature points for the Cubature Kalman Filter (CKF), and the appropriate number of ensemble members for the Ensemble Kalman Filter (EnKF). These parameters are often tuned manually with minimal theoretical guidance, relying on trial and error until satisfactory filter performance is achieved. However, this ad hoc approach is time-consuming and does not guarantee optimal parameter selection, especially given the large number of parameters involved [46].

To address these challenges, several research efforts have proposed systematic approaches [66,67,68]. For example, a reference recursive recipe (RRR) has been developed for tuning Kalman filter covariance matrices, which iteratively updates the matrices based on sample statistics derived from the filter [69,70]. Another approach utilizes particle swarm optimization (PSO) algorithms to fine-tune filter parameters. These algorithms iteratively adjust the covariance matrices at each time step by optimizing a predefined cost function, as demonstrated in [1,71,72]; in [72], for instance, the cost function is the mean squared error. Additionally, Ref. [64] introduces a systematic framework for tuning the scaling parameters $\alpha$, $\beta$, and $\kappa$ of the unscented transform in the UKF, providing a structured approach to enhance filter performance while reducing reliance on manual tuning.

These advancements provide promising alternatives to traditional filter parameter selection methods, enabling more efficient and accurate selection and enhancing the robustness of Kalman-based filters in practical applications.

3. Simultaneous States and Parameters Estimation: Observer-Based Methods

Observer-based approaches provide an alternative framework to Kalman filtering for the simultaneous estimation of system states and parameters. These methods are grounded in deterministic system theory and are often favored for their conceptual simplicity, reduced computational requirements, and ease of implementation in certain classes of systems. This section presents a range of observer-based techniques that have been explored in the literature for simultaneous state and parameter estimation. For consistency with the formulations in Section 2, all observer-based approaches discussed in this section are derived from the general discrete-time nonlinear system model (3) and (4), with specific assumptions (linearity, time-variation, or parameter dependence) applied as required by each observer type.

3.1. Observer-Based Methods for Simultaneous State and Parameters Estimation

State estimation via Luenberger observers was first introduced by D. Luenberger [14]. It typically has the following form

$$\hat{x}_{k+1} = A\hat{x}_k + B u_k + L\left(y_k - C\hat{x}_k\right) \tag{50}$$

where $\hat{x}_k$ is the estimated state, $u_k$ is the control input, $y_k$ is the system output, $A$ and $B$ are the system matrices, and $C$ is the output matrix. $L$ is the observer gain matrix, selected to place the poles of the error dynamic matrix $(A - LC)$ at a desired location determined by the desired convergence rate of the estimations. One major assumption in the design of the Luenberger observer is that the system model (i.e., the $A$, $B$, and $C$ matrices) matches the true plant dynamics (i.e., Equation (1)).

Note: To maintain consistency with the notation used in Section 2, the matrices $A$, $B$, and $C$ in Equation (50) correspond respectively to the state transition, input, and observation matrices $F_k$, $G_k$, and $H_k$ in Equation (1).
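The observer recursion and the role of the gain can be sketched for a two-state system. This is an illustrative example under assumptions made here: the plant is a hypothetical discretized double integrator in which only the first state is measured, and the gain `[1.0, 2.5]` was chosen by hand so that both eigenvalues of the error dynamic matrix lie at 0.5; the function names are likewise hypothetical.

```python
def observer_step(x_hat, u, y, a, b, c, gain):
    """x_hat_next = A x_hat + B u + L (y - C x_hat) for a two-state system."""
    y_hat = c[0] * x_hat[0] + c[1] * x_hat[1]
    err = y - y_hat  # output error that drives the correction
    return [
        a[i][0] * x_hat[0] + a[i][1] * x_hat[1] + b[i] * u + gain[i] * err
        for i in range(2)
    ]


def simulate(steps=60):
    """Run plant and observer side by side from different initial conditions."""
    a = [[1.0, 0.1], [0.0, 1.0]]   # hypothetical double integrator, 0.1 s sample time
    b = [0.005, 0.1]
    c = [1.0, 0.0]                 # only the first state is measured
    gain = [1.0, 2.5]              # places both poles of A - L C at 0.5
    x = [1.0, 1.0]                 # true initial state, unknown to the observer
    x_hat = [0.0, 0.0]
    for _ in range(steps):
        u = 0.0
        y = c[0] * x[0] + c[1] * x[1]
        x_hat = observer_step(x_hat, u, y, a, b, c, gain)
        x = [a[i][0] * x[0] + a[i][1] * x[1] + b[i] * u for i in range(2)]
    return x, x_hat


if __name__ == "__main__":
    x, x_hat = simulate()
    print(f"true state = {x}, estimate = {x_hat}")
```

Because the observer uses the same matrices as the plant, the estimation error evolves autonomously under the error dynamic matrix and decays at the rate set by its eigenvalues; a model mismatch would break this property.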

The application of Luenberger observers for simultaneous state and parameter estimation in linear time-invariant systems was first explored in the early 1970s [73]. The fundamental approach involved augmenting the observer with integrators to estimate constant parameters alongside system states. Later developments enabled the application of these methods to time-varying systems [74].

For linear systems, a simple approach is to augment the state vector with the unknown parameters using an augmented Luenberger observer [75]. An alternative approach involves organizing the unknown parameters into structured uncertainty matrices, which are then identified using a modified Luenberger observer architecture in combination with a parameter estimation algorithm to simultaneously perform state and parameter estimation [15].

For nonlinear systems, adaptive observers [76], high-gain observers [77], and sliding mode observers are popular. These techniques provide robust alternatives for handling system nonlinearities and uncertainties. A common assumption across all these methods is that the parameter vector $\theta$ is observable from the available measurements, ensuring the system’s states and parameters can be effectively estimated.

The reviewed literature demonstrates a variety of observer-based approaches for simultaneous state and parameter estimation, offering effective alternatives to stochastic filtering methods, especially in systems where model structure is well-understood and noise characteristics are not dominant.

3.1.1. Augmented Luenberger Observer

Consider the discrete-time linear system defined as

$$x_{k+1} = A x_k + B u_k + E\,\theta_k \tag{51}$$

$$y_k = C x_k \tag{52}$$

where $x_k$ is the state vector, $u_k$ is the control input, $y_k$ is the measurement output, and $\theta_k$ is the vector of unknown parameters. The matrices $A$, $B$, and $C$ represent the system dynamics, input, and output, respectively, while $E$ is the parameter matrix, capturing the influence of the parameters on the system dynamics.

In this method, the modified observer equation for the augmented system can be written as [75]

$$\hat{z}_{k+1} = A_a \hat{z}_k + B_a u_k + L_a\left(y_k - C_a \hat{z}_k\right) \tag{53}$$

where $\hat{z}_k = [\hat{x}_k^T \;\; \hat{\theta}_k^T]^T$ is the estimate of the augmented state vector, and $L_a$ is the observer gain matrix. The gain $L_a$ is designed to ensure that the matrix $(A_a - L_a C_a)$ is Schur stable, i.e., that all of its eigenvalues lie strictly inside the unit circle. As in the standard Luenberger observer, the output error term $(y_k - C_a \hat{z}_k)$ is used to correct the estimates, driving them towards the true values. The convergence rate of the observer can be adjusted through the design of the observer gain $L_a$. Common design methods include pole placement and linear matrix inequalities (LMIs) [78,79].

The system matrices $A_a$, $B_a$, and $C_a$ of the augmented system in (53) can be written as

$$A_a = \begin{bmatrix} A & E \\ 0 & I \end{bmatrix}, \qquad B_a = \begin{bmatrix} B \\ 0 \end{bmatrix}, \qquad C_a = \begin{bmatrix} C & 0 \end{bmatrix} \tag{54}$$

It is commonly assumed that the influence of the parameters is incorporated into the augmented state matrix $A_a$. However, in practice, parameters may also affect the input matrix [15] or the output matrix [80]. This flexibility allows the observer structure to be adapted to different system representations. However, a notable drawback of this method is its restriction to linear systems, as described in Equations (51) and (52), along with the assumption that parameters are constant or vary slowly ($\theta_{k+1} \approx \theta_k$). When the unknown parameters undergo significant variations, this assumption can degrade performance, preventing the estimates from accurately tracking changes in the true parameters. Moreover, since many real-world systems are inherently nonlinear, directly applying this method often fails to achieve effective state-parameter estimation. In such cases, alternative approaches, such as adaptive observers, are required to overcome these challenges.
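The augmented observer described above can be sketched for a scalar plant with one constant unknown parameter entering additively. The numerical values, the pole locations, and the function names `observer_gain` and `run_augmented_observer` are assumptions chosen for illustration. The parameter is modeled as constant, which is exactly the assumption whose limits are discussed in the preceding paragraph.

```python
import numpy as np

def observer_gain(A, C, poles):
    """Ackermann's formula for a single-output pair (A, C)."""
    n = A.shape[0]
    O = np.vstack([C @ np.linalg.matrix_power(A, i) for i in range(n)])
    coeffs = np.poly(poles)
    phi = sum(c * np.linalg.matrix_power(A, n - i) for i, c in enumerate(coeffs))
    e_n = np.zeros((n, 1))
    e_n[-1, 0] = 1.0
    return phi @ np.linalg.solve(O, e_n)

def run_augmented_observer(theta_true=2.0, steps=80):
    # Hypothetical scalar plant: x[k+1] = a x[k] + b u[k] + e theta
    a, b, e = 0.9, 0.5, 1.0
    # Augmented model with state z = [x, theta] and theta[k+1] = theta[k]
    A_a = np.array([[a, e], [0.0, 1.0]])
    B_a = np.array([[b], [0.0]])
    C_a = np.array([[1.0, 0.0]])
    L_a = observer_gain(A_a, C_a, poles=[0.4, 0.5])  # Schur-stable error dynamics
    x = 0.0
    z_hat = np.zeros((2, 1))  # both the state and the parameter start at zero
    for k in range(steps):
        u = 1.0 if k >= 10 else 0.0
        y = np.array([[x]])
        z_hat = A_a @ z_hat + B_a * u + L_a @ (y - C_a @ z_hat)
        x = a * x + b * u + e * theta_true
    return z_hat[0, 0], z_hat[1, 0], x

x_hat, theta_hat, x = run_augmented_observer()
print("state error:", x - x_hat, "parameter estimate:", theta_hat)
```

In this sketch the parameter estimate converges because the pair $(A_a, C_a)$ is observable. If `theta_true` were changed during the run, the estimate would only follow at the rate allowed by the chosen poles.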

3.1.2. Observer-RLSE-CR Method

The Observer-RLSE-CR method integrates deterministic state estimation with adaptive parameter identification in systems with time-varying uncertainties. In this approach, the system matrices are expressed as the sum of a nominal and a perturbation matrix [15]

$$x_{k+1} = A x_k + B u_k \tag{55}$$

$$y_k = C x_k \tag{56}$$

where $x_k$ is the system state, $u_k$ is the system input, and $y_k$ is the measured system output. The system matrices are defined as $A = A_0 + \Delta A$ and $B = B_0 + \Delta B$, where $A_0$ and $B_0$ are the nominal system parameters, and $\Delta A$ and $\Delta B$ are the unknown time-varying parameters. The system output matrix, $C$, defines the individual state(s) that are measured.

The study in [15] proposes an Observer-RLSE-CR framework for the joint estimation of system states and parameters. The proposed algorithm integrates a modified Luenberger equation for state estimation, as shown in Equation (57), while concurrently estimating the uncertainty matrices online using recursive least squares estimation with covariance reset (RLSE-CR). Specifically,

$$\hat{x}_{k+1} = \left(A_0 + \Delta\hat{A}\right)\hat{x}_k + \left(B_0 + \Delta\hat{B}\right)u_k + L\left(y_k - C\hat{x}_k\right) \tag{57}$$

where $\hat{x}_k$ is the state estimate, $L$ is the observer gain, and $\Delta\hat{A}$ and $\Delta\hat{B}$ are the estimates of the system state and input uncertainty matrices, respectively. These time-varying uncertainty estimates are obtained online using the RLSE-CR algorithm, which recursively minimizes a least-squares cost function while implementing a covariance reset mechanism to improve estimator stability and responsiveness. The reset is activated once convergence criteria are met, thereby enhancing adaptability in the presence of parameter drift or abrupt changes.

The flowchart of the algorithm proposed in [15] is presented in Figure 2. Further insights into the parameter estimation process can be found in [15]. Additionally, a systematic method is introduced for selecting the sliding window size $p$ in the RLSE-CR procedure, based on the desired convergence rate and the system settling time.

A comparison between the Observer-RLSE-CR estimator, the augmented Kalman filter, and the Kalman filter coupled with RLSE is provided in [15]. While all the algorithms effectively estimate both states and parameters, the Observer-RLSE-CR has shown benefits over the Kalman-based filters in capturing uncertainties within the input uncertainty matrix $\Delta B$.

This approach presents several advantages over the augmented Luenberger observer. It not only enables accurate joint estimation of system states and time-varying parameters but also effectively mitigates noise introduced by measurement sensors. However, a key limitation of this method is its restriction to linear systems.
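To illustrate why a covariance reset helps track changing parameters, the following is a generic recursive least squares sketch with a reset rule. It is not the specific RLSE-CR algorithm of [15]: the scalar regression model, the reset criterion (trace of the covariance falling below `reset_tol`, used here as a simple proxy for convergence), and all numerical values are assumptions made for this example.

```python
import numpy as np

def rls_with_covariance_reset(Phi, y, p0=1e3, reset_tol=0.2, use_reset=True):
    """Recursive least squares for y[k] = phi[k]^T theta, with optional reset."""
    n = Phi.shape[1]
    theta = np.zeros(n)
    P = p0 * np.eye(n)
    history = []
    for phi, yk in zip(Phi, y):
        gain = P @ phi / (1.0 + phi @ P @ phi)
        theta = theta + gain * (yk - phi @ theta)
        P = P - np.outer(gain, phi @ P)
        # Assumed reset rule: once P is small the estimator has converged and
        # has become insensitive to new data, so restore the initial covariance.
        if use_reset and np.trace(P) < reset_tol:
            P = p0 * np.eye(n)
        history.append(theta.copy())
    return np.array(history)

def demo(steps=300, change_at=100):
    k = np.arange(steps)
    Phi = np.column_stack([np.ones(steps), np.sin(0.5 * k)])
    # Abrupt parameter change at k = change_at (noise-free data for clarity)
    theta_true = np.where(k[:, None] < change_at, [1.0, 2.0], [3.0, -1.0])
    y = np.sum(Phi * theta_true, axis=1)
    with_reset = rls_with_covariance_reset(Phi, y, use_reset=True)
    without_reset = rls_with_covariance_reset(Phi, y, use_reset=False)
    final_true = theta_true[-1]
    return (np.linalg.norm(with_reset[-1] - final_true),
            np.linalg.norm(without_reset[-1] - final_true))

err_reset, err_plain = demo()
print("final error with reset:", err_reset)
print("final error without reset:", err_plain)
```

Without the reset, the estimator keeps averaging over data from before the change and settles between the old and new parameter values. With the reset, the old data is progressively discarded.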

3.1.3. High Gain Observer

High-gain observers are widely used for nonlinear systems or systems with varying dynamics [81,82]. They employ a large observer gain to amplify the output error, enabling rapid corrections of the estimates and facilitating fast convergence to the true values. In linear systems, a standard augmented Luenberger observer formulation is generally adopted, with the observer gain set to a high level. For nonlinear systems, a more structured approach is required. This often involves transforming the system into an observable canonical form and defining an appropriate parameter estimation law before applying the high-gain observer.

Consider the discrete-time nonlinear system defined by

$$x_{k+1} = f(x_k, u_k, \theta_k) \tag{58}$$

$$y_k = h(x_k, \theta_k) \tag{59}$$

where $x_k$ is the system state, $u_k$ is the system input, $y_k$ is the system output, $\theta_k$ is the unknown parameter vector, and $f$ and $h$ are nonlinear functions describing the system dynamics and output behavior. The parameters may be constant or slowly varying such that $\theta_{k+1} = g(\theta_k)$, where $g$ is the dynamic law governing the parameter variation over time.

When dealing with constant parameters, i.e., $\theta_{k+1} = \theta_k$, the estimation process is simplified. An immediate method for the concurrent estimation of the system state and the unknown parameters is to augment the state vector with the unknown parameters [77]. Defining the augmented state vector as

$$z_k = \begin{bmatrix} x_k \\ \theta_k \end{bmatrix} \tag{60}$$

the high-gain observer for the nonlinear augmented system can be written as

$$\hat{z}_{k+1} = \begin{bmatrix} f(\hat{x}_k, u_k, \hat{\theta}_k) \\ \hat{\theta}_k \end{bmatrix} + L_a\left(y_k - h(\hat{x}_k, \hat{\theta}_k)\right) \tag{61}$$

where $L_a$ is the high-gain observer matrix for the augmented system. The innovation term $\left(y_k - h(\hat{x}_k, \hat{\theta}_k)\right)$ corrects the estimates based on output discrepancies.

For time-varying parameters, a separate adaptive update law is introduced to allow the observer to track parameter changes, as follows

$$\hat{\theta}_{k+1} = \hat{\theta}_k + \gamma_k\left(y_k - h(\hat{x}_k, \hat{\theta}_k)\right) \tag{62}$$

Here, $\gamma_k$ is a time-varying learning rate that controls the adaptation speed. The innovation term continues to serve as the correction signal, enabling the parameter estimate to follow dynamic changes effectively.

Meanwhile, the state estimates continue to evolve based on the standard high-gain observer formulation, using the estimated parameters to generate forward predictions, as follows

$$\hat{x}_{k+1} = f(\hat{x}_k, u_k, \hat{\theta}_k) + L_x\left(y_k - h(\hat{x}_k, \hat{\theta}_k)\right) \tag{63}$$

where $L_x$ is the high-gain matrix for the state estimation.

Another approach that can be adopted for joint state-parameter estimation is to concurrently employ a high-gain observer (i.e., Equation (63)) for state estimation and another estimator, such as a Kalman filter or recursive least squares, for parameter estimation.

One drawback of high-gain observers is their heightened sensitivity to modeling errors and measurement noise. The large gains can amplify noise, leading to reduced estimation accuracy and diminished performance. Additionally, high-gain observers often require the system to be expressed in a particular form, such as an observable canonical form or strict-feedback form, thereby limiting their applicability to certain systems.
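The trade-off between convergence speed and noise amplification can be illustrated on a scalar linear example. This is a deliberately simplified sketch, not a nonlinear high-gain design: the plant, the noise level, the two gain values, and the function name `observer_noise_tradeoff` are assumptions. For this plant the estimation error follows $e_{k+1} = (a - L)e_k - L v_k$, where $v_k$ is the measurement noise, so a larger gain shrinks the error pole but multiplies the noise more strongly.

```python
import numpy as np

def observer_noise_tradeoff(gain, a=0.95, b=0.05, noise_std=0.05,
                            steps=4000, seed=0):
    """Return (error-pole magnitude, steady-state error std) for a given gain."""
    rng = np.random.default_rng(seed)
    x, x_hat, u = 0.0, 1.0, 1.0
    errors = np.empty(steps)
    for k in range(steps):
        y = x + noise_std * rng.standard_normal()        # noisy measurement
        x_hat = a * x_hat + b * u + gain * (y - x_hat)   # observer update
        x = a * x + b * u                                # true plant
        errors[k] = x - x_hat
    pole = a - gain  # smaller magnitude means faster error decay
    # Use the second half of the run so the initial transient has died out
    return abs(pole), float(errors[steps // 2:].std())

for gain in (0.1, 0.9):
    pole, err_std = observer_noise_tradeoff(gain)
    print(f"gain={gain}: |pole|={pole:.2f}, steady-state error std={err_std:.4f}")
```

The higher gain gives a much faster error pole but a noticeably larger steady-state error spread, which is the behavior described in the paragraph above.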

3.1.4. Sliding Mode Observer

State estimation via sliding mode observers (SMOs) was first introduced in the mid-1980s [83,84]. For the system $x_{k+1} = A x_k + B u_k$, $y_k = C x_k$, the SMO has a form similar to the standard observer, with the innovation term replaced by a discontinuous switching function, as shown below [84]

$$\hat{x}_{k+1} = A\hat{x}_k + B u_k + K\,\mathrm{sign}\left(y_k - C\hat{x}_k\right)$$

The sliding mode term $K\,\mathrm{sign}\left(y_k - C\hat{x}_k\right)$ enhances the observer’s capacity to manage uncertainties and disturbances by introducing a sliding surface that forces the estimation error to converge to zero in finite time. However, in practical implementations, this mechanism can lead to undesirable chattering effects. To alleviate this, practitioners often replace the switching term with a saturation function, thereby reducing chattering while preserving robustness. Thus, the modified SMO can be written in the form

$$\hat{x}_{k+1} = A\hat{x}_k + B u_k + K\,\mathrm{sat}\left(e_k/\epsilon\right)$$

where $e_k = y_k - C\hat{x}_k$ is the estimation error and $\epsilon$ is a small positive threshold used to reduce the effect of chattering. The saturation function is defined as [85,86]

$$\mathrm{sat}\left(e_k/\epsilon\right) = \begin{cases} e_k/\epsilon, & |e_k| \le \epsilon \\ \mathrm{sign}(e_k), & |e_k| > \epsilon \end{cases}$$

Various strategies have been proposed to extend SMOs for joint estimation of states and parameters. One approach involves augmenting the state vector with unknown parameters, treating them as additional state variables to be estimated using a single SMO framework [87]. Another method employs dual SMO structures, with one observer dedicated to state estimation and the other to parameter identification, operating in parallel [88]. An alternative strategy uses an adaptive SMO for state estimation coupled with a separate online parameter estimator. In this configuration, the parameter estimator continuously updates the model parameters in real time, and the observer adapts accordingly [89].
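The effect of replacing the switching term with a saturation function can be seen in a small scalar sketch. The plant, the switching gain `rho`, the boundary-layer width `eps`, and the function names are assumptions for illustration only. The full state is measured here so that the error is directly visible.

```python
import numpy as np

def sat(e, eps):
    """Saturation: linear inside the boundary layer |e| <= eps, sign(e) outside."""
    return np.clip(e / eps, -1.0, 1.0)

def run_smo(switch, a=0.9, rho=0.2, steps=100):
    """Scalar observer x_hat[k+1] = a x_hat[k] + rho * switch(e[k]), with y = x."""
    x, x_hat = 1.0, 0.0
    errors = np.empty(steps)
    for k in range(steps):
        e = x - x_hat
        errors[k] = e
        x_hat = a * x_hat + rho * switch(e)
        x = a * x
    return errors

e_sign = run_smo(np.sign)                     # discontinuous switching term
e_sat = run_smo(lambda e: sat(e, eps=0.5))    # boundary-layer version

print("residual amplitude with sign:", np.max(np.abs(e_sign[-20:])))
print("residual amplitude with sat: ", np.max(np.abs(e_sat[-20:])))
```

With the discontinuous term, the error in this discrete-time example keeps flipping sign with a non-vanishing amplitude, which is the chattering described above. Inside the boundary layer, the saturated version behaves like a linear observer with gain `rho / eps`, and the error decays smoothly.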

3.1.5. Adaptive Observer

Adaptive observers are commonly used when dealing with nonlinear time-varying systems [74,90,91]. Consider the nonlinear system defined in Equations (58) and (59). The observer for the state estimates can be represented by the following equations

$$\hat{x}_{k+1} = f(\hat{x}_k, u_k, \hat{\theta}_k) + L_k e_k, \qquad \hat{y}_k = h(\hat{x}_k, \hat{\theta}_k)$$

where $L_k$ is the nonlinear, time-varying observer gain, and $e_k = y_k - \hat{y}_k$ is the estimation error. For adaptive observers, an adaptation law is designed to update the parameter estimates as follows

$$\hat{\theta}_{k+1} = \hat{\theta}_k + \Gamma_k \phi_k e_k$$

where $\Gamma_k$ is a positive definite matrix controlling the rate of parameter adaptation and $\phi_k$ is the regressor related to the system dynamics. For simple problems, $\Gamma_k$ is often set constant such that

$$\Gamma = \mathrm{diag}\left(\gamma_1, \gamma_2, \ldots, \gamma_{n_\theta}\right)$$

where $\gamma_i$ are constants that determine the adaptation speed of each parameter $\theta_i$. Larger values of $\gamma_i$ lead to faster convergence but may also amplify noise and destabilize the system if set too high.

In more advanced implementations, time-varying gain matrices $\Gamma_k$ are adopted. One strategy involves decreasing the values of $\gamma_i$ as the estimation error diminishes, helping to stabilize the adaptation over time. Another common method leverages Lyapunov stability theory to guarantee asymptotic stability of the estimation error [90,92].

Defining the Lyapunov function

$$V_k = e_k^T P e_k + \tilde{\theta}_k^T \Gamma^{-1} \tilde{\theta}_k$$

where $P$ is a symmetric positive definite matrix, and $\tilde{\theta}_k = \theta_k - \hat{\theta}_k$ is the parameter estimation error.

Stability is ensured by choosing $\Gamma_k$ such that the Lyapunov difference satisfies $\Delta V_k = V_{k+1} - V_k \le 0$ [93].

Adaptive observers are particularly effective in nonlinear, time-varying settings to achieve accurate state and parameter estimation. They rely on an adaptive law that continuously updates parameters over time. The key features of their design include the time-varying observer gain $L_k$, the adaptation law driven by the estimation error $e_k$, and the parameter update rate $\Gamma_k$, which may be constant or time-varying, adaptively tuned to maintain stability and desired performance.
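The interplay between the observer gain and the adaptation rate can be sketched on a scalar example that is linear in both the state and the unknown parameter, chosen so that stability can be checked by hand. The plant $x_{k+1} = a x_k + \theta u_k$, the constant gains `L` and `gamma`, the constant input, and the function name `run_adaptive_observer` are assumptions for illustration. The regressor is taken as the sensitivity of the dynamics to the parameter, which is simply $u_k$ here.

```python
def run_adaptive_observer(theta_true=2.0, a=0.8, L=0.3, gamma=0.2, steps=300):
    """Scalar adaptive observer with a constant gain and adaptation rate."""
    x, x_hat, theta_hat = 0.0, 0.0, 0.0
    u = 1.0  # constant nonzero input: enough excitation for one parameter
    for _ in range(steps):
        e = x - x_hat   # estimation error (the full state is measured, y = x)
        phi = u         # regressor: derivative of the dynamics w.r.t. theta
        x_hat_next = a * x_hat + theta_hat * u + L * e   # state observer
        theta_hat = theta_hat + gamma * phi * e          # adaptation law
        x_hat = x_hat_next
        x = a * x + theta_true * u                       # true plant
    return x - x_hat, theta_hat

state_error, theta_hat = run_adaptive_observer()
print("state error:", state_error, "parameter estimate:", theta_hat)
```

For this example the coupled state and parameter errors follow a linear recursion with characteristic polynomial $z^2 - (1 + a - L)z + (a - L) + \gamma u^2$. With the assumed values both roots have magnitude $\sqrt{0.7} \approx 0.84$, so both errors decay. Raising $\gamma$ to 0.5 or more pushes the product of the roots to at least one, and the scheme loses asymptotic stability, consistent with the remark above that large adaptation gains can destabilize the estimator.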

3.2. Challenges with Observer-Based Methods

The performance of the Luenberger observer depends heavily on the accuracy of the system model. Modeling uncertainties or incorrect system dynamics can significantly degrade its estimation capabilities and, in some cases, lead to divergence [94]. Furthermore, a major limitation of observer-based methods is their sensitivity to noise, as they do not explicitly incorporate process and measurement noise the way Kalman-based filters do. This makes them particularly vulnerable in noisy environments, often resulting in biased or noisy estimates of states and parameters.

High-gain observers are especially sensitive to measurement noise. Although the use of large gains accelerates convergence, it also amplifies noise, resulting in a trade-off between convergence speed and robustness. Proper tuning of the observer gain is therefore critical, as excessive gain values may destabilize the estimation process or introduce significant noise artifacts into the state estimates.

Sliding mode observers face their own set of challenges, notably the chattering effect caused by the high-frequency switching nature of the sliding mode correction term. This chattering can introduce undesirable oscillations that are detrimental to physical systems. Furthermore, designing effective SMOs often requires careful selection of the sliding surface and observer parameters, typically achieved through trial-and-error procedures, which further complicates practical implementation and tuning.

Adaptive observers pose unique challenges as well. One key requirement is the presence of persistent excitation in the system inputs to ensure convergence of parameter estimates. If this condition is not met, the observer may fail to adequately capture parameter dynamics, resulting in poor estimation accuracy. Additionally, the design of the adaptation gain matrix must balance speed of convergence with robustness to noise and modeling uncertainties.
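One simple way to gauge persistent excitation in practice is to inspect the smallest eigenvalue of the averaged Gram matrix of the regressor over a data window. The sketch below is an illustrative check under assumed regressor signals, not a procedure taken from the reviewed literature, and the function name `excitation_level` is hypothetical.

```python
import numpy as np

def excitation_level(Phi):
    """Smallest eigenvalue of (1/N) * sum(phi phi^T) over the rows of Phi."""
    gram = Phi.T @ Phi / Phi.shape[0]
    return float(np.linalg.eigvalsh(gram).min())

k = np.arange(200)
# Both regressor components are constant: their directions are indistinguishable
constant_regressor = np.column_stack([np.ones(200), 2.0 * np.ones(200)])
# A constant plus a sinusoid spans two independent directions
rich_regressor = np.column_stack([np.ones(200), np.sin(0.5 * k)])

print("constant regressor:", excitation_level(constant_regressor))
print("rich regressor:    ", excitation_level(rich_regressor))
```

A value near zero indicates that some combination of the parameters has no effect on the data, so the corresponding parameter estimates cannot be expected to converge.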

Finally, across all observer types, another common limitation is their dependence on accurate initial conditions. Poorly initialized state or parameter estimates can lead to slow convergence, large transient errors, or in some cases, complete divergence of the estimation process.

4. Comparative Discussion of Kalman-Based and Observer-Based Approaches

From a broader perspective, Kalman-based and observer-based approaches represent two complementary philosophies in simultaneous state and parameter estimation. Kalman-based filters, rooted in stochastic estimation theory, explicitly account for process and measurement noise through covariance modeling and probabilistic estimation. When the statistical properties of the noise are well characterized, these filters provide statistically optimal estimates in the minimum mean-square error sense. The probabilistic foundation enables Kalman-based algorithms to achieve high robustness against random disturbances, measurement uncertainties, and modeling imperfections. However, these advantages come at the expense of increased computational cost and sensitivity to incorrect noise covariance tuning or modeling errors, which can lead to divergence or degraded performance, particularly in highly nonlinear or time-varying systems.

Observer-based methods, by contrast, are grounded in deterministic system theory. They reconstruct system states and parameters through feedback mechanisms that exploit the structure of the underlying model rather than probabilistic assumptions. Because they do not rely on explicit noise statistics, observers are often applied in systems where the model dynamics are well characterized but noise properties are uncertain or difficult to quantify. While this independence from statistical noise modeling simplifies implementation, it also limits robustness to measurement disturbances. Nevertheless, deterministic observers generally exhibit faster convergence, simpler implementation, and lower computational requirements than their Kalman-based counterparts. However, they lack built-in uncertainty quantification mechanisms (e.g., they do not, by construction, produce uncertainty measures such as covariance matrices or confidence intervals) and their performance may degrade in the presence of significant measurement noise, unmodeled dynamics, or parameter drift.
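The difference between the two design philosophies can be made concrete for a scalar linear system. In the sketch below, the Kalman gain follows from assumed noise variances `q` and `r` through the Riccati recursion, whereas the observer gain follows directly from a chosen error pole. All numerical values and both function names are illustrative assumptions.

```python
def steady_state_kalman_gain(a, c, q, r, iters=500):
    """Iterate the scalar Riccati recursion; return the predictor-form gain."""
    p = 1.0
    for _ in range(iters):
        k = a * p * c / (c * c * p + r)       # gain from the current covariance
        p = a * a * p - k * (c * p * a) + q   # covariance propagation
    return a * p * c / (c * c * p + r), p

def observer_gain_from_pole(a, c, pole):
    """Deterministic design: choose L so that a - L*c equals the desired pole."""
    return (a - pole) / c

a, c = 0.95, 1.0
k_trust_model, _ = steady_state_kalman_gain(a, c, q=1e-4, r=1.0)
k_trust_sensor, _ = steady_state_kalman_gain(a, c, q=1.0, r=1.0)
print("Kalman gain, small process noise:", k_trust_model)
print("Kalman gain, large process noise:", k_trust_sensor)
print("observer gain for pole 0.4:", observer_gain_from_pole(a, c, 0.4))
```

The Kalman gain changes with the assumed noise statistics, while the observer gain depends only on the model and the desired convergence rate. Any gain produced by one route can be reproduced by the other, so the distinction lies in how the gain is justified and tuned.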

In practical terms, the selection between Kalman-based and observer-based approaches depends on the nature of the system and the information available about its uncertainty sources. For systems dominated by stochastic disturbances, where statistical modeling is feasible and computational resources are sufficient, Kalman-based filters remain the preferred choice. Conversely, for systems with reliable deterministic models, limited sensor data, or stringent real-time constraints, observer-based approaches often provide a more efficient and flexible solution. A concise comparison of the key characteristics, advantages, and limitations of Kalman-based and observer-based approaches is presented in Table 3.

Recent studies have also explored hybrid estimation frameworks that integrate the statistical optimality of Kalman filtering with the structural adaptability of deterministic observers. Such hybrid schemes have been proposed across various domains, including vehicle dynamics, robotics, and energy systems, to enhance robustness and estimation accuracy under mixed stochastic and deterministic uncertainties [88,95,96,97]. These hybrid architectures show promising directions for achieving improved estimation accuracy and stability, particularly in nonlinear systems with time-varying parameters.

5. Conclusions

This manuscript presents a comprehensive review and comparative analysis of the methodologies explored in the literature for the simultaneous estimation of system states and parameters, focusing on approaches based on Kalman filters and Luenberger observers. While Kalman-based methods excel in handling stochastic noise, observer-based methods offer simplicity and efficiency in deterministic settings.

The methods reviewed are generally categorized into three main approaches:

Augmented State Approach: The unknown parameters are treated as additional states within the system, leading to an augmented state vector. An observer is then designed to estimate the full set of states, including both the system states and the unknown parameters. This method is advantageous in systems where the states and parameters are interdependent, but the complexity of the augmented observer increases with the number of parameters, which can affect computational efficiency and robustness to noise.

Decoupled Estimation Approach: The estimation tasks for the unknown parameters and the system states are decoupled, and two separate observers are run concurrently, one for state estimation and the other for parameter estimation. This approach simplifies the observer design by separating the two estimation problems. However, challenges arise when strong interactions exist between the system states and parameters, which can lead to inaccuracies or slow convergence.

Parameter Identification Coupled with State Estimation: A parameter identification technique is used to estimate the unknown parameters, which is then coupled with a state observer for state estimation. This approach leverages well-established parameter estimation techniques, such as least squares, in conjunction with traditional state observers. While this method can be efficient, its accuracy and convergence are highly dependent on the parameter identification process and the model’s sensitivity to parameter changes.

The challenges and limitations associated with each estimation method have been explored. A significant challenge is noise sensitivity, particularly in methods like the Luenberger observer, which rely heavily on accurate model assumptions. Robustness to noise is a critical factor, especially in real-world systems where measurements are often noisy or uncertain. Additionally, the computational complexity of the methods varies significantly, with some requiring substantial resources due to the complexity of the observer design or the number of parameters involved.

Each method offers distinct advantages and trade-offs depending on the system’s characteristics and performance requirements. For example, the augmented state approach provides a unified framework for simultaneous state and parameter estimation but may impose a higher computational burden. On the other hand, decoupled approaches can reduce computational effort but may struggle when state and parameter dynamics are strongly coupled.

The selection of the most appropriate method ultimately depends on several factors, including the quality and quantity of available data, the complexity of the system dynamics, the accuracy of the system model, and the desired level of computational efficiency. Future research could focus on hybrid approaches that integrate the strengths of multiple methods, as well as adaptive techniques that dynamically adjust the estimation strategy based on real-time data characteristics and evolving system behavior. These advancements could further enhance the robustness and applicability of state and parameter estimation methods in complex, noisy environments.

Future Research Directions

Although significant progress has been made in simultaneous state and parameter estimation using Kalman filters and observer-based approaches, several important research challenges remain open. One promising direction is the development of hybrid stochastic-deterministic frameworks that leverage the complementary strengths of both paradigms. For example, embedding observer feedback structures within Kalman-based filters could improve numerical stability and convergence under parameter uncertainty, while integrating covariance adaptation into deterministic observers may enhance robustness to noise and unmodeled dynamics.

A second key challenge concerns the treatment of strongly coupled states and parameters (e.g., cases where the contributions of states and parameters to the measured outputs are difficult to distinguish), where interdependence reduces observability and can lead to estimator divergence or slow convergence. Future research should focus on systematic observability analysis, parameter sensitivity quantification, and coupling-decoupling strategies that preserve estimation accuracy while maintaining computational tractability.

Another important direction involves handling fast time-varying parameters. Most existing simultaneous state and parameter estimation algorithms assume slowly varying or piecewise-constant (quasi-static) parameters (i.e., within the estimation window), which limits their applicability in systems with rapid parameter evolution. Addressing this issue requires adaptive observers and Kalman filter variants capable of tracking fast parameter dynamics without compromising numerical stability or state accuracy.

Furthermore, data-driven and learning-assisted estimation techniques are expected to play an increasingly important role. The fusion of physics-based observers with neural or regression-based parameter estimators could enable real-time adaptation to nonlinear or partially known dynamics while maintaining interpretability and physical consistency.

From a computational perspective, the scalability of simultaneous state and parameter estimation algorithms to high-dimensional systems remains an unsolved challenge. Efficient reduced-order and distributed implementations, particularly for systems with large augmented state vectors, represent a critical area for future exploration.