Improved YOLOv10: A Real-Time Object Detection Approach in Complex Environments

Abstract

1. Introduction

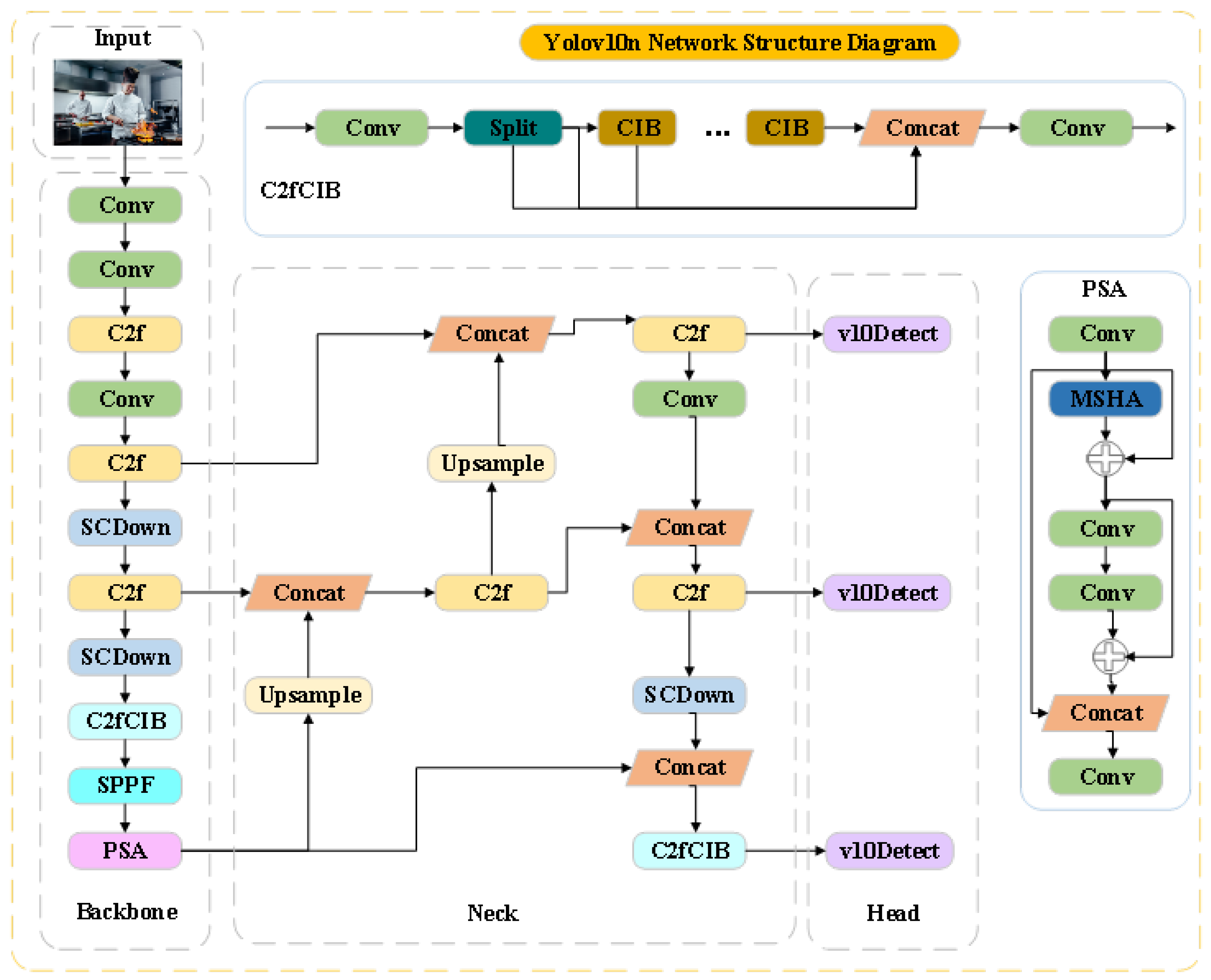

2. Principle of YOLOv10

3. Improved YOLOv10 Object Detection Algorithm

3.1. Improved Model Network Structure

3.2. Mosaic Enhanced Network

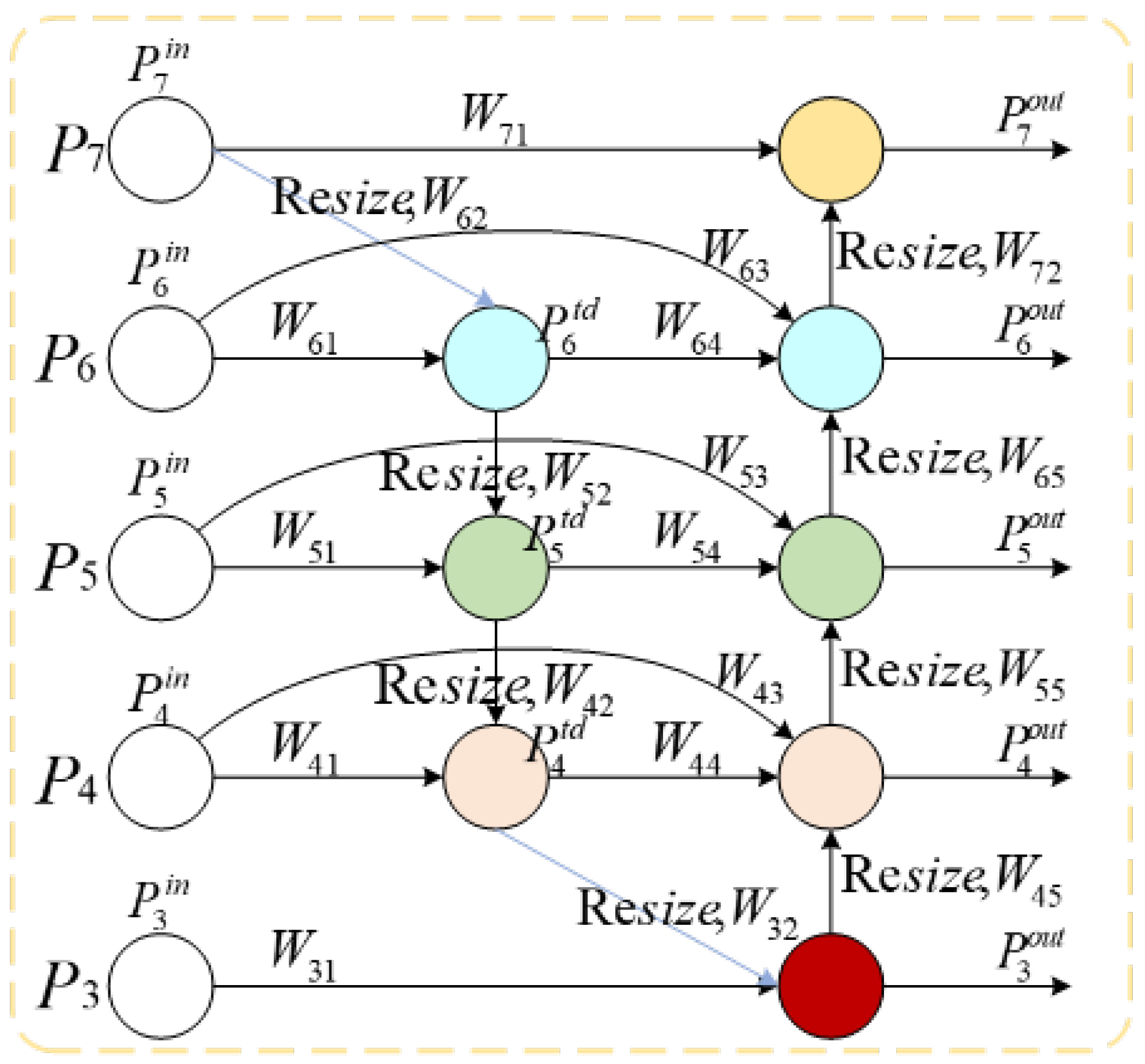

3.3. BiFPN Fusion Module

3.4. Squeeze-and-Excitation Networks

4. Experimental Verification

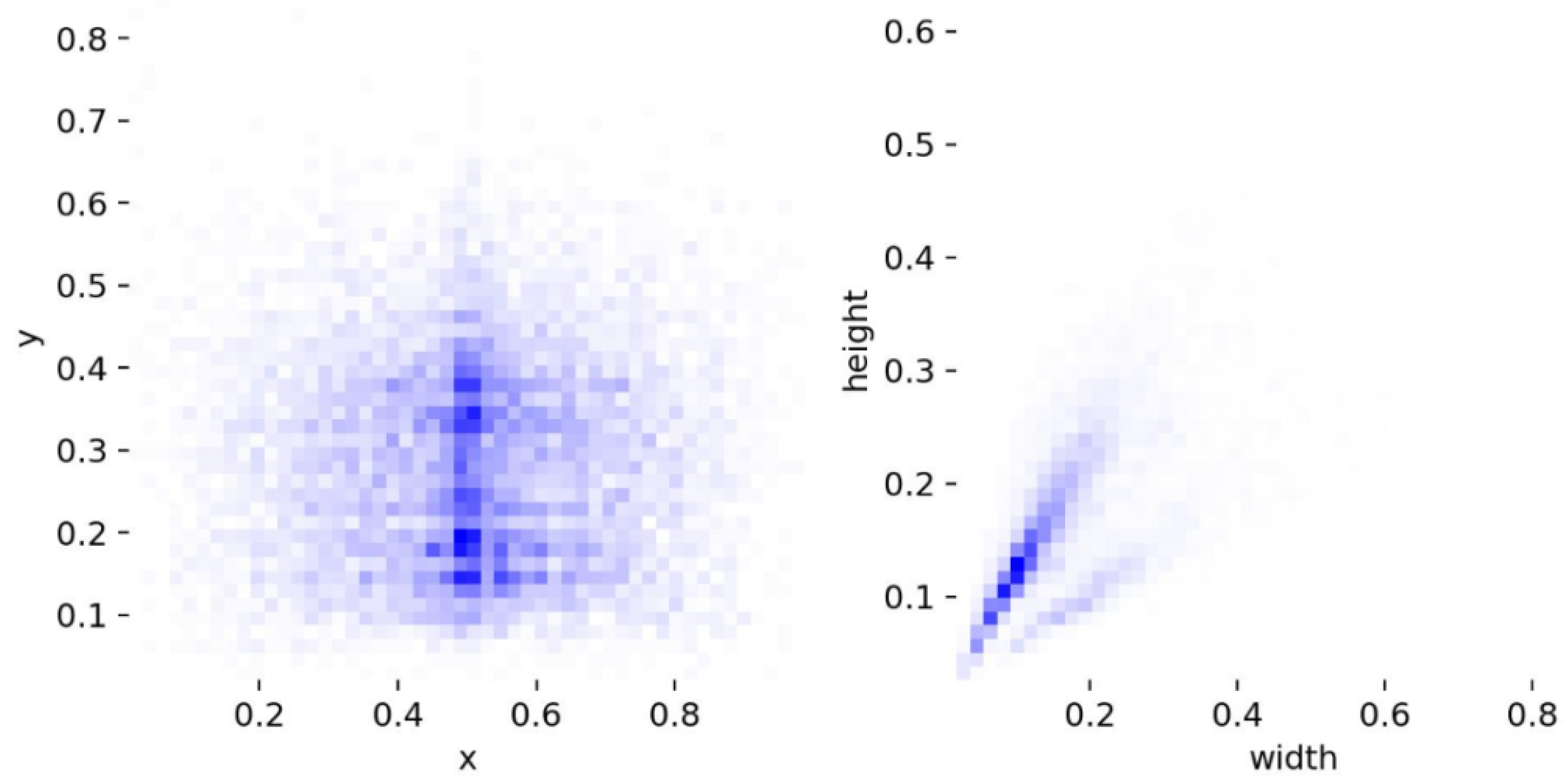

4.1. Dataset

4.2. Experimental Environment

4.3. Evaluation Indicators

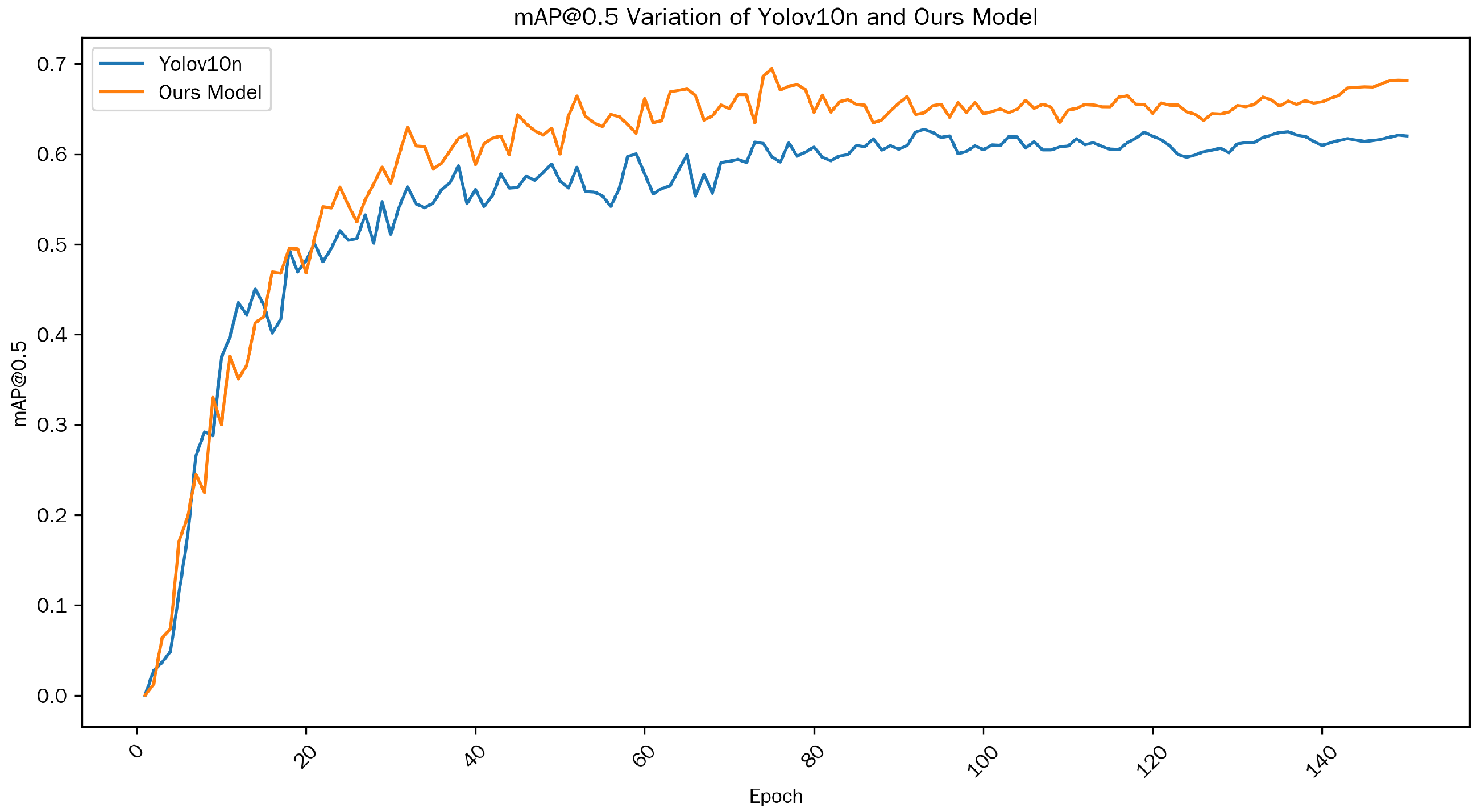

4.4. Ablation Experiment

4.5. Comparative Experiment of Different Object Detection Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer | From | Parameters | Module |
|---|---|---|---|
| 0 | −1 | 464 | Conv |
| 1 | −1 | 4672 | Conv |
| 2 | −1 | 7392 | C2fSE |
| 3 | −1 | 18,560 | Conv |
| 4 | −1 | 49,920 | C2fSE |
| 5 | −1 | 9856 | SCDown |
| 6 | −1 | 198,656 | C2fSE |
| 7 | −1 | 36,096 | SCDown |
| 8 | −1 | 462,336 | C2fSE |
| 9 | −1 | 164,608 | SPPF |
| 10 | −1 | 312,064 | FocalModulation |
| 11 | −1 | 249,728 | PSA |
| 12 | 4 | 4224 | Conv |
| 13 | 6 | 8320 | Conv |
| 14 | 11 | 16,512 | Conv |
| 15 | −1 | 0 | nn.Upsample |
| 16 | [−1, 13] | 2 | BifpnFusion |
| 17 | −1 | 29,056 | C2f |
| 18 | −1 | 0 | nn.Upsample |
| 19 | [−1, 12] | 2 | BifpnFusion |
| 20 | −1 | 29,056 | C2f |
| 21 | 2 | 18,560 | Conv |
| 22 | [−1, 12, 20] | 3 | BifpnFusion |
| 23 | −1 | 29,056 | C2f |
| 24 | −1 | 36,929 | Conv |
| 25 | [−1, 13, 17] | 3 | BifpnFusion |
| 26 | −1 | 52,864 | C2fCIB |
| 27 | −1 | 73,856 | Conv |
| 28 | [−1, 14] | 2 | BifpnFusion |
| 29 | −1 | 187,648 | C2fCIB |
| 30 | [23, 26, 29] | 862,888 | v10Detect |
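
Each BifpnFusion row in the table above carries only 2 or 3 parameters, which matches the number of inputs that layer fuses. That is consistent with one learnable scalar weight per input, as in the fast normalized fusion used by the BiFPN of EfficientDet. The following is a minimal sketch under that assumption, written in plain Python on flat lists rather than tensors. The function name `fast_normalized_fusion` and the `eps` value are illustrative and are not taken from the authors' code.

```python
from typing import List, Sequence


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> List[float]:
    """Fuse same-shaped feature maps using one scalar weight per input."""
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per input feature map")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("all feature maps must have the same shape")
    # ReLU keeps every weight non-negative so the normalisation is well defined.
    clipped = [max(w, 0.0) for w in weights]
    # eps (assumed value) guards against division by zero when all weights are 0.
    total = sum(clipped) + eps
    norm = [w / total for w in clipped]
    # Weighted sum of the inputs, element by element.
    return [sum(n * f[i] for n, f in zip(norm, features)) for i in range(length)]


# Two-input fusion (2 weights), e.g. an upsampled map and a lateral map.
top_down = [1.0, 2.0, 3.0]
lateral = [3.0, 2.0, 1.0]
fused = fast_normalized_fusion([top_down, lateral], weights=[1.0, 1.0])
print(fused)
```

With equal weights the result is close to the element-wise mean of the two inputs; a weight driven to zero or below removes that input from the fusion.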

| Category | Version |
|---|---|
| GPU | NVIDIA GeForce RTX 4070 Ti × 1 |
| Video Memory | 12 GB |
| Python | Python 3.9 |
| PyTorch | PyTorch 2.0.1 |
| CUDA | CUDA 11.8 |

| Base Model | BiFPN Module | SE Module | Precision (%) | Recall (%) | mAP@0.5 (%) |
|---|---|---|---|---|---|
| YOLOv10n | | | 72.4 | 58.8 | 61.8 |
| YOLOv10n | ✔ | | 81.7 | 60.5 | 67.5 |
| YOLOv10n | | ✔ | 73.5 | 63.3 | 66.6 |
| YOLOv10n | ✔ | ✔ | 74.2 | 64.3 | 69.5 |
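
To make the headline comparison concrete, the first and last rows of the table (the YOLOv10n baseline and the model with both modules enabled) can be differenced directly. The sketch below only redoes that arithmetic on the reported figures; the dictionary layout and variable names are illustrative.

```python
# Reported figures from the ablation table, in percent.
baseline = {"precision": 72.4, "recall": 58.8, "mAP@0.5": 61.8}
improved = {"precision": 74.2, "recall": 64.3, "mAP@0.5": 69.5}

# For each metric: (gain in percentage points, gain relative to the baseline in %).
gains = {}
for metric, base in baseline.items():
    delta = improved[metric] - base
    gains[metric] = (round(delta, 1), round(100.0 * delta / base, 1))

for metric, (points, relative) in gains.items():
    print(f"{metric}: +{points} points ({relative}% relative)")
```

On these numbers the full model gains 7.7 points of mAP@0.5 and 5.5 points of recall over the baseline, while precision rises by 1.8 points.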

| Base Model | BiFPN Module | SE Module | Parameters | GFLOPs | Inference (ms) |
|---|---|---|---|---|---|
| YOLOv10n | | | 2,695,976 | 8.2 | 7.4 |
| YOLOv10n | ✔ | | 2,537,780 | 8.1 | 8.9 |
| YOLOv10n | | ✔ | 2,846,792 | 8.2 | 9.7 |
| YOLOv10n | ✔ | ✔ | 2,852,820 | 8.3 | 12.1 |
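
Per-image latency is easier to relate to a real-time budget when expressed as throughput. The sketch below converts the reported inference times of the baseline and the full model to frames per second and computes the parameter overhead of the full model. It assumes the latency is measured per image at batch size 1, which the table does not state.

```python
# Reported cost figures for the baseline and the model with both modules.
baseline = {"params": 2_695_976, "latency_ms": 7.4}
improved = {"params": 2_852_820, "latency_ms": 12.1}


def fps(latency_ms: float) -> float:
    """Frames per second, assuming latency_ms is the time for one image."""
    return 1000.0 / latency_ms


baseline_fps = fps(baseline["latency_ms"])
improved_fps = fps(improved["latency_ms"])
# Extra parameters of the full model, as a percentage of the baseline count.
param_overhead = 100.0 * (improved["params"] - baseline["params"]) / baseline["params"]

print(f"baseline: {baseline_fps:.1f} FPS, improved: {improved_fps:.1f} FPS")
print(f"parameter overhead: {param_overhead:.1f}%")
```

Under that assumption the baseline runs at roughly 135 FPS and the full model at roughly 83 FPS, for about 5.8% more parameters.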

| Model | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Inference (ms) | Parameters (M) |
|---|---|---|---|---|
| YOLOv5n | 66.9 | 38.3 | 5.5 | 1.9 |
| YOLOv8n | 64.7 | 36.9 | 4.7 | 3.0 |
| YOLOv10n | 61.8 | 33.7 | 7.4 | 2.7 |
| This paper | 69.5 | 36.7 | 12.1 | 2.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Q.; Nie, X. Improved YOLOv10: A Real-Time Object Detection Approach in Complex Environments. Sensors 2025, 25, 6893. https://doi.org/10.3390/s25226893