1. Introduction

With global climate change posing increasingly severe challenges, the need for an energy transition has become urgent. Solar photovoltaic (PV) power generation, one of the most promising renewable energy technologies, is developing rapidly worldwide [1,2]. According to the latest data from the International Renewable Energy Agency, global cumulative PV installed capacity exceeded 2.2 TW by the end of 2024, up from 1.6 TW in 2023, with annual new installations exceeding 600 GW. It is projected that between 2024 and 2030 the world will add over 5500 GW of renewable energy capacity, with its share in global power generation capacity exceeding 20%. Centralized PV power plants have become the primary driving force of the PV industry owing to their economies of scale and cost advantages, with individual plant capacities growing from tens of megawatts in the early stages to hundreds of megawatts and even gigawatt scale at present. However, as plants continue to expand and operational lifespans lengthen, PV module failures have become increasingly prominent and now constitute a critical bottleneck for the sustainable development of the PV industry. Real-time fault detection and classification in PV systems is a fundamental task for ensuring plant operational quality [3]. During long-term outdoor operation, PV modules are subjected to the combined effects of ultraviolet radiation, temperature cycling, damp heat, wind-blown sand erosion, and mechanical stress, which make them susceptible to failures and performance degradation [4,5]. The primary failure modes include hot spots, cell cracks, backsheet degradation, junction box failures, and soiling, and their occurrence depends closely on installation type, geographical location, and environmental conditions [6]. These failures not only reduce module power output by 5–25% but, in severe cases, may also trigger serious safety incidents such as arcing and fires, causing substantial economic losses and safety hazards [7].

Traditional operation and maintenance (O&M) of photovoltaic power plants relies primarily on three approaches: manual inspection, electrical parameter monitoring through supervisory systems, and ground-based robotic inspection. In manual inspection, O&M personnel carry handheld infrared thermal cameras or multimeters and examine PV modules one by one. Although this method can identify some obvious appearance defects and electrical faults, it has significant limitations. First, detection efficiency is extremely low: for a 300 MW photovoltaic power plant containing approximately one million modules, a comprehensive manual inspection often takes several months [8]. Second, detection costs are high because of the substantial labor required, with annual O&M costs reaching 2–3% of the total plant investment [9]. Third, maintenance personnel must work in harsh environments characterized by high temperatures, high voltages, and complex terrain, and face significant safety risks, including electric shock and falls [10]. More critically, manual inspection is susceptible to subjective factors such as personnel skill and working conditions, which lead to missed detections and false positives and severely compromise its reliability and practicality [11].

Although electrical parameter monitoring based on supervisory systems enables remote, automated monitoring, it observes electrical parameter variations only at the string or inverter level, so it cannot pinpoint the specific faulty module and is insufficiently sensitive to early-stage faults. Al-Subhi et al. proposed a universal photovoltaic cell parameter estimation method based on optimized deep neural networks, achieving adaptive parameter identification across temperature, irradiance, and power rating variations and thereby providing a new paradigm for precise modeling of intelligent PV systems [12]. Satpathy et al. developed a low-cost monitoring system based on power line communication, integrating optimized sensor networks with web applications to achieve real-time performance monitoring, multi-type fault diagnosis, and alarm functions [13]. By the time electrical parameters exhibit anomalies, however, faults have typically progressed to a severe stage, so the optimal maintenance window has been missed and safety incidents such as fires may follow [14]. Furthermore, electrical monitoring cannot identify faults that do not affect short-term electrical performance but may lead to long-term reliability issues.

Ground-based robotic inspection, an automated inspection method that has emerged in recent years, partially addresses the low efficiency of manual inspection and the insufficient precision of electrical monitoring. Ground inspection robots typically use wheeled or tracked mobile platforms equipped with visible light cameras, thermal infrared cameras, LiDAR, and other sensors, and navigate autonomously along predetermined paths while collecting photovoltaic module images. Compared to manual inspection, ground-based robots offer 24 h continuous operation, standardized detection protocols, and immunity to subjective human factors. Although they improve detection efficiency to some extent, they still have numerous limitations. Their mobility is severely constrained, as they can only traverse ground roads or pathways between modules. When confronted with the complex terrain and dense module layouts of large-scale photovoltaic power plants, robots often fail to reach all inspection positions. Considerable room remains for technological advancement in autonomous route planning and navigation, as well as in photovoltaic fault detection and feature analysis [15]. Furthermore, ground-based robots have limited viewing perspectives: they can capture module images only from lateral or oblique downward angles and cannot obtain frontal views of module surfaces, which directly compromises fault detection accuracy and reliability. Their environmental adaptability is also poor, as they are prone to mobility difficulties or equipment failures under adverse conditions such as rain, snow, waterlogged surfaces, or loose sandy terrain, which severely limits their effectiveness in complex environments. Additionally, ground-based robots require regular maintenance of critical components, including tires, batteries, and sensors, resulting in frequent maintenance and persistently high operational costs [16]. Although ground-based robotic inspection is more efficient than manual inspection, it remains significantly inferior to the large-scale, terrain-independent coverage of unmanned aerial vehicles, which, despite also relying on batteries and optical sensors, offer higher flexibility and operational efficiency.

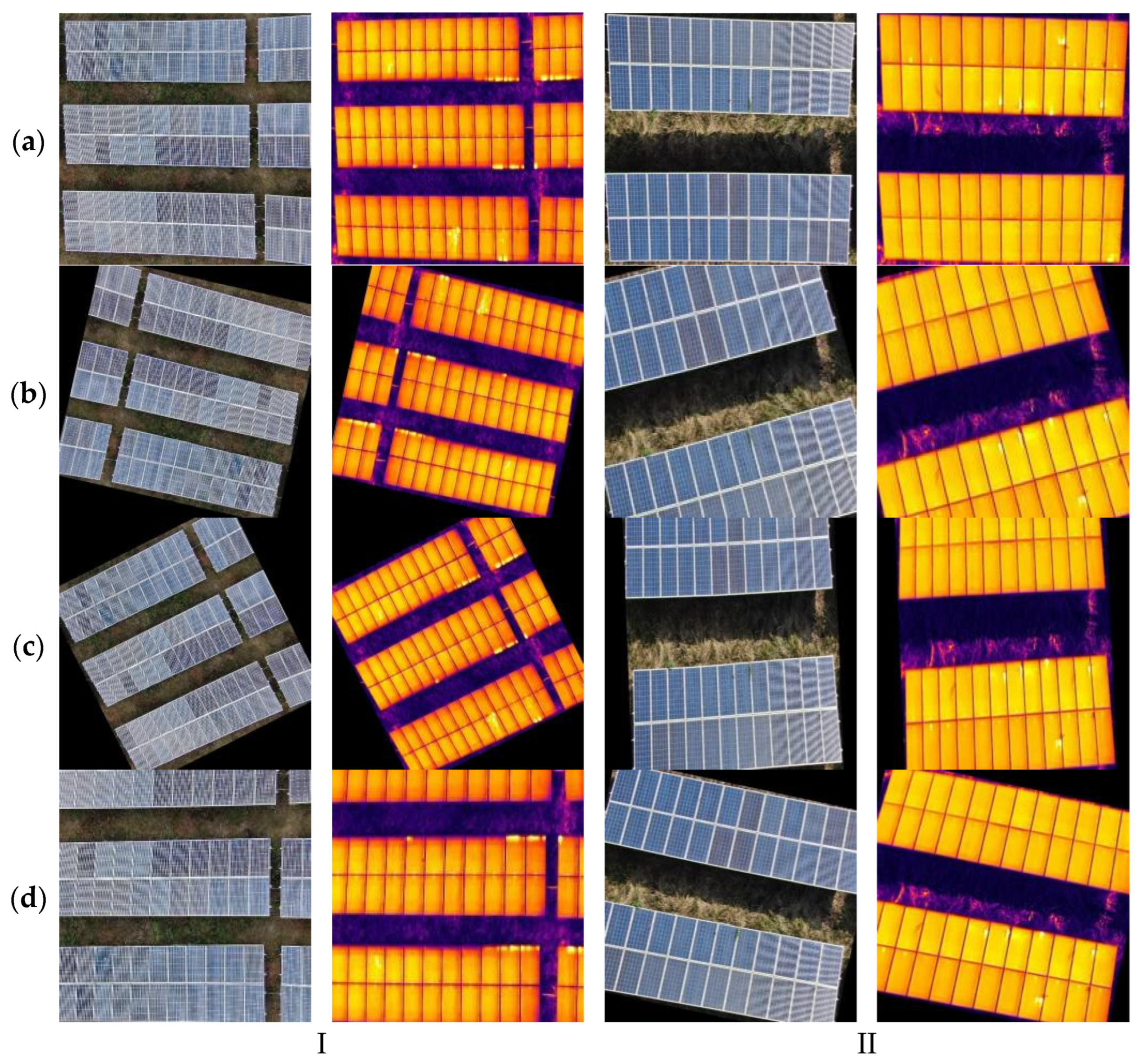

With the rapid development of unmanned aerial vehicle (UAV) remote sensing technology, its applications in the renewable energy sector have become increasingly widespread, particularly demonstrating significant advantages in photovoltaic power plant inspection and fault diagnosis. UAVs possess characteristics of high efficiency, flexibility, and low cost, enabling them to carry various sensors and complete image acquisition of large-area photovoltaic modules within short timeframes. This study constructs a dual-modal image dataset for photovoltaic module fault detection, with data sourced from fixed-altitude aerial photography conducted by UAVs over photovoltaic power plants, encompassing both visible light and thermal infrared modalities. The dataset focuses on three common and representative fault types: hot spots, diode short circuits, and physical obstructions. Unlike traditional single-modal images, dual-modal data can more comprehensively reflect the optical and thermal characteristics of module surfaces. Hot spot regions typically manifest as distinct high-temperature concentration points in infrared images while potentially lacking obvious features in visible light images. Diode short circuits form regularly distributed abnormal thermal zones in infrared images, often accompanied by localized temperature increases. Physical obstructions are primarily evident in visible light images, such as birds, fallen leaves, or foreign objects covering panel surfaces, affecting light absorption. These faults exhibit characteristics including significant inter-modal presentation differences and large variations in regional dimensions. Particularly, some hot spot or obstruction areas occupy only minimal proportions of the module surface, further increasing detection difficulty.

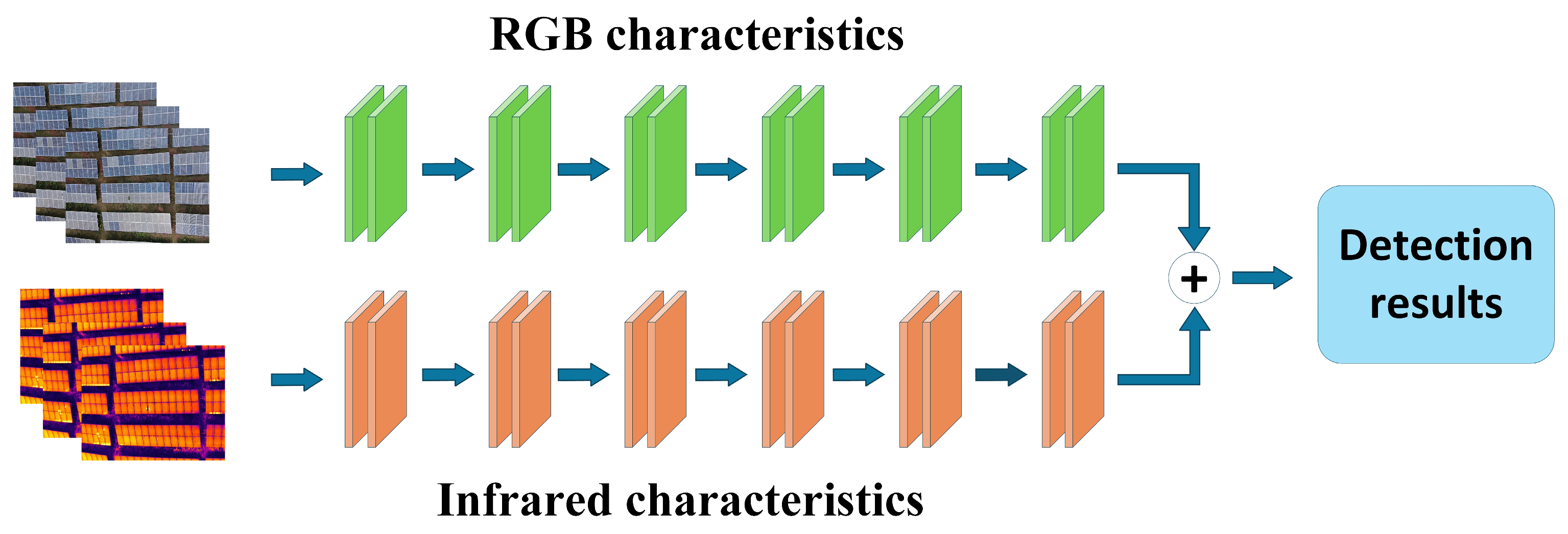

Motivation and Research Contributions: Photovoltaic modules in actual operation are susceptible to faults, including hot spots, diode short circuits, and physical obstructions. These fault types are diverse and complex, and they manifest very differently in images, which presents major challenges for automated detection. Hot spots are typically small in area and irregularly distributed, and they are often difficult to identify in visible light images while presenting only weak temperature anomalies in infrared images. Diode short circuits, although they possess certain thermal characteristics, exhibit variable patterns and are easily confused with other thermal anomalies. Physical obstructions often have irregular shapes and uncertain locations and appear only in visible light images. To address these challenges, this study proposes a multimodal data-based fault detection method for photovoltaic modules. The method employs a specially designed YOLOv11 model architecture capable of accurately identifying typical fault patterns in photovoltaic modules, including hot spots, diode short circuits, and physical obstructions. The RGBIRPV dataset was constructed through preprocessing and size standardization of dual-modal images (visible light and thermal infrared) collected by UAVs. Based on a dual-branch feature extraction structure and a cross-modal fusion module, the proposed YOLOv11 model achieves high-precision detection on dual-modal images and performs well in recognizing hot spots, diode short circuits, and obstructions. The proposed end-to-end detection pipeline not only significantly improves the precision and recall of fault identification but also provides robust technical support for the refined, high-frequency deployment of intelligent operation and maintenance systems in photovoltaic power plants. The effectiveness of this method establishes a solid foundation for the practical, large-scale application of UAV-based automatic inspection technology for photovoltaic modules.

2. Related Work

Deep learning has attracted significant attention from both academia and industry owing to its powerful data processing capabilities and broad application prospects. Convolutional neural networks have demonstrated excellent performance in tasks such as image recognition, object detection, and semantic segmentation. Meanwhile, techniques such as attention mechanisms, residual connections, and multi-scale feature pyramids have further enhanced algorithm performance and robustness. Deep learning-based object detection algorithms fall into two major types: one-stage and two-stage approaches. Two-stage algorithms, represented by the R-CNN series, first generate candidate regions and then perform classification and regression on each candidate region. These algorithms achieve high detection accuracy but run relatively slowly. In contrast, one-stage algorithms such as YOLO and SSD directly predict object categories and locations within the network, achieving end-to-end detection. Although their accuracy is slightly lower, they run faster and are better suited to real-time application scenarios. Zhu et al. proposed the C2DEM-YOLO deep learning model, which achieves 92.31% mean average precision in photovoltaic electroluminescence (EL) defect detection through the C2Dense feature extraction module and the EMA cross-spatial attention mechanism [17]. Lang et al. proposed a photovoltaic EL defect detection algorithm based on the YOLO architecture that integrates polarized self-attention mechanisms and CNN-Transformer hybrid modules, achieving 77.9% mAP50 on the PVEL-AD dataset, an improvement of 17.2% over baseline methods [18]. Ding et al. proposed a photovoltaic EL defect detection method based on an improved YOLOv5, addressing defect sample imbalance and scale variation through a Focal-EIoU loss function and cascade detection networks [19].

The rapid development of unmanned aerial vehicle (UAV) technology and its significant cost reduction in recent years have made UAV-based intelligent inspection of photovoltaic power plants a focal point of industry attention. Wang et al. proposed an improved YOLOX model called PV-YOLO, which adopts the PVTv2 backbone network and the CBAM attention mechanism, optimizes label assignment strategies, and employs the SIoU loss function, while offering lightweight models of different scales to accommodate diverse detection scenarios [20]. Hong et al. proposed the CEMP-YOLOv10n algorithm, which achieves 86.6% accuracy and 87.3% mAP in photovoltaic infrared detection through ABCG_Block optimization for feature extraction, ERepGFPN enhancement for feature fusion, PConv streamlining of the detection heads, and the MECA attention mechanism for improved recognition accuracy. At the same time, the model’s computational load is reduced to 4.7 GFLOPs, providing an effective solution for UAV edge deployment and intelligent photovoltaic operation and maintenance [21]. Bommes et al. developed a semi-automatic photovoltaic module detection system based on UAV thermal imaging, which is projected to inspect 3.5–9 MWp of capacity daily, with theoretical potential for expansion to gigawatt scale [22]. Di Tommaso et al. proposed a multi-stage automatic detection model based on YOLOv3, achieving rapid and accurate diagnosis of multiple defect types in photovoltaic systems. Through multi-scale feature optimization and adaptive algorithm design, the system is compatible with different application scenarios, including rooftop and ground-mounted power plants, and its defect severity classification makes preventive maintenance considerably better targeted [23]. UAV inspection technology offers significant technical advantages and application value. In terms of efficiency, UAVs can rapidly traverse large areas, completing a comprehensive inspection of a 300 MW photovoltaic power plant within 2–3 days, a 10–20-fold improvement in detection efficiency over manual inspection that substantially shortens inspection cycles. From a safety perspective, UAVs are remotely controlled, which avoids the risks associated with maintenance personnel’s direct contact with high-voltage equipment and provides a safer and more reliable solution for power plant operation and maintenance. Regarding detection accuracy, UAVs can hover, enabling close-range photography above modules to acquire high-resolution images, with detection accuracy significantly superior to traditional manual inspection.

In terms of sensor technology, visible light cameras and thermal infrared cameras are the core equipment for UAV inspection. Li et al. developed a UAV-based automated detection platform for photovoltaic systems, achieving efficient identification of typical defects such as dust accumulation by combining visible light image processing algorithms with multi-sensor integration [24]. Visible light cameras capture the appearance of photovoltaic modules and effectively identify appearance defects, including glass breakage, frame deformation, contamination and obstruction, and junction box appearance abnormalities. Their imaging principle is based on the reflection of electromagnetic waves in the visible spectrum, and they offer high resolution, rich color information, and low cost. Thermal infrared cameras, which rely on the infrared radiation emitted by objects themselves, detect the temperature distribution on module surfaces and effectively identify thermal anomalies such as hot spots, cell cracks, junction box overheating, and local shading. Jamuna et al. proposed a linear iterative fault diagnosis system based on infrared thermal imaging, achieving precise detection and power optimization of photovoltaic module faults by integrating thermal feature analysis with maximum power point tracking algorithms [25]. Vergura et al. systematically analyzed how dynamic variations in key parameters such as emissivity and reflected temperature influence measurements, addressing the radiation measurement errors caused by relative position changes during UAV infrared detection of photovoltaic modules [26]. The two imaging technologies are clearly complementary: visible light images offer high spatial resolution and fine detail but cannot reveal internal thermal anomalies, whereas thermal infrared images reveal otherwise invisible thermal faults but have relatively low spatial resolution and lack textural detail. Winkel et al. proposed an electrothermal coupling analysis model that precisely distinguishes genuine defects from contamination artifacts in photovoltaic modules by combining spatial dirt distribution data from RGB images with temperature fields from infrared thermal imaging [27].

The introduction of dual-modal image fusion technology has provided new solutions for photovoltaic fault detection. Cardoso et al. proposed an intelligent monitoring system for photovoltaic modules based on UAV multimodal 3D modeling, achieving 99.12% accuracy improvement in module-level thermal characteristic analysis through the integration of RGB-thermal imaging photogrammetry technology [28]. Feng et al. proposed an intelligent detection framework for photovoltaic modules that combines dual infrared cameras with deep learning, achieving high-speed module segmentation at 36 FPS with YOLOv5 and fault classification with 95% accuracy with ResNet, and addressing the challenge of detecting minute thermal defects in low-altitude environments [29]. Through simultaneous acquisition of visible light and thermal infrared images, combined with advanced image processing and artificial intelligence algorithms for dual-modal information fusion, real-time online identification of typical defects such as cracks and hot spots has been achieved, enabling comprehensive and accurate detection of photovoltaic module faults [30]. Kuo et al. constructed an intelligent operation and maintenance system for photovoltaic power plants based on UAV multimodal image fusion, proposing a dual-channel CNN architecture that processes IR and RGB data separately to achieve high-accuracy comprehensive classification [31]. Dual-modal detection methods not only detect complex faults that single-modal approaches cannot identify but also improve detection robustness and reliability while reducing the influence of environmental factors on detection results.

Although dual-modal image fusion shows broad prospects in photovoltaic fault detection, it still faces several complex technical challenges. Image registration is a core challenge: visible light and thermal infrared cameras differ significantly in imaging principle, resolution, and field of view, so a precise geometric correspondence must be established to ensure fusion accuracy. Ying et al. proposed an intelligent fault diagnosis scheme based on an improved YOLOv5s and multi-level image registration, employing GPDF-AKAZE multi-scale registration to achieve a 94.12% recall rate for faulty component localization in a 212.85 kW power plant [32]. Feature fusion is equally critical: complementary information from the two modalities must be integrated effectively so that each modality’s advantages are fully used, information redundancy and conflicts are avoided, and the quality of the fused information is ensured. Algorithm robustness is another important challenge, because the operating environments of photovoltaic power plants are complex and variable and algorithms must cope with different illumination conditions, weather conditions, and shooting angles. Fault detection for centralized photovoltaic power plant components based on UAV dual-modal images represents an inevitable trend in the technological advancement of the photovoltaic industry and has significant theoretical value and practical significance. This study aims to establish a multimodal photovoltaic component fault detection method suitable for UAV images, covering dual-modal image acquisition, preprocessing, registration, fusion, and fault detection, in order to provide technical support for intelligent operation and maintenance of photovoltaic power plants and promote high-quality development of the photovoltaic industry. By combining technological innovation with industrial application, this research is expected to contribute to the global energy transition and carbon neutrality goals.

4. Results

4.1. Experimental Environment and Parameter Settings

All experiments in this study were conducted in a unified hardware and software environment to ensure the reliability and reproducibility of the results. Python 3.8 was used as the programming language, with model training and testing performed on the PyTorch 2.2.0 deep learning framework. The hardware was an NVIDIA RTX 4090 GPU (NVIDIA Corporation, Santa Clara, CA, USA) with 24 GB of VRAM, which provided ample computational resources for model training.

Detailed experimental environment configuration information is shown in Table 1. Key model training parameter settings are listed in Table 2. These detailed environmental and parameter configurations ensure experimental transparency, providing a reliable foundation for reproducing and comparing subsequent research.

4.2. Model Evaluation Metrics

To comprehensively evaluate the performance of the constructed multimodal PV fault detection model, this paper employs several evaluation metrics commonly used in object detection, including precision, recall, average precision, and mean average precision. These metrics are calculated from the four fundamental elements of the confusion matrix: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). Each metric reflects the model’s detection capability from a different perspective, and all are important in photovoltaic module fault detection.

Precision represents the proportion of samples that are actually faulty among all samples predicted as faulty by the model. This metric measures the model’s ability to avoid misclassifying normal components as faulty, which helps reduce unnecessary maintenance costs in practical engineering applications. The calculation formula is:

$$P = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}$$

Recall measures the proportion of all actual defect samples that the model correctly identifies. It reflects the model’s ability to detect defects, with higher recall indicating fewer missed defects. This is particularly significant in early defect detection and safety monitoring. The calculation formula is:

$$R = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}$$

Since precision and recall often trade off against each other, the F1 score is introduced as a comprehensive evaluation metric to assess overall model performance. The calculation formula is:

$$F1 = \frac{2 \cdot P \cdot R}{P + R + \xi}$$

Here, ξ is a minimal constant that prevents the denominator from becoming zero.
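The three definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' evaluation code: the function name `detection_scores`, the default value of `xi`, and the example counts are all hypothetical.

```python
def detection_scores(tp: int, fp: int, fn: int, xi: float = 1e-9) -> dict:
    """Precision, recall, and F1 from confusion-matrix counts.

    xi is the small constant that keeps the F1 denominator non-zero;
    its default value here is an assumption, not taken from the paper.
    """
    # Guard the ratios explicitly so that empty predictions or empty
    # ground truth yield 0.0 instead of a division error.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall + xi)
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
scores = detection_scores(tp=80, fp=20, fn=20)
empty = detection_scores(tp=0, fp=0, fn=0)
```

With 80 true positives, 20 false positives, and 20 false negatives, precision and recall are both 0.8, so F1 is also approximately 0.8. When all counts are zero, F1 evaluates to 0.0 rather than raising an error, which is the purpose of ξ.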

Average precision (AP) reflects a model’s detection accuracy across confidence thresholds by calculating the area under the precision-recall curve for a given class. Specifically, AP integrates the precision values corresponding to recall points between 0 and 1 for a target class, thereby providing a comprehensive evaluation of the model’s overall performance on that class. The formula is:

$$\mathrm{AP} = \int_{0}^{1} P(R)\,\mathrm{d}R$$

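The mean average precision (mAP) is the mean of the AP values over all categories and reflects the model’s overall detection accuracy across the entire dataset. Its calculation depends on the intersection-over-union (IoU) threshold used to match predicted and ground-truth bounding boxes, so mAP carries different evaluative significance under different thresholds: mAP@50 uses a single IoU threshold of 0.5, whereas mAP@50:95 averages over IoU thresholds from 0.5 to 0.95 in steps of 0.05 and therefore rewards more precise localization. The formula is:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_i$$

where $N$ is the number of fault categories and $\mathrm{AP}_i$ is the average precision of category $i$.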
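As a rough illustration of how AP and mAP are obtained numerically, the sketch below computes the area under a precision-recall curve using all-point interpolation with a monotone precision envelope. The interpolation scheme is an assumption, since the text does not state which one was used, and the function names and sample points are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    recalls must be sorted in ascending order, with precisions[i] being
    the precision measured at recalls[i].
    """
    # Pad so the curve spans the full recall range [0, 1].
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas between consecutive recall points.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical two-point curve: precision 1.0 at recall 0.5, 0.5 at recall 1.0.
ap_example = average_precision([0.5, 1.0], [1.0, 0.5])

# Hypothetical per-class AP values for the three fault types in this study.
map_example = mean_average_precision(
    {"hot_spot": 0.75, "diode_short_circuit": 0.5, "obstruction": 1.0}
)
```

For the two-point curve, the area is 0.5 × 1.0 + 0.5 × 0.5 = 0.75. Repeating the AP computation with matches decided at each IoU threshold from 0.5 to 0.95 and averaging the results would give the mAP@50:95 variant.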

4.3. Multi-Modal Fusion YOLOv11 Model

To comprehensively evaluate the performance of different fusion strategies in multimodal object detection tasks, this study systematically compares the detection performance of four mainstream fusion strategies based on the RGBIRPV dataset using the YOLOv11 multimodal fusion network architecture. Specifically, the pixel-level fusion strategy directly concatenates RGB and infrared image data at the input layer. While it preserves complete raw information and spatial details, it significantly increases the input dimensionality and computational complexity of the network and may introduce redundant information. The early feature-level fusion strategy integrates multimodal features during the shallow feature extraction stage of the network, enabling full utilization of complementary information between modalities and establishing effective cross-modal feature associations in the early stages of network training. The mid-level feature fusion strategy performs feature integration in the intermediate layers of the network, achieving a favorable balance between feature representation capability and computational efficiency. The decision-level fusion strategy combines the prediction results of each modal branch at the output layer, which, while maintaining the independence of each modality, exhibits a relatively shallow degree of fusion.
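The two extremes of this spectrum can be illustrated without any learned layers. The sketch below is a toy illustration only and does not reproduce the YOLOv11 fusion network: pixel-level fusion is shown as channel concatenation, and decision-level fusion as pooling the boxes of both branches followed by per-class non-maximum suppression, which is one common way to combine branch outputs and an assumption here. All function names and sample detections are hypothetical. Early and mid-level feature fusion operate on learned feature maps and are therefore not shown.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pixel_level_fusion(rgb_channels, ir_channels):
    """Stack the channel lists of two registered images at the input layer."""
    return list(rgb_channels) + list(ir_channels)


def decision_level_fusion(rgb_dets, ir_dets, iou_thr=0.5):
    """Pool (box, score, label) detections from both branches, then apply
    greedy per-class non-maximum suppression."""
    pooled = sorted(rgb_dets + ir_dets, key=lambda d: d[1], reverse=True)
    kept = []
    for box, score, label in pooled:
        # Keep a box unless a higher-scoring box of the same class overlaps it.
        if all(lbl != label or iou(box, kb) < iou_thr for kb, _, lbl in kept):
            kept.append((box, score, label))
    return kept


# Toy 2x2 image: three RGB channels plus one infrared channel.
rgb = [[[0, 0], [0, 0]] for _ in range(3)]
ir = [[[1, 1], [1, 1]]]
fused_input = pixel_level_fusion(rgb, ir)

# Hypothetical detections: both branches see the same hot spot.
rgb_dets = [((0, 0, 10, 10), 0.6, "hot_spot")]
ir_dets = [((1, 1, 10, 10), 0.9, "hot_spot"),
           ((50, 50, 60, 60), 0.7, "diode_short_circuit")]
fused_dets = decision_level_fusion(rgb_dets, ir_dets)
```

The fused input has four channels, which is why pixel-level fusion increases the input dimensionality. In the decision-level example the two hot spot boxes overlap with an IoU of 0.81, so only the higher-scoring infrared detection survives alongside the diode detection.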

Experimental results demonstrate that the mid-level feature fusion strategy achieves the best performance in terms of mAP@50 detection accuracy (as shown in Table 3). By performing deep fusion at the intermediate stage of multimodal feature extraction, this strategy achieves an optimal balance between computational cost and model performance, effectively integrating complementary information from different modalities and enabling the network to establish robust cross-modal associations at critical stages of feature learning.

4.4. Cross-Validation Experiments

To evaluate the model’s robustness and generalization capability, this study employs a five-fold cross-validation method. The training and validation sets are combined into a dataset comprising 5060 images, which is uniformly divided into five subsets. In each round of experimentation, four subsets are used for training while the remaining subset serves as validation. This process is repeated five times, ensuring each subset is used for validation once. This approach reduces bias introduced by data partitioning and enhances the reliability of evaluation results.
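The partitioning described above can be sketched as follows. This is a minimal illustration, assuming a random shuffle with a fixed seed; the function name `k_fold_indices` and the seed are hypothetical, and the authors' actual splitting procedure is not specified in the text.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, val_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Split into k nearly equal folds; the first `remainder` folds get one extra.
    fold_size, remainder = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        stop = start + fold_size + (1 if i < remainder else 0)
        folds.append(indices[start:stop])
        start = stop
    # Each fold serves as the validation set exactly once.
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


splits = list(k_fold_indices(5060, k=5))
```

With 5060 images and five folds, each round trains on 4048 images and validates on the remaining 1012.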

Each round of experiments followed a standard training procedure, with performance metrics (precision, recall, F1 score, and mean average precision) calculated on the validation set. After all rounds were completed, the per-round results were aggregated to compute the mean and standard deviation of each metric, enabling a robust estimate of model performance. Experimental results are presented in Table 4.

As shown in Table 4, the model performs stably across the five folds. The average mAP@50 reaches 82.3% with a standard deviation of only 1%, indicating minimal fluctuation across folds and strong robustness. The standard deviations of precision and recall are 1.5% and 2.1%, respectively, suggesting consistent performance across folds. The standard deviation of the F1 score is only 0.8%, further confirming that the model balances precision and recall consistently. Based on the cross-validation results, the model with the best mAP on the validation set was selected for evaluation on the test set.
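The aggregation step can be sketched as below. The per-fold values are placeholders chosen for easy arithmetic and are not the results in Table 4; the function name `summarize_folds` is hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population form was reported.

```python
from statistics import mean, stdev


def summarize_folds(fold_metrics):
    """Return {metric: (mean, sample standard deviation)} across folds."""
    summary = {}
    for name in fold_metrics[0]:
        values = [fold[name] for fold in fold_metrics]
        summary[name] = (mean(values), stdev(values))
    return summary


# Placeholder per-fold scores, NOT the paper's results.
placeholder_folds = [
    {"mAP50": 0.70},
    {"mAP50": 0.72},
    {"mAP50": 0.74},
    {"mAP50": 0.76},
    {"mAP50": 0.78},
]
summary = summarize_folds(placeholder_folds)
```

A small standard deviation relative to the mean is what supports the claim of stability across folds.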

Table 5 presents a comparison between this model’s best results on the validation set and its performance on the test set.

As shown in Table 5, the model that performed best on the validation set performs slightly lower on the test set. mAP@50 decreased from 83.4% to 83.2%, the F1 score dropped from 79.7% to 78.3%, and precision fell from 83.5% to 80.1%. These declines are small, the largest being 3.4 percentage points in precision, which indicates that the model maintains stable generalization performance on unseen data. Overall, the results demonstrate that the model retains high detection accuracy even in complex scenarios.
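The size of each decline can be made explicit with a short calculation. The values below are the ones quoted in the paragraph above; the variable names are illustrative only.

```python
# Values quoted in the text for the best validation model and the test set (%).
validation = {"mAP@50": 83.4, "F1": 79.7, "Precision": 83.5}
test = {"mAP@50": 83.2, "F1": 78.3, "Precision": 80.1}

# Absolute decline in percentage points, rounded to one decimal place.
drops = {name: round(validation[name] - test[name], 1) for name in validation}

# Metric with the largest decline.
largest = max(drops, key=drops.get)
```

The declines are 0.2, 1.4, and 3.4 percentage points for mAP@50, F1, and precision, respectively, so precision accounts for most of the gap between validation and test performance.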

4.5. Ablation Experiment

To validate the effectiveness and practicality of the improved fault detection scheme for multimodal photovoltaic modules, this paper adopts a mid-level feature fusion detection method that combines visible and infrared images and is built on the YOLOv11n baseline model. Experiments were conducted on the self-built RGBIRPV dataset. Under identical experimental settings, the contribution of each module to model performance was quantified. The evaluation metrics cover both detection accuracy and model complexity, including precision, recall, mAP@50, mAP@50:95, F1 score, number of parameters, floating-point operations, and model size.

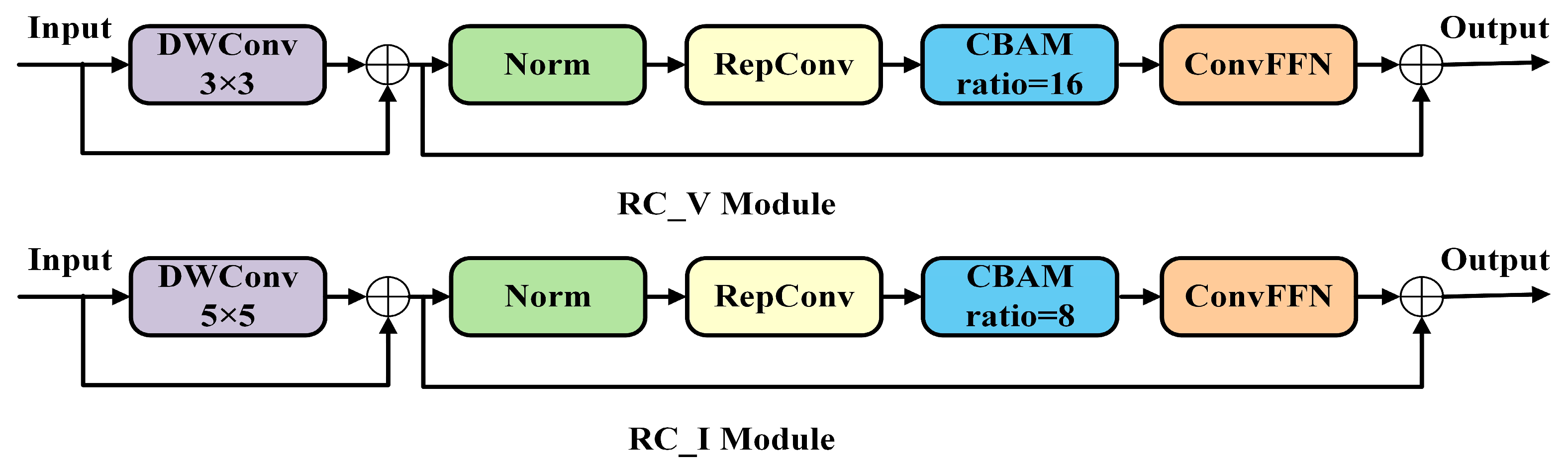

4.5.1. RC Module Experiment

To investigate the impact of the attention compression ratio on feature extraction across different modalities, we conducted ablation studies on the RC_V and RC_I modules. As summarized in Table 6, the configuration with RC_V = 16 and RC_I = 8 enabled the model to most effectively leverage the complementary properties of bimodal features: the visible branch excelled at preserving fine details and textures, while the infrared branch showed enhanced sensitivity in thermal feature extraction and anomaly localization. Compared to a uniform compression ratio, this asymmetric design significantly improved detection performance, achieving a precision of 83.8%, a recall of 74.1%, and an mAP@50 of 83.0%. These results collectively validate the rationality and effectiveness of the proposed modality-specific attention differentiation strategy.

This study also evaluated, via ablation experiments, the impact of heterogeneous convolutional kernel sizes on multimodal feature extraction in the RC_V and RC_I modules. As presented in Table 7, the model performed best with a kernel size of 3 in RC_V and 5 in RC_I, achieving a precision of 83.8%, an mAP@50 of 83.0%, and an F1 score of 78.6%. This outcome indicates that this kernel combination significantly boosts precision and mean average precision, validating that small kernels for detail in the visible branch and large kernels for global context in the infrared branch are an effective strategy.

4.5.2. Module Ablation Experiment

The ablation results in Table 8 show that the feature-level fused YOLOv11n baseline achieves p = 79.6%, R = 76.3%, mAP@50 = 81.9%, mAP@50:95 = 66.7%, and F1 = 77.9%, with 3.95 M parameters, 9.5 GFLOPs, and a model size of 8.3 MB. These results demonstrate the baseline model’s capability to detect faulty modules, though it remains suboptimal in accuracy at high IoU thresholds and in lightweight design.

Among the single-module enhancements, introducing the RC module strengthened the model’s edge feature extraction for PV module defects, improving p by 4.2%, mAP@50 by 1.1%, and F1 by 0.8%, while reducing parameters by 5.8%. However, R decreased by 2.2%, indicating that this architecture may miss more faults (false negatives) in exchange for better localization accuracy. After introducing the FA module, R remained largely stable, while F1 improved to 78.7% and mAP@50 increased by 0.6%. This indicates that the feature aggregation mechanism enhances global information modeling, which benefits multi-class defect detection. Adding the EVG module reduced parameters by 7.6% while maintaining mAP@50:95 = 67.1%. Despite minor fluctuations in accuracy metrics, its lightweight design makes deployment feasible on resource-constrained monitoring equipment in photovoltaic power plants.

Among the pairwise combinations, the RC and FA modules together achieve a balance between performance and reliability: mAP@50 increases to 83.3% and mAP@50:95 grows by 0.5%, while the parameter count and GFLOPs decrease. This demonstrates that re-parameterized convolutions and feature aggregation mechanisms both contribute to PV fault detection. The RC-EVG combination achieves mAP@50:95 = 79.3%, while increasing R by 1.1% and F1 by 1.4%, demonstrating strong recognition capabilities for localized micro-hotspot faults. However, GFLOPs increased to 10.1, leading to higher computational overhead. The FA-EVG model maintains detection stability while raising R to 77.7% and reducing parameters to 3.59 M. With a model size of only 7.7 MB, it holds excellent prospects for edge deployment.

Ultimately, the proposed RFE-YOLO model achieved performance metrics of p = 82.5%, R = 76.5%, mAP@50 = 83.7%, mAP@50:95 = 67.6%, and F1 = 79.4%. Compared to the baseline model, this approach reduced the number of parameters by 6.6% while maintaining a model size below 8.0 MB. Experimental results demonstrate that RFE-YOLO maintains high detection robustness under multi-threshold conditions while balancing lightweight design with accuracy. This model is suitable for deployment on edge computing devices, enabling rapid and precise identification of various photovoltaic module fault types.
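The ablation results mix two kinds of change: relative reductions (parameter counts) and absolute differences between percentages (accuracy metrics). The sketch below recomputes a few of the reported figures; it assumes that the parameter counts are given in millions and that the accuracy gains are percentage-point differences. The helper names are illustrative.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Relative decrease from baseline to new, in percent."""
    return (baseline - new) / baseline * 100.0


def point_gain(baseline: float, new: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return new - baseline


# Parameter counts in millions (assumed unit), baseline vs. RFE-YOLO and FA-EVG.
print(round(relative_reduction(3.95, 3.69), 1))  # RFE-YOLO parameter reduction
print(round(relative_reduction(3.95, 3.59), 1))  # FA-EVG parameter reduction

# Accuracy metrics in percent, baseline vs. RFE-YOLO.
print(round(point_gain(81.9, 83.7), 1))  # mAP@50 gain
print(round(point_gain(79.6, 82.5), 1))  # precision gain
```

With these inputs, the RFE-YOLO parameter reduction evaluates to about 6.6%, matching the figure stated above, and the mAP@50 and precision gains evaluate to 1.8 and 2.9 percentage points.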

4.6. Comparison Experiments of Different Models

To evaluate the effectiveness of photovoltaic module fault detection and ensure a comprehensive comparison, the experiments cover both single-modal and multi-modal scenarios. The comparison focuses on multiple metrics, including p, R, mAP@50, mAP@50:95, F1, parameter count, GFLOPs, and model size.

Single-modal detection models based on infrared images include YOLOv5n, YOLOv6n, YOLOv8n, YOLOv9t, YOLOv10n, YOLOv11n, YOLOv12n [41], and RT-DETR-l [42]. Multi-modal detection models based on visible and infrared image fusion include mid-fusion-YOLOv11n, DEYOLO [36], ICAFusion [43], and RFE-YOLO. The specific performance metrics are compared in Table 9, which enables a comprehensive evaluation of the performance differences among these methods.

In single-modal infrared image experiments, YOLOv11n demonstrated superior performance in photovoltaic module fault detection tasks. Specifically, this model achieved the best results across three core metrics: mAP@50 (80.6%), mAP@50:95 (60.8%), and F1 score (77.0%). It also attained a recall rate of 77.1%, the highest among all compared models, demonstrating strong fault detection capability and a low risk of missed detections. In contrast, while YOLOv12n holds an advantage in precision and RT-DETR-l excels in mAP@50:95, both suffer from substantial model size and computational overhead, making them unsuitable for deployment in resource-constrained real-world scenarios. YOLOv11n, however, achieves both high accuracy and efficiency with only 2.58 million parameters, 6.3 GFLOPs, and a model size of 5.5 MB, fully demonstrating its outstanding lightweight characteristics and practical value.

In multimodal visible and infrared image experiments, RFE-YOLO demonstrated the most outstanding overall performance. This model achieved the best results across four key metrics (precision of 82.5%, mAP@50 of 83.7%, mAP@50:95 of 67.6%, and F1 score of 79.4%) while achieving a recall rate of 76.5%. This indicates not only more accurate localization of PV module faults but also an effective reduction in missed detections, ensuring the completeness and reliability of detection results. In contrast, feature-level fusion models such as mid-fusion YOLOv11n and ICAFusion exhibit insufficient generalization capabilities at high IoU thresholds. While DEYOLO demonstrates superior precision, its large parameter count and model size limit practical deployment. Notably, RFE-YOLO achieves high-precision detection in complex scenarios with a lightweight configuration requiring only 3.69 M parameters and an 8.0 MB model size, further highlighting its practical value in PV plant operations and maintenance.

In line with real-world application needs, the advantages of RFE-YOLO extend beyond improvements in experimental metrics to include deployability. For large-scale inspection tasks in photovoltaic power plants, detection models must simultaneously deliver high accuracy and efficiency. RFE-YOLO achieves rapid inference with low computational overhead while maintaining detection performance, making it highly suitable for deployment on drones or edge computing devices. This demonstrates that the method not only enhances fault detection accuracy but also significantly boosts its feasibility and application potential in real-world photovoltaic operations and maintenance environments.

In the RGB and IR dual-modality comparison experiments, four models were selected for comparison: mid-stage fusion YOLOv11n, DEYOLO, ICAFusion, and RFE-YOLO. Each set of experiments was independently repeated five times, and the results were plotted as box plots to demonstrate the performance distribution and stability of different models across multiple experiments, as shown in Figure 13. Given the high dependency of photovoltaic module fault detection tasks on detection accuracy, this study employs mAP@50 as the key comparison metric. This metric authentically reflects the stability of fault localization and accuracy in photovoltaic module fault detection, aligning closely with real-world requirements for stable, reliable detection results that minimize missed faults.
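For readers less familiar with the metric, mAP@50 counts a predicted box as correct when its intersection over union (IoU) with a ground-truth box is at least 0.50, whereas mAP@50:95 averages over the thresholds 0.50, 0.55, ..., 0.95. The sketch below shows only this matching criterion, assuming axis-aligned boxes in (x1, y1, x2, y2) format; the example boxes are made up for illustration and are not from the RGBIRPV dataset.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), axis-aligned


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds used by mAP@50:95; mAP@50 uses only the first one.
THRESHOLDS: List[float] = [t / 100 for t in range(50, 100, 5)]


def thresholds_passed(pred: Box, truth: Box) -> List[float]:
    """Thresholds at which the prediction would count as a match."""
    score = iou(pred, truth)
    return [t for t in THRESHOLDS if score >= t]


# Illustrative boxes: a prediction shifted by 2 units against a 10 x 10 target.
print(thresholds_passed((2.0, 0.0, 12.0, 10.0), (0.0, 0.0, 10.0, 10.0)))
```

In this example the IoU is 2/3, so the prediction counts as a match at the 0.50 threshold used by mAP@50 but fails the stricter thresholds from 0.70 upward.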

As shown in Figure 13, RFE-YOLO demonstrates significantly superior performance compared to other methods on the mAP@50 metric. Statistically, RFE-YOLO achieves an average score of 0.837, surpassing Mid-fusion-YOLOv11n, DEYOLO, and ICAFusion by 2.2%, 2.2%, and 5.7%, respectively. The box plot reveals that the performance distribution range of RFE-YOLO shows almost no overlap with other methods, indicating its significant performance advantage. Particularly compared to ICAFusion, RFE-YOLO demonstrates a notable improvement in detection accuracy. Notably, RFE-YOLO not only excels in average performance but also surpasses the average minimum values of Mid-fusion-YOLOv11n and DEYOLO, fully demonstrating the method’s robustness and stability. Although DEYOLO exhibits an upward performance trend in consecutive experiments, RFE-YOLO achieves a superior balance between detection accuracy and model stability through its effective integration of multi-scale feature extraction fusion mechanisms. This makes it a more reliable and efficient solution for object detection tasks in complex environments.

4.7. Visual Analytics

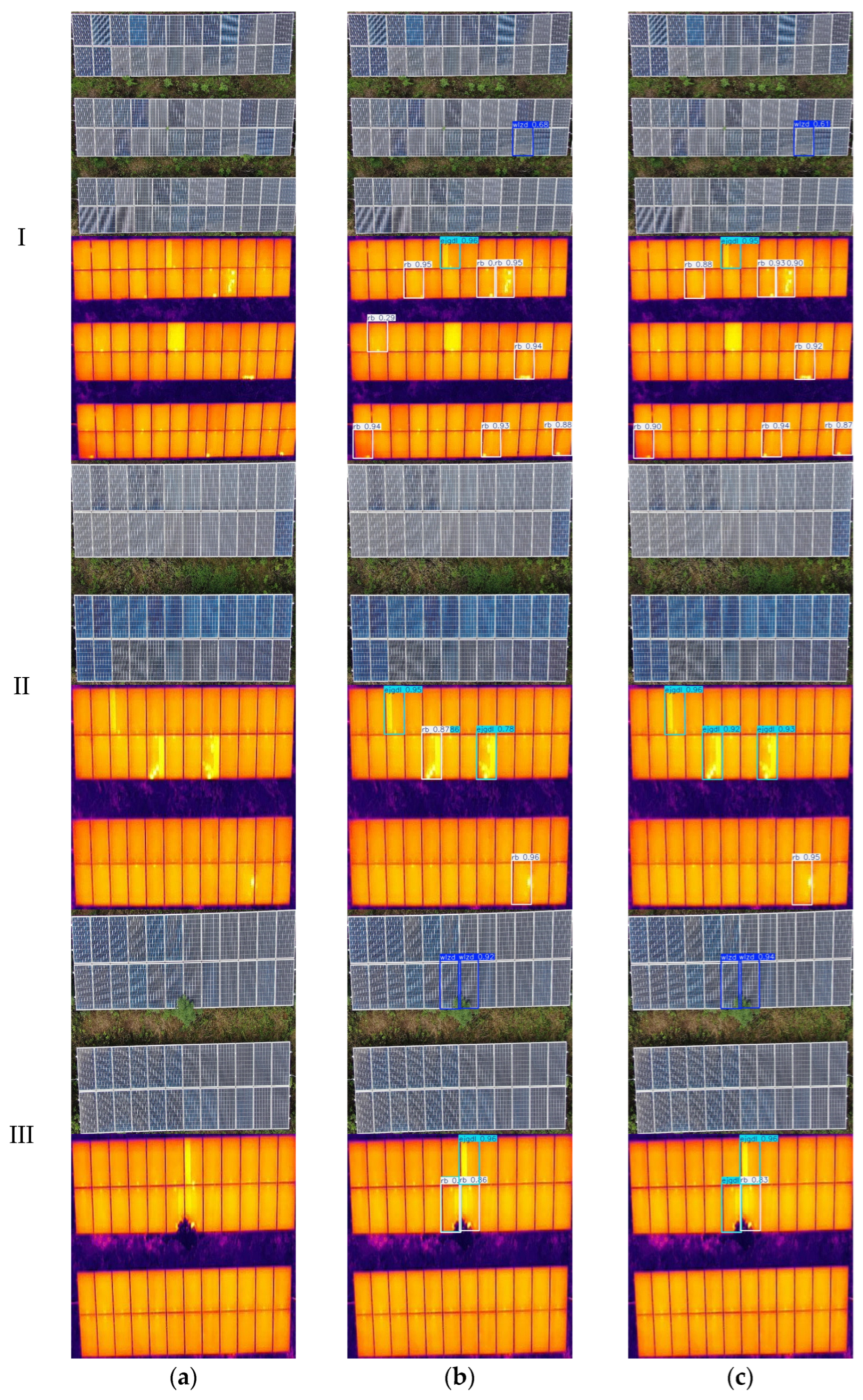

To evaluate the overall performance of the improved RFE-YOLO model, three representative sets of visible and infrared images were selected from the test dataset for comparative analysis. The detection results before and after model enhancement are shown in Figure 14.

In testing three sets of fault images from different types of photovoltaic modules, this study compares the mid-term fusion YOLOv11n model with the improved RFE-YOLO model. The experiments included visible light images containing only physical obstruction categories, while infrared images involved only hot spots and diode short circuits. Overall test results indicate that the RFE-YOLO model achieves more accurate PV module fault identification. In Group I images, the mid-term fusion YOLOv11n model misclassified normal areas as hot spot faults in infrared images, whereas the RFE-YOLO model accurately distinguished them and produced higher confidence detection outputs for other faults. In Group II images, both models correctly identified the absence of physical shading in visible light images. However, in infrared images, the mid-fusion YOLOv11n model misclassified diode short-circuit faults as hot spot faults, whereas the RFE-YOLO model not only correctly identified the fault type but also demonstrated significantly higher confidence levels than the comparison model. In Group III images, both models detected physical obstruction in visible light images, but the RFE-YOLO model demonstrated markedly higher detection confidence. In infrared images, the mid-term fusion YOLOv11n model again misclassified diode short-circuit faults as hot spots, whereas the RFE-YOLO model achieved accurate identification.

In summary, the RFE-YOLO model outperforms the mid-term fusion YOLOv11n model in both fault detection accuracy and confidence levels, effectively reducing false detections. This result demonstrates that the improved RFE-YOLO model exhibits greater robustness and reliability in photovoltaic module fault detection tasks.

5. Discussion

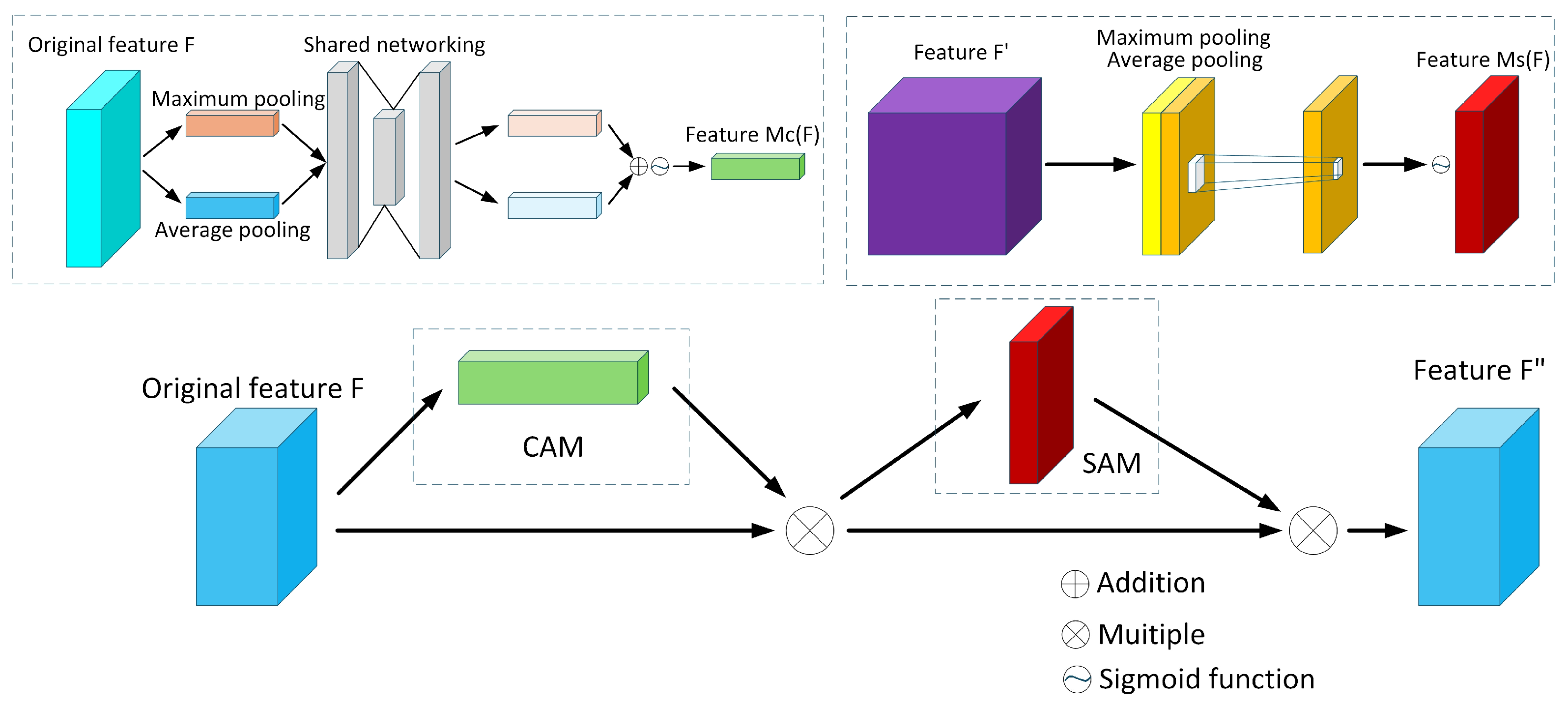

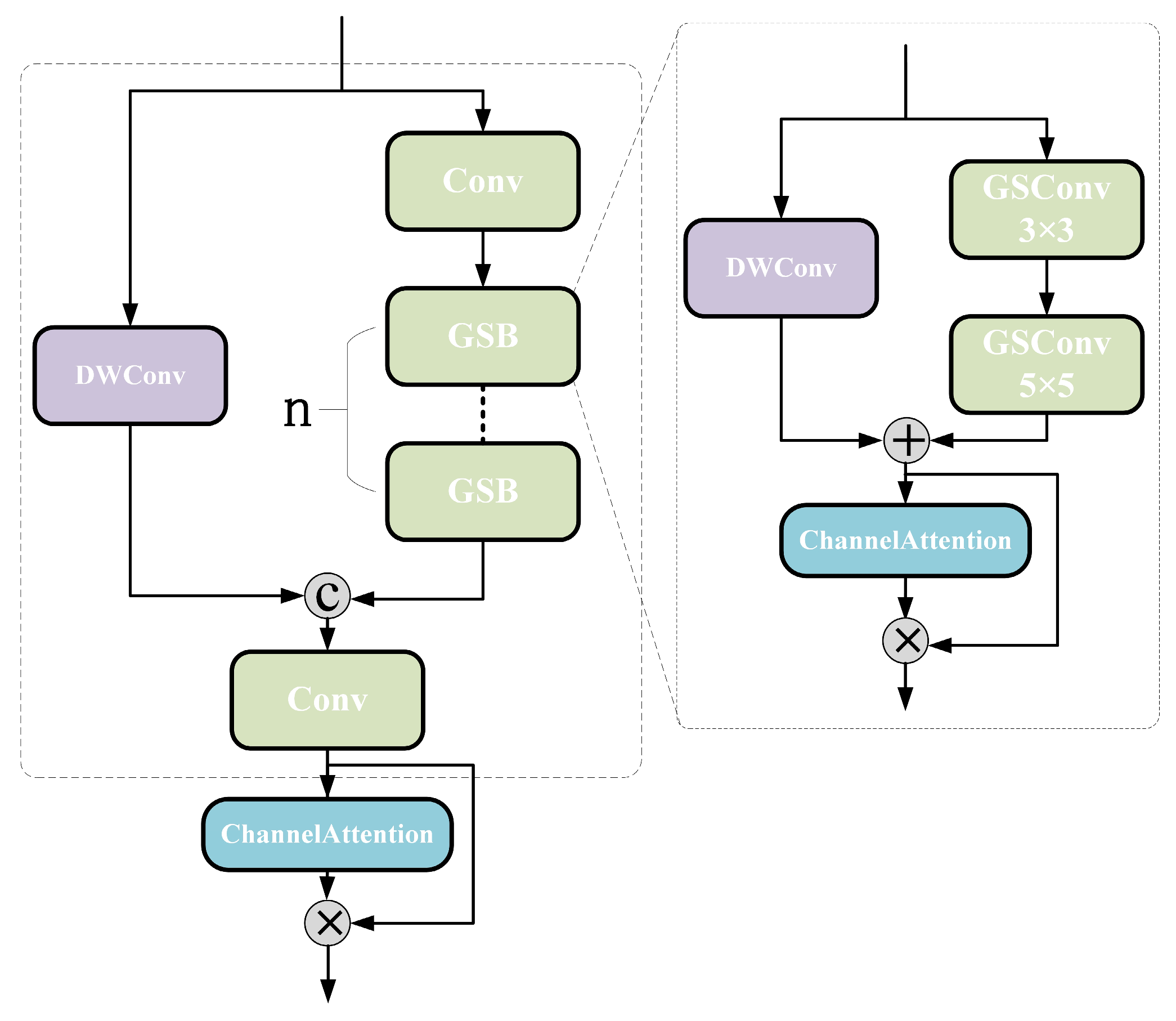

Rapid and accurate detection of photovoltaic module failures is crucial for power plant health management and operational decision-making. To this end, this study constructs the multimodal detection model RFE-YOLO for visible-infrared images based on the lightweight and deployable YOLOv11n framework. By comparing different fusion strategies, feature-level mid-term fusion is selected as the multimodal fusion architecture. The model’s core introduces a multi-modal re-parameterized feature extraction RC module based on the CBAM attention mechanism. To address the imbalance in dual-modal quality caused by environmental variations, heterogeneous RC_V and RC_I submodules are designed to differentially enhance visible light structural features and infrared thermal distribution features. A lightweight FA fusion module is further introduced, which adaptively integrates complementary information through learnable modality balancing and cascaded attention mechanisms. The GSConv-constructed EVG enhancement module strengthens the extraction of shallow textures and deep semantics while ensuring computational efficiency, significantly improving detection accuracy and robustness for subtle defects such as hot spots and diode short circuits.
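The idea of learnable modality balancing can be illustrated with a deliberately simplified sketch. It is not the FA module itself: it assumes that two learnable logits are normalized with a softmax into per-modality weights that sum to one, and it omits the cascaded attention stage entirely. The function names and the flat feature vectors are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(visible: Sequence[float], infrared: Sequence[float],
         logits: Sequence[float]) -> List[float]:
    """Weighted sum of two feature vectors with softmax-normalized weights."""
    if len(visible) != len(infrared):
        raise ValueError("feature vectors must have the same length")
    w_visible, w_infrared = softmax(logits)
    return [w_visible * v + w_infrared * i for v, i in zip(visible, infrared)]


# Equal logits weight both modalities equally (hypothetical feature values).
print(fuse([1.0, 2.0], [3.0, 4.0], [0.0, 0.0]))
```

In a trained network the logits would be updated by backpropagation, so the balance could shift toward whichever modality is more informative, for example toward the infrared branch when the visible image is overexposed.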

Although the model demonstrates strong performance under controlled dataset conditions, its feature representation and prediction stability require improvement when addressing imaging quality degradation caused by sudden environmental changes, such as overexposure, low contrast, and noise interference. Future research should integrate edge computing devices with IoT cloud platforms to systematically validate the model’s real-time performance in multimodal data scenarios, ensuring its robustness and reliability in complex real-world environments.

In terms of model accuracy and related metrics, this study achieves significant improvements over existing methods. However, experimental results also reveal several pressing issues. The dataset is a crucial foundation for model experimentation, and although this paper strives to simulate real-world detection tasks for photovoltaic modules, limitations remain, including restricted target category coverage and inconsistent data quality. Furthermore, uncertainties introduced during manual annotation may lead to labeling errors or classification biases, potentially affecting model training outcomes.

Regarding model architecture optimization, while RFE-YOLO has made phased progress in parameter control and computational complexity, further exploration of lighter network structures and algorithms is needed to enhance computational efficiency while maintaining high accuracy. Through continuous refinement of model design, more efficient computational performance in practical applications is anticipated, accelerating the deployment of related technologies.

In the context of photovoltaic power plant operations and maintenance, this study offers a practical and lightweight solution for module fault detection. It assists maintenance personnel in rapidly identifying component failures, reduces reliance on manual inspections, and enhances fault response efficiency. It is hoped that this work will contribute to advancing intelligent monitoring technologies for power plant operations and maintenance.

6. Conclusions

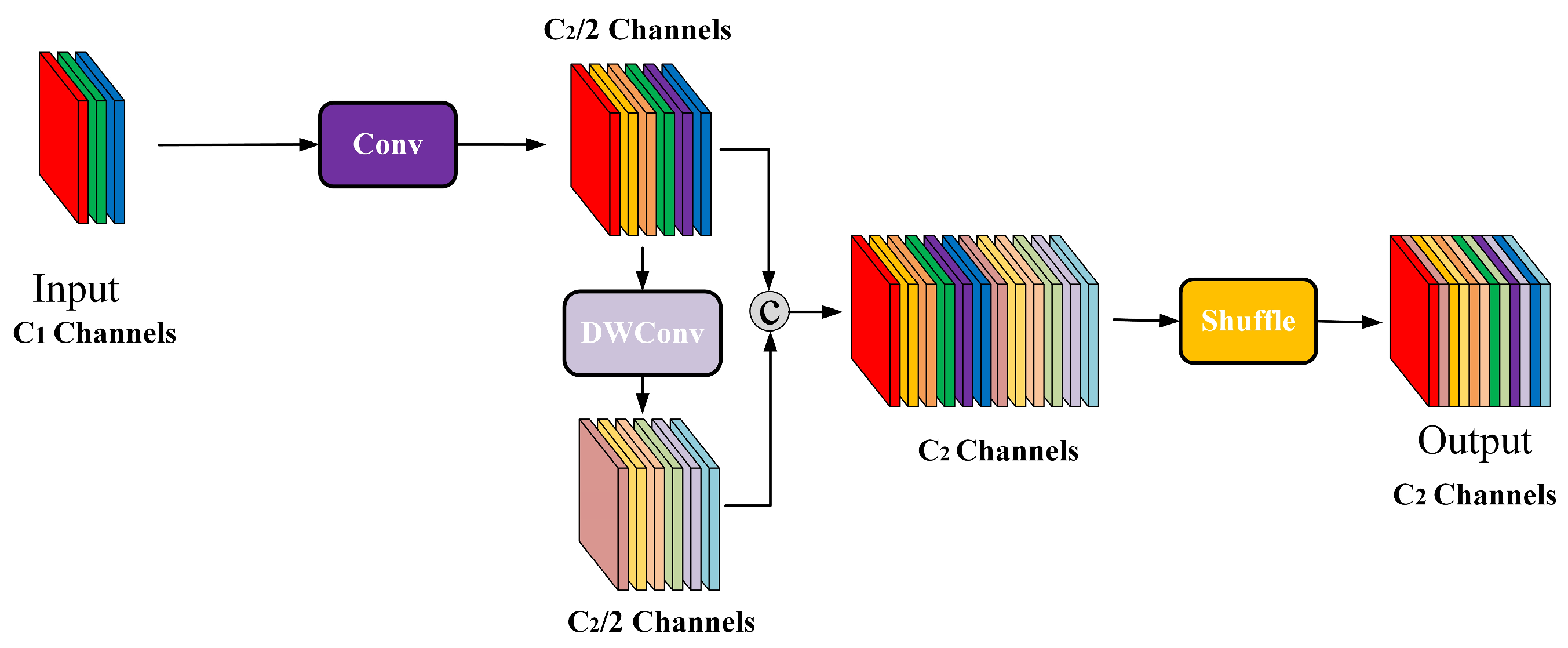

This paper constructs an RGBIRPV dataset based on real-world centralized PV power plant environments, featuring matched infrared and visible light images. This enriches data resources in the field and provides crucial support for in-depth research on PV module fault detection tasks. Based on this dataset, we developed an RFE-YOLO detection model tailored for multimodal PV module images. The model’s core comprises three synergistic modules: (1) an RC feature extraction module designed with CBAM attention and reparameterization techniques, where heterogeneous RC_V and RC_I submodules enhance visible structural textures and infrared thermal distribution features, respectively, effectively mitigating modal quality imbalance caused by imaging condition variations; (2) a lightweight adaptive fusion FA module that dynamically adjusts the contribution of dual-modal features through learnable weight allocation and attention cascading mechanisms, achieving efficient integration of complementary information; and (3) an EVG multi-scale enhancement module based on GSConv, which maintains low computational overhead while strengthening the fusion of shallow details and deep semantics, significantly improving robustness in identifying minute defects such as hot spots and diode short circuits.

Experimental results demonstrate that the proposed model achieves an mAP@50 of 83.7% on the real-world test dataset. Compared to the baseline method, it improves mAP@50 by 1.8 percentage points and precision by 2.9 percentage points, while reducing the number of parameters and computational complexity by 3.2% and 5.4%, respectively. These findings validate the effectiveness of this approach for multimodal photovoltaic module fault detection, providing a feasible technical pathway for real-time intelligent monitoring of power plants.

Future research will focus on three key areas: (1) integrating historical power generation performance data with multimodal UAV imagery to construct a joint dataset covering a broader range of failure modes, while exploring deep learning-based predictive diagnostic methods for precise severity assessment; (2) further reducing computational overhead through techniques such as model pruning, quantization, or knowledge distillation, and investigating ultra-lightweight architectures suitable for resource-constrained edge devices; and (3) promoting the practical integration and field deployment of RFE-YOLO within photovoltaic power plant operation and maintenance systems to enable dynamic early warnings for module status, precise fault localization, and effective control.