A Facial-Expression-Aware Edge AI System for Driver Safety Monitoring

Abstract

1. Introduction

2. Related Work

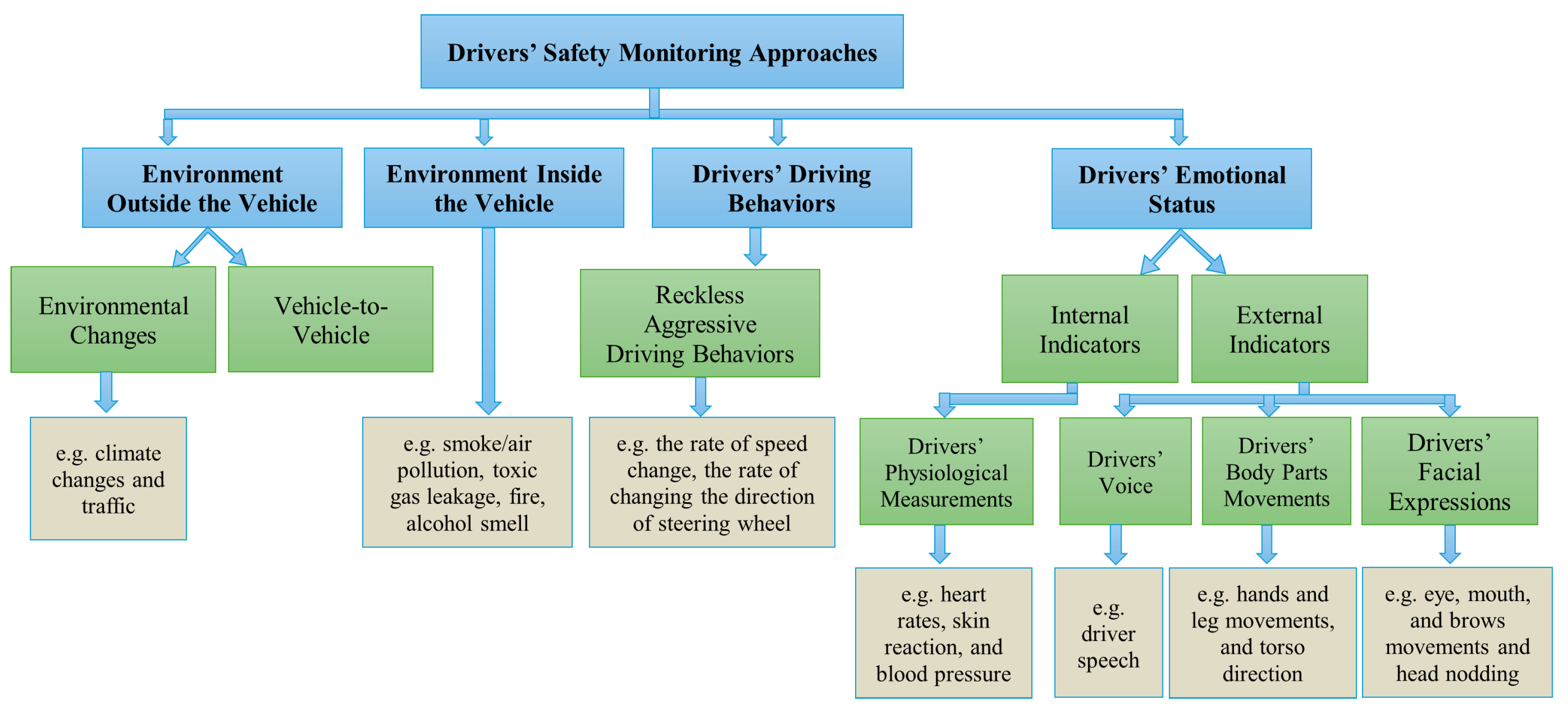

2.1. Driver Safety Monitoring Approaches

- External Vehicle Environment: Road conditions, traffic, and surrounding obstacles;

- Internal Vehicle Environment: Cabin conditions, passenger presence, and potential distractions;

- Driver Behavior: Steering patterns, braking habits, and adherence to traffic rules;

- Driver State: Fatigue levels, emotional responses, and attentiveness.

2.2. Facial Expression Detection and Analysis

2.3. Existing DMS Products with Facial Expressions Monitoring

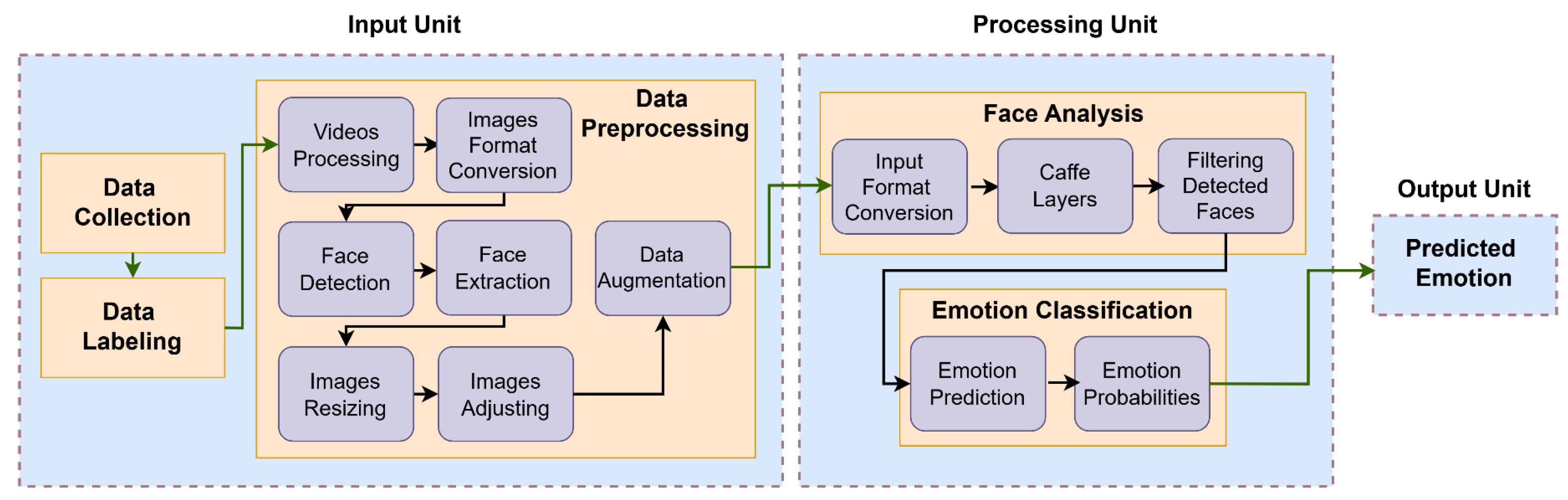

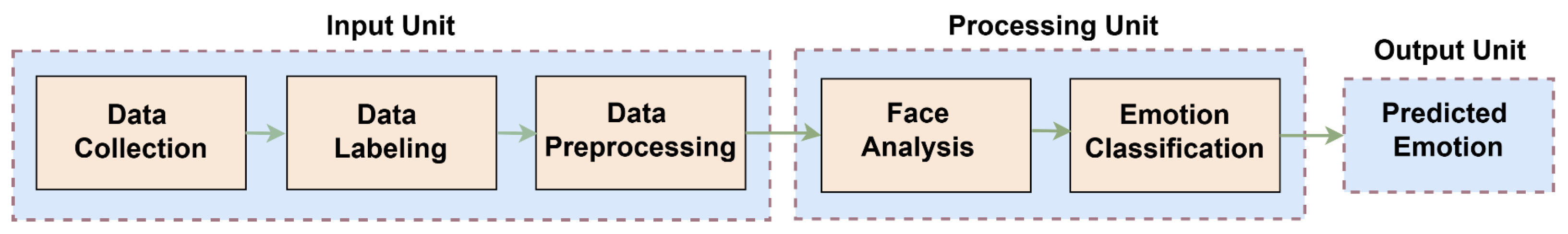

3. System Overview

3.1. Input Unit

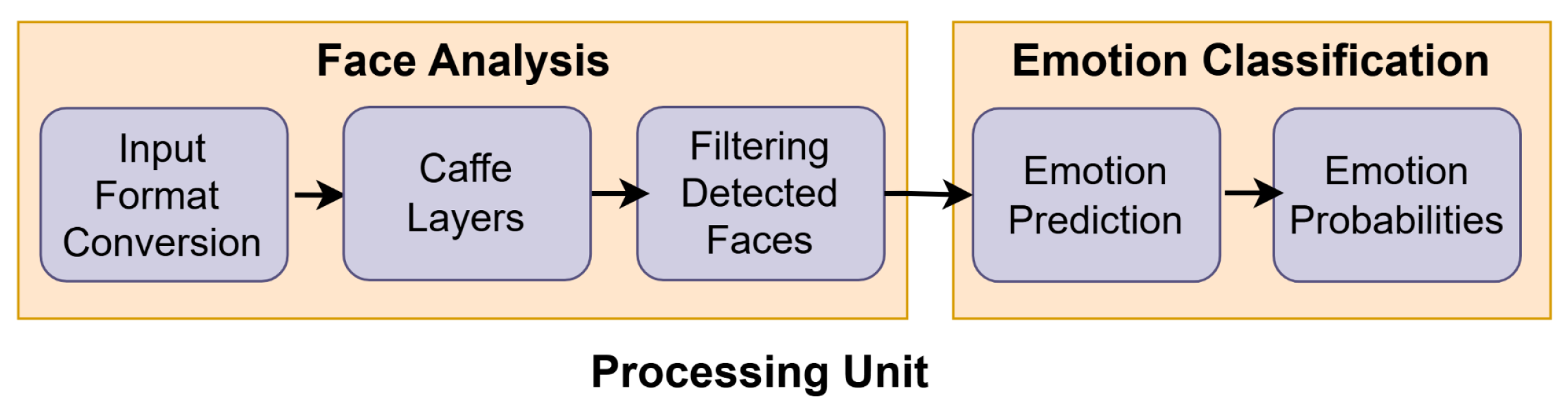

3.2. Processing Unit

3.3. Output Unit

3.4. System Dataflow

- ■

- Data Collection: Images and videos are obtained from different datasets and loaded by the system.

- ■

- Data Labeling: Images and video frames collected from various datasets are manually labeled and sorted into four separate subfolders to ensure proper categorization.

- ■

- Data Preprocessing: Raw images and video frames undergo preprocessing to prepare them for input into the system and to ensure compatibility with the system’s input requirements.

- ■

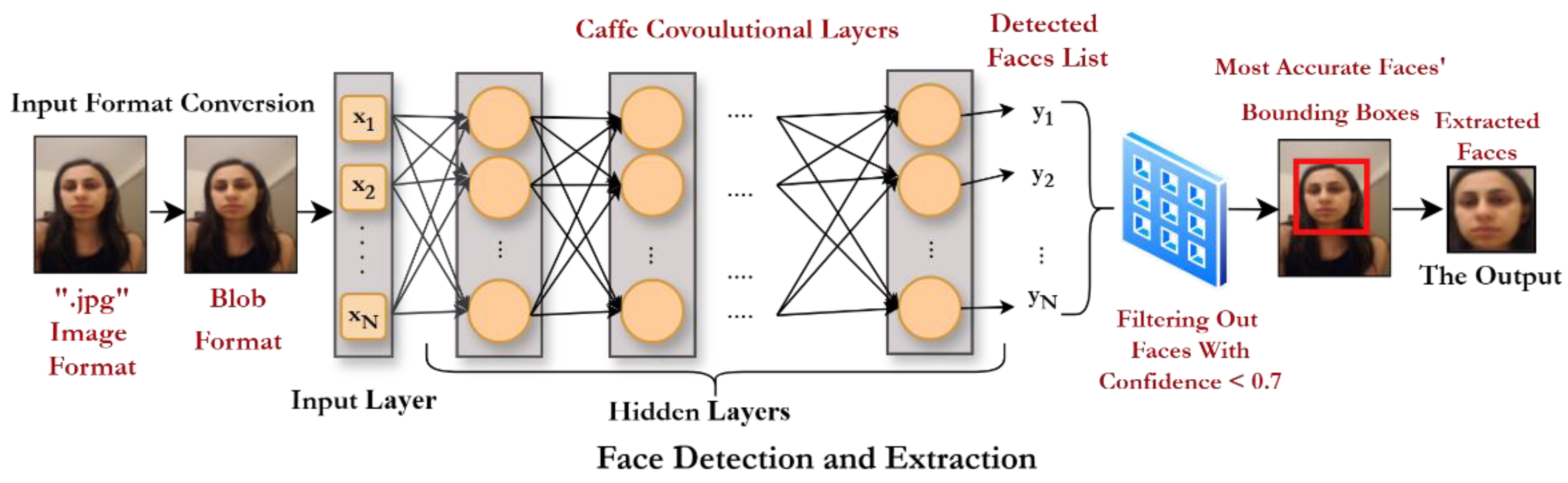

- Face Detection and Extraction: During the preprocessing stage, faces are detected using the DNN-based method, and the corresponding facial regions are extracted to produce images containing human faces only.

- ■

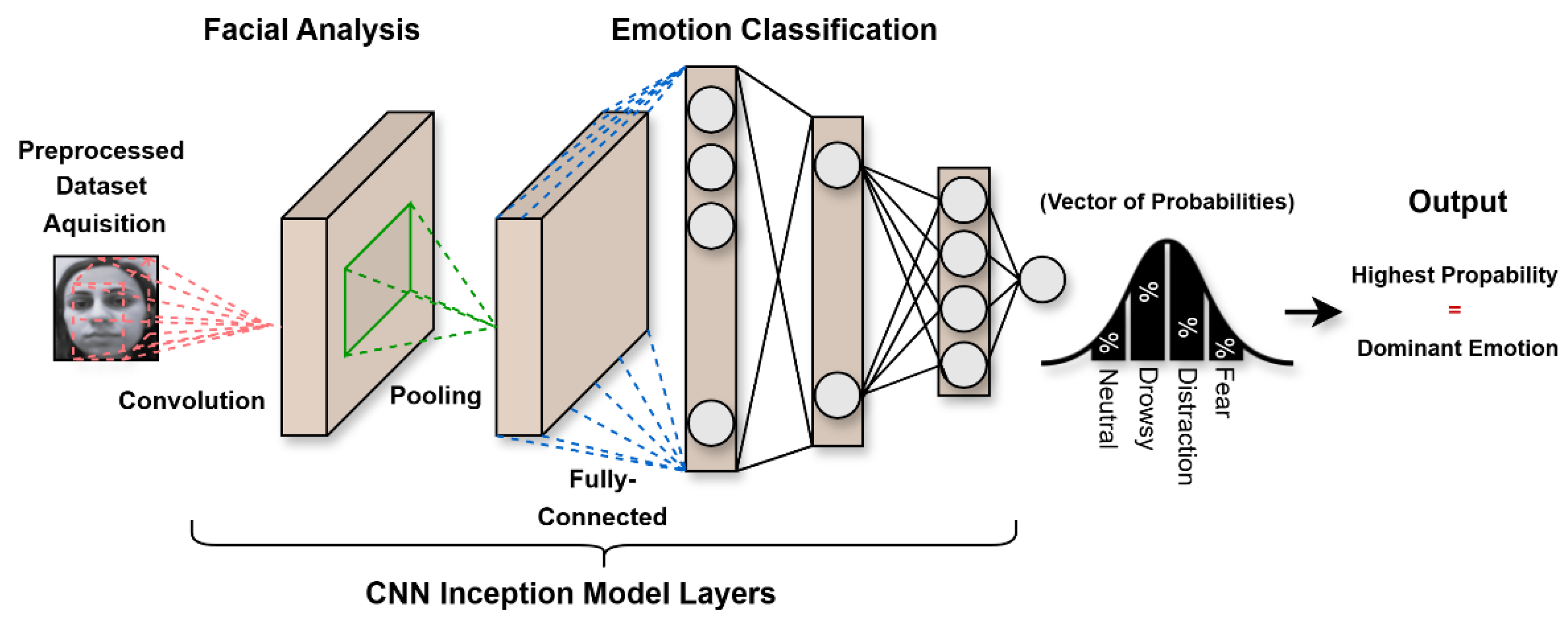

- Face Analysis: The extracted facial data are fed into the model, where they are analyzed by a CNN-based method to automatically learn the distinctive features of various driver facial expressions (distraction, drowsiness, and fear), as well as the neutral face expression.

- ■

- Emotion Prediction: The model computes and outputs the probability distribution across all facial expression categories.

- ■

- Output Interpretation: The output probability distribution is examined to determine the probability of the maximum value, which is then mapped to the corresponding facial expression label (e.g., ’Neutral’ and ’Drowsy’). The system subsequently displays the emotion with the highest predicted probability.
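
The last two steps of the dataflow (emotion prediction and output interpretation) can be illustrated with a minimal sketch. It assumes the model emits four raw scores that are turned into probabilities with a softmax; the label order in `LABELS` and the example scores are hypothetical, since the text names the four classes but not their index order.

```python
import math

# Assumed label order; the source lists the four classes but not their indices.
LABELS = ["Distraction", "Drowsiness", "Fear", "Neutral"]

def softmax(scores):
    """Convert raw scores to a probability distribution (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def interpret(probabilities, labels=LABELS):
    """Return the label with the highest probability and that probability."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities[best]

probs = softmax([0.2, 2.9, -1.0, 1.1])  # hypothetical model scores
label, confidence = interpret(probs)
print(label, round(confidence, 3))
```

Returning the probability together with the label leaves room for a confidence threshold before an alert is raised, although the text does not say whether the system applies one.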

3.5. Emotions Detected by the Proposed System

4. The Learning Model

4.1. Data Collection

4.2. Data Labeling

4.3. Data Preprocessing

- Input layer;

- Feature extraction layers (convolutional, pooling, Rectified Linear Unit (ReLU));

- Normalization layers (batch normalization, dropout);

- Output layers (softmax, loss);

- A final concatenation layer for feature integration.

4.4. Facial Analysis

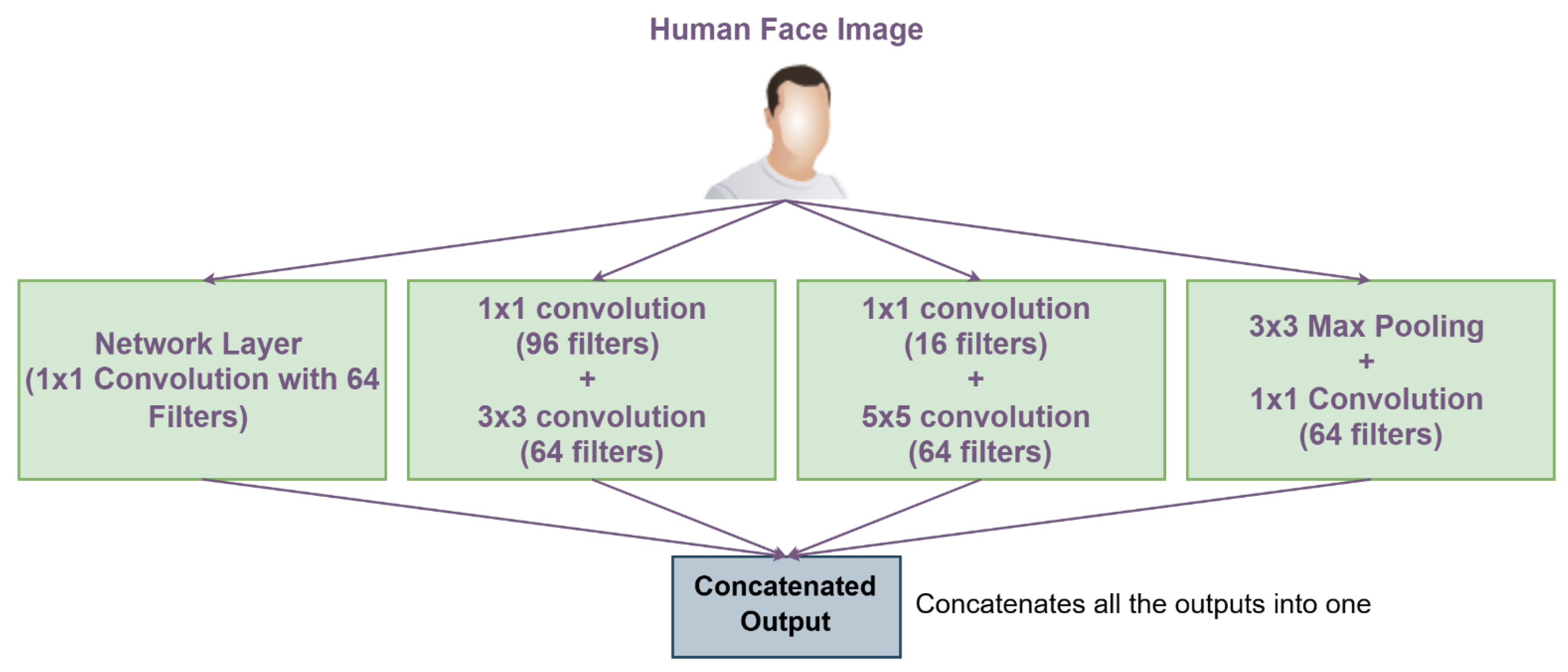

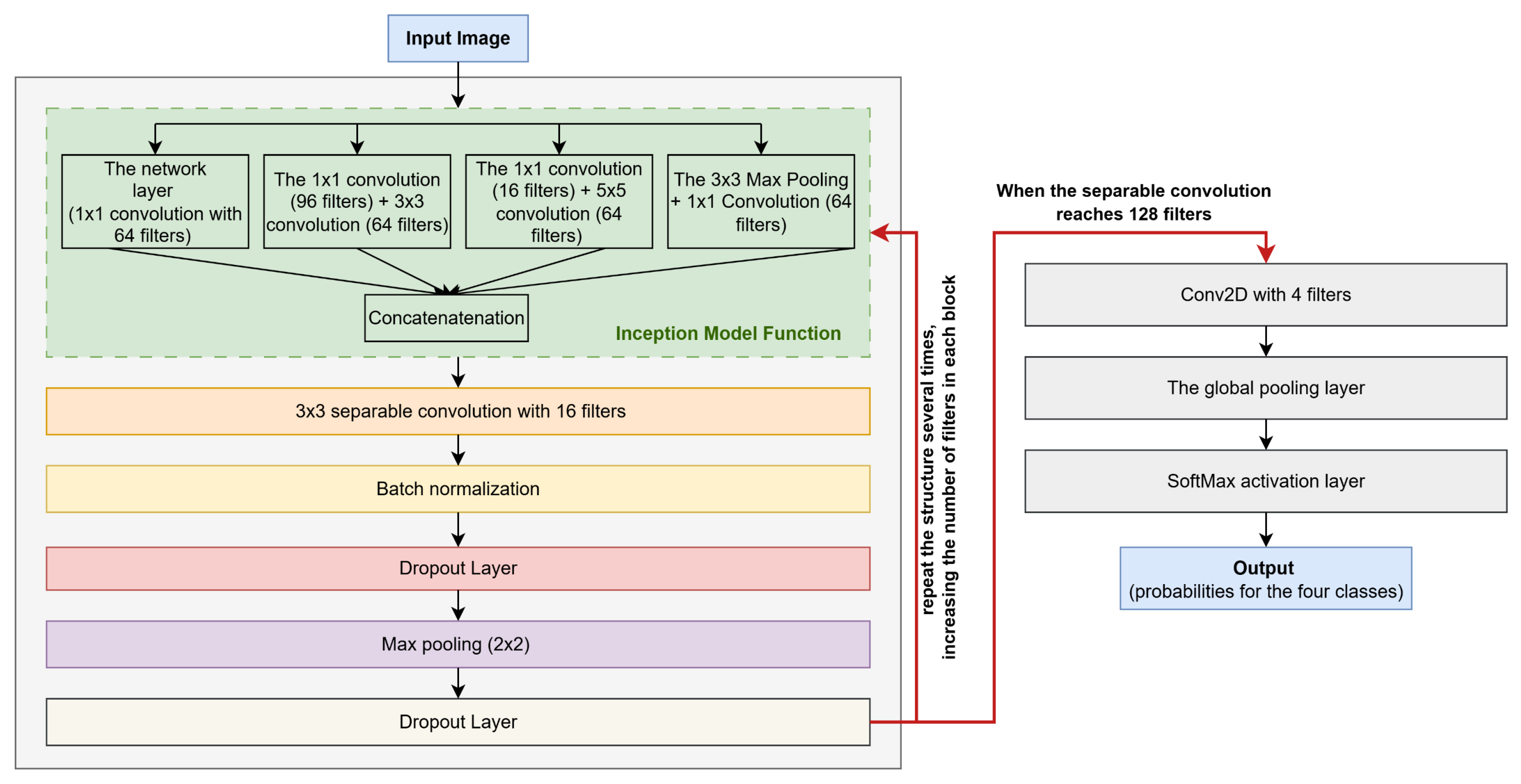

- Dimensionality Reduction Path: A 1 × 1 convolution (64 filters) that reduces input depth while preserving essential information by converting RGB images to grayscale when needed.

- Local Feature Extraction Path: A 1 × 1 convolution (96 filters) followed by a 3 × 3 convolution (64 filters) for dimensionality reduction and fine detail capture such as eye corners, wrinkles, or subtle mouth deformations.

- Global Feature Extraction Path: A 1 × 1 convolution (16 filters) followed by a 5 × 5 convolution (64 filters) to capture broader spatial patterns such as overall facial regions and broader emotional patterns.

- Pooling Path: 3 × 3 max pooling followed by 1 × 1 convolution (64 filters) to extract dominant features of the face, like the eyes and the mouth, while maintaining dimensionality.

- Feature Extraction: A 3 × 3 separable convolution (16 filters) extracts spatial features.

- Normalization: Batch normalization stabilizes and accelerates training.

- Regularization: Dropout (25%) is applied to prevent overfitting.

- Dimensionality Reduction: 2 × 2 max pooling decreases feature map dimensions.

- This structure repeats iteratively, with each block increasing the filter count while maintaining the same operation sequence (Inception module → separable convolution → batch normalization → dropout → max pooling).
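
To make the role of the 1 × 1 reductions concrete, the following sketch counts the trainable parameters of the four paths listed above. It assumes standard (non-separable) convolutions with bias terms in every path and a single-channel (grayscale) input; both are assumptions, and the helper names are hypothetical.

```python
def conv_params(kernel, c_in, c_out, bias=True):
    """Weights (plus optional biases) of a standard kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)

def inception_module(c_in):
    """Per-path parameter counts and the depth of the concatenated output."""
    paths = {
        "reduce_1x1": conv_params(1, c_in, 64),
        "local_3x3": conv_params(1, c_in, 96) + conv_params(3, 96, 64),
        "global_5x5": conv_params(1, c_in, 16) + conv_params(5, 16, 64),
        "pool_proj": conv_params(1, c_in, 64),  # max pooling itself has no weights
    }
    out_channels = 4 * 64  # four paths with 64 output filters each, concatenated
    return paths, out_channels

paths, depth = inception_module(c_in=1)  # assumed grayscale input
print(paths, sum(paths.values()), depth)

# Effect of the 16-filter bottleneck in front of the 5 x 5 convolution
# for a hypothetical 256-channel input (e.g., the output of a previous module):
direct = conv_params(5, 256, 64)
reduced = conv_params(1, 256, 16) + conv_params(5, 16, 64)
print(direct, reduced)
```

Under these assumptions the bottleneck cuts the 5 × 5 path from 409,664 to 29,776 parameters for a 256-channel input, which is the usual motivation for placing a 1 × 1 convolution in front of the larger kernels.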

4.5. Classification

5. Model Training and Testing

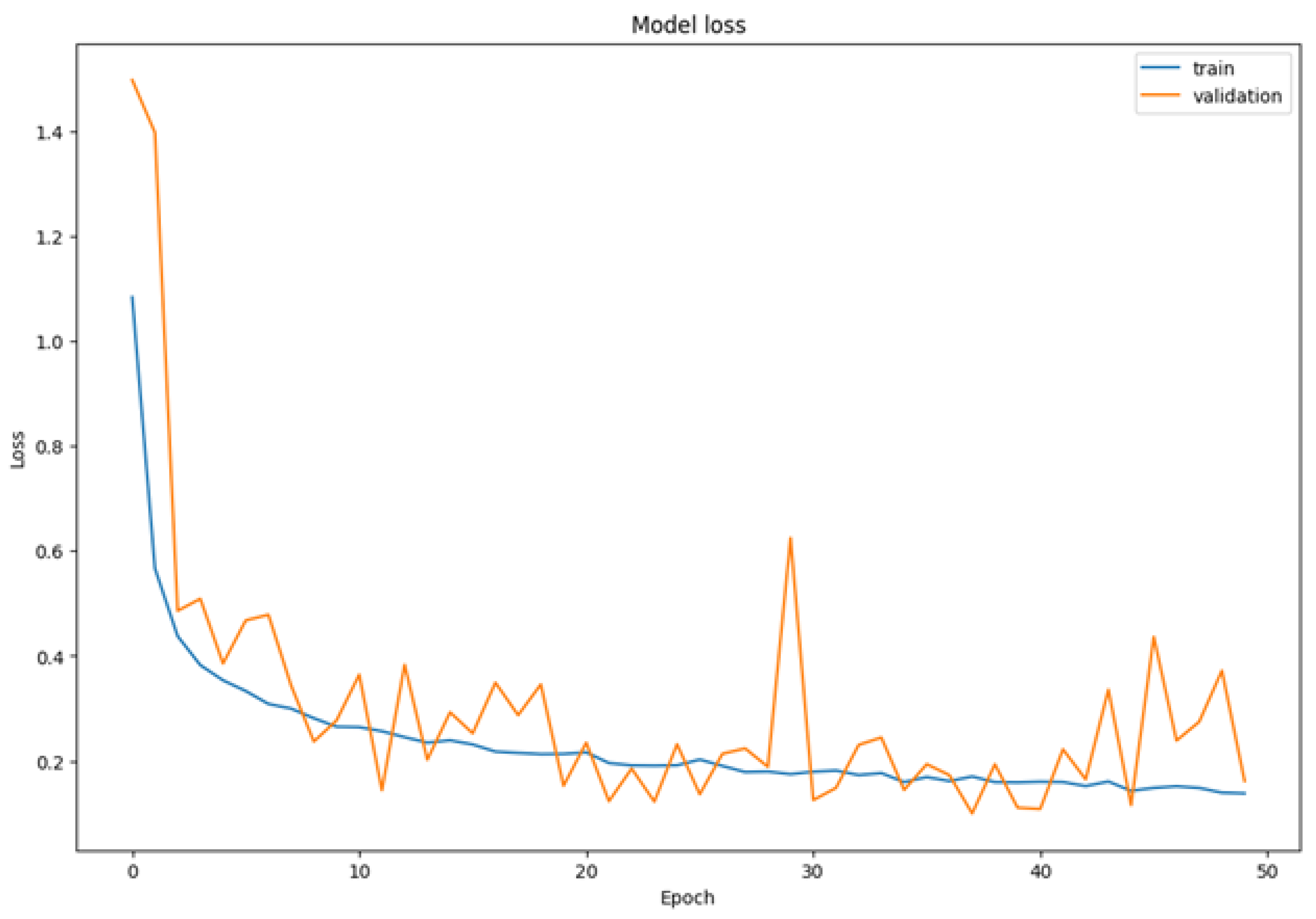

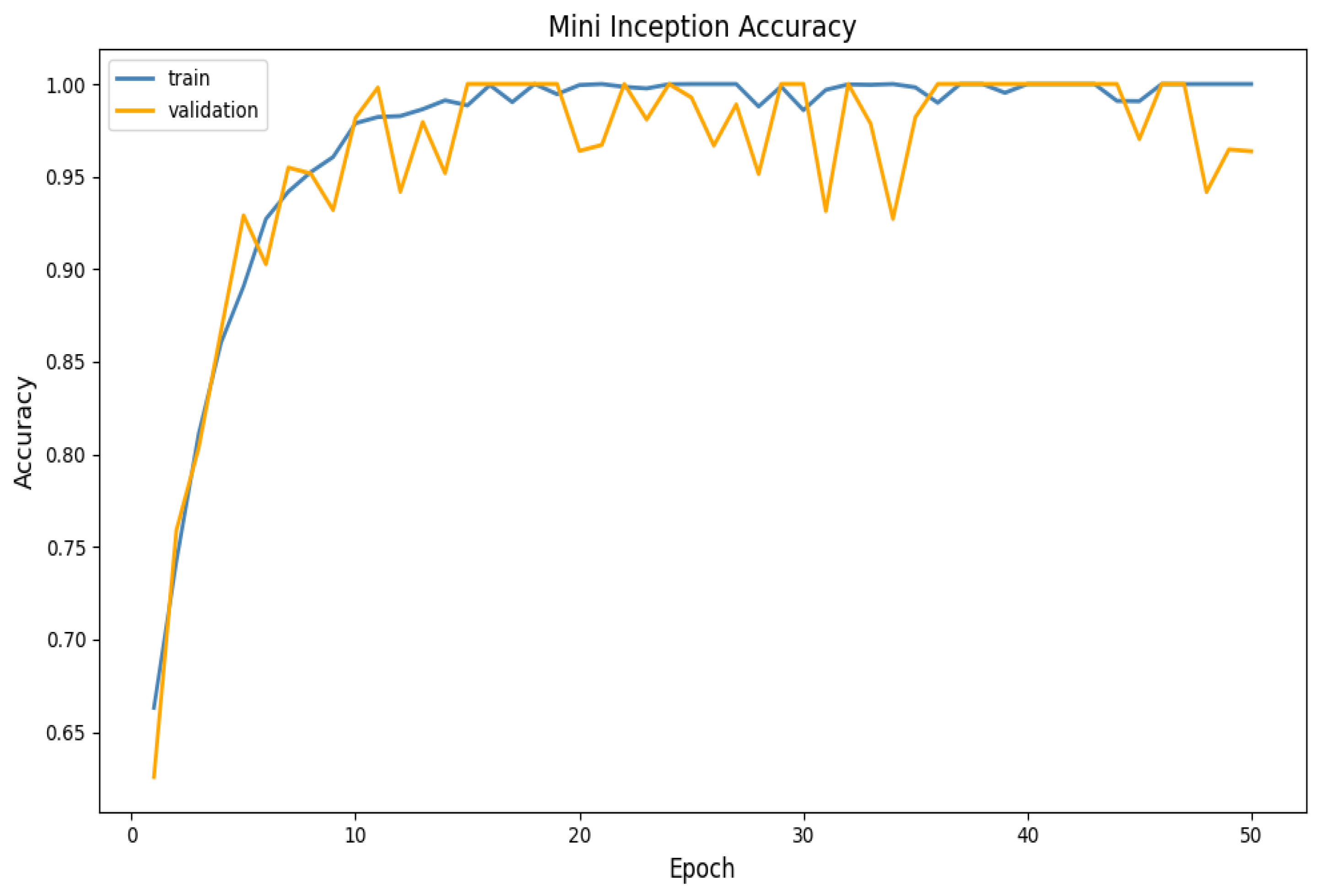

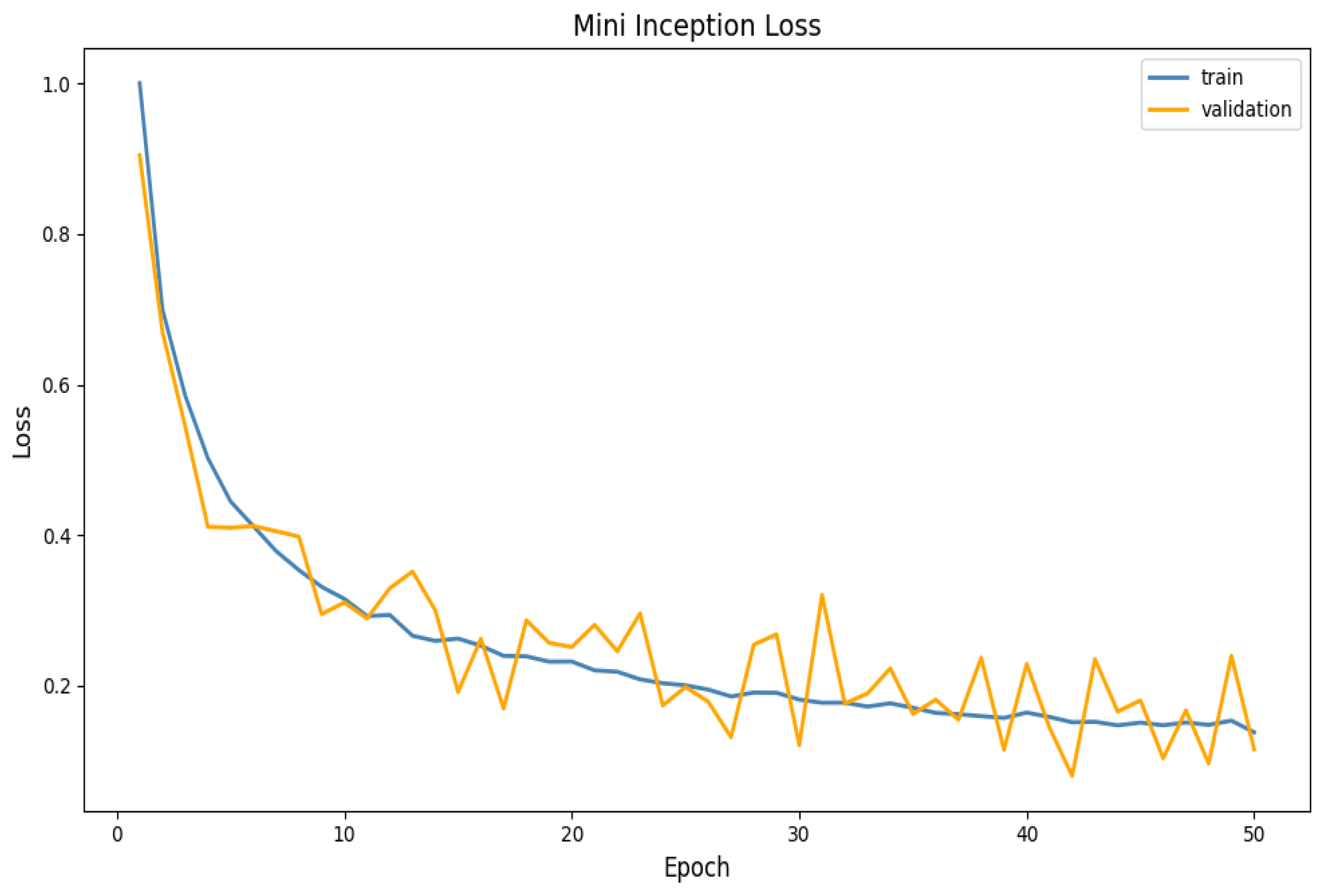

5.1. Training Phase

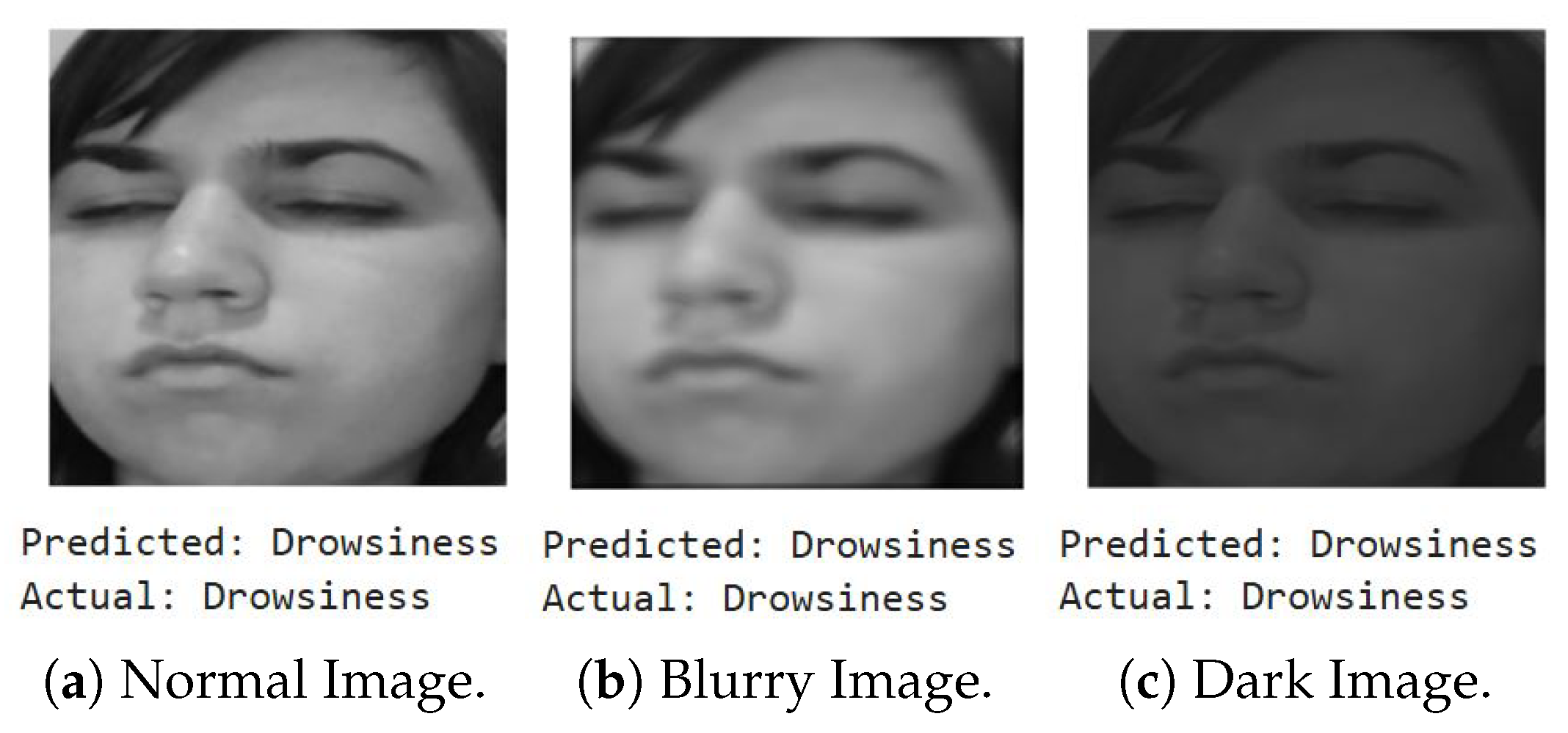

5.2. Testing Phase

6. Performance Evaluation

7. Comparative Analysis of the Best Existing Models Against Our Model

- Jain et al. [9] describe an Automated Hyperparameter Tuned Model (AHTM) that combines Squirrel Search Optimization with Deep Learning Enabled Facial Emotion Recognition (SSO-DLFER) for face detection and emotion recognition. The model first uses RetinaNet for face detection and then applies an SSO-DLFER-based Neural Architecture Search Network (NASNet) to extract emotions.

- Sudha et al. [10] present the Driver Facial Expression Emotion Recognition (DFEER) system, which uses a Parallel Multi-Verse Optimizer (PMVO). The main goal is to handle partial occlusions and motion variations of expressions.

- Sukhavasi et al. [11] present a hybrid model that combines a Support Vector Machine (SVM) and a CNN to detect six to seven driver emotions across different poses. The model fuses Gabor and Local Binary Pattern (LBP) features, which the hybrid model then uses for classification.

- Hilal et al. [18] propose Transfer Learning-Driven Facial Emotion Recognition for Advanced Driver Assistance System (TLDFER-ADAS) for driver facial emotion recognition. The model uses Manta Ray Foraging Optimization (MRFO) and Quantum Dot Neural Networks (QDNNs) for emotion classification.

7.1. Input Data

7.2. Preprocessing Techniques

7.3. Architectural Differences and Performance

8. Hardware Implementation

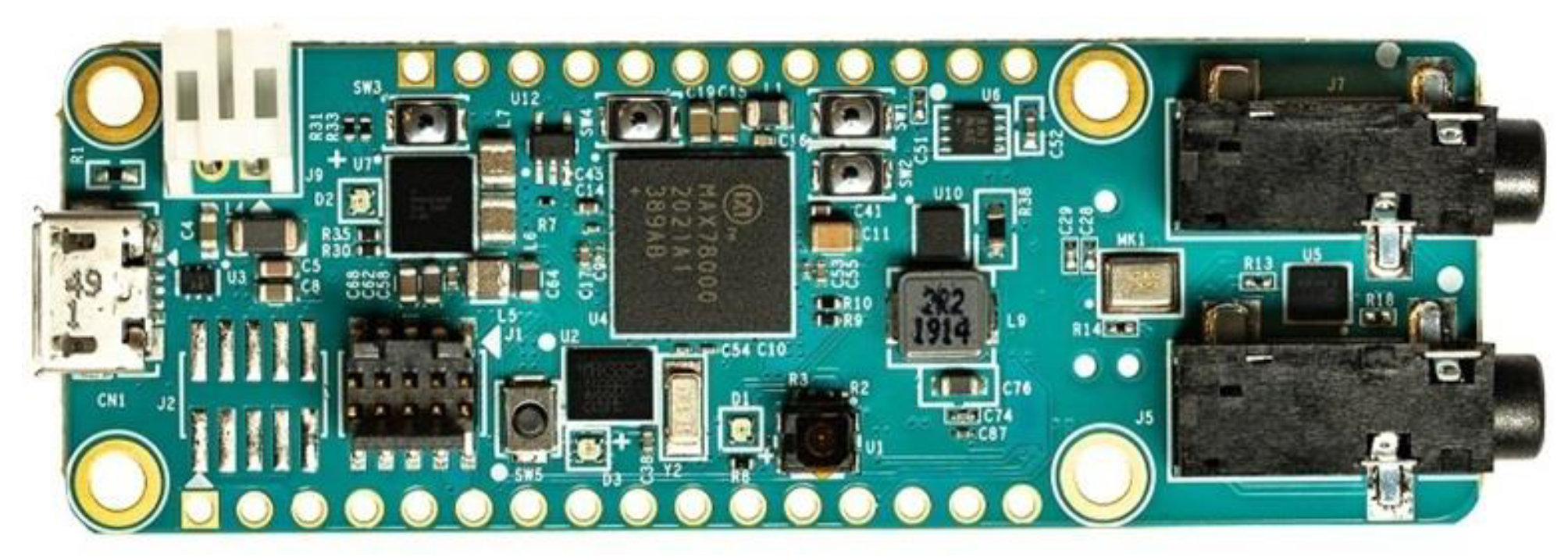

8.1. Microcontroller Selection

CNN Accelerator

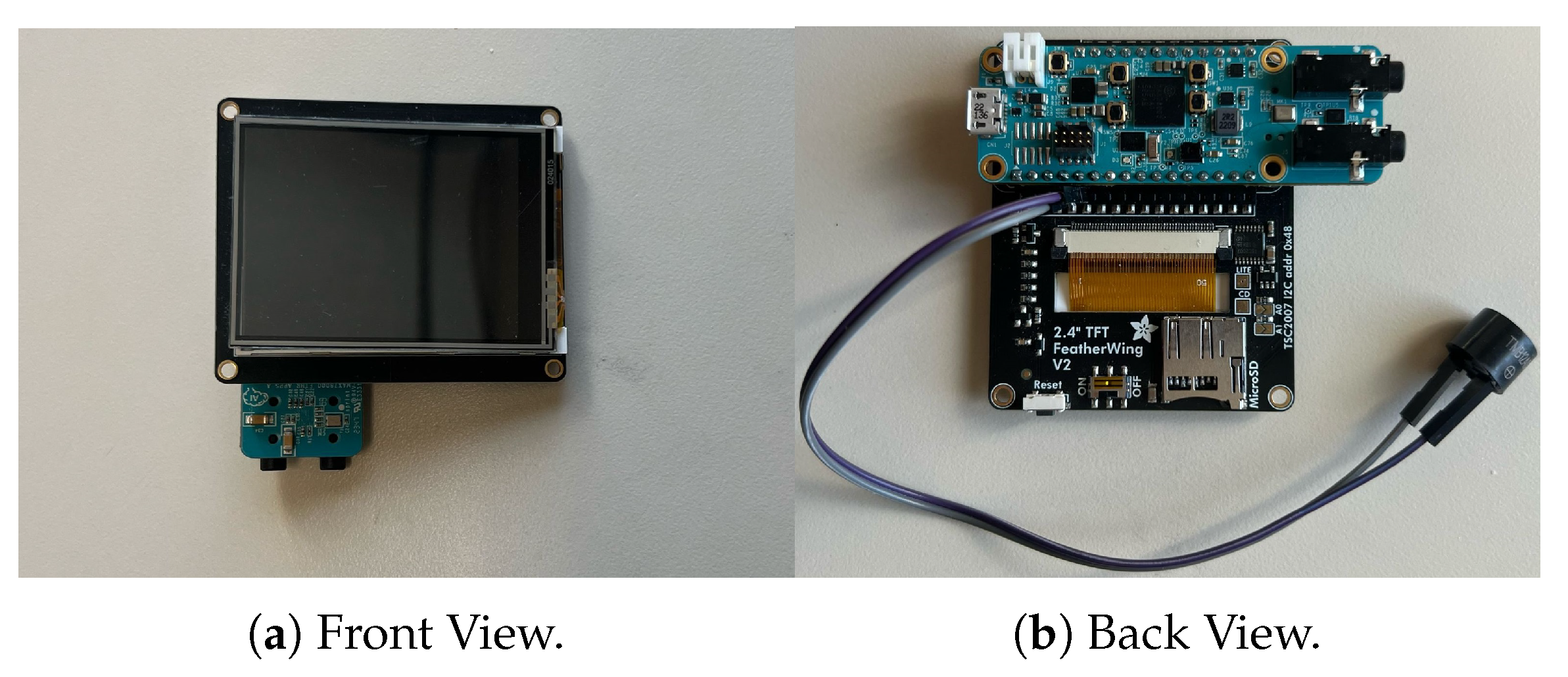

8.2. Components and Sensors Integration

- A built-in CMOS VGA Image Sensor (Camera): The board carries a Complementary Metal Oxide Semiconductor (CMOS) Video Graphics Array (VGA) image sensor, the OV7675, which serves as a low-power camera. It allows image processing on the board and the direct processing of grayscale images, which saves memory and power. Under optimal conditions the camera reaches a maximum frame rate of 30 frames per second (FPS) at its VGA resolution of 640 × 480 pixels, and its 12-bit Parallel Camera Interface (PCIF) transfers data to the microcontroller quickly enough for real-time image processing [58].

- A built-in microphone: The board has a digital microphone (SPH0645LM4H-B), which reduces the surrounding noise and enhances signal quality.

- Wi-Fi connectivity: The microcontroller has a Secure Digital Input Output (SDIO) interface, which facilitates the connection of Wi-Fi modules such as the ESP8266 or ESP32. A Feather-compatible Wi-Fi board can be connected to the Feather headers, which allows data to be sent to and received from the Alexa-based alarm system.

- A built-in API (Application Programming Interface): The Software Development Kit (SDK, version 1.0.1) for this microcontroller provides a set of libraries with APIs for deep learning inference, GPIO, I2C, SPI, UART, camera interfaces, and more.

- A FeatherWing Touchscreen: This 2.4-inch Thin Film Transistor (TFT) screen has 240 × 320 pixels and shows the detected faces and emotions.

- An Active Buzzer: This 3 V DC active buzzer is connected to the microcontroller and sounds the alarm when an alarming emotion is detected.
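
The memory saving from grayscale capture mentioned for the camera can be put in numbers with a short calculation. The sketch assumes 8-bit grayscale pixels and, for comparison, a 16-bit RGB565 color format; neither pixel format is stated in the text, so the figures are illustrative only.

```python
WIDTH, HEIGHT, FPS = 640, 480, 30  # VGA resolution and peak frame rate from the text

def frame_bytes(bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return WIDTH * HEIGHT * bytes_per_pixel

gray = frame_bytes(1)    # assumed 8-bit grayscale
rgb565 = frame_bytes(2)  # assumed 16-bit RGB565, for comparison only

print(gray, rgb565)              # bytes per frame
print(gray * FPS, rgb565 * FPS)  # bytes per second at the peak frame rate
```

Under these assumptions a grayscale frame needs half the buffer space and half the transfer bandwidth of a color frame, which matters on a microcontroller with limited on-chip memory.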

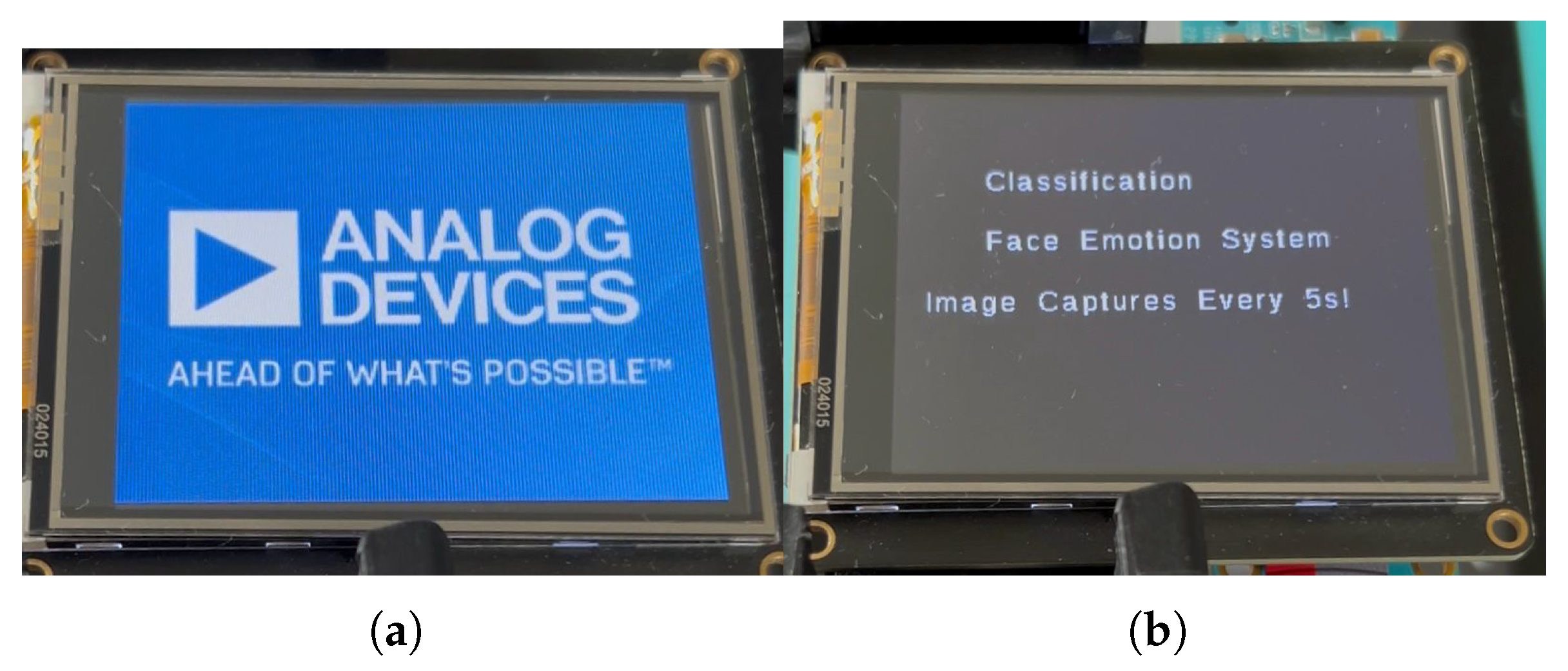

8.3. Microcontroller Configuration

8.4. System Flow

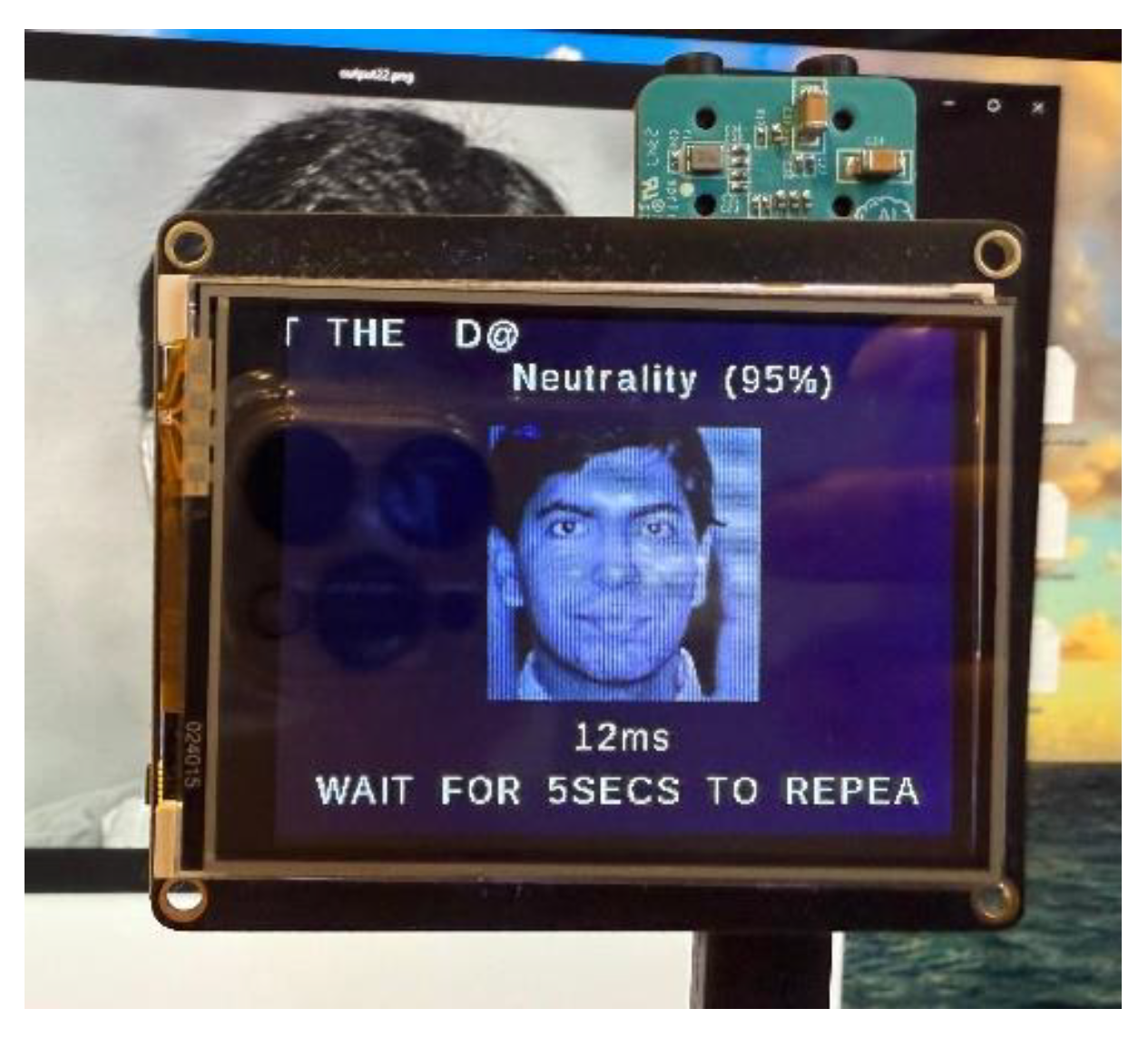

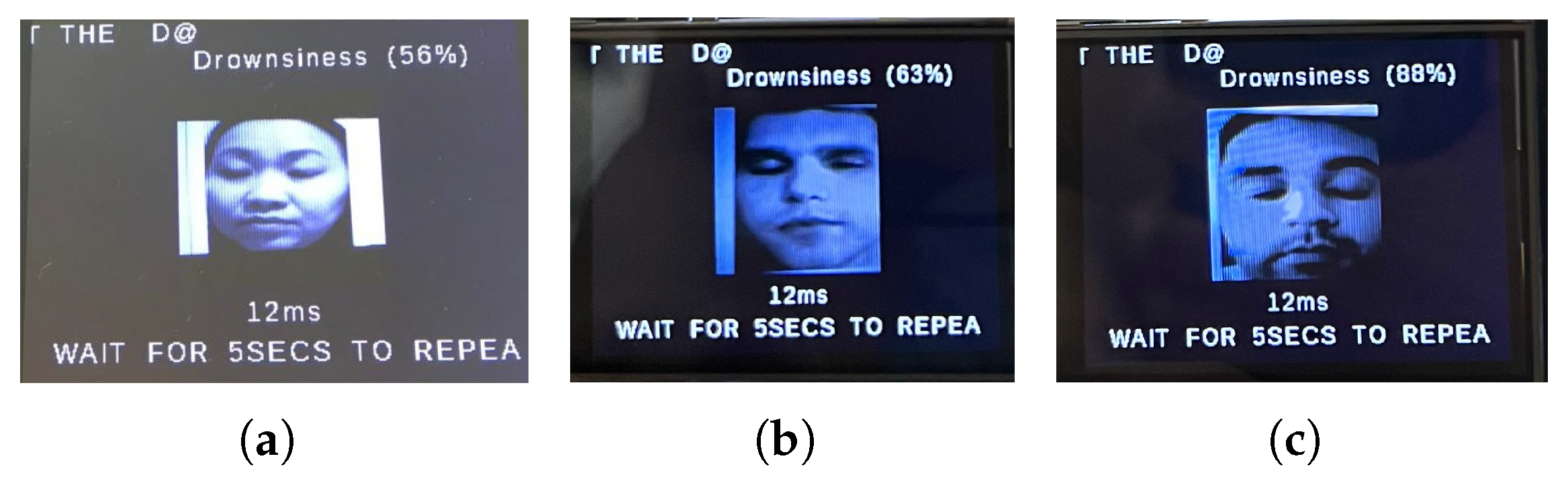

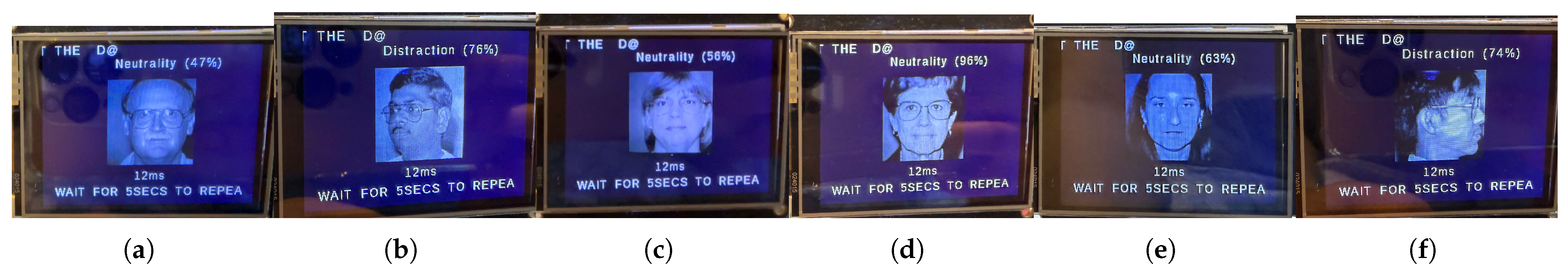

8.5. Performance Demonstration

9. Comparison with Existing Driver Alert Systems

10. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hyder, A.A.; Hoe, C.; Hijar, M.; Peden, M. The political and social contexts of global road safety: Challenges for the next decade. Lancet 2022, 400, 127–136.

2. Hedges & Company. How Many Cars Are There in the World? Available online: https://hedgescompany.com/blog/2021/06/how-many-cars-are-there-in-the-world/ (accessed on 26 September 2024).

3. World Health Organization. Global Status Report on Road Safety 2023; World Health Organization: Geneva, Switzerland, 2023; Available online: https://www.who.int/teams/social-determinants-of-health/safety-and-mobility/global-status-report-on-road-safety-2023 (accessed on 26 September 2024).

4. Koesdwiady, A.; Soua, R.; Karray, F.; Kamel, M.S. Recent Trends in Driver Safety Monitoring Systems: State of the Art and Challenges. IEEE Trans. Veh. Technol. 2017, 66, 4550–4563.

5. Grand View Research. Driver Monitoring System Market Size, Share & Trends Analysis Report. Available online: https://www.grandviewresearch.com/industry-analysis/driver-monitoring-system-market-report (accessed on 25 August 2025).

6. Prasad, V. Traffic Camera Dangerous Driver Detection (TCD3). In Proceedings of the IS&T International Symposium on Electronic Imaging 2017: Intelligent Robotics and Industrial Applications using Computer Vision, Society for Imaging Science and Technology, Online, 16–19 January 2017; pp. 67–72.

7. Shen, X.; Wu, Q.; Fu, X. Effects of the duration of expressions on the recognition of microexpressions. J. Zhejiang Univ. Sci. B 2012, 13, 221–230.

8. Cronin, T. Ask the Brains. Sci. Am. Mind 2010, 21, 70.

9. Jain, D.K.; Shamsolmoali, P.; Sehdev, P.; Mohamed, M.A. An Automated Hyperparameter Tuned Deep Learning Model Enabled Facial Emotion Recognition for Autonomous Vehicle Drivers. Image Vis. Comput. 2023, 133, 104659.

10. Sudha, S.S.; Suganya, S.S. On-road Driver Facial Expression Emotion Recognition with Parallel Multi-Verse Optimizer (PMVO) and Optical Flow Reconstruction for Partial Occlusion in Internet of Things (IoT). Meas. Sens. 2023, 26, 100711.

11. Sukhavasi, S.B.; Sukhavasi, S.B.; Elleithy, K.; El-Sayed, A.; Elleithy, A. A Hybrid Model for Driver Emotion Detection Using Feature Fusion Approach. Int. J. Environ. Res. Public Health 2022, 19, 3085.

12. Zhang, X.; Li, J.; Liu, Y.; Zhang, Z.; Wang, Z.; Luo, D.; Zhou, X.; Zhu, M.; Salman, W.; Hu, G.; et al. Design of a Fatigue Detection System for High-Speed Trains Based on Driver Vigilance Using a Wireless Wearable EEG. Sensors 2017, 17, 486.

13. Sahoo, G.K.; Das, S.K.; Singh, P. Deep Learning-Based Facial Emotion Recognition for Driver Healthcare. In Proceedings of the 2022 National Conference on Communications (NCC), Virtual, 24–27 May 2022; pp. 154–159.

14. Sahoo, G.K.; Ponduru, J.; Das, S.K.; Singh, P. Deep Leaning-Based Facial Expression Recognition in FER2013 Database: An in-Vehicle Application. 2022. Available online: https://www.researchgate.net/publication/368596012_Deep_Leaning-Based_Facial_Expression_Recognition_in_FER2013_Database_An_in-Vehicle_Application (accessed on 22 August 2024).

15. Shang, Y.; Yang, M.; Cui, J.; Cui, L.; Huang, Z.; Li, X. Driver Emotion and Fatigue State Detection Based on Time Series Fusion. Electronics 2023, 12, 26.

16. Pant, M.; Trivedi, S.; Aggarwal, S.; Rani, R.; Dev, A.; Bansal, P. Driver’s Companion-Drowsiness Detection and Emotion Based Music Recommendation System. In Proceedings of the 2022 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 4–5 November 2022; pp. 1–6.

17. Hu, H.-X.; Zhang, L.-F.; Yang, T.-J.; Hu, Q. A Lightweight Two-Stream Model for Driver Emotion Recognition. J. Phys. Conf. Ser. 2022, 2400, 012002.

18. Hilal, A.M.; Elkamchouchi, D.H.; Alotaibi, S.S.; Maray, M.; Othman, M.; Abdelmageed, A.A.; Zamani, A.S.; Eldesouki, M.I. Manta Ray Foraging Optimization with Transfer Learning Driven Facial Emotion Recognition. Sustainability 2022, 14, 14308.

19. Xiao, H.; Li, W.; Zeng, G.; Wu, Y.; Xue, J.; Zhang, J.; Li, C.; Guo, G. On-Road Driver Emotion Recognition Using Facial Expression. Appl. Sci. 2022, 12, 807.

20. Wang, S.; Yang, Z.; Liu, N.; Jiang, J. Improved face detection algorithm based on YOLO-v2 and Dlib. Eurasip J. Image Video Process. 2019, 2019, 41.

21. Ma, B.; Fu, Z.; Rakheja, S.; Zhao, D.; He, W.; Ming, W.; Zhang, Z. Distracted Driving Behavior and Driver’s Emotion Detection Based on Improved YOLOv8 With Attention Mechanism. IEEE Access 2024, 12, 37983–37994.

22. Hollósi, J.; Kovács, G.; Sysyn, M.; Kurhan, D.; Fischer, S.; Nagy, V. Driver Distraction Detection in Extreme Conditions Using Kolmogorov–Arnold Networks. Computers 2025, 14, 184.

23. Seeing Machines. Technology to Get Everyone Home Safe. Available online: https://seeingmachines.com/ (accessed on 27 September 2024).

24. Jungo. Home. Available online: https://jungo.com/ (accessed on 29 July 2024).

25. Xperi Corporation. Xperi Announces FotoNation’s Third-Generation Image Processing Unit. 2018. Available online: https://investor.xperi.com/news/news-details/2018/Xperi-Announces-FotoNations-Third-Generation-Image-Processing-Unit/default.aspx (accessed on 4 November 2023).

26. EDGE3 Technologies. EDGE3 Technologies. Available online: https://www.edge3technologies.com/ (accessed on 29 July 2024).

27. DENSO. Driver Status Monitor - Products & Services - What We Do. DENSO Global Website. 2024. Available online: https://www.denso.com/global/en/business/products-and-services/mobility/pick-up/dsm/ (accessed on 29 July 2024).

28. EYESEEMAG. Serenity by Ellcie Healthy: For Protecting Senior Citizens. Available online: https://www.virtuallab.se/en/products/ellcie-healthy/ (accessed on 29 July 2024).

29. Optalert. Optalert - Drowsiness, OSA and Neurology Screening. Available online: https://www.optalert.com/ (accessed on 27 September 2024).

30. DBS GT. Full Display Mirror - Aston Martin. Available online: https://www.fulldisplaymirror.com/about/ (accessed on 27 September 2024).

31. Smart Eye. Smart Eye Pro - Remote Eye Tracking System. Available online: https://www.smarteye.se/smart-eye-pro/ (accessed on 27 September 2024).

32. ZF. CoDRIVE. 2024. Available online: https://www.zf.com/products/en/cars/products_64085.html (accessed on 29 July 2024).

33. OmniVision. OmniVision - CMOS Image Sensor Manufacturer - Imaging Solutions. Available online: https://www.ovt.com/ (accessed on 27 September 2024).

34. DENSO Corporation. Driver Status Monitor. Products & Services - Mobility, DENSO Global Website. Available online: https://www.denso.com/global/en/business/products-and-services/mobility/safety-cockpit/ (accessed on 18 October 2025).

35. Autoliv. Innovation - Autoliv. 2024. Available online: https://www.autoliv.com/innovation (accessed on 27 September 2024).

36. O’Brien, R.S. The Psychosocial Factors Influencing Aggressive Driving Behaviour. 2011. Available online: https://eprints.qut.edu.au/44160/ (accessed on 27 September 2024).

37. Wang, X.; Liu, Y.; Chen, L.; Shi, H.; Han, J.; Liu, S.; Zhong, F. Research on Emotion Activation Efficiency of Different Drivers. Sustainability 2022, 14, 13938.

38. Marasini, G.; Caleffi, F.; Machado, L.M.; Pereira, B.M. Psychological Consequences of Motor Vehicle Accidents: A Systematic Review. Transp. Res. Part F Traffic Psychol. Behav. 2022, 89, 249–264.

39. Federal Motor Carrier Safety Administration. CMV Driving Tips - Driver Fatigue. 2015. Available online: https://www.fmcsa.dot.gov/safety/driver-safety/cmv-driving-tips-driver-fatigue (accessed on 27 September 2024).

40. Florida Law Group. Driving Anxiety: Causes, Symptoms & Ways To Prevent It. 2023. Available online: https://www.thefloridalawgroup.com/news-resources/driving-anxiety-causes-symptoms-ways-to-prevent-it/ (accessed on 27 September 2024).

41. Face Expression Recognition Dataset. Kaggle. Available online: https://www.kaggle.com/datasets/jonathanoheix/face-expression-recognition-dataset (accessed on 27 September 2024).

42. Driver Drowsiness Dataset (DDD). Kaggle. Available online: https://www.kaggle.com/datasets/ismailnasri20/driver-drowsiness-dataset-ddd (accessed on 27 September 2024).

43. Driver-Drowsy Dataset. Kaggle. Available online: https://www.kaggle.com/datasets/khanh14ph/driver-drowsy (accessed on 20 May 2024).

44. Frame Level Driver Drowsiness Detection (FL3D). Kaggle. Available online: https://www.kaggle.com/datasets/matjazmuc/frame-level-driver-drowsiness-detection-fl3d (accessed on 27 September 2024).

45. Traindata. Kaggle. Available online: https://www.kaggle.com/datasets/sundarkeerthi/traindata (accessed on 20 May 2024).

46. Lyons, M.; Kamachi, M.; Gyoba, J. The Japanese Female Facial Expression (JAFFE) Dataset. Available online: https://zenodo.org/records/3451524 (accessed on 14 June 2024).

47. Lyons, M.J.; Kamachi, M.; Gyoba, J. Coding Facial Expressions with Gabor Wavelets (IVC Special Issue). arXiv 2020, arXiv:2009.05938.

48. Lyons, M.J. “Excavating AI” Re-excavated: Debunking a Fallacious Account of the JAFFE Dataset. arXiv 2021, arXiv:2107.13998.

49. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS). 2018.

50. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A Dynamic, Multimodal Set of Facial and Vocal Expressions in North American English. PLoS ONE 2018, 13, e0196391.

51. Rahman, M.S.; Venkatachalapathy, A.; Sharma, A. Synthetic Distracted Driving (SynDD1) Dataset. 2022. Available online: https://data.mendeley.com/datasets/ptcp7rp3wb/4 (accessed on 27 September 2024).

52. Rahman, M.S.; Venkatachalapathy, A.; Sharma, A.; Wang, J.; Gursoy, S.V.; Anastasiu, D.; Wang, S. Synthetic Distracted Driving (SynDD1) Dataset for Analyzing Distracted Behaviors and Various Gaze Zones of a Driver. Data Brief 2023, 46, 108793.

53. UTA-RLDD. UTA-RLDD. Available online: https://sites.google.com/view/utarldd/home (accessed on 27 September 2024).

54. Ghoddoosian, R.; Galib, M.; Athitsos, V. A Realistic Dataset and Baseline Temporal Model for Early Drowsiness Detection. arXiv 2019, arXiv:1904.07312.

55. Phillips, P.J.; Wechsler, H.; Huang, J.; Rauss, P. The FERET database and evaluation procedure for face recognition algorithms. Image Vis. Comput. 1998, 16, 295–306.

56. Phillips, P.J.; Moon, H.; Rizvi, S.A.; Rauss, P.J. The FERET evaluation methodology for face recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1090–1104.

57. Analog Devices. MAX78000FTHR Evaluation Kit. 2025. Available online: https://www.analog.com/media/en/technical-documentation/data-sheets/MAX78000FTHR.pdf (accessed on 19 February 2025).

58. OmniVision Technologies. OVM7692 Product Page. 2025. Available online: https://www.ovt.com/products/ovm7692/ (accessed on 4 March 2025).

59. Biswal, A.K.; Singh, D.; Pattanayak, B.K.; Samanta, D.; Yang, M.-H. IoT-Based Smart Alert System for Drowsy Driver Detection. Wirel. Commun. Mob. Comput. 2021, 2021, 6627217.

60. Sowmyashree, P.; Sangeetha, J. Multistage End-to-End Driver Drowsiness Alerting System. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 464–473.

61. Sharath, S.M.; Yadav, D.S.; Ansari, A. Emotionally Adaptive Driver Voice Alert System for Advanced Driver Assistance System (ADAS) Applications. In Proceedings of the 2018 International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 13–14 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 509–512, ISBN 978-1-5386-5873-4.

| Ref. | Classifier Methods | Face Detectors | Dataset Names | Sample Counts | Emotion Classes | Accuracy | Blur Images Support | Dark Images Support |
|---|---|---|---|---|---|---|---|---|
| [9] | SSO-DLFER(GRU) 1 | Retina-Net | KDEF 2 | 5000 | Happy, sad, surprised, angry, disgusted, afraid, and neutral | 99.69% | NA 19 | NA |
| | | | KMU-FED 3 | 55 videos | Various changes in illumination (front, left, right, and back light) and partial occlusions | 99.50% | | |
| [10] | VGGNet 4 | - | KMU-FED | 55 videos | Various changes in illumination (front, left, right, and back light) and partial occlusions | 95.92% | Yes | NA |
| | | | CK+ 5 | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 94.78% | | |
| [11] | CNN+SVM 6 | Haar Features Cascade | FER2013 7 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 84.41% | Yes | NA |
| | | | CK+ | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 95.05% | | |
| | | | KDEF 8 | 5000 | Happy, sad, surprised, angry, disgusted, afraid, and neutral | 98.57% | | |
| | | | KMU-FED | 55 videos | Various changes in illumination (front, left, right, and back light) and partial occlusions | 98.64% | | |
| [12] | CogEmo-Net (CNN) 9 | MT-CNN 10 | CK+ | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 0.907% | NA | NA |
| | | | DEFE+ 11 | NA 12 | Anger and happiness | 0.351 | | |
| [13] | Squeeze-Net 1.1 | - | CK+ | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 86.86% | Yes | NA |
| | | | KDEF | 5000 | Happy, sad, surprised, angry, disgusted, afraid, and neutral | 91.39% | | |
| | | | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 61.09% | | |
| | | | KMU-FED | 55 videos | Various changes in illumination (front, left, right, and back light) and partial occlusions | 95.83% | | |
| [14] | Custom CNN + VGG16 | - | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 68.34% | Yes | NA |
| [15] | Lightweight RMXception | DLib 13 | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 73.32% | Yes | NA |
| [16] | CNN | DLib | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 83% | Yes | NA |
| [17] | Lightweight SICNET+RCNET 14 | - | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 69.65% | Yes | NA |
| [18] | TLDFER-ADAS (QDNN) 15 | - | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | 99.31% | Yes | NA |
| | | | CK+ | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 99.29% | | |
| [19] | FERDER-net -Xception | Inspired deep cascaded multitask framework | On-road driverFE dataset | 12 videos | Anger, Happiness, Sadness, Disgust, Contempt, Surprise | 0.966 | NA | NA |
| [20] | ViT 16 | Viola and Jones | CK+ | 981 | Anger, Disgust, Fear, Happiness, Sadness, Surprise, Neutral, Contempt | 0.6665 recognition rate | NA | NA |
| | | | KMU-FED | 55 sequences of images | Various changes in illumination (front, left, right, and back light) and partial occlusions | - | | |
| [21] | Improved YOLOv8 17 and Multi-Head Self-Attention (MHSA) | YOLOv8 and CNN | Distracted Driving Behavior Dataset | 20 | Drinking, Smoking, Phone usage | mAP 18 = 0.814 | NA | NA |
| | | | FER2013 | 32,298 | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral | mAP = 0.733 | | |
| This work (2024) | CNNs | DNN 19 | Face Expression Recognition Dataset | 35,900 | Angry, disgust, fear, happy, neutral, sad, surprise | 98.6% | Yes | Yes |
| | | | The Driver Drowsiness Dataset (DDD) | 41,790 | Drowsy and non-drowsy | | | |
| | | | Driver-drowsy Dataset | 66,000 | Drowsy and not Drowsy | | | |
| | | | Frame Level Driver Drowsiness Detection (FL3D) | 53,331 | Alert, microsleep, and yawning | | | |
| | | | Traindata Dataset | 1218 | Distraction, multi-face, no face, normal, object | | | |
| | | | Japanese Female Facial Expressions (JAFFE) | 213 Images | Neutral, Happy, Angry, Disgust, Fear, Sad, Surprise | | | |
| | | | The Ryerson Audio-Visual Database of Emotional Speech & Song (RAVDESS) | 1440 Videos | Calm, Happy, Sad, Angry, Fearful, Surprise, and Disgust | | | |
| | | | Synthetic Distracted Driving (SynDD1) | 6 videos (each is about 10 min long) for each participant | Distracted activities and gaze zones | | | |
| | | | UTA-RLDD 20 Dataset | 30 h of RGB videos | Alert, low-vigilant, drowsy | | | |
| | | | FERET Dataset 21 | PPM Images | Distracted, happy, neutral | | | |

| Product | High Precision | Blurry Image Stabilization | Night Vision Capability | Robustness to Lighting Changes | Power Effectiveness | Indirect Face Detection | Facial Features | Unobtrusive |
|---|---|---|---|---|---|---|---|---|
| Seeing-Machines’ FaceAPI [23] | Yes | Yes | Yes | No | Yes | Yes | Yes | Yes |
| Jungo’s CoDriver [24] | Yes | No | No | No | No | No | Yes | Yes |
| Xperi’s FotoNation [25] | No | No | No | No | No | No | No | No |
| Edge3 Technologies [26] | Yes | No | No | Yes | Yes | No | Yes | Yes |
| Denso Driver Attention Monitor [27] | Yes | No | No | No | No | Yes | Yes | Yes |
| Ellcie Healthy Eyewear [28] | Yes | No | No | No | Yes | No | Yes | No |
| Optalert [29] | Yes | No | No | Yes | No | No | No | Yes |
| Gentex Full Display Mirror [30] | No | No | No | No | No | No | Yes | Yes |
| Smart Eye Pro [31] | Yes | No | Yes | Yes | Yes | No | Yes | Yes |
| ZF CoDriver [32] | Yes | No | No | Yes | Yes | No | Yes | Yes |
| Omni-Vision OV2311 [33] | No | Yes | No | No | No | No | No | Yes |
| Denso Driver Status Monitor [34] | No | No | No | No | Yes | Yes | Yes | No |
| Autoliv Driver Facial Monitoring Camera [35] | No | No | No | No | Yes | No | No | No |

| Emotion | Eye Features | Mouth Features | Head Cues | Temporal Pattern |
|---|---|---|---|---|
| Fear | Wide-open eyes | Wide-open mouth | Forward-facing head | Sudden changes |
| Distraction | Open eyes | Closed mouth | Tilted or rotated head | Intermittent shifts |
| Drowsiness | Closed or semi-closed eyes | Closed or open mouth | Nodding, slow head tilt | Gradual slow progression |
| Neutral | Normal openness | Relaxed or closed | Balanced, steady, and forward-facing head | Stable baseline |

| Dataset | Accessibility | Data Format | Emotion | Participant Count | Sample Count | Data Size | Estimated Download Frequency |

|---|---|---|---|---|---|---|---|

| Face Expression Recognition Dataset [41] | Public | JPEG | Angry, disgust, fear, happy, neutral, sad, surprise | NA | 35.9 k | 126 MB | More than 53.9 k |

| Driver Drowsiness Dataset (DDD) [42] | Public | PNG | Drowsy and non-drowsy | NA | 41.8 k | 3 GB | More than 5767 |

| Driver Drowsiness Dataset [43] | Public | PNG | Drowsy and not drowsy | NA | 66.5 k | 3 GB | More than 70 |

| Frame Level Driver Drowsiness Detection (FL3D) [44] | Public | JPEG | Drowsiness (alert, microsleep, and yawning) | NA | 53.3 k | 628 MB | More than 445 |

| Traindata Dataset [45] | Public | JPEG | Distraction, multi-face, No face, normal, and object | NA | 1218 | 239 MB | More than 8 |

| Japanese Female Facial Expressions (JAFFE) [46,47,48] | Public | TIFF | Neutral, happy, angry, disgust, fear, sad, surprise | 10 | 213 | 12.2 MB | More than 11 k |

| The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDE-SS) [49,50] | Public | WAV Videos | Calm, happy, sad, angry, fearful, surprise, and disgust | 24 | 1440 Videos | 24.8 GB | More than 50.2 k |

| Synthetic Distracted Driving (SynDD1) Dataset [51,52] | Private | MP4 Videos | Distracted Activities, and Gaze Zones | 10 | 60 Long Videos | 48.1 GB | NA |

| UTA-RLDD Dataset [53,54] | Private | MP4, MOV, M4V Videos | Drowsiness (alert, sleepy, very sleepy) | 30 | 90 Total Videos (3 Videos for Each) | 120 GB | NA |

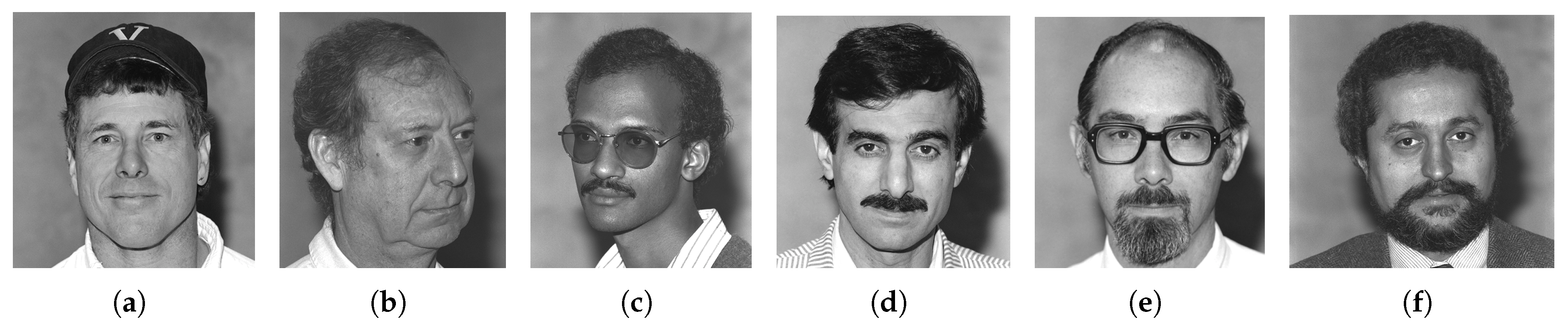

| FERET Dataset [55,56] | Private | PPM Images | Distracted, happy, neutral | NA | around 5000 Total Images | 8.5 GB | NA |

| Parameter | Value |
|---|---|
| Image size | 240 × 240 pixels |
| Optimizer | Adam |
| Loss Function | Categorical Cross-entropy |
| Activation Function | ReLU |
| Batch Size | 32 |
| Learning Rate | 0.001 |
| Measuring Metric | Accuracy |
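
As an illustration of the loss function listed in the table, the following sketch evaluates categorical cross-entropy for a single sample with a one-hot target over four classes. The probability vectors are hypothetical examples, and the `eps` clamp is an assumption added to avoid taking the logarithm of zero.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one sample: -sum(y_true[i] * log(y_pred[i]))."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

y_true = [0.0, 1.0, 0.0, 0.0]          # one-hot target: second class is correct
confident = [0.02, 0.95, 0.01, 0.02]   # hypothetical confident, correct prediction
unsure = [0.25, 0.25, 0.25, 0.25]      # hypothetical uniform prediction

loss_confident = categorical_cross_entropy(y_true, confident)
loss_unsure = categorical_cross_entropy(y_true, unsure)
print(round(loss_confident, 4), round(loss_unsure, 4))
```

With a one-hot target the loss reduces to the negative log of the probability assigned to the true class, so a confident correct prediction is penalized far less than a uniform guess.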

| Metric | Distraction | Drowsiness | Neutrality | Fear |
|---|---|---|---|---|
| F1-score | 0.978579 | 0.969773 | 0.971165 | 1.0 |
| Precision | 0.958057 | 1.000000 | 0.965596 | 1.0 |
| Recall | 1.000000 | 0.941320 | 0.976798 | 1.0 |
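
The F1 values in this table follow from the precision and recall rows through the harmonic mean, F1 = 2PR / (P + R). The short check below recomputes them from the reported figures; the dictionary layout and the function name are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) per class, copied from the table
reported = {
    "Distraction": (0.958057, 1.000000, 0.978579),
    "Drowsiness": (1.000000, 0.941320, 0.969773),
    "Neutrality": (0.965596, 0.976798, 0.971165),
    "Fear": (1.0, 1.0, 1.0),
}

for name, (p, r, f1) in reported.items():
    print(name, round(f1_score(p, r), 6), f1)
```

The recomputed values agree with the reported F1 scores to within rounding of the six-decimal inputs.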

| Model | Dataset(s) | Samples |
|---|---|---|
| Hybrid [11] | FER2013 1, CK+ 2, KDEF 3, KMU-FED 4 | 41,500 images (Approx) |
| AHTM 5 [9] | KDEF, KMU-FED | 6000 images (Approx) |
| DFEER 6 [10] | CK+, KMU-FED | 1500 images (Approx) |
| TLDFER-ADAS 7 [18] | FER2013, CK+ | 36,000 images (Approx) |
| Proposed Model | FER2013, DDD 8, Driver-drowsy dataset, FL3D 9, Traindata Dataset, JAFFE 10, RAVDESS 11, SynDD1 12, UTA-RLDD 13 Dataset, FERET Dataset 14 | Overall: 198,000 images (Approx) + 1600 videos (Approx) |

| Model | Normalization | Format Unification | Grayscale Conversion | Contrast Enhancement | Data Augmentation |
|---|---|---|---|---|---|
| Hybrid [11] | Yes | No | Yes | Yes | No |
| AHTM [9] | No | No | No | No | No |
| DFEER [10] | Yes | No | No | No | No |
| TLDFER-ADAS [18] | Yes | No | No | Yes | No |
| Proposed Model | Yes | Yes | Yes | Yes | Yes |
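
The preprocessing steps marked for the proposed model can be sketched with NumPy. The specific techniques are not given in the text, so everything here is an assumption chosen for illustration: luminance-weighted grayscale conversion (ITU-R BT.601 weights), min-max contrast stretching that also normalizes values to [0, 1], and a horizontal flip as one simple augmentation. Resizing and format unification are omitted.

```python
import numpy as np

def to_grayscale(rgb):
    """Luminance-weighted grayscale using ITU-R BT.601 weights (assumed method)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights

def stretch_contrast(gray):
    """Min-max contrast stretch; the result is also normalized to [0, 1]."""
    lo, hi = gray.min(), gray.max()
    if hi == lo:  # flat image: nothing to stretch
        return np.zeros_like(gray, dtype=np.float64)
    return (gray - lo) / (hi - lo)

def augment_flip(image):
    """Horizontal flip, one simple augmentation (assumed, not from the source)."""
    return image[:, ::-1]

def preprocess(rgb):
    return stretch_contrast(to_grayscale(rgb))

# Synthetic low-contrast frame at the 240 x 240 input size used for training
rng = np.random.default_rng(0)
frame = rng.integers(60, 120, size=(240, 240, 3), dtype=np.uint8)
x = preprocess(frame)
print(x.shape, x.min(), x.max())
```

A horizontal flip preserves facial expression cues, which is why it is a common choice for face data, but the augmentations actually used by the authors may differ.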

| Model | Classifier | Layer Type | Filter Size/Stride | Filters Quantity | Output Size | Accuracy |
|---|---|---|---|---|---|---|
| Hybrid [11] | CNN+SVM 1 | Conv1 | 3 × 3/1 | 8 | - | 94.20% |
| | | Maxpool1 | 2 × 2/1 | 8 | - | |
| | | Conv2 | 3 × 3/1 | 16 | - | |
| | | Maxpool2 | 2 × 2/1 | 16 | - | |
| | | Conv3 | 3 × 3/1 | 32 | - | |
| | | FC1 2 | - | - | 1000 | |
| | | FC1 | - | - | 7 | |
| AHTM [9] | SSO-DLFER (GRU) 3 | Average-Pooling | - | - | - | 99.60% |
| | | Identity Mapping | - | - | - | |
| | | Conv | - | - | - | |
| | | Separable Conv | - | - | - | |
| | | MaxPooling | - | - | - | |
| | | Encoder | - | 414 | - | |
| | | Decoder | - | - | - | |
| | | WELM 4 Classifier | - | - | - | |
| DFEER [10] | VGGNet 5 | Conv1 | 3 × 3/1 | 2 | 64 | 95.35% |
| | | Maxpool1 | 3 × 3/1 | 1 | 64 | |
| | | Conv2 | 3 × 3/1 | 2 | 128 | |
| | | Maxpool2 | 3 × 3/1 | 1 | 128 | |
| | | Conv3 | 3 × 3/1 | 3 | 256 | |
| | | Maxpool3 | 3 × 3/1 | 1 | 256 | |
| | | Conv4 | 3 × 3/1 | 3 | 512 | |
| | | Maxpool4 | 3 × 3/1 | 1 | 512 | |
| | | Feature Fusion | 3 × 3/1 | 1 | 1024 | |
| | | Conv1 | 3 × 3/1 | 1 | 512 | |
| | | Feature Fusion | 1 × 1/1 | 1 | 1 | |
| | | Conv2 | - | - | - | |
| | | Feature Fusion | - | - | - | |
| | | Conv3 | - | - | - | |
| TLDFER-ADAS [18] | TLDFER-ADAS (QDNN 6) | Xception Conv1 | 3 × 3/1 | - | - | 99.30% |
| | | Xception Conv2 | 3 × 3/1 | - | - | |
| | | Depth-wise Conv Layer | 3 × 3/1 | - | - | |
| | | Pointwise Conv Layer | 1 × 1/1 | - | - | |
| Proposed Model | Inception-Model (CNNs) | Convolutional Layer | 1 × 1/1 | 64 | Vector of length four 7 | 98.6% |
| | | Convolution Kernel | 1 × 1/1 | 96 | | |
| | | Separable Convolutional Layer | 3 × 3/1 | 64 | | |
| | | Convolution Kernel | 1 × 1/1 | 16 | | |
| | | Separable Convolutional Layer | 5 × 5/1 | 64 | | |
| | | Max Pooling Layer | 3 × 3/1 | - | | |
| | | Convolution Kernel | 1 × 1/1 | 64 | | |

| Model | Dataset | Accuracy (per Dataset) | Precision | Recall | F1-score | ROC |
|---|---|---|---|---|---|---|
| Hybrid [11] | FER2013 | 84.4% | NA | NA | NA | NA |
| | CK+ | 95.1% | | | | |
| | KDEF | 98.5% | | | | |
| | KMU-FED | 98.6% | | | | |
| AHTM [9] | KDEF | 99.69% | 98.86% | 98.86% | 98.86% | NA |
| | KMU-FED | 99.50% | 97.25% | 97.54% | 97.39% | |
| DFEER [10] | CK+ | 95.92% | 93.57% | 95.63% | 94.60% | NA |
| | KMU-FED | 94.73% | 92.54% | 94.42% | 93.48% | |
| TLDFER-ADAS [18] | FER2013 | 99.31% | 96.06% | 96.71% | 96.36% | 98.15% |
| | CK+ | 99.29% | 96.83% | 93.32% | 94.87% | 96.27% |
| Proposed Model | Distraction | 98.68% | 95.8% | 100% | 97.8% | 98.6% |
| | Drowsiness | | 100% | 94.1% | 96.9% | |
| | Neutrality | | 96.5% | 97.6% | 97.1% | |
| | Fear | | 100% | 100% | 100% | |

| Features | Details |
|---|---|
| Dual-Core Ultra-Low-Power Microcontroller | |
| Integrated Peripherals | |

| Model | Dataset | Accuracy | Precision | Recall | F1-Score | Average ROC |
|---|---|---|---|---|---|---|
| Proposed Model | Distraction | 98.68% | 95.8% | 100% | 97.8% | 98.6% |
| | Drowsiness | | 100% | 94.1% | 96.9% | |
| | Neutrality | | 96.5% | 97.6% | 97.1% | |
| | Fear | | 100% | 100% | 100% | |

| System | Hardware Used | Processing Efficiency | Detection and Alert Methods | Latency |
|---|---|---|---|---|
| [59] | Raspberry Pi 3, Pi Camera, IoT Modules | Limited processing power, may struggle with real-time detection | Eye Aspect Ratio (EAR), facial landmarks, email alerts | Slower than dedicated AI chips |
| [60] | Camera, fingerprint sensor, robot arm | Multi-stage alert system adds complexity, slower processing | EAR, fingerprint verification, vibration alerts | Higher response time due to multi-stage alerts |
| [61] | Deep Learning on CPU/GPU | Higher processing needs, requires powerful hardware | Deep Learning Emotion Recognition (Facial Analysis) | Potential high latency |
| Our Model | MAX78000FTHR (AI-accelerated MCU) | Optimized for edge AI, low power consumption, real-time processing | AI-based sound detection, emotion analysis | Low-latency edge processing (Fast) |

| System | Accuracy | Cost | Portability | Scalability | Power Consumption | Emotions Detected |
|---|---|---|---|---|---|---|
| [59] | 97.7% | USD 150 Approx. | Many Components | Limited Scalability | Higher | Drowsiness |
| [60] | NA | USD 120 Approx. | Many Components | Complex: Fingerprint, Vibration, Robot Arm (Low Scalability) | Higher | Drowsiness |
| [61] | NA | USD 130 Approx. | Many Components | Limited Scalability | Low | Happy, Sad, Angry, Surprise, Disgust, Neutral and Fear |
| Our Model | 98.6% | USD 75 Approx. (Affordable) | Portable | Highly Scalable | Low | Drowsiness, Distraction, Fear/Panic, and Neutrality |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Almodhwahi, M.A.; Wang, B. A Facial-Expression-Aware Edge AI System for Driver Safety Monitoring. Sensors 2025, 25, 6670. https://doi.org/10.3390/s25216670
