A Tactile Cognitive Model Based on Correlated Texture Information Entropy and Multimodal Fusion Learning

Abstract

1. Introduction

2. Materials and Methods

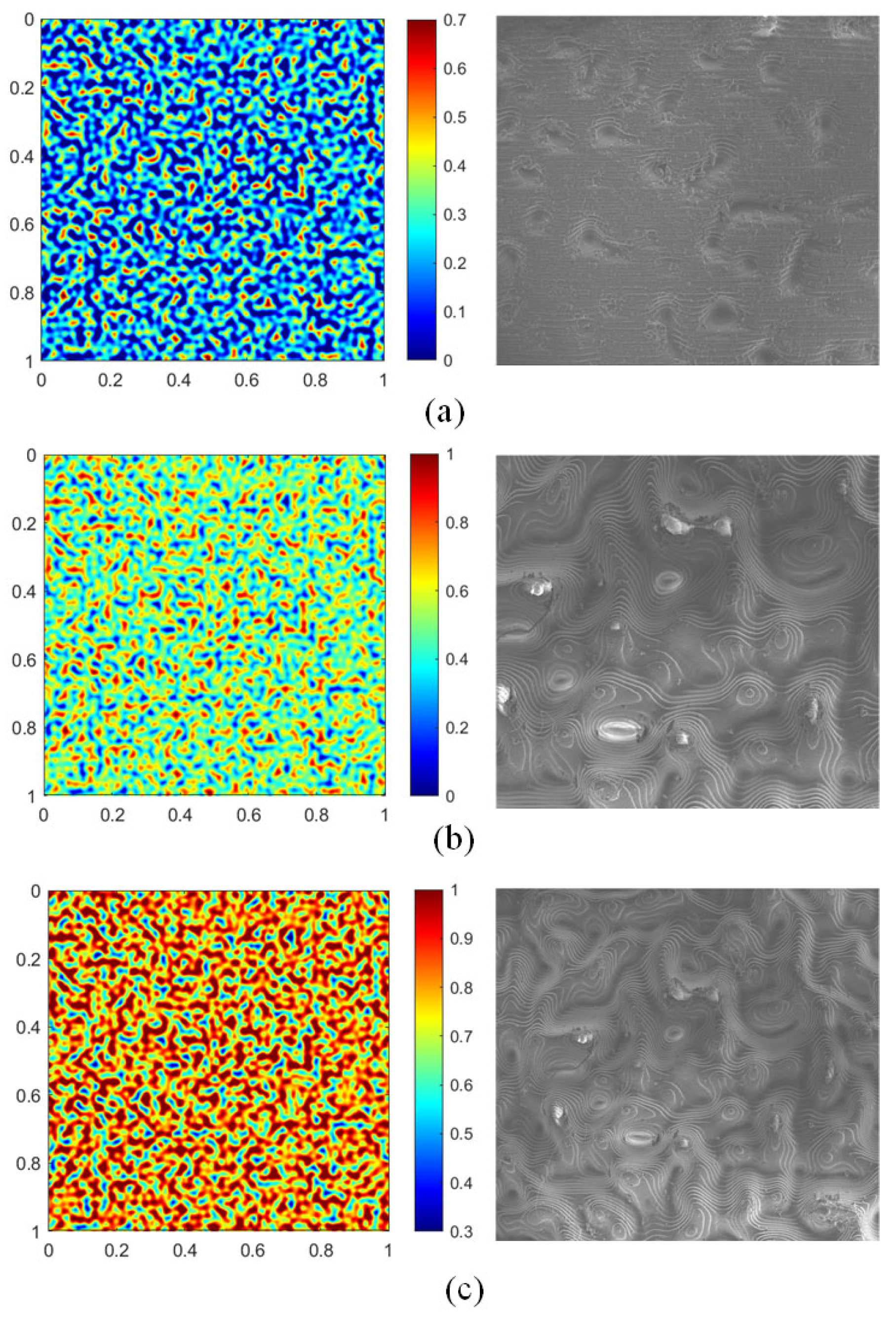

2.1. Design and Fabrication of a Novel Multimodal Texture Dataset

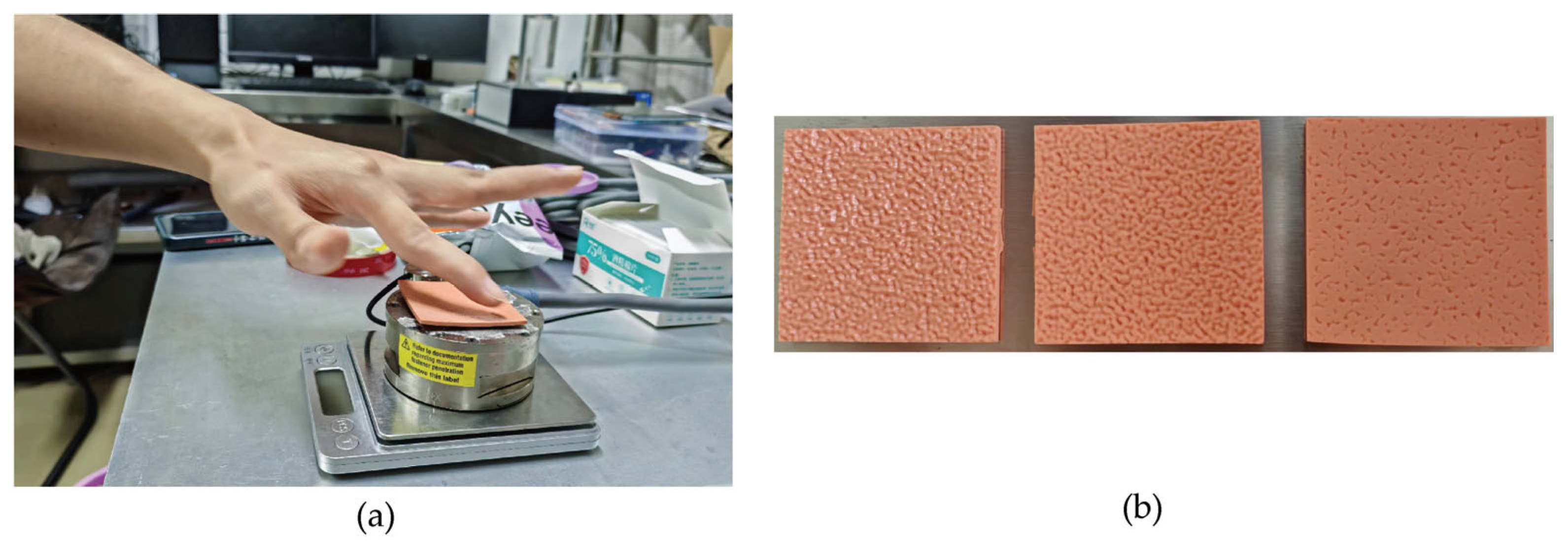

2.1.1. Texture Sample Preparation

2.1.2. Psychophysical Experiment

2.2. Multimodal Signal Acquisition

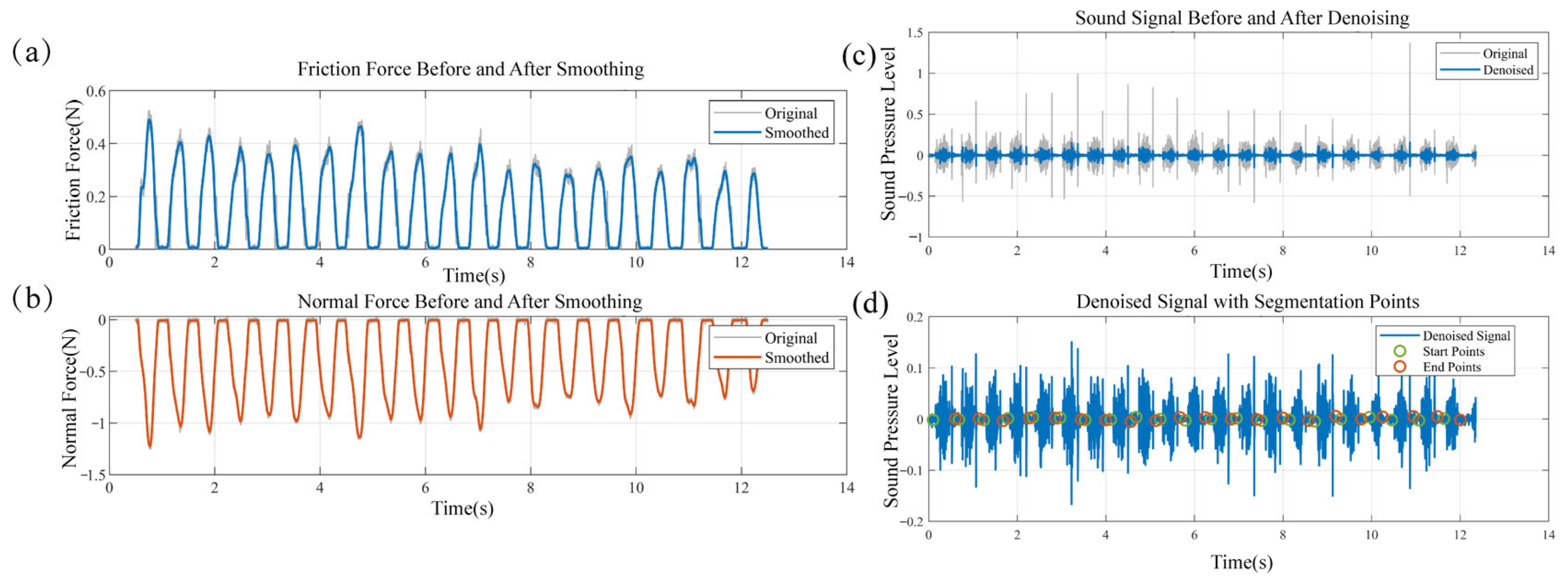

Data Preprocessing

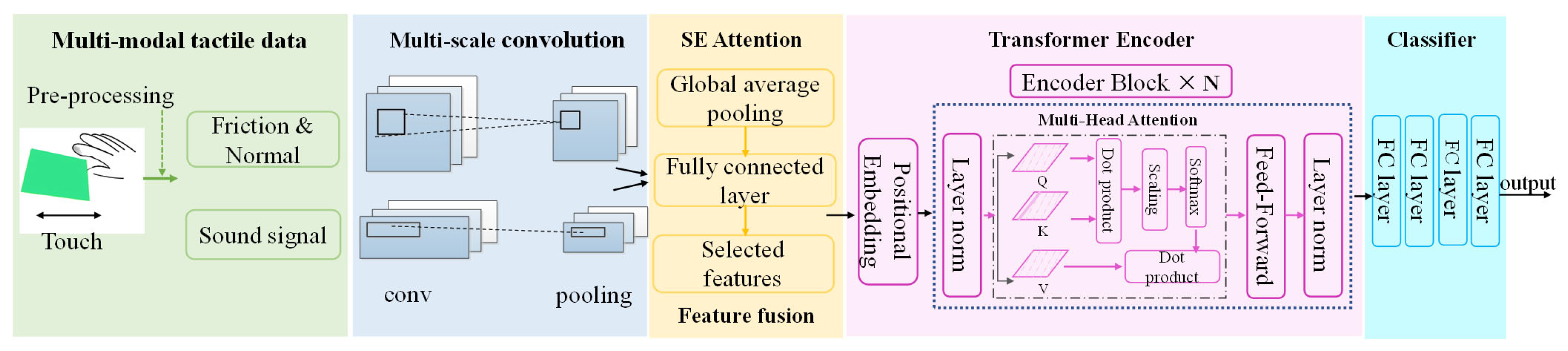

2.3. The Proposed Tactile Cognitive Model

2.4. Experimental Setup and Fusion Strategies

2.5. Baseline Models, Feature Selection, and Performance Evaluation Metrics

3. Results and Discussion

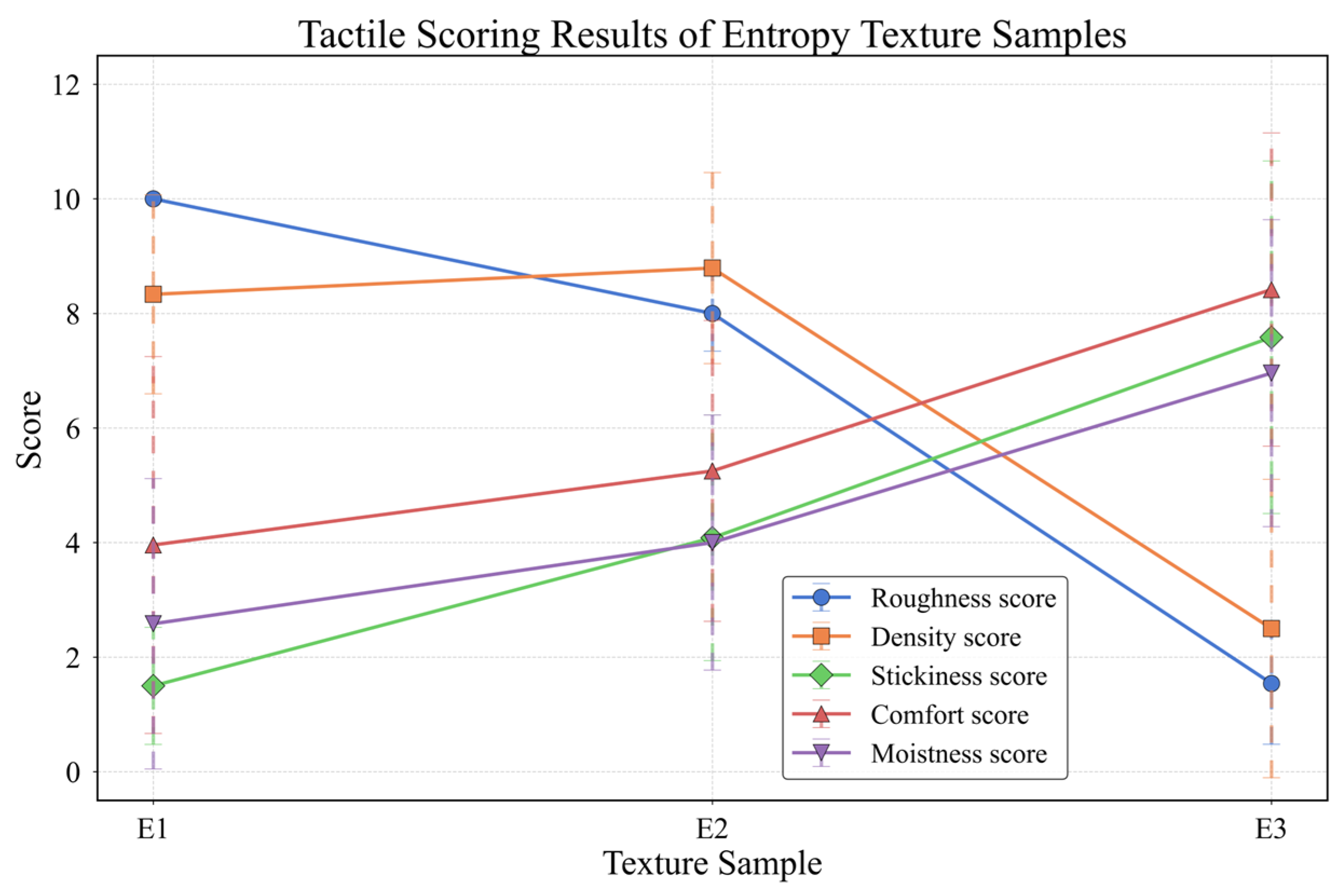

3.1. Psychophysical Experiment Results

3.2. Performance Evaluation of the MFT-Net Model

3.3. Validation on a Public Tactile Dataset

3.4. Ablation Study

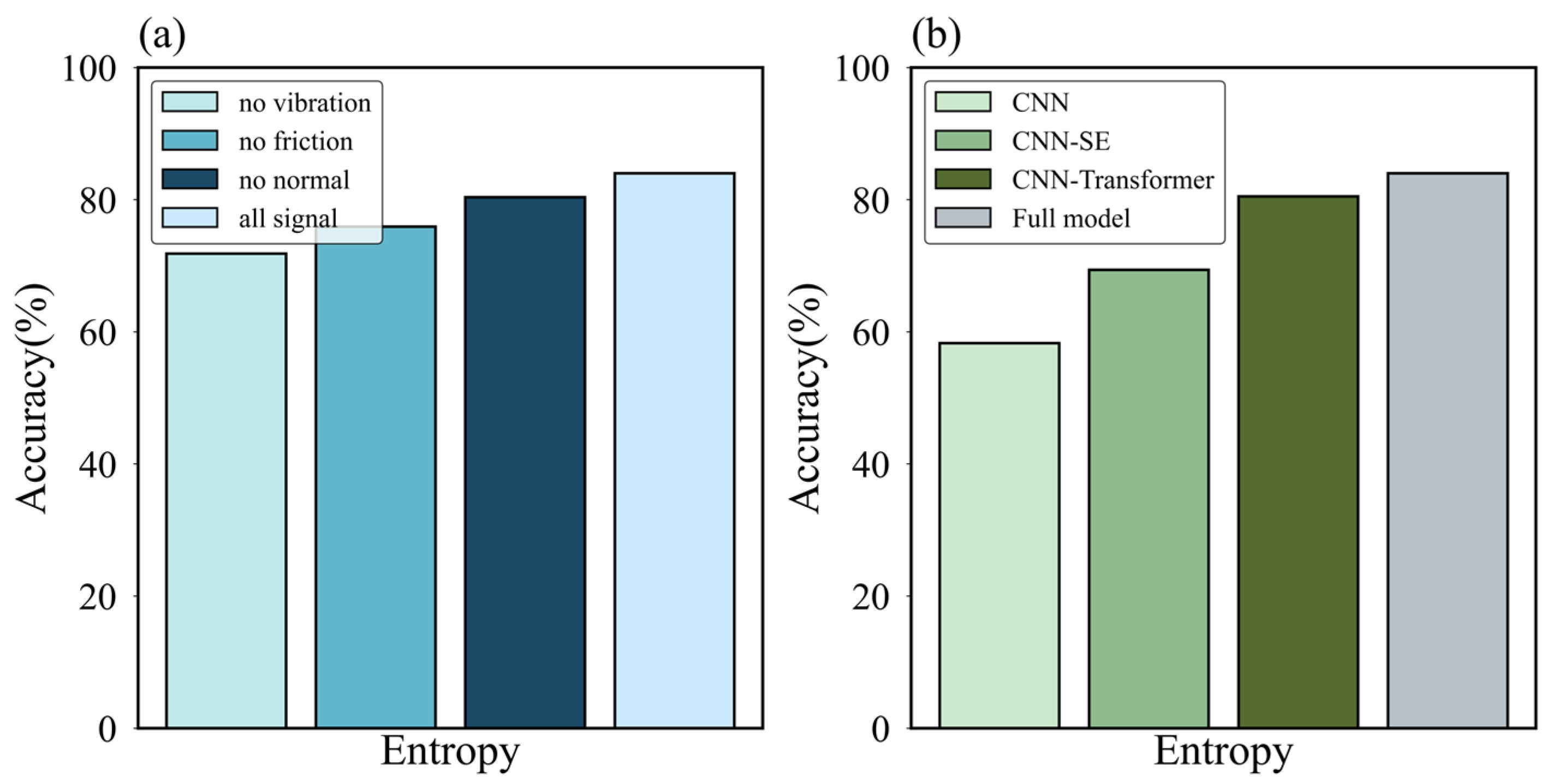

3.4.1. Ablation Analysis of Modalities and Model Components

3.4.2. Analysis of Fusion Strategy Effectiveness

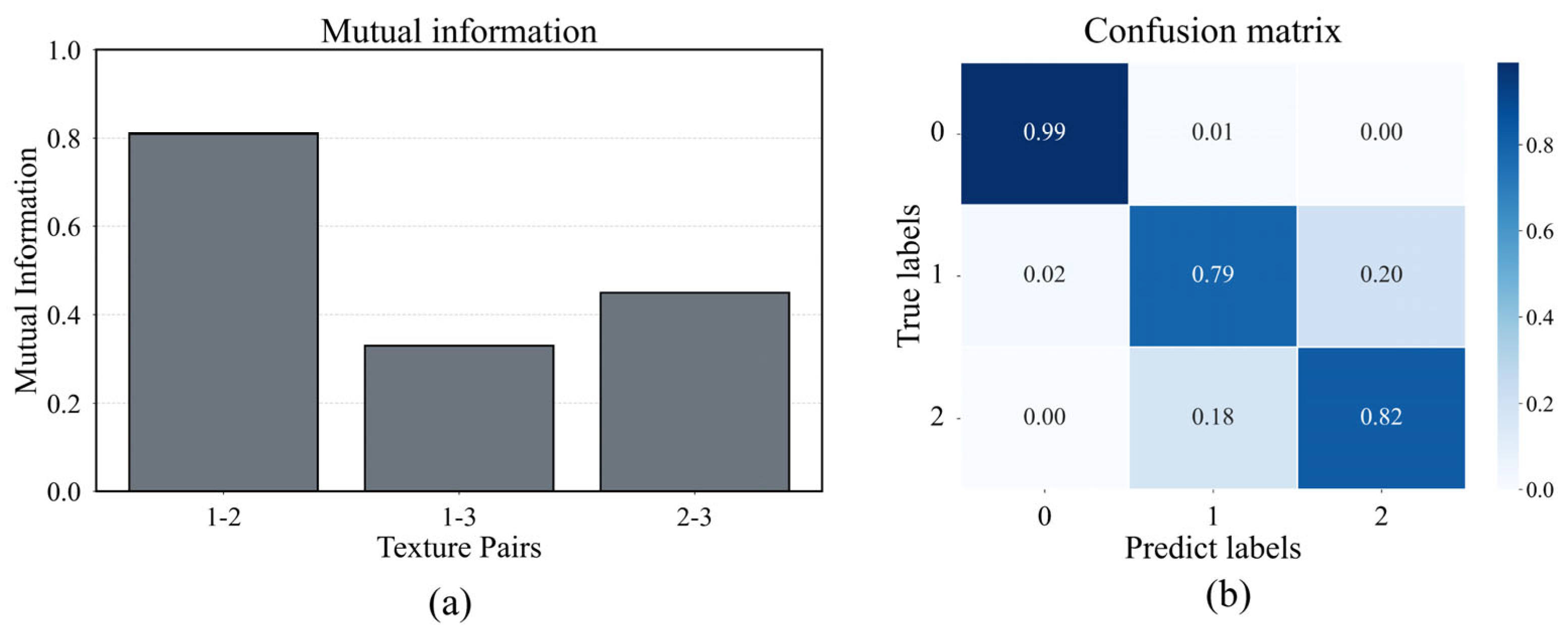

3.5. Cross-Validation of Information-Theoretic Parameters and Model Performance

3.6. Limitations

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhao, Z.; Li, W.; Li, Y.; Liu, T.; Li, B.; Wang, M.; Du, K.; Liu, H.; Zhu, Y.; Wang, Q.; et al. Embedding high-resolution touch across robotic hands enables adaptive human-like grasping. Nat. Mach. Intell. 2025, 7, 889–900.

- Syed, T.N.; Zhou, J.; Lakhiar, I.A.; Marinello, F.; Gemechu, T.T.; Rottok, L.T.; Jiang, Z. Enhancing Autonomous Orchard Navigation: A Real-Time Convolutional Neural Network-Based Obstacle Classification System for Distinguishing ‘Real’ and ‘Fake’ Obstacles in Agricultural Robotics. Agriculture 2025, 15, 827.

- Kang, B.; Zavanelli, N.; Sue, G.N.; Patel, D.K.; Oh, S.; Oh, S.; Vinciguerra, M.R.; Wieland, J.; Wang, W.D.; Majidi, C. A flexible skin-mounted haptic interface for multimodal cutaneous feedback. Nat. Electron. 2025, 1–13.

- Massari, L.; Fransvea, G.; D’Abbraccio, J.; Filosa, M.; Terruso, G.; Aliperta, A.; D’Alesio, G.; Zaltieri, M.; Schena, E.; Palermo, E.; et al. Functional mimicry of Ruffini receptors with fibre Bragg gratings and deep neural networks enables a bio-inspired large-area tactile-sensitive skin. Nat. Mach. Intell. 2022, 4, 425–435.

- Elsherbiny, O.; Gao, J.; Guo, Y.; Tunio, M.H.; Mosha, A.H. Fusion of the deep networks for rapid detection of branch-infected aeroponically cultivated mulberries using multimodal traits. Int. J. Agric. Biol. Eng. 2025, 18, 92–100.

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2021, 8, 21.

- Qiu, D.; Guo, T.; Yu, S.; Liu, W.; Li, L.; Sun, Z.; Peng, H.; Hu, D. Classification of Apple Color and Deformity Using Machine Vision Combined with CNN. Agriculture 2024, 14, 978.

- van de Burgt, N.; van Doesum, W.; Grevink, M.; van Niele, S.; de Koning, T.; Leibold, N.; Martinez-Martinez, P.; van Amelsvoort, T.; Cath, D. Psychiatric manifestations of inborn errors of metabolism: A systematic review. Neurosci. Biobehav. Rev. 2023, 144, 104970.

- Mao, Q.; Liao, Z.; Yuan, J.; Zhu, R. Multimodal tactile sensing fused with vision for dexterous robotic housekeeping. Nat. Commun. 2024, 15, 6871.

- Lee, W.W.; Tan, Y.J.; Yao, H.; Li, S.; See, H.H.; Hon, M.; Ng, K.A.; Xiong, B.; Ho, J.S.; Tee, B.C.K. A neuro-inspired artificial peripheral nervous system for scalable electronic skins. Sci. Robot. 2019, 4, eaax2198.

- Chi, H.G.; Barreiros, J.; Mercat, J.; Ramani, K.; Kollar, T. Multi-Modal Representation Learning with Tactile Data. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems, Abu Dhabi, United Arab Emirates, 14–18 October 2024.

- Guo, Z.; Zhang, Y.; Xiao, H.; Jayan, H.; Majeed, U.; Ashiagbor, K.; Jiang, S.; Zou, X. Multi-sensor fusion and deep learning for batch monitoring and real-time warning of apple spoilage. Food Control 2025, 172, 111174.

- Devillard, A.W.M.; Ramasamy, A.; Cheng, X.; Faux, D.; Burdet, E. Tactile, Audio, and Visual Dataset During Bare Finger Interaction with Textured Surfaces. Sci. Data 2025, 12, 484.

- Fu, L.; Datta, G.; Huang, H.; Panitch, W.C.-H.; Drake, J.; Ortiz, J.; Mukadam, M.; Lambeta, M.; Calandra, R.; Goldberg, K. A Touch, Vision, and Language Dataset for Multimodal Alignment. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Monteiro Rocha Lima, B.; Danyamraju, V.; Alves de Oliveira, T.E.; Prado da Fonseca, V. A multimodal tactile dataset for dynamic texture classification. Data Brief 2023, 50, 109590.

- Babadian, R.P.; Faez, K.; Amiri, M.; Falotico, E. Fusion of tactile and visual information in deep learning models for object recognition. Inf. Fusion 2023, 92, 313–325.

- Dong, Y.; Lu, N.; Li, X. Dense attention networks for texture classification. Neurocomputing 2025, 634, 129833.

- Taunyazov, T.; Chua, Y.; Gao, R.; Soh, H.; Wu, Y. Fast Texture Classification Using Tactile Neural Coding and Spiking Neural Network. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Chen, L.; Karilanova, S.; Chaki, S.; Wen, C.; Wang, L.; Winblad, B.; Zhang, S.-L.; Özçelikkale, A.; Zhang, Z.-B. Spike timing–based coding in neuromimetic tactile system enables dynamic object classification. Science 2024, 384, 660–665.

- Zacharia, V.; Bardakas, A.; Anastasopoulos, A.; Moustaka, M.A.; Hourdakis, E.; Tsamis, C. Design of a flexible tactile sensor for material and texture identification utilizing both contact-separation and surface sliding modes for real-life touch simulation. Nano Energy 2024, 127, 109702.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Zhou, Y.; Zhu, Z.; Bai, X.; Lischinski, D.; Cohen-Or, D.; Huang, H. Non-stationary texture synthesis by adversarial expansion. ACM Trans. Graph. 2018, 37, 49.

- Skedung, L.; Harris, K.L.; Collier, E.S.; Rutland, M.W. The finishing touches: The role of friction and roughness in haptic perception of surface coatings. Exp. Brain Res. 2020, 238, 1511–1524.

- Koone, J.C.; Dashnaw, C.M.; Alonzo, E.A.; Iglesias, M.A.; Patero, K.-S.; Lopez, J.J.; Zhang, A.Y.; Zechmann, B.; Cook, N.E.; Minkara, M.S.; et al. Data for all: Tactile graphics that light up with picture-perfect resolution. Sci. Adv. 2022, 8, eabq2640.

- Skedung, L.; Arvidsson, M.; Chung, J.Y.; Stafford, C.M.; Berglund, B.; Rutland, M.W. Feeling small: Exploring the tactile perception limits. Sci. Rep. 2013, 3, 2617.

- Fishel, J.A.; Loeb, G.E. Bayesian exploration for intelligent identification of textures. Front. Neurorobot. 2012, 6, 4.

- Zhou, X.; Zhao, C.; Sun, J.; Cao, Y.; Yao, K.; Xu, M. A deep learning method for predicting lead content in oilseed rape leaves using fluorescence hyperspectral imaging. Food Chem. 2023, 409, 135251.

- Ji, W.; Zhai, K.; Xu, B.; Wu, J. Green Apple Detection Method Based on Multidimensional Feature Extraction Network Model and Transformer Module. J. Food Prot. 2025, 88, 100397.

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Dai, C. Multi-task convolutional neural network for simultaneous monitoring of lipid and protein oxidative damage in frozen-thawed pork using hyperspectral imaging. Meat Sci. 2023, 201, 109196.

- Cheng, J.; Sun, J.; Yao, K.; Dai, C. Generalized and hetero two-dimensional correlation analysis of hyperspectral imaging combined with three-dimensional convolutional neural network for evaluating lipid oxidation in pork. Food Control 2023, 153, 109940.

- Sun, J.; Cheng, J.; Xu, M.; Yao, K. A method for freshness detection of pork using two-dimensional correlation spectroscopy images combined with dual-branch deep learning. J. Food Compos. Anal. 2024, 129, 106144.

- Pan, Y.; Jin, H.; Gao, J.; Rauf, H. Identification of Buffalo Breeds Using Self-Activated-Based Improved Convolutional Neural Networks. Agriculture 2022, 12, 1386.

- Liang, Z.; Xu, X.; Yang, D.; Liu, Y. The Development of a Lightweight DE-YOLO Model for Detecting Impurities and Broken Rice Grains. Agriculture 2025, 15, 848.

- Tao, K.; Wang, A.; Shen, Y.; Lu, Z.; Peng, F.; Wei, X. Peach Flower Density Detection Based on an Improved CNN Incorporating Attention Mechanism and Multi-Scale Feature Fusion. Horticulturae 2022, 8, 904.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Sun, J.; Cong, S.; Mao, H.; Wu, X.; Yang, N. Quantitative detection of mixed pesticide residue of lettuce leaves based on hyperspectral technique. J. Food Process Eng. 2017, 41, e12654.

- Zhang, H.; Berg, A.C.; Maire, M.; Malik, J. SVM-KNN: Discriminative Nearest Neighbor Classification for Visual Category Recognition. In Proceedings of the Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006.

- Xu, M.; Sun, J.; Cheng, J.; Yao, K.; Wu, X.; Zhou, X. Non-destructive prediction of total soluble solids and titratable acidity in Kyoho grape using hyperspectral imaging and deep learning algorithm. Int. J. Food Sci. Technol. 2022, 58, 9–21.

- Hu, Y.; Sheng, W.; Adade, S.Y.-S.S.; Wang, J.; Li, H.; Chen, Q. Comparison of machine learning and deep learning models for detecting quality components of vine tea using smartphone-based portable near-infrared device. Food Control 2025, 174, 111244.

- Strese, M.; Schuwerk, C.; Iepure, A.; Steinbach, E. Multimodal Feature-Based Surface Material Classification. IEEE Trans. Haptics 2017, 10, 226–239.

- Zheng, W.; Liu, H.; Wang, B.; Sun, F. Cross-Modal Material Perception for Novel Objects: A Deep Adversarial Learning Method. IEEE Trans. Autom. Sci. Eng. 2020, 17, 697–707.

- Zheng, H.; Fang, L.; Ji, M.; Strese, M.; Ozer, Y.; Steinbach, E. Deep Learning for Surface Material Classification Using Haptic and Visual Information. IEEE Trans. Multimed. 2016, 18, 2407–2416.

- Strese, M.; Brudermueller, L.; Kirsch, J.; Steinbach, E. Haptic Material Analysis and Classification Inspired by Human Exploratory Procedures. IEEE Trans. Haptics 2020, 13, 404–424.

- Rai, H.M.; Chatterjee, K. Hybrid CNN-LSTM deep learning model and ensemble technique for automatic detection of myocardial infarction using big ECG data. Appl. Intell. 2021, 52, 5366–5384.

- Guo, Z.; Xiao, H.; Dai, Z.; Wang, C.; Sun, C.; Watson, N.; Povey, M.; Zou, X. Identification of apple variety using machine vision and deep learning with Multi-Head Attention mechanism and GLCM. J. Food Meas. Charact. 2025, 1–19.

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Tian, Y.; Dai, C. A decision fusion method based on hyperspectral imaging and electronic nose techniques for moisture content prediction in frozen-thawed pork. LWT 2022, 165, 113778.

- Sun, J.; Zhang, L.; Zhou, X.; Yao, K.; Tian, Y.; Nirere, A. A method of information fusion for identification of rice seed varieties based on hyperspectral imaging technology. J. Food Process Eng. 2021, 44, 13797.

- Lin, H.; Xu, P.T.; Sun, L.; Bi, X.K.; Zhao, J.W.; Cai, J.R. Identification of eggshell crack using multiple vibration sensors and correlative information analysis. J. Food Process Eng. 2018, 41, 12894.

| Sample ID | Entropy | Normalized Mutual Information (sample pair: value) |
|---|---|---|
| Entropy1 | 7.5 | 1–2: 0.81 |
| Entropy2 | 6.5 | 1–3: 0.33 |
| Entropy3 | 5.5 | 2–3: 0.45 |
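The entropy values above (5.5 to 7.5) fall below the 8-bit maximum of an 8-bit gray-level histogram, but the formulas are not given in this section. As an assumption, the sketch below computes Shannon entropy of the gray-level histogram and one common normalization of mutual information, NMI = 2I(A;B)/(H(A)+H(B)); the authors may use a different normalization, and the function names are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(pixels):
    """Shannon entropy in bits of the gray-level histogram of a flat list of pixel values."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def joint_entropy(a, b):
    """Joint entropy in bits of co-located pixel pairs from two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must contain the same number of pixels")
    n = len(a)
    return -sum((c / n) * math.log2(c / n) for c in Counter(zip(a, b)).values())


def normalized_mutual_information(a, b):
    """Assumed normalization: NMI = 2 * I(A;B) / (H(A) + H(B)), which lies in [0, 1]."""
    h_a, h_b = shannon_entropy(a), shannon_entropy(b)
    if h_a + h_b == 0:
        return 1.0  # both images are constant, so treat them as identical
    mutual_info = h_a + h_b - joint_entropy(a, b)  # I(A;B) = H(A) + H(B) - H(A,B)
    return 2.0 * mutual_info / (h_a + h_b)


# Usage with two tiny hypothetical 2x2 "images" flattened to lists
print(shannon_entropy([0, 1, 2, 3]))                              # 2.0 (four equally likely levels)
print(normalized_mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0 (statistically independent)
```

With this normalization an image compared with itself gives NMI = 1, so a pair such as 1–2 (0.81) would share considerably more texture structure than 1–3 (0.33).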

| Category | Dimension | Description |
|---|---|---|
| Psychophysical | Macro-roughness | Uneven, Uniformly flat, Embossed sensation |
| Psychophysical | Fine roughness | Coarse, Fine, Sparse/Dense |
| Psychophysical | Stickiness/Slipperiness | Low grip, Slippery, High adhesion sensation |
| Psychophysical | Wetness/Dryness | Moist, Dry |
| Affective | Comfort Level | Pleasant and comfortable to the touch |

| Dataset | 0 | 1 | 2 |
|---|---|---|---|
| Entropy | E1 = 5.5 | E2 = 6.5 | E3 = 7.5 |

| Layer / Hyperparameter | Kernel Size / Value |
|---|---|
| Conv1 | 1 × 60 |
| Conv2 | 1 × 40 |
| Conv3 | 1 × 20 |
| Conv4, Conv5, Conv6 | 2 × 1 |
| AvgPooling | 1 × 200 |
| Query/Key/Value | 80 |
| Depth | 6 |
| Num_hidden | 80 |
| Head | 10 |
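The table gives a Query/Key/Value width of 80 and 10 attention heads, so each head operates on 80 / 10 = 8 features. Assuming the attention stage follows standard multi-head self-attention as in Vaswani et al. [22] (the exact variant is not stated here), the plain-Python sketch below shows the head split and scaled dot-product attention. The output projection, residual connections, normalization and feed-forward sublayer are omitted; if Depth = 6 denotes six stacked encoder blocks, this layer would be repeated six times. All names are hypothetical.

```python
import math

D_MODEL = 80                     # Query/Key/Value width from the table
NUM_HEADS = 10                   # "Head" from the table
HEAD_DIM = D_MODEL // NUM_HEADS  # 8 features per head


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for a single head."""
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_self_attention(x, w_q, w_k, w_v):
    """Project x (seq_len x D_MODEL), split into NUM_HEADS heads, attend, and concatenate."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = []
    for h in range(NUM_HEADS):
        cols = slice(h * HEAD_DIM, (h + 1) * HEAD_DIM)  # this head's 8 feature columns
        heads.append(scaled_dot_product_attention(
            [row[cols] for row in q], [row[cols] for row in k], [row[cols] for row in v]))
    # Concatenate the per-head outputs back to D_MODEL features per time step
    return [[value for head in heads for value in head[t]] for t in range(len(x))]
```

Splitting the 80-dimensional projection into ten 8-dimensional heads keeps the total cost close to a single full-width head while letting each head attend to a different pattern.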

| Parameter | Batch Size | Learning Rate | Epochs | Optimizer | L2 | Dropout |
|---|---|---|---|---|---|---|
| Value | 72 | 0.0001 | 200 | Adam | 0.0001 | 0.4 |
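The training table lists Adam with an L2 coefficient of 0.0001 but does not say how the penalty is applied. One common choice, assumed here, is coupled L2 regularization, where the term l2 · p is added to each gradient before the Adam moment updates (the same coupling used by, for example, PyTorch's `torch.optim.Adam` with `weight_decay`). The betas and epsilon are the usual Adam defaults, also assumed; batch size and dropout are carried in the config only for completeness, and all names are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TrainingConfig:
    """Values copied from the training-parameter table."""
    batch_size: int = 72
    learning_rate: float = 1e-4
    epochs: int = 200
    optimizer: str = "Adam"
    l2: float = 1e-4
    dropout: float = 0.4


@dataclass
class AdamState:
    """Adam moment estimates; betas and eps are assumed defaults."""
    beta1: float = 0.9
    beta2: float = 0.999
    eps: float = 1e-8
    step: int = 0
    m: list = field(default_factory=list)
    v: list = field(default_factory=list)


def adam_l2_step(params, grads, state, cfg):
    """One Adam update with the L2 penalty folded into the gradient (coupled weight decay)."""
    if not state.m:
        state.m = [0.0] * len(params)
        state.v = [0.0] * len(params)
    state.step += 1
    updated = []
    for i, (p, g) in enumerate(zip(params, grads)):
        g = g + cfg.l2 * p  # gradient of the penalty (l2 / 2) * p**2
        state.m[i] = state.beta1 * state.m[i] + (1 - state.beta1) * g
        state.v[i] = state.beta2 * state.v[i] + (1 - state.beta2) * g * g
        m_hat = state.m[i] / (1 - state.beta1 ** state.step)  # bias-corrected first moment
        v_hat = state.v[i] / (1 - state.beta2 ** state.step)  # bias-corrected second moment
        updated.append(p - cfg.learning_rate * m_hat / (math.sqrt(v_hat) + state.eps))
    return updated
```

On the first step the bias-corrected ratio m̂/√v̂ is close to ±1, so every parameter moves by roughly the learning rate (0.0001) regardless of gradient scale.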

| Force Signal Features | Vibration Signal Features |
|---|---|
| Mean, Variance | Spectral Centroid, Spectral Entropy |
| Root Mean Square (RMS) | Power Spectral Density (PSD), Zero-Crossing Rate (ZCR) |
| Friction Coefficient | Short-Time Energy |
| Energy | Mel-Frequency Cepstral Coefficients (MFCCs) |
| Spectral Centroid, Bandwidth | Grayscale Histogram: Mean, Variance, Entropy |
| Spectral Entropy | Gray-Level Co-occurrence Matrix (GLCM): Energy, Entropy, Inertia |
| Power Spectral Density (PSD) | Fractal Dimension |
| Skewness | Skewness |
| Kurtosis | Kurtosis |
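The feature table lists the handcrafted descriptors without formulas. The sketch below implements common textbook definitions of several of them in plain Python; the specific choices (population variance, non-excess kurtosis, a naive DFT for the one-sided power spectrum, and the friction coefficient as the mean ratio of tangential to normal force) are assumptions rather than the authors' implementation, and the function names are hypothetical. MFCCs, GLCM descriptors and fractal dimension are omitted.

```python
import cmath
import math


def time_features(x):
    """Mean, population variance, RMS, energy, skewness and (non-excess) kurtosis."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    std = math.sqrt(var)
    return {
        "mean": mean,
        "variance": var,
        "rms": math.sqrt(sum(v * v for v in x) / n),
        "energy": sum(v * v for v in x),
        "skewness": sum((v - mean) ** 3 for v in x) / (n * std ** 3) if std > 0 else 0.0,
        "kurtosis": sum((v - mean) ** 4 for v in x) / (n * var ** 2) if var > 0 else 0.0,
    }


def zero_crossing_rate(x):
    """Fraction of consecutive sample pairs whose sign differs."""
    if len(x) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(x, x[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(x) - 1)


def power_spectrum(x):
    """One-sided power spectrum via a naive O(n^2) DFT; an FFT would be used in practice."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) ** 2 / n
            for k in range(n // 2 + 1)]


def spectral_centroid(x, fs):
    """Power-weighted mean frequency in Hz, for sampling rate fs."""
    psd = power_spectrum(x)
    freqs = [k * fs / len(x) for k in range(len(psd))]
    total = sum(psd)
    return sum(f * p for f, p in zip(freqs, psd)) / total if total > 0 else 0.0


def spectral_entropy(x):
    """Shannon entropy in bits of the normalized power spectrum."""
    psd = power_spectrum(x)
    total = sum(psd)
    return -sum((p / total) * math.log2(p / total) for p in psd if p > 0)


def friction_coefficient(tangential, normal):
    """Mean ratio of tangential to normal force (hypothetical definition)."""
    ratios = [ft / fn for ft, fn in zip(tangential, normal) if fn != 0]
    return sum(ratios) / len(ratios)
```

A pure tone concentrates its power in one spectral bin, giving a spectral entropy near zero and a centroid at the tone frequency, whereas broadband sliding vibration spreads power and raises both the entropy and, typically, the bandwidth.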

| Model | Acc | Precision | Recall | F1 | BAC | Kappa |
|---|---|---|---|---|---|---|
| MFT-Net | 86.66% | 84.53% | 85.03% | 83.15% | 84.13% | 83.74% |
| RF | 63.33% | 62.44% | 63.02% | 60.75% | 61.43% | 61.83% |
| KNN | 64.67% | 62.51% | 61.33% | 61.89% | 63.59% | 60.94% |
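All six columns can be derived from a single confusion matrix. Assuming macro averaging over the classes (the averaging scheme is not stated in this section), the sketch below computes accuracy, macro precision, recall and F1, balanced accuracy (BAC) and Cohen's kappa. In this sketch BAC coincides with macro recall by definition; the table reports different values for Recall and BAC, so the authors' averaging evidently differs from this sketch. The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Accuracy, macro precision/recall/F1, balanced accuracy and Cohen's kappa."""
    cm = [[0] * num_classes for _ in range(num_classes)]  # rows: true class, cols: predicted
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    n = len(y_true)
    row_sums = [sum(cm[c]) for c in range(num_classes)]
    col_sums = [sum(cm[r][c] for r in range(num_classes)) for c in range(num_classes)]

    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        tp = cm[c][c]
        prec = tp / col_sums[c] if col_sums[c] else 0.0
        rec = tp / row_sums[c] if row_sums[c] else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)

    def macro(values):
        return sum(values) / num_classes

    accuracy = sum(cm[c][c] for c in range(num_classes)) / n
    # Chance agreement from the marginals, then kappa = (p_o - p_e) / (1 - p_e)
    p_e = sum(row_sums[c] * col_sums[c] for c in range(num_classes)) / (n * n)
    kappa = (accuracy - p_e) / (1 - p_e) if p_e < 1 else float("nan")  # undefined if p_e == 1
    return {
        "accuracy": accuracy,
        "precision": macro(precisions),
        "recall": macro(recalls),
        "f1": macro(f1s),
        "balanced_accuracy": macro(recalls),
        "kappa": kappa,
    }
```

For three balanced classes the chance agreement is p_e = 1/3, so kappa is noticeably lower than accuracy for the same predictions.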

| Model | Input Modalities | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| WCMAL [42] | acceleration, image | 88.6% | 86.5% | 84.8% | 85.6% |
| HapticNet [43] | acceleration, image | 91% | 89.5% | 87.3% | 88.4% |
| Handcrafted multimodal features [41] | vibration, acceleration, friction, image | 75% | 72.6% | 70% | 71.3% |
| Handcrafted multimodal features [44] | vibration, acceleration, friction, image | 90.5% | 89.1% | 87.3% | 88.2% |
| CNN-LSTM [45] | vibration, acceleration, friction | 91.7% | 89.3% | 88.9% | 90.1% |
| Proposed Multi-Model Fusion Network | vibration, acceleration, friction | 93.2% | 91.7% | 90.5% | 89.3% |

| Fusion Strategy | Early Fusion | Intermediate Fusion (SE) | Late Fusion |
|---|---|---|---|
| Entropy | 71.82% | 81.04% | 75.91% |
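The fusion-strategy table compares early, intermediate (SE) and late fusion. As a hedged illustration, assuming early fusion concatenates the modality inputs before a shared encoder, intermediate fusion reweights the stacked per-modality feature channels with a Squeeze-and-Excitation block [21], and late fusion averages class probabilities from separate per-modality classifiers, the sketch below contrasts the three. The encoders and classifiers themselves are omitted, and all function names and parameters are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(x, weights, bias):
    """Fully connected layer; weights has shape (out_dim x in_dim)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def se_reweight(channels, w1, b1, w2, b2):
    """Squeeze-and-Excitation over C channels, each a list of T values."""
    squeezed = [sum(ch) / len(ch) for ch in channels]        # squeeze: global average per channel
    hidden = [max(0.0, h) for h in dense(squeezed, w1, b1)]  # excitation: FC + ReLU (C -> C/r)
    gates = [sigmoid(g) for g in dense(hidden, w2, b2)]      # FC + sigmoid (C/r -> C), in (0, 1)
    return [[gate * v for v in ch] for gate, ch in zip(gates, channels)], gates


def early_fusion(*modality_inputs):
    """Concatenate modality inputs before any modality-specific encoder."""
    return [v for x in modality_inputs for v in x]


def intermediate_fusion(force_channels, vib_channels, se_params):
    """Stack per-modality feature channels (same length T) and let an SE block weight them."""
    return se_reweight(force_channels + vib_channels, *se_params)


def late_fusion(prob_lists, weights=None):
    """Weighted average of class-probability vectors from separate per-modality classifiers."""
    weights = weights or [1.0 / len(prob_lists)] * len(prob_lists)
    n_classes = len(prob_lists[0])
    return [sum(w * p[c] for w, p in zip(weights, prob_lists)) for c in range(n_classes)]
```

The SE gates are learned per channel, so intermediate fusion can down-weight a less informative modality's features before classification, which early concatenation and fixed-weight late averaging cannot do.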

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, S.; Gao, C.; Chen, C.; Ru, W.; Yang, N. A Tactile Cognitive Model Based on Correlated Texture Information Entropy and Multimodal Fusion Learning. Sensors 2025, 25, 5786. https://doi.org/10.3390/s25185786

Chen S, Gao C, Chen C, Ru W, Yang N. A Tactile Cognitive Model Based on Correlated Texture Information Entropy and Multimodal Fusion Learning. Sensors. 2025; 25(18):5786. https://doi.org/10.3390/s25185786

Chicago/Turabian Style: Chen, Si, Chi Gao, Chen Chen, Weimin Ru, and Ning Yang. 2025. "A Tactile Cognitive Model Based on Correlated Texture Information Entropy and Multimodal Fusion Learning" Sensors 25, no. 18: 5786. https://doi.org/10.3390/s25185786

APA Style: Chen, S., Gao, C., Chen, C., Ru, W., & Yang, N. (2025). A Tactile Cognitive Model Based on Correlated Texture Information Entropy and Multimodal Fusion Learning. Sensors, 25(18), 5786. https://doi.org/10.3390/s25185786