Integrating Cross-Modal Semantic Learning with Generative Models for Gesture Recognition

Abstract

1. Introduction

- We propose CM-GR, a cross-modal gesture recognition framework that synthesizes user-specific WiFi data guided by visual 3D skeletal points.

- We design conditional vectors derived from skeletal semantics to introduce biomechanical constraints into WiFi data generation, enabling personalized synthesis without additional user-specific data collection.

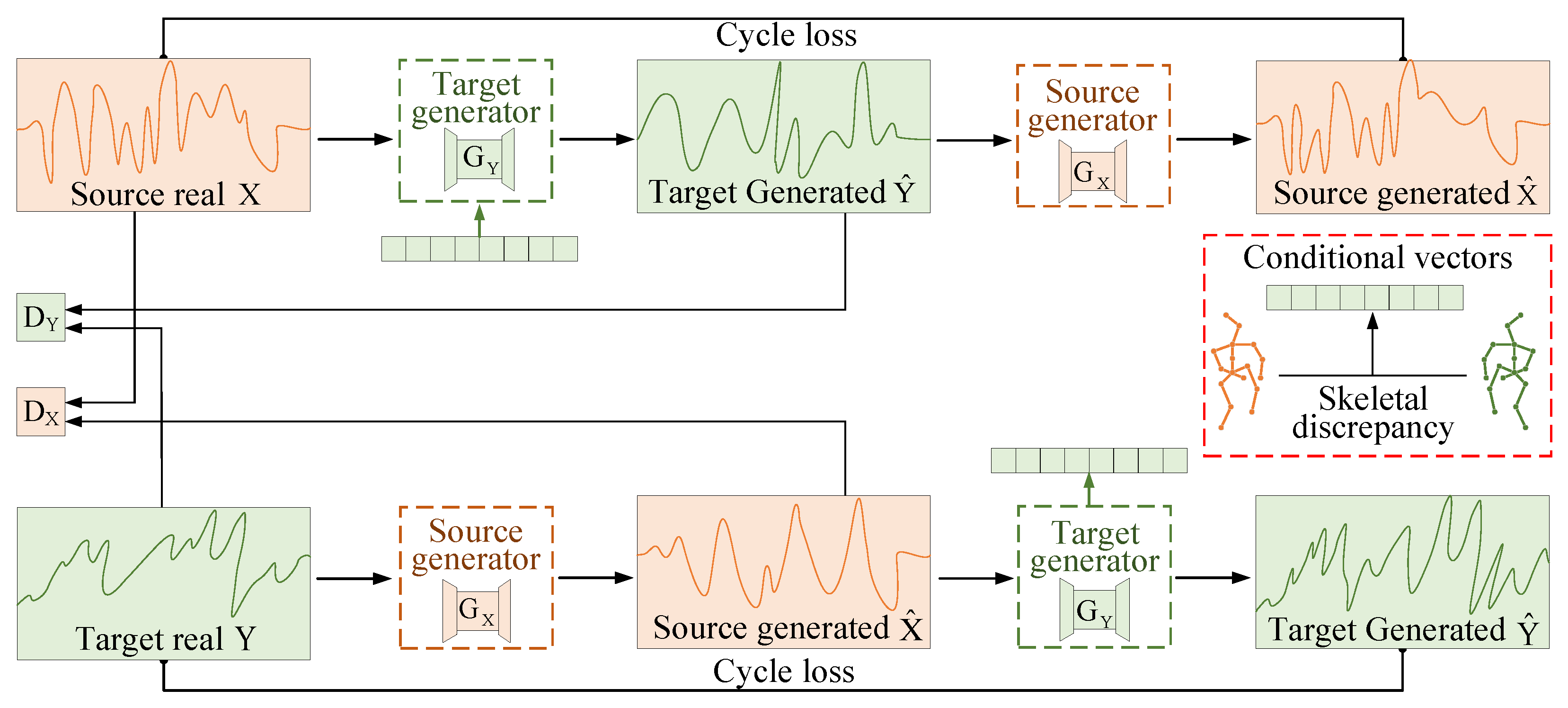

- We develop a dual-stream adversarial architecture with bidirectional domain translation that improves both the fidelity and generalization of generated WiFi data.

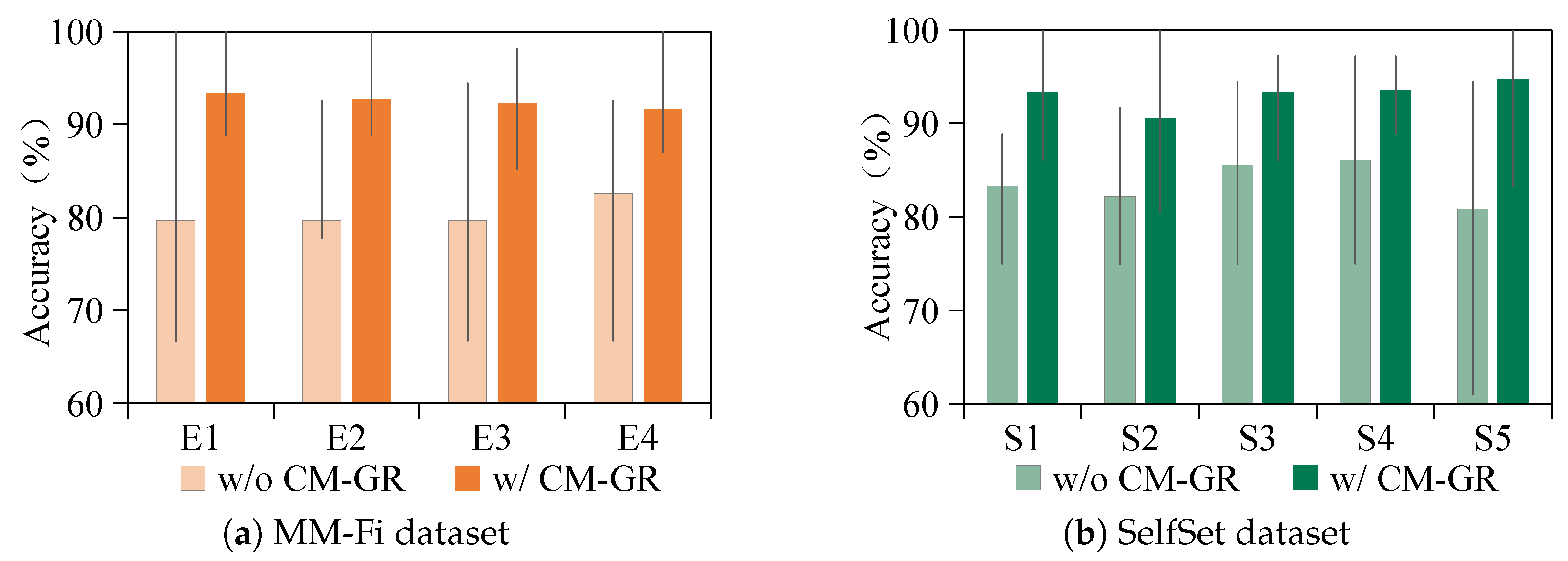

- Extensive experiments on the MM-Fi and SelfSet datasets demonstrate that CM-GR significantly outperforms existing methods in cross-subject gesture recognition, improving the accuracy by 10.26% and 9.5%, respectively.
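
The second contribution derives conditional vectors from skeletal semantics, but this text does not say which features they contain. The sketch below is a hypothetical illustration only. One biomechanically meaningful choice is the set of bone lengths computed from the 3D skeletal points. For a given user these lengths stay nearly constant across frames, so averaging them over a clip gives a compact, user-specific descriptor. Several details are assumptions and not the CM-GR design: the joint indices in `BONES`, the function names, and the tiling of the vector into constant input channels (a common way to condition convolutional generators).

```python
import math
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]  # (x, y, z), e.g. in meters

# Hypothetical arm skeleton as (parent, child) joint indices. Real layouts
# depend on the pose estimator; these indices are assumed for illustration.
BONES: List[Tuple[int, int]] = [
    (0, 1), (1, 2), (2, 3),  # neck -> right shoulder -> right elbow -> right wrist
    (0, 4), (4, 5), (5, 6),  # neck -> left shoulder -> left elbow -> left wrist
]


def bone_lengths(joints: Sequence[Joint]) -> List[float]:
    """Euclidean length of every bone in BONES for one skeleton frame."""
    return [math.dist(joints[a], joints[b]) for a, b in BONES]


def condition_vector(frames: Sequence[Sequence[Joint]]) -> List[float]:
    """Average bone lengths over all frames of a clip.

    Bone lengths barely change for one person, so the mean keeps the
    user's body proportions while discarding frame-to-frame motion.
    """
    per_frame = [bone_lengths(f) for f in frames]
    return [sum(col) / len(col) for col in zip(*per_frame)]


def tile_condition(cond: Sequence[float], height: int, width: int) -> List[List[List[float]]]:
    """Broadcast each condition value into a constant height x width channel
    so it can be stacked with the CSI channels of a generator input."""
    return [[[c] * width for _ in range(height)] for c in cond]


# Usage with one synthetic frame repeated twice.
frame = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (0.5, 0.0, 0.0), (0.75, 0.0, 0.0),
         (-0.2, 0.0, 0.0), (-0.5, 0.0, 0.0), (-0.75, 0.0, 0.0)]
cond = condition_vector([frame, frame])
channels = tile_condition(cond, height=4, width=5)
print([round(c, 3) for c in cond])  # prints [0.2, 0.3, 0.25, 0.2, 0.3, 0.25]
```

Tiling the vector spatially lets a fully convolutional generator see the same user descriptor at every position. Other ways of injecting conditions, such as feature-wise modulation, would work equally well in this toy setting.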

2. Background

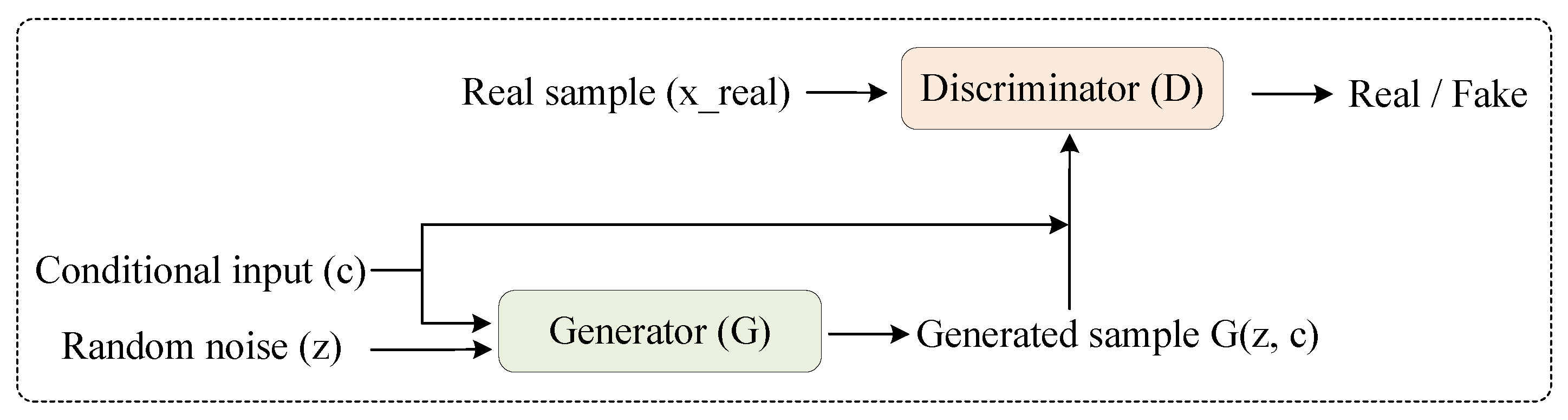

2.1. Generative Adversarial Networks (GANs)

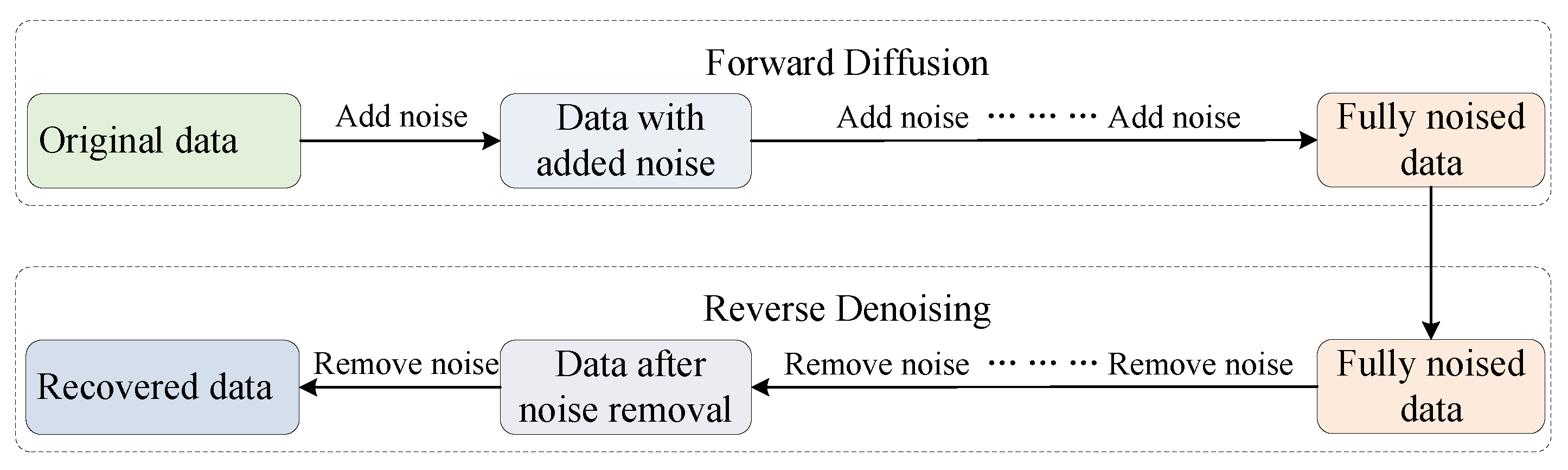

2.2. Diffusion Models

3. Related Work

3.1. Gesture Recognition

3.2. Multimodal Learning

3.3. Generative Models

4. Our Approach

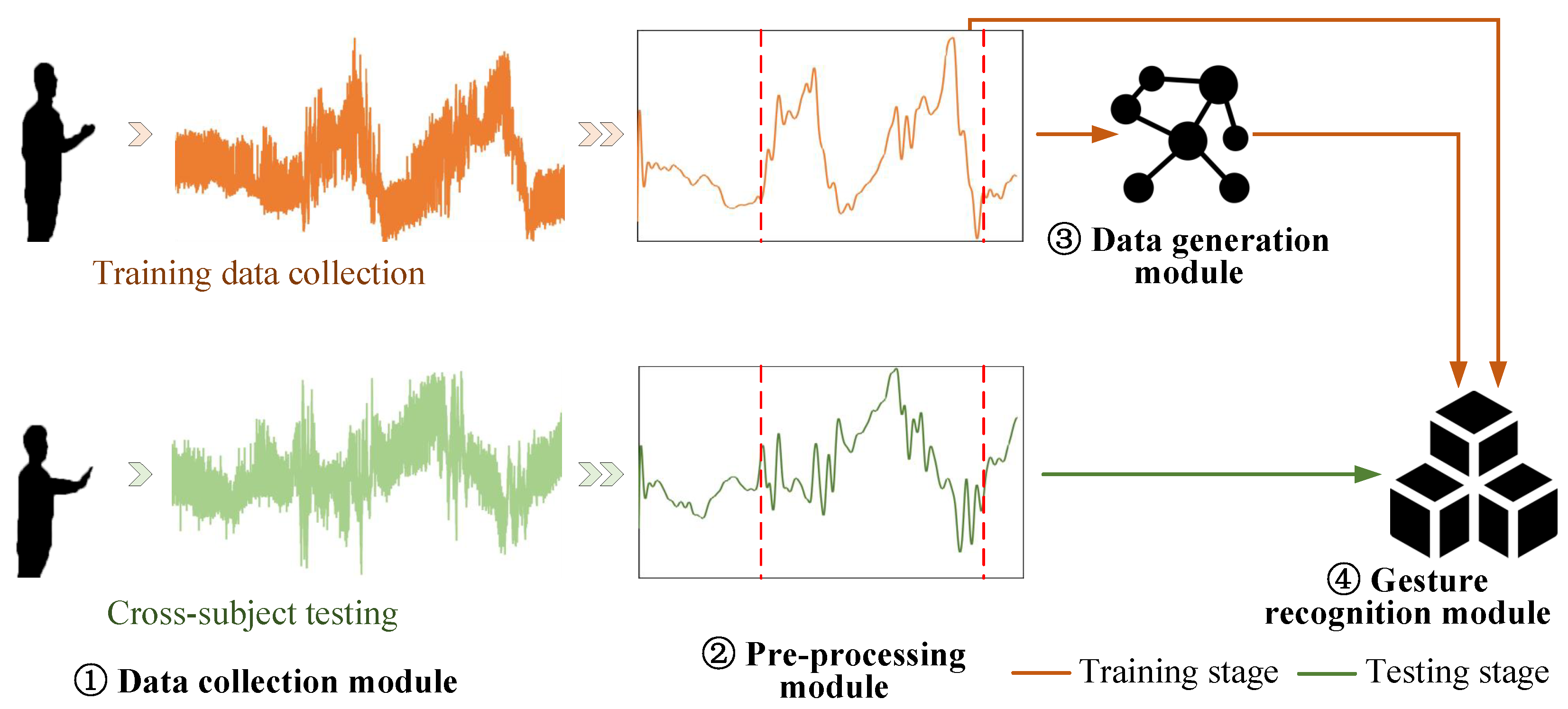

4.1. System Overview

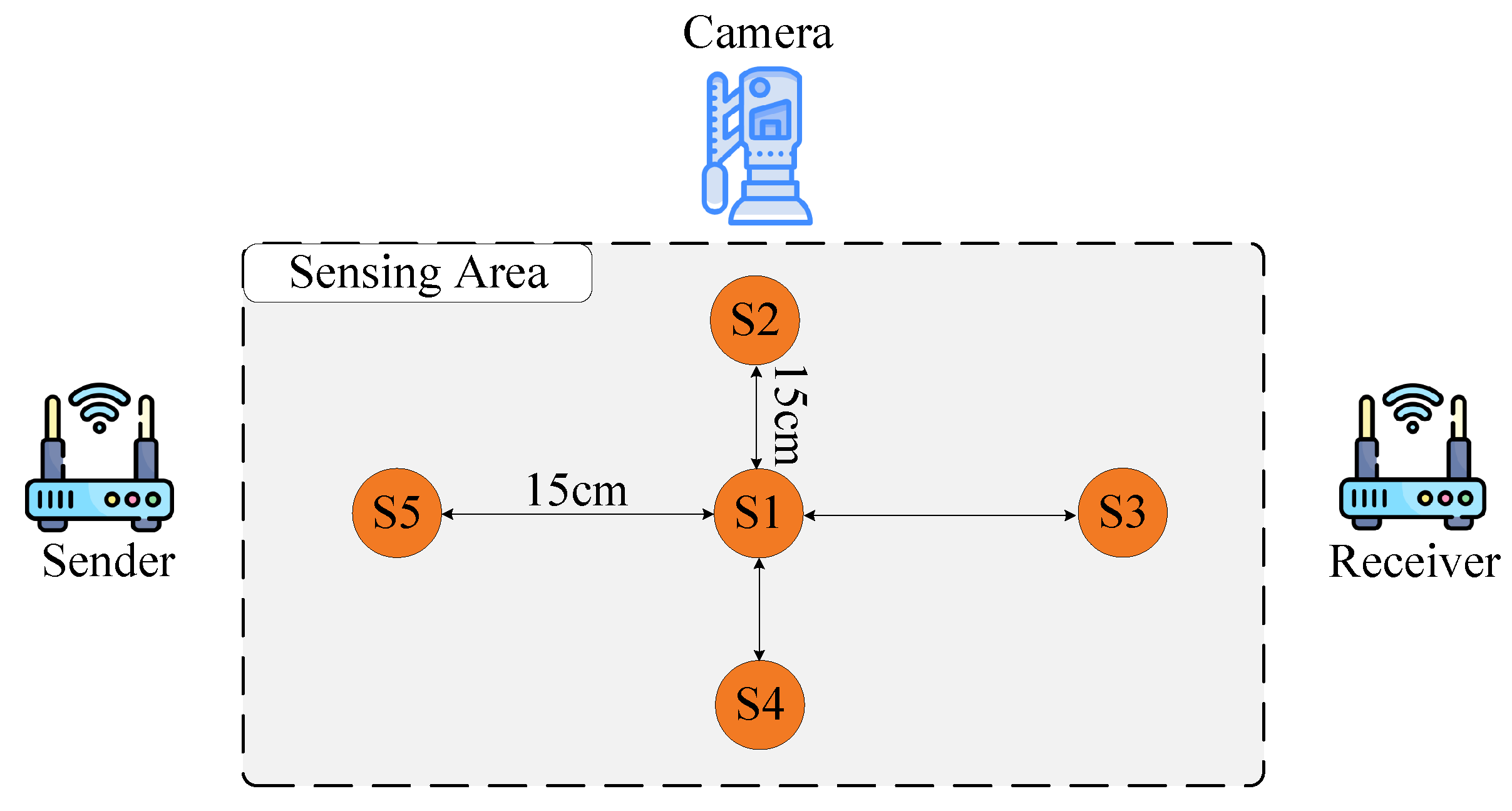

- Data Collection Module: The data collection module captures raw channel state information (CSI) through a multi-antenna WiFi transceiver and builds the gesture dataset, using a miniature computer to record data in several environments. Recording across environments gives the downstream modules a wide variety of gesture patterns to work with.

- Data Preprocessing Module: The data preprocessing module denoises the collected CSI and localizes the gestures within it. These operations suppress environmental noise and multipath interference so that later stages receive clean, standardized data, which improves the accuracy and consistency of the gesture recognition model.

- Gesture Recognition Module: Once the data are preprocessed, the gesture recognition module uses them to train a model that classifies gestures from the processed WiFi data. When the pretrained model is deployed to new users, however, its learned representation space does not adapt well to individual differences such as height, body shape, and behavior, and its performance degrades.

- Data Generation Module: Because little data are available for new users, the data generation module uses adversarial synthesis to generate high-quality WiFi sensing data specific to each target user. The synthesized samples make the recognition model more robust in cross-subject tasks and remove the need for a dedicated data-collection session with every new user.
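
Section 4.2 names two preprocessing steps, signal denoising and gesture localization, but this text does not give their details. The sketch below shows one common pairing in CSI work, offered as an assumed stand-in rather than the authors' method. A Hampel filter replaces outlier samples with the local median. A sliding-window variance test then marks where the signal is active. The window sizes and the threshold are hypothetical values.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def hampel(x: Sequence[float], half_window: int = 3, n_sigma: float = 3.0) -> List[float]:
    """Hampel filter: replace samples far from the local median by that median."""
    y = list(x)
    for i in range(len(x)):
        lo, hi = max(0, i - half_window), min(len(x), i + half_window + 1)
        window = x[lo:hi]
        med = statistics.median(window)
        mad = statistics.median([abs(v - med) for v in window])
        # 1.4826 * MAD estimates the standard deviation of Gaussian noise.
        if abs(x[i] - med) > n_sigma * 1.4826 * mad:
            y[i] = med
    return y


def locate_gesture(x: Sequence[float], window: int = 5,
                   threshold: float = 0.01) -> Optional[Tuple[int, int]]:
    """Return a [start, end) span covering every sliding window whose
    population variance exceeds `threshold`, or None if the signal is quiet."""
    active = [i for i in range(len(x) - window + 1)
              if statistics.pvariance(x[i:i + window]) > threshold]
    if not active:
        return None
    return active[0], active[-1] + window


static = [0.0] * 10
static[5] = 10.0                   # isolated outlier in a static segment
print(hampel(static)[5])           # prints 0.0

burst = [0.0] * 20 + [1.0, -1.0] * 5 + [0.0] * 20   # "gesture" at samples 20..29
print(locate_gesture(burst))       # prints (16, 34)
```

The returned span includes up to `window - 1` quiet samples on each side of the motion. A real localizer would likely trim this margin or smooth the variance curve first.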

4.2. Preprocessing Module

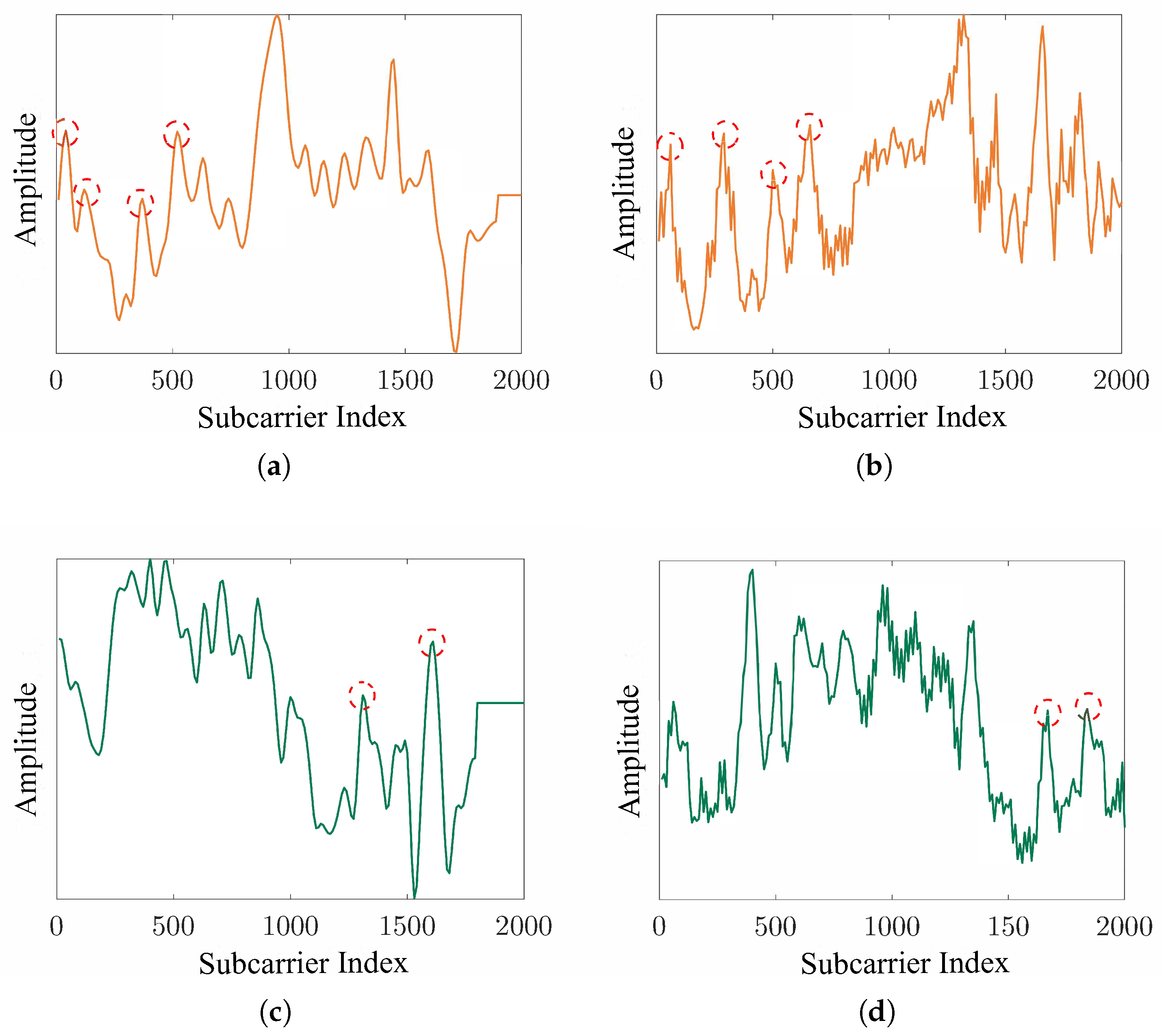

4.2.1. Signal Denoising

4.2.2. Gesture Localization

4.3. Data Generation Module

4.3.1. Signal Generator

4.3.2. Signal Discriminator

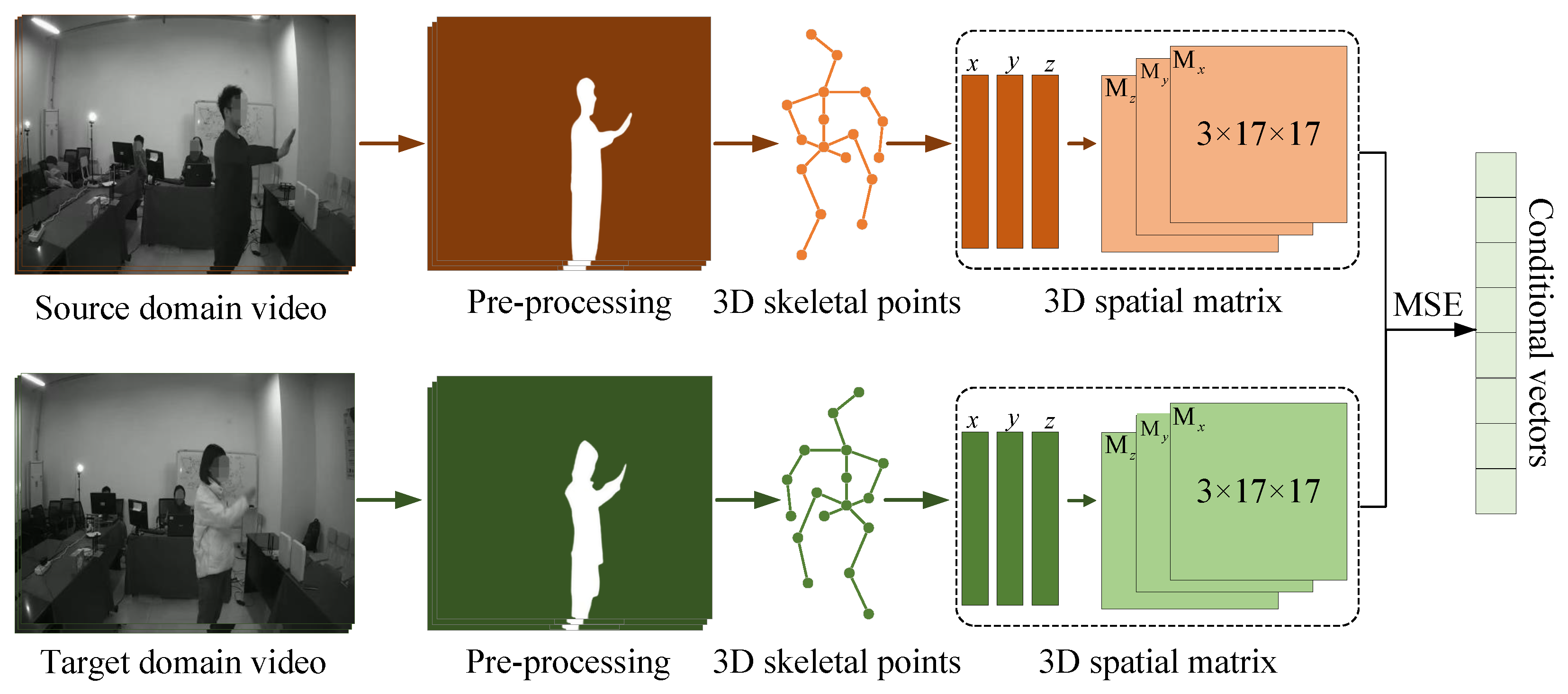

4.3.3. Cross-Modal Conditional Vector

4.3.4. Loss Function

4.4. Gesture Recognition Module

5. Evaluation

5.1. Experimental Setup and Parameter Settings

5.2. Overall Performance

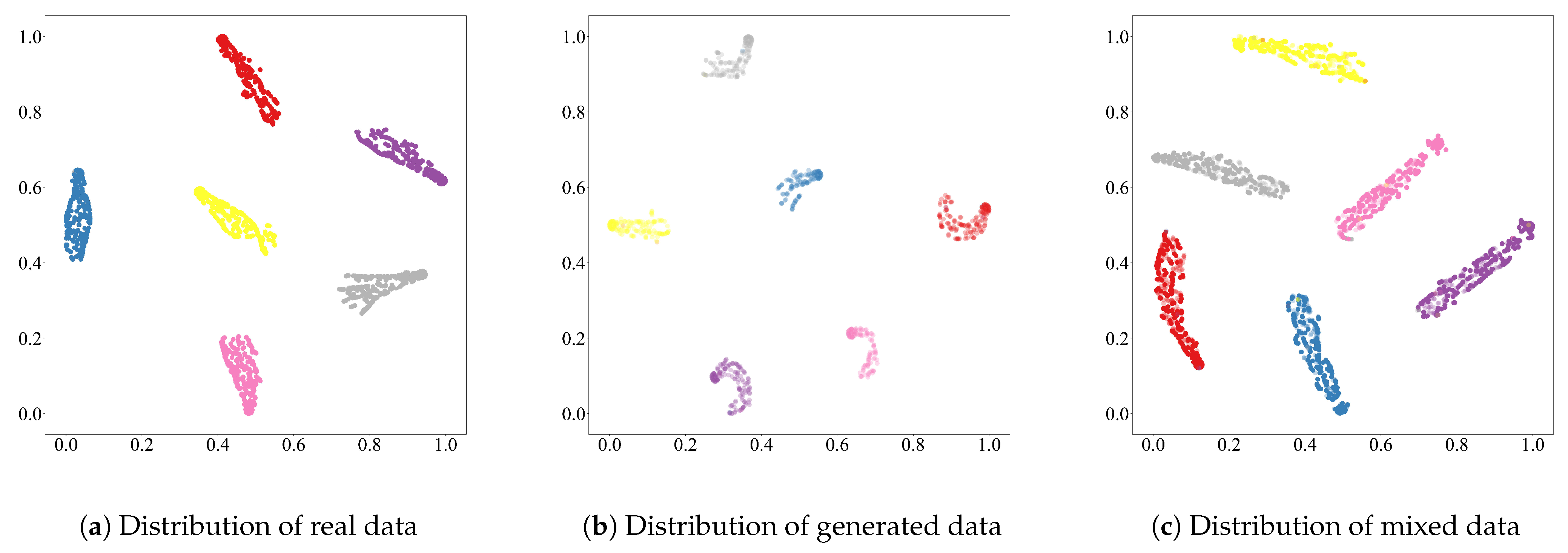

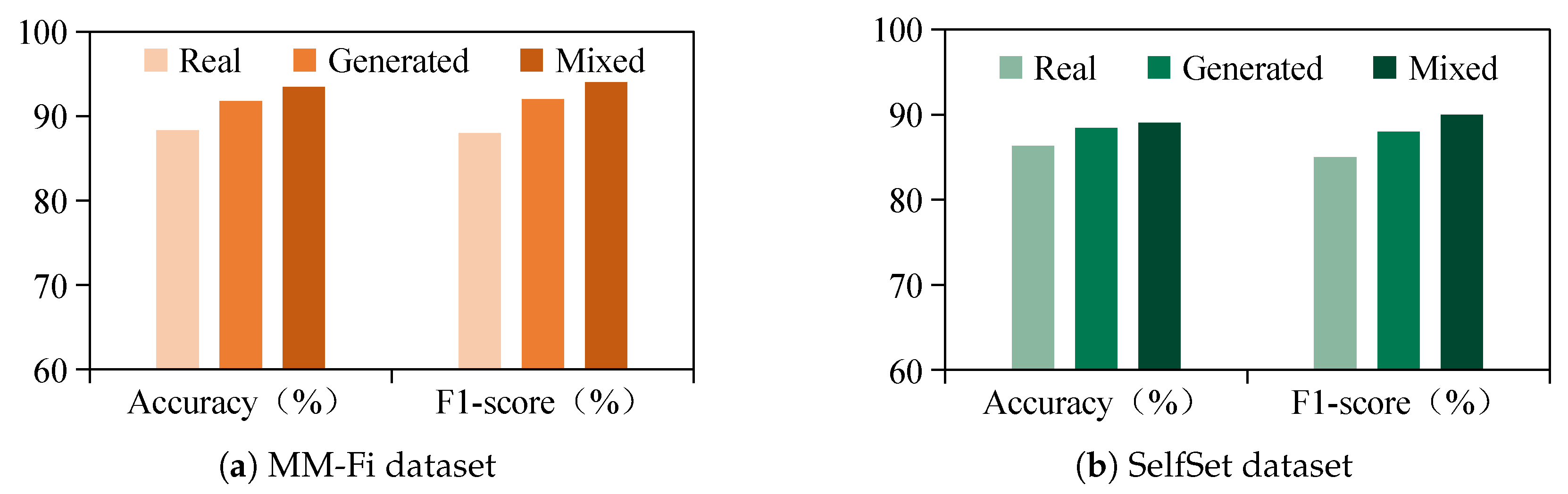

5.3. Performance of Data Generation

5.4. Comparisons with Existing Methods

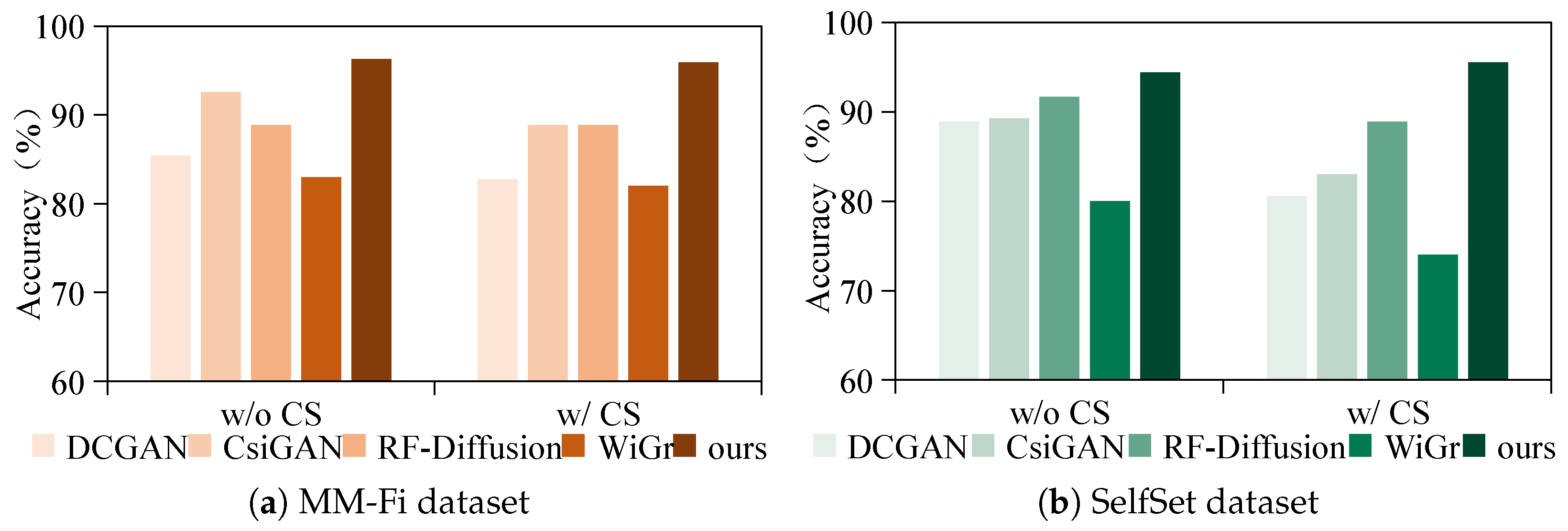

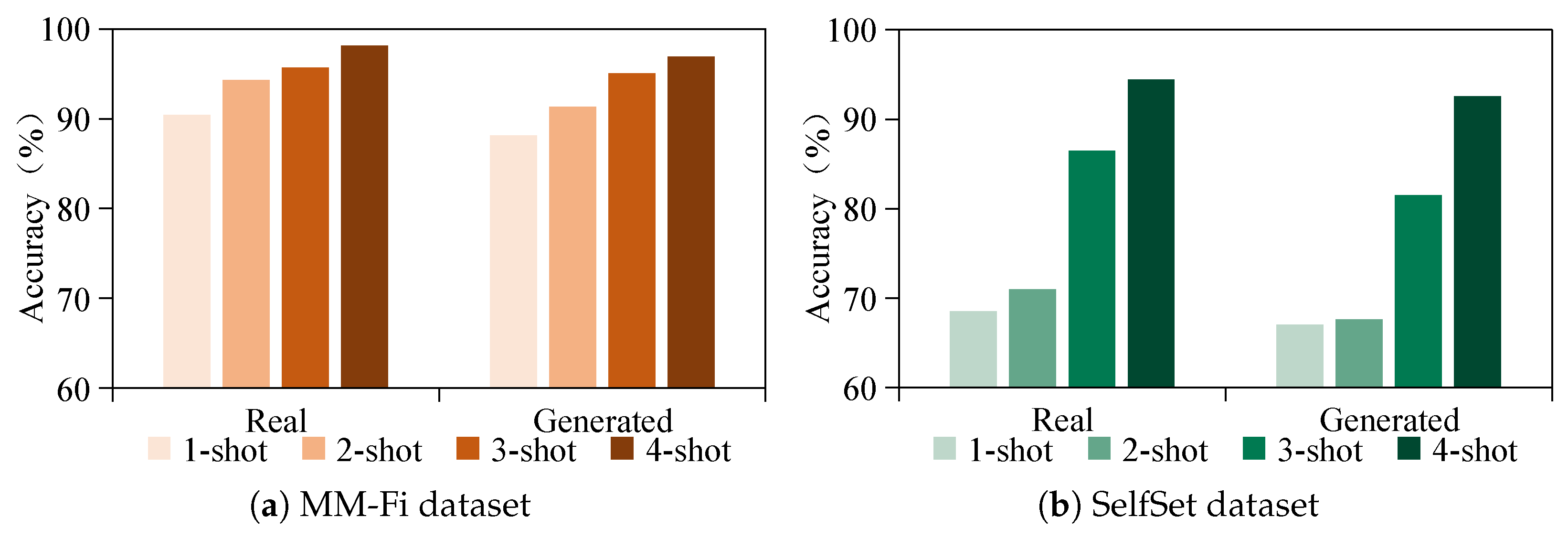

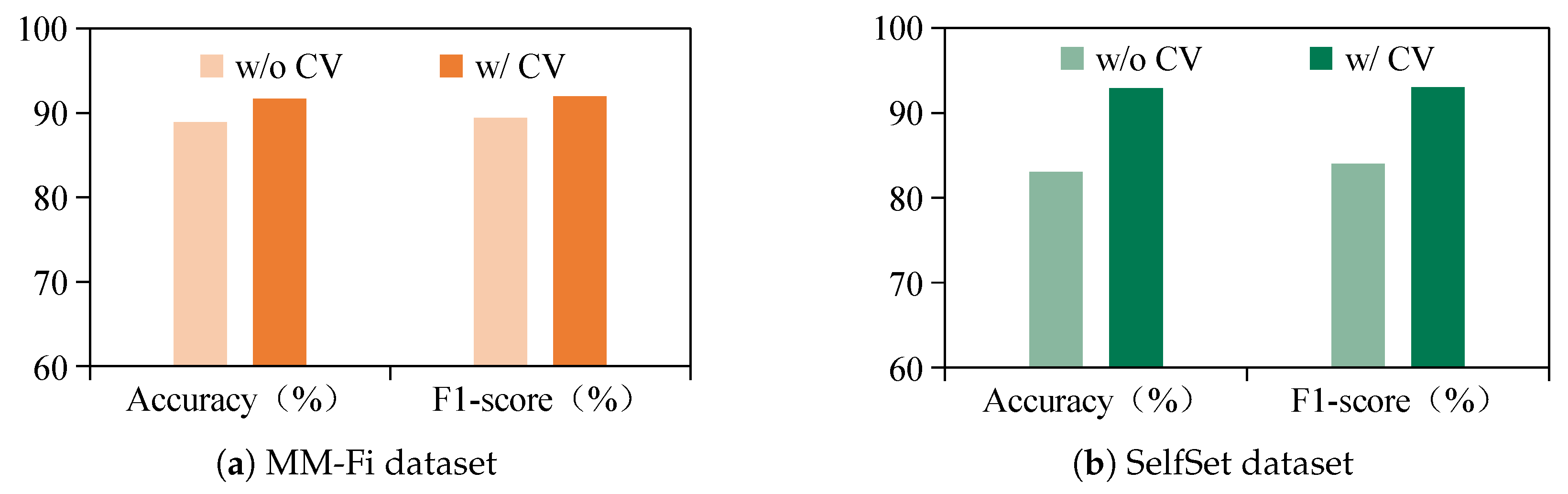

5.5. Performance of Cross-Modal Semantic Learning

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tan, S.; Ren, Y.; Yang, J.; Chen, Y. Commodity WiFi sensing in ten years: Status, challenges, and opportunities. IEEE Internet Things J. 2022, 9, 17832–17843.

- Liu, X.; Meng, X.; Duan, H.; Hu, Z.; Wang, M. A Survey on Secure WiFi Sensing Technology: Attacks and Defenses. Sensors 2025, 25, 1913.

- Miao, F.; Huang, Y.; Lu, Z.; Ohtsuki, T.; Gui, G.; Sari, H. Wi-Fi Sensing Techniques for Human Activity Recognition: Brief Survey, Potential Challenges, and Research Directions. ACM Comput. Surv. 2025, 57, 1–30.

- Chen, C.; Zhou, G.; Lin, Y. Cross-domain wifi sensing with channel state information: A survey. ACM Comput. Surv. 2023, 55, 231.

- Sheng, B.; Han, R.; Cai, H.; Xiao, F.; Gui, L.; Guo, Z. CDFi: Cross-domain action recognition using WiFi signals. IEEE Trans. Mob. Comput. 2024, 23, 8463–8477.

- He, Z.; Bouazizi, M.; Gui, G.; Ohtsuki, T. A Cross-subject Transfer Learning Method for CSI-based Wireless Sensing. IEEE Internet Things J. 2025, 12, 23946–23960.

- Zhai, S.; Tang, Z.; Nurmi, P.; Fang, D.; Chen, X.; Wang, Z. RISE: Robust wireless sensing using probabilistic and statistical assessments. In Proceedings of the 27th Annual International Conference on Mobile Computing and Networking, New Orleans, LA, USA, 25–29 October 2021; pp. 309–322.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Hou, W.; Wu, C. RFboost: Understanding and boosting deep wifi sensing via physical data augmentation. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2024, 8, 58.

- Zhao, X.; An, Z.; Pan, Q.; Yang, L. NeRF2: Neural radio-frequency radiance fields. In Proceedings of the 29th Annual International Conference on Mobile Computing and Networking, Madrid, Spain, 2–6 October 2023; pp. 1–15.

- Li, X.; Chang, L.; Song, F.; Wang, J.; Chen, X.; Tang, Z.; Wang, Z. CrossGR: Accurate and low-cost cross-target gesture recognition using Wi-Fi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 21.

- Xiao, C.; Han, D.; Ma, Y.; Qin, Z. CsiGAN: Robust channel state information-based activity recognition with GANs. IEEE Internet Things J. 2019, 6, 10191–10204.

- Chi, G.; Yang, Z.; Wu, C.; Xu, J.; Gao, Y.; Liu, Y.; Han, T.X. RF-diffusion: Radio signal generation via time-frequency diffusion. In Proceedings of the 30th Annual International Conference on Mobile Computing and Networking, Washington, DC, USA, 18–22 November 2024; pp. 77–92.

- Zhang, J.; He, K.; Yu, T.; Yu, J.; Yuan, Z. Semi-supervised RGB-D hand gesture recognition via mutual learning of self-supervised models. ACM Trans. Multimed. Comput. Commun. Appl. 2025, 21, 1–20.

- dos Santos, C.C.; Samatelo, J.L.A.; Vassallo, R.F. Dynamic gesture recognition by using CNNs and star RGB: A temporal information condensation. Neurocomputing 2020, 400, 238–254.

- Barbhuiya, A.A.; Karsh, R.K.; Jain, R. Gesture recognition from RGB images using convolutional neural network-attention based system. Concurr. Comput. Pract. Exp. 2022, 34, e7230.

- Leon, D.G.; Gröli, J.; Yeduri, S.R.; Rossier, D.; Mosqueron, R.; Pandey, O.J.; Cenkeramaddi, L.R. Video hand gestures recognition using depth camera and lightweight CNN. IEEE Sens. J. 2022, 22, 14610–14619.

- Qi, J.; Ma, L.; Cui, Z.; Yu, Y. Computer vision-based hand gesture recognition for human-robot interaction: A review. Complex Intell. Syst. 2024, 10, 1581–1606.

- Tasfia, R.; Mohd Yusoh, Z.I.; Habib, A.B.; Mohaimen, T. An overview of hand gesture recognition based on computer vision. Int. J. Electr. Comput. Eng. 2024, 14, 4636–4645.

- Shaikh, M.B.; Chai, D. RGB-D data-based action recognition: A review. Sensors 2021, 21, 4246.

- Gao, K.; Zhang, H.; Liu, X.; Wang, X.; Xie, L.; Ji, B.; Yan, Y.; Yin, E. Challenges and solutions for vision-based hand gesture interpretation: A review. Comput. Vis. Image Underst. 2024, 248, 104095.

- Makaussov, O.; Krassavin, M.; Zhabinets, M.; Fazli, S. A low-cost, IMU-based real-time on device gesture recognition glove. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3346–3351.

- Wang, D.; Wang, J.; Liu, Y.; Meng, X. HMM-based IMU data processing for arm gesture classification and motion tracking. Int. J. Model. Identif. Control 2023, 42, 54–63.

- Mekruksavanich, S.; Phaphan, W.; Jitpattanakul, A. Sensor-Based Hand Gesture Recognition Using One-Dimensional Deep Convolutional and Residual Bidirectional Gated Recurrent Unit Neural Network. Lobachevskii J. Math. 2025, 46, 464–480.

- Ashry, S.; Das, S.; Rafiei, M.; Baumbach, J.; Baumbach, L. Transfer Learning of Human Activities based on IMU Sensors: A Review. IEEE Sens. J. 2024, 25, 4115–4126.

- Sarowar, M.S.; Farjana, N.E.J.; Khan, M.A.I.; Mutalib, M.A.; Islam, S.; Islam, M. Hand Gesture Recognition Systems: A Review of Methods, Datasets, and Emerging Trends. Int. J. Comput. Appl. 2025, 187, 8887.

- Tan, S.; Yang, J. WiFinger: Leveraging commodity WiFi for fine-grained finger gesture recognition. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Paderborn, Germany, 5–8 July 2016; pp. 201–210.

- Gu, Y.; Yan, H.; Zhang, X.; Wang, Y.; Huang, J.; Ji, Y.; Ren, F. Attention-based gesture recognition using commodity wifi devices. IEEE Sens. J. 2023, 23, 9685–9696.

- Cai, Z.; Li, Z.; Chen, Z.; Zhuo, H.; Zheng, L.; Wu, X.; Liu, Y. Device-Free Wireless Sensing for Gesture Recognition Based on Complementary CSI Amplitude and Phase. Sensors 2024, 24, 3414.

- Xiao, J.; Luo, B.; Xu, L.; Li, B.; Chen, Z. A survey on application in RF signal. Multimed. Tools Appl. 2024, 83, 11885–11908.

- Casmin, E.; Oliveira, R. Survey on Context-Aware Radio Frequency-Based Sensing. Sensors 2025, 25, 602.

- Ramachandram, D.; Taylor, G.W. Deep multimodal learning: A survey on recent advances and trends. IEEE Signal Process. Mag. 2017, 34, 96–108.

- Ezzeldin, M.; Ghoneim, A.; Abdelhamid, L.; Atia, A. Survey on multimodal complex human activity recognition. FCI-H Inform. Bull. 2025, 7, 26–44.

- Zou, H.; Yang, J.; Das, H.P.; Liu, H.; Zhou, Y.; Spanos, C.J. WiFi and Vision Multimodal Learning for Accurate and Robust Device-Free Human Activity Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 426–433.

- Chen, J.; Yang, K.; Zheng, X.; Dong, S.; Liu, L.; Ma, H. WiMix: A lightweight multimodal human activity recognition system based on WiFi and vision. In Proceedings of the 2023 IEEE 20th International Conference on Mobile Ad Hoc and Smart Systems (MASS), Toronto, ON, Canada, 25–27 September 2023; pp. 406–414.

- Lu, X.; Wang, L.; Lin, C.; Fan, X.; Han, B.; Han, X.; Qin, Z. Autodlar: A semi-supervised cross-modal contact-free human activity recognition system. ACM Trans. Sens. Netw. 2024, 20, 1–20.

- Sheng, B.; Sun, C.; Xiao, F.; Gui, L.; Guo, Z. MuAt-Va: Multi-Attention and Video-Auxiliary Network for Device-Free Action Recognition. IEEE Internet Things J. 2023, 10, 10870–10880.

- Wang, W.; Tran, D.; Feiszli, M. What makes training multi-modal classification networks hard? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12695–12705.

- Kumar, A.; Soni, S.; Chauhan, S.; Kaur, S.; Sharma, R.; Kalsi, P.; Chauhan, R.; Birla, A. Navigating the realm of generative models: GANs, diffusion, limitations, and future prospects—A review. In Proceedings of the International Conference on Cognitive Computing and Cyber Physical Systems; Springer: Singapore, 2023; pp. 301–319.

- Kontogiannis, G.; Tzamalis, P.; Nikoletseas, S. Exploring the Impact of Synthetic Data on Human Gesture Recognition Tasks Using GANs. In Proceedings of the 2024 20th International Conference on Distributed Computing in Smart Systems and the Internet of Things (DCOSS-IoT), Abu Dhabi, United Arab Emirates, 29 April–1 May 2024; pp. 384–391.

- Yun, J.; Kim, D.; Kim, D.M.; Song, T.; Woo, J. GAN-based sensor data augmentation: Application for counting moving people and detecting directions using PIR sensors. Eng. Appl. Artif. Intell. 2023, 117, 105508.

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

- Pavllo, D.; Feichtenhofer, C.; Grangier, D.; Auli, M. 3D human pose estimation in video with temporal convolutions and semi-supervised training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7753–7762.

- Yang, J.; Huang, H.; Zhou, Y.; Chen, X.; Xu, Y.; Yuan, S.; Zou, H.; Lu, C.X.; Xie, L. MM-Fi: Multi-modal non-intrusive 4d human dataset for versatile wireless sensing. Adv. Neural Inf. Process. Syst. 2023, 36, 18756–18768.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proceedings of the International Conference on Learning Representations, ICLR 2016, San Juan, PR, USA, 2–4 May 2016; pp. 1–16.

- Zhang, X.; Tang, C.; Yin, K.; Ni, Q. WiFi-based cross-domain gesture recognition via modified prototypical networks. IEEE Internet Things J. 2021, 9, 8584–8596.

| Layer Index | Operation Type | Configuration |
|---|---|---|
| 1 | Conv2D | Kernel = 7 × 7, FM = 32, Stride = 1, BN, ReLU |
| 2 | Conv2D | Kernel = 3 × 3, FM = 64, Stride = 2, BN, ReLU |
| 3 | Conv2D | Kernel = 3 × 3, FM = 128, Stride = 2, BN, ReLU |
| 4 | Residual Block × 9 | Kernel = 3 × 3, FM = 128, Stride = 1, BN, ReLU |
| 5 | Deconv2D | Kernel = 3 × 3, FM = 64, Stride = 2, BN, ReLU |
| 6 | Deconv2D | Kernel = 3 × 3, FM = 32, Stride = 2, BN, ReLU |
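
The generator table above lists kernels, strides, and feature maps (FM), but not padding or input size. The sketch below traces spatial sizes through the six rows using the standard output-size formulas for convolution, floor((n + 2p - k) / s) + 1, and transposed convolution, (n - 1)s - 2p + k + output_padding, with dilation 1. These are the formulas documented for PyTorch's `Conv2d` and `ConvTranspose2d`. The padding values and the test sizes 64 and 30 are assumptions, chosen so that stride-1 layers keep the size and stride-2 layers halve or double it.

```python
from typing import List, Tuple

# (name, kind, kernel, stride, padding, output_padding, out_channels).
# Kernels, strides, and channel counts follow the table; padding and
# output_padding are assumed values that keep sizes "same" or exactly x2.
GENERATOR = [
    ("conv1", "conv", 7, 1, 3, 0, 32),
    ("conv2", "conv", 3, 2, 1, 0, 64),
    ("conv3", "conv", 3, 2, 1, 0, 128),
    ("res_x9", "conv", 3, 1, 1, 0, 128),   # each residual block preserves size
    ("deconv1", "deconv", 3, 2, 1, 1, 64),
    ("deconv2", "deconv", 3, 2, 1, 1, 32),
]


def conv_out(n: int, k: int, s: int, p: int) -> int:
    """Output length of a convolution (dilation 1)."""
    return (n + 2 * p - k) // s + 1


def deconv_out(n: int, k: int, s: int, p: int, op: int) -> int:
    """Output length of a transposed convolution (dilation 1)."""
    return (n - 1) * s - 2 * p + k + op


def trace(n: int) -> List[Tuple[str, int, int]]:
    """(layer, channels, spatial length) after each row of the table."""
    out = []
    for name, kind, k, s, p, op, c in GENERATOR:
        n = conv_out(n, k, s, p) if kind == "conv" else deconv_out(n, k, s, p, op)
        out.append((name, c, n))
    return out


print(trace(64))
print(trace(30)[-1])  # prints ('deconv2', 32, 32)
```

Under these assumptions a side length of 64 goes 64, 32, 16, 16, 32, 64 and returns to its input size. A side length of 30 comes back as 32, so inputs whose dimensions are not multiples of 4 would need padding or cropping. The table ends at 32 feature maps, and any final projection back to the CSI channel count is not listed, so it is not modeled here.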

| Layer Index | Operation Type | Configuration |
|---|---|---|
| 1 | Conv2D | Kernel = 4 × 4, FM = 64, Stride = 2, LeakyReLU |
| 2 | Conv2D | Kernel = 4 × 4, FM = 128, Stride = 2, BN, LeakyReLU |
| 3 | Conv2D | Kernel = 4 × 4, FM = 256, Stride = 2, BN, LeakyReLU |
| 4 | Conv2D | Kernel = 4 × 4, FM = 512, Stride = 1, BN, LeakyReLU |
| 5 | Conv2D | Kernel = 4 × 4, FM = 1, Stride = 1 |
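
The discriminator table's layout has the same hyperparameters as the 70 × 70 PatchGAN discriminator used in pix2pix and CycleGAN. It uses 4 × 4 kernels, feature maps 64-128-256-512-1, strides 2, 2, 2, 1, 1, no BN on the first layer, and LeakyReLU. In such a discriminator each output value scores a local patch rather than the whole input. The table does not name the design, so this is an observation about the listed hyperparameters, not a claim about the authors' intent. The sketch computes the receptive field implied by the kernels and strides with the recursion r_in = (r_out - 1)s + k. It also computes the score-map size under an assumed padding of 1 per layer.

```python
from typing import List, Tuple

# (kernel, stride) for the five rows of the discriminator table.
LAYERS: List[Tuple[int, int]] = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]


def receptive_field(layers: List[Tuple[int, int]]) -> int:
    """Input extent seen by one output unit, walking back from the output."""
    r = 1
    for k, s in reversed(layers):
        r = (r - 1) * s + k
    return r


def score_map_size(n: int, layers: List[Tuple[int, int]], padding: int = 1) -> int:
    """Side length of the output score map; padding = 1 per layer is an assumption."""
    for k, s in layers:
        n = (n + 2 * padding - k) // s + 1
    return n


print(receptive_field(LAYERS))      # prints 70
print(score_map_size(64, LAYERS))   # prints 6
```

Each score therefore judges a 70 × 70 region of the input. A 64 × 64 input would yield a 6 × 6 grid of real/fake scores under the padding assumption. A 256 × 256 input would yield the familiar 30 × 30 grid.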

| Layer Index | Operation Type | Configuration |
|---|---|---|
| 1 | Conv2D | Kernel = 2 × 2, FM = 64, Stride = 1, ReLU |
| 2 | MaxPooling2D | PoolSize = 3 × 3, BN |
| 3 | Dropout | p = 0.5 |
| 4 | Conv2D | Kernel = 2 × 2, FM = 64, Stride = 1, ReLU |
| 5 | MaxPooling2D | PoolSize = 3 × 3, BN |
| 6 | Dropout | p = 0.5 |
| 7 | Conv2D | Kernel = 1 × 1, FM = 32, Stride = 1, BN, ReLU |
| 8 | Dense | FM = 1280, BN, ReLU |
| 9 | Dense | FM = 128, BN, ReLU |
| 10 | Dense | FM = 6, Softmax |
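
The classifier table gives no input size or padding. The sketch below assumes Keras-style defaults: unpadded ("valid") convolutions, and pooling whose stride equals its pool size. It also assumes a hypothetical single-channel 90 × 200 CSI input. Under these assumptions it traces the feature-map size into the first Dense layer and counts weights plus biases per layer, excluding BN. The 1280-unit Dense layer then holds about 7.7 million of roughly 7.9 million parameters, so the input resolution largely determines model size.

```python
from typing import Dict, Tuple


def conv_valid(h: int, w: int, k: int) -> Tuple[int, int]:
    """Stride-1 convolution without padding ('valid')."""
    return h - k + 1, w - k + 1


def max_pool(h: int, w: int, p: int) -> Tuple[int, int]:
    """Non-overlapping max pooling (stride equal to pool size, no padding)."""
    return h // p, w // p


def trace_classifier(h: int, w: int, c_in: int, n_classes: int = 6) -> Dict[str, int]:
    """Flattened feature size and weight+bias counts per layer (BN excluded)."""
    counts: Dict[str, int] = {}
    h, w = conv_valid(h, w, 2)                      # layer 1: 2x2, 64 maps
    counts["conv1"] = (2 * 2 * c_in + 1) * 64
    h, w = max_pool(h, w, 3)                        # layer 2; dropout (3) has no weights
    h, w = conv_valid(h, w, 2)                      # layer 4: 2x2, 64 maps
    counts["conv2"] = (2 * 2 * 64 + 1) * 64
    h, w = max_pool(h, w, 3)                        # layer 5; dropout (6) has no weights
    counts["conv3"] = (1 * 1 * 64 + 1) * 32         # layer 7: 1x1 keeps h and w
    counts["flatten"] = h * w * 32
    counts["dense1280"] = (counts["flatten"] + 1) * 1280   # layer 8
    counts["dense128"] = (1280 + 1) * 128                   # layer 9
    counts["dense_out"] = (128 + 1) * n_classes             # layer 10
    return counts


r = trace_classifier(90, 200, c_in=1)
print(r["flatten"], r["dense1280"])   # prints 6048 7742720
```

The six output units match the six gestures of SelfSet. For the nine gestures of MM-Fi the last layer would presumably need `n_classes=9`.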

| Dataset | Gestures | Volunteers | Environments | Environment IDs |
|---|---|---|---|---|
| MM-Fi [44] | 9 | 10 | 4 | E1, E2, E3, E4 |
| SelfSet | 6 | 10 | 5 | S1, S2, S3, S4, S5 |

| Participant ID | Gender | Age | Height (m) | Weight (kg) |
|---|---|---|---|---|
| U1 | Male | 24 | 1.69 | 66 |
| U2 | Female | 29 | 1.63 | 48 |
| U3 | Female | 25 | 1.61 | 51 |
| U4 | Male | 26 | 1.77 | 71 |
| U5 | Male | 23 | 1.70 | 62 |
| U6 | Male | 24 | 1.76 | 69 |
| U7 | Male | 25 | 1.80 | 73 |
| U8 | Female | 24 | 1.65 | 46 |
| U9 | Male | 22 | 1.68 | 59 |
| U10 | Female | 23 | 1.73 | 61 |
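
The participant table lists ten users, U1 to U10. Cross-subject recognition, as framed in the Introduction, means testing on users whose data were not used for training. The evaluation protocol itself is not described in this text. The sketch below shows one common choice, leave-one-subject-out splitting, purely as an illustration. The function name and the per-sample user labels are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple


def loso_splits(user_ids: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_user, train_indices, test_indices) for each user.

    `user_ids[i]` is the participant who performed sample i. Users keep their
    first-seen order, so "U10" is not sorted before "U2".
    """
    for held_out in dict.fromkeys(user_ids):
        train = [i for i, u in enumerate(user_ids) if u != held_out]
        test = [i for i, u in enumerate(user_ids) if u == held_out]
        yield held_out, train, test


samples = ["U1", "U1", "U2", "U10", "U2"]
for user, train, test in loso_splits(samples):
    print(user, train, test)
```

With the ten participants above, this yields ten folds, each testing on one user the model has never seen.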

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhai, S.; Dai, Z.; Jin, Z.; Qin, P.; Zeng, J. Integrating Cross-Modal Semantic Learning with Generative Models for Gesture Recognition. Sensors 2025, 25, 5783. https://doi.org/10.3390/s25185783

Zhai S, Dai Z, Jin Z, Qin P, Zeng J. Integrating Cross-Modal Semantic Learning with Generative Models for Gesture Recognition. Sensors. 2025; 25(18):5783. https://doi.org/10.3390/s25185783

Chicago/Turabian Style: Zhai, Shuangjiao, Zixin Dai, Zanxia Jin, Pinle Qin, and Jianchao Zeng. 2025. "Integrating Cross-Modal Semantic Learning with Generative Models for Gesture Recognition" Sensors 25, no. 18: 5783. https://doi.org/10.3390/s25185783

APA Style: Zhai, S., Dai, Z., Jin, Z., Qin, P., & Zeng, J. (2025). Integrating Cross-Modal Semantic Learning with Generative Models for Gesture Recognition. Sensors, 25(18), 5783. https://doi.org/10.3390/s25185783