Abstract

Human skeleton estimation using Frequency-Modulated Continuous Wave (FMCW) radar is a promising approach for privacy-preserving motion analysis. However, the existing methods struggle with sparse radar point cloud data, leading to inaccuracies in joint localization. To address this challenge, we propose a novel deep learning framework integrating convolutional neural networks (CNNs), multi-head transformers, and Bi-LSTM networks to enhance spatiotemporal feature representations. Our approach introduces a frame concatenation strategy that improves data quality before processing through the neural network pipeline. Experimental evaluations on the MARS dataset demonstrate that our model outperforms conventional methods by significantly reducing estimation errors, achieving a mean absolute error (MAE) of 1.77 cm and a root mean squared error (RMSE) of 2.92 cm while maintaining computational efficiency.

1. Introduction

Millimeter wave (mmWave)-based sensing has gained wide attention in recent decades due to its ability to detect humans with high sensitivity and precision [1]. Moreover, using mmWave radar has many advantages over camera systems because it can operate without requiring lighting and without compromising privacy, thus allowing for more continuous and safe monitoring [2].

Recent developments in mmWave technology, including hardware such as Frequency-Modulated Continuous Wave (FMCW) radars, offer novel opportunities in the field of human sensing. Tasks such as human action recognition and body motion require new solutions in signal processing technology and deep learning models to improve sensing capabilities.

The development of mmWave technology to estimate human joint positions in patient rehabilitation systems [3] has advantages over video-based methods because it uses point cloud data, not only preserving the privacy of the monitored person but also offering depth information that cannot be extracted from a typical video. This technology also enables detecting and locating human joints and monitoring the human body in a multitude of scenarios, such as during sleep [4,5].

Liu et al. [6] proposed a method that supports mmWave technology in capturing the human joints. Their method relies on a neural network model that employs an entire attention mechanism for skeleton-based action recognition. Moreover, convolutional neural network (CNN)-based approaches can improve the accuracy of human skeleton estimation [1,7]. In addition, the point-convolution model approach can improve the extraction of local information and point cloud density [8] for accurate human skeleton pose estimation.

Furthermore, mmWave radar-based human skeleton estimation research has shown significant improvements, leading to essential studies such as [9], which applied a sequence-to-sequence (Seq2Seq) model commonly applied in Natural Language Processing (NLP) to process sequences of point cloud data from radar and generate 3D position estimates of human skeleton key points.

In addition to CNNs, researchers have employed other deep learning techniques for human skeleton estimation. In particular, Long Short-Term Memory (LSTM) [10] has shown to be very suitable in capturing long-term dependencies in sequential data, making it ideal for tasks such as sequence prediction and classification.

Another deep learning technology that improves the performance of human skeleton estimation is transformer, which has a multi-head attention mechanism capable of extracting high-level features [11]. This technology, when combined with LSTM, can better capture temporal dependencies.

However, the main challenge arises when the mmWave point cloud data used is very sparse and carries little information compared to other data formats, such as data collected using cameras or Light Detection And Ranging (LiDAR) devices. To overcome this problem, a multi-frame representation fusion model was employed in [12]. However, the mean absolute error (MAE) value of the obtained human skeleton estimation is still high.

To solve this problem, we propose a new framework to estimate the positions of 19 human joints that combines adjacent frames into a new one and design a neural network to capture the spatiotemporal data in this new frame. Concatenating adjacent frames helps to improve the quality of human skeleton-related data points, where merging these frames is an initial phase before entering the main processing stage, which involves CNN, transformer, and Bi-LSTM layers put together in a deep learning neural network for human skeleton estimation. This paper is an extended version of our previous work [13], where we first introduced the concept of frame concatenation and an attention-based CNN–LSTM model for joint estimation. In this version, we provide a more detailed explanation of the methodology, conduct more extensive experiments, and evaluate the model’s performance on unseen subjects and activities.

The contributions of this paper can be summarized as follows:

- We introduce a novel mmWave-based deep learning model that accurately detects human joints, allowing to recognize and analyze skeletal movements. Our model builds upon the existing methods by offering enhanced spatiotemporal feature extraction, leading to clear improvements in detection accuracy and computational efficiency in human skeleton estimation.

- We propose a new preprocessing method that combines adjacent frames into a single one, which is then processed by our main deep learning model that estimates human joint positions.

- We validate the proposed approach on a public dataset to demonstrate its effectiveness in estimating human joint positions. The model’s performance is compared to the existing approaches [1,3] to highlight its advantages and limitations. The outcomes emphasize the model’s strengths in achieving higher accuracy and efficiency for radar-based human skeleton estimation.

The remainder of this paper is as follows: Section 2 provides an overview of the existing works on human skeleton estimation using mmWave radar data. In Section 3, we introduce our proposed framework. Section 4 introduces the experimental specifications in detail and presents and discusses the results. Finally, in Section 5, we conclude our work, providing a clear roadmap for future work.

2. Related Work

In recent years, mmWave radar has become increasingly popular thanks to its ability to operate in dark environmental conditions. It is known for its wavelength, which is in the millimeter range, and mmWave radars operate by transmitting high-frequency electromagnetic waves (e.g., 77–80.2 GHz [3,14]) and analyzing the reflected signals to detect objects and measure their distance, velocity, and angle. It can also capture fine-grained movements such as human joint motion, respiratory movements, and small limb displacements, which are essential for applications like pose estimation and healthcare monitoring. This is achieved through techniques like FMCW modulation, which allows precise range and velocity measurements by analyzing the frequency shift and phase differences in the reflected signals. The ability of this radar to combine several transmit and receive antennas makes the mmWave radar more precise in detection.

One of the research topics involving mmWave radar is pose estimation, where radar point clouds provide a spatial representation of human motion relative to the radar’s field of view. Using radar point cloud data to estimate the position of human joints in 3D in [3] is very profitable because it is cheaper, overcomes privacy issues, and does not depend on lighting, which affects other devices such as cameras. Moreover, the multi-frame representation process can improve radar point cloud data and accelerate model adaptation [12]. Nevertheless, combining features output from different deep learning models was proven to improve the pose estimation accuracy [15]. Some valuable techniques like sorting spatial axes and merging points from frames proved to be useful as well [16].

Sengupta et al. [17] proposed a CNN-based pose estimation approach using mmWave radar data that creates two 3D feature vectors from each radar data point, the first using depth, azimuth, and intensity and the second using depth, height, and intensity. Moreover, the study treats each vector as an image pixel, stacking all the pixels from all the radar points in a frame into an array of size (16 × 16) to create two arrays of images with size (16 × 16 × 3), and processes them using a CNN. Another proposal in [9] introduced a seq2seq model for pose estimation by changing the radar points into voxels. The seq2seq approach is used to process the data through an encoder block and predict the voxelized pose key points at the output iteratively. At the final stage, the pose key locations will be obtained. Another study used voxelization to detect sitting direction using sequential modeling [18].

Another related topic in which mmWave radars were explored is human activity recognition (HAR). The main challenge in developing HAR systems using mmWave radar lies in the limitations and difficulties of collecting extensive, clean, and labeled experimental data. Research using a simulation-based approach in [19] developed an end-to-end simulation framework capable of simulating a realistic mmWave radar based on human motion. Other research using mmWave radar for HAR proposed a pre-learning model trained using 3D coordinates from radar data paired with Kinect data as the ground truth [20]. This model scheme generates more reliable estimates of human joint coordinates in 3D from sparse radar data. Once reliable features are extracted, a Graph Neural Network (GNN) model is used to identify human activities based on predicted joint coordinates.

Another work that uses the Graph Convolutional Network (GCN) approach in frame-based action recognition does not rely on a predefined graph structure but dynamically computes the graph structure based on input movement patterns [6]. Then, a spatial attention module aims to capture spatial features from other nodes at the same timestep, while a temporal attention module aggregates temporal features in the local temporal environment. In [21], Ahmad et al. used a combination of CNN- and Bidirectional Gated Recurrent Unit (Bi-GRU)-generated features with feature selection for human activity recognition techniques using deep-temporal learning. Meanwhile, a deep learning-based method [22] involved a new approach in human pose estimation using mmWave waves and the Inverse Synthetic Aperture Radar (ISAR) algorithm, where the ISAR algorithm produced high-resolution radar images of moving human targets. The main contribution of the research is the development of a robust ISAR-Pose pipeline for radar-based human pose estimation, which can function in various environmental conditions and can be used for both individual and multi-person pose estimation.

In [23], Stevens et al. discussed the development of a joint estimation model that leverages knowledge of the human body kinematics to enhance sensor accuracy. They used an experimental approach with two 3D CNNs trained to estimate the coordinates of 19 body joints based on point cloud data generated by mmWave sensors. Other research works such as [24,25], which used mmWave data, relied on advanced kinematics to overcome noise artifacts.

Neural networks like CNNs have been widely used to process human activity recognition data [26]. Moreover, an approach combining a CNN and LSTM for detecting human joints was implemented in [27], which can achieve competitive estimation accuracy with low MAE values. Another model [10] applied neural network structures such as CNN, LSTM, and ResNet to improve the performance of deep learning models in non-contact human motion detection applications. In this paper, we also use a CNN to extract features from the concatenation of adjacent frames before entering the next processing stage, namely transformer.

Other works applied a transformer to HAR, where the model is designed to work with mmWave point cloud sequences to address the unique characteristics of point cloud data, such as irregularity and sensitivity to environmental noise [28,29]. Another work [30] used a hybrid learning architecture that combines a temporal transformer and LSTM to track joint positions based on global and local information of joint trajectories.

The above works motivated us to use the concatenation of adjacent frames followed by the use of three neural network blocks, namely CNN, transformer, and Bi-LSTM, to improve the tracking and detection of 19 human joint positions.

The role of the transformer in our research is to take the output of the CNN sub-network and process it in the multi-head attention layer to capture spatial and temporal relationships. The output of the transformer, in turn, will be processed by a Bi-LSTM network producing the predictions of the positions of the 19 human joints.

3. Proposed Methodology

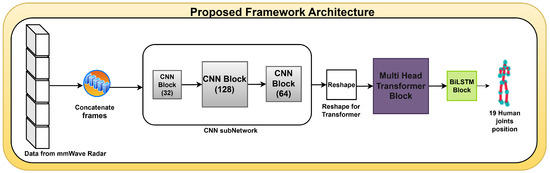

This section introduces the proposed model and the principle of concatenating adjacent frames, which we blend into the main model. The model incorporates a CNN sub-network, a multi-head transformer block, and a Bi-LSTM block, offering a new perspective regarding human joint detection.

The proposed model architecture for detecting human joints involves concatenation of frames, where consecutive frames are combined into one new more informative frame. Figure 1 illustrates the proposed model architecture for detecting human joints, showcasing the different steps of our approach.

Figure 1.

The framework of the proposed method for human joint detection.

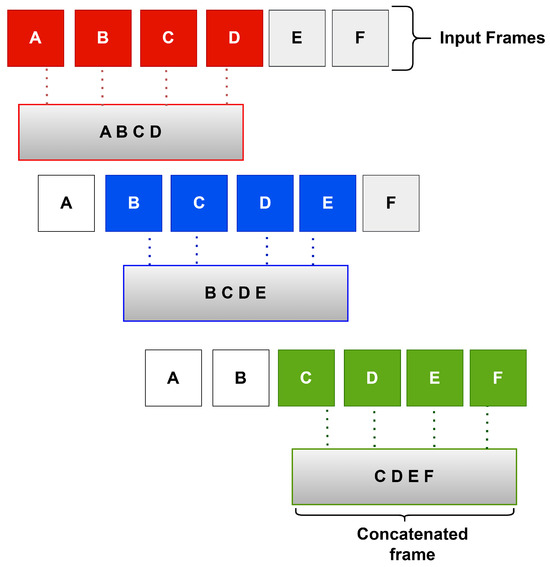

3.1. Concatenate Adjacent Frames

The concatenation step can be described as follows. Suppose that we have 6 consecutive frames: A, B, C, D, E, and F. Each time, n adjacent frames are combined into a single frame. For example, if n is equal to 4, frames A, B, C, and D are combined into one new frame, namely the frame ABCD. In a similar way, the frames B, C, D, and E are combined into the new frame BCDE. Finally, the frames C, D, E, and F are combined into one new frame, namely CDEF. This stage ends up producing fewer frames but with larger dimensions so that the resulting frames can be used by our neural network model. Figure 2 illustrates the process of frame concatenation.

Figure 2.

An example of concatenation of adjacent frames.

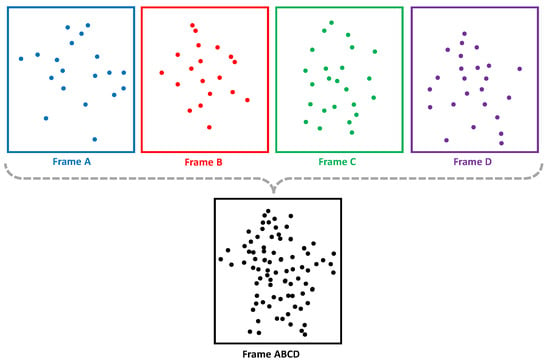

To construct an enriched temporal representation, adjacent frames are concatenated sequentially along the temporal axis rather than the spatial axis. This ensures that the spatial structure of each frame remains intact while capturing the dynamic evolution of movement. Unlike a 3D CNN, which processes spatial correlations across consecutive frames using volumetric convolutions, our model employs a transformer block to model long-term dependencies and a Bi-LSTM layer to extract temporal correlations. Figure 3 shows an illustration of how multiple consecutive point cloud frames are concatenated along the temporal axis. Each frame (Frame A, Frame B, Frame C, and Frame D) retains its original spatial structure. When combined, the resulting point cloud is much denser. Being within a short time window from each other allows us to perform the concatenation. A long time window can lead to detail loss of individual frames, whereas a short time window results in a non-dense point cloud. Therefore, the choice regarding the appropriate number of frames to concatenate is important, as we will demonstrate later.

Figure 3.

An example concatenation in point cloud domain for temporal processing.

Compared to the multi-frame representation fusion approach introduced in [12], where features are extracted independently from each frame and fused at the feature level, our method applies early fusion by concatenating raw adjacent frames before any feature extraction. This enables the network to learn joint spatiotemporal representations directly from the input and reduces redundant computation, resulting in a more efficient and coherent modeling process.

Let be a sample representing a sequence of frames from the dataset with dimensions

where T is the number of frames in the sequence, H and W represent the spatial dimensions of each frame, and C is the number of channels in the input. For each frame index j, the adjacent frame concatenation is performed by concatenating four consecutive frames:

where , yielding a transformed representation:

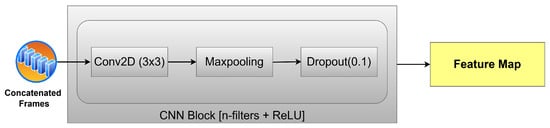

3.2. Integration to the Main Model

The frames produced by the concatenation phase are then processed by a series of convolutional neural network layers, as shown in Figure 1, consisting of three CNN layers using 32, 128, and 64 filters, respectively, as shown in Figure 4; each filter has a kernel size of 3 × 3. The convolutional layers have an ReLU activation each, followed by MaxPooling that takes the maximum value of 2 × 2. Next, a dropout layer is used with a rate of 0.1. The function of this series of convolution layers is to extract essential features from the data in each frame and generate feature maps, which are then processed further by the transformer layer.

Figure 4.

The details of CNN layers.

Here is the mathematical equation to extract features from the concatenated using the Time-Distributed CNN architecture, where each frame in the sequence is processed using a separate 2D convolution. This operation can be formulated as follows:

where * is the 2D convolution operation, and is the ReLU activation function. After passing through several convolution layers with MaxPooling and dropout, the representation of the extracted features is expressed as the following equation:

where are the feature sizes after the downsampling process by MaxPooling; is the number of feature channels after the convolution layer. Equations (4) and (5) directly represent the feature map after going through the CNN block shown in Figure 4.

Although the convolutional layers operate on spatial information, the input tensor of concatenated adjacent frames along the temporal axis implicitly contains temporal transitions between frames. This structure enables the CNN to capture short-term temporal correlations as spatial patterns. For example, gradual changes in joint positions across adjacent frames appear as structured differences that CNN can learn through its filters. Therefore, while CNN does not explicitly model temporal dependencies, its latent feature space reflects motion-induced correlations across the concatenated frames. Explicit temporal modeling is handled subsequently by the transformer and Bi-LSTM layers.

A reshape layer is then employed to change the shape of the tensor without changing the data itself. This layer is used to prepare the output of the convolution layer to match the input required by the transformer layer. Before reshaping, the final convolutional layer output has the shape [bach size, number of frames, height, width, number of channels]. For instance, in our experiments, the batch size is 128, and the dimensions are therefore [128, 18, 28, 14, 5]. Here, the number of frames is 18, the spatial dimensions are 28 and 14, and 5 is the number of channels. The reshape function transforms this input into [128, 18, 28 × 14 × 5], keeping the dimensions “batch size” and “number of frames” and fusing the remaining dimensions into one. The final form is [128, 18, 1960], where each time frame is represented as a feature vector of size 1960. It is important to transform spatial data into a series of feature vectors that the transformer can handle.

Next, this feature is reshaped so that it can be processed by the transformer layer, as shown in Equation (6) below:

This approach allows the model to capture spatial relationships within each frame while preserving temporal information through concatenation of adjacent frames and CNN.

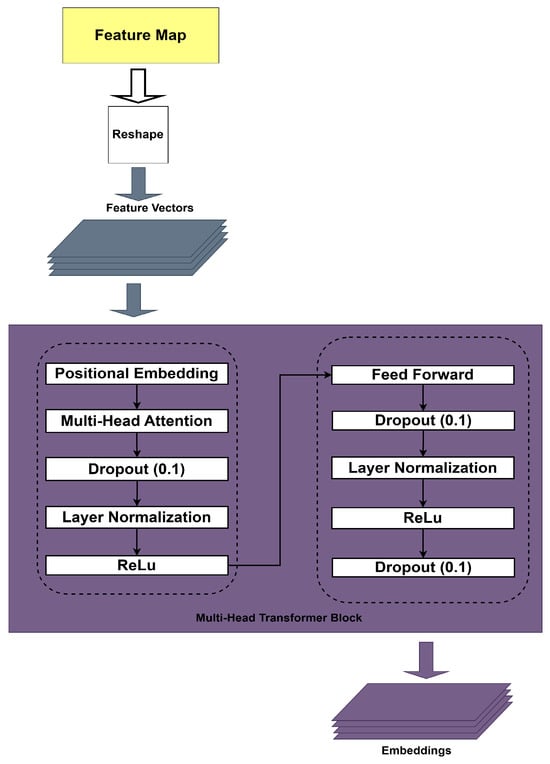

The reshaped data is fed to the multi-head transformer block, as shown in Figure 5. This data goes through the positional embedding stage, a technique used in the transformer model to provide information about the sequence or position of each element in the input sequence. This is important because transformers have no built-in understanding of data order.

Figure 5.

The details of the multi-head transformer block.

Multi-head attention lets the model focus on different parts of the input sequence simultaneously. This layer has eight attention units. Figure 5 shows that this block includes three dropout layers with a drop rate equal to 0.1 to help prevent overfitting, and two normalization layers to normalize the input to stabilize training and speed up convergence. In the multi-head transformer block, an ReLU layer is used to add non-linearity, which is essential for capturing complex relationships in the data. In addition, a Feed-Forward Network (FFN) processes each time frame independently. Regarding transformer processing stage equation, first of all, positional encoding is added to the extracted features as follows:

After that, the multi-head attention (MHA) layer is applied to capture the temporal relationship between frames as in Equation (8):

This process is formulated in the following Equation (9) with a multi-head attention mechanism.

Then, Layer Normalization and Feed-Forward Network (FFN) are applied:

The results of this stage will be used for further processing in Bi-LSTM to capture temporal information more effectively.

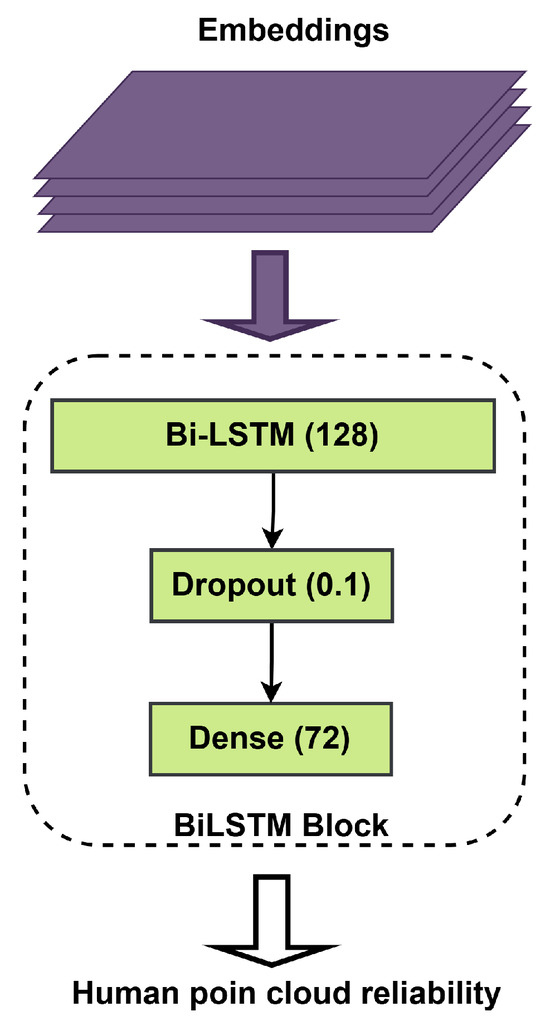

In the next step, Bi-LSTM uses the multi-head transformer block output. Figure 6 explains the Bi-LSTM block, which aims to read a two-way input data sequence. This is very important for predicting the position of critical points with high accuracy. In our model, the number of units in the LSTM was set to 128, and two dropout layers whose drop rate is set to 0.1 were employed to prevent overfitting. In addition, a dense layer with an output vector of dimension 72 is used to predict 19 human joint points. The neural network architecture used in this work is shown in Table 1. The formulation of this operation is provided as follows:

where

Figure 6.

The details of the Bi-LSTM layers.

Table 1.

The architecture of neural network for experiment.

The Bi-LSTM concatenates the hidden states from both forward and backward directions, yielding the final hidden representation:

where d represents the dimensionality of the LSTM hidden states in each direction. This representation is then passed to the fully connected layers for keypoint regression. The output from the Bi-LSTM layer is then passed through fully connected layers to generate the final prediction. The first fully connected layer applies a non-linear transformation:

where and are the weight matrix and bias, respectively. A dropout layer is applied to prevent overfitting:

Finally, the output layer predicts the keypoints using a linear transformation:

where and are the weight matrix and bias of the final layer, respectively. The output represents the estimated keypoint positions.

4. Experiments and Results

This section presents the experiment methods, the results, and a discussion of the proposed model.

4.1. Experimental Setup

In this research, we followed the division of training, validation, and testing sets as in the original paper [3], using their public dataset MARS [3], which is designed to assist in rehabilitating patients with motor disorders using the mmWave radar system. This dataset consists of 10 types of rehabilitation movements performed by four human subjects, with a total of 40,083 frames. This dataset includes the reconstruction of 19 human body joints in 3D space using a point cloud generated by the mmWave radar. The activities performed include left arm extension, right arm extension, both arm extension, left front lunge, right front lunge, squat, left side lunge, right side lunge, left leg extension, and right leg extension. Each subject performed each movement for 2 min, resulting in approximately 10,000 data frames per subject.

This data was then used for model training, validation, and testing. Data collection was conducted indoors to ensure lighting and radar signal reflection consistency. Specific conditions considered during the experiment included no strict limitations as mmWave radars are independent of lighting conditions. The subject stood at 2 m from the radar. The radar was mounted on a 1-meter-high table with a Microsoft Kinect V2 sensor (Microsoft Corp., Redmond, WA, USA). Reference data was obtained from the Kinect V2, which recorded the subject’s movements at a rate of 30 Hz.

Variability in mmWave radar data affects detection accuracy due to several factors. Noise in radar data arises from signal interference, environmental materials, and impacting the generated point cloud. Occlusion occurs when body parts are obscured due to natural movements, such as arms covering the chest during squats or variations in hand positions affecting radar reflections. Additionally, sparsity in point cloud data makes mmWave radar less dense than camera-based or LiDAR methods, necessitating the concatenation of adjacent frames to enhance spatial–temporal information before processing with CNN, transformer, and Bi-LSTM. Furthermore, inter-individual movement variability, influenced by differences in height, posture, and execution of rehabilitation movements, affects data distribution. To improve generalization, the model is trained on data from multiple subjects.

The experiments were conducted using the TI IWR1443 76-81GHz model radar (Texas Instruments, Dallas, TX, USA) is available at (https://www.ti.com/product/IWR1443 (accessed on 8 June 2024)), which produces high-quality point clouds. The radar technical details are provided in Table 2. As shown in this table, the radar configuration includes a frequency range of 76–81 GHz, 3 transmitting antennas, and 4 receiving antennas (resulting in 12 virtual channels), a chirp bandwidth of 3.2 GHz, and a chirp duration of 32 μs. This setup enables a range resolution of 4.69 cm, a velocity resolution of 0.35 m/s, and an angular resolution of approximately 9.55°, which collectively contribute to the generation of high-quality point clouds used for human joint estimation. For the human skeleton estimation task, we initially employed an optimal number of 19 frames [1]. The radar technical details in Table 2 include the hardware and software configuration used in this experiment.

Table 2.

Configuration in the experiment.

All experiments in this research were conducted using carefully selected parameters, as detailed in Table 3. These parameters can be used for reproducibility of our results.

Table 3.

Training parameters.

As explained in Section 3.1, this research implements an approach that combines several adjacent frames into a single more informative one. This method enhances the quality of the data before it is processed in the main blocks of the detection neural network.

After going through several experiment stages, the results were evaluated using the metrics mean absolute error (MAE) and root mean squared error (RMSE) measured for the x, y, and z coordinates of 19 joints. Moreover, the average MAE and RMSE values of the x, y, and z axes are calculated to provide overall performance information. Furthermore, the computational complexity associated with the model can be derived from the number of parameters trained during model training.

4.2. Results

As detailed in Section 4.1, the experiment began with 19 frames, as suggested in [1]. The initial shape of the point cloud before entering the frame merging stage is [128, 19, 14, 14, 5], with 19 representing the initial frames, 14 × 14 the point cloud data, and 5 the channel. The dataset then undergoes the adjacent frame concatenation process, a key step before being trained in the three main blocks: CNN, transformer, and Bi-LSTM. Table 4 displays the experimental results of four different configurations. These configurations represent the same network for different numbers of concatenated frames, referred to as , , , , and .

Table 4.

A comparison of the average MAE and RMSE values for 19 human joint positions across different values of n.

As explained, we start with 19 frames for each instance, which undergo the concatenation process in which multiple consecutive frames are combined into a single one. To determine the best choice, five different experiments were carried out as shown in Table 4. Here, , represents a configuration without any frames concatenated. represents combining two adjacent frames into one new frame with the shape [128, 18, 28, 14, 5], where 18 is the number of frames, 28 × 14 is feature map, and 5 is the depth. represents combining three adjacent frames, which results in an output with the form [128, 17, 42, 14, 5]. Similarly, represents the combination of four adjacent frames into one, and represents the combination of five adjacent frames into one. Their respective outputs are [128, 16, 56, 14, 5] and [128, 15, 70, 14, 5].

The results of observations on the different configurations, as shown in Table 5, show that and have the worst performance because they are less capable of capturing complex features when compared to models with more parameters. and are slightly better even though there is still the potential for overfitting. This overfitting arises from the increase in the number of parameters and the limitations of the data. The increase in parameters occurs because, as more frames are combined, the model has to learn more parameters. This makes it easier for the model to memorize the training data rather than learning generalized patterns. Furthermore, a data limitation arises because the dataset size is not large enough to balance the increase in parameters, causing the model to adapt to specific patterns in the training data. As a result, the model performs well on the training data but fails on new data. has the best results among all the models because it provides a balance in capturing spatial and temporal patterns, making the model the best choice for estimating human joint position in scenarios that require high precision. As a result, is treated as the optimal configuration in this research.

Table 5.

Comparison of the total number of parameters for 19 human joint positions under varying values of n.

In addition to empirical validation, the choice of is theoretically supported by the temporal resolution of the radar system. The MARS radar operates at 30 Hz, meaning each frame captures approximately 33 milliseconds of motion. By concatenating four consecutive frames, the network observes around 133 ms of temporal context, sufficient to capture micro-motion such as limb lifts or subtle joint displacements. Furthermore, since the dataset uses overlapping windows to generate training samples, increasing n beyond 4 introduces substantial redundancy. For example, with , each sample shares four out of five frames with the previous one (80% overlap), reducing sample diversity and leading to higher inter-sample correlation. This makes it more difficult for the model to generalize and learn robust spatiotemporal features. Therefore, is not only optimal empirically but also theoretically justified based on radar sampling characteristics and the dataset construction strategy.

Table 6 shows the MAE and RMSE values from the experimental results of to detect the positions of 19 human joints. The MAE and RMSE values of all 19 joint points are in the range of 1~4 cm, with the exception being the left and right wrists. The average MAE values are 1.94, 1.62, and 1.76 cm for the x, y, and z axes, respectively. Similarly, the average RMSE values for the x, y, and z axes are 3.28, 2.39, and 3.07 cm, respectively. This outperforms, by a large margin, the the method proposed in [3] and noticeably outperforms the method proposed in [1]. The average joint values are smaller than 4 cm. Moreover, our experimental results can reduce the MAE and RMSE values in the right and left wrist joints, where previous research in [3] was unable to detect them accurately.

Table 6.

The experimental results for the average MAE and RMSE for .

As previously indicated, the architecture of our model (for as well as other configurations) is given in Table 1. Our neural network was designed to capture patterns both locally and globally. Local pattern identification refers to the process of identifying spatial and short-term temporal relationships in the data. This process is conducted via the CNN and transformer blocks. The global pattern identification refers to the process of identifying long-term temporal relationships in the data (between non-adjacent frames) via the LSTM sub-network.

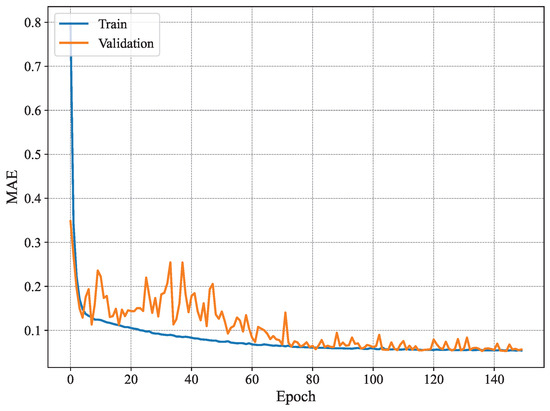

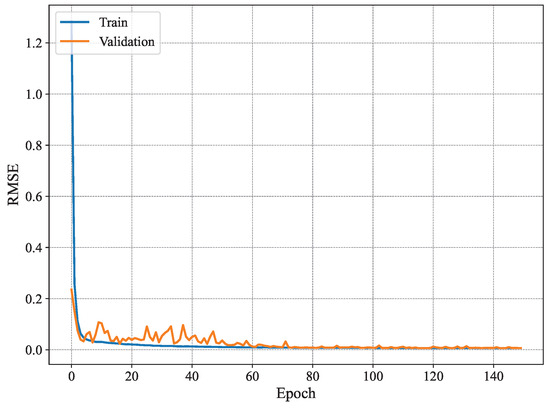

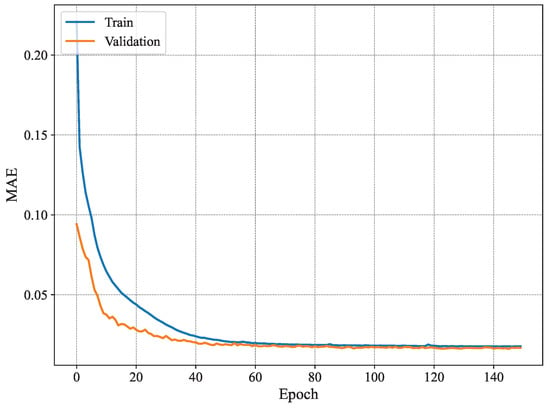

Figure 7 shows the MAE per epoch for the method proposed in [3]. The results show that the training MAE starts at high values, as expected, and decreases gradually with every epoch. However, this behavior is not observed for the validation set, where the MAE does not seem to drop significantly until later epochs (i.e., after 80 epochs). Nonetheless, it fluctuates remarkably even for later epochs. Similar behavior is observed for the RMSE shown in Figure 8, where the training RMSE decreases consistently, whereas the validation one fluctuates until around epoch 70. This behavior may be attributed to the high number of trainable parameters and the architecture of the model proposed in [3], which likely contributes to overfitting, whereby the model learns specific patterns in the training set that do not generalize to unseen data, such as the validation data.

Figure 7.

Average MAE of 19 joint estimates on training and validation sets using MARS.

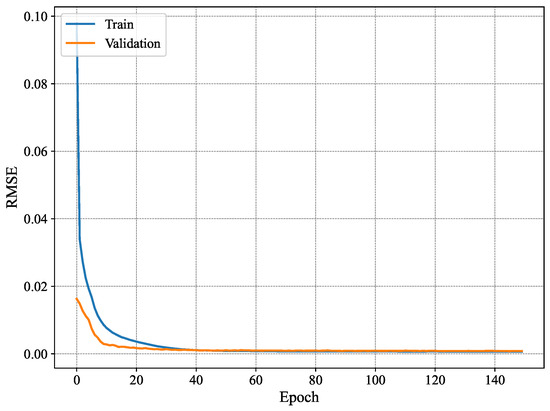

Figure 8.

Average RMSE of 19 joint estimates on training and validation sets using MARS.

Figure 9 shows the MAE per epoch for our proposed method using . As can be seen, our model is more stable than the conventional method [3] and exhibits typical good learning behavior where both the training and validation estimation MAE decrease consistently to converge towards similar values. Starting from epoch 60 or so, the model seems to have converged, and the MAE remains within the same value range until the end of the training. Compared to the results presented in Figure 7, the training behavior of our system model is much better as it does not exhibit any signs of overfitting, namely the fluctuations in validation estimation MAE and the difference in performance between the training and validation curves. Furthermore, Figure 10 shows the RMSE of our proposed model with . The results show similar patterns to those of the MAE, where the model converges slowly until it reaches good performance around epoch 60. The increase in stability is a key difference between the model proposed in [3] and our model for of our proposed model. There has been a reduction in overfitting, and model generalization has increased. Our model offers a good option for handling temporal data and reducing fluctuations often occurring in validation data. Our model is more effective for time-dependent data, such as radar data, for human joint detection.

Figure 9.

Identification MAE for .

Figure 10.

Identification RMSE for .

Next, the experimental results of are compared with the conventional methods [1,3,15,17]. Table 7 and Table 8 show the estimation accuracy of human joint locations using these five models (i.e., ours and the conventional ones). The model in our experiment shows significant improvement in estimation accuracy when compared with [3]. Our proposed model reduces the MAE by 4.1 cm, which indicates a substantial increase in model performance. This can be attributed to the concatenation of adjacent frames, which allowed producing new frames that are more informative. Furthermore, the layers of the transformer block preserve spatiotemporal information, transforming into comprehensive features for the Bi-LSTM to predict the positions of the different joints with high accuracy. This research produces a robust model for the task of human skeleton estimation. Our research model effectively reduces estimation errors and proves to be better in handling frames.

Table 7.

Comparison of MAE and RMSE values from the estimation of 19 connections based on the experimental results of several different models.

Table 8.

Parameter comparison across models for estimating 19 human joints.

We performed statistical tests to evaluate the significance of the MAE and RMSE values in the proposed and conventional models. We employed paired t-tests and p-values and examined both. We compared the two values for the proposed model with those of the conventional method. Table 9 shows the results of the t-tests and the p-values for MAE.

Table 9.

MAE-based statistical comparison using t-test and p-value.

The proposed model demonstrates significant improvement compared to MARS [3] and mmPose [17] (p < 0.05), indicating that this model is statistically more accurate in estimating joint positions than those methods. There is no significant difference compared to CNN+Bi-LSTM [1] (p > 0.05), which indicates that the performance of this model is comparable to CNN+Bi-LSTM. The improvement compared to mRI [15] is almost significant (p ), which means the proposed model is likely better. However, the statistical test is not enough to confirm this difference with certainty. Table 10 shows the t-test results and p-values for RMSE on the conventional model for comparison with the proposed model.

Table 10.

The t-test and p-value analysis for RMSE comparison between models.

The most significant difference was seen in mmPose [17] (p = 0.019), indicating that the proposed model is statistically superior in reducing joint position estimation errors compared to mmPose [17]. This model is also better than MARS [3] (p = 0.038), indicating that this difference is statistically significant (p < 0.05). The improvement compared to CNN+Bi-LSTM [1] (p = 0.194) and mRI [15] (p = 0.075) is not significant, which means that, although the RMSE of this model is lower, the difference is not statistically strong enough to conclude an absolute advantage over these methods.

We also include confidence intervals (CIs) for the reported metrics to indicate the reliability of the results. Based on the experimental results of 95% CIs for MAE and RMSE, we can analyze how stable and reliable the proposed model is compared to the baseline method. Table 11 shows the CI results of the conventional and proposed models.

Table 11.

Confidence interval analysis across conventional and proposed models.

The proposed model has the best performance because it has the lowest MAE and RMSE and the smallest CI. The CNN+Bi-LSTM model [1] is quite close to the performance of the proposed model but still has higher variability. Moreover, MARS [3] and mmPose [17] have very high variability, showing inconsistent and less reliable results.

Next, a detailed comparison of inference time was conducted to determine the computational efficiency. In the context of neural networks such as CNN, LSTM, or transformer, inference time refers to the duration from when data enters the model until the model produces a result. The experiments contain accuracy and computational costs that will be used for analysis. Table 12 compares the inference time and accuracy in the proposed and conventional models.

Table 12.

Performance comparison in terms of inference time and accuracy across models.

Based on the comparison results in Table 12, it can be concluded that the proposed model has the highest accuracy of 98.77%, outperforming CNN+BiLSTM [1] and MARS [3]. However, this increase in accuracy must be paid for with a longer inference time, which is 0.28 ms per sample, compared to CNN + BiLSTM [1] and MARS [3]. MARS [3] and CNN+BiLSTM [1] have low inference times due to their simpler architecture. The proposed model has the longest inference time due to the complexity of the architecture, such as the implementation of a transformer, multiple layers, and concatenating adjacent frames, which increases computation.

To demonstrate the generalizability of the data, the proposed model is tested on unseen activities. In the next step, we implement the Leave One Subject Out (LOSO) method in experiments to test the model in unseen subjects to show generalization outside the dataset [3]. Four subjects participated in our experiments. Therefore, to appropriately implement LOSO, we run four rounds. During each of them, three subjects’ data are used for training, and the remaining data are used for testing. This aims to measure the generalizability of the model to new subjects. In this test, we employed the proposed model and conventional models such as MARS [3] and CNN+Bi-LSTM [1]. Table 13 shows the experimental results regarding MARS [3] for the LOSO method.

Table 13.

Performance of the MARS model on unseen subjects using LOSO testing.

The performance of the MARS model [3] has relatively high errors, both in terms of MAE and RMSE. The highest overall average error was found when testing Subj_2. MARS models [3] tend to be less able to generalize to untrained subjects, resulting in significant errors.

Table 14 shows the accuracy of the CNN + Bi-LSTM model [1] regarding unseen subjects in the LOSO framework. The performance of this model is significantly improved compared to MARS [3]. The highest error occurred when testing Subj_2 and the lowest when testing Subj_3. CNN+Bi-LSTM [1] shows better temporal and spatial sequence learning ability than MARS [3] but still has room for improvement in accurate estimation in all three axes ().

Table 14.

MAE and RMSE results of CNN+Bi-LSTM across unseen subjects under LOSO testing.

Table 15 shows the evaluation of the proposed model (n = 4) using the LOSO protocol for the joint estimation. This model shows the best results compared to the other two models. The average MAE is 2.06–4.12 cm, and the RMSE is 2.46–5.90 cm. The best results were obtained when testing Subj_2. The proposed model can capture spatial and temporal relationships very well and shows strong generalization to previously unseen subjects. The small error values demonstrate the superiority of this model architecture in handling mmWave radar data for joint point estimation.

Table 15.

Performance of the proposed model (n = 4) on unseen subjects using LOSO evaluation.

In addition, we evaluated unseen activities with the Leave Some Activities Out (LSAO) method regarding the proposed approach. LSAO aims to test the model’s generalization ability to activities not seen during training. We split the dataset into five subsets, where each subset includes some activities exclusively. We then proceeded to train different models using different subsets. We run four rounds, where, in each round, four of the five subsets are used for training, and the remaining one is used for testing. By performing five rounds, five models are created on some activities and evaluated on others. This allows us to properly evaluate the proposed method on unseen activities. Table 16 shows the experimental results using the LSAO method on the proposed model. The average MAE and RMSE were stable and low overall, indicating that the model generalized well to unseen activities (i.e., activities that were not encountered during training). Low MAE and RMSE values for the x-axis mean the model is very stable in recognizing horizontal changes. The y-axis (depth) shows the highest MAE and RMSE in all rounds. This is common in mmWave radars as the depth axis is often more prone to distortion or low resolution in estimation. Furthermore, dynamic body orientation towards the sensor (depth) and lack of variation in activity data on the y axis during training further degrade the detection along this axis. For the z-axis, performance is more stable and consistent. Round 3 appears to have the most representative and generalizable combination of testing activities from the training data. It can be seen that, in some rounds, the model is able to adapt well to unseen activities.

Table 16.

Evaluation results of the proposed method under LSAO setup across five rounds.

Table 17 provides a detailed ablation study based on the MAE and RMSE values for joint estimation across the x, y, and z axes and the average over all the axes. The analysis reveals the individual contribution of each architectural component, CNN, transformer, and Bi-LSTM, toward the model’s spatial estimation accuracy.

Table 17.

Ablation study results when removing major components.

The proposed model, which includes all three components (CNN + transformer + Bi-LSTM), achieves the best overall performance with the lowest average error (MAE: 1.77 cm; RMSE: 2.92 cm). When a GRU replaces the Bi-LSTM, the average error increases slightly to 1.84 cm MAE, suggesting that Bi-LSTM provides stronger temporal modeling capabilities, although GRU remains a reasonable alternative.

Removing the transformer leads to further degradation (MAE: 2.07 cm), indicating its critical role in capturing temporal dependencies via attention mechanisms. Removing the Bi-LSTM entirely yields a similar drop (MAE: 2.30 cm), reaffirming the importance of sequential modeling. In contrast, removing the CNN results in the highest overall error (MAE: 3.66 cm; RMSE: 5.76 cm) due to the model’s inability to extract meaningful spatial features early on.

Axis-specific trends show that the x- and z-axes produce lower errors. At the same time, the y-axis often has slightly higher values across configurations, possibly reflecting the radar’s lower depth resolution. These results confirm that each component contributes complementarily to the spatial and temporal learning process and justify the architectural choices made in the proposed model.

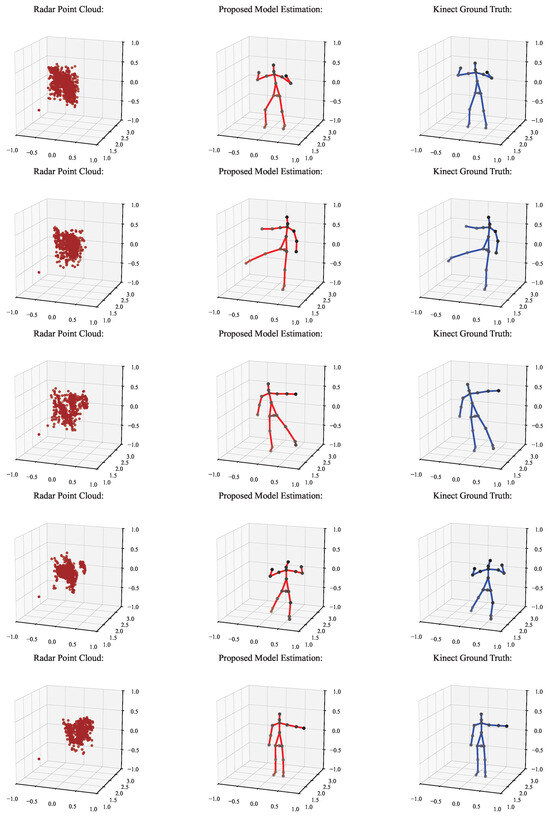

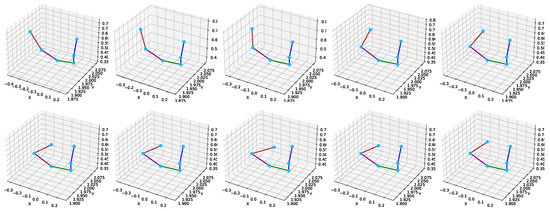

Figure 11 illustrates the qualitative results of the proposed skeleton estimation framework. Each row in the figure corresponds to a representative frame sampled from a sequence. From left to right, the subfigures show (a) the input radar point cloud, (b) the predicted human skeleton obtained from our model, and (c) the ground-truth skeleton derived from the Kinect sensor. The visual comparison shows that the predicted joints align closely with the reference joint locations in different poses, indicating that the model can learn accurate spatial representations from the sparse radar data. This visual evidence complements the quantitative results in Table 6, Table 7 and Table 8 and confirms the robustness of our method in capturing complex limb configurations, such as lunges and extensions.

Figure 11.

Visual comparison of radar point cloud, predicted skeleton by the proposed model, and Kinect-based ground truth over multiple frames.

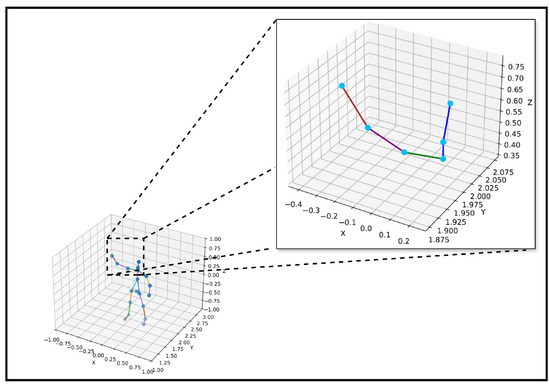

To complement the quantitative evaluation, Figure 12 and Figure 13 provide visual evidence of the model’s ability to maintain accurate and consistent joint estimations across time. Figure 12 presents a detailed inset visualization of the predicted right upper limb skeleton over multiple frames, showing the spatial continuity of joints, such as the right shoulder, right elbow, and right wrist. The skeleton shape is preserved across frames, demonstrating the model’s spatial stability and robustness under temporal variation.

Figure 12.

Visual inset skeleton for right upper limb.

Figure 13.

Time-series plots of joint positions showing model accuracy and consistency.

Different colors are used to indicate specific joint connections to enhance the interpretability of the visualization in Figure 12. The blue lines represent the connections from the neck to the head, spine, and shoulder. The green line connects the spine shoulder to the right shoulder, the purple line denotes the connection from the right shoulder to the right elbow, and the brown line connects the right elbow to the right wrist. The joints are marked as cyan dots to ensure clear visibility of their spatial positions. Figure 13 uses a similar color-coding scheme to maintain visual consistency across visualizations.

In addition, Figure 13 displays time-series plots of joint coordinates corresponding to key upper body joints, including the right wrist, right elbow, right shoulder, neck, and head. These plots compare the predicted trajectories with ground-truth positions from the Kinect sensor. The curves exhibit smooth and consistent movement patterns that closely follow the ground truth. This visual alignment supports the temporal reliability of the proposed model, particularly in capturing complex articulations of the right upper limb.

To provide a more detailed understanding of model behavior across different types of motion, Table 18 presents the joint MAE and RMSE values for four representative activities: left upper limb extension (LUL), right side lung (RSL), right front lung (RFL), and left side lung (LSL). These activities were selected to reflect diverse body parts’ engagements, including unilateral upper limb movement and dynamic lower limb motion. The results indicate that the model achieves lower localization errors for the proximal joints, such as the base of the spine and the mid of the spine, in all activities. In contrast, distal joints, particularly the wrists and ankles, tend to exhibit higher errors, especially during complex motions, such as RFL and LSL. For example, the right ankle shows an MAE exceeding 2.0 cm in the right front lung, suggesting greater difficulty regarding estimation during low-limb activity.

Table 18.

MAE and RMSE for each joint across different activities.

Table 19 summarizes the average MAE and RMSE values across all the joints for each of the ten activities in the dataset. The results show variability in estimation performance across different activities, with Activity 1 yielding the lowest average error (MAE = 0.91 cm; RMSE = 1.05 cm), while Activities 4, 5, and 6 produce higher errors (MAE > 2.0 cm). These results concisely overview the model’s performance consistency across various movement types and highlight specific activity cases where joint estimation is more challenging.

Table 19.

Average MAE and RMSE for each activity.

4.3. Discussion

This research presents a novel framework for detecting human joint positions that provides benefits in accuracy and computational complexity. The combination of CNN, transformer, and Bi-LSTM components in one workflow shows synergy in effectively extracting spatial and temporal features.

To overcome the inherent shortcomings of mmWave radar data, namely sparsity and lack of consistency, the concatenation of adjacent frames plays a key role in enriching the point cloud data. By concatenating frames into a new and more informative frame, the model improves the quality of feature extraction in both the spatial and temporal domains. As demonstrated by the stable learning curve in , this approach improves the detection accuracy and accelerates the model convergence. These results validate that this preprocessing step reduces noise artifacts and enhances signal relevance.

We split the processing into local and global processing. Global processing provides a broader understanding of the patterns and relationships across the entire dataset, which is important for understanding context and long-term dependencies and ensuring accurate predictions of joint positions. Transformer and Bi-LSTM blocks capture temporal data dependencies at different scales. This dual temporal processing flow ensures accurate joint position estimation, even in complex motion scenarios.

From several configurations, was selected as the optimal choice among the tested models as it offers good balance between feature complexity and computational load.

This research demonstrates significant performance improvements compared to conventional models such as MARS [3] and mmPose [17]. Unlike Shi et al. [1], who employed a multi-frame fusion approach to enhance point cloud density, our method concatenates adjacent frames to directly build a temporally and spatially more informative representation. By integrating a CNN, transformer, and Bi-LSTM, our model effectively captures spatiotemporal dependencies and robustly handles sparse radar point clouds. Specifically, our approach reduces the MAE by an average of 4.1 cm compared to the method proposed in [3]. It shows superior generalization by achieving lower RMSE values for training and validation sets.

This advantage is also proven through the paired t-test (Table 9 and Table 10), which shows that the difference in results between the proposed model and MARS [3] and mmPose [17] is statistically significant (p < 0.05). Although the comparison with CNN+Bi-LSTM [1] and mRI [15] is not statistically significant (p > 0.05), our model results still show lower error and better result stability. Even when compared with the quite competitive CNN+Bi-LSTM, our model still shows clear accuracy improvement and result stability, as evidenced by the 95% confidence intervals in Table 11, namely MAE ± CI = 1.77 ± 0.40 cm and RMSE ± CI = 2.91 ± 1.16 cm.

Furthermore, although the p-value for mRI [15] approaches the significance threshold (p ), the observed absolute difference in MAE (4.26 cm vs. 1.77 cm) reflects a moderate to large effect size. This suggests that our model’s improvement is statistically relevant in some instances and practically meaningful for high-precision applications such as rehabilitation or clinical assessment.

In terms of computational efficiency, the inference time analysis in Table 12 shows that the proposed model has an average inference time of 0.28 ms per sample, which is higher than MARS [3] and CNN+Bi-LSTM [1]. This inference time increases due to the complexity of the architecture, especially the use of transformers and the frame merging process.

While the proposed model introduces additional architectural complexity, its average inference time remains practical for real-time use. As shown in Table 12, the model achieves an inference time of 0.28 ms per sample, which is well below typical real-time thresholds (e.g., 30–50 ms for visual feedback systems). Additionally, deployment on resource-constrained edge devices could benefit from standard optimization techniques, such as model pruning, quantization, and architectural simplification, to reduce the memory and compute demands without compromising real-time performance. The MARS model [3] was previously shown to operate with a latency of 64–105 μs per frame. Given that our model still provides substantial timing margin relative to sensor acquisition rates such as Kinect V2 (30 Hz = 33.3 ms) or mmWave radar (10 Hz = 100 ms), the observed accuracy improvement is justified by the acceptable increase in inference latency. Therefore, our model remains suitable for the real-time rehabilitation and deployment of monitoring systems.

In addition, to evaluate the generalization ability, the model was tested using the LOSO method, where one subject was used for testing and three others for training. The results from this scenario show that the model maintains its accuracy even when faced with data from subjects it has never seen before.

Apart from testing generalization using the LOSO approach, this research also evaluates the model’s ability to deal with more complex scenarios using the LSAO approach. The experimental results using the LSAO approach show that, despite a slight decrease in accuracy compared to the LOSO scenario, the proposed model still shows good robustness to changes in activity distribution. The decreases in MAE and RMSE are not too drastic, indicating that the model architecture, especially the frame concatenation and the application of transformer and Bi-LSTM, plays an important role in maintaining stable performance even when new activities are introduced during testing.

Although the MARS dataset primarily consists of dynamic rehabilitation movements, static human poses are inherently present within the sequences. These occur during natural pauses between transitions from one motion to another, such as when a subject temporarily stands still or holds a position before continuing to the next activity. As a result, the model is implicitly exposed to static pose patterns during training and evaluation. In these static intervals, frame-to-frame variation is minimal, and the concatenation of adjacent frames yields denser and more stable point clouds. This reduced temporal fluctuation likely enhances the accuracy of spatial feature extraction and joint localization, even in the absence of motion. Therefore, while not explicitly evaluated as a separate category, the model can generalize to static pose scenarios based on its exposure to such conditions within the dataset.

While the evaluation of the MARS dataset demonstrates strong performance and robustness, we acknowledge that testing on a single dataset may limit generalizability. As part of future work, we plan to collect a new radar dataset under diverse environmental settings and hardware configurations. This will allow for cross-dataset validation and the opportunity to assess the ecological validity of the proposed model in real-world deployment scenarios.

Moreover, as shown in Table 17, the ablation study further highlights the complementary importance of each network block in the proposed model. Removing the CNN block most significantly increases the error, emphasizing its role in early spatial feature extraction. The exclusion of the transformer or Bi-LSTM blocks also leads to notable performance degradation, confirming their roles in capturing temporal dependencies through attention and recurrent mechanisms. These results validate our choice of integrating a CNN, transformer, and Bi-LSTM to achieve a robust spatiotemporal representation.

To further support the temporal robustness of the proposed model, Figure 11 provides a visual sequence comparison where the predicted skeletons are consistently aligned with the Kinect-based ground truth across multiple frames. This visual coherence highlights the model’s ability to preserve joint structure over time. Additionally, Figure 13 presents time-series plots of joint trajectories, such as wrist and elbow, which exhibit smooth and continuous patterns closely following the ground truth. These visualizations collectively demonstrate the model’s temporal stability and reinforce the quantitative improvements in Table 6 and Table 18.

In addition to the quantitative evaluation, Table 18 and Table 19 provide more fine-grained insights by reporting the per-joint and per-activity performance. It is evident that wrist joints, which are located farther from the torso and exhibit greater motion, tend to have higher estimation errors. This trend is especially prominent in activities involving fast upper limb movements. These findings indicate that distal joints remain more challenging to estimate due to motion-induced sparsity and orientation variance, which could be a future target for improvement.

This can be explained by the fact that frame concatenation creates a richer temporal representation, while a transformer allows more flexible generalization of spatial patterns, and Bi-LSTM enhances the modeling of long-term temporal dynamics. The combination of these three blocks produces a latent representation that is more abstract and less dependent on one specific activity type, so the model can still recognize human movement patterns, even in activities that were not previously recognized during training.

A critical comparison shows that the conventional methods often focus on spatial or temporal modeling but lack integration of both. For instance, CNN-based approaches [1] effectively capture spatial features but cannot deal with temporal inconsistencies. In contrast, transformer-based models [28] are capable of temporal modeling but struggle with noise in sparse data. Our framework addresses these limitations by combining local and global processing, which allows our model to achieve state-of-the art results in radar-based human joint estimation.

Despite its benefits, the proposed approach faces some limitations:

- Sensitivity to sparse data: Although merging adjacent frames effectively improves data quality, the sparseness of FMCW radar data still affects the accuracy of detecting difficult joint positions, such as the wrist and ankle. This limitation is evident from their higher MAE values compared to other joints.

- Computational demand: Since merging adjacent frames produces a new one, increasing frame size demands more memory and computational resources, making real-time implementation challenging in resource-constrained environments.

- Data diversity: Reliance on a single dataset such as MARS [3] limits the generalizability of the findings. Expanding the coverage of datasets for different human activities and environmental conditions is needed to validate the robustness of the model.

To address these challenges and build on the current findings, future research should explore the following directions:

- Dynamic frame selection mechanisms: Introduce an adaptive frame selection model that dynamically selects frames based on motion intensity to further improve input quality while reducing unnecessary computational overhead.

- Real-Time implementation: Optimizing real-time processing models is essential for practical applications. Techniques such as lightweight neural architectures, model pruning, and quantization can reduce the computational footprint without sacrificing accuracy.

- Edge-level deployment feasibility: To support future deployment, the proposed model is designed with a radar-compatible architecture that can be optimized for edge devices such as the TI IWR1443. Although real-time benchmarking is not included in this work, the model’s modular structure enables the potential use of lightweight techniques, such as pruning or quantization, to reduce latency and computational cost. This direction is promising for practical applications like in-home rehabilitation and fall detection.

- Generalization across datasets: Future research should focus on training and validating the model on multiple datasets with varying environmental conditions and movement patterns. While the evaluation using the MARS dataset [3] has demonstrated strong performance, testing on a single dataset may limit the external validity of the findings. As part of future work, we plan to collect a new radar dataset under diverse environmental settings and hardware configurations. This will allow for cross-dataset validation and the opportunity to assess the ecological validity of the proposed model in real-world deployment scenarios.

5. Conclusions

In this paper, we introduced a novel approach for human skeleton estimation by leveraging the combination of adjacent frames to create a richer and more comprehensive representation of input data, thereby improving feature quality and model performance. By integrating CNN, transformer, and Bi-LSTM architectures, the proposed model effectively captures spatial and temporal patterns, significantly reducing estimation error compared to conventional models. The experimental results demonstrate that our method of concatenating frames leads to more informative input data, allowing the model to perform well in predicting human joint positions. This research advances the understanding of spatiotemporal dynamics in human skeleton estimation. It sets a new benchmark in the field by outperforming established methods regarding accuracy and computational efficiency.

The experimental setup is designed to align with methodologies established in previous research, ensuring the reliability and robustness of the results. This research provides a comprehensive evaluation of the proposed model’s capabilities by utilizing the MARS public dataset and implementing a series of controlled experiments. The findings highlight the advantages of the frame concatenation approach, as evidenced by the superior performance of our model for , which achieved the best balance between capturing complex features and maintaining computational efficiency. This model demonstrates a marked improvement over other configurations, emphasizing the importance of optimizing the frame selection process to enhance model performance.

Furthermore, the ablation study reaffirms the necessity of including all three components in the model. The removal of any one of the CNN, transformer, or Bi-LSTM modules leads to performance drops, validating their synergistic contribution to accurate joint estimation. Additional evaluations across joints and activities also reveal that wrist joints pose higher estimation challenges, particularly in dynamic-motion scenarios, providing important direction for future model refinement.

The combination of CNN, transformer, and Bi-LSTM blocks, alongside the use of frame concatenation, results in a robust and efficient model that significantly outperforms the conventional approaches.

Author Contributions

Conceptualization, P.H.R.; methodology, P.H.R.; software, P.H.R.; validation, P.H.R., X.S., M.B. and T.O.; formal analysis, P.H.R., X.S. and M.B.; investigation, P.H.R. and M.B.; resources, P.H.R. and X.S.; data curation, X.S.; writing—original draft preparation, P.H.R.; writing—review and editing, P.H.R., M.B. and T.O.; visualization, P.H.R. and M.B.; supervision, T.O.; project administration, T.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Indonesia Endowment Fund for Education Agency (LPDP) under grant number SKPB-417/LPDP/LPDP.3/2023 and JST ASPIRE grant number JPMJAP2326, Japan.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the use of a publicly available dataset generously offered by the authors of [3].

Informed Consent Statement

Not applicable.

Data Availability Statement

This work uses simulated data created using the simulation software introduced in Ref. [3].

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Bi-LSTM | Bidirectional Long Short-Term Memory |

| Bi-GRU | Bidirectional Gated Recurrent Unit |

| CI | Confidence Interval |

| CNN | Convolutional Neural Network |

| FFN | Feed-Forward Network |

| FMCW | Frequency-Modulated Continuous Wave |

| GCN | Graph Convolutional Network |

| GNN | Graph Neural Network |

| GPU | Graphics Processing Unit |

| GRU | Gated Recurrent Unit |

| HAR | Human Activity Recognition |

| ISAR | Inverse Synthetic Aperture Radar |

| LiDAR | Light Detection And Ranging |

| LOSO | Leave One Subject Out |

| LSAO | Leave Some Activities Out |

| MAE | Mean Absolute Error |

| mmWave | Millimeter Wave |

| NLP | Natural Language Processing |

| RMSE | Root Mean Squared Error |

References

- Shi, X.; Ohtsuki, T. A Robust Multi-Frame mmWave Radar Point Cloud-Based Human Skeleton Estimation Approach with Point Cloud Reliability Assessment. In Proceedings of the 2023 IEEE SENSORS, Vienna, Austria, 29 October–November 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Gurbuz, S.Z.; Rahman, M.M.; Bassiri, Z.; Martelli, D. Overview of Radar-Based Gait Parameter Estimation Techniques for Fall Risk Assessment. IEEE Open. J. Eng. Med. Biol. 2024, 5, 735–749. [Google Scholar] [CrossRef] [PubMed]

- An, S.; Ogras, U.Y. MARS: mmWave-based Assistive Rehabilitation System for Smart Healthcare. ACM Trans. Embed. Comput. Syst. 2021, 20, 1–22. [Google Scholar] [CrossRef]

- Adhikari, A.; Sur, S. MiSleep: Human Sleep Posture Identification from Deep Learning Augmented Millimeter-wave Wireless Systems. ACM Trans. Internet Things 2024, 5, 1–33. [Google Scholar] [CrossRef]

- Liu, X.; Jiang, W.; Chen, S.; Xie, X.; Liu, H.; Cai, Q.; Tong, X.; Shi, T.; Qu, W. PosMonitor: Fine-Grained Sleep Posture Recognition With mmWave Radar. IEEE Internet Things J. 2024, 11, 11175–11189. [Google Scholar] [CrossRef]

- Liu, C.; Zhou, H. Fully Attentional Network for Skeleton-Based Action Recognition. IEEE Access 2023, 11, 20478–20485. [Google Scholar] [CrossRef]

- Al, M.A.; Shi, X.; Mondher, B.; Ohtsuki, T. mmGAT: Pose Estimation by Graph Attention with Mutual Features from mmWave Radar Point Cloud. In Proceedings of the ICC 2024—IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; pp. 2161–2166. [Google Scholar] [CrossRef]

- Zhong, J.; Jin, L.; Wang, R. Point-convolution-based human skeletal pose estimation on millimetre wave frequency modulated continuous wave multiple-input multiple-output radar. IET Biom. 2022, 11, 333–342. [Google Scholar] [CrossRef]

- Sengupta, A.; Cao, S. mmPose-NLP: A Natural Language Processing Approach to Precise Skeletal Pose Estimation Using mmWave Radars. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8418–8429. [Google Scholar] [CrossRef]

- Song, Y.; Taylor, W.; Ge, Y.; Usman, M.; Imran, M.A.; Abbasi, Q.H. Evaluation of deep learning models in contactless human motion detection system for next generation healthcare. Sci. Rep. 2022, 12, 21592. [Google Scholar] [CrossRef]

- Ghimire, A.; Kakani, V.; Kim, H. SSRT: A Sequential Skeleton RGB Transformer to Recognize Fine-Grained Human-Object Interactions and Action Recognition. IEEE Access 2023, 11, 51930–51948. [Google Scholar] [CrossRef]

- An, S.; Ogras, U.Y. Fast and scalable human pose estimation using mmWave point cloud. In Proceedings of the 59th ACM/IEEE Design Automation Conference, San Francisco, CA, USA, 10–14 July 2022; pp. 889–894. [Google Scholar] [CrossRef]

- Rantelinggi, P.H.; Shi, X.; Bouazizi, M.; Ohtsuki, T. Deep Learning-Based Human Joint Localization Using mmWave Radar and Sequential Frame Fusion. In Proceedings of the IEEE EMBC 2025 (Accepted for Publication), Copenhagen, Denmark, 14–17 July 2025. [Google Scholar]

- Alanazi, M.A.; Alhazmi, A.K.; Alsattam, O.; Gnau, K.; Brown, M.; Thiel, S.; Jackson, K.; Chodavarapu, V.P. Towards a low-cost solution for gait analysis using millimeter wave sensor and machine learning. Sensors 2022, 22, 5470. [Google Scholar] [CrossRef]

- An, S.; Li, Y.; Ogras, U. mRI: Multi-modal 3D Human Pose Estimation Dataset using mmWave, RGB-D, and Inertial Sensors. Adv. Neural Inform. Process. Syst. 2022, 35, 27414–27426. [Google Scholar]

- Chen, L.; Guo, X.; Wang, G.; Li, H. Spatial–Temporal Multiscale Constrained Learning for mmWave-Based Human Pose Estimation. IEEE Trans. Cogn. Develop. Syst. 2024, 16, 1108–1120. [Google Scholar] [CrossRef]

- Sengupta, A.; Jin, F.; Zhang, R.; Cao, S. mm-Pose: Real-Time Human Skeletal Posture Estimation Using mmWave Radars and CNNs. IEEE Sens. J. 2020, 20, 10032–10044. [Google Scholar] [CrossRef]

- Wu, J.; Cui, H.; Dahnoun, N. A voxelization algorithm for reconstructing MmWave radar point cloud and an application on posture classification for low energy consumption platform. Sustainability 2023, 15, 3342. [Google Scholar] [CrossRef]

- Waqar, S.; Pätzold, M. A Simulation-Based Framework for the Design of Human Activity Recognition Systems Using Radar Sensors. IEEE Internet Things J. 2024, 11, 14494–14507. [Google Scholar] [CrossRef]

- Lee, G.; Kim, J. Improving human activity recognition for sparse radar point clouds: A graph neural network model with pre-trained 3D human-joint coordinates. Appl. Sci. 2022, 12, 2168. [Google Scholar] [CrossRef]

- Ahmad, T.; Wu, J.; Alwageed, H.S.; Khan, F.; Khan, J.; Lee, Y. Human Activity Recognition Based on Deep-Temporal Learning Using Convolution Neural Networks Features and Bidirectional Gated Recurrent Unit With Features Selection. IEEE Access 2023, 11, 33148–33159. [Google Scholar] [CrossRef]

- Javadi, S.H.; Bourdoux, A.; Deligiannis, N.; Sahli, H. Human Pose Estimation Based on ISAR and Deep Learning. IEEE Sens. J. 2024, 24, 28324–28337. [Google Scholar] [CrossRef]

- Stevens, S.; Garcia, P.; Kim, H. Improved Joint Estimation for Body-Mounted Motion Capture Sensors Using Human Kinematics Prior Knowledge. In Proceedings of the 2022 IEEE Sensors, Dallas, TX, USA, 30 October–2 November 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Hu, S.; Cao, S.; Toosizadeh, N.; Barton, J.; Hector, M.G.; Fain, M.J. mmPose-FK: A Forward Kinematics Approach to Dynamic Skeletal Pose Estimation Using mmWave Radars. IEEE Sens. J. 2024, 24, 6469–6481. [Google Scholar] [CrossRef]

- Hu, S.; Sengupta, A.; Cao, S. Stabilizing Skeletal Pose Estimation using mmWave Radar via Dynamic Model and Filtering. In Proceedings of the 2022 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Ioannina, Greece, 27–30 September 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Ahmed, S.; Cho, S.H. Machine Learning for Healthcare Radars: Recent Progresses in Human Vital Sign Measurement and Activity Recognition. IEEE Commun. Surv. Tutor. 2024, 26, 461–495. [Google Scholar] [CrossRef]

- Rahman, M.M.; Martelli, D.; Gurbuz, S.Z. Radar-Based Human Skeleton Estimation with CNN-LSTM Network Trained with Limited Data. In Proceedings of the 2023 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), Pittsburgh, PA, USA, 15–18 October 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Yan, J.; Zeng, X.; Zhou, A.; Ma, H. MM-HAT: Transformer for Millimeter-Wave Sensing Based Human Activity Recognition. In Proceedings of the GLOBECOM 2022—2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 547–553. [Google Scholar] [CrossRef]

- Zheng, Z.; Zhang, D.; Liang, X.; Liu, X.; Fang, G. RadarFormer: End-to-End Human Perception with Through-Wall Radar and Transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 18285–18299. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Guo, X.; Wang, G. MPTFormer: Toward Robust Arm Gesture Pose Tracking Using Dual-View Radar System. IEEE Sens. J. 2024, 24, 1051–1064. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).