Continuous Talking Face Generation Based on Gaussian Blur and Dynamic Convolution

Abstract

1. Introduction

- A dynamic convolutional transformer generator is designed using a dual pipeline processing mechanism, which enhances the degree of feature correlation of the input features at the time scale and the ability to model details at the spatial scale, respectively.

- A dynamic Gaussian renderer, designed to ensure continuity between the upper and lower facial regions in the generated images, is based on Gaussian blur and enables seamless and natural connection in the generated facial images.

- We have designed a two-stage method for generating talking face videos. By adopting dynamic convolution and Gaussian blur, our method produces talking face videos with synchronized mouth movements and realism.

2. Related Work

2.1. Audio-Driven Generation of Speaking Faces

2.2. Landmark-Based Talking Face Generation

3. Methods

3.1. Face Keypoint Generation Stage

3.1.1. Preprocessing Audio and Video

3.1.2. Dynamic Convolutional Transformer Generator

3.1.3. Loss Function for Face Keypoint Generation

3.2. Face Keypoint Rendering Stage

3.2.1. Landmark and Image Alignment Phase

3.2.2. Dynamic Gaussian Renderer

3.2.3. Loss Functions for Rendering Modules

3.3. Experiments

3.3.1. Experimental Setup

3.3.2. Datasets

3.3.3. Evaluation Metrics

3.4. Results

3.4.1. Quantitative Results

3.4.2. Qualitative Comparison

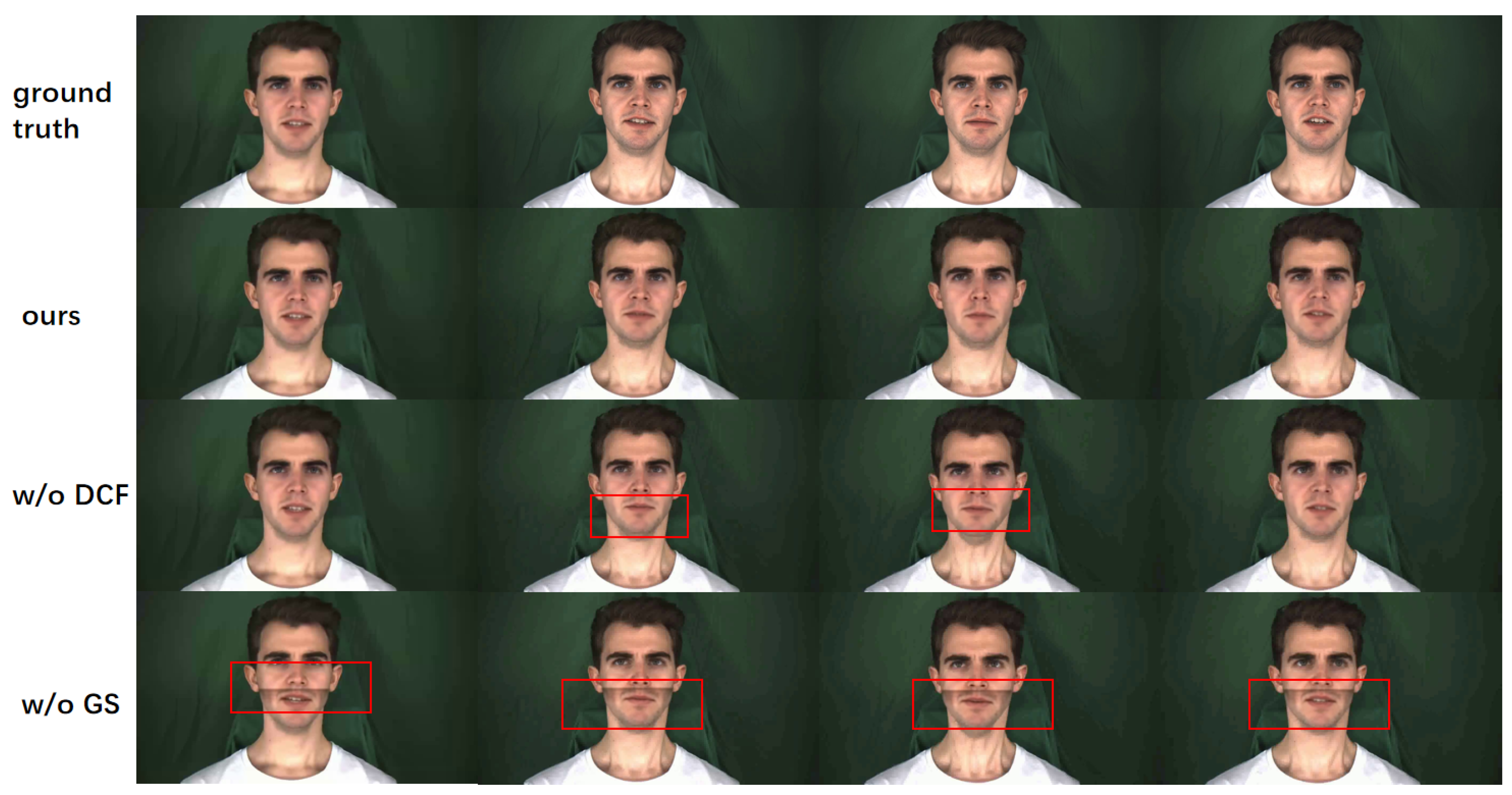

3.5. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, J.; Liu, Y.; Li, X.; Li, W.; Tang, Y. Talking face generation driven by time-frequency domain features of speech audio. Displays 2023, 80, 102558. [Google Scholar] [CrossRef]

- Tan, S.; Ji, B.; Pan, Y. EMMN: Emotional Motion Memory Network for Audio-driven Emotional Talking Face Generation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 22089–22099. [Google Scholar]

- Jamaludin, A.; Chung, J.S.; Zisserman, A. You Said That? Synthesising Talking Faces from Audio. Int. J. Comput. Vis. 2019, 127, 1767–1779. [Google Scholar] [CrossRef]

- Prajwal, K.R.; Mukhopadhyay, R.; Namboodiri, V.P.; Jawahar, C.V. A Lip Sync Expert Is All You Need for Speech to Lip Generation In the Wild. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020. [Google Scholar]

- Deng, Y.; Yang, J.; Xu, S.; Chen, D.; Jia, Y.; Tong, X. Accurate 3D Face Reconstruction With Weakly-Supervised Learning: From Single Image to Image Set. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–20 June 2019; pp. 285–295. [Google Scholar]

- Li, B.; Wei, X.; Liu, B.; He, Z.; Cao, J.; Lai, Y.-K. Pose-Aware 3D Talking Face Synthesis using Geometry-guided Audio-Vertices Attention. IEEE Trans. Vis. Comput. Graph. 2024, 31, 1758–1771. [Google Scholar] [CrossRef]

- Suwajanakorn, S.; Seitz, S.M.; Kemelmacher-Shlizerman, I. Synthesizing Obama. ACM Trans. Graph. (TOG) 2017, 36, 1–13. [Google Scholar] [CrossRef]

- Zhong, W.; Fang, C.; Cai, Y.; Wei, P.; Zhao, G.; Lin, L.; Li, G. Identity-Preserving Talking Face Generation with Landmark and Appearance Priors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 9729–9738. [Google Scholar]

- Wang, J.; Zhao, Y.; Liu, L.; Xu, T.; Li, Q.; Li, S. Emotional Talking Head Generation based on Memory-Sharing and Attention-Augmented Networks. arXiv 2023, arXiv:2306.03594. [Google Scholar]

- Zhang, Z.; Hu, Z.; Deng, W.; Fan, C.; Lv, T.; Ding, Y. DINet: Deformation Inpainting Network for Realistic Face Visually Dubbing on High Resolution Video. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023. [Google Scholar]

- Chen, L.; Maddox, R.K.; Duan, Z.; Xu, C. Hierarchical Cross-Modal Talking Face Generation With Dynamic Pixel-Wise Loss. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7824–7833. [Google Scholar]

- Chen, L.; Li, Z.; Maddox, R.K.; Duan, Z.; Xu, C. Lip Movements Generation at a Glance. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Chung, J.S.; Zisserman, A. Lip Reading in the Wild. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016. [Google Scholar]

- Wang, Z.; Wang, S.; Yu, W.; Gao, B.; Li, C.; Wang, T. Accurate Real-Time Live Face Detection Using Snapshot Spectral Imaging Method. Sensors 2025, 25, 952. [Google Scholar] [CrossRef] [PubMed]

- O’Shaughnessy, D. Speaker Diarization: A Review of Objectives and Methods. Appl. Sci. 2025, 15, 2002. [Google Scholar] [CrossRef]

- Ko, S. Analog Gaussian-Shaped Filter Design and Current Mode Compensation for Dot-Matrix TSP Readout Systems. Appl. Sci. 2025, 15, 1845. [Google Scholar] [CrossRef]

- Wu, X.; Hirota, K.; Dai, Y.; Shao, S. Dynamic Multi-Population Mutation Architecture-Based Equilibrium Optimizer and Its Engineering Application. Appl. Sci. 2025, 15, 1795. [Google Scholar] [CrossRef]

- Ravichandran, N.; Bidorn, B.; Mercan, O.; Paneerselvam, B. Data-Driven Machine-Learning-Based Seismic Response Prediction and Damage Classification for an Unreinforced Masonry Building. Appl. Sci. 2025, 15, 1686. [Google Scholar] [CrossRef]

- Huang, G.; Sun, A.; Zhang, H.; Zhang, Y.; Wang, J. Gaussian second derivative blur kernels for image deblurring. Digit. Signal Process. 2024, 155, 104724. [Google Scholar] [CrossRef]

- Alghamdi, M.M.; Wang, H.; Bulpitt, A.J.; Hogg, D.C. Talking Head from Speech Audio using a Pre-trained Image Generator. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022. [Google Scholar]

- Bigioi, D.; Basak, S.; Stypułkowski, M.; Zieba, M.; Jordan, H.; McDonnell, R.; Corcoran, P. Speech Driven Video Editing via an Audio-Conditioned Diffusion Model. Image Vis. Comput. 2023, 142, 104911. [Google Scholar] [CrossRef]

- Hong, F.-T.; Shen, L.; Xu, D. DaGAN++: Depth-Aware Generative Adversarial Network for Talking Head Video Generation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 2997–3012. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Xu, Y.; Wu, Q.; Zhou, H.; Wu, W.; Zhou, B. Semantic-Aware Implicit Neural Audio-Driven Video Portrait Generation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022. [Google Scholar]

- Zhou, Y.; Han, X.; Shechtman, E.; Echevarria, J.; Kalogerakis, E.; Li, D. MakeItTalk: Speaker-Aware Talking Head Animation. ACM Trans. Graph. 2020, 39, 1–15. [Google Scholar]

- Rochow, A.; Schwarz, M.; Behnke, S. FSRT: Facial Scene Representation Transformer for Face Reenactment from Factorized Appearance, Head-pose, and Facial Expression Features. arXiv 2024, arXiv:2404.09736. [Google Scholar]

- Ma, Y.; Zhang, S.; Wang, J.; Wang, X.; Zhang, Y.; Deng, Z. DreamTalk: When Expressive Talking Head Generation Meets Diffusion Probabilistic Model. arXiv 2023, arXiv:2312.09767. [Google Scholar]

- Yi, R.; Ye, Z.; Zhang, J.; Bao, H.; Liu, Y.J. Audio-driven Talking Face Video Generation with Natural Head Pose. arXiv 2020, arXiv:2002.10137. [Google Scholar]

- Song, L.; Wu, W.; Qian, C.; He, R.; Loy, C.C. Everybody’s Talkin’: Let Me Talk as You Want. IEEE Trans. Inf. Forensics Secur. 2020, 17, 585–598. [Google Scholar] [CrossRef]

- Ji, X.; Zhou, H.; Wang, K.; Wu, Q.; Wu, W.; Xu, F.; Cao, X. EAMM: One-Shot Emotional Talking Face via Audio-Based Emotion-Aware Motion Model. In Proceedings of the ACM SIGGRAPH 2022 Conference Proceedings, Virtual Event, 8–11 August 2022. [Google Scholar]

- Zhang, Y.; He, W.; Li, M.; Tian, K.; Zhang, Z.; Cheng, J.; Wang, Y.; Liao, J. Meta Talk: Learning To Data-Efficiently Generate Audio-Driven Lip-Synchronized Talking Face With High Definition. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 4848–4852. [Google Scholar]

- Nian, F.; Wang, W.; Wang, Y.; Zhang, J.; Hu, G.; Li, T. Speech-driven video generation of speaking faces based on keypoint representation. Pattern Recognit. Artif. Intell. 2021, 034, 572–580. [Google Scholar]

- Chen, L.; Cui, G.; Liu, C.; Li, Z.; Kou, Z.; Xu, Y.; Xu, C. Talking-head Generation with Rhythmic Head Motion. In Proceedings of the European Conference on Computer Vision, Glasgow, Scotland, 23–28 August 2020. [Google Scholar]

- Lou, M.; Zhou, H.Y.; Yang, S.; Yu, Y. TransXNet: Learning Both Global and Local Dynamics with a Dual Dynamic Token Mixer for Visual Recognition. arXiv 2023, arXiv:2310.19380. [Google Scholar]

- Tian, Y. Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers. arXiv 2022, arXiv:2203.14313. [Google Scholar] [CrossRef]

- Tan, S.; Ji, B.; Bi, M.; Pan, Y. EDTalk: Efficient Disentanglement for Emotional Talking Head Synthesis. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 28 September–4 October 2024. [Google Scholar]

| Stage | Batch Size | Learning Rate | Optimizer | Epochs |

|---|---|---|---|---|

| Audio to keypoint | 32 | Adam | 1500 | |

| Keypoint to face image | 16 | Adam | 20 |

| Method | SSIM ↑ | PSNR ↑ | LSE-D ↓ | LSE-C ↑ |

|---|---|---|---|---|

| ATVG [11] | 0.3944 | 11.55 | 8.223 | 5.584 |

| Wav2Lip [4] | 0.8962 | 27.92 | 7.789 | 6.386 |

| PC-AVS [30] | 0.4867 | 15.75 | 7.101 | 6.928 |

| FSRT [25] | 0.8644 | 25.50 | 9.120 | 4.092 |

| Ours | 0.9332 | 31.30 | 5.309 | 8.186 |

| Method | SSIM ↑ | PSNR ↑ | LPIPS ↓ | LSE-D ↓ | LSE-C ↑ |

|---|---|---|---|---|---|

| ATVG [11] | 0.7315 | 20.5430 | 0.1104 | 8.4222 | 7.0611 |

| Wav2Lip-192 [4] | 0.8487 | 27.6561 | 0.1208 | 8.0912 | 6.9509 |

| PC-AVS [30] | 0.6383 | 20.6301 | 0.1077 | 11.4913 | 3.0293 |

| EDTalk [35] | 0.7505 | 22.0442 | 0.3674 | 10.9544 | 2.4573 |

| FSRT [25] | 0.6774 | 17.7222 | 0.5051 | 11.0702 | 1.9514 |

| Ours | 0.8692 | 30.8394 | 0.0906 | 6.3196 | 8.3954 |

| ATVG [11] | 0.8325 | 23.9723 | 0.0869 | 8.8908 | 5.8337 |

| Wav2Lip-192 [4] | 0.8036 | 25.6863 | 0.1302 | 7.8426 | 6.8515 |

| PC-AVS [30] | 0.7754 | 23.6950 | 0.0960 | 6.5035 | 8.6240 |

| IP-LAP [8] | 0.8109 | 29.2186 | 0.0979 | 7.7351 | 6.2937 |

| EDTalk [35] | 0.8469 | 22.8718 | 0.1641 | 7.6053 | 5.6232 |

| FSRT [25] | 0.8892 | 23.3672 | 0.1665 | 14.132 | 0.1143 |

| Ours | 0.9109 | 29.7470 | 0.0889 | 5.2856 | 8.9076 |

| Method | SSIM ↑ | PSNR ↑ | LSE-D ↓ | LSE-C ↑ |

|---|---|---|---|---|

| w/o DCF | 0.8783 | 28.16 | 7.882 | 4.796 |

| w/o GS | 0.8740 | 27.28 | 6.648 | 8.568 |

| Ours | 0.9272 | 30.08 | 5.309 | 8.186 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, Y.; Liu, Y.; Li, W. Continuous Talking Face Generation Based on Gaussian Blur and Dynamic Convolution. Sensors 2025, 25, 1885. https://doi.org/10.3390/s25061885

Tang Y, Liu Y, Li W. Continuous Talking Face Generation Based on Gaussian Blur and Dynamic Convolution. Sensors. 2025; 25(6):1885. https://doi.org/10.3390/s25061885

Chicago/Turabian StyleTang, Ying, Yazhi Liu, and Wei Li. 2025. "Continuous Talking Face Generation Based on Gaussian Blur and Dynamic Convolution" Sensors 25, no. 6: 1885. https://doi.org/10.3390/s25061885

APA StyleTang, Y., Liu, Y., & Li, W. (2025). Continuous Talking Face Generation Based on Gaussian Blur and Dynamic Convolution. Sensors, 25(6), 1885. https://doi.org/10.3390/s25061885