Abstract

Relative radiometric normalization (RRN) is a critical pre-processing step that enables accurate comparisons of multitemporal remote-sensing (RS) images through unsupervised change detection. Although existing RRN methods generally have promising results in most cases, their effectiveness depends on specific conditions, especially in scenarios with land cover/land use (LULC) in image pairs in different locations. These methods often overlook these complexities, potentially introducing biases to RRN results, mainly because of the use of spatially aligned pseudo-invariant features (PIFs) for modeling. To address this, we introduce a location-independent RRN (LIRRN) method in this study that can automatically identify non-spatially matched PIFs based on brightness characteristics. Additionally, as a fast and coregistration-free model, LIRRN complements keypoint-based RRN for more accurate results in applications where coregistration is crucial. The LIRRN process starts with segmenting reference and subject images into dark, gray, and bright zones using the multi-Otsu threshold technique. PIFs are then efficiently extracted from each zone using nearest-distance-based image content matching without any spatial constraints. These PIFs construct a linear model during subject–image calibration on a band-by-band basis. The performance evaluation involved tests on five registered/unregistered bitemporal satellite images, comparing results from three conventional methods: histogram matching (HM), blockwise KAZE, and keypoint-based RRN algorithms. Experimental results consistently demonstrated LIRRN’s superior performance, particularly in handling unregistered datasets. LIRRN also exhibited faster execution times than blockwise KAZE and keypoint-based approaches while yielding results comparable to those of HM in estimating normalization coefficients. 
Combining LIRRN and keypoint-based RRN models resulted in even more accurate and reliable results, albeit with a slight lengthening of the computational time. To support further investigation and development of LIRRN, its code and some sample datasets are available at the link in the Data Availability Statement.

1. Introduction

Relative radiometric normalization (RRN) is the key step for adjusting radiometric distortions between bi/multitemporal remote-sensing (RS) images caused by diverse atmospheric interferences, fluctuations in the sun–target–sensor geometry, and sensor characteristics [1,2,3,4]. RRN aims to find pixel pairs between reference and subject images and radiometrically align them through a linear/nonlinear mapping function (MF) [5]. Based on the selection criteria for these pairs, RRN methods are broadly categorized into two groups: dense RRN (DRRN) and sparse RRN (SRRN) [6].

In the DRRN process, the mapping function (MF) between the subject and reference images can be established either linearly or nonlinearly using all the pixels from both images [7]. Histogram matching (HM) [8] is a widely employed DRRN method that effectively addresses radiometric inconsistencies between reference and subject images utilizing their respective histograms. Other frequently used DRRN methods, such as minimum–maximum (MM) [1] and mean–standard deviation (MS) [1], adopt global statistical parameters to establish a linear MF between the input images. DRRN methods are mostly used in image-mosaicking tasks because of their advantageous features, including time efficiency and the capability to handle large-sized image pairs [9]. However, when dealing with image pairs that include significant land cover/land use (LULC) changes, these methods may introduce noise structures and artifacts to the final results because of their equal treatment of all the pixels. SRRN methods, however, are specifically designed to handle radiometric distortions in such image pairs by extracting pseudo-invariant features (PIFs) and using them to establish a more precise MF [6,7,10,11].
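As a brief illustration of the global linear mappings mentioned above, the following Python sketch applies the MS and MM ideas to a single band. The function names and the toy digital numbers are illustrative assumptions and are not taken from the cited methods' reference implementations.

```python
from statistics import mean, pstdev


def mean_std_normalize(subject, reference):
    """Linearly map subject values so their mean and std match the reference."""
    gain = pstdev(reference) / pstdev(subject)
    offset = mean(reference) - gain * mean(subject)
    return [gain * v + offset for v in subject]


def min_max_normalize(subject, reference):
    """Linearly map the subject's [min, max] range onto the reference's range."""
    s_min, s_max = min(subject), max(subject)
    r_min, r_max = min(reference), max(reference)
    gain = (r_max - r_min) / (s_max - s_min)
    return [gain * (v - s_min) + r_min for v in subject]


# Toy band (assumed values): the reference equals 2 * subject + 5.
subject_band = [10, 20, 30, 40]
reference_band = [25, 45, 65, 85]
ms_result = mean_std_normalize(subject_band, reference_band)
mm_result = min_max_normalize(subject_band, reference_band)
```

Because both mappings use every pixel, real LULC changes between the two dates shift the global statistics and therefore the estimated gain and offset, which is the weakness of DRRN noted above.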

In recent years, several SRRN methods [6,12,13,14,15,16,17] have been developed, each adopting a distinct approach for selecting PIFs from image pairs. The controlled-regression SRRN technique, introduced by Elvidge et al. in 1995 [3], has emerged as a widely adopted and refined method for radiometric adjustment in the analysis of multitemporal images. In this method, PIFs/no-change pixels are identified using the scattergrams obtained from near-infrared bands through a controlled linear model. Another particularly powerful SRRN method is iteratively reweighted multivariate alteration detection (IRMAD), introduced by Canty and Nielsen [13], which has led to the development of many similar SRRN methods based on its principles. For example, Syariz et al. (2019) [18] presented a spectral-consistent RRN method for multitemporal Landsat 8 images, where PIFs were selected using the IRMAD method. In this method, a common radiometric level situated between image pairs was selected to reduce potential spectral distortions. Despite promising results, these methods primarily rely on iteratively identifying PIFs to re-estimate parameters for aligning images. A more advanced SRRN method was recently proposed by Chen et al. [10], in which PIFs in the shape of polygons were used to form an RRN model and generate reliable normalized images. Although these methods have shown promising results in RRN, they are limited to working with geo/coregistered image pairs and are therefore unsuitable when unregistered input images need to be normalized [19].

To overcome the aforementioned limitation, keypoint-based RRN methods [19,20] have been developed that are robust to variations in scale, illumination, and viewpoints between subject and reference images. These methods typically operate in two steps. First, PIFs or true matches are extracted from image pairs through a feature detector/descriptor-matching process. Subsequently, an MF is created using these PIFs to generate a normalized image.

Keypoint-based RRN methods effectively handle radiometric distortions in both registered and unregistered image pairs. Nevertheless, the efficacy of these approaches, and of most SRRN methods, may be compromised when applied to image pairs with identical LULC classes located in different geographic areas because of factors such as seasonal fluctuations, climate variations, or other influencing elements. This arises because these methods often overlook such regions during their PIF selection, focusing primarily on spatially aligned PIFs or unchanged pixels to establish meaningful MFs between image pairs. For instance, Figure 1 highlights that both keypoint-based RRN and rule-based RRN methods [6] neglect deep and shallow water classes as potential regions for PIF selection, introducing the possibility of errors in the final results. This limitation becomes evident when these methods are used in scenarios with significant spatial LULC differences, potentially impacting the accuracy of the final RRN results.

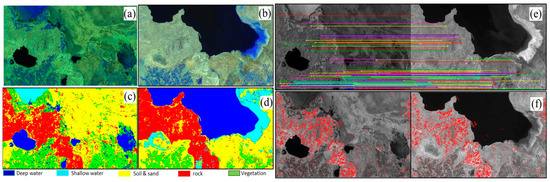

Figure 1.

An example illustrating the limitations of keypoint-based and traditional SRRN methods in selecting PIFs from LULC classes with varying spatial positions in coregistered reference and subject images. (a) Landsat 5 (TM) image acquired in September 2010 (subject image); (b) Landsat 7 (ETM+) image acquired in August 1999 (reference image); (c) LULC map of the reference image; (d) LULC map of the subject image; (e) selected PIFs from the reference and subject images based on the keypoint-based RRN method [21]; (f) selected PIFs from the reference and subject images based on the rule-based RRN [6].

On the other hand, DRRN methods are not limited by spatial constraints and can be considered to include all the LULCs in images during their normalization process. However, as mentioned before, using all the image pixels in their DRRN process may lead to suboptimal RRN results due to the existence of real change pixel pairs. This raises the question of whether an efficient RRN method can be devised to attain accuracy comparable to that of keypoint-based RRN methods while retaining the speed and spatial independence characteristics of DRRN, particularly in handling radiometric calibration for unregistered image pairs with varying LULCs in different locations.

To address this research question, we introduce the location-independent RRN (LIRRN) method, a novel approach designed to reduce radiometric distortions in bitemporal RS images, regardless of their coregistration status. LIRRN efficiently identifies PIFs from diverse ground surface brightnesses in image pairs by employing the dual approach of multithreshold segmentation and image content matching conducted band by band. This process starts with the bandwise multilevel segmentation of subject and reference images, categorizing pixels into dark, gray, and bright classes based on their gray values/digital numbers (DNs). Subsequently, a unique image-content-based matching strategy extracts PIFs from each class, considering the close similarity in DNs within the spectral range of the input images. This band-by-band matching ensures spatial independence and accurately represents the underlying content. By automatically extracting representative PIFs, this method facilitates precise radiometric normalization modeling in the final step of the normalization process. In the subsequent stage, the results of this method are fused with those of the keypoint-based method to enhance overall outcomes, particularly in scenarios requiring radiometric calibration for coregistered images.

This paper is organized as follows. Section 2 presents the proposed LIRRN method, detailing its key components and workflow. Section 3 describes the datasets used for evaluating the method, along with the evaluation metrics that were employed and a comprehensive analysis of the obtained results. Finally, Section 4 provides a summary of the paper’s findings and conclusions, as well as a discussion on potential avenues for future research and development in this field.

2. Materials and Methods

2.1. Methodology

Given bitemporal unregistered/coregistered RS images $S$ and $R$, defined respectively over the domains $\Omega_S$ and $\Omega_R$, in $b$ spectral bands as subject and reference images, respectively, let us consider that these images were captured by either inter- or intra-sensors, depicting the same scene but acquired at different/same scales under varying illumination and atmospheric conditions.

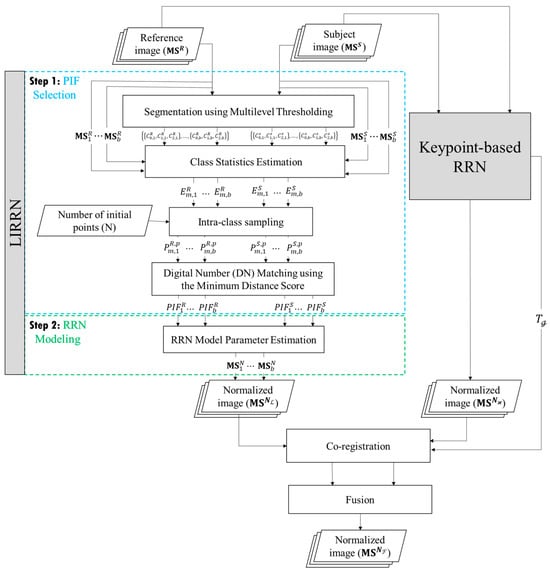

The main goal of the LIRRN method is to efficiently generate a normalized subject image, $\hat{S}$, from the subject image $S$ and the reference image $R$ regardless of their coregistration status. Figure 2 depicts the two primary steps of the LIRRN method: PIF selection (Section 2.1.1) and RRN modeling (Section 2.1.2). In the subsequent fusion scenario (Section 2.2), the normalized image $\hat{S}$ is registered using the transformation matrix $T$ from the keypoint-based RRN method and fused with that method's normalized image, $\hat{S}^{K}$, to generate a more accurate coregistered normalized image, $\hat{S}^{F}$.

Figure 2.

The flowchart of the proposed LIRRN method and its combination with the keypoint-based RRN for the radiometric calibration of unregistered/coregistered bitemporal multispectral image pairs.

2.1.1. PIF Selection

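This step aims to identify reliable and spatially independent PIFs from different gray levels of input images in a band-by-band manner. In detail, each band of the subject ($S$) and reference ($R$) images is segmented into three classes (dark, gray, and bright) using the multilevel Otsu method, an extension of the classic Otsu thresholding technique [22]. In this method, a histogram, $h = \{f_0, f_1, \ldots, f_{L-1}\}$, is constructed for each band of input images, where $L$ represents the number of gray levels. The occurrence probability ($p_i$) for each gray level $i$ is determined by $p_i = f_i / n$, where $n$ denotes the total number of pixels in each image. Using threshold combinations, $(t_1, t_2)$, where $t_1 < t_2$, which were automatically detected, cumulative probabilities ($\omega_c$) and mean gray levels ($\mu_c$) for each class ($c \in \{0$: dark, $1$: gray, and $2$: bright$\}$) are calculated as follows:

$$\omega_c = \sum_{i \in C_c} p_i, \tag{1}$$

$$\mu_c = \frac{1}{\omega_c} \sum_{i \in C_c} i\, p_i, \tag{2}$$

where $C_0 = \{0, \ldots, t_1\}$, $C_1 = \{t_1 + 1, \ldots, t_2\}$, and $C_2 = \{t_2 + 1, \ldots, L - 1\}$ are the gray-level ranges of the three classes.

The algorithm then iterates through the threshold combinations, $(t_1, t_2)$, to maximize the between-class variance, $\sigma_B^2$, computed by considering the probabilities and mean gray levels for each class as follows:

$$\sigma_B^2(t_1, t_2) = \sum_{c=0}^{2} \omega_c \left(\mu_c - \mu_T\right)^2, \qquad \mu_T = \sum_{i=0}^{L-1} i\, p_i. \tag{3}$$

The optimal threshold combination, $(t_1^{*}, t_2^{*})$, that maximizes the between-class variance is then selected.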
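To make the threshold search concrete, the following is a minimal pure-Python sketch of a three-class multilevel Otsu search for one band. It assumes integer DNs in the range [0, levels), assigns each threshold gray level to the lower class, and uses an exhaustive search over threshold pairs; the function name and these conventions are illustrative assumptions rather than the authors' implementation.

```python
def multi_otsu_two_thresholds(pixels, levels=256):
    """Return (t1, t2) maximizing the between-class variance of three classes."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    prob = [h / total for h in hist]

    # Prefix sums of p_i and i * p_i, so class sums are simple differences.
    cum_p = [0.0] * (levels + 1)
    cum_m = [0.0] * (levels + 1)
    for i in range(levels):
        cum_p[i + 1] = cum_p[i] + prob[i]
        cum_m[i + 1] = cum_m[i] + i * prob[i]
    mu_total = cum_m[levels]

    best, best_var = (0, 1), -1.0
    for t1 in range(levels - 2):
        for t2 in range(t1 + 1, levels - 1):
            # Half-open index ranges: dark [0, t1], gray (t1, t2], bright (t2, levels - 1].
            bounds = [(0, t1 + 1), (t1 + 1, t2 + 1), (t2 + 1, levels)]
            var = 0.0
            for lo, hi in bounds:
                w = cum_p[hi] - cum_p[lo]
                if w > 0:
                    mu = (cum_m[hi] - cum_m[lo]) / w
                    var += w * (mu - mu_total) ** 2
            if var > best_var:
                best_var, best = var, (t1, t2)
    return best


# Toy band (assumed values) with three well-separated brightness clusters.
toy_band = [10] * 50 + [120] * 50 + [240] * 50
t1, t2 = multi_otsu_two_thresholds(toy_band)
```

For the toy band, any threshold pair that separates the three clusters attains the same maximum, so the sketch returns the first such pair it encounters.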

Once each band of input images is segmented into the three aforementioned classes, PIFs are selected using the proposed image-content-based matching strategy. In this way, pixel values in the $c$th class of the $k$th band of the subject image $S$ and the reference image $R$ are first extracted and represented as $S_k^c$ and $R_k^c$, respectively. Class statistics, including the minimum ($\min$), mean ($\mathrm{mean}$), and maximum ($\max$) of these pixel values, are then estimated and denoted as $\theta(I_k^c)$, with $\theta \in \{\min, \mathrm{mean}, \max\}$ and $I \in \{S, R\}$. Afterward, the differences between the extracted pixel values and estimated class statistics are obtained and sorted in ascending order, forming $D_k^c(I, \theta)$ as follows:

$$D_k^c(I, \theta) = \operatorname{sort}\left(\left| I_k^c - \theta(I_k^c) \right|\right), \tag{4}$$

where $\operatorname{sort}(\cdot)$ is a function ascendingly rearranging the elements of vectors.

Subsequently, an initial subset, $Q_k^c(I, \theta)$, with N samples for each class in the $k$th band of each input image is selected based on the samples’ proximities to the estimated statistics as follows:

$$Q_k^c(I, \theta) = \left\{ x \in I_k^c : d_1 \le \left| x - \theta(I_k^c) \right| \le d_N \right\}, \tag{5}$$

where $d_1$ and $d_N$ are the first and Nth elements of $D_k^c(I, \theta)$, and N is also the number of the selected samples, which can be considered as being in the range [500, 10,000].

Randomly, 10% of these samples are chosen to form subsets $\tilde{Q}_k^c(S, \theta)$ and $\tilde{Q}_k^c(R, \theta)$ for the $c$th class in the $k$th band of the $S$ and $R$ images.

To find corresponding DNs, a nearest-distance matching strategy is employed by computing pairwise Euclidean distances between the subsets $\tilde{Q}_k^c(S, \theta)$ and $\tilde{Q}_k^c(R, \theta)$, resulting in the matrix of pairwise distances, $M$. Sample pairs with minimum distances are then selected to create spectrally matched sets $P_k^c(S, \theta)$ and $P_k^c(R, \theta)$. Finally, concatenated PIFs for the $k$th band in each of $S$ and $R$ are formed by gathering samples within these matched sets across all the statistics, resulting in vectors for each class. Subsequently, vectors across all the classes are combined to generate the final set of concatenated PIFs, denoted as $P_k^S$ and $P_k^R$, for the $k$th band in each of $S$ and $R$.
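The selection and matching steps for a single class of a single band can be sketched as follows. This is a minimal illustration under stated assumptions: the inputs are plain lists of DNs, so the Euclidean distance reduces to an absolute difference; matched samples are removed greedily after each pairing; and the helper names (`nearest_to_stat`, `match_nearest`, `select_pifs`) are hypothetical and do not come from the released LIRRN code.

```python
import random
from statistics import mean


def nearest_to_stat(values, stat, n):
    """Keep the n values closest to a class statistic (min, mean, or max)."""
    return sorted(values, key=lambda v: abs(v - stat))[:n]


def match_nearest(sub, ref):
    """Greedily pair subject and reference DNs by smallest absolute distance."""
    sub, ref = list(sub), list(ref)
    pairs = []
    while sub and ref:
        i, j = min(
            ((i, j) for i in range(len(sub)) for j in range(len(ref))),
            key=lambda ij: abs(sub[ij[0]] - ref[ij[1]]),
        )
        # Remove the matched samples so each one is used at most once.
        pairs.append((sub.pop(i), ref.pop(j)))
    return pairs


def select_pifs(sub_class, ref_class, n=1000, fraction=0.1, seed=0):
    """Return (subject DN, reference DN) pairs for one class of one band."""
    rng = random.Random(seed)
    pifs = []
    for stat_fn in (min, mean, max):
        sub_near = nearest_to_stat(sub_class, stat_fn(sub_class), n)
        ref_near = nearest_to_stat(ref_class, stat_fn(ref_class), n)
        k = max(1, round(fraction * min(len(sub_near), len(ref_near))))
        pifs += match_nearest(rng.sample(sub_near, k), rng.sample(ref_near, k))
    return pifs


# Toy class values (assumed): pixel positions play no role in the matching.
subject_class = list(range(100, 200))
reference_class = list(range(110, 210))
pifs = select_pifs(subject_class, reference_class, n=50)
```

Only DN values enter the matching, which is what makes the selected pairs independent of pixel location. The greedy search is cubic in the subset size, so a practical implementation would likely use a vectorized distance matrix instead.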

2.1.2. RRN Modeling

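To establish the relationship between the subject ($S$) and reference ($R$) images and to generate the normalized image, $\hat{S}$, a linear regression was performed in a band-by-band way through the previously extracted PIFs in each band as follows:

$$\hat{S}_k = \alpha_k + \beta_k S_k, \tag{6}$$

where $\alpha_k$ and $\beta_k$ are, respectively, the intercept and slope of the model for the $k$th band, which can be obtained using the least-squares method as follows:

$$\beta_k = \frac{\sigma_{P_k^S P_k^R}}{\sigma^2_{P_k^S}}, \tag{7}$$

$$\alpha_k = \bar{P}_k^R - \beta_k \bar{P}_k^S, \tag{8}$$

where $P_k^S$ and $P_k^R$ are the PIFs extracted from the $k$th band of $S$ and $R$, $\sigma^2_{P_k^S}$ refers to the variance in $P_k^S$, $\sigma_{P_k^S P_k^R}$ is the covariance between $P_k^S$ and $P_k^R$, and $\bar{P}_k^S$ and $\bar{P}_k^R$ signify the averages of $P_k^S$ and $P_k^R$, respectively.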
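The band-wise least-squares fit can be sketched in a few lines of Python. The function names and the toy PIF values are illustrative assumptions; the slope is the covariance of the paired PIFs divided by the variance of the subject PIFs, and the intercept follows from the two means.

```python
from statistics import mean


def fit_band_model(sub_pifs, ref_pifs):
    """Least-squares slope and intercept mapping subject PIFs to reference PIFs."""
    s_bar, r_bar = mean(sub_pifs), mean(ref_pifs)
    cov = sum((s - s_bar) * (r - r_bar) for s, r in zip(sub_pifs, ref_pifs))
    var = sum((s - s_bar) ** 2 for s in sub_pifs)
    slope = cov / var
    intercept = r_bar - slope * s_bar
    return slope, intercept


def normalize_band(band, slope, intercept):
    """Apply the fitted linear model to every pixel value of a subject band."""
    return [slope * v + intercept for v in band]


# Toy PIFs (assumed values): the reference equals 1.5 * subject + 1.
slope, intercept = fit_band_model([10, 20, 30, 40], [16, 31, 46, 61])
normalized = normalize_band([0, 100], slope, intercept)
```

The common 1/n factors of the covariance and variance cancel in the ratio, so the sketch uses plain sums.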

For a clearer understanding, the step-by-step pseudocode of the proposed LIRRN method is presented in Algorithm 1.

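| Algorithm 1 Location-independent Relative Radiometric Normalization (LIRRN) |

| Require: $R$ (Reference image), $S$ (Subject image), N (Number of initial points, default = 1000) |

| Ensure: $\hat{S}$ (Normalized subject image) |

| 1: for $k = 1$ to b do ▷ Loop on the number of bands, i.e., b |

| 2: segment the $k$th bands $S_k$ and $R_k$ into dark, gray, and bright classes using multi-Otsu thresholds |

| 3: for $c = 0$ to 2 do ▷ Loop on the number of classes |

| 4: extract the class pixel values $S_k^c$ and $R_k^c$ |

| 5: estimate the class statistics (minimum, mean, and maximum) of $S_k^c$ and $R_k^c$ |

| 6: compute the sorted differences for $S_k^c$ and for $R_k^c$ ▷ Equation (4) |

| 7: select the initial N-sample subsets of $S_k^c$ and $R_k^c$ ▷ Equation (5) |

| 8: randomly draw 10% of the subject subset |

| 9: randomly draw 10% of the reference subset |

| 10: for each randomly selected point do ▷ Loop on the number of randomly selected points |

| 11: compute the pairwise distances between the remaining subject and reference samples |

| 12: extract the row and column of the minimum in the distance matrix |

| 13: add the corresponding subject sample to the matched subject set |

| 14: add the corresponding reference sample to the matched reference set |

| 15: remove the matched element from the candidate samples |

| 16: end for |

| 17: Gather values from the matched sets |

| 18: end for |

| 19: estimate the intercept and slope using the PIFs of $S_k$ and $R_k$ in Equations (7) and (8) |

| 20: compute the normalized band $\hat{S}_k$ from $S_k$ with the estimated intercept and slope |

| 21: end for |

| 22: return $\hat{S}$ |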

2.2. Fusion of LIRRN and Keypoint-Based RRN

In the context of multitemporal image analysis, where the acquisition of a normalized coregistered image is pivotal, our approach involves the integration of an LIRRN-generated normalized image, $\hat{S}$, with the counterpart produced using a keypoint-based method, denoted as $\hat{S}^{K}$. In this scenario, the scale-invariant feature transform (SIFT) operates as the core for the keypoint-based RRN to generate the normalized image, $\hat{S}^{K}$, and geometric transformation matrix, $T$ (i.e., generated herein using an affine transformation). The transformation matrix ($T$) is then employed to coregister the LIRRN-generated normalized image ($\hat{S}$) with the normalized image, $\hat{S}^{K}$. Finally, they are fused using a weighted average to generate the fused normalized image ($\hat{S}^{F}$) as follows:

$$\hat{S}^{F} = w_1\, T(\hat{S}) + w_2\, \hat{S}^{K},$$

where $w_1$ and $w_2$ represent the weights assigned to each normalized image and are determined based on the reference image, which can be obtained as follows:

2.3. Data

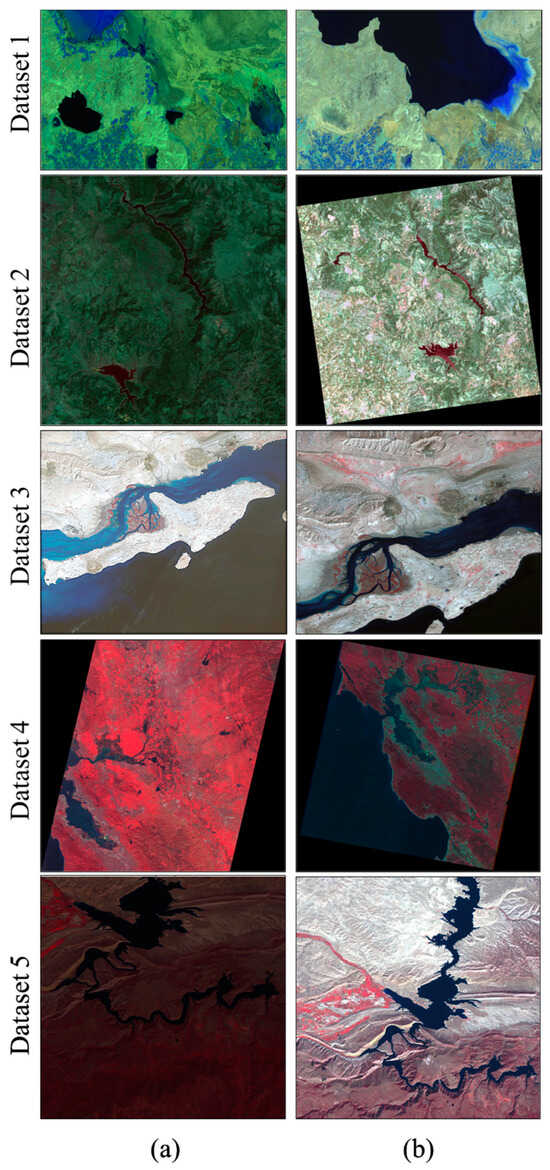

To conduct both quantitative and qualitative assessments, this study utilized five groups of unregistered bitemporal multispectral images captured by either the same/cross/different sensors under diverse imaging conditions (see Table 1 and Figure 3a,b). As can be seen from Table 1, datasets 4 and 5 include image pairs taken from the IRS (LISS IV) and Landsat 5/7 (TM/ETM+) sensors, exhibiting discrepancies in spatial resolutions and viewpoints. Datasets 1 and 3 were captured with identical spatial resolutions using Landsat cross sensors, whereas dataset 2 comprises subject and reference images taken by Landsat 7 (ETM+). It is essential to emphasize that the selected image pairs were not precisely coregistered, where all the subject images lacked geoinformation and were rotated or shifted to ensure a comprehensive evaluation of the effectiveness of the LIRRN method in handling non-georeferenced RS images.

Table 1.

Characteristics of datasets.

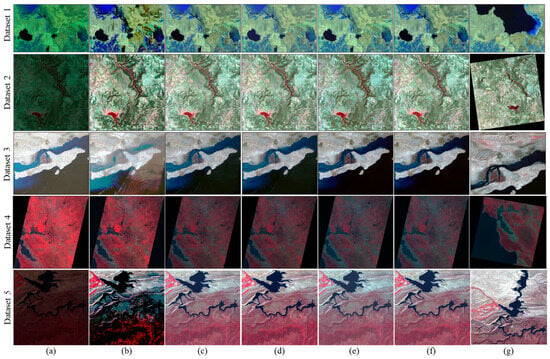

Figure 3.

(a) Subject images and (b) reference images. Color–infrared composites (Green/Red/NIR) and (NIR/Red/Green) were employed respectively to show dataset 1 and datasets 4 and 5 and their results, whereas the normal color composite (Red/Green/Blue) was used to display datasets 2 and 3.

2.4. Evaluation Criteria

The performance of the RRN methods was assessed by calculating the root-mean-square error (RMSE) for the overlapped area of the image pairs as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(R_i - \hat{S}_i\right)^2},$$

where $n$ is the total number of pixels in the overlapped area, and $R_i$ and $\hat{S}_i$ are the $i$th pixel values of the reference and normalized subject images, respectively. A lower RMSE indicates better RRN results, while a higher RMSE denotes worse results. It is worth noting that to calculate the RMSE, the reference and subject images were first coregistered with subpixel accuracy. Moreover, to evaluate the change detection results, we used common metrics, like the false-alarm rate ($P_{FA}$), the missed-alarm rate ($P_{MA}$), total error (TE) rate, overall accuracy (OA), and F-score (FS), which can be respectively obtained as follows:

$$P_{FA} = \frac{FP}{FP + TN}, \quad P_{MA} = \frac{FN}{FN + TP}, \quad TE = \frac{FP + FN}{TP + TN + FP + FN},$$

$$OA = \frac{TP + TN}{TP + TN + FP + FN}, \quad FS = \frac{2\,TP}{2\,TP + FP + FN},$$

where true positive (TP) is the changed pixels that are accurately classified as changed regions, false positive (FP) is the background that is classified incorrectly as changed regions, true negative (TN) is the background that is accurately classified as unchanged, and false negative (FN) is the changed regions that are incorrectly classified as the background.
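As a minimal sketch of how these criteria can be computed, the following Python code evaluates the RMSE for one band and the change detection metrics for a binary change map. The rate definitions used here are the commonly adopted ones and are an assumption about the exact formulas intended in this study; the function names and toy values are also illustrative.

```python
import math


def rmse(reference, normalized):
    """Root-mean-square error between two equally sized pixel sequences."""
    n = len(reference)
    return math.sqrt(sum((r - s) ** 2 for r, s in zip(reference, normalized)) / n)


def change_metrics(predicted, truth):
    """Metrics for binary maps given as sequences of 1 (changed) / 0 (unchanged)."""
    pairs = list(zip(predicted, truth))
    tp = sum(p == 1 and t == 1 for p, t in pairs)
    tn = sum(p == 0 and t == 0 for p, t in pairs)
    fp = sum(p == 1 and t == 0 for p, t in pairs)
    fn = sum(p == 0 and t == 1 for p, t in pairs)
    total = tp + tn + fp + fn
    return {
        "FA": fp / (fp + tn),        # assumed: false alarms over true background
        "MA": fn / (fn + tp),        # assumed: missed alarms over true changes
        "TE": (fp + fn) / total,
        "OA": (tp + tn) / total,
        "FS": 2 * tp / (2 * tp + fp + fn),
    }


# Toy inputs (assumed values).
err = rmse([10, 20, 30], [12, 18, 30])
scores = change_metrics([1, 1, 0, 0, 1, 0, 0, 0], [1, 0, 0, 0, 1, 1, 0, 0])
```

The sketch assumes that both the changed and unchanged classes are present in the ground truth; otherwise, the rate denominators would be zero.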

3. Experimental Results

3.1. Experimental Setup

The LIRRN method was implemented using MATLAB (version 2018b) on a PC running Windows 10, with an Intel® Core™ i5-6585R CPU (Intel, Santa Clara, CA, USA), clocked at 3.40 GHz, and 32.00 GB of RAM.

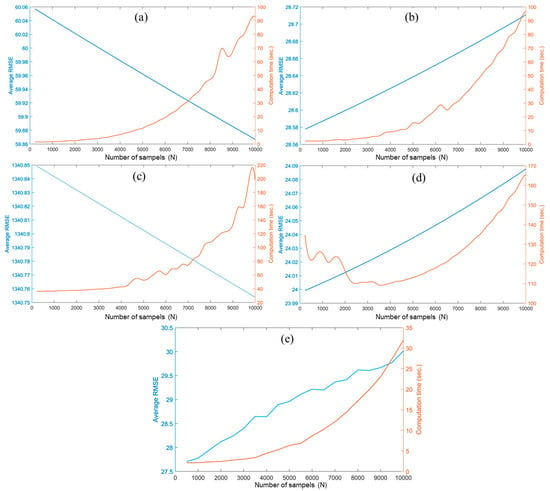

In our investigation, we examined the impact of the parameter N on the performance of the LIRRN method. To do so, we plotted the average RMSE and computational time against different values of N, ranging from 100 to 10,000 at intervals of 500, across all five datasets. These results are presented graphically in Figure 4.

Figure 4.

The average RMSE vs. the computational time of the proposed method for different numbers of samples (N) for datasets 1–5 (a–e), respectively. The blue and red lines refer to RMSE and computational time, respectively.

Upon analyzing the plots presented in Figure 4, it became apparent that the average RMSE of the LIRRN method decreased with an increase in the number of samples for datasets 1 and 3. However, for datasets 2 and 4, the average RMSE increased as the number of samples increased. This shows that the effect of the parameter N on the LIRRN algorithm varies across datasets. These variations in the performance, whether they involve an increase or decrease, were relatively modest compared to the corresponding changes in the execution time. Across all the plots, it was evident that as the number of samples within the LIRRN process increased, there was a significant lengthening of the computational time, which makes LIRRN with large N infeasible when dealing with large datasets, such as dataset 4. To address this concern, and according to our findings, we set N = 1000 in the LIRRN process to achieve a reasonable balance between accuracy and computational efficiency.

3.2. Comparative Results of the SRRN Methods

To assess the effectiveness of the LIRRN method, we conducted a comparative analysis against other relevant methods, including HM [8] and the blockwise KAZE method [19]. Additionally, the results for fusing the LIRRN with the keypoint-based approach were also included in our evaluation to further assess the efficacy of our fusion strategy. For this experiment, we compared both the accuracies and execution times, as seen in Table 2. Moreover, the normalized images produced using the considered techniques are presented in Figure 5 for a visual comparison.

Table 2.

Comparison of the RMSEs and computational times of the proposed LIRRN and fusion models and considered RRN methods for the datasets from 1 to 5.

Figure 5.

(a) Subject images and normalized subject images generated using (b) HM, (c) blockwise KAZE, (d) keypoint-based RRN, (e) LIRRN, and (f) fusion methods and (g) the reference image.

The comparative results presented in Table 2 indicated that all the models effectively reduced radiometric distortions between image pairs, thus contributing to improved subject–image quality. However, it was noteworthy that the HM model, in certain datasets, yielded poor results, exhibiting an even worse performance than those of the raw subject images. Specifically, the proposed LIRRN method demonstrated superior performance over the HM, blockwise KAZE, and keypoint-based approaches in datasets 1–3, while the blockwise KAZE and keypoint-based methods showed better results for datasets 4 and 5, respectively. For example, the raw average RMSE was reduced by 12.59, 91.46, 10,517.84, 23.09, and 64.12 after employing the LIRRN method for the RRN of datasets 1–5, respectively. This indicates its potential to reduce radiometric distortion in unregistered cases or scenarios where the same LULC is observed in different locations of subject and reference images.

The integration of LIRRN with the keypoint-based approach yielded the most promising results across all the datasets, particularly emphasizing its effectiveness in datasets 3–5. For instance, after fusing LIRRN with the keypoint-based method, the average RMSE of the LIRRN method decreased by 11%, 8.5%, and 17.5% for datasets 3–5, respectively. Moreover, implementing the proposed fusion strategy led to a reduction in the average RMSE of the keypoint-based method by 5%, 3%, 15%, 0.1%, and 1% for datasets 1–5, respectively. This indicates that by employing a fusion strategy, we can achieve reasonable results that benefit from both methods, thereby ensuring the accuracy of the RRN when the radiometric calibration of registered cases is required.

The visual results also validate that all the considered methods, except for HM, successfully produced normalized subject images with exceptional color and brightness harmonization when compared to their corresponding reference images, as depicted in Figure 5. In further detail, there were no significant visual differences observed among the results of the blockwise KAZE, keypoint-based, proposed LIRRN, and fusion methods. However, the HM method exhibited artifacts and noise in the generated subject images, as evident in Figure 5b.

Additionally, the tone and contrast of the normalized images generated using HM were consistently higher than those of their corresponding reference images across all the cases. These characteristics can be attributed to the nature of the HM technique, which uses all the image pixels indiscriminately without considering time-invariant regions during processing. This approach leads to a bias toward changed region values, resulting in distortion within the RRN. However, despite its drawbacks, HM was found to be the most efficient RRN method in terms of its execution time, as it relies solely on the histograms of the image pairs as the core of its process.

On the other hand, the computational time of LIRRN was comparable to that of HM and, therefore, shorter than those of the keypoint-based method and the blockwise KAZE method. This makes LIRRN an efficient RRN method for handling large unregistered images. Moreover, the fusion of LIRRN with the keypoint-based method adds the computational time of the keypoint-based method to the execution time of the fusion approach. This is expected from the keypoint-based method, as it employs a matching process to extract PIFs and employs them for the simultaneous radiometry and coregistration of image pairs. Therefore, using the keypoint-based method and its fusion with the LIRRN method proves to be efficient when coregistered normalized images are required, despite the slightly longer computational time compared to those of the individual methods.

Table 2 also demonstrates that the performance of LIRRN is significantly superior to those of the other methods in datasets where image pairs have the same spatial resolution (datasets 1 and 2). This suggests that the performance of the LIRRN model is more affected when faced with datasets that include image pairs with different spatial resolutions. This issue is further discussed in Section 3.4.

3.3. The Impact of the Proposed Fusion-Based Strategy on Unsupervised Change Detection

To enable visual comparisons between the reference images and normalized subject images generated using the proposed fusion strategy, we employed spectrum-based compressed change vector analysis (C2VA) [23]. Similar to the normalized images generated using the LIRRN method, the subject images were also coregistered with the reference images using the transformation matrix, $T$. Subsequently, C2VA was utilized to depict changes between the subject and reference images, as well as between the normalized and fusion images and the reference images, in the 2D polar domain using magnitudes and orientations, as defined by Liu et al. in 2017 [23]. Finally, Otsu’s thresholding [22] was applied to the magnitudes of the C2VA to generate binary change maps from the inputs. To evaluate the change detection results, we generated reliable ground-truth maps utilizing post-classification change detection techniques [24], followed by manual rectification to assign classes as changed or unchanged. In this experiment, the raw subject and reference images were regarded as the uncalibrated cases, whereas the normalized subject image and reference image were treated as the calibrated cases for the purposes of clarity and ease of explanation.
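The thresholding step can be illustrated with a simplified Python sketch. It covers only the magnitude of the spectral difference vector followed by a two-class Otsu threshold, not the direction component or the spectrum-based formulation of C2VA [23]; the function names, the 64-bin quantization, and the toy pixels are assumptions made for illustration.

```python
import math


def change_magnitude(ref_pixel, sub_pixel):
    """Euclidean norm of the spectral difference vector for one pixel."""
    return math.sqrt(sum((r - s) ** 2 for r, s in zip(ref_pixel, sub_pixel)))


def otsu_threshold(values, bins=64):
    """Two-class Otsu threshold on values quantized into `bins` levels."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var, w0, sum0 = 0, -1.0, 0, 0
    for t in range(bins - 1):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        # Proportional to the between-class variance of the two classes.
        between = w0 * w1 * (sum0 / w0 - (sum_all - sum0) / w1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return lo + (best_t + 1) * width


# Toy three-band pixels (assumed values): six nearly unchanged, two changed.
reference_pixels = [(50, 60, 70)] * 8
subject_pixels = [(50, 60, 70)] * 3 + [(51, 60, 70)] * 3 + [(90, 100, 110)] * 2
magnitudes = [change_magnitude(r, s) for r, s in zip(reference_pixels, subject_pixels)]
threshold = otsu_threshold(magnitudes)
change_map = [m > threshold for m in magnitudes]
```

Residual radiometric differences inflate the magnitudes of unchanged pixels, which is why normalization before this step makes the two classes easier to separate.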

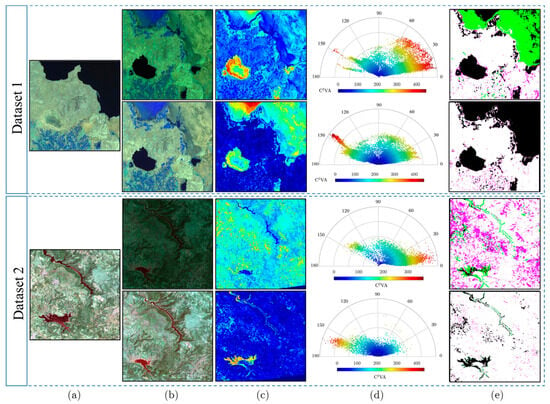

Figure 6a–e illustrates the resulting normalized subject images generated using the fusion approach, along with the subject and reference images, C2VA magnitudes, and binary change maps superimposed on the ground-truth maps for a section of datasets 1 and 2.

Figure 6.

Comparison of C2VA-based change detection results before and after applying the proposed LIRRN method on a subset of datasets 1 and 2. (a) Reference images, (b) subject images (top row), and normalized subject images (bottom row); (c,d) C2VA and its 2D polar change representation domain, respectively, resulting from reference and subject images (top row) and reference and normalized subject images (bottom row); (e) change maps before (top row) and after (bottom row) applying LIRRN and overlaid with ground-truth data (black: changed; white: unchanged; magenta: false alarm; green: missed alarm).

The visual comparison presented in Figure 6 clearly indicated the significant impact of radiometric calibration on the accuracy of unsupervised change detection based on medium-resolution satellite images. Upon calibrating the datasets, the accuracy of the change detection significantly improved across all the datasets. Conversely, when utilizing uncalibrated datasets, a considerable number of missed alarms (depicted in green) and false detections (depicted in magenta) were observed in the generated change maps (see Figure 6e).

Moreover, the C2VA magnitudes derived from the calibrated images enable a clearer identification of the changed regions, thereby facilitating more straightforward thresholding for the generation of accurate change detection results. In contrast, magnitudes derived from uncalibrated images were significantly influenced by noise and anomalies. When derived from calibrated images, the 2D polar change representation domain exhibited a uniform distribution of unchanged regions characterized by low-magnitude values close to zero. This uniform distribution simplified the differentiation between changed and unchanged regions during the thresholding process, resulting in more precise change maps. Conversely, the non-uniform distribution of unchanged regions over zero in the 2D polar change representation domain, derived from uncalibrated images, posed a challenge in accurately distinguishing between changed and unchanged regions. These findings highlight the critical importance of using RRN in unsupervised change detection and confirm the fusion strategy’s superiority in this regard.

These results are supported by quantitative findings presented in Table 3. They demonstrate a significant decrease in the false-alarm rate, the missed-alarm rate, and TE, together with a considerable increase in OA and FS scores following the utilization of calibrated datasets. This improvement is particularly evident when comparing the FS score metrics, which exhibit an increase of over 40% after employing calibrated datasets with the LIRRN model. is also notably decreased by ~50% subsequent to the radiometric calibration of the datasets.

Table 3.

Change detection results obtained before and after applying the proposed LIRRN method on a subset of datasets 1 and 2.

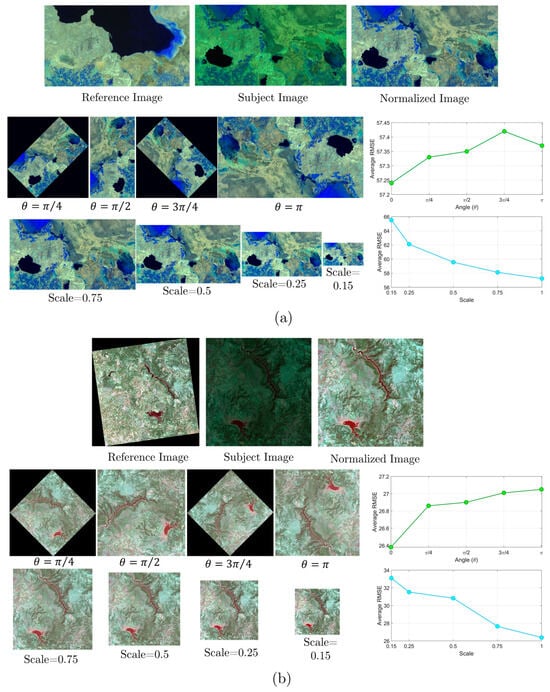

3.4. The Impacts of the Angle and Scale on the Performance of the LIRRN

To examine the influence of varying angles on the LIRRN’s performance, subject images from datasets 1 and 2 were rotated in increments of π/4, ranging from 0 to π, and subsequently processed with LIRRN. Furthermore, to assess LIRRN’s effectiveness across different scales, subject images were subsampled at scales of 0.15, 0.25, 0.5, and 0.75 prior to the LIRRN application. The results of these investigations are shown in Figure 7.

Figure 7.

Comparative analysis of reference, subject, and normalized images generated using the LIRRN method at varied angles and scales applied to subject images from (a) dataset 1 and (b) dataset 2.

As depicted in Figure 7, the normalized images generated using LIRRN at different angles and scales are well-aligned with the reference images, indicating the robustness of the LIRRN model to these distortions. Specifically, the plots in Figure 7 clearly show that the LIRRN method is more robust to angle variation compared to scale variation. For instance, the average RMSE remains relatively constant, with slight differences when the angle increases from 0 to π. In contrast, an increasing trend in the average RMSE is observed in the results of the LIRRN when reducing the resolution scale from 1 to 0.15.

4. Conclusions

In this paper, we proposed the LIRRN method, which can extract PIFs from both input images without relying on their coregistration status. The LIRRN method successfully extracted non-spatially aligned PIFs from various ground surfaces in image pairs by integrating straightforward thresholding with the proposed image content matching. This capability enabled the LIRRN method to successfully perform radiometric adjustment on unregistered image pairs and image pairs with LULC classes positioned differently. Moreover, the ability of the proposed LIRRN to generate registered normalized images was further boosted through fusion with the keypoint-based method.

To assess the effectiveness of the LIRRN method and its fusion with the keypoint-based method, we tested them on five RS datasets acquired in inter- and intra-sensor configurations. Our experimental results showed that LIRRN was more accurate than conventional techniques, such as the HM, keypoint-based RRN, and blockwise KAZE methods, in the radiometric adjustment of most of the datasets considered. Although the results of LIRRN, the keypoint-based method, and the blockwise KAZE method were similar, LIRRN was significantly faster and comparable to HM when RRN of unregistered images was needed. This makes LIRRN an appropriate choice for online and near-online RS applications, where speed is critical. However, the performance of the LIRRN method varied in some cases compared to the keypoint-based method. This variability may be attributed to the LIRRN method’s strong focus on image content without considering spatial constraints during processing. This interpretation was further supported by the fusion of LIRRN with the keypoint-based method, which produced more reliable and accurate results than considering spatial and spectral constraints separately in the RRN process. However, the results of the fusion depend heavily on the quality of the matching process embedded in the keypoint-based model. The results also indicated that the LIRRN method was more robust to angle variations than to changes in resolution.

This study highlights the importance of integrating spatial constraints and image content into the normalization process. Combining the two within a suitable framework can lead to more reliable results and reduce distortions in target images, which underlines the value of a holistic approach that considers both spatial and spectral constraints in radiometric registration processes. The findings of our change analysis indicated that the proposed fusion approach produced normalized images that can significantly enhance the quality of input images and improve the performance of change detection methods, such as C2VA.

The limitations of the LIRRN model can be attributed to its dependence on its parameters (N) and on the Otsu thresholding results. Therefore, investigating the use of learning-based models to automatically identify different parts of input images during the LIRRN process could be a promising avenue for future research. Additionally, integrating more advanced linear or non-linear machine-learning models into LIRRN has the potential to enhance its efficacy and yield even better results. Furthermore, shape or LULC matching based on advanced deep-learning models could present an excellent opportunity to enhance the LIRRN method.
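To make the dependence on thresholding concrete, the following sketch splits a single band into dark, gray, and bright zones with a three-class Otsu criterion. It is a minimal illustration rather than the released LIRRN code: the exhaustive histogram search, the 64-bin histogram, the function names (`three_class_otsu`, `label_zones`), and the synthetic trimodal band are all assumptions made here, and the parameter N is not modeled because this section does not define it. Libraries such as scikit-image provide a multi-Otsu routine (`skimage.filters.threshold_multiotsu`) that could be used instead.

```python
import numpy as np


def three_class_otsu(image, bins=64):
    """Return two thresholds that maximize the between-class variance.

    Exhaustive search over all pairs of histogram bin boundaries; adequate
    for a small number of bins, but not optimized.
    """
    hist, edges = np.histogram(image.ravel(), bins=bins)
    p = hist / hist.sum()
    centers = 0.5 * (edges[:-1] + edges[1:])
    cum_w = np.cumsum(p)            # cumulative class probability
    cum_m = np.cumsum(p * centers)  # cumulative first moment
    best_score, best_pair = -np.inf, (0, 1)
    for i in range(bins - 2):
        for j in range(i + 1, bins - 1):
            weights = (cum_w[i], cum_w[j] - cum_w[i], cum_w[-1] - cum_w[j])
            if min(weights) <= 0.0:
                continue  # skip splits that leave a class empty
            moments = (cum_m[i], cum_m[j] - cum_m[i], cum_m[-1] - cum_m[j])
            # Maximizing sum(w_k * mu_k^2) is equivalent to maximizing
            # the between-class variance, since the global mean is fixed.
            score = sum(m * m / w for m, w in zip(moments, weights))
            if score > best_score:
                best_score, best_pair = score, (i, j)
    i, j = best_pair
    return float(edges[i + 1]), float(edges[j + 1])


def label_zones(image, thresholds):
    """Label each pixel: 0 = dark, 1 = gray, 2 = bright."""
    return np.digitize(image, thresholds)


# Synthetic band with three brightness populations (hypothetical values).
rng = np.random.default_rng(1)
band = np.concatenate([
    rng.normal(30.0, 5.0, 2000),
    rng.normal(120.0, 5.0, 2000),
    rng.normal(220.0, 5.0, 2000),
]).reshape(60, 100)

t_dark, t_bright = three_class_otsu(band)
zones = label_zones(band, (t_dark, t_bright))
zone_counts = np.bincount(zones.ravel(), minlength=3)
print("thresholds:", t_dark, t_bright)
print("pixels per zone:", zone_counts)
```

When the histogram has no clear valleys, the two thresholds can shift noticeably between the reference and subject images, which is one way in which the zone assignment, and hence the candidate PIFs, may become sensitive to the thresholding step.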

Author Contributions

A.M. (Armin Moghimi), V.S. and A.M. (Amin Mohsenifar): conceptualization, data curation, formal analysis, validation, original draft writing, and software development. T.C. and A.M. (Ali Mohammadzadeh): reviewing and editing of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code and some of the datasets are available at https://github.com/ArminMoghimi/LIRRN (accessed on 1 April 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hall, F.G.; Strebel, D.E.; Nickeson, J.E.; Goetz, S.J. Radiometric Rectification: Toward a Common Radiometric Response among Multidate, Multisensor Images. Remote Sens. Environ. 1991, 35, 11–27. [Google Scholar] [CrossRef]

- Yang, X.; Lo, C.P. Relative Radiometric Normalization Performance for Change Detection from Multi-Date Satellite Images. Photogramm. Eng. Remote Sensing 2000, 66, 967–980. [Google Scholar]

- Elvidge, C.D.; Ding, Y.; Weerackoon, R.D.; Lunetta, R.S. Relative Radiometric Normalization of Landsat Multispectral Scanner (MSS) Data Using an Automatic Scattergram-Controlled Regression. Photogramm. Eng. Remote Sens. 1995, 11–22. [Google Scholar]

- Roy, D.P.; Ju, J.; Lewis, P.; Schaaf, C.; Gao, F.; Hansen, M.; Lindquist, E. Multi-Temporal MODIS–Landsat Data Fusion for Relative Radiometric Normalization, Gap Filling, and Prediction of Landsat Data. Remote Sens. Environ. 2008, 112, 3112–3130. [Google Scholar] [CrossRef]

- Yuan, D.; Elvidge, C.D. Comparison of Relative Radiometric Normalization Techniques. ISPRS J. Photogramm. Remote Sens. 1996, 51, 117–126. [Google Scholar] [CrossRef]

- Moghimi, A.; Mohammadzadeh, A.; Celik, T.; Amani, M. A Novel Radiometric Control Set Sample Selection Strategy for Relative Radiometric Normalization of Multitemporal Satellite Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2503–2519. [Google Scholar] [CrossRef]

- Xu, H.; Wei, Y.; Li, X.; Zhao, Y.; Cheng, Q. A Novel Automatic Method on Pseudo-Invariant Features Extraction for Enhancing the Relative Radiometric Normalization of High-Resolution Images. Int. J. Remote Sens. 2021, 42, 6153–6183. [Google Scholar] [CrossRef]

- Richards, J.A.; Jia, X. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 1999. [Google Scholar]

- Li, X.; Feng, R.; Guan, X.; Shen, H.; Zhang, L. Remote Sensing Image Mosaicking: Achievements and Challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 8–22. [Google Scholar] [CrossRef]

- Chen, L.; Ma, Y.; Lian, Y.; Zhang, H.; Yu, Y.; Lin, Y. Radiometric Normalization Using a Pseudo−Invariant Polygon Features−Based Algorithm with Contemporaneous Sentinel− 2A and Landsat− 8 OLI Imagery. Appl. Sci. 2023, 13, 2525. [Google Scholar] [CrossRef]

- Xu, H.; Zhou, Y.; Wei, Y.; Guo, H.; Li, X. A Multi-Rule-Based Relative Radiometric Normalization for Multi-Sensor Satellite Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5002105. [Google Scholar] [CrossRef]

- Canty, M.J.; Nielsen, A.A.; Schmidt, M. Automatic Radiometric Normalization of Multitemporal Satellite Imagery. Remote Sens. Environ. 2004, 91, 441–451. [Google Scholar] [CrossRef]

- Canty, M.J.; Nielsen, A.A. Automatic Radiometric Normalization of Multitemporal Satellite Imagery with the Iteratively Re-Weighted MAD Transformation. Remote Sens. Environ. 2008, 112, 1025–1036. [Google Scholar] [CrossRef]

- Denaro, L.G.; Lin, C.H. Hybrid Canonical Correlation Analysis and Regression for Radiometric Normalization of Cross-Sensor Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 976–986. [Google Scholar] [CrossRef]

- Bai, Y.; Tang, P.; Hu, C. KCCA Transformation-Based Radiometric Normalization of Multi-Temporal Satellite Images. Remote Sens. 2018, 10, 432. [Google Scholar] [CrossRef]

- Hajj, M.E.; Bégué, A.; Lafrance, B.; Hagolle, O.; Dedieu, G.; Rumeau, M. Relative Radiometric Normalization and Atmospheric Correction of a SPOT 5 Time Series. Sensors 2008, 8, 2774–2791. [Google Scholar] [CrossRef] [PubMed]

- Zeng, T.; Shi, L.; Huang, L.; Zhang, Y.; Zhu, H.; Yang, X. A Color Matching Method for Mosaic HY-1 Satellite Images in Antarctica. Remote Sens. 2023, 15, 4399. [Google Scholar] [CrossRef]

- Syariz, M.A.; Lin, B.Y.; Denaro, L.G.; Jaelani, L.M.; Van Nguyen, M.; Lin, C.H. Spectral-Consistent Relative Radiometric Normalization for Multitemporal Landsat 8 Imagery. ISPRS J. Photogramm. Remote Sens. 2019, 147, 56–64. [Google Scholar] [CrossRef]

- Moghimi, A.; Sarmadian, A.; Mohammadzadeh, A.; Celik, T.; Amani, M.; Kusetogullari, H. Distortion Robust Relative Radiometric Normalization of Multitemporal and Multisensor Remote Sensing Images Using Image Features. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5400820. [Google Scholar] [CrossRef]

- Kim, T.; Han, Y. Integrated Preprocessing of Multitemporal Very-High-Resolution Satellite Images via Conjugate Points-Based Pseudo-Invariant Feature Extraction. Remote Sens. 2021, 13, 3990. [Google Scholar] [CrossRef]

- Moghimi, A.; Celik, T.; Mohammadzadeh, A. Tensor-Based Keypoint Detection and Switching Regression Model for Relative Radiometric Normalization of Bitemporal Multispectral Images. Int. J. Remote Sens. 2022, 43, 3927–3956. [Google Scholar] [CrossRef]

- Otsu, N. Threshold Selection Method From Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 11, 23–27. [Google Scholar] [CrossRef]

- Liu, S.; Du, Q.; Tong, X.; Samat, A.; Bruzzone, L.; Bovolo, F. Multiscale Morphological Compressed Change Vector Analysis for Unsupervised Multiple Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4124–4137. [Google Scholar] [CrossRef]

- El-Hattab, M.M. Applying Post Classification Change Detection Technique to Monitor an Egyptian Coastal Zone (Abu Qir Bay). Egypt. J. Remote Sens. Sp. Sci. 2016, 19, 23–36. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).