Predicting the Posture of High-Rise Building Machines Based on Multivariate Time Series Neural Network Models

Abstract

1. Introduction

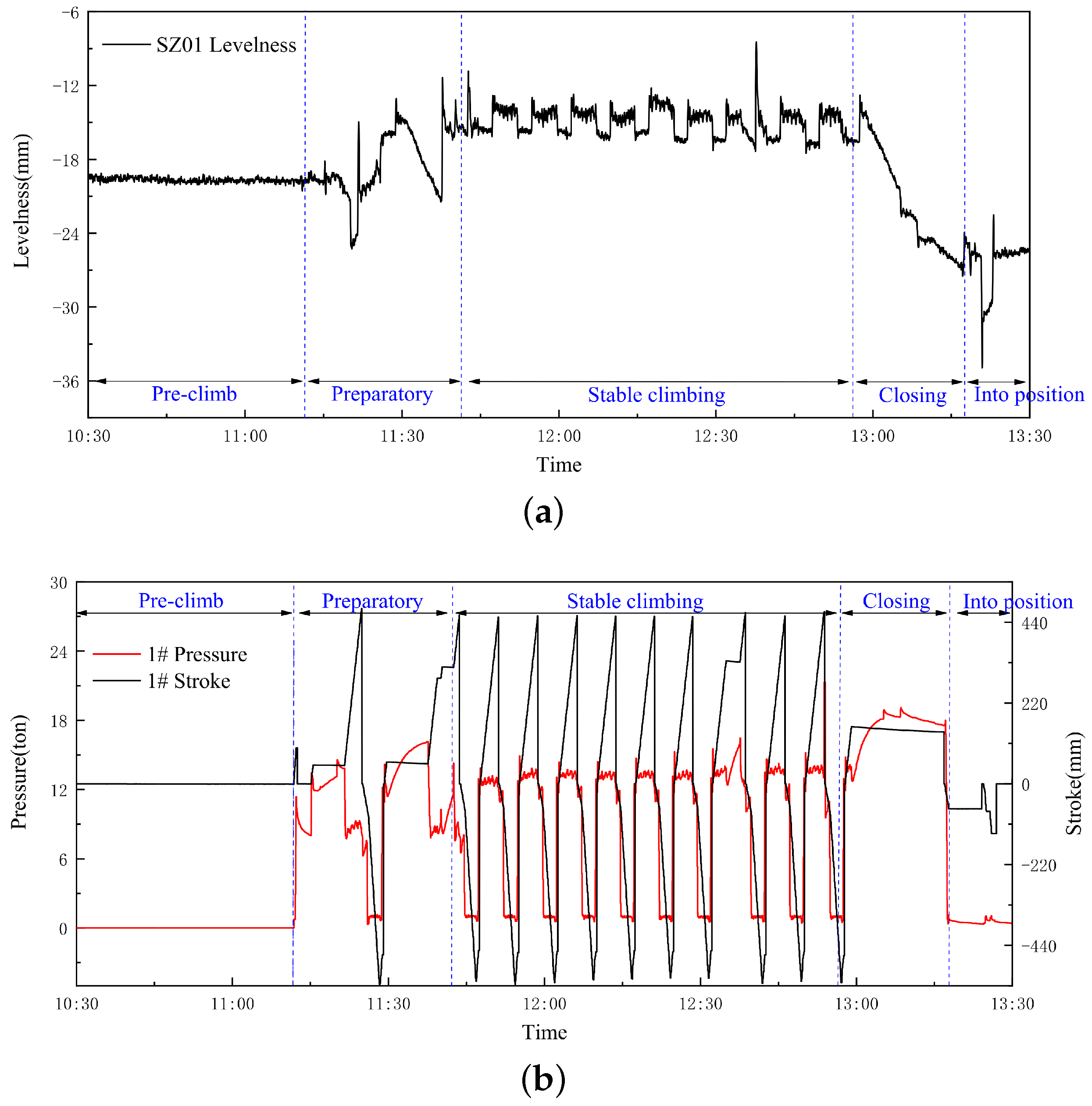

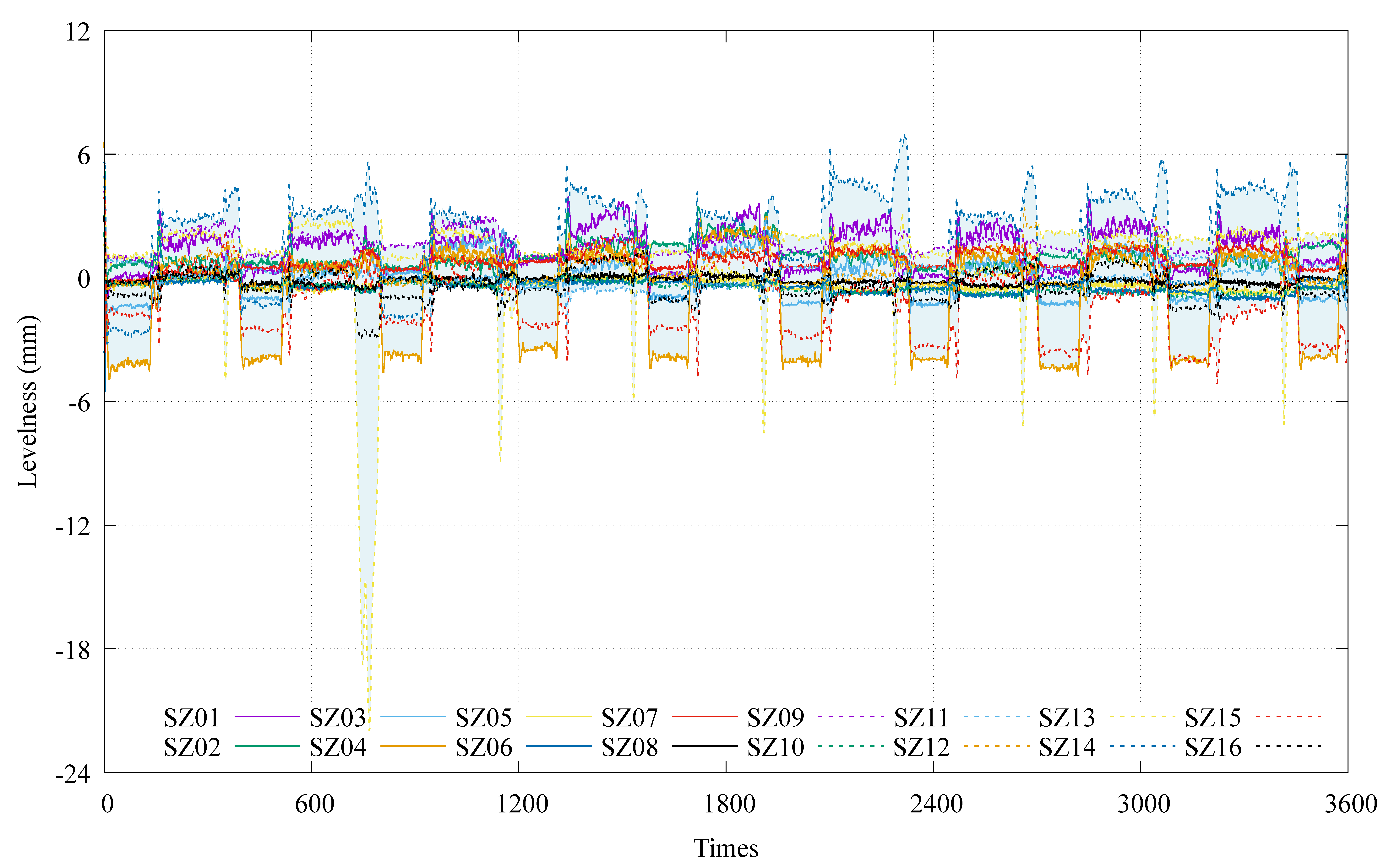

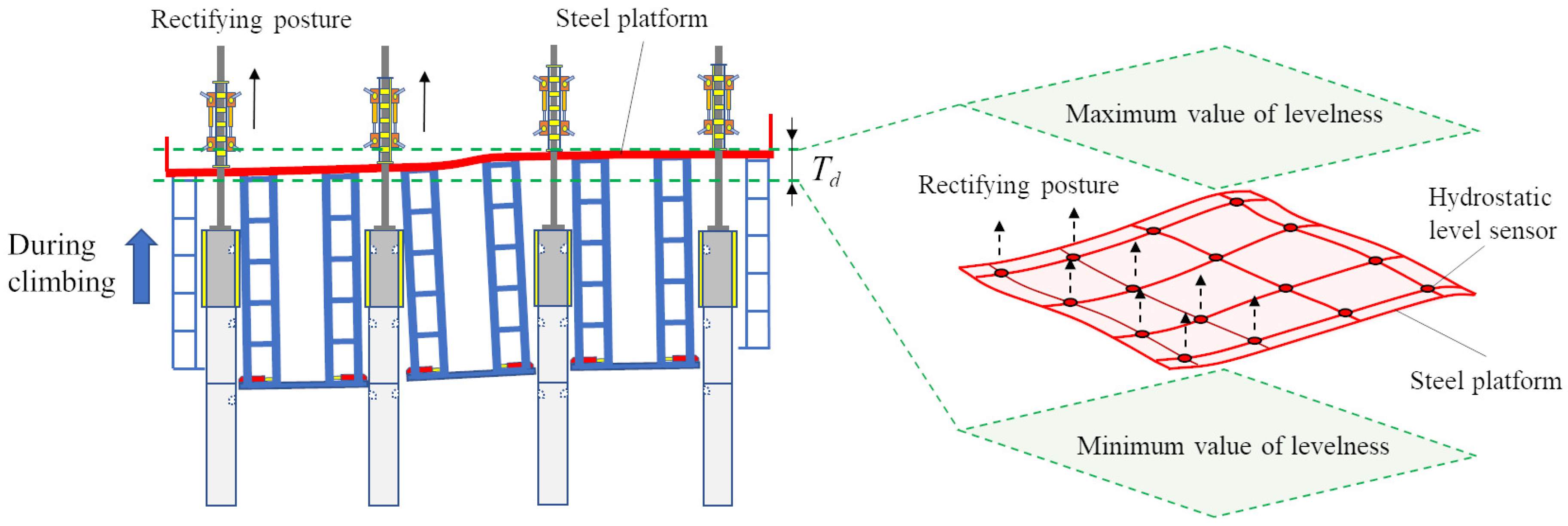

2. The Posture of the HBM

2.1. Data Sources

2.2. The Pre-Processing of Monitoring Data

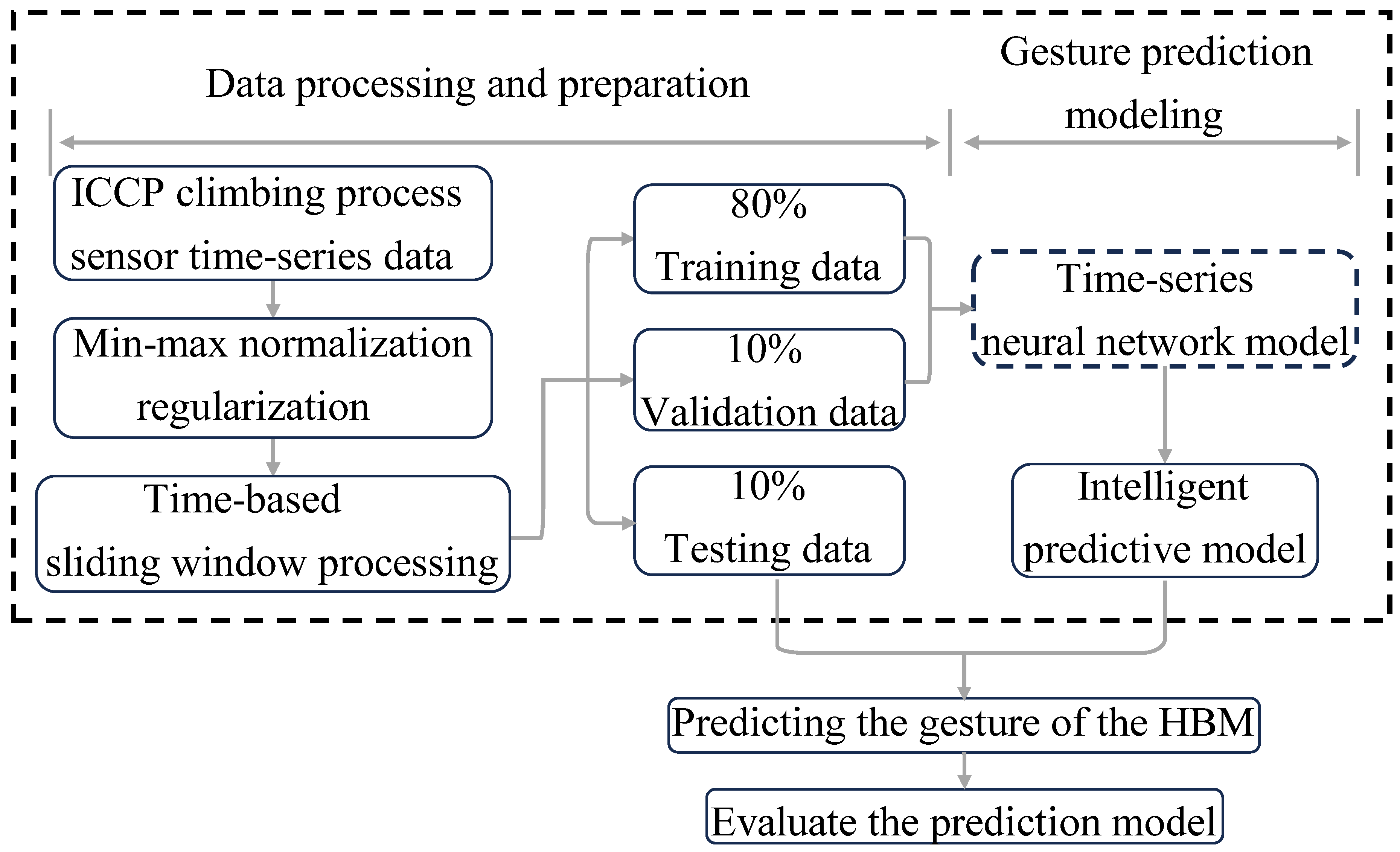

3. Methodology

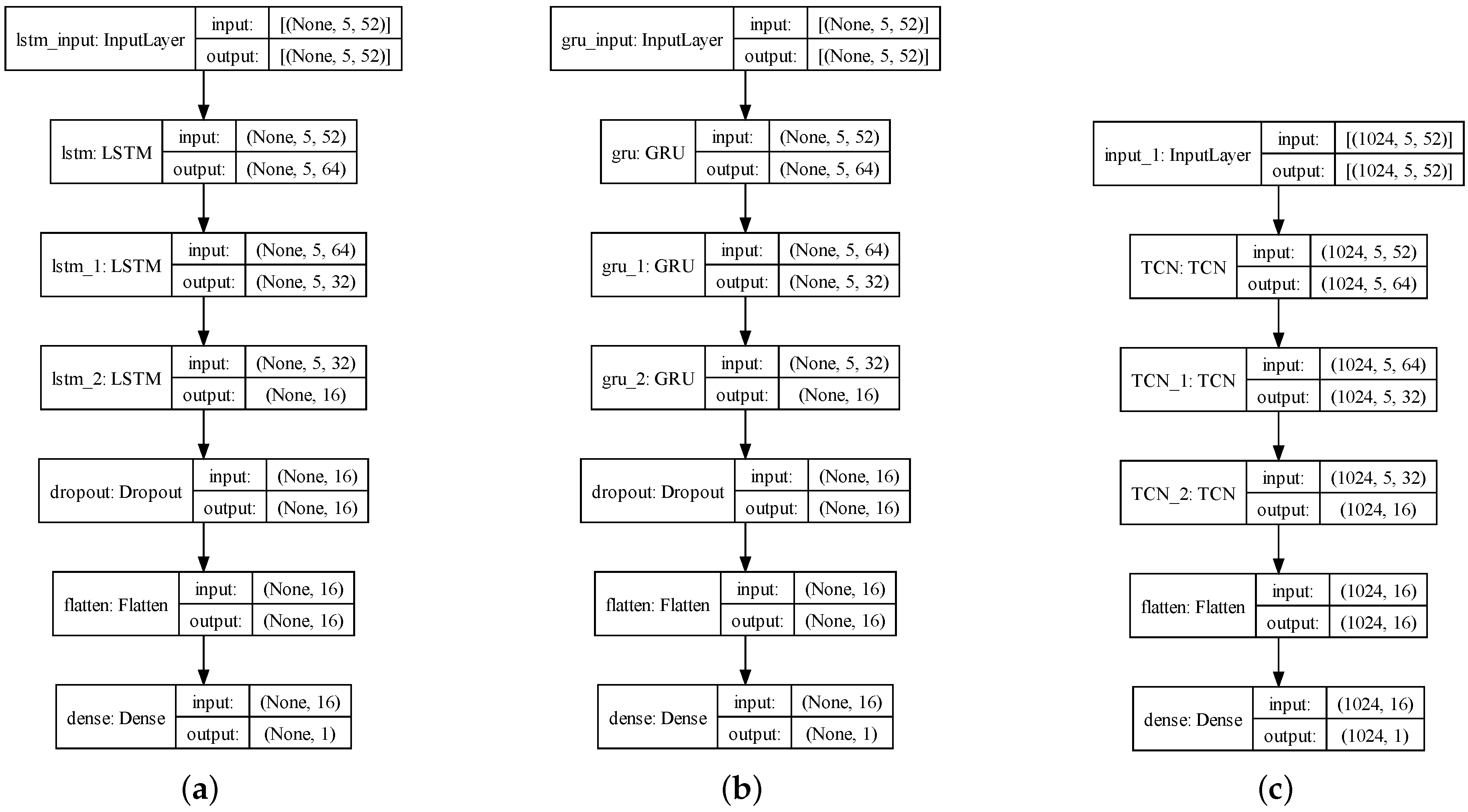

3.1. MTS-Prediction Model

3.1.1. LSTM Model

3.1.2. GRU Model

3.1.3. The TCN Model

3.2. The Architecture for the Posture-Prediction Model of HBMs

3.2.1. Data Standardization and Set Partitioning

3.2.2. The Evaluation of Predictive Performance

3.2.3. MTS-Prediction Architecture

4. Results

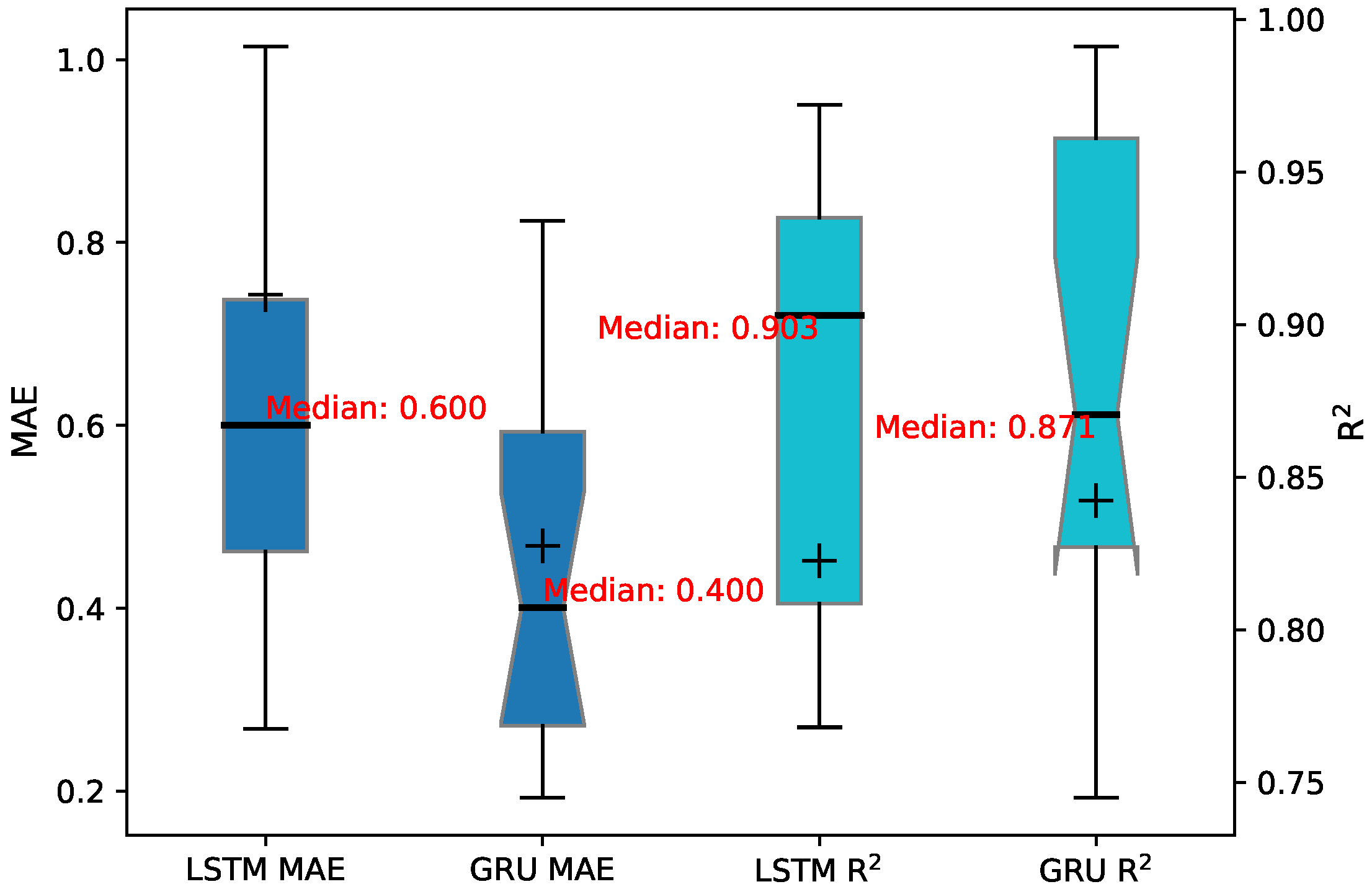

4.1. Sensitivity Analysis

4.2. Model Comparison and Evaluation

5. Discussion

6. Conclusions

- For the same neural network architecture and dataset size, the prediction system based on the GRU neural network model predicts the change in HBM posture during climbing more accurately than the other models considered. Among the levelness sensors installed on the HBM, only a subset demonstrates a strong correlation with the jacking parameters of the jacking mechanism. The posture of the HBM can be corrected by adjusting the pressure of the jacking cylinders. Therefore, we propose employing the GRU neural network-prediction system to anticipate the posture changes of the HBM and adjusting the jacking cylinder pressure to maintain levelness within the threshold value;

- Validation against the measured data shows that the proposed prediction models can accurately determine the levelness deviations and posture of the HBM’s steel platform using only the working data from the jacking cylinders. This capability allows for real-time warnings, indicating that these networks can contribute significantly to the safe and efficient operation of the HBM. With modification, the method could also be extended to monitor the operational status of other engineering equipment, such as hydraulic climbing molds, sliding molds, and integral lifting scaffolds. Wider adoption of this method could improve the construction levelness of high-rise buildings;

- However, the model was trained and tested solely on data from a single HBM, and its applicability to other HBMs or construction platforms has yet to be verified. Additionally, the model primarily considers the pressure and stroke of the jacking cylinder as inputs and does not account for the potential influence of environmental factors, such as weather conditions. Finally, while the GRU provided the best prediction in this study, it should not be assumed that the GRU is the optimal choice in all scenarios. Therefore, future studies should improve the preprocessing and cleaning of the data and validate the generalization ability of these models. Additional input features should be considered across a wider range of equipment, operating conditions, and types of HBM operational data. This approach should lead to more comprehensive and better-performing predictive models;

- The developed models offer real-time predictions to site managers and operators, allowing them to understand the HBM’s status promptly during the climbing process. This enables them to make the necessary adjustments in accordance with HBM management requirements and standard specification terms, thus ensuring a safer and more efficient climbing process.
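The warning step described in these conclusions can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function name `levelness_warnings`, the sample predictions, and the threshold of 5.0 are hypothetical, and the unit of the levelness deviation is whatever the monitoring system reports.

```python
from typing import Dict, List


def levelness_warnings(predicted: Dict[str, float], threshold: float) -> List[str]:
    """Return the sensors whose predicted levelness deviation exceeds the threshold.

    `predicted` maps a sensor identifier to the deviation predicted for the
    next time step. The comparison uses the absolute value, so a deviation in
    either direction triggers a warning.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    return sorted(s for s, value in predicted.items() if abs(value) > threshold)


# Hypothetical predictions for three sensors and a hypothetical threshold.
example = {"SZ01": 1.2, "SZ02": -6.4, "SZ03": 5.0}
flagged = levelness_warnings(example, threshold=5.0)
print(flagged)  # only SZ02 is flagged; SZ03 sits exactly on the threshold
```

In practice the flagged sensors would indicate where the jacking cylinder pressure may need adjusting; how that adjustment is chosen is outside the scope of this sketch.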

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HBM | High-rise building machine |
| SP | Steel platform |
| NNs | Neural networks |
| RNN | Recurrent neural network |
| LSTM | Long short-term memory |
| GRU | Gated recurrent unit |
| TCN | Temporal convolutional neural network |
| MTS | Multivariate time series |
| MAE | Mean absolute error |
| RMSE | Root mean square error |
| R2 | R-squared coefficient |

| Sensor | LSTM MAE | LSTM R2 | GRU MAE | GRU R2 | TCN MAE | TCN R2 |
|---|---|---|---|---|---|---|
| SZ01 | 0.599 | 0.927 | 0.274 | 0.870 | 1.784 | 0.263 |
| SZ02 | 0.484 | 0.813 | 0.345 | 0.860 | 1.536 | 0.034 |
| SZ03 | 0.601 | 0.944 | 0.593 | 0.961 | 1.294 | 0.612 |
| SZ04 | 0.581 | 0.963 | 0.593 | 0.961 | 1.510 | 0.731 |
| SZ05 | 0.795 | 0.821 | 0.456 | 0.745 | 1.069 | 0.267 |
| SZ06 | 0.522 | 0.795 | 0.625 | 0.585 | 1.995 | −0.934 |
| SZ07 | 0.395 | 0.932 | 0.593 | 0.961 | 1.445 | 0.065 |
| SZ08 | 0.364 | 0.903 | 0.224 | 0.815 | 0.680 | 0.671 |
| SZ09 | 0.268 | 0.972 | 0.193 | 0.854 | 1.269 | 0.296 |
| SZ10 | 0.718 | 0.768 | 0.304 | 0.871 | 1.266 | 0.243 |
| SZ11 | 0.316 | 0.903 | 0.261 | 0.959 | 0.855 | 0.641 |
| SZ12 | 0.626 | 0.970 | 0.298 | 0.831 | 3.435 | 0.117 |
| SZ13 | 7.305 | 0.462 | 1.128 | 0.988 | 9.923 | 0.071 |
| SZ14 | 0.656 | 0.916 | 0.515 | 0.957 | 2.072 | 0.350 |
| SZ15 | 1.014 | 0.871 | 0.264 | 0.991 | 2.026 | 0.613 |
| SZ16 | 1.975 | −0.207 | 0.824 | 0.268 | 6.316 | −8.734 |
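The negative R2 values in the table (for example, for SZ16) are easier to interpret with the metric definitions at hand. The sketch below uses the standard textbook definitions of MAE, RMSE, and R2. Whether the authors computed the metrics in exactly this way (for instance, on standardized or on raw values) is an assumption, and the function names and sample values are purely illustrative.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: average of |observed - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error: square root of the mean squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """R-squared: 1 - SS_res / SS_tot, relative to predicting the observed mean."""
    mean = sum(y_true) / len(y_true)
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when the observed values are constant")
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return 1.0 - ss_res / ss_tot


# Illustrative values only (not taken from the study).
observed = [1.0, 2.0, 3.0, 4.0]
close_fit = [1.5, 2.0, 2.5, 4.0]
poor_fit = [4.0, 3.0, 2.0, 1.0]

print(mae(observed, close_fit), rmse(observed, close_fit), r2(observed, close_fit))
print(r2(observed, poor_fit))  # negative: worse than predicting the mean
```

An R2 below zero means that the model's squared error exceeds that of always predicting the mean of the observed values, which is how the negative entries in the table should be read.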

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, X.; Huang, J.; Zhang, Y.; Zuo, Z.; Zhang, L. Predicting the Posture of High-Rise Building Machines Based on Multivariate Time Series Neural Network Models. Sensors 2024, 24, 1495. https://doi.org/10.3390/s24051495
